Cloud Computing Lecture 1: Parallel and Distributed Computing

Cloud Computing Lecture #1: Parallel and Distributed Computing

Jimmy Lin, The iSchool, University of Maryland
Monday, January 28, 2008

Material adapted from slides by Christophe Bisciglia, Aaron Kimball, & Sierra Michels-Slettvet, Google Distributed Computing Seminar, 2007 (licensed under a Creative Commons Attribution 3.0 License)

This work is licensed under a Creative Commons Attribution-Noncommercial-Share Alike 3.0 United States License. See http://creativecommons.org/licenses/by-nc-sa/3.0/us/ for details.

Today’s Topics
- Course overview
- Introduction to parallel and distributed processing

What’s the course about?
- Integration of research and teaching
  - Team leaders get help on a tough problem
  - Team members gain valuable experience
- Criteria for success: at the end of the course
  - Each team will have a publishable result
  - Each team will have a paper suitable for submission to an appropriate conference/journal
- Along the way:
  - Build a community of hadoopers at Maryland
  - Generate lots of publicity
  - Have lots of fun!

Hadoop Zen
- Don’t get frustrated (take a deep breath)…
  - This is the first time I’ve taught this course
- Be patient…
  - This is bleeding edge technology
- Be flexible…
  - Lots of uncertainty along the way
- Be constructive…
  - Tell me how I can make everyone’s experience better

Things to go over…
- Course schedule
- Course objectives
- Assignments and deliverables
- Evaluation

My Role
- To hack alongside everyone
- To contribute ideas substantively where appropriate
- To serve as a facilitator and a resource
- To make sure everything runs smoothly

Outline
- Web-Scale Problems
- Parallel vs. Distributed Computing
- Flynn's Taxonomy
- Programming Patterns

Web-Scale Problems
- Characteristics:
  - Lots of data
  - Lots of crunching (not necessarily complex in itself)
- Examples:
  - Obviously, problems involving the Web
  - Empirical and data-driven research (e.g., in human language technologies, HLT)
  - “Post-genomics era” in life sciences
  - High-quality animation
  - The serious hobbyist

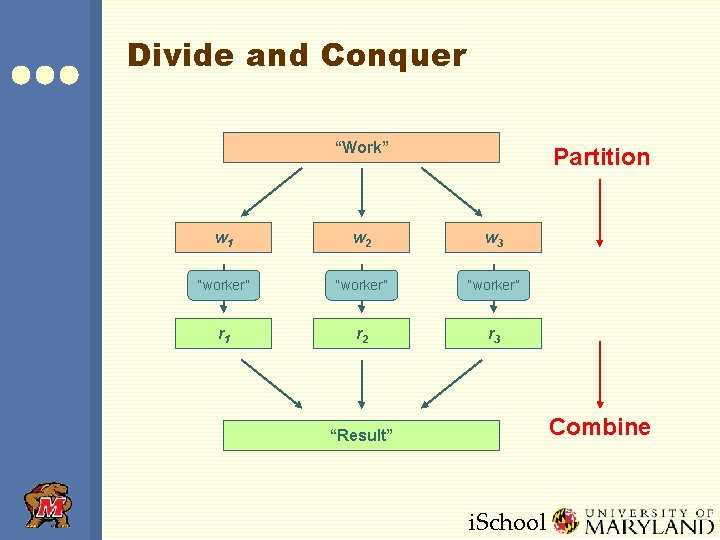

It all boils down to…
- Divide-and-conquer
- Throwing more hardware at the problem

Simple to understand… a lifetime to master…

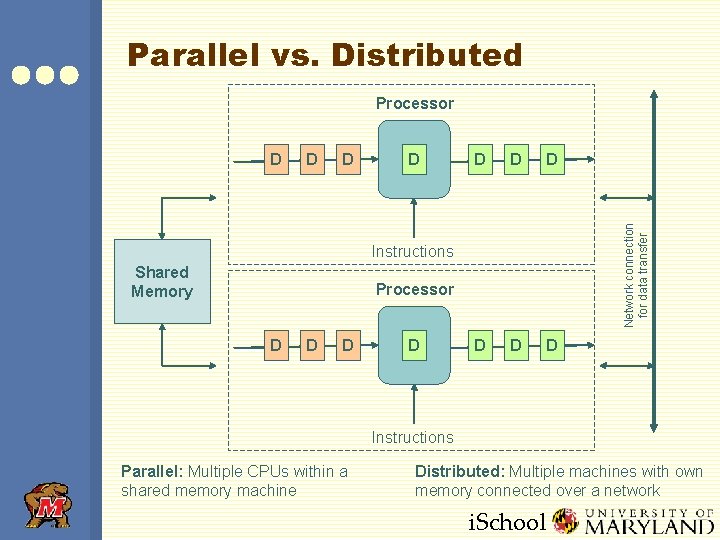

Parallel vs. Distributed
- Parallel computing generally means:
  - Vector processing of data
  - Multiple CPUs in a single computer
- Distributed computing generally means:
  - Multiple CPUs across many computers

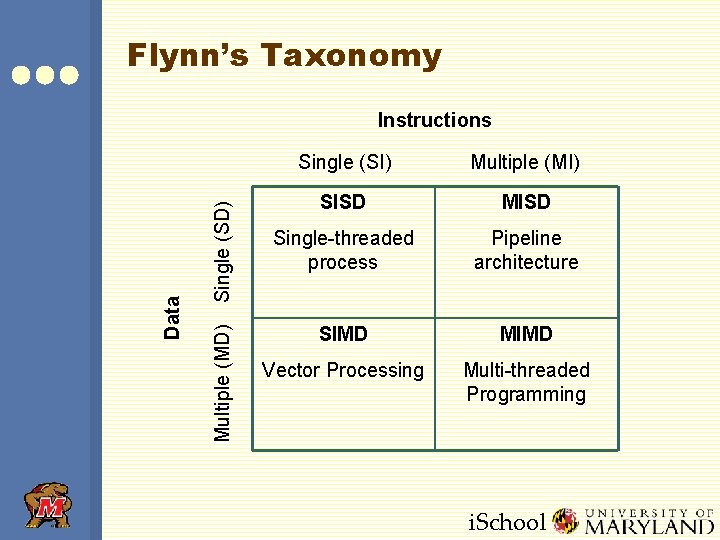

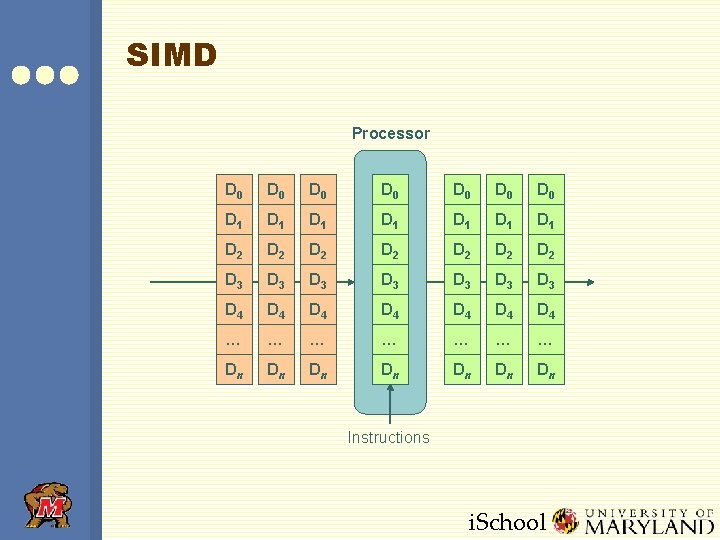

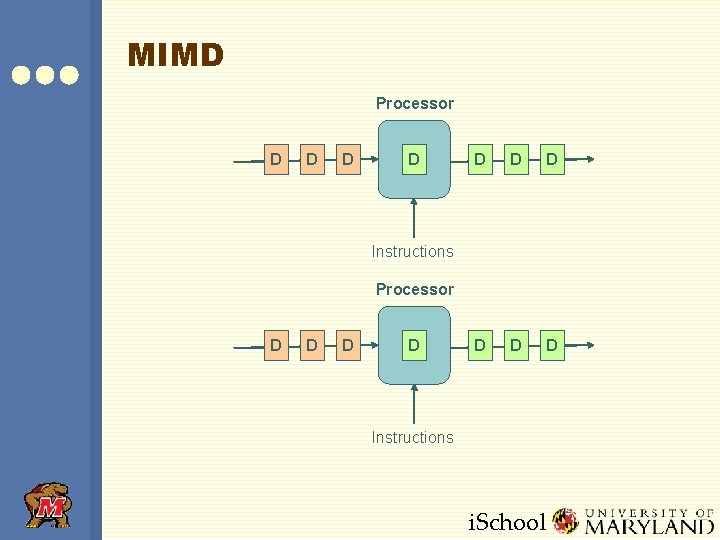

Flynn’s Taxonomy

| Instructions / Data | Single Data (SD) | Multiple Data (MD) |
|---|---|---|
| Single Instruction (SI) | SISD: single-threaded process | SIMD: vector processing |
| Multiple Instruction (MI) | MISD: pipeline architecture | MIMD: multi-threaded programming |

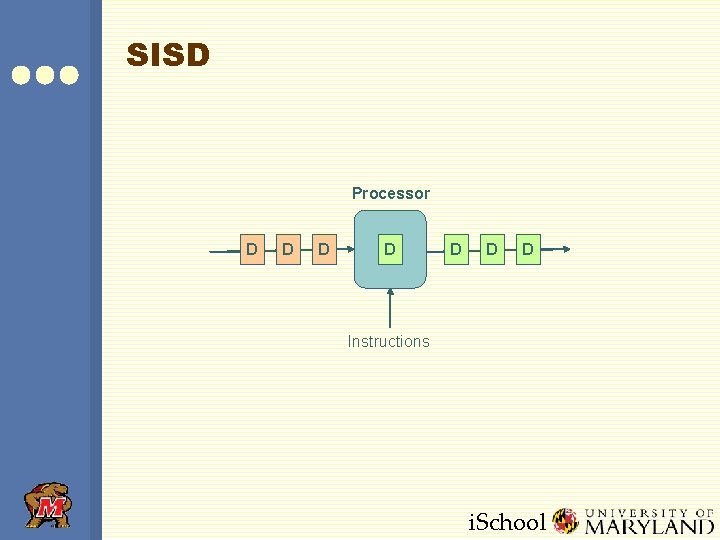

SISD
[Diagram: a single processor applies one instruction stream to a single stream of data items (D)]

SIMD
[Diagram: a single processor applies one instruction stream to many data streams (D0, D1, …, Dn) at once]

MIMD
[Diagram: several processors, each running its own instruction stream over its own data]

Parallel vs. Distributed
[Diagram: processors with their own instruction streams either share one memory (parallel) or keep their own data and exchange it over a network connection (distributed)]
- Parallel: Multiple CPUs within a shared-memory machine
- Distributed: Multiple machines, each with its own memory, connected over a network

Divide and Conquer
[Diagram: the “Work” is partitioned into units w1, w2, w3; each unit is handled by a “worker”, producing partial results r1, r2, r3, which are combined into the “Result”]
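The partition / worker / combine structure in the diagram can be sketched in a few lines of Python. This is a minimal sketch, not code from the course: the names `partition`, `worker`, `combine` and `divide_and_conquer` are hypothetical, the “work” is assumed to be a list of numbers, and each worker's job is assumed to be a sum of squares. It uses `concurrent.futures.ThreadPoolExecutor` to stay self-contained; in CPython, CPU-bound threads do not run truly in parallel because of the global interpreter lock, so real speedup would need a `ProcessPoolExecutor` or separate machines.

```python
from concurrent.futures import ThreadPoolExecutor


def partition(work, n):
    """Split the work into n contiguous units (w1..wn) of nearly equal size."""
    size, extra = divmod(len(work), n)
    units, start = [], 0
    for i in range(n):
        end = start + size + (1 if i < extra else 0)
        units.append(work[start:end])
        start = end
    return units


def worker(unit):
    """Process one work unit and return its partial result (here: a sum of squares)."""
    return sum(x * x for x in unit)


def combine(partials):
    """Merge the partial results (r1..rn) into the final result."""
    return sum(partials)


def divide_and_conquer(work, n_workers=3):
    units = partition(work, n_workers)
    # Each unit goes to a worker; map returns partial results in unit order.
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        partials = list(pool.map(worker, units))
    return combine(partials)


if __name__ == "__main__":
    print(divide_and_conquer(list(range(10))))  # prints 285
```

Because `combine` only ever sees the partial results, the same structure applies whether the workers are threads, processes, or separate machines; what changes is how work units and results are moved between them.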

Different Workers
- Different threads in the same core
- Different cores in the same CPU
- Different CPUs in a multi-processor system
- Different machines in a distributed system

Parallelization Problems
- How do we assign work units to workers?
- What if we have more work units than workers?
- What if workers need to share partial results?
- How do we aggregate partial results?
- How do we know all the workers have finished?
- What if workers die?

What is the common theme of all of these problems?
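Several of these questions can be made concrete with a small worker pool. The sketch below is a hypothetical illustration using Python's `concurrent.futures`, not the course's code: `process` is an assumed work function that raises an exception to stand in for a worker dying, `run_all` is a hypothetical driver, and the comments mark which question each step addresses. It deliberately does not retry failed units; a real system would need a policy for that.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed


def process(unit):
    """Hypothetical work function; raising an exception simulates a worker dying."""
    if unit < 0:
        raise ValueError(f"cannot process work unit {unit}")
    return unit * unit


def run_all(units, n_workers=2):
    """Run every work unit on a small pool; return (results, failed_units)."""
    results, failed = {}, []
    # Assignment, and more work units than workers: the pool queues every
    # submitted unit and hands it to the next free worker thread.
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        futures = {pool.submit(process, u): u for u in units}
        # Completion: as_completed yields each future once it has finished,
        # so the loop ends only after every unit has been accounted for.
        for fut in as_completed(futures):
            unit = futures[fut]
            try:
                # Aggregation: partial results are collected in one dict.
                results[unit] = fut.result()
            except ValueError:
                # Failure: the worker's exception surfaces here instead of
                # being lost; this sketch only records the unit, it does not retry.
                failed.append(unit)
    return results, failed


if __name__ == "__main__":
    results, failed = run_all([1, 2, 3, -4, 5])
    print(sorted(results.items()), failed)  # prints [(1, 1), (2, 4), (3, 9), (5, 25)] [-4]
```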

General Theme?
- Parallelization problems arise from:
  - Communication between workers
  - Access to shared resources (e.g., data)
- Thus, we need a synchronization system!
- This is tricky:
  - Finding bugs is hard
  - Solving bugs is even harder
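A minimal illustration of why access to a shared resource needs synchronization: several threads increment one counter. The `Counter` class and `run` function are hypothetical names for this sketch. The read-modify-write in `self.value += 1` is not atomic, so without the lock two threads can interleave and lose updates; with the `threading.Lock` held around it, the final count is deterministic.

```python
import threading


class Counter:
    """A shared resource: one counter updated by many worker threads."""

    def __init__(self):
        self.value = 0
        self._lock = threading.Lock()

    def increment(self):
        # "Read value, add 1, write back" is not atomic; without the lock two
        # threads can interleave these steps and lose an update (a race condition).
        with self._lock:
            self.value += 1


def run(n_threads=4, n_increments=10_000):
    counter = Counter()

    def work():
        for _ in range(n_increments):
            counter.increment()

    threads = [threading.Thread(target=work) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()  # wait until every worker has finished
    return counter.value


if __name__ == "__main__":
    print(run())  # prints 40000
```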

Multi-Threaded Programming
- Difficult because
  - Don’t know the order in which threads run
  - Don’t know when threads interrupt each other
- Thus, we need:
  - Semaphores (lock, unlock)
  - Condition variables (wait, notify, broadcast)
  - Barriers
- Still, lots of problems:
  - Deadlock, livelock
  - Race conditions…
- Moral of the story: be careful!
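Two of the primitives listed above are available in Python's `threading` module as `threading.Barrier` and `threading.Condition` (whose `notify_all` plays the role of “broadcast”). The sketch below is a hypothetical two-phase computation, not taken from the lecture: each worker writes a partial result, waits at a barrier, then reads all partial results; the main thread uses a condition variable to wait until every worker reports completion. In practice `join()` alone would suffice for that last step; the condition variable is there only to show the wait/notify pattern.

```python
import threading


def two_phase(n_workers=3):
    """Two-phase computation coordinated with a barrier and a condition variable."""
    partials = [0] * n_workers
    totals = [0] * n_workers
    barrier = threading.Barrier(n_workers)
    cond = threading.Condition()
    finished = 0

    def worker(i):
        nonlocal finished
        # Phase 1: each worker writes only its own slot.
        partials[i] = (i + 1) * 10
        # Barrier: nobody starts phase 2 until all n_workers threads arrive here.
        barrier.wait()
        # Phase 2: every partial result from phase 1 is now in place.
        totals[i] = sum(partials)
        # Condition variable: record completion and wake all waiting threads.
        with cond:
            finished += 1
            cond.notify_all()

    threads = [threading.Thread(target=worker, args=(i,)) for i in range(n_workers)]
    for t in threads:
        t.start()
    with cond:
        # wait_for releases the lock while sleeping and re-checks the predicate
        # each time it is woken, so spurious wakeups are harmless.
        cond.wait_for(lambda: finished == n_workers)
    for t in threads:
        t.join()
    return totals


if __name__ == "__main__":
    print(two_phase())  # prints [60, 60, 60]
```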

Patterns for Parallelism
- Several programming methodologies exist to build parallelism into programs
- Here are some…

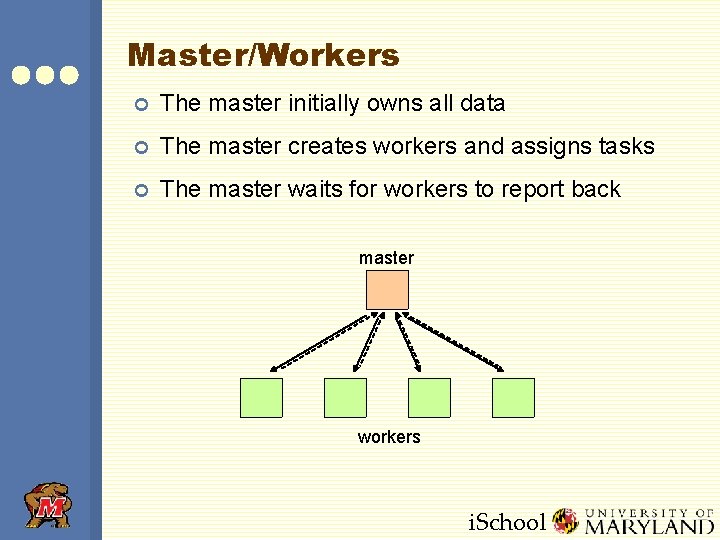

Master/Workers
- The master initially owns all data
- The master creates workers and assigns tasks
- The master waits for workers to report back
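A minimal master/workers sketch under stated assumptions: the data is a list of numbers, each task is to sum a slice of it, and the master splits the data round-robin (an arbitrary choice). The names `master` and `worker` are hypothetical. Workers report back through a `queue.Queue`, and the master blocks on `get()` until it has received one report per worker.

```python
import queue
import threading


def worker(worker_id, task, report):
    """Process the assigned task (here: sum a list) and report back to the master."""
    report.put((worker_id, sum(task)))


def master(data, n_workers=3):
    # The master initially owns all the data and splits it into one task per
    # worker (round-robin slicing, an arbitrary choice for this sketch).
    tasks = [data[i::n_workers] for i in range(n_workers)]
    report = queue.Queue()
    # The master creates the workers and assigns each one its task.
    threads = [
        threading.Thread(target=worker, args=(i, tasks[i], report))
        for i in range(n_workers)
    ]
    for t in threads:
        t.start()
    # The master waits for every worker to report back: get() blocks until a
    # report is available, so this collects exactly n_workers reports.
    reports = dict(report.get() for _ in range(n_workers))
    for t in threads:
        t.join()
    return sum(reports.values()), reports


if __name__ == "__main__":
    total, reports = master(list(range(1, 10)))
    print(total)  # prints 45
```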

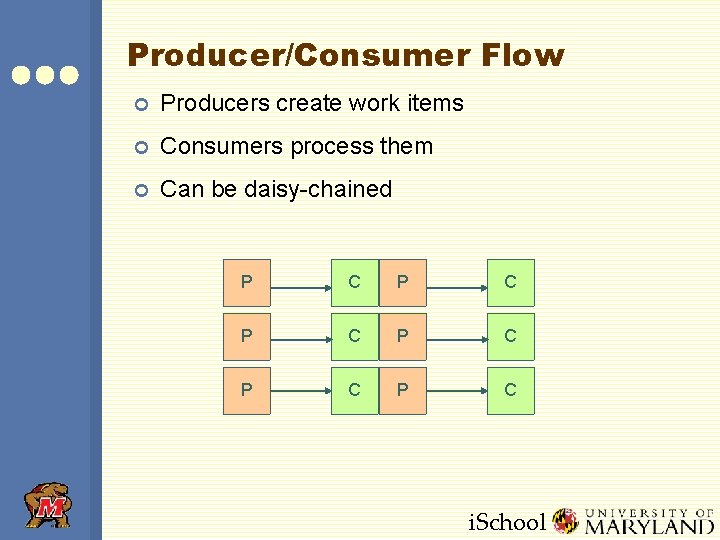

Producer/Consumer Flow
- Producers create work items
- Consumers process them
- Can be daisy-chained
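A daisy-chained producer/consumer flow can be sketched with one thread per stage and a `queue.Queue` between stages. In this hypothetical example each middle stage consumes from one queue and produces into the next, and a sentinel object (`STOP`, an assumption of the sketch) is passed down the chain to signal the end of the stream. The two transformations (add 1, then multiply by 10) are placeholders.

```python
import queue
import threading

STOP = object()  # sentinel marking the end of the stream


def producer(items, out_q):
    """First producer: creates work items, then signals the end of the stream."""
    for item in items:
        out_q.put(item)
    out_q.put(STOP)


def stage(fn, in_q, out_q):
    """Consumer of in_q and producer for out_q; this is what allows daisy-chaining."""
    while True:
        item = in_q.get()
        if item is STOP:
            out_q.put(STOP)  # pass the sentinel down the chain
            return
        out_q.put(fn(item))


def pipeline(items):
    q1, q2, q3 = queue.Queue(), queue.Queue(), queue.Queue()
    threads = [
        threading.Thread(target=producer, args=(items, q1)),
        threading.Thread(target=stage, args=(lambda x: x + 1, q1, q2)),
        threading.Thread(target=stage, args=(lambda x: x * 10, q2, q3)),
    ]
    for t in threads:
        t.start()
    results = []
    while True:  # the final consumer runs in the calling thread
        item = q3.get()
        if item is STOP:
            break
        results.append(item)
    for t in threads:
        t.join()
    return results


if __name__ == "__main__":
    print(pipeline([1, 2, 3]))  # prints [20, 30, 40]
```

Because each stage has a single thread reading a FIFO queue, output order matches input order; putting several consumers on one stage would give up that guarantee.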

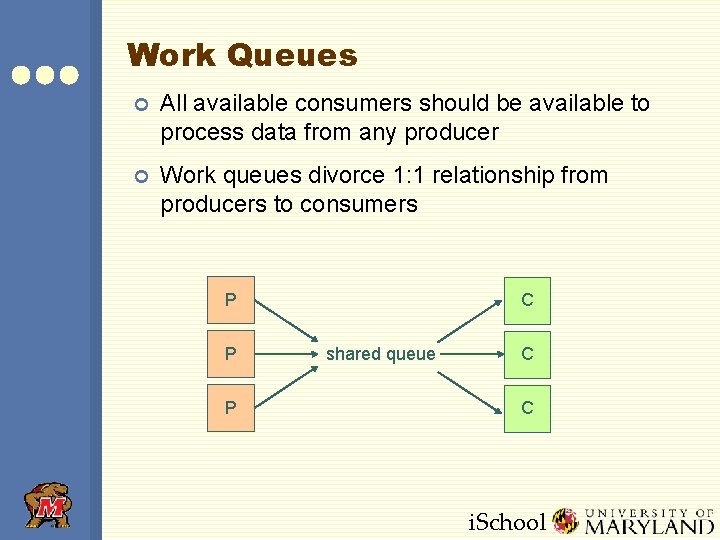

Work Queues
- Any available consumer should be able to process data from any producer
- Work queues break the 1:1 relationship between producers and consumers

[Diagram: several producers (P) and several consumers (C) connected through one shared queue]
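A sketch of the shared work queue: several producers put items onto one `queue.Queue`, and any idle consumer takes the next item, regardless of which producer created it. The function name `work_queue_demo` and its parameters are hypothetical. After all producers finish, the main thread enqueues one `None` sentinel per consumer so that each consumer knows to stop.

```python
import queue
import threading


def work_queue_demo(n_producers=3, n_consumers=3, items_per_producer=5):
    """Producers and consumers share one queue; returns (consumer_id, item) pairs."""
    shared = queue.Queue()
    processed = []
    lock = threading.Lock()

    def producer(pid):
        for i in range(items_per_producer):
            shared.put((pid, i))  # a work item tagged with its producer

    def consumer(cid):
        while True:
            item = shared.get()  # any consumer may take any producer's item
            if item is None:     # sentinel: no more work
                return
            with lock:
                processed.append((cid, item))

    producers = [threading.Thread(target=producer, args=(p,)) for p in range(n_producers)]
    consumers = [threading.Thread(target=consumer, args=(c,)) for c in range(n_consumers)]
    for t in producers + consumers:
        t.start()
    for t in producers:
        t.join()
    # Once all producers are done, send one sentinel per consumer.
    for _ in consumers:
        shared.put(None)
    for t in consumers:
        t.join()
    return processed


if __name__ == "__main__":
    processed = work_queue_demo()
    print(len(processed))  # prints 15
```

Which consumer handles which item varies from run to run; only the set of processed items is fixed.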

And finally…
- The above solutions represent general patterns
- In reality:
  - Lots of one-off solutions, custom code
  - Burden on the programmer to manage everything
- Can we push the complexity onto the system?
  - MapReduce… for next time
