CLOUD COMPUTING ARCHITECTURES & APPLICATIONS LECTURE #4: POPULAR MAPREDUCE IMPLEMENTATIONS

- Slides: 37

CLOUD COMPUTING ARCHITECTURES & APPLICATIONS

LECTURE #4: POPULAR MAPREDUCE IMPLEMENTATIONS. DISTRIBUTED FILE SYSTEMS.

LECTURERS: LAZAR KIRCHEV, Ph.D. <L.KIRCHEV@SAP.COM>, ILIYAN NENOV <ILIYAN.NENOV@SAP.COM>, KRUM BAKALSKY <KRUM.BAKALSKY@SAP.COM>

21 March, 2011

OUTLINE

- MapReduce implementations
  - Google MapReduce
  - Hadoop
- Distributed File Systems
  - Google File System (GFS)
  - Hadoop File System (HDFS)

2011 Sofia University “Sv. Kliment Ohridski” > Faculty of Mathematics and Informatics > Cloud Computing Architecture and Applications

MapReduce Implementations

Google MapReduce

- Execution environment:
  - Dual-processor x86 machines running Linux with ~16 GB RAM
  - Commodity networking hardware (gigabit/second)
  - A cluster consists of hundreds or thousands of machines
  - 2 TB of disk space attached to individual machines
- Execution of MapReduce:
  - Input data is split into M pieces for parallel mapping
  - Intermediate keys are partitioned into R pieces by a partitioning function (the default one is hash(key) mod R)
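The two splitting steps on this slide can be sketched in Python as below. This is a minimal illustration, not Google's code: `split_input` and `default_partition` are hypothetical helpers, records are assumed to be an in-memory list, and CRC32 is an assumed stand-in for the unspecified `hash` (Python's built-in `hash()` on strings is salted per process, so it would not give every worker the same answer).

```python
import zlib


def default_partition(key: str, R: int) -> int:
    """Map an intermediate key to one of R reduce partitions: hash(key) mod R."""
    # zlib.crc32 is deterministic across processes, unlike the built-in hash()
    # on str, so every map worker sends a given key to the same partition.
    return zlib.crc32(key.encode("utf-8")) % R


def split_input(records: list, M: int) -> list[list]:
    """Split the input into M roughly equal contiguous pieces for parallel mapping."""
    size, extra = divmod(len(records), M)
    pieces, start = [], 0
    for i in range(M):
        # The first `extra` pieces get one additional record each.
        end = start + size + (1 if i < extra else 0)
        pieces.append(records[start:end])
        start = end
    return pieces


if __name__ == "__main__":
    for word in ["apple", "banana", "cherry", "apple"]:
        print(word, default_partition(word, 4))
    print(split_input(list(range(10)), 3))  # [[0, 1, 2, 3], [4, 5, 6], [7, 8, 9]]
```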

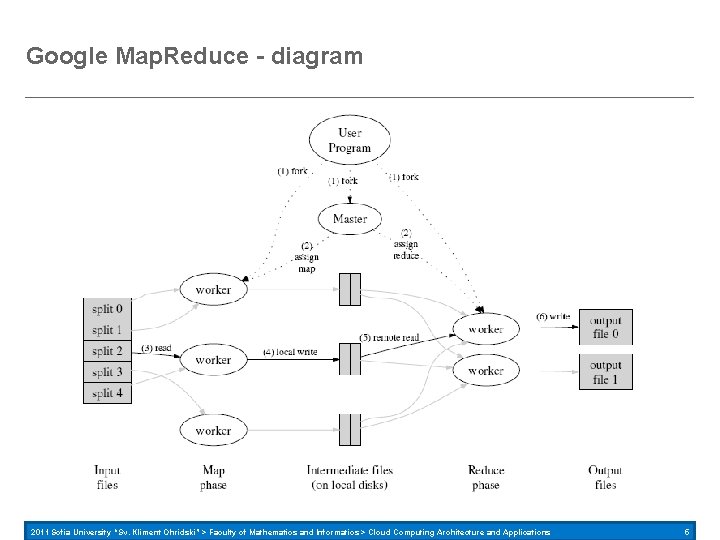

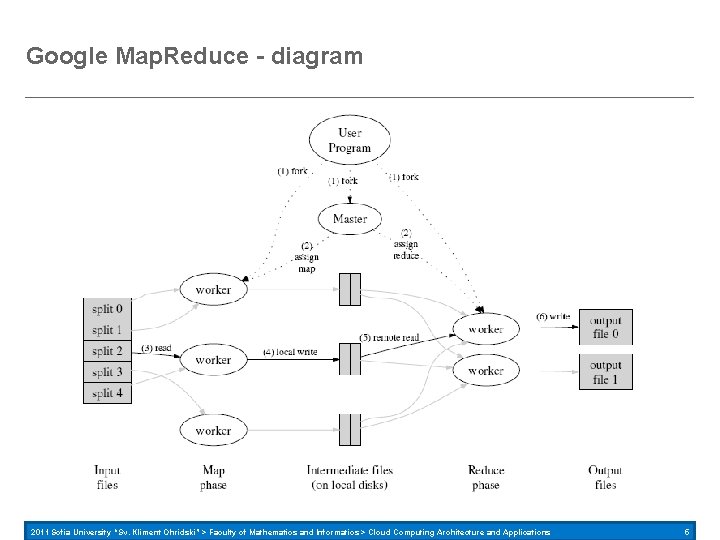

Google MapReduce - diagram

Google MapReduce – execution flow

- The input is split into pieces of 16 to 64 MB, and many copies of the program are started on the cluster
- Master/workers pattern: the master assigns map or reduce tasks to idle workers
- Map worker:
  - Reads the input split for its map task
  - Parses key/value pairs from the input data and passes each pair to the Map function
  - Key/value pairs produced by Map are buffered in memory
- Writing output:
  - Buffered pairs are periodically written to local disk, partitioned into R regions
  - The locations of the regions are passed to the master
  - The master forwards the locations to the reduce workers
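A single-process sketch of the map worker's side of this flow, assuming text input where each line is a record keyed by its position. `run_map_task`, `word_count_map` and the `flush_every` threshold are hypothetical; in-memory lists stand in for the R regions on local disk, and the return value stands in for the region locations a real worker reports to the master.

```python
import zlib
from collections.abc import Callable, Iterable


def run_map_task(
    split: Iterable[str],
    map_fn: Callable[[str, str], Iterable[tuple[str, int]]],
    R: int,
    flush_every: int = 1000,
) -> list[list[tuple[str, int]]]:
    """Simulate a map worker: parse records, call Map, buffer, flush into R regions."""
    regions: list[list[tuple[str, int]]] = [[] for _ in range(R)]  # stands in for local disk
    buffer: list[tuple[str, int]] = []

    def flush() -> None:
        # Periodically write buffered pairs out, partitioned by hash(key) mod R.
        for key, value in buffer:
            regions[zlib.crc32(key.encode()) % R].append((key, value))
        buffer.clear()

    for offset, line in enumerate(split):
        # Each record becomes a key/value pair (line offset -> line text) for Map.
        for pair in map_fn(str(offset), line):
            buffer.append(pair)
            if len(buffer) >= flush_every:
                flush()
    flush()  # write whatever is left in the buffer
    return regions


def word_count_map(_key: str, line: str):
    for word in line.split():
        yield (word, 1)
```

Because partitioning happens at flush time, all pairs with the same key land in the same region regardless of how often the buffer is flushed.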

Google MapReduce – execution flow

- Reduce worker:
  - Reads the intermediate data from the local disks of the map workers
  - Sorts the data by the intermediate keys
- Reduction phase:
  - The reduce worker iterates over the sorted data and, for each intermediate key, passes the key and the corresponding values to the Reduce function
  - The output of the Reduce function is appended to a final output file for this partition
- When all map and reduce tasks are completed, the master notifies the user program; the output is then available in the R output files
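The reduce side for one partition can be sketched the same way, assuming the partition's region from every map worker has already been fetched into memory. `run_reduce_task` and `word_count_reduce` are hypothetical names; a real reduce worker reads the regions remotely and may need an external sort when the data does not fit in memory.

```python
from itertools import groupby
from operator import itemgetter
from typing import Callable, Iterable


def run_reduce_task(
    fetched_regions: Iterable[list[tuple[str, int]]],
    reduce_fn: Callable[[str, list[int]], int],
) -> list[tuple[str, int]]:
    """Simulate a reduce worker for one partition."""
    # 1. Gather this partition's region from every map worker.
    data = [pair for region in fetched_regions for pair in region]
    # 2. Sort by intermediate key so that equal keys become adjacent.
    data.sort(key=itemgetter(0))
    # 3. For each key, pass the key and all of its values to Reduce.
    output = []
    for key, group in groupby(data, key=itemgetter(0)):
        output.append((key, reduce_fn(key, [v for _, v in group])))
    return output  # a real worker appends this to the partition's output file


def word_count_reduce(_key: str, values: list[int]) -> int:
    return sum(values)


if __name__ == "__main__":
    regions = [[("b", 1), ("a", 1)], [("a", 1), ("c", 1)]]  # from two map workers
    print(run_reduce_task(regions, word_count_reduce))  # [('a', 2), ('b', 1), ('c', 1)]
```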

Google MapReduce – Master Data Structures

- For each map and reduce task, the master stores:
  - Its status (idle, in-progress or completed)
  - The identity of the worker machine (for non-idle tasks)
- For each completed map task, the master stores the locations and sizes of the R intermediate file regions produced by the map task; these are updated as map tasks are completed
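One possible in-memory layout for the master's bookkeeping, as a hedged sketch: the class and field names (`Task`, `RegionInfo`, `Master`) are illustrative assumptions, and the `location` string format is made up.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional


class TaskState(Enum):
    IDLE = "idle"
    IN_PROGRESS = "in-progress"
    COMPLETED = "completed"


@dataclass
class RegionInfo:
    location: str  # hypothetical "host:path" of the region on the map worker's disk
    size: int      # bytes


@dataclass
class Task:
    kind: str                     # "map" or "reduce"
    state: TaskState = TaskState.IDLE
    worker: Optional[str] = None  # set only for non-idle tasks
    # Filled only for completed map tasks: one entry per reduce partition (R of them).
    regions: list[RegionInfo] = field(default_factory=list)


class Master:
    def __init__(self, M: int, R: int) -> None:
        self.map_tasks = [Task("map") for _ in range(M)]
        self.reduce_tasks = [Task("reduce") for _ in range(R)]

    def assign(self, task: Task, worker: str) -> None:
        task.state, task.worker = TaskState.IN_PROGRESS, worker

    def map_completed(self, index: int, regions: list[RegionInfo]) -> None:
        # Locations and sizes arrive as map tasks complete; a real master would
        # also push them to workers that are running reduce tasks.
        task = self.map_tasks[index]
        task.state, task.regions = TaskState.COMPLETED, regions
```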

Google MapReduce – fault tolerance

- With thousands of machines, failure is the rule, not the exception
- Worker failures:
  - The master pings workers periodically; a worker that does not respond in time is marked as failed
  - Any map tasks completed by the failed worker are reset to idle for rescheduling, because their output is stored on that worker's local disk
  - Any map or reduce tasks in progress on the failed worker are reset to idle
  - Completed reduce tasks do not need to be re-executed, since their output is stored in the global file system
  - When a map task is re-executed, the reduce workers are notified
- Master failure:
  - Its failure is unlikely, since there is only a single master
  - If the master fails, the MapReduce computation is aborted
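The worker-failure rules above reduce to a small decision per task. The sketch below assumes a hypothetical `Task` record with a kind, a state and a worker name, and leaves out the ping timeout and the notification of reduce workers.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Task:
    kind: str                     # "map" or "reduce"
    state: str = "idle"           # "idle" | "in-progress" | "completed"
    worker: Optional[str] = None


def handle_worker_failure(tasks: list[Task], failed: str) -> list[Task]:
    """Reset the failed worker's tasks to idle and return the ones to reschedule."""
    reset = []
    for t in tasks:
        if t.worker != failed:
            continue
        # In-progress map or reduce task: its work is lost, redo it.
        # Completed map task: its output lived on the failed machine's disk, redo it.
        # Completed reduce task: its output is in the global file system, keep it.
        if t.state == "in-progress" or (t.kind == "map" and t.state == "completed"):
            t.state, t.worker = "idle", None
            reset.append(t)
    return reset
```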

Google MapReduce – data locality and granularity of tasks

- Data locality:
  - Input data is stored on the local disks of the cluster machines
  - The master takes the location of the input files into account and attempts to schedule a map task on a machine that holds a replica of its input or, failing that, on a machine near one (e.g., on the same network switch)
- Task granularity:
  - The map phase is divided into M pieces and the reduce phase into R pieces
  - M and R should be much larger than the number of worker machines
  - This improves load balancing and speeds up recovery from worker failures
  - Practical bounds on M and R: the master must make O(M + R) scheduling decisions and keeps O(M * R) state in memory
  - Example: M = 200,000 and R = 5,000 with 2,000 workers
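Working through the example numbers shows why M and R cannot grow without bound. The one-byte-per-pair constant is the original MapReduce paper's estimate and is treated here as an assumption.

```python
M, R, workers = 200_000, 5_000, 2_000

scheduling_decisions = M + R              # O(M + R)
task_pairs = M * R                        # O(M * R) map/reduce task pairs
approx_state_bytes = task_pairs * 1       # assumption: ~1 byte of state per pair

print(f"scheduling decisions: {scheduling_decisions:,}")           # 205,000
print(f"map/reduce task pairs: {task_pairs:,}")                     # 1,000,000,000
print(f"approx. master state: {approx_state_bytes / 1e9:.0f} GB")   # 1 GB
print(f"map tasks per worker: {M // workers}")                      # 100
print(f"reduce tasks per worker: {R / workers}")                    # 2.5
```

With about 100 small map tasks per worker, a failed worker's tasks can be spread over many other machines instead of being redone by a single one.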

Google MapReduce

- Backup tasks:
  - Stragglers: machines that take an unusually long time to complete one of the last few tasks
  - When the computation is close to completion, the master schedules backup executions of the remaining in-progress tasks
  - This leads to significant improvements in completion time
- Partitioning function:
  - Users specify the number of output files R
  - Data is partitioned with a partitioning function on the intermediate key
  - The default is hash(key) mod R
  - User-specified partitioning functions are supported
- Combiner function:
  - An optional function that does partial merging on the output of Map before it is sent to the reducers
  - Usually the code is the same as the code of the Reduce function
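Word count shows why a combiner usually reuses the Reduce code: summing partial counts on the map side gives the same final result while shrinking what is sent over the network. The functions below are a hypothetical sketch; reusing Reduce as a combiner is only safe when the reduction is commutative and associative, as addition is.

```python
def word_count_map(line: str) -> list[tuple[str, int]]:
    return [(w, 1) for w in line.split()]


def word_count_reduce(key: str, values: list[int]) -> int:
    return sum(values)


def combine(pairs: list[tuple[str, int]]) -> list[tuple[str, int]]:
    """Partial merge on the map side, reusing the Reduce code."""
    grouped: dict[str, list[int]] = {}
    for k, v in pairs:
        grouped.setdefault(k, []).append(v)
    return [(k, word_count_reduce(k, vs)) for k, vs in grouped.items()]


map_output = word_count_map("the cat saw the other cat and the dog")
combined = combine(map_output)
print(len(map_output), "pairs without combiner")  # 9
print(len(combined), "pairs with combiner")       # 6
```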

Google MapReduce

- Input and output types:
  - Plain text, key/value pairs, …
  - Each input type knows how to split itself into ranges for parallel map
  - Users can implement a reader interface to add support for new types
- Skipping bad records:
  - Bugs in user code can cause the Map or Reduce function to crash deterministically on certain records
  - MapReduce can detect such crashes and skip the problematic records
- Status information:
  - The master runs an HTTP server that exports status pages
  - They contain the progress of the computation, the stderr and stdout of tasks, task statistics, etc.
- Counters:
  - Count occurrences of various events
  - Counter values are propagated to the master, where they are aggregated
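Counter aggregation has one subtlety: with backup tasks and re-executions, the same task can finish more than once. A minimal sketch of the master side, assuming each report carries a task id (`CounterAggregator` and the counter names are hypothetical), counts only the first report per task; the MapReduce paper describes the master eliminating duplicate executions to avoid double counting.

```python
from collections import Counter


class CounterAggregator:
    """Master-side aggregation of user counters reported by finished tasks."""

    def __init__(self) -> None:
        self.totals: Counter = Counter()
        self._seen: set[str] = set()

    def report(self, task_id: str, counters: dict[str, int]) -> None:
        # A backup task or a re-execution may report the same task again;
        # only the first report for a task id is counted.
        if task_id in self._seen:
            return
        self._seen.add(task_id)
        self.totals.update(counters)
```

For example, reporting `"map-7"` twice (once from the original and once from its backup) adds its counts only once.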

Hadoop

- Open-source implementation of MapReduce
- Used for:
  - Search (Yahoo, Amazon)
  - Log processing (Facebook, Yahoo, ContextWeb, Joost, Last.fm)
  - Recommendation systems (Facebook)
  - Data warehouse (Facebook, AOL)
  - Video and image analysis (New York Times, Eyealike)

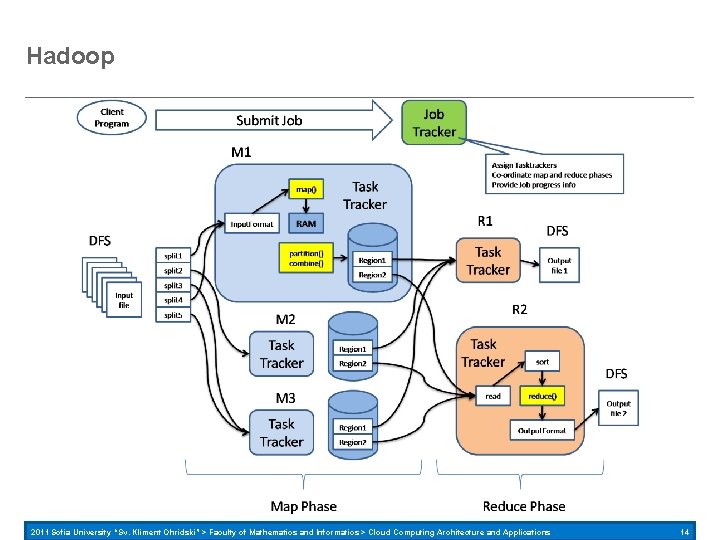

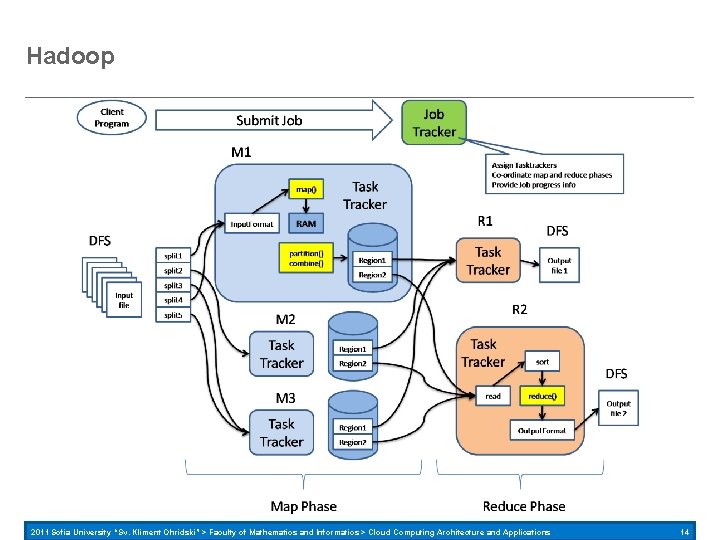

Hadoop

Hadoop

- Master/workers pattern
- JobTracker (master):
  - Keeps track of workers
  - Provides an interface for job submission
  - Distributes pieces of the input to available TaskTrackers
- TaskTracker (worker):
  - Executes map and reduce tasks
  - Extracts its input, parses key/value pairs and executes the Map function on them
  - The key/value pairs resulting from the Map function are buffered in memory
- Writing output:
  - Periodically the memory buffer is saved to local files
  - Key/value pairs are partitioned into one of R partitions, sorted by key and, if a combine function is given, combined before being written to local disk
  - When finished, the TaskTracker notifies the JobTracker
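The output step of a map TaskTracker can be sketched as: partition the buffered pairs, sort each partition by key, and optionally run the combine function. This is an illustrative Python sketch, not Hadoop's Java implementation; `spill` is a hypothetical name, and CRC32 stands in for the partitioner (Hadoop's default partitions by the key's hash code).

```python
import zlib
from itertools import groupby
from operator import itemgetter
from typing import Callable, Optional

Pair = tuple[str, int]


def spill(
    buffer: list[Pair],
    R: int,
    combiner: Optional[Callable[[str, list[int]], int]] = None,
) -> list[list[Pair]]:
    """Write the in-memory map buffer out as R key-sorted partitions."""
    partitions: list[list[Pair]] = [[] for _ in range(R)]
    for key, value in buffer:
        partitions[zlib.crc32(key.encode()) % R].append((key, value))
    result = []
    for part in partitions:
        part.sort(key=itemgetter(0))  # sort by key within the partition
        if combiner is not None:      # optional map-side combine
            part = [(k, combiner(k, [v for _, v in grp]))
                    for k, grp in groupby(part, key=itemgetter(0))]
        result.append(part)
    return result
```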

Hadoop

- After the map phase has finished, the JobTracker notifies the reduce TaskTrackers, which:
  - Read the map output files remotely
  - Sort the key/value pairs by key
  - For each key, pass the collected values to the Reduce function
  - Write key/aggregatedValue to the output file
  - One output file per reduce task
- When all tasks are completed, the JobTracker notifies the client program
- The JobTracker takes data locality into account when assigning tasks to TaskTrackers
- The JobTracker may execute the same task on several TaskTrackers (speculative execution) to improve performance
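Because every map output partition arrives already sorted by key, the reduce side can use a k-way merge instead of a full sort. The sketch below (`reduce_side` is a hypothetical name; all outputs are assumed to fit in memory) uses `heapq.merge` and then groups equal keys for the Reduce function.

```python
import heapq
from itertools import groupby
from operator import itemgetter
from typing import Callable

Pair = tuple[str, int]


def reduce_side(
    map_outputs: list[list[Pair]],
    reduce_fn: Callable[[str, list[int]], int],
) -> list[Pair]:
    """Merge already-sorted map outputs for one partition and apply Reduce."""
    # Each fetched map output is sorted by key, so a k-way merge keeps the
    # combined stream sorted without re-sorting everything.
    merged = heapq.merge(*map_outputs, key=itemgetter(0))
    return [(key, reduce_fn(key, [v for _, v in grp]))
            for key, grp in groupby(merged, key=itemgetter(0))]
```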

Hadoop – fault tolerance

- Each TaskTracker periodically sends a heartbeat to the JobTracker
- If a map TaskTracker fails, the JobTracker reassigns all splits assigned to the failed TaskTracker to another TaskTracker
- If a reduce TaskTracker fails, the JobTracker assigns the reduce task to another TaskTracker
- In the latest version of Hadoop, the JobTracker periodically saves checkpoints to a file
- When the JobTracker starts, it first checks for checkpoints and, if any are available, resumes from the saved state
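Heartbeat-based failure detection can be sketched as a table of last-seen times. `JobTrackerMonitor` and its `expiry_seconds` timeout are hypothetical; Hadoop's actual expiry interval is a configuration setting not given on the slide. Passing `now` explicitly keeps the example deterministic.

```python
import time
from typing import Optional


class JobTrackerMonitor:
    """Track TaskTracker heartbeats and find trackers that have gone silent."""

    def __init__(self, expiry_seconds: float) -> None:
        self.expiry = expiry_seconds                 # hypothetical timeout
        self.last_seen: dict[str, float] = {}
        self.assignments: dict[str, list[str]] = {}  # tracker -> task ids

    def heartbeat(self, tracker: str, now: Optional[float] = None) -> None:
        self.last_seen[tracker] = time.monotonic() if now is None else now
        self.assignments.setdefault(tracker, [])

    def assign(self, tracker: str, task_id: str) -> None:
        self.assignments.setdefault(tracker, []).append(task_id)

    def expire(self, now: Optional[float] = None) -> list[str]:
        """Drop trackers with stale heartbeats; return their tasks for reassignment."""
        now = time.monotonic() if now is None else now
        orphaned: list[str] = []
        for tracker, seen in list(self.last_seen.items()):
            if now - seen > self.expiry:
                del self.last_seen[tracker]
                orphaned.extend(self.assignments.pop(tracker, []))
        return orphaned
```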

Distributed File Systems

Google File System - assumptions

- High component failure rates
  - Inexpensive commodity components fail all the time
- A “modest” number of HUGE files
  - Just a few million
  - Each is 100 MB or larger; multi-GB files are typical
- Files are write-once, mostly appended to
  - Perhaps concurrently
- Large streaming reads
- High sustained throughput favored over low latency

Google File System – design decisions

- Files stored as chunks
  - Fixed size (64 MB)
- Reliability through replication
  - Each chunk replicated across 3+ chunkservers
- Single master to coordinate access and keep metadata
  - Simple centralized management
- No data caching
  - Little benefit due to large data sets and streaming reads
- Familiar interface, but a customized API
  - Simplify the problem; focus on Google apps
  - Add snapshot and record append operations
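With a fixed 64 MB chunk size, a client can turn a byte offset into a chunk index on its own before contacting the master (the GFS paper describes clients doing this translation). A minimal sketch with hypothetical helper names:

```python
CHUNK_SIZE = 64 * 1024 * 1024  # 64 MB fixed chunk size


def locate(offset: int) -> tuple[int, int]:
    """Translate a byte offset in a file into (chunk index, offset within chunk)."""
    return divmod(offset, CHUNK_SIZE)


def chunks_spanned(offset: int, length: int) -> range:
    """Chunk indexes touched by a read of `length` > 0 bytes starting at `offset`."""
    first = offset // CHUNK_SIZE
    last = (offset + length - 1) // CHUNK_SIZE
    return range(first, last + 1)


print(locate(200 * 1024 * 1024))                                   # (3, 8388608)
print(list(chunks_spanned(60 * 1024 * 1024, 10 * 1024 * 1024)))   # [0, 1]
```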

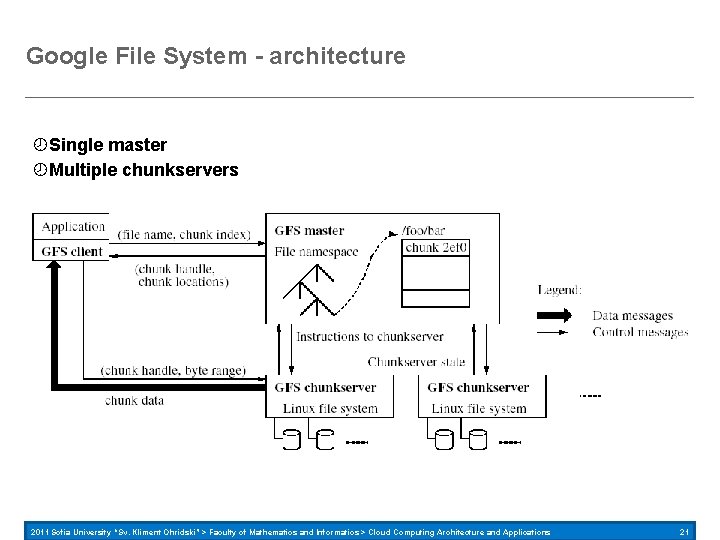

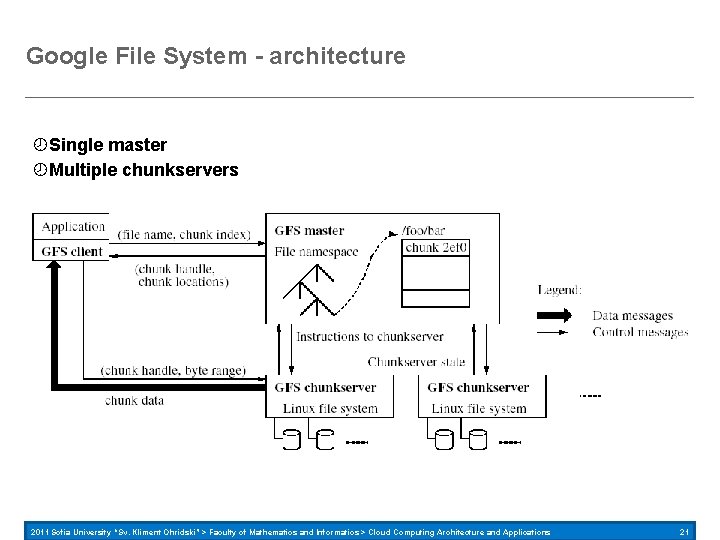

Google File System - architecture

- Single master
- Multiple chunkservers

Google File System - architecture

- A single master is a:
  - Single point of failure
  - Scalability bottleneck
- GFS solutions:
  - Shadow masters
  - Minimize master involvement:
    - Never move data through it; use it only for metadata, and cache metadata at clients
    - Large chunk size
    - The master delegates authority to primary replicas in data mutations (chunk leases)
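Caching metadata at clients is what keeps the single master off the data path: after the first lookup, reads of the same chunk go straight to a chunkserver. The sketch assumes a hypothetical `ask_master` callable in place of the real RPC and, unlike GFS, never expires cache entries.

```python
from typing import Callable

ChunkInfo = tuple[str, list[str]]  # (chunk handle, replica locations)


class MetadataCache:
    """Client-side cache so repeated reads of a chunk skip the master."""

    def __init__(self, ask_master: Callable[[str, int], ChunkInfo]) -> None:
        self._ask_master = ask_master  # hypothetical RPC to the master
        self._cache: dict[tuple[str, int], ChunkInfo] = {}
        self.master_calls = 0

    def lookup(self, filename: str, chunk_index: int) -> ChunkInfo:
        key = (filename, chunk_index)
        if key not in self._cache:
            self.master_calls += 1
            self._cache[key] = self._ask_master(filename, chunk_index)
        # The client then reads the data directly from one of the chunkservers.
        return self._cache[key]
```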

Google File System - metadata

- Global metadata is stored on the master:
  - File and chunk namespaces
  - Mapping from files to chunks
  - Locations of each chunk’s replicas
- All in memory (less than 64 bytes per 64 MB chunk)
  - Fast
  - Easily accessible
- The master has an operation log for persistent logging of critical metadata updates
  - Persistent on local disk
  - Replicated
  - Checkpoints for faster recovery
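Using the per-chunk bound on the slide, the master's metadata grows with the number of chunks rather than bytes. A quick calculation, assuming every chunk is full (`master_metadata_bytes` is a hypothetical helper):

```python
CHUNK_SIZE = 64 * 2**20    # 64 MB
BYTES_PER_CHUNK_META = 64  # upper bound per chunk, from the slide


def master_metadata_bytes(data_bytes: int) -> int:
    chunks = -(-data_bytes // CHUNK_SIZE)  # ceiling division
    return chunks * BYTES_PER_CHUNK_META


one_pib = 2**50
print(master_metadata_bytes(one_pib) / 2**30, "GiB")  # 1.0 GiB
```

So even a petabyte of file data needs only about a gigabyte of chunk metadata, which is why keeping it all in the master's memory is practical.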

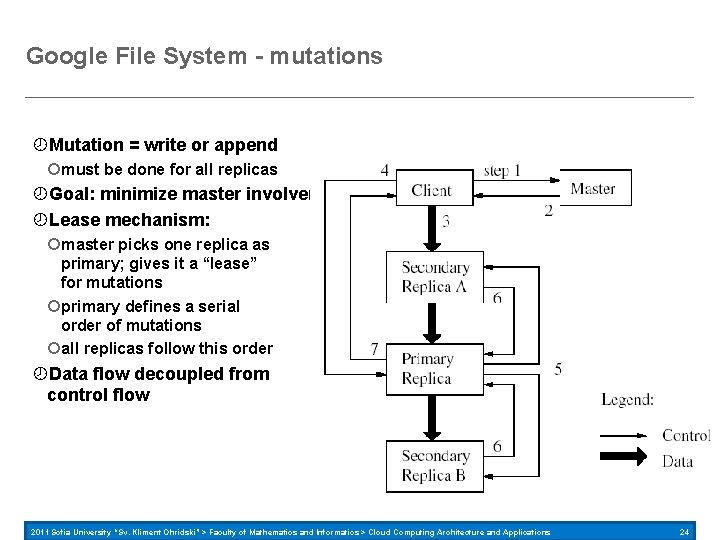

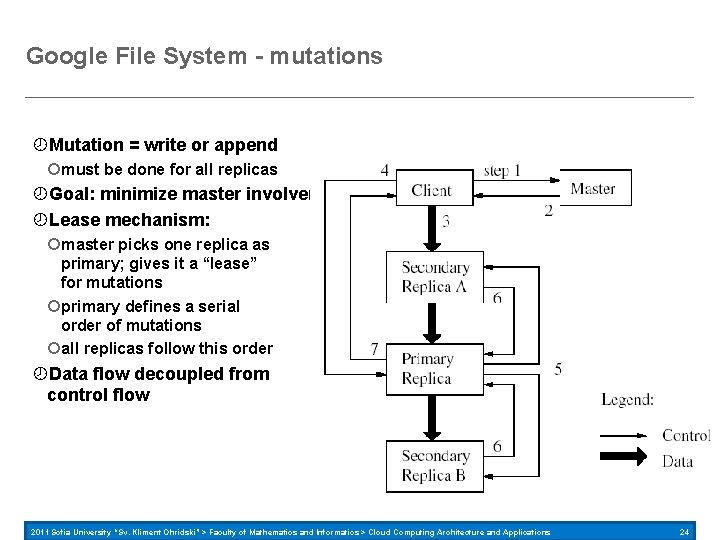

Google File System - mutations

- Mutation = write or append
  - Must be done for all replicas
- Goal: minimize master involvement
- Lease mechanism:
  - The master picks one replica as primary and gives it a “lease” for mutations
  - The primary defines a serial order of mutations
  - All replicas follow this order
- Data flow is decoupled from control flow
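The lease mechanism boils down to one replica handing out serial numbers and every replica applying mutations in that order, whatever order they arrive in. A hypothetical sketch of that idea (`Primary` and `Replica` are illustrative classes; lease expiry, data push and error handling are omitted):

```python
import itertools


class Primary:
    """Replica holding the chunk lease: assigns serial numbers to mutations."""

    def __init__(self) -> None:
        self._serial = itertools.count(1)

    def order(self, mutation: str) -> tuple[int, str]:
        return next(self._serial), mutation


class Replica:
    """Applies mutations strictly in the primary's serial order."""

    def __init__(self) -> None:
        self.applied: list[str] = []
        self._next = 1
        self._pending: dict[int, str] = {}

    def receive(self, serial: int, mutation: str) -> None:
        self._pending[serial] = mutation
        # Apply every mutation that is now contiguous with those already applied.
        while self._next in self._pending:
            self.applied.append(self._pending.pop(self._next))
            self._next += 1
```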

Google File System – atomic append

- The client specifies the data
- GFS appends it to the file atomically at least once
  - GFS picks the offset
  - Works for concurrent writers
- Used heavily by Google apps
  - e.g., for files that serve as multiple-producer/single-consumer queues
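The primary's decision for a record append can be sketched as follows. The one-quarter-of-a-chunk limit on record size comes from the GFS paper rather than this slide, and `record_append` is a hypothetical function. If a replica fails, the client retries the whole append, which is why the guarantee is "at least once" and replicas may contain duplicate records.

```python
CHUNK_SIZE = 64 * 2**20
MAX_RECORD = CHUNK_SIZE // 4  # record appends are limited to 1/4 of a chunk


def record_append(chunk_used: int, record_len: int) -> tuple[str, int]:
    """Primary-side decision for one record append on the file's last chunk."""
    if record_len > MAX_RECORD:
        raise ValueError("record too large for record append")
    if chunk_used + record_len > CHUNK_SIZE:
        # Pad the chunk to its full size (all replicas do the same) and tell
        # the client to retry on the next chunk.
        return ("retry_on_next_chunk", CHUNK_SIZE)
    # GFS, not the client, picks the offset: the current end of the chunk.
    return ("appended_at", chunk_used)
```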

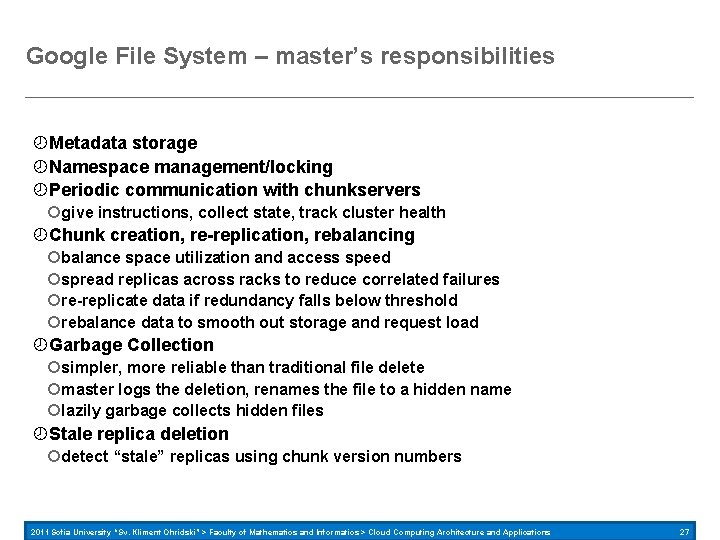

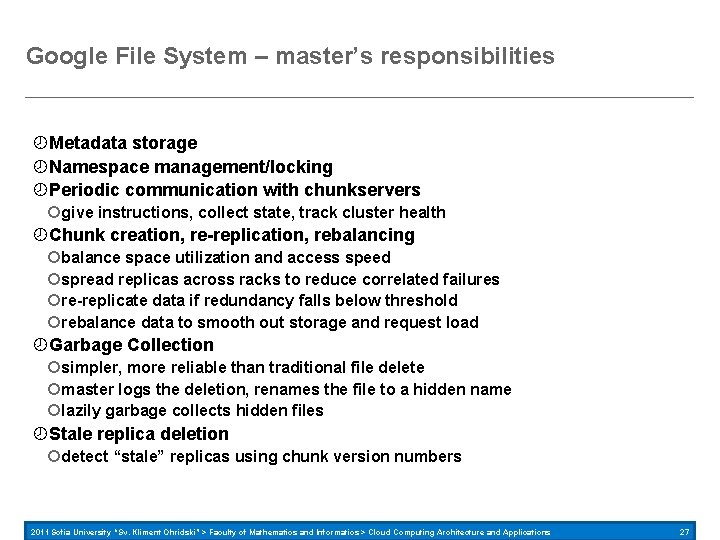

Google File System – master’s responsibilities

- Metadata storage
- Namespace management/locking
- Periodic communication with chunkservers
  - Give instructions, collect state, track cluster health
- Chunk creation, re-replication, rebalancing
  - Balance space utilization and access speed
  - Spread replicas across racks to reduce correlated failures
  - Re-replicate data if redundancy falls below a threshold
  - Rebalance data to smooth out storage and request load
- Garbage collection
  - Simpler and more reliable than traditional file deletion
  - The master logs the deletion and renames the file to a hidden name
  - Hidden files are garbage-collected lazily
- Stale replica deletion
  - Detect “stale” replicas using chunk version numbers
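Stale-replica detection compares each replica's chunk version with the master's. A minimal sketch, assuming the master bumps the version whenever it grants a new lease (as the GFS paper describes); `ReplicaReport`, `grant_lease` and `find_stale` are hypothetical names. Stale replicas are then left for garbage collection.

```python
from dataclasses import dataclass


@dataclass
class ReplicaReport:
    chunkserver: str
    chunk_handle: str
    version: int


def grant_lease(master_versions: dict[str, int], handle: str) -> None:
    # A new lease means a new version; replicas that miss it fall behind.
    master_versions[handle] = master_versions.get(handle, 0) + 1


def find_stale(master_versions: dict[str, int],
               reports: list[ReplicaReport]) -> list[ReplicaReport]:
    """Replicas whose version is behind the master's have missed mutations."""
    return [r for r in reports if r.version < master_versions.get(r.chunk_handle, 0)]
```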

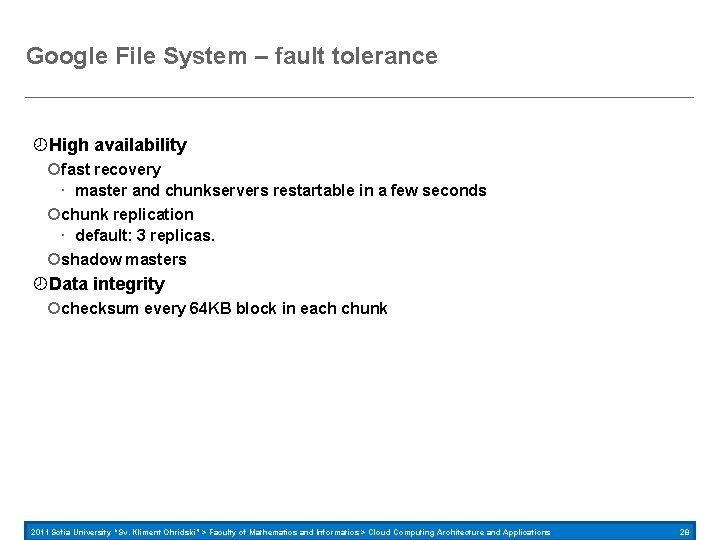

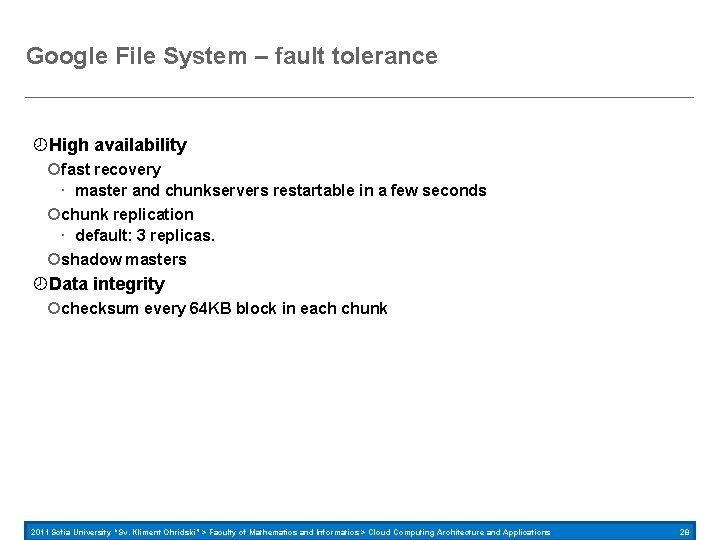

Google File System – fault tolerance

- High availability
  - Fast recovery: master and chunkservers are restartable in a few seconds
  - Chunk replication: 3 replicas by default
  - Shadow masters
- Data integrity
  - A checksum for every 64 KB block in each chunk
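A sketch of per-block checksumming for a chunk, assuming CRC32 as the 32-bit checksum (the slide does not name the algorithm). On a read, only the 64 KB blocks overlapping the requested range are verified; the function names are hypothetical.

```python
import zlib

BLOCK = 64 * 1024  # 64 KB checksum blocks


def checksums(chunk: bytes) -> list[int]:
    """One 32-bit CRC for every 64 KB block of a chunk."""
    return [zlib.crc32(chunk[i:i + BLOCK]) for i in range(0, len(chunk), BLOCK)]


def verify_read(chunk: bytes, stored: list[int], offset: int, length: int) -> bytes:
    """Verify only the blocks overlapping the requested range before returning data."""
    first, last = offset // BLOCK, (offset + length - 1) // BLOCK
    for b in range(first, last + 1):
        if zlib.crc32(chunk[b * BLOCK:(b + 1) * BLOCK]) != stored[b]:
            raise OSError(f"checksum mismatch in block {b}")
    return chunk[offset:offset + length]
```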

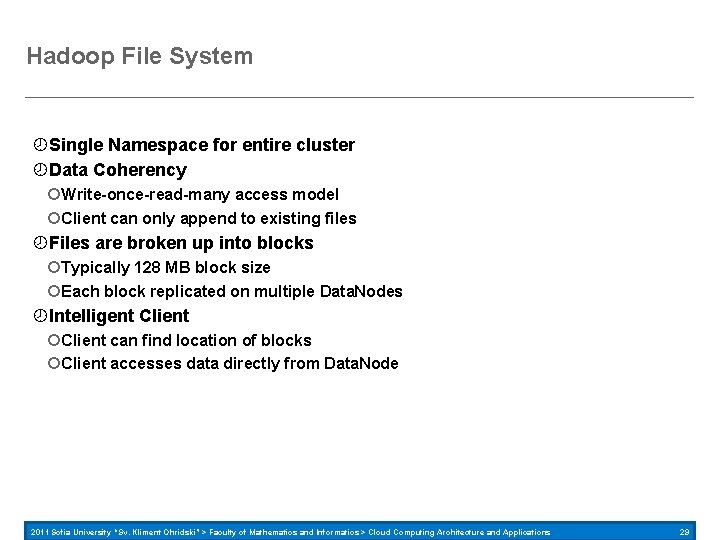

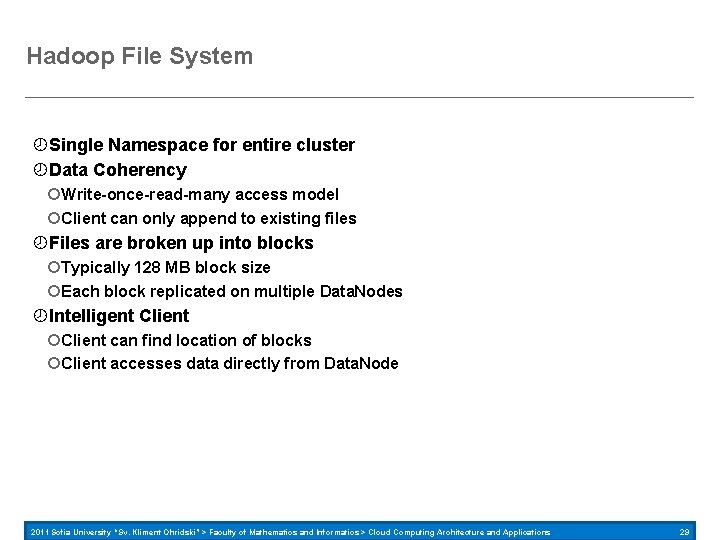

Hadoop File System

- Single namespace for the entire cluster
- Data coherency
  - Write-once-read-many access model
  - Clients can only append to existing files
- Files are broken up into blocks
  - Typically 128 MB block size
  - Each block is replicated on multiple DataNodes
- Intelligent client
  - The client can find the location of blocks
  - The client accesses data directly from the DataNode
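How a file maps onto blocks, assuming the 128 MB block size from the slide and a replication factor of 3 (an assumption; the factor is configurable per file). `block_layout` is a hypothetical helper.

```python
BLOCK_SIZE = 128 * 2**20  # 128 MB, the typical block size on the slide
REPLICATION = 3           # assumption: replication factor of 3


def block_layout(file_size: int) -> list[int]:
    """Sizes of the blocks a file is broken into; only the last may be partial."""
    full, rest = divmod(file_size, BLOCK_SIZE)
    return [BLOCK_SIZE] * full + ([rest] if rest else [])


sizes = block_layout(300 * 2**20)
print([s // 2**20 for s in sizes])                                     # [128, 128, 44]
print(len(sizes) * REPLICATION, "block replicas stored on DataNodes")  # 9
```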

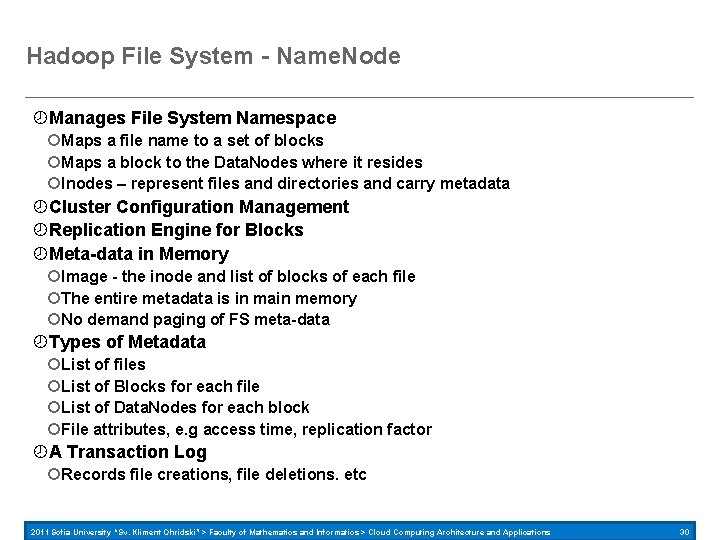

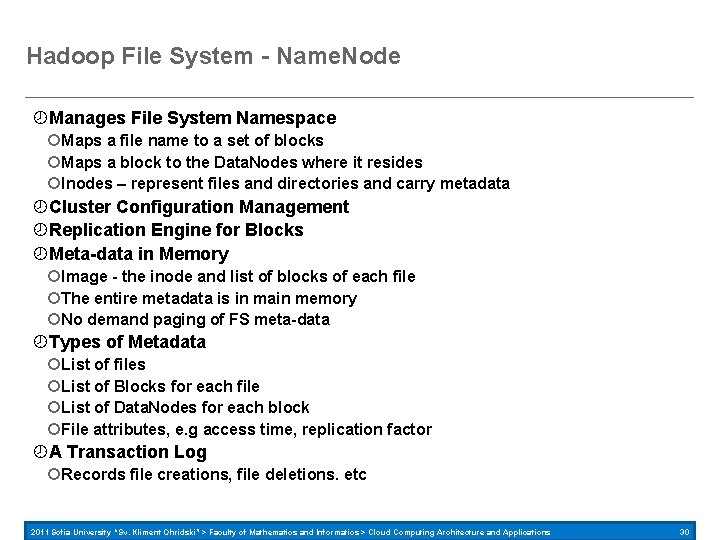

Hadoop File System - NameNode

- Manages the file system namespace
  - Maps a file name to a set of blocks
  - Maps a block to the DataNodes where it resides
  - Inodes represent files and directories and carry metadata
- Cluster configuration management
- Replication engine for blocks
- Metadata in memory
  - Image: the inode and list of blocks of each file
  - The entire metadata is in main memory
  - No demand paging of file system metadata
- Types of metadata
  - List of files
  - List of blocks for each file
  - List of DataNodes for each block
  - File attributes, e.g., access time, replication factor
- A transaction log
  - Records file creations, file deletions, etc.
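The NameNode's two central mappings, plus a transaction log of namespace changes, can be sketched with plain dictionaries. `NameNodeSketch` and the operation tuples are hypothetical; the real NameNode is Java code with inodes, permissions and quotas.

```python
from collections import defaultdict


class NameNodeSketch:
    """In-memory namespace: file -> blocks and block -> DataNodes."""

    def __init__(self) -> None:
        self.file_blocks: dict[str, list[str]] = {}
        self.block_locations: dict[str, set[str]] = defaultdict(set)
        self.attrs: dict[str, dict] = {}
        self.journal: list[tuple] = []  # transaction log of namespace changes

    def create(self, path: str, replication: int = 3) -> None:
        self.file_blocks[path] = []
        self.attrs[path] = {"replication": replication}
        self.journal.append(("create", path, replication))

    def add_block(self, path: str, block_id: str, datanodes: list[str]) -> None:
        self.file_blocks[path].append(block_id)
        self.block_locations[block_id].update(datanodes)
        self.journal.append(("add_block", path, block_id))  # locations not logged

    def delete(self, path: str) -> None:
        for block_id in self.file_blocks.pop(path):
            self.block_locations.pop(block_id, None)
        self.attrs.pop(path)
        self.journal.append(("delete", path))

    def locate(self, path: str) -> list[tuple[str, set[str]]]:
        return [(b, self.block_locations[b]) for b in self.file_blocks[path]]
```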

Hadoop File System - DataNode

- A block server
  - Stores data in the local file system (e.g., ext3)
  - Stores the metadata of a block (e.g., CRC)
  - Serves data and metadata to clients
- Block report
  - Periodically sends a report of all existing blocks to the NameNode
- Facilitates pipelining of data
  - Forwards data to other specified DataNodes
- Image
  - The checkpoint file is never changed by the NameNode; it is replaced by the next checkpoint
  - At startup, the NameNode initializes the namespace from the image and replays the journal
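Block reports matter because replica locations are not part of the NameNode's persistent checkpoint; they are rebuilt from what DataNodes report. A hypothetical sketch of folding one full report into the block-to-DataNode map (`apply_block_report` is an illustrative name):

```python
def apply_block_report(
    block_locations: dict[str, set[str]],
    datanode: str,
    reported_blocks: set[str],
) -> None:
    """Make the block -> DataNode map agree with one DataNode's full report."""
    # Forget replicas that this DataNode no longer reports...
    for block_id, nodes in block_locations.items():
        if block_id not in reported_blocks:
            nodes.discard(datanode)
    # ...and record every replica that it does report.
    for block_id in reported_blocks:
        block_locations.setdefault(block_id, set()).add(datanode)
```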

Hadoop File System – block placement

- Current strategy
  - One replica on the local node (the writer's node)
  - Second replica on a random remote rack
  - Third replica on the same remote rack, on a different node
  - Additional replicas are placed randomly
- Clients read from the nearest replica
- The NameNode detects DataNode failures
  - Chooses new DataNodes for new replicas
  - Balances disk usage
  - Balances communication traffic to DataNodes
- Balancer
  - The block placement strategy does not take disk space into account
  - The balancer moves replicated blocks to balance disk space usage
- Replication
  - The NameNode ensures that blocks are neither under- nor over-replicated
  - Ensures an even distribution of replicated blocks
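The placement rules above can be expressed as a small function. This is a hypothetical sketch (`choose_targets` is an illustrative name), assuming the writer is itself a DataNode and that the rack topology is known as a dictionary; the real policy runs inside the NameNode.

```python
import random
from typing import Optional


def choose_targets(
    writer: str,
    racks: dict[str, list[str]],  # rack id -> DataNodes on it (assumed topology)
    replication: int = 3,
    rng: Optional[random.Random] = None,
) -> list[str]:
    if rng is None:
        rng = random.Random()
    rack_of = {node: rack for rack, nodes in racks.items() for node in nodes}
    targets = [writer]  # 1st replica: the writer's own node
    remote_racks = [r for r in racks if r != rack_of[writer]]
    if replication >= 2 and remote_racks:
        remote = rng.choice(remote_racks)
        second = rng.choice(racks[remote])  # 2nd: random node on a remote rack
        targets.append(second)
        same_rack = [n for n in racks[remote] if n != second]
        if replication >= 3 and same_rack:
            targets.append(rng.choice(same_rack))  # 3rd: other node, same remote rack
    # Any additional replicas go to randomly chosen remaining nodes.
    others = [n for n in rack_of if n not in targets]
    rng.shuffle(others)
    targets += others[:max(0, replication - len(targets))]
    return targets
```

Putting two of the three replicas on one remote rack limits cross-rack write traffic while still surviving the loss of a whole rack.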

Hadoop File System – data correctness

- Use checksums to validate data
  - Use CRC32
- File creation
  - The client computes a checksum per 512 bytes
  - The DataNode stores the checksums
- File access
  - The client retrieves the data and checksums from the DataNode
  - If validation fails, the client tries other replicas
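The create-time and read-time halves of checksumming, as a hypothetical sketch with CRC32 over 512-byte chunks (the values from the slide). Each replica is given as a (DataNode, data, stored checksums) tuple; the function names are illustrative.

```python
import zlib

BYTES_PER_CHECKSUM = 512


def compute_checksums(data: bytes) -> list[int]:
    """Client side at file creation: one CRC32 per 512 bytes of data."""
    return [zlib.crc32(data[i:i + BYTES_PER_CHECKSUM])
            for i in range(0, len(data), BYTES_PER_CHECKSUM)]


def read_block(replicas: list[tuple[str, bytes, list[int]]]) -> tuple[str, bytes]:
    """Client side at read time: validate a replica, fall back to the next on mismatch."""
    for datanode, data, stored in replicas:
        if compute_checksums(data) == stored:
            return datanode, data
    raise OSError("all replicas failed checksum validation")
```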

Hadoop File System – NameNode failure

- A single point of failure
- The transaction log is stored in multiple directories
  - A directory on the local file system
  - A directory on a remote file system (NFS/CIFS)
- A real high-availability (HA) solution still needs to be developed
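Storing the transaction log in several directories means every record is written to each copy before the change is acknowledged. A minimal sketch; `log_edit` and the file name `edits.log` are hypothetical, and temporary directories stand in for the local and the NFS directory.

```python
import os
import tempfile


def log_edit(record: str, directories: list[str], name: str = "edits.log") -> None:
    """Append one transaction to the log copy in every configured directory."""
    for d in directories:
        with open(os.path.join(d, name), "a", encoding="utf-8") as f:
            f.write(record + "\n")
            f.flush()
            os.fsync(f.fileno())  # make the record durable before acknowledging


if __name__ == "__main__":
    local_dir, nfs_dir = tempfile.mkdtemp(), tempfile.mkdtemp()
    log_edit("create /logs/a", [local_dir, nfs_dir])
```

If one directory is lost with its disk, the other copy still holds the complete log.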

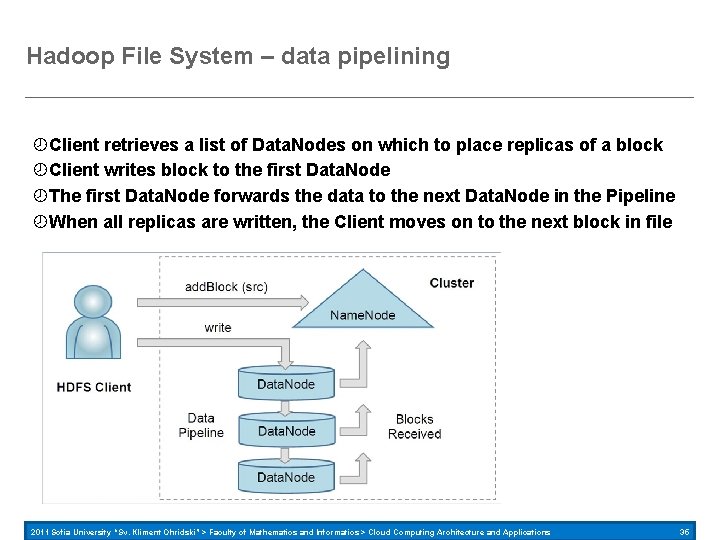

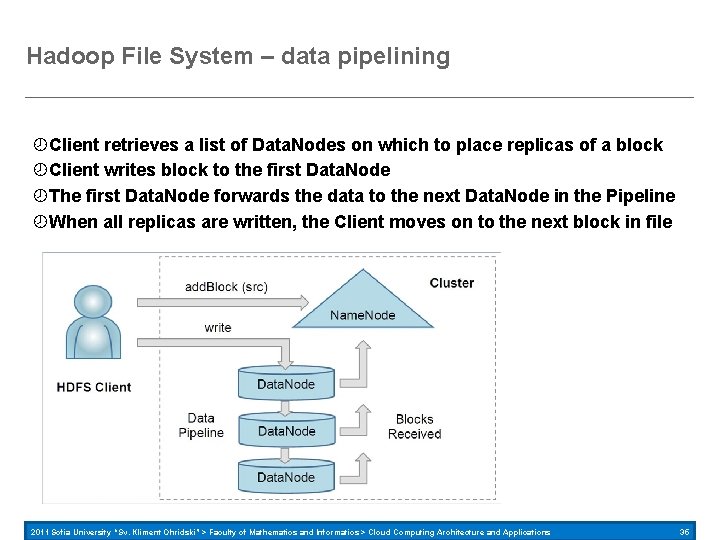

Hadoop File System – data pipelining

- The client retrieves a list of DataNodes on which to place replicas of a block
- The client writes the block to the first DataNode
- The first DataNode forwards the data to the next DataNode in the pipeline
- When all replicas are written, the client moves on to the next block in the file
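The pipeline can be sketched as each DataNode storing the block and forwarding it to the rest of the list. In this hypothetical sketch whole blocks are forwarded at once and the targets come from a `get_targets` callable standing in for the NameNode; real HDFS streams a block through the pipeline in smaller packets.

```python
from typing import Callable


class DataNode:
    def __init__(self, name: str) -> None:
        self.name = name
        self.blocks: dict[str, bytes] = {}

    def receive(self, block_id: str, data: bytes, downstream: list["DataNode"]) -> list[str]:
        """Store the block locally, forward it down the pipeline, return the acks."""
        self.blocks[block_id] = data
        acks = downstream[0].receive(block_id, data, downstream[1:]) if downstream else []
        return [self.name] + acks


def client_write(file_blocks: list[bytes],
                 get_targets: Callable[[int], list[DataNode]]) -> None:
    for i, data in enumerate(file_blocks):
        targets = get_targets(i)  # hypothetical NameNode call
        # The client sends only to the first DataNode; the rest is forwarded.
        acks = targets[0].receive(f"blk_{i}", data, targets[1:])
        if len(acks) != len(targets):
            raise OSError(f"pipeline for block {i} incomplete")
        # Only after every replica is written does the client move to the next block.
```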

Hadoop File System – CheckpointNode

- CheckpointNode
  - Copies the FSImage and transaction log from the NameNode to a temporary directory
  - Merges the FSImage and transaction log into a new FSImage in the temporary directory
  - Uploads the new FSImage to the NameNode
  - The transaction log on the NameNode is purged
- BackupNode
  - Creates regular checkpoints
  - Maintains an in-memory image of the file system namespace, always synchronized with the NameNode
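The CheckpointNode's merge step amounts to replaying the transaction log over a copy of the image; the NameNode does the same replay at startup. A hypothetical sketch using simple operation tuples as a toy log (`apply` and `checkpoint` are illustrative names, and the image holds only file-to-block lists):

```python
def apply(image: dict[str, list[str]], op: tuple) -> None:
    """Replay one logged namespace operation onto an image."""
    kind, path, *rest = op
    if kind == "create":
        image[path] = []
    elif kind == "add_block":
        image[path].append(rest[0])
    elif kind == "delete":
        image.pop(path, None)
    else:
        raise ValueError(f"unknown operation {kind!r}")


def checkpoint(fsimage: dict[str, list[str]], edit_log: list[tuple]) -> dict[str, list[str]]:
    """Merge a copy of the image with the log into a new image."""
    new_image = {path: list(blocks) for path, blocks in fsimage.items()}  # work on a copy
    for op in edit_log:
        apply(new_image, op)
    return new_image
```

After uploading, the NameNode would replace its image with the result and purge (e.g., `edit_log.clear()`) the merged log.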

END OF LECTURE #4
