Cloud Computing and the MapReduce Paradigm

Helmut Neukirchen
helmut@hi.is
Faculty of Industrial Engineering, Mechanical Engineering and Computer Science
School of Engineering and Natural Sciences
University of Iceland

Cloud Computing: Introduction
• Historic example: Amazon invested in hardware to handle the Christmas shopping peak.
  – Outside the Christmas season, 90% of the hardware is idle.
  – Amazon started to sell the idle CPU time.
• Cloud computing: provide computer resources on demand and access them via a network. (The Internet is often visualised as a cloud.)
• Just hype? It depends!

Armbrust et al.: “Above the Clouds: A Berkeley View of Cloud Computing”, Technical Report EECS-2009-28, EECS Department, University of California, Berkeley.

Cloud Computing: Advantages
• Users do not need to invest in hardware.
  – “Pay as you go”: just pay for actual usage.
• Users can scale on demand.
  – 1 CPU for 100 h costs the same as 100 CPUs for 1 h.
• Cheaper:
  – Economy of scale (hardware prices, administration cost),
  – Averaging/multiplexing (better utilisation of hardware),
  – Energy (move to where energy is cheap).

Cloud Computing: Disadvantages
• Cloud computing is not suitable
  – if you have already invested in hardware.
    • You have the hardware, so use it.
  – if you are as big as the cloud computing providers.
    • Then you can do it more cheaply than a cloud provider.
  – if privacy/business secrets are an issue.
    • Privacy laws may forbid transmitting data to the cloud.
    • Business secrets should not leave your company.
  – if you want to be in full control of your IT.
    • Danger of the cloud provider having technical problems.

Elastic Infrastructure as a Service
• Cloud offering Infrastructure as a Service (IaaS):
  – Provides virtual machines (= CPU and RAM) and storage servers (= hard disk).
    • Possible to install your own operating system and software on them.
  – Virtualisation:
    • You do not know on which hardware your virtual machine executes.
    • You do not know on which hardware your data is stored.
• Scalability by “elasticity”: you can get as many resources as you need.
  – Virtual machines can be started/terminated on demand.
  – Data can be stored/disposed of as needed.

Amazon Cloud Example: Services
• Elastic Compute Cloud (EC2): virtual machines.
  – Use a predefined image (hard disk partition dump containing the operating system to boot and all needed software) or upload your own.
  – No persistent storage: once the virtual machine is stopped, everything written to the file system of the image is lost.
• Elastic Block Store (EBS): stores raw blocks (like a hard disk).
  – For example, to store EC2 images.
• Simple Storage Service (S3): storage as objects/files.
  – For example, to store application data.

Amazon Cloud Example: Practical issues
• Costs:
  – Free Usage Tier for trying out.
  – Small EC2 instance: $0.09/h (http://aws.amazon.com/ec2/#pricing)
  – S3: $0.125 per GB and month (http://aws.amazon.com/s3/#pricing)
• Create a virtual machine instance via the Web interface or API.
  – Log in to the instance:
    • ssh (Linux instance).
  – Don’t forget to shut down the instance when it is no longer needed!
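The “pay as you go” arithmetic can be sketched in a few lines of Python. This is only an illustration: the prices are the ones quoted on the slide and will differ today, the function names are hypothetical, and billing details such as rounding up to full hours are ignored.

```python
# Prices as quoted on the slide; actual prices vary by region and over time.
EC2_SMALL_USD_PER_HOUR = 0.09
S3_USD_PER_GB_MONTH = 0.125


def compute_cost(instances: int, hours: float,
                 usd_per_hour: float = EC2_SMALL_USD_PER_HOUR) -> float:
    """Cost of running `instances` virtual machines for `hours` hours each."""
    return instances * hours * usd_per_hour


def storage_cost(gigabytes: float, months: float,
                 usd_per_gb_month: float = S3_USD_PER_GB_MONTH) -> float:
    """Cost of keeping `gigabytes` GB in object storage for `months` months."""
    return gigabytes * months * usd_per_gb_month


# Scaling on demand: 1 instance for 100 h costs the same as 100 instances for 1 h.
print(compute_cost(1, 100), compute_cost(100, 1))
# Storing 1 TB (taken as 1000 GB here) for one month.
print(storage_cost(1000, 1))
```

Under this simplified model only the product of instance count and running time matters, which is why scaling out does not cost more than running longer.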

Amazon Cloud: Why use it at HÍ (University of Iceland)?
• Why use, e.g., Amazon EC2 if we have the Nordic High Performance Computing cluster (3456 cores, 72 TByte storage)?
• You are superuser on your virtual machine:
  – You can install whatever software you need on your own!
• Amazon grants for students, teachers and researchers:
  – Funding in the form of credit for Amazon Cloud usage.
  – Easy to apply, easy to get!
  – http://aws.amazon.com/education/

MapReduce: Introduction
• MapReduce is used by Google to process their data:
  – >20 petabytes (1 PB = 1024 terabytes) per day [as of 2008].
• Before data can be processed, it needs to be stored.
• Google uses ordinary desktop PCs instead of reliable, expensive server and storage hardware.
  – Cheap, but will fail more often.
  – The Google File System (GFS) is designed to tolerate failures.
• Understanding GFS helps in understanding MapReduce.

Google File System (GFS)
• Internally, file data is stored in 64 MB chunks.
  – Each chunk is stored as an ordinary file in a Linux file system.
• Hundreds of nodes act as GFS chunkservers.
  – Each is responsible for storing a subset of all chunks on its local hard disk.
  – Fault tolerance by replication: each chunk is stored three times (on different chunkservers).

Ghemawat, Gobioff, Leung: “The Google File System”, ACM Symposium on Operating Systems Principles (SOSP), 2003.
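The chunking and replication idea can be illustrated with a small Python sketch. The chunk size and replica count come from the slide; the round-robin placement and the names `chunk_count` and `place_replicas` are assumptions made for illustration only. The real GFS master chooses chunkservers using criteria such as disk utilisation, which are not modelled here.

```python
from typing import Dict, List

CHUNK_SIZE = 64 * 1024 * 1024  # 64 MB, as stated on the slide
REPLICAS = 3                   # each chunk is stored three times


def chunk_count(file_size: int) -> int:
    """Number of 64 MB chunks needed for a file of `file_size` bytes."""
    return (file_size + CHUNK_SIZE - 1) // CHUNK_SIZE  # integer ceiling


def place_replicas(num_chunks: int, servers: List[str],
                   replicas: int = REPLICAS) -> Dict[int, List[str]]:
    """Assign every chunk to `replicas` different chunkservers.

    Assumption: simple round-robin placement, not the real GFS policy.
    """
    if len(servers) < replicas:
        raise ValueError("need at least as many chunkservers as replicas")
    placement = {}
    for chunk in range(num_chunks):
        placement[chunk] = [servers[(chunk + r) % len(servers)]
                            for r in range(replicas)]
    return placement


servers = ["cs0", "cs1", "cs2", "cs3", "cs4"]  # hypothetical chunkserver names
n = chunk_count(200 * 1024 * 1024)             # a 200 MB file
for chunk, holders in place_replicas(n, servers).items():
    print(chunk, holders)
```

Because every chunk lives on three different nodes, the loss of a single chunkserver leaves two copies of each of its chunks available.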

MapReduce
• Parallel/distributed processing to achieve speed-up of computations on huge data sets.
• MapReduce programming model/paradigm:
  – Break the input data into a number of smaller chunks.
  – Process each chunk in parallel and independently of the other chunks, producing intermediary results based on that chunk only (map).
    • Move the job to where the data is stored: do the processing on a node where the data is stored (i.e. one of the three associated GFS chunkservers). Massive speed-up in comparison to traditional approaches with central storage.
  – Combine the intermediary results into a final result (reduce).
• MapReduce is only applicable to problems that can be broken down into independent sub-problems whose results just need to be joined afterwards.
• The application developer only needs to provide the corresponding map and reduce functions; the MapReduce infrastructure takes care of the rest.

Dean, Ghemawat: “MapReduce: Simplified Data Processing on Large Clusters”, Proceedings of the 6th Symposium on Operating Systems Design and Implementation (OSDI), 2004.
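“Move the job to where the data is stored” can be pictured as a scheduling decision. The following Python sketch is a simplified assumption, not the actual Google or Hadoop scheduler: a greedy loop gives each chunk to an idle worker that already holds a replica, and falls back to any idle worker otherwise. The function name `assign_map_tasks` and all node names are hypothetical.

```python
from typing import Dict, List, Tuple


def assign_map_tasks(chunk_locations: Dict[str, List[str]],
                     idle_workers: List[str]) -> Dict[str, Tuple[str, bool]]:
    """Greedy, locality-aware assignment of map tasks.

    Returns {chunk: (worker, is_local)}. Each idle worker gets at most
    one task; chunks left over wait until a worker becomes idle again.
    """
    free = list(idle_workers)
    assignment = {}
    for chunk, holders in chunk_locations.items():
        if not free:
            break
        # Prefer a worker that stores a replica of this chunk locally.
        local = [w for w in free if w in holders]
        worker = local[0] if local else free[0]
        free.remove(worker)
        assignment[chunk] = (worker, bool(local))
    return assignment


chunk_locations = {            # chunk -> the three nodes holding a replica
    "c0": ["n1", "n2", "n3"],
    "c1": ["n2", "n3", "n4"],
    "c2": ["n1", "n2", "n3"],
}
print(assign_map_tasks(chunk_locations, ["n4", "n1", "n5"]))
```

In this example two of the three map tasks read their input from the local disk; only the third has to fetch its chunk over the network.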

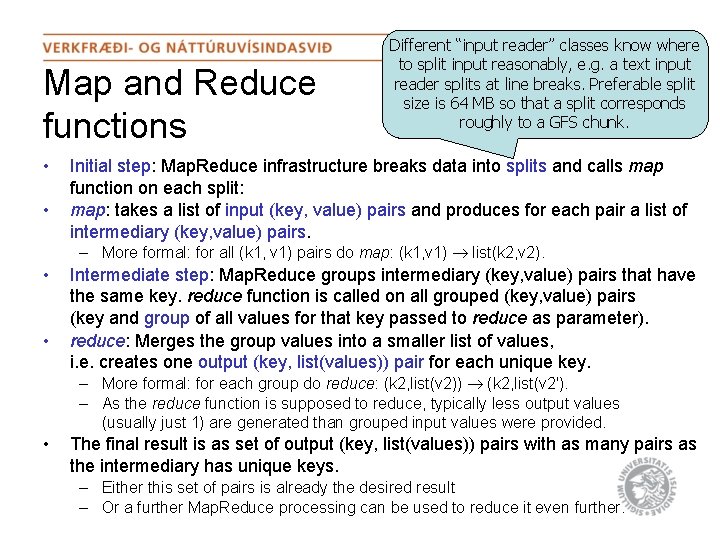

Map and Reduce functions
• Initial step: the MapReduce infrastructure breaks the data into splits and calls the map function on each split.
  – Different “input reader” classes know where to split the input reasonably, e.g. a text input reader splits at line breaks.
  – The preferable split size is 64 MB, so that a split corresponds roughly to a GFS chunk.
• map: takes a list of input (key, value) pairs and produces for each pair a list of intermediary (key, value) pairs.
  – More formally: for all (k1, v1) pairs do map: (k1, v1) → list(k2, v2).
• Intermediate step: MapReduce groups the intermediary (key, value) pairs that have the same key.
• The reduce function is called for each group (the key and the group of all values for that key are passed to reduce as parameters).
• reduce: merges the group of values into a smaller list of values, i.e. creates one output (key, list(values)) pair for each unique key.
  – More formally: for each group do reduce: (k2, list(v2)) → (k2, list(v2')).
  – As the reduce function is supposed to reduce, typically fewer output values (usually just 1) are generated than grouped input values were provided.
• The final result is a set of output (key, list(values)) pairs, with as many pairs as the intermediary result has unique keys.
  – Either this set of pairs is already the desired result,
  – or a further MapReduce pass can be used to reduce it even further.
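The three steps (map, grouping by key, reduce) can be sketched as a tiny single-process driver in Python. It only mirrors the signatures given above and does nothing in parallel; `run_mapreduce` and the temperature records are hypothetical and used purely for illustration.

```python
from collections import defaultdict
from typing import Callable, Dict, Hashable, Iterable, List, Tuple


def run_mapreduce(
    inputs: Iterable[Tuple[Hashable, object]],
    map_fn: Callable[[Hashable, object], List[Tuple[Hashable, object]]],
    reduce_fn: Callable[[Hashable, List[object]], List[object]],
) -> Dict[Hashable, List[object]]:
    """Sequential sketch of map: (k1, v1) -> list(k2, v2)
    and reduce: (k2, list(v2)) -> list(v2')."""
    # Map step: every input pair yields a list of intermediary pairs.
    intermediate = []
    for k1, v1 in inputs:
        intermediate.extend(map_fn(k1, v1))
    # Intermediate step: group the intermediary values by key.
    groups = defaultdict(list)
    for k2, v2 in intermediate:
        groups[k2].append(v2)
    # Reduce step: one output (key, list(values)) pair per unique key.
    return {k2: reduce_fn(k2, values) for k2, values in groups.items()}


# Hypothetical example: maximum temperature per year from "year,temp" records.
def map_fn(offset, record):
    year, temp = record.split(",")
    return [(year, int(temp))]


def reduce_fn(year, temps):
    return [max(temps)]


records = [(0, "2010,12"), (1, "2011,7"), (2, "2010,19"), (3, "2011,15")]
print(run_mapreduce(records, map_fn, reduce_fn))
```

Four input pairs produce four intermediary pairs, which the grouping step collapses into two groups; reduce then shrinks each group to a single value.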

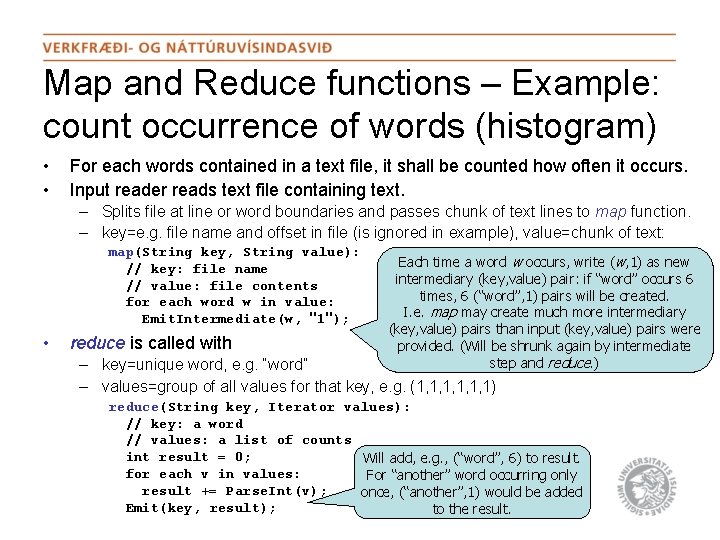

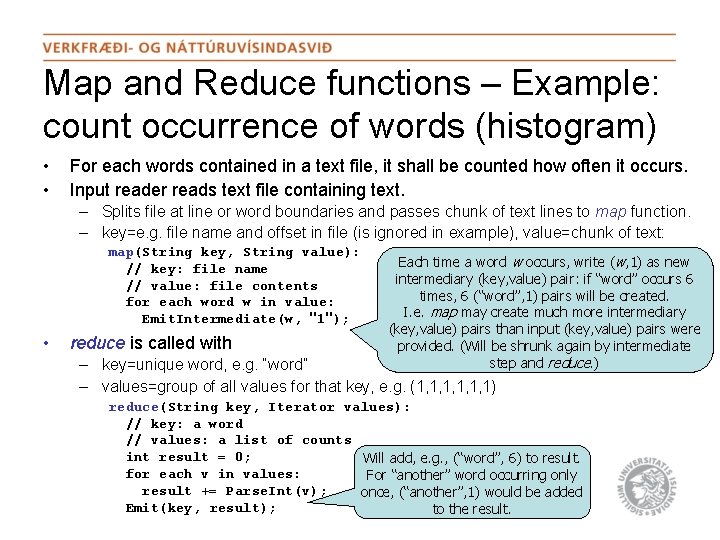

Map and Reduce functions – Example: count occurrences of words (histogram)
• For each word contained in a text file, count how often it occurs.
• The input reader reads the text file.
  – It splits the file at line or word boundaries and passes a chunk of text lines to the map function.
• map is called with
  – key = e.g. file name and offset in file (ignored in this example),
  – value = chunk of text:

    map(String key, String value):
      // key: file name
      // value: file contents
      for each word w in value:
        EmitIntermediate(w, "1");

  – Each time a word w occurs, map writes (w, 1) as a new intermediary (key, value) pair: if “word” occurs 6 times, 6 (“word”, 1) pairs will be created. I.e. map may create many more intermediary (key, value) pairs than input (key, value) pairs were provided. (They will be shrunk again by the intermediate step and reduce.)
• reduce is called with
  – key = unique word, e.g. “word”,
  – values = group of all values for that key, e.g. (1, 1, 1, 1, 1, 1):

    reduce(String key, Iterator values):
      // key: a word
      // values: a list of counts
      int result = 0;
      for each v in values:
        result += ParseInt(v);
      Emit(key, result);

  – This will add, e.g., (“word”, 6) to the result. For “another” word occurring only once, (“another”, 1) would be added to the result.
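For readers who want to run the word-count example, here is a minimal single-process Python sketch of the same map and reduce functions. The tokenisation (lower-casing, splitting on non-word characters) and the helper `word_count` that stands in for the MapReduce infrastructure are assumptions; the pseudocode above does not specify them.

```python
import re
from collections import defaultdict


def map_words(key, value):
    """key: file name/offset (ignored); value: chunk of text.
    Emits one (word, "1") pair per word occurrence."""
    for word in re.findall(r"\w+", value.lower()):
        yield (word, "1")


def reduce_counts(key, values):
    """key: a word; values: the list of "1" strings emitted for that word."""
    result = 0
    for v in values:
        result += int(v)
    return (key, result)


def word_count(splits):
    """Stand-in for the infrastructure: map each split, group by word, reduce."""
    groups = defaultdict(list)
    for key, value in splits:
        for word, one in map_words(key, value):
            groups[word].append(one)
    return dict(reduce_counts(word, ones) for word, ones in groups.items())


splits = [("file.txt:0", "word another word"),
          ("file.txt:18", "word word word word")]
print(word_count(splits))
```

The two splits could be mapped on different nodes; the grouping step brings all six (“word”, "1") pairs together before reduce sums them.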

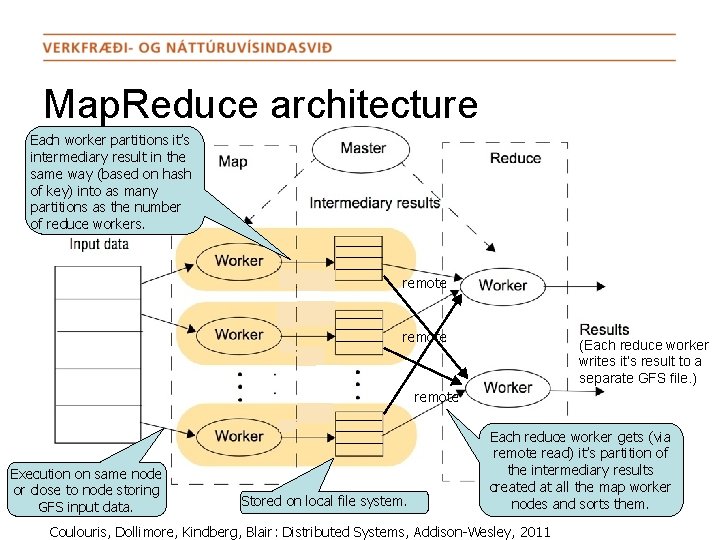

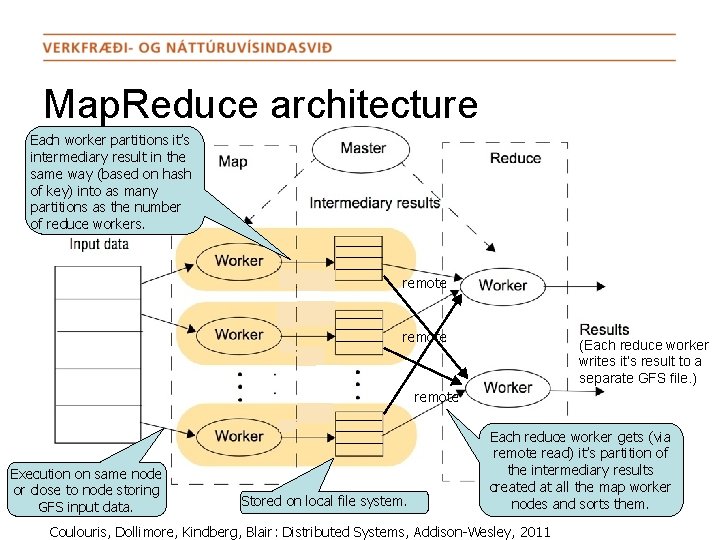

MapReduce architecture
• Map workers execute on the same node as, or close to, the node storing the GFS input data.
• Each map worker partitions its intermediary result in the same way (based on a hash of the key) into as many partitions as there are reduce workers. The partitions are stored on the local file system.
• Each reduce worker gets (via remote read) its partition of the intermediary results created at all the map worker nodes and sorts them.
• Each reduce worker writes its result to a separate GFS file.

Coulouris, Dollimore, Kindberg, Blair: Distributed Systems, Addison-Wesley, 2011.
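The partitioning step can be sketched in Python as follows. This is an illustrative assumption, not the original implementation: CRC32 is used as the hash because Python's built-in `hash()` for strings differs between processes, whereas all map workers must agree on the partition of a key. The function names are hypothetical.

```python
import zlib
from typing import List, Tuple

Pair = Tuple[str, str]


def partition_for(key: str, num_reducers: int) -> int:
    """Deterministic partition index: hash(key) mod number of reduce workers."""
    return zlib.crc32(key.encode("utf-8")) % num_reducers


def partition_output(pairs: List[Pair], num_reducers: int) -> List[List[Pair]]:
    """Map-worker side: split the intermediary pairs into one list per reducer."""
    partitions = [[] for _ in range(num_reducers)]
    for key, value in pairs:
        partitions[partition_for(key, num_reducers)].append((key, value))
    return partitions


def fetch_and_sort(all_map_outputs: List[List[List[Pair]]],
                   reducer_index: int) -> List[Pair]:
    """Reduce-worker side: collect its partition from every map worker, then sort."""
    merged = []
    for partitions in all_map_outputs:
        merged.extend(partitions[reducer_index])
    return sorted(merged)


R = 3  # number of reduce workers (arbitrary choice for this example)
map1 = partition_output([("word", "1"), ("another", "1")], R)
map2 = partition_output([("word", "1"), ("cloud", "1")], R)
for r in range(R):
    print(r, fetch_and_sort([map1, map2], r))
```

Since every map worker applies the same hash, all pairs for a given key end up at the same reduce worker, and sorting places equal keys next to each other so that they can be grouped.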

Apache Hadoop
• Open-source implementation of MapReduce.
  – http://hadoop.apache.org
• Hadoop Distributed File System (HDFS):
  – Implements the ideas of the Google File System (GFS).
• Hadoop MapReduce:
  – Implements the ideas of the Google MapReduce framework.

Particle Physics Data Analysis
• Particle physics (e.g. CERN LHC), simplified:
  – Physicists have assumptions about particles.
  – Simulate the expected outcome of a particle collision.
    • Probabilities of particles and their tracks.
  – Do the actual experiment: record collisions of particles.
    • Observe particles and their tracks.
  – Compare the recorded outcome with the expected outcome:
    • If it is the same: nice (the assumed model is correct).
    • If it is not the same: maybe even nicer (discovered something new).
• Petabytes of data are produced at the LHC.
  – Data processing (LHC Computing Grid) was designed a decade ago.
    • It was assumed that petabytes are too much: data gets pre-filtered.
    • Danger: rare (= most interesting) events get filtered out.

Outlook
• Is it possible to analyse unfiltered particle physics data?
  – Some say: it will be impossible due to the petabytes of data.
  – Some say: it should be trivial using MapReduce.
  → Find out whether Hadoop can be applied (probabilities are histograms)!
• In principle, it is reasonable to use existing hardware (Nordic High Performance Computing cluster), but:
  – We would have to install and configure a lot, but have no root privileges.
  → Decided to use the Amazon Cloud for evaluation!
  – Problem of MapReduce in a cloud: virtualisation.
    • If S3 or EBS is used to store data, it is not possible to execute map jobs where the data is stored.
  → Find out whether local HDFS storage is feasible in the Amazon Cloud!