CIS 694/EEC 693 Android Sensor Programming Lecture 25

- Slides: 27

CIS 694/EEC 693 Android Sensor Programming, Lecture 25

Wenbing Zhao
Department of Electrical Engineering and Computer Science
Cleveland State University
w.zhao1@csuohio.edu

12/2/2020, Android Sensor Programming

Outline

- Google Mobile Vision: text recognition

Optical Character Recognition (OCR)

- Optical Character Recognition (OCR): https://codelabs.developers.google.com/codelabs/mobile-vision-ocr/#0
- OCR gives a computer the ability to read text that appears in an image, letting applications make sense of signs, articles, flyers, pages of text, menus, or any other place where text appears as part of an image
- The app built in this lecture covers:
  - Initializing the Mobile Vision TextRecognizer
  - Setting up a Processor to receive frames from a camera as they come in and look for text
  - Rendering that text on the screen at its location
  - Sending that text to Android's TextToSpeech engine to speak it aloud

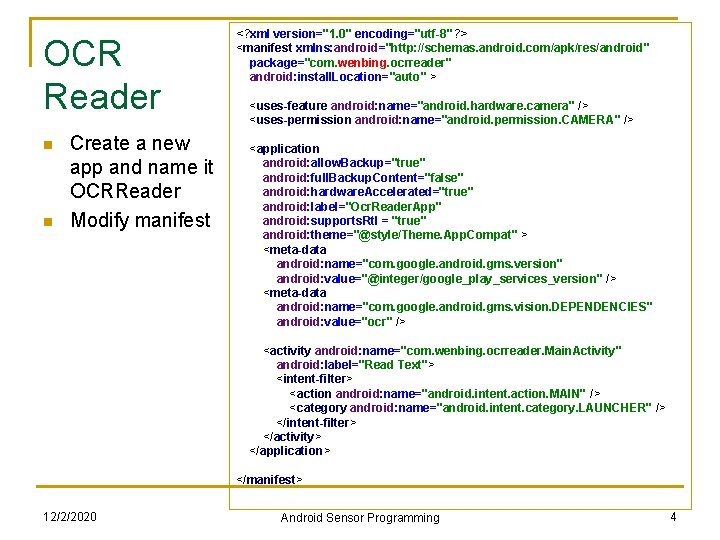

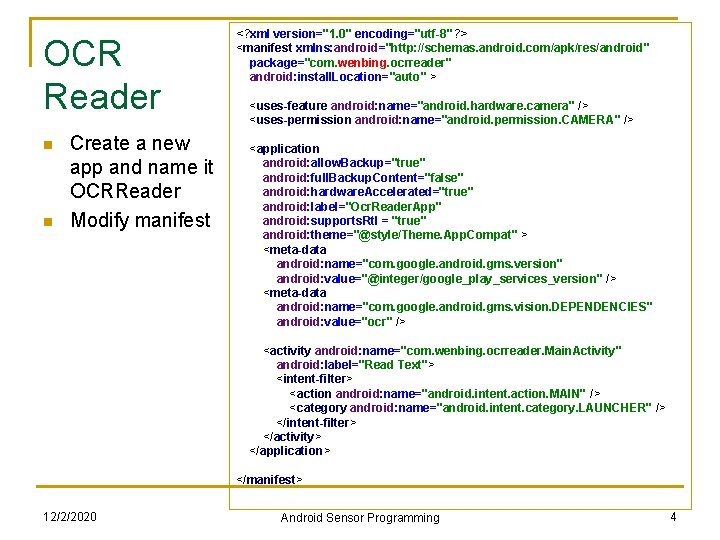

OCR Reader

- Create a new app and name it OCRReader
- Modify the manifest

AndroidManifest.xml:

    <?xml version="1.0" encoding="utf-8"?>
    <manifest xmlns:android="http://schemas.android.com/apk/res/android"
        package="com.wenbing.ocrreader"
        android:installLocation="auto">

        <uses-feature android:name="android.hardware.camera" />
        <uses-permission android:name="android.permission.CAMERA" />

        <application
            android:allowBackup="true"
            android:fullBackupContent="false"
            android:hardwareAccelerated="true"
            android:label="OcrReaderApp"
            android:supportsRtl="true"
            android:theme="@style/Theme.AppCompat">

            <meta-data
                android:name="com.google.android.gms.version"
                android:value="@integer/google_play_services_version" />
            <meta-data
                android:name="com.google.android.gms.vision.DEPENDENCIES"
                android:value="ocr" />

            <activity
                android:name="com.wenbing.ocrreader.MainActivity"
                android:label="Read Text">
                <intent-filter>
                    <action android:name="android.intent.action.MAIN" />
                    <category android:name="android.intent.category.LAUNCHER" />
                </intent-filter>
            </activity>
        </application>
    </manifest>

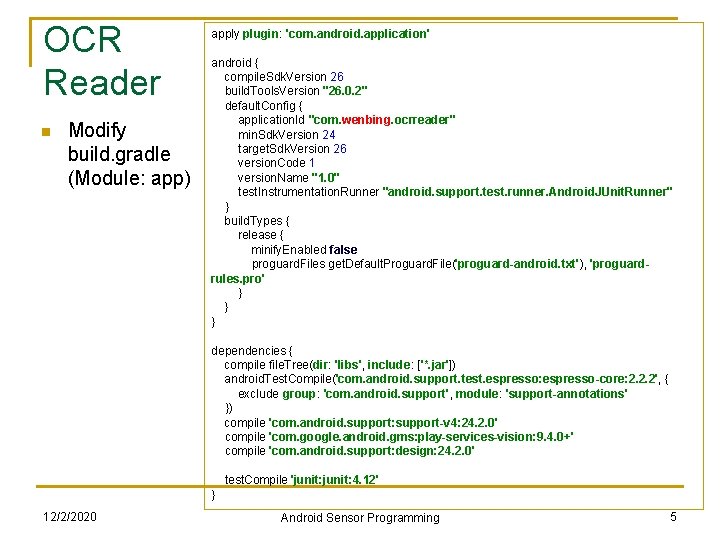

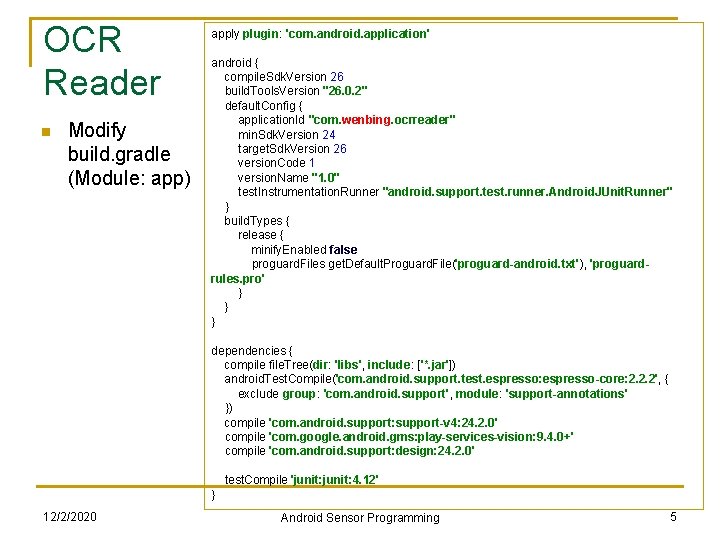

OCR Reader

- Modify build.gradle (Module: app)

build.gradle:

    apply plugin: 'com.android.application'

    android {
        compileSdkVersion 26
        buildToolsVersion "26.0.2"
        defaultConfig {
            applicationId "com.wenbing.ocrreader"
            minSdkVersion 24
            targetSdkVersion 26
            versionCode 1
            versionName "1.0"
            testInstrumentationRunner "android.support.test.runner.AndroidJUnitRunner"
        }
        buildTypes {
            release {
                minifyEnabled false
                proguardFiles getDefaultProguardFile('proguard-android.txt'), 'proguard-rules.pro'
            }
        }
    }

    dependencies {
        compile fileTree(dir: 'libs', include: ['*.jar'])
        androidTestCompile('com.android.support.test.espresso:espresso-core:2.2.2', {
            exclude group: 'com.android.support', module: 'support-annotations'
        })
        compile 'com.android.support:support-v4:24.2.0'
        compile 'com.google.android.gms:play-services-vision:9.4.0+'
        compile 'com.android.support:design:24.2.0'
        testCompile 'junit:junit:4.12'
    }

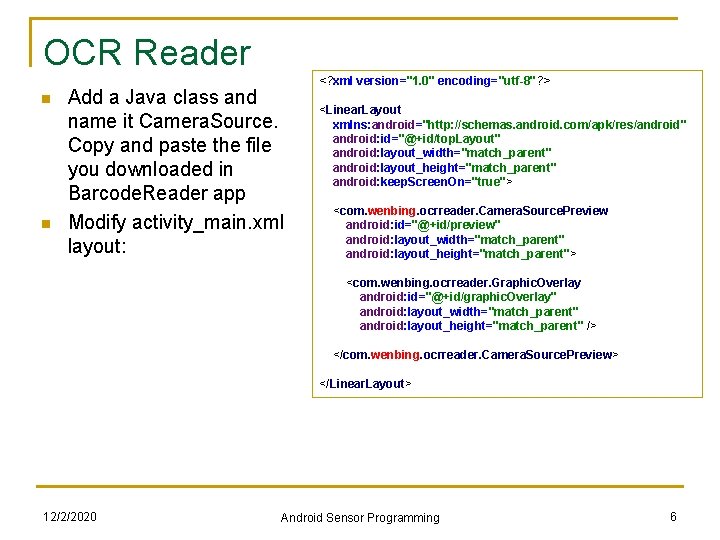

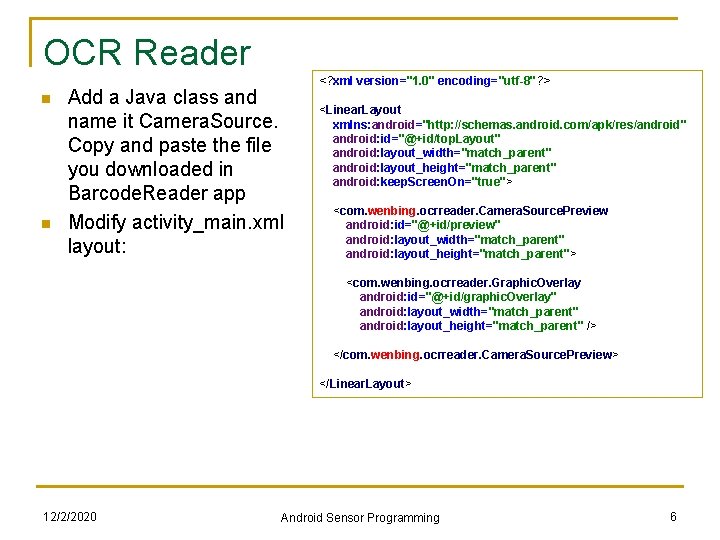

OCR Reader

- Add a Java class and name it CameraSource. Copy and paste the file you downloaded for the BarcodeReader app
- Modify the activity_main.xml layout

activity_main.xml:

    <?xml version="1.0" encoding="utf-8"?>
    <LinearLayout xmlns:android="http://schemas.android.com/apk/res/android"
        android:id="@+id/topLayout"
        android:layout_width="match_parent"
        android:layout_height="match_parent"
        android:keepScreenOn="true">

        <com.wenbing.ocrreader.CameraSourcePreview
            android:id="@+id/preview"
            android:layout_width="match_parent"
            android:layout_height="match_parent">

            <com.wenbing.ocrreader.GraphicOverlay
                android:id="@+id/graphicOverlay"
                android:layout_width="match_parent"
                android:layout_height="match_parent" />

        </com.wenbing.ocrreader.CameraSourcePreview>
    </LinearLayout>

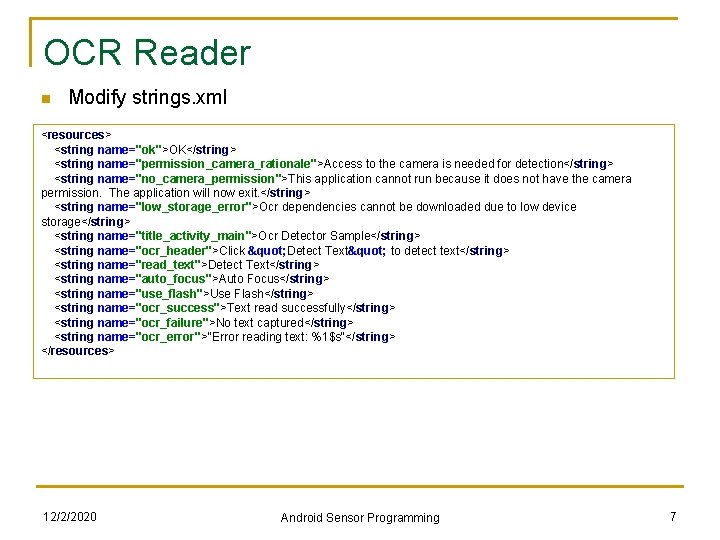

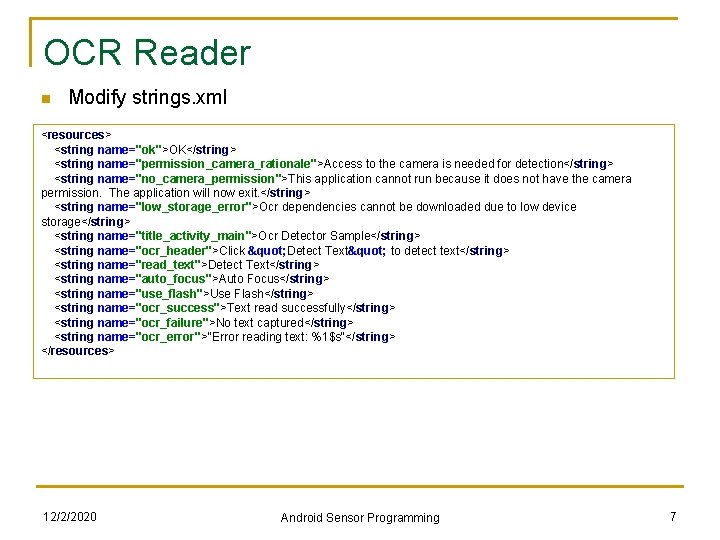

OCR Reader

- Modify strings.xml

strings.xml:

    <resources>
        <string name="ok">OK</string>
        <string name="permission_camera_rationale">Access to the camera is needed for detection</string>
        <string name="no_camera_permission">This application cannot run because it does not have the camera permission. The application will now exit.</string>
        <string name="low_storage_error">Ocr dependencies cannot be downloaded due to low device storage</string>
        <string name="title_activity_main">Ocr Detector Sample</string>
        <string name="ocr_header">Click \"Detect Text\" to detect text</string>
        <string name="read_text">Detect Text</string>
        <string name="auto_focus">Auto Focus</string>
        <string name="use_flash">Use Flash</string>
        <string name="ocr_success">Text read successfully</string>
        <string name="ocr_failure">No text captured</string>
        <string name="ocr_error">"Error reading text: %1$s"</string>
    </resources>

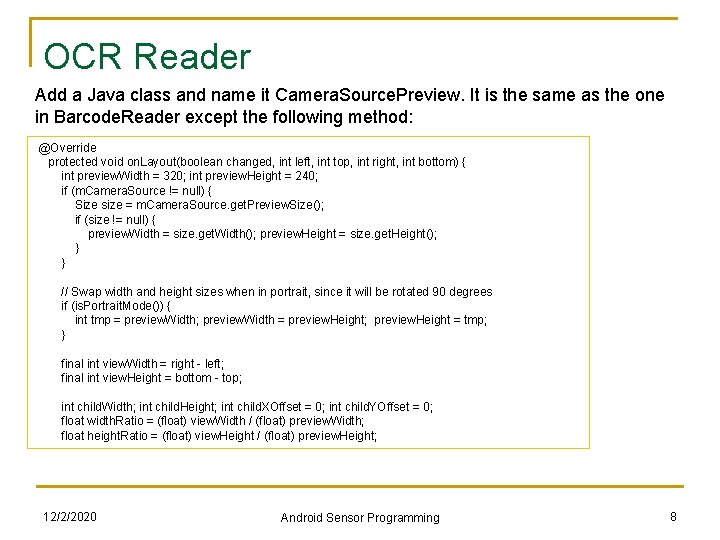

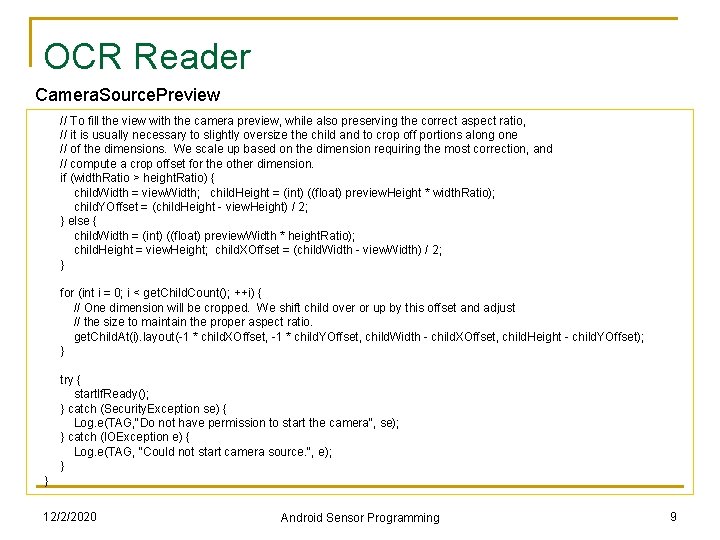

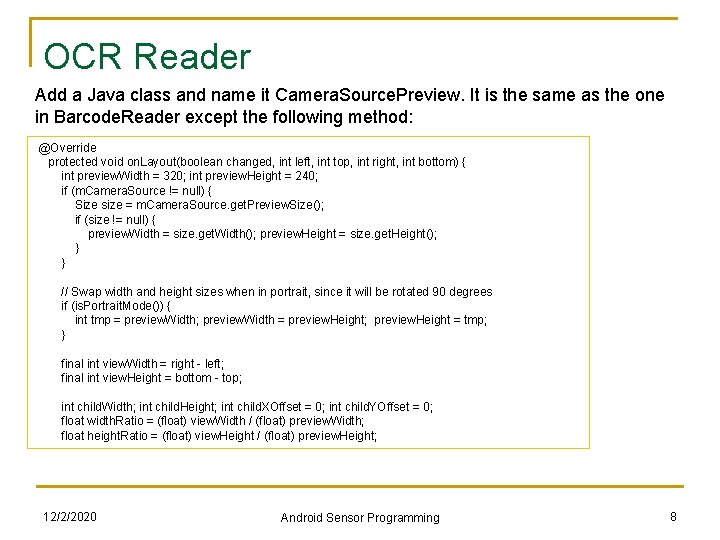

OCR Reader

Add a Java class and name it CameraSourcePreview. It is the same as the one in BarcodeReader except for the following method:

    @Override
    protected void onLayout(boolean changed, int left, int top, int right, int bottom) {
        int previewWidth = 320;
        int previewHeight = 240;
        if (mCameraSource != null) {
            Size size = mCameraSource.getPreviewSize();
            if (size != null) {
                previewWidth = size.getWidth();
                previewHeight = size.getHeight();
            }
        }

        // Swap width and height sizes when in portrait, since it will be rotated 90 degrees
        if (isPortraitMode()) {
            int tmp = previewWidth;
            previewWidth = previewHeight;
            previewHeight = tmp;
        }

        final int viewWidth = right - left;
        final int viewHeight = bottom - top;

        int childWidth;
        int childHeight;
        int childXOffset = 0;
        int childYOffset = 0;
        float widthRatio = (float) viewWidth / (float) previewWidth;
        float heightRatio = (float) viewHeight / (float) previewHeight;

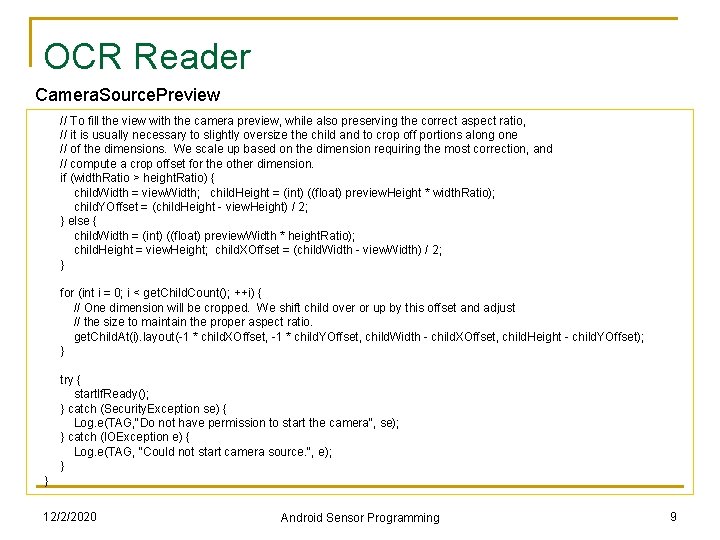

OCR Reader

CameraSourcePreview, onLayout() continued:

        // To fill the view with the camera preview, while also preserving the correct aspect ratio,
        // it is usually necessary to slightly oversize the child and to crop off portions along one
        // of the dimensions. We scale up based on the dimension requiring the most correction, and
        // compute a crop offset for the other dimension.
        if (widthRatio > heightRatio) {
            childWidth = viewWidth;
            childHeight = (int) ((float) previewHeight * widthRatio);
            childYOffset = (childHeight - viewHeight) / 2;
        } else {
            childWidth = (int) ((float) previewWidth * heightRatio);
            childHeight = viewHeight;
            childXOffset = (childWidth - viewWidth) / 2;
        }

        for (int i = 0; i < getChildCount(); ++i) {
            // One dimension will be cropped. We shift child over or up by this offset and adjust
            // the size to maintain the proper aspect ratio.
            getChildAt(i).layout(-1 * childXOffset, -1 * childYOffset,
                    childWidth - childXOffset, childHeight - childYOffset);
        }

        try {
            startIfReady();
        } catch (SecurityException se) {
            Log.e(TAG, "Do not have permission to start the camera", se);
        } catch (IOException e) {
            Log.e(TAG, "Could not start camera source.", e);
        }
    }
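The aspect-fill arithmetic in onLayout() can be checked outside Android. The following is a minimal sketch, not part of the app: the class name AspectFillSketch and the screen and preview sizes are hypothetical, chosen only to make the numbers easy to follow.

```java
// Standalone sketch of the aspect-fill arithmetic used in onLayout().
// AspectFillSketch and the sizes in main() are hypothetical; no Android classes are used.
class AspectFillSketch {
    /** Returns {childWidth, childHeight, childXOffset, childYOffset}. */
    static int[] compute(int viewWidth, int viewHeight, int previewWidth, int previewHeight) {
        float widthRatio = (float) viewWidth / (float) previewWidth;
        float heightRatio = (float) viewHeight / (float) previewHeight;
        int childWidth;
        int childHeight;
        int childXOffset = 0;
        int childYOffset = 0;
        if (widthRatio > heightRatio) {
            // Width needs the larger scale: fill the width, crop top and bottom.
            childWidth = viewWidth;
            childHeight = (int) ((float) previewHeight * widthRatio);
            childYOffset = (childHeight - viewHeight) / 2;
        } else {
            // Height needs the larger scale: fill the height, crop left and right.
            childWidth = (int) ((float) previewWidth * heightRatio);
            childHeight = viewHeight;
            childXOffset = (childWidth - viewWidth) / 2;
        }
        return new int[] {childWidth, childHeight, childXOffset, childYOffset};
    }

    public static void main(String[] args) {
        // Assumed 1080x1920 portrait view and a 1280x1024 preview,
        // already swapped to 1024x1280 for portrait mode.
        int[] r = compute(1080, 1920, 1024, 1280);
        System.out.println(java.util.Arrays.toString(r)); // prints [1536, 1920, 228, 0]
    }
}
```

With these assumed sizes the child is laid out from x = -228 to x = 1308, so 228 pixels are cropped on each side while the height fits exactly.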

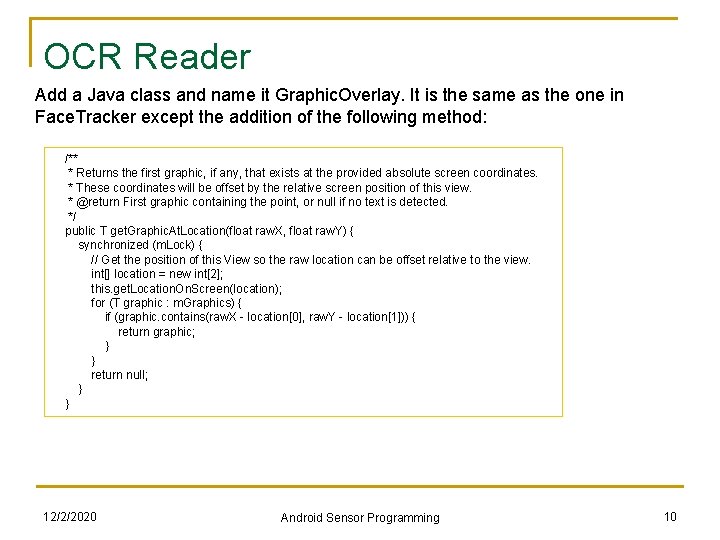

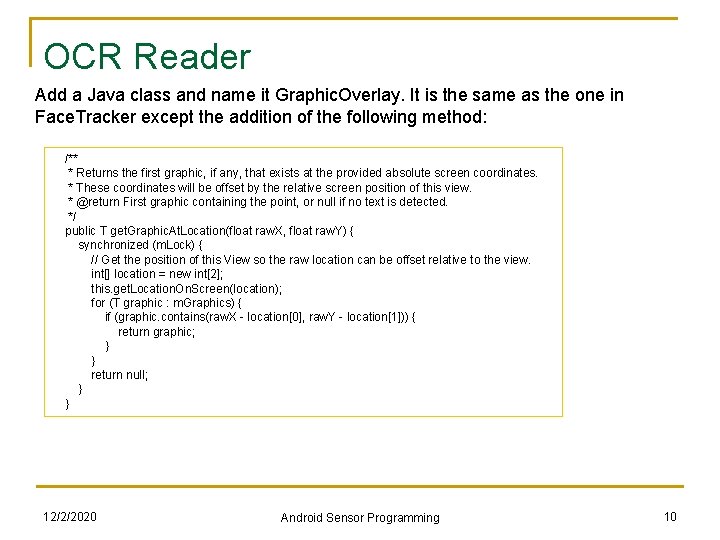

OCR Reader

Add a Java class and name it GraphicOverlay. It is the same as the one in FaceTracker except for the addition of the following method:

    /**
     * Returns the first graphic, if any, that exists at the provided absolute screen coordinates.
     * These coordinates will be offset by the relative screen position of this view.
     * @return First graphic containing the point, or null if no text is detected.
     */
    public T getGraphicAtLocation(float rawX, float rawY) {
        synchronized (mLock) {
            // Get the position of this View so the raw location can be offset relative to the view.
            int[] location = new int[2];
            this.getLocationOnScreen(location);
            for (T graphic : mGraphics) {
                if (graphic.contains(rawX - location[0], rawY - location[1])) {
                    return graphic;
                }
            }
            return null;
        }
    }
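The offset step in getGraphicAtLocation() can be illustrated without Android. This is a minimal sketch under stated assumptions: HitTestSketch and its Box class are hypothetical stand-ins for the overlay and its graphics, and the view position (0, 200) is an invented value standing in for what getLocationOnScreen() would report.

```java
import java.util.ArrayList;
import java.util.List;

// Standalone sketch of hit testing with a screen-to-view offset.
// HitTestSketch and Box are hypothetical; they are not classes from the app.
class HitTestSketch {
    /** Stand-in for a graphic whose bounding box is in view coordinates. */
    static final class Box {
        final String label;
        final float left, top, right, bottom;

        Box(String label, float left, float top, float right, float bottom) {
            this.label = label;
            this.left = left;
            this.top = top;
            this.right = right;
            this.bottom = bottom;
        }

        boolean contains(float x, float y) {
            return left < x && right > x && top < y && bottom > y;
        }
    }

    /** Returns the first box containing the raw screen point, or null if none does. */
    static Box findAt(List<Box> boxes, int[] viewLocationOnScreen, float rawX, float rawY) {
        // Convert raw screen coordinates to coordinates relative to the view.
        float x = rawX - viewLocationOnScreen[0];
        float y = rawY - viewLocationOnScreen[1];
        for (Box box : boxes) {
            if (box.contains(x, y)) {
                return box;
            }
        }
        return null;
    }

    public static void main(String[] args) {
        List<Box> boxes = new ArrayList<>();
        boxes.add(new Box("HELLO", 100f, 100f, 300f, 150f));
        int[] viewLocation = {0, 200}; // assumed: the view starts 200 px below the top of the screen

        Box hit = findAt(boxes, viewLocation, 150f, 320f);  // maps to (150, 120) in the view
        Box miss = findAt(boxes, viewLocation, 150f, 120f); // maps to (150, -80) in the view
        System.out.println(hit == null ? "none" : hit.label);   // prints HELLO
        System.out.println(miss == null ? "none" : miss.label); // prints none
    }
}
```

Without the subtraction, a tap at raw y = 120 would wrongly match the box even though it lands above the view.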

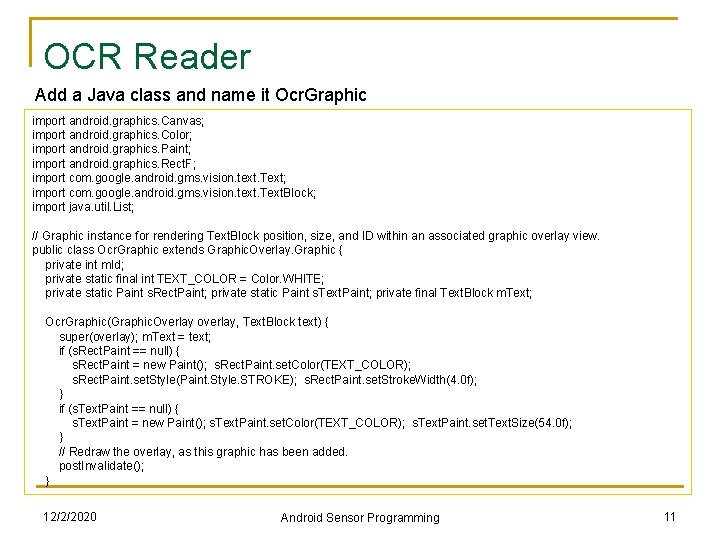

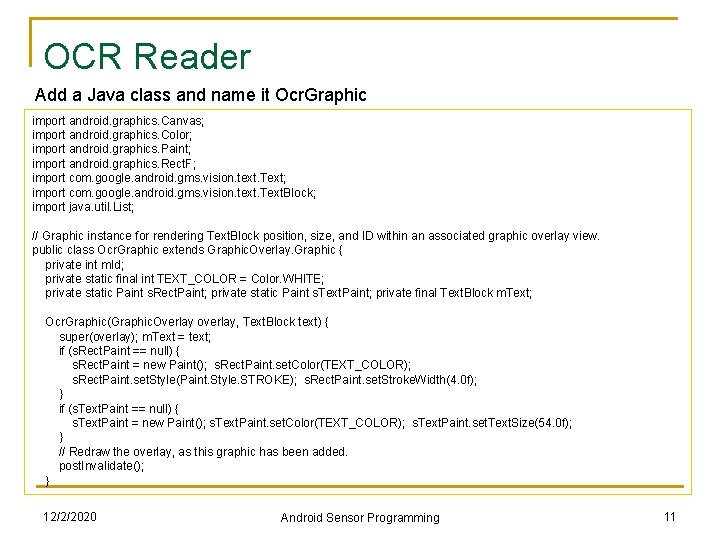

OCR Reader

Add a Java class and name it OcrGraphic:

    import android.graphics.Canvas;
    import android.graphics.Color;
    import android.graphics.Paint;
    import android.graphics.RectF;

    import com.google.android.gms.vision.text.Text; // needed by draw(), which iterates over Text components
    import com.google.android.gms.vision.text.TextBlock;

    import java.util.List;

    // Graphic instance for rendering TextBlock position, size, and ID within an associated graphic overlay view.
    public class OcrGraphic extends GraphicOverlay.Graphic {
        private int mId;
        private static final int TEXT_COLOR = Color.WHITE;
        private static Paint sRectPaint;
        private static Paint sTextPaint;
        private final TextBlock mText;

        OcrGraphic(GraphicOverlay overlay, TextBlock text) {
            super(overlay);
            mText = text;

            if (sRectPaint == null) {
                sRectPaint = new Paint();
                sRectPaint.setColor(TEXT_COLOR);
                sRectPaint.setStyle(Paint.Style.STROKE);
                sRectPaint.setStrokeWidth(4.0f);
            }

            if (sTextPaint == null) {
                sTextPaint = new Paint();
                sTextPaint.setColor(TEXT_COLOR);
                sTextPaint.setTextSize(54.0f);
            }
            // Redraw the overlay, as this graphic has been added.
            postInvalidate();
        }

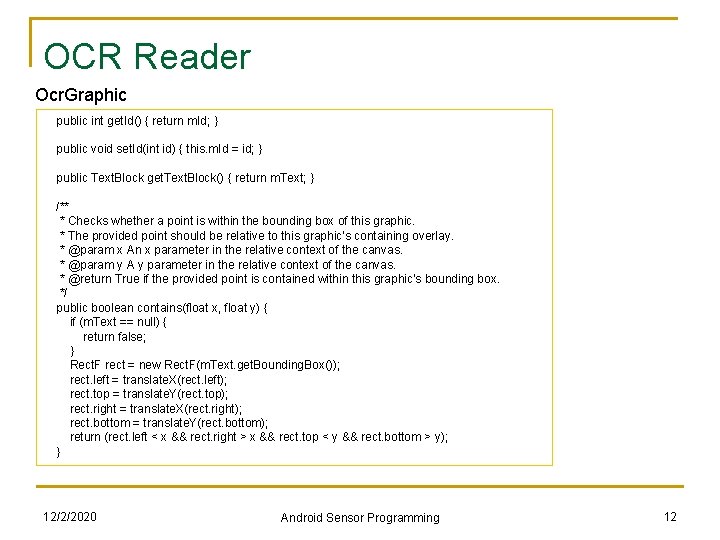

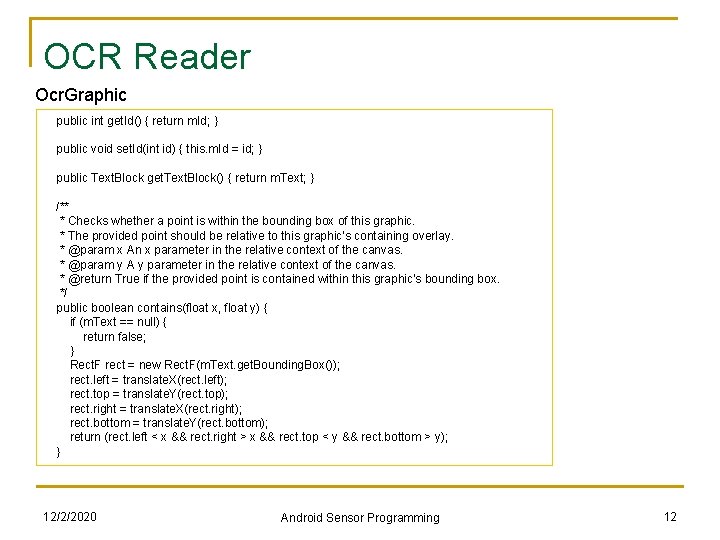

OCR Reader

In OcrGraphic:

        public int getId() {
            return mId;
        }

        public void setId(int id) {
            this.mId = id;
        }

        public TextBlock getTextBlock() {
            return mText;
        }

        /**
         * Checks whether a point is within the bounding box of this graphic.
         * The provided point should be relative to this graphic's containing overlay.
         * @param x An x parameter in the relative context of the canvas.
         * @param y A y parameter in the relative context of the canvas.
         * @return True if the provided point is contained within this graphic's bounding box.
         */
        public boolean contains(float x, float y) {
            if (mText == null) {
                return false;
            }
            RectF rect = new RectF(mText.getBoundingBox());
            rect.left = translateX(rect.left);
            rect.top = translateY(rect.top);
            rect.right = translateX(rect.right);
            rect.bottom = translateY(rect.bottom);
            return (rect.left < x && rect.right > x && rect.top < y && rect.bottom > y);
        }

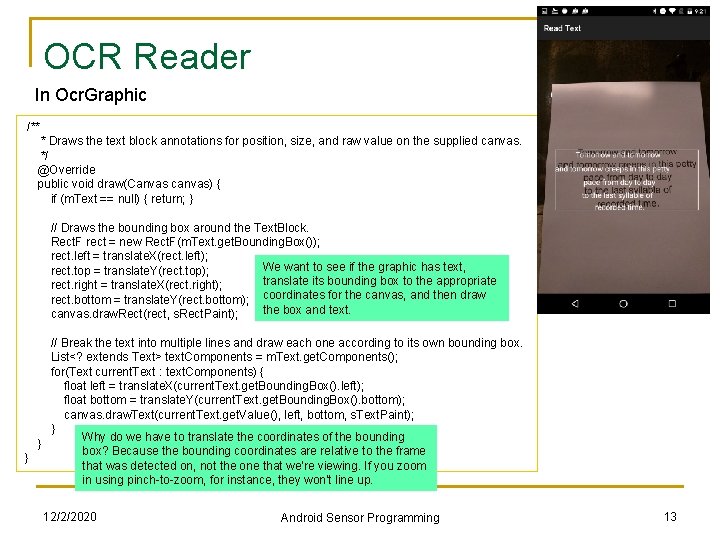

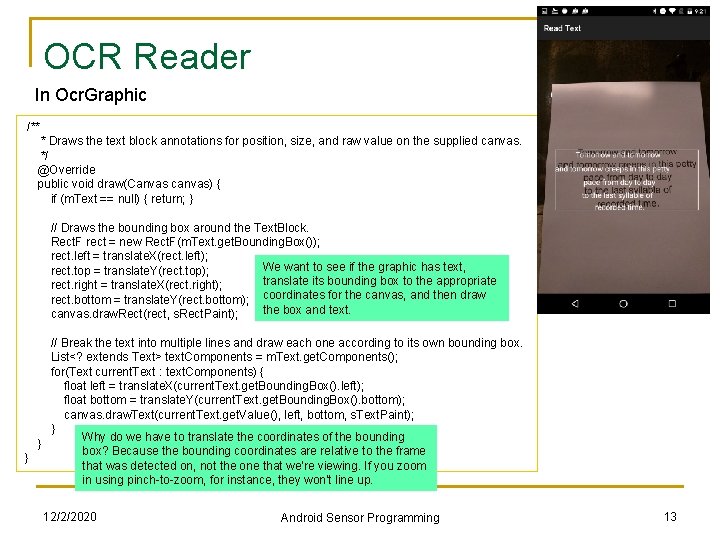

OCR Reader

In OcrGraphic:

        /**
         * Draws the text block annotations for position, size, and raw value on the supplied canvas.
         */
        @Override
        public void draw(Canvas canvas) {
            if (mText == null) {
                return;
            }

            // Draws the bounding box around the TextBlock.
            RectF rect = new RectF(mText.getBoundingBox());
            rect.left = translateX(rect.left);
            rect.top = translateY(rect.top);
            rect.right = translateX(rect.right);
            rect.bottom = translateY(rect.bottom);
            canvas.drawRect(rect, sRectPaint);

            // Break the text into multiple lines and draw each one according to its own bounding box.
            List<? extends Text> textComponents = mText.getComponents();
            for (Text currentText : textComponents) {
                float left = translateX(currentText.getBoundingBox().left);
                float bottom = translateY(currentText.getBoundingBox().bottom);
                canvas.drawText(currentText.getValue(), left, bottom, sTextPaint);
            }
        }
    }

We want to see if the graphic has text, translate its bounding box to the appropriate coordinates for the canvas, and then draw the box and text.

Why do we have to translate the coordinates of the bounding box? Because the bounding coordinates are relative to the frame that the text was detected on, not the one that we are viewing. If you zoom in using pinch-to-zoom, for instance, they won't line up.
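The translation from frame coordinates to view coordinates amounts to a per-axis scale. The slides do not show translateX() and translateY(), so the following is only a sketch of the idea, assuming a back-facing camera (no mirroring) and a scale factor equal to the view size divided by the preview size. OverlayScaleSketch and all sizes are hypothetical.

```java
// Standalone sketch of mapping detector-frame coordinates to view coordinates.
// Assumption: a simple per-axis scale with no mirroring (back-facing camera).
// OverlayScaleSketch is a hypothetical name, not a class from the app.
class OverlayScaleSketch {
    private final float widthScale;
    private final float heightScale;

    OverlayScaleSketch(int viewWidth, int viewHeight, int previewWidth, int previewHeight) {
        this.widthScale = (float) viewWidth / (float) previewWidth;
        this.heightScale = (float) viewHeight / (float) previewHeight;
    }

    /** Maps an x coordinate in the detector frame to the view's coordinate system. */
    float translateX(float x) {
        return x * widthScale;
    }

    /** Maps a y coordinate in the detector frame to the view's coordinate system. */
    float translateY(float y) {
        return y * heightScale;
    }

    public static void main(String[] args) {
        // Assumed 1080x1920 view showing a 480x640 detector frame.
        OverlayScaleSketch scale = new OverlayScaleSketch(1080, 1920, 480, 640);
        System.out.println(scale.translateX(100f)); // prints 225.0
        System.out.println(scale.translateY(200f)); // prints 600.0
    }
}
```

A bounding box drawn at the untranslated (100, 200) would sit well up and to the left of the text the user actually sees.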

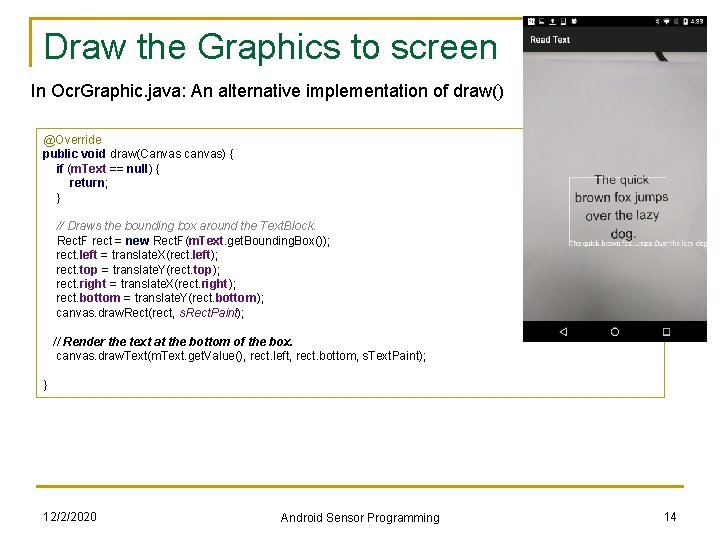

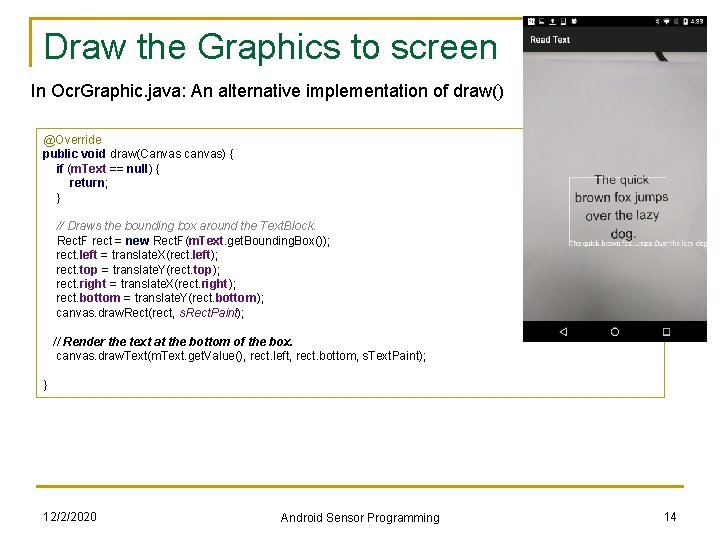

Draw the Graphics to screen

In OcrGraphic.java, an alternative implementation of draw():

        @Override
        public void draw(Canvas canvas) {
            if (mText == null) {
                return;
            }

            // Draws the bounding box around the TextBlock.
            RectF rect = new RectF(mText.getBoundingBox());
            rect.left = translateX(rect.left);
            rect.top = translateY(rect.top);
            rect.right = translateX(rect.right);
            rect.bottom = translateY(rect.bottom);
            canvas.drawRect(rect, sRectPaint);

            // Render the text at the bottom of the box.
            canvas.drawText(mText.getValue(), rect.left, rect.bottom, sTextPaint);
        }

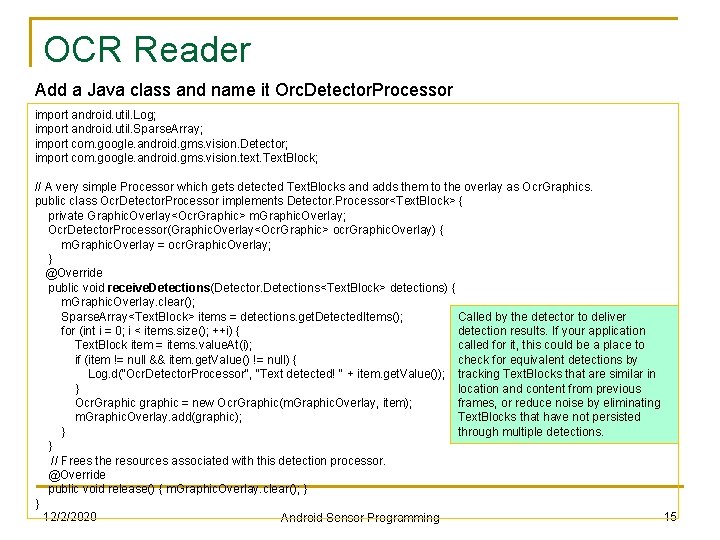

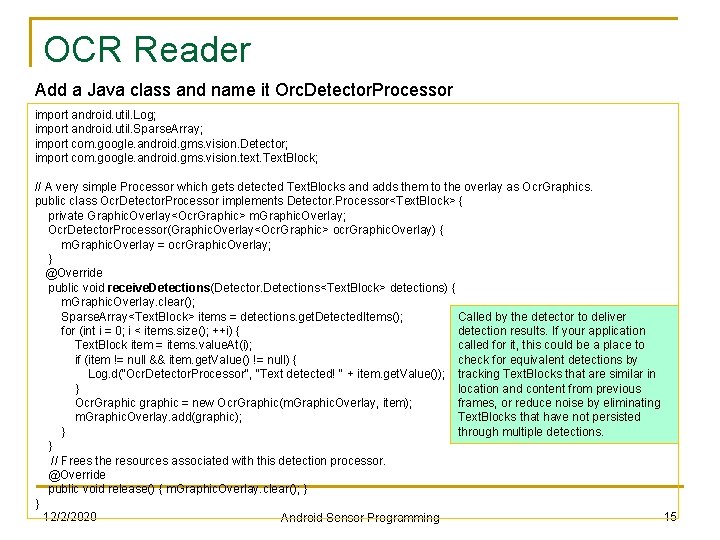

OCR Reader

Add a Java class and name it OcrDetectorProcessor:

    import android.util.Log;
    import android.util.SparseArray;

    import com.google.android.gms.vision.Detector;
    import com.google.android.gms.vision.text.TextBlock;

    // A very simple Processor which gets detected TextBlocks and adds them to the overlay as OcrGraphics.
    public class OcrDetectorProcessor implements Detector.Processor<TextBlock> {
        private GraphicOverlay<OcrGraphic> mGraphicOverlay;

        OcrDetectorProcessor(GraphicOverlay<OcrGraphic> ocrGraphicOverlay) {
            mGraphicOverlay = ocrGraphicOverlay;
        }

        @Override
        public void receiveDetections(Detector.Detections<TextBlock> detections) {
            mGraphicOverlay.clear();
            SparseArray<TextBlock> items = detections.getDetectedItems();
            for (int i = 0; i < items.size(); ++i) {
                TextBlock item = items.valueAt(i);
                if (item != null && item.getValue() != null) {
                    Log.d("OcrDetectorProcessor", "Text detected! " + item.getValue());
                }
                OcrGraphic graphic = new OcrGraphic(mGraphicOverlay, item);
                mGraphicOverlay.add(graphic);
            }
        }

        // Frees the resources associated with this detection processor.
        @Override
        public void release() {
            mGraphicOverlay.clear();
        }
    }

receiveDetections() is called by the detector to deliver detection results. If your application called for it, this could be a place to check for equivalent detections by tracking TextBlocks that are similar in location and content from previous frames, or to reduce noise by eliminating TextBlocks that have not persisted through multiple detections.
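The noise-reduction idea mentioned for receiveDetections() could look roughly like the sketch below. It is a simplification and not part of the app: it matches detections by text content only (not by location), and PersistenceFilterSketch, onFrame(), and requiredFrames are hypothetical names. In the app, the strings would come from TextBlock.getValue().

```java
import java.util.ArrayList;
import java.util.Collection;
import java.util.HashMap;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Map;

// Standalone sketch: keep only text values that persist across consecutive frames.
// Assumption: detections are matched by exact text content; location is ignored.
class PersistenceFilterSketch {
    private final int requiredFrames;
    private Map<String, Integer> streaks = new HashMap<>();

    PersistenceFilterSketch(int requiredFrames) {
        this.requiredFrames = requiredFrames;
    }

    /**
     * Feeds the text values detected in one frame and returns those that have
     * appeared in at least requiredFrames consecutive frames, in input order.
     */
    List<String> onFrame(Collection<String> detectedValues) {
        Map<String, Integer> next = new HashMap<>();
        List<String> stable = new ArrayList<>();
        // LinkedHashSet removes duplicates within a frame while keeping input order.
        for (String value : new LinkedHashSet<>(detectedValues)) {
            int streak = streaks.getOrDefault(value, 0) + 1;
            next.put(value, streak);
            if (streak >= requiredFrames) {
                stable.add(value);
            }
        }
        // Values absent from this frame are dropped, which resets their streak.
        streaks = next;
        return stable;
    }

    public static void main(String[] args) {
        PersistenceFilterSketch filter = new PersistenceFilterSketch(2);
        System.out.println(filter.onFrame(List.of("EXIT", "noise"))); // prints []
        System.out.println(filter.onFrame(List.of("EXIT")));          // prints [EXIT]
        System.out.println(filter.onFrame(List.of("noise", "EXIT"))); // prints [EXIT]
    }
}
```

A stricter version might also compare bounding boxes so that the same word at two different places on screen is tracked separately.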

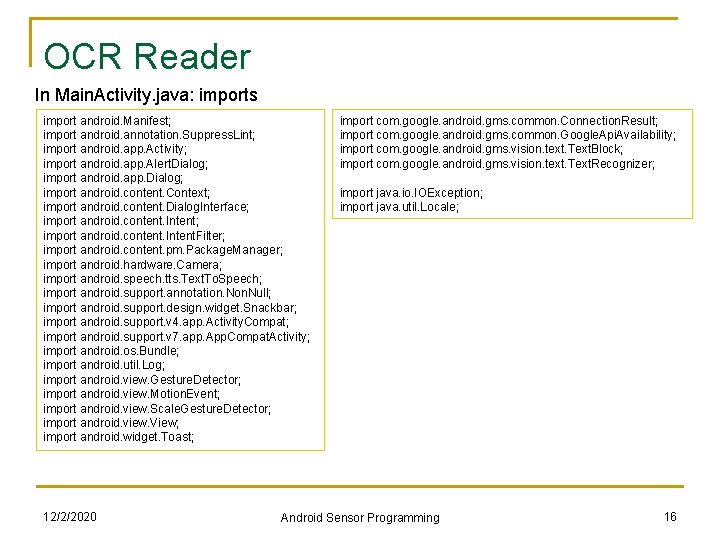

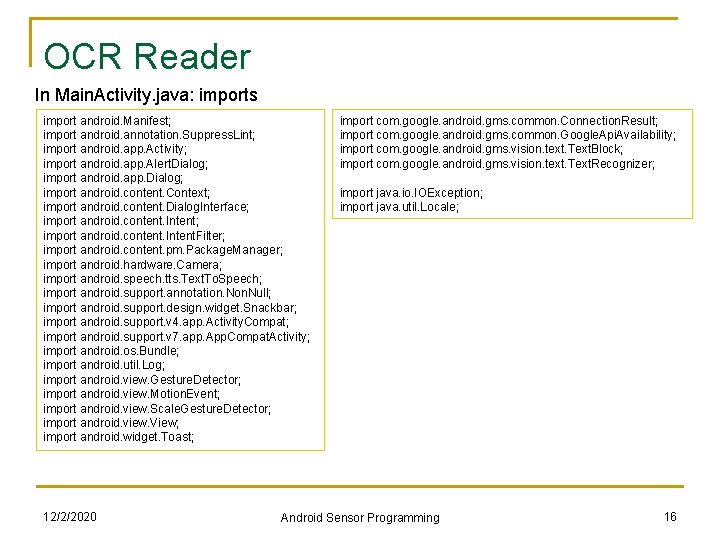

OCR Reader

In MainActivity.java, imports:

    import android.Manifest;
    import android.annotation.SuppressLint;
    import android.app.Activity;
    import android.app.AlertDialog;
    import android.app.Dialog;
    import android.content.Context;
    import android.content.DialogInterface;
    import android.content.Intent; // needed for Intent.ACTION_DEVICE_STORAGE_LOW
    import android.content.IntentFilter;
    import android.content.pm.PackageManager;
    import android.hardware.Camera;
    import android.speech.tts.TextToSpeech;
    import android.support.annotation.NonNull;
    import android.support.design.widget.Snackbar;
    import android.support.v4.app.ActivityCompat;
    import android.support.v7.app.AppCompatActivity;
    import android.os.Bundle;
    import android.util.Log;
    import android.view.GestureDetector;
    import android.view.MotionEvent;
    import android.view.ScaleGestureDetector;
    import android.view.View;
    import android.widget.Toast;

    import com.google.android.gms.common.ConnectionResult;
    import com.google.android.gms.common.GoogleApiAvailability;
    import com.google.android.gms.vision.text.TextBlock;
    import com.google.android.gms.vision.text.TextRecognizer;

    import java.io.IOException;
    import java.util.Locale;

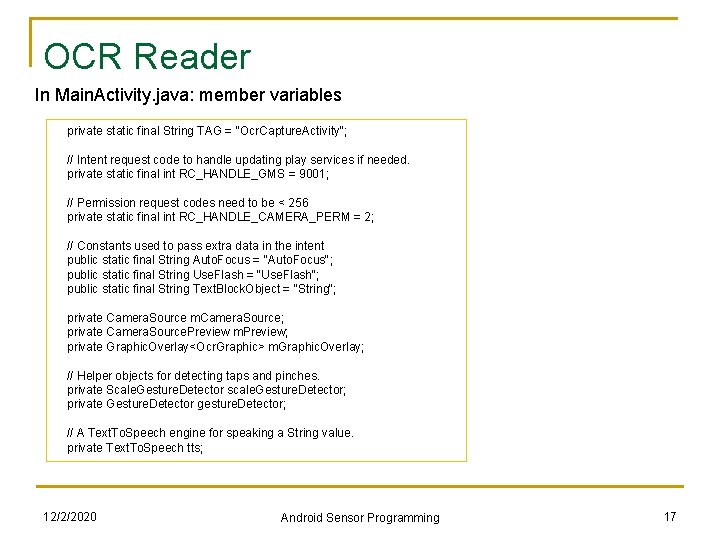

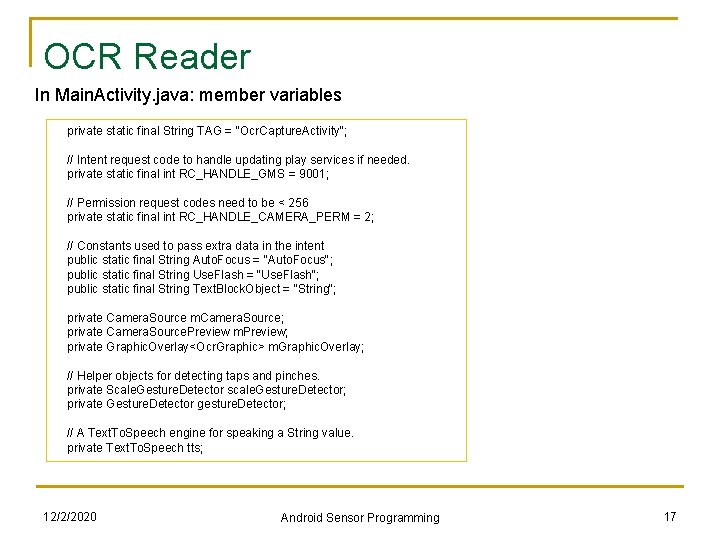

OCR Reader

In MainActivity.java, member variables:

        private static final String TAG = "OcrCaptureActivity";

        // Intent request code to handle updating play services if needed.
        private static final int RC_HANDLE_GMS = 9001;

        // Permission request codes need to be < 256
        private static final int RC_HANDLE_CAMERA_PERM = 2;

        // Constants used to pass extra data in the intent
        public static final String AutoFocus = "AutoFocus";
        public static final String UseFlash = "UseFlash";
        public static final String TextBlockObject = "String";

        private CameraSource mCameraSource;
        private CameraSourcePreview mPreview;
        private GraphicOverlay<OcrGraphic> mGraphicOverlay;

        // Helper objects for detecting taps and pinches.
        private ScaleGestureDetector scaleGestureDetector;
        private GestureDetector gestureDetector;

        // A TextToSpeech engine for speaking a String value.
        private TextToSpeech tts;

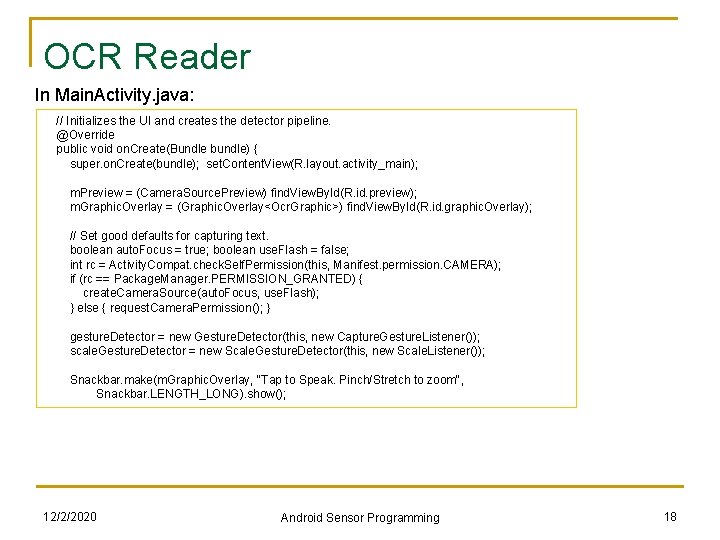

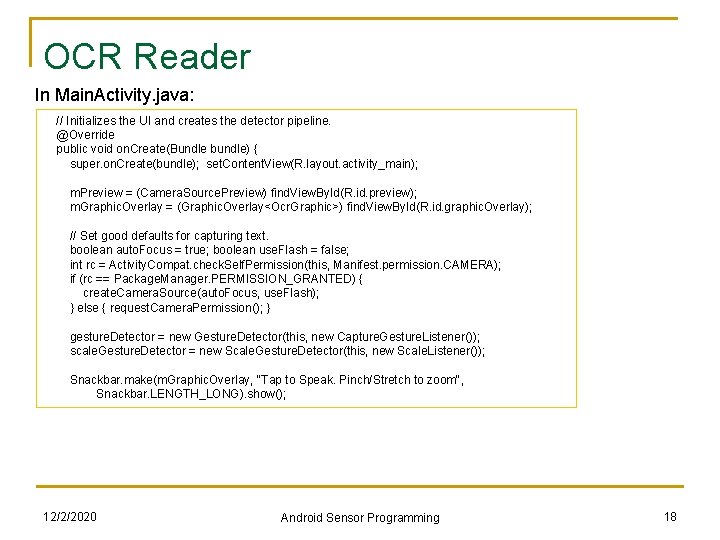

OCR Reader

In MainActivity.java:

        // Initializes the UI and creates the detector pipeline.
        @Override
        public void onCreate(Bundle bundle) {
            super.onCreate(bundle);
            setContentView(R.layout.activity_main);

            mPreview = (CameraSourcePreview) findViewById(R.id.preview);
            mGraphicOverlay = (GraphicOverlay<OcrGraphic>) findViewById(R.id.graphicOverlay);

            // Set good defaults for capturing text.
            boolean autoFocus = true;
            boolean useFlash = false;

            int rc = ActivityCompat.checkSelfPermission(this, Manifest.permission.CAMERA);
            if (rc == PackageManager.PERMISSION_GRANTED) {
                createCameraSource(autoFocus, useFlash);
            } else {
                requestCameraPermission();
            }

            gestureDetector = new GestureDetector(this, new CaptureGestureListener());
            scaleGestureDetector = new ScaleGestureDetector(this, new ScaleListener());

            Snackbar.make(mGraphicOverlay, "Tap to Speak. Pinch/Stretch to zoom",
                    Snackbar.LENGTH_LONG).show();

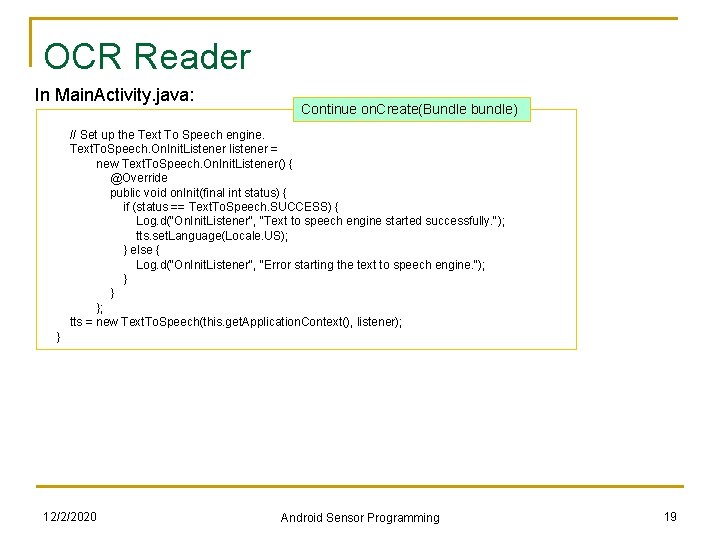

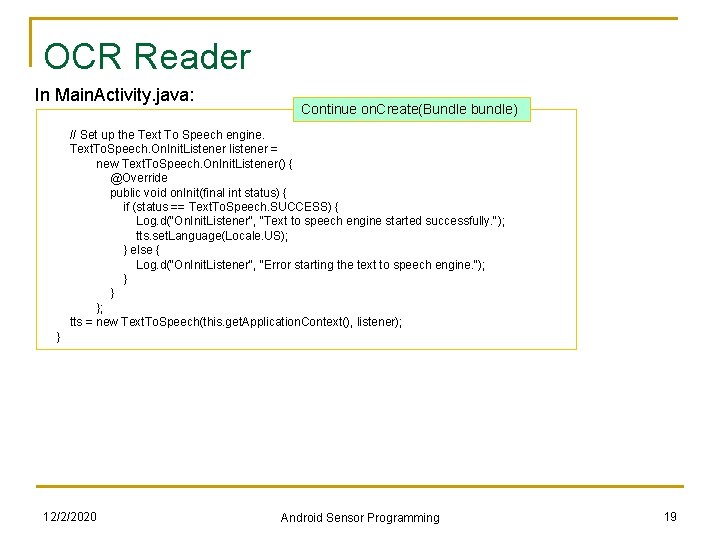

OCR Reader

In MainActivity.java, onCreate(Bundle bundle) continued:

            // Set up the Text To Speech engine.
            TextToSpeech.OnInitListener listener = new TextToSpeech.OnInitListener() {
                @Override
                public void onInit(final int status) {
                    if (status == TextToSpeech.SUCCESS) {
                        Log.d("OnInitListener", "Text to speech engine started successfully.");
                        tts.setLanguage(Locale.US);
                    } else {
                        Log.d("OnInitListener", "Error starting the text to speech engine.");
                    }
                }
            };
            tts = new TextToSpeech(this.getApplicationContext(), listener);
        }

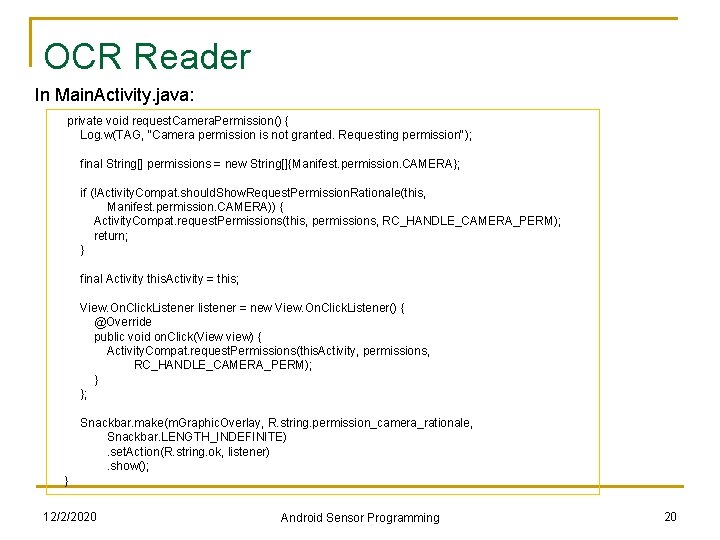

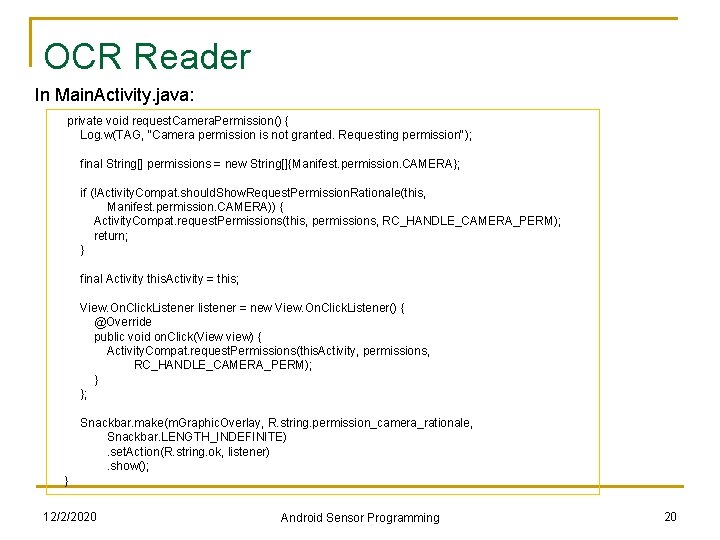

OCR Reader

In MainActivity.java:

        private void requestCameraPermission() {
            Log.w(TAG, "Camera permission is not granted. Requesting permission");

            final String[] permissions = new String[]{Manifest.permission.CAMERA};

            if (!ActivityCompat.shouldShowRequestPermissionRationale(this,
                    Manifest.permission.CAMERA)) {
                ActivityCompat.requestPermissions(this, permissions, RC_HANDLE_CAMERA_PERM);
                return;
            }

            final Activity thisActivity = this;

            View.OnClickListener listener = new View.OnClickListener() {
                @Override
                public void onClick(View view) {
                    ActivityCompat.requestPermissions(thisActivity, permissions,
                            RC_HANDLE_CAMERA_PERM);
                }
            };

            Snackbar.make(mGraphicOverlay, R.string.permission_camera_rationale,
                    Snackbar.LENGTH_INDEFINITE)
                    .setAction(R.string.ok, listener)
                    .show();
        }

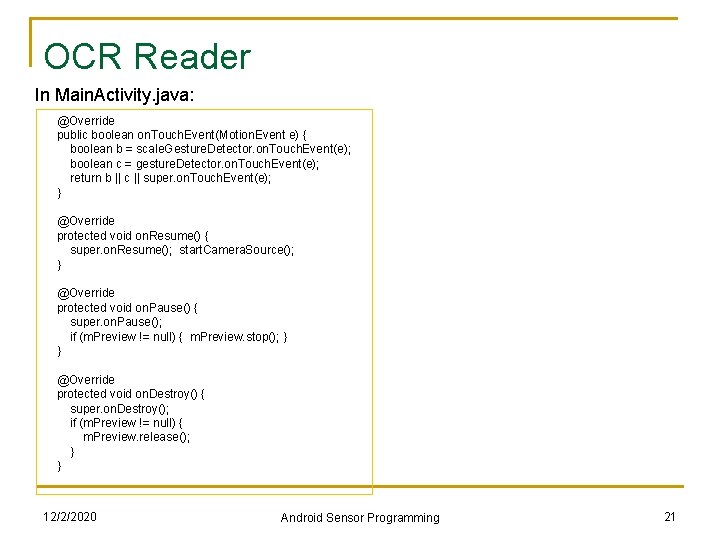

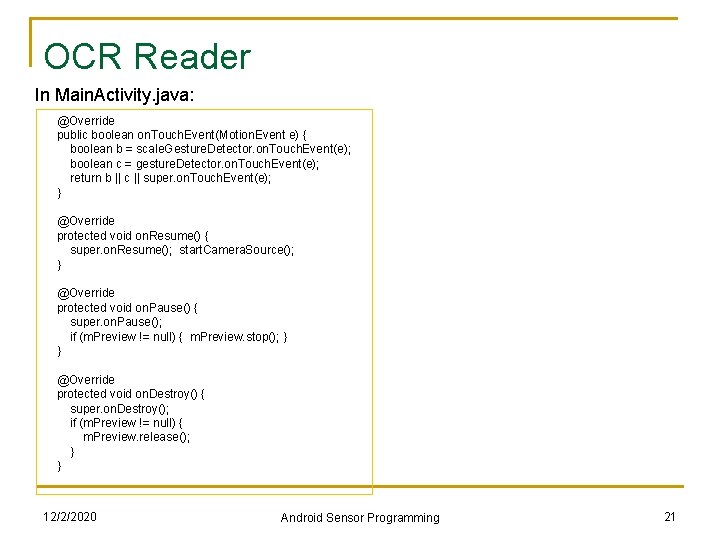

OCR Reader

In MainActivity.java:

        @Override
        public boolean onTouchEvent(MotionEvent e) {
            boolean b = scaleGestureDetector.onTouchEvent(e);
            boolean c = gestureDetector.onTouchEvent(e);
            return b || c || super.onTouchEvent(e);
        }

        @Override
        protected void onResume() {
            super.onResume();
            startCameraSource();
        }

        @Override
        protected void onPause() {
            super.onPause();
            if (mPreview != null) {
                mPreview.stop();
            }
        }

        @Override
        protected void onDestroy() {
            super.onDestroy();
            if (mPreview != null) {
                mPreview.release();
            }
        }

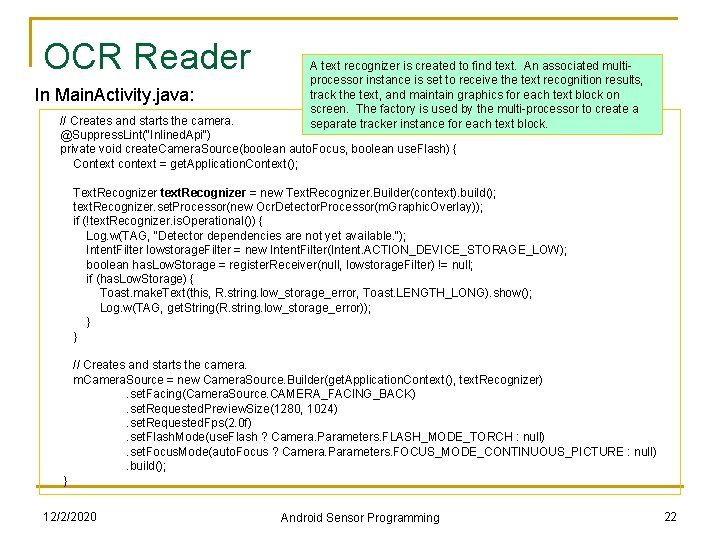

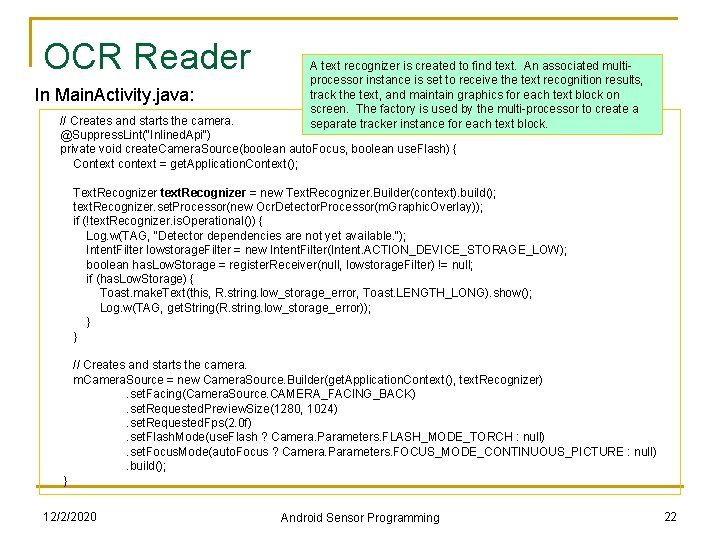

OCR Reader

In MainActivity.java:

A text recognizer is created to find text. An OcrDetectorProcessor instance is set on it to receive the text recognition results and maintain a graphic for each text block on screen.

        // Creates and starts the camera.
        @SuppressLint("InlinedApi")
        private void createCameraSource(boolean autoFocus, boolean useFlash) {
            Context context = getApplicationContext();

            TextRecognizer textRecognizer = new TextRecognizer.Builder(context).build();
            textRecognizer.setProcessor(new OcrDetectorProcessor(mGraphicOverlay));

            if (!textRecognizer.isOperational()) {
                Log.w(TAG, "Detector dependencies are not yet available.");

                IntentFilter lowstorageFilter = new IntentFilter(Intent.ACTION_DEVICE_STORAGE_LOW);
                boolean hasLowStorage = registerReceiver(null, lowstorageFilter) != null;

                if (hasLowStorage) {
                    Toast.makeText(this, R.string.low_storage_error, Toast.LENGTH_LONG).show();
                    Log.w(TAG, getString(R.string.low_storage_error));
                }
            }

            // Creates and starts the camera.
            mCameraSource = new CameraSource.Builder(getApplicationContext(), textRecognizer)
                    .setFacing(CameraSource.CAMERA_FACING_BACK)
                    .setRequestedPreviewSize(1280, 1024)
                    .setRequestedFps(2.0f)
                    .setFlashMode(useFlash ? Camera.Parameters.FLASH_MODE_TORCH : null)
                    .setFocusMode(autoFocus ? Camera.Parameters.FOCUS_MODE_CONTINUOUS_PICTURE : null)
                    .build();
        }

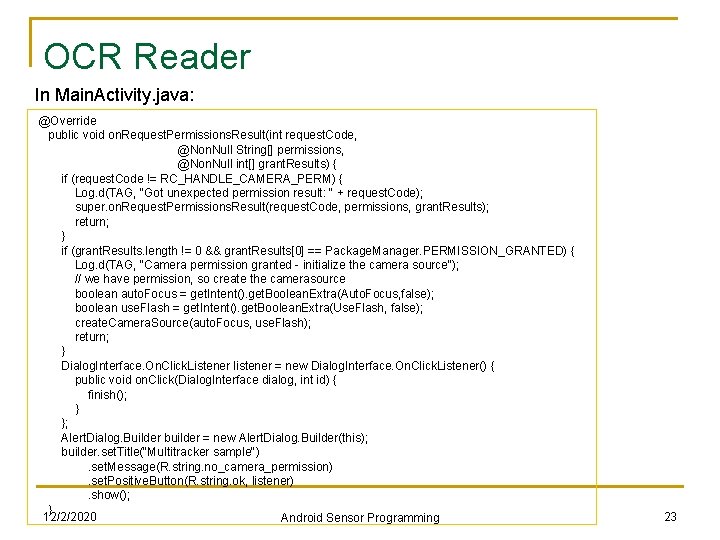

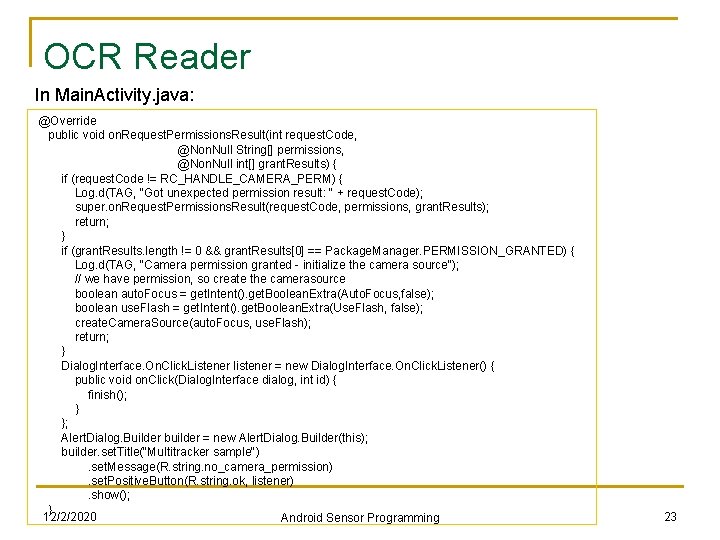

OCR Reader

In MainActivity.java:

        @Override
        public void onRequestPermissionsResult(int requestCode,
                                               @NonNull String[] permissions,
                                               @NonNull int[] grantResults) {
            if (requestCode != RC_HANDLE_CAMERA_PERM) {
                Log.d(TAG, "Got unexpected permission result: " + requestCode);
                super.onRequestPermissionsResult(requestCode, permissions, grantResults);
                return;
            }

            if (grantResults.length != 0 && grantResults[0] == PackageManager.PERMISSION_GRANTED) {
                Log.d(TAG, "Camera permission granted - initialize the camera source");
                // we have permission, so create the camerasource
                boolean autoFocus = getIntent().getBooleanExtra(AutoFocus, false);
                boolean useFlash = getIntent().getBooleanExtra(UseFlash, false);
                createCameraSource(autoFocus, useFlash);
                return;
            }

            DialogInterface.OnClickListener listener = new DialogInterface.OnClickListener() {
                public void onClick(DialogInterface dialog, int id) {
                    finish();
                }
            };

            AlertDialog.Builder builder = new AlertDialog.Builder(this);
            builder.setTitle("Multitracker sample")
                    .setMessage(R.string.no_camera_permission)
                    .setPositiveButton(R.string.ok, listener)
                    .show();
        }

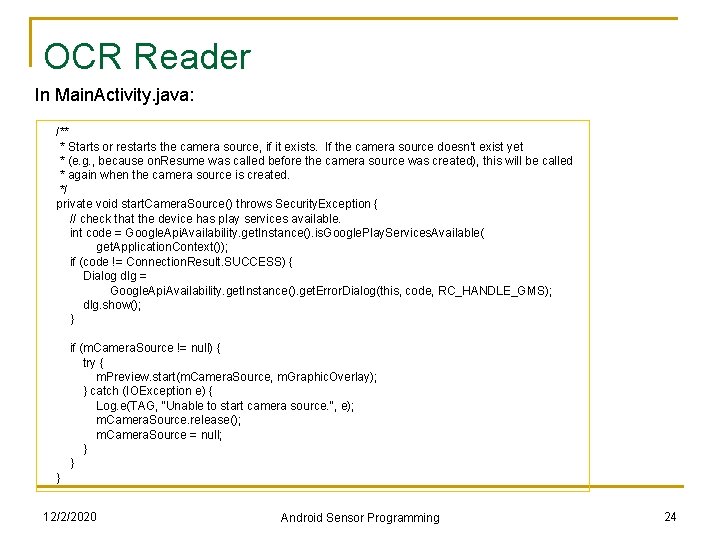

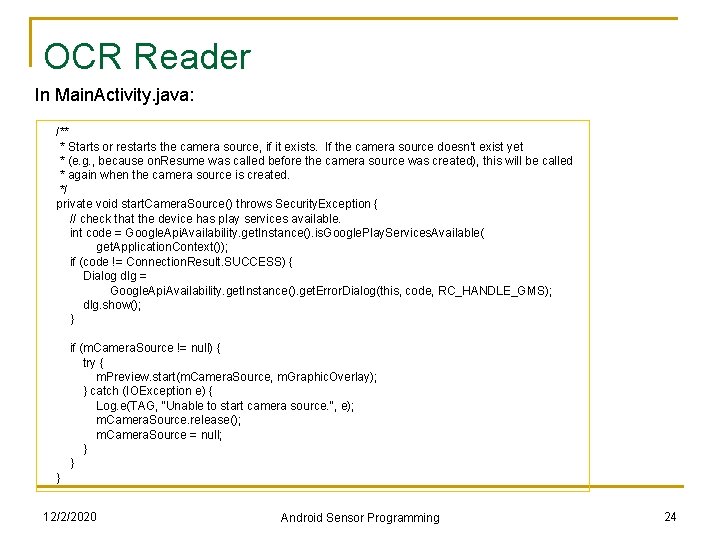

OCR Reader

In MainActivity.java:

        /**
         * Starts or restarts the camera source, if it exists. If the camera source doesn't exist yet
         * (e.g., because onResume was called before the camera source was created), this will be called
         * again when the camera source is created.
         */
        private void startCameraSource() throws SecurityException {
            // check that the device has play services available.
            int code = GoogleApiAvailability.getInstance().isGooglePlayServicesAvailable(
                    getApplicationContext());
            if (code != ConnectionResult.SUCCESS) {
                Dialog dlg = GoogleApiAvailability.getInstance().getErrorDialog(this, code, RC_HANDLE_GMS);
                dlg.show();
            }

            if (mCameraSource != null) {
                try {
                    mPreview.start(mCameraSource, mGraphicOverlay);
                } catch (IOException e) {
                    Log.e(TAG, "Unable to start camera source.", e);
                    mCameraSource.release();
                    mCameraSource = null;
                }
            }
        }

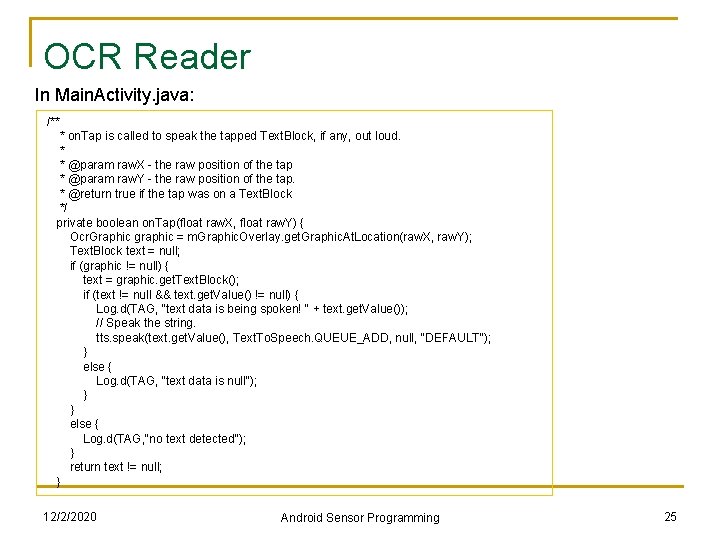

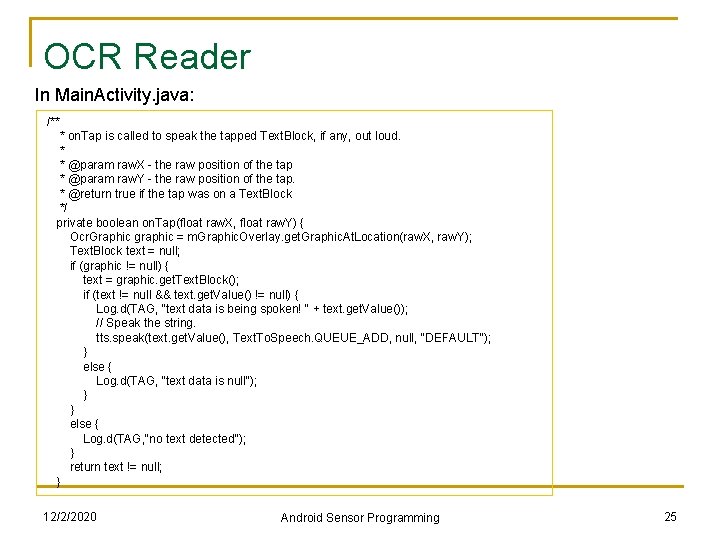

OCR Reader

In MainActivity.java:

        /**
         * onTap is called to speak the tapped TextBlock, if any, out loud.
         *
         * @param rawX - the raw position of the tap
         * @param rawY - the raw position of the tap.
         * @return true if the tap was on a TextBlock
         */
        private boolean onTap(float rawX, float rawY) {
            OcrGraphic graphic = mGraphicOverlay.getGraphicAtLocation(rawX, rawY);
            TextBlock text = null;
            if (graphic != null) {
                text = graphic.getTextBlock();
                if (text != null && text.getValue() != null) {
                    Log.d(TAG, "text data is being spoken! " + text.getValue());
                    // Speak the string.
                    tts.speak(text.getValue(), TextToSpeech.QUEUE_ADD, null, "DEFAULT");
                } else {
                    Log.d(TAG, "text data is null");
                }
            } else {
                Log.d(TAG, "no text detected");
            }
            return text != null;
        }

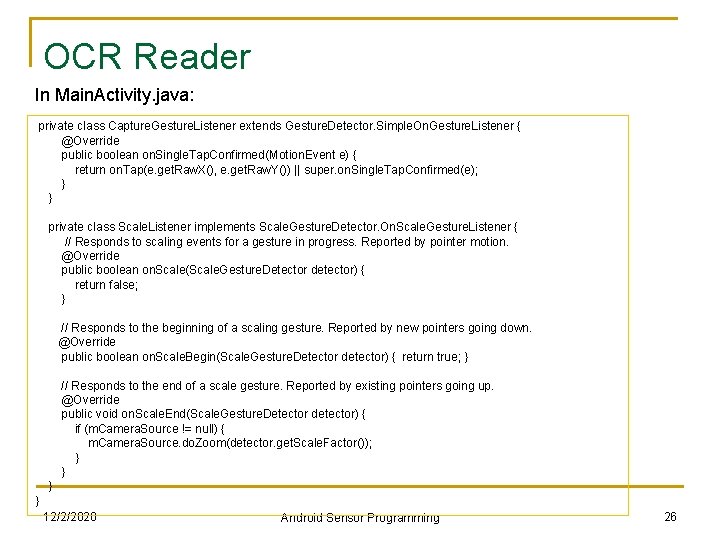

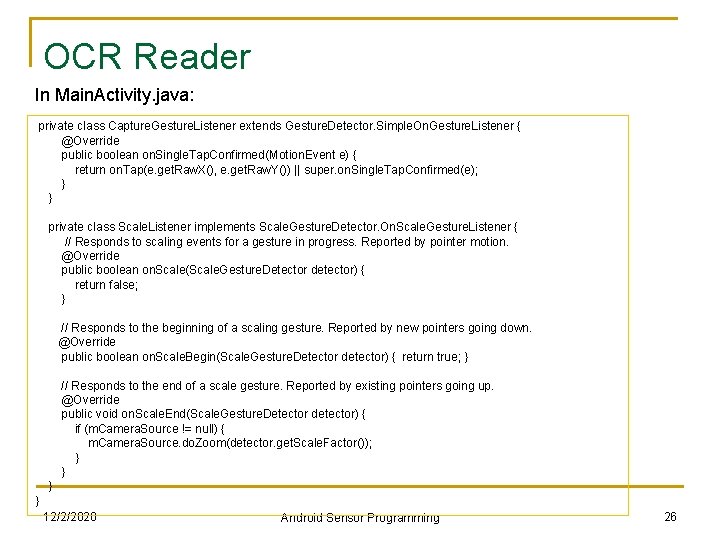

OCR Reader

In MainActivity.java:

        private class CaptureGestureListener extends GestureDetector.SimpleOnGestureListener {
            @Override
            public boolean onSingleTapConfirmed(MotionEvent e) {
                return onTap(e.getRawX(), e.getRawY()) || super.onSingleTapConfirmed(e);
            }
        }

        private class ScaleListener implements ScaleGestureDetector.OnScaleGestureListener {
            // Responds to scaling events for a gesture in progress. Reported by pointer motion.
            @Override
            public boolean onScale(ScaleGestureDetector detector) {
                return false;
            }

            // Responds to the beginning of a scaling gesture. Reported by new pointers going down.
            @Override
            public boolean onScaleBegin(ScaleGestureDetector detector) {
                return true;
            }

            // Responds to the end of a scale gesture. Reported by existing pointers going up.
            @Override
            public void onScaleEnd(ScaleGestureDetector detector) {
                if (mCameraSource != null) {
                    mCameraSource.doZoom(detector.getScaleFactor());
                }
            }
        } // end of ScaleListener

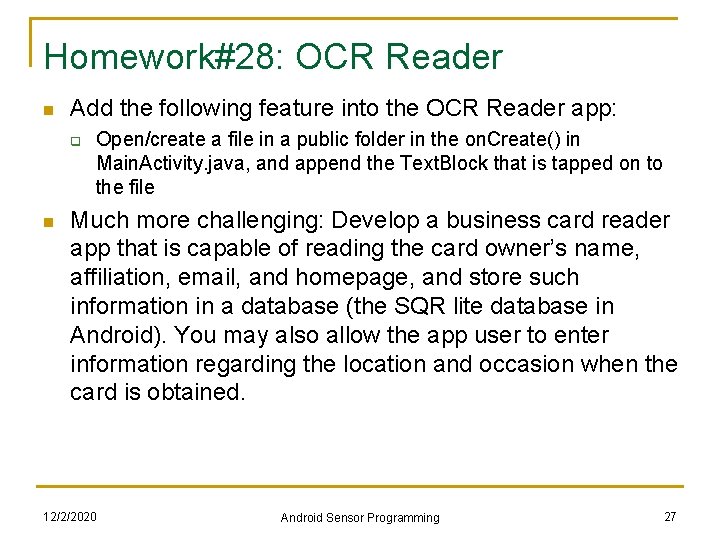

Homework #28: OCR Reader

- Add the following feature to the OCR Reader app:
  - Open/create a file in a public folder in onCreate() in MainActivity.java, and append each TextBlock that is tapped to the file
- Much more challenging: Develop a business card reader app that is capable of reading the card owner's name, affiliation, email, and homepage, and storing this information in a database (the SQLite database in Android). You may also allow the app user to enter information about the location and occasion where the card was obtained.