CIKM'02
Boosting to Correct Inductive Bias in Text Classification
Yan Liu, Yiming Yang and Jaime Carbonell
School of Computer Science, Carnegie Mellon University
Nov 1, 2002
Copyright © 2002, Carnegie Mellon. All Rights Reserved.

Introduction to Boosting
• Boosting
  – Run a weak learning algorithm on sampled examples
  – Combine the classifiers produced by the weak learner into a single composite classifier
• Characteristics
  – Error-driven sampling
  – Combination strategies
• Variants
  – AdaBoost vs. Adaptive Resampling

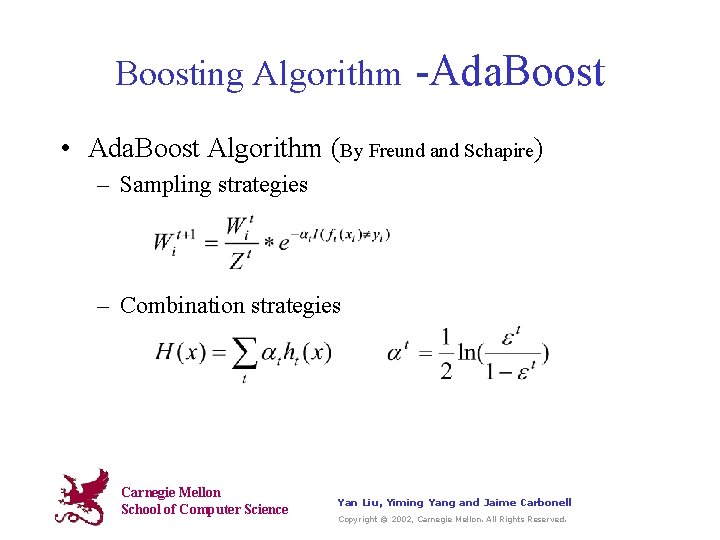

Boosting Algorithm: AdaBoost
• AdaBoost algorithm (Freund and Schapire)
  – Sampling strategies
  – Combination strategies
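The two ingredients named on this slide (an error-driven distribution over the training examples and a weighted combination of the resulting classifiers) can be illustrated with a minimal sketch. It assumes binary labels in {-1, +1}, one-dimensional inputs, and decision stumps as the weak learner. It uses the reweighting form of AdaBoost rather than explicit sampling. The function names are hypothetical and are not taken from the talk.

```python
import math

def train_stump(xs, ys, weights):
    """Return (weighted_error, threshold, polarity) of the best 1-D stump."""
    best = None
    for threshold in sorted(set(xs)):
        for polarity in (1, -1):
            preds = [polarity if x >= threshold else -polarity for x in xs]
            err = sum(w for w, p, y in zip(weights, preds, ys) if p != y)
            if best is None or err < best[0]:
                best = (err, threshold, polarity)
    return best

def stump_predict(x, threshold, polarity):
    return polarity if x >= threshold else -polarity

def adaboost(xs, ys, rounds=3):
    """Train an AdaBoost ensemble of stumps; returns (alpha, threshold, polarity) triples."""
    n = len(xs)
    weights = [1.0 / n] * n  # start from a uniform distribution
    ensemble = []
    for _ in range(rounds):
        err, threshold, polarity = train_stump(xs, ys, weights)
        err = min(max(err, 1e-10), 1 - 1e-10)  # guard against log(0)
        alpha = 0.5 * math.log((1 - err) / err)  # vote of this weak classifier
        ensemble.append((alpha, threshold, polarity))
        # Error-driven update: misclassified examples gain weight.
        weights = [w * math.exp(-alpha * y * stump_predict(x, threshold, polarity))
                   for w, x, y in zip(weights, xs, ys)]
        total = sum(weights)
        weights = [w / total for w in weights]
    return ensemble

def predict(ensemble, x):
    """Weighted vote of all weak classifiers."""
    score = sum(a * stump_predict(x, t, p) for a, t, p in ensemble)
    return 1 if score >= 0 else -1

xs = [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
ys = [1, 1, 1, -1, -1, -1, 1, 1, 1, -1]
model = adaboost(xs, ys, rounds=3)
print([predict(model, x) for x in xs])
```

No single stump can separate this toy data, which is why the weighted vote of several stumps is needed.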

Boosting Algorithm: AdaBoost
• Theoretical analysis
  – Bound on error
    • Training error drops exponentially fast [Schapire]
    • A qualitative bound on the generalization error
  – Connections
    • Logistic regression [Friedman]
    • Game theory and linear programming [Schapire]
    • Exponential model [Lebanon & Lafferty]
• Applications
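The "drops exponentially fast" claim refers to the standard training-error bound for AdaBoost. As a reminder, written in notation that is assumed here rather than taken from the slides: let $\epsilon_t$ be the weighted error of the weak classifier in round $t$, let $\gamma_t = \tfrac{1}{2} - \epsilon_t$ be its edge over random guessing, and let $H$ be the combined classifier after $T$ rounds on $m$ training examples.

```latex
\frac{1}{m}\,\bigl|\{\, i : H(x_i) \neq y_i \,\}\bigr|
  \;\le\; \prod_{t=1}^{T} 2\sqrt{\epsilon_t\,(1-\epsilon_t)}
  \;=\; \prod_{t=1}^{T} \sqrt{1 - 4\gamma_t^{2}}
  \;\le\; \exp\!\Bigl(-2\sum_{t=1}^{T} \gamma_t^{2}\Bigr)
```

If every weak classifier has an edge of at least $\gamma > 0$, the right-hand side is at most $e^{-2\gamma^{2}T}$, which is the exponential decrease in the number of rounds.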

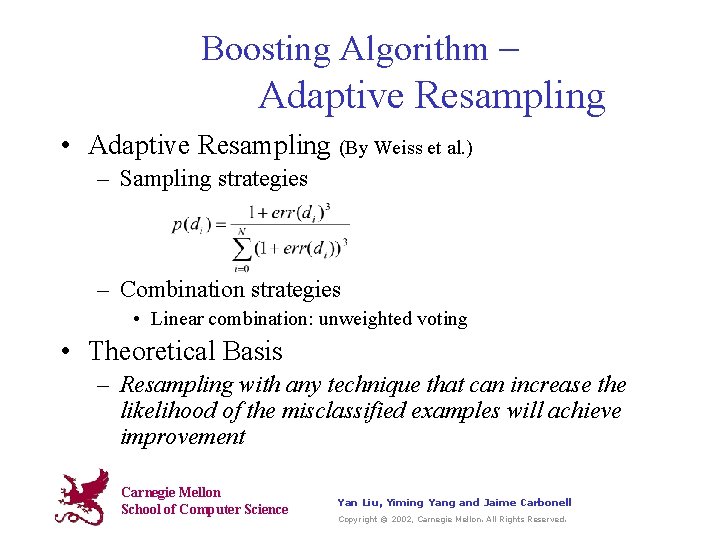

Boosting Algorithm: Adaptive Resampling
• Adaptive Resampling (Weiss et al.)
  – Sampling strategies
  – Combination strategies
    • Linear combination: unweighted voting
• Theoretical basis
  – Resampling with any technique that increases the likelihood of selecting misclassified examples will achieve an improvement
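A rough sketch of the idea on this slide: draw each training sample so that previously misclassified examples are more likely to be selected, and combine the classifiers by unweighted voting. The sampling weight used here (one plus the number of rounds in which the current ensemble misclassified the example) is an illustrative assumption and is not claimed to be the formula of Weiss et al. The stump learner and all function names are hypothetical.

```python
import random

def fit_stump(sample):
    """Return the (threshold, polarity) stump with the fewest errors on the sample."""
    best = None
    for threshold in sorted({x for x, _ in sample}):
        for polarity in (1, -1):
            errors = sum(1 for x, y in sample
                         if (polarity if x >= threshold else -polarity) != y)
            if best is None or errors < best[0]:
                best = (errors, threshold, polarity)
    return best[1], best[2]

def stump_predict(stump, x):
    threshold, polarity = stump
    return polarity if x >= threshold else -polarity

def vote(classifiers, x):
    """Unweighted vote: every classifier counts equally."""
    total = sum(stump_predict(c, x) for c in classifiers)
    return 1 if total >= 0 else -1

def adaptive_resampling(data, rounds=5, seed=0):
    rng = random.Random(seed)
    error_counts = [0] * len(data)
    classifiers = []
    for _ in range(rounds):
        # Assumed weighting: examples misclassified more often are sampled more often.
        sampling_weights = [1 + e for e in error_counts]
        sample = rng.choices(data, weights=sampling_weights, k=len(data))
        classifiers.append(fit_stump(sample))
        for i, (x, y) in enumerate(data):
            if vote(classifiers, x) != y:
                error_counts[i] += 1
    return classifiers

data = [(0, 1), (1, 1), (2, 1), (3, -1), (4, -1), (5, -1), (6, 1), (7, 1), (8, 1), (9, -1)]
ensemble = adaptive_resampling(data, rounds=5)
print([vote(ensemble, x) for x, _ in data])
```

Unlike AdaBoost, the combination step assigns no per-classifier weight, so only the sampling step is error-driven.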

Task Identification
• Perspective
  – How does boosting react to the inductive bias of different classifiers?
• Main focus
  – How well does boosting work for "non-weak" learning algorithms?
    • Decision Tree, Naïve Bayes, Support Vector Machines and Rocchio-based classifier

Inductive Bias
• Inductive learning
  – Inducing classification functions from a set of training examples
• Inductive bias
  – The underlying assumptions in the inductive inferences
  – Restriction bias vs. preference bias
    • Search space vs. search strategy

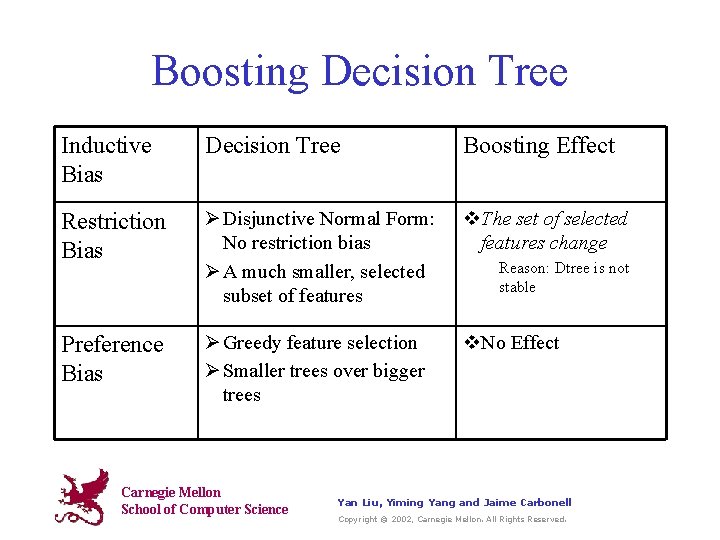

Boosting Decision Tree

| Inductive Bias | Decision Tree | Boosting Effect |
| --- | --- | --- |
| Restriction bias | Disjunctive normal form: no restriction bias; a much smaller, selected subset of features | The set of selected features changes |
| Preference bias | Greedy feature selection; smaller trees preferred over bigger trees | No effect |

Reason: DTree is not stable

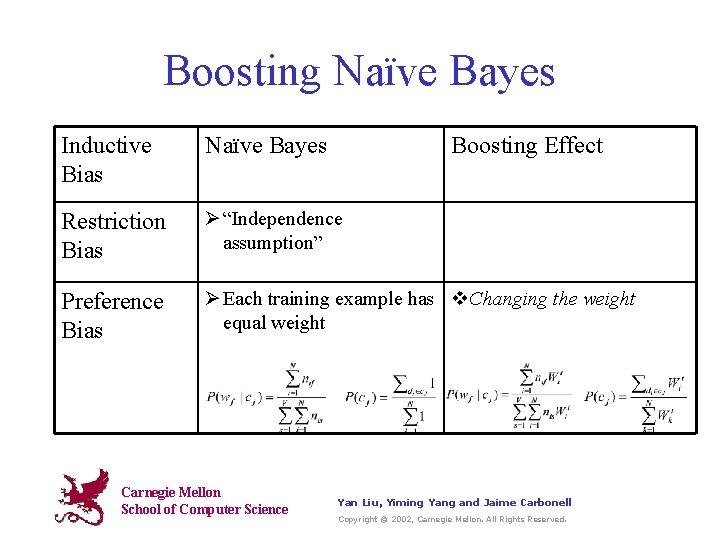

Boosting Naïve Bayes

| Inductive Bias | Naïve Bayes | Boosting Effect |
| --- | --- | --- |
| Restriction bias | "Independence assumption" | |
| Preference bias | Each training example has equal weight | Changing the weight |

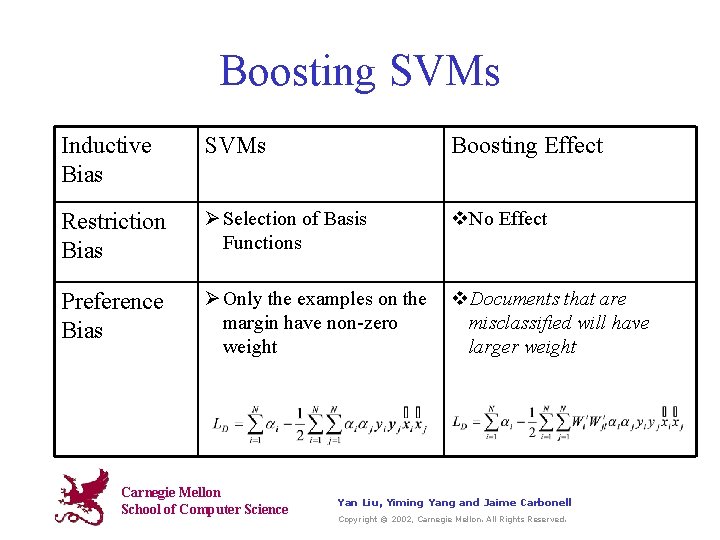

Boosting SVMs

| Inductive Bias | SVMs | Boosting Effect |
| --- | --- | --- |
| Restriction bias | Selection of basis functions | No effect |
| Preference bias | Only the examples on the margin have non-zero weight | Documents that are misclassified will have larger weight |

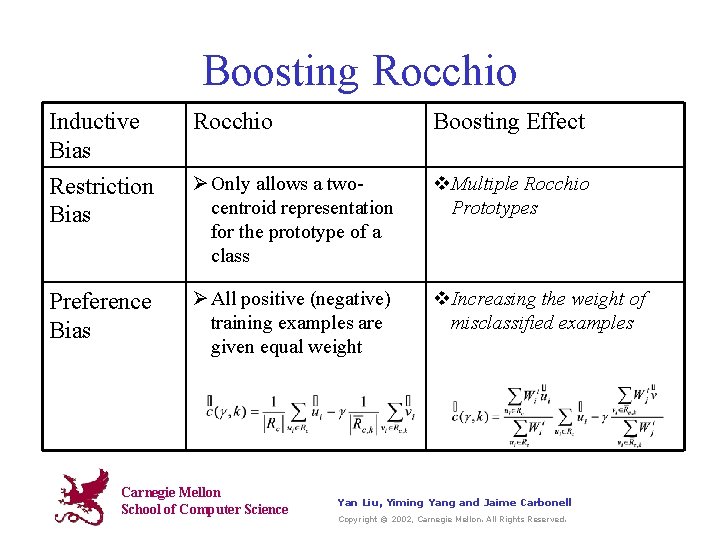

Boosting Rocchio

| Inductive Bias | Rocchio | Boosting Effect |
| --- | --- | --- |
| Restriction bias | Only allows a two-centroid representation for the prototype of a class | Multiple Rocchio prototypes |
| Preference bias | All positive (negative) training examples are given equal weight | Increasing the weight of misclassified examples |
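To make the preference-bias row concrete, the sketch below builds a Rocchio-style prototype from a positive and a negative centroid, first with equal example weights and then with a larger weight on one positive example, as boosting would assign after a misclassification. The prototype form (positive centroid minus `beta` times negative centroid), the parameter name `beta`, and the function names are assumptions for illustration, not the configuration used in the experiments.

```python
def weighted_centroid(vectors, weights):
    """Weighted mean of equal-length vectors."""
    total = sum(weights)
    dims = len(vectors[0])
    return [sum(w * v[d] for v, w in zip(vectors, weights)) / total
            for d in range(dims)]

def rocchio_prototype(pos, neg, pos_weights=None, neg_weights=None, beta=1.0):
    """Prototype = centroid of positives minus beta times centroid of negatives."""
    if pos_weights is None:
        pos_weights = [1.0] * len(pos)  # plain Rocchio: equal weights
    if neg_weights is None:
        neg_weights = [1.0] * len(neg)
    pos_centroid = weighted_centroid(pos, pos_weights)
    neg_centroid = weighted_centroid(neg, neg_weights)
    return [p - beta * n for p, n in zip(pos_centroid, neg_centroid)]

pos = [[1.0, 0.0], [3.0, 0.0]]
neg = [[0.0, 2.0]]
print(rocchio_prototype(pos, neg))                          # equal weights
print(rocchio_prototype(pos, neg, pos_weights=[1.0, 3.0]))  # second positive up-weighted
```

Raising the weight of the second positive example pulls the prototype toward it. Training one prototype per boosting round then yields the multiple prototypes listed in the restriction-bias row.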

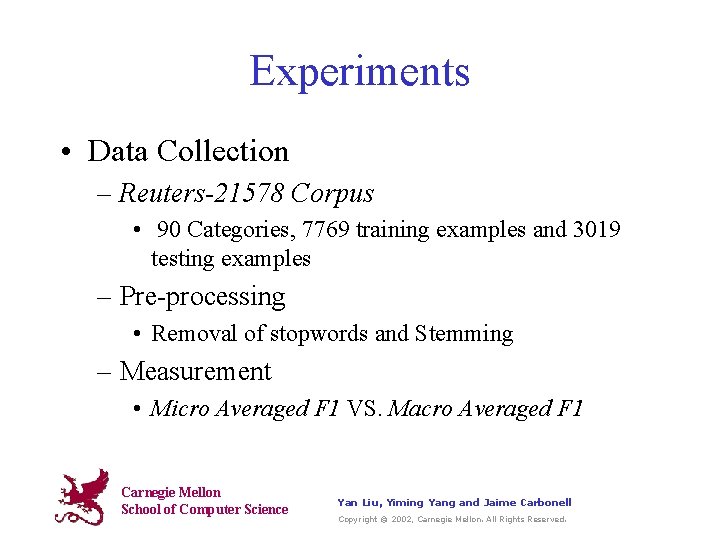

Experiments
• Data collection
  – Reuters-21578 corpus
    • 90 categories, 7769 training examples and 3019 testing examples
  – Pre-processing
    • Removal of stopwords and stemming
  – Measurement
    • Micro-averaged F1 vs. macro-averaged F1
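The two measures differ in how they aggregate over categories: micro-averaging pools the per-category counts before computing F1, so common categories dominate, while macro-averaging computes F1 per category and then averages, so rare categories count equally. A small sketch follows; the counts are made up for illustration and are not results from the talk, and the function names are hypothetical.

```python
def f1(tp, fp, fn):
    """F1 from true positives, false positives and false negatives."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0

def micro_f1(counts):
    """Pool the counts over all categories, then compute a single F1."""
    tp = sum(c[0] for c in counts.values())
    fp = sum(c[1] for c in counts.values())
    fn = sum(c[2] for c in counts.values())
    return f1(tp, fp, fn)

def macro_f1(counts):
    """Compute F1 per category, then take the unweighted mean."""
    scores = [f1(*c) for c in counts.values()]
    return sum(scores) / len(scores)

# Hypothetical (tp, fp, fn) counts for one common and one rare category.
counts = {"common": (90, 10, 10), "rare": (1, 0, 3)}
print(round(micro_f1(counts), 4), round(macro_f1(counts), 4))
```

In this toy case the rare category barely moves the micro average but pulls the macro average down sharply, which is why gains on rare categories show up mainly in macro-averaged F1.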

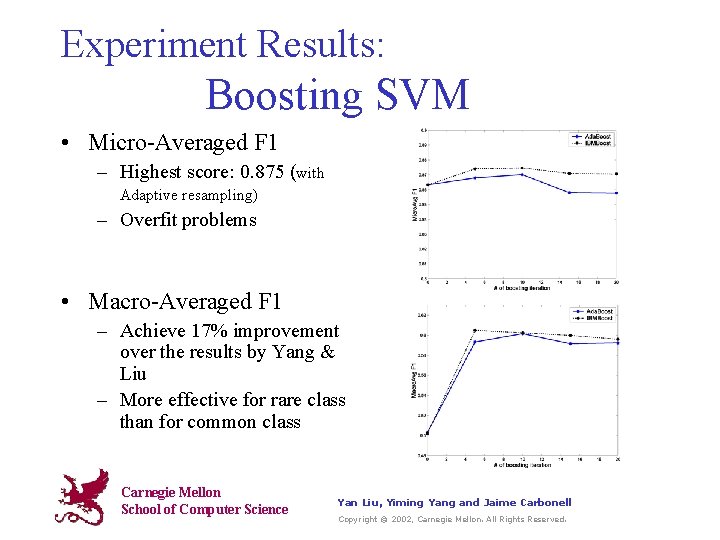

Experiment Results: Boosting SVM
• Micro-averaged F1
  – Highest score: 0.875 (with Adaptive Resampling)
  – Overfitting problems
• Macro-averaged F1
  – Achieves a 17% improvement over the results of Yang & Liu
  – More effective for rare classes than for common classes

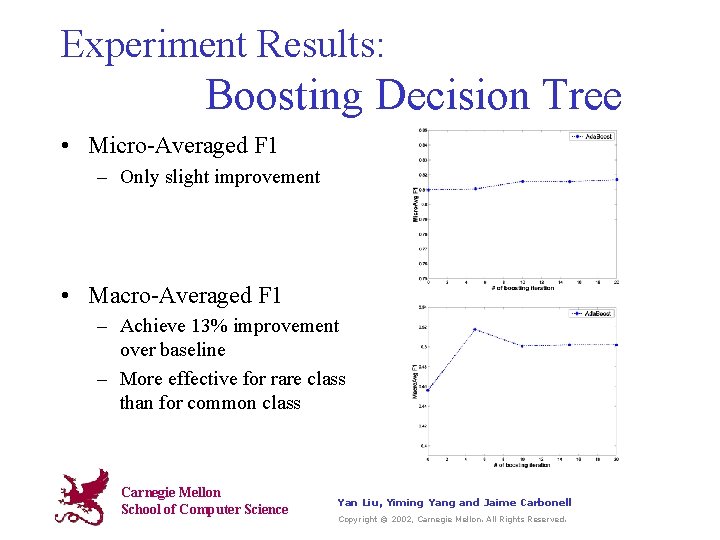

Experiment Results: Boosting Decision Tree
• Micro-averaged F1
  – Only slight improvement
• Macro-averaged F1
  – Achieves a 13% improvement over the baseline
  – More effective for rare classes than for common classes

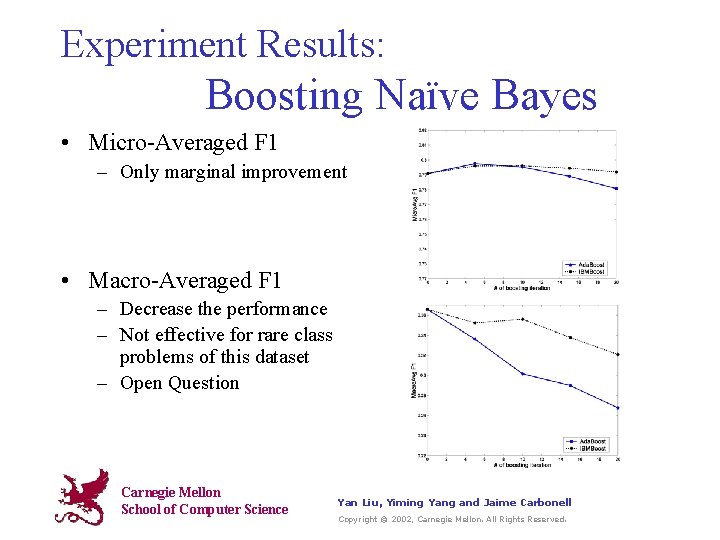

Experiment Results: Boosting Naïve Bayes
• Micro-averaged F1
  – Only marginal improvement
• Macro-averaged F1
  – Performance decreases
  – Not effective for the rare-class problems of this dataset
  – Open question

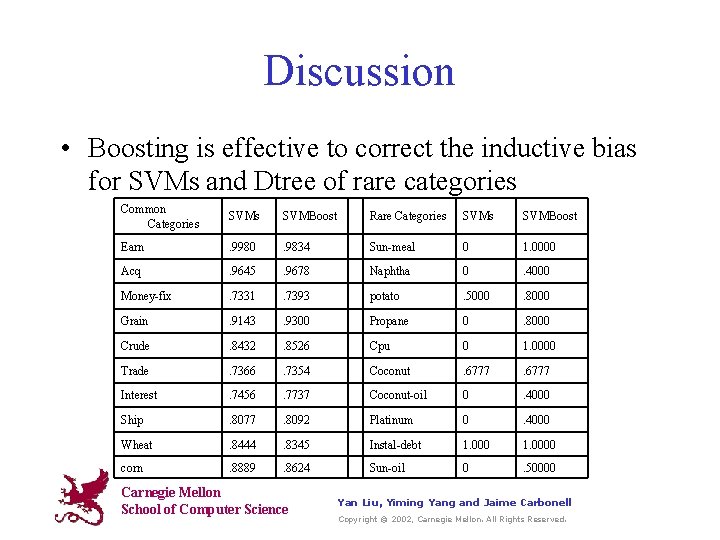

Discussion
• Boosting is effective at correcting the inductive bias of SVMs and DTree on rare categories

| Common Categories | SVM | Boost | Rare Categories | SVM | Boost |
| --- | --- | --- | --- | --- | --- |
| Earn | .9980 | .9834 | Sun-meal | 0 | 1.0000 |
| Acq | .9645 | .9678 | Naphtha | 0 | .4000 |
| Money-fx | .7331 | .7393 | Potato | .5000 | .8000 |
| Grain | .9143 | .9300 | Propane | 0 | .8000 |
| Crude | .8432 | .8526 | Cpu | 0 | 1.0000 |
| Trade | .7366 | .7354 | Coconut | .6777 (single value; column unclear) | |
| Interest | .7456 | .7737 | Coconut-oil | 0 | .4000 |
| Ship | .8077 | .8092 | Platinum | 0 | .4000 |
| Wheat | .8444 | .8345 | Instal-debt | 1.0000 (single value; column unclear) | |
| Corn | .8889 | .8624 | Sun-oil | 0 | .5000 |

Conclusion
• Rare categories: the effectiveness of boosting at correcting the inductive bias varies across classifiers
  – Good for SVMs and DTree
  – We achieve a 13-17% improvement in macro-averaged F1 by boosting SVMs and DTree
• Common categories: boosting is not significantly effective at correcting the inductive bias
  – However, we achieve the best micro-averaged F1 by boosting SVMs
