Chinese Poetry Generation with Planning based Neural Network

Yiding Wen, Jiefu Liang, Jiabao Zeng

Poetry Generation
More than text generation: a poem must follow specific structural, rhythmical and tonal patterns.
Some approaches:
● Based on semantic and grammar templates
● Statistical machine translation methods
● Treating it as a sequence-to-sequence generation problem
● ...

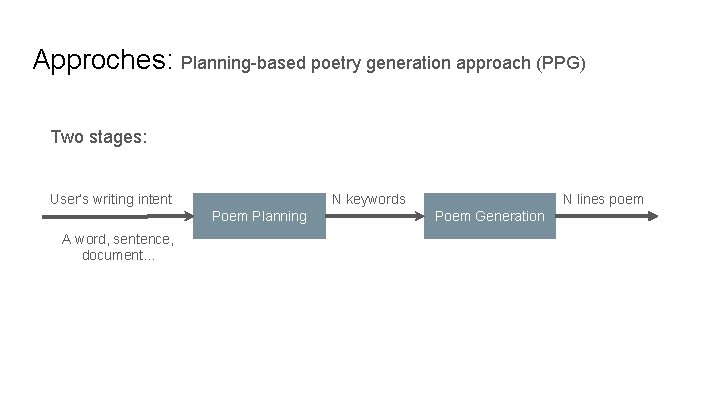

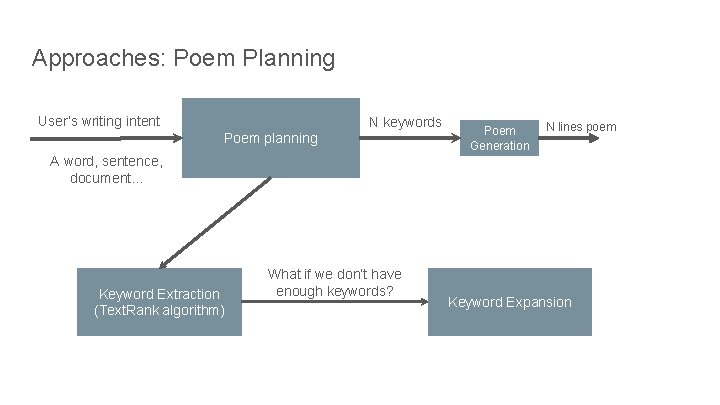

Approaches: Planning-based poetry generation approach (PPG)
Two stages:
● Poem Planning: user's writing intent (a word, sentence, document...) -> N keywords
● Poem Generation: N keywords -> N-line poem

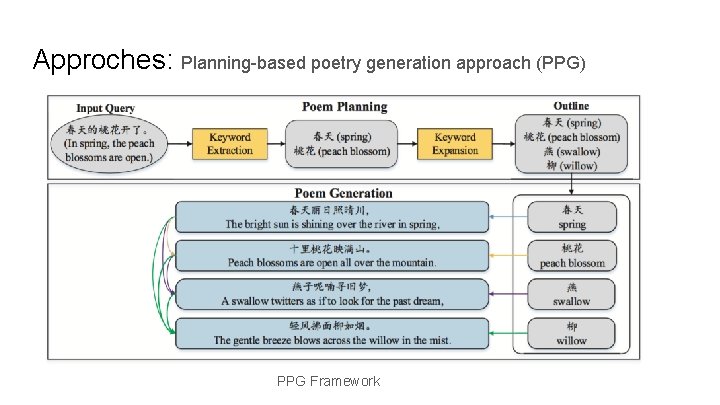

Approaches: Planning-based poetry generation approach (PPG)
PPG Framework

Approaches: Poem Planning
User's writing intent (a word, sentence, document...) -> Poem Planning -> N keywords -> Poem Generation -> N-line poem
● Keyword Extraction (TextRank algorithm)
● Keyword Expansion: what if we don't have enough keywords?

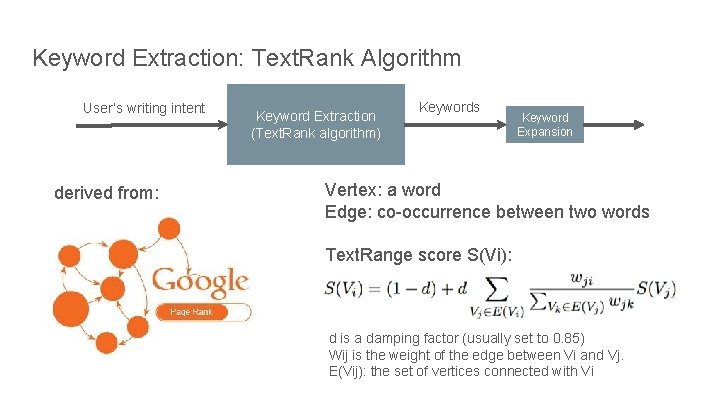

Keyword Extraction: TextRank Algorithm
Keywords are derived from the user's writing intent: Keyword Extraction (TextRank algorithm) -> Keywords -> Keyword Expansion
● Vertex: a word
● Edge: co-occurrence between two words
● TextRank score S(Vi), where:
- d is a damping factor (usually set to 0.85)
- wij is the weight of the edge between Vi and Vj
- E(Vi) is the set of vertices connected with Vi
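A minimal sketch of the TextRank iteration described on this slide, assuming the standard weighted update S(Vi) = (1 - d) + d * sum over Vj in E(Vi) of (wji / sum over Vk in E(Vj) of wjk) * S(Vj). The function names, the sliding-window co-occurrence rule and the toy word lists are illustrative assumptions, not taken from the slides.

```python
def build_cooccurrence_graph(sentences, window=3):
    """Build an undirected graph: vertices are words, edge weights count
    how often two different words co-occur within `window` positions.
    The window rule is an assumption made for this sketch."""
    graph = {}
    for words in sentences:
        for i, a in enumerate(words):
            for b in words[i + 1 : i + window]:
                if a == b:
                    continue
                graph.setdefault(a, {}).setdefault(b, 0.0)
                graph.setdefault(b, {}).setdefault(a, 0.0)
                graph[a][b] += 1.0
                graph[b][a] += 1.0
    return graph


def textrank(graph, d=0.85, max_iter=100, tol=1e-8):
    """Iterate the weighted TextRank update until scores stop changing."""
    scores = {v: 1.0 for v in graph}
    for _ in range(max_iter):
        new_scores = {}
        for vi, neighbours in graph.items():
            total = 0.0
            for vj, w_ji in neighbours.items():
                # Normalise by the total edge weight leaving Vj.
                total += w_ji / sum(graph[vj].values()) * scores[vj]
            new_scores[vi] = (1.0 - d) + d * total
        delta = max(abs(new_scores[v] - scores[v]) for v in graph)
        scores = new_scores
        if delta < tol:
            break
    return scores


# Toy, already-segmented input (hypothetical words).
sentences = [
    ["spring", "river", "moon"],
    ["spring", "flower"],
    ["spring", "moon", "night"],
]
scores = textrank(build_cooccurrence_graph(sentences))
ranked = sorted(scores, key=scores.get, reverse=True)
print(ranked)
```

The highest-scoring words would then serve as the extracted keywords; in practice the input would be the word-segmented text of the user's writing intent.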

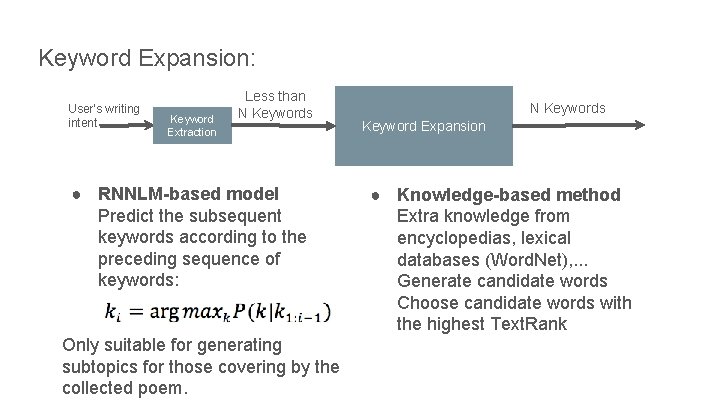

Keyword Expansion
User's writing intent -> Keyword Extraction -> fewer than N keywords -> Keyword Expansion -> N keywords
● RNNLM-based model
- Predicts the subsequent keywords according to the preceding sequence of keywords
- Only suitable for generating subtopics that are covered by the collected poems
● Knowledge-based method
- Uses extra knowledge from encyclopedias, lexical databases (WordNet), ...
- Generate candidate words
- Choose the candidate words with the highest TextRank score
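The knowledge-based expansion step can be sketched roughly as follows. This is only an illustration: the `related` dictionary stands in for whatever knowledge source supplies candidate words (an encyclopedia, WordNet, ...), `scores` is assumed to hold precomputed TextRank scores, and all names and values are hypothetical.

```python
def expand_keywords(keywords, n, related, scores):
    """Pad `keywords` up to `n` entries with the highest-scoring candidates.

    related: hypothetical mapping keyword -> list of candidate words
    scores:  hypothetical mapping word -> TextRank score
    """
    expanded = list(keywords)
    candidates = {
        c for k in keywords for c in related.get(k, []) if c not in expanded
    }
    # Highest TextRank score first; ties broken alphabetically for determinism.
    ranked = sorted(candidates, key=lambda w: (-scores.get(w, 0.0), w))
    missing = max(0, n - len(expanded))
    expanded.extend(ranked[:missing])
    return expanded


# Toy example: one extracted keyword, four needed for a quatrain.
related = {"spring": ["flower", "rain", "wind", "swallow"]}
scores = {"flower": 0.9, "rain": 0.5, "wind": 0.7, "swallow": 0.2}
print(expand_keywords(["spring"], 4, related, scores))
```

If fewer candidates exist than keywords are missing, this sketch simply returns a shorter list; a real system would need a fallback such as the RNNLM-based model.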

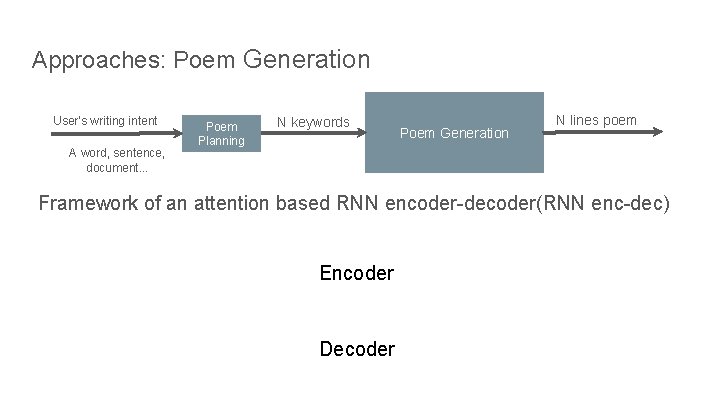

Approaches: Poem Generation
User's writing intent (a word, sentence, document...) -> Poem Planning -> N keywords -> Poem Generation -> N-line poem
Framework of an attention-based RNN encoder-decoder (RNN enc-dec): Encoder, Decoder

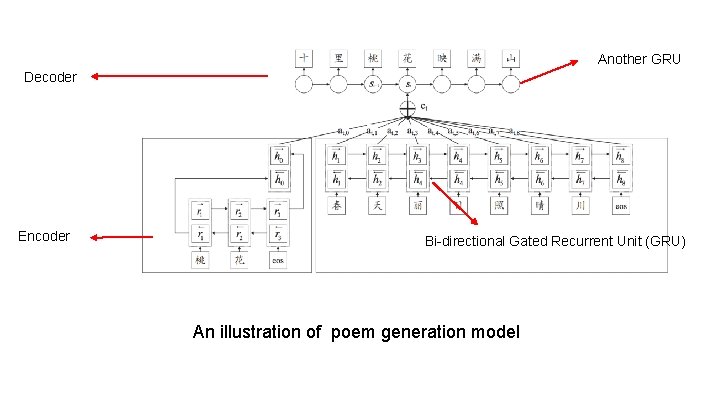

An illustration of the poem generation model
● Encoder: bi-directional Gated Recurrent Unit (GRU)
● Decoder: another GRU

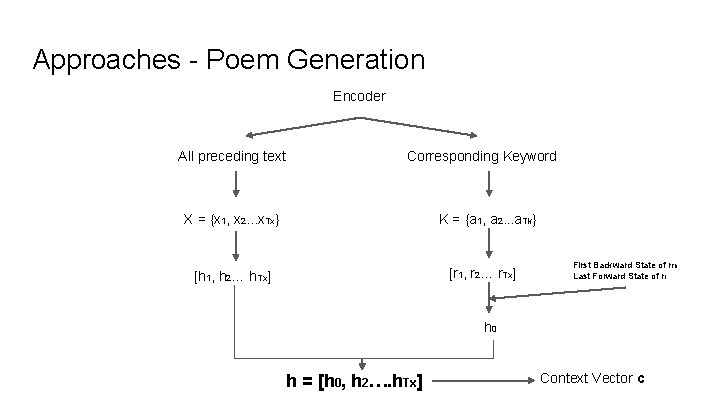

Approaches - Poem Generation
Encoder
● Corresponding keyword: K = {a1, a2, ..., aTk}, encoded into hidden states [r1, r2, ..., rTk]
● All preceding text: X = {x1, x2, ..., xTx}, encoded into hidden states [h1, h2, ..., hTx]
● h0: the last forward state and the first backward state of [r1, r2, ..., rTk]
● h = [h0, h1, ..., hTx], used to compute the context vector c
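A small sketch of how the encoder states might be assembled, under one reading of this slide: the keyword summary h0 concatenates the last forward state with the backward state at the first position (each of which has read the whole keyword), and h0 is then prepended to the states of the preceding text. The function names and the toy two-dimensional states are assumptions; real states would come from a bi-directional GRU.

```python
def keyword_summary(r_forward, r_backward):
    """Concatenate the last forward state and the first-position backward
    state of the keyword encoding (assumed reading of the slide)."""
    return list(r_forward[-1]) + list(r_backward[0])


def encoder_states(r_forward, r_backward, h_states):
    """Return h = [h0, h1, ..., hTx], with h0 summarising the keyword."""
    h0 = keyword_summary(r_forward, r_backward)
    for h in h_states:
        # Each hj is already a forward+backward concatenation of the same size.
        if len(h) != len(h0):
            raise ValueError("state sizes do not match")
    return [h0] + [list(h) for h in h_states]


# Toy states: keyword of 2 characters, preceding text of 2 characters.
r_fwd = [[0.1, 0.2], [0.3, 0.4]]
r_bwd = [[0.5, 0.6], [0.7, 0.8]]
h_text = [[1.0, 1.0, 1.0, 1.0], [2.0, 2.0, 2.0, 2.0]]
h = encoder_states(r_fwd, r_bwd, h_text)
print(len(h), h[0])
```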

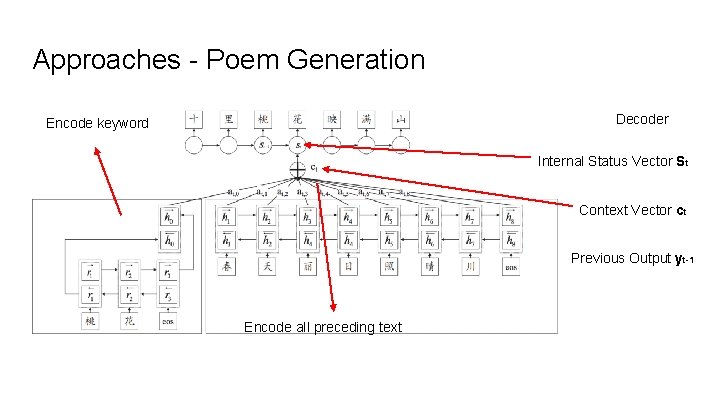

Approaches - Poem Generation
Decoder
● Internal status vector st
● Context vector ct (encodes the keyword and all preceding text)
● Previous output yt-1
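The context vector ct can be illustrated with a generic additive attention step. This is a sketch assuming the usual attention-based encoder-decoder formulation (energies e_j = v . tanh(W s + U h_j), weights from a softmax, ct as the weighted sum of encoder states); the slides do not give the exact scoring function, and the parameter names W, U and v are placeholders.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def matvec(matrix, vector):
    return [dot(row, vector) for row in matrix]


def softmax(values):
    m = max(values)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in values]
    total = sum(exps)
    return [e / total for e in exps]


def attention_context(s_prev, h, W, U, v):
    """Return (attention weights, context vector) over encoder states h."""
    ws = matvec(W, s_prev)
    energies = []
    for h_j in h:
        uh = matvec(U, h_j)
        energies.append(dot(v, [math.tanh(a + b) for a, b in zip(ws, uh)]))
    alphas = softmax(energies)
    dim = len(h[0])
    context = [sum(a * h_j[i] for a, h_j in zip(alphas, h)) for i in range(dim)]
    return alphas, context


# Toy parameters: with all-zero W and U every position gets equal weight.
zeros = [[0.0, 0.0], [0.0, 0.0]]
alphas, c_t = attention_context([1.0, 0.0], [[1.0, 0.0], [0.0, 1.0]], zeros, zeros, [1.0, 1.0])
print(alphas, c_t)
```

The decoder would then combine ct with its internal status vector st and the previous output yt-1 to predict the next character.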

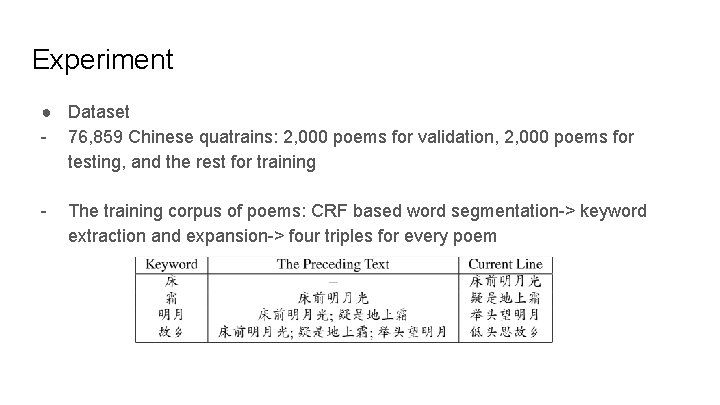

Experiment
● Dataset
- 76,859 Chinese quatrains: 2,000 poems for validation, 2,000 poems for testing, and the rest for training
- The training corpus of poems: CRF-based word segmentation -> keyword extraction and expansion -> four triples for every poem
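A sketch of the data preparation described above. The split sizes come from the slide; the shuffling, the seed and the function names are assumptions. The triple layout (keyword, preceding text, current line) is also an assumed reading of "four triples for every poem", one triple per line of a quatrain.

```python
import random


def split_corpus(poems, n_valid=2000, n_test=2000, seed=0):
    """Shuffle and split into train / validation / test sets."""
    poems = list(poems)
    random.Random(seed).shuffle(poems)
    valid = poems[:n_valid]
    test = poems[n_valid : n_valid + n_test]
    train = poems[n_valid + n_test :]
    return train, valid, test


def poem_to_triples(lines, keywords):
    """Build one (keyword, preceding text, current line) triple per line."""
    if len(lines) != len(keywords):
        raise ValueError("need exactly one keyword per line")
    triples = []
    for i, (line, keyword) in enumerate(zip(lines, keywords)):
        preceding = "".join(lines[:i])  # empty for the first line
        triples.append((keyword, preceding, line))
    return triples


# Placeholder strings stand in for real poem lines and keywords.
lines = ["line1", "line2", "line3", "line4"]
keywords = ["kw1", "kw2", "kw3", "kw4"]
for triple in poem_to_triples(lines, keywords):
    print(triple)
```

With 76,859 poems, this split leaves 72,859 poems for training.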

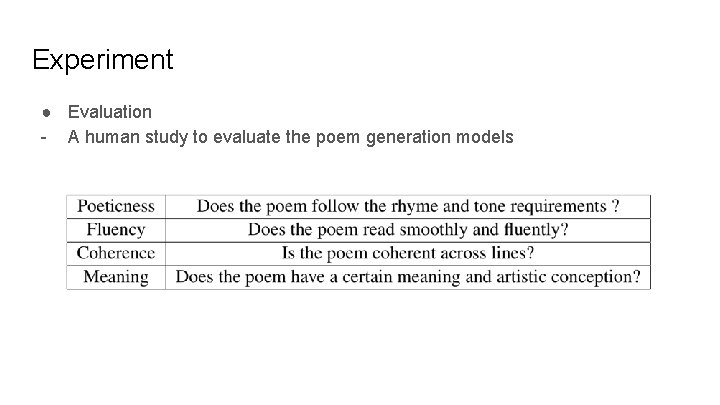

Experiment
● Evaluation
- A human study to evaluate the poem generation models

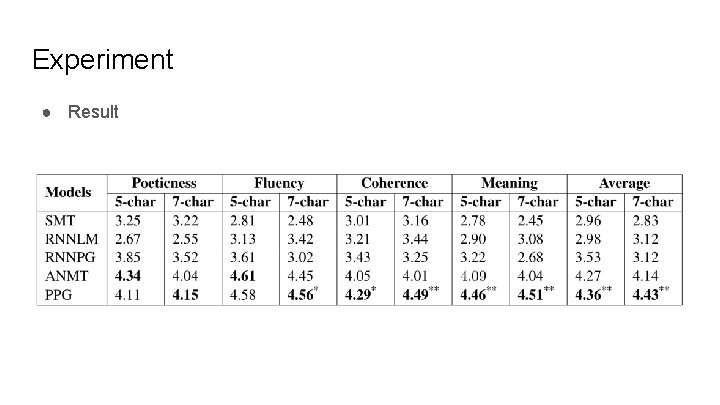

Experiment
● Result

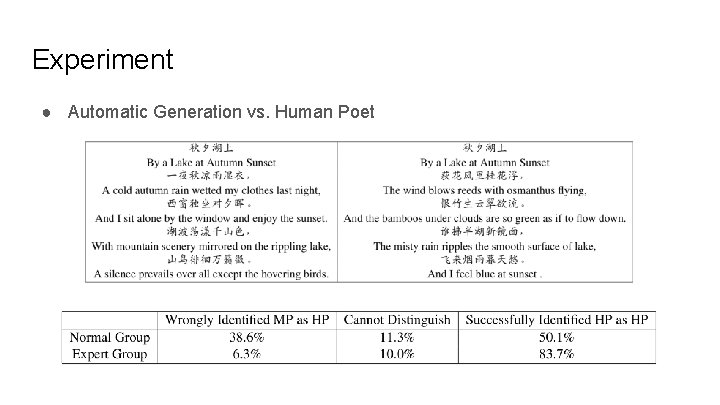

Experiment
● Automatic Generation vs. Human Poet

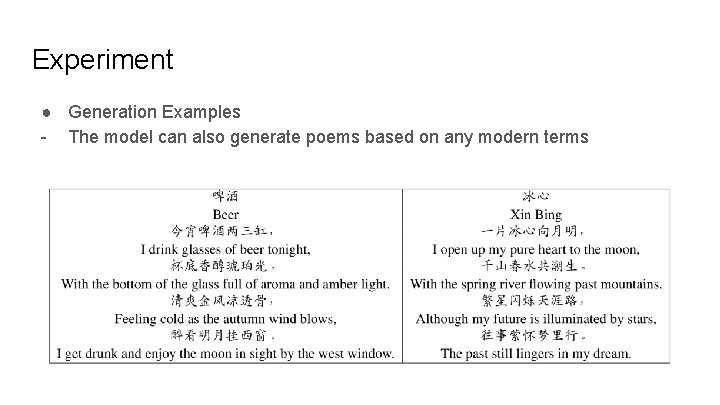

Experiment
● Generation Examples
- The model can also generate poems based on modern terms

Conclusion and Future Work
- PPG (Planning-based Poetry Generation): two stages
- Topic planning: pLSA, LDA or word2vec
- Other literary genres: Song iambics, Yuan Qu, etc., or poems in other languages

Thank you! Enjoy the spring break and good luck on homework : )
