Characterizing the Sort Operation on Multithreaded Architectures


Layali Rashid, Wessam M. Hassanein, and Moustafa A. Hammad*

The Advanced Computer Architecture Group @ U of C (ACAG), Department of Electrical and Computer Engineering
*Department of Computer Science
University of Calgary

Outline

- Multithreaded Architecture
- Motivation
- Parallel Radix Sort and Quick Sort
- Our Approach: Multithreaded Radix and Quick Sort
- Experimental Methodology
- Timing and Memory Analysis
- Conclusions

SDMAS'08, University of Calgary

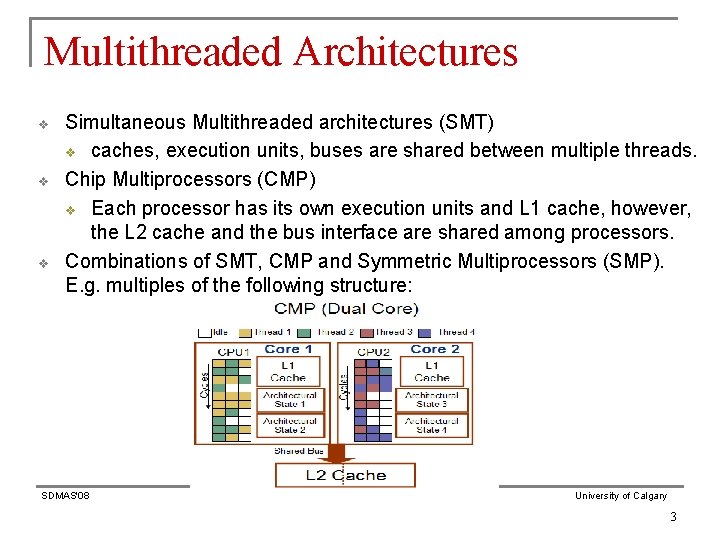

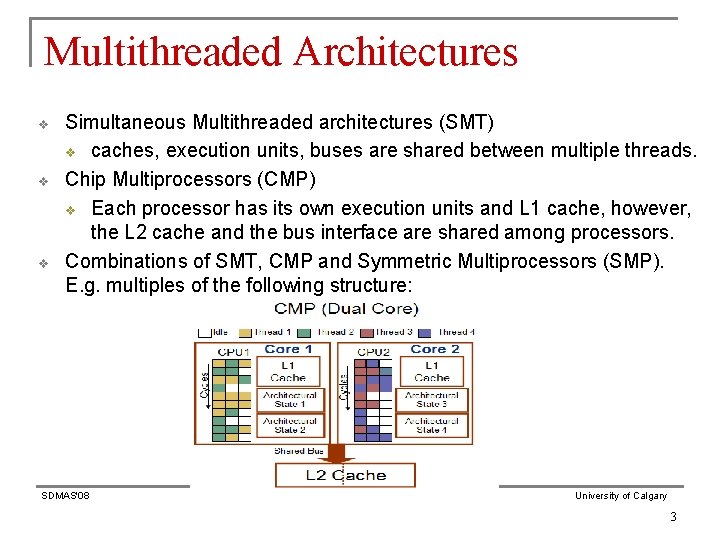

Multithreaded Architectures

- Simultaneous Multithreaded architectures (SMT): caches, execution units, and buses are shared between multiple threads.
- Chip Multiprocessors (CMP): each processor has its own execution units and L1 cache; however, the L2 cache and the bus interface are shared among processors.
- Combinations of SMT, CMP and Symmetric Multiprocessors (SMP), e.g. multiples of the structure shown in the slide figure.

Motivation

- New forms of multithreading have opened opportunities for the improvement of data management operations to better utilize the underlying hardware resources.
- The sort operation is a core part of many critical applications.
- Sorts suffer from high data dependencies that vastly limit performance.

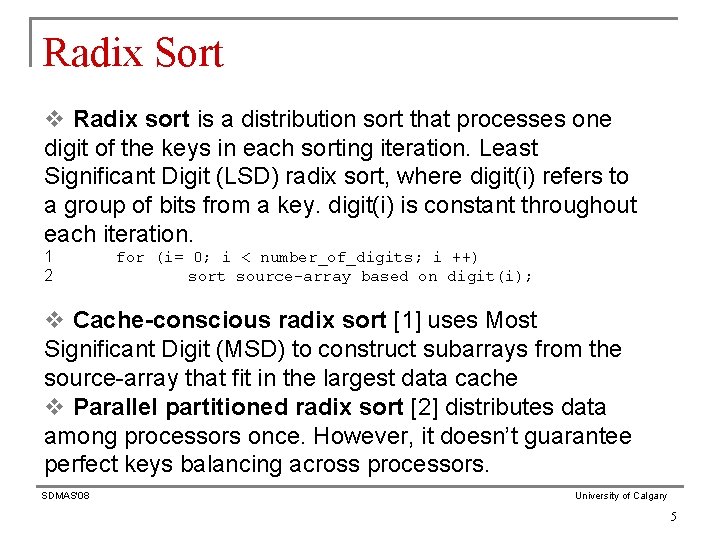

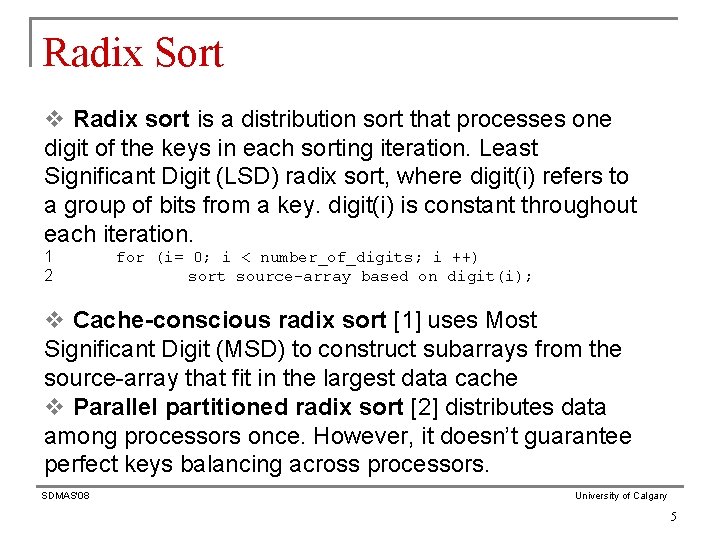

Radix Sort

- Radix sort is a distribution sort that processes one digit of the keys in each sorting iteration.
- In Least Significant Digit (LSD) radix sort, digit(i) refers to a group of bits from a key, and the digit position i is fixed throughout each iteration:

      for (i = 0; i < number_of_digits; i++)
          sort source-array based on digit(i);

- Cache-conscious radix sort [1] uses the Most Significant Digit (MSD) to construct subarrays from the source-array that fit in the largest data cache.
- Parallel partitioned radix sort [2] distributes data among processors once. However, it doesn't guarantee perfect key balancing across processors.
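
The LSD loop above can be made concrete with a counting sort per digit. The following is a minimal sketch and not the authors' implementation: the 8-bit digit width, the 32-bit unsigned key type, and the names `DIGIT_BITS`, `digit` and `lsd_radix_sort` are assumptions chosen for illustration.

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>
#include <string.h>

#define DIGIT_BITS 8                 /* assumed digit width */
#define RADIX (1u << DIGIT_BITS)     /* 256 buckets per pass */

/* Extract digit i of a key (i = 0 is the least significant digit). */
static uint32_t digit(uint32_t key, unsigned i)
{
    return (key >> (i * DIGIT_BITS)) & (RADIX - 1u);
}

/* Sort keys[0..n) in ascending order.
   Returns 0 on success and -1 if the scratch buffer cannot be allocated. */
int lsd_radix_sort(uint32_t *keys, size_t n)
{
    const unsigned number_of_digits = 32 / DIGIT_BITS;
    assert(keys != NULL || n == 0);
    if (n == 0)
        return 0;

    uint32_t *tmp = malloc(n * sizeof *tmp);
    if (tmp == NULL)
        return -1;

    for (unsigned i = 0; i < number_of_digits; i++) {
        size_t count[RADIX] = {0};

        /* Histogram of digit i over all keys. */
        for (size_t k = 0; k < n; k++)
            count[digit(keys[k], i)]++;

        /* Exclusive prefix sum: count[d] becomes the first output slot of digit d. */
        size_t offset = 0;
        for (size_t d = 0; d < RADIX; d++) {
            size_t c = count[d];
            count[d] = offset;
            offset += c;
        }

        /* Stable scatter into tmp, then copy back for the next pass. */
        for (size_t k = 0; k < n; k++)
            tmp[count[digit(keys[k], i)]++] = keys[k];
        memcpy(keys, tmp, n * sizeof *keys);
    }

    free(tmp);
    return 0;
}
```

Each pass must be stable, because the ordering produced by earlier, less significant digits has to survive the later passes.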

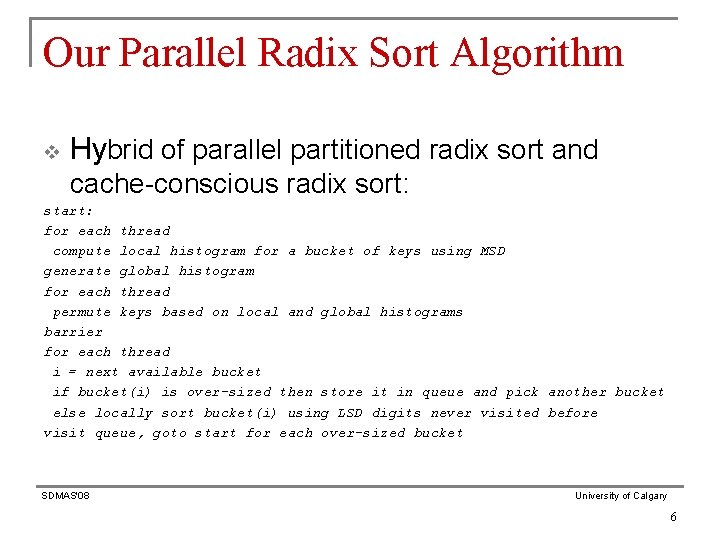

Our Parallel Radix Sort Algorithm

- Hybrid of parallel partitioned radix sort and cache-conscious radix sort:

      start:
        for each thread
          compute local histogram for a bucket of keys using MSD
        generate global histogram
        for each thread
          permute keys based on local and global histograms
        barrier
        for each thread
          i = next available bucket
          if bucket(i) is over-sized then
            store it in queue and pick another bucket
          else
            locally sort bucket(i) using LSD digits never visited before
        visit queue, goto start for each over-sized bucket
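
The first phase of this algorithm (local histograms, global histogram, permutation) could look roughly as follows with OpenMP. This is a sketch under stated assumptions, not the code used in the slides: the 4-bit most significant digit, the fixed count of 4 threads, and the names `msd_partition`, `MSD_BITS`, `NUM_BUCKETS` and `NUM_THREADS` are all hypothetical. The pragmas are guarded so the code also compiles and runs sequentially when OpenMP is disabled.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define MSD_BITS 4                      /* assumed width of the most significant digit */
#define NUM_BUCKETS (1u << MSD_BITS)
#define NUM_THREADS 4                   /* assumed number of threads */

static unsigned msd(uint32_t key) { return key >> (32 - MSD_BITS); }

/* Partition src[0..n) into dst[0..n) by most significant digit.
   On return, bucket b occupies dst[bucket_start[b] .. bucket_start[b + 1]). */
void msd_partition(const uint32_t *src, uint32_t *dst, size_t n,
                   size_t bucket_start[NUM_BUCKETS + 1])
{
    size_t local[NUM_THREADS][NUM_BUCKETS] = {{0}};
    size_t chunk = (n + NUM_THREADS - 1) / NUM_THREADS;
    assert((src != NULL && dst != NULL) || n == 0);

    /* Step 1: each thread builds a local histogram over its own chunk. */
#ifdef _OPENMP
#pragma omp parallel for
#endif
    for (int t = 0; t < NUM_THREADS; t++) {
        size_t lo = (size_t)t * chunk < n ? (size_t)t * chunk : n;
        size_t hi = lo + chunk < n ? lo + chunk : n;
        for (size_t k = lo; k < hi; k++)
            local[t][msd(src[k])]++;
    }

    /* Step 2: global histogram. Turn the counts into write offsets so that
       every (thread, bucket) pair owns a disjoint range of dst. */
    size_t running = 0;
    for (size_t b = 0; b < NUM_BUCKETS; b++) {
        bucket_start[b] = running;
        for (int t = 0; t < NUM_THREADS; t++) {
            size_t c = local[t][b];
            local[t][b] = running;
            running += c;
        }
    }
    bucket_start[NUM_BUCKETS] = running;

    /* Step 3: each thread permutes its chunk using its private offsets.
       The end of the parallel loop acts as the barrier. */
#ifdef _OPENMP
#pragma omp parallel for
#endif
    for (int t = 0; t < NUM_THREADS; t++) {
        size_t lo = (size_t)t * chunk < n ? (size_t)t * chunk : n;
        size_t hi = lo + chunk < n ? lo + chunk : n;
        for (size_t k = lo; k < hi; k++)
            dst[local[t][msd(src[k])]++] = src[k];
    }
}
```

After the partition, a bucket's size is `bucket_start[b + 1] - bucket_start[b]`. Comparing that size against a cache-capacity threshold is one way to decide whether the bucket is over-sized and should be queued for another partitioning round rather than sorted locally.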

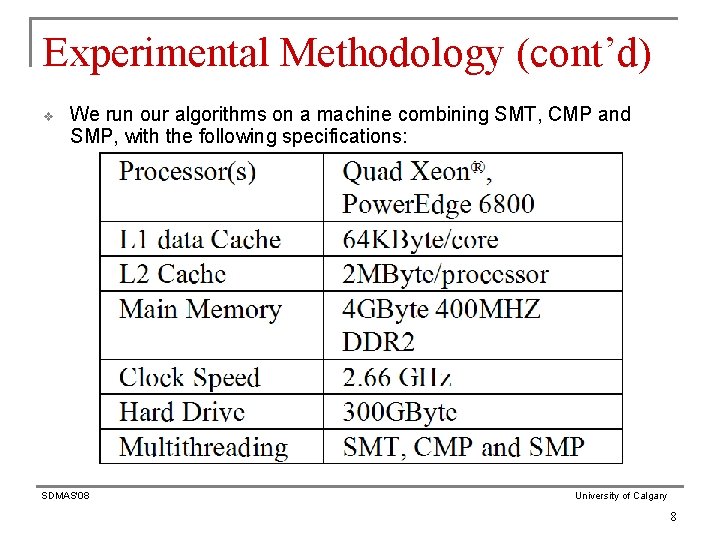

Experimental Methodology

- We implemented all algorithms in C, and we use the Intel® C++ Compiler for Linux version 9.1.
- We use the OpenMP C/C++ library version 2.5 to initiate multiple threads in our multithreaded codes.
- Hardware events are collected using Intel® VTune™ Performance Analyzer for Linux 9.0.
- Our runs sort datasets ranging from 1×10^7 to 6×10^7 keys, which fit comfortably in our main memory.
- We run three typical datasets:
  - Random: keys are generated by calling the random() C function, which returns numbers ranging from 0 to 2^31 − 1.
  - Gaussian: each key is the average of four consecutive calls to the random() C function.
  - Zero: all keys are set to a constant. This constant is randomly picked using the random() C function.
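
The three datasets can be generated along the following lines. This is an illustrative sketch only: the function names, the `key_source` callback type, and the deterministic `counting_source` stand-in are assumptions introduced here so the example stays portable and reproducible. The slides call the C function random() directly.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Source of raw key values; POSIX random() has this signature. */
typedef long (*key_source)(void);

/* Random dataset: one call to the source per key. */
void fill_random(uint32_t *keys, size_t n, key_source next)
{
    assert(next != NULL);
    for (size_t i = 0; i < n; i++)
        keys[i] = (uint32_t)next();
}

/* Gaussian dataset: each key is the average of four consecutive calls. */
void fill_gaussian(uint32_t *keys, size_t n, key_source next)
{
    assert(next != NULL);
    for (size_t i = 0; i < n; i++) {
        long long sum = 0;
        for (int j = 0; j < 4; j++)
            sum += next();
        keys[i] = (uint32_t)(sum / 4);
    }
}

/* Zero dataset: every key is the same constant, drawn once from the source. */
void fill_zero(uint32_t *keys, size_t n, key_source next)
{
    assert(next != NULL);
    uint32_t constant = (uint32_t)next();
    for (size_t i = 0; i < n; i++)
        keys[i] = constant;
}

/* Hypothetical deterministic source for demonstrations: yields 0, 1, 2, ... */
long counting_source(void)
{
    static long value = 0;
    return value++;
}
```

Passing POSIX `random` as the source, for example `fill_gaussian(keys, n, random)` after including `<stdlib.h>`, corresponds to the setup the slides describe. Averaging four draws concentrates the keys around the middle of the range, which changes how evenly a most-significant-digit partition spreads them across buckets.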

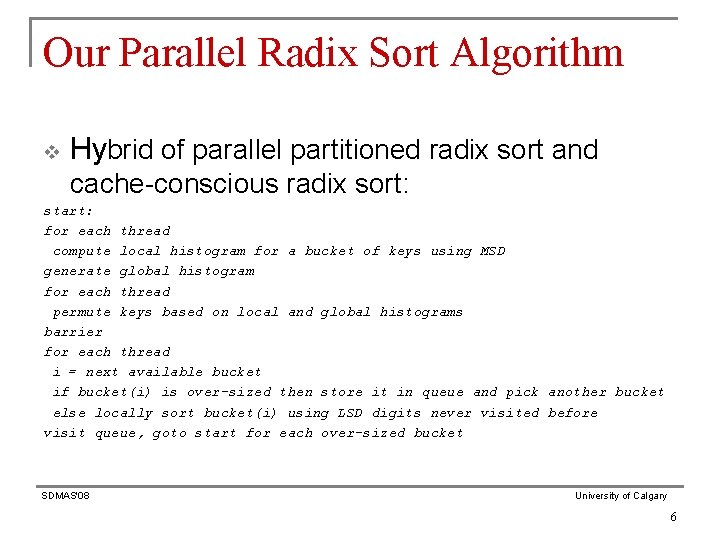

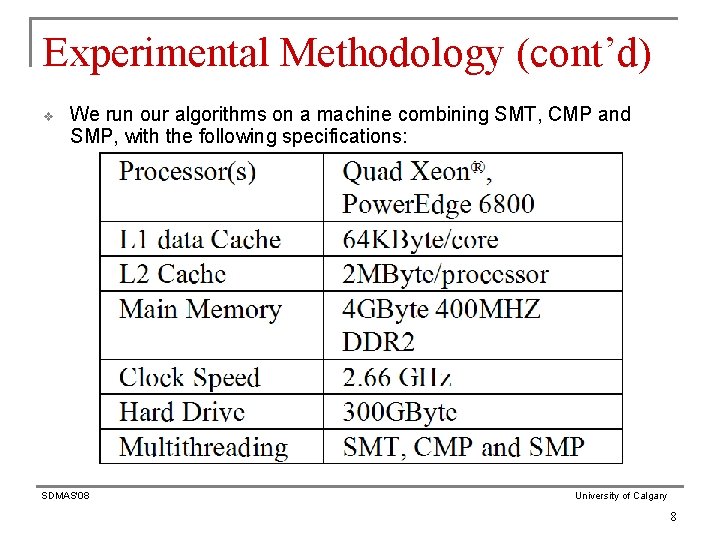

Experimental Methodology (cont’d)

- We run our algorithms on a machine combining SMT, CMP and SMP, with the specifications shown in the slide figure.

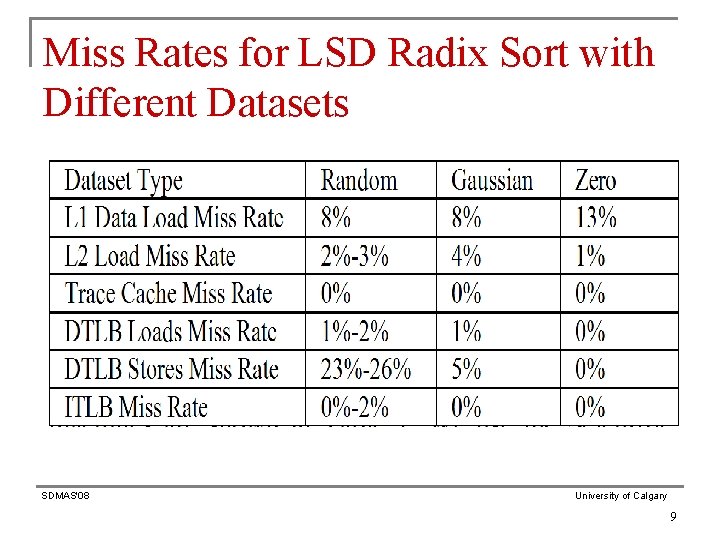

Miss Rates for LSD Radix Sort with Different Datasets

Radix Sort Timing for the Random Datasets

- Speedups range from 54% for two threads to up to 300% for 16 threads compared to LSD radix sort.

Radix Sort Timing for the Gaussian Datasets

- Speedups range from 7% for two threads to up to 237% for 16 threads compared to LSD radix sort.

Quick Sort

- Quicksort is a comparison-based, divide-and-conquer sort algorithm.
- Memory-tuned quick sort [3] uses insertion sort to sort the source subarrays to increase data locality.
- In simple fast parallel quick sort [4], a pivot is picked by the processor with the smallest ID. Then each processor processes an L1-data-cache-size block of keys from the left side of the pivot and another block from the right side. When the remaining subarrays are small enough to be sorted by one processor, memory-tuned quick sort is used.
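
The memory-tuned idea can be sketched as a quicksort that hands small subarrays to insertion sort as soon as they are produced, while their keys are likely still cached. The code below is a single-threaded illustration under assumptions, not the implementation from [3] or from the slides: the cutoff of 16 keys, the median-of-three pivot, and the names `SMALL_SUBARRAY` and `tuned_quick_sort` are hypothetical choices.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define SMALL_SUBARRAY 16   /* assumed cutoff below which insertion sort takes over */

static void insertion_sort(uint32_t *a, size_t n)
{
    for (size_t i = 1; i < n; i++) {
        uint32_t key = a[i];
        size_t j = i;
        while (j > 0 && a[j - 1] > key) {
            a[j] = a[j - 1];
            j--;
        }
        a[j] = key;
    }
}

static uint32_t median_of_three(uint32_t x, uint32_t y, uint32_t z)
{
    if (x > y) { uint32_t t = x; x = y; y = t; }   /* now x <= y */
    if (z < x) return x;
    if (z > y) return y;
    return z;
}

/* Sort a[0..n) in ascending order. */
void tuned_quick_sort(uint32_t *a, size_t n)
{
    assert(a != NULL || n == 0);
    while (n > SMALL_SUBARRAY) {
        uint32_t pivot = median_of_three(a[0], a[n / 2], a[n - 1]);
        ptrdiff_t i = 0, j = (ptrdiff_t)n - 1;

        /* Partition: keys <= pivot end up in a[0..j], keys >= pivot in a[i..n). */
        while (i <= j) {
            while (a[i] < pivot) i++;
            while (a[j] > pivot) j--;
            if (i <= j) {
                uint32_t t = a[i]; a[i] = a[j]; a[j] = t;
                i++;
                j--;
            }
        }

        size_t left_n = (size_t)(j + 1);
        size_t right_n = n - (size_t)i;

        /* Recurse into the smaller side and loop on the larger one,
           which keeps the recursion depth logarithmic in n. */
        if (left_n < right_n) {
            tuned_quick_sort(a, left_n);
            a += i;
            n = right_n;
        } else {
            tuned_quick_sort(a + i, right_n);
            n = left_n;
        }
    }
    /* Small subarray: sort it immediately instead of in one final pass. */
    insertion_sort(a, n);
}
```

Sorting each small subarray right away, rather than running one insertion-sort pass over the whole array at the end, avoids touching every key a second time after it has left the cache.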

Our Parallel Quick Sort

- We choose to implement the best parallel quick sort we could find, "simple fast parallel quick sort", with some optimizations, including the following:
  - Dynamically adjusting the block sizes: since each memory location in the blocks is referenced once on average, our block size is dynamically adjusted for each subarray such that it provides good data balancing across threads, and it is not necessarily equal to the L1 cache size.

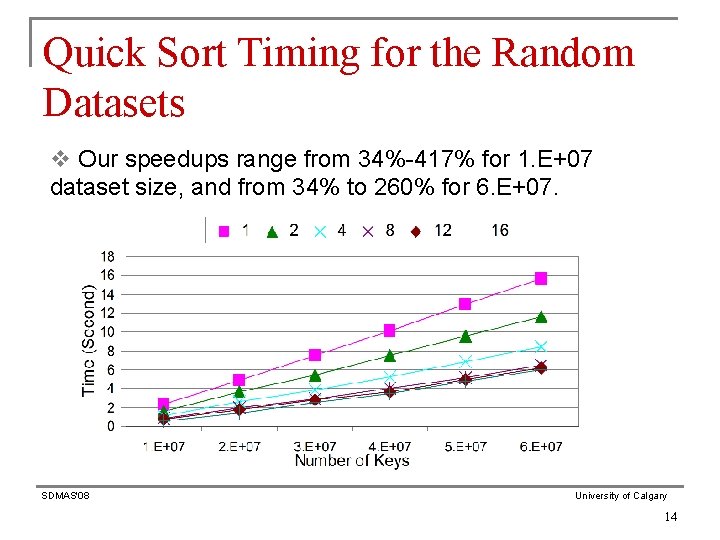

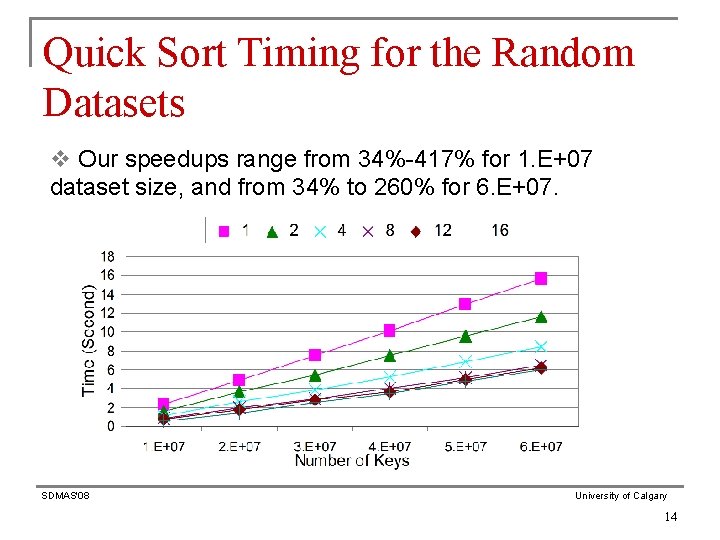

Quick Sort Timing for the Random Datasets

- Our speedups range from 34% to 417% for the 1×10^7 dataset size, and from 34% to 260% for 6×10^7.

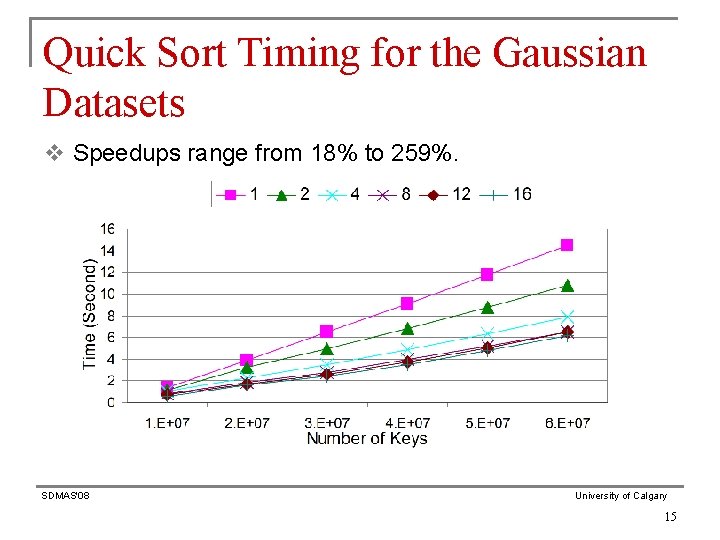

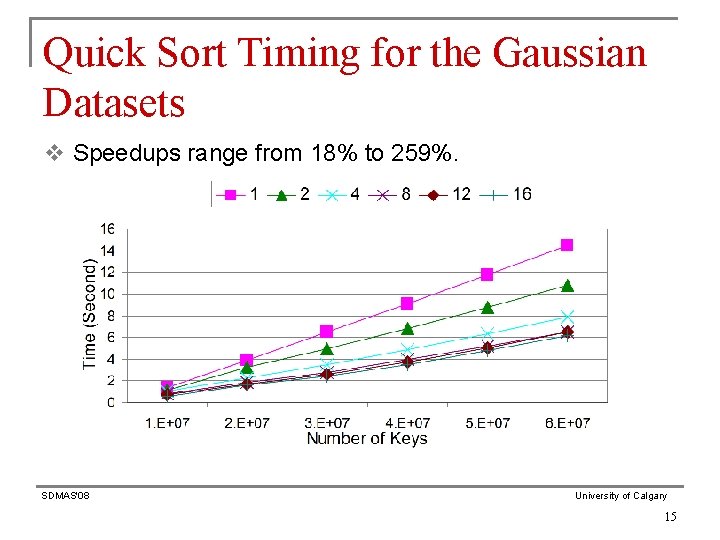

Quick Sort Timing for the Gaussian Datasets

- Speedups range from 18% to 259%.

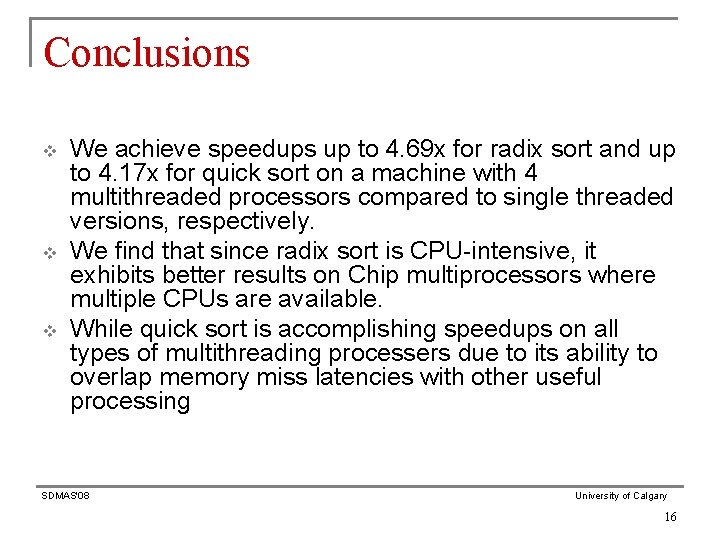

Conclusions

- We achieve speedups of up to 4.69x for radix sort and up to 4.17x for quick sort on a machine with 4 multithreaded processors, each compared to its single-threaded version.
- Since radix sort is CPU-intensive, it exhibits better results on chip multiprocessors, where multiple CPUs are available.
- Quick sort, in contrast, achieves speedups on all types of multithreaded processors due to its ability to overlap memory miss latencies with other useful processing.


References

[1] Jiménez-González, D., Navarro, J. J. and Larriba-Pey, J. CC-Radix: a Cache Conscious Sorting Based on Radix Sort. In Proceedings of the 11th Euromicro Conference on Parallel, Distributed and Network-Based Processing (PDP). Pages 101-108, 2003.

[2] Lee, S., Jeon, M., Kim, D. and Sohn, A. Partitioned Parallel Radix Sort. Journal of Parallel and Distributed Computing. Pages 656-668, 2002.

[3] LaMarca, A. and Ladner, R. The Influence of Caches on the Performance of Sorting. In Proceedings of the ACM/SIAM Symposium on Discrete Algorithms. Pages 370-379, 1997.

[4] Tsigas, P. and Zhang, Yi. A Simple, Fast Parallel Implementation of Quicksort and its Performance Evaluation on Sun Enterprise 10000. In Proceedings of the 11th Euromicro Conference on Parallel, Distributed and Network-Based Processing (PDP). Pages 372-381, 2003.
