CHARACTERISTICS OF A GOOD MEASURING INSTRUMENT (TEST)

- Slides: 38

The most essential characteristics of a good measuring instrument (test) are:
1. Validity
2. Reliability
3. Objectivity
4. Differentiability
5. Usability

1. VALIDITY: Test experts generally agree that the most important quality of a test is its validity. The word “validity” means “effectiveness”; it refers to the accuracy with which a thing is measured. A test is said to be valid if it measures what it claims to measure.

• According to Gronlund, “Validity refers to the appropriateness of the interpretations made from test scores and other evaluation results, with regard to a particular use.”

Nature/Characteristics of Validity: When using the term validity in relation to testing and evaluation, there are a number of cautions to be borne in mind. These are:
• a. Validity refers to the appropriateness of the interpretation of the results of a test, not to the test itself.

• b. Validity is a matter of degree: it does not exist on an all-or-none basis, so we should avoid speaking of a test as simply valid or invalid. Validity is best considered in terms of categories that specify degree, such as high validity, moderate validity and low validity.

• c. Validity is always specific to some particular use or interpretation: no test is valid for all purposes. When describing validity, it is necessary to consider the specific interpretation or use to be made of the results.

Types/Approaches to Test Validation: According to Gronlund (1990), there are three basic approaches to the validation of tests:
1. Content validity
2. Criterion-related validity
3. Construct validity

1. CONTENT VALIDITY: According to Gronlund (1990), content validity refers to the extent to which the test content represents a specified universe of content. It means that the test content (test items) should measure the course content (curriculum/objectives).

• Johnson, B. & Christensen, L. (2008) suggest that, when judging content validity, you try to answer these three questions:
• Do the items appear to represent the thing you are trying to measure?
• Does the set of items fully represent the important content areas or topics?
• Have you included all relevant items?

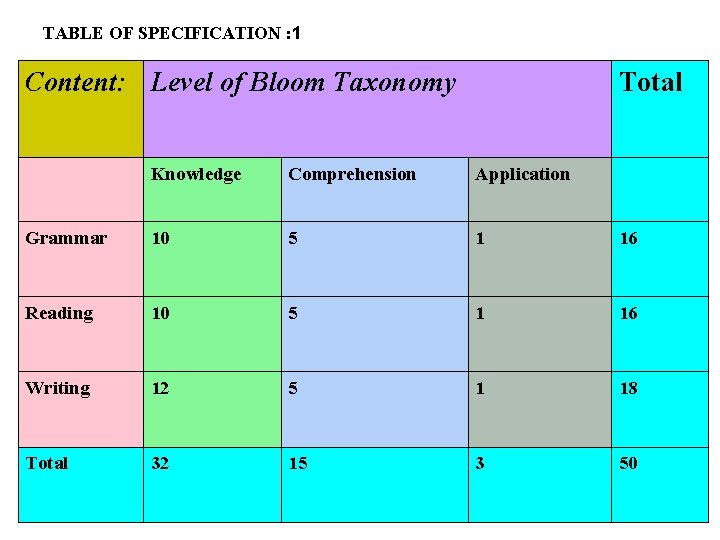

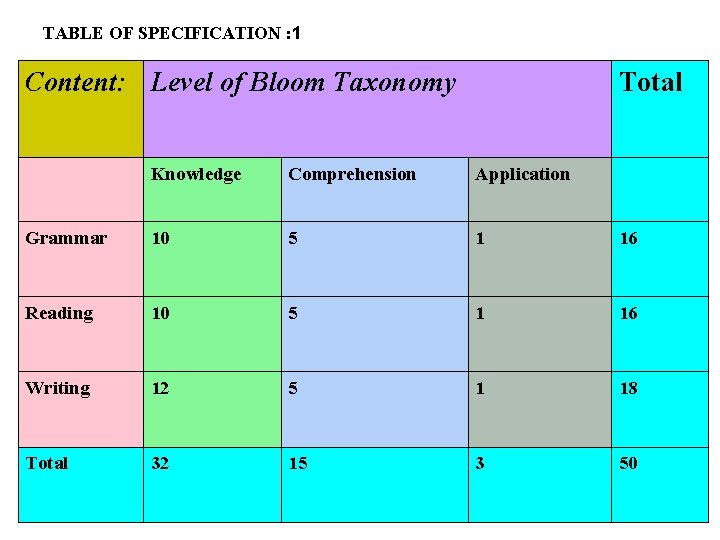

• A table of specifications is used to ensure the content validity of a test.

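TABLE OF SPECIFICATION 1 (content areas × levels of Bloom's taxonomy)

| Content | Knowledge | Comprehension | Application | Total |
|---|---|---|---|---|
| Grammar | 10 | 5 | 1 | 16 |
| Reading | 10 | 5 | 1 | 16 |
| Writing | 12 | 5 | 1 | 18 |
| Total | 32 | 15 | 3 | 50 |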
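A table of specifications is a two-way grid, so its marginal totals can be checked mechanically before any items are written. The Python sketch below (Python is our choice; the slides contain no code) re-enters the numbers from Table of Specification 1 and recomputes the row and column totals; the names `blueprint`, `row_totals` and `column_totals` are illustrative only.

```python
# Table of specification 1: rows are content areas, columns are
# Bloom's taxonomy levels (Knowledge, Comprehension, Application).
LEVELS = ("Knowledge", "Comprehension", "Application")

blueprint = {
    "Grammar": (10, 5, 1),
    "Reading": (10, 5, 1),
    "Writing": (12, 5, 1),
}

def row_totals(table):
    """Number of items planned for each content area."""
    return {area: sum(counts) for area, counts in table.items()}

def column_totals(table):
    """Number of items planned at each Bloom level."""
    return {level: sum(counts[i] for counts in table.values())
            for i, level in enumerate(LEVELS)}

rows = row_totals(blueprint)
cols = column_totals(blueprint)
grand_total = sum(rows.values())

# Both margins must add up to the same grand total.
assert grand_total == sum(cols.values())
print(rows)         # {'Grammar': 16, 'Reading': 16, 'Writing': 18}
print(cols)         # {'Knowledge': 32, 'Comprehension': 15, 'Application': 3}
print(grand_total)  # 50
```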

2. CRITERION-RELATED VALIDITY: Criterion-related validity is used whenever we need to predict students' future performance, or to estimate their present performance, on some criterion (a valued measure other than the test itself). It refers to the extent to which the test correlates with that criterion.

Types of Criterion-Related Evidence (Validity): According to Rizvi, A. (1973), there are two types of criterion-related evidence, distinguished by the time at which the criterion is obtained:
• i. Concurrent evidence (validity)
• ii. Predictive evidence (validity)

i. Concurrent Evidence (Validity): • It refers to the extent to which the test correlates with some criterion obtained at the same time (i.e. concurrently). • For example, when scores on a Maths test developed by a classroom teacher are correlated with scores on another Maths test, or with the teacher's ratings, obtained at the same time, you have concurrent evidence (validity).
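Concurrent evidence comes down to a correlation coefficient between two sets of scores collected at about the same time. The Python sketch below computes Pearson's product-moment r by hand; the pupils' scores and ratings are invented for illustration, and the 1-5 rating scale is an assumption.

```python
import math

def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length score lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two score lists of equal length (n >= 2)")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    dx = [a - mean_x for a in x]          # deviations from the mean
    dy = [b - mean_y for b in y]
    sxy = sum(a * b for a, b in zip(dx, dy))
    sxx = sum(a * a for a in dx)
    syy = sum(b * b for b in dy)
    if sxx == 0 or syy == 0:
        raise ValueError("r is undefined when every score in a list is the same")
    return sxy / math.sqrt(sxx * syy)

# Hypothetical data for six pupils, collected in the same week:
maths_test = [42, 35, 48, 27, 39, 31]     # classroom Maths test scores
teacher_rating = [4, 3, 5, 2, 4, 3]       # teacher's rating on an assumed 1-5 scale

r = pearson_r(maths_test, teacher_rating)
print(f"concurrent validity coefficient r = {r:.2f}")  # r = 0.98
```

With only six pupils the coefficient is very unstable; a real validation study would use a much larger group.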

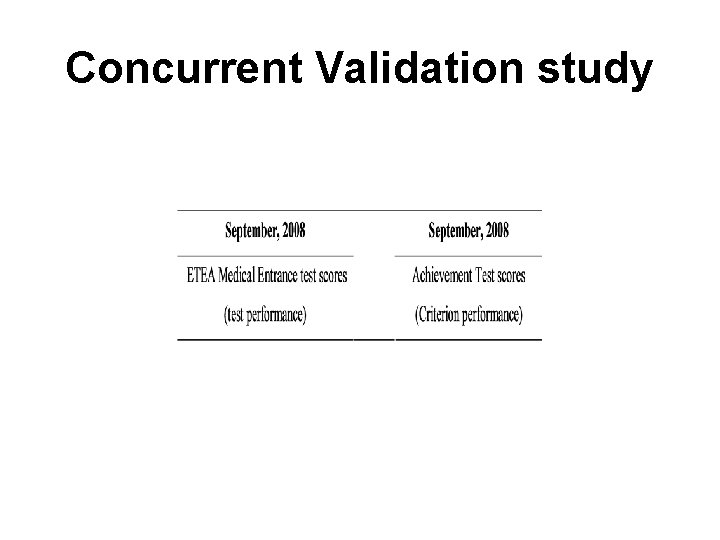

Concurrent Validation study

ii. Predictive Evidence (Validity): • According to Gronlund, “it refers to the extent to which the test correlates with some criterion obtained after a stated interval of time.”

The predictive validity of a test is determined by establishing the relationship between scores on the test and some measure of success in the situation of interest.
• The test used to predict success is referred to as the “predictor”.
• The behaviour predicted is referred to as the “criterion”.
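Predictive evidence uses the same correlation, but the criterion is collected after the stated interval. The sketch below correlates a hypothetical entrance test (predictor) with a hypothetical end-of-year examination (criterion). It also fits an ordinary least-squares line, an addition of ours rather than part of the slides, so that the predictor can be used to estimate a new pupil's criterion score. All data and names are illustrative.

```python
import math

def pearson_r(x, y):
    """Pearson correlation between predictor and criterion scores."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def fit_line(x, y):
    """Least-squares line y = a + b*x for predicting the criterion from the predictor."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    b = sum((p - mx) * (c - my) for p, c in zip(x, y)) / sum((p - mx) ** 2 for p in x)
    return my - b * mx, b

# Hypothetical data: entrance test (predictor) taken at the start of the year,
# final examination (criterion) taken nine months later by the same pupils.
entrance_test = [55, 62, 70, 48, 80, 66]
final_exam = [58, 60, 75, 50, 82, 64]

r = pearson_r(entrance_test, final_exam)
intercept, slope = fit_line(entrance_test, final_exam)
print(f"predictive validity coefficient r = {r:.2f}")
print(f"estimated final score for an entrance score of 60: {intercept + slope * 60:.1f}")
```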

Predictive validation study

3. CONSTRUCT VALIDITY: It refers to the extent to which the test measures the construct it claims to measure.
• A construct is a psychological trait or quality that we assume to exist in order to explain some aspect of behaviour.
• Mathematical reasoning, intelligence, creativity, sociability, honesty and anxiety are examples of constructs.

There are three basic steps in establishing construct validity:
1. Identify the construct, e.g. intelligence.
2. From theory, work out what the construct predicts; e.g. intelligence is assumed to involve:
   a. General knowledge
   b. Reasoning power
   c. The ability to solve numerical problems
   d. Decision-making power
3. Measure these factors through test items.

FACTORS INFLUENCING VALIDITY: There are three types of factors:
1. FACTORS IN THE TEST ITSELF
2. FACTORS IN TEST ADMINISTRATION AND SCORING
3. PERSONAL FACTORS OF THE STUDENT

2. RELIABILITY: According to Gronlund, “Reliability refers to the consistency of measurement.” According to Ebel, “The ability of the test to measure the same quality when it is administered to an individual on two different occasions or by two different testers or evaluators is known as reliability.”

Nature/Characteristics of Reliability: The meaning of reliability can be further clarified by noting the following general points:
1. Reliability refers to the results obtained with an evaluation instrument (test), not to the instrument (test) itself.

2. Reliability refers to some particular type of consistency. • Test scores are not reliable in general; they are reliable (or generalizable) over different periods of time, over different samples of questions, over different raters, and the like.

3. Reliability is a necessary but not a sufficient condition for validity. • A valid test must also be a reliable test, but high reliability does not ensure that a satisfactory degree of validity will be present. In short, reliability merely provides the consistency that makes validity possible.
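The point that reliability is necessary but not sufficient has a well-known quantitative form in classical test theory, which the slides do not state: a test's correlation with any criterion cannot exceed √(r_xx · r_yy), where r_xx and r_yy are the reliabilities of the test and of the criterion. A minimal sketch of that ceiling (the function name `max_validity` is ours):

```python
import math

def max_validity(test_reliability, criterion_reliability=1.0):
    """Classical test theory ceiling on a validity coefficient:
    r_xy <= sqrt(r_xx * r_yy). Defaults to a perfectly reliable criterion."""
    for rel in (test_reliability, criterion_reliability):
        if not 0.0 <= rel <= 1.0:
            raise ValueError("reliability coefficients must lie in [0, 1]")
    return math.sqrt(test_reliability * criterion_reliability)

for r_xx in (0.90, 0.64, 0.25):
    print(f"reliability {r_xx:.2f} -> validity can be at most {max_validity(r_xx):.2f}")
```

High reliability only raises the ceiling; whether the test actually reaches it depends on whether it measures the right thing.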

Reliability is primarily statistical. • The two widely used methods of expressing reliability are the “standard error of measurement” and the “reliability coefficient”. • A reliability coefficient “is a correlation coefficient that indicates the degree of relationship between two sets of measures obtained from the same instrument or procedure.”
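The slides name the standard error of measurement but do not give its formula. Under classical test theory it is usually computed as SEM = SD × √(1 − r), where SD is the standard deviation of the observed scores and r is the reliability coefficient. A minimal Python sketch with hypothetical numbers (SD = 10, r = 0.91); the helper names are ours:

```python
import math

def standard_error_of_measurement(sd, reliability):
    """Classical test theory SEM = SD * sqrt(1 - r), where SD is the standard
    deviation of the observed test scores and r the reliability coefficient."""
    if sd < 0 or not 0.0 <= reliability <= 1.0:
        raise ValueError("need sd >= 0 and 0 <= reliability <= 1")
    return sd * math.sqrt(1.0 - reliability)

def score_band(observed, sd, reliability, k=1.0):
    """Band of +/- k SEMs around an observed score."""
    e = k * standard_error_of_measurement(sd, reliability)
    return observed - e, observed + e

sem = standard_error_of_measurement(sd=10, reliability=0.91)
low, high = score_band(60, sd=10, reliability=0.91)
print(f"SEM = {sem:.1f}; band for a score of 60: {low:.1f} to {high:.1f}")
# SEM = 3.0; band for a score of 60: 57.0 to 63.0
```

The ±1 SEM band is often read as covering the pupil's true score about two times in three, but that reading assumes roughly normal measurement errors. A perfectly reliable test (r = 1) would have an SEM of zero.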

Methods of Estimating Reliability: Lien, A. J. (1976) mentioned three basic methods of estimating reliability:
1. Test-retest method
2. Alternate-forms / equivalent-forms method
3. Split-half method / internal consistency method

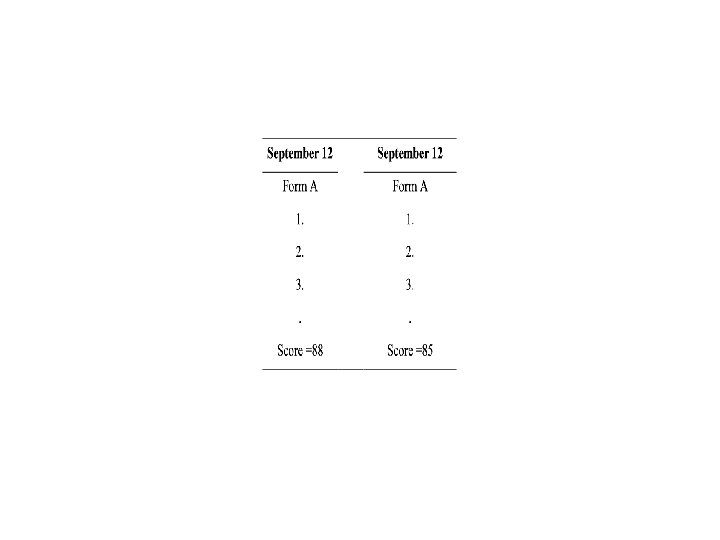

1. THE TEST-RETEST METHOD: • In this method the same test is administered twice to the same group of pupils, with a given time interval between the two administrations (from several minutes to several years). • The resulting scores are correlated, and this correlation coefficient provides a measure of stability; that is, it indicates how stable the test results are over the given period of time.
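Computing a coefficient of stability is mostly a matter of pairing each pupil's two scores correctly. In the hypothetical sketch below, scores are stored per pupil, pupils who missed either sitting are left out, and the matched scores are correlated with Pearson's r. The names, scores and two-week interval are invented.

```python
import math

def pearson_r(x, y):
    """Pearson correlation between two equal-length score lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def retest_reliability(first, second):
    """Coefficient of stability: correlate each pupil's two scores.
    Pupils missing from either administration are left out."""
    pupils = sorted(first.keys() & second.keys())
    return pearson_r([first[p] for p in pupils], [second[p] for p in pupils])

# Hypothetical scores on the same test given twice, two weeks apart.
week_0 = {"Asha": 34, "Bilal": 28, "Chen": 41, "Dina": 22, "Eli": 37}
week_2 = {"Asha": 36, "Bilal": 27, "Chen": 40, "Dina": 25, "Eli": 35, "Farah": 30}

r = retest_reliability(week_0, week_2)   # Farah sat only the retest, so she is excluded
print(f"test-retest r = {r:.2f}")
```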

2. THE PARALLEL FORMS METHOD (EQUIVALENT FORMS): In this method, two equivalent forms of a test are given to the same group of students, and the scores obtained on the two forms are correlated.

In this method the factors of memory and practice are almost entirely eliminated, since the two forms contain different items and can ordinarily be administered in close succession. However, the two forms of the test must be really equivalent; otherwise the correlation between them will tend to underestimate their true reliability.
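One rough way to see whether two forms are "really equivalent" before relying on their correlation is to compare their means and standard deviations alongside the correlation itself. Comparing means and SDs is our illustrative assumption, not a procedure given in the slides; the scores and the `compare_forms` helper are hypothetical.

```python
import math
import statistics

def pearson_r(x, y):
    """Pearson correlation between scores on Form A and Form B."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def compare_forms(form_a, form_b):
    """Summarise two forms taken by the same pupils (lists in the same pupil order)."""
    return {
        "mean_a": statistics.mean(form_a),
        "mean_b": statistics.mean(form_b),
        "sd_a": statistics.stdev(form_a),
        "sd_b": statistics.stdev(form_b),
        "r_ab": pearson_r(form_a, form_b),   # the equivalent-forms reliability estimate
    }

# Hypothetical scores of six pupils on Form A and Form B of the same test.
form_a = [31, 25, 38, 20, 34, 29]
form_b = [30, 27, 37, 21, 33, 28]

summary = compare_forms(form_a, form_b)
for key, value in summary.items():
    print(f"{key}: {value:.2f}")
```

Similar means and SDs do not prove equivalence, but a large gap in either is a warning that the correlation may understate the test's reliability.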

3. THE SPLIT-HALF METHOD: In this method the test is designed so that it can be divided into two equivalent halves, for example odd-numbered and even-numbered items. It is administered as a whole only once, and the scores on the odd-numbered and even-numbered items are correlated.

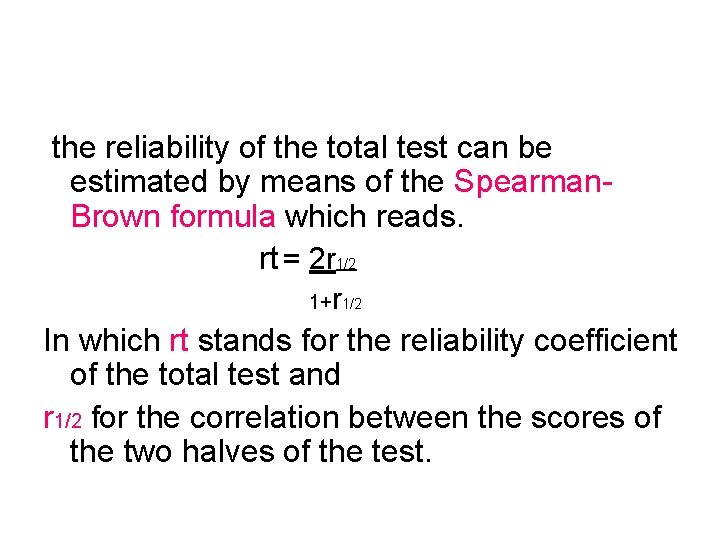

The reliability of the whole test can then be estimated by means of the Spearman-Brown formula:

$$r_t = \frac{2\,r_{1/2}}{1 + r_{1/2}}$$

where $r_t$ stands for the reliability coefficient of the whole test and $r_{1/2}$ for the correlation between the scores on the two halves of the test.
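The whole split-half procedure (splitting by odd and even items, correlating the half scores, and stepping the result up with the Spearman-Brown formula) fits in a few lines. A sketch assuming each pupil's responses are stored as a list of item scores in item order (1 = right, 0 = wrong); the response matrix and function names are invented for illustration.

```python
import math

def pearson_r(x, y):
    """Pearson correlation between the two half-test scores."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def spearman_brown(r_half):
    """Step the half-test correlation up to full-test length: r_t = 2r / (1 + r)."""
    return 2 * r_half / (1 + r_half)

def split_half_reliability(item_scores):
    """item_scores: one row per pupil, one score per item, in item order."""
    odd = [sum(row[0::2]) for row in item_scores]    # list positions 0, 2, 4 = items 1, 3, 5
    even = [sum(row[1::2]) for row in item_scores]   # list positions 1, 3, 5 = items 2, 4, 6
    r_half = pearson_r(odd, even)
    return r_half, spearman_brown(r_half)

# Hypothetical right/wrong (1/0) responses of five pupils to an 8-item test.
responses = [
    [1, 1, 1, 1, 1, 0, 1, 1],
    [1, 0, 1, 1, 0, 1, 0, 0],
    [0, 0, 1, 0, 0, 0, 1, 0],
    [1, 1, 1, 1, 1, 1, 1, 0],
    [0, 1, 0, 0, 1, 0, 0, 0],
]
r_half, r_total = split_half_reliability(responses)
print(f"half-test r = {r_half:.2f}, Spearman-Brown full-test r = {r_total:.2f}")
```

Because each half is only half as long as the full test, the half-test correlation understates the reliability of the whole test; the Spearman-Brown step corrects for that, which is why `r_total` comes out larger than `r_half` here.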

5. PRACTICALITY OR USABILITY: After constructing the test, we have to administer it, score it and improve its quality. Usability involves the following points:
1. EASE OF ADMINISTRATION: This consists of two points.
   a. Instructions: If the instructions are not clear, both teacher and students will be in difficulty, e.g. over how many questions have to be attempted or how much time is available for the test.
   b. Timing: Fixing the time for the test is also a difficult job. The season must be kept in mind when preparing the timetable for the test.

SUFFICIENT TIME: Time should be allotted according to the number and nature of the questions.

COST OF TESTING: The test should be inexpensive. It should not be a burden on students or the institution, but reliability and validity must still be kept in mind.

EASY TO SCORE: The test should be so constructed that it is easy to score. There should be specific marks for every part and every question, a scoring key must be provided to the evaluator, and there should be objectivity in scoring the test.
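When every question carries fixed marks and a single keyed answer, two evaluators applying the same key must arrive at the same total, which is the objectivity in scoring asked for above. A minimal sketch for objective (selected-response) items, assuming a hypothetical four-question key; `ANSWER_KEY` and `score_script` are illustrative names.

```python
# Hypothetical answer key: question id -> (correct option, marks for that question).
ANSWER_KEY = {
    "Q1": ("B", 1),
    "Q2": ("D", 1),
    "Q3": ("A", 2),
    "Q4": ("C", 2),
}

def score_script(answers, key=ANSWER_KEY):
    """Score one pupil's answers against the key; blank or missing answers earn 0."""
    total = 0
    for question, (correct, marks) in key.items():
        # Normalise case and spacing so "b" and " B" are marked the same way.
        if answers.get(question, "").strip().upper() == correct:
            total += marks
    return total

pupil = {"Q1": "b", "Q2": "D", "Q3": "C", "Q4": "C"}
max_marks = sum(marks for _, marks in ANSWER_KEY.values())
print(score_script(pupil), "out of", max_marks)  # 4 out of 6
```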