Chapter One Introduction to Pipelined Processors

Principle of Designing Pipeline Processors (Design Problems of Pipeline Processors)

Instruction Prefetch and Branch Handling
• The instructions in computer programs can be classified into 4 types:
  – Arithmetic/Load Operations (60%)
  – Store Type Instructions (15%)
  – Branch Type Instructions (5%)
  – Conditional Branch Type (Yes – 12% and No – 8%)

Instruction Prefetch and Branch Handling
• Arithmetic/Load Operations (60%):
  – These operations require one or two operand fetches.
  – Different operations require different numbers of pipeline cycles to execute.

Instruction Prefetch and Branch Handling
• Store Type Instructions (15%):
  – These require a memory access to store the data.
• Branch Type Instructions (5%):
  – These correspond to unconditional jumps.

Instruction Prefetch and Branch Handling
• Conditional Branch Type (Yes – 12% and No – 8%):
  – The Yes path (branch taken) requires the calculation of the new address.
  – The No path (branch not taken) proceeds to the next sequential instruction.
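
As a quick check on the instruction mix, the short Python sketch below derives three numbers from the percentages above: the fraction of conditional branches, the fraction of those that are taken, and the fraction of all instructions that redirect fetch away from the next sequential address. The dictionary keys and the function name `summarize` are illustrative choices, not part of the original material.

```python
# Instruction mix quoted on the slides, in percent of all instructions.
MIX = {
    "arithmetic/load": 60,
    "store": 15,
    "unconditional branch": 5,
    "conditional branch (taken)": 12,
    "conditional branch (not taken)": 8,
}


def summarize(mix):
    total = sum(mix.values())
    conditional = mix["conditional branch (taken)"] + mix["conditional branch (not taken)"]
    # Instructions that send fetch somewhere other than the next sequential address.
    redirecting = mix["unconditional branch"] + mix["conditional branch (taken)"]
    return {
        "total": total,
        "p": conditional / total,                             # share of conditional branches
        "q": mix["conditional branch (taken)"] / conditional,  # share of those that are taken
        "redirecting": redirecting / total,
    }


if __name__ == "__main__":
    print(summarize(MIX))
```

With these figures, 17% of all instructions break the sequential flow, and the conditional-branch share (0.2) and taken fraction (0.6) are the values used later for p and q.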

Instruction Prefetch and Branch Handling
• Arithmetic/load and store instructions do not alter the execution order of the program.
• Branch instructions and interrupts break the sequential flow of instructions and can seriously degrade the performance of pipelined computers.

Interrupt Handling Example – Interrupt System of the Cray-1

Cray-1 System
• The interrupt system is built around an exchange package.
• When an interrupt occurs, the Cray-1 saves the 8 scalar registers, the 8 address registers, the program counter and the monitor flags.
• These are packed into 16 words and swapped with a block whose address is specified by a hardware exchange address register.
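
The swap can be pictured with the simplified Python model below. It packs 8 address registers, 8 scalar registers, the program counter and the flags into 16 words and exchanges them with the 16-word block at the address held in the exchange address register (`xa`). The word layout is hypothetical: here each 24-bit A register sits in the low bits of words 0 to 7, P and the flags are tucked into the upper bits of words 0 and 1, and S0 to S7 occupy words 8 to 15. The real Cray-1 exchange package uses its own field layout, which this sketch does not reproduce.

```python
MASK24 = (1 << 24) - 1  # address (A) registers are 24 bits wide


def pack(state):
    """Pack register state into 16 words (hypothetical layout, see above)."""
    words = [0] * 16
    for i in range(8):
        words[i] = state["A"][i] & MASK24  # low 24 bits: A register
    words[0] |= state["P"] << 24           # hypothetical: P above A0
    words[1] |= state["flags"] << 24       # hypothetical: flags above A1
    for i in range(8):
        words[8 + i] = state["S"][i]       # one word per scalar register
    return words


def unpack(words):
    return {
        "A": [w & MASK24 for w in words[:8]],
        "P": words[0] >> 24,
        "flags": words[1] >> 24,
        "S": list(words[8:16]),
    }


def exchange(state, memory, xa):
    """Swap the running state with the 16-word package at memory[xa:xa+16]."""
    outgoing = pack(state)
    incoming = memory[xa:xa + 16]
    memory[xa:xa + 16] = outgoing
    return unpack(incoming)
```

A single operation both saves the interrupted program and loads the handler. Exchanging again with the same block restores the interrupted program, which is why one exchange address suffices for the round trip.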

Instruction Prefetch and Branch Handling
• In general, the higher the percentage of branch type instructions in a program, the slower the program will run on a pipeline processor.

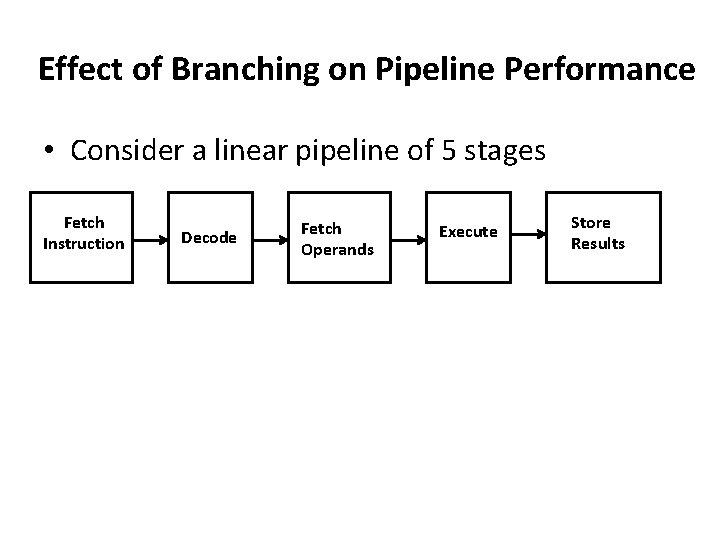

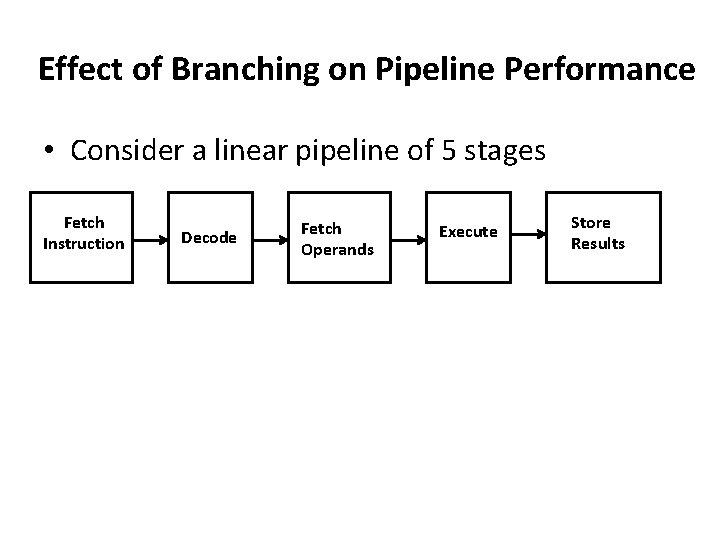

Effect of Branching on Pipeline Performance
• Consider a linear pipeline of 5 stages: Fetch Instruction, Decode, Fetch Operands, Execute, Store Results.

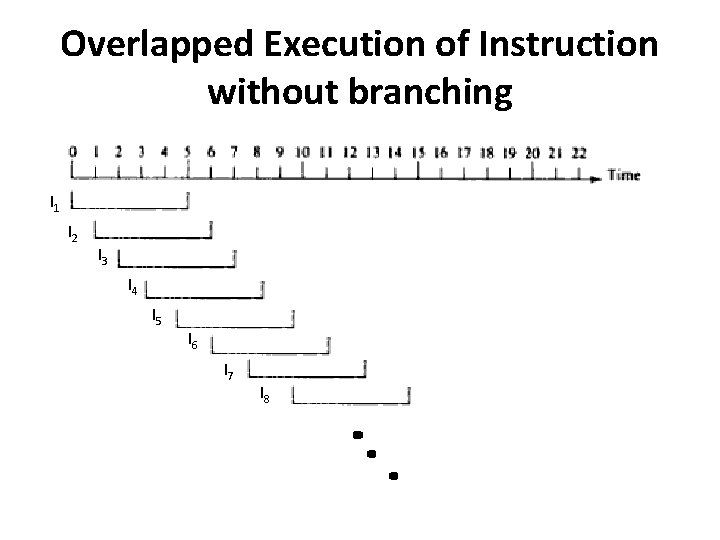

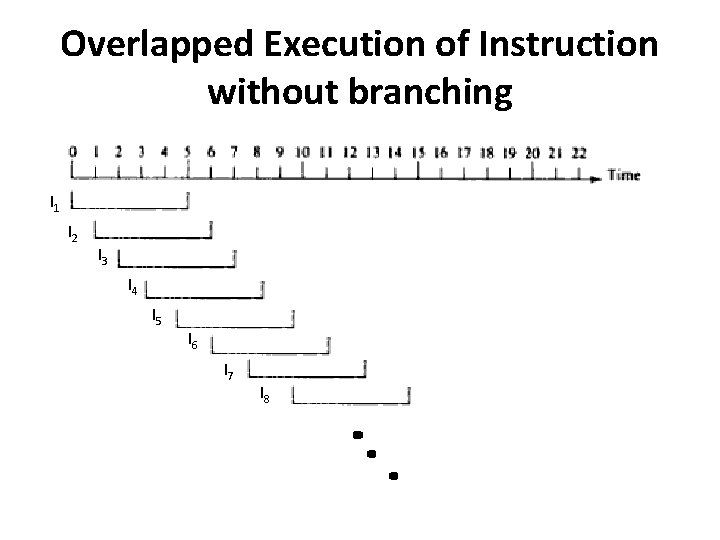

Overlapped Execution of Instructions without Branching (instructions I1 to I8)
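
The overlapped execution above can be generated as a space-time table with the Python sketch below. Row c lists which instruction occupies each of the five stages in clock period c. Without branching, 8 instructions on a 5-stage pipeline finish in 5 + 8 - 1 = 12 clock periods. The stage abbreviations FI, DI, FO, EX and SR are shorthand chosen here for the five stage names.

```python
STAGES = ["FI", "DI", "FO", "EX", "SR"]  # shorthand for the five stages


def space_time(num_instructions, stages=STAGES):
    """For each clock period, list which instruction occupies each stage."""
    k = len(stages)
    rows = []
    for cycle in range(k + num_instructions - 1):
        row = []
        for s in range(k):
            i = cycle - s  # index of the instruction in stage s during this period
            row.append(f"I{i + 1}" if 0 <= i < num_instructions else "--")
        rows.append(row)
    return rows


if __name__ == "__main__":
    rows = space_time(8)
    print("cycle " + " ".join(f"{s:>3}" for s in STAGES))
    for c, row in enumerate(rows, start=1):
        print(f"{c:>5} " + " ".join(f"{x:>3}" for x in row))
    print("total cycles:", len(rows))
```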

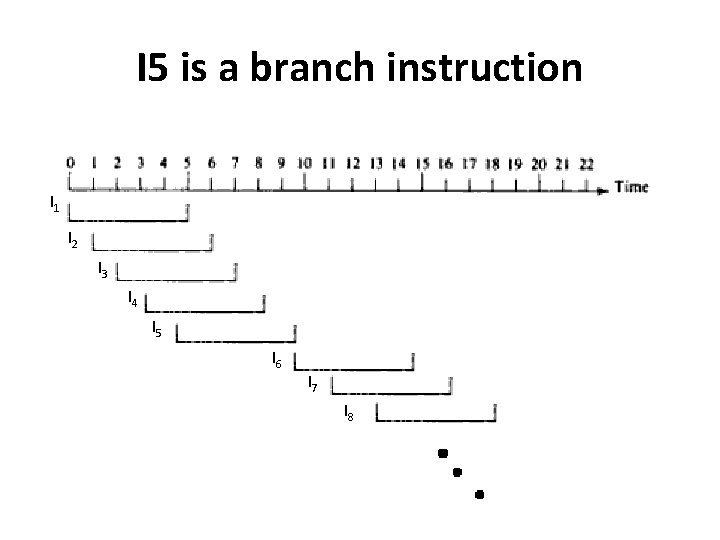

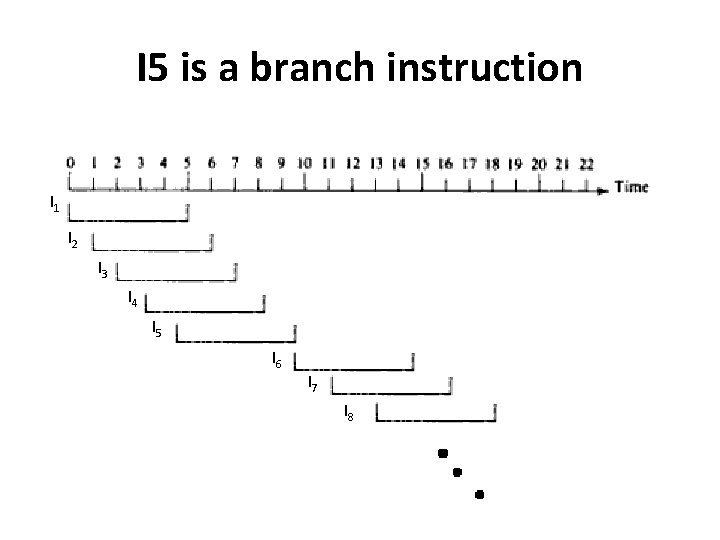

I5 is a branch instruction (instructions I1 to I8)
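
The cost of the branch can be counted with the Python sketch below. It assumes that the instruction after a successful branch is not fetched until the branch has left the last stage. Under that assumption, each taken branch inserts k - 1 idle clock periods into a k-stage pipeline. The function name and the 1-based `taken_branches` argument are illustrative.

```python
def total_cycles(num_instructions, k, taken_branches=()):
    """Clock periods to complete num_instructions on a k-stage linear pipeline.

    taken_branches: 1-based indices of instructions that are successful branches.
    Assumption: the next fetch waits until the branch has left the last stage.
    """
    entry = 0  # clock period (0-based) in which the current instruction enters stage 1
    for j in range(1, num_instructions):
        # Instruction j+1 starts one period after instruction j,
        # or k periods after it if instruction j is a taken branch.
        entry += k if j in taken_branches else 1
    return entry + k


if __name__ == "__main__":
    print(total_cycles(8, 5))                      # no branching
    print(total_cycles(8, 5, taken_branches={5}))  # I5 is a taken branch
```

For 8 instructions on 5 stages, this gives 12 clock periods without branching and 16 when I5 is taken, so the branch costs 4 = n - 1 extra periods.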

Estimation of the effect of branching on an n-segment instruction pipeline

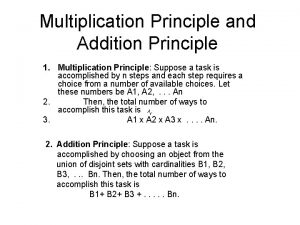

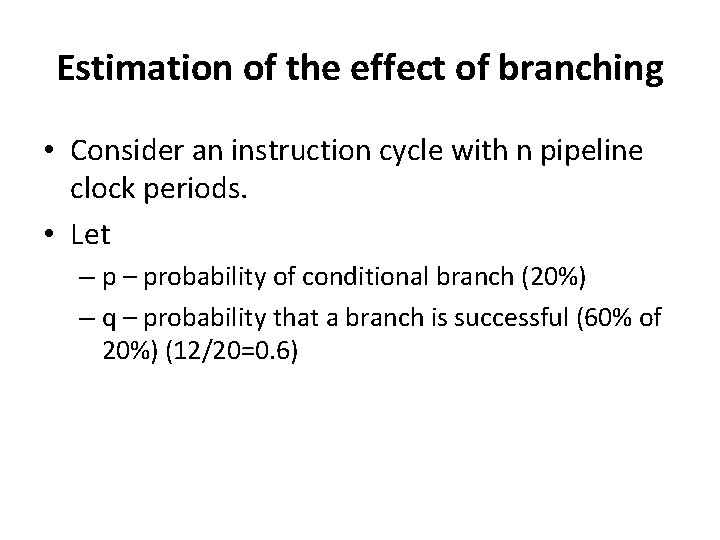

Estimation of the effect of branching
• Consider an instruction cycle with n pipeline clock periods.
• Let
  – p – probability of a conditional branch (20%)
  – q – probability that a conditional branch is successful, i.e. taken (12/20 = 0.6)

Estimation of the effect of branching
• Suppose there are m instructions.
• Then the number of successful branches = m × p × q (= m × 0.2 × 0.6 = 0.12m).
• Each successful branch requires a delay of (n − 1)/n instruction cycles (that is, n − 1 clock periods) to flush the pipeline.

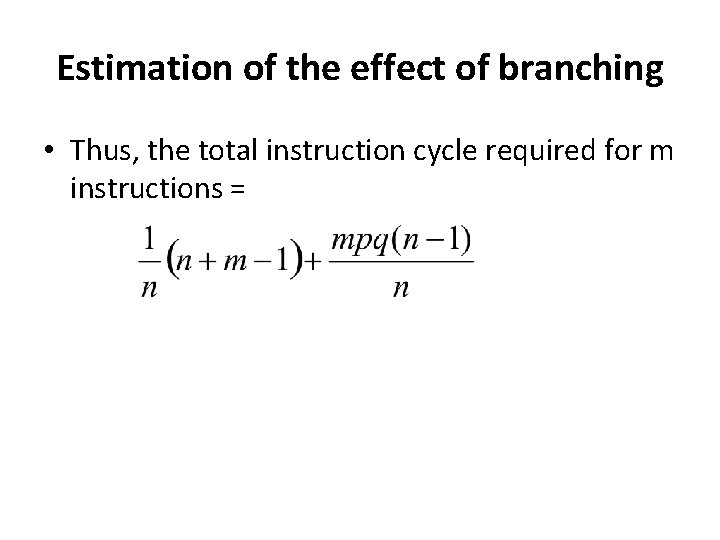

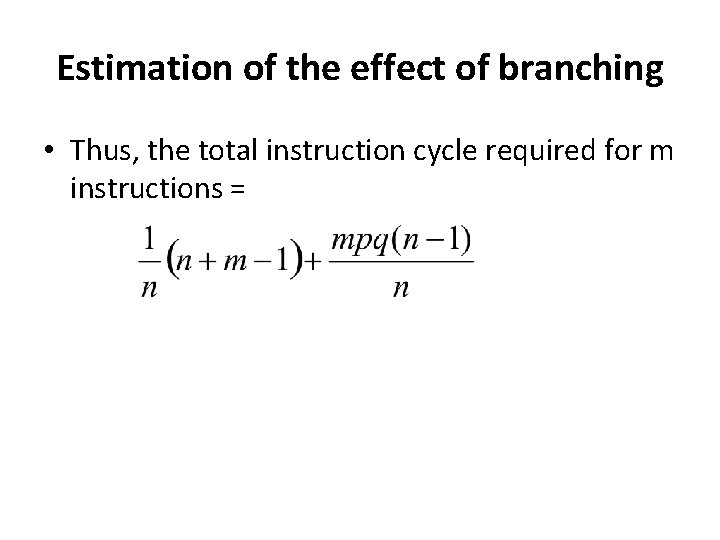

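Estimation of the effect of branching
• Thus, the total number of instruction cycles required for m instructions is

$$\frac{n + m - 1}{n} + \frac{m p q (n - 1)}{n}$$

where the first term is the ideal time (n + m − 1 clock periods to fill and drain the pipeline) and the second term is the total flushing delay of the m × p × q successful branches.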

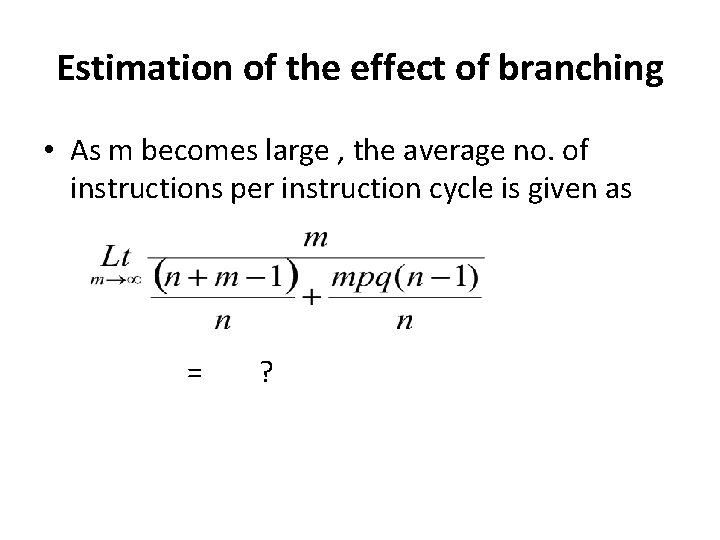

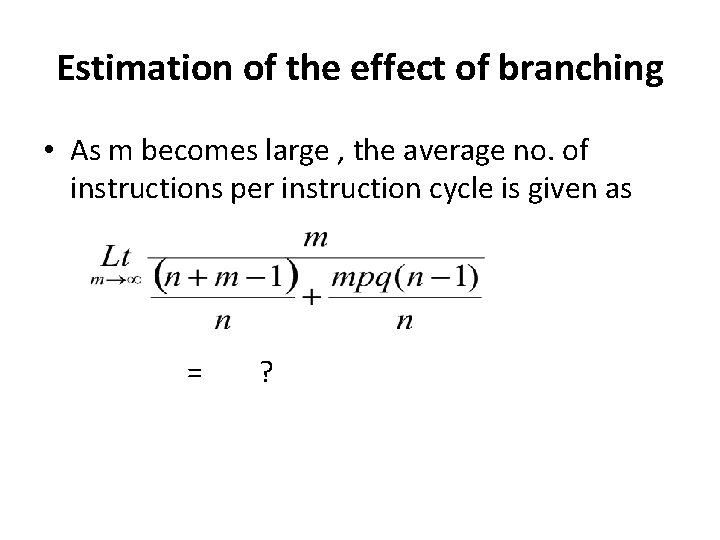


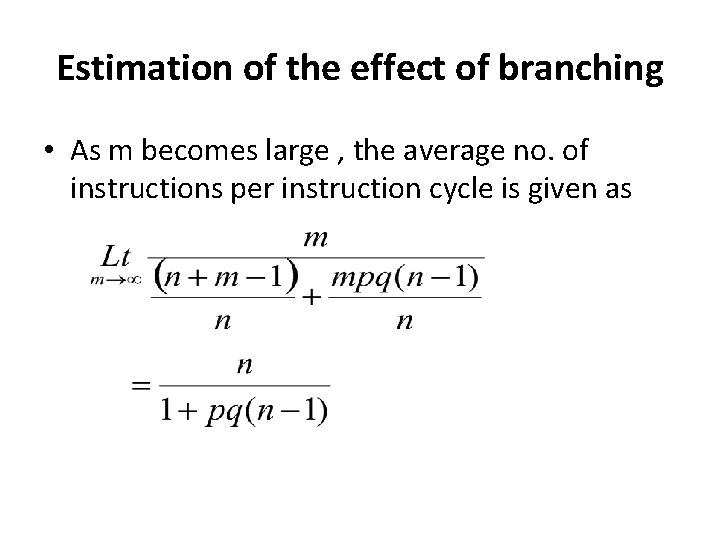

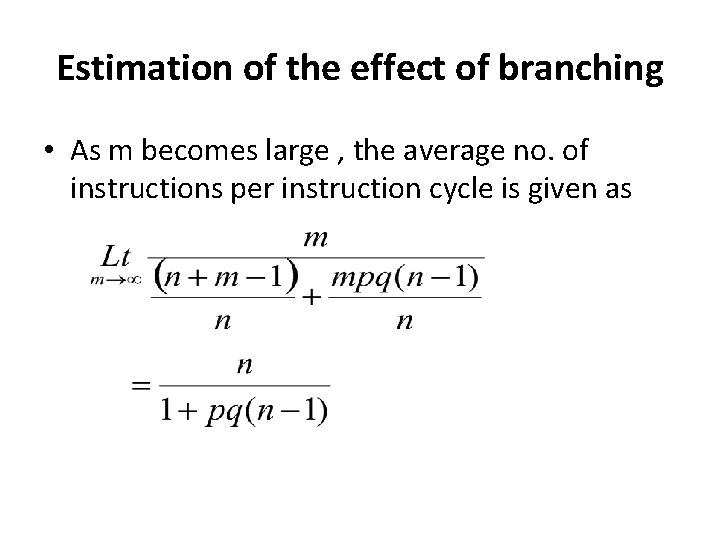

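Estimation of the effect of branching
• As m becomes large, the average number of instructions per instruction cycle is given by

$$\lim_{m \to \infty} \frac{m}{\frac{n + m - 1}{n} + \frac{m p q (n - 1)}{n}} = \frac{n}{1 + p q (n - 1)}$$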

Estimation of the effect of branching
• When p = 0, the above measure reduces to n, which is ideal.
• In reality, with p > 0, q > 0 and n > 1, it is always less than n.
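
The estimate can be evaluated numerically with the Python sketch below. For m instructions, the total is (n + m − 1)/n + m·p·q·(n − 1)/n instruction cycles, and the average throughput is m divided by that total. For large m, the throughput tends to n / (1 + p·q·(n − 1)). The function name and the use of `m=None` to request the limit are illustrative choices.

```python
def instructions_per_cycle(n, p, q, m=None):
    """Average instructions completed per instruction cycle (n clock periods each).

    n: number of pipeline segments; p: probability of a conditional branch;
    q: probability that a conditional branch is taken;
    m: number of instructions (None means the large-m limit).
    """
    if m is None:
        return n / (1 + p * q * (n - 1))
    # Ideal fill-and-drain time plus (n - 1)/n instruction cycles per taken branch.
    cycles = (n + m - 1) / n + m * p * q * (n - 1) / n
    return m / cycles


if __name__ == "__main__":
    print(instructions_per_cycle(5, 0.0, 0.6))  # ideal case, p = 0
    print(instructions_per_cycle(5, 0.2, 0.6))  # p = 0.2, q = 0.6
```

With n = 5, p = 0.2 and q = 0.6, the limit is 5 / 1.48 ≈ 3.38 instructions per instruction cycle, compared with the ideal value of 5.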

Solution = ?

Multiple Prefetch Buffers
• Three types of buffers can be used to match the instruction fetch rate to the pipeline consumption rate:
  1. Sequential Buffers: for in-sequence pipelining
  2. Target Buffers: hold instructions from a branch target (for out-of-sequence pipelining)

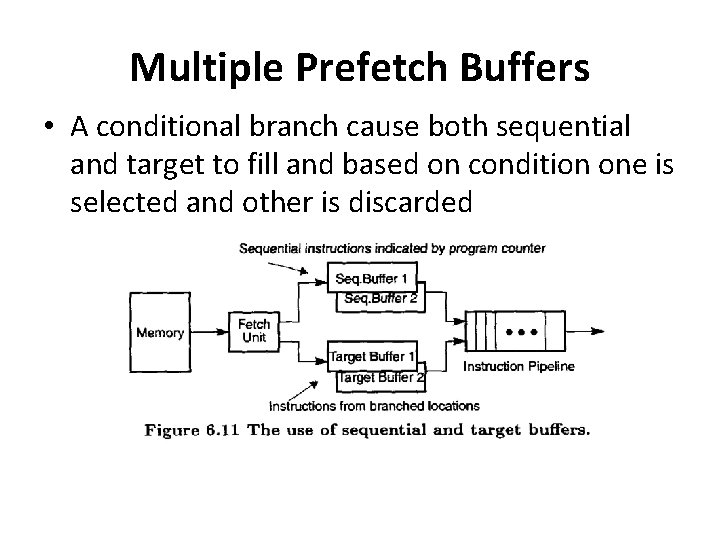

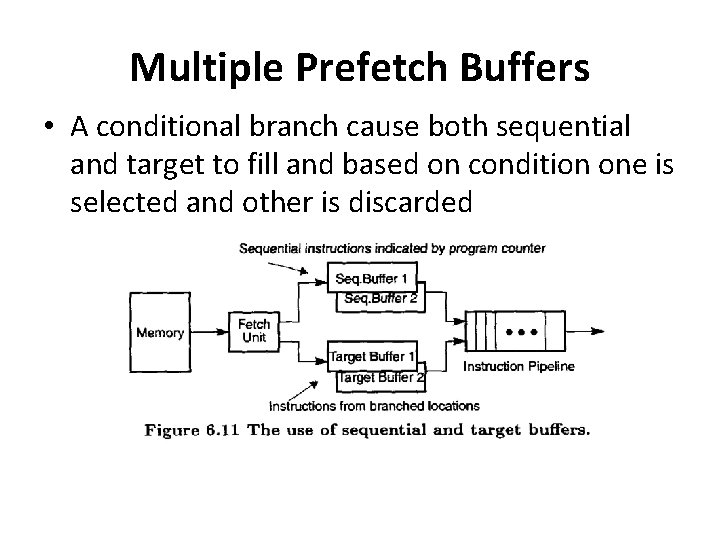

Multiple Prefetch Buffers
• A conditional branch causes both the sequential and the target buffers to be filled; based on the branch condition, one is selected and the other is discarded.

Multiple Prefetch Buffers
  3. Loop Buffers: hold the sequential instructions within a loop
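
The Python sketch below models how a sequential buffer and a target buffer handle a conditional branch. When the branch is encountered, both paths are prefetched. Once the condition is resolved, the buffer for the path taken is kept and the other is discarded. Buffers hold instruction addresses. The class name, method names and the default `depth` of 4 are hypothetical, chosen for illustration.

```python
from collections import deque


class PrefetchUnit:
    """Toy model of a sequential buffer plus a target buffer (hypothetical design)."""

    def __init__(self, program_length, depth=4):
        self.program_length = program_length  # addresses run from 0 to program_length - 1
        self.depth = depth                    # instructions prefetched per buffer (assumed)
        self.sequential = deque()
        self.target = deque()

    def _fill(self, buf, start):
        buf.clear()
        end = min(start + self.depth, self.program_length)
        buf.extend(range(start, end))

    def on_conditional_branch(self, branch_addr, target_addr):
        # The condition is not known yet, so prefetch down both paths.
        self._fill(self.sequential, branch_addr + 1)
        self._fill(self.target, target_addr)

    def resolve(self, taken):
        # Keep the buffer for the path actually followed; discard the other.
        if taken:
            kept, dropped = self.target, self.sequential
        else:
            kept, dropped = self.sequential, self.target
        dropped.clear()
        return list(kept)


if __name__ == "__main__":
    unit = PrefetchUnit(program_length=20)
    unit.on_conditional_branch(branch_addr=5, target_addr=12)
    print(unit.resolve(taken=True))  # [12, 13, 14, 15]
```

Whichever way the branch goes, the next instructions are already buffered, so the pipeline does not wait for a fresh fetch from memory.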

Data Buffering and Busing Structures

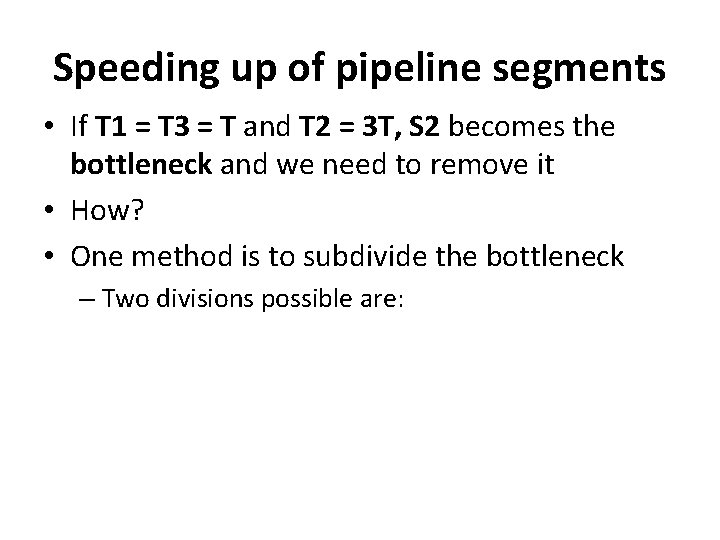

Speeding up of pipeline segments
• The processing speeds of pipeline segments are usually unequal.
• Consider the example given below: a three-segment pipeline S1, S2, S3 with segment delays T1, T2, T3.

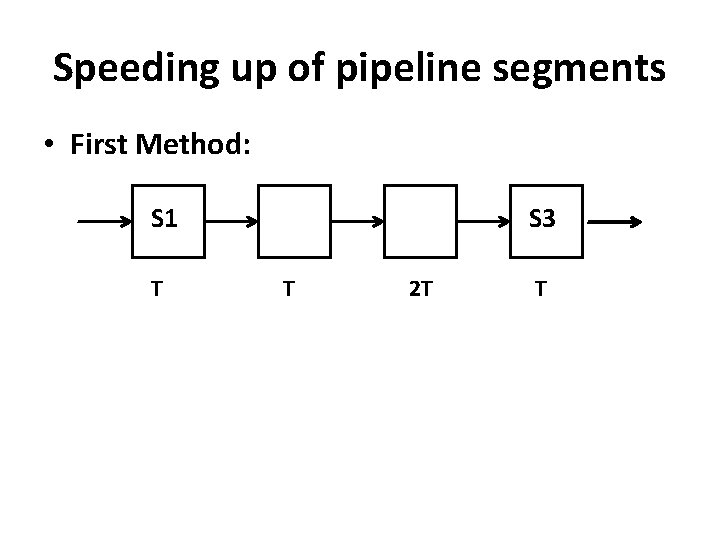

Speeding up of pipeline segments
• If T1 = T3 = T and T2 = 3T, S2 becomes the bottleneck and we need to remove it.
• How?
• One method is to subdivide the bottleneck.
  – Two divisions are possible:

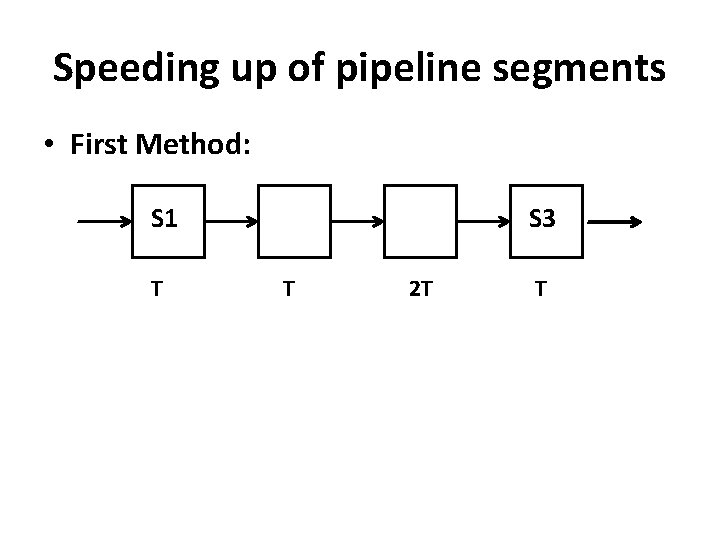

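Speeding up of pipeline segments
• First Method: subdivide S2 into two segments with delays T and 2T, giving S1 (T), S2a (T), S2b (2T), S3 (T). The slowest segment now takes 2T instead of 3T.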


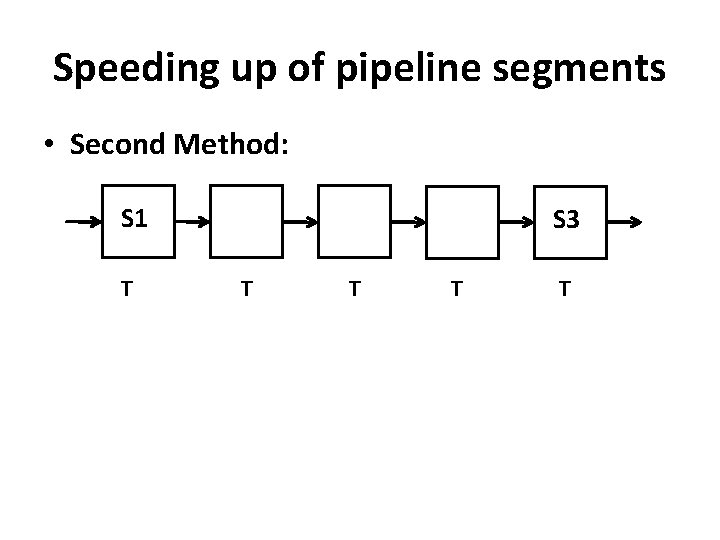

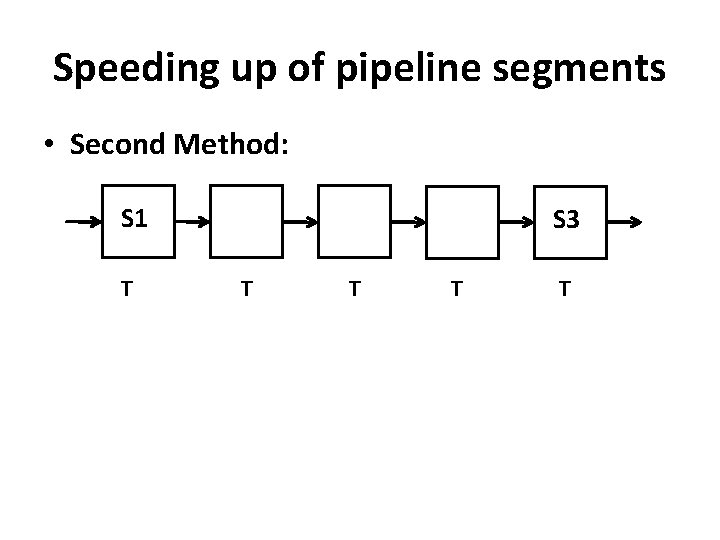

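Speeding up of pipeline segments
• Second Method: subdivide S2 into three segments of T each, giving S1 (T), S2a (T), S2b (T), S2c (T), S3 (T). Every segment now takes T.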

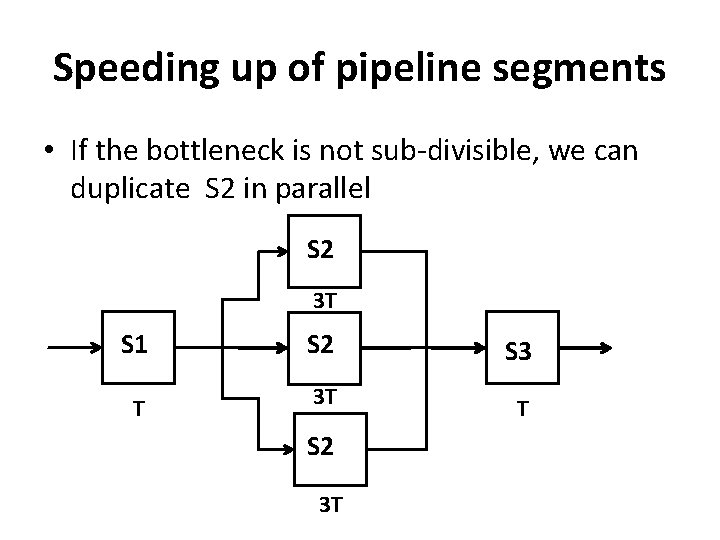

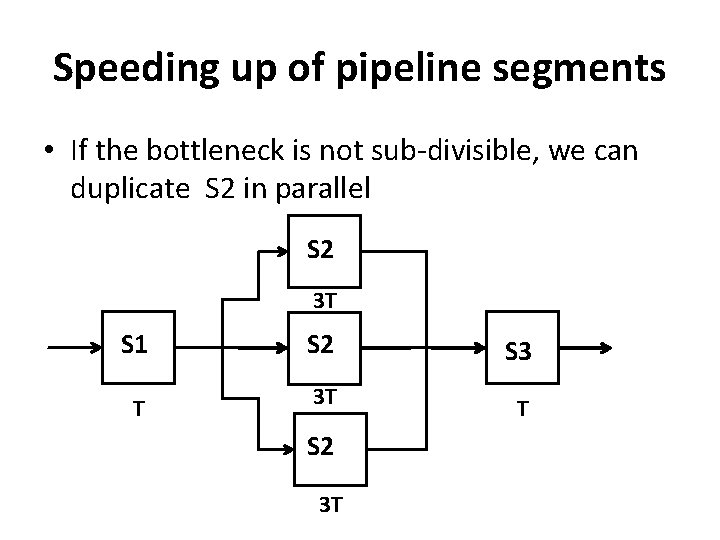

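Speeding up of pipeline segments
• If the bottleneck is not subdivisible, we can duplicate S2 in parallel: S1 (T) feeds several copies of S2 (3T each), which feed S3 (T). With three copies used in turn, a new task can enter S2 every T.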

Speeding up of pipeline segments
• Control and synchronization are more complex with parallel segments.
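
The options can be compared with the Python sketch below. The pipeline clock must accommodate the slowest segment, so throughput is 1 / max(segment delay). A segment duplicated c times is assumed to accept a new task every delay/c. Delays are given as multiples of T, so the results are in tasks per T. The function name and the `copies` parameter are illustrative, and the model ignores the extra control and synchronization cost of parallel segments.

```python
from fractions import Fraction


def throughput(stage_delays, copies=None):
    """Tasks per unit time (in units of 1/T) for a linear pipeline.

    stage_delays: delay of each segment as a multiple of T.
    copies: parallel copies of each segment (default 1 each); c copies of a
    segment with delay d are assumed to accept a new task every d/c.
    """
    if copies is None:
        copies = [1] * len(stage_delays)
    # The clock period is set by the slowest (effective) segment.
    clock = max(Fraction(d, c) for d, c in zip(stage_delays, copies))
    return 1 / clock


if __name__ == "__main__":
    print("original      ", throughput([1, 3, 1]))                    # 1/3
    print("first method  ", throughput([1, 1, 2, 1]))                 # 1/2
    print("second method ", throughput([1, 1, 1, 1, 1]))              # 1
    print("3 copies of S2", throughput([1, 3, 1], copies=[1, 3, 1]))  # 1
```

The first method improves throughput from 1/(3T) to 1/(2T). The second method and triplication of S2 both reach 1/T.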

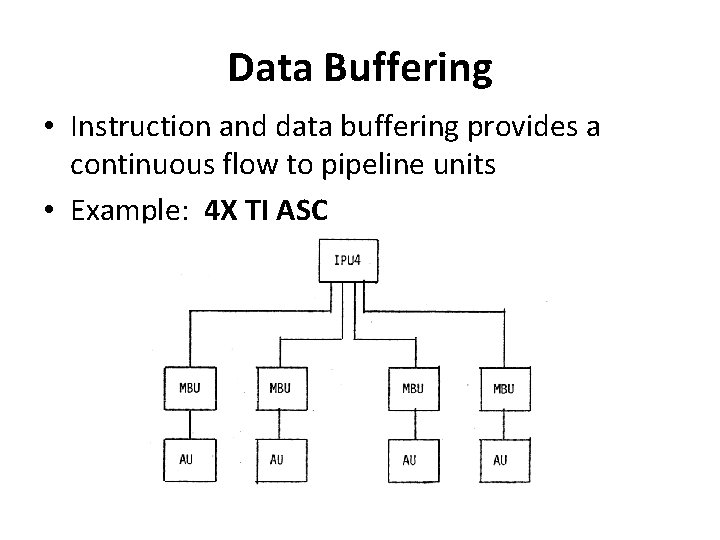

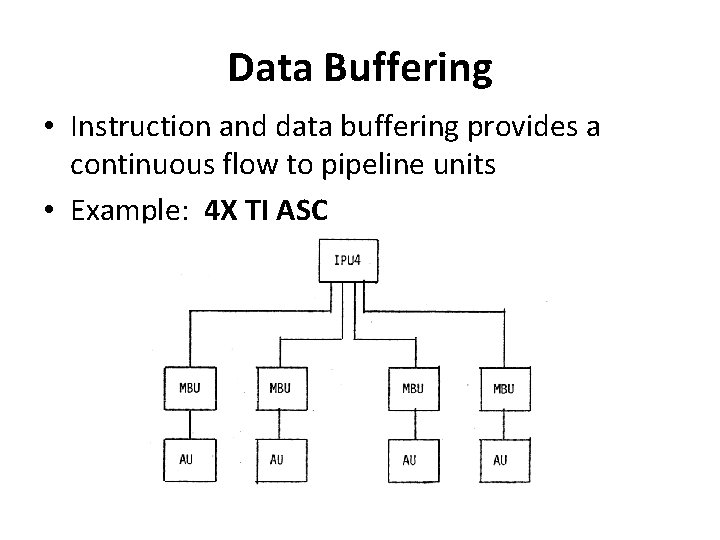

Data Buffering
• Instruction and data buffering provides a continuous flow of instructions and operands to the pipeline units.
• Example: 4 X TI ASC

Example: 4 X TI ASC
• This system uses a memory buffer unit (MBU), which:
  – supplies the arithmetic unit with a continuous stream of operands
  – stores results in memory
• The MBU has three double buffers X, Y and Z (one octet per buffer):
  – X and Y for input, Z for output

Example: 4 X TI ASC
• This supports pipeline processing at a high rate and alleviates the bandwidth mismatch between memory and the arithmetic pipeline.
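
The double-buffering idea can be sketched in Python as follows. Each of X, Y and Z has two octet-sized halves. While the arithmetic unit consumes one half of X and Y, memory fills the other half with the next octet; results go into Z and are written back from there. In hardware, the prefetch and the arithmetic overlap in time; this sequential model only shows the alternation of halves. The class and function names are hypothetical, and the sketch does not model the actual TI ASC MBU control logic.

```python
OCTET = 8  # words per buffer half ("one octet per buffer")


class DoubleBuffer:
    """Two halves: one is filled from memory while the other is consumed."""

    def __init__(self):
        self.halves = [[], []]
        self.fill_side = 0  # index of the half currently being written

    def fill(self, words):
        assert len(words) <= OCTET
        self.halves[self.fill_side] = list(words)

    def drain(self):
        # The half not being written is the one ready to be consumed.
        return self.halves[1 - self.fill_side]

    def swap(self):
        self.fill_side = 1 - self.fill_side


def vector_add(x_mem, y_mem):
    """Add two equal-length vectors an octet at a time through X, Y and Z."""
    X, Y, Z = DoubleBuffer(), DoubleBuffer(), DoubleBuffer()
    starts = list(range(0, len(x_mem), OCTET))
    out = []
    if not starts:
        return out
    # Prime: load the first octet and make it the ready half.
    X.fill(x_mem[:OCTET])
    Y.fill(y_mem[:OCTET])
    X.swap()
    Y.swap()
    for idx in range(len(starts)):
        # Memory side: prefetch the next octet into the free halves
        # (in hardware this overlaps with the arithmetic step below).
        if idx + 1 < len(starts):
            nxt = starts[idx + 1]
            X.fill(x_mem[nxt:nxt + OCTET])
            Y.fill(y_mem[nxt:nxt + OCTET])
        # Arithmetic side: consume the ready halves and write results into Z.
        Z.fill([a + b for a, b in zip(X.drain(), Y.drain())])
        Z.swap()
        out.extend(Z.drain())  # Z's ready half is stored back to memory
        X.swap()
        Y.swap()
    return out
```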

Busing Structures
• Problem: ideally, the subfunctions in a pipeline should be independent; otherwise the pipeline must be halted until the dependency is removed.
• Solution: an efficient internal busing structure.
• Example: TI ASC

Example: TI ASC
• In the TI ASC, once an instruction dependency is recognized, an update capability is provided by transferring the contents of the Z buffer directly to the X or Y buffer.
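
The benefit of the Z-to-X update path can be illustrated with the Python sketch below. It executes a sequence of vector additions `(dest, src1, src2)`. When `src1` names the previous result, the model copies Z straight into X instead of reading the operand back from memory, and counts how many memory operand loads this saves. The operation format, names and counting are hypothetical; the sketch only illustrates the idea of an internal transfer path, not the TI ASC's actual dependency-detection logic.

```python
def run(ops, memory, forward=True):
    """Execute (dest, src1, src2) vector adds; return the number of memory operand loads.

    With forward=True, if src1 names the previous result, Z is copied directly
    into X over the internal bus instead of being re-read from memory.
    """
    loads = 0
    prev_dest, z = None, None
    for dest, src1, src2 in ops:
        if forward and src1 == prev_dest:
            x = list(z)          # Z -> X transfer, no memory access
        else:
            x = memory[src1]
            loads += 1
        y = memory[src2]
        loads += 1
        z = [a + b for a, b in zip(x, y)]
        memory[dest] = z         # results are still stored to memory
        prev_dest = dest
    return loads


if __name__ == "__main__":
    mem = {"A": [1, 2], "B": [3, 4], "C": [5, 6]}
    ops = [("T", "A", "B"), ("R", "T", "C")]  # R depends on T
    print(run(ops, dict(mem), forward=True))   # 3 loads
    print(run(ops, dict(mem), forward=False))  # 4 loads
```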
