Chapter Nine: Evaluating Results from Samples

- Slides: 22

Chapter Nine: Evaluating Results from Samples • Review of concepts of testing a null hypothesis. • Test statistic and its null distribution • Type I and Type II errors • Level of significance, probability of a Type II error, and power.

Objective is to develop concepts that are useful for planning studies. • Choose test statistic (usually this is a sample mean) • Balance two types of errors by choice of α (probability of a Type I error) and β (probability of a Type II error) • Determine sample size so that α and β specifications are met. • Even arbitrary rules have defined α and β.

Statistical Sampling of Populations • ASSUME there is a defined population and that we want to estimate such parameters as the mean of the population. • Observe a small number of randomly selected members from the larger population. • Apply appropriate statistics to the sample to generate correct conclusions.

Why random samples? • Other sampling procedures have failed consistently. – 1936 Literary Digest Poll. – 1948 opinion polls on the outcome of the presidential race. • Random samples, correctly drawn, have known properties. – Value of theorems and mathematical argument.

Procedure of Examining a Test of a Hypothesis • Start with a question. – Is a student a random guesser or knowledgeable about statistics? • Specify a null hypothesis. – $H_0$: Student is a random guesser. – The null hypothesis should be plausible. – The probability distribution generated by the null hypothesis should be easy to compute with.

Procedure of Examining a Test of a Hypothesis • Specify a statistic, a sample size, and a rule. – Statistic = $S_4$, the number of correct answers in a four-question true-false test, with questions of equal difficulty in random order. – Sample size is the length of the test, 4 questions (an unreasonably short examination). – Rule is to reject $H_0$ when $S_4 \ge 4$. – The value 4 is the “critical value.” • This is an example of a “binomial test.”

Procedure of Examining a Test of a Hypothesis • Determine the properties of the procedure. – Specify the null distribution of your test statistic. – Specify the alternative that you want to be able to detect with low error rate. • Student who has an 80 percent chance of correctly answering each question.

Type I error • Definition: to reject the null hypothesis when it is true. • This example: Call a random guesser a knowledgeable student. • Can a Type I error happen? – Yes; anytime a random guesser gets 4 or more correct answers (that is, exactly 4 correct). – Hopefully, the probability of a Type I error is small.
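Whether a Type I error can happen can also be explored by simulation. The sketch below is only an illustration: it assumes Python's standard `random` module, and the function name `simulate_type_one_rate` and its parameters are hypothetical. It lets many random guessers take the four-question test and reports the fraction who get all four right and are therefore wrongly called knowledgeable.

```python
import random

def simulate_type_one_rate(n_questions=4, critical_value=4, trials=100_000, seed=0):
    """Estimate how often a random guesser is wrongly called knowledgeable."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    rejections = 0
    for _ in range(trials):
        # A random guesser answers each true-false question correctly
        # with probability 0.5.
        correct = sum(rng.random() < 0.5 for _ in range(n_questions))
        if correct >= critical_value:  # rule: reject H0 when S_4 >= 4
            rejections += 1
    return rejections / trials

rate = simulate_type_one_rate()
print(f"Estimated Type I error rate: {rate:.4f}")
```

The estimate varies with the seed and the number of trials; the exact value comes from the null distribution of the test statistic.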

Level of Significance • Definition: The level of significance is the probability of a Type I error (reject the null hypothesis when it is true) and is usually denoted by α. • That is, $\alpha = \Pr_0\{\text{Reject } H_0\}$, where the subscript 0 means the probability is computed under the null hypothesis. • Example problem: $\alpha = \Pr_0\{S_4 \ge 4\}$. – Notice that α is a right-sided probability. • Problem is to find α.

Cumulative Distribution Function • Use the cumulative distribution function (cdf) to get the answer. • Definition: The cdf of the random variable $X$ at the argument $x$, written $F_X(x)$, is the probability that $X \le x$; that is, $F_X(x) = \Pr\{X \le x\}$. • Use table look-up on the reported cdf to get the answer.
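When a printed table is not at hand, the cdf can be tabulated directly. The following is a minimal sketch using only the Python standard library; the helper names `binom_pmf` and `binom_cdf` are illustrative, and the two success probabilities (0.5 for the random guesser, 0.8 for the knowledgeable student) are taken from the example in these slides.

```python
from math import comb

def binom_pmf(k, n, p):
    """Probability of exactly k successes in n independent trials."""
    return comb(n, k) * p**k * (1 - p)**(n - k)

def binom_cdf(x, n, p):
    """F(x) = Pr{X <= x} for a binomial(n, p) random variable."""
    return sum(binom_pmf(k, n, p) for k in range(0, x + 1))

n = 4
for x in range(n + 1):
    f0 = binom_cdf(x, n, 0.5)  # null: random guesser
    f1 = binom_cdf(x, n, 0.8)  # alternative: 80 percent chance per question
    print(f"x={x}  F0(x)={f0:.4f}  F1(x)={f1:.4f}")
```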

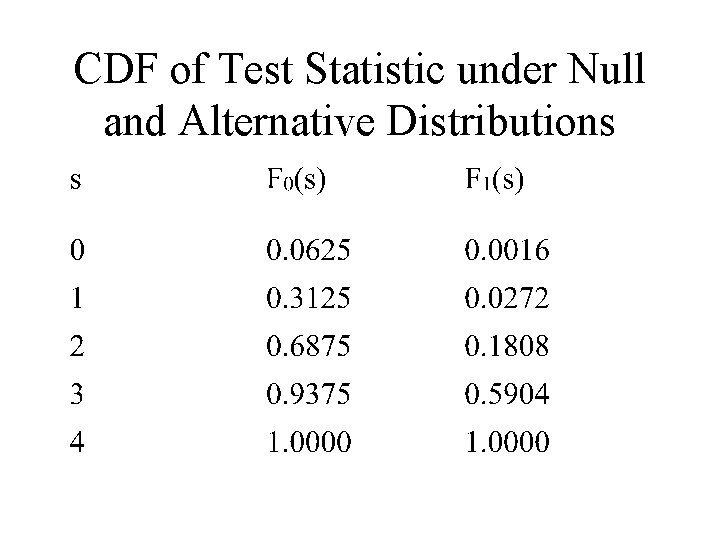

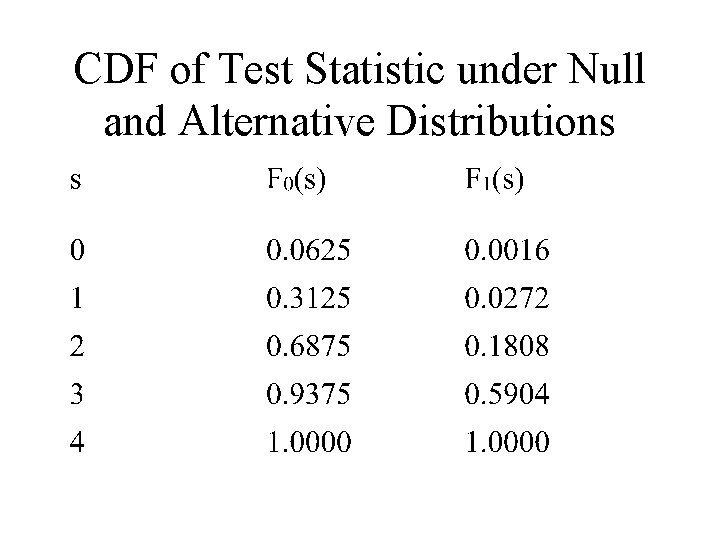

CDF of Test Statistic under Null and Alternative Distributions

Finding Level of Significance α • $\alpha = \Pr_0\{\text{Reject } H_0\}$. • In this problem, $\alpha = \Pr_0\{S_4 \ge 4\}$. This is a right-sided probability. • Since cdfs are left-sided probabilities, use the complement principle. • $\Pr_0\{S_4 \ge 4\} = 1 - \Pr_0\{S_4 \le 3\} = 1 - F_0(3) = 1 - 0.9375$. • The answer is 0.0625.
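The complement step can be checked numerically. This sketch (standard library only; `binom_cdf` is an illustrative helper, not something from the slides) computes α both from the null cdf and by summing the right tail directly, so the two routes can be compared.

```python
from math import comb

def binom_cdf(x, n, p):
    """Left-sided probability Pr{X <= x} for a binomial(n, p) count."""
    return sum(comb(n, k) * p**k * (1 - p)**(n - k) for k in range(x + 1))

n = 4               # number of questions
critical_value = 4  # reject H0 when S_4 >= 4
p_null = 0.5        # random guesser

# Complement principle: Pr0{S_4 >= 4} = 1 - F0(3).
alpha = 1 - binom_cdf(critical_value - 1, n, p_null)

# Direct right-tail sum, as a cross-check on the complement step.
alpha_direct = sum(
    comb(n, k) * p_null**k * (1 - p_null)**(n - k)
    for k in range(critical_value, n + 1)
)

print(f"alpha via complement = {alpha:.4f}, via direct sum = {alpha_direct:.4f}")
```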

Type II error • Definition: to accept the null hypothesis when it is false. • This example: Call a knowledgeable student a random guesser. • Can a Type II error happen? – Yes; anytime a knowledgeable student makes a mistake (that is, gets 3 or fewer correct). – Hopefully, the probability of a Type II error is small.

Probability of a Type II error β • Definition: The probability of a Type II error (accept the null hypothesis when it is false) is usually denoted by β. • That is, $\beta = \Pr_1\{\text{Accept } H_0\}$. • Example problem: $\beta = \Pr_1\{S_4 \le 3\} = F_1(3) = 0.5904$.
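Because accepting the null hypothesis is a left-sided event here, β is read straight off the alternative cdf with no complement step. A minimal sketch, assuming the 80 percent alternative from the example and an illustrative `binom_cdf` helper:

```python
from math import comb

def binom_cdf(x, n, p):
    """Left-sided probability Pr{X <= x} for a binomial(n, p) count."""
    return sum(comb(n, k) * p**k * (1 - p)**(n - k) for k in range(x + 1))

n = 4
critical_value = 4
p_alternative = 0.8  # knowledgeable student: 80 percent chance per question

# H0 is accepted when S_4 <= 3, so beta = F1(3) under the alternative.
beta = binom_cdf(critical_value - 1, n, p_alternative)
print(f"beta = {beta:.4f}")
```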

Summary of Findings • The probability of a Type I error is α = 0.0625. • The probability of a Type II error is β = 0.5904 for a student who is able to answer 80 percent of the true-false questions correctly. • The value of α is within reasonable norms. • The value of β is unreasonably large. • The test procedure is not satisfactory.
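One way to see why the four-question test is unsatisfactory is to tabulate both error rates for every possible critical value. The sketch below does this with the standard library; `error_rates` and its parameter names are hypothetical, and the alternative is the 80 percent student from the example.

```python
from math import comb

def binom_cdf(x, n, p):
    """Left-sided probability Pr{X <= x} for a binomial(n, p) count."""
    return sum(comb(n, k) * p**k * (1 - p)**(n - k) for k in range(x + 1))

def error_rates(n, critical_value, p_null=0.5, p_alt=0.8):
    """Return (alpha, beta) for the rule 'reject H0 when S_n >= critical_value'."""
    alpha = 1 - binom_cdf(critical_value - 1, n, p_null)  # right tail under H0
    beta = binom_cdf(critical_value - 1, n, p_alt)        # left tail under alternative
    return alpha, beta

for c in range(0, 5):
    alpha, beta = error_rates(4, c)
    print(f"critical value {c}: alpha={alpha:.4f}  beta={beta:.4f}")
```

With only four questions, no critical value makes both error rates small at once: lowering the critical value to 3 reduces β but raises α well above the usual norms.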

Further Interpretation of error rates • The Type I error rate α should be small and roughly equal to β. – Minimax argument assuming cost of a Type I error is same as cost of a Type II error. • Increasing the sample size while holding α constant will reduce β. • There is a tradeoff of α and β. • Changing the design of the measuring process may lead to reduced error rates.
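The effect of sample size can be sketched numerically. Because the binomial distribution is discrete, α cannot be held exactly constant, so the code below assumes a cap instead: for each test length it picks the smallest critical value whose α does not exceed 0.0625 and reports the resulting β against the 80 percent alternative. The function names and the chosen test lengths (4, 10, 20, 50) are illustrative assumptions.

```python
from math import comb

def upper_tail(c, n, p):
    """Right-sided probability Pr{S_n >= c} for a binomial(n, p) count."""
    return sum(comb(n, k) * p**k * (1 - p)**(n - k) for k in range(c, n + 1))

def design(n, alpha_max=0.0625, p_null=0.5, p_alt=0.8):
    """Smallest critical value with alpha <= alpha_max, with its alpha and beta."""
    for c in range(n + 2):  # c = n + 1 never rejects, so the loop always returns
        alpha = upper_tail(c, n, p_null)
        if alpha <= alpha_max + 1e-12:  # small tolerance for floating-point rounding
            beta = 1 - upper_tail(c, n, p_alt)
            return c, alpha, beta

results = {n: design(n) for n in (4, 10, 20, 50)}
for n, (c, alpha, beta) in results.items():
    print(f"n={n:2d}  critical value={c:2d}  alpha={alpha:.4f}  beta={beta:.4f}")
```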

Power • Definition: The power of a procedure is $1 - \beta$. • Error rates should be small. • Power should be large.
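As a worked check for the running example (80 percent alternative, rule: reject when $S_4 \ge 4$), the power is the probability that the knowledgeable student answers all four questions correctly:

$$\text{power} = 1 - \beta = \Pr_1\{S_4 \ge 4\} = 0.8^4 = 0.4096 = 1 - 0.5904.$$

So this test detects the knowledgeable student less than half the time.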

Additional Definitions • Statistic: a random variable whose value will be completely specified by the observation of an experimental process. • Parameter: a property of the population being studied, such as its mean or variance. • Standard error: standard deviation of a statistic.
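For the binomial statistic used in this lecture's example, the standard error can be worked out from the standard binomial variance formula $np(1-p)$, a textbook fact that the slides themselves do not state:

$$\mathrm{SE}(S_4) = \sqrt{np(1-p)} = \sqrt{4 \cdot 0.5 \cdot 0.5} = 1 \ \text{under } H_0, \qquad \sqrt{4 \cdot 0.8 \cdot 0.2} = 0.8 \ \text{under the 80 percent alternative}.$$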

Observed significance level • Definition of observed significance level: a statistic equal to the probability, computed under the null hypothesis, of observing a result as extreme as or more extreme than the one observed in the given data. • Three types of observed significance level: right-sided, left-sided, and two-sided.

Finding the observed significance level in this example • A student takes the four-question true-false test and has two answers correct. What is the observed significance level? • What side? Here, right side. • Apply the definition of observed significance level: osl = $\Pr_0\{S_4 \ge 2\}$.

Finding the observed significance level in this example • Use the complement rule to get to a left-sided probability. • $\Pr_0\{S_4 \ge 2\} = 1 - \Pr_0\{S_4 \le 1\} = 1 - F_0(1)$, where $F_0$ is the cdf under the null hypothesis. • The answer is 1 - 0.3125 = 0.6875. • This is a statistic whose value is the probability that a random guesser does as well as or better than the student who took the test.
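The same complement rule gives the right-sided observed significance level for any score on the test. A minimal sketch using the standard library; `right_sided_osl` is a hypothetical helper name, and the null distribution is the random guesser with success probability 0.5.

```python
from math import comb

def binom_cdf(x, n, p):
    """Left-sided probability Pr{X <= x} for a binomial(n, p) count."""
    return sum(comb(n, k) * p**k * (1 - p)**(n - k) for k in range(x + 1))

def right_sided_osl(observed, n, p_null=0.5):
    """Pr0{S_n >= observed}, computed as 1 - F0(observed - 1)."""
    return 1 - binom_cdf(observed - 1, n, p_null)

for score in range(5):
    print(f"score {score}: osl = {right_sided_osl(score, 4):.4f}")
```

A score of 2 gives the 0.6875 found above; only a perfect score of 4 brings the observed significance level down to 0.0625.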

Summary of Lecture • We have reviewed the basic concepts of tests of hypotheses. • We have shown how to find the error rates and observed significance level in a binomial test from a tabulation of the cdfs. • Interpretation point is that error rates should be small. Increase the sample size, change the design, or change the specifications if error rates are too large.