Chapter 6 Linear Predictive Coding (LPC) of Speech

- Slides: 23

Chapter 6 Linear Predictive Coding (LPC) of Speech Signals

- 6.1 Basic Concepts of LPC
- 6.2 Autocorrelation Solution of LPC
- 6.3 Covariance Solution of LPC
- 6.4 LPC-related parameters and their relationships

6.1 Basic Concepts of LPC (1)

- LPC is a parametric deconvolution algorithm.
- The signal $x(n)$ is assumed to be generated by an unknown sequence $e(n)$ exciting an unknown system $V(z)$, which is taken to be linear and time-invariant.
- $V(z) = G(z)/A(z)$ and $E(z)V(z) = X(z)$.
- $G(z) = \sum_{j=0}^{Q} g_j z^{-j}$ and $A(z) = 1 - \sum_{i=1}^{P} a_i z^{-i}$.
- The parameters $a_i$ and $g_j$ are real, and the leading coefficient of $A(z)$ is normalized to 1.
- If an algorithm can estimate all of these parameters, then $V(z)$ is known and $E(z) = X(z)/V(z)$ follows. This completes the deconvolution.

Basic Concepts of LPC (2)

- The model is subject to two restrictions.
- (1) $G(z) = 1$, so $V(z) = 1/A(z)$. This is the so-called all-pole model, and the parametric deconvolution reduces to estimating the coefficients $a_i$.
- (2) The excitation has the form $G\,e(n)$, where $e(n)$ is either a periodic pulse train or Gaussian white noise. In the first case $e(n) = \sum_r \delta(n - rN_p)$. In the second case $R(k) = E[e(n)e(n+k)] = \delta(k)$ and the values of $e(n)$ follow a normal distribution. $G$ is a nonnegative real number that controls the amplitude.
- The analysis proceeds as $x(n) \to V(z)$ (that is, $P$ and $a_i$) $\to e(n),\ G \to$ type of $e(n)$.

Basic Concepts of LPC (3)

- Suppose $x(n)$ and the type of $e(n)$ are known. What are the optimal estimates of $P$, $a_i$, $e(n)$ and $G$? They are obtained with a least-mean-square (LMS) criterion.
- Let $\hat{x}(n)$ be the predicted value of $x(n)$, formed as a linear combination of the previous $P'$ known values of $x$:
- $\hat{x}(n) = \sum_{i=1}^{P'} a_i x(n-i)$
- The prediction error is
- $\varepsilon(n) = x(n) - \hat{x}(n) = x(n) - \sum_{i=1}^{P'} a_i x(n-i)$
- $\varepsilon(n)$ is a stochastic sequence, and its variance measures the quality of the prediction.
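The predictor and its error can be illustrated with a minimal Python sketch. The function name `prediction_error` and the example signal are assumptions made for illustration and do not come from the slides. Coefficients are passed as a list `[a_1, ..., a_P']`, using the predictor form $\hat{x}(n) = \sum_i a_i x(n-i)$.

```python
def prediction_error(x, a):
    """Return eps(n) = x(n) - sum_i a_i * x(n-i) for n = P', ..., len(x)-1."""
    p = len(a)
    errors = []
    for n in range(p, len(x)):
        predicted = sum(a[i - 1] * x[n - i] for i in range(1, p + 1))
        errors.append(x[n] - predicted)
    return errors


# Hypothetical example: x(n) = 0.5**n obeys x(n) = 0.5 * x(n-1) exactly,
# so a first-order predictor with a_1 = 0.5 leaves no error.
x = [0.5 ** n for n in range(8)]
eps = prediction_error(x, [0.5])
sigma2 = sum(e * e for e in eps)  # error energy used to judge the predictor
```

The first `P'` samples are skipped here because they have no complete history. How those edge samples are handled is exactly what distinguishes the solution methods that follow.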

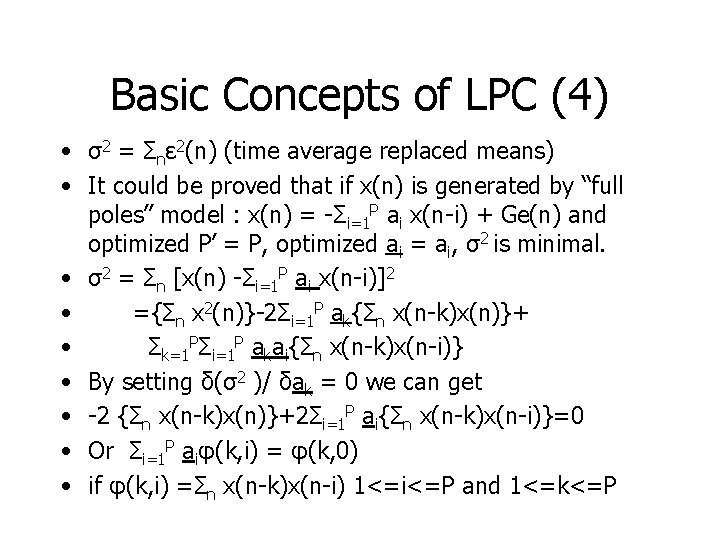

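Basic Concepts of LPC (4)

- $\sigma^2 = \sum_n \varepsilon^2(n)$ (a time average replaces the expectation).
- It can be proved that if $x(n)$ is generated by the all-pole model $x(n) = \sum_{i=1}^{P} a_i x(n-i) + G e(n)$, then $\sigma^2$ is minimal when $P' = P$ and the predictor coefficients equal the model coefficients $a_i$.
- Expanding the error energy:

$$
\sigma^2 = \sum_n \Big[x(n) - \sum_{i=1}^{P} a_i x(n-i)\Big]^2
= \sum_n x^2(n) - 2\sum_{k=1}^{P} a_k \sum_n x(n-k)x(n) + \sum_{k=1}^{P}\sum_{i=1}^{P} a_k a_i \sum_n x(n-k)x(n-i)
$$

- Setting $\partial\sigma^2/\partial a_k = 0$ gives

$$
-2\sum_n x(n-k)x(n) + 2\sum_{i=1}^{P} a_i \sum_n x(n-k)x(n-i) = 0
$$

- or $\sum_{i=1}^{P} a_i \varphi(k,i) = \varphi(k,0)$ for $1 \le k \le P$,
- where $\varphi(k,i) = \sum_n x(n-k)x(n-i)$, $0 \le i \le P$, $1 \le k \le P$.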

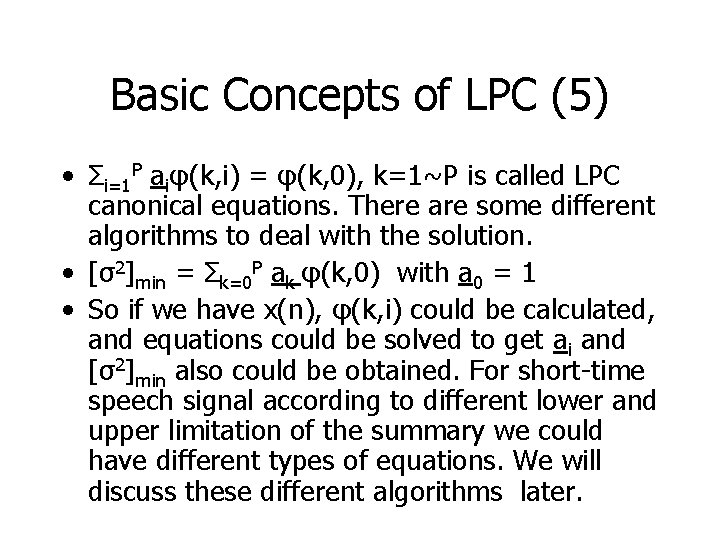

Basic Concepts of LPC (5)

- The equations $\sum_{i=1}^{P} a_i \varphi(k,i) = \varphi(k,0)$, $k = 1, \dots, P$, are called the LPC canonical (normal) equations. Several algorithms exist for solving them.
- $[\sigma^2]_{\min} = \varphi(0,0) - \sum_{k=1}^{P} a_k \varphi(k,0)$
- So, given $x(n)$, the $\varphi(k,i)$ can be computed, the equations can be solved for the $a_i$, and $[\sigma^2]_{\min}$ can be obtained as well. For a short-time speech signal, different lower and upper limits of the summation over $n$ lead to different types of equations. These algorithms are discussed in the following sections.
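A worked first-order case may make the canonical equations concrete. It assumes the predictor sign convention $\hat{x}(n) = a_1 x(n-1)$, so that the minimum error is the signal energy minus the part explained by the predictor. For $P = 1$ there is a single equation:

$$
a_1\,\varphi(1,1) = \varphi(1,0)
\quad\Longrightarrow\quad
a_1 = \frac{\varphi(1,0)}{\varphi(1,1)},
\qquad
[\sigma^2]_{\min} = \varphi(0,0) - a_1\,\varphi(1,0) = \varphi(0,0) - \frac{\varphi(1,0)^2}{\varphi(1,1)}
$$

Since $\varphi(1,1) > 0$ for any nonzero signal, the subtracted term is nonnegative, so the first-order predictor never increases the error energy relative to no prediction.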

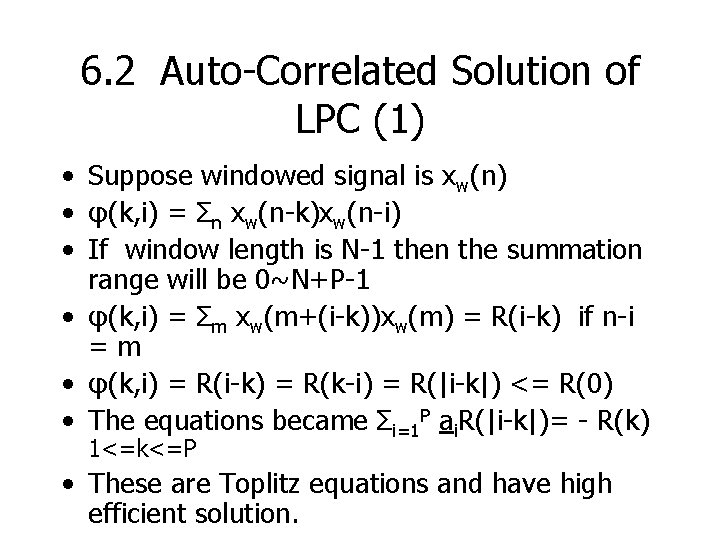

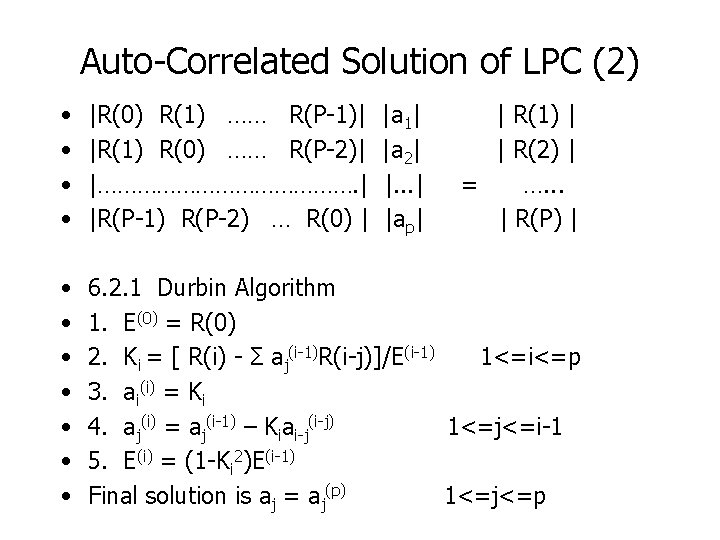

6.2 Autocorrelation Solution of LPC (1)

- Let the windowed signal be $x_w(n)$.
- $\varphi(k,i) = \sum_n x_w(n-k)\,x_w(n-i)$
- If the window covers $0 \le n \le N-1$, the summation runs over $0 \le n \le N+P-1$.
- Substituting $m = n-i$: $\varphi(k,i) = \sum_m x_w(m+(i-k))\,x_w(m) = R(i-k)$
- $\varphi(k,i) = R(i-k) = R(k-i) = R(|i-k|) \le R(0)$
- The equations become $\sum_{i=1}^{P} a_i R(|i-k|) = R(k)$, $1 \le k \le P$.
- These are Toeplitz equations, which can be solved very efficiently.

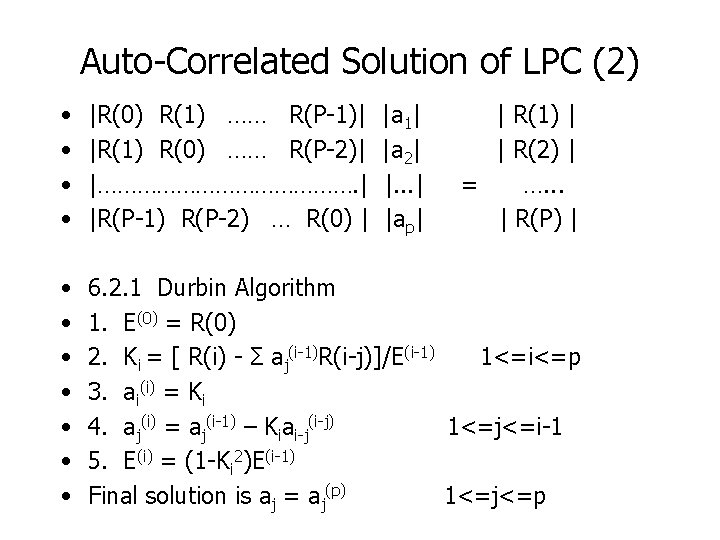

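Autocorrelation Solution of LPC (2)

- In matrix form the equations are:

$$
\begin{bmatrix}
R(0) & R(1) & \cdots & R(P-1) \\
R(1) & R(0) & \cdots & R(P-2) \\
\vdots & \vdots & \ddots & \vdots \\
R(P-1) & R(P-2) & \cdots & R(0)
\end{bmatrix}
\begin{bmatrix} a_1 \\ a_2 \\ \vdots \\ a_P \end{bmatrix}
=
\begin{bmatrix} R(1) \\ R(2) \\ \vdots \\ R(P) \end{bmatrix}
$$

- 6.2.1 Durbin Algorithm

1. $E^{(0)} = R(0)$
2. $k_i = \Big[R(i) - \sum_{j=1}^{i-1} a_j^{(i-1)} R(i-j)\Big] / E^{(i-1)}$, $1 \le i \le p$
3. $a_i^{(i)} = k_i$
4. $a_j^{(i)} = a_j^{(i-1)} - k_i\,a_{i-j}^{(i-1)}$, $1 \le j \le i-1$
5. $E^{(i)} = (1 - k_i^2)\,E^{(i-1)}$

- Steps 2 to 5 are repeated for $i = 1, \dots, p$. The final solution is $a_j = a_j^{(p)}$, $1 \le j \le p$.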

Autocorrelation Solution of LPC (3)

- At iteration $i$ we obtain the coefficients of the $i$-th order predictor and the minimal prediction error energy $E^{(i)}$. The latter can also be computed from $\{R(k)\}$:
- $E^{(i)} = R(0) - \sum_{k=1}^{i} a_k^{(i)} R(k)$, $1 \le i \le p$
- $k_i$ is the reflection coefficient. The recursion yields $-1 \le k_i \le 1$, and $|k_i| < 1$ for every $i$ is a necessary and sufficient condition for $H(z)$ to be stable.
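The Durbin recursion can be sketched in a few lines of plain Python. This is a minimal illustration under the predictor convention $\hat{x}(n) = \sum_j a_j x(n-j)$. The function name `levinson_durbin` and the sample autocorrelation values are assumptions made for illustration. The sketch assumes `R[0] > 0` and does not guard against a zero error energy.

```python
def levinson_durbin(R, p):
    """Solve sum_i a_i R(|i-k|) = R(k), k = 1..p, from autocorrelations R[0..p].

    Returns (a, k, E): predictor coefficients a_1..a_p, reflection
    coefficients k_1..k_p and the final prediction error energy E^(p).
    """
    a = [0.0] * (p + 1)  # a[j] holds a_j of the current order; a[0] is unused
    k = [0.0] * (p + 1)
    E = R[0]             # E^(0) = R(0)
    for i in range(1, p + 1):
        acc = R[i] - sum(a[j] * R[i - j] for j in range(1, i))
        k[i] = acc / E
        prev = a[:]      # coefficients of order i-1
        a[i] = k[i]
        for j in range(1, i):
            a[j] = prev[j] - k[i] * prev[i - j]
        E *= 1.0 - k[i] ** 2
    return a[1:], k[1:], E


# Hypothetical autocorrelation of a first-order process: R(k) = 0.5**k.
a, k, E = levinson_durbin([1.0, 0.5, 0.25], 2)
```

For this input the second reflection coefficient is zero, which reflects that a first-order predictor already explains the sequence. Each order costs $O(i)$ operations, so the whole solve is $O(p^2)$ rather than the $O(p^3)$ of a general solver.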

Autocorrelation Solution of LPC (4)

- 6.2.2 Schur algorithm
- First an auxiliary sequence $q_i(j)$ is defined. Its properties are:
- (1) $q_i(j) = R(j)$ when $i = 0$
- (2) $q_i(j) = 0$ when $i > 0$, $j = 1, \dots, i$
- (3) $q_p(0) = E^{(p)}$ is the prediction error energy
- (4) $|q_i(j)| \le R(0)$, with equality only if $i = j = 0$
- The algorithm is as follows:
- 1. $r(j) = R(j)/R(0)$, $r(-j) = r(j)$, $j = 0, \dots, p$
- 2. $a_0 = 1$, $E^{(0)} = 1$
- 3. $q_0(j) = r(j)$, $-p \le j \le p$

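Autocorrelation Solution of LPC (5)

- 4. $i = 1$, $k_1 = r(1)$
- 5. For $i-p \le j \le p$:
  - $q_i(j) = q_{i-1}(j) - k_i\,q_{i-1}(i-j)$
  - $k_{i+1} = q_i(i+1)/q_i(0)$ (needed only while $i < p$)
  - $a_j^{(i)} = a_j^{(i-1)} - k_i\,a_{i-j}^{(i-1)}$ for $1 \le j \le i-1$, with $a_i^{(i)} = k_i$
  - $E^{(i)} = E^{(i-1)}(1 - k_i^2)$
- 6. If $i < p$, set $i = i+1$ and go back to step 5
- 7. Stop
- If only the $k_i$ are needed, the first two expressions in step 5 are enough. The algorithm is suitable for fixed-point calculation (since $|r(j)| \le 1$) and for hardware implementation.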
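The reflection-coefficient part of a Schur-type recursion can be sketched as follows. The sketch fixes its own convention, $q_i(j) = q_{i-1}(j) - k_i\,q_{i-1}(i-j)$ with $k_i = q_{i-1}(i)/q_{i-1}(0)$, chosen so that the resulting $k_i$ agree with the Durbin recursion. The function name `schur_reflection` and the array layout are assumptions made for illustration.

```python
def schur_reflection(R, p):
    """Reflection coefficients k_1..k_p from autocorrelations R[0..p].

    Uses only the auxiliary sequence q_i(j); no predictor coefficients
    are formed. Returns (ks, q_p(0)), where q_p(0) is the prediction
    error energy normalized by R(0).
    """
    r = [R[j] / R[0] for j in range(p + 1)]       # normalized, |r(j)| <= 1
    # q[j + p] stores q_i(j) for -p <= j <= p; start with q_0(j) = r(|j|).
    q = [r[abs(j)] for j in range(-p, p + 1)]
    ks = []
    for i in range(1, p + 1):
        k = q[i + p] / q[p]                       # k_i = q_{i-1}(i) / q_{i-1}(0)
        ks.append(k)
        new = q[:]
        for j in range(i - p, p + 1):
            new[j + p] = q[j + p] - k * q[(i - j) + p]
        q = new
    return ks, q[p]


# Hypothetical autocorrelation values, for illustration only.
ks, err = schur_reflection([1.0, 0.8, 0.5], 2)
```

Every intermediate quantity is bounded by 1 in magnitude after the normalization in the first line, which is what makes this form attractive for fixed-point arithmetic.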

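Covariance Solution of LPC (1)

- If no window is applied and the range of summation is limited instead, we get:
- $\sigma^2 = \sum_{n=0}^{N-1} \varepsilon^2(n)$
- $\varphi(k,i) = \sum_{n=0}^{N-1} x(n-k)\,x(n-i)$, $k = 1, \dots, p$, $i = 0, \dots, p$
- $\varphi(k,i) = \sum_{m=-i}^{N-i-1} x(m+(i-k))\,x(m)$, with $m = n-i$
- The equations take the following form:

$$
\begin{bmatrix}
\varphi(1,1) & \varphi(1,2) & \cdots & \varphi(1,p) \\
\varphi(2,1) & \varphi(2,2) & \cdots & \varphi(2,p) \\
\vdots & \vdots & \ddots & \vdots \\
\varphi(p,1) & \varphi(p,2) & \cdots & \varphi(p,p)
\end{bmatrix}
\begin{bmatrix} a_1 \\ a_2 \\ \vdots \\ a_p \end{bmatrix}
=
\begin{bmatrix} \varphi(1,0) \\ \varphi(2,0) \\ \vdots \\ \varphi(p,0) \end{bmatrix}
$$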

Covariance Solution of LPC (2)

- The matrix is a covariance matrix. It is symmetric and positive definite, but not Toeplitz, so the efficient Toeplitz recursions do not apply and a general-purpose method such as LU decomposition is used.
- Its advantage is that it does not produce large prediction errors at the two ends of the window. When $N$ is comparable to $P$, the estimated parameters are therefore more accurate than with the autocorrelation method.
- In speech processing, however, $N \gg P$ is usual, so the advantage is not significant.
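A minimal Python sketch of the covariance method follows. It assumes that the first `p` samples of the frame serve as the history $x(-p), \dots, x(-1)$, so the sums run over the remaining samples. The function names `solve_linear` and `covariance_lpc` are illustrative, and plain Gaussian elimination with partial pivoting stands in for the LU step.

```python
def solve_linear(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[r]] for r, row in enumerate(A)]  # augmented matrix
    for c in range(n):
        piv = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[piv] = M[piv], M[c]
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            for cc in range(c, n + 1):
                M[r][cc] -= f * M[c][cc]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(M[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (M[r][n] - s) / M[r][r]
    return x


def covariance_lpc(x, p):
    """Predictor coefficients a_1..a_p from the covariance normal equations."""
    N = len(x)

    def phi(k, i):
        # Sum only where both x(n-k) and x(n-i) lie inside the frame.
        return sum(x[n - k] * x[n - i] for n in range(p, N))

    A = [[phi(k, i) for i in range(1, p + 1)] for k in range(1, p + 1)]
    b = [phi(k, 0) for k in range(1, p + 1)]
    return solve_linear(A, b)


# Hypothetical noise-free signal obeying x(n) = 1.2 x(n-1) - 0.5 x(n-2).
x = [1.0, 1.0]
for n in range(2, 20):
    x.append(1.2 * x[n - 1] - 0.5 * x[n - 2])
a = covariance_lpc(x, 2)
```

Because no window tapers the frame, a signal that follows the all-pole recursion exactly is recovered exactly, even from a short frame.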

Burg (Lattice) Algorithm (1)

- It is an iterative procedure that directly computes the linear prediction coefficients and the reflection coefficients. It has no stability problem and needs no windowing, but its computing time is much longer than that of the autocorrelation approach.
- (1) Initialize: $f^{(0)}(m) = b^{(0)}(m) = x(m)$, $m = 0, \dots, N-1$, and $i = 1$
- (2) Compute $k_i$ and the predictor coefficients $a_j^{(i)}$:

$$
k_i = \frac{2\sum_{m=i}^{N-1} f^{(i-1)}(m)\,b^{(i-1)}(m-1)}{\sum_{m=i}^{N-1}\big[f^{(i-1)}(m)\big]^2 + \sum_{m=i}^{N-1}\big[b^{(i-1)}(m-1)\big]^2}
$$

- $a_j^{(i)} = a_j^{(i-1)} - k_i\,a_{i-j}^{(i-1)}$, $1 \le j \le i-1$, and $a_i^{(i)} = k_i$

Burg (Lattice) Algorithm (2)

- (3) Compute the forward and backward prediction errors for $m = i, \dots, N-1$:
  - $f^{(i)}(m) = f^{(i-1)}(m) - k_i\,b^{(i-1)}(m-1)$
  - $b^{(i)}(m) = b^{(i-1)}(m-1) - k_i\,f^{(i-1)}(m)$
- (4) $i = i + 1$
- (5) If $i \le p$, go to (2)
- (6) Stop. The final result is $a_j = a_j^{(p)}$, $j = 1, \dots, p$
- Its disadvantage is that it relies on the signal being stationary. Real speech is not stationary, so it sometimes does not work well, in particular for formant estimation.
- It is well suited to parameter analysis for speech synthesis.
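The lattice steps can be sketched in plain Python as below. This is an illustrative version under stated assumptions: the sums at order $i$ run over $m = i, \dots, N-1$, where both error sequences are defined, and the denominator pairs $f^{(i-1)}(m)$ with $b^{(i-1)}(m-1)$, which keeps $|k_i| \le 1$. The function name `burg` and the test signal are hypothetical.

```python
import math


def burg(x, p):
    """Burg estimate: returns (a, ks) with a = [a_1..a_p], ks = [k_1..k_p]."""
    N = len(x)
    f = list(x)   # forward prediction error  f^(i)(m)
    b = list(x)   # backward prediction error b^(i)(m)
    a = []        # predictor coefficients of the current order
    ks = []
    for i in range(1, p + 1):
        num = 2.0 * sum(f[m] * b[m - 1] for m in range(i, N))
        den = sum(f[m] ** 2 + b[m - 1] ** 2 for m in range(i, N))
        k = num / den  # assumes the error energy has not dropped to zero
        ks.append(k)
        # a_j^(i) = a_j^(i-1) - k_i a_{i-j}^(i-1), then a_i^(i) = k_i
        a = [a[j] - k * a[i - 2 - j] for j in range(i - 1)] + [k]
        f_new, b_new = f[:], b[:]
        for m in range(i, N):
            f_new[m] = f[m] - k * b[m - 1]
            b_new[m] = b[m - 1] - k * f[m]
        f, b = f_new, b_new
    return a, ks


# Hypothetical test signal: two sinusoids, 64 samples.
x = [math.sin(0.3 * n) + 0.5 * math.sin(1.1 * n) for n in range(64)]
a, ks = burg(x, 3)
```

Since $2|uv| \le u^2 + v^2$ term by term, the ratio defining each $k_i$ cannot exceed 1 in magnitude, which is why no separate stability check is needed.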

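LPC parameters and their relationships (1)

- (1) Reflection coefficients, also known as PARCOR coefficients
- If $\{a_j\}$ are known, the $k_i$ can be found as follows, for $i = p, p-1, \dots, 1$:
  - $a_j^{(p)} = a_j$, $1 \le j \le p$
  - $k_i = a_i^{(i)}$
  - $a_j^{(i-1)} = \big(a_j^{(i)} + k_i\,a_{i-j}^{(i)}\big)/(1 - k_i^2)$, $1 \le j \le i-1$
- The inverse process, for $i = 1, \dots, p$:
  - $a_i^{(i)} = k_i$
  - $a_j^{(i)} = a_j^{(i-1)} - k_i\,a_{i-j}^{(i-1)}$, $1 \le j \le i-1$
  - finally $a_j = a_j^{(p)}$, $1 \le j \le p$
- $|k_i| < 1$ for all $i$ is the necessary and sufficient condition for a stable system function.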
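Both directions of this conversion can be sketched in Python. The function names `lpc_to_reflection` and `reflection_to_lpc` are assumptions made for illustration. The step-down direction divides by $1 - k_i^2$, so it assumes $|k_i| \ne 1$.

```python
def lpc_to_reflection(a):
    """Step-down recursion: a = [a_1..a_p] -> [k_1..k_p]."""
    cur = list(a)
    p = len(cur)
    ks = [0.0] * p
    for i in range(p, 0, -1):
        k = cur[i - 1]            # k_i = a_i^(i)
        ks[i - 1] = k
        d = 1.0 - k * k
        # a_j^(i-1) = (a_j^(i) + k_i a_{i-j}^(i)) / (1 - k_i^2)
        cur = [(cur[j] + k * cur[i - 2 - j]) / d for j in range(i - 1)]
    return ks


def reflection_to_lpc(ks):
    """Step-up recursion: [k_1..k_p] -> a = [a_1..a_p]."""
    a = []
    for i, k in enumerate(ks, start=1):
        # a_j^(i) = a_j^(i-1) - k_i a_{i-j}^(i-1), then a_i^(i) = k_i
        a = [a[j] - k * a[i - 2 - j] for j in range(i - 1)] + [k]
    return a


# Hypothetical coefficients for a round trip.
a = reflection_to_lpc([0.8, -0.5])
ks = lpc_to_reflection(a)
stable = all(abs(k) < 1 for k in ks)
```

Testing `abs(k) < 1` on the step-down output gives a stability check for a set of predictor coefficients without finding any roots.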

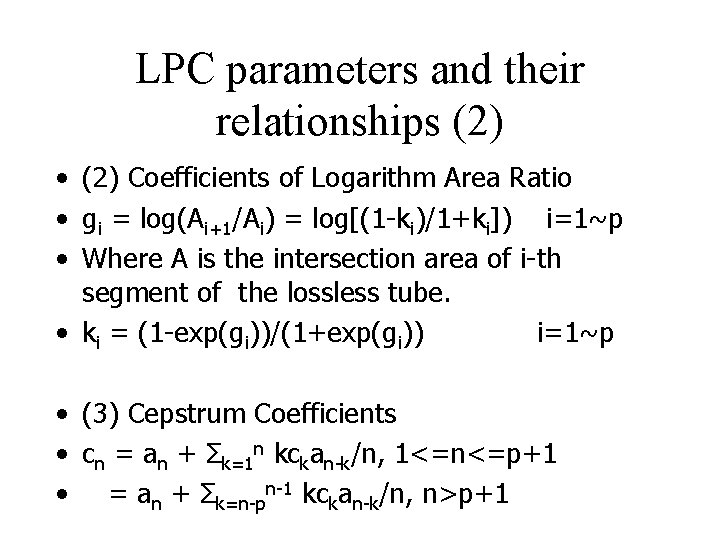

LPC parameters and their relationships (2)

- (2) Log area ratio (LAR) coefficients
- $g_i = \log(A_{i+1}/A_i) = \log\big[(1 - k_i)/(1 + k_i)\big]$, $i = 1, \dots, p$
- where $A_i$ is the cross-sectional area of the $i$-th segment of the lossless tube.
- $k_i = \big(1 - \exp(g_i)\big)/\big(1 + \exp(g_i)\big)$, $i = 1, \dots, p$
- (3) Cepstrum coefficients
- $c_n = a_n + \sum_{k=1}^{n-1} \frac{k}{n}\,c_k\,a_{n-k}$, $1 \le n \le p$
- $c_n = \sum_{k=n-p}^{n-1} \frac{k}{n}\,c_k\,a_{n-k}$, $n > p$
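These two conversions can be sketched in Python. The function names are assumptions made for illustration, and the cepstrum recursion is written for the convention $A(z) = 1 - \sum_k a_k z^{-k}$, with $a_n$ taken as zero for $n > p$.

```python
import math


def reflection_to_lar(ks):
    """g_i = log((1 - k_i) / (1 + k_i)); requires |k_i| < 1."""
    return [math.log((1.0 - k) / (1.0 + k)) for k in ks]


def lar_to_reflection(gs):
    """k_i = (1 - exp(g_i)) / (1 + exp(g_i))."""
    return [(1.0 - math.exp(g)) / (1.0 + math.exp(g)) for g in gs]


def lpc_to_cepstrum(a, n_ceps):
    """Cepstrum c_1..c_{n_ceps} of 1/A(z) from a = [a_1..a_p]."""
    p = len(a)
    c = [0.0] * (n_ceps + 1)          # c[0] is unused
    for n in range(1, n_ceps + 1):
        acc = a[n - 1] if n <= p else 0.0
        for k in range(max(1, n - p), n):
            acc += (k / n) * c[k] * a[n - k - 1]
        c[n] = acc
    return c[1:]


# Hypothetical first-order example: 1 / (1 - 0.5 z^-1) has c_n = 0.5**n / n.
ceps = lpc_to_cepstrum([0.5], 3)
ks_back = lar_to_reflection(reflection_to_lar([0.5, -0.3]))
```

The first-order case is a convenient check because the cepstrum of a single real pole is known in closed form.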

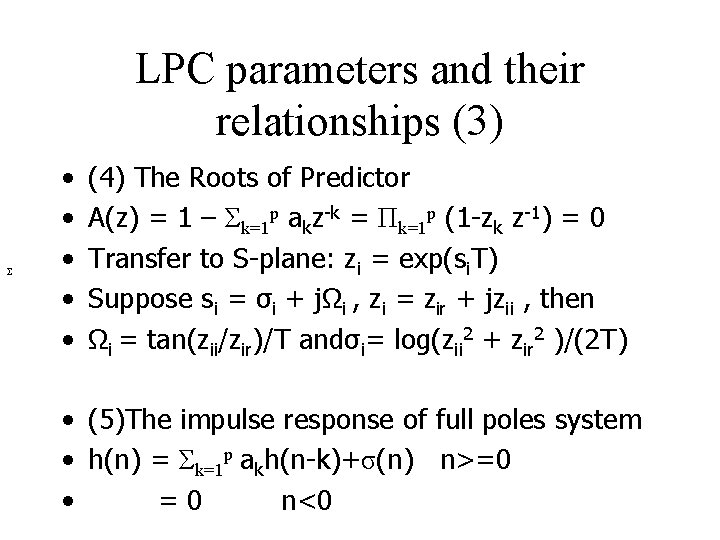

LPC parameters and their relationships (3)

- (4) The roots of the predictor
- $A(z) = 1 - \sum_{k=1}^{p} a_k z^{-k} = \prod_{k=1}^{p} (1 - z_k z^{-1}) = 0$
- Transfer to the s-plane: $z_i = \exp(s_i T)$
- Let $s_i = \sigma_i + j\Omega_i$ and $z_i = z_{ir} + j z_{ii}$. Then $\Omega_i = \arctan(z_{ii}/z_{ir})/T$ and $\sigma_i = \log(z_{ii}^2 + z_{ir}^2)/(2T)$
- (5) The impulse response of the all-pole system
- $h(n) = \sum_{k=1}^{p} a_k h(n-k) + \delta(n)$ for $n \ge 0$, and $h(n) = 0$ for $n < 0$
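The mapping from a root of $A(z)$ to the s-plane can be sketched for a second-order predictor, where the roots follow from the quadratic formula. The function names, the 8 kHz sampling rate and the pole location are assumptions made for illustration. `math.atan2` is used in place of a plain arctangent of the ratio so that the angle lands in the correct quadrant.

```python
import cmath
import math


def second_order_roots(a1, a2):
    """Roots of A(z) = 1 - a1 z^-1 - a2 z^-2, i.e. of z^2 - a1 z - a2 = 0."""
    disc = cmath.sqrt(a1 * a1 + 4.0 * a2)
    return (a1 + disc) / 2.0, (a1 - disc) / 2.0


def z_to_s(z, T):
    """Return (sigma, Omega) with z = exp((sigma + j Omega) T)."""
    omega = math.atan2(z.imag, z.real) / T
    sigma = math.log(z.real ** 2 + z.imag ** 2) / (2.0 * T)
    return sigma, omega


# Hypothetical pole pair: radius 0.9, angle pi/4, sampling period 1/8000 s.
T = 1.0 / 8000.0
radius, theta = 0.9, math.pi / 4.0
a1, a2 = 2.0 * radius * math.cos(theta), -radius ** 2
z1, z2 = second_order_roots(a1, a2)
sigma, omega = z_to_s(z1, T)
freq_hz = omega / (2.0 * math.pi)
```

Dividing $\Omega_i$ by $2\pi$ gives the resonance frequency in Hz, which is how a root is read as a formant candidate. A negative $\sigma_i$ corresponds to a root inside the unit circle.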

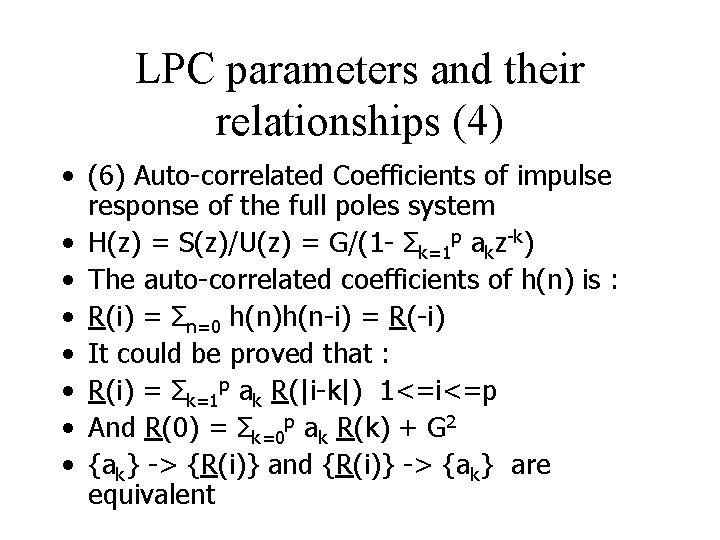

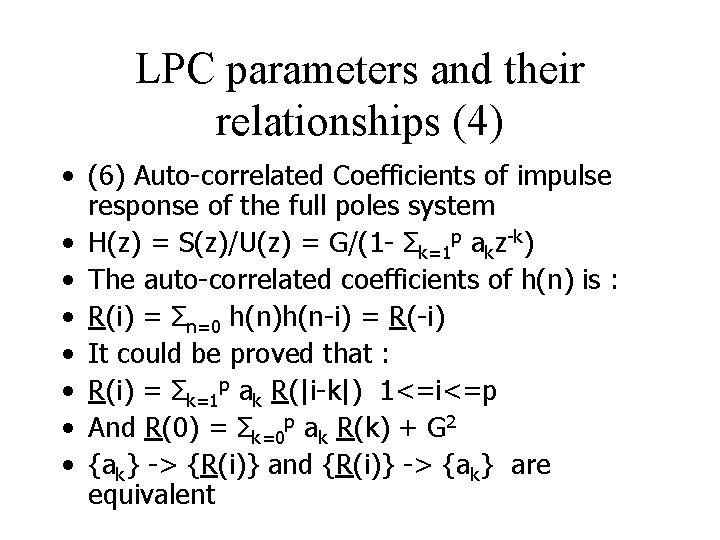

LPC parameters and their relationships (4)

- (6) Autocorrelation coefficients of the impulse response of the all-pole system
- $H(z) = S(z)/U(z) = G\big/\big(1 - \sum_{k=1}^{p} a_k z^{-k}\big)$
- The autocorrelation of $h(n)$ is:
- $R(i) = \sum_{n=0}^{\infty} h(n)\,h(n-i) = R(-i)$
- It can be proved that:
- $R(i) = \sum_{k=1}^{p} a_k R(|i-k|)$, $1 \le i \le p$
- and $R(0) = \sum_{k=1}^{p} a_k R(k) + G^2$
- The mappings $\{a_k\} \to \{R(i)\}$ and $\{R(i)\} \to \{a_k\}$ are equivalent, so either set determines the other.
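These relations can be checked numerically with a short Python sketch. It assumes the impulse response is driven by $G\,\delta(n)$, so that it corresponds to $H(z) = G/A(z)$, and it truncates the infinite sum at a length where the response has decayed. The function names and the coefficient values are hypothetical.

```python
def impulse_response(a, G, length):
    """h(n) = sum_k a_k h(n-k) + G*delta(n), for n = 0..length-1."""
    h = []
    for n in range(length):
        acc = G if n == 0 else 0.0
        for k in range(1, len(a) + 1):
            if n - k >= 0:
                acc += a[k - 1] * h[n - k]
        h.append(acc)
    return h


def autocorr(h, max_lag):
    """R(i) = sum_n h(n) h(n-i) over the available samples."""
    return [sum(h[n] * h[n - i] for n in range(i, len(h)))
            for i in range(max_lag + 1)]


# Hypothetical stable second-order system with gain G = 2.
a, G = [1.2, -0.5], 2.0
h = impulse_response(a, G, 400)
R = autocorr(h, 2)
res1 = R[1] - (a[0] * R[0] + a[1] * R[1])           # i = 1 equation
res2 = R[2] - (a[0] * R[1] + a[1] * R[0])           # i = 2 equation
res0 = R[0] - (a[0] * R[1] + a[1] * R[2] + G ** 2)  # energy equation
```

All three residuals come out at rounding-error level, which illustrates that the autocorrelation of the model's impulse response satisfies the same normal equations as the analyzed signal.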

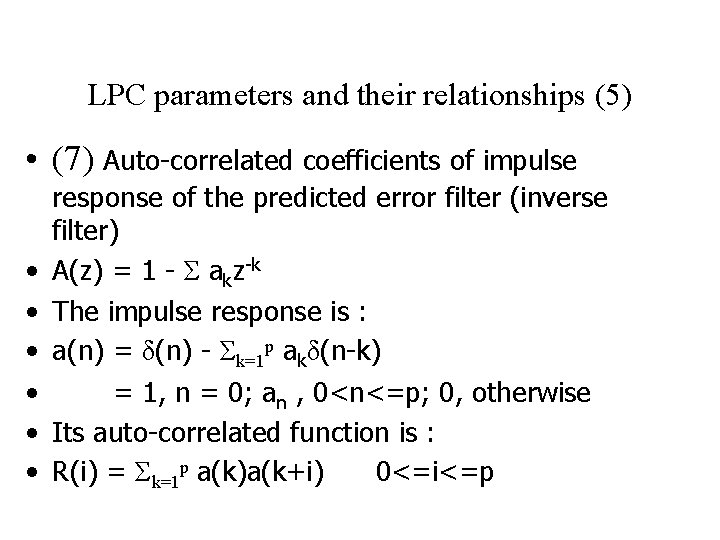

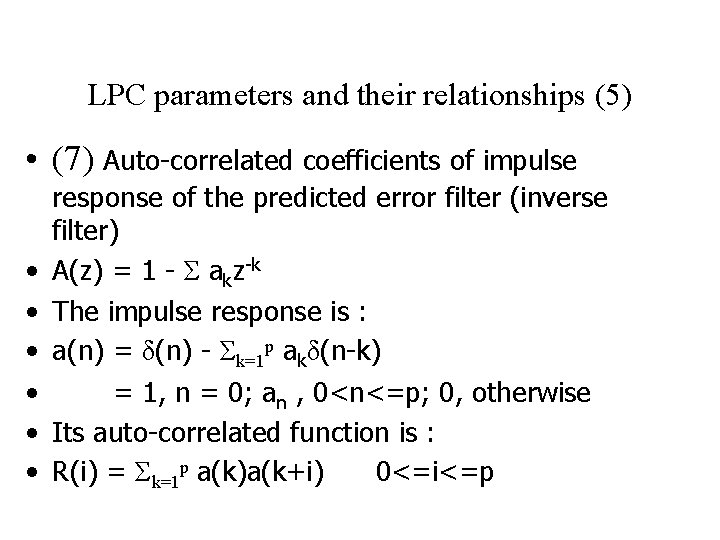

LPC parameters and their relationships (5)

- (7) Autocorrelation coefficients of the impulse response of the prediction error filter (inverse filter)
- $A(z) = 1 - \sum_{k=1}^{p} a_k z^{-k}$
- Its impulse response is $a(n) = \delta(n) - \sum_{k=1}^{p} a_k \delta(n-k)$, that is, $a(n) = 1$ for $n = 0$, $a(n) = -a_n$ for $0 < n \le p$, and $a(n) = 0$ otherwise.
- Its autocorrelation function is $R_a(i) = \sum_{k=0}^{p-i} a(k)\,a(k+i)$, $0 \le i \le p$

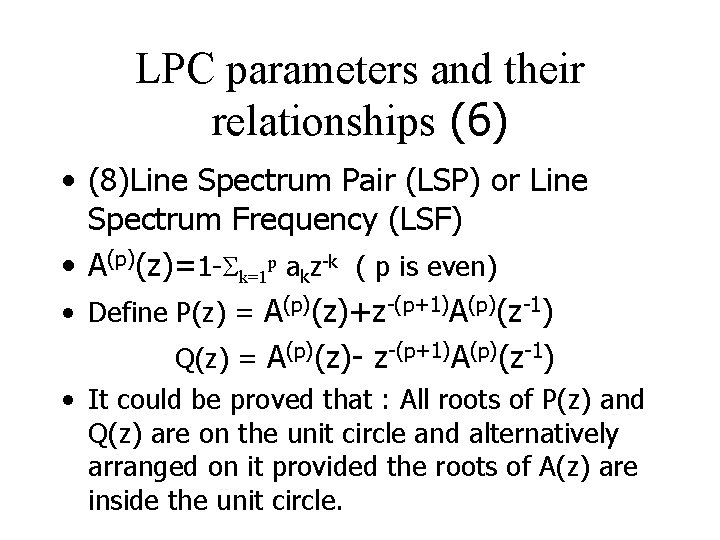

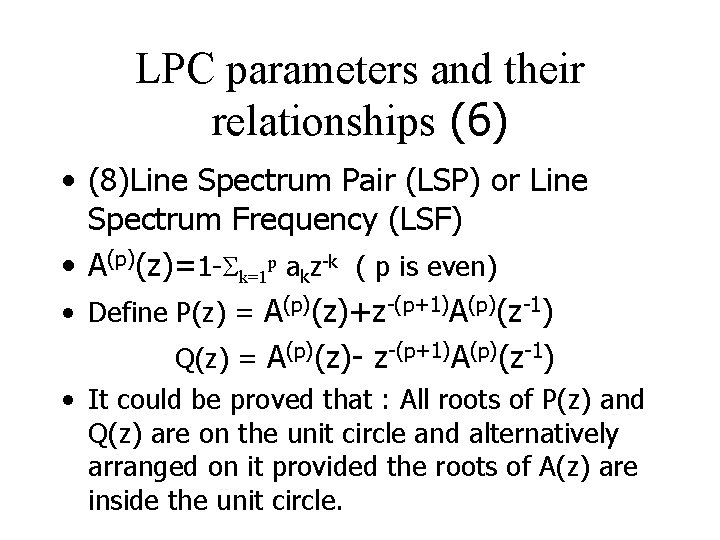

LPC parameters and their relationships (6)

- (8) Line spectrum pair (LSP) or line spectrum frequency (LSF)
- $A^{(p)}(z) = 1 - \sum_{k=1}^{p} a_k z^{-k}$ ($p$ is even)
- Define
  - $P(z) = A^{(p)}(z) + z^{-(p+1)} A^{(p)}(z^{-1})$
  - $Q(z) = A^{(p)}(z) - z^{-(p+1)} A^{(p)}(z^{-1})$
- It can be proved that all roots of $P(z)$ and $Q(z)$ lie on the unit circle and alternate along it, provided the roots of $A(z)$ are inside the unit circle.

![LPC parameters and their relationships (7)](https://slidetodoc.com/presentation_image_h/7e438b3a7364a88a0a6d66d068963b9d/image-22.jpg)

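LPC parameters and their relationships (7)

- Replace $z$ with $e^{j\omega}$ and write $A^{(p)}(e^{j\omega}) = |A^{(p)}(e^{j\omega})|\,e^{j\varphi(\omega)}$:
- $P(e^{j\omega}) = |A^{(p)}(e^{j\omega})|\,e^{j\varphi(\omega)}\big[1 + e^{-j((p+1)\omega + 2\varphi(\omega))}\big]$
- $Q(e^{j\omega}) = |A^{(p)}(e^{j\omega})|\,e^{j\varphi(\omega)}\big[1 + e^{-j((p+1)\omega + 2\varphi(\omega) + \pi)}\big]$
- If the roots of $A^{(p)}(z)$ are inside the unit circle, then as $\omega$ goes from 0 to $\pi$, $\varphi(\omega)$ starts at 0 and returns to 0, and the quantity $(p+1)\omega + 2\varphi(\omega)$ runs from 0 to $(p+1)\pi$.
- $P(e^{j\omega}) = 0$: $(p+1)\omega + 2\varphi(\omega) = k\pi$, $k = 1, 3, \dots, p+1$
- $Q(e^{j\omega}) = 0$: $(p+1)\omega + 2\varphi(\omega) = k\pi$, $k = 0, 2, \dots, p$
- The roots of $P$ and $Q$ are $z_k = e^{j\omega_k}$ with $(p+1)\omega_k + 2\varphi(\omega_k) = k\pi$, $k = 0, 1, 2, \dots, p+1$
- and $\omega_0 < \omega_1 < \omega_2 < \dots < \omega_{p+1}$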

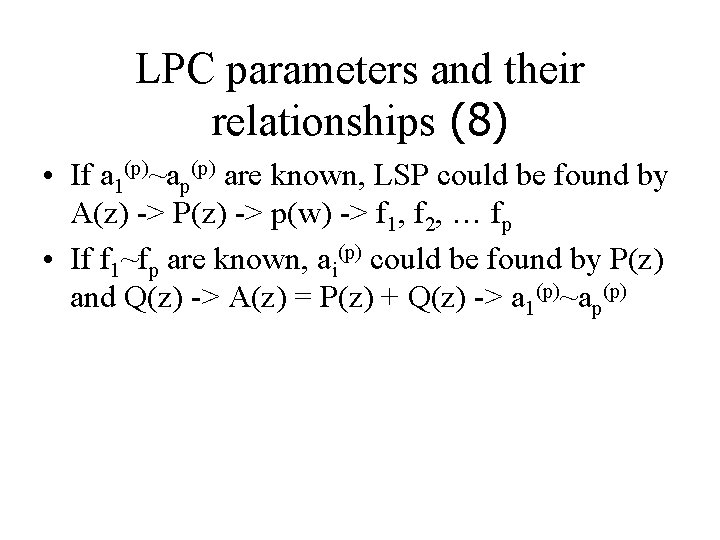

LPC parameters and their relationships (8)

- If $a_1^{(p)}, \dots, a_p^{(p)}$ are known, the LSP can be found by $A(z) \to P(z), Q(z) \to P(e^{j\omega}), Q(e^{j\omega}) \to f_1, f_2, \dots, f_p$
- If $f_1, \dots, f_p$ are known, the $a_i^{(p)}$ can be found by $P(z)$ and $Q(z) \to A(z) = [P(z) + Q(z)]/2 \to a_1^{(p)}, \dots, a_p^{(p)}$
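The forward direction, from predictor coefficients to line spectrum frequencies, can be sketched in plain Python. This is one possible approach and is not taken from the slides: it builds $P(z)$ and $Q(z)$ for $A(z) = 1 - \sum_k a_k z^{-k}$, removes the linear phase so that each polynomial becomes a real function of $\omega$, and locates sign changes on a grid with bisection refinement. The function names, the grid size and the example coefficients are assumptions.

```python
import math


def lsp_polynomials(a):
    """Coefficients of P(z) and Q(z) in powers of z^-1, from a = [a_1..a_p]."""
    A = [1.0] + [-ak for ak in a]
    A_ext = A + [0.0]                  # A(z) padded to degree p+1
    A_rev = [0.0] + A[::-1]            # z^-(p+1) * A(1/z)
    P = [u + v for u, v in zip(A_ext, A_rev)]
    Q = [u - v for u, v in zip(A_ext, A_rev)]
    return P, Q


def _amplitude(c, w, antisymmetric):
    # With the linear phase removed, a symmetric polynomial is a cosine sum
    # and an antisymmetric one is a sine sum; both are real in w.
    M = len(c) - 1
    trig = math.sin if antisymmetric else math.cos
    return sum(cn * trig(w * (M / 2.0 - n)) for n, cn in enumerate(c))


def _zeros(c, antisymmetric, grid=512):
    """Zeros in the open interval (0, pi), found by sign change + bisection."""
    found = []
    prev_w = math.pi / grid
    prev_v = _amplitude(c, prev_w, antisymmetric)
    for i in range(2, grid):
        w = math.pi * i / grid
        v = _amplitude(c, w, antisymmetric)
        if prev_v * v < 0:
            lo, hi, f_lo = prev_w, w, prev_v
            for _ in range(60):
                mid = (lo + hi) / 2.0
                f_mid = _amplitude(c, mid, antisymmetric)
                if f_lo * f_mid <= 0:
                    hi = mid
                else:
                    lo, f_lo = mid, f_mid
            found.append((lo + hi) / 2.0)
        prev_w, prev_v = w, v
    return found


def lpc_to_lsf(a):
    """Line spectrum frequencies in radians, sorted ascending."""
    P, Q = lsp_polynomials(a)
    return sorted(_zeros(P, False) + _zeros(Q, True))


# Hypothetical stable second-order predictor.
lsf = lpc_to_lsf([1.2, -0.5])
```

The fixed roots at $\omega = 0$ (from $Q$) and $\omega = \pi$ (from $P$) are excluded by scanning only the open interval, which leaves $p$ frequencies. A coarse grid can miss two roots that fall within one grid cell, so the grid size is a trade-off in this sketch.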