CHAPTER 6 CPU SCHEDULING CGS 3763 Operating System

- Slides: 37

CHAPTER 6 CPU SCHEDULING CGS 3763 - Operating System Concepts UCF, Spring 2004

OVERVIEW

- CPU/IO Burst Cycle
- Scheduling Criteria
- Scheduling Algorithms
  - First Come, First Served (FCFS)
  - Priority Scheduling
  - Shortest Job First
  - Round Robin
  - Multi-Level Queuing
- Scheduling for Multi-Processors
- Scheduling for Real-Time Systems
- Evaluating Scheduling Algorithms
  - Deterministic Modeling
  - Queuing Models
  - Simulations

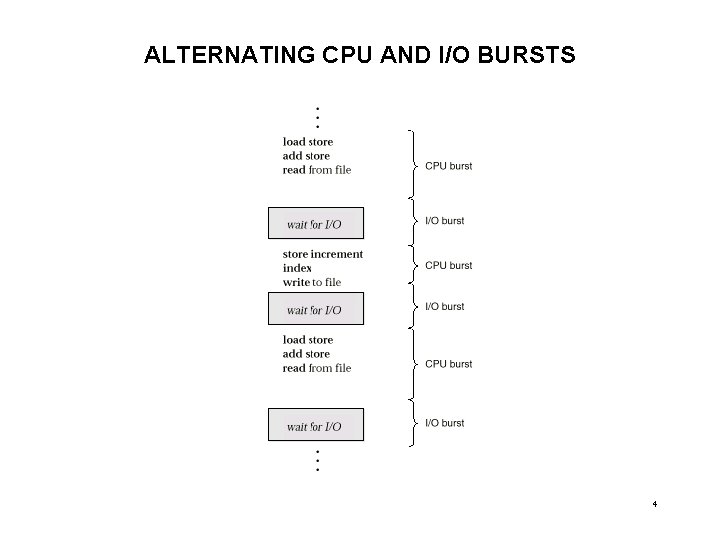

BASIC CONCEPTS

- OS scheduling occurs in several places:
  - Long Term: Determining when jobs can enter the system
  - Short Term: Determining which process gets the CPU
  - Medium Term: Used to balance the process mix
- This chapter focuses on short-term, or CPU, scheduling
- The objective is to maximize CPU utilization
  - Primarily applies to multiprogramming systems
  - Also increases memory and I/O device utilization
- Multiprogramming requires that the CPU be shared among numerous processes
- This sharing is based in part on the concept of the CPU–I/O Burst Cycle
  - Process execution consists of a cycle of CPU execution followed by an I/O operation and waiting
  - This fact allows us to share the CPU during I/O operations

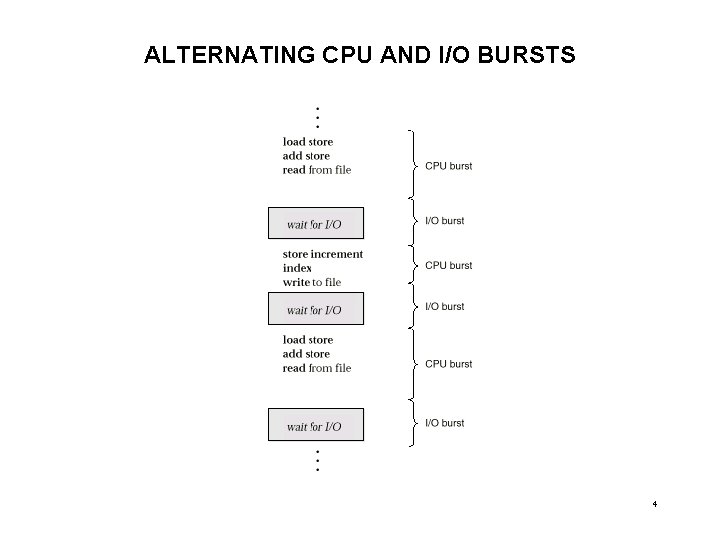

ALTERNATING CPU AND I/O BURSTS

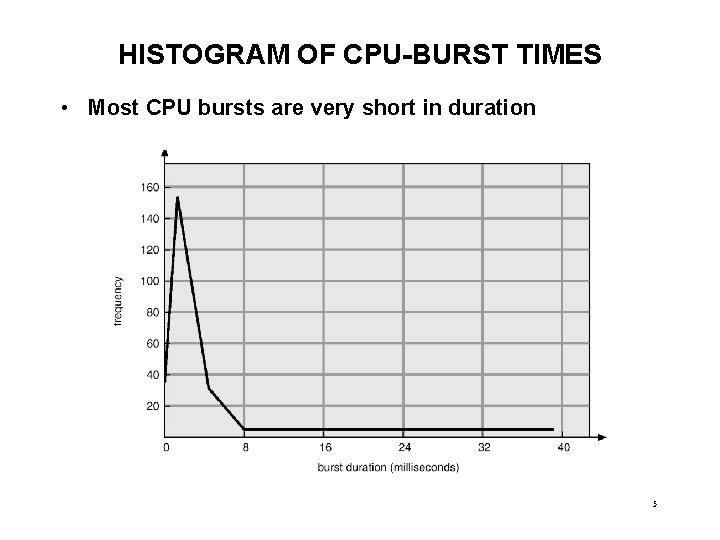

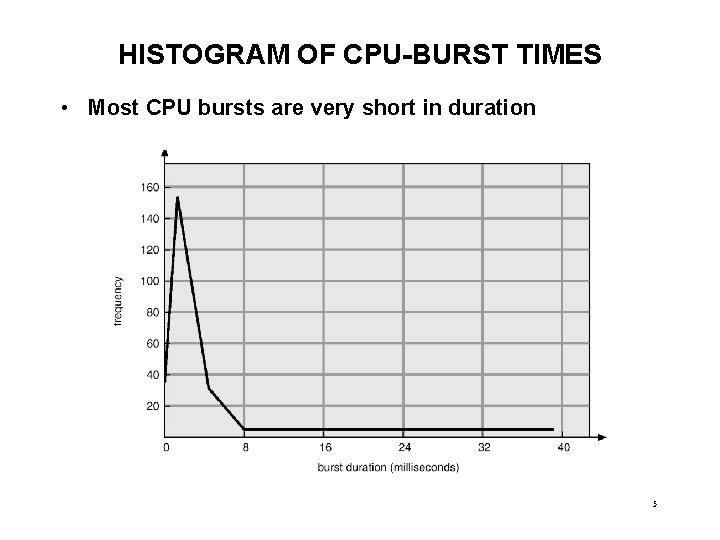

HISTOGRAM OF CPU-BURST TIMES

- Most CPU bursts are very short in duration

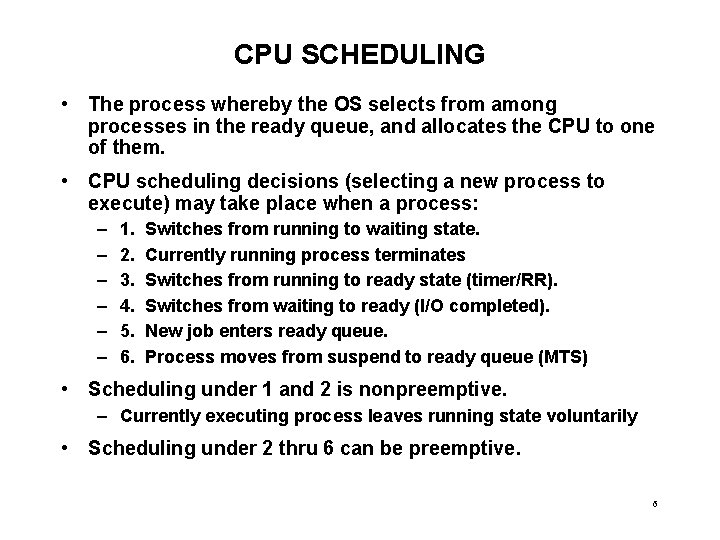

CPU SCHEDULING

- The process whereby the OS selects from among the processes in the ready queue and allocates the CPU to one of them.
- CPU scheduling decisions (selecting a new process to execute) may take place when:
  1. A process switches from the running to the waiting state.
  2. The currently running process terminates.
  3. A process switches from the running to the ready state (timer/RR).
  4. A process switches from the waiting to the ready state (I/O completed).
  5. A new job enters the ready queue.
  6. A process moves from the suspend queue to the ready queue (MTS).
- Scheduling under 1 and 2 is nonpreemptive.
  - The currently executing process leaves the running state voluntarily
- Scheduling under 3 through 6 can be preemptive.

DISPATCHER

- Dispatcher is the term given to the OS module that assigns control of the CPU to the process selected by the short-term scheduler.
- The dispatcher performs the following:
  - switching context from one process to another
  - switching to user mode after the switch is made
  - jumping to the proper location in the user program to restart that program
- Dispatch latency: the time it takes for the dispatcher to stop one process and start another running.
  - The longer the dispatch latency, the less time is available for the CPU to perform useful work.

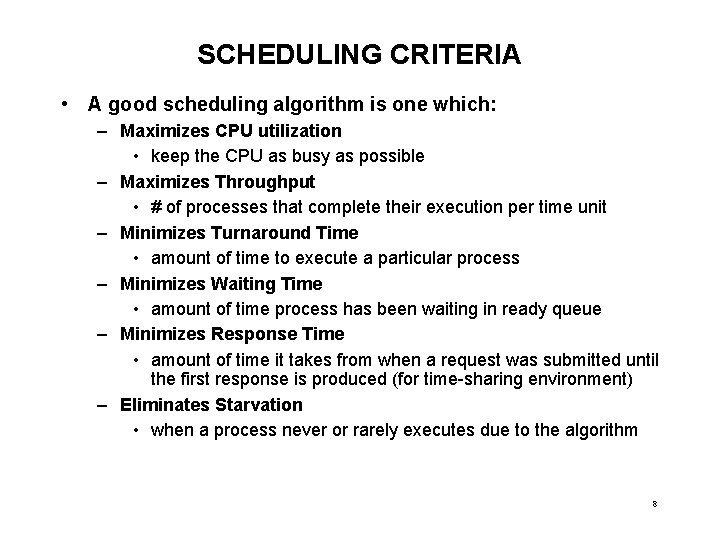

SCHEDULING CRITERIA

- A good scheduling algorithm is one which:
  - Maximizes CPU utilization
    - keeps the CPU as busy as possible
  - Maximizes Throughput
    - number of processes that complete their execution per time unit
  - Minimizes Turnaround Time
    - total amount of time to execute a particular process, from submission to completion
  - Minimizes Waiting Time
    - amount of time a process has been waiting in the ready queue
  - Minimizes Response Time
    - amount of time from when a request was submitted until the first response is produced (for time-sharing environments)
  - Eliminates Starvation
    - when a process never or rarely executes due to the algorithm

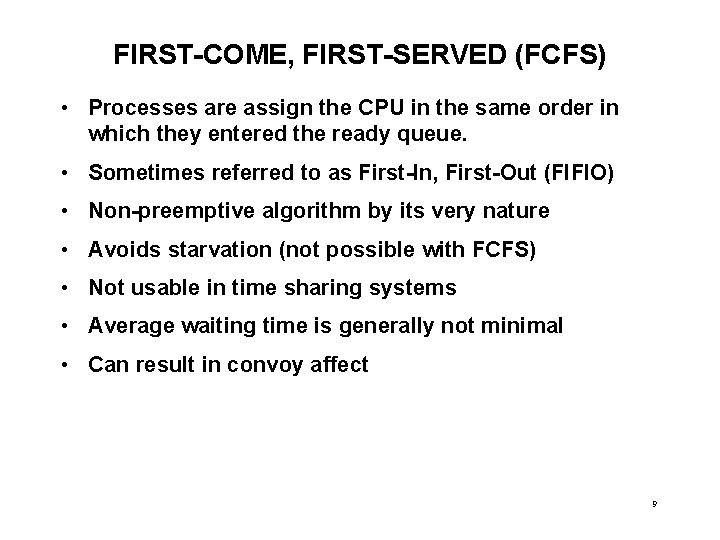

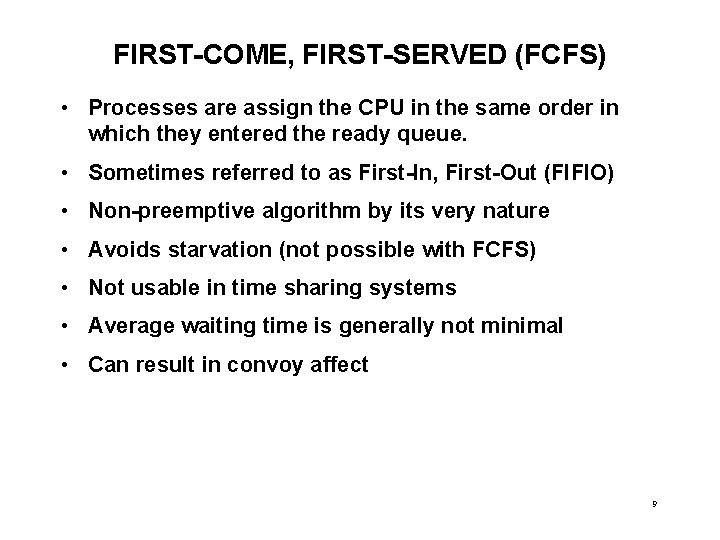

FIRST-COME, FIRST-SERVED (FCFS)

- Processes are assigned the CPU in the same order in which they entered the ready queue.
- Sometimes referred to as First-In, First-Out (FIFO)
- Non-preemptive algorithm by its very nature
- Avoids starvation (starvation is not possible with FCFS)
- Not usable in time-sharing systems
- Average waiting time is generally not minimal
- Can result in the convoy effect

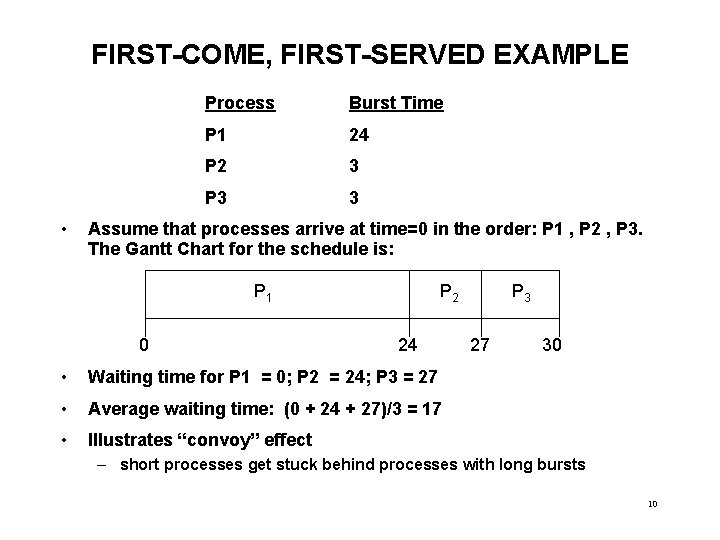

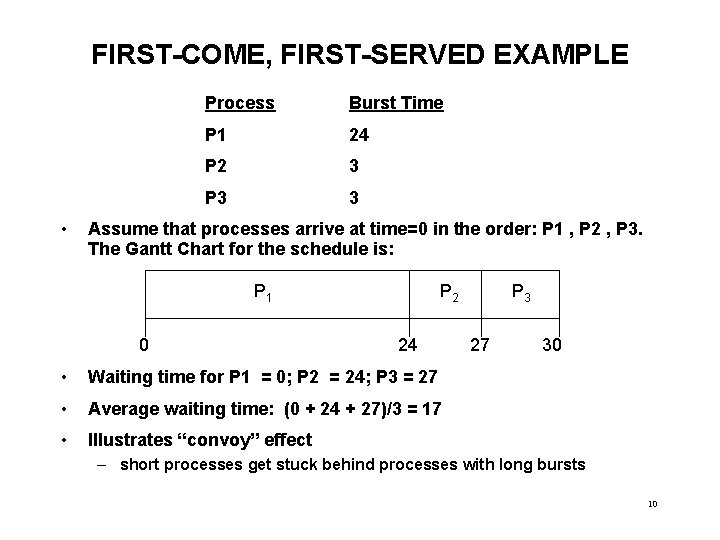

FIRST-COME, FIRST-SERVED EXAMPLE

| Process | Burst Time |
|---|---|
| P1 | 24 |
| P2 | 3 |
| P3 | 3 |

- Assume that the processes arrive at time = 0 in the order: P1, P2, P3.
- The Gantt chart for the schedule is:

| P1 | P2 | P3 |
|---|---|---|
| 0-24 | 24-27 | 27-30 |

- Waiting time for P1 = 0; P2 = 24; P3 = 27
- Average waiting time: (0 + 24 + 27)/3 = 17
- Illustrates the “convoy” effect
  - short processes get stuck behind processes with long bursts

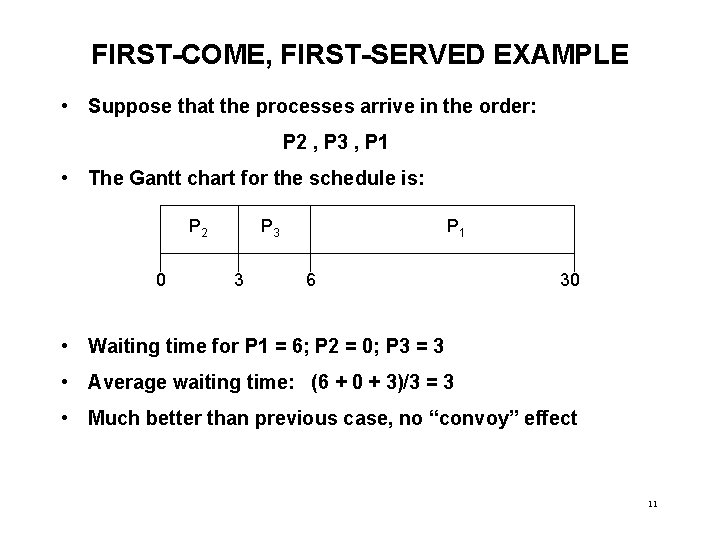

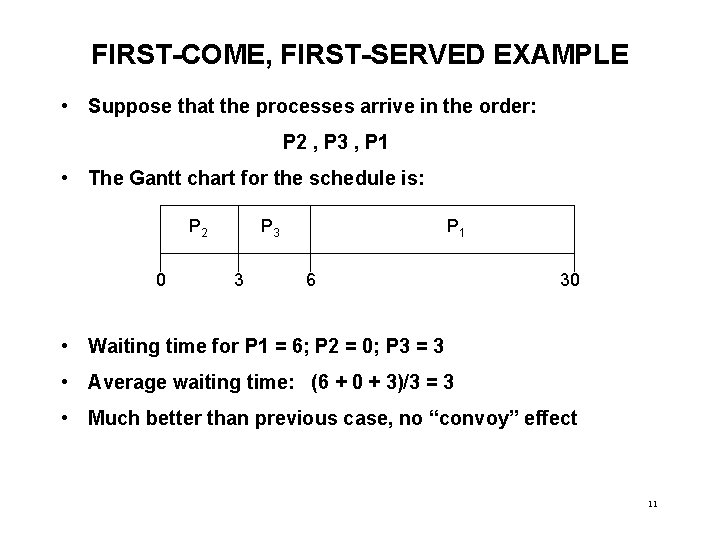

FIRST-COME, FIRST-SERVED EXAMPLE

- Suppose that the processes arrive in the order: P2, P3, P1
- The Gantt chart for the schedule is:

| P2 | P3 | P1 |
|---|---|---|
| 0-3 | 3-6 | 6-30 |

- Waiting time for P1 = 6; P2 = 0; P3 = 3
- Average waiting time: (6 + 0 + 3)/3 = 3
- Much better than the previous case, no “convoy” effect
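The two FCFS examples can be checked with a few lines of Python. This is a minimal sketch that assumes every process arrives at time 0 and runs its whole burst without interruption; the function names `fcfs_waiting_times` and `average` are illustrative and do not come from the slides.

```python
def fcfs_waiting_times(bursts):
    """Return {name: waiting time} for processes that all arrive at time 0.

    `bursts` is a list of (name, burst_time) pairs in arrival order.
    """
    waits = {}
    clock = 0
    for name, burst in bursts:
        waits[name] = clock   # time spent in the ready queue before running
        clock += burst        # nonpreemptive: the whole burst runs at once
    return waits


def average(values):
    values = list(values)
    return sum(values) / len(values)


order_a = [("P1", 24), ("P2", 3), ("P3", 3)]   # long job first (convoy effect)
order_b = [("P2", 3), ("P3", 3), ("P1", 24)]   # short jobs first

waits_a = fcfs_waiting_times(order_a)
waits_b = fcfs_waiting_times(order_b)
print(waits_a, average(waits_a.values()))   # average 17.0
print(waits_b, average(waits_b.values()))   # average 3.0
```

The only difference between the two runs is the arrival order, which is why FCFS gives no guarantee about average waiting time.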

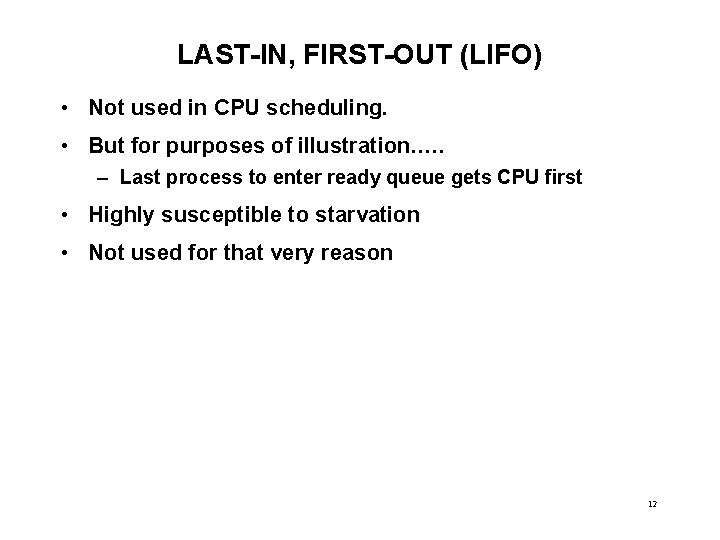

LAST-IN, FIRST-OUT (LIFO)

- Not used in CPU scheduling.
- But for purposes of illustration:
  - The last process to enter the ready queue gets the CPU first
- Highly susceptible to starvation
- Not used for that very reason

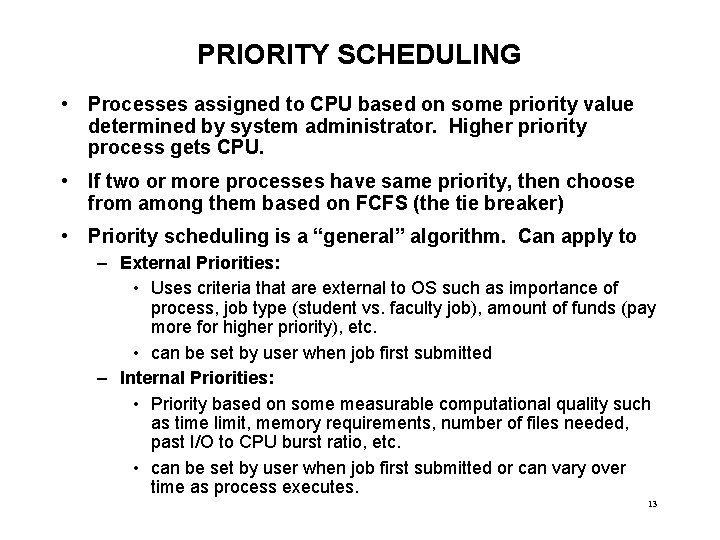

PRIORITY SCHEDULING

- Processes are assigned to the CPU based on some priority value determined by the system administrator. The higher-priority process gets the CPU.
- If two or more processes have the same priority, then choose from among them based on FCFS (the tie breaker)
- Priority scheduling is a “general” algorithm. It can apply to:
  - External Priorities:
    - Use criteria that are external to the OS, such as importance of the process, job type (student vs. faculty job), amount of funds (pay more for higher priority), etc.
    - Can be set by the user when the job is first submitted
  - Internal Priorities:
    - Priority is based on some measurable computational quality such as time limit, memory requirements, number of files needed, past I/O to CPU burst ratio, etc.
    - Can be set by the user when the job is first submitted or can vary over time as the process executes.

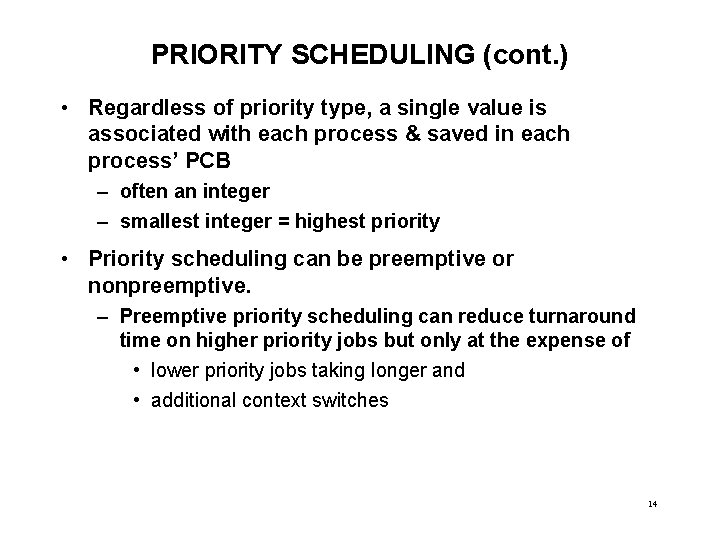

PRIORITY SCHEDULING (cont.)

- Regardless of priority type, a single value is associated with each process and saved in each process’ PCB
  - often an integer
  - smallest integer = highest priority
- Priority scheduling can be preemptive or nonpreemptive.
  - Preemptive priority scheduling can reduce turnaround time on higher-priority jobs, but only at the expense of
    - lower-priority jobs taking longer and
    - additional context switches

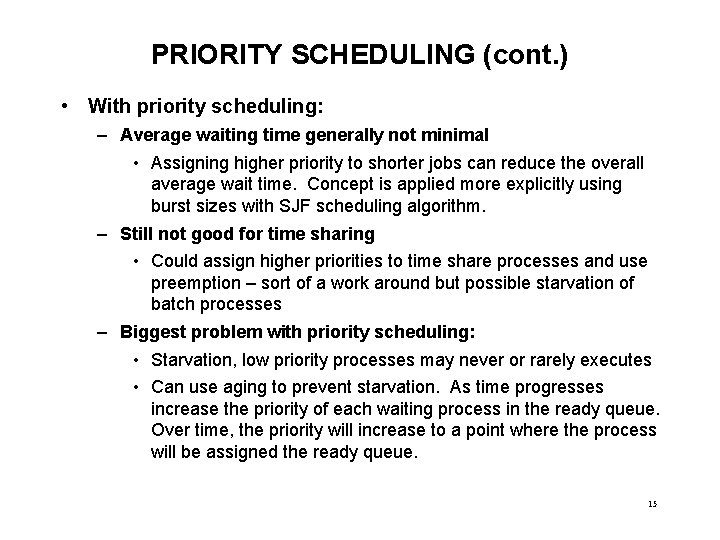

PRIORITY SCHEDULING (cont.)

- With priority scheduling:
  - Average waiting time is generally not minimal
    - Assigning higher priority to shorter jobs can reduce the overall average wait time. This concept is applied more explicitly, using burst sizes, with the SJF scheduling algorithm.
  - Still not good for time sharing
    - Could assign higher priorities to time-share processes and use preemption: a workaround of sorts, but with possible starvation of batch processes
  - Biggest problem with priority scheduling:
    - Starvation: low-priority processes may never or rarely execute
    - Can use aging to prevent starvation. As time progresses, increase the priority of each waiting process in the ready queue. Over time, the priority will increase to a point where the process will be assigned the CPU.
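Aging can be sketched as follows. This is only an illustration under stated assumptions: priorities are integers with the smallest value being the highest priority, every process left waiting is aged by a fixed step each time the scheduler picks a job, and the names `pick_with_aging`, `age_step`, `batch` and `i1`..`i5` are hypothetical.

```python
def pick_with_aging(ready, age_step=1):
    """Pick the next process and age the ones left waiting.

    `ready` is a list of dicts with keys "name" and "priority"
    (smaller number = higher priority). The list is modified in place.
    """
    # min() returns the first minimal index, so ties fall back to FCFS order
    idx = min(range(len(ready)), key=lambda i: ready[i]["priority"])
    chosen = ready.pop(idx)
    for proc in ready:
        # waiting raises priority, i.e. lowers the number (never below 0)
        proc["priority"] = max(0, proc["priority"] - age_step)
    return chosen


# A low-priority batch job competes with a steady stream of priority-1 jobs.
ready = [{"name": "batch", "priority": 5}]
order = []
for round_no in range(1, 6):
    ready.append({"name": f"i{round_no}", "priority": 1})
    order.append(pick_with_aging(ready)["name"])
print(order)   # ['i1', 'i2', 'i3', 'i4', 'batch']
```

Without the aging loop the batch job would lose to every new arrival indefinitely; with it, the batch job reaches priority 1 after four rounds and wins the FCFS tie in the fifth.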

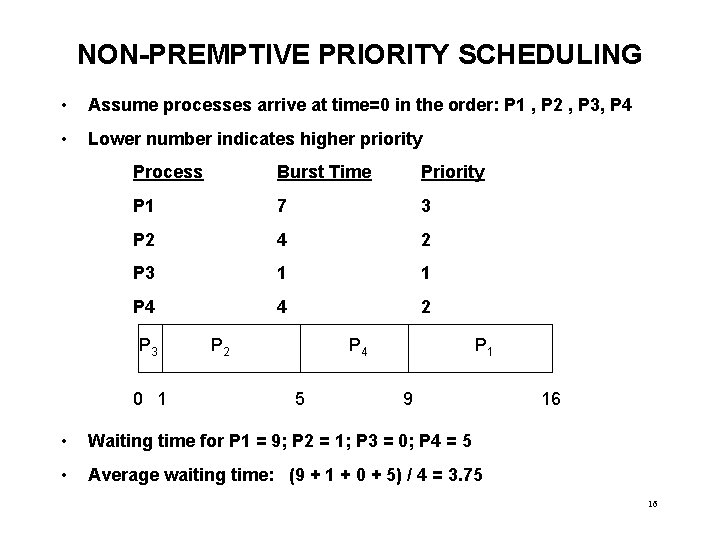

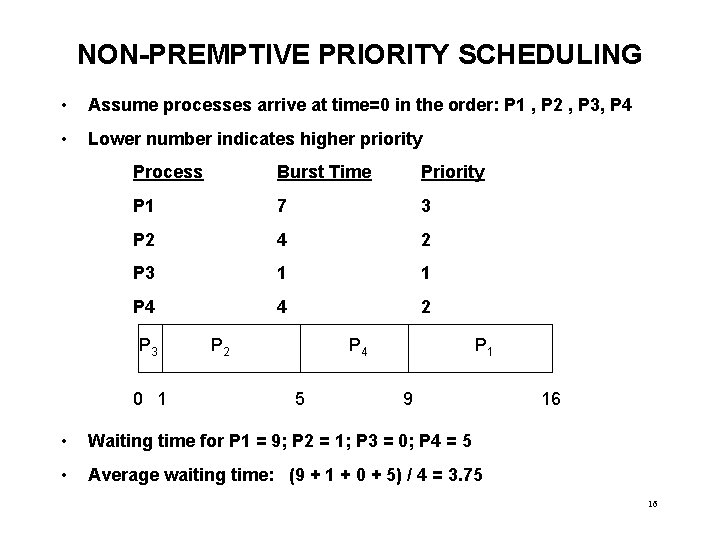

NON-PREEMPTIVE PRIORITY SCHEDULING

- Assume processes arrive at time = 0 in the order: P1, P2, P3, P4
- Lower number indicates higher priority

| Process | Burst Time | Priority |
|---|---|---|
| P1 | 7 | 3 |
| P2 | 4 | 2 |
| P3 | 1 | 1 |
| P4 | 4 | 2 |

Gantt chart:

| P3 | P2 | P4 | P1 |
|---|---|---|---|
| 0-1 | 1-5 | 5-9 | 9-16 |

- Waiting time for P1 = 9; P2 = 1; P3 = 0; P4 = 5
- Average waiting time: (9 + 1 + 0 + 5) / 4 = 3.75
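The schedule above can be reproduced with a short sketch. It assumes all processes arrive at time 0 in list order and that ties are broken FCFS; the function name `priority_schedule` is illustrative.

```python
def priority_schedule(procs):
    """Nonpreemptive priority scheduling for processes arriving at time 0.

    `procs` is a list of (name, burst, priority) in arrival order;
    a lower priority number means higher priority.
    """
    # sorted() is stable, so equal priorities keep their arrival (FCFS) order
    order = sorted(procs, key=lambda p: p[2])
    clock, gantt, waits = 0, [], {}
    for name, burst, _priority in order:
        waits[name] = clock
        gantt.append((name, clock, clock + burst))
        clock += burst
    return gantt, waits


procs = [("P1", 7, 3), ("P2", 4, 2), ("P3", 1, 1), ("P4", 4, 2)]
gantt, waits = priority_schedule(procs)
print(gantt)   # [('P3', 0, 1), ('P2', 1, 5), ('P4', 5, 9), ('P1', 9, 16)]
print(sum(waits.values()) / len(waits))   # 3.75
```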

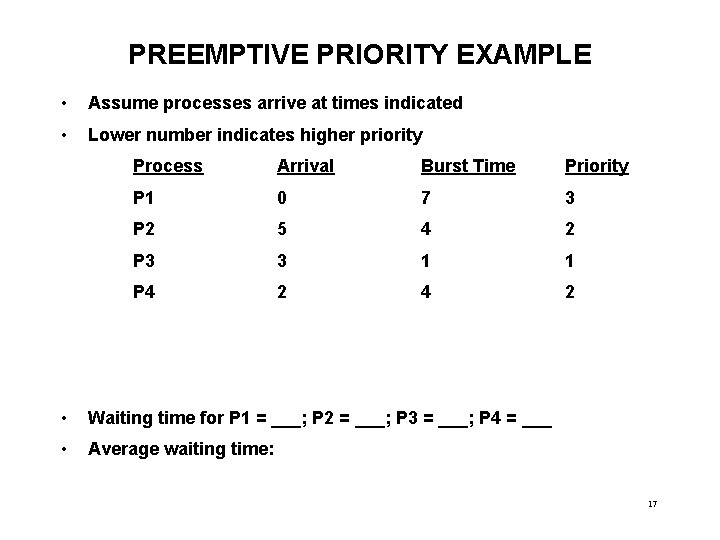

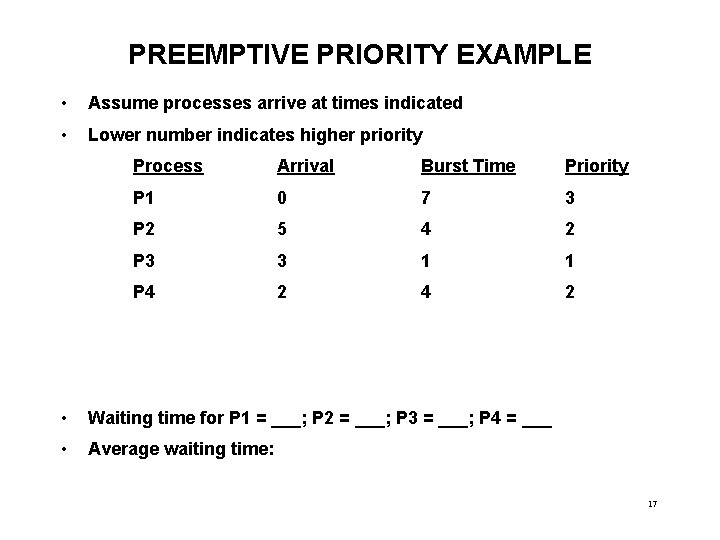

PREEMPTIVE PRIORITY EXAMPLE

- Assume processes arrive at the times indicated
- Lower number indicates higher priority
- Process (Arrival, Burst Time, Priority):
  - P1: 0, 7, 3
  - P2: 5, 4, 2
  - P3: 3, 1, 1
  - P4: 2
- Waiting time for P1 = ___; P2 = ___; P3 = ___; P4 = ___
- Average waiting time:

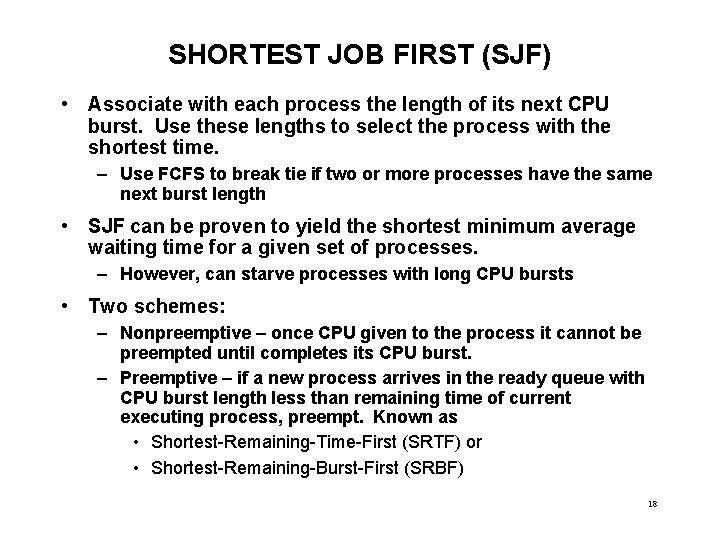

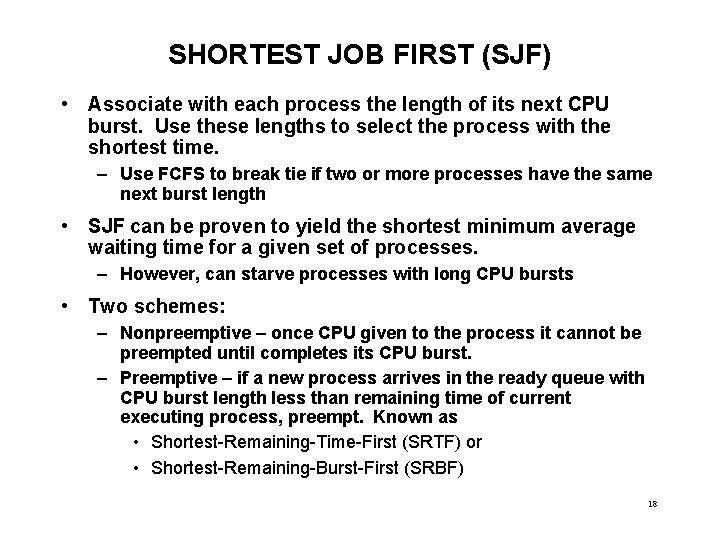

SHORTEST JOB FIRST (SJF)

- Associate with each process the length of its next CPU burst. Use these lengths to select the process with the shortest time.
  - Use FCFS to break the tie if two or more processes have the same next burst length
- SJF can be proven to yield the minimum average waiting time for a given set of processes.
  - However, it can starve processes with long CPU bursts
- Two schemes:
  - Nonpreemptive: once the CPU is given to a process, it cannot be preempted until it completes its CPU burst.
  - Preemptive: if a new process arrives in the ready queue with a CPU burst length less than the remaining time of the currently executing process, preempt. Known as
    - Shortest-Remaining-Time-First (SRTF) or
    - Shortest-Remaining-Burst-First (SRBF)

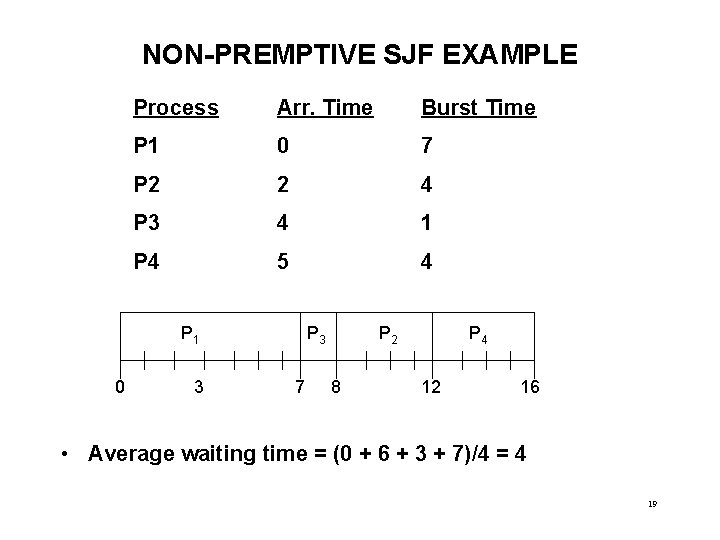

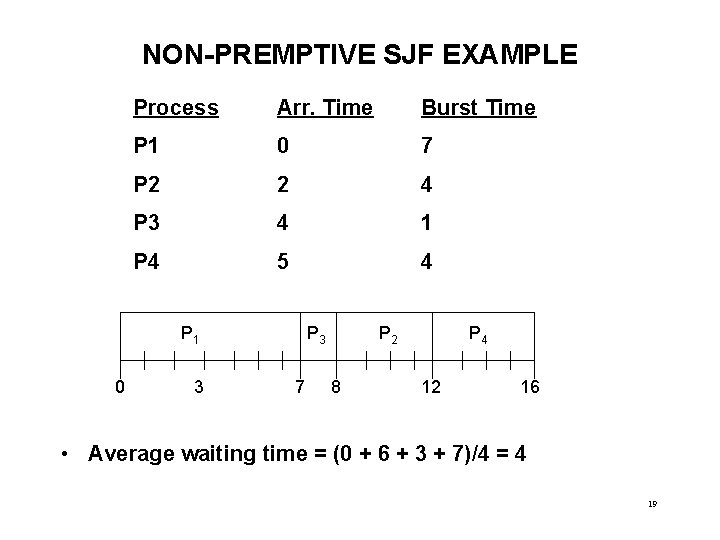

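NON-PREEMPTIVE SJF EXAMPLE

| Process | Arrival Time | Burst Time |
|---|---|---|
| P1 | 0 | 7 |
| P2 | 2 | 4 |
| P3 | 4 | 1 |
| P4 | 5 | 4 |

Gantt chart:

| P1 | P3 | P2 | P4 |
|---|---|---|---|
| 0-7 | 7-8 | 8-12 | 12-16 |

- Average waiting time = (0 + 6 + 3 + 7)/4 = 4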
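A minimal sketch of nonpreemptive SJF that reproduces this example. It assumes the burst lengths are known in advance (in practice they must be estimated) and that ties are broken by arrival time; the function name `sjf_nonpreemptive` is illustrative.

```python
def sjf_nonpreemptive(procs):
    """procs: list of (name, arrival, burst). Returns (gantt, waits)."""
    pending = list(procs)
    clock, gantt, waits = 0, [], {}
    while pending:
        ready = [p for p in pending if p[1] <= clock]
        if not ready:
            clock = min(p[1] for p in pending)   # CPU idles until next arrival
            continue
        # shortest burst first; earlier arrival breaks ties (FCFS)
        job = min(ready, key=lambda p: (p[2], p[1]))
        name, arrival, burst = job
        waits[name] = clock - arrival
        gantt.append((name, clock, clock + burst))
        clock += burst                            # runs to completion
        pending.remove(job)
    return gantt, waits


procs = [("P1", 0, 7), ("P2", 2, 4), ("P3", 4, 1), ("P4", 5, 4)]
gantt, waits = sjf_nonpreemptive(procs)
print(gantt)   # [('P1', 0, 7), ('P3', 7, 8), ('P2', 8, 12), ('P4', 12, 16)]
print(sum(waits.values()) / len(waits))   # 4.0
```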

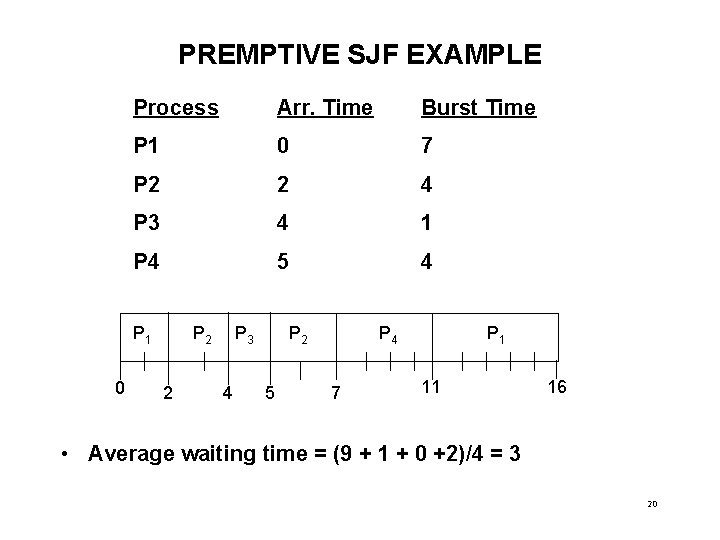

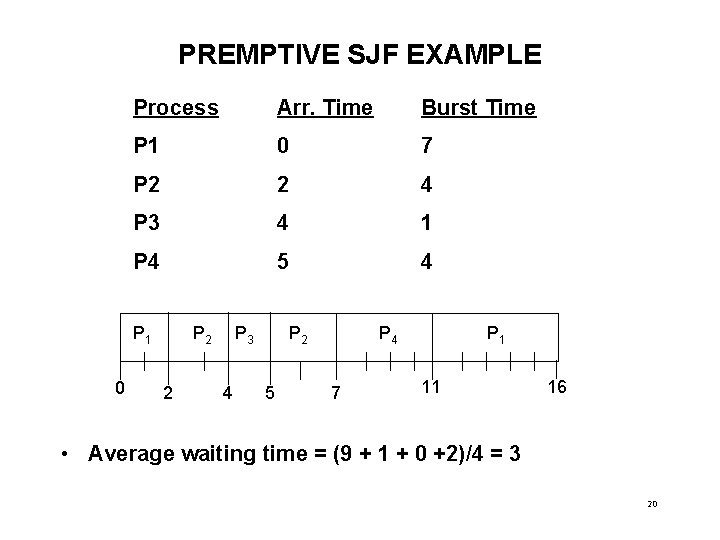

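PREEMPTIVE SJF EXAMPLE

| Process | Arrival Time | Burst Time |
|---|---|---|
| P1 | 0 | 7 |
| P2 | 2 | 4 |
| P3 | 4 | 1 |
| P4 | 5 | 4 |

Gantt chart:

| P1 | P2 | P3 | P2 | P4 | P1 |
|---|---|---|---|---|---|
| 0-2 | 2-4 | 4-5 | 5-7 | 7-11 | 11-16 |

- Average waiting time = (9 + 1 + 0 + 2)/4 = 3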
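The preemptive variant (SRTF) can be sketched by stepping the clock one time unit at a time and re-evaluating the choice at every step. The sketch assumes integer arrival and burst times and breaks ties by arrival time, which is a simplification; the function name `srtf` is illustrative.

```python
def srtf(procs):
    """procs: list of (name, arrival, burst) with integer times."""
    arrival = {name: arr for name, arr, _burst in procs}
    burst = {name: b for name, _arr, b in procs}
    remaining = dict(burst)
    clock, gantt, finish = 0, [], {}
    while remaining:
        ready = [n for n in remaining if arrival[n] <= clock]
        if not ready:
            clock += 1                      # CPU idle for this time unit
            continue
        # least remaining time first; earlier arrival breaks ties
        current = min(ready, key=lambda n: (remaining[n], arrival[n]))
        if gantt and gantt[-1][0] == current and gantt[-1][2] == clock:
            gantt[-1] = (current, gantt[-1][1], clock + 1)   # extend segment
        else:
            gantt.append((current, clock, clock + 1))        # new segment
        remaining[current] -= 1
        clock += 1
        if remaining[current] == 0:
            del remaining[current]
            finish[current] = clock
    # waiting time = turnaround time minus time actually spent on the CPU
    waits = {n: finish[n] - arrival[n] - burst[n] for n in finish}
    return gantt, waits


procs = [("P1", 0, 7), ("P2", 2, 4), ("P3", 4, 1), ("P4", 5, 4)]
gantt, waits = srtf(procs)
print(gantt)
print(sum(waits.values()) / len(waits))   # 3.0
```

Compared with the nonpreemptive run on the same workload, the average wait drops from 4 to 3 at the cost of two extra context switches.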

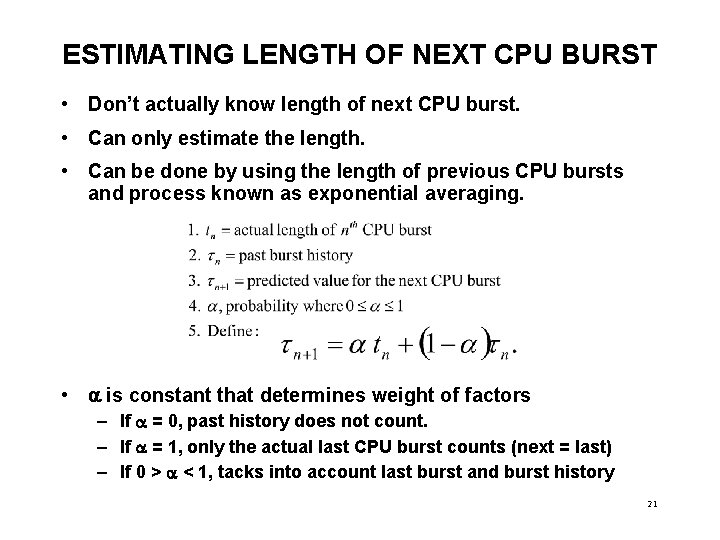

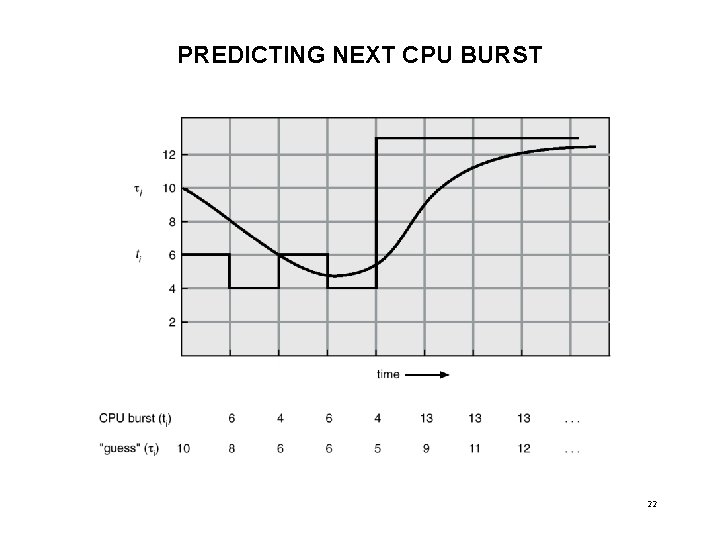

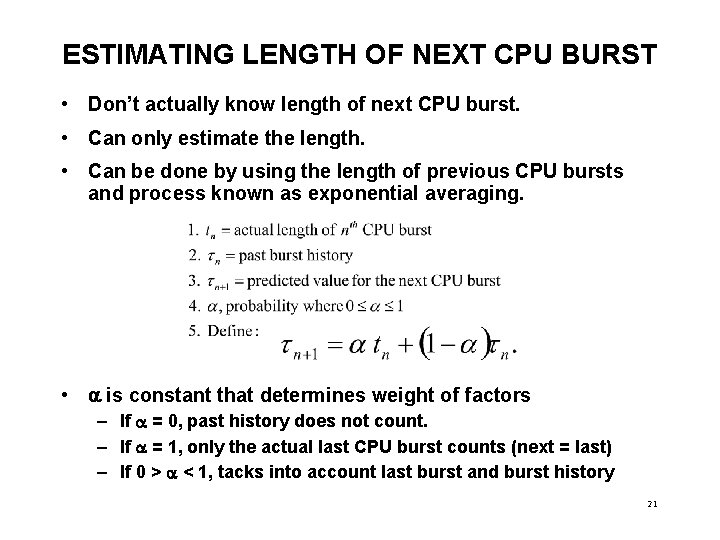

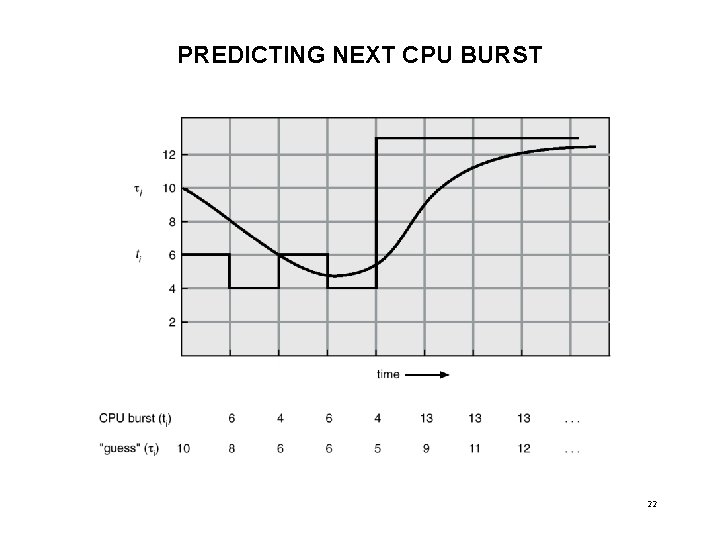

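ESTIMATING LENGTH OF NEXT CPU BURST

- Don’t actually know the length of the next CPU burst.
- Can only estimate the length.
- Can be done by using the lengths of previous CPU bursts and a process known as exponential averaging: $\tau_{n+1} = \alpha t_n + (1 - \alpha)\tau_n$, where $t_n$ is the actual length of the last burst and $\tau_n$ is the previous estimate.
- $\alpha$ is a constant that determines the weight of the two factors
  - If $\alpha = 0$, the most recent burst does not count (the estimate never changes).
  - If $\alpha = 1$, only the actual last CPU burst counts (next = last)
  - If $0 < \alpha < 1$, takes into account both the last burst and the burst history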
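Exponential averaging is easy to try out in code. The sketch below uses the standard textbook recurrence, next estimate = α × (last actual burst) + (1 − α) × (previous estimate); the function name `predict_bursts`, the initial guess of 10 and the sample burst lengths are assumptions chosen for illustration.

```python
def predict_bursts(actual_bursts, alpha=0.5, initial_guess=10.0):
    """Return the estimates [tau_0, tau_1, ..., tau_n] for the given bursts."""
    predictions = [initial_guess]
    for t in actual_bursts:
        tau = predictions[-1]
        # blend the most recent burst with the accumulated history
        predictions.append(alpha * t + (1 - alpha) * tau)
    return predictions


bursts = [6, 4, 6, 4, 13, 13, 13]          # illustrative measured bursts
print(predict_bursts(bursts))               # [10.0, 8.0, 6.0, 6.0, 5.0, 9.0, 11.0, 12.0]
print(predict_bursts(bursts, alpha=0.0))    # estimate never moves from 10.0
print(predict_bursts(bursts, alpha=1.0))    # estimate equals the last burst
```

With α = 0.5 the estimate lags behind the jump to 13 but closes half of the remaining gap on every burst.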

PREDICTING NEXT CPU BURST

ROUND ROBIN SCHEDULING

- Each process gets a small unit or slice of CPU time
  - referred to as a time quantum
  - usually 10-100 milliseconds
- After this time has elapsed (timer interrupt), the process is preempted and added to the end of the ready queue
  - Assumes the process has not already terminated or moved to the waiting state
- The process at the front of the ready queue is assigned the CPU next
  - Operates on a FCFS basis when selecting the next process

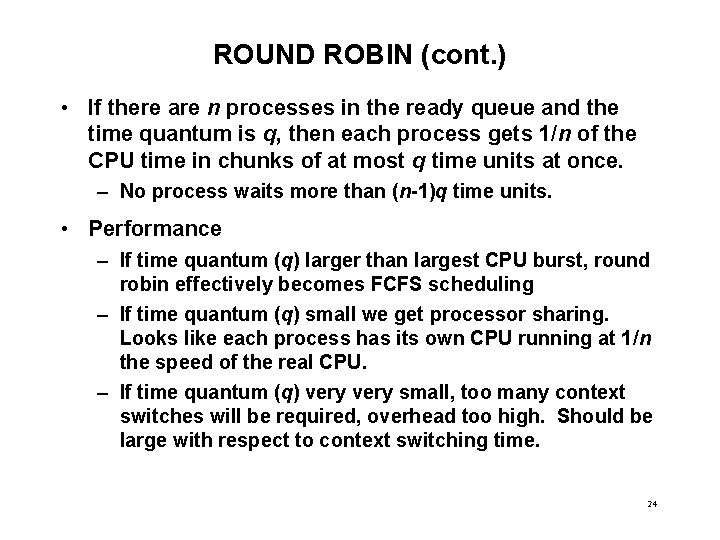

ROUND ROBIN (cont.)

- If there are n processes in the ready queue and the time quantum is q, then each process gets 1/n of the CPU time in chunks of at most q time units at once.
  - No process waits more than (n-1)q time units.
- Performance
  - If the time quantum (q) is larger than the largest CPU burst, round robin effectively becomes FCFS scheduling
  - If the time quantum (q) is small, we get processor sharing. It looks as if each process has its own CPU running at 1/n the speed of the real CPU.
  - If the time quantum (q) is very small, too many context switches will be required and the overhead is too high. The quantum should be large with respect to the context-switching time.

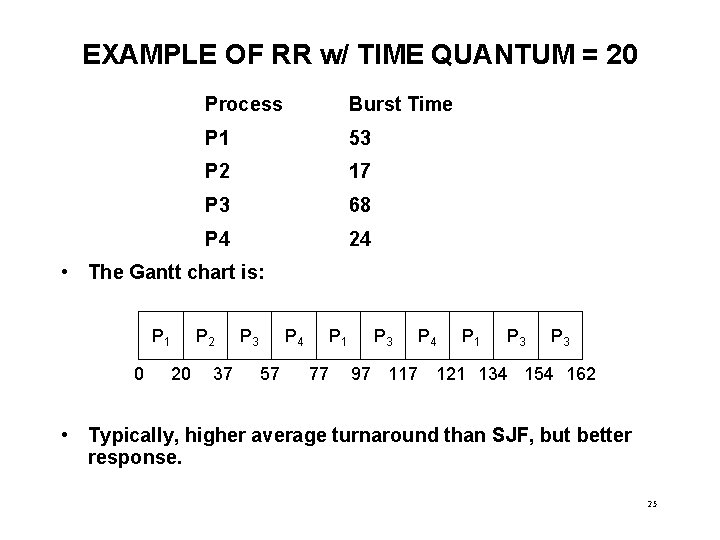

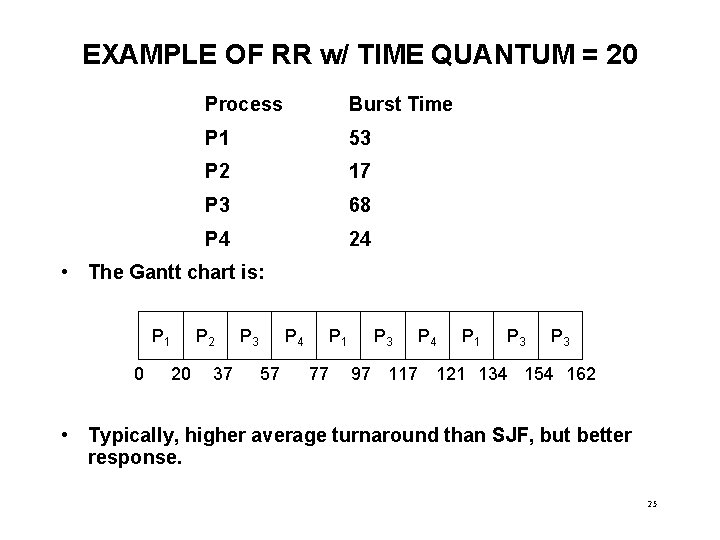

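EXAMPLE OF RR w/ TIME QUANTUM = 20

| Process | Burst Time |
|---|---|
| P1 | 53 |
| P2 | 17 |
| P3 | 68 |
| P4 | 24 |

- The Gantt chart is:

| P1 | P2 | P3 | P4 | P1 | P3 | P4 | P1 | P3 | P3 |
|---|---|---|---|---|---|---|---|---|---|
| 0-20 | 20-37 | 37-57 | 57-77 | 77-97 | 97-117 | 117-121 | 121-134 | 134-154 | 154-162 |

- Typically, higher average turnaround than SJF, but better response.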
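The round-robin chart can be regenerated with a small queue-based sketch. It assumes all four processes arrive at time 0 in the order listed and ignores context-switch overhead; the function name `round_robin` is illustrative.

```python
from collections import deque


def round_robin(procs, quantum):
    """procs: list of (name, burst), all arriving at time 0 in list order."""
    queue = deque(procs)
    clock, gantt, finish = 0, [], {}
    while queue:
        name, remaining = queue.popleft()
        run = min(quantum, remaining)        # run one quantum or until done
        gantt.append((name, clock, clock + run))
        clock += run
        if remaining > run:
            queue.append((name, remaining - run))   # preempted: back of queue
        else:
            finish[name] = clock
    return gantt, finish


procs = [("P1", 53), ("P2", 17), ("P3", 68), ("P4", 24)]
gantt, finish = round_robin(procs, quantum=20)
for segment in gantt:
    print(segment)
print(finish)   # {'P2': 37, 'P4': 121, 'P1': 134, 'P3': 162}
```

The last two segments both belong to P3 because it is the only process left in the queue after time 134.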

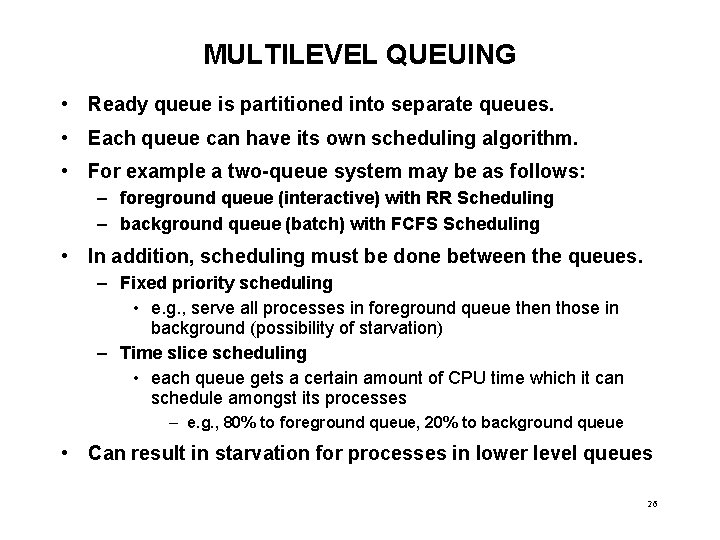

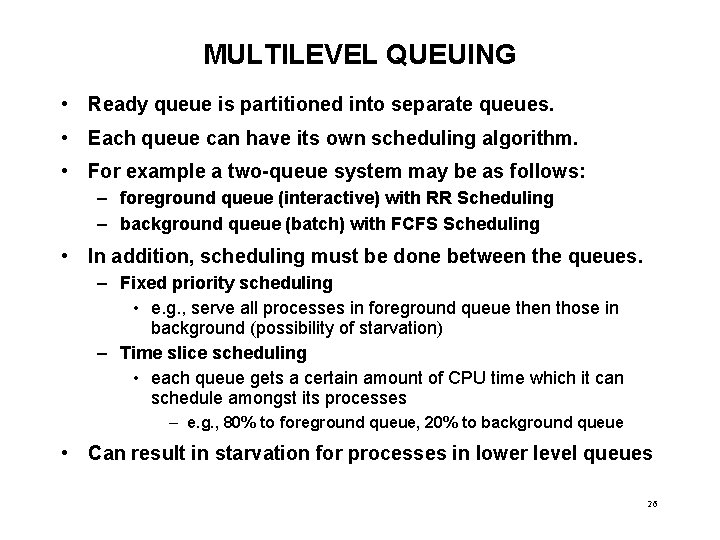

MULTILEVEL QUEUING

- The ready queue is partitioned into separate queues.
- Each queue can have its own scheduling algorithm.
- For example, a two-queue system may be as follows:
  - foreground queue (interactive) with RR scheduling
  - background queue (batch) with FCFS scheduling
- In addition, scheduling must be done between the queues.
  - Fixed priority scheduling
    - e.g., serve all processes in the foreground queue, then those in the background (possibility of starvation)
  - Time slice scheduling
    - each queue gets a certain amount of CPU time which it can schedule amongst its processes
    - e.g., 80% to the foreground queue, 20% to the background queue
- Can result in starvation for processes in lower-level queues

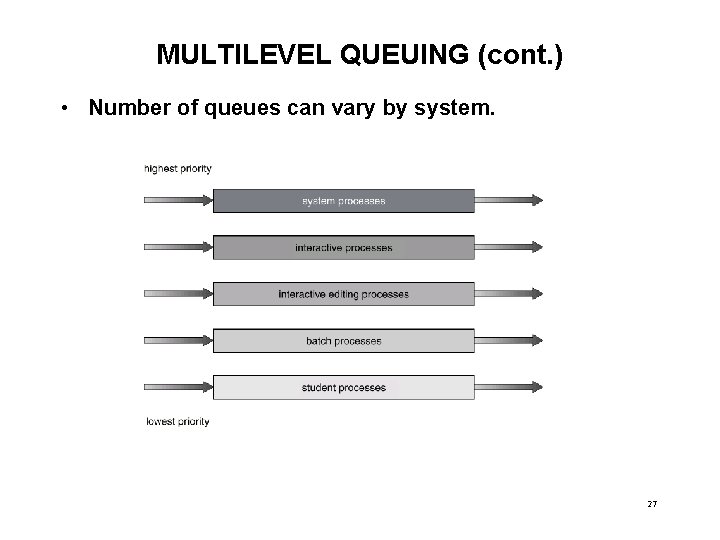

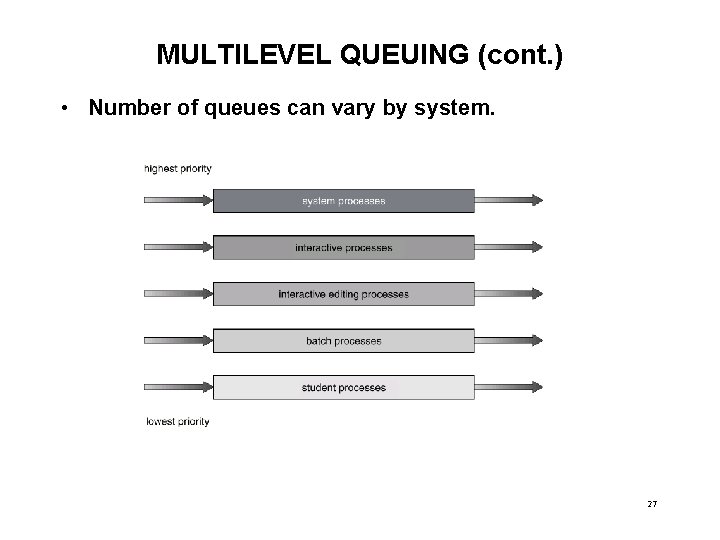

MULTILEVEL QUEUING (cont.)

- The number of queues can vary by system.

MULTILEVEL FEEDBACK QUEUING

- A process can move between the various queues using an aging algorithm
- A multilevel-feedback-queue scheduler is defined by the following parameters:
  - number of queues
  - scheduling algorithm for each queue
  - method used to determine which queue a process will enter when that process needs service
  - method used to determine when to upgrade a process (move it to a higher queue)
  - method used to determine when to demote a process (move it to a lower queue)

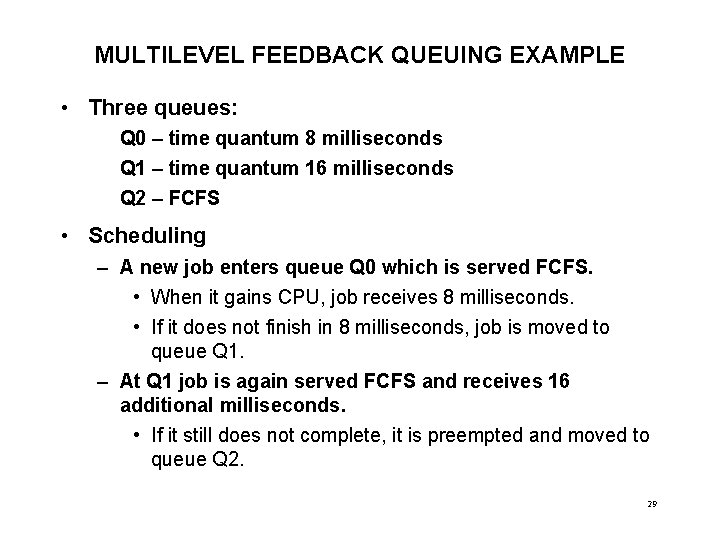

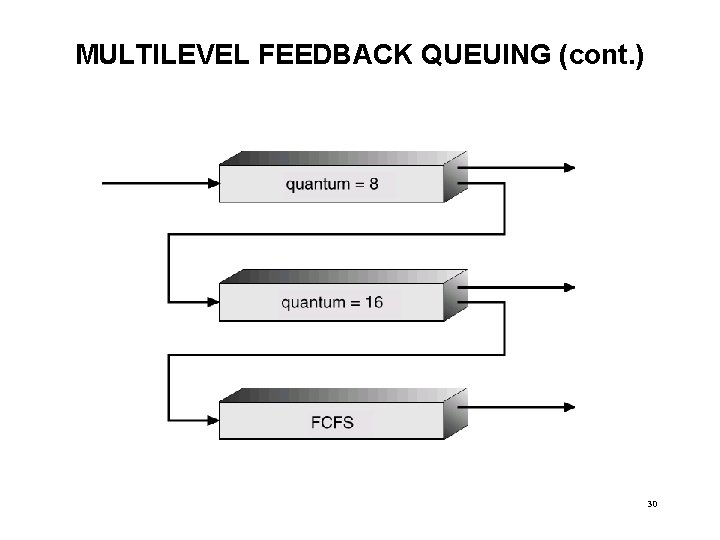

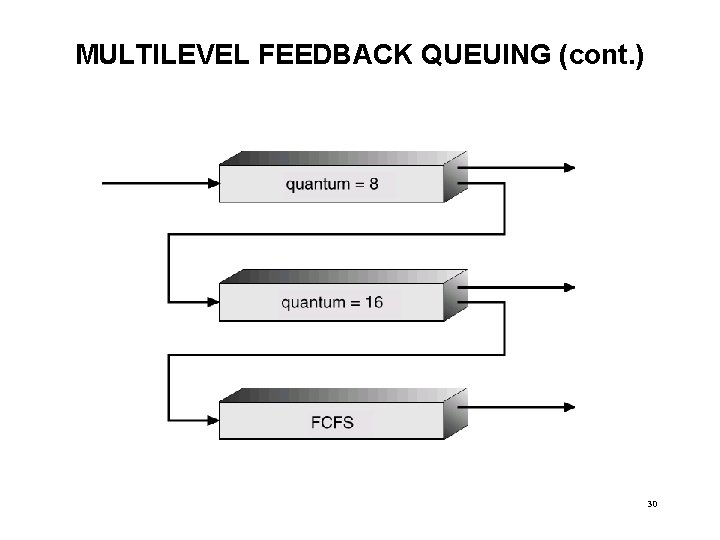

MULTILEVEL FEEDBACK QUEUING EXAMPLE

- Three queues:
  - Q0: time quantum 8 milliseconds
  - Q1: time quantum 16 milliseconds
  - Q2: FCFS
- Scheduling
  - A new job enters queue Q0, which is served FCFS.
    - When it gains the CPU, the job receives 8 milliseconds.
    - If it does not finish in 8 milliseconds, the job is moved to queue Q1.
  - At Q1 the job is again served FCFS and receives 16 additional milliseconds.
    - If it still does not complete, it is preempted and moved to queue Q2.
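The three-queue example can be sketched as below. The sketch is deliberately simplified: all jobs arrive at time 0, jobs are CPU-bound (no I/O), and since nothing arrives later, preemption of a lower queue by a new arrival is not modeled. The job names and burst lengths are made up for illustration, as are the names `QUANTA` and `mlfq`.

```python
from collections import deque

QUANTA = [8, 16, None]   # Q0 and Q1 quanta in ms; None = run to completion (FCFS)


def mlfq(jobs):
    """jobs: list of (name, burst_ms), all entering Q0 at time 0.

    Returns a trace of (name, queue_level, start, end) tuples.
    """
    queues = [deque(jobs), deque(), deque()]
    clock, trace = 0, []
    while any(queues):
        # always serve the highest-priority non-empty queue
        level = next(i for i, q in enumerate(queues) if q)
        name, remaining = queues[level].popleft()
        quantum = QUANTA[level]
        run = remaining if quantum is None else min(quantum, remaining)
        trace.append((name, level, clock, clock + run))
        clock += run
        if remaining > run:
            queues[level + 1].append((name, remaining - run))   # demote
    return trace


trace = mlfq([("A", 5), ("B", 20), ("C", 40)])
for entry in trace:
    print(entry)
```

In this run A finishes inside its first 8 ms quantum, B needs one more turn in Q1, and C uses up both quanta and completes in the FCFS queue Q2.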

MULTILEVEL FEEDBACK QUEUING (cont.)

MULTI-PROCESSOR SCHEDULING

- CPU scheduling is more complex when multiple CPUs (processors) are available.
  - Homogeneous: All processors are alike and have access to the same resources.
  - Heterogeneous: Processors can be different (e.g., speed, capability) or have access to different resources
    - Requires additional effort to determine which processes can run on which processors/CPUs
- Individual vs. Common Ready Queues
  - Does each processor have its own queue, or are all jobs placed in a single queue (allows load sharing)?
    - What if a process has already been allocated memory?
- Processor scheduling options:
  - centralized scheduler, which can be a dedicated CPU
  - each processor is self-scheduling
    - must have access to shared OS data structures if there is a single queue.

REAL-TIME SCHEDULING

- Hard real-time systems:
  - Guaranteed completion times for all critical tasks
  - Can’t use secondary storage or virtual memory
  - Generally special-purpose processors
- Soft real-time computing:
  - Approximation of a hard real-time system
  - Very small dispatch latencies
  - Uses priority scheduling
    - critical processes receive priority over lower-priority (non-real-time) processes.
    - must not age non-real-time processes to a level equal to or greater than that of real-time processes.

SCHEDULING ALGORITHM EVALUATION

- How do we decide which scheduling algorithm(s) to use with an operating system?
  - Determine what your evaluation criteria will be:
    - Utilization, turnaround time, response time, etc.
  - Evaluate each algorithm with respect to your criteria
    - Deterministic Modeling
    - Queuing Models
    - Simulations

DETERMINISTIC MODELING

- Take a particular predetermined workload and determine the performance of each algorithm for that workload.
- Gantt chart problems are examples of this method
- Simple, fast (if the workload is small)
- Must have exact data
- Gives an answer for a very specific situation
- Used primarily for illustration purposes

QUEUING MODELS

- Treat the OS and processes as a system or network of queues and servers
- Use queuing network analysis to determine characteristics of the system
- Various software packages are on the market to perform the analysis
- Generally must make assumptions about the distribution of CPU bursts and arrival rates of processes
- At best, queuing models provide an approximation of a real-world system

LITTLE’S FORMULA

- Little’s formula gives us an example of how queuing analysis works: $n = L \times W$
- Where
  - n = average queue length
  - L = average arrival rate into the queue
  - W = average wait time in the queue
- Assume the system is in steady state
  - The number of processes arriving in the ready queue equals the number of processes leaving the ready queue.
- If the average queue length = 14 and one process arrives in the queue every 7 seconds, then:
  - 14 processes in queue = (1 process / 7 seconds) × W
  - W = 98 seconds (average wait time in queue)
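The same arithmetic can be written as a tiny helper that solves Little's formula for the wait time. This sketch uses exact fractions so that the arrival rate of 1/7 process per second does not introduce rounding error; the function name `average_wait_time` is illustrative.

```python
from fractions import Fraction


def average_wait_time(queue_length, arrival_rate):
    """Solve n = L * W for W, given n (queue length) and L (arrival rate)."""
    return Fraction(queue_length) / Fraction(arrival_rate)


rate = Fraction(1, 7)                    # one process every 7 seconds
wait = average_wait_time(14, rate)
print(wait)                              # 98 (seconds)
print(rate * wait)                       # 14, the queue length we started from
```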

SIMULATIONS

- Build a computer model of the operating system and its scheduling algorithms. Run the model using statistical data from a real system. Compare results.
- Data for the model can come from many sources:
  - Randomly generated
  - Generated based on predetermined distributions (e.g., uniform, exponential, Poisson)
  - Captured using trace tapes from an existing system.
- The more detailed the simulation, the greater the cost
  - Programming time (writing the simulator)
  - Storage of trace data
  - Running time (varies with level of complexity and size of data set)
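A very small simulation along these lines might look as follows. It is a sketch under strong assumptions: burst lengths are drawn from an exponential distribution with a made-up mean of 8 time units, all jobs are present at time 0, and only FCFS and nonpreemptive SJF are compared. The names `average_wait` and `simulate` and all parameter values are illustrative.

```python
import random


def average_wait(bursts):
    """Average waiting time when jobs run back to back in the given order."""
    clock, total = 0.0, 0.0
    for burst in bursts:
        total += clock       # this job waited for everything before it
        clock += burst
    return total / len(bursts)


def simulate(num_jobs=1000, mean_burst=8.0, seed=42):
    rng = random.Random(seed)            # fixed seed keeps runs repeatable
    bursts = [rng.expovariate(1.0 / mean_burst) for _ in range(num_jobs)]
    return {
        "FCFS": average_wait(bursts),            # arrival order
        "SJF": average_wait(sorted(bursts)),     # shortest burst first
    }


results = simulate()
print(results)
```

Swapping in bursts recorded from a real system in place of the random generator would turn this into a trace-driven simulation.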