Chapter 5 Multiprocessor and Thread-Level Parallelism Introduction and

- Slides: 38

Chapter 5: Multiprocessor and Thread-Level Parallelism

- Introduction and Taxonomy
- SMP Architectures and Snooping Protocols
- Distributed Shared-Memory Architectures
- Performance Evaluations
- Synchronization Issues
- Memory Consistency

Introduction

- Thread-level parallelism
  - Has multiple program counters
  - Uses the MIMD model
  - Targeted at tightly coupled shared-memory multiprocessors
- For n processors, need n threads
- Amount of computation assigned to each thread = grain size
  - Threads can be used for data-level parallelism, but the overheads may outweigh the benefit

Copyright © 2012, Elsevier Inc. All rights reserved.

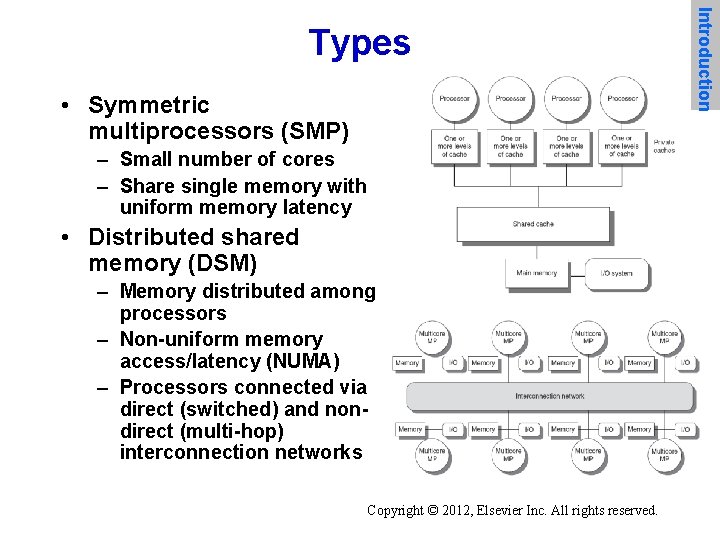

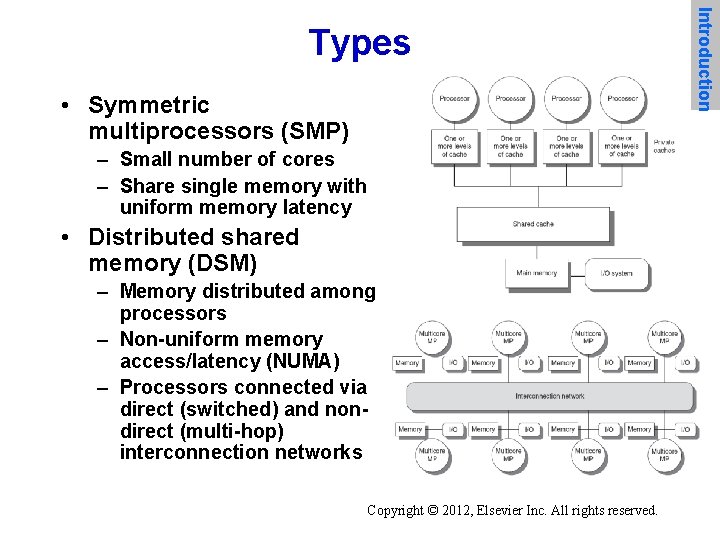

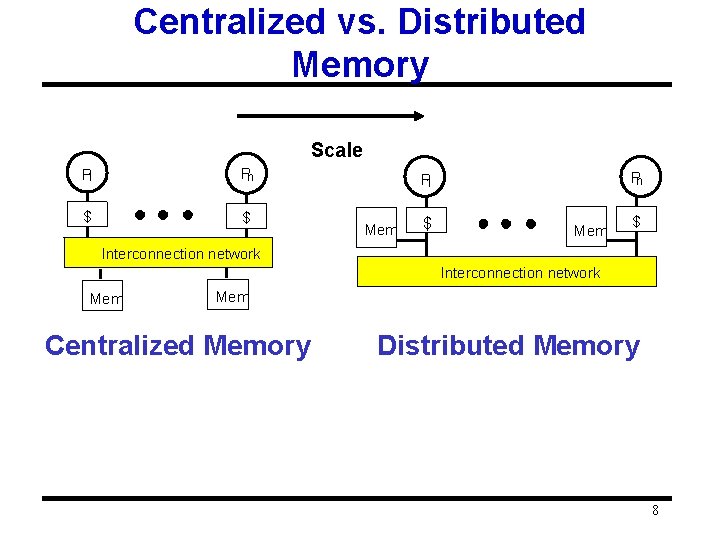

Introduction: Types

- Symmetric multiprocessors (SMP)
  - Small number of cores
  - Share a single memory with uniform memory latency
- Distributed shared memory (DSM)
  - Memory distributed among processors
  - Non-uniform memory access/latency (NUMA)
  - Processors connected via direct (switched) and non-direct (multi-hop) interconnection networks

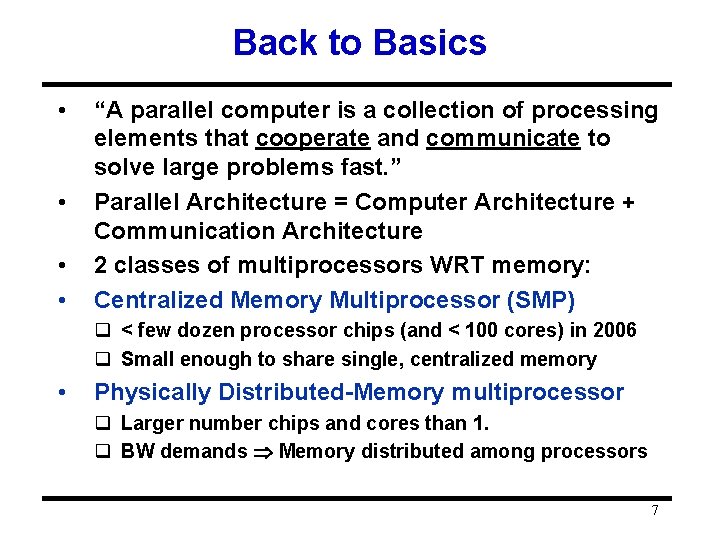

Parallel Computers

- Definition: “A parallel computer is a collection of processing elements that cooperate and communicate to solve large problems fast.” (Almasi and Gottlieb, Highly Parallel Computing, 1989)
- Questions about parallel computers:
  - How large a collection?
  - How powerful are the processing elements?
  - How do they cooperate and communicate?
  - How are data transmitted?
  - What type of interconnection?
  - What are the HW and SW primitives for the programmer?
  - Does it translate into performance?

What Level of Parallelism?

- Bit-level parallelism: 1970 to ~1985
  - 4-bit, 8-bit, 16-bit, 32-bit microprocessors
- Instruction-level parallelism (ILP): ~1985 to today
  - Pipelining
  - Superscalar, out-of-order execution
  - VLIW
  - Limits to benefits of ILP?
- Process-level or thread-level parallelism: mainstream for general-purpose computing?
  - Servers are parallel
  - High-end desktop dual-core PC (more cores today!)
  - What about future CMP?

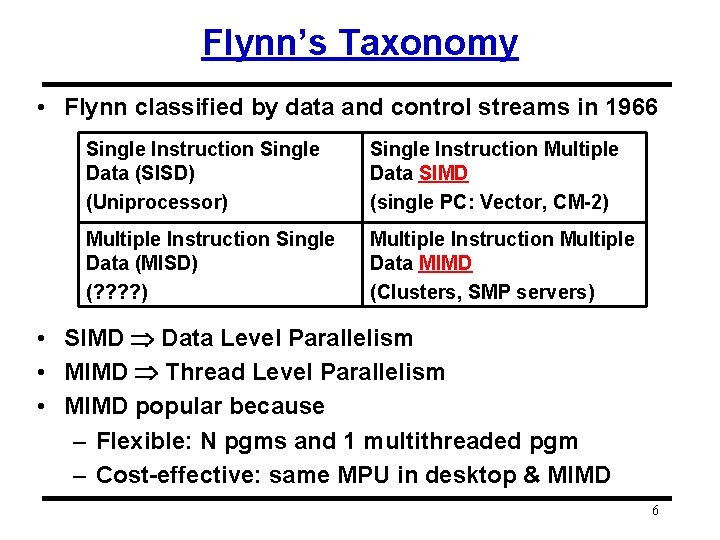

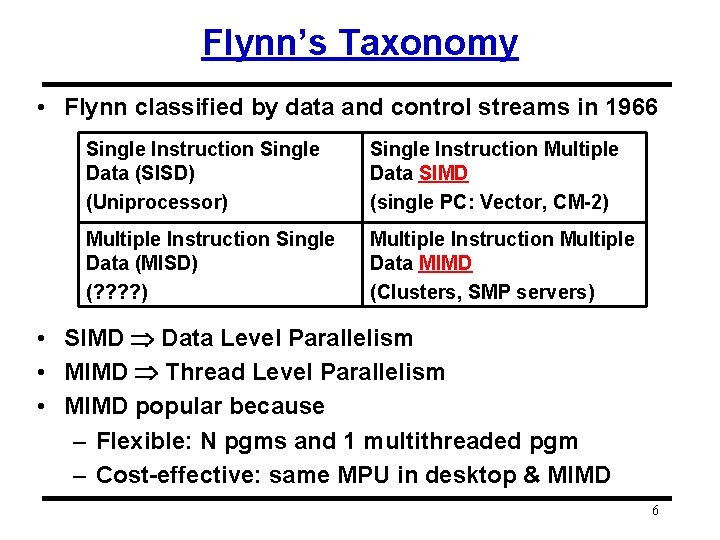

Flynn’s Taxonomy

- Flynn classified architectures by data and control streams in 1966:
  - Single Instruction, Single Data (SISD): uniprocessor
  - Single Instruction, Multiple Data (SIMD): single PC; vector machines, CM-2
  - Multiple Instruction, Single Data (MISD): (??)
  - Multiple Instruction, Multiple Data (MIMD): clusters, SMP servers
- SIMD: data-level parallelism
- MIMD: thread-level parallelism
- MIMD is popular because it is
  - Flexible: runs N programs or 1 multithreaded program
  - Cost-effective: same MPU in desktop and MIMD machines

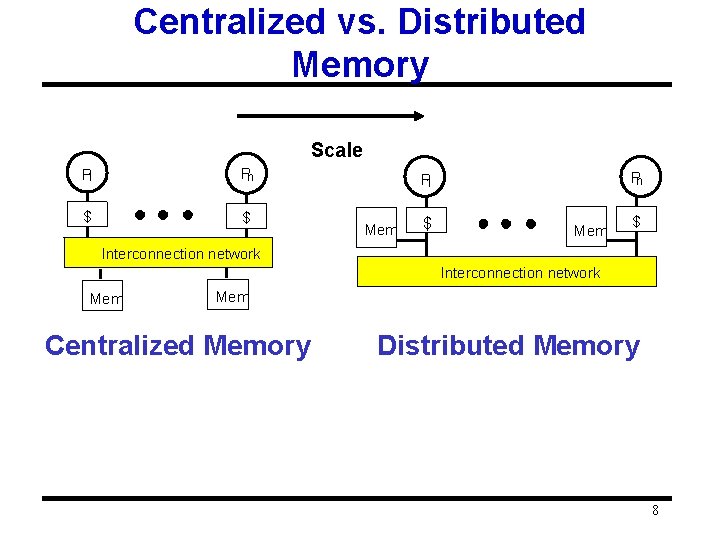

Back to Basics

- “A parallel computer is a collection of processing elements that cooperate and communicate to solve large problems fast.”
- Parallel Architecture = Computer Architecture + Communication Architecture
- 2 classes of multiprocessors WRT memory:
  1. Centralized-memory multiprocessor (SMP)
     - Fewer than a few dozen processor chips (and < 100 cores) in 2006
     - Small enough to share a single, centralized memory
  2. Physically distributed-memory multiprocessor
     - Larger number of chips and cores than class 1
     - Because of BW demands, memory is distributed among the processors

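Centralized vs. Distributed Memory

[Figure: on the left, centralized memory, with processors P1 ... Pn and their caches ($) sharing one memory; on the right, distributed memory, with processors P1 ... Pn, each with a cache and a local memory, joined by an interconnection network. A "Scale" label runs across the figure.]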

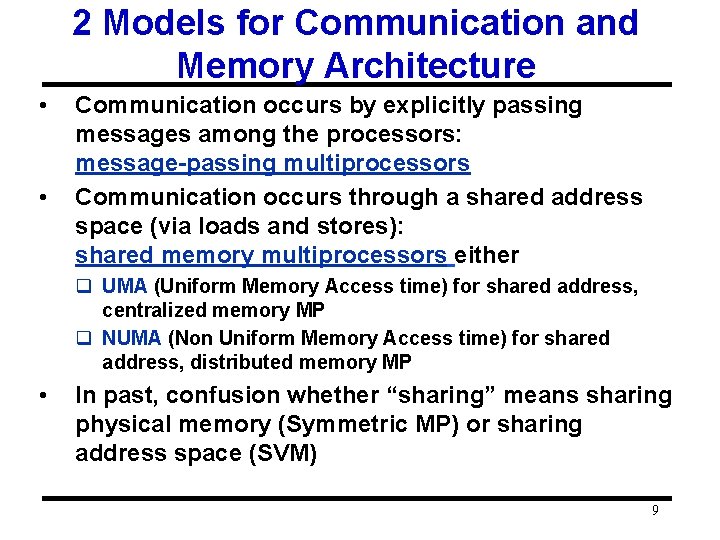

2 Models for Communication and Memory Architecture

- Communication occurs by explicitly passing messages among the processors: message-passing multiprocessors
- Communication occurs through a shared address space (via loads and stores): shared-memory multiprocessors, either
  - UMA (Uniform Memory Access time) for shared-address, centralized-memory MP
  - NUMA (Non-Uniform Memory Access time) for shared-address, distributed-memory MP
- In the past, there was confusion over whether “sharing” means sharing physical memory (Symmetric MP) or sharing address space (SVM)

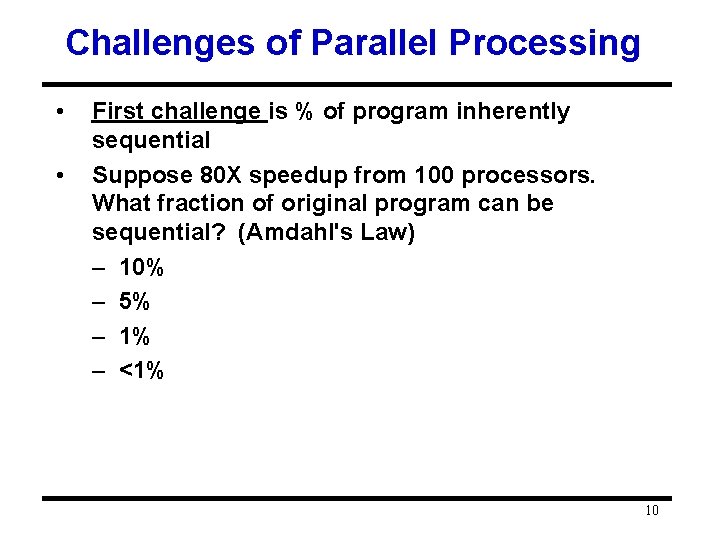

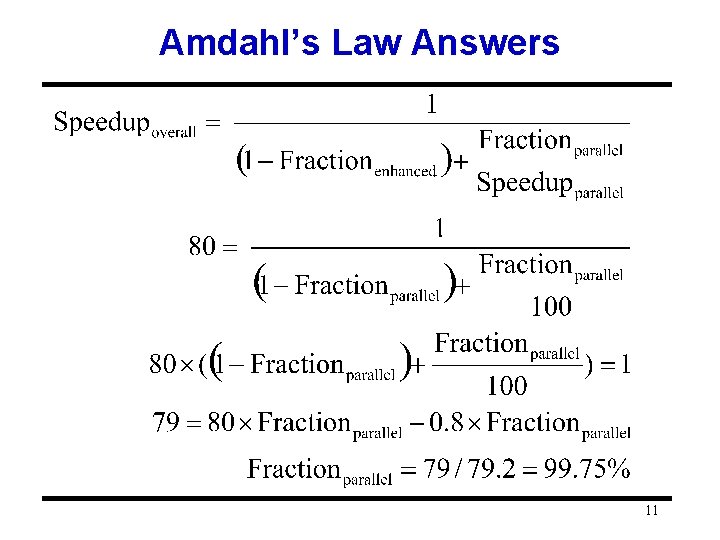

Challenges of Parallel Processing

- The first challenge is the percentage of a program that is inherently sequential
- Suppose we want an 80x speedup from 100 processors. What fraction of the original program can be sequential? (Amdahl's Law)
  - 10%
  - 5%
  - 1%
  - <1%

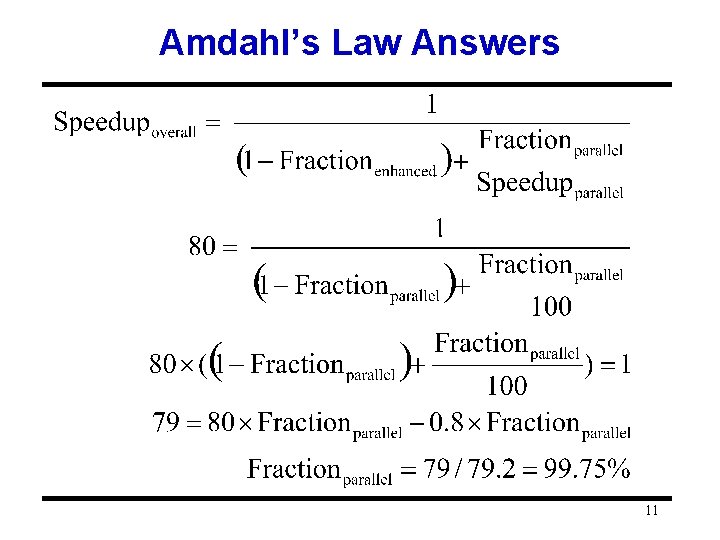

Amdahl’s Law Answers
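The question on the previous slide can be checked numerically. The sketch below assumes the usual form of Amdahl's Law, speedup = 1 / (s + (1 - s) / n), where s is the sequential fraction and n the number of processors; the function names are illustrative and not part of the slides.

```python
def amdahl_speedup(sequential_fraction: float, processors: int) -> float:
    """Speedup predicted by Amdahl's Law for a given sequential fraction."""
    parallel_fraction = 1.0 - sequential_fraction
    return 1.0 / (sequential_fraction + parallel_fraction / processors)


def max_sequential_fraction(target_speedup: float, processors: int) -> float:
    """Largest sequential fraction that still reaches target_speedup.

    Solving 1 / (s + (1 - s) / n) = S for s gives s = (n / S - 1) / (n - 1).
    """
    return (processors / target_speedup - 1.0) / (processors - 1.0)


# 80x speedup from 100 processors, as in the slide's question.
s = max_sequential_fraction(target_speedup=80.0, processors=100)
print(f"sequential fraction must be at most {s:.4%}")
print(f"check: speedup at that fraction = {amdahl_speedup(s, 100):.1f}")
```

Under these assumptions the sequential fraction comes out at 0.25/99, roughly 0.25% of the original program, which corresponds to the "<1%" choice.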

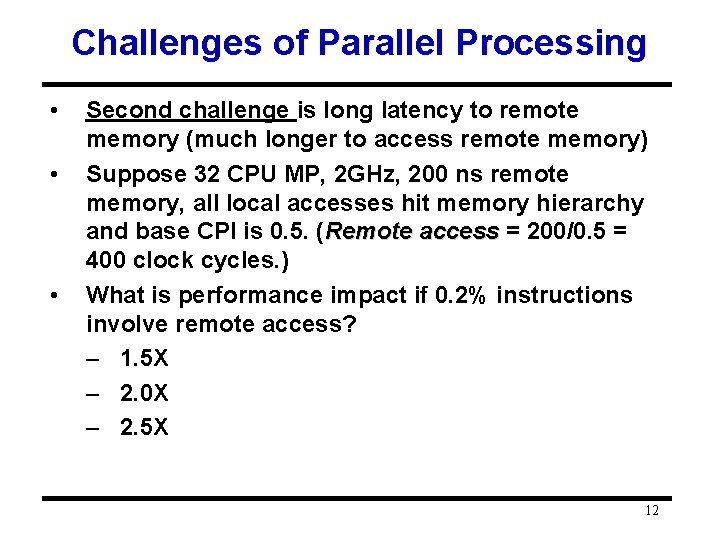

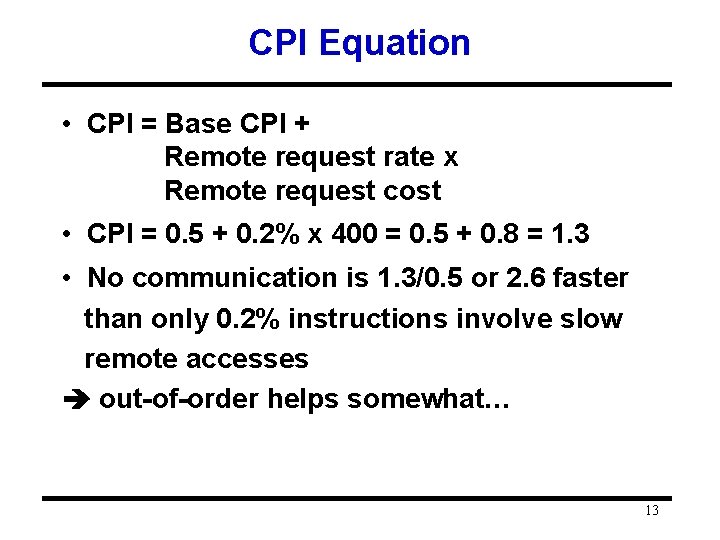

Challenges of Parallel Processing

- The second challenge is the long latency of remote memory accesses
- Suppose a 32-CPU MP running at 2 GHz with a 200 ns remote memory latency; all local accesses hit in the memory hierarchy and the base CPI is 0.5. (At 2 GHz a clock cycle is 0.5 ns, so a remote access costs 200/0.5 = 400 clock cycles.)
- What is the performance impact if 0.2% of instructions involve a remote access?
  - 1.5x
  - 2.0x
  - 2.5x

CPI Equation

- CPI = Base CPI + Remote request rate x Remote request cost
- CPI = 0.5 + 0.2% x 400 = 0.5 + 0.8 = 1.3
- A machine with no communication is 1.3/0.5 = 2.6 times faster than one in which only 0.2% of instructions involve slow remote accesses
- Out-of-order execution helps somewhat…
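The same arithmetic as a short script, using only the numbers given in the slides (2 GHz clock, 200 ns remote latency, base CPI 0.5, 0.2% remote accesses). The function and variable names are illustrative.

```python
def effective_cpi(base_cpi: float, remote_rate: float, remote_cost_cycles: float) -> float:
    """CPI = base CPI + remote request rate x remote request cost."""
    return base_cpi + remote_rate * remote_cost_cycles


clock_ghz = 2.0
cycle_ns = 1.0 / clock_ghz            # 0.5 ns per clock cycle at 2 GHz
remote_cost = 200.0 / cycle_ns        # 200 ns remote access = 400 cycles

base_cpi = 0.5
cpi = effective_cpi(base_cpi, remote_rate=0.002, remote_cost_cycles=remote_cost)
slowdown = cpi / base_cpi             # relative to a machine with no remote accesses

print(f"remote cost = {remote_cost:.0f} cycles, CPI = {cpi:.2f}, slowdown = {slowdown:.2f}x")
```

Changing `remote_rate` shows how sensitive the result is: each additional 0.1% of remote accesses adds 0.4 to the CPI under these assumptions.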

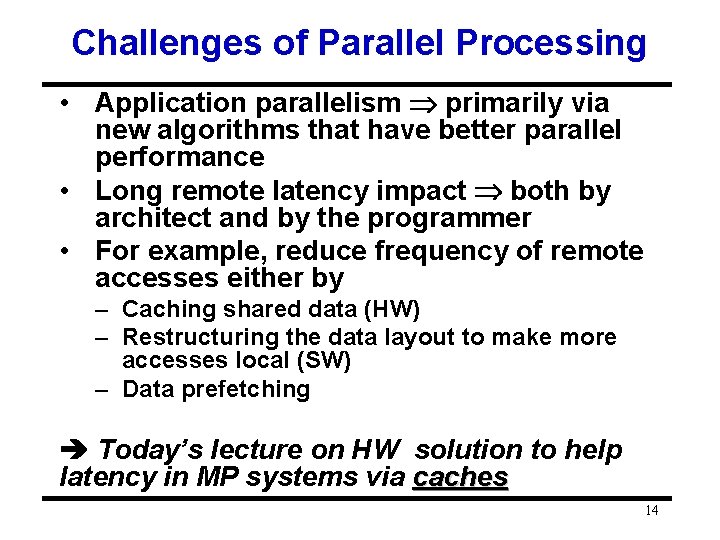

Challenges of Parallel Processing

- Application parallelism is addressed primarily via new algorithms that have better parallel performance
- The impact of long remote latency is attacked both by the architect and by the programmer
- For example, reduce the frequency of remote accesses by
  - Caching shared data (HW)
  - Restructuring the data layout to make more accesses local (SW)
  - Data prefetching
- Today's lecture covers HW solutions that reduce latency in MP systems via caches

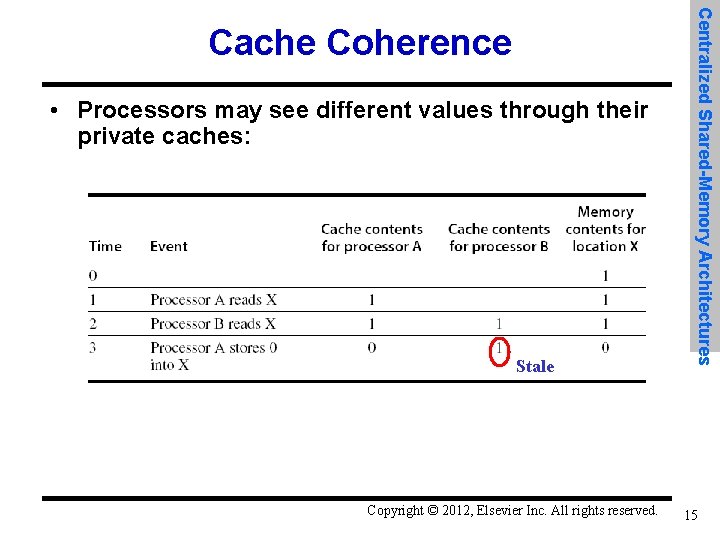

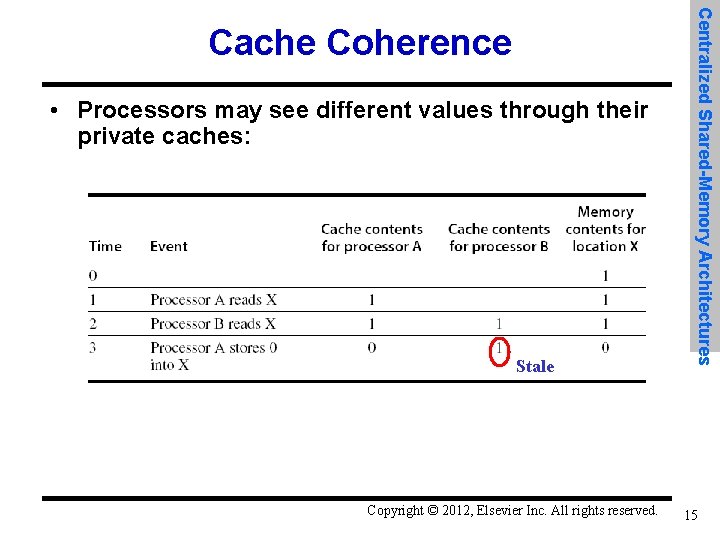

Centralized Shared-Memory Architectures: Cache Coherence

- Processors may see different values through their private caches, because a cached copy can be stale

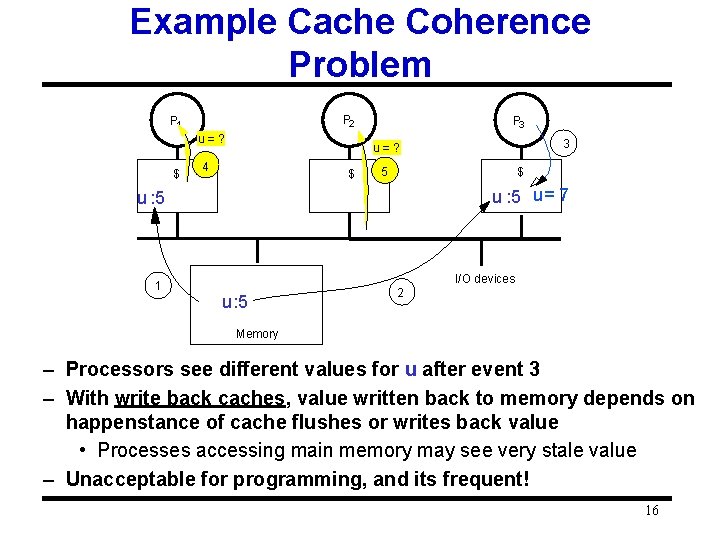

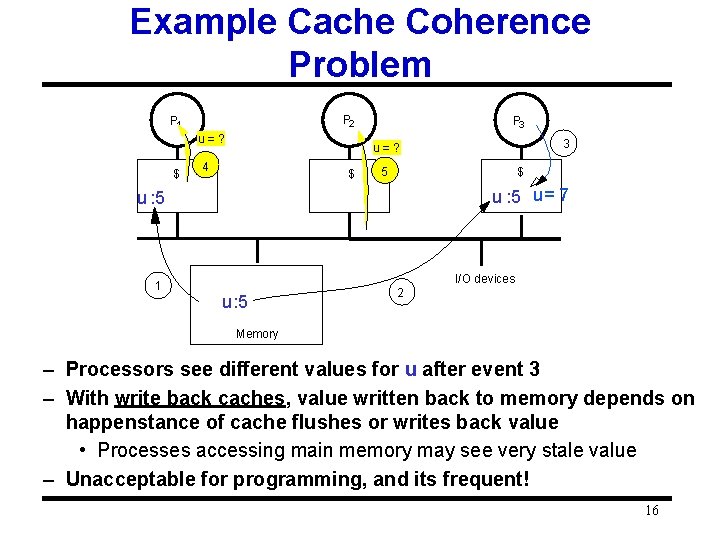

Example Cache Coherence Problem

[Figure: processors P1, P2, and P3, each with a private cache ($), share a bus with memory and I/O devices. Memory holds u : 5; numbered events 1 to 5 show caches loading u : 5, a write of u = 7, and later reads of u (u = ?).]

- Processors see different values for u after event 3
- With write-back caches, the value written back to memory depends on the happenstance of which cache flushes or writes back its value, and when
  - Processes accessing main memory may see a very stale value
- Unacceptable for programming, and it's frequent!
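A minimal sketch of how the problem arises, assuming private write-back caches with no coherence mechanism. The `WriteBackCache` class is hypothetical, and the assignment of events to processors (P1 and P3 read, P3 writes, then P1 and P2 read) is an assumption made for illustration, since the original figure is not reproduced in the text.

```python
class WriteBackCache:
    """Private write-back cache with no coherence mechanism at all."""

    def __init__(self, memory):
        self.memory = memory
        self.lines = {}       # address -> cached value
        self.dirty = set()    # addresses modified but not yet written back

    def read(self, addr):
        if addr not in self.lines:             # miss: fetch from memory
            self.lines[addr] = self.memory[addr]
        return self.lines[addr]                # hit: may be a stale copy

    def write(self, addr, value):
        self.lines[addr] = value               # memory is not updated yet
        self.dirty.add(addr)

    def flush(self, addr):
        if addr in self.dirty:                 # write the dirty value back
            self.memory[addr] = self.lines[addr]
            self.dirty.discard(addr)


memory = {"u": 5}
p1, p2, p3 = (WriteBackCache(memory) for _ in range(3))

p1.read("u")                 # event 1 (assumed): P1 caches u = 5
p3.read("u")                 # event 2 (assumed): P3 caches u = 5
p3.write("u", 7)             # event 3 (assumed): P3 writes u = 7 in its own cache only
seen = {
    "P1": p1.read("u"),      # event 4: hit on P1's stale cached copy
    "P2": p2.read("u"),      # event 5: miss, served from stale memory
    "P3": p3.read("u"),      # the writer sees its own new value
}
print(seen)
```

In this sketch P1 and P2 both read 5 while P3 reads 7. Even after `p3.flush("u")` updates memory, P1 keeps returning its stale cached copy, which is why some invalidate or update action is needed.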

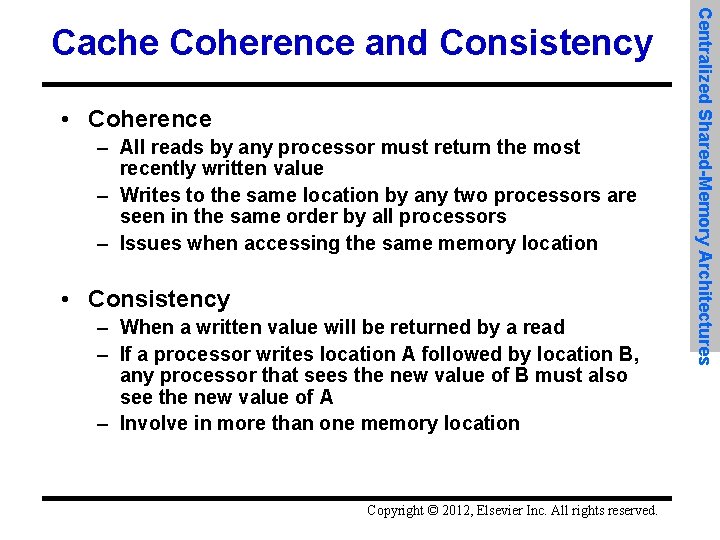

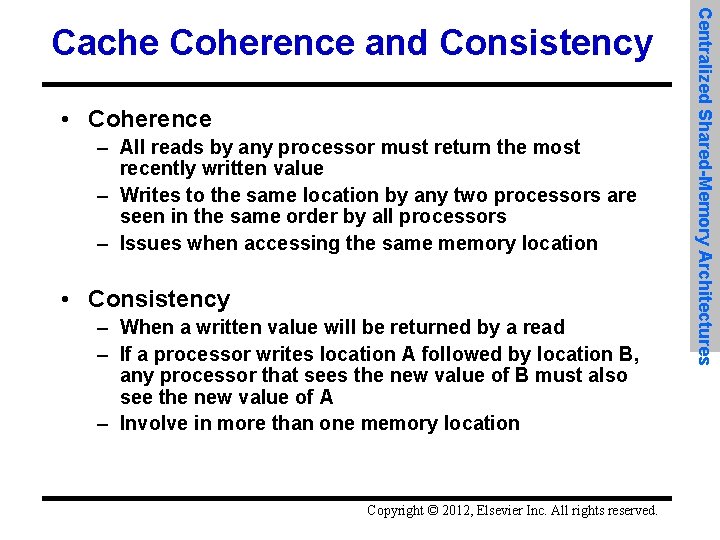

Centralized Shared-Memory Architectures: Cache Coherence and Consistency

- Coherence
  - All reads by any processor must return the most recently written value
  - Writes to the same location by any two processors are seen in the same order by all processors
  - Concerns accesses to the same memory location
- Consistency
  - Determines when a written value will be returned by a read
  - If a processor writes location A followed by location B, any processor that sees the new value of B must also see the new value of A
  - Involves more than one memory location

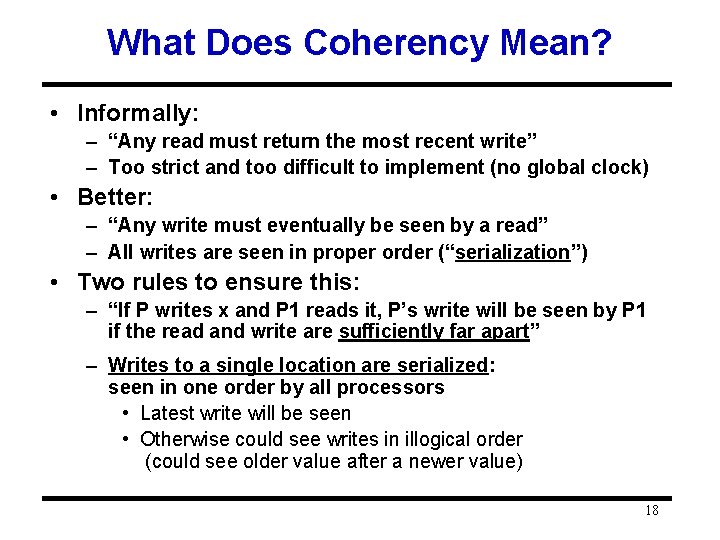

What Does Coherency Mean?

- Informally:
  - “Any read must return the most recent write”
  - Too strict and too difficult to implement (no global clock)
- Better:
  - “Any write must eventually be seen by a read”
  - All writes are seen in proper order (“serialization”)
- Two rules to ensure this:
  - “If P writes x and P1 reads it, P’s write will be seen by P1 if the read and write are sufficiently far apart”
  - Writes to a single location are serialized: seen in one order by all processors
    - The latest write will be seen
    - Otherwise, a processor could see writes in an illogical order (an older value after a newer value)

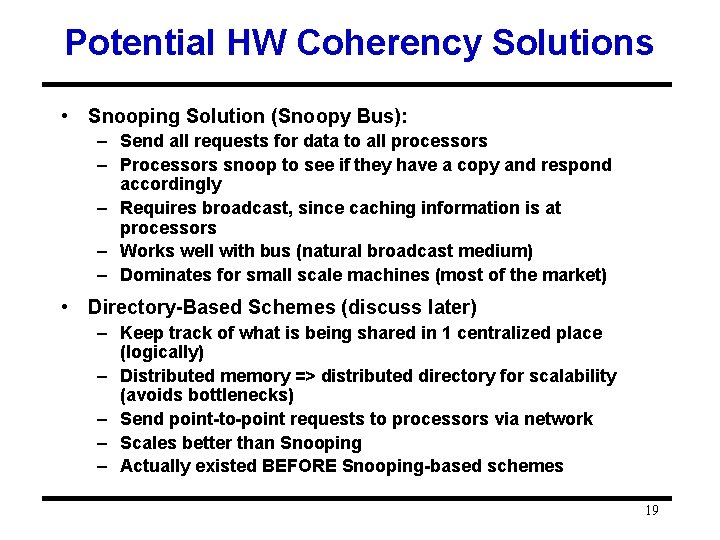

Potential HW Coherency Solutions

- Snooping solution (snoopy bus):
  - Send all requests for data to all processors
  - Processors snoop to see if they have a copy and respond accordingly
  - Requires broadcast, since caching information is at the processors
  - Works well with a bus (natural broadcast medium)
  - Dominates for small-scale machines (most of the market)
- Directory-based schemes (discussed later):
  - Keep track of what is being shared in 1 centralized place (logically)
  - Distributed memory => distributed directory for scalability (avoids bottlenecks)
  - Send point-to-point requests to processors via the network
  - Scales better than snooping
  - Actually existed BEFORE snooping-based schemes

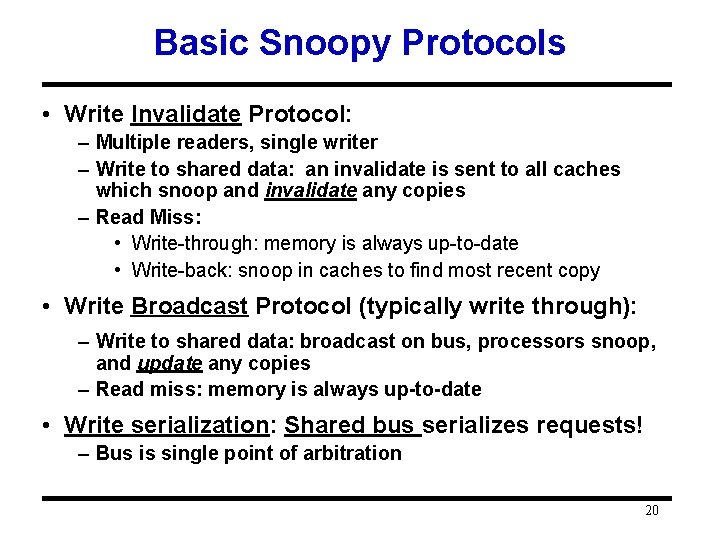

Basic Snoopy Protocols

- Write invalidate protocol:
  - Multiple readers, single writer
  - Write to shared data: an invalidate is sent to all caches, which snoop and invalidate any copies
  - Read miss:
    - Write-through: memory is always up-to-date
    - Write-back: snoop in caches to find the most recent copy
- Write broadcast protocol (typically write-through):
  - Write to shared data: broadcast on the bus; processors snoop and update any copies
  - Read miss: memory is always up-to-date
- Write serialization: the shared bus serializes requests!
  - The bus is the single point of arbitration
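The two policies can be contrasted with a small sketch. It assumes write-through caches for both policies (the write-back variant of write invalidate is not modeled), and the `Bus`, `WriteThroughCache`, and `run` names are hypothetical.

```python
class Bus:
    """Shared bus: every write is broadcast, so all caches can snoop it."""

    def __init__(self, memory, policy):
        self.memory = memory
        self.policy = policy          # "invalidate" or "update"
        self.caches = []
        self.transactions = 0         # writes and read misses that used the bus


class WriteThroughCache:
    def __init__(self, bus):
        self.bus = bus
        self.lines = {}               # address -> cached value
        bus.caches.append(self)

    def read(self, addr):
        if addr not in self.lines:    # read miss: memory is always up to date
            self.bus.transactions += 1
            self.lines[addr] = self.bus.memory[addr]
        return self.lines[addr]

    def write(self, addr, value):
        self.bus.transactions += 1    # the bus serializes writes
        self.bus.memory[addr] = value # write-through to memory
        self.lines[addr] = value
        for other in self.bus.caches:
            if other is not self and addr in other.lines:
                if self.bus.policy == "invalidate":
                    del other.lines[addr]      # snooper drops its copy
                else:
                    other.lines[addr] = value  # snooper updates its copy


def run(policy):
    """Two caches share u; one writes it, the other reads it afterwards."""
    bus = Bus({"u": 5}, policy)
    c1, c2 = WriteThroughCache(bus), WriteThroughCache(bus)
    c1.read("u")
    c2.read("u")
    c2.write("u", 7)
    return c1.read("u"), bus.transactions


for policy in ("invalidate", "update"):
    value, transactions = run(policy)
    print(policy, value, transactions)
```

Both policies return the new value 7 to the reader. With invalidation the later read is a miss that goes back to the bus; with update it hits in the cache. The sketch does not model the cost difference between a short invalidate and an update that carries data.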

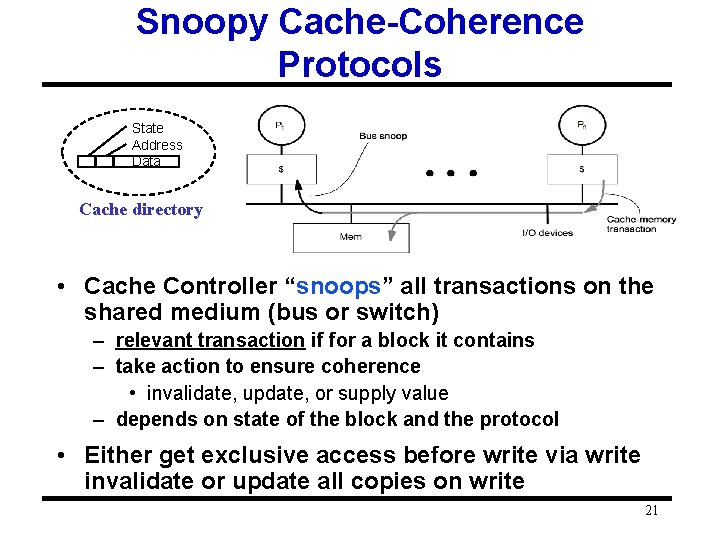

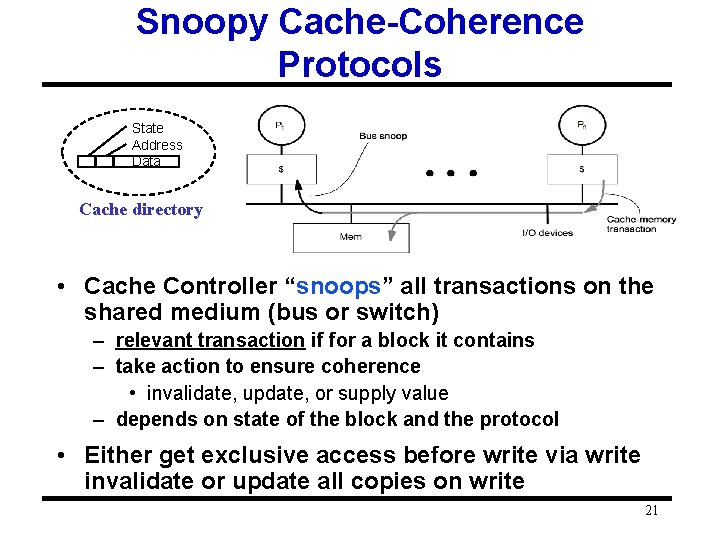

Snoopy Cache-Coherence Protocols

[Figure: cache directory with State, Address, and Data fields for each block.]

- The cache controller “snoops” all transactions on the shared medium (bus or switch)
  - A transaction is relevant if it is for a block the cache contains
  - The controller takes action to ensure coherence: invalidate, update, or supply the value
  - The action depends on the state of the block and the protocol
- Either get exclusive access before a write (write invalidate) or update all copies on a write

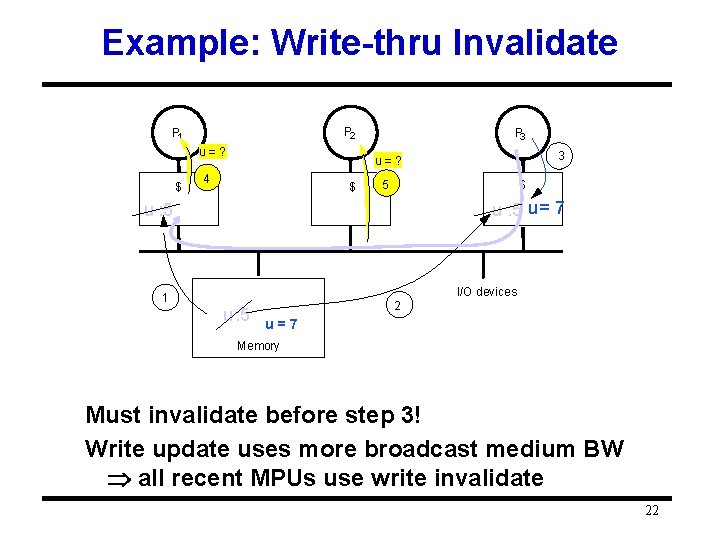

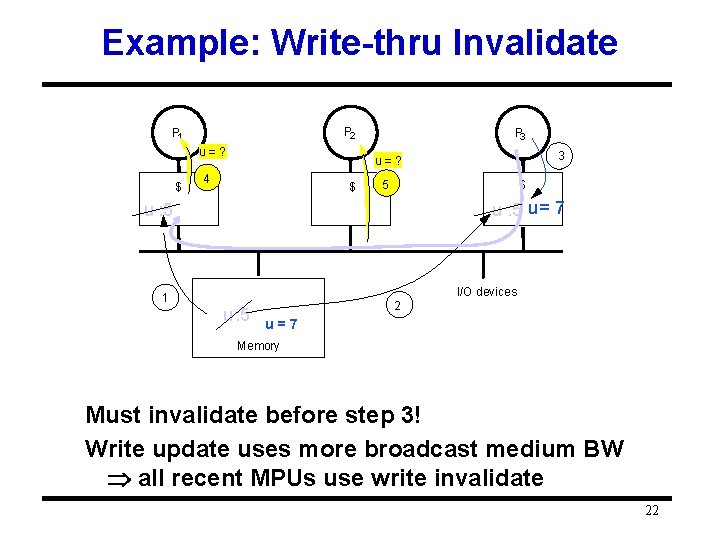

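Example: Write-thru Invalidate

[Figure: the P1, P2, P3 system from the cache coherence problem, with u : 5 cached and in memory; the write of u = 7 is written through to memory, and numbered events 1 to 5 include later reads of u (u = ?).]

- Must invalidate before step 3!
- Write update uses more broadcast-medium BW, so all recent MPUs use write invalidate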

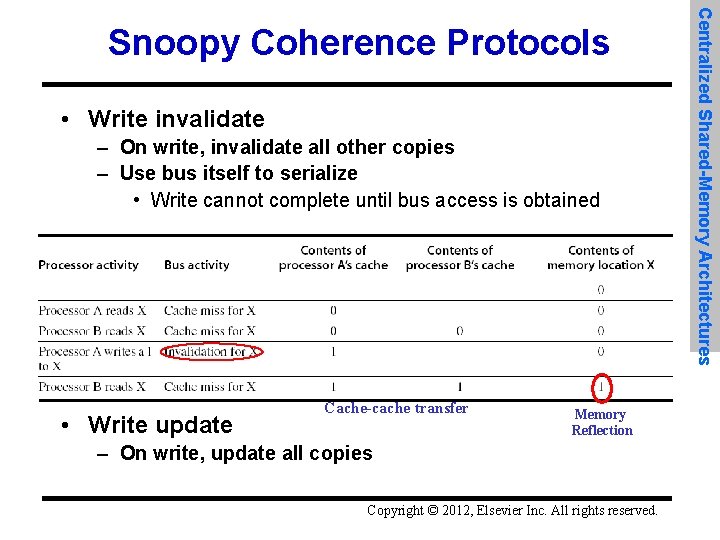

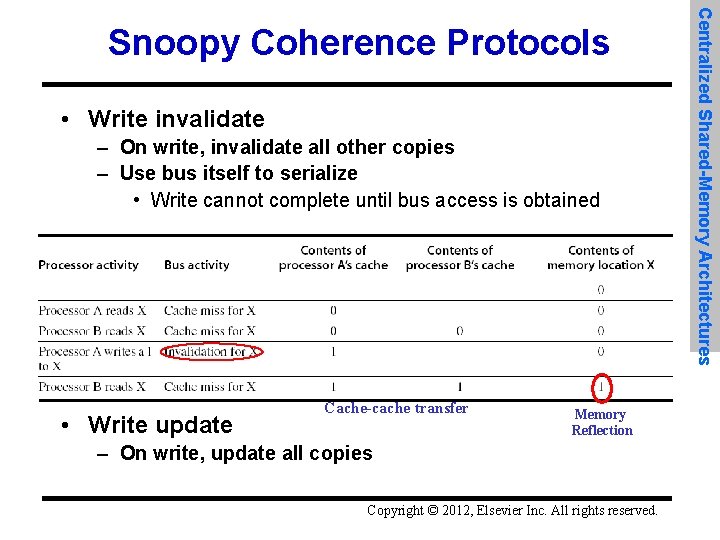

Centralized Shared-Memory Architectures: Snoopy Coherence Protocols

- Write invalidate
  - On write, invalidate all other copies
  - Use the bus itself to serialize
    - A write cannot complete until bus access is obtained
- Write update
  - On write, update all copies

[Figure labels: cache-to-cache transfer, memory reflection.]

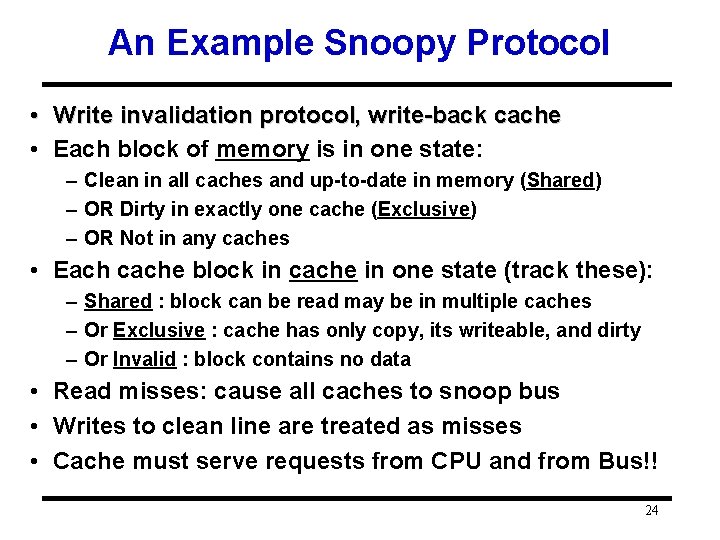

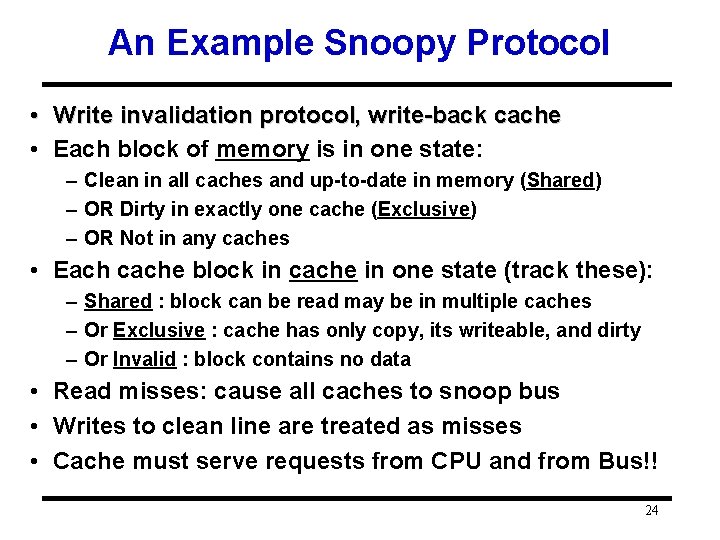

An Example Snoopy Protocol

- Write-invalidate protocol, write-back cache
- Each block of memory is in one state:
  - Clean in all caches and up-to-date in memory (Shared)
  - OR dirty in exactly one cache (Exclusive)
  - OR not in any caches
- Each cache block is in one state (track these):
  - Shared: block can be read and may be in multiple caches
  - OR Exclusive: cache has the only copy, it's writeable, and dirty
  - OR Invalid: block contains no data
- Read misses cause all caches to snoop the bus
- Writes to a clean line are treated as misses
- The cache must serve requests from both the CPU and the bus!

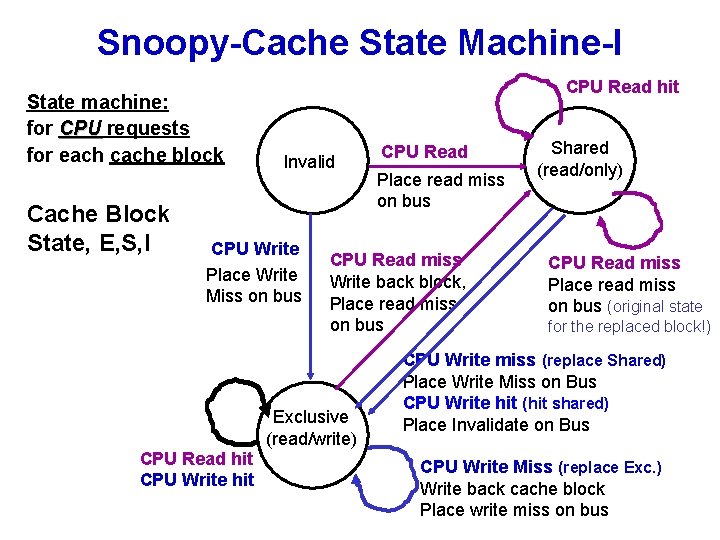

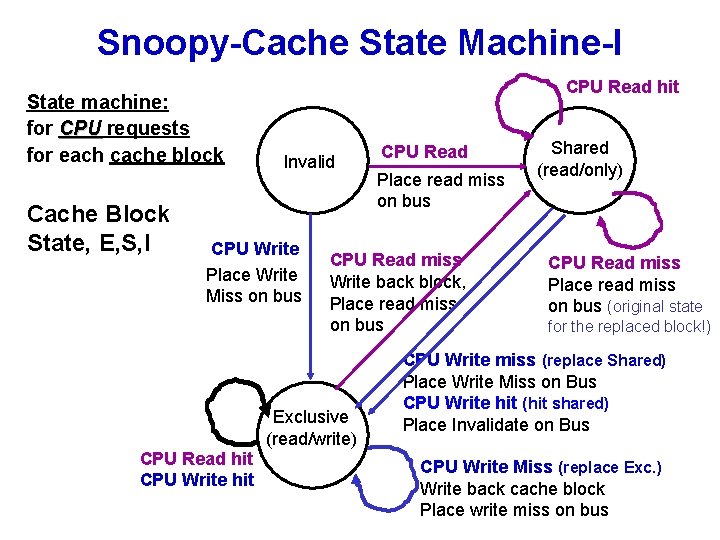

Snoopy-Cache State Machine-I

State machine for CPU requests, for each cache block. Cache block states: Exclusive (read/write), Shared (read only), Invalid.

- Invalid to Shared: CPU read; place read miss on bus
- Invalid to Exclusive: CPU write; place write miss on bus
- Shared to Shared: CPU read hit
- Shared to Shared: CPU read miss; place read miss on bus (original state for the replaced block!)
- Shared to Exclusive: CPU write hit (hit Shared); place invalidate on bus
- Shared to Exclusive: CPU write miss (replace Shared); place write miss on bus
- Exclusive to Exclusive: CPU read hit or CPU write hit
- Exclusive to Shared: CPU read miss; write back block, place read miss on bus
- Exclusive to Exclusive: CPU write miss (replace Exclusive); write back cache block, place write miss on bus

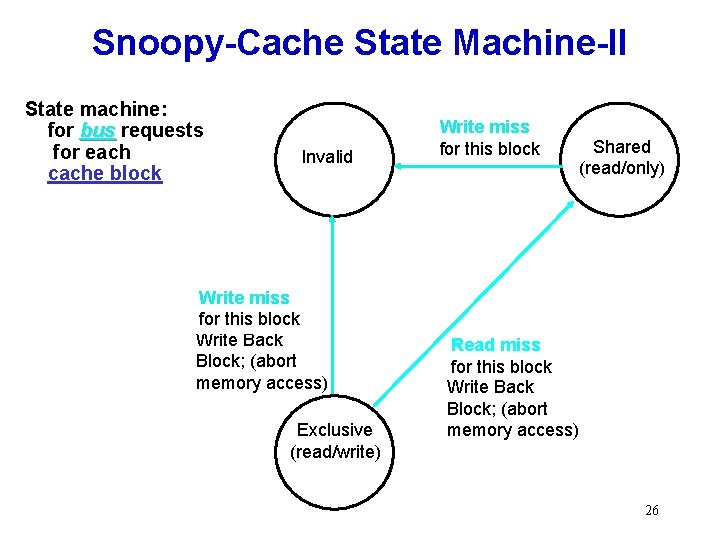

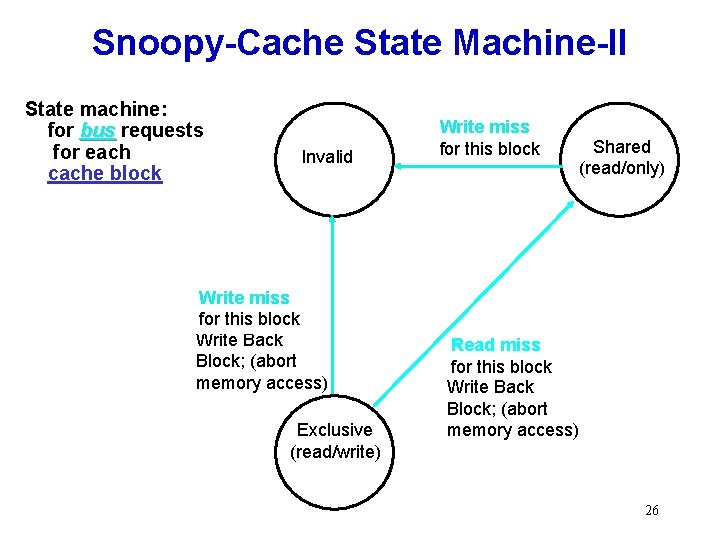

Snoopy-Cache State Machine-II

State machine for bus requests, for each cache block.

- Shared to Invalid: write miss for this block
- Exclusive to Invalid: write miss for this block; write back block (abort memory access)
- Exclusive to Shared: read miss for this block; write back block (abort memory access)

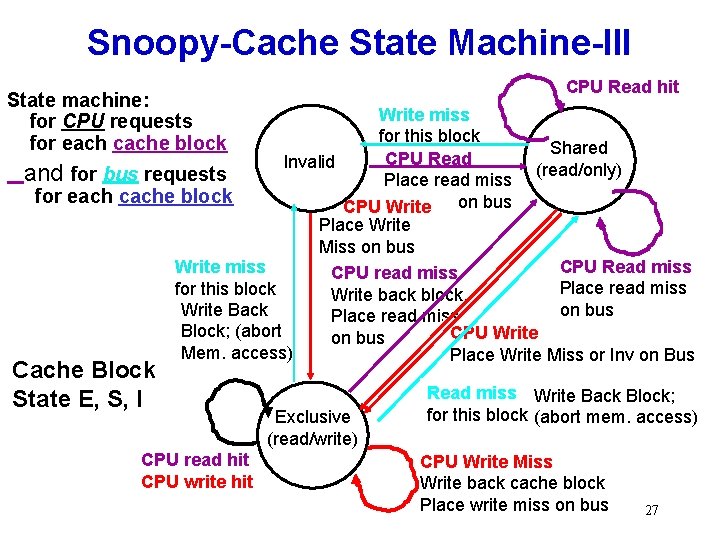

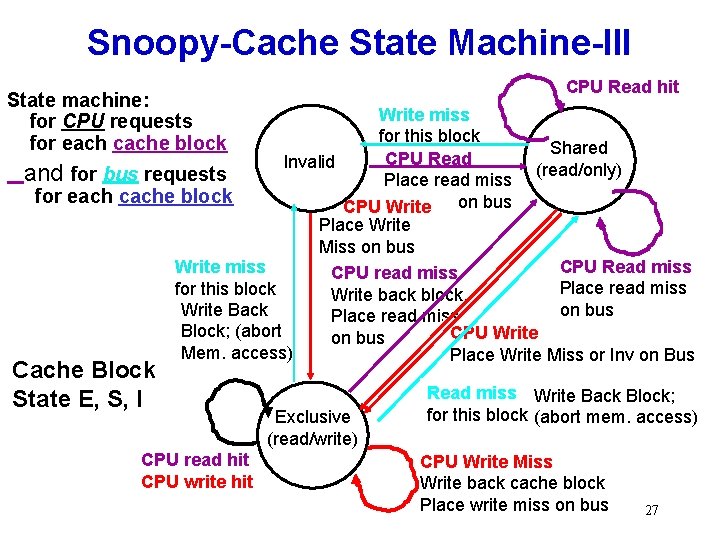

Snoopy-Cache State Machine-III

[Figure: combined state diagram for each cache block, merging the CPU-request transitions of State Machine-I with the bus-request transitions of State Machine-II. Cache block states: Exclusive (read/write), Shared (read only), Invalid.]
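The combined state machine can be sketched in code. This is a simplified model under stated assumptions, not the slides' implementation: each cache has a single frame (so any two distinct addresses conflict, like A1 and A2 in the example that follows), unwritten memory reads as 0, and a dirty block is supplied by writing it back to memory before the requester reads it. The class names and the five-step access trace are hypothetical.

```python
INVALID, SHARED, EXCLUSIVE = "I", "S", "E"


class Bus:
    def __init__(self):
        self.memory = {}
        self.caches = []
        self.log = []                          # bus activity, in order

    def broadcast(self, kind, addr, source):
        self.log.append((kind, addr))
        for cache in self.caches:
            if cache is not source:
                cache.snoop(kind, addr)


class Cache:
    """One-frame write-back cache with the three states I, S, E."""

    def __init__(self, bus):
        self.bus = bus
        self.state, self.addr, self.value = INVALID, None, None
        bus.caches.append(self)

    def _hit(self, addr):
        return self.state != INVALID and self.addr == addr

    def _write_back(self):
        self.bus.memory[self.addr] = self.value
        self.bus.log.append(("write back", self.addr))

    def cpu_read(self, addr):
        if not self._hit(addr):
            if self.state == EXCLUSIVE:        # replacing a dirty block
                self._write_back()
            self.bus.broadcast("read miss", addr, self)
            self.state, self.addr = SHARED, addr
            self.value = self.bus.memory.get(addr, 0)   # assumed default 0
        return self.value

    def cpu_write(self, addr, value):
        if self._hit(addr):
            if self.state == SHARED:           # write hit on a shared block
                self.bus.broadcast("invalidate", addr, self)
        else:
            if self.state == EXCLUSIVE:        # replacing a dirty block
                self._write_back()
            self.bus.broadcast("write miss", addr, self)
        self.state, self.addr, self.value = EXCLUSIVE, addr, value

    def snoop(self, kind, addr):
        if not self._hit(addr):
            return
        if self.state == EXCLUSIVE:            # supply the dirty block
            self._write_back()
        self.state = SHARED if kind == "read miss" else INVALID


bus = Bus()
p1, p2 = Cache(bus), Cache(bus)
p1.cpu_write("A1", 10)      # P1: Invalid -> Exclusive
p1.cpu_read("A1")           # P1: read hit
p2.cpu_read("A1")           # P1: Exclusive -> Shared (write back); P2: Shared
p2.cpu_write("A1", 20)      # P2: Shared -> Exclusive; P1: Shared -> Invalid
p2.cpu_write("A2", 40)      # P2 replaces A1: write back A1, then write miss A2
print(p1.state, p2.state, bus.memory, bus.log)
```

At the end of this assumed trace, P1 is Invalid, P2 holds A2 in Exclusive, and memory holds the last written-back value of A1.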

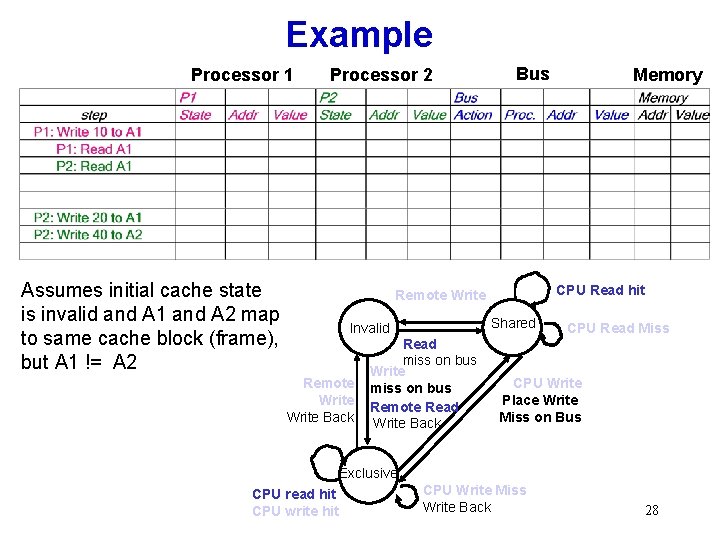

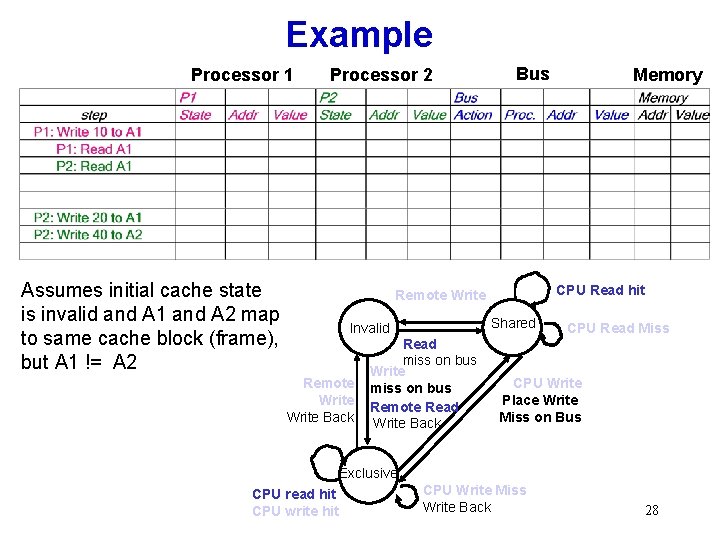

Example

Assumes the initial cache state is Invalid and that A1 and A2 map to the same cache block (frame), but A1 != A2.

[Figure: trace table with columns Processor 1, Processor 2, Bus, and Memory, next to the snoopy-cache state diagram (Invalid, Shared, Exclusive).]

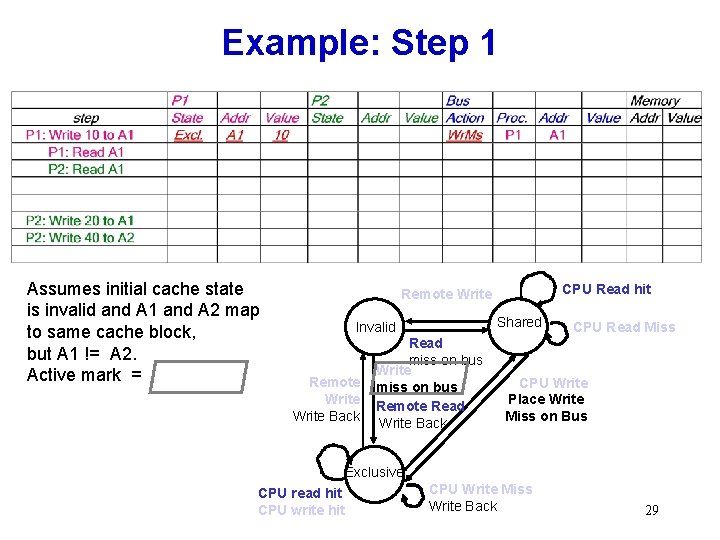

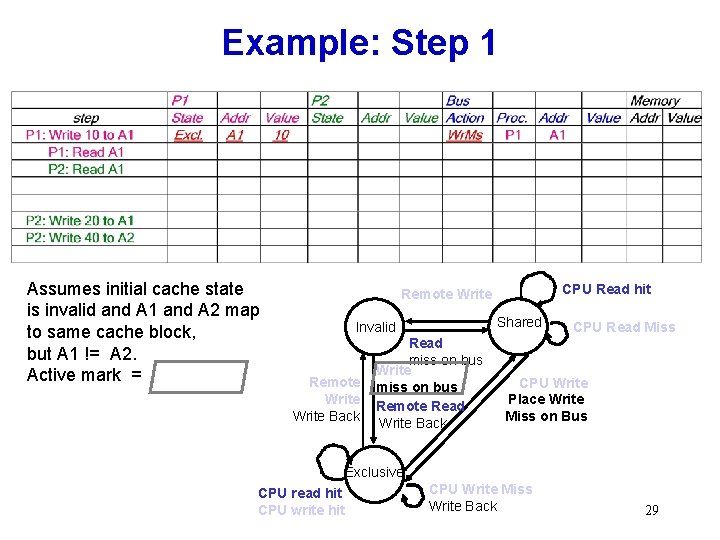

Example: Step 1

Assumes the initial cache state is Invalid and that A1 and A2 map to the same cache block, but A1 != A2.

[Figure: trace table after step 1, next to the snoopy-cache state diagram (Invalid, Shared, Exclusive) with the active transition marked.]

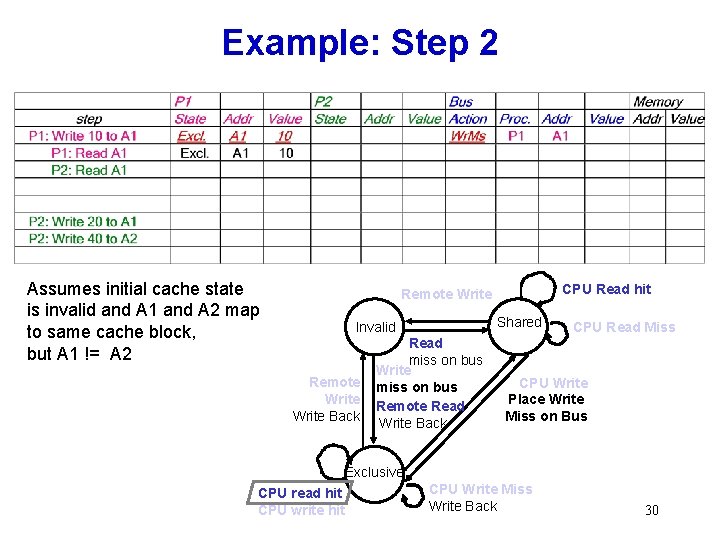

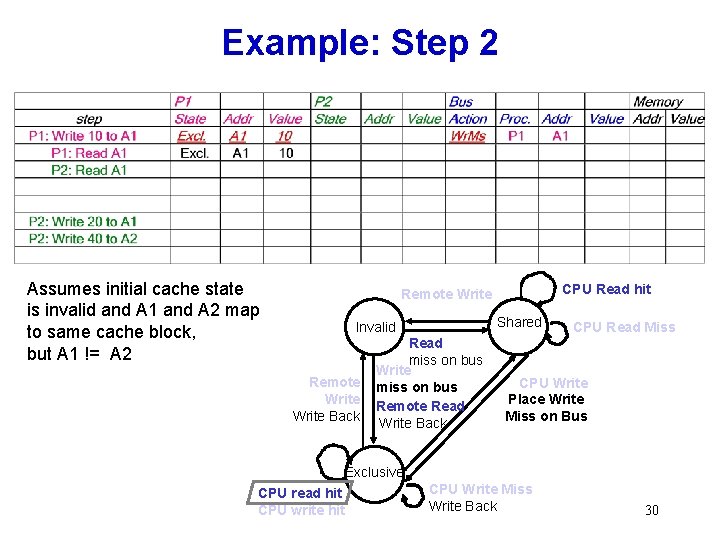

Example: Step 2

Assumes the initial cache state is Invalid and that A1 and A2 map to the same cache block, but A1 != A2.

[Figure: trace table after step 2, next to the snoopy-cache state diagram (Invalid, Shared, Exclusive).]

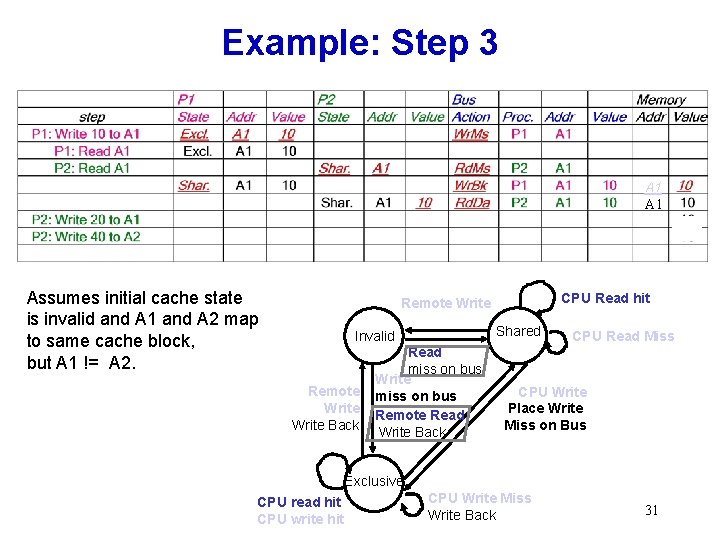

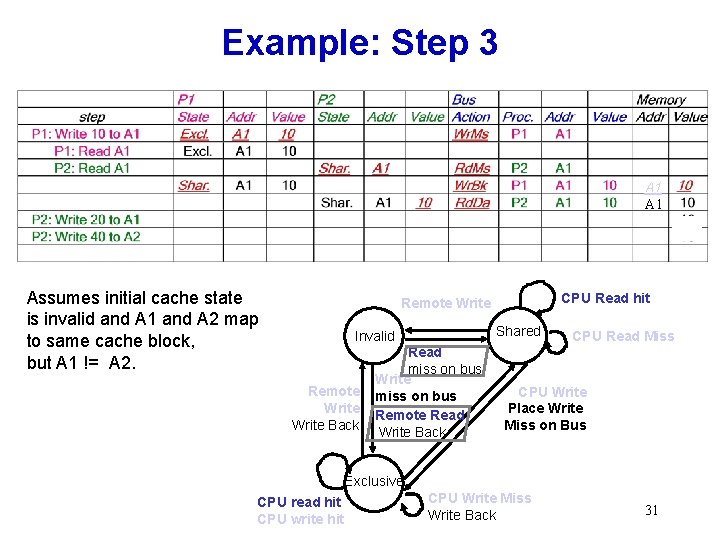

Example: Step 3

Assumes the initial cache state is Invalid and that A1 and A2 map to the same cache block, but A1 != A2.

[Figure: trace table after step 3, next to the snoopy-cache state diagram (Invalid, Shared, Exclusive).]

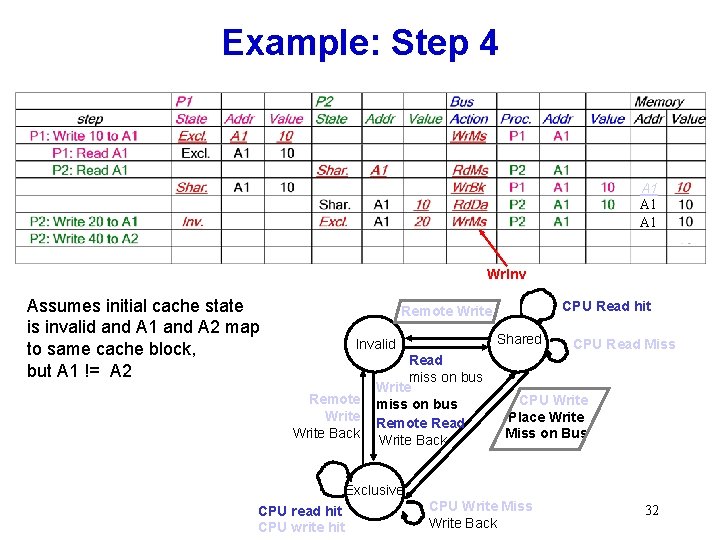

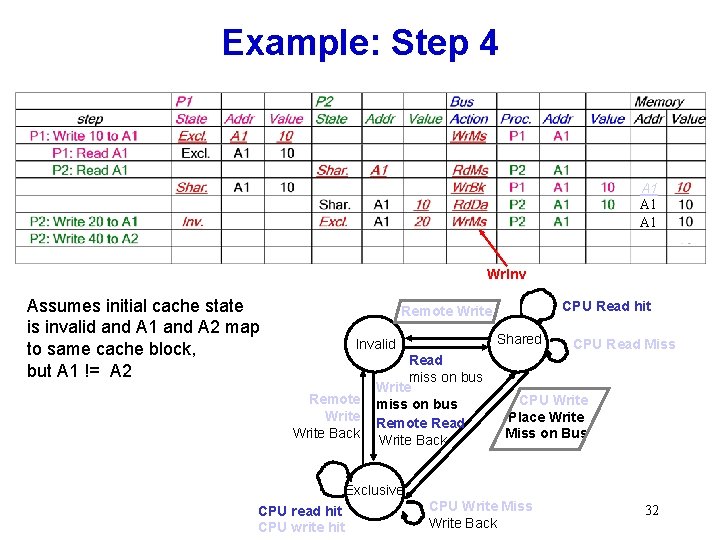

Example: Step 4

Assumes the initial cache state is Invalid and that A1 and A2 map to the same cache block, but A1 != A2.

[Figure: trace table after step 4, next to the snoopy-cache state diagram (Invalid, Shared, Exclusive).]

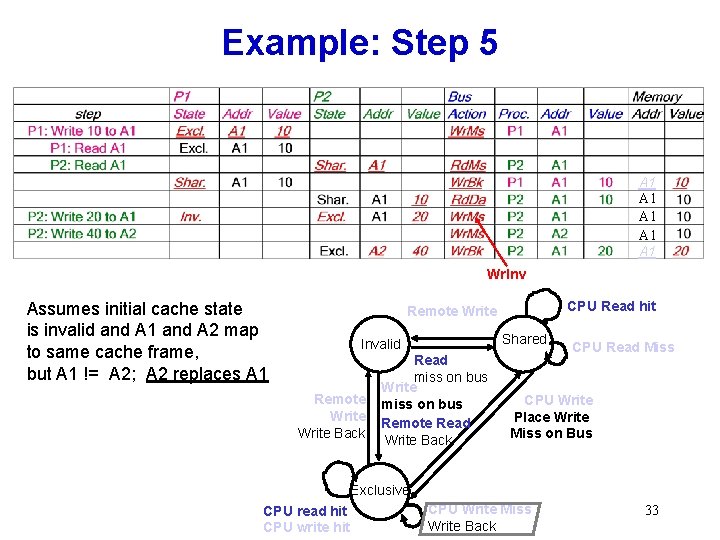

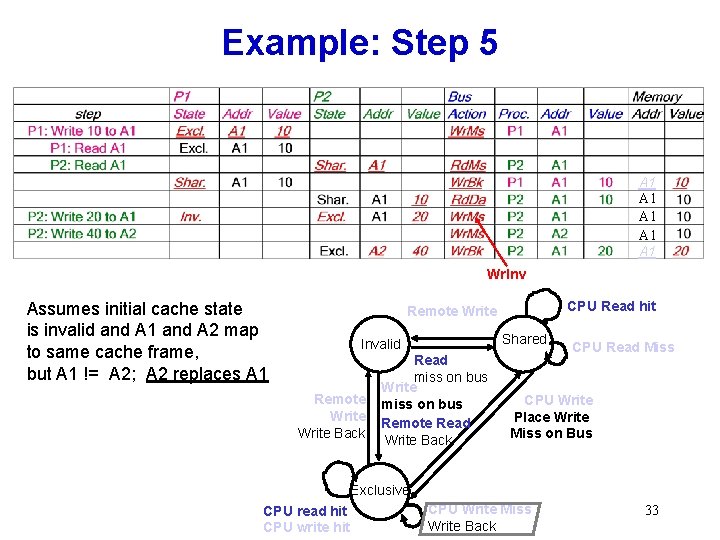

Example: Step 5

Assumes the initial cache state is Invalid and that A1 and A2 map to the same cache frame, but A1 != A2; A2 replaces A1.

[Figure: trace table after step 5, next to the snoopy-cache state diagram (Invalid, Shared, Exclusive).]

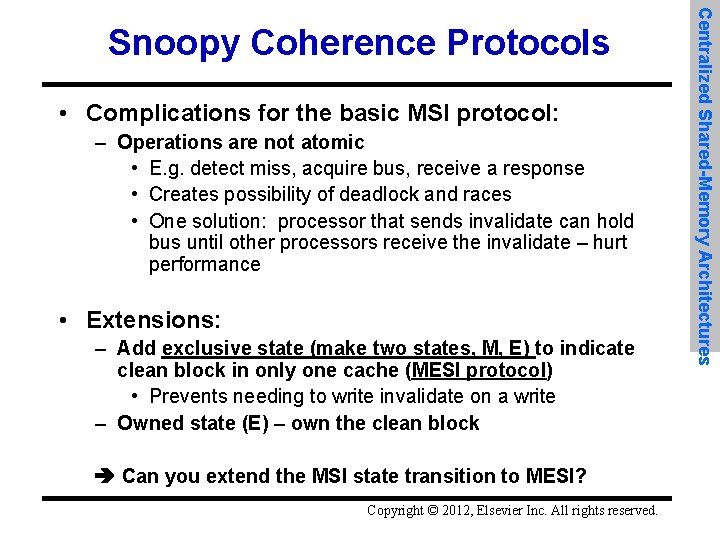

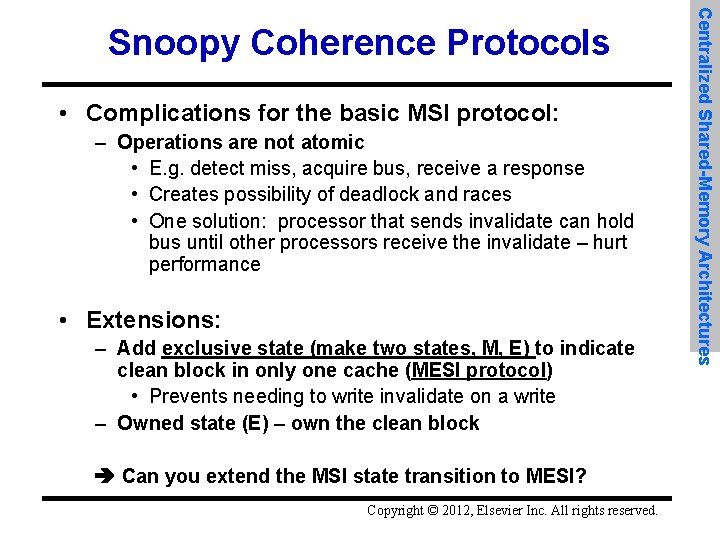

Centralized Shared-Memory Architectures: Snoopy Coherence Protocols

- Complications for the basic MSI protocol:
  - Operations are not atomic
    - E.g., detect miss, acquire bus, receive a response
    - Creates the possibility of deadlock and races
    - One solution: the processor that sends an invalidate can hold the bus until the other processors receive the invalidate, which hurts performance
- Extensions:
  - Add an Exclusive state (splitting the old exclusive state into two states, M and E) to indicate a clean block in only one cache (MESI protocol)
    - Avoids sending an invalidate on a write to such a block
  - Add an Owned state (O): the owning cache holds a block that other caches may share and that is out of date in memory (MOESI protocol)

Can you extend the MSI state transition to MESI?
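One way to see what the extra clean-exclusive state buys is to count bus messages for a block that only one processor touches. The sketch below is an illustration under stated assumptions, not a full protocol: it uses the M/E/S/I state names (M is the dirty state that the three-state slides call Exclusive), and the function names are hypothetical.

```python
def read_miss_fill_state(protocol, other_sharers):
    """State in which a block is loaded after a read miss."""
    if protocol == "MESI" and not other_sharers:
        return "E"                  # clean, and the only cached copy
    return "S"                      # MSI has no clean-exclusive state


def write_hit(state):
    """Return (next_state, bus_message) for a CPU write hit."""
    if state in ("M", "E"):
        return "M", None            # no other copy exists: silent upgrade
    if state == "S":
        return "M", "invalidate"    # other copies may exist: tell the bus
    raise ValueError("a write hit cannot occur in state I")


def bus_messages_for_private_read_then_write(protocol):
    """Bus messages when one processor reads, then writes, an unshared block."""
    state = read_miss_fill_state(protocol, other_sharers=False)
    _, message = write_hit(state)
    return ["read miss"] + ([message] if message else [])


for protocol in ("MSI", "MESI"):
    print(protocol, bus_messages_for_private_read_then_write(protocol))
```

For private data, the MESI variant needs only the initial read miss, while the MSI variant also places an invalidate on the bus even though no other cache holds the block.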

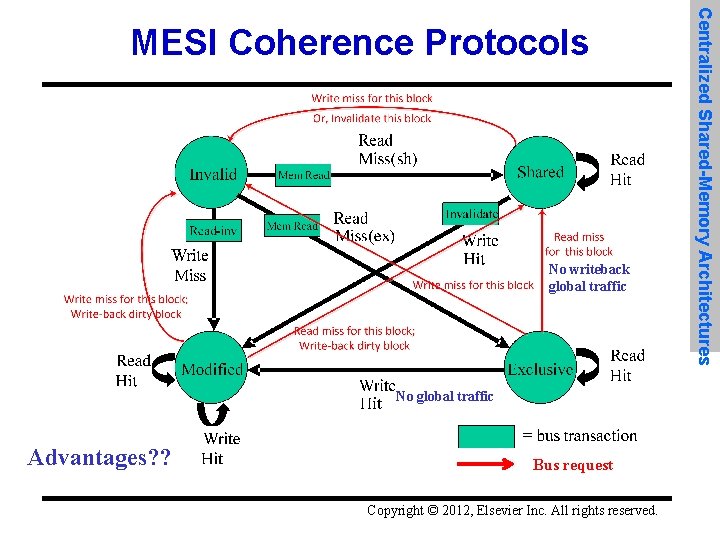

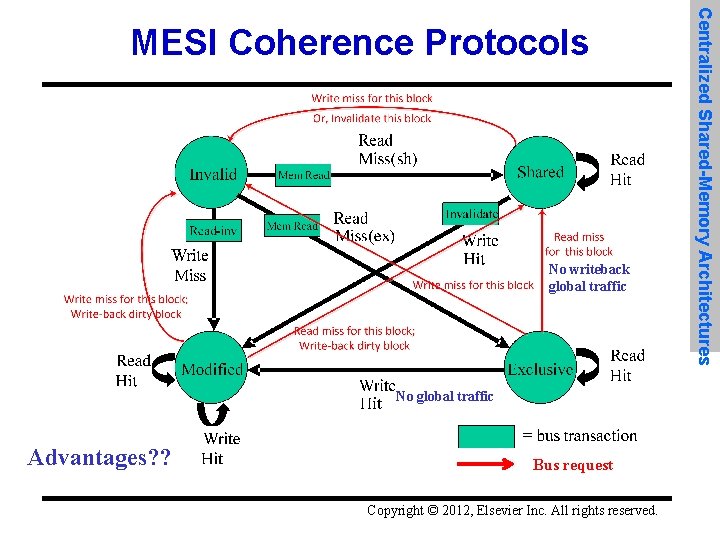

Centralized Shared-Memory Architectures: MESI Coherence Protocols

[Figure: MESI state diagram with the annotations "No writeback", "global traffic", "No global traffic", "Bus request", and "Advantages??".]

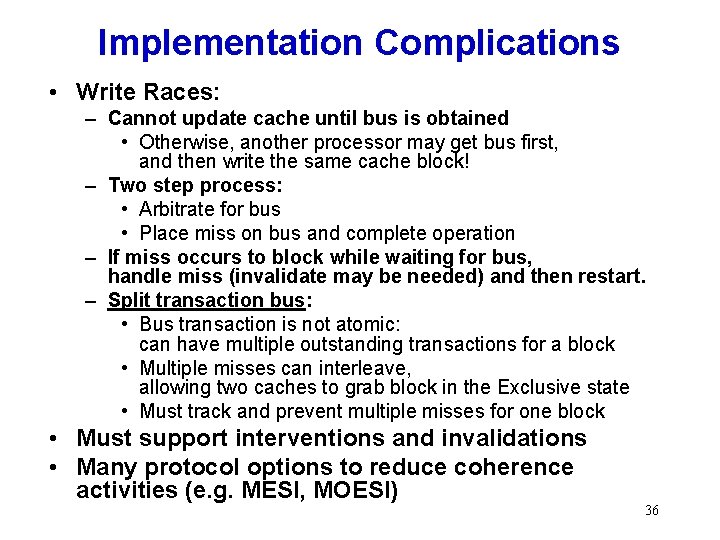

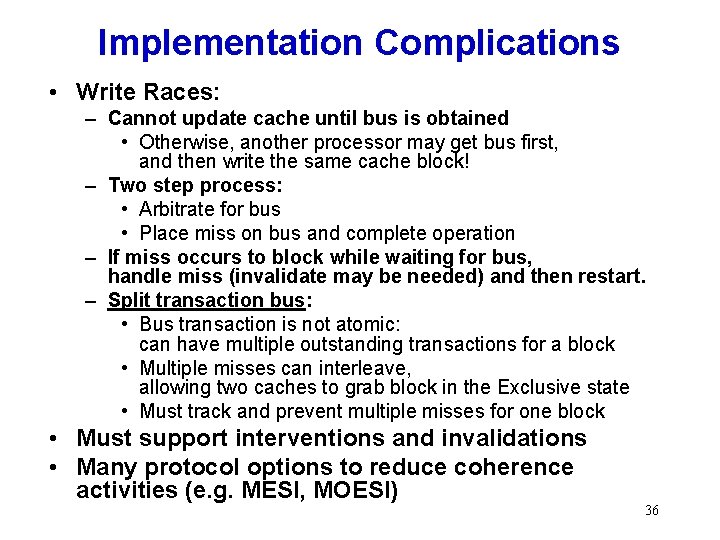

Implementation Complications

- Write races:
  - Cannot update the cache until the bus is obtained
    - Otherwise, another processor may get the bus first and then write the same cache block!
  - Two-step process:
    - Arbitrate for the bus
    - Place the miss on the bus and complete the operation
  - If a miss occurs to the block while waiting for the bus, handle the miss (an invalidate may be needed) and then restart
  - Split-transaction bus:
    - A bus transaction is not atomic: there can be multiple outstanding transactions for a block
    - Multiple misses can interleave, allowing two caches to grab the block in the Exclusive state
    - Must track and prevent multiple misses for one block
- Must support interventions and invalidations
- Many protocol options to reduce coherence activities (e.g., MESI, MOESI)

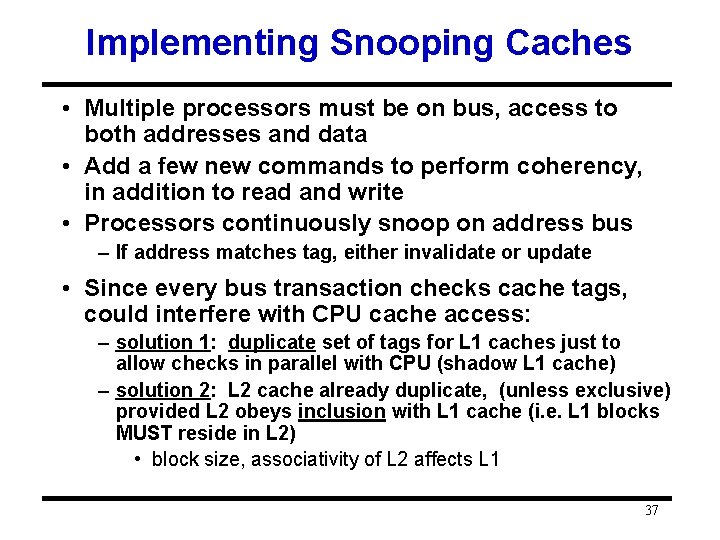

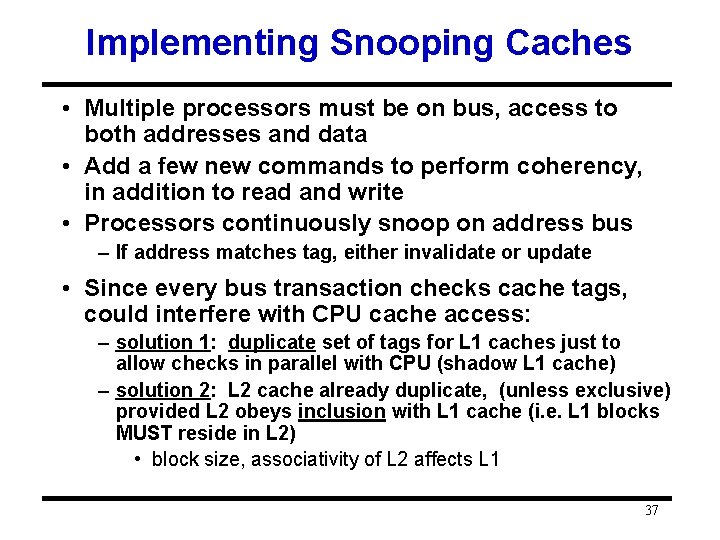

Implementing Snooping Caches

- Multiple processors must be on the bus, with access to both addresses and data
- Add a few new commands to perform coherency, in addition to read and write
- Processors continuously snoop on the address bus
  - If an address matches a tag, either invalidate or update
- Since every bus transaction checks the cache tags, snooping could interfere with CPU cache access:
  - Solution 1: duplicate the set of tags for the L1 caches, just to allow checks in parallel with the CPU (shadow L1 cache)
  - Solution 2: the L2 cache already acts as a duplicate (unless it is exclusive), provided L2 obeys inclusion with the L1 cache (i.e., L1 blocks MUST reside in L2)
    - Block size and associativity of L2 affect L1

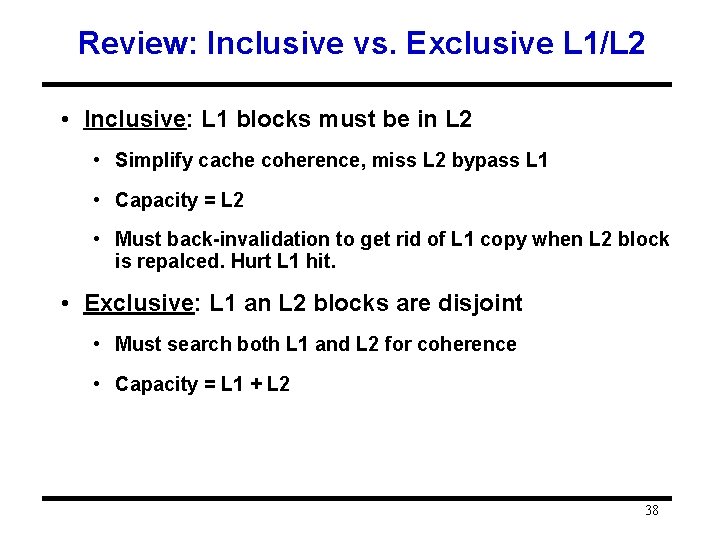

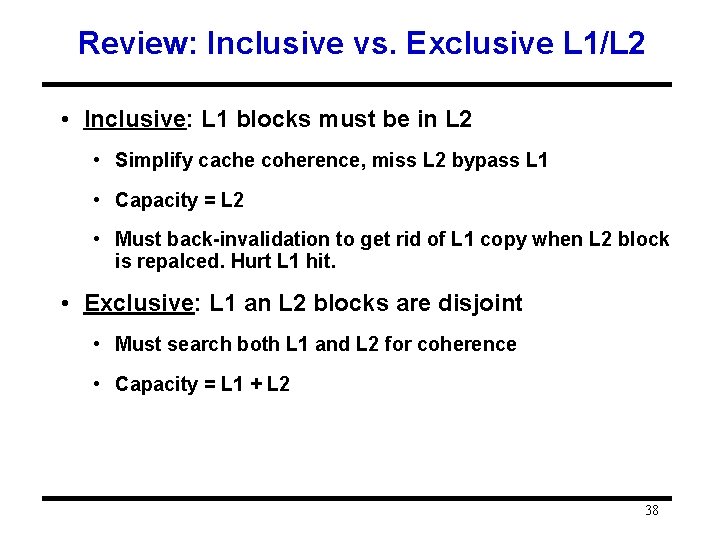

Review: Inclusive vs. Exclusive L1/L2

- Inclusive: L1 blocks must also be in L2
  - Simplifies cache coherence: a snoop that misses in L2 can bypass L1
  - Capacity = L2
  - Must back-invalidate to remove the L1 copy when an L2 block is replaced, which hurts the L1 hit rate
- Exclusive: L1 and L2 blocks are disjoint
  - Must search both L1 and L2 for coherence
  - Capacity = L1 + L2