Chapter 5 Memory CSE 820 Michigan State University

- Slides: 16

Chapter 5 Memory CSE 820 Michigan State University Computer Science and Engineering

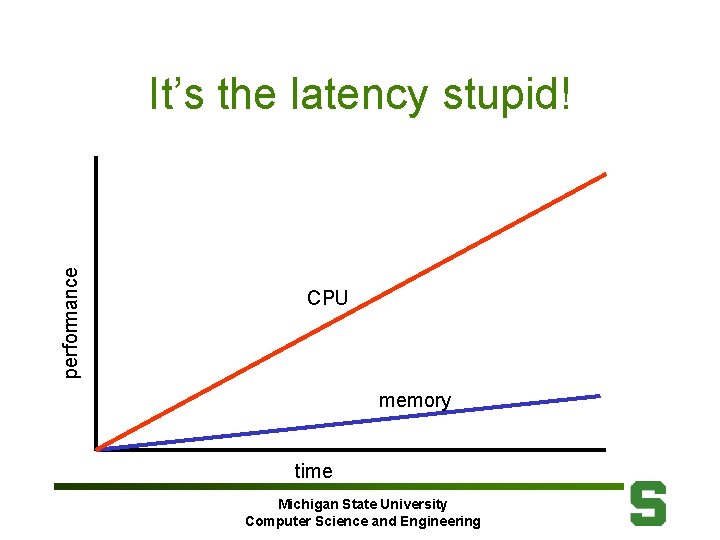

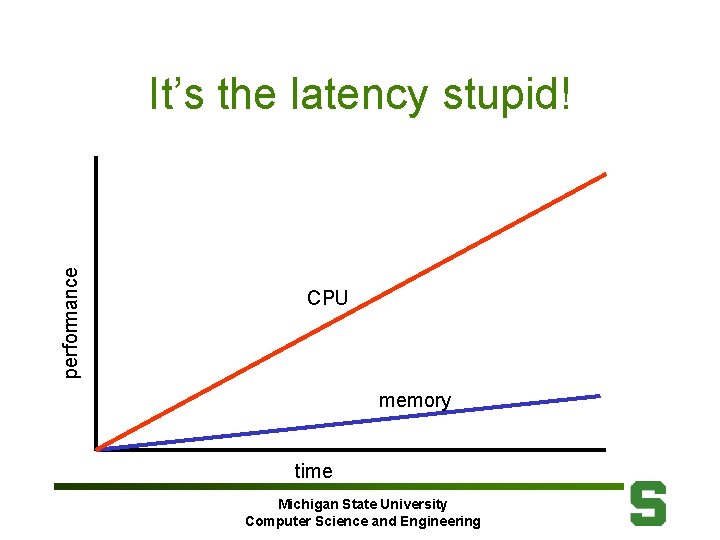

It's the latency, stupid!

[Figure: performance plotted against time, with one curve for CPU and one for memory]

Vocabulary

- Cache
- Virtual memory
- Memory stall cycles
- Direct mapped
- Valid bit
- Block address
- Write through

Vocabulary

- Instruction cache
- Average memory access time
- Cache hit
- Page
- Miss penalty
- Fully associative
- Dirty bit

Vocabulary

- Block offset
- Write back
- Data cache
- Hit time
- Cache miss
- Page fault
- Miss rate

Vocabulary

- N-way set associative
- Least-recently used
- Tag field
- Write allocate
- Unified cache
- Misses per instruction
- Block

Vocabulary

- Locality
- Address trace
- Set
- Random replacement
- Index field
- No-write allocate
- Write buffer
- Write stall

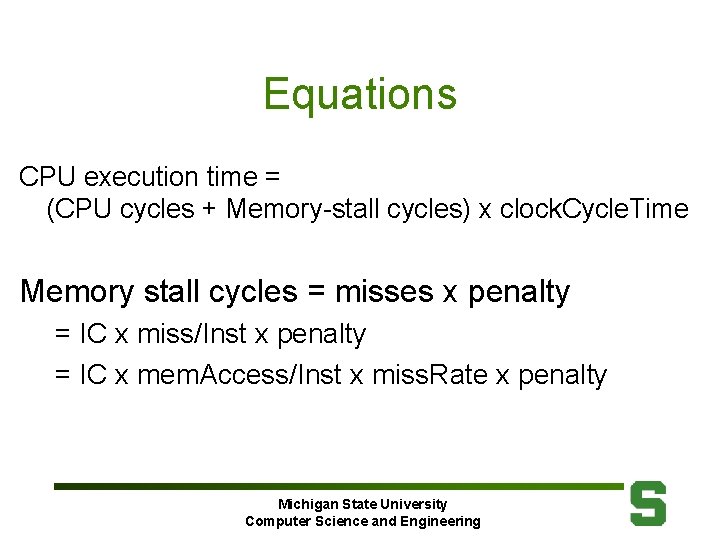

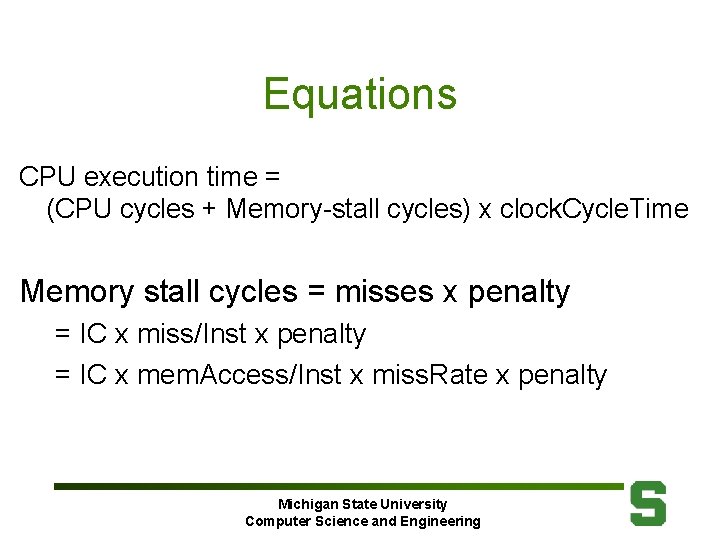

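Equations

$$\text{CPU execution time} = (\text{CPU cycles} + \text{Memory stall cycles}) \times \text{Clock cycle time}$$

$$
\begin{aligned}
\text{Memory stall cycles} &= \text{Misses} \times \text{Miss penalty} \\
&= IC \times \frac{\text{Misses}}{\text{Instruction}} \times \text{Miss penalty} \\
&= IC \times \frac{\text{Memory accesses}}{\text{Instruction}} \times \text{Miss rate} \times \text{Miss penalty}
\end{aligned}
$$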
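As a worked illustration of the two equations above, the sketch below plugs in a made-up workload. Every number and every function name here is hypothetical, chosen only so the arithmetic is easy to follow; none of it comes from the slides.

```python
def memory_stall_cycles(instruction_count, accesses_per_inst, miss_rate, miss_penalty):
    """Memory stall cycles = IC x (memory accesses / instruction) x miss rate x miss penalty."""
    return instruction_count * accesses_per_inst * miss_rate * miss_penalty


def cpu_execution_time(cpu_cycles, stall_cycles, clock_cycle_time):
    """CPU execution time = (CPU cycles + memory stall cycles) x clock cycle time."""
    return (cpu_cycles + stall_cycles) * clock_cycle_time


# Hypothetical workload (assumed values, not measurements).
IC = 1_000_000            # instructions executed
BASE_CPI = 1.0            # cycles per instruction with a perfect cache
ACCESSES_PER_INST = 1.5   # 1 instruction fetch + 0.5 data accesses
MISS_RATE = 0.02          # 2% of memory accesses miss
MISS_PENALTY = 100        # stall cycles per miss
CYCLE_TIME_NS = 1.0       # 1 GHz clock

stalls = memory_stall_cycles(IC, ACCESSES_PER_INST, MISS_RATE, MISS_PENALTY)
time_ns = cpu_execution_time(IC * BASE_CPI, stalls, CYCLE_TIME_NS)

print(f"stall cycles:   {stalls:.0f}")
print(f"execution time: {time_ns / 1e6:.1f} ms")
```

With these assumed values the stall cycles are three times the base CPU cycles, so memory dominates the execution time even at a 2% miss rate.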

Hierarchy Questions

1. Block Placement: Where can a block be placed?
2. Block Identification: How is a block found?
3. Block Replacement: Which block should be replaced on a miss?
4. Write Strategy: What happens on a write?

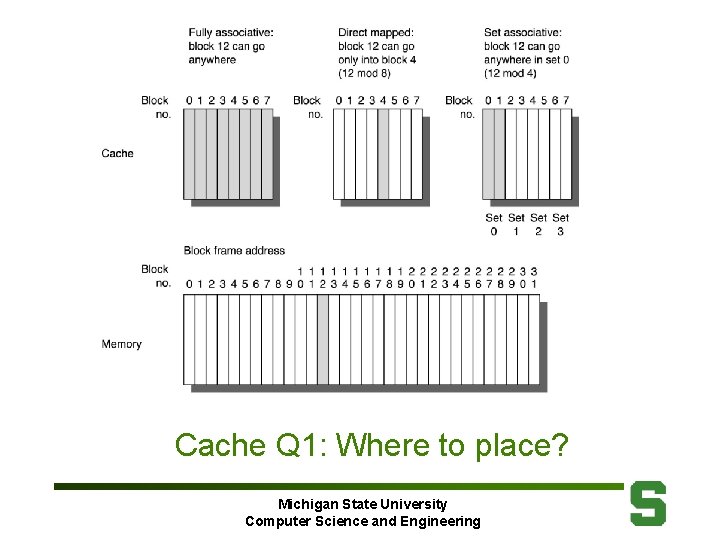

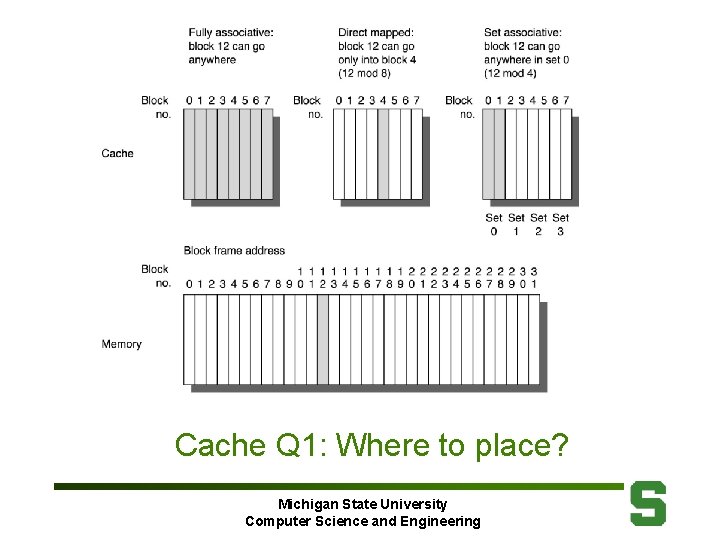

Cache Q1: Where to place?

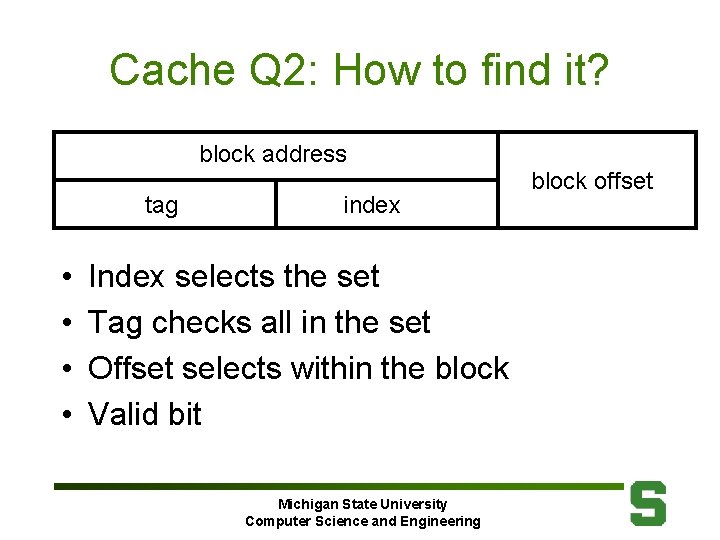

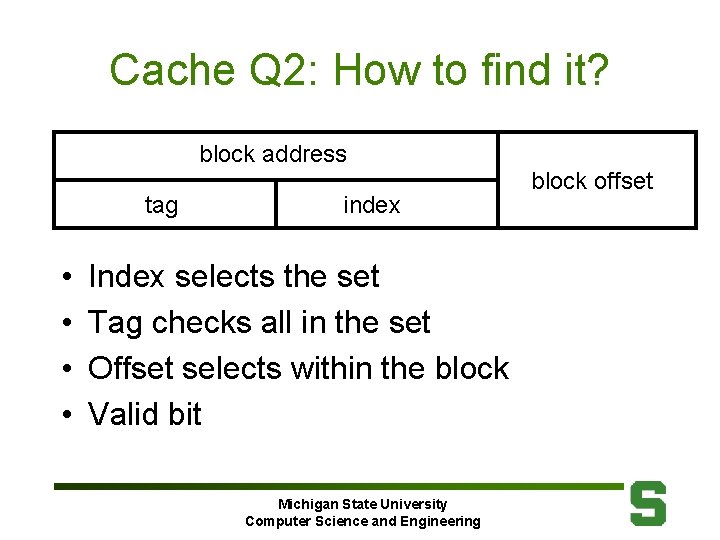

Cache Q2: How to find it?

Address = block address (tag, index) followed by the block offset.

- Index selects the set
- Tag is compared against every block in the set
- Offset selects the data within the block
- Valid bit: a tag match counts as a hit only if the entry is valid
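The lookup steps above can be sketched as follows. This is a minimal sketch, assuming the block size and the number of sets are powers of two; `split_address`, `lookup`, and the cache layout (a list of sets, each a list of `(valid, tag)` pairs) are hypothetical names introduced only for illustration.

```python
def split_address(addr, block_size, num_sets):
    """Split a byte address into (tag, index, offset).

    Assumes block_size and num_sets are powers of two.
    """
    offset_bits = block_size.bit_length() - 1
    index_bits = num_sets.bit_length() - 1
    offset = addr & (block_size - 1)                  # low bits: byte within the block
    index = (addr >> offset_bits) & (num_sets - 1)    # middle bits: which set
    tag = addr >> (offset_bits + index_bits)          # remaining high bits
    return tag, index, offset


def lookup(cache, addr, block_size, num_sets):
    """Return True on a hit.

    `cache` is a list of sets; each set is a list of (valid, tag) pairs,
    one pair per way.
    """
    tag, index, _ = split_address(addr, block_size, num_sets)
    # The index picks one set; the tag is checked against every way in it.
    return any(valid and stored_tag == tag for valid, stored_tag in cache[index])


# Hypothetical geometry: 64-byte blocks, 128 sets, 2 ways.
BLOCK_SIZE, NUM_SETS, WAYS = 64, 128, 2
cache = [[(False, 0)] * WAYS for _ in range(NUM_SETS)]

tag, index, offset = split_address(0x12345, BLOCK_SIZE, NUM_SETS)
cache[index][1] = (True, tag)          # pretend the block was loaded into way 1

print(tag, index, offset)
print(lookup(cache, 0x12345, BLOCK_SIZE, NUM_SETS))
```

A direct-mapped cache is the special case of one way per set, and a fully associative cache is the special case of a single set, in which the index field is empty.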

Cache Q3: Replacement?

- Random
  - Simplest
- FIFO
- LRU
  - Approximation
  - Outperforms others for large caches
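To make the three policies concrete, here is a minimal sketch that counts misses for a single cache set under each one. It models exact LRU, whereas the slide notes that real hardware uses an approximation. The function name, the trace, and the seed are all hypothetical.

```python
import random
from collections import OrderedDict


def count_misses(trace, num_ways, policy, seed=0):
    """Count misses for one cache set holding `num_ways` blocks.

    `trace` is a sequence of block tags; `policy` is "lru", "fifo", or "random".
    """
    rng = random.Random(seed)
    resident = OrderedDict()    # tag -> None, ordered oldest first
    misses = 0
    for tag in trace:
        if tag in resident:
            if policy == "lru":
                resident.move_to_end(tag)    # only LRU refreshes order on a hit
            continue
        misses += 1
        if len(resident) == num_ways:
            if policy == "random":
                victim = rng.choice(list(resident))
            else:
                victim = next(iter(resident))    # front of the order: LRU or FIFO victim
            del resident[victim]
        resident[tag] = None
    return misses


# Hypothetical trace that keeps returning to block 1.
trace = [1, 2, 3, 1, 4, 1, 5, 1]
for policy in ("lru", "fifo", "random"):
    print(policy, count_misses(trace, num_ways=3, policy=policy))
```

On this trace FIFO evicts block 1 even though it was just reused, while LRU keeps it, which is the kind of behavior that locality rewards.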

Cache Q4: Write?

Reads are most common and easiest.

- Write through
- Write back
  - On replacement
  - Dirty bit
- Write allocate
- No-write allocate
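The write policies above can be compared with a small simulation. This is a sketch under stated assumptions: a direct-mapped cache, a hypothetical `memory_writes` helper, and a made-up trace. It counts only writes sent to the next level and ignores dirty blocks still resident when the trace ends.

```python
def memory_writes(trace, num_blocks, write_back, write_allocate=True):
    """Count writes to the next memory level for a direct-mapped cache.

    `trace` is a list of ("r" or "w", block_address) pairs.
    """
    lines = [None] * num_blocks        # each entry: None or [block_address, dirty]
    writes = 0
    for op, block in trace:
        slot = block % num_blocks
        line = lines[slot]
        hit = line is not None and line[0] == block
        if op == "w" and not write_back:
            writes += 1                # write through: every store also goes to memory
        if not hit:
            if op == "w" and not write_allocate:
                if write_back:
                    writes += 1        # no-write allocate: the store bypasses the cache
                continue
            if line is not None and line[1]:
                writes += 1            # write back: dirty victim written on replacement
            line = lines[slot] = [block, False]
        if op == "w" and write_back:
            line[1] = True             # set the dirty bit instead of writing memory
    return writes


# Hypothetical trace: three stores to block 0, a conflicting store, then a read.
trace = [("w", 0), ("w", 0), ("w", 0), ("w", 4), ("r", 0)]
print("write through:", memory_writes(trace, num_blocks=4, write_back=False))
print("write back:   ", memory_writes(trace, num_blocks=4, write_back=True))
```

Repeated stores to the same block are where write back saves traffic: the block is written to memory once, on replacement, rather than on every store.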

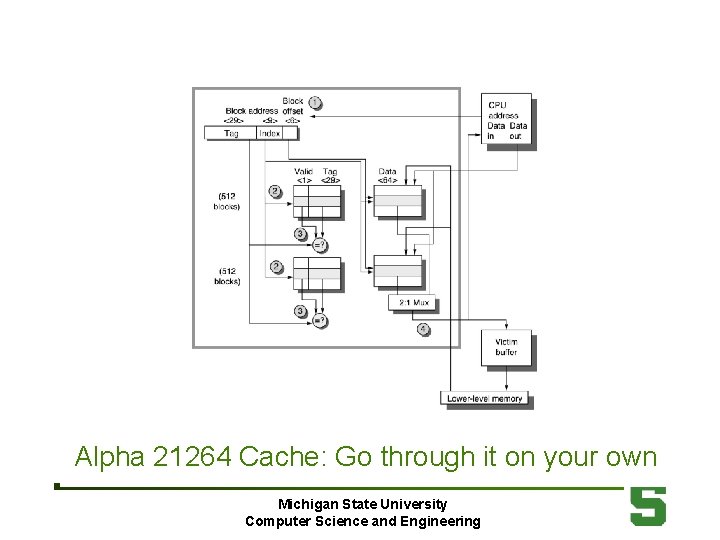

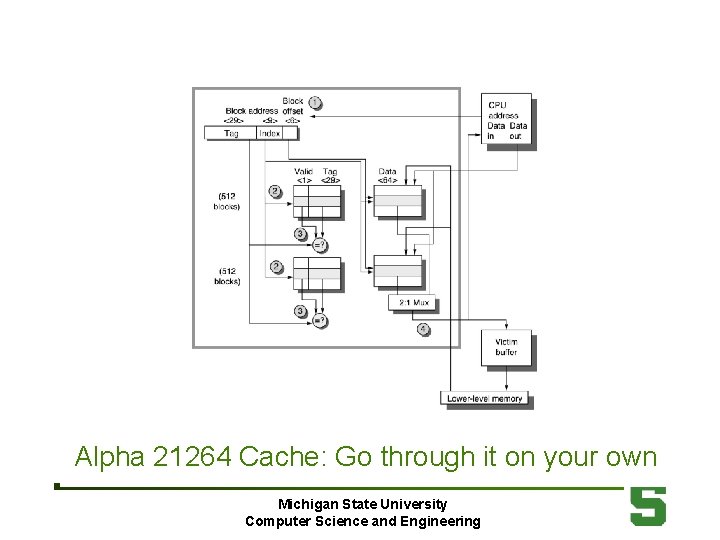

Alpha 21264 Cache: Go through it on your own

Equations

- Fig. 5.9 has 12 memory performance equations
- Most are variations on:

$$\text{CPU time} = IC \times CPI \times \text{Clock cycle time}$$

$$\text{Average memory access time} = \text{Hit time} + \text{Miss rate} \times \text{Miss penalty}$$
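A short sketch of the average memory access time equation above, comparing two made-up cache designs. The function name `amat` and all parameter values are assumptions for illustration only.

```python
def amat(hit_time, miss_rate, miss_penalty):
    """Average memory access time = hit time + miss rate x miss penalty."""
    return hit_time + miss_rate * miss_penalty


# Hypothetical designs, times in clock cycles.
small_fast = amat(hit_time=1, miss_rate=0.05, miss_penalty=100)
large_slow = amat(hit_time=2, miss_rate=0.02, miss_penalty=100)

print(f"small, fast cache:   {small_fast:.1f} cycles")
print(f"larger, slower cache: {large_slow:.1f} cycles")
```

Under these assumed numbers the larger cache wins despite its slower hit time, because the miss term dominates; with a smaller miss penalty the ranking could reverse.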

17 Cache Optimizations

$$\text{Average memory access time} = \text{Hit time} + \text{Miss rate} \times \text{Miss penalty}$$

- Reduce miss penalty: multilevel caches, critical word first, read-before-write, merging writes, victim cache
- Reduce miss rate: larger blocks, larger caches, higher associativity, way prediction, compiler optimizations
- Reduce miss rate and penalty with parallelism: non-blocking caches, hardware prefetch, software prefetch
- Reduce hit time: small cache size, avoiding address translation, pipelined access, trace cache
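To see why a multilevel cache reduces the miss penalty, the average memory access time equation can be applied recursively: the L1 miss penalty is itself the access time of L2. The subscripts and the numbers below are assumptions for illustration, and the L2 miss rate here is the local one (L2 misses divided by L2 accesses).

$$
\begin{aligned}
\text{Miss penalty}_{L1} &= \text{Hit time}_{L2} + \text{Miss rate}_{L2} \times \text{Miss penalty}_{L2} \\
\text{Average memory access time} &= \text{Hit time}_{L1} + \text{Miss rate}_{L1} \times \left(\text{Hit time}_{L2} + \text{Miss rate}_{L2} \times \text{Miss penalty}_{L2}\right)
\end{aligned}
$$

For example, with hypothetical values of a 1-cycle L1 hit, a 5% L1 miss rate, a 10-cycle L2 hit, a 20% local L2 miss rate, and a 100-cycle L2 miss penalty:

$$1 + 0.05 \times (10 + 0.20 \times 100) = 1 + 0.05 \times 30 = 2.5 \text{ cycles}$$

Without the L2 cache, the same L1 would see $1 + 0.05 \times 100 = 6$ cycles.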