Chapter 5 Large and Fast: Exploiting Memory Hierarchy

- Slides: 32


The Hamming Single Error Correcting, Double Error Detecting Code (SEC/DED)
- Hamming distance
  - Number of bits that are different between two bit patterns, calculated using exclusive OR
- Minimum distance = 2 provides single-bit error detection
  - E.g., parity code
- Minimum distance = 3 provides single-error correction or 2-bit error detection (not both at once)
- To calculate how many bits are needed for SEC:
  - Let p be the total number of parity bits and d the number of data bits in a (p + d)-bit word.
  - The p error correction bits must be able to point to the erroneous bit (p + d cases), plus one case to indicate that no error exists, so we need $2^p \ge p + d + 1$
  - E.g., for 8 data bits (d = 8), p = 4, since $2^4 = 16 \ge 4 + 8 + 1 = 13$ while $2^3 = 8 < 12$

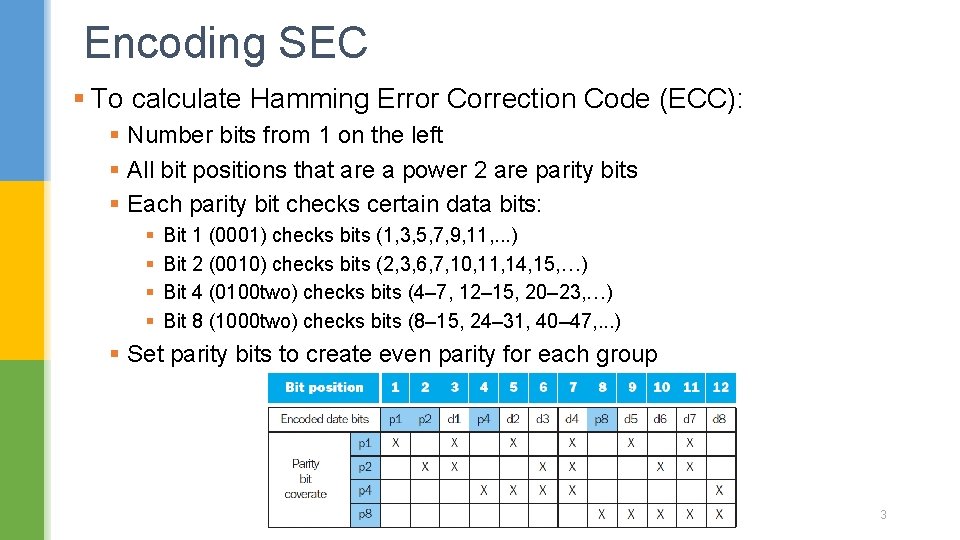

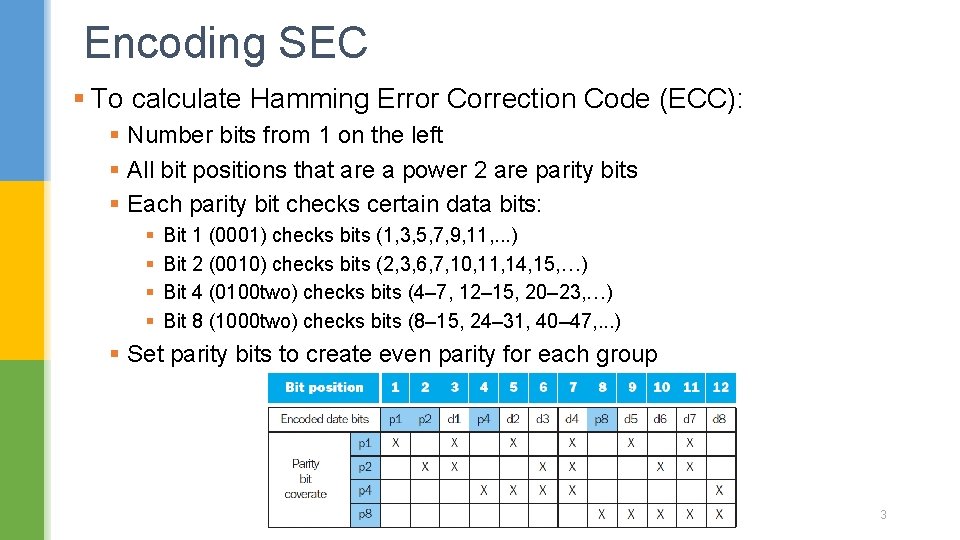
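The Hamming distance and minimum-distance definitions above can be checked directly. The sketch below is illustrative only: the helper names `hamming_distance` and `min_distance` are hypothetical, and it builds a small even-parity code over 3 data bits to confirm that a parity code has minimum distance 2.

```python
from itertools import combinations

def hamming_distance(a: int, b: int) -> int:
    """Number of bit positions in which a and b differ (count the 1s in a XOR b)."""
    return bin(a ^ b).count("1")

def min_distance(code_words):
    """Smallest Hamming distance between any two distinct code words."""
    return min(hamming_distance(a, b) for a, b in combinations(code_words, 2))

# Even-parity code for 3 data bits: append one bit so every 4-bit word has an even number of 1s.
parity_code = [(d << 1) | (bin(d).count("1") % 2) for d in range(8)]

print(hamming_distance(0b10011010, 0b10011110))  # 1
print(min_distance(parity_code))                 # 2
```

Because every pair of valid parity words differs in at least 2 bits, any single flipped bit produces an invalid word (detection), but the code cannot tell which bit flipped (no correction).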

Encoding SEC
- To calculate the Hamming error correction code (ECC):
  - Number bits from 1 on the left
  - All bit positions that are a power of 2 are parity bits
  - Each parity bit checks certain data bits:
    - Bit 1 ($0001_{two}$) checks bits 1, 3, 5, 7, 9, 11, …
    - Bit 2 ($0010_{two}$) checks bits 2, 3, 6, 7, 10, 11, 14, 15, …
    - Bit 4 ($0100_{two}$) checks bits 4–7, 12–15, 20–23, …
    - Bit 8 ($1000_{two}$) checks bits 8–15, 24–31, 40–47, …
    - In general, the parity bit at position $2^k$ checks every bit position whose binary number has bit k set
  - Set parity bits to create even parity for each group

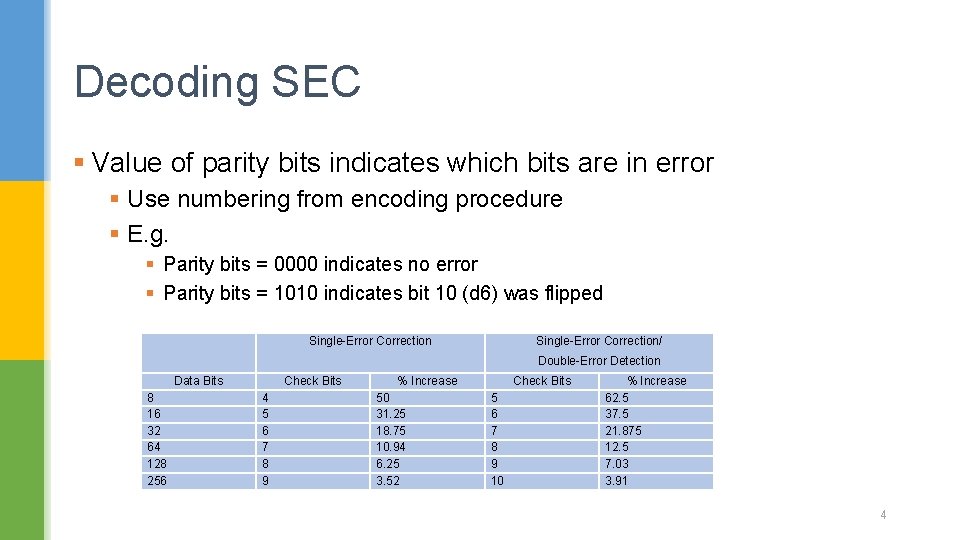

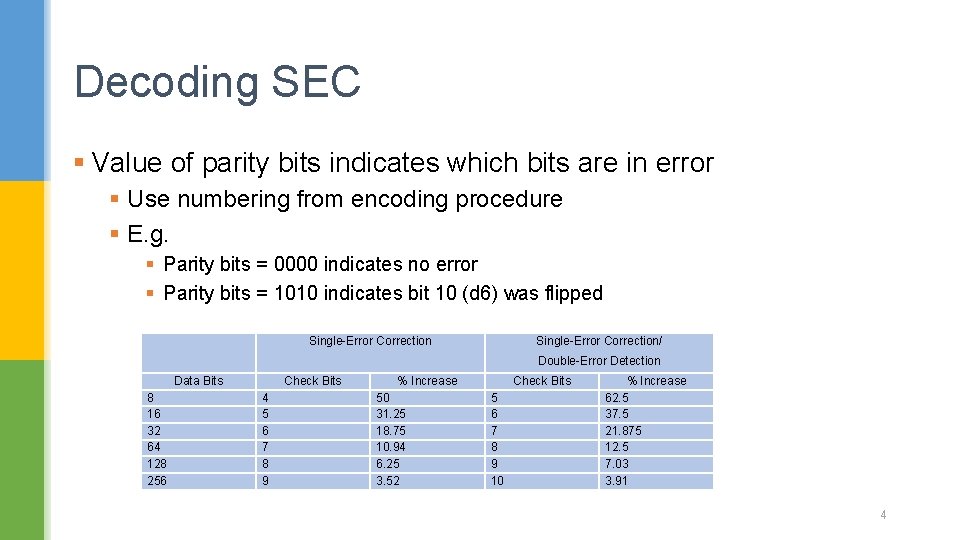
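The encoding procedure above can be sketched in a few lines. This is a minimal illustration, assuming the data arrives as a string of bits and that positions are numbered from 1 on the left; the function name `hamming_encode` is hypothetical.

```python
def hamming_encode(data: str) -> str:
    """Return the SEC Hamming code word for a string of data bits such as "10011010".

    Positions are numbered from 1 on the left; positions that are powers of 2
    hold parity bits, and each parity bit gives its group even parity.
    """
    d = len(data)
    p = 0
    while (1 << p) < p + d + 1:          # smallest p with 2^p >= p + d + 1
        p += 1
    n = p + d
    word = [0] * (n + 1)                 # index 0 unused so indices match bit positions
    bits = iter(int(b) for b in data)
    for pos in range(1, n + 1):
        if pos & (pos - 1):              # not a power of 2, so it is a data position
            word[pos] = next(bits)
    for k in range(p):
        parity_pos = 1 << k
        # Parity bit 2^k covers every position whose index has bit k set.
        ones = sum(word[pos] for pos in range(1, n + 1)
                   if pos & parity_pos and pos != parity_pos)
        word[parity_pos] = ones % 2      # make the group's total number of 1s even
    return "".join(str(b) for b in word[1:])

print(hamming_encode("10011010"))        # 011100101010
```

Parity positions never fall inside each other's groups (each power of 2 has a single bit set), so the parity bits can be computed in any order.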

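Decoding SEC
- Value of parity bits indicates which bits are in error
  - Use numbering from encoding procedure
  - E.g., reading the recomputed parity checks as the binary number $p_8 p_4 p_2 p_1$:
    - Parity bits = 0000 indicates no error
    - Parity bits = 1010 indicates bit 10 ($d_6$) was flipped

Check-bit overhead for single-error correction (SEC) and single-error correction/double-error detection (SEC/DED):

| Data bits | SEC check bits | SEC % increase | SEC/DED check bits | SEC/DED % increase |
|---|---|---|---|---|
| 8 | 4 | 50 | 5 | 62.5 |
| 16 | 5 | 31.25 | 6 | 37.5 |
| 32 | 6 | 18.75 | 7 | 21.875 |
| 64 | 7 | 10.94 | 8 | 12.5 |
| 128 | 8 | 6.25 | 9 | 7.03 |
| 256 | 9 | 3.52 | 10 | 3.91 |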

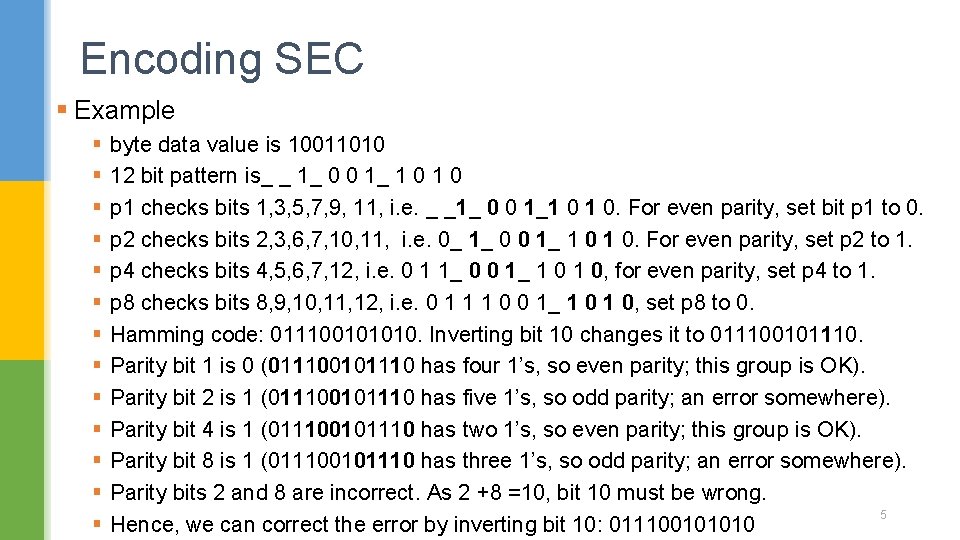

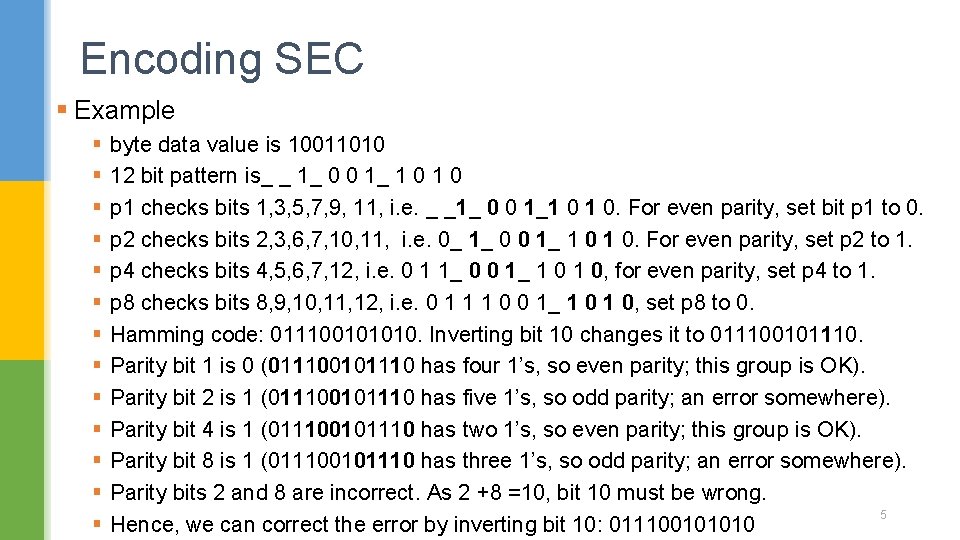
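The check-bit counts in the table follow from the inequality $2^p \ge p + d + 1$, with SEC/DED adding one more bit for whole-word parity. A small sketch that regenerates the table (the name `sec_check_bits` is hypothetical):

```python
def sec_check_bits(d: int) -> int:
    """Smallest p with 2**p >= p + d + 1 (single-error correction)."""
    p = 0
    while 2 ** p < p + d + 1:
        p += 1
    return p

for d in (8, 16, 32, 64, 128, 256):
    sec = sec_check_bits(d)
    ded = sec + 1                        # SEC/DED adds one parity bit over the whole word
    print(f"{d:4d} data bits: SEC {sec:2d} ({100 * sec / d:.2f}%), "
          f"SEC/DED {ded:2d} ({100 * ded / d:.2f}%)")
```

The percentage overhead falls as words get wider, which is why memory systems protect wide words (for example 64 bits) rather than individual bytes.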

Encoding SEC
- Example
  - The byte data value is 10011010
  - The 12-bit pattern, with blanks for the parity bits, is _ _ 1 _ 0 0 1 _ 1 0 1 0
  - p1 checks bits 1, 3, 5, 7, 9, 11, i.e., _ _ 1 _ 0 0 1 _ 1 0 1 0. For even parity, set p1 to 0.
  - p2 checks bits 2, 3, 6, 7, 10, 11, i.e., 0 _ 1 _ 0 0 1 _ 1 0 1 0. For even parity, set p2 to 1.
  - p4 checks bits 4, 5, 6, 7, 12, i.e., 0 1 1 _ 0 0 1 _ 1 0 1 0. For even parity, set p4 to 1.
  - p8 checks bits 8, 9, 10, 11, 12, i.e., 0 1 1 1 0 0 1 _ 1 0 1 0. For even parity, set p8 to 0.
  - Hamming code: 011100101010
- Inverting bit 10 changes it to 011100101110.
  - Parity bit 1 is 0 (bits 1, 3, 5, 7, 9, 11 of 011100101110 hold four 1s, so even parity; this group is OK).
  - Parity bit 2 is 1 (bits 2, 3, 6, 7, 10, 11 hold five 1s, so odd parity; there is an error somewhere).
  - Parity bit 4 is 1 (bits 4, 5, 6, 7, 12 hold two 1s, so even parity; this group is OK).
  - Parity bit 8 is 0 (bits 8, 9, 10, 11, 12 hold three 1s, so odd parity; there is an error somewhere).
  - Parity groups 2 and 8 are incorrect. As 2 + 8 = 10, bit 10 must be wrong.
  - Hence, we can correct the error by inverting bit 10: 011100101010

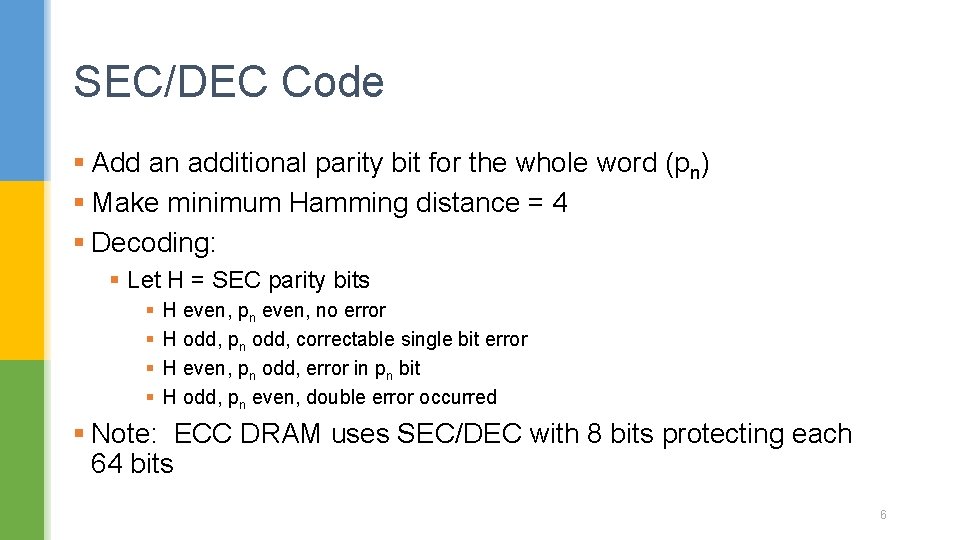

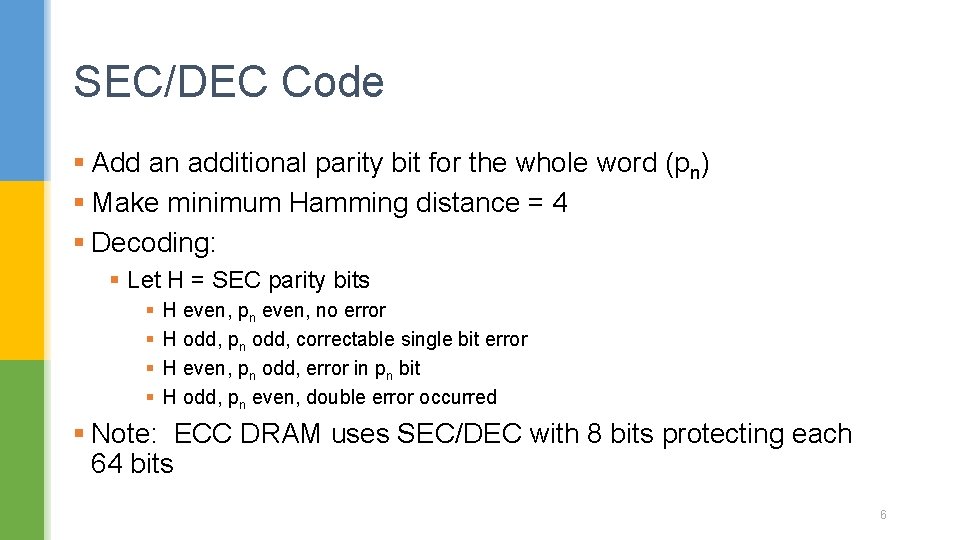
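The decoding steps in this example can be sketched as follows, assuming at most one bit is wrong and bit 1 is the leftmost character; `hamming_syndrome` and `hamming_correct` are hypothetical names. The syndrome is the sum of the parity positions whose groups have odd parity.

```python
def hamming_syndrome(word: str) -> int:
    """Recompute each parity group of an SEC code word (bit 1 = leftmost).

    Returns 0 if every group has even parity; otherwise the sum of the failing
    parity positions, which is the position of a single flipped bit.
    """
    n = len(word)
    syndrome = 0
    k = 1
    while k <= n:
        ones = sum(int(word[pos - 1]) for pos in range(1, n + 1) if pos & k)
        if ones % 2:                     # odd parity: this group contains the error
            syndrome += k
        k <<= 1
    return syndrome

def hamming_correct(word: str) -> str:
    """Invert the bit named by the syndrome (assumes at most one bit is wrong)."""
    pos = hamming_syndrome(word)
    if pos == 0:
        return word
    flipped = "1" if word[pos - 1] == "0" else "0"
    return word[:pos - 1] + flipped + word[pos:]

received = "011100101110"                # code word 011100101010 with bit 10 inverted
print(hamming_syndrome(received))        # 10
print(hamming_correct(received))         # 011100101010
```

With two flipped bits this SEC-only decoder would "correct" the wrong bit, which motivates the extra whole-word parity bit of SEC/DED.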

SEC/DED Code
- Add an additional parity bit for the whole word (pn)
- Make minimum Hamming distance = 4
- Decoding:
  - Let H = SEC parity bits ("H even" meaning all SEC checks pass, H = 0; "H odd" meaning H ≠ 0)
  - H even, pn even: no error
  - H odd, pn odd: correctable single-bit error
  - H even, pn odd: error in pn bit
  - H odd, pn even: double error occurred
- Note: ECC DRAM uses SEC/DED with 8 bits protecting each 64 bits

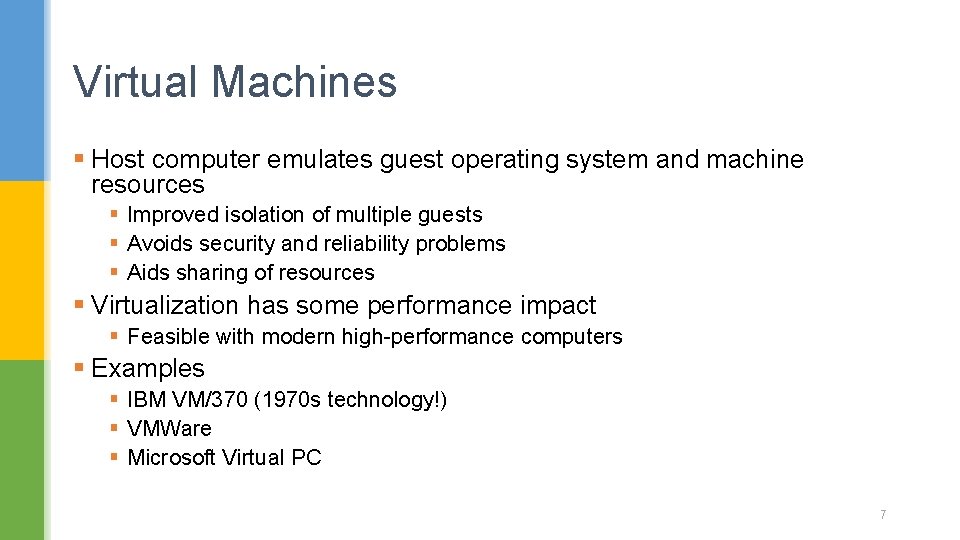
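The SEC/DED decoding table can be modeled as below. This is a sketch under stated assumptions: the overall parity bit pn is stored at index 0 of a Python list, SEC positions are 1..n, and "pn odd" is read as "the whole received word, including pn, has odd parity". The names `encode_secded` and `classify` are hypothetical.

```python
def encode_secded(data):
    """Return [pn, b1, ..., bn]: a Hamming SEC word (positions 1..n) plus an
    overall parity bit pn stored at index 0."""
    d = len(data)
    p = 0
    while 2 ** p < p + d + 1:            # smallest p with 2^p >= p + d + 1
        p += 1
    n = p + d
    word = [0] * (n + 1)
    bits = iter(data)
    for pos in range(1, n + 1):
        if pos & (pos - 1):              # not a power of 2: data position
            word[pos] = next(bits)
    for i in range(p):
        k = 1 << i
        word[k] = sum(word[pos] for pos in range(1, n + 1) if pos & k and pos != k) % 2
    word[0] = sum(word[1:]) % 2          # pn makes the whole word's parity even
    return word

def classify(word):
    """Apply the SEC/DED decoding table to a received word."""
    n = len(word) - 1
    h, k = 0, 1
    while k <= n:                        # recompute each SEC parity group
        if sum(word[pos] for pos in range(1, n + 1) if pos & k) % 2:
            h += k
        k <<= 1
    pn_odd = sum(word) % 2 == 1          # parity over the whole word, including pn
    if h == 0 and not pn_odd:
        return "no error"
    if h != 0 and pn_odd:
        return f"single-bit error at position {h}"
    if h == 0:
        return "error in pn bit"
    return "double error"

w = encode_secded([1, 0, 0, 1, 1, 0, 1, 0])
print(classify(w))                       # no error
w[10] ^= 1
print(classify(w))                       # single-bit error at position 10
w[3] ^= 1
print(classify(w))                       # double error
```

Two flips leave the whole-word parity even while the SEC syndrome is nonzero, which is exactly the case the table reports as an uncorrectable double error.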

Virtual Machines
- Host computer emulates guest operating system and machine resources
  - Improved isolation of multiple guests
  - Avoids security and reliability problems
  - Aids sharing of resources
- Virtualization has some performance impact
  - Feasible with modern high-performance computers
- Examples
  - IBM VM/370 (1970s technology!)
  - VMware
  - Microsoft Virtual PC

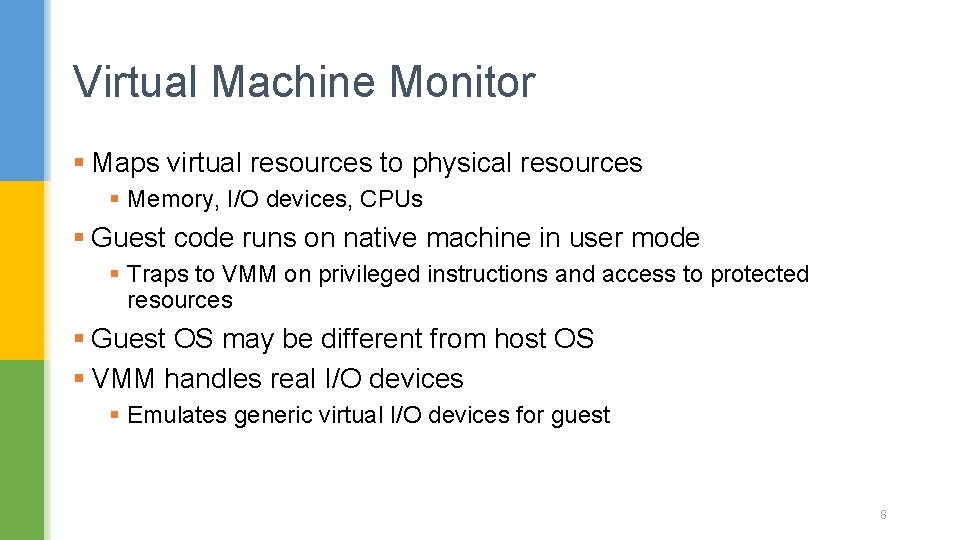

Virtual Machine Monitor
- Maps virtual resources to physical resources
  - Memory, I/O devices, CPUs
- Guest code runs on native machine in user mode
  - Traps to VMM on privileged instructions and access to protected resources
- Guest OS may be different from host OS
- VMM handles real I/O devices
  - Emulates generic virtual I/O devices for guest

Benefits of VMs
- Managing software
  - VMs provide an abstraction that can run the complete software stack, even including old operating systems like DOS.
  - A typical deployment might be some VMs running legacy OSes, many running the current stable OS release, and a few testing the next OS release.
- Managing hardware
  - One reason for multiple servers is to have each application running with a compatible version of the operating system on separate computers, as this separation can improve dependability.
  - VMs allow these separate software stacks to run independently yet share hardware, thereby consolidating servers and reducing their number.

Requirements of a Virtual Machine Monitor
- The qualitative requirements are:
  - Guest software should behave on a VM exactly as if it were running on the native hardware
  - Guest software should not be able to change the allocation of real system resources directly
- To "virtualize" the processor, the VMM must control access to privileged state, I/O, exceptions, and interrupts
  - The VMM runs at a higher privilege level than the guest VM, which generally runs in user mode
- The basic system requirements to support VMMs are:
  - At least two processor modes, system and user
  - A privileged subset of instructions that is available only in system mode, resulting in a trap if executed in user mode
  - All physical resources only accessible using privileged instructions
    - Including page tables, interrupt controls, I/O registers
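The "trap and emulate" behavior these requirements enable can be illustrated with a toy model. Everything here is an assumption for illustration: the operation names in `PRIVILEGED` are hypothetical, not real instructions, and the VMM simply records the guest's intended privileged state instead of touching the "real" machine state.

```python
PRIVILEGED = {"set_page_table", "disable_interrupts", "write_io"}  # hypothetical opcodes

class Trap(Exception):
    """Raised when user-mode code executes a privileged instruction."""

class CPU:
    def __init__(self):
        self.mode = "user"
        self.state = {}                  # stand-in for real machine state

    def execute(self, op, value):
        if op in PRIVILEGED and self.mode != "system":
            raise Trap(op)               # required hardware behavior: privileged op traps in user mode
        self.state[op] = value

class VMM:
    def __init__(self, cpu):
        self.cpu = cpu
        self.virtual_state = {}          # the guest's view of its privileged state
        self.traps = 0

    def run_guest(self, program):
        self.cpu.mode = "user"           # the guest OS runs in user mode
        for op, value in program:
            try:
                self.cpu.execute(op, value)       # ordinary instructions run directly
            except Trap:
                self.traps += 1
                self.virtual_state[op] = value    # emulate against virtual state

cpu = CPU()
vmm = VMM(cpu)
vmm.run_guest([("add", 1), ("set_page_table", 0x4000), ("write_io", 7)])
print(cpu.state)                         # {'add': 1}
print(vmm.virtual_state)                 # {'set_page_table': 16384, 'write_io': 7}
```

The scheme only works if every privileged operation really traps; an instruction that silently behaves differently in user mode escapes the VMM.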

Example: Timer Virtualization
- In a native machine, on a timer interrupt
  - The OS suspends the current process, handles the interrupt, and selects and resumes the next process
- With a Virtual Machine Monitor
  - The VMM suspends the current VM, handles the interrupt, and selects and resumes the next VM
- If a VM requires timer interrupts
  - The VMM emulates a virtual timer
  - It emulates an interrupt for the VM when the physical timer interrupt occurs
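A toy sketch of the timer case, assuming round-robin scheduling and assuming the VMM simply queues a pending virtual timer interrupt for every VM that asked for one; real VMMs track guest time far more carefully. `GuestVM`, `wants_timer`, and `on_physical_timer_interrupt` are hypothetical names.

```python
from collections import deque

class GuestVM:
    def __init__(self, name, wants_timer):
        self.name = name
        self.wants_timer = wants_timer
        self.pending_virtual_irqs = 0    # virtual timer interrupts not yet delivered

class VMM:
    def __init__(self, vms):
        self.ready = deque(vms)
        self.current = self.ready.popleft()

    def on_physical_timer_interrupt(self):
        # Emulate a virtual timer: each VM that wants timer interrupts gets one queued,
        # to be delivered the next time that VM runs.
        for vm in [self.current, *self.ready]:
            if vm.wants_timer:
                vm.pending_virtual_irqs += 1
        # Suspend the current VM, then select and resume the next one (round-robin).
        self.ready.append(self.current)
        self.current = self.ready.popleft()
        return self.current.name

a, b = GuestVM("A", wants_timer=True), GuestVM("B", wants_timer=False)
vmm = VMM([a, b])
print(vmm.on_physical_timer_interrupt())   # B
print(vmm.on_physical_timer_interrupt())   # A
print(a.pending_virtual_irqs, b.pending_virtual_irqs)   # 2 0
```

The physical interrupt always goes to the VMM; the guest only ever sees the emulated one.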

Instruction Set Architecture Support
- Virtualizable architecture: one that allows the VM to execute directly on the hardware
  - Examples: IBM 370, ARMv8; architectures without such support: x86, MIPS, ARMv7
- A conventional guest OS runs as a user-mode program on top of the VMM.
- If a guest OS attempts to access or modify information related to hardware resources via a privileged instruction, it will trap to the VMM.

Protection and Instruction Set Architecture
- Protection is a joint effort of architecture and operating systems
- The x86 instruction POPF loads the flag registers from the top of the stack in memory.
  - One of the flags is the Interrupt Enable (IE) flag.
  - If POPF is run in user mode, it changes all the flags except IE, without trapping.
  - In system mode, it does change IE.
  - Since a guest OS runs in user mode inside a VM, this is a problem: the guest expects to see a changed IE, but the change is silently ignored and the VMM never gets a chance to emulate it.
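A simplified model of the POPF problem, assuming the IE flag sits at bit 9 of the flags register (where x86 keeps its interrupt-enable flag, IF) and ignoring the other flags and I/O privilege rules that real POPF also checks. The function name `popf` is hypothetical.

```python
IE_MASK = 1 << 9   # interrupt-enable flag position in the x86 FLAGS register

def popf(current_flags: int, popped: int, mode: str) -> int:
    """Simplified POPF: return the new flags value.

    In system mode every flag is loaded from the stack. In user mode the IE bit
    keeps its old value and no trap occurs, so a VMM never sees the attempt.
    """
    if mode == "system":
        return popped
    return (popped & ~IE_MASK) | (current_flags & IE_MASK)

# A guest OS (running in user mode) tries to enable interrupts:
print(popf(0, IE_MASK, "user") == IE_MASK)    # False: IE silently unchanged
print(popf(0, IE_MASK, "system") == IE_MASK)  # True
```

Because the user-mode case neither changes IE nor traps, trap-and-emulate alone cannot virtualize this instruction.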

Virtual Memory
- Use main memory as a "cache" for secondary (disk) storage
  - Managed jointly by CPU hardware and the operating system (OS)
- Programs share main memory
  - Each gets a private virtual address space holding its frequently used code and data
  - Protected from other programs
- CPU and OS translate virtual addresses to physical addresses
  - VM "block" is called a page
  - VM translation "miss" is called a page fault
- Translation enforces protection

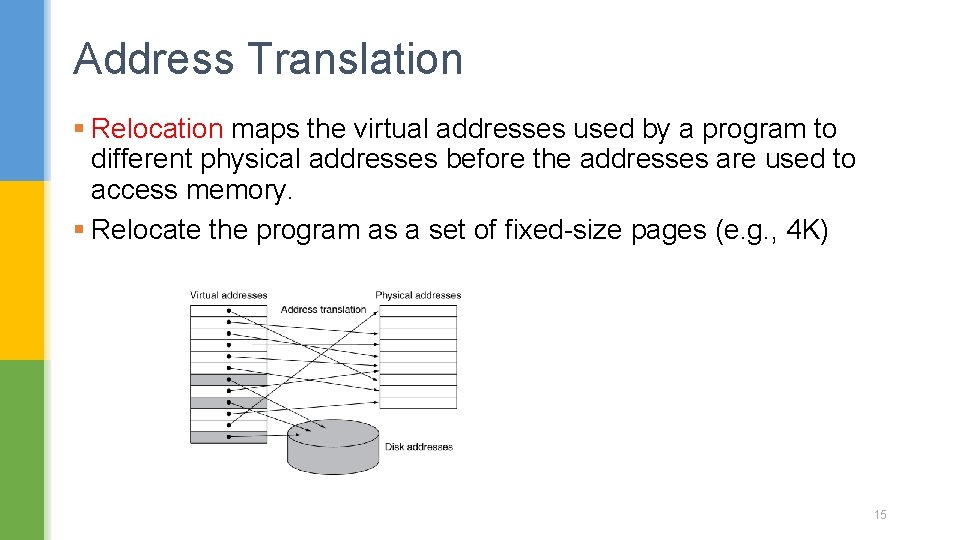

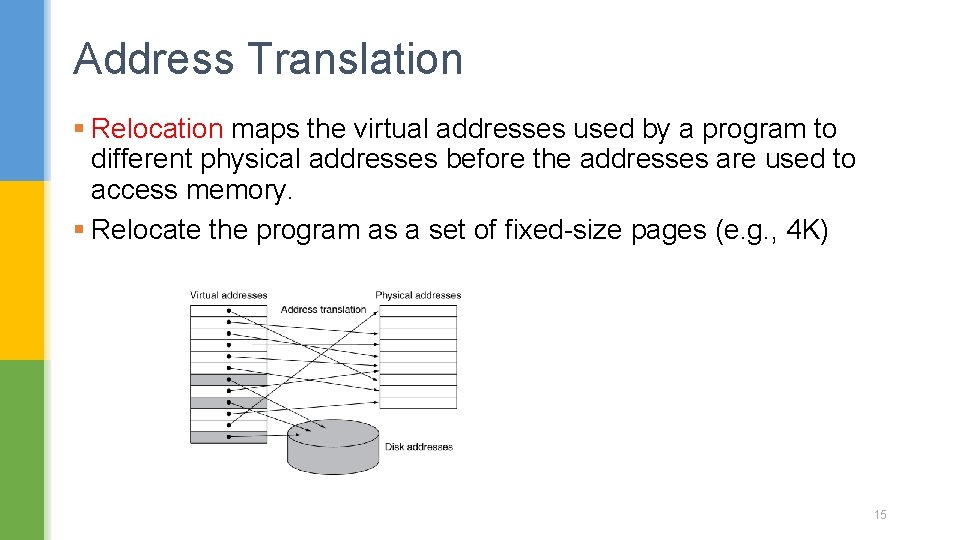

Address Translation
- Relocation maps the virtual addresses used by a program to different physical addresses before the addresses are used to access memory.
- Relocate the program as a set of fixed-size pages (e.g., 4 KiB)

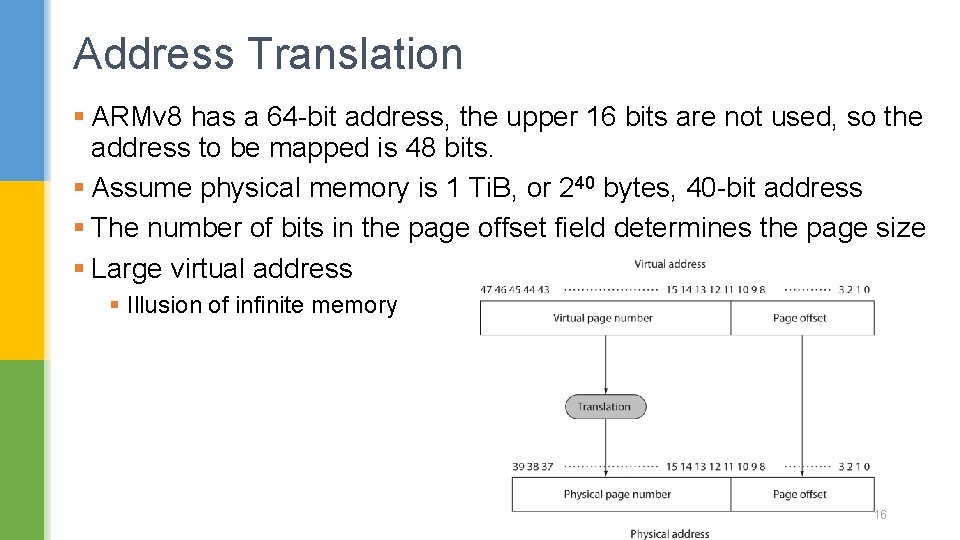

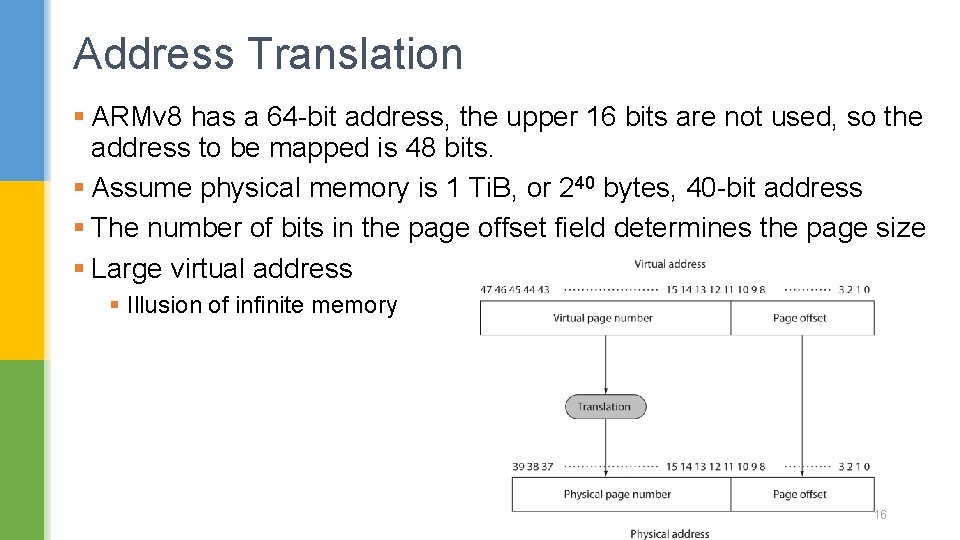

Address Translation
- ARMv8 has a 64-bit address, but the upper 16 bits are not used, so the address to be mapped is 48 bits.
- Assume physical memory is 1 TiB, or $2^{40}$ bytes, i.e., a 40-bit physical address
- The number of bits in the page offset field determines the page size
- Large virtual address
  - Illusion of infinite memory
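With the sizes above and an assumed 4 KiB page, the field widths follow directly: the offset needs $\log_2 4096 = 12$ bits, leaving 36 bits of virtual page number and 28 bits of physical page number. A small sketch (the helper `split_virtual_address` is hypothetical):

```python
VA_BITS = 48          # ARMv8: upper 16 of the 64 address bits unused
PA_BITS = 40          # 1 TiB = 2**40 bytes of physical memory
PAGE_SIZE = 4 * 1024  # assumed 4 KiB pages

OFFSET_BITS = PAGE_SIZE.bit_length() - 1   # log2(4096) = 12
VPN_BITS = VA_BITS - OFFSET_BITS           # 36
PPN_BITS = PA_BITS - OFFSET_BITS           # 28

def split_virtual_address(va: int) -> tuple[int, int]:
    """Return (virtual page number, page offset) for a 48-bit virtual address."""
    assert 0 <= va < 2 ** VA_BITS
    return va >> OFFSET_BITS, va & (PAGE_SIZE - 1)

print(OFFSET_BITS, VPN_BITS, PPN_BITS)     # 12 36 28
vpn, offset = split_virtual_address(0x12345678)
print(hex(vpn), hex(offset))               # 0x12345 0x678
```

Only the page number is translated; the offset is copied unchanged into the physical address.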

Page Fault Penalty
- On a page fault, the page must be fetched from disk
  - Takes millions of clock cycles
  - Handled by OS code
- Pages should be large enough to amortize the high access time; 4–64 KiB is typical
- Try to minimize page fault rate
  - Fully associative placement
  - Page faults handled in software, allowing smart replacement algorithms
- Write-through will not work for virtual memory, since writes take too long.
  - Instead, virtual memory systems use write-back.

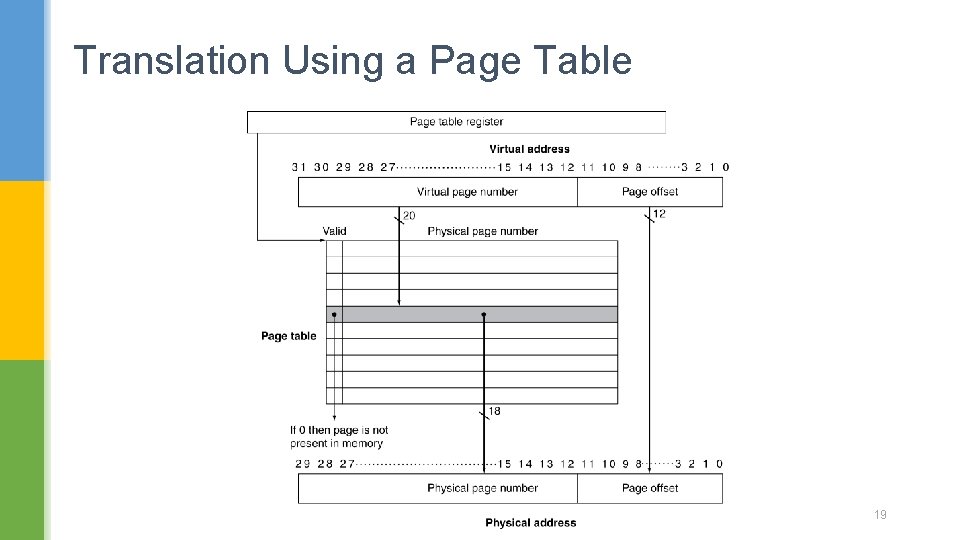

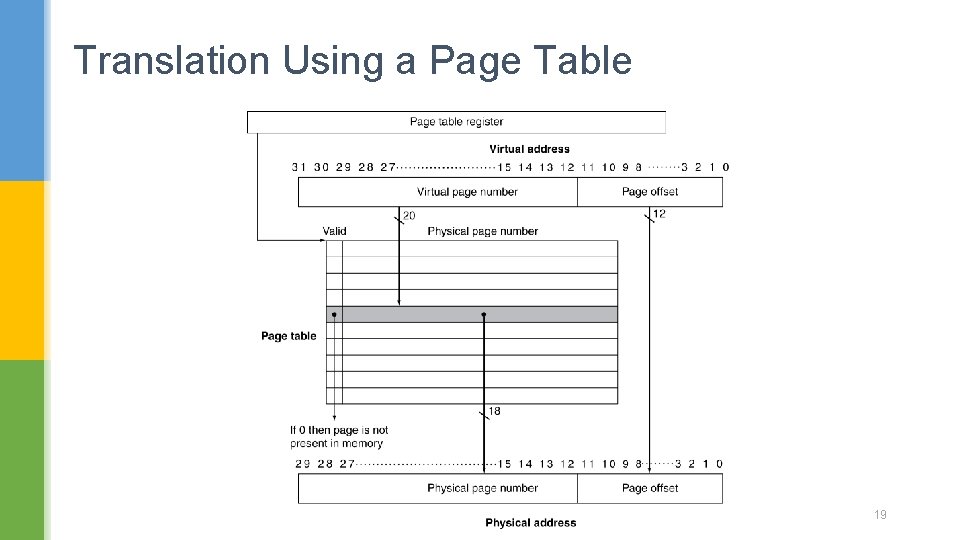

Page Tables
- Store placement information
  - Array of page table entries (PTEs), indexed by virtual page number
  - Page table register in CPU points to page table in physical memory
- If the page is present in memory
  - PTE stores the physical page number
  - Plus other status bits (referenced, dirty, …)
- If the page is not present
  - PTE can refer to a location in swap space on disk

Translation Using a Page Table

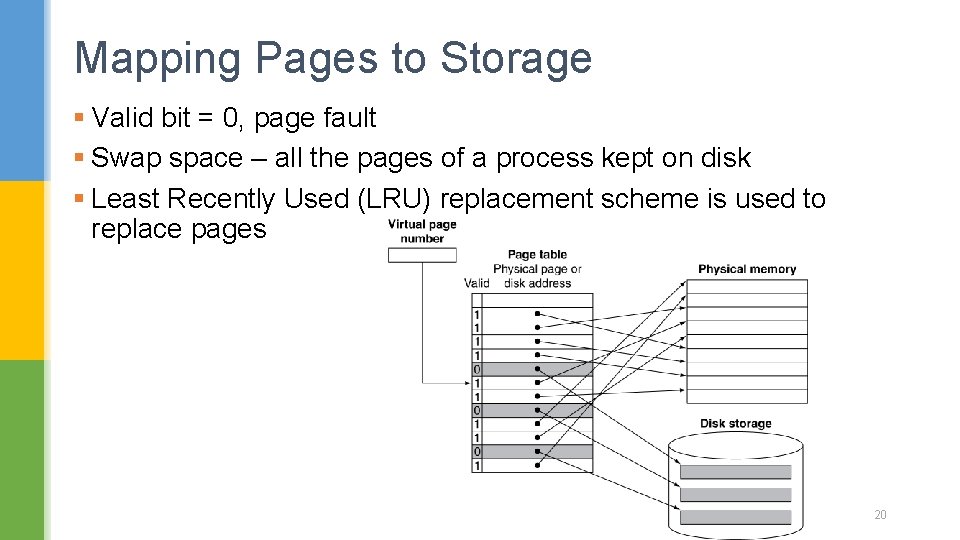

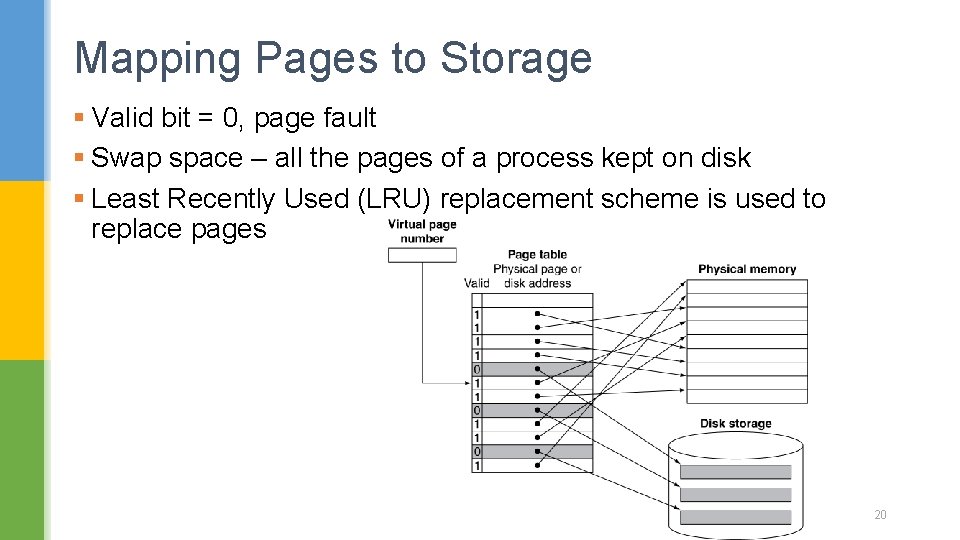
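A single-level page-table lookup can be sketched as below, assuming 4 KiB pages and a tiny 16-page address space so the table fits in a Python list. `PTE`, `translate`, and `PageFault` are hypothetical names; the valid, reference, and dirty bits mirror the status bits described above.

```python
from dataclasses import dataclass

PAGE_OFFSET_BITS = 12                    # assumes 4 KiB pages

class PageFault(Exception):
    """Raised when the PTE's valid bit is 0."""

@dataclass
class PTE:
    valid: bool = False
    ppn: int = 0                         # physical page number (meaningful only if valid)
    referenced: bool = False
    dirty: bool = False

def translate(page_table: list, va: int, is_write: bool = False) -> int:
    vpn = va >> PAGE_OFFSET_BITS
    offset = va & ((1 << PAGE_OFFSET_BITS) - 1)
    pte = page_table[vpn]                # array of PTEs indexed by virtual page number
    if not pte.valid:
        raise PageFault(vpn)             # OS must fetch the page from swap space
    pte.referenced = True                # reference (use) bit for LRU approximation
    if is_write:
        pte.dirty = True                 # page must be written back before replacement
    return (pte.ppn << PAGE_OFFSET_BITS) | offset

page_table = [PTE() for _ in range(16)]  # tiny 16-page virtual address space
page_table[2] = PTE(valid=True, ppn=7)
print(hex(translate(page_table, 0x2ABC, is_write=True)))   # 0x7abc
```

Accessing any page other than page 2 raises `PageFault`, the software analogue of a valid bit of 0.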

Mapping Pages to Storage
- Valid bit = 0: page fault
- Swap space: all the pages of a process kept on disk
- Least Recently Used (LRU) replacement scheme is used to replace pages

Replacement and Writes
- To reduce page fault rate, prefer least-recently used (LRU) replacement
  - Reference bit (aka use bit) in PTE set to 1 on access to page
  - Periodically cleared to 0 by OS
  - A page with reference bit = 0 has not been used recently
- Disk writes take millions of cycles
  - Write a block at once, not individual locations
  - Write-through is impractical
  - Use write-back
  - Dirty bit in PTE set when page is written
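One common way to use reference bits to approximate LRU is the clock (second-chance) algorithm; the slide does not name a specific algorithm, so treat this as an illustrative choice. The sketch assumes one reference bit per resident page, and `choose_victim` is a hypothetical name.

```python
def choose_victim(ref_bits: list, hand: int) -> tuple:
    """Clock (second-chance) approximation of LRU using per-page reference bits.

    Sweep from `hand`: a page whose bit is 1 was used recently, so clear its bit
    and move on; the first page found with bit 0 is evicted.
    Returns (victim page index, new hand position).
    """
    n = len(ref_bits)
    while True:
        if ref_bits[hand] == 0:
            return hand, (hand + 1) % n
        ref_bits[hand] = 0               # give the page a second chance
        hand = (hand + 1) % n

bits = [1, 0, 1, 1]
print(choose_victim(bits, 0))            # (1, 2)
print(bits)                              # [0, 0, 1, 1]
```

Clearing bits during the sweep plays the role of the OS periodically resetting reference bits; if the chosen victim's dirty bit is set, it must be written back before the frame is reused.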

Virtual Memory for Large Virtual Addresses
- With a single-level page table for a 48-bit address with 4 KiB pages, we need $2^{48} / 2^{12} = 2^{36}$ table entries (64 Gi, about 68.7 billion).
- As each page table entry is 8 bytes for ARMv8, this would require $2^{36} \times 8 = 2^{39}$ bytes, or 0.5 TiB, just to map the virtual addresses to physical addresses.
- Techniques to reduce the amount of storage required for the page table:
  - Keep a limit register that restricts the size of the page table for a given process
    - The page table grows only as the process consumes more space
  - Divide the page table and let it grow from the highest address down as well as from the lowest address up, so it grows in two directions

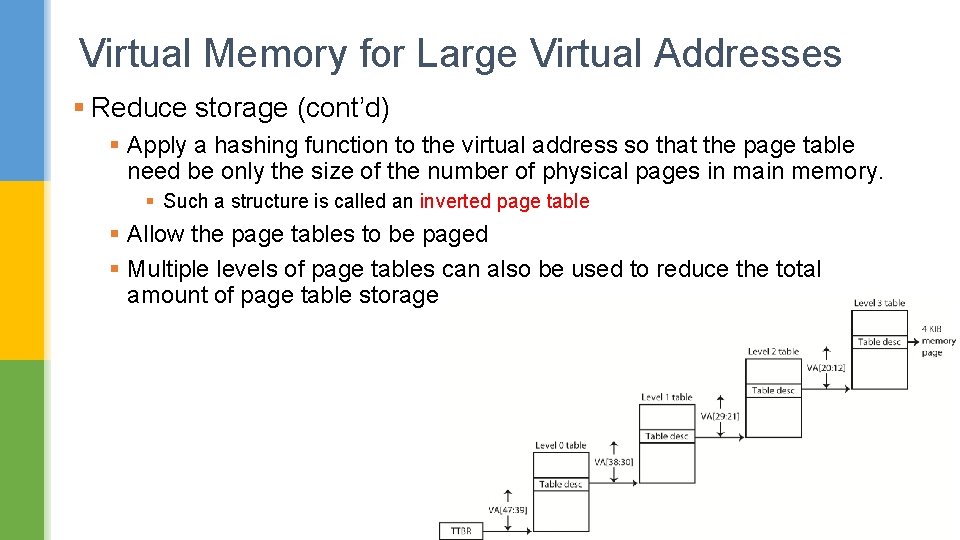

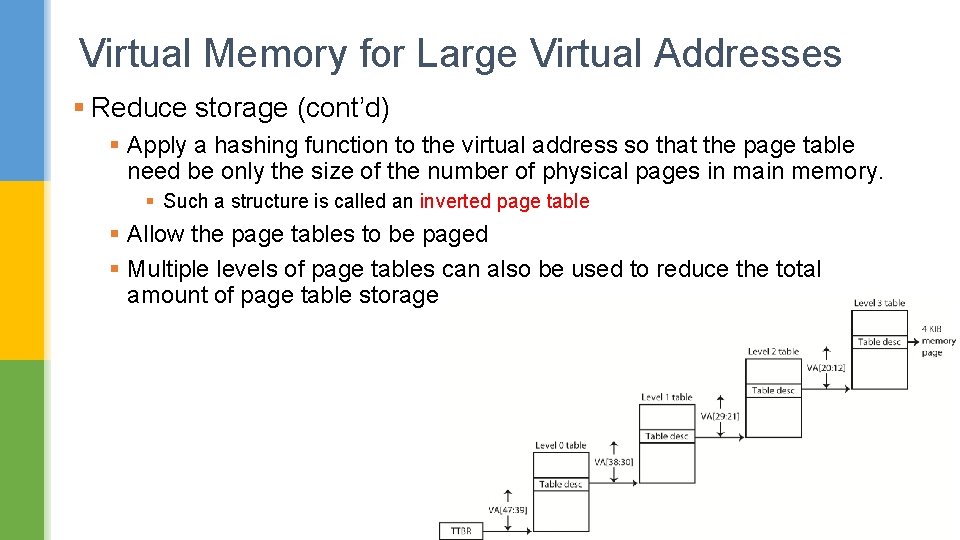

Virtual Memory for Large Virtual Addresses
- Reduce storage (cont'd)
  - Apply a hashing function to the virtual address so that the page table need only be the size of the number of physical pages in main memory
    - Such a structure is called an inverted page table
  - Allow the page tables to be paged
  - Multiple levels of page tables can also be used to reduce the total amount of page table storage

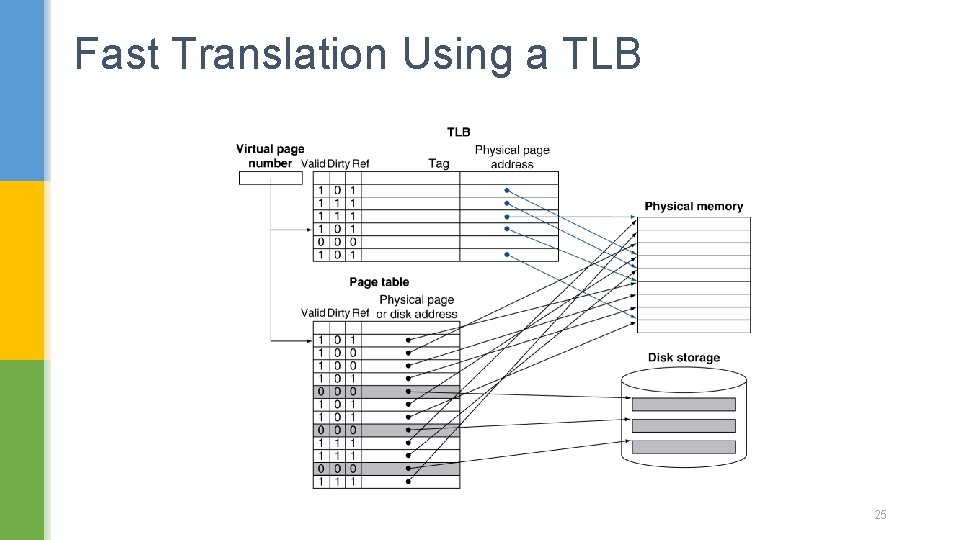

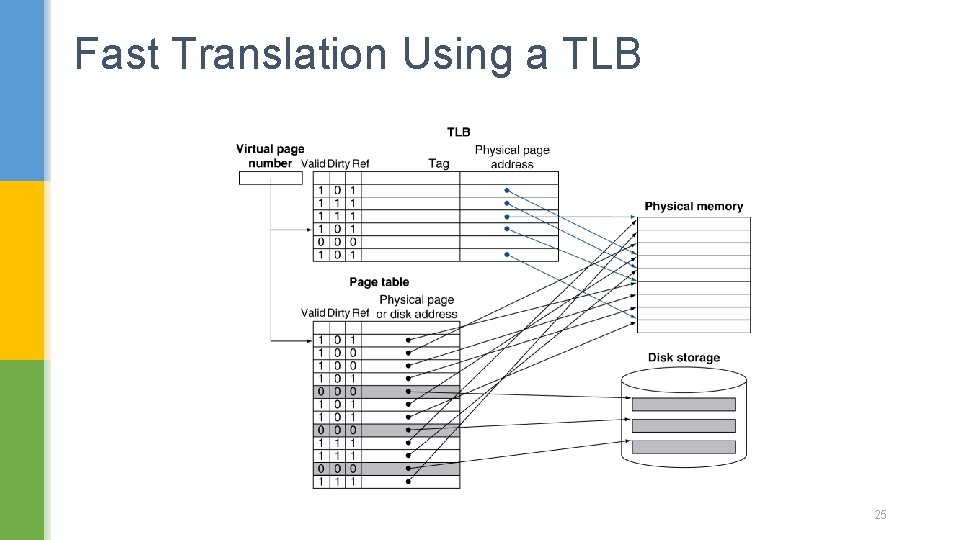
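Multiple levels save space because only the tables actually in use are allocated. The sketch assumes the ARMv8 arrangement for 4 KiB pages: four levels of 9-bit indices (512 eight-byte entries fill one 4 KiB table) plus a 12-bit offset, $4 \times 9 + 12 = 48$. Tables are modeled as Python dicts rather than the real in-memory format, and `walk`, `map_page`, and `PageFault` are hypothetical names.

```python
OFFSET_BITS = 12           # 4 KiB pages
INDEX_BITS = 9             # 512 eight-byte entries fill one 4 KiB table
LEVELS = 4                 # 4 * 9 + 12 = 48-bit virtual address

class PageFault(Exception):
    pass

def level_index(va: int, level: int) -> int:
    """Index into the table at `level` (0 = top level) for virtual address va."""
    shift = OFFSET_BITS + INDEX_BITS * (LEVELS - 1 - level)
    return (va >> shift) & ((1 << INDEX_BITS) - 1)

def map_page(root: dict, va: int, ppn: int) -> None:
    """Create intermediate tables on demand, then store the PPN at the last level."""
    node = root
    for level in range(LEVELS - 1):
        node = node.setdefault(level_index(va, level), {})
    node[level_index(va, LEVELS - 1)] = ppn

def walk(root: dict, va: int) -> int:
    """Translate va by walking one table per level; a missing entry means not mapped."""
    node = root
    for level in range(LEVELS):
        index = level_index(va, level)
        if index not in node:
            raise PageFault(hex(va))
        node = node[index]
    return (node << OFFSET_BITS) | (va & ((1 << OFFSET_BITS) - 1))

root = {}                                  # top-level table (what the page table register points to)
map_page(root, 0x7F12_3456_7000, ppn=0x42)
print(hex(walk(root, 0x7F12_3456_7ABC)))   # 0x42abc
```

One mapped page costs four small tables here instead of a 0.5 TiB flat array; the price is one memory reference per level on every walk.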

Fast Address Translation Using a TLB
- Address translation would appear to require extra memory references
  - One to access the PTE
  - Then the actual memory access
- But access to page tables has good locality
  - So use a fast cache of PTEs within the CPU
  - Called a Translation Look-aside Buffer (TLB)
  - Typical: 16–512 PTEs, 0.5–1 cycle for hit, 10–100 cycles for miss, 0.01%–1% miss rate
  - Misses could be handled by hardware or software
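A fully associative TLB can be modeled as a small cache from virtual page number to physical page number. The sketch assumes LRU replacement, a made-up page table, and 4 KiB pages; the class name `TLB` and its fields are hypothetical. The access pattern shows why locality makes the miss rate low.

```python
from collections import OrderedDict

class TLB:
    """Fully associative TLB caching VPN -> PPN, with LRU replacement (an assumption)."""

    def __init__(self, entries: int = 16):
        self.entries = entries
        self.map = OrderedDict()
        self.hits = self.misses = 0

    def lookup(self, vpn: int, page_table: dict) -> int:
        if vpn in self.map:
            self.hits += 1
            self.map.move_to_end(vpn)            # mark as most recently used
            return self.map[vpn]
        self.misses += 1
        ppn = page_table[vpn]                    # miss: read the PTE from memory
        if len(self.map) >= self.entries:
            self.map.popitem(last=False)         # evict the least recently used entry
        self.map[vpn] = ppn
        return ppn

page_table = {vpn: vpn + 100 for vpn in range(64)}   # made-up VPN -> PPN mapping
tlb = TLB(entries=16)
for addr in range(0, 64 * 4096, 64):                 # sequential 64-byte steps over 64 pages
    tlb.lookup(addr >> 12, page_table)                # 4 KiB pages: VPN = addr >> 12
print(tlb.hits, tlb.misses)                           # 4032 64
```

Each page is touched 64 times in a row, so only the first access to each page misses: a miss rate of 1/64 for this pattern.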

Fast Translation Using a TLB

TLB Misses
- If the page is in memory
  - Load the PTE from memory and retry
  - Could be handled in hardware
    - Can get complex for more complicated page table structures
  - Or in software
    - Raise a special exception, with an optimized handler
- If the page is not in memory (page fault)
  - OS handles fetching the page and updating the page table
  - Then restart the faulting instruction

TLB Miss Handler
- A TLB miss indicates either
  - Page present, but PTE not in TLB
  - Page not present
- Must recognize the TLB miss before the destination register is overwritten
  - Raise exception
- Handler copies PTE from memory to TLB
  - Then restarts the instruction
  - If the page is not present, a page fault will occur
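The software-handled miss path can be sketched as an exception-and-restart loop. This is a toy model under stated assumptions: the TLB and page table are plain dicts, page-table values are hypothetical `(valid, ppn)` pairs, and replacement of TLB entries is omitted. `TLBMiss`, `tlb_miss_handler`, and `access` are hypothetical names.

```python
class TLBMiss(Exception):
    pass

class PageFault(Exception):
    pass

def tlb_lookup(tlb: dict, vpn: int) -> int:
    if vpn not in tlb:
        raise TLBMiss(vpn)                 # hardware only signals the miss
    return tlb[vpn]

def tlb_miss_handler(tlb: dict, page_table: dict, vpn: int) -> None:
    """Software handler: copy the PTE from the page table into the TLB."""
    valid, ppn = page_table.get(vpn, (False, 0))
    if not valid:
        raise PageFault(vpn)               # page not present: the OS page-fault handler takes over
    tlb[vpn] = ppn                         # (a real handler would also choose an entry to replace)

def access(tlb: dict, page_table: dict, vpn: int) -> int:
    """Perform a lookup, restarting it after the miss handler refills the TLB."""
    while True:
        try:
            return tlb_lookup(tlb, vpn)
        except TLBMiss:
            tlb_miss_handler(tlb, page_table, vpn)   # then restart the access

page_table = {3: (True, 9), 4: (False, 0)}
tlb = {}
print(access(tlb, page_table, 3), tlb)     # 9 {3: 9}
```

Calling `access(tlb, page_table, 4)` raises `PageFault`, matching the case where the page itself is not in memory.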

Page Fault Handler
- Use faulting virtual address to find PTE
- Locate page on disk
- Choose page to replace
  - If dirty, write to disk first
- Read page into memory and update page table
- Make process runnable again
  - Restart from faulting instruction
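The handler steps above can be sketched as follows. This is a toy model with hypothetical names and data layouts: `frames[ppn]` records which virtual page occupies each physical page, `memory` and `disk` are dicts of page contents, and `choose_victim` is any replacement policy passed in by the caller.

```python
from dataclasses import dataclass

@dataclass
class PTE:
    disk_block: int                        # where the page lives in swap space
    valid: bool = False
    dirty: bool = False
    ppn: int = -1

def handle_page_fault(vpn, page_table, frames, memory, disk, choose_victim):
    """frames[ppn] holds the VPN resident in that physical page (None if free);
    memory and disk map physical page numbers / disk blocks to page contents."""
    pte = page_table[vpn]                          # use the faulting address to find the PTE
    block = pte.disk_block                         # locate the page on disk
    if None in frames:
        ppn = frames.index(None)                   # a free physical page exists
    else:
        ppn = choose_victim(frames)                # choose a page to replace
        old = page_table[frames[ppn]]
        if old.dirty:
            disk[old.disk_block] = memory[ppn]     # write a dirty victim to disk first
        old.valid, old.dirty, old.ppn = False, False, -1
    memory[ppn] = disk[block]                      # read the page into memory
    frames[ppn] = vpn
    pte.valid, pte.dirty, pte.ppn = True, False, ppn   # update the page table
    return ppn   # the OS then makes the process runnable and restarts the instruction

page_table = {0: PTE(disk_block=10), 1: PTE(disk_block=11)}
disk = {10: "page A", 11: "page B"}
memory, frames = {}, [None]                        # one physical page, to force a replacement
handle_page_fault(0, page_table, frames, memory, disk, choose_victim=lambda f: 0)
memory[0], page_table[0].dirty = "page A (modified)", True
handle_page_fault(1, page_table, frames, memory, disk, choose_victim=lambda f: 0)
print(disk[10], "|", memory[0])                    # page A (modified) | page B
```

The dirty check is what makes write-back work: a page that was never modified can be overwritten without any disk write.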

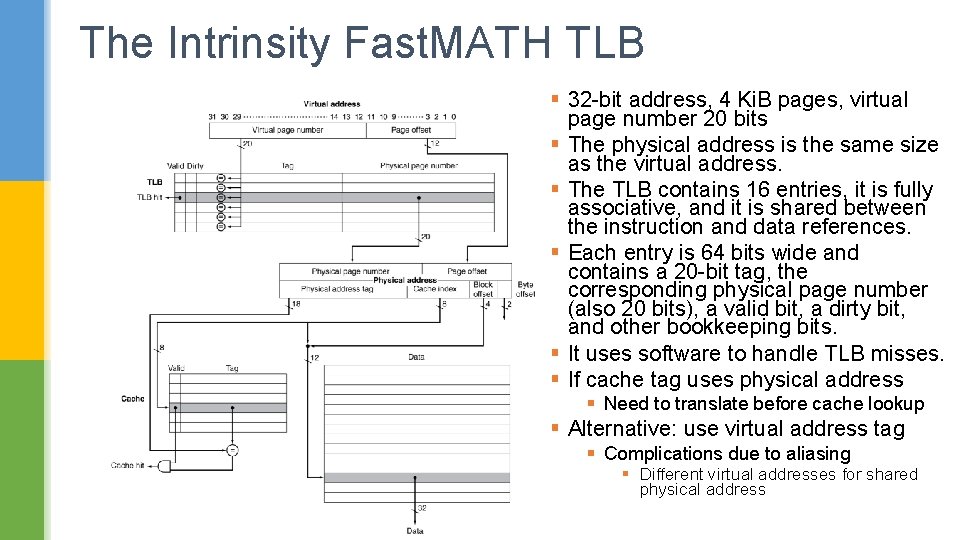

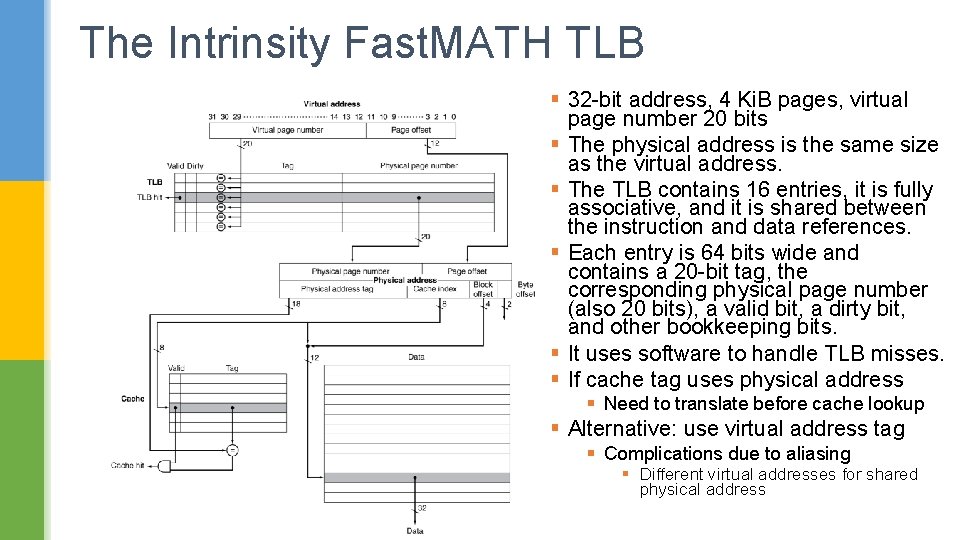

The Intrinsity FastMATH TLB
- 32-bit address and 4 KiB pages, so the virtual page number is 20 bits
- The physical address is the same size as the virtual address.
- The TLB contains 16 entries, is fully associative, and is shared between instruction and data references.
- Each entry is 64 bits wide and contains a 20-bit tag, the corresponding physical page number (also 20 bits), a valid bit, a dirty bit, and other bookkeeping bits.
- It uses software to handle TLB misses.
- If the cache tag uses the physical address
  - Need to translate before cache lookup
- Alternative: use a virtual address tag
  - Complications due to aliasing
    - Different virtual addresses for a shared physical address

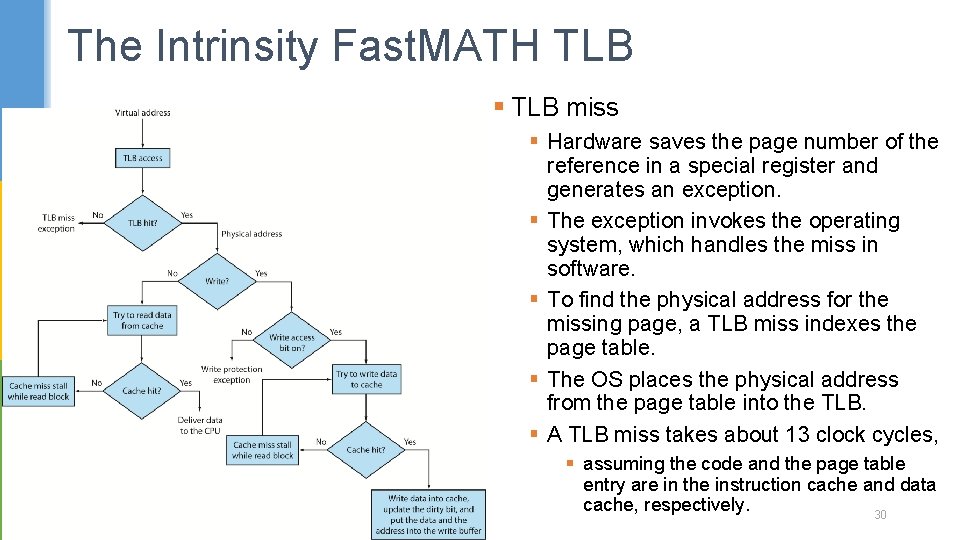

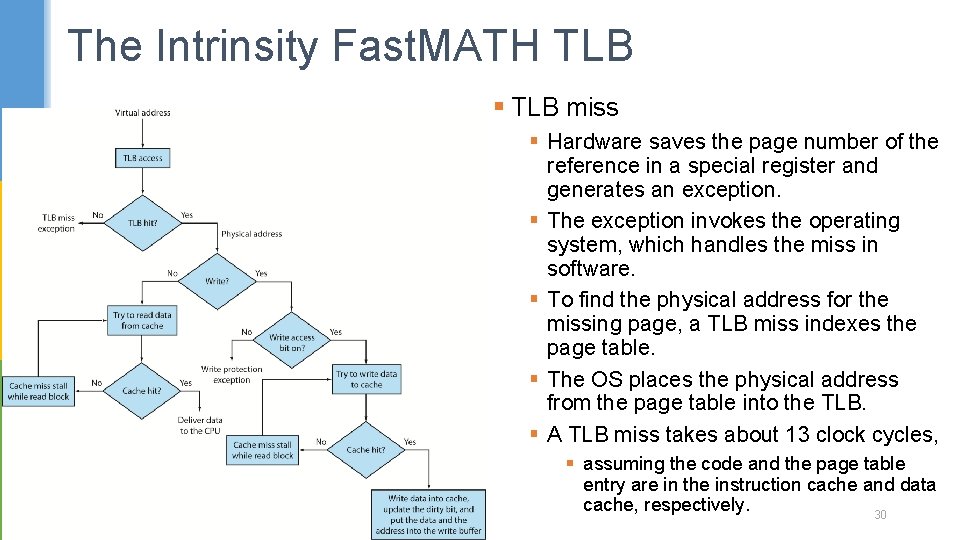

The Intrinsity FastMATH TLB
- TLB miss
  - Hardware saves the page number of the reference in a special register and generates an exception.
  - The exception invokes the operating system, which handles the miss in software.
  - To find the physical address for the missing page, the TLB miss routine indexes the page table using the page number of the virtual address.
  - The OS places the physical address from the page table into the TLB.
  - A TLB miss takes about 13 clock cycles,
    - assuming the code and the page table entry are in the instruction cache and data cache, respectively.

Virtual Memory Protection
- Different tasks can share parts of their virtual address spaces
  - But need to protect against errant access
  - Requires OS assistance
- Hardware support for OS protection
  - Privileged supervisor mode (aka kernel mode)
  - Privileged instructions, only available in supervisor mode, to write the mode bit, page table pointer, and TLB
  - Page table pointer, mode bit, and TLB state information only accessible in supervisor mode
  - System call exception (e.g., syscall in MIPS) transfers control to a dedicated location in supervisor code space
    - Switching to supervisor mode: PC saved in exception link register (ELR)
    - Return to user mode: use the exception return (ERET) instruction

The Memory Hierarchy: The BIG Picture
- Common principles apply at all levels of the memory hierarchy
  - Based on notions of caching
- At each level in the hierarchy, the same operational alternatives arise, and the choices made determine that level's behavior:
  - Block placement
  - Finding a block
  - Replacement on a miss
  - Write policy