
Chapter 5 k-Means Clustering

Section 5.1 Introduction

Objectives

- State differences between hierarchical and non-hierarchical clustering methods.
- List advantages and disadvantages of both types of methods.
- State the data conditions under which each method tends to work well.

Hierarchical versus Non-Hierarchical Clustering Methods: Basic Differences

| Hierarchical | Non-Hierarchical |
|---|---|
| Involves a tree-like construction process where clusters at any level of the tree are a combination of clusters below that level. | Assigns objects into a pre-specified number of clusters using a distance/similarity metric. No tree-like structure exists. |
| Once an observation has joined another observation in a step, successive steps keep them together. | Assignment of an object to a cluster is not fixed through the iteration process. |
| | Iterates to minimize or maximize a criterion such as separation or within-cluster similarity. |
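The hierarchical column describes a bottom-up tree in which merges are permanent. The following is a minimal Python sketch of agglomerative clustering that records each merge. Single linkage (closest-pair distance) is an assumed choice here; it is one of several linkage rules. The toy points are hypothetical.

```python
import math
from itertools import combinations


def single_linkage(points):
    """Agglomerative (bottom-up) clustering using single linkage.

    points: dict mapping a case label to a coordinate tuple.
    Returns the merge history as (cluster_a, cluster_b, distance) tuples,
    i.e., the tree built from the bottom up.
    """
    def linkage(a, b):
        # Single linkage: distance between the closest pair of members.
        return min(math.dist(points[p], points[q]) for p in a for q in b)

    # Every case starts as its own cluster.
    clusters = [frozenset([label]) for label in points]
    merges = []
    while len(clusters) > 1:
        # Merge the two closest clusters.
        a, b = min(combinations(clusters, 2), key=lambda pair: linkage(*pair))
        merges.append((a, b, linkage(a, b)))
        # The merged cluster replaces its parts and is never split later.
        clusters = [c for c in clusters if c not in (a, b)] + [a | b]
    return merges


# Hypothetical toy data (not the example used later in this chapter).
history = single_linkage({"P": (0, 0), "Q": (0, 1), "R": (5, 5)})
for a, b, d in history:
    print(sorted(a), "+", sorted(b), "at distance", round(d, 2))
```

Once P and Q merge, no later step can separate them. A non-hierarchical method such as k-means, by contrast, may move a case between clusters on every iteration.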

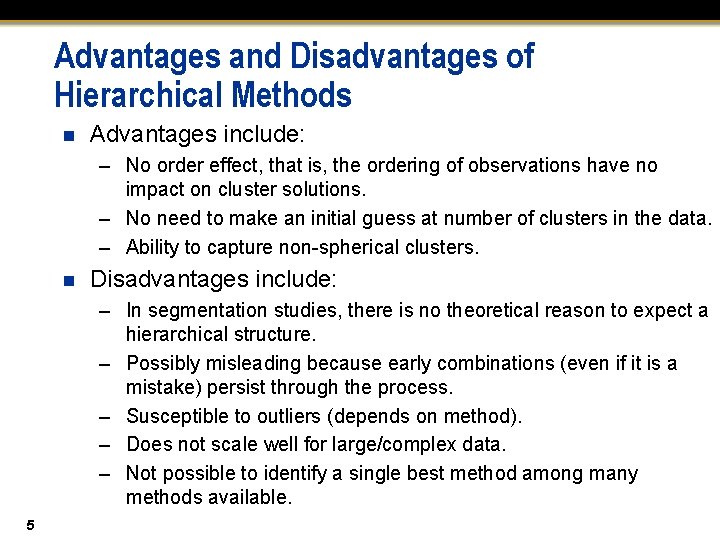

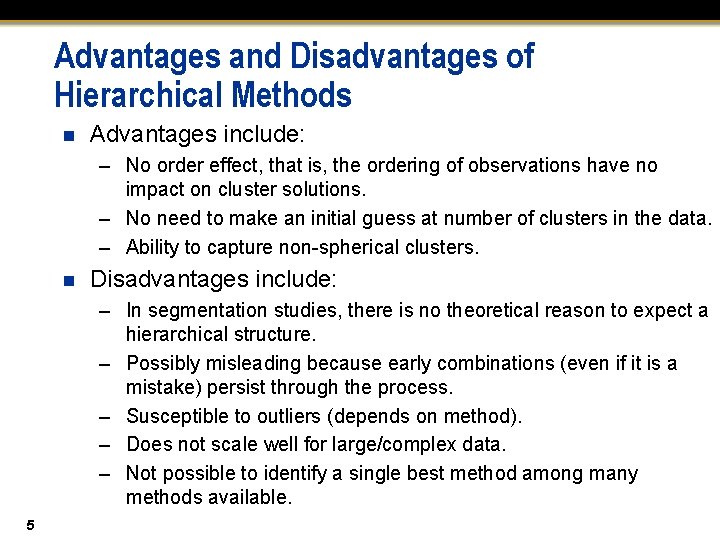

Advantages and Disadvantages of Hierarchical Methods

- Advantages include:
  - No order effect; that is, the ordering of observations has no impact on cluster solutions.
  - No need to make an initial guess at the number of clusters in the data.
  - Ability to capture non-spherical clusters.
- Disadvantages include:
  - In segmentation studies, there is no theoretical reason to expect a hierarchical structure.
  - Possibly misleading, because early combinations (even mistaken ones) persist through the process.
  - Susceptible to outliers (depending on the method).
  - Does not scale well to large or complex data.
  - No single best method can be identified among the many methods available.

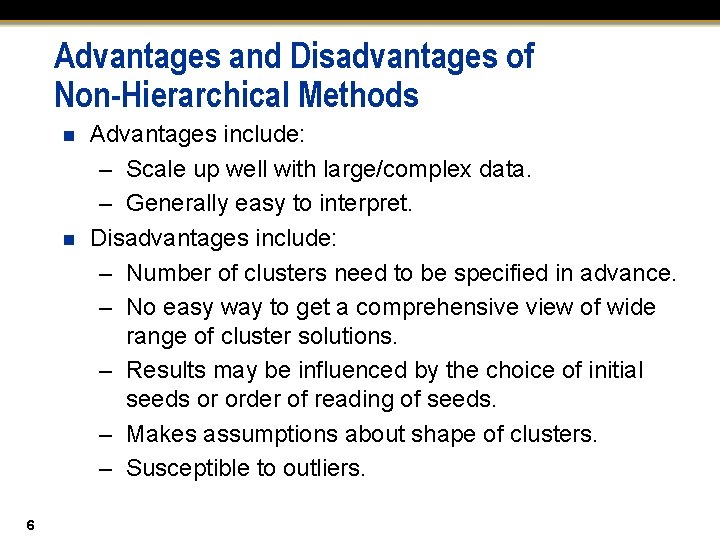

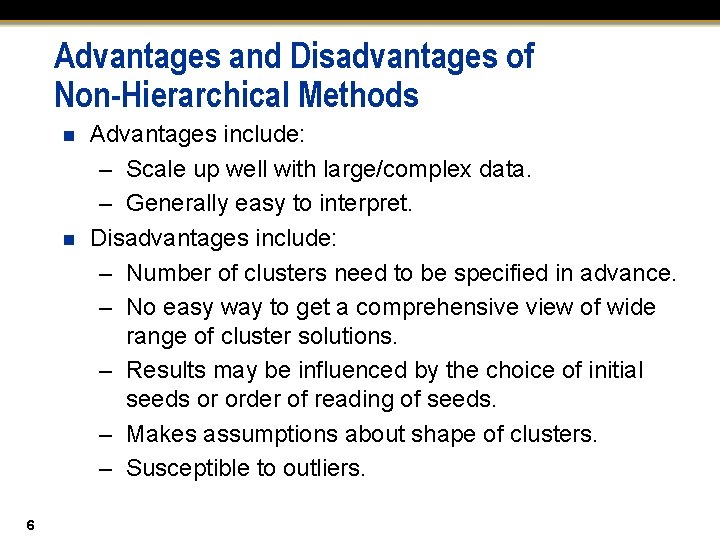

Advantages and Disadvantages of Non-Hierarchical Methods

- Advantages include:
  - Scale up well to large or complex data.
  - Generally easy to interpret.
- Disadvantages include:
  - The number of clusters needs to be specified in advance.
  - No easy way to get a comprehensive view of a wide range of cluster solutions.
  - Results may be influenced by the choice of initial seeds or the order in which seeds are read.
  - Makes assumptions about the shape of clusters.
  - Susceptible to outliers.

Section 5.2 Mechanics of k-Means Clustering

Objectives

- State the procedure for conducting k-means analysis.
- Assign observations to clusters and update cluster centers using the k-means procedure.

k-Means Procedure

1. Select k cluster centers.
2. Assign cases to the closest center.
3. Update cluster centers.
4. Re-assign cases.
5. Repeat steps 3 and 4 until convergence.
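The five steps above map onto a short loop. Below is a minimal pure-Python sketch of the basic (Lloyd-style) k-means iteration. It assumes Euclidean distance and that the caller supplies the k initial centers (step 1). The toy data in the usage lines are hypothetical.

```python
import math


def kmeans(points, centers, max_iter=100):
    """Basic k-means following steps 1-5 of the procedure.

    points:  list of coordinate tuples.
    centers: list of k initial center tuples (step 1 is done by the caller).
    Returns (labels, centers) once no case changes cluster.
    """
    centers = [tuple(c) for c in centers]
    labels = None
    for _ in range(max_iter):
        # Steps 2 and 4: assign each case to its closest center (Euclidean distance).
        new_labels = [
            min(range(len(centers)), key=lambda j: math.dist(p, centers[j]))
            for p in points
        ]
        # Step 5: converged when no assignment changes.
        if new_labels == labels:
            break
        labels = new_labels
        # Step 3: move each center to the mean of the cases assigned to it.
        for j in range(len(centers)):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:  # an empty cluster keeps its previous center
                centers[j] = tuple(sum(coord) / len(members) for coord in zip(*members))
    return labels, centers


# Hypothetical toy data: two well-separated groups.
labels, centers = kmeans([(1, 1), (1, 2), (8, 8), (9, 8)], [(0, 0), (10, 10)])
print(labels, centers)
```

The loop stops as soon as a reassignment leaves every case where it was. At that point the centers would not move either.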

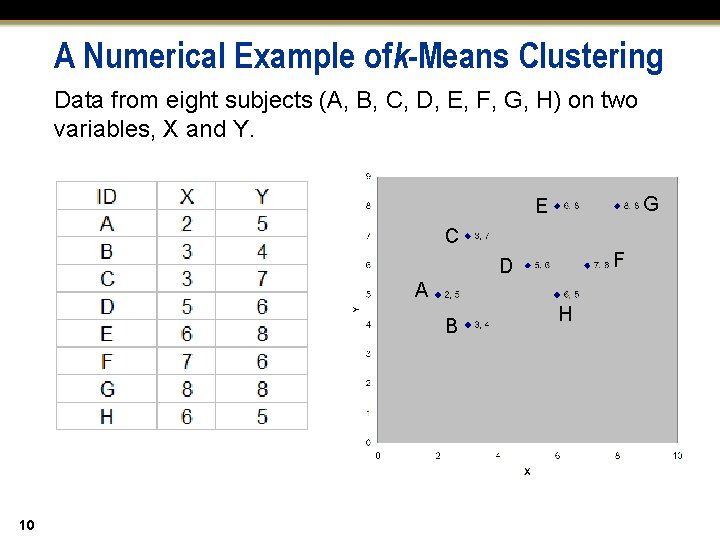

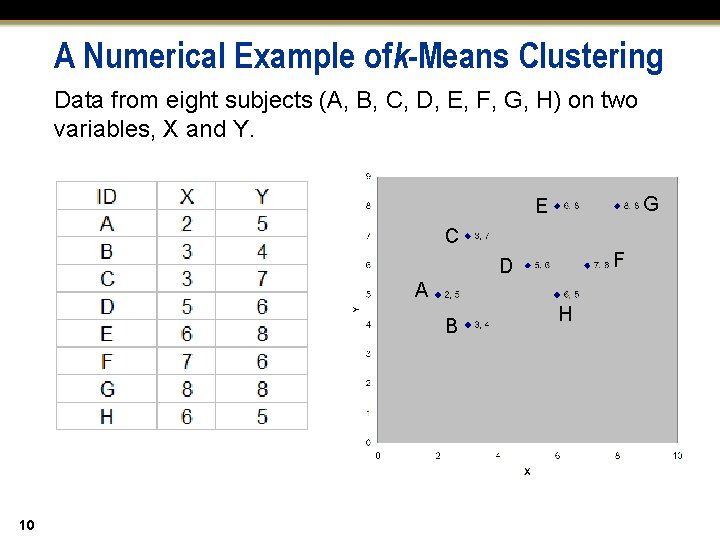

A Numerical Example of k-Means Clustering

Data from eight subjects (A, B, C, D, E, F, G, H) on two variables, X and Y.

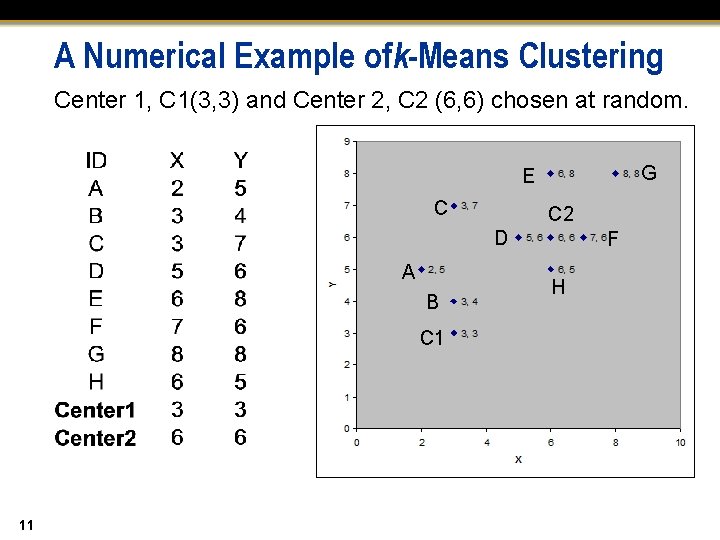

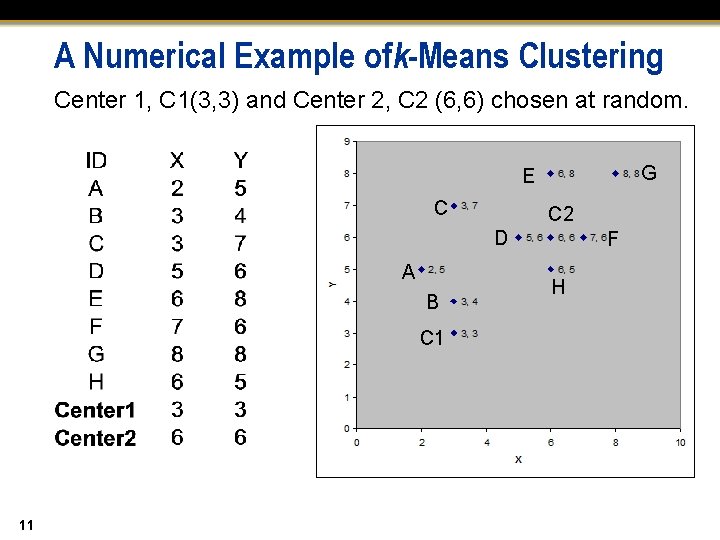

A Numerical Example of k-Means Clustering

Center 1, C1 (3, 3), and Center 2, C2 (6, 6), are chosen at random.

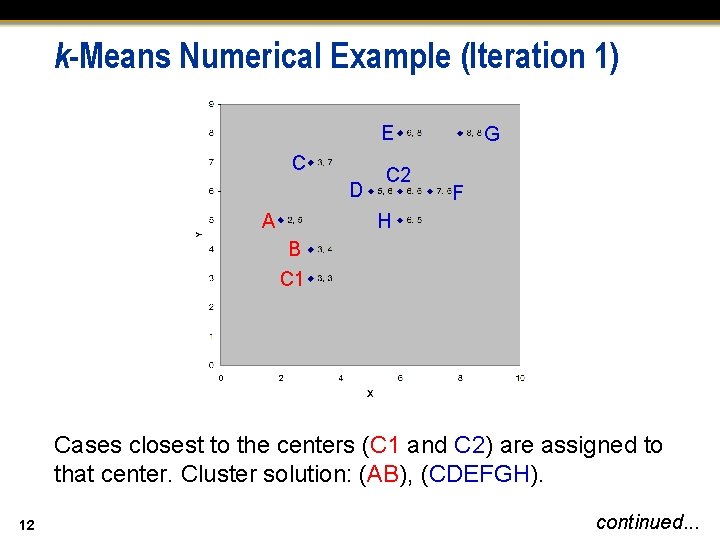

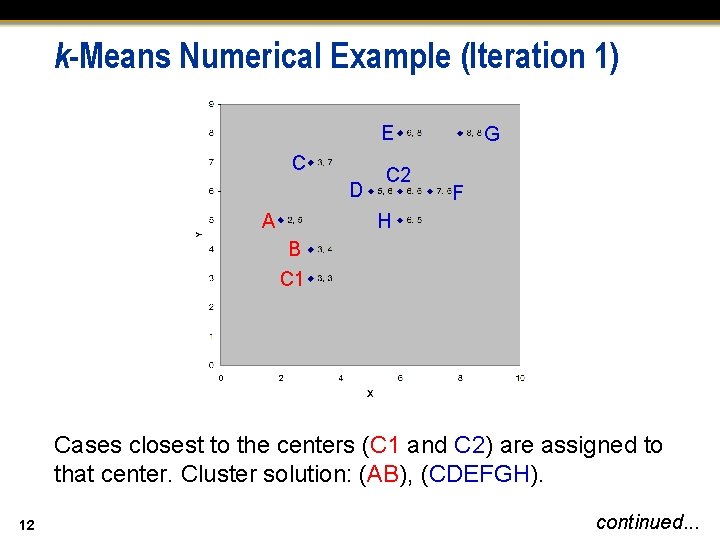

k-Means Numerical Example (Iteration 1)

Each case is assigned to the closer of the two centers, C1 and C2. Cluster solution: (AB), (CDEFGH).

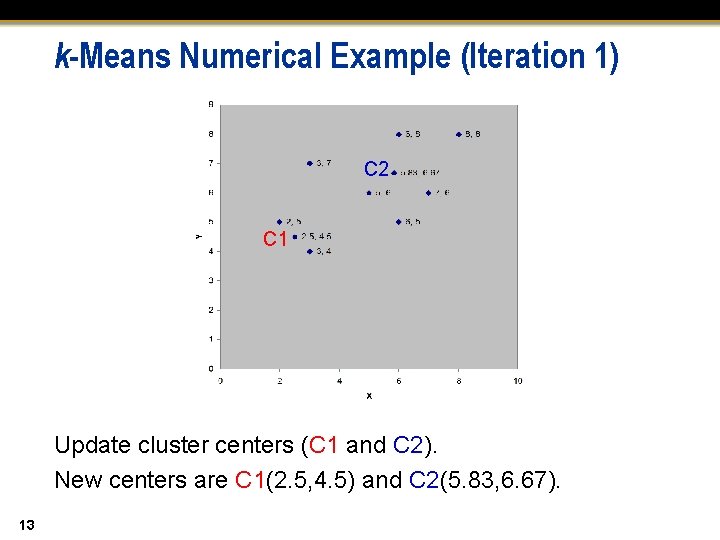

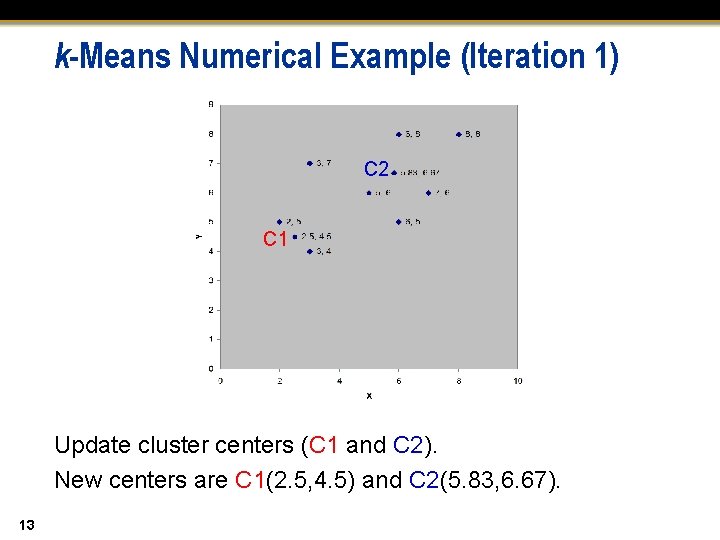

k-Means Numerical Example (Iteration 1)

Update the cluster centers C1 and C2 to the means of the cases assigned to them. The new centers are C1 (2.5, 4.5) and C2 (5.83, 6.67).
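The update step moves each center to the mean of the cases currently assigned to it. Write $S_k$ for the set of cases in cluster $k$ and $n_k$ for its size. The rule, and what it implies about the iteration-1 centers reported above, are:

$$
\mathbf{c}_k = \frac{1}{n_k} \sum_{i \in S_k} \mathbf{x}_i
$$

$$
\mathbf{x}_A + \mathbf{x}_B = 2\,(2.5,\ 4.5) = (5,\ 9), \qquad
\sum_{i \in \{C, D, E, F, G, H\}} \mathbf{x}_i = 6\,(5.83,\ 6.67) \approx (35,\ 40)
$$

The second product is approximate because C2 is reported to two decimals. Coordinate sums of (35, 40) give means of 35/6 and 40/6, which round to the reported (5.83, 6.67).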

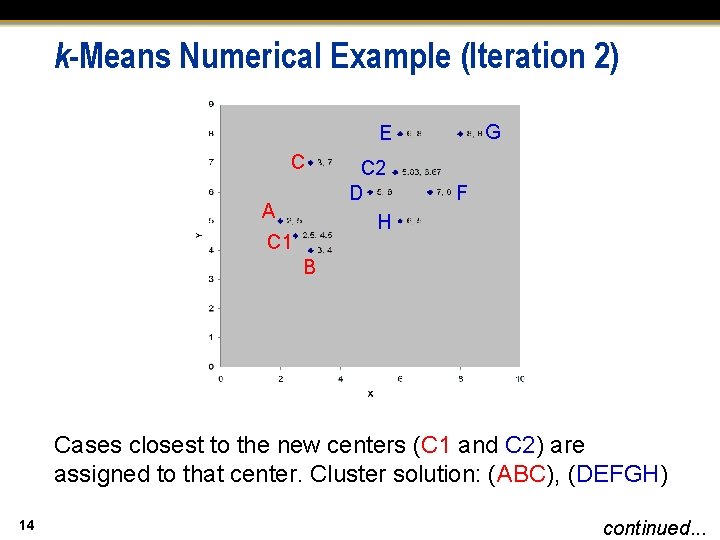

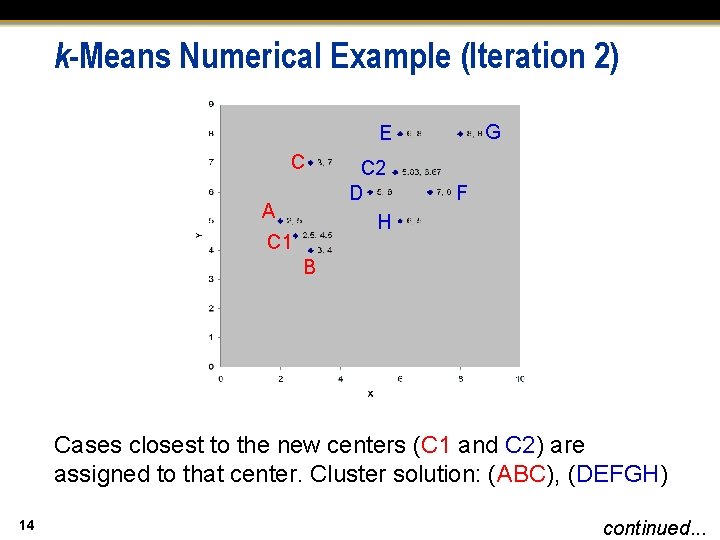

k-Means Numerical Example (Iteration 2)

Each case is assigned to the closer of the two new centers, C1 and C2. Cluster solution: (ABC), (DEFGH).

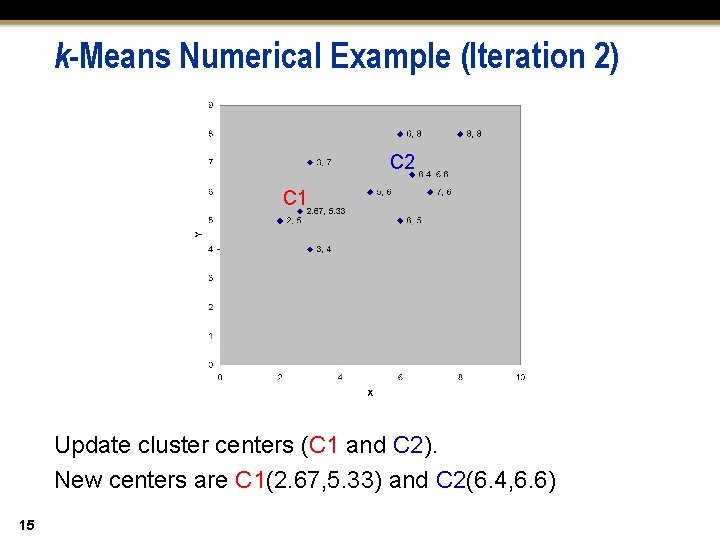

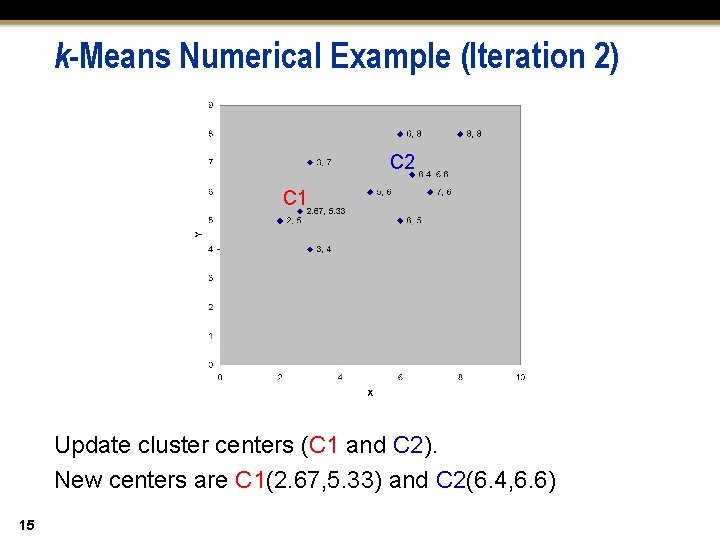

k-Means Numerical Example (Iteration 2)

Update the cluster centers C1 and C2. The new centers are C1 (2.67, 5.33) and C2 (6.4, 6.6).
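Between iterations 1 and 2, only case C changes cluster: (AB), (CDEFGH) becomes (ABC), (DEFGH). C's coordinates are not listed in the text, but they follow from the reported centers: 3 × (2.67, 5.33) - 2 × (2.5, 4.5) ≈ (3, 7).

The Python sketch below uses the standard running-mean identities to recompute both centers from the iteration-1 centers and cluster sizes. The C1 result only reproduces the value used to recover C. The C2 result, (6.4, 6.6), is an independent check against the text.

```python
def move_point(center_from, n_from, center_to, n_to, x):
    """Move case x from one cluster to another and update both means.

    Running-mean identities (assumes n_from > 1):
      removing x: new_mean = (n * mean - x) / (n - 1)
      adding x:   new_mean = (n * mean + x) / (n + 1)
    """
    new_from = tuple((n_from * m - xi) / (n_from - 1) for m, xi in zip(center_from, x))
    new_to = tuple((n_to * m + xi) / (n_to + 1) for m, xi in zip(center_to, x))
    return new_from, new_to


c1, c2 = (2.5, 4.5), (5.83, 6.67)  # iteration-1 centers as reported (C2 rounded)
x_c = (3, 7)                       # case C, recovered from the reported centers
# C leaves the 6-case cluster around C2 and joins the 2-case cluster around C1.
new_c2, new_c1 = move_point(c2, 6, c1, 2, x_c)
print([round(v, 2) for v in new_c1], [round(v, 2) for v in new_c2])
# [2.67, 5.33] [6.4, 6.6]
```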

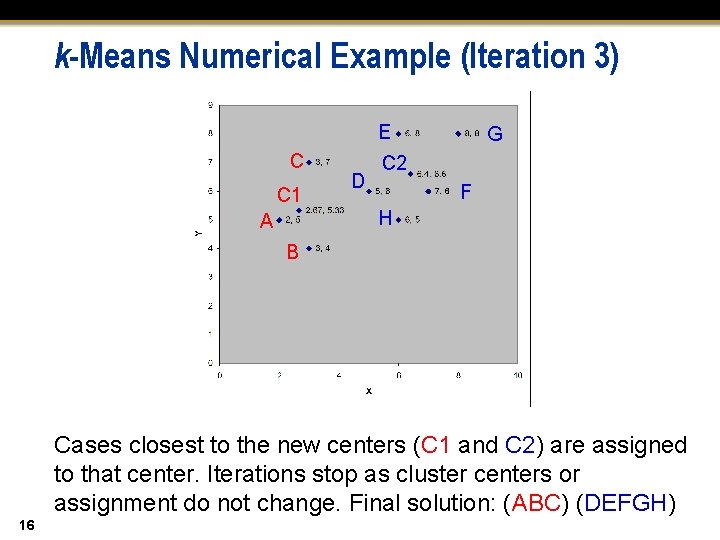

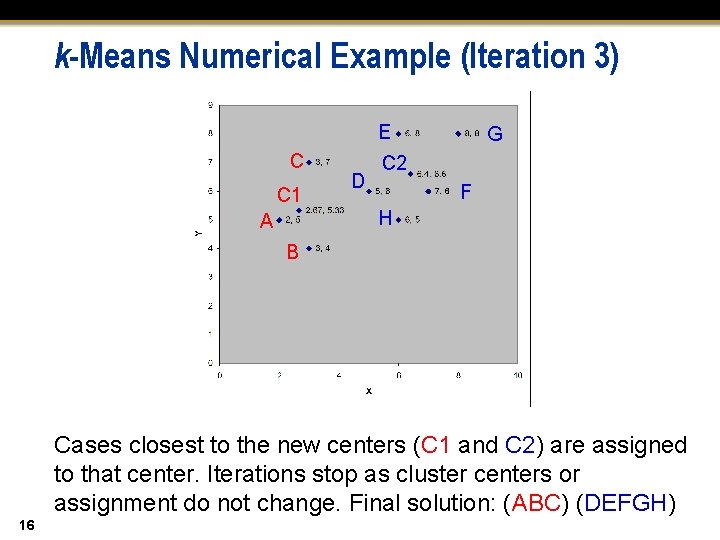

k-Means Numerical Example (Iteration 3)

Each case is assigned to the closer of the two new centers, C1 and C2. The iterations stop because neither the cluster centers nor the assignments change. Final solution: (ABC), (DEFGH).
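The assignment steps can be spot-checked for one case. Case C's coordinates, (3, 7), are recovered from the reported centers as 3 × (2.67, 5.33) - 2 × (2.5, 4.5). The other cases' coordinates appear only in the figures, so this Python sketch traces C alone through the three iterations and computes its Euclidean distance to each center.

```python
import math

x_c = (3, 7)  # case C, recovered from the reported centers
centers_by_iteration = {
    1: {"C1": (3, 3), "C2": (6, 6)},
    2: {"C1": (2.5, 4.5), "C2": (5.83, 6.67)},
    3: {"C1": (2.67, 5.33), "C2": (6.4, 6.6)},
}

assignments = {}
for iteration, centers in centers_by_iteration.items():
    # Distance from C to each center; C joins the nearer one.
    dists = {name: math.dist(x_c, c) for name, c in centers.items()}
    assignments[iteration] = min(dists, key=dists.get)
    print(iteration, {k: round(v, 2) for k, v in dists.items()}, "->", assignments[iteration])
```

C is nearer C2 in iteration 1 and nearer C1 afterwards. This matches its move from (CDEFGH) to (ABC) and its staying there in the final solution.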

Section 5.3 Applications of k-Means Clustering

Objectives

- State the variables and the business issues in the catalogue company data set.
- Use SAS Enterprise Miner to check the distributions of variables (bases) and apply transformations.
- Use SAS Enterprise Miner to conduct k-means cluster analysis on this data set.

A Few Cautions Before Beginning

Most of the caveats mentioned in discussing hierarchical clustering also apply to k-means clustering. These include the following:

- Selection of relevant clustering variables
- Pre-processing data to handle skewed distributions, outliers, and different measurement scales
- Checking the reliability and validity of cluster solutions

In addition, for k-means clustering, you may need to consider the following:

- Multiple starting seeds
- Pre-specified seeds obtained by running a hierarchical cluster analysis on the same data set
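One common way to handle sensitivity to starting seeds is to run k-means from several random starts and keep the solution with the smallest within-cluster sum of squares. The following is a minimal Python sketch. Euclidean distance and seeds drawn from the data points are assumptions; these are not SAS Enterprise Miner's settings. The toy data are hypothetical.

```python
import math
import random


def lloyd(points, centers, max_iter=100):
    """One k-means run from the given initial centers."""
    centers = list(centers)
    labels = None
    for _ in range(max_iter):
        new_labels = [
            min(range(len(centers)), key=lambda j: math.dist(p, centers[j]))
            for p in points
        ]
        if new_labels == labels:
            break
        labels = new_labels
        for j in range(len(centers)):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:
                centers[j] = tuple(sum(c) / len(members) for c in zip(*members))
    return labels, centers


def within_ss(points, labels, centers):
    """Total squared distance from each case to its own cluster center."""
    return sum(math.dist(p, centers[lab]) ** 2 for p, lab in zip(points, labels))


def best_of_restarts(points, k, n_starts=10, seed=0):
    """Run k-means from n_starts random seeds; keep the lowest within-SS solution."""
    rng = random.Random(seed)
    best = None
    for _ in range(n_starts):
        init = rng.sample(points, k)  # k distinct data points as starting seeds
        labels, centers = lloyd(points, init)
        score = within_ss(points, labels, centers)
        if best is None or score < best[0]:
            best = (score, labels, centers)
    return best


data = [(0, 0), (0, 1), (10, 10), (10, 11)]  # hypothetical
score, labels, centers = best_of_restarts(data, k=2)
print(score, labels)
```

To use pre-specified seeds from a hierarchical run instead, pass those cluster means to `lloyd` as the initial centers.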

Business Problem and Data Description

- ABC is a supplier of identification products serving 90,000+ customers in the USA.
- ABC wants to segment its customers based on their past and expected future transaction patterns with ABC, as well as selected firmographic variables.
  - ABC wants to consider between 2 and 10 segments.
- ABC wants to profile and understand the segments using the bases.
- ABC also wants to profile and validate the segments using the descriptors.

Plan of Analysis

SAS data set name: kmeans_demo.TR

1. Explore this data set using SAS Enterprise Miner. In particular, look at the distributions of the bases.
2. Apply transformations to the bases as appropriate.
3. Run k-means using SAS Enterprise Miner and interpret the results.
4. Profile the clusters using the bases only.
5. Profile and validate the clusters using the descriptors.

Checking Distributions and Handling Transformations

This demonstration illustrates how to use SAS Enterprise Miner to get a feel for the data, check distributions, and handle transformations.

Summary of Checking Distributions and Handling Transformations

- Of the six bases, three are numeric and three are categorical.
- The three categorical variables do not seem to have very rare classes.
- Of the three numeric variables, HDCNT_LAST and lt_st_sales show a large right skew.
  - Max. Normal transformations on these two variables fail to correct the skew.
  - Log transformations help to even out their distributions.
- For the variable tele_rank, Max. Normal selects a square-root transformation, which seems reasonable.
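A quick skewness calculation shows how much a log or square-root transformation tames a right-skewed variable. The following is a minimal Python sketch using the moment coefficient of skewness. The sample values are hypothetical, not ABC's data. `log1p` (that is, log(1 + x)) is an assumed variant that stays defined if a variable contains zeros.

```python
import math


def skewness(values):
    """Moment coefficient of skewness: mean((x - m)^3) / sd^3 (population form)."""
    n = len(values)
    mean = sum(values) / n
    m2 = sum((v - mean) ** 2 for v in values) / n
    m3 = sum((v - mean) ** 3 for v in values) / n
    return m3 / m2 ** 1.5


# Hypothetical right-skewed amounts (one very large value, as with sales data).
sales = [40, 120, 250, 300, 480, 900, 1500, 4000, 12000, 60000]
rooted = [math.sqrt(v) for v in sales]   # square-root transform (milder)
logged = [math.log1p(v) for v in sales]  # log(1 + x) transform (stronger)

for name, vals in [("raw", sales), ("sqrt", rooted), ("log", logged)]:
    print(name, round(skewness(vals), 2))
```

The square root reduces the skew only partly. The log brings it much closer to zero, which mirrors the choice of log transformations for the two heavily skewed bases.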

Applying k-Means

This demonstration illustrates how to run k-means clustering and interpret the results.

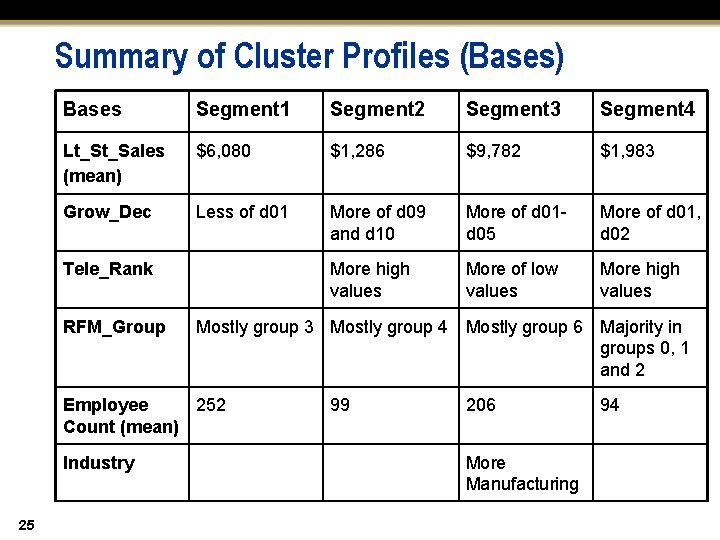

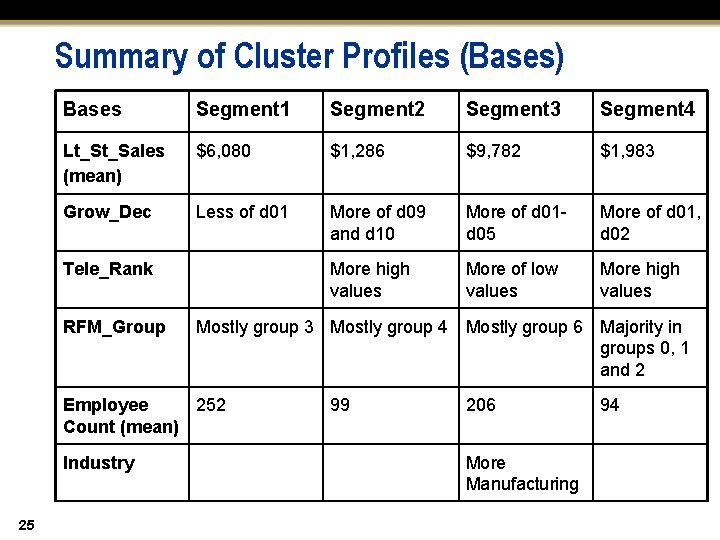

Summary of Cluster Profiles (Bases)

| Bases | Segment 1 | Segment 2 | Segment 3 | Segment 4 |
|---|---|---|---|---|
| Lt_St_Sales (mean) | $6,080 | $1,286 | $9,782 | $1,983 |
| Grow_Dec | Less of d01 | More of d09 and d10 | More of d01-d05 | More of d01, d02 |
| Tele_Rank | More high values | More low values | More high values | |
| RFM_Group | Mostly group 3 | Mostly group 4 | Mostly group 6 | Majority in groups 0, 1 and 2 |
| Employee Count (mean) | 252 | 99 | 206 | 94 |

Industry: one segment has more Manufacturing accounts.

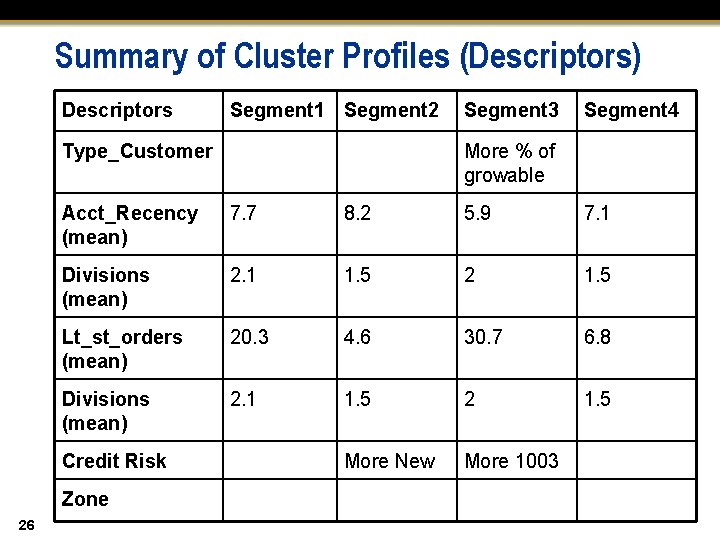

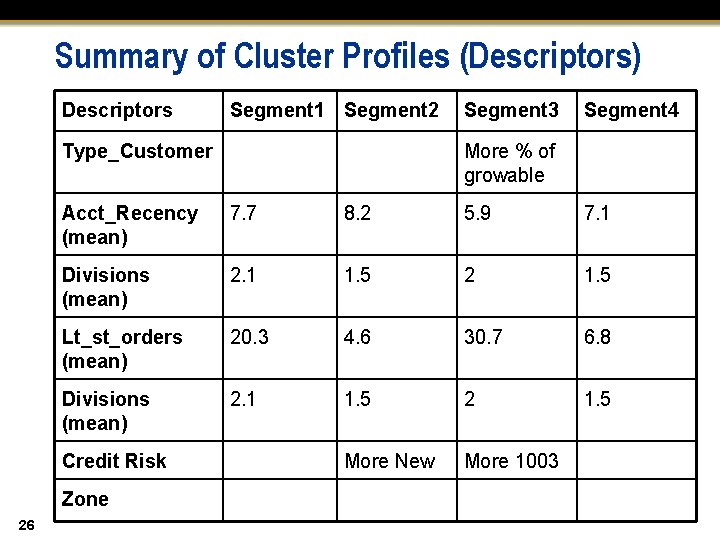

Summary of Cluster Profiles (Descriptors)

| Descriptors | Segment 1 | Segment 2 | Segment 3 | Segment 4 |
|---|---|---|---|---|
| Acct_Recency (mean) | 7.7 | 8.2 | 5.9 | 7.1 |
| Divisions (mean) | 2.1 | 1.5 | 2 | 1.5 |
| Lt_st_orders (mean) | 20.3 | 4.6 | 30.7 | 6.8 |

Type_Customer: one segment has a higher percentage of growable customers, and one has more New customers. Credit Risk Zone: one segment has more of zone 1003.

Where Do You Go From Here?

In practice, you should:

- Sort the data differently and rerun the cluster analysis to check for an order effect.
- Use different transformations on the bases.
- Trim (or Winsorize) outliers and atypical observations.
- Use a different method (you used Average), such as Ward’s or the Centroid method, to calculate distances.
- Force a different number of clusters (by switching from Automatic to User Specify in SAS Enterprise Miner and then specifying the number of clusters) and evaluate those solutions.
- Use other variables (such as brand- or product-line-specific data) to further profile and validate the cluster solutions.
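To check for an order effect, rerun the analysis on re-sorted data and measure how closely the two cluster solutions agree. The following is a minimal Python sketch using the Rand index: the share of case pairs that both solutions treat the same way, either together or apart. Because it compares pairs, it ignores cluster numbering, which can differ between runs. The Rand index is one choice among several agreement measures (the adjusted Rand index is a common alternative), and the memberships below are hypothetical.

```python
from itertools import combinations


def rand_index(labels_a, labels_b):
    """Fraction of case pairs on which two clusterings agree (same vs. different cluster)."""
    if len(labels_a) != len(labels_b):
        raise ValueError("both solutions must cover the same cases")
    agree = total = 0
    for i, j in combinations(range(len(labels_a)), 2):
        same_a = labels_a[i] == labels_a[j]
        same_b = labels_b[i] == labels_b[j]
        agree += (same_a == same_b)
        total += 1
    return agree / total


# Hypothetical memberships of six cases from two runs on differently sorted data.
run1 = [1, 1, 2, 2, 3, 3]
run2 = [2, 2, 1, 1, 3, 1]  # cluster numbers permuted, and the last case moved
print(round(rand_index(run1, run2), 3))
```

A value of 1.0 means the two runs produced the same partition, even if the cluster numbers differ.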

Section 5.4 Scoring New Data

Objectives

- Use scoring nodes to assign cluster memberships to new data based on the cluster model built earlier.
- Understand how the distances of an observation from the cluster centers can be used to assign that observation a probability of membership in each cluster.
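The exact distance-to-probability conversion used by the scoring node is not given here. One simple possibility, shown as an assumption, weights each cluster by the inverse of the observation's squared distance to its center and then normalizes the weights to sum to 1. This is the fuzzy c-means membership formula with fuzzifier m = 2. The observation below is hypothetical; the centers are the final ones from the numerical example.

```python
import math


def membership_probabilities(x, centers):
    """Inverse-squared-distance memberships (fuzzy c-means form with m = 2).

    Returns one probability per center; the probabilities sum to 1.
    """
    d2 = [math.dist(x, c) ** 2 for c in centers]
    zeros = [d == 0.0 for d in d2]
    if any(zeros):
        # An observation sitting exactly on a center belongs there with certainty.
        n_zero = sum(zeros)
        return [1.0 / n_zero if z else 0.0 for z in zeros]
    weights = [1.0 / d for d in d2]
    total = sum(weights)
    return [w / total for w in weights]


final_centers = [(2.67, 5.33), (6.4, 6.6)]  # C1 and C2 after convergence
p = membership_probabilities((4, 6), final_centers)  # hypothetical new observation
print([round(v, 3) for v in p])
```

The nearer center always receives the larger probability. The probabilities become more even as an observation approaches the boundary between clusters.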

Scoring New Data

Once a cluster model has been developed and validated, a firm typically continues to use that model for a while. Meanwhile, fresh (new) data keep pouring in. Therefore, important questions that need to be answered include:

- How do you cluster newly acquired accounts that made their first purchase after the cluster model was built?
- How do you handle fresh data from existing accounts that were used in clustering?
  - Should the fresh data be combined with the old data for these accounts?
  - If you combine fresh and old data, do you need to rerun and revalidate the cluster model?

Business Problem and Data Description

- ABC used the kmeans_demo.TR data to build and validate cluster models a year ago. In the last year (from the time the clusters were built until now), ABC acquired some new customers.
- The data set kmeans_new contains data on 4,000 new customers chosen at random from all of the new customers acquired by ABC. The variables are identical in the two data sets. ABC wants to assign cluster membership to these 4,000 new accounts.
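At its core, scoring applies the same transformations used when the model was built and then assigns each new record to its nearest stored center. The following is a minimal Python sketch of that idea, not SAS Enterprise Miner's score code. The stored center values are hypothetical, and only two bases (lt_st_sales with a log transform, tele_rank with a square root) are used for brevity. A real scoring step would also repeat any other preprocessing, such as standardization, applied before clustering.

```python
import math

# Hypothetical stored model: per-variable transforms and final cluster centers
# (in transformed units), saved when the clusters were built.
TRANSFORMS = {
    "lt_st_sales": math.log1p,  # log(1 + x), an assumed log transform
    "tele_rank": math.sqrt,     # square-root transform
}
CENTERS = {
    1: {"lt_st_sales": 8.7, "tele_rank": 2.0},
    2: {"lt_st_sales": 7.1, "tele_rank": 1.2},
}


def score(record):
    """Transform a new record as the training data were, then pick the nearest center."""
    z = {var: f(record[var]) for var, f in TRANSFORMS.items()}

    def distance(center):
        return math.sqrt(sum((z[var] - center[var]) ** 2 for var in TRANSFORMS))

    return min(CENTERS, key=lambda k: distance(CENTERS[k]))


# Hypothetical new customers.
new_customers = [
    {"lt_st_sales": 6000, "tele_rank": 4},
    {"lt_st_sales": 1200, "tele_rank": 1},
]
print([score(r) for r in new_customers])
```

Keeping the transforms and centers together as one stored model ensures that new accounts are measured on the same scale as the accounts the clusters were built from.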

Scoring New Data

This demonstration illustrates scoring new data with cluster memberships.