Chapter 5 CPU Scheduling Operating System Concepts 10

- Slides: 30

Chapter 5: CPU Scheduling Operating System Concepts – 10th Edition Silberschatz, Galvin and Gagne © 2018

Chapter 5: CPU Scheduling

- Basic Concepts
- Scheduling Criteria
- Scheduling Algorithms
- Thread Scheduling
- Multiple-Processor Scheduling
- Real-Time CPU Scheduling
- Operating Systems Examples
- Algorithm Evaluation

Objectives

- To introduce CPU scheduling, which is the basis for multiprogrammed operating systems
- To describe various CPU-scheduling algorithms
- To discuss evaluation criteria for selecting a CPU-scheduling algorithm for a particular system
- To examine the scheduling algorithms of several operating systems

Shortest-Job-First (SJF) Scheduling

- Associate with each process the length of its next CPU burst
  - Use these lengths to schedule the process with the shortest time
- SJF is optimal: it gives the minimum average waiting time for a given set of processes
  - The difficulty is knowing the length of the next CPU request
  - Could ask the user
- If two processes have the same next CPU burst length, FCFS scheduling is used to break the tie.
- Two schemes:
  - Nonpreemptive: once the CPU is given to a process, it cannot be preempted until it completes its CPU burst.
  - Preemptive: if a new process arrives with a CPU burst length less than the remaining time of the currently executing process, preempt. This scheme is known as Shortest-Remaining-Time-First (SRTF).

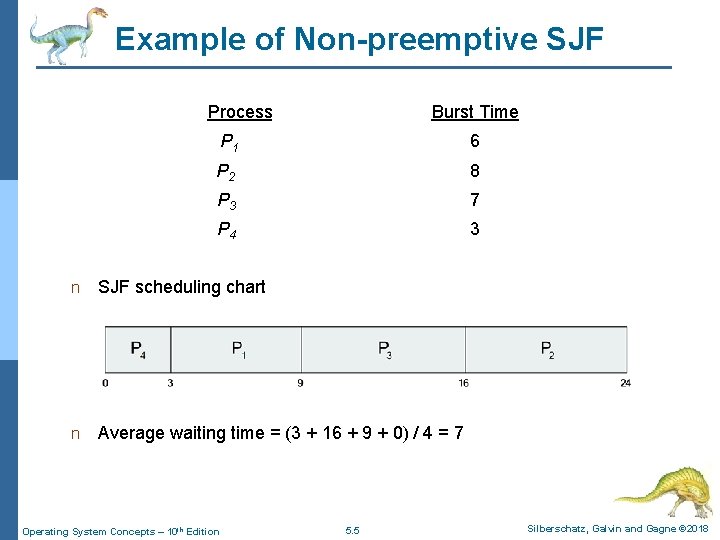

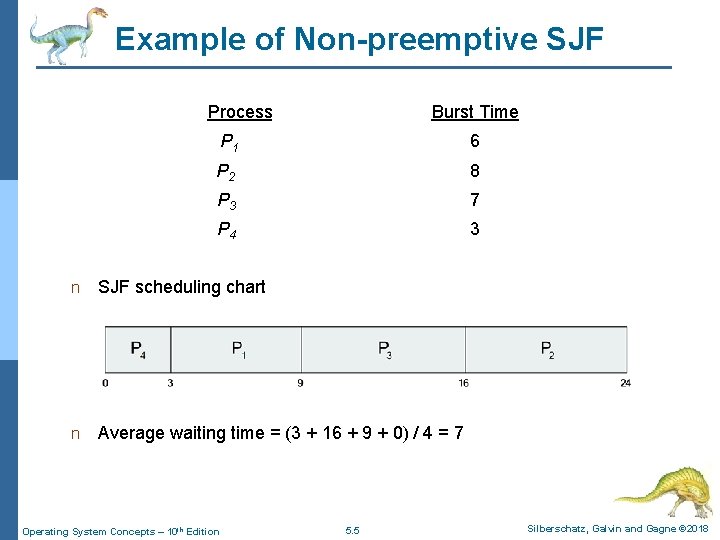

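Example of Non-preemptive SJF

| Process | Arrival Time | Burst Time |
|---------|--------------|------------|
| P1 | 0.0 | 6 |
| P2 | 2.0 | 8 |
| P3 | 4.0 | 7 |
| P4 | 5.0 | 3 |

- SJF scheduling chart: P4 (0-3), P1 (3-9), P3 (9-16), P2 (16-24)
- Average waiting time = (3 + 16 + 9 + 0) / 4 = 7
- Note: these waiting times treat all four processes as available at time 0. If the listed arrival times are honored, the order becomes P1, P4, P3, P2 and the average waiting time is (0 + 14 + 5 + 1) / 4 = 5.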
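The average above can be checked with a small simulation. The following is a minimal sketch, not code from the slides: the function name `sjf_average_wait` and the `MAX_PROCS` limit are assumptions made for illustration. It picks the shortest burst among the processes that have already arrived and breaks ties by earlier arrival.

```c
#include <stdbool.h>

#define MAX_PROCS 16 /* illustrative upper bound, not from the slides */

/* Average waiting time under nonpreemptive SJF.
 * arrival[i] and burst[i] describe process i.
 * Assumes 0 < n <= MAX_PROCS and positive burst lengths. */
double sjf_average_wait(int n, const double arrival[], const double burst[])
{
    bool done[MAX_PROCS] = { false };
    double now = 0.0, total_wait = 0.0;
    int finished = 0;

    while (finished < n) {
        int pick = -1;

        /* Shortest next burst among arrived processes; FCFS on ties. */
        for (int i = 0; i < n; i++) {
            if (done[i] || arrival[i] > now)
                continue;
            if (pick < 0 || burst[i] < burst[pick] ||
                (burst[i] == burst[pick] && arrival[i] < arrival[pick]))
                pick = i;
        }

        if (pick < 0) {
            /* CPU idle: advance the clock to the earliest pending arrival. */
            bool found = false;
            double next = 0.0;
            for (int i = 0; i < n; i++) {
                if (!done[i] && (!found || arrival[i] < next)) {
                    next = arrival[i];
                    found = true;
                }
            }
            now = next;
            continue;
        }

        total_wait += now - arrival[pick]; /* time spent in the ready queue */
        now += burst[pick];                /* runs to completion */
        done[pick] = true;
        finished++;
    }
    return total_wait / n;
}
```

With bursts 6, 8, 7, 3 and every arrival time set to 0, this sketch yields 7, matching the slide. With arrival times 0, 2, 4, 5 it yields 5.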

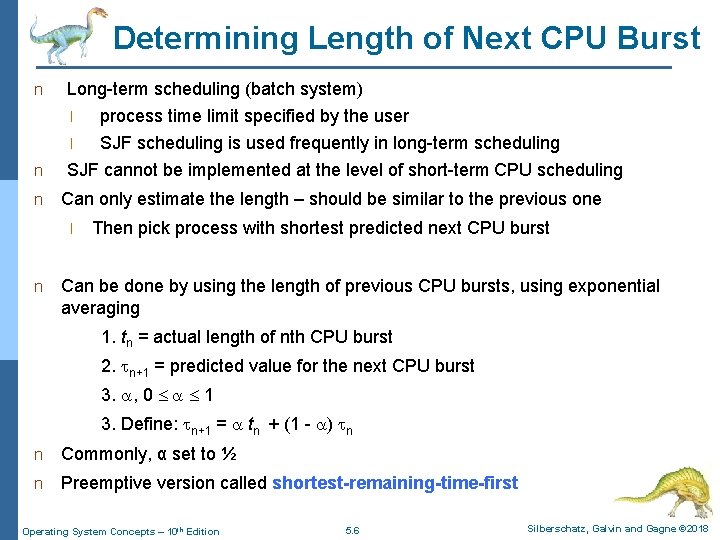

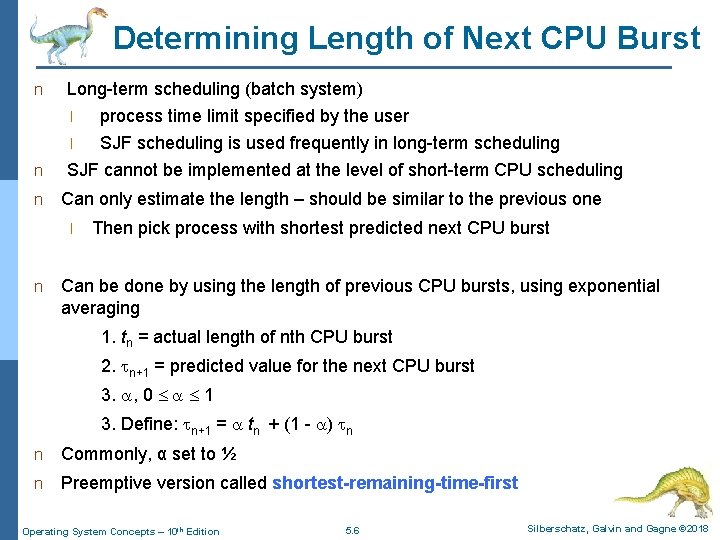

Determining Length of Next CPU Burst

- Long-term scheduling (batch system)
  - Process time limit specified by the user
  - SJF scheduling is used frequently in long-term scheduling
- SJF cannot be implemented at the level of short-term CPU scheduling
  - Can only estimate the length, which should be similar to the previous one
  - Then pick the process with the shortest predicted next CPU burst
- Can be done by using the length of previous CPU bursts, using exponential averaging
  1. $t_n$ = actual length of the $n$th CPU burst
  2. $\tau_{n+1}$ = predicted value for the next CPU burst
  3. $\alpha$, $0 \le \alpha \le 1$
  4. Define: $\tau_{n+1} = \alpha t_n + (1 - \alpha)\tau_n$
- Commonly, $\alpha$ is set to 1/2
- Preemptive version called shortest-remaining-time-first
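The exponential-averaging rule can be sketched in a few lines of C. The function names below are hypothetical, chosen for illustration. `predict_next_burst` applies the update once, and `predict_from_history` folds a whole burst history into one prediction, starting from an initial guess `tau0`.

```c
/* One step of exponential averaging:
 * tau_next = alpha * t_n + (1 - alpha) * tau_n, with 0 <= alpha <= 1. */
double predict_next_burst(double alpha, double t_n, double tau_n)
{
    return alpha * t_n + (1.0 - alpha) * tau_n;
}

/* Apply the update to each measured burst in chronological order,
 * starting from the initial guess tau0. */
double predict_from_history(double alpha, double tau0,
                            const double bursts[], int count)
{
    double tau = tau0;
    for (int i = 0; i < count; i++)
        tau = predict_next_burst(alpha, bursts[i], tau);
    return tau;
}
```

As an illustrative data set (an assumption, not taken from the slide text), with `alpha = 0.5`, `tau0 = 10`, and measured bursts 6, 4, 6, 4, 13, 13, 13, the successive predictions are 8, 6, 6, 5, 9, 11, 12.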

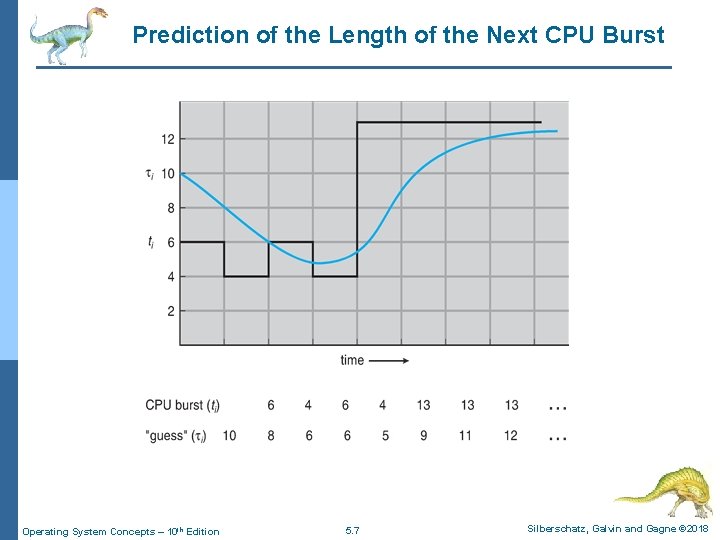

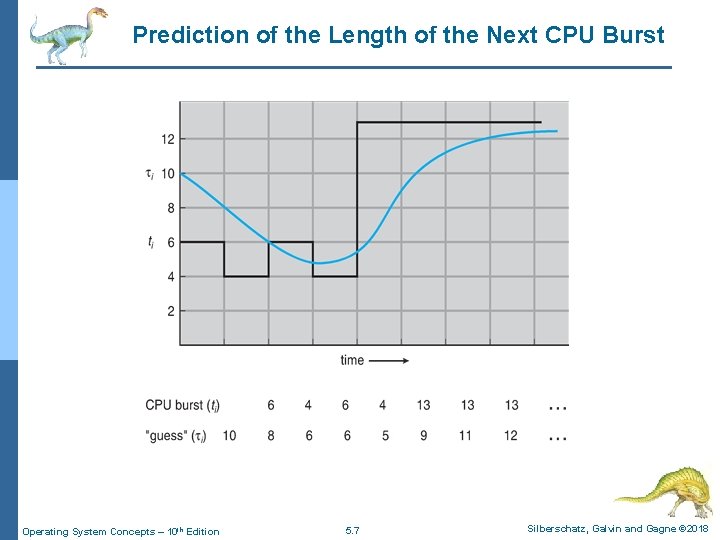

Prediction of the Length of the Next CPU Burst

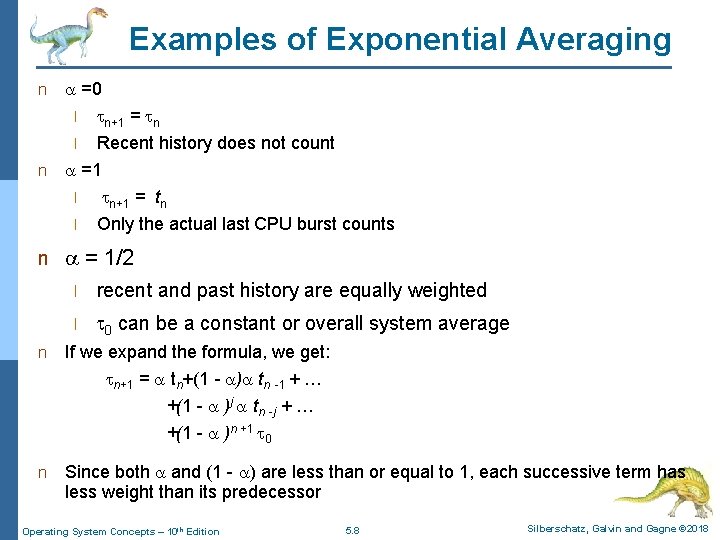

Examples of Exponential Averaging

- $\alpha = 0$
  - $\tau_{n+1} = \tau_n$
  - Recent history does not count
- $\alpha = 1$
  - $\tau_{n+1} = t_n$
  - Only the actual last CPU burst counts
- $\alpha = 1/2$
  - Recent and past history are equally weighted
- $\tau_0$ can be a constant or an overall system average
- If we expand the formula, we get:
  $\tau_{n+1} = \alpha t_n + (1 - \alpha)\alpha t_{n-1} + \dots + (1 - \alpha)^j \alpha t_{n-j} + \dots + (1 - \alpha)^{n+1}\tau_0$
- Since both $\alpha$ and $(1 - \alpha)$ are less than or equal to 1, each successive term has less weight than its predecessor
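The expanded sum can be evaluated directly to see how older bursts are discounted. This is a sketch under assumptions: `predict_expanded` is a hypothetical name, and `t[0..count-1]` holds the bursts in chronological order, so `t[count - 1]` is the most recent one.

```c
/* Expanded exponential average:
 *   sum over j of alpha * (1 - alpha)^j * t_{n-j}, plus (1 - alpha)^(n+1) * tau0,
 * where count = n + 1 bursts are stored oldest first in t[]. */
double predict_expanded(double alpha, double tau0, const double t[], int count)
{
    double decay = 1.0; /* (1 - alpha)^j, shrinking as j grows */
    double sum = 0.0;

    for (int j = 0; j < count; j++) {
        sum += alpha * decay * t[count - 1 - j]; /* j = 0 is the newest burst */
        decay *= 1.0 - alpha;
    }
    /* decay is now (1 - alpha)^count, the weight left for the initial guess. */
    return sum + decay * tau0;
}
```

With `alpha = 1` only the newest burst survives, and with `alpha = 0` only `tau0` survives, which matches the two limiting cases listed above.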

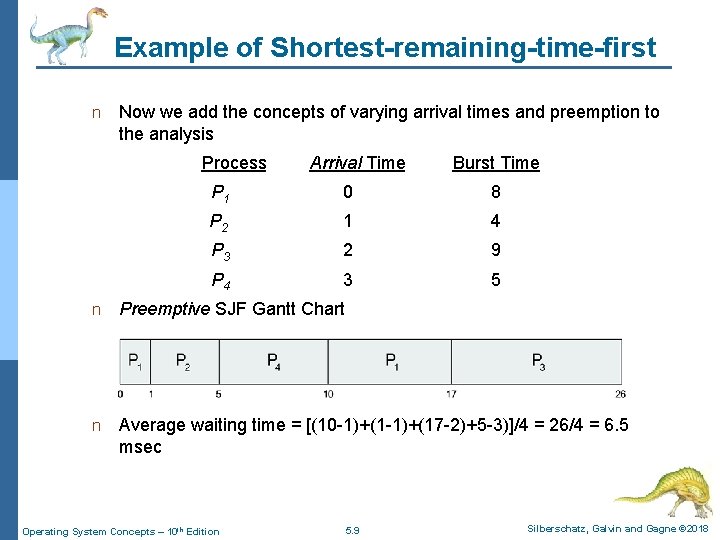

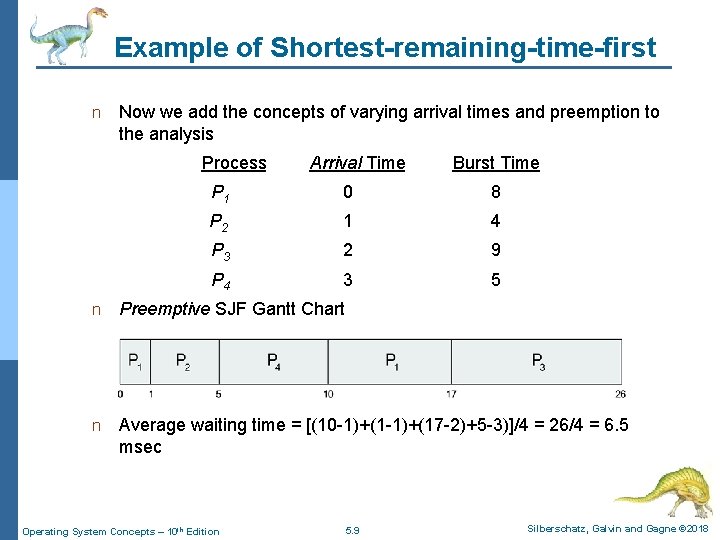

Example of Shortest-remaining-time-first

- Now we add the concepts of varying arrival times and preemption to the analysis

| Process | Arrival Time | Burst Time |
|---------|--------------|------------|
| P1 | 0 | 8 |
| P2 | 1 | 4 |
| P3 | 2 | 9 |
| P4 | 3 | 5 |

- Preemptive SJF Gantt chart: P1 (0-1), P2 (1-5), P4 (5-10), P1 (10-17), P3 (17-26)
- Average waiting time = [(10 - 1) + (1 - 1) + (17 - 2) + (5 - 3)] / 4 = 26/4 = 6.5 msec
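A tick-by-tick simulation is one simple way to reproduce the 6.5 msec figure. The sketch below is illustrative only: `srtf_average_wait` and `MAX_PROCS` are assumed names, times are integers, and ties go to the lower-numbered process.

```c
#define MAX_PROCS 16 /* illustrative upper bound, not from the slides */

/* Average waiting time under preemptive SJF (SRTF), simulated one time
 * unit at a time. Assumes 0 < n <= MAX_PROCS and every burst > 0. */
double srtf_average_wait(int n, const int arrival[], const int burst[])
{
    int remaining[MAX_PROCS];
    int left = n, now = 0;
    long total_wait = 0;

    for (int i = 0; i < n; i++)
        remaining[i] = burst[i];

    while (left > 0) {
        int pick = -1;

        /* Re-evaluate every tick, so a shorter arrival preempts at once. */
        for (int i = 0; i < n; i++) {
            if (remaining[i] == 0 || arrival[i] > now)
                continue;
            if (pick < 0 || remaining[i] < remaining[pick])
                pick = i;
        }

        now++;
        if (pick < 0)
            continue; /* idle tick: nothing has arrived yet */

        if (--remaining[pick] == 0) {
            /* waiting time = turnaround time - burst time */
            total_wait += (now - arrival[pick]) - burst[pick];
            left--;
        }
    }
    return (double)total_wait / n;
}
```

For arrivals 0, 1, 2, 3 and bursts 8, 4, 9, 5 the per-process waits are 9, 0, 15, and 2, giving 26/4 = 6.5.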

SRTF discussion

- SRTF pros and cons
  - Optimal (average response time) (+)
  - Hard to predict future (-)
  - Unfair (-)

Priority Scheduling

- A priority number (integer) is associated with each process
- The CPU is allocated to the process with the highest priority (smallest integer = highest priority)
  - Preemptive
  - Nonpreemptive
- Equal-priority processes are scheduled in FCFS order
- SJF is priority scheduling where priority is the inverse of the predicted next CPU burst time
- Problem: starvation. Low-priority processes may never execute
- Solution: aging. As time progresses, increase the priority of the process

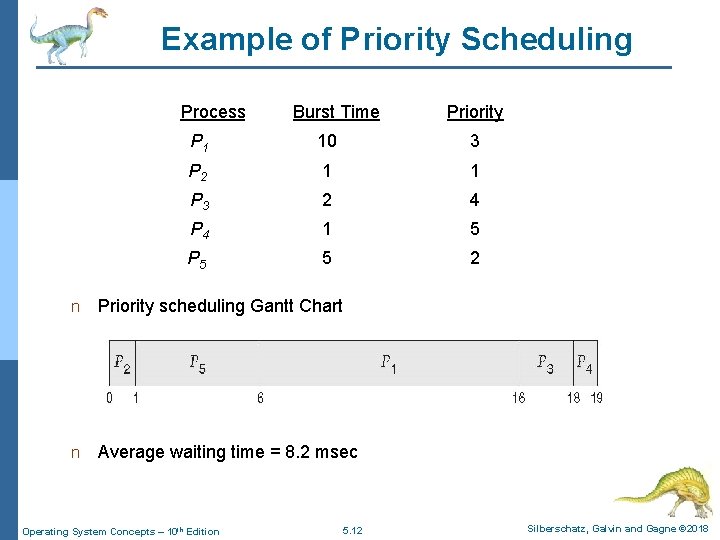

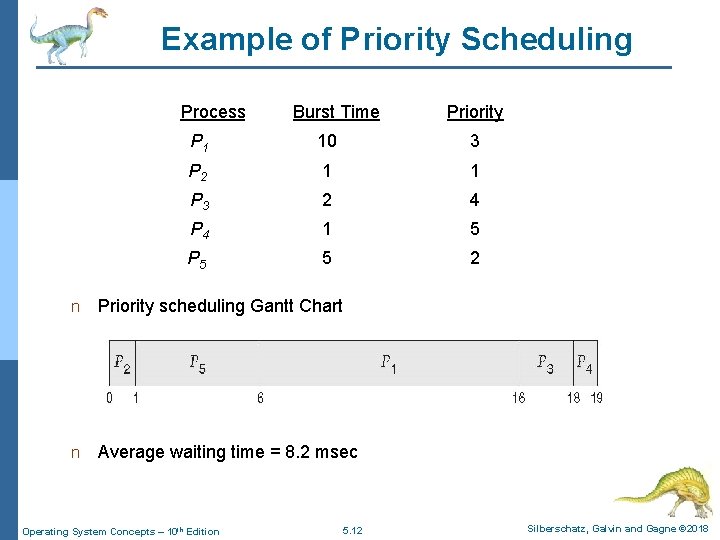

Example of Priority Scheduling

| Process | Burst Time | Priority |
|---------|------------|----------|
| P1 | 10 | 3 |
| P2 | 1 | 1 |
| P3 | 2 | 4 |
| P4 | 1 | 5 |
| P5 | 5 | 2 |

- Priority scheduling Gantt chart: P2 (0-1), P5 (1-6), P1 (6-16), P3 (16-18), P4 (18-19)
- Average waiting time = (6 + 0 + 16 + 18 + 1) / 5 = 8.2 msec
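The 8.2 msec average can be reproduced with a short sketch. It assumes, as the example appears to, that all processes arrive at time 0 and scheduling is nonpreemptive. `priority_average_wait` and `MAX_PROCS` are hypothetical names. A smaller integer means a higher priority, and ties go to the lower-numbered process.

```c
#include <stdbool.h>

#define MAX_PROCS 16 /* illustrative upper bound, not from the slides */

/* Average waiting time under nonpreemptive priority scheduling when all
 * processes are ready at time 0. Assumes 0 < n <= MAX_PROCS. */
double priority_average_wait(int n, const int burst[], const int priority[])
{
    bool done[MAX_PROCS] = { false };
    int now = 0;
    long total_wait = 0;

    for (int round = 0; round < n; round++) {
        int pick = -1;

        /* Smallest priority number wins; index order breaks ties (FCFS). */
        for (int i = 0; i < n; i++) {
            if (done[i])
                continue;
            if (pick < 0 || priority[i] < priority[pick])
                pick = i;
        }

        total_wait += now;  /* it has waited since time 0 */
        now += burst[pick]; /* runs to completion */
        done[pick] = true;
    }
    return (double)total_wait / n;
}
```

For the table above the waits are 6, 0, 16, 18, and 1, which sum to 41 and average to 8.2.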

Round Robin (RR)

- Each process gets a small unit of CPU time (time quantum q), usually 10-100 milliseconds. After this time has elapsed, the process is preempted and added to the end of the ready queue.
- RR scheduling is implemented as a FIFO queue of processes.
- New processes are added to the tail of the ready queue.
- A process may itself leave the CPU if its CPU burst is less than 1 quantum; otherwise the timer will cause an interrupt and the process will be put at the tail of the queue.

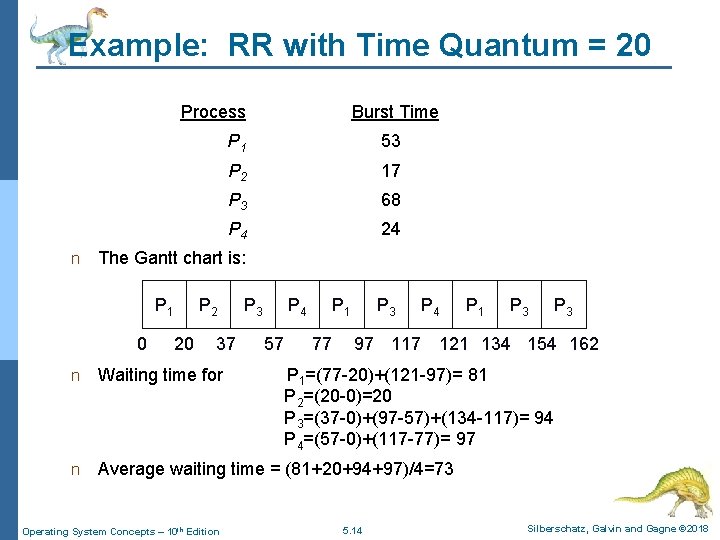

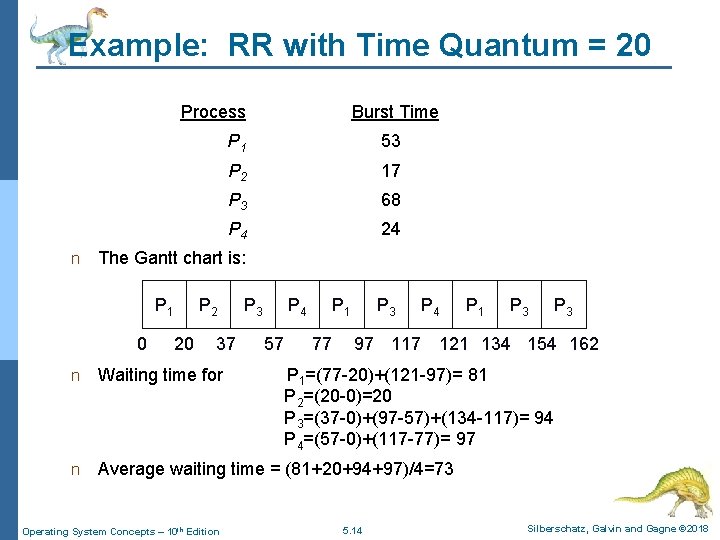

Example: RR with Time Quantum = 20

| Process | Burst Time |
|---------|------------|
| P1 | 53 |
| P2 | 17 |
| P3 | 68 |
| P4 | 24 |

- The Gantt chart is: P1 (0-20), P2 (20-37), P3 (37-57), P4 (57-77), P1 (77-97), P3 (97-117), P4 (117-121), P1 (121-134), P3 (134-154), P3 (154-162)
- Waiting time for
  - P1 = (77 - 20) + (121 - 97) = 81
  - P2 = (20 - 0) = 20
  - P3 = (37 - 0) + (97 - 57) + (134 - 117) = 94
  - P4 = (57 - 0) + (117 - 77) = 97
- Average waiting time = (81 + 20 + 94 + 97) / 4 = 73
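The per-process waiting times can be reproduced with a small round-robin sketch. It assumes all processes arrive at time 0 in index order and that context switches cost nothing. Under those assumptions, cycling over the indices is equivalent to a FIFO ready queue. `rr_waiting_times` and `MAX_PROCS` are illustrative names.

```c
#define MAX_PROCS 16 /* illustrative upper bound, not from the slides */

/* Fill wait[] with each process's total waiting time under round robin.
 * Assumes 0 < n <= MAX_PROCS, all arrivals at time 0, every burst > 0,
 * quantum > 0, and zero context-switch cost. */
void rr_waiting_times(int n, const int burst[], int quantum, int wait[])
{
    int remaining[MAX_PROCS];
    int last_left[MAX_PROCS]; /* time at which the process last left the CPU */
    int left = n, now = 0;

    for (int i = 0; i < n; i++) {
        remaining[i] = burst[i];
        last_left[i] = 0;
        wait[i] = 0;
    }

    while (left > 0) {
        for (int i = 0; i < n; i++) {
            if (remaining[i] == 0)
                continue;

            int slice = remaining[i] < quantum ? remaining[i] : quantum;

            wait[i] += now - last_left[i]; /* time spent queued since last run */
            now += slice;
            remaining[i] -= slice;
            last_left[i] = now;
            if (remaining[i] == 0)
                left--;
        }
    }
}
```

With bursts 53, 17, 68, 24 and a quantum of 20, `wait[]` comes out as 81, 20, 94, 97.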

Round Robin (RR)

- Typically, higher average turnaround than SJF, but better response.
- Round-robin pros and cons:
  - Better for short jobs, fair (+)
  - Context-switching time adds up for long jobs (-)

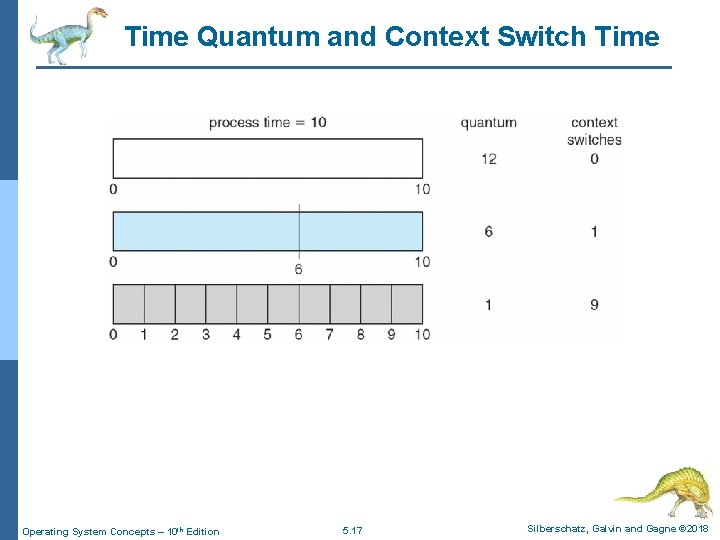

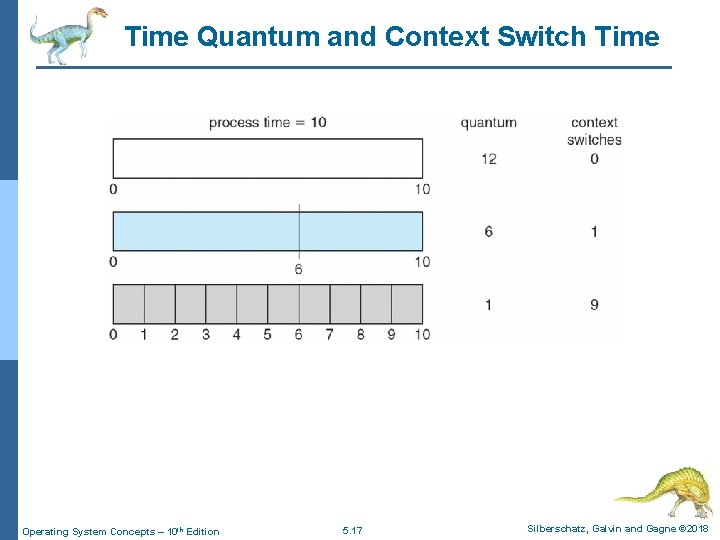

Round Robin (RR)

- If there are n processes in the ready queue and the time quantum is q, then each process gets 1/n of the CPU time in chunks of at most q time units at once.
  - No process waits more than (n-1)q time units.
- Example
  - 5 processes, time quantum = 20 ms: each process gets up to 20 ms in every 100 ms and waits at most 80 ms for its next turn.
- Performance
  - q large ⇒ behaves like FCFS
  - q small ⇒ processor sharing: it appears as though each of the n processes has its own processor running at 1/n the speed of the real processor
  - q must be large with respect to the context-switch time, otherwise overhead is too high.
  - q is usually 10 ms to 100 ms; a context switch takes < 10 µsec

Time Quantum and Context Switch Time

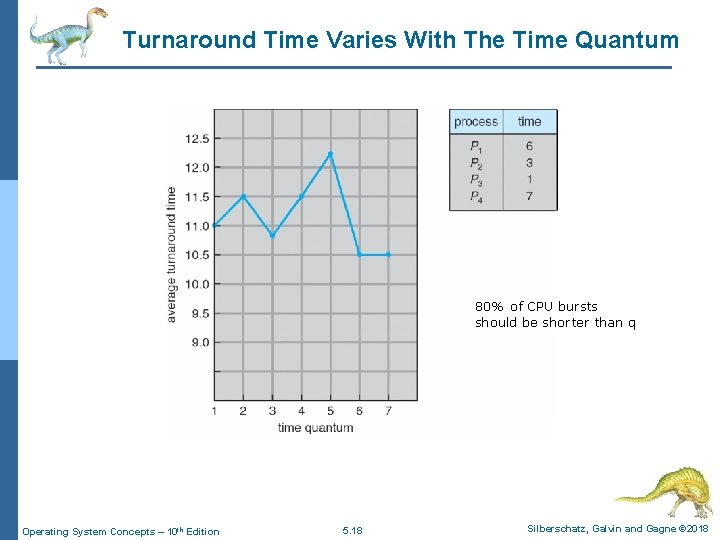

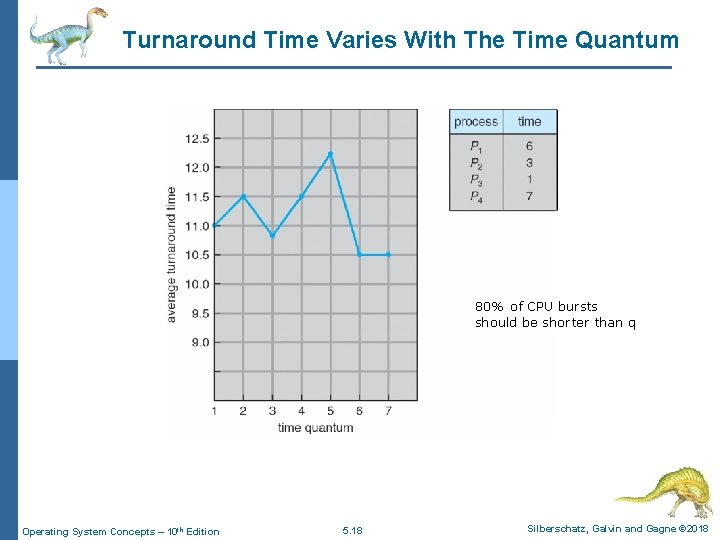

Turnaround Time Varies With The Time Quantum

- 80% of CPU bursts should be shorter than q

Turnaround Time Varies With The Time Quantum

- The average turnaround time can be improved if most processes finish their next CPU burst in a single time quantum.
- Example
  - 3 processes, CPU burst = 10 for each
    - quantum = 1: average turnaround time = 29
    - quantum = 10: average turnaround time = 20
    - If context-switch time is added, the average turnaround time increases further for a smaller time quantum, because more context switches occur.
- A rule of thumb
  - 80 percent of the CPU bursts should be shorter than the time quantum.
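The two averages in the example can be checked with a short sketch. It assumes all processes arrive at time 0 and ignores context-switch cost. `rr_average_turnaround` and `MAX_PROCS` are hypothetical names.

```c
#define MAX_PROCS 16 /* illustrative upper bound, not from the slides */

/* Average turnaround time under round robin when all processes arrive at
 * time 0. Assumes 0 < n <= MAX_PROCS, every burst > 0, quantum > 0, and
 * zero context-switch cost. */
double rr_average_turnaround(int n, const int burst[], int quantum)
{
    int remaining[MAX_PROCS];
    int left = n, now = 0;
    long total = 0;

    for (int i = 0; i < n; i++)
        remaining[i] = burst[i];

    while (left > 0) {
        for (int i = 0; i < n; i++) {
            if (remaining[i] == 0)
                continue;

            int slice = remaining[i] < quantum ? remaining[i] : quantum;

            now += slice;
            remaining[i] -= slice;
            if (remaining[i] == 0) {
                total += now; /* turnaround = completion time - arrival (0) */
                left--;
            }
        }
    }
    return (double)total / n;
}
```

With three bursts of 10, a quantum of 1 gives completion times 28, 29, 30 (average 29), and a quantum of 10 gives 10, 20, 30 (average 20).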

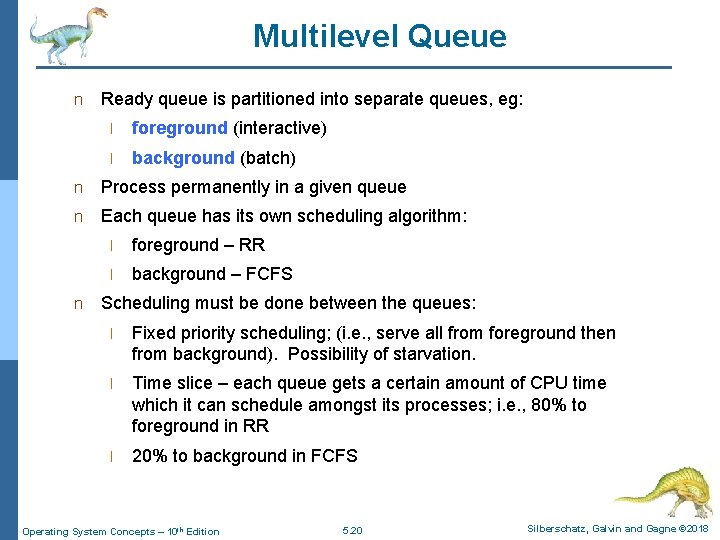

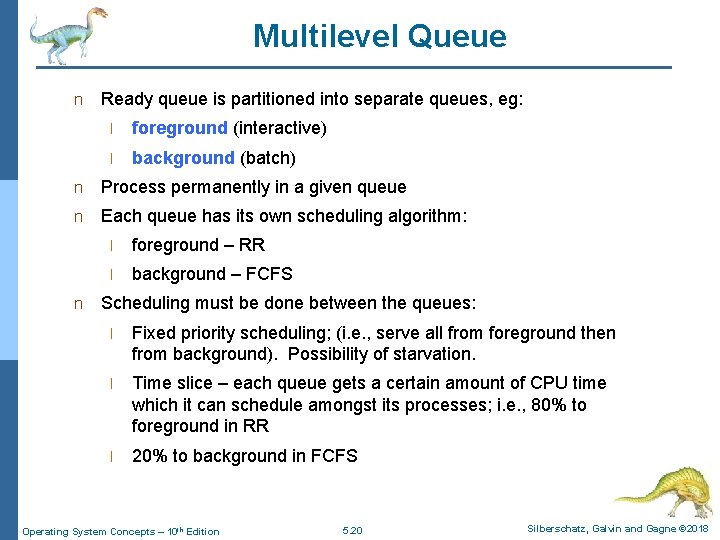

Multilevel Queue

- Ready queue is partitioned into separate queues, e.g.:
  - foreground (interactive)
  - background (batch)
- Each process is permanently assigned to a given queue
- Each queue has its own scheduling algorithm:
  - foreground: RR
  - background: FCFS
- Scheduling must be done between the queues:
  - Fixed priority scheduling (i.e., serve all from foreground, then from background). Possibility of starvation.
  - Time slice: each queue gets a certain amount of CPU time which it can schedule amongst its processes, e.g., 80% to foreground in RR and 20% to background in FCFS

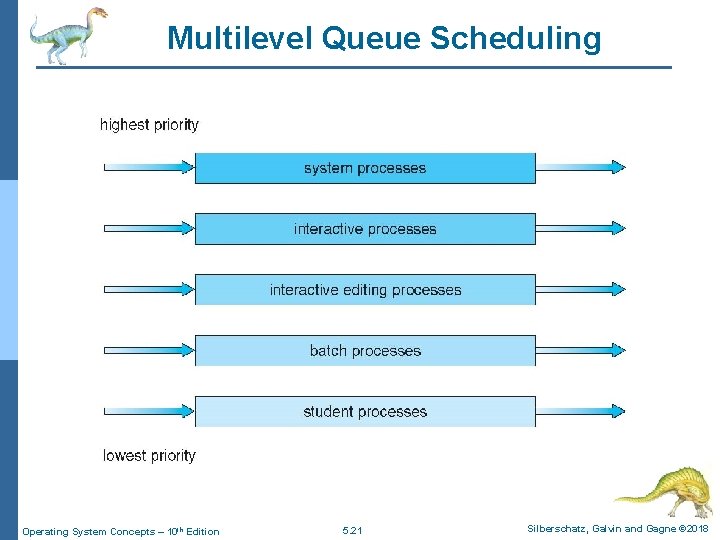

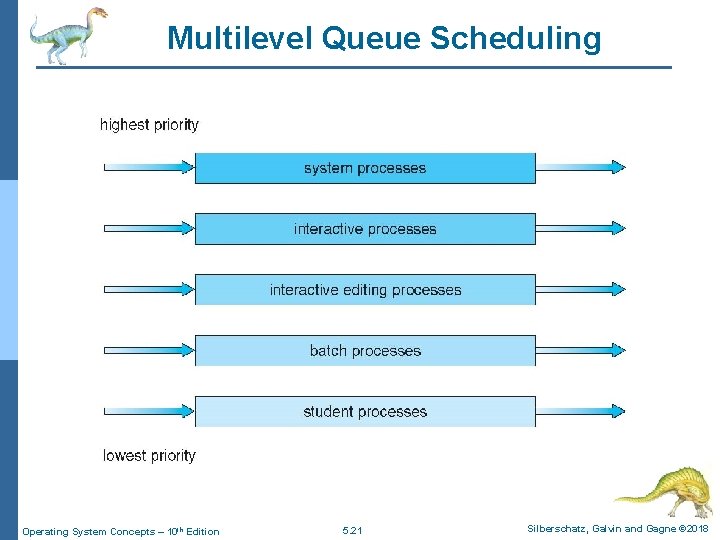

Multilevel Queue Scheduling

Multilevel Feedback Queue

- A process can move between the various queues; aging can be implemented this way
  - CPU-bound processes are moved to a lower-priority queue.
  - I/O-bound and interactive processes get the higher-priority queue.
  - A process that waits too long in a lower-priority queue is moved to a higher-priority queue.
- Multilevel-feedback-queue scheduler defined by the following parameters:
  - number of queues
  - scheduling algorithms for each queue
  - method used to determine when to upgrade a process
  - method used to determine when to demote a process
  - method used to determine which queue a process will enter when that process needs service

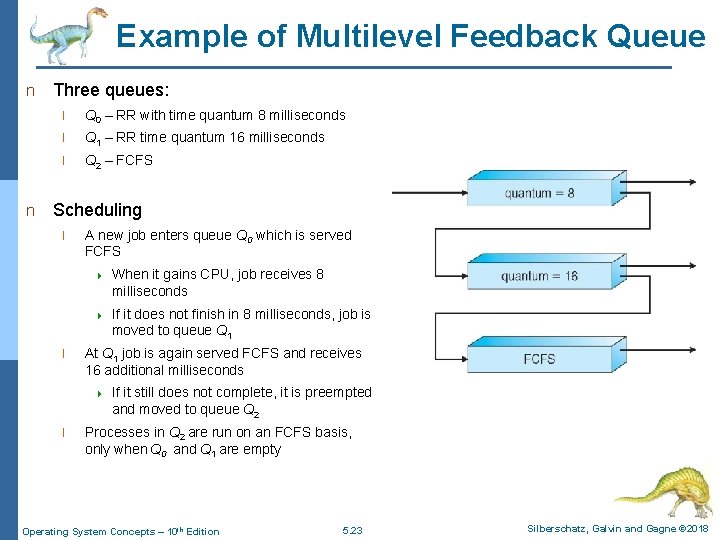

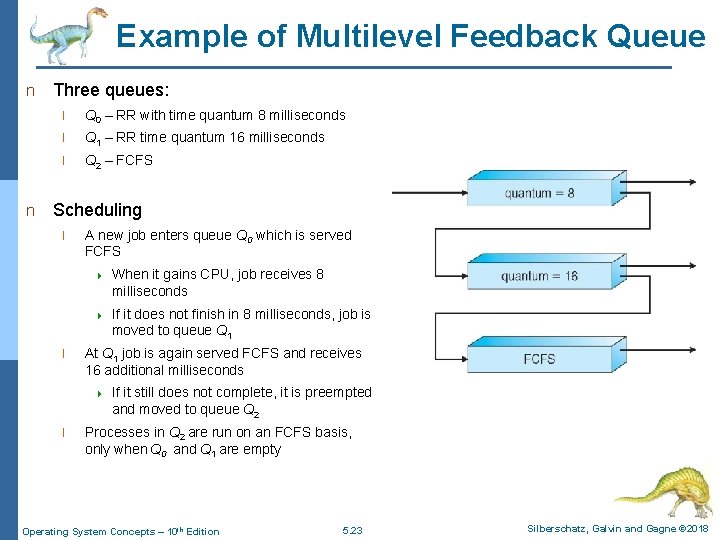

Example of Multilevel Feedback Queue

- Three queues:
  - Q0: RR with time quantum 8 milliseconds
  - Q1: RR with time quantum 16 milliseconds
  - Q2: FCFS
- Scheduling
  - A new job enters queue Q0, which is served FCFS
    - When it gains the CPU, the job receives 8 milliseconds
    - If it does not finish in 8 milliseconds, the job is moved to queue Q1
  - At Q1 the job is again served FCFS and receives 16 additional milliseconds
    - If it still does not complete, it is preempted and moved to queue Q2
  - Processes in Q2 are run on an FCFS basis, only when Q0 and Q1 are empty
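The demotion rule in this example can be sketched as a single "run one turn" step. This simplified sketch follows one CPU-bound job and ignores competing jobs, I/O, and promotion. `struct job` and `mlfq_run_turn` are names made up for illustration.

```c
/* A job tracked by the three-queue example: level 0, 1, or 2. */
struct job {
    int remaining_ms; /* CPU time still needed */
    int level;        /* current queue: 0 = Q0, 1 = Q1, 2 = Q2 */
};

/* Give the job one turn on the CPU at its current level.
 * Returns the number of milliseconds consumed. A job that uses its whole
 * quantum without finishing is demoted one level; Q2 runs to completion. */
int mlfq_run_turn(struct job *j)
{
    static const int quantum_ms[] = { 8, 16 }; /* Q0 and Q1 quanta */
    int slice;

    if (j->level < 2 && j->remaining_ms > quantum_ms[j->level]) {
        slice = quantum_ms[j->level];
        j->remaining_ms -= slice;
        j->level++; /* did not finish within the quantum: demote */
    } else {
        slice = j->remaining_ms; /* finishes in this turn */
        j->remaining_ms = 0;
    }
    return slice;
}
```

For instance, a job needing 30 ms runs 8 ms in Q0, 16 ms in Q1, and its last 6 ms in Q2. A job needing 24 ms finishes in Q1 and never reaches Q2.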

Fairness

- Unfairness in scheduling:
  - STCF *must* be unfair to be optimal.
  - Favor I/O jobs? That penalizes CPU-bound jobs.
- How do we implement fairness?
  - Could give each queue a fraction of the CPU.
    - But this isn't always fair. What if there's one long-running job and 100 short-running ones?
  - Could adjust priorities: increase the priority of jobs as they don't get service. This is what's done in UNIX.
    - The problem is that this is ad hoc: at what rate should you increase priorities?
    - And, as the system gets overloaded, no job gets CPU time, so everyone increases in priority.
    - The result is that interactive jobs suffer: both short and long jobs have high priority!

Lottery scheduling: random simplicity

- Give every job some number of lottery tickets
- On each time slice, randomly pick a winning ticket.
- On average, CPU time is proportional to the number of tickets given to each job.
- How do you assign tickets?
  - To approximate SRTF, short-running jobs get more, long-running jobs get fewer.
  - To avoid starvation, every job gets at least one ticket (so everyone makes progress).
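Picking the winner for a time slice amounts to walking the jobs until the running ticket count passes the drawn number. The sketch below takes the draw as a parameter so it stays deterministic. `lottery_winner` is a hypothetical name, and a real scheduler would supply its own random draw in the range [0, total tickets).

```c
/* Return the index of the job holding ticket number `draw`, where tickets
 * are numbered 0 .. total-1 and job i owns tickets[i] consecutive numbers.
 * Returns -1 if draw is outside that range. */
int lottery_winner(int n, const int tickets[], int draw)
{
    int upto = 0; /* tickets counted so far */

    if (draw < 0)
        return -1;
    for (int i = 0; i < n; i++) {
        upto += tickets[i];
        if (draw < upto)
            return i;
    }
    return -1;
}
```

With ticket counts 10, 1, 1, draws 0 through 9 select job 0, draw 10 selects job 1, and draw 11 selects job 2. A job with more tickets therefore wins proportionally more slices.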

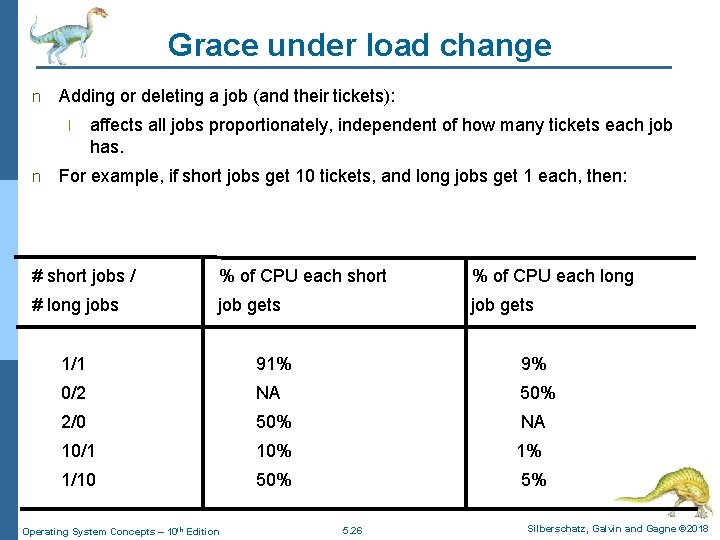

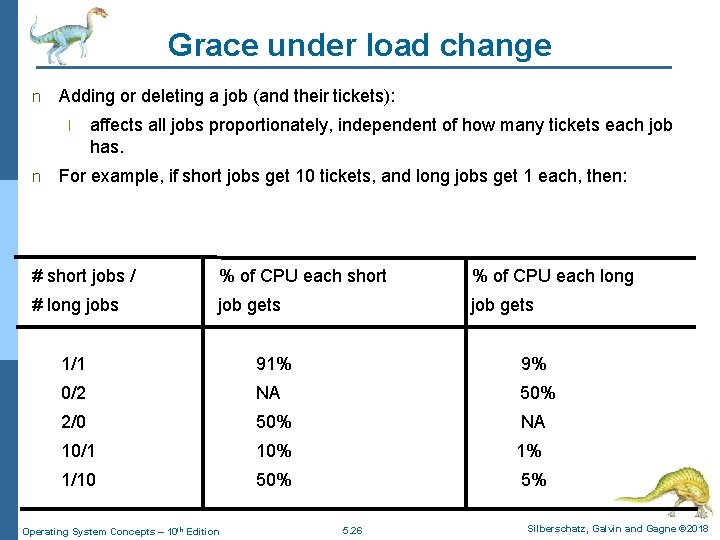

Grace under load change

- Adding or deleting a job (and its tickets) affects all jobs proportionally, independent of how many tickets each job has.
- For example, if short jobs get 10 tickets and long jobs get 1 each, then:

| # short jobs / # long jobs | % of CPU each short job gets | % of CPU each long job gets |
|----------------------------|------------------------------|-----------------------------|
| 1/1 | 91% | 9% |
| 0/2 | NA | 50% |
| 2/0 | 50% | NA |
| 10/1 | 10% | 1% |
| 1/10 | 50% | 5% |
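The table entries follow from dividing a job's tickets by the total number of tickets in the system. The sketch below uses the ticket counts from the example: 10 per short job and 1 per long job. The function and constant names are assumptions made for illustration.

```c
enum { SHORT_TICKETS = 10, LONG_TICKETS = 1 }; /* ticket counts from the example */

/* Total tickets held by n_short short jobs and n_long long jobs. */
static int total_tickets(int n_short, int n_long)
{
    return n_short * SHORT_TICKETS + n_long * LONG_TICKETS;
}

/* Expected fraction of the CPU each short job receives.
 * Assumes at least one job exists. */
double short_job_share(int n_short, int n_long)
{
    return (double)SHORT_TICKETS / total_tickets(n_short, n_long);
}

/* Expected fraction of the CPU each long job receives.
 * Assumes at least one job exists. */
double long_job_share(int n_short, int n_long)
{
    return (double)LONG_TICKETS / total_tickets(n_short, n_long);
}
```

For 1 short and 1 long job the shares are 10/11 and 1/11, about 91% and 9%. For 10 short and 1 long they are 10/101 and 1/101, which the table rounds to 10% and 1%.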

Thread Scheduling

- Distinction between user-level and kernel-level threads
- When threads are supported, threads are scheduled, not processes
- In the many-to-one and many-to-many models, the thread library schedules user-level threads to run on an LWP
  - Known as process-contention scope (PCS), since scheduling competition is within the process
  - Typically done via priorities set by the programmer, which are not adjusted by the thread library
  - The scheduler preempts the currently running thread in favor of a higher-priority thread
  - No guarantee of time slicing among threads of equal priority
- A kernel thread scheduled onto an available CPU uses system-contention scope (SCS): competition among all threads in the system
  - Systems using the one-to-one model, such as Windows and Linux, schedule threads using only SCS

Pthread Scheduling

- API allows specifying either PCS or SCS during thread creation
  - `PTHREAD_SCOPE_PROCESS` schedules threads using PCS scheduling
  - `PTHREAD_SCOPE_SYSTEM` schedules threads using SCS scheduling
- In the many-to-many model, the `PTHREAD_SCOPE_PROCESS` policy schedules user-level threads onto available LWPs
  - The number of LWPs is maintained by the thread library
- The `PTHREAD_SCOPE_SYSTEM` scheduling policy will create and bind an LWP for each user-level thread
- The Pthread API provides two functions for setting and getting the contention scope policy:
  - `pthread_attr_setscope(pthread_attr_t *attr, int scope)`
  - `pthread_attr_getscope(pthread_attr_t *attr, int *scope)`
- Can be limited by the OS: Linux and Mac OS X only allow `PTHREAD_SCOPE_SYSTEM`

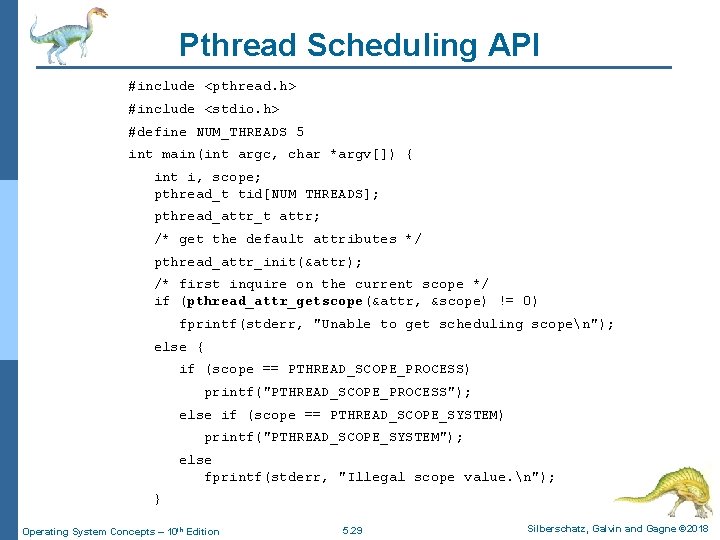

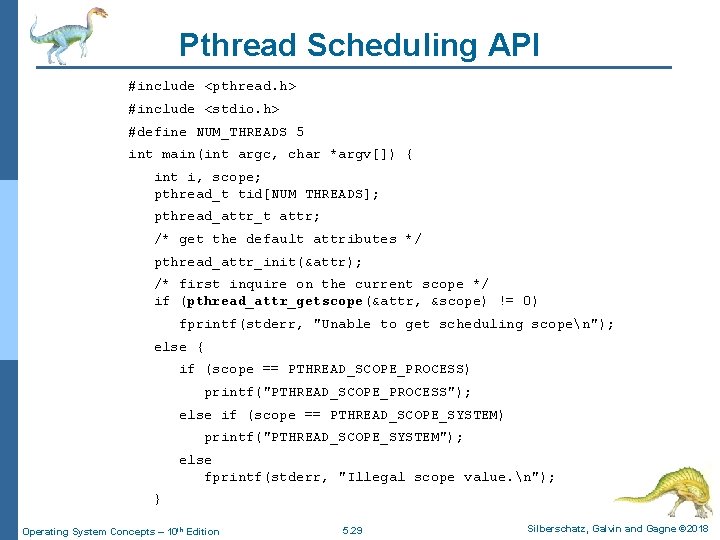

Pthread Scheduling API

```c
#include <pthread.h>
#include <stdio.h>

#define NUM_THREADS 5

/* Each thread will begin control in this function */
void *runner(void *param);

int main(int argc, char *argv[])
{
    int i, scope;
    pthread_t tid[NUM_THREADS];
    pthread_attr_t attr;

    /* get the default attributes */
    pthread_attr_init(&attr);

    /* first inquire on the current scope */
    if (pthread_attr_getscope(&attr, &scope) != 0)
        fprintf(stderr, "Unable to get scheduling scope\n");
    else {
        if (scope == PTHREAD_SCOPE_PROCESS)
            printf("PTHREAD_SCOPE_PROCESS\n");
        else if (scope == PTHREAD_SCOPE_SYSTEM)
            printf("PTHREAD_SCOPE_SYSTEM\n");
        else
            fprintf(stderr, "Illegal scope value.\n");
    }

    /* set the scheduling algorithm to PCS or SCS */
    pthread_attr_setscope(&attr, PTHREAD_SCOPE_SYSTEM);

    /* create the threads */
    for (i = 0; i < NUM_THREADS; i++)
        pthread_create(&tid[i], &attr, runner, NULL);

    /* now join on each thread */
    for (i = 0; i < NUM_THREADS; i++)
        pthread_join(tid[i], NULL);

    return 0;
}

void *runner(void *param)
{
    /* do some work ... */
    pthread_exit(0);
}
```