Chapter 4: Threads & Concurrency (Operating System Concepts, 10th Edition)

- Slides: 46

Chapter 4: Threads & Concurrency. Operating System Concepts – 10th Edition, Silberschatz, Galvin and Gagne © 2018

Chapter 4: Threads

- Overview
- Multicore Programming
- Multithreading Models
- Thread Libraries
- Implicit Threading
- Threading Issues
- Operating System Examples

Objectives

- Identify the basic components of a thread, and contrast threads and processes
- Describe the benefits and challenges of designing multithreaded applications
- Illustrate different approaches to implicit threading, including thread pools, fork-join, and Grand Central Dispatch
- Describe how the Windows and Linux operating systems represent threads
- Design multithreaded applications using the Pthreads, Java, and Windows threading APIs

Motivation

- A thread is a basic unit of CPU utilization; it comprises a thread ID, a program counter (PC), a register set, and a stack.
- It shares with other threads belonging to the same process its code section, data section, and other operating-system resources, such as open files and signals.
- Traditionally, a process has a single thread of control, but most modern applications are multithreaded.
- Threads run within an application/process.
- Multiple tasks within the application can be implemented by separate threads, for example:
  - Update display
  - Fetch data
  - Spell checking
  - Answer a network request
- Process creation is heavyweight, while thread creation is lightweight.
- Threads can simplify code and increase efficiency.
- Kernels are generally multithreaded.
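A minimal Pthreads sketch of what threads share and what they keep private (compile with -pthread). The names worker, shared_value and shared_data_demo are hypothetical. The global lives in the data section and is seen by every thread; each thread's local variables live on that thread's own stack.

```c
#include <pthread.h>

/* Global variable: stored in the data section, so all threads share it. */
static int shared_value = 0;

/* Thread body: each thread runs this on its own private stack. */
static void *worker(void *arg)
{
    int id = *(int *)arg;          /* local: lives on this thread's stack only */
    int private_result = id * 10;  /* not visible to any other thread */
    if (id == 1)
        shared_value = private_result; /* visible to every thread in the process */
    return NULL;
}

/* Creates two threads in the same process and returns what the caller
 * observes in the shared global after both have finished. */
int shared_data_demo(void)
{
    pthread_t tids[2];
    int ids[2] = {1, 2};

    for (int i = 0; i < 2; i++)
        pthread_create(&tids[i], NULL, worker, &ids[i]);
    for (int i = 0; i < 2; i++)
        pthread_join(tids[i], NULL); /* join makes the read below safe */

    return shared_value; /* the value written by thread 1 */
}
```

Calling shared_data_demo() returns 10. Thread 2's private_result never leaves its own stack.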

Single and Multithreaded Processes

Multithreaded Server Architecture

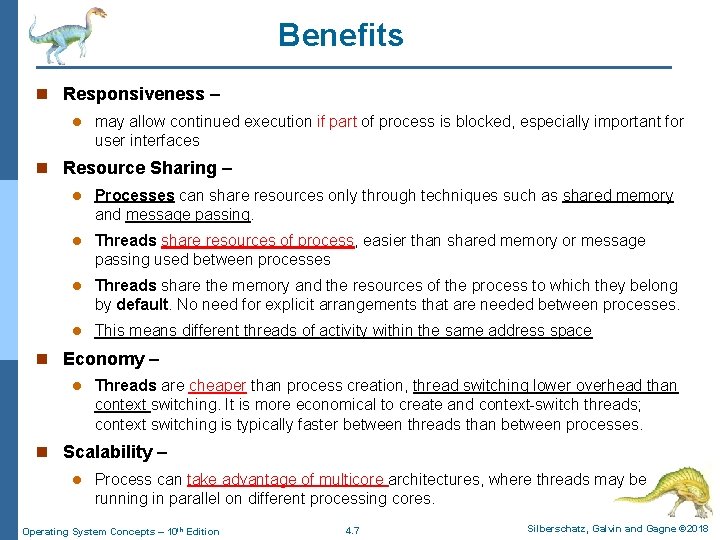

Benefits

- Responsiveness: may allow continued execution if part of a process is blocked; especially important for user interfaces.
- Resource sharing:
  - Processes can share resources only through techniques such as shared memory and message passing, which must be explicitly arranged.
  - Threads share the memory and the resources of the process to which they belong by default, so no such arrangements are needed.
  - This allows several different threads of activity within the same address space.
- Economy: creating and context-switching threads is cheaper than creating and context-switching processes; context switching is typically faster between threads than between processes.
- Scalability: a process can take advantage of multicore architectures, where its threads may run in parallel on different processing cores.

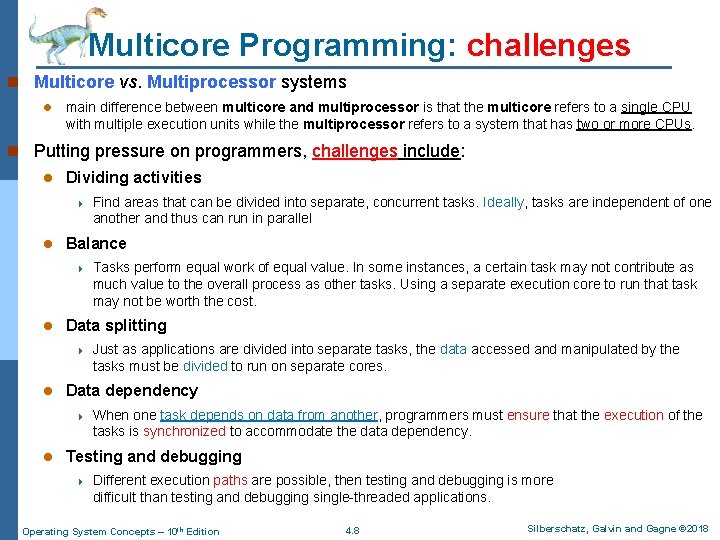

Multicore Programming: Challenges

- Multicore vs. multiprocessor systems: a multicore system places multiple processing cores (execution units) on a single CPU chip, while a multiprocessor system has two or more CPUs.
- Multicore systems put pressure on programmers. Challenges include:
  - Dividing activities: find areas that can be divided into separate, concurrent tasks. Ideally, tasks are independent of one another and thus can run in parallel.
  - Balance: ensure that tasks perform equal work of equal value. In some instances, a certain task may not contribute as much value to the overall process as other tasks, and using a separate execution core to run that task may not be worth the cost.
  - Data splitting: just as applications are divided into separate tasks, the data accessed and manipulated by the tasks must be divided to run on separate cores.
  - Data dependency: when one task depends on data from another, programmers must ensure that the execution of the tasks is synchronized to accommodate the data dependency.
  - Testing and debugging: when many different execution paths are possible, testing and debugging are more difficult than for single-threaded applications.
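The data-dependency challenge shows up as soon as two threads update one shared counter. This sketch (hypothetical names, compile with -pthread) serializes each increment with a Pthreads mutex. Without the lock, the two read-modify-write sequences can interleave and lose updates, so the final count would often fall short.

```c
#include <pthread.h>

#define ITERATIONS 100000

static long counter = 0; /* data shared by both tasks */
static pthread_mutex_t counter_lock = PTHREAD_MUTEX_INITIALIZER;

/* Each task increments the shared counter; holding the mutex makes each
 * read-modify-write atomic with respect to the other task. */
static void *increment(void *arg)
{
    (void)arg;
    for (int i = 0; i < ITERATIONS; i++) {
        pthread_mutex_lock(&counter_lock);
        counter++;
        pthread_mutex_unlock(&counter_lock);
    }
    return NULL;
}

/* Runs two incrementing tasks concurrently and returns the final count. */
long synchronized_count(void)
{
    pthread_t a, b;
    counter = 0;
    pthread_create(&a, NULL, increment, NULL);
    pthread_create(&b, NULL, increment, NULL);
    pthread_join(a, NULL);
    pthread_join(b, NULL);
    return counter;
}
```

With the lock in place, synchronized_count() always returns 2 × ITERATIONS = 200000.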

Multicore Programming

- Parallelism implies that a system can perform more than one task simultaneously (at the same time).
- Concurrency supports more than one task making progress.
  - On a single processor/core, the scheduler provides concurrency.
- Thus, it is possible to have concurrency without parallelism:
  - On single-processor systems, CPU schedulers were designed to provide the illusion of parallelism by rapidly switching between processes, thereby allowing each process to make progress.
  - These scheduled processes were running concurrently, but not in parallel.

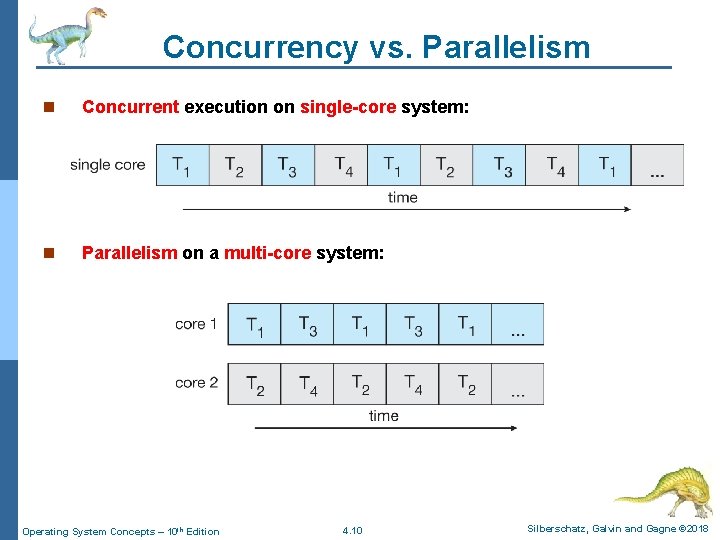

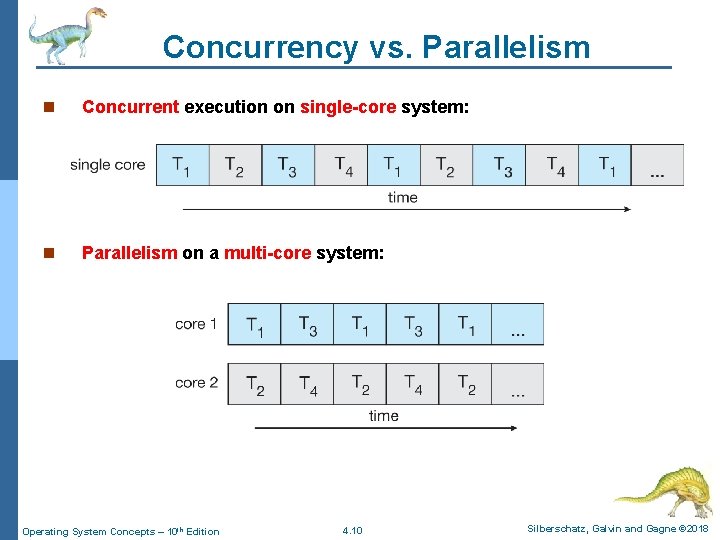

Concurrency vs. Parallelism

- Concurrent execution on a single-core system:
- Parallelism on a multicore system:

Types of Parallelism

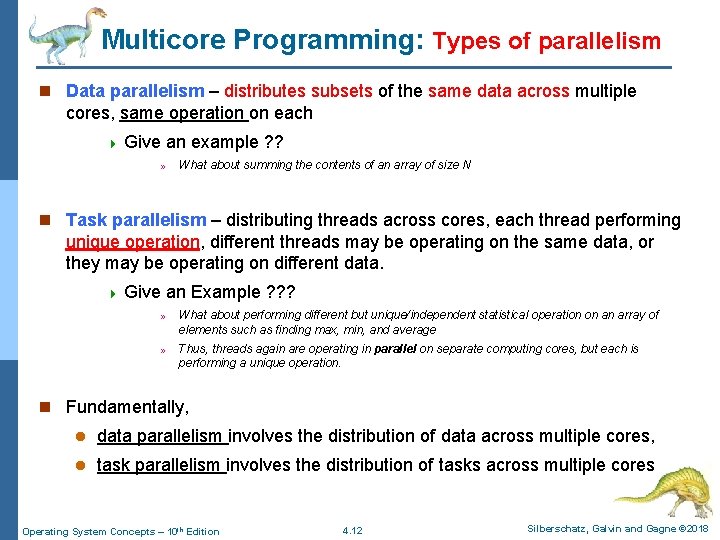

Multicore Programming: Types of Parallelism

- Data parallelism distributes subsets of the same data across multiple cores and performs the same operation on each.
  - Example: summing the contents of an array of size N, where each core sums a different portion of the array.
- Task parallelism distributes threads across cores, with each thread performing a unique operation. Different threads may be operating on the same data, or they may be operating on different data.
  - Example: performing different, independent statistical operations on the same array, such as finding the max, min, and average.
  - Again the threads operate in parallel on separate computing cores, but each performs a unique operation.
- Fundamentally:
  - data parallelism involves the distribution of data across multiple cores;
  - task parallelism involves the distribution of tasks across multiple cores.
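The array-summing example of data parallelism can be sketched with Pthreads (compile with -pthread). Each thread applies the same operation, summation, to its own slice of the array. NUM_THREADS = 4 and the names struct slice, sum_slice and parallel_sum are arbitrary choices for illustration.

```c
#include <pthread.h>

#define NUM_THREADS 4

/* The slice of the array one thread is responsible for. */
struct slice {
    const int *data;
    int start, end;   /* half-open range [start, end) */
    long partial_sum; /* result written by the owning thread */
};

/* Same operation (summation) applied to a different subset of the data. */
static void *sum_slice(void *arg)
{
    struct slice *s = arg;
    s->partial_sum = 0;
    for (int i = s->start; i < s->end; i++)
        s->partial_sum += s->data[i];
    return NULL;
}

/* Splits data[0..n) into NUM_THREADS contiguous slices, sums each slice in
 * its own thread, then combines the partial sums. */
long parallel_sum(const int *data, int n)
{
    pthread_t tids[NUM_THREADS];
    struct slice slices[NUM_THREADS];
    int chunk = n / NUM_THREADS;

    for (int t = 0; t < NUM_THREADS; t++) {
        slices[t].data = data;
        slices[t].start = t * chunk;
        /* the last thread also takes any leftover elements */
        slices[t].end = (t == NUM_THREADS - 1) ? n : (t + 1) * chunk;
        pthread_create(&tids[t], NULL, sum_slice, &slices[t]);
    }

    long total = 0;
    for (int t = 0; t < NUM_THREADS; t++) {
        pthread_join(tids[t], NULL);
        total += slices[t].partial_sum;
    }
    return total;
}
```

Each thread writes only its own struct slice, so the threads need no locking; the caller reads the partial sums only after joining.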

Data and Task Parallelism

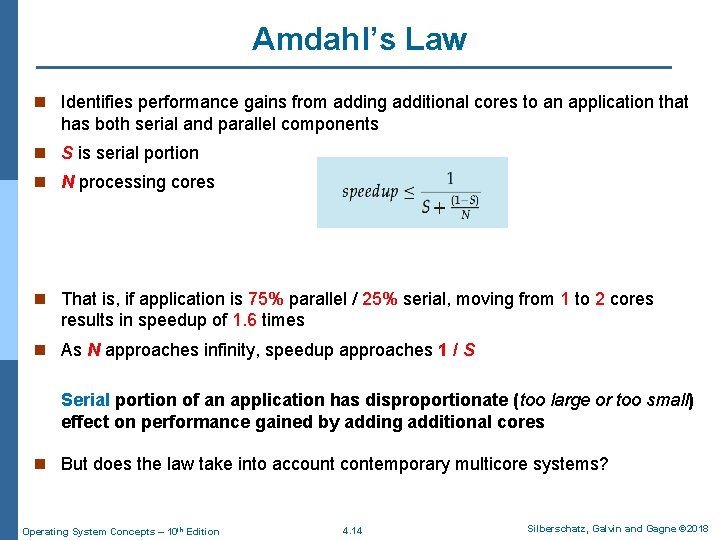

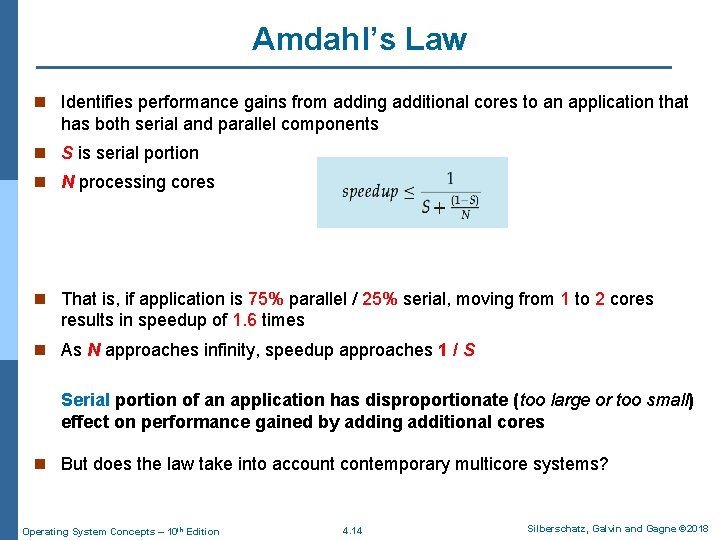

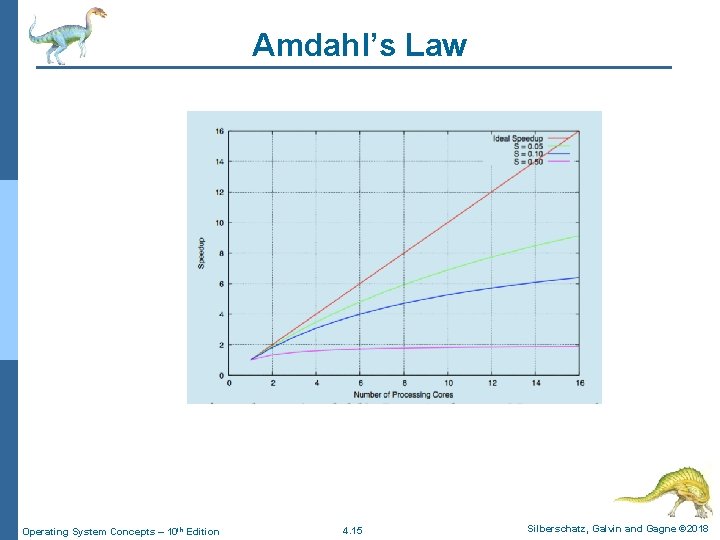
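The min/max/average example of task parallelism might look like this: three threads run three different functions over the same array. The struct and function names are hypothetical. The array is only read, and each thread writes a different result field, so no lock is needed.

```c
#include <pthread.h>

/* Shared input plus one result field per task. */
struct stats_job {
    const int *data;
    int n; /* must be > 0 */
    int min, max;
    double average;
};

/* Three different operations, each run by its own thread on the same data. */
static void *find_min(void *arg)
{
    struct stats_job *j = arg;
    j->min = j->data[0];
    for (int i = 1; i < j->n; i++)
        if (j->data[i] < j->min) j->min = j->data[i];
    return NULL;
}

static void *find_max(void *arg)
{
    struct stats_job *j = arg;
    j->max = j->data[0];
    for (int i = 1; i < j->n; i++)
        if (j->data[i] > j->max) j->max = j->data[i];
    return NULL;
}

static void *find_average(void *arg)
{
    struct stats_job *j = arg;
    long sum = 0;
    for (int i = 0; i < j->n; i++)
        sum += j->data[i];
    j->average = (double)sum / j->n;
    return NULL;
}

/* Runs the three tasks concurrently and waits for all of them. */
void compute_stats(struct stats_job *job)
{
    void *(*tasks[3])(void *) = {find_min, find_max, find_average};
    pthread_t tids[3];
    for (int t = 0; t < 3; t++)
        pthread_create(&tids[t], NULL, tasks[t], job);
    for (int t = 0; t < 3; t++)
        pthread_join(tids[t], NULL);
}
```

Compare this with the data-parallel case: there, every thread ran the same function on different data. Here, every thread runs a different function on the same data.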

Amdahl’s Law

- Identifies performance gains from adding additional cores to an application that has both serial and parallel components.
- With S the serial portion and N the number of processing cores:

$$\text{speedup} \le \frac{1}{S + \frac{1 - S}{N}}$$

- That is, if an application is 75% parallel / 25% serial, moving from 1 to 2 cores results in a speedup of 1.6 times.
- As N approaches infinity, speedup approaches 1 / S.
- The serial portion of an application has a disproportionate effect on the performance gained by adding additional cores: with S = 0.25, no number of cores can yield more than a 4 times speedup.
- But does the law take into account contemporary multicore systems?
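Amdahl's Law bounds speedup by 1 / (S + (1 - S) / N), and the bound is easy to check numerically. The one-line helper below is a sketch; its name amdahl_speedup is hypothetical. It evaluates the bound for a serial fraction s and n cores.

```c
/* Amdahl's Law: upper bound on speedup for an application whose serial
 * fraction is s (0 <= s <= 1) when run on n >= 1 processing cores. */
double amdahl_speedup(double s, int n)
{
    return 1.0 / (s + (1.0 - s) / n);
}
```

For s = 0.25, amdahl_speedup(0.25, 2) gives 1.6 and amdahl_speedup(0.25, 4) about 2.29. As n grows, the result creeps toward 1 / 0.25 = 4 but never reaches it.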

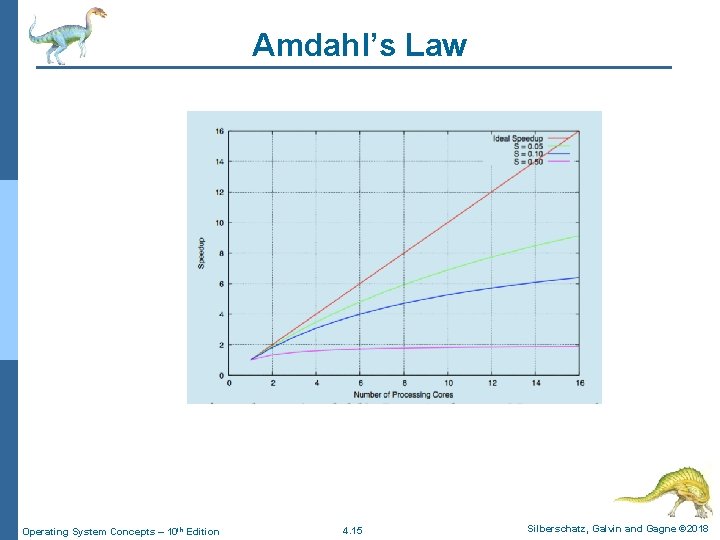

Amdahl’s Law

Multithreading Models

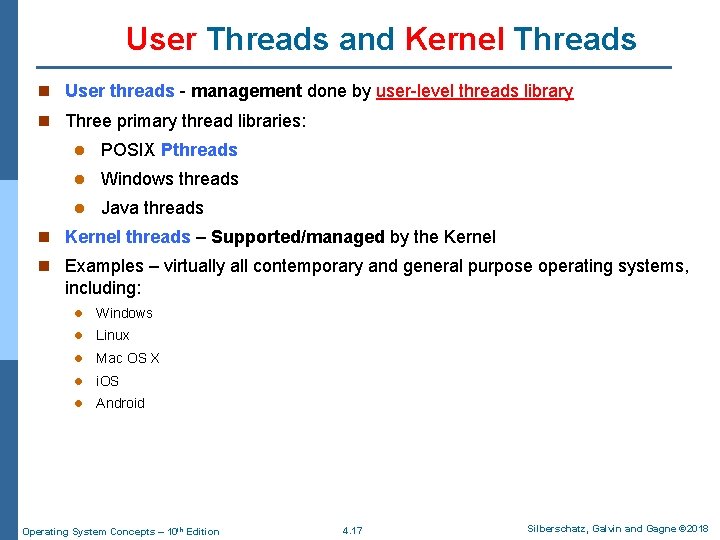

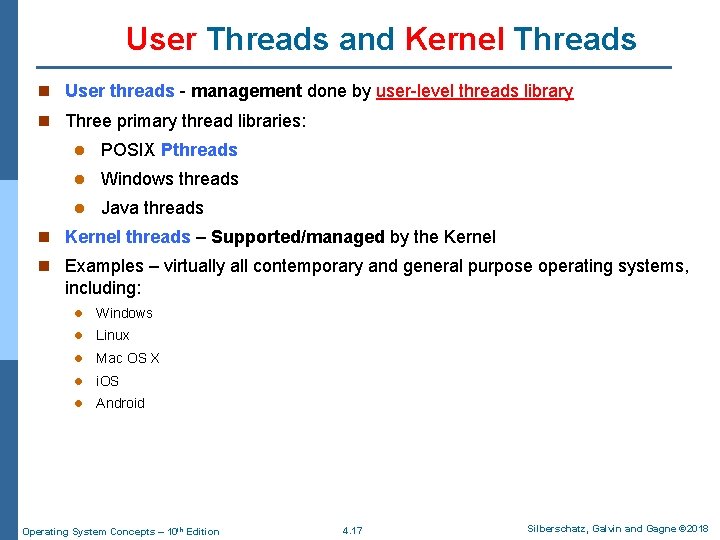

User Threads and Kernel Threads

- User threads: management done by a user-level threads library
- Three primary thread libraries:
  - POSIX Pthreads
  - Windows threads
  - Java threads
- Kernel threads: supported and managed by the kernel
- Examples: virtually all contemporary, general-purpose operating systems, including:
  - Windows
  - Linux
  - Mac OS X
  - iOS
  - Android

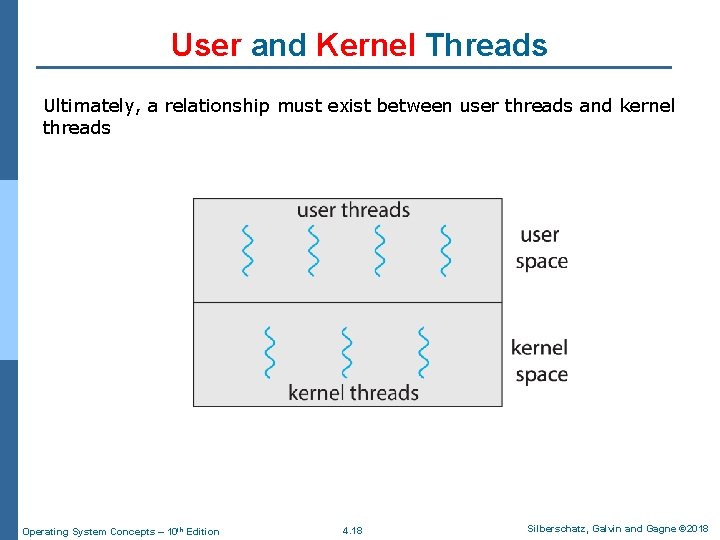

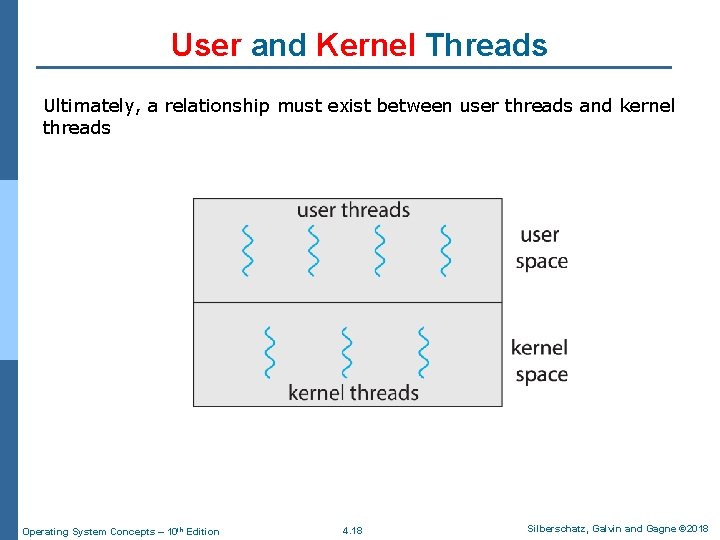

User and Kernel Threads

Ultimately, a relationship must exist between user threads and kernel threads.

Multithreading Models

- Many-to-One
  - Many user-level threads are mapped to one kernel-level thread
- One-to-One
  - Each user-level thread is mapped to one kernel-level thread
- Many-to-Many
  - Many user-level threads are mapped to many kernel-level threads

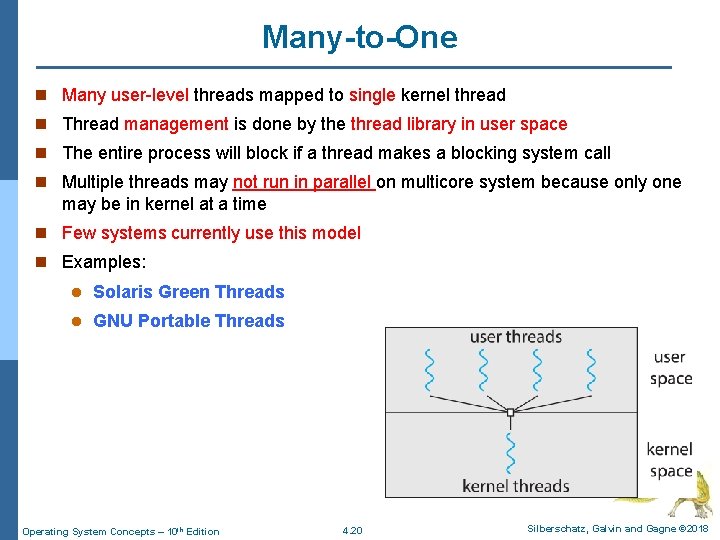

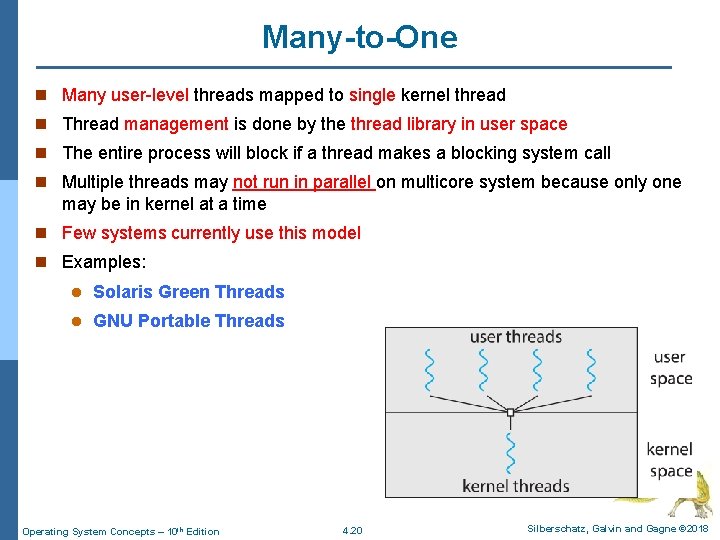

Many-to-One

- Many user-level threads mapped to a single kernel thread
- Thread management is done by the thread library in user space
- The entire process will block if one thread makes a blocking system call
- Multiple threads may not run in parallel on a multicore system, because only one may be in the kernel at a time
- Few systems currently use this model
- Examples:
  - Solaris Green Threads
  - GNU Portable Threads

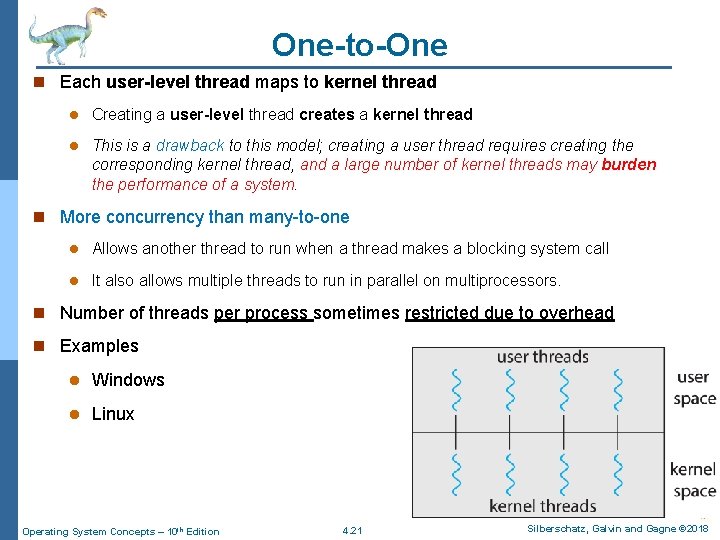

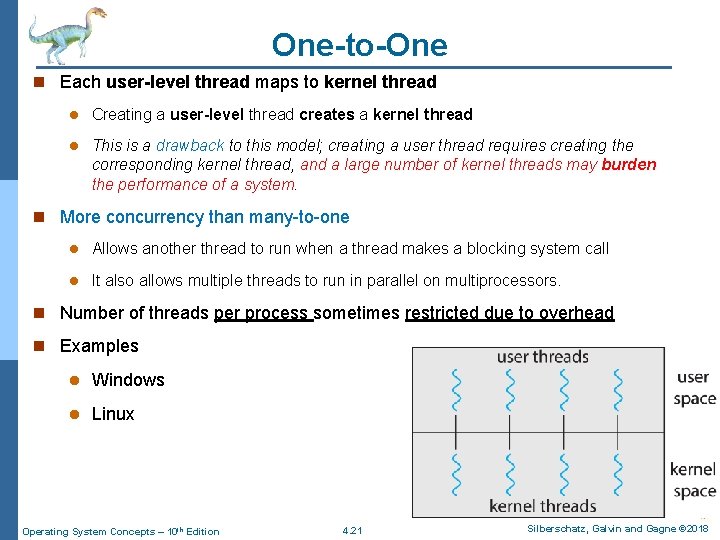

One-to-One

- Each user-level thread maps to a kernel thread
  - Creating a user-level thread creates a kernel thread
  - This is the drawback of this model: creating a user thread requires creating the corresponding kernel thread, and a large number of kernel threads may burden the performance of a system.
- More concurrency than many-to-one
  - Allows another thread to run when a thread makes a blocking system call
  - Also allows multiple threads to run in parallel on multiprocessors
- The number of threads per process is sometimes restricted due to overhead
- Examples:
  - Windows
  - Linux

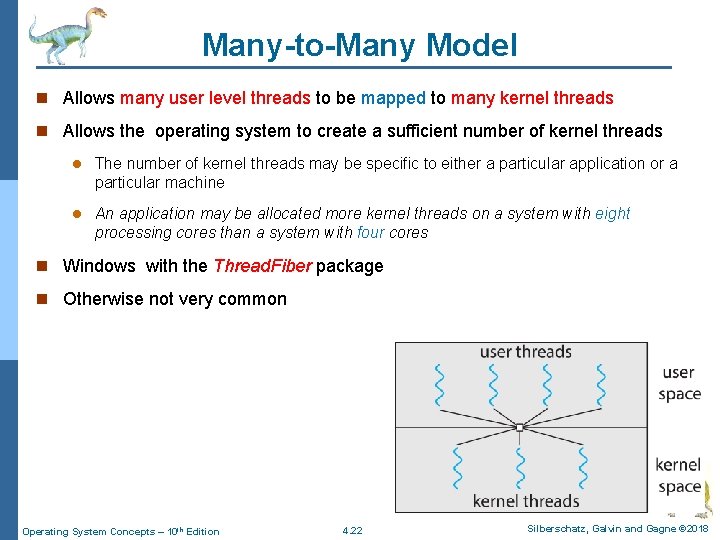

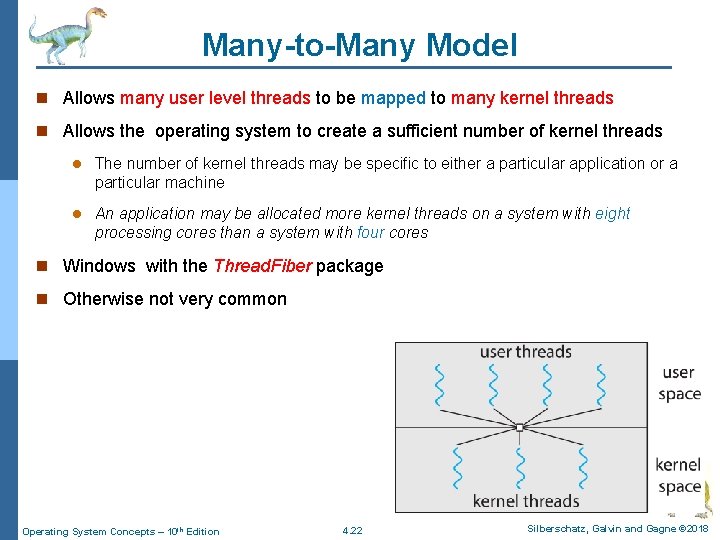

Many-to-Many Model

- Allows many user-level threads to be mapped to many kernel threads
- Allows the operating system to create a sufficient number of kernel threads
  - The number of kernel threads may be specific to either a particular application or a particular machine
  - An application may be allocated more kernel threads on a system with eight processing cores than on a system with four cores
- Example: Windows with the ThreadFiber package
- Otherwise not very common

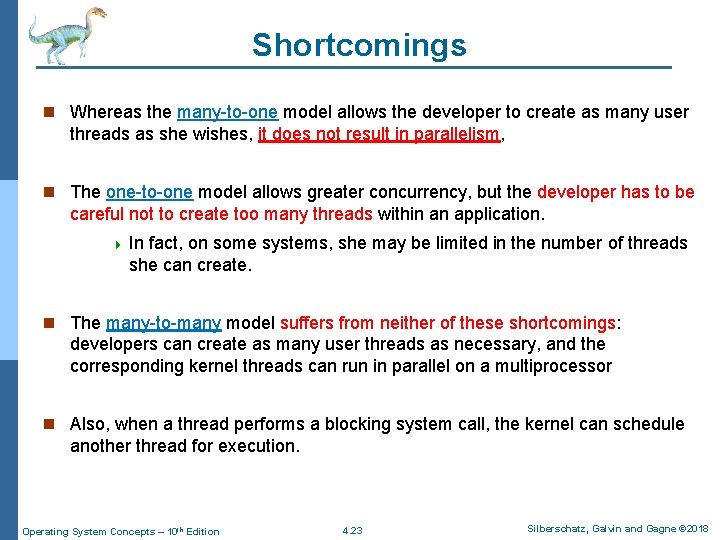

Shortcomings

- Whereas the many-to-one model allows the developer to create as many user threads as she wishes, it does not result in parallelism.
- The one-to-one model allows greater concurrency, but the developer has to be careful not to create too many threads within an application.
  - In fact, on some systems, she may be limited in the number of threads she can create.
- The many-to-many model suffers from neither of these shortcomings: developers can create as many user threads as necessary, and the corresponding kernel threads can run in parallel on a multiprocessor.
- Also, when a thread performs a blocking system call, the kernel can schedule another thread for execution.

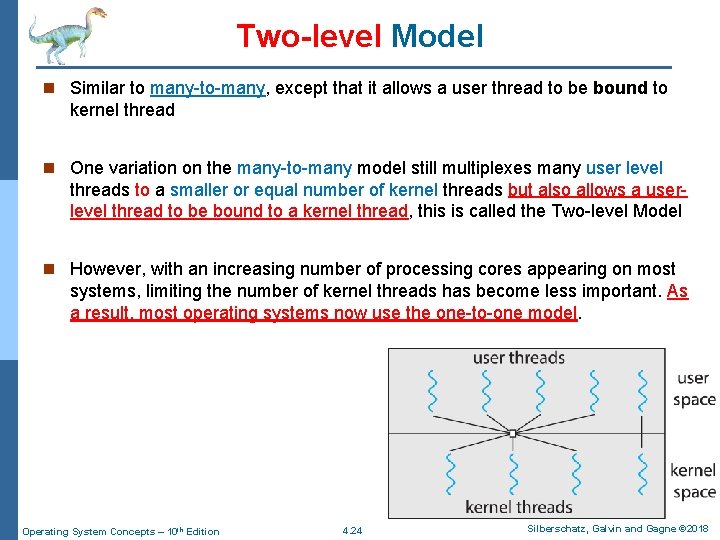

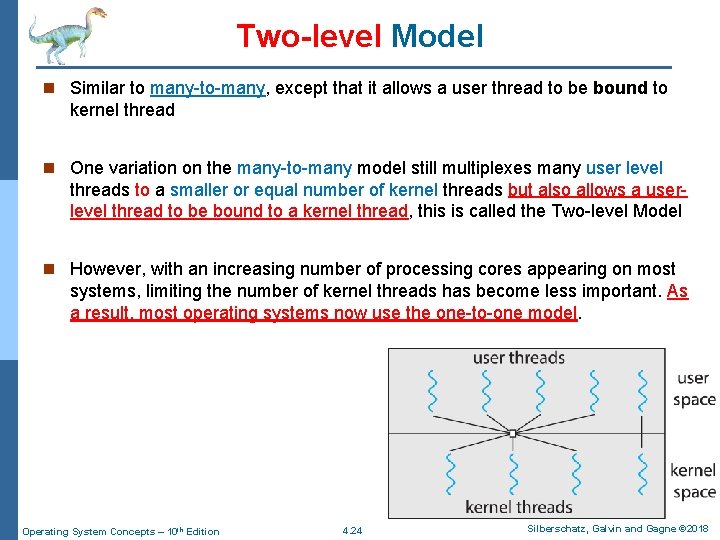

Two-level Model

- Similar to many-to-many, except that it also allows a user thread to be bound to a kernel thread
- This variation on the many-to-many model still multiplexes many user-level threads to a smaller or equal number of kernel threads, but it also allows a user-level thread to be bound to a kernel thread; it is called the two-level model
- However, with an increasing number of processing cores appearing on most systems, limiting the number of kernel threads has become less important. As a result, most operating systems now use the one-to-one model.

Thread Libraries

Thread Libraries

- A thread library provides the programmer with an API for creating and managing threads
- Two primary ways of implementing:
  - Library entirely in user space
    - All code and data structures for the library exist in user space.
    - Invoking a function in the library results in a local function call in user space, not a system call.
  - Kernel-level library supported directly by the operating system
    - Code and data structures for the library exist in kernel space.
    - Invoking a function in the API for the library typically results in a system call to the kernel.
- Examples of thread libraries:
  - POSIX Pthreads: may be provided as either a user-level or a kernel-level library
  - Windows threads: a kernel-level library available on Windows systems
  - Java threads: the JVM typically runs on top of a host OS, so the Java thread API is generally implemented using a thread library available on the host system

Examples (This part is self-reading; you need to practice with Java threads and Pthreads.) Note: you may stop by my office if you are stuck.

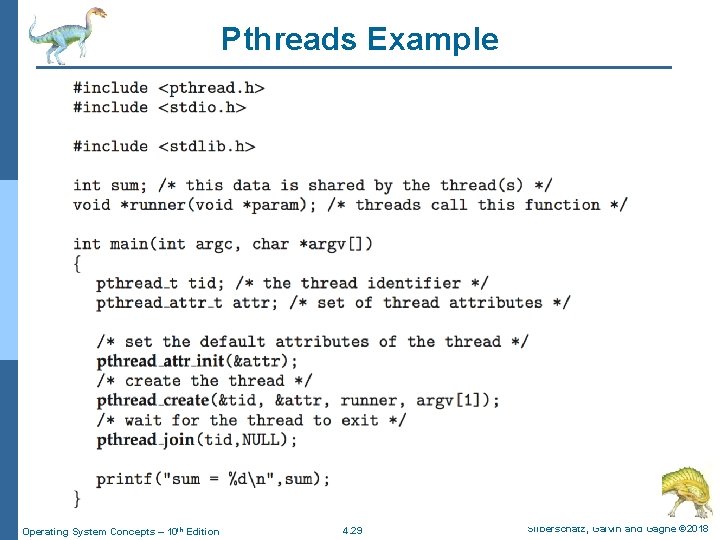

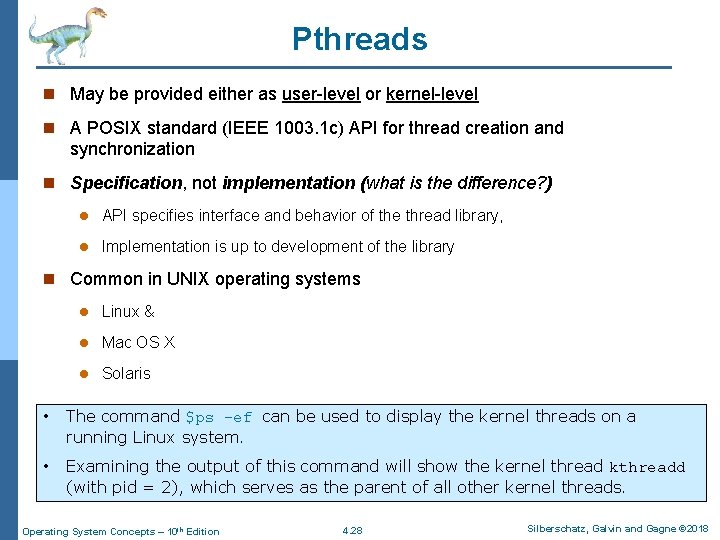

Pthreads

- May be provided either as user-level or kernel-level
- A POSIX standard (IEEE 1003.1c) API for thread creation and synchronization
- Specification, not implementation (what is the difference?)
  - The API specifies the interface and behavior of the thread library
  - The implementation is up to the developers of the library
- Common in UNIX operating systems:
  - Linux
  - Mac OS X
  - Solaris
- The command ps -ef can be used to display the kernel threads on a running Linux system.
- Examining the output of this command will show the kernel thread kthreadd (with pid = 2), which serves as the parent of all other kernel threads.

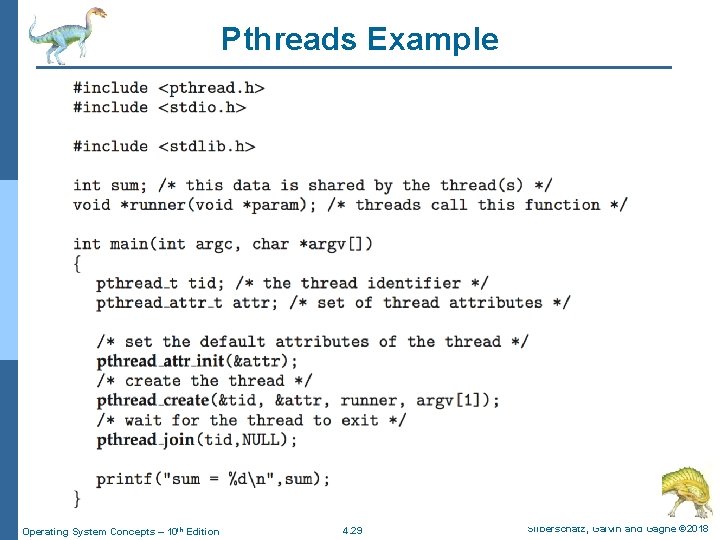

Pthreads Example

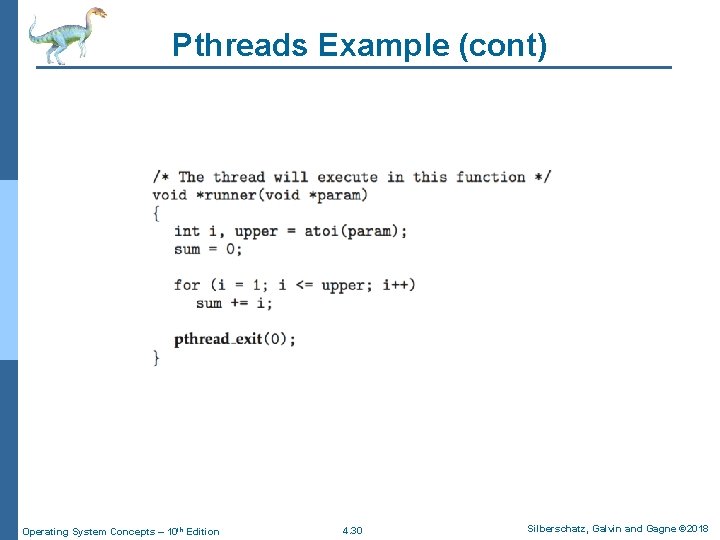

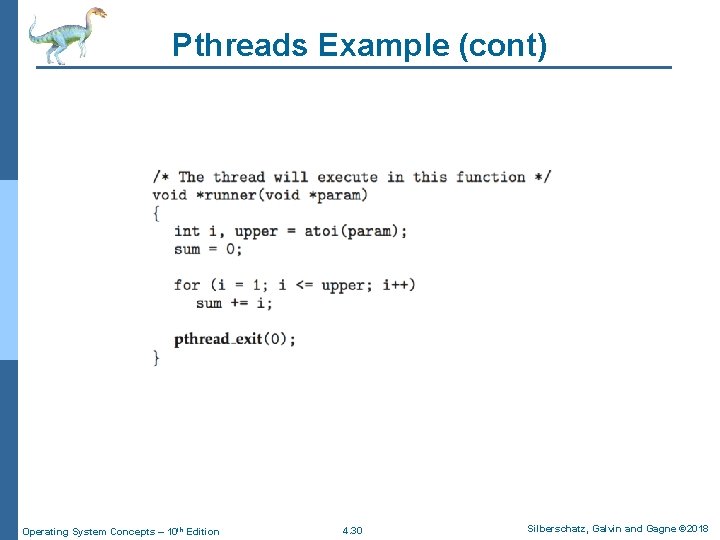

Pthreads Example (cont.)
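Here is a sketch of a typical first Pthreads program, which computes 1 + 2 + ... + N in a separate thread (compile with -pthread). It calls pthread_attr_init() for default attributes, pthread_create() to start runner, and pthread_join() to wait. Two details are assumptions made so the code can be called directly: the upper bound arrives as a string (as argv[1] would), and the program is wrapped in a hypothetical summation() function.

```c
#include <pthread.h>
#include <stdlib.h>

static long sum; /* shared by the threads; written by runner */

/* The new thread begins control in this function. */
static void *runner(void *param)
{
    int upper = atoi(param);
    sum = 0;
    for (int i = 1; i <= upper; i++)
        sum += i;
    pthread_exit(0);
}

/* Creates one thread to compute 1 + 2 + ... + upper, waits for it,
 * and returns the result. */
long summation(const char *upper)
{
    pthread_t tid;       /* the thread identifier */
    pthread_attr_t attr; /* set of thread attributes */

    pthread_attr_init(&attr); /* use the default attributes */
    pthread_create(&tid, &attr, runner, (void *)upper);
    pthread_join(tid, NULL);  /* wait for the thread to exit */
    pthread_attr_destroy(&attr);
    return sum;
}
```

In a standalone program, main would validate argv[1] and then print summation(argv[1]).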

Pthreads Code for Joining 10 Threads

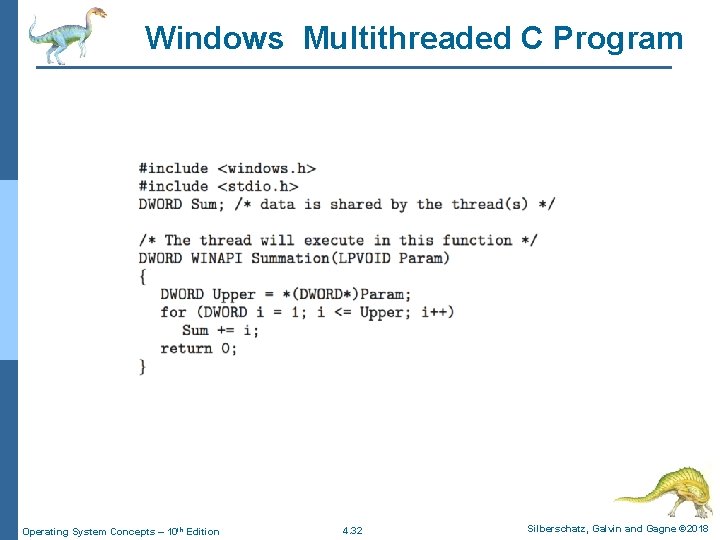

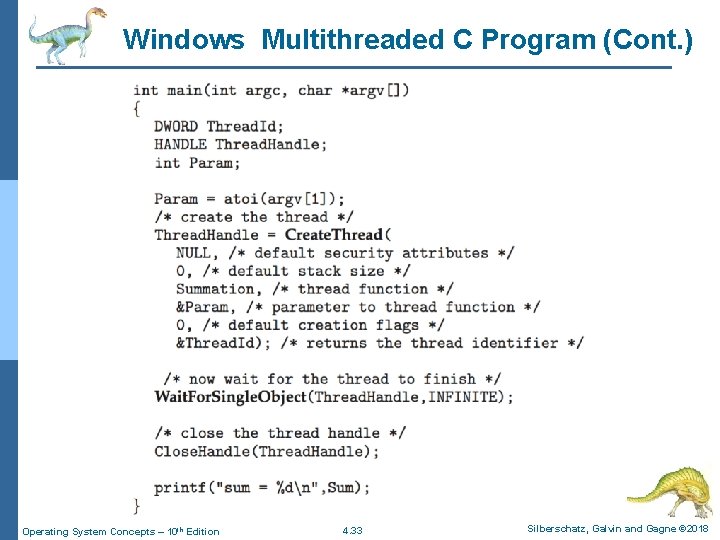

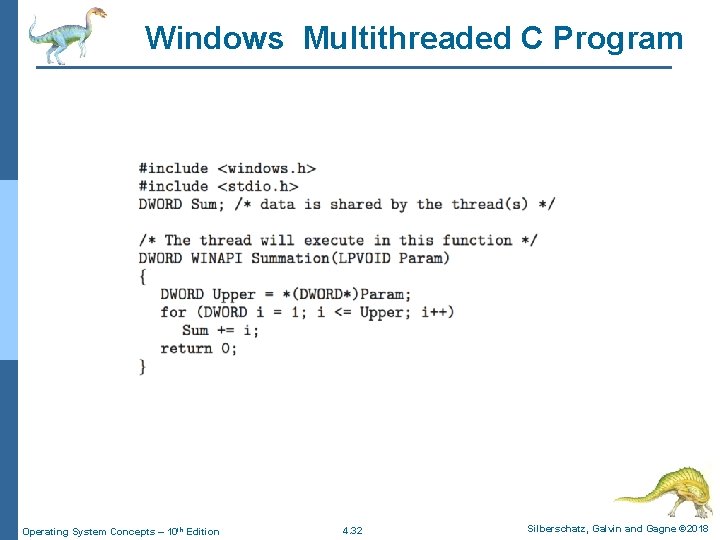
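To join several threads, store each pthread_t in an array and call pthread_join() on each in turn. In this sketch (hypothetical names, compile with -pthread), each of 10 threads sets its own flag, and the caller counts the flags only after every join has returned.

```c
#include <pthread.h>

#define NUM_THREADS 10

/* Each thread records that it ran by setting its own flag. */
static void *mark_done(void *arg)
{
    *(int *)arg = 1;
    return NULL;
}

/* Creates NUM_THREADS threads, joins each one, and returns how many ran. */
int create_and_join_all(void)
{
    pthread_t workers[NUM_THREADS];
    int done[NUM_THREADS] = {0};

    for (int i = 0; i < NUM_THREADS; i++)
        pthread_create(&workers[i], NULL, mark_done, &done[i]);

    /* pthread_join blocks until the given thread terminates */
    for (int i = 0; i < NUM_THREADS; i++)
        pthread_join(workers[i], NULL);

    int completed = 0;
    for (int i = 0; i < NUM_THREADS; i++)
        completed += done[i];
    return completed;
}
```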

Windows Multithreaded C Program

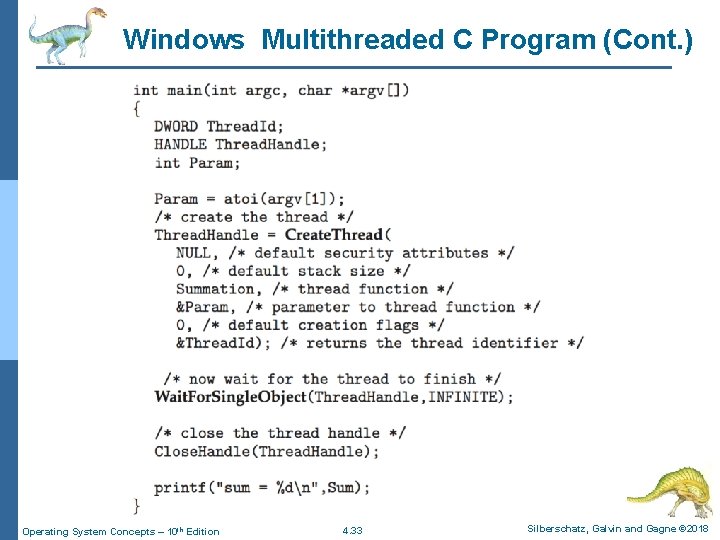

Windows Multithreaded C Program (cont.)

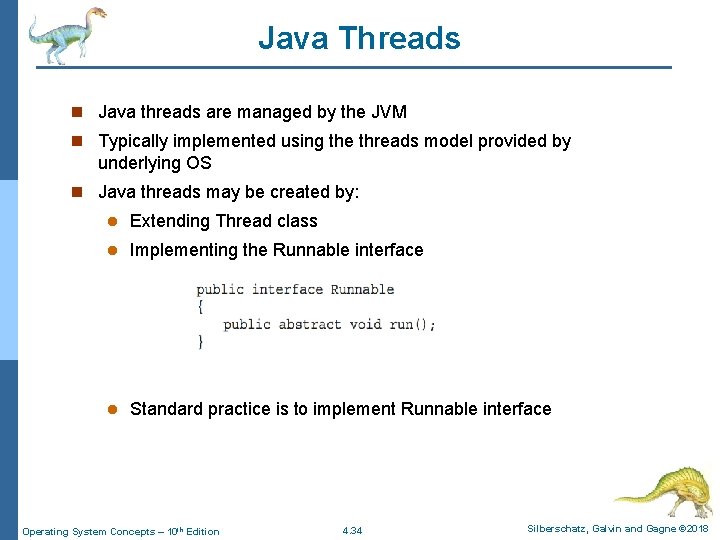

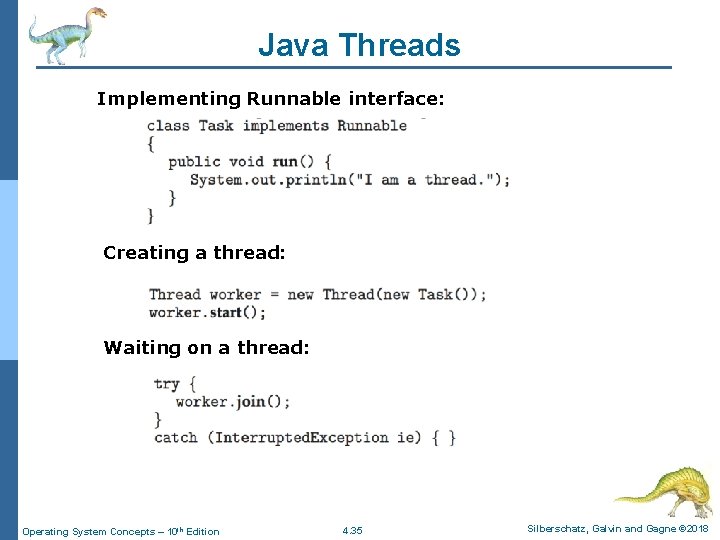

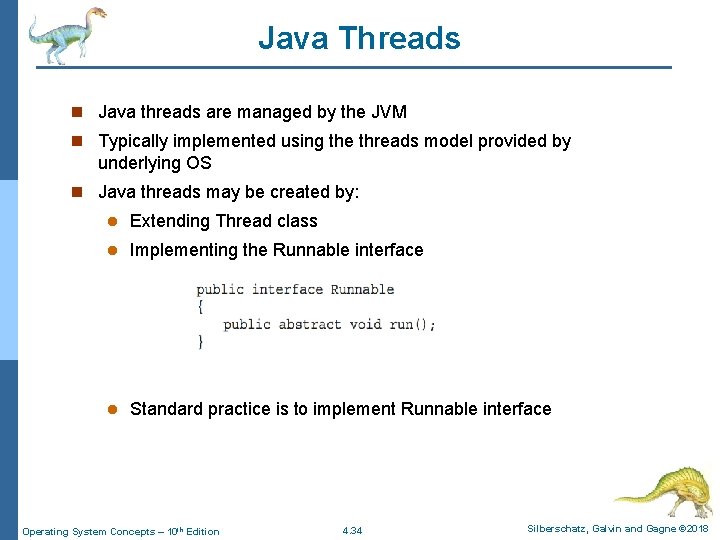

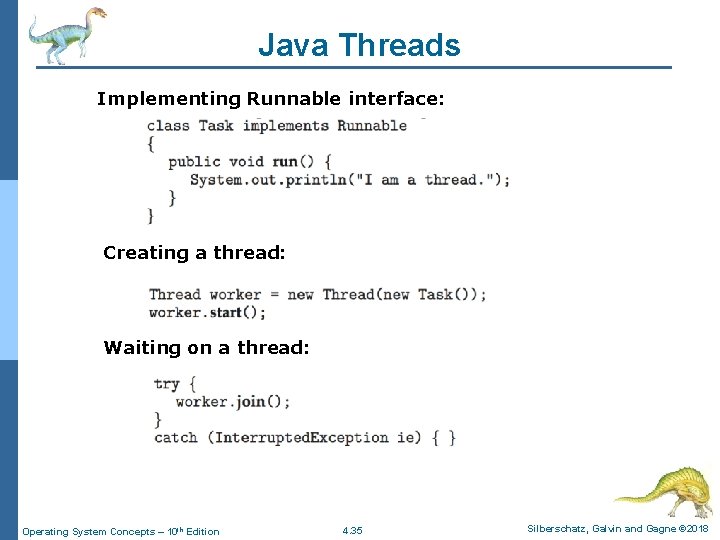

Java Threads

- Java threads are managed by the JVM
- Typically implemented using the threads model provided by the underlying OS
- Java threads may be created by:
  - Extending the Thread class
  - Implementing the Runnable interface
  - Standard practice is to implement the Runnable interface

Java Threads

- Implementing the Runnable interface:
- Creating a thread:
- Waiting on a thread:

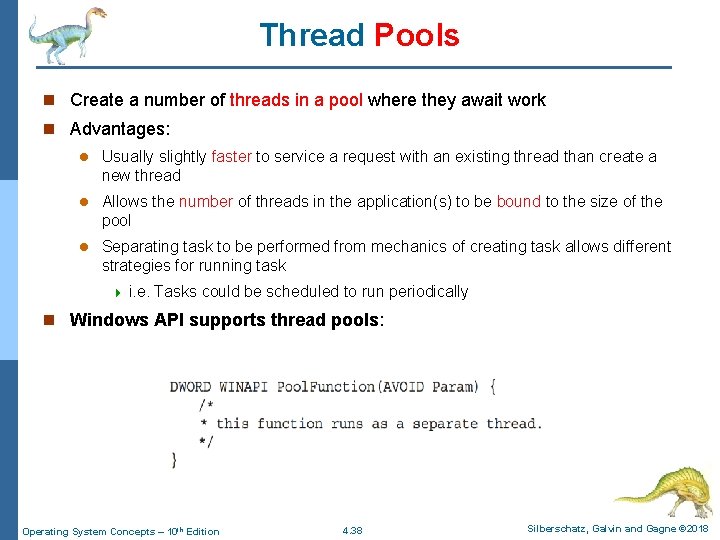

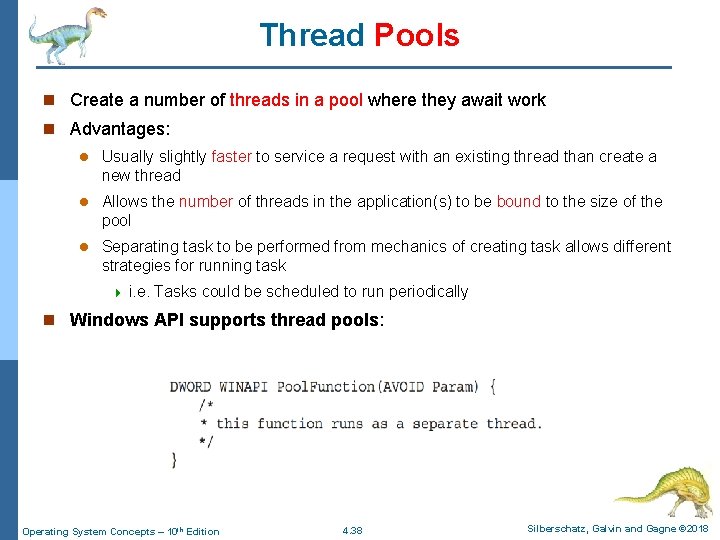

Thread Pools

- Create a number of threads in a pool where they await work
- Advantages:
  - Servicing a request with an existing thread is usually slightly faster than creating a new thread
  - Allows the number of threads in the application(s) to be bound to the size of the pool
  - Separating the task to be performed from the mechanics of creating the task allows different strategies for running the task
    - e.g., tasks could be scheduled to run periodically
- The Windows API supports thread pools:
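Here is a minimal fixed-size pool built on Pthreads (compile with -pthread). It is a sketch only. The names struct pool, pool_init, pool_submit, pool_shutdown and square_task are hypothetical, and this is not the Windows thread-pool API. Idle workers wait on a condition variable while the queue is empty, which is the "await work" state; submitting a task wakes one of them.

```c
#include <pthread.h>

#define POOL_SIZE 4  /* number of worker threads (arbitrary) */
#define QUEUE_CAP 64 /* maximum queued tasks (arbitrary) */

typedef void (*task_fn)(void *);

struct task {
    task_fn fn;
    void *arg;
};

/* Fixed set of workers that wait on a bounded circular queue. */
struct pool {
    pthread_t workers[POOL_SIZE];
    struct task queue[QUEUE_CAP];
    int head, tail, count;
    int shutting_down;
    pthread_mutex_t lock;
    pthread_cond_t not_empty;
};

/* Each worker sleeps until a task is queued, runs it, and repeats. */
static void *worker_loop(void *arg)
{
    struct pool *p = arg;
    for (;;) {
        pthread_mutex_lock(&p->lock);
        while (p->count == 0 && !p->shutting_down)
            pthread_cond_wait(&p->not_empty, &p->lock);
        if (p->count == 0) { /* shutting down and nothing left to do */
            pthread_mutex_unlock(&p->lock);
            return NULL;
        }
        struct task t = p->queue[p->head];
        p->head = (p->head + 1) % QUEUE_CAP;
        p->count--;
        pthread_mutex_unlock(&p->lock);
        t.fn(t.arg); /* run the task outside the lock */
    }
}

void pool_init(struct pool *p)
{
    p->head = p->tail = p->count = 0;
    p->shutting_down = 0;
    pthread_mutex_init(&p->lock, NULL);
    pthread_cond_init(&p->not_empty, NULL);
    for (int i = 0; i < POOL_SIZE; i++)
        pthread_create(&p->workers[i], NULL, worker_loop, p);
}

/* Queues a task; returns 0 on success, -1 if the queue is full. */
int pool_submit(struct pool *p, task_fn fn, void *arg)
{
    pthread_mutex_lock(&p->lock);
    if (p->count == QUEUE_CAP) {
        pthread_mutex_unlock(&p->lock);
        return -1;
    }
    p->queue[p->tail] = (struct task){fn, arg};
    p->tail = (p->tail + 1) % QUEUE_CAP;
    p->count++;
    pthread_cond_signal(&p->not_empty); /* wake one idle worker */
    pthread_mutex_unlock(&p->lock);
    return 0;
}

/* Lets the workers drain every queued task, then joins them. */
void pool_shutdown(struct pool *p)
{
    pthread_mutex_lock(&p->lock);
    p->shutting_down = 1;
    pthread_cond_broadcast(&p->not_empty);
    pthread_mutex_unlock(&p->lock);
    for (int i = 0; i < POOL_SIZE; i++)
        pthread_join(p->workers[i], NULL);
    pthread_mutex_destroy(&p->lock);
    pthread_cond_destroy(&p->not_empty);
}

/* Example task: squares the int it is given, in place. */
void square_task(void *arg)
{
    int *x = arg;
    *x = *x * *x;
}
```

The pool size is fixed at POOL_SIZE no matter how many tasks are submitted, which is the "bound the number of threads" advantage above. Rejecting work when the queue is full is one possible policy; blocking the submitter would be another.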

Threading Issues

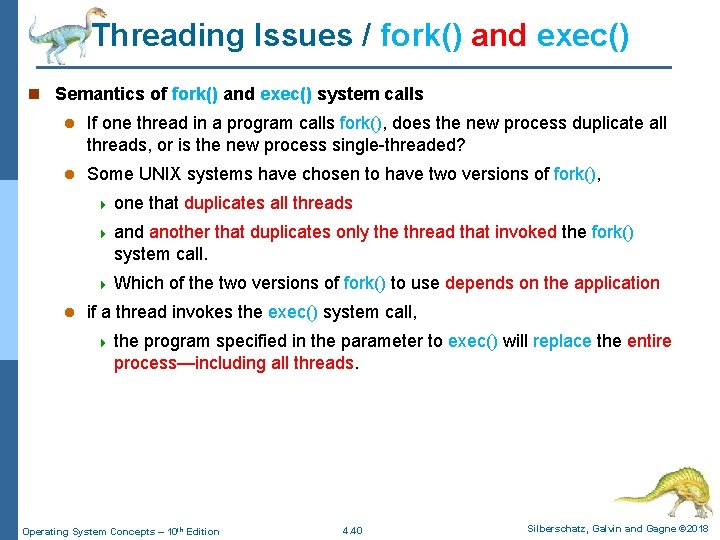

Threading Issues: fork() and exec()

- Semantics of the fork() and exec() system calls
  - If one thread in a program calls fork(), does the new process duplicate all threads, or is the new process single-threaded?
  - Some UNIX systems have chosen to have two versions of fork():
    - one that duplicates all threads, and
    - another that duplicates only the thread that invoked the fork() system call.
  - Which of the two versions of fork() to use depends on the application: if exec() is called immediately after forking, duplicating all threads is unnecessary, because the new program replaces them anyway.
  - If a thread invokes the exec() system call, the program specified in the parameter to exec() will replace the entire process, including all threads.
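On POSIX systems, including Linux, fork() called from a multithreaded process duplicates only the calling thread. In the sketch below (hypothetical names, compile with -pthread), a secondary thread calls fork(), and the child immediately calls exec(), so duplicating the other threads would have been wasted work anyway. It assumes /bin/sh exists.

```c
#define _POSIX_C_SOURCE 200809L
#include <pthread.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Runs in a secondary thread: forks, and the child immediately replaces
 * itself with a new program. Stores the child's exit status in *arg. */
static void *fork_and_exec(void *arg)
{
    int *status_out = arg;
    pid_t pid = fork();
    if (pid < 0) {         /* fork failed */
        *status_out = -1;
        return NULL;
    }
    if (pid == 0) {
        /* Child: contains a copy of only the calling thread; exec then
         * replaces the entire process image. */
        execl("/bin/sh", "sh", "-c", "exit 7", (char *)NULL);
        _exit(127);        /* reached only if exec failed */
    }
    int status = 0;
    waitpid(pid, &status, 0);
    *status_out = WIFEXITED(status) ? WEXITSTATUS(status) : -1;
    return NULL;
}

/* Starts a thread that performs fork() + exec() and returns the child's
 * exit code. */
int fork_exec_from_thread(void)
{
    pthread_t tid;
    int exit_code = -1;
    pthread_create(&tid, NULL, fork_and_exec, &exit_code);
    pthread_join(tid, NULL);
    return exit_code;
}
```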

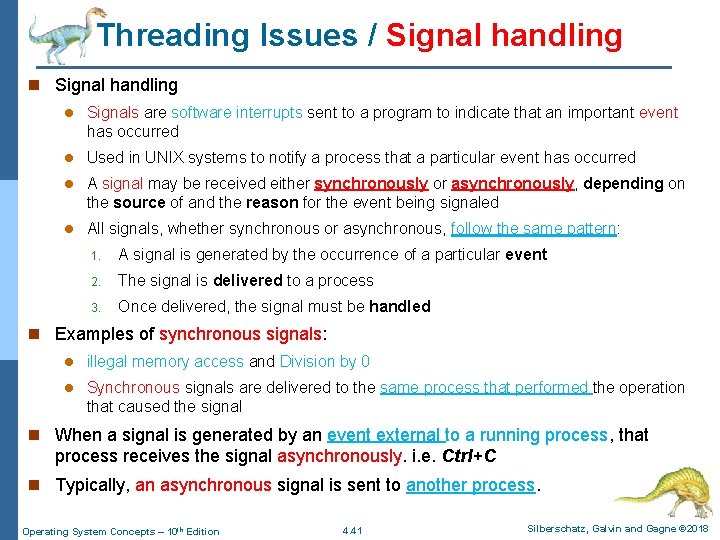

Threading Issues: Signal Handling

- Signal handling
  - Signals are software interrupts sent to a program to indicate that an important event has occurred
  - Used in UNIX systems to notify a process that a particular event has occurred
  - A signal may be received either synchronously or asynchronously, depending on the source of and the reason for the event being signaled
  - All signals, whether synchronous or asynchronous, follow the same pattern:
    1. A signal is generated by the occurrence of a particular event.
    2. The signal is delivered to a process.
    3. Once delivered, the signal must be handled.
- Examples of synchronous signals: illegal memory access and division by 0
  - Synchronous signals are delivered to the same process that performed the operation that caused the signal
- When a signal is generated by an event external to a running process, that process receives the signal asynchronously, e.g., terminating a process with Ctrl+C
- Typically, an asynchronous signal is sent to another process.

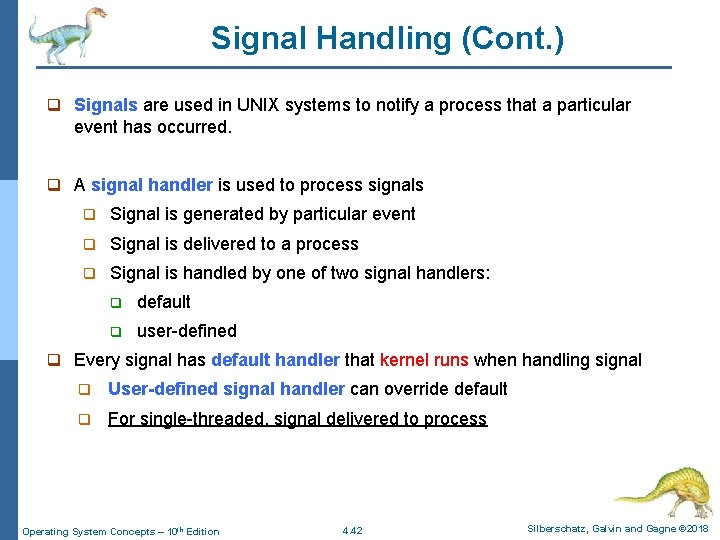

Signal Handling (cont.)

- Signals are used in UNIX systems to notify a process that a particular event has occurred.
- A signal handler is used to process signals:
  1. Signal is generated by a particular event
  2. Signal is delivered to a process
  3. Signal is handled by one of two signal handlers:
     - default
     - user-defined
- Every signal has a default handler that the kernel runs when handling the signal
- A user-defined signal handler can override the default
- For a single-threaded program, the signal is delivered to the process
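A user-defined handler can be installed with sigaction(). This sketch (hypothetical names) installs one for SIGUSR1 and then generates the signal with raise(). POSIX specifies that raise() does not return until the handler has run, so generate, deliver, and handle all happen within that one call.

```c
#define _POSIX_C_SOURCE 200809L
#include <signal.h>
#include <string.h>

/* Written by the handler; volatile sig_atomic_t is the type that can be
 * safely assigned from a signal handler. */
static volatile sig_atomic_t last_signal = 0;

/* User-defined handler: overrides the default action for SIGUSR1
 * (which would otherwise terminate the process). */
static void handler(int signo)
{
    last_signal = signo;
}

/* Installs the handler, generates SIGUSR1 for the calling thread, and
 * returns the signal number the handler recorded. */
int install_and_raise(void)
{
    struct sigaction sa;
    memset(&sa, 0, sizeof sa);
    sa.sa_handler = handler;
    sigemptyset(&sa.sa_mask);
    sigaction(SIGUSR1, &sa, NULL);

    raise(SIGUSR1); /* generated, delivered, and handled before returning */
    return last_signal;
}
```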

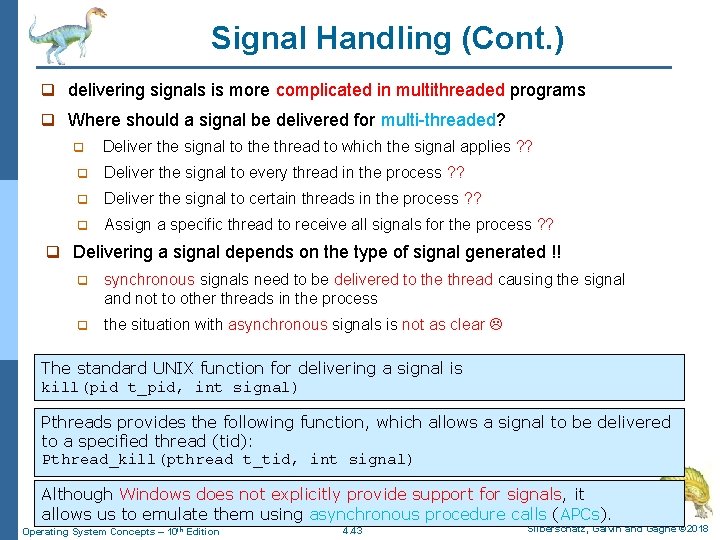

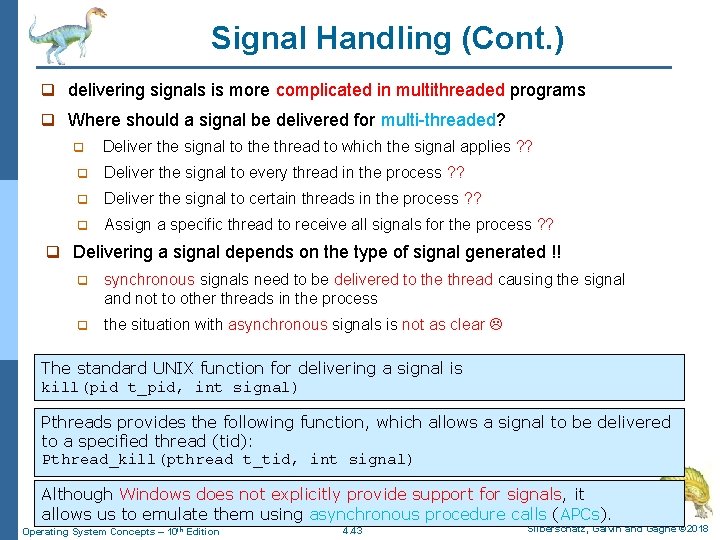

Signal Handling (cont.)

- Delivering signals is more complicated in multithreaded programs
- Where should a signal be delivered in a multithreaded program?
  - Deliver the signal to the thread to which the signal applies?
  - Deliver the signal to every thread in the process?
  - Deliver the signal to certain threads in the process?
  - Assign a specific thread to receive all signals for the process?
- Delivering a signal depends on the type of signal generated:
  - Synchronous signals need to be delivered to the thread causing the signal and not to other threads in the process
  - The situation with asynchronous signals is not as clear
- The standard UNIX function for delivering a signal is kill(pid_t pid, int signal)
- Pthreads provides the following function, which allows a signal to be delivered to a specified thread (tid): pthread_kill(pthread_t tid, int signal)
- Although Windows does not explicitly provide support for signals, it allows us to emulate them using asynchronous procedure calls (APCs).
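The last option, assigning one thread to receive all signals, can be sketched with Pthreads (compile with -pthread). Block the signal before creating threads, so that new threads inherit the mask. Then let one dedicated thread accept the signal with sigwait(). In this sketch, pthread_kill() targets that thread directly. The names are hypothetical. Because the signal is blocked, it stays pending until sigwait() accepts it, so there is no race if pthread_kill() runs first.

```c
#define _POSIX_C_SOURCE 200809L
#include <pthread.h>
#include <signal.h>
#include <stdint.h>

/* Dedicated thread: waits synchronously for a signal instead of using a
 * handler, then returns the signal number through pthread_join. */
static void *signal_thread(void *arg)
{
    const sigset_t *set = arg;
    int signo = 0;
    sigwait(set, &signo); /* blocks until a signal in set is pending */
    return (void *)(intptr_t)signo;
}

/* Blocks SIGUSR1 (left blocked in the caller afterwards), starts the
 * signal-handling thread, sends SIGUSR1 to that thread only, and returns
 * the signal number it received. */
int deliver_to_thread(void)
{
    sigset_t set;
    sigemptyset(&set);
    sigaddset(&set, SIGUSR1);
    pthread_sigmask(SIG_BLOCK, &set, NULL); /* inherited by new threads */

    pthread_t tid;
    pthread_create(&tid, NULL, signal_thread, &set);
    pthread_kill(tid, SIGUSR1); /* deliver to that specific thread */

    void *result;
    pthread_join(tid, &result);
    return (int)(intptr_t)result;
}
```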

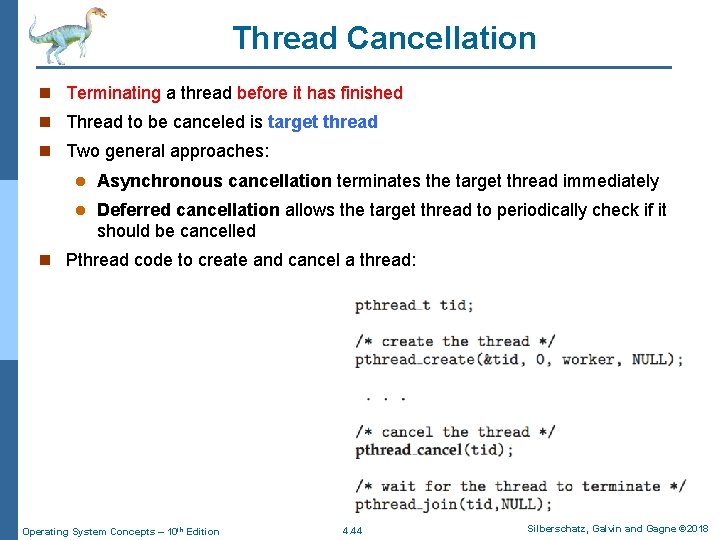

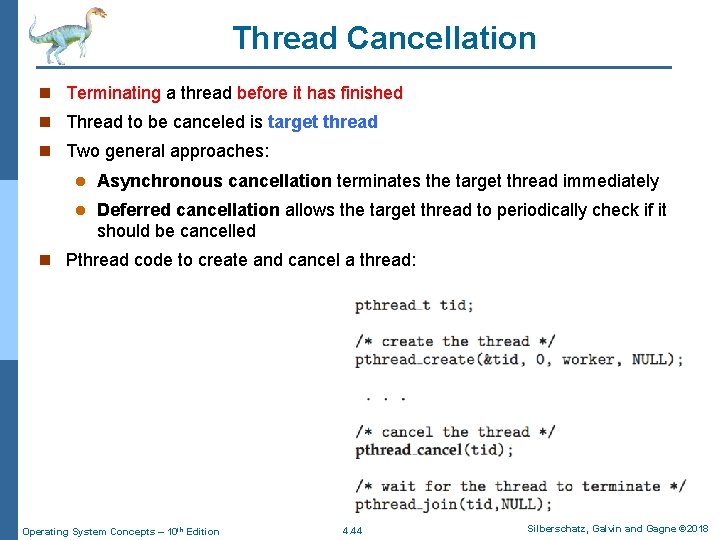

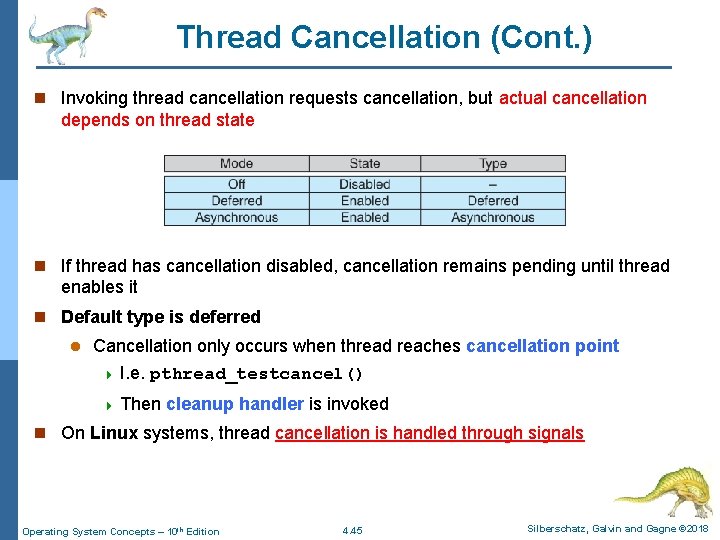

Thread Cancellation

- Terminating a thread before it has finished
- The thread to be canceled is the target thread
- Two general approaches:
  - Asynchronous cancellation terminates the target thread immediately
  - Deferred cancellation allows the target thread to periodically check if it should be cancelled
- Pthread code to create and cancel a thread:

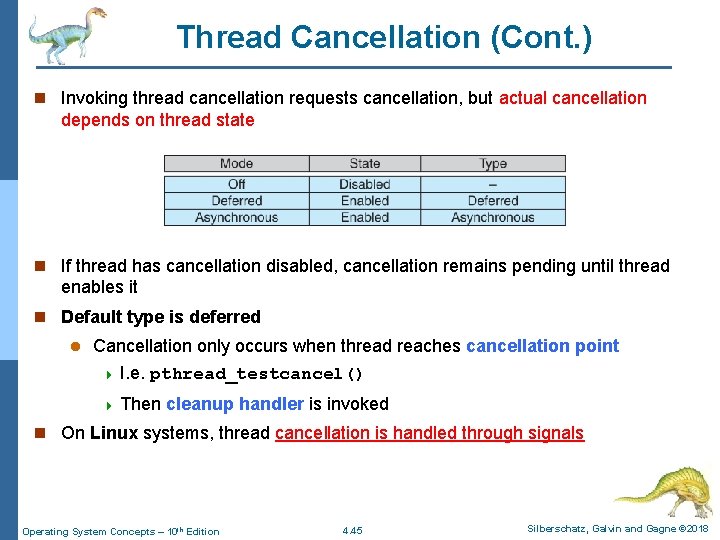

Thread Cancellation (cont.)

- Invoking thread cancellation requests cancellation, but actual cancellation depends on the thread state
- If the thread has cancellation disabled, cancellation remains pending until the thread enables it
- The default type is deferred
  - Cancellation only occurs when the thread reaches a cancellation point, e.g., pthread_testcancel()
  - Then the cleanup handler is invoked
- On Linux systems, thread cancellation is handled through signals
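Here is a sketch of deferred cancellation with a cleanup handler (hypothetical names, compile with -pthread). The target thread registers on_cancel with pthread_cleanup_push() and then loops, calling pthread_testcancel(). The call to pthread_cancel() only requests cancellation. The target acts on the request at its next cancellation point and runs the cleanup handler, and pthread_join() then reports PTHREAD_CANCELED.

```c
#include <pthread.h>

static int cleanup_ran = 0; /* read by the caller only after join */

/* Cleanup handler: runs when the thread is actually cancelled. */
static void on_cancel(void *arg)
{
    (void)arg;
    cleanup_ran = 1;
}

/* Target thread: does "work" in a loop and polls for a pending request. */
static void *target(void *arg)
{
    (void)arg;
    pthread_cleanup_push(on_cancel, NULL);
    for (;;) {
        /* ... a unit of work would go here ... */
        pthread_testcancel(); /* cancellation point for deferred cancel */
    }
    pthread_cleanup_pop(0); /* never reached; must pair with the push */
    return NULL;
}

/* Creates the target, requests cancellation, and returns 1 if the thread
 * ended as cancelled; *cleaned reports whether the cleanup handler ran. */
int cancel_demo(int *cleaned)
{
    pthread_t tid;
    void *status;

    pthread_create(&tid, NULL, target, NULL);
    pthread_cancel(tid);        /* a request; default type is deferred */
    pthread_join(tid, &status); /* wait until cancellation takes effect */

    *cleaned = cleanup_ran;
    return status == PTHREAD_CANCELED;
}
```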

Thread-Local Storage (TLS)

- Threads belonging to a process share the data of the process
- However, in some circumstances, each thread might need its own copy of certain data
- Thread-local storage (TLS) allows each thread to have its own copy of data
  - For example, in a transaction-processing system, we might service each transaction in a separate thread.
  - Furthermore, each transaction might be assigned a unique identifier. Thus, we could use thread-local storage for the identifier.
- Useful when you do not have control over the thread creation process (e.g., when using a thread pool)
- TLS is different from local variables
  - Local variables are visible only during a single function invocation
  - TLS data are visible across function invocations
- Similar to static data, except that TLS is unique to each thread
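C11's _Thread_local storage class gives each thread its own copy of a variable; GCC's older __thread and Pthreads' pthread_key_create() are alternatives. The sketch below follows the transaction-ID example (compile with -pthread). The names struct txn, handle_transaction and tls_demo are hypothetical. Note that current_transaction() reads the id from a different function than the one that set it, which a local variable could not do.

```c
#include <pthread.h>

/* Each thread gets its own instance of this variable. */
static _Thread_local int transaction_id = 0;

/* Callable from anywhere in a thread: sees that thread's copy, across
 * function invocations, without the id being passed as a parameter. */
static int current_transaction(void)
{
    return transaction_id;
}

struct txn {
    int id;   /* identifier assigned to this transaction */
    int seen; /* what the servicing thread read back */
};

static void *handle_transaction(void *arg)
{
    struct txn *t = arg;
    transaction_id = t->id;          /* writes this thread's copy only */
    t->seen = current_transaction(); /* read back in another function */
    return NULL;
}

/* Services two transactions in separate threads. Returns 1 if each thread
 * saw its own id and the caller's copy was left untouched. */
int tls_demo(void)
{
    struct txn a = {101, 0}, b = {202, 0};
    pthread_t ta, tb;

    transaction_id = 7; /* the calling thread's own copy */
    pthread_create(&ta, NULL, handle_transaction, &a);
    pthread_create(&tb, NULL, handle_transaction, &b);
    pthread_join(ta, NULL);
    pthread_join(tb, NULL);

    return a.seen == 101 && b.seen == 202 && current_transaction() == 7;
}
```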

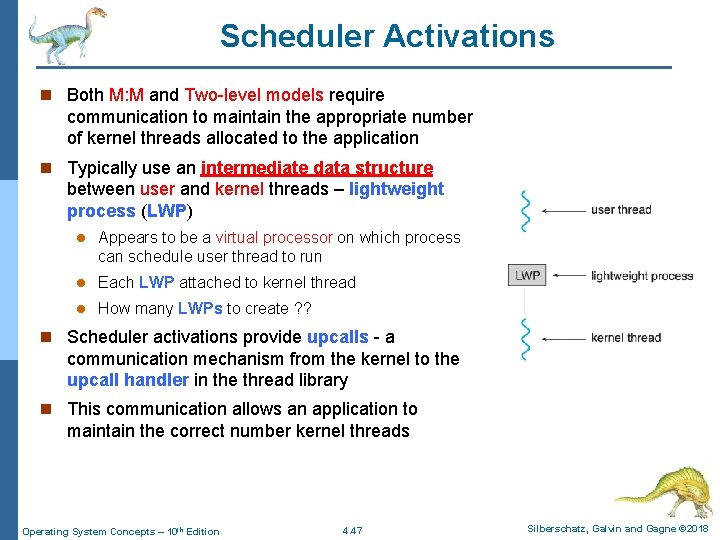

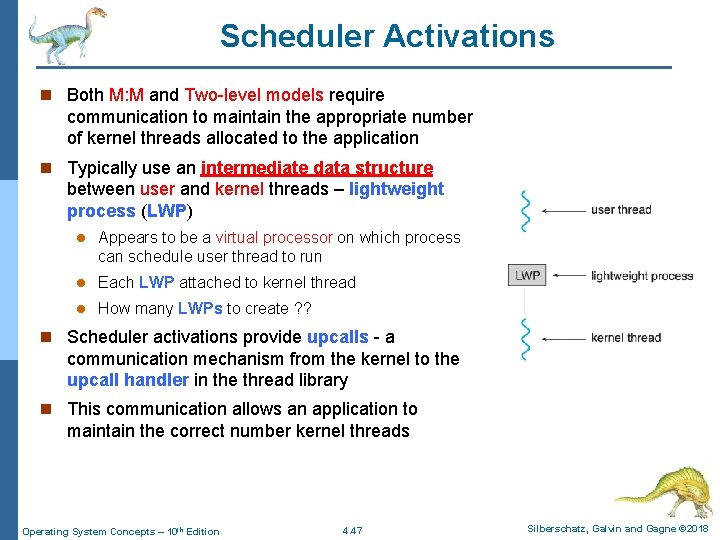

Scheduler Activations

- Both the M:M and two-level models require communication to maintain the appropriate number of kernel threads allocated to the application
- They typically use an intermediate data structure between user and kernel threads: the lightweight process (LWP)
  - Appears to be a virtual processor on which the process can schedule a user thread to run
  - Each LWP is attached to a kernel thread
  - How many LWPs to create?
- Scheduler activations provide upcalls, a communication mechanism from the kernel to the upcall handler in the thread library
- This communication allows an application to maintain the correct number of kernel threads

End of Chapter 4