
Chapter 3: Transport Layer, Part B

Course on Computer Communication and Networks, CTH/GU

The slides are an adaptation of the slides made available by the authors of the course's main textbook.

3: Transport Layer 3b-1

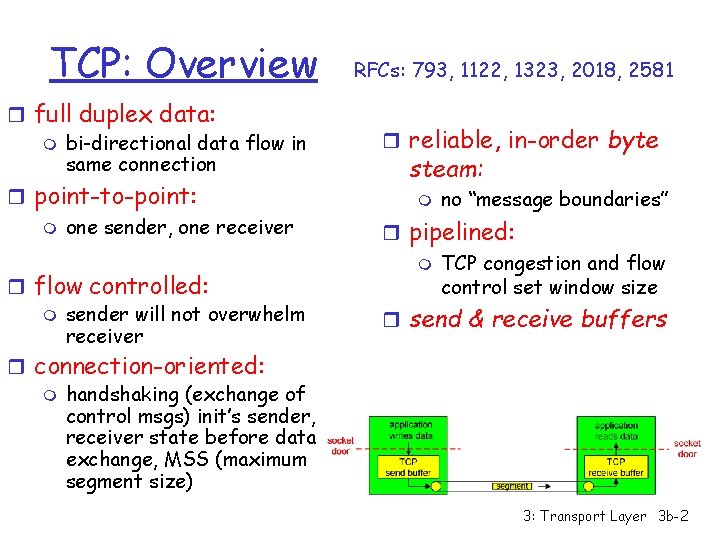

TCP: Overview (RFCs: 793, 1122, 1323, 2018, 2581)

- full-duplex data: bi-directional data flow in the same connection
- point-to-point: one sender, one receiver
- flow controlled: sender will not overwhelm receiver
- connection-oriented: handshaking (exchange of control messages) initializes sender and receiver state before data exchange
- MSS: maximum segment size
- reliable, in-order byte stream: no "message boundaries"
- pipelined: TCP congestion and flow control set the window size
- send and receive buffers

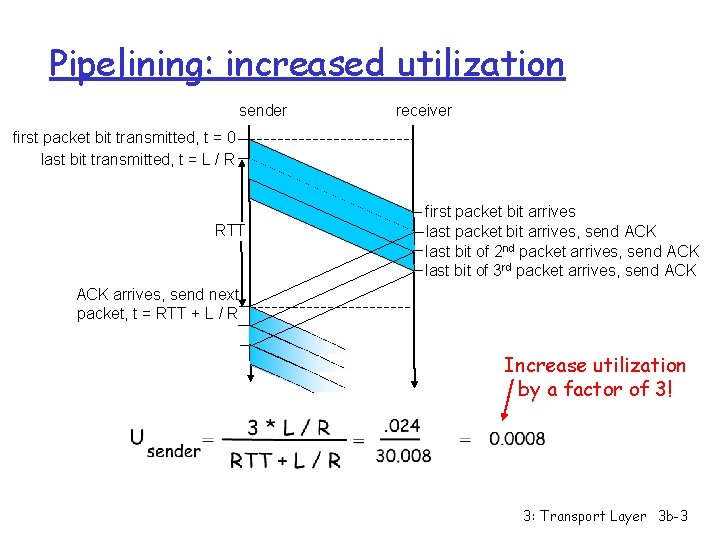

Pipelining: increased utilization

Timeline (sender to receiver, three packets in flight):

- t = 0: first bit of the first packet transmitted
- t = L/R: last bit of the first packet transmitted
- first packet bit arrives at the receiver
- last packet bit arrives; receiver sends ACK
- last bit of 2nd packet arrives; receiver sends ACK
- last bit of 3rd packet arrives; receiver sends ACK
- t = RTT + L/R: ACK arrives at the sender, which sends the next packet

Increase utilization by a factor of 3!
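The factor-of-3 claim can be checked numerically. The sketch below assumes the usual definition of sender utilization, U = N * (L/R) / (RTT + L/R), for N packets in flight; the function name and the example link parameters (1 Gbps, 8000-bit packets, 30 ms RTT) are illustrative assumptions, not values taken from the slide.

```python
def sender_utilization(n_packets: int, packet_bits: float,
                       rate_bps: float, rtt_s: float) -> float:
    """Fraction of time the sender is busy with n_packets allowed in flight."""
    transmit_time = packet_bits / rate_bps  # L / R
    # The sender cannot be busy more than 100% of the time.
    return min(1.0, n_packets * transmit_time / (rtt_s + transmit_time))


if __name__ == "__main__":
    L, R, RTT = 8000, 1e9, 0.030  # hypothetical link parameters
    stop_and_wait = sender_utilization(1, L, R, RTT)
    pipelined = sender_utilization(3, L, R, RTT)
    print(f"stop-and-wait utilization: {stop_and_wait:.6f}")
    print(f"3 packets in flight:       {pipelined:.6f}")
    print(f"improvement factor:        {pipelined / stop_and_wait:.1f}")
```

As long as the window is small compared with the bandwidth-delay product, utilization grows linearly with the number of packets in flight.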

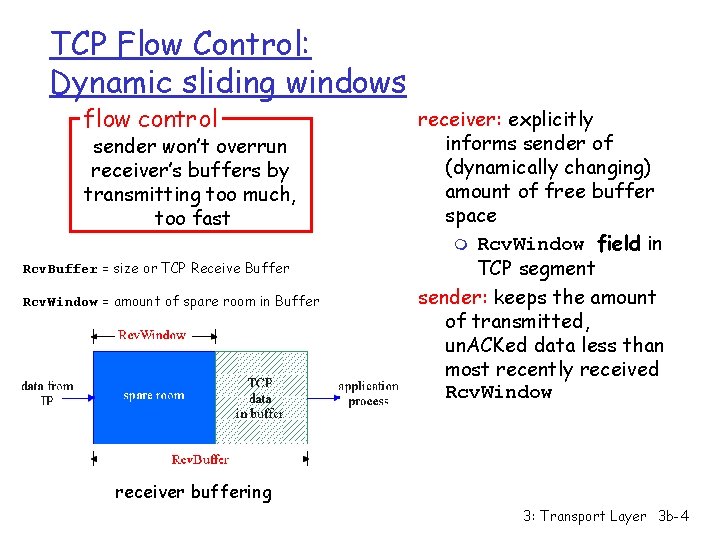

TCP Flow Control: dynamic sliding windows

Flow control: the sender won't overrun the receiver's buffers by transmitting too much, too fast.

- RcvBuffer = size of the TCP receive buffer
- RcvWindow = amount of spare room in the buffer
- receiver: explicitly informs the sender of the (dynamically changing) amount of free buffer space, using the RcvWindow field in the TCP segment
- sender: keeps the amount of transmitted, unACKed data less than the most recently received RcvWindow

Figure: receiver buffering.
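The bookkeeping behind the sliding window can be sketched as follows. This is a minimal illustration, assuming the textbook-style variable names LastByteRcvd, LastByteRead, LastByteSent and LastByteAcked (the first two do not appear on the slide) and treating "less than" as "at most"; it is not how any particular TCP stack is implemented.

```python
from dataclasses import dataclass


@dataclass
class Receiver:
    rcv_buffer: int           # RcvBuffer: total receive buffer size in bytes
    last_byte_rcvd: int = 0   # assumed name: highest byte placed in the buffer
    last_byte_read: int = 0   # assumed name: highest byte read by the application

    def rcv_window(self) -> int:
        """Spare room in the buffer, advertised to the sender as RcvWindow."""
        return self.rcv_buffer - (self.last_byte_rcvd - self.last_byte_read)


def sender_may_send(last_byte_sent: int, last_byte_acked: int,
                    rcv_window: int, nbytes: int) -> bool:
    """True if sending nbytes more keeps unACKed data within RcvWindow."""
    in_flight = last_byte_sent - last_byte_acked
    return in_flight + nbytes <= rcv_window


if __name__ == "__main__":
    rx = Receiver(rcv_buffer=4096, last_byte_rcvd=3000, last_byte_read=1000)
    print("advertised RcvWindow:", rx.rcv_window())
    print("may send 1000 bytes:", sender_may_send(3000, 2000, rx.rcv_window(), 1000))
```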

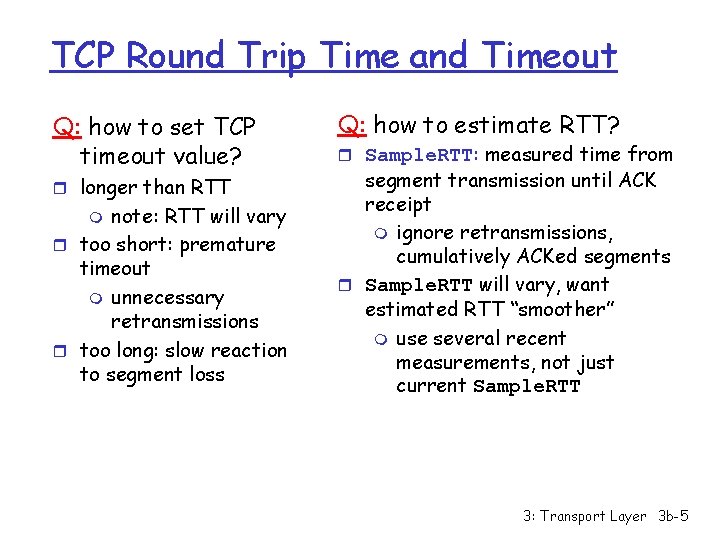

TCP Round Trip Time and Timeout

Q: how to set the TCP timeout value?

- longer than RTT (note: RTT will vary)
- too short: premature timeout
  - unnecessary retransmissions
- too long: slow reaction to segment loss

Q: how to estimate RTT?

- SampleRTT: measured time from segment transmission until ACK receipt
  - ignore retransmissions and cumulatively ACKed segments
- SampleRTT will vary; we want the estimated RTT to be "smoother"
  - use several recent measurements, not just the current SampleRTT

TCP Round Trip Time and Timeout (cont.)

EstimatedRTT = (1 - x) * EstimatedRTT + x * SampleRTT

- exponentially weighted moving average: the influence of a given sample decreases exponentially fast
- typical value of x: 0.1

Setting the timeout:

- EstimatedRTT plus a "safety margin"
- large variation in EstimatedRTT -> larger safety margin

Timeout = EstimatedRTT + 4 * Deviation

Deviation = (1 - x) * Deviation + x * |SampleRTT - EstimatedRTT|
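The two update rules and the timeout formula can be tried out with a small estimator. This is a sketch under stated assumptions: the class name is hypothetical, the estimate is seeded with the first sample, the deviation starts at zero, and the deviation is updated before EstimatedRTT (the slide does not fix the order).

```python
class RttEstimator:
    """EWMA estimator following the slide's EstimatedRTT / Deviation formulas."""

    def __init__(self, first_sample: float, x: float = 0.1) -> None:
        self.x = x
        self.estimated_rtt = first_sample  # assumption: seed with first sample
        self.deviation = 0.0               # assumption: no deviation known yet

    def update(self, sample_rtt: float) -> float:
        """Fold one SampleRTT into the estimates and return the new timeout."""
        x = self.x
        # Assumption: deviation is computed against the previous estimate.
        self.deviation = (1 - x) * self.deviation + x * abs(sample_rtt - self.estimated_rtt)
        self.estimated_rtt = (1 - x) * self.estimated_rtt + x * sample_rtt
        return self.timeout()

    def timeout(self) -> float:
        """Timeout = EstimatedRTT + 4 * Deviation."""
        return self.estimated_rtt + 4 * self.deviation


if __name__ == "__main__":
    est = RttEstimator(first_sample=100.0)     # milliseconds, made-up samples
    for sample in (120.0, 95.0, 300.0, 110.0):
        print(f"sample={sample:6.1f}  timeout={est.update(sample):7.2f}")
```

A single outlier (300 ms above) moves EstimatedRTT only slightly but widens the safety margin noticeably, which is the intended behaviour.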

Example RTT estimation:

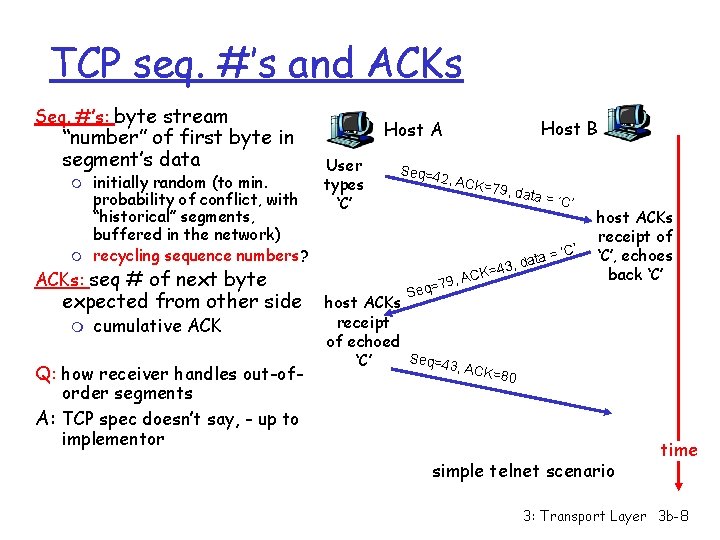

TCP seq. #'s and ACKs

Seq. #'s:

- byte-stream "number" of the first byte in the segment's data
- initially random (to minimize the probability of conflict with "historical" segments still buffered in the network)
- recycling sequence numbers?

ACKs:

- seq. # of the next byte expected from the other side
- cumulative ACK

Q: how does the receiver handle out-of-order segments?

A: the TCP spec doesn't say; it is up to the implementor.

Simple telnet scenario (Host A and Host B, time running downwards):

- user types 'C'; A sends Seq=42, ACK=79, data='C'
- B ACKs receipt of 'C' and echoes back 'C': Seq=79, ACK=43, data='C'
- A ACKs receipt of the echoed 'C': Seq=43, ACK=80

![TCP ACK generation (RFC 1122, RFC 2581)](http://slidetodoc.com/presentation_image_h2/eff6ea9641e01b9518ad4e36e30ec48f/image-9.jpg)

TCP ACK generation [RFC 1122, RFC 2581]

| Event | TCP receiver action |
| --- | --- |
| in-order segment arrival, no gaps, everything else already ACKed | delayed ACK: wait up to 500 ms for the next segment; if no next segment arrives, send ACK |
| in-order segment arrival, no gaps, one delayed ACK pending | immediately send a single cumulative ACK |
| out-of-order segment arrival with higher-than-expected seq. #; gap detected | send duplicate ACK, indicating seq. # of the next expected byte |
| arrival of a segment that partially or completely fills a gap | immediate ACK if the segment starts at the lower end of the gap |

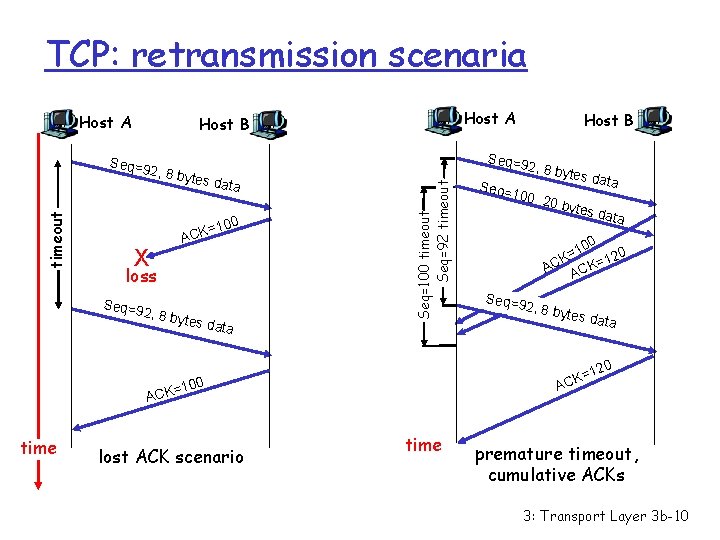

TCP: retransmission scenarios

Lost ACK scenario (Host A and Host B):

- A sends Seq=92, 8 bytes data
- B replies ACK=100, but the ACK is lost (X)
- the Seq=92 timeout expires; A resends Seq=92, 8 bytes data
- B replies ACK=100

Premature timeout, cumulative ACKs (Host A and Host B):

- A sends Seq=92, 8 bytes data, then Seq=100, 20 bytes data
- B replies ACK=100, then ACK=120
- the Seq=92 timeout expires before the ACKs arrive; A resends Seq=92, 8 bytes data
- B replies with the cumulative ACK=120

Fast Retransmit

- time-out period is often relatively long:
  - long delay before resending a lost packet
- detect lost segments via duplicate ACKs
  - sender often sends many segments back-to-back
  - if a segment is lost, there will likely be many duplicate ACKs
- if the sender receives 3 duplicate ACKs for the same data, it assumes that the segment after the ACKed data was lost:
  - fast retransmit: resend the segment before the timer expires
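The duplicate-ACK rule can be sketched as a small counter on the sender side. The class name and the choice to fire exactly once per lost segment (on the third duplicate only) are assumptions made for illustration; ACK numbers that move backwards are simply treated as new here.

```python
from typing import Optional


class FastRetransmitDetector:
    """Counts duplicate ACKs and signals when a fast retransmit is due."""

    DUP_ACK_THRESHOLD = 3

    def __init__(self) -> None:
        self.last_ack: Optional[int] = None
        self.dup_count = 0

    def on_ack(self, ack_no: int) -> bool:
        """Return True when this ACK should trigger a fast retransmit."""
        if ack_no == self.last_ack:
            self.dup_count += 1
            # Fire only on the 3rd duplicate, not on every later one.
            return self.dup_count == self.DUP_ACK_THRESHOLD
        # A different ACK number: remember it and restart the count.
        self.last_ack = ack_no
        self.dup_count = 0
        return False


if __name__ == "__main__":
    detector = FastRetransmitDetector()
    for ack in (100, 100, 100, 100, 100, 200):
        print(ack, "-> retransmit" if detector.on_ack(ack) else "-> wait")
```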

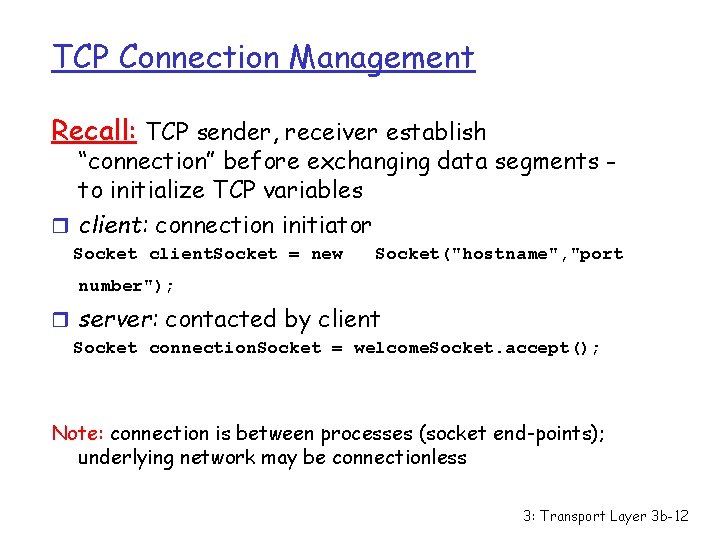

TCP Connection Management

Recall: TCP sender and receiver establish a "connection" before exchanging data segments, to initialize TCP variables.

- client: connection initiator
  `Socket clientSocket = new Socket("hostname", portNumber);`
- server: contacted by client
  `Socket connectionSocket = welcomeSocket.accept();`

Note: the connection is between processes (socket end-points); the underlying network may be connectionless.

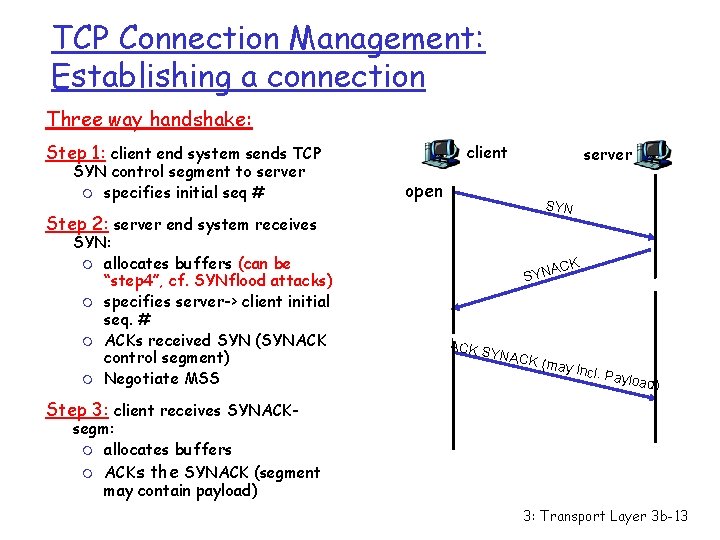

TCP Connection Management: establishing a connection

Three-way handshake:

- Step 1: client end system sends TCP SYN control segment to server
  - specifies initial seq. #
- Step 2: server end system receives SYN
  - allocates buffers (can be deferred to a "step 4", cf. SYN-flood attacks)
  - specifies server-to-client initial seq. #
  - ACKs the received SYN (SYNACK control segment)
  - negotiates MSS
- Step 3: client receives SYNACK segment
  - allocates buffers
  - ACKs the SYNACK (segment may contain payload)

Message sequence (client and server): client open, SYN; SYNACK; ACK (may include payload).

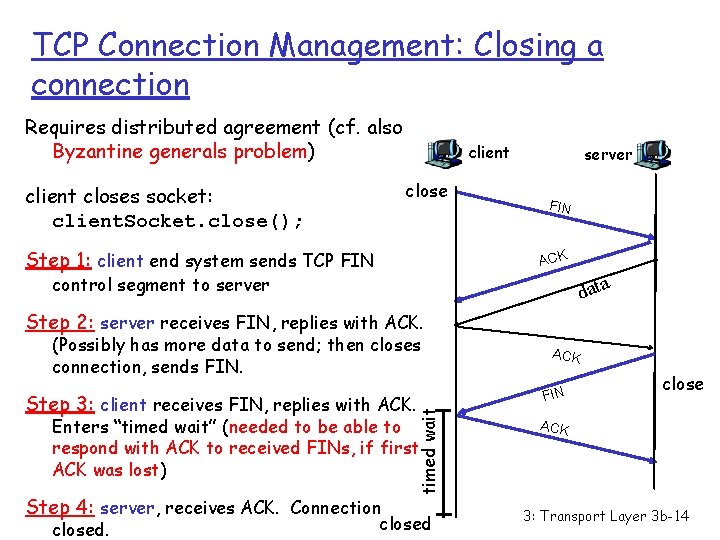

TCP Connection Management: closing a connection

Requires distributed agreement (cf. also the Byzantine generals problem).

Client closes socket: `clientSocket.close();`

- Step 1: client end system sends TCP FIN control segment to server.
- Step 2: server receives FIN, replies with ACK. (It possibly has more data to send; it then closes the connection and sends FIN.)
- Step 3: client receives FIN, replies with ACK. Enters "timed wait" (needed to be able to respond with ACK to received FINs, if the first ACK was lost).
- Step 4: server receives ACK. Connection closed.

Message sequence (client and server): FIN; ACK, possibly more data, then FIN; ACK, followed by the timed wait at the client; close.

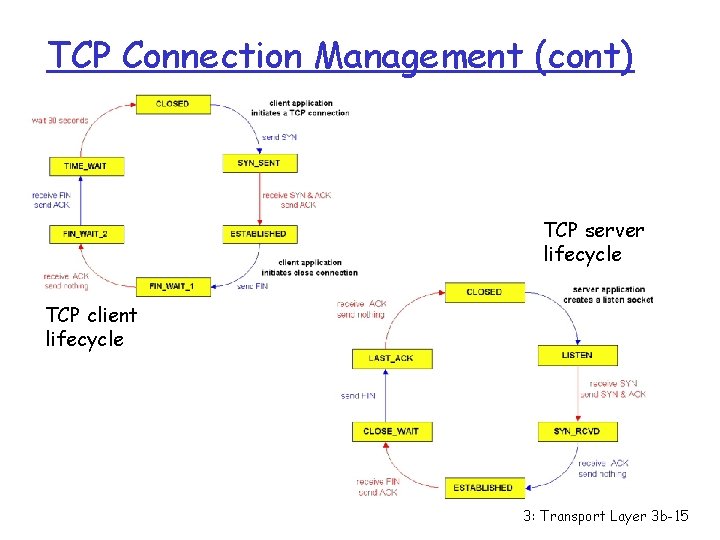

TCP Connection Management (cont.)

Figures: TCP server lifecycle; TCP client lifecycle.

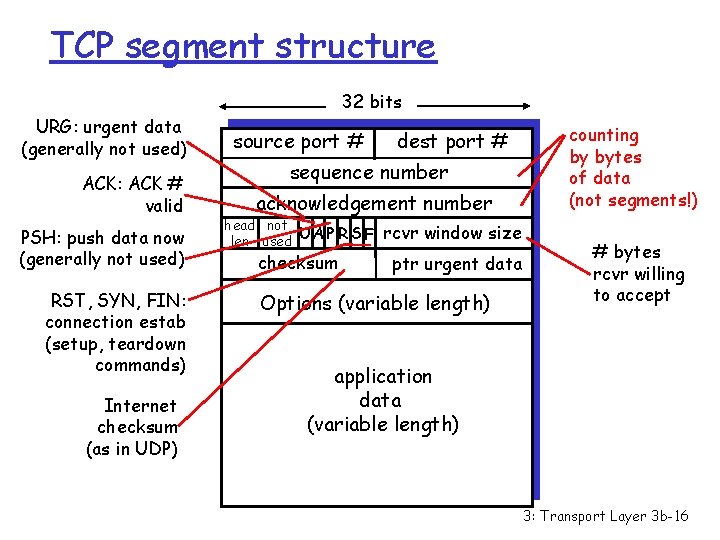

TCP segment structure

Header layout (each row is 32 bits wide):

- source port #, dest port #
- sequence number
- acknowledgement number (sequence and ACK numbers count bytes of data, not segments!)
- head len, not used, flag bits U A P R S F, rcvr window size (# bytes rcvr is willing to accept)
- checksum (Internet checksum, as in UDP), ptr to urgent data
- options (variable length)
- application data (variable length)

Flag bits:

- URG: urgent data (generally not used)
- ACK: ACK # valid
- PSH: push data now (generally not used)
- RST, SYN, FIN: connection establishment (setup, teardown commands)
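To make the layout concrete, the fixed 20-byte part of the header can be unpacked with Python's standard `struct` module. This is a sketch, not a full parser: the names `TcpHeader` and `parse_tcp_header` are hypothetical, options are ignored, and the checksum is not verified.

```python
import struct
from typing import Dict, NamedTuple


class TcpHeader(NamedTuple):
    src_port: int
    dst_port: int
    seq: int
    ack: int
    header_len: int          # in bytes (head len field counts 32-bit words)
    flags: Dict[str, bool]
    window: int
    checksum: int
    urgent_ptr: int


# Bit positions of the six flags in the low bits of the 16-bit len/flags word.
FLAG_BITS = {"URG": 0x20, "ACK": 0x10, "PSH": 0x08,
             "RST": 0x04, "SYN": 0x02, "FIN": 0x01}


def parse_tcp_header(segment: bytes) -> TcpHeader:
    """Decode the fixed 20-byte TCP header (network byte order)."""
    if len(segment) < 20:
        raise ValueError("a TCP header is at least 20 bytes long")
    (src, dst, seq, ack, len_flags,
     window, checksum, urgent) = struct.unpack("!HHIIHHHH", segment[:20])
    header_len = (len_flags >> 12) * 4  # top 4 bits: header length in words
    flags = {name: bool(len_flags & bit) for name, bit in FLAG_BITS.items()}
    return TcpHeader(src, dst, seq, ack, header_len, flags, window, checksum, urgent)


if __name__ == "__main__":
    # Hand-built SYN segment: ports 12345 -> 80, seq 42, header length 5 words.
    syn = struct.pack("!HHIIHHHH", 12345, 80, 42, 0, (5 << 12) | 0x02, 65535, 0, 0)
    print(parse_tcp_header(syn))
```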

Principles of Congestion Control

Congestion:

- informally: "too many sources sending too much data too fast for the network to handle"
- different from flow control!
- manifestations:
  - lost packets (buffer overflow at routers)
  - long delays (queueing in router buffers)
- a top-10 problem!

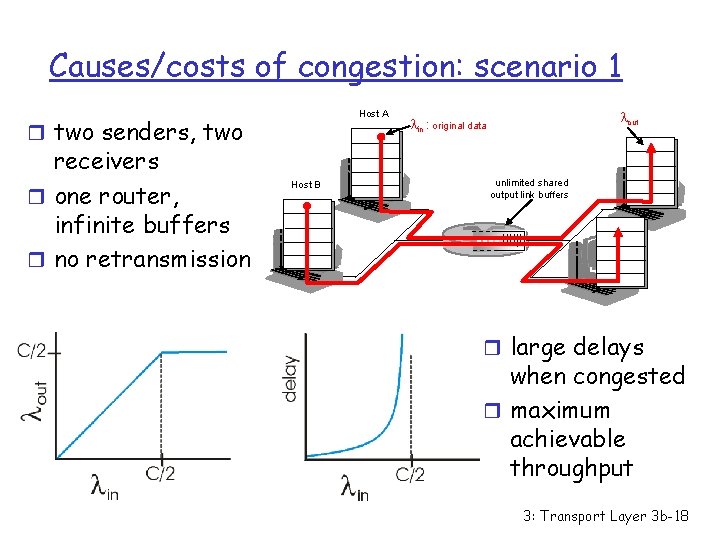

Causes/costs of congestion: scenario 1

- two senders, two receivers
- one router, infinite buffers
- no retransmission

Figure labels: Host A, Host B; λ_in: original data; λ_out; unlimited shared output link buffers.

Results:

- large delays when congested
- maximum achievable throughput

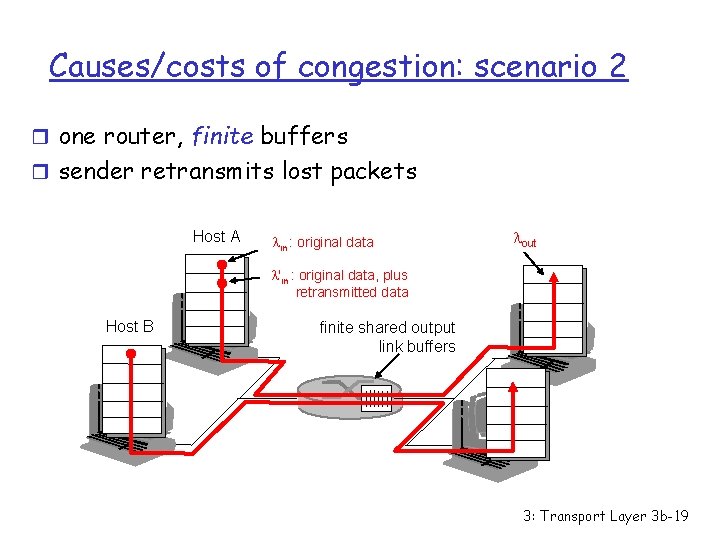

Causes/costs of congestion: scenario 2

- one router, finite buffers
- sender retransmits lost packets

Figure labels: Host A, Host B; λ_in: original data; λ'_in: original data plus retransmitted data; λ_out; finite shared output link buffers.

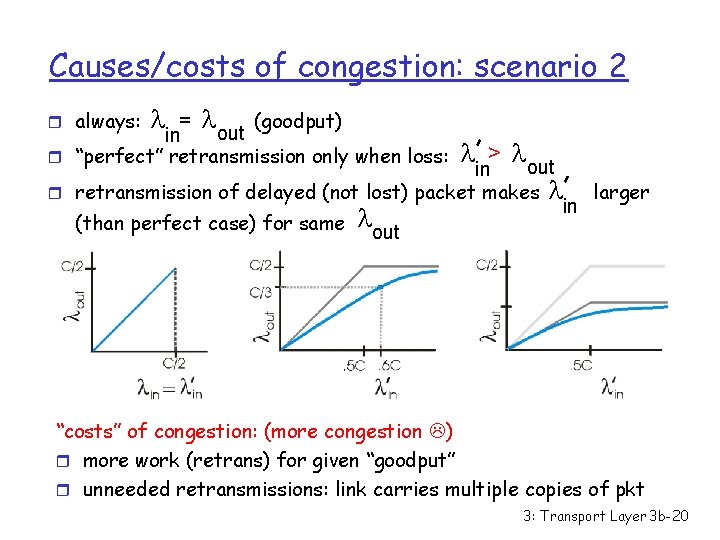

Causes/costs of congestion: scenario 2 (cont.)

- always: λ_in = λ_out (goodput)
- "perfect" retransmission, only when loss: λ'_in > λ_out
- retransmission of a delayed (not lost) packet makes λ'_in larger (than in the perfect case) for the same λ_out (more congestion)

"Costs" of congestion:

- more work (retransmissions) for a given "goodput"
- unneeded retransmissions: link carries multiple copies of a packet

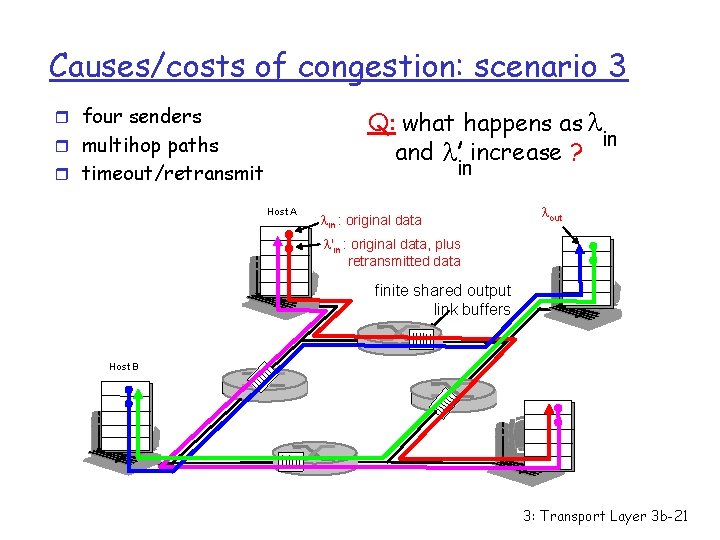

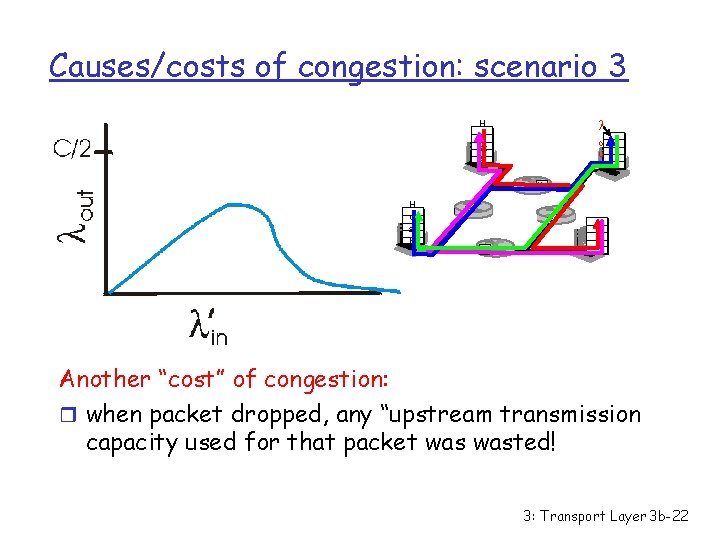

Causes/costs of congestion: scenario 3

- four senders
- multihop paths
- timeout/retransmit

Q: what happens as λ_in and λ'_in increase?

Figure labels: Host A, Host B; λ_in: original data; λ'_in: original data plus retransmitted data; λ_out; finite shared output link buffers.

Causes/costs of congestion: scenario 3 (cont.)

Another "cost" of congestion:

- when a packet is dropped, any upstream transmission capacity used for that packet was wasted!

Figure labels: Host A, Host B, λ_out.

Summary: causes of congestion

- bad network design (bottlenecks)
- bad use of the network: feeding it with more than can go through
- … congestion (bad congestion-control policies, e.g. dropping the wrong packets, etc.)

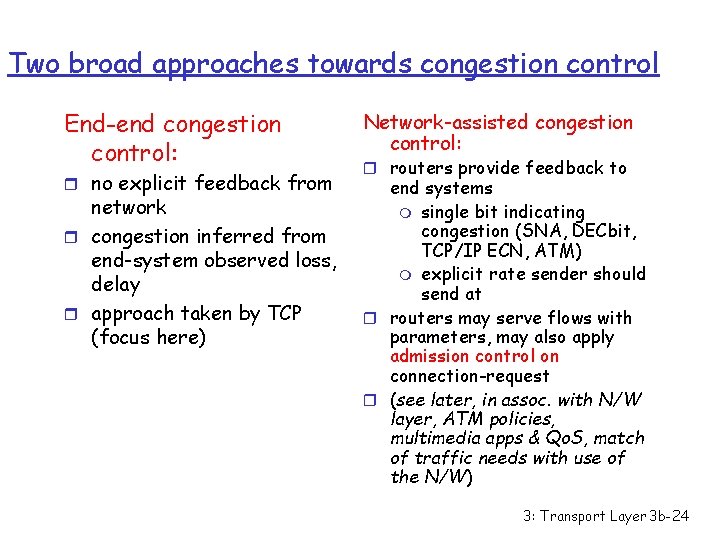

Two broad approaches towards congestion control

End-end congestion control:

- no explicit feedback from the network
- congestion inferred from end-system observed loss, delay
- approach taken by TCP (focus here)

Network-assisted congestion control:

- routers provide feedback to end systems
  - single bit indicating congestion (SNA, DECbit, TCP/IP ECN, ATM)
  - explicit rate the sender should send at
- routers may serve flows with parameters, and may also apply admission control on connection request
- (see later, in association with the network layer, ATM policies, multimedia apps and QoS, matching traffic needs with use of the network)

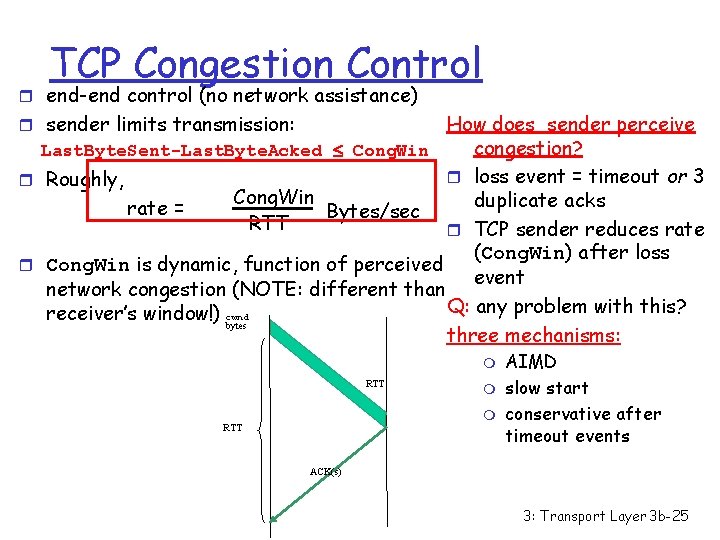

TCP Congestion Control

- end-end control (no network assistance)
- sender limits transmission: LastByteSent - LastByteAcked <= CongWin
- roughly, rate = CongWin / RTT bytes/sec
- CongWin is dynamic, a function of perceived network congestion (NOTE: different from the receiver's window!)

How does the sender perceive congestion?

- loss event = timeout or 3 duplicate ACKs
- TCP sender reduces rate (CongWin) after a loss event
- Q: any problem with this?

Three mechanisms:

- AIMD
- slow start
- conservative after timeout events

Figure: the sender transmits cwnd bytes, then waits one RTT for the ACK(s).

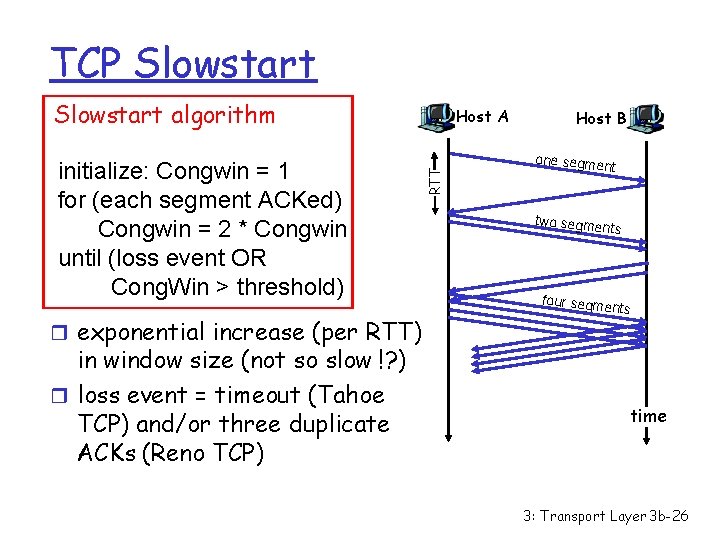

TCP Slow Start algorithm

- exponential increase (per RTT) in window size (not so slow!?)
- loss event = timeout (Tahoe TCP) and/or three duplicate ACKs (Reno TCP)

Pseudocode:

    initialize: Congwin = 1
    for (each segment ACKed)
        Congwin = Congwin + 1    /* doubles Congwin every RTT */
    until (loss event OR Congwin > threshold)

Figure (Host A and Host B, one round per RTT): one segment, then two segments, then four segments.
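The exponential growth can be seen by simulating the window RTT by RTT. This sketch assumes a loss-free path, counts the window in whole segments, and grows it by one segment per ACK, as in the sender table later in this deck (which is what yields a doubling per RTT); the function name is hypothetical.

```python
from typing import List


def slow_start_windows(threshold: int, max_rtts: int = 32) -> List[int]:
    """Congwin (in segments) at the start of each RTT, assuming no loss."""
    congwin = 1
    history = [congwin]
    while congwin <= threshold and len(history) < max_rtts:
        # One RTT: every segment of the current window is ACKed once.
        for _ in range(congwin):
            congwin += 1                 # +1 segment per ACK
            if congwin > threshold:      # slow start ends mid-window
                break
        history.append(congwin)
    return history


if __name__ == "__main__":
    print(slow_start_windows(threshold=8))
```

With a threshold of 8 segments the window doubles up to 8 and stops growing exponentially as soon as it exceeds the threshold.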

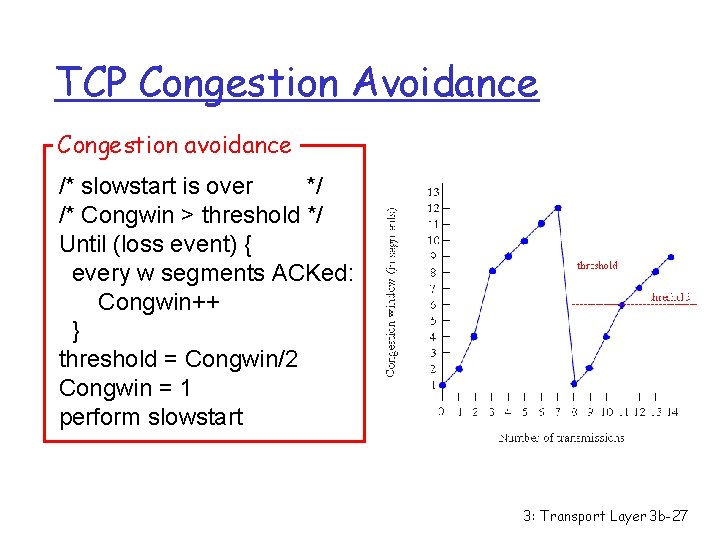

TCP Congestion Avoidance

Pseudocode:

    /* slowstart is over */
    /* Congwin > threshold */
    until (loss event) {
        every w segments ACKed:
            Congwin++
    }
    threshold = Congwin/2
    Congwin = 1
    perform slowstart
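Combining slow start with the congestion-avoidance loop gives the familiar sawtooth. The sketch below works at per-RTT granularity and assumes, purely for illustration, that a loss happens whenever the window reaches a fixed size `loss_at_window`; real loss is of course not that predictable. The function name and parameters are hypothetical.

```python
from typing import List


def tahoe_trace(rtts: int, initial_threshold: int, loss_at_window: int) -> List[int]:
    """Per-RTT Congwin (in segments) for the slow start + avoidance loop."""
    congwin, threshold = 1, initial_threshold
    trace = []
    for _ in range(rtts):
        trace.append(congwin)
        if congwin >= loss_at_window:
            # Loss event: halve the threshold and restart slow start.
            threshold = max(congwin // 2, 1)
            congwin = 1
        elif congwin <= threshold:
            # Slow start: double, but stop just past the threshold.
            congwin = min(2 * congwin, threshold + 1)
        else:
            # Congestion avoidance: one extra segment per RTT.
            congwin += 1
    return trace


if __name__ == "__main__":
    print(tahoe_trace(rtts=12, initial_threshold=8, loss_at_window=12))
```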

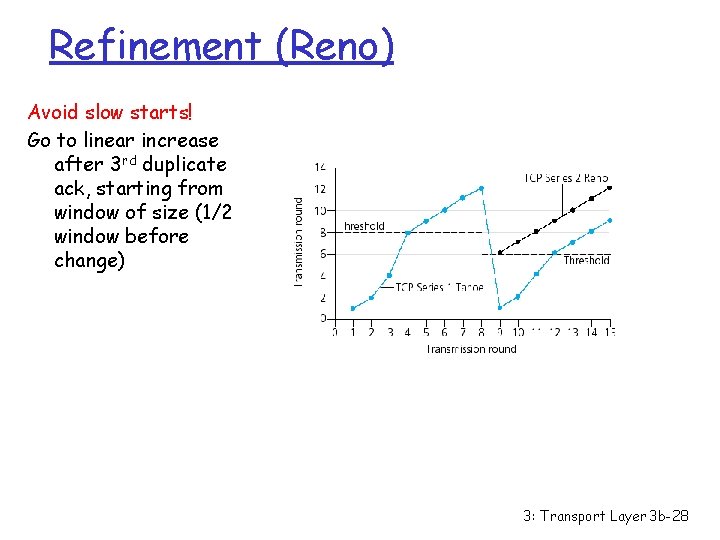

Refinement (Reno)

Avoid slow starts! After the 3rd duplicate ACK, go straight to linear increase, starting from a window of half the size it had before the loss.

TCP AIMD

- multiplicative decrease: cut CongWin in half after a loss event
- additive increase: increase CongWin by 1 MSS every RTT in the absence of loss events (probing)

Figure: congestion window of a long-lived TCP connection.

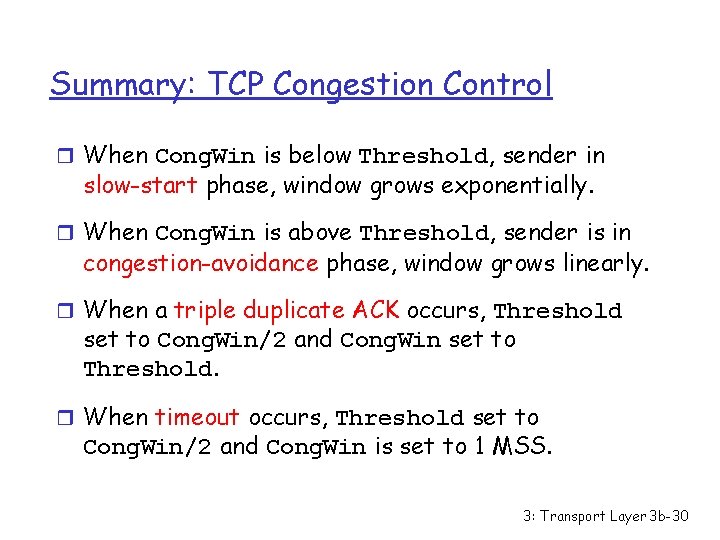

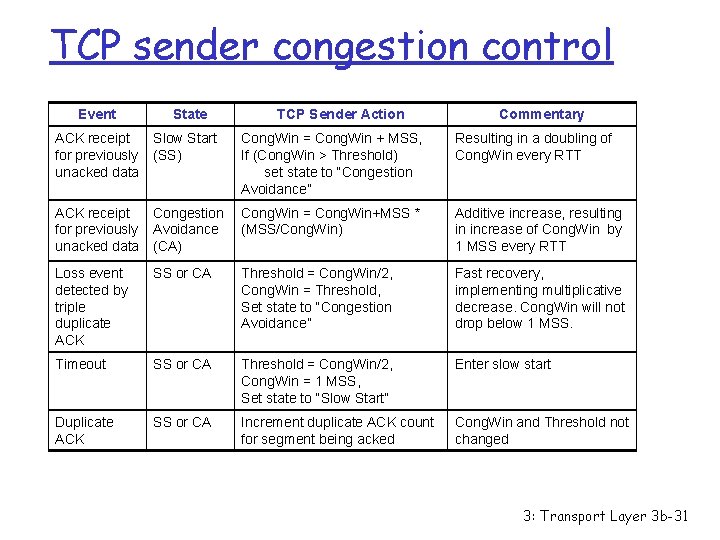

Summary: TCP Congestion Control

- When CongWin is below Threshold, the sender is in the slow-start phase and the window grows exponentially.
- When CongWin is above Threshold, the sender is in the congestion-avoidance phase and the window grows linearly.
- When a triple duplicate ACK occurs, Threshold is set to CongWin/2 and CongWin is set to Threshold.
- When a timeout occurs, Threshold is set to CongWin/2 and CongWin is set to 1 MSS.

TCP sender congestion control

| Event | State | TCP sender action | Commentary |
| --- | --- | --- | --- |
| ACK receipt for previously unacked data | Slow Start (SS) | CongWin = CongWin + MSS; if (CongWin > Threshold) set state to "Congestion Avoidance" | Resulting in a doubling of CongWin every RTT |
| ACK receipt for previously unacked data | Congestion Avoidance (CA) | CongWin = CongWin + MSS * (MSS/CongWin) | Additive increase, resulting in an increase of CongWin by 1 MSS every RTT |
| Loss event detected by triple duplicate ACK | SS or CA | Threshold = CongWin/2; CongWin = Threshold; set state to "Congestion Avoidance" | Fast recovery, implementing multiplicative decrease. CongWin will not drop below 1 MSS. |
| Timeout | SS or CA | Threshold = CongWin/2; CongWin = 1 MSS; set state to "Slow Start" | Enter slow start |
| Duplicate ACK | SS or CA | Increment duplicate ACK count for segment being acked | CongWin and Threshold not changed |
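The table maps almost directly onto a small state machine. The sketch below is a simplified illustration of those five rows only: the class name, the MSS of 1460 bytes and the initial Threshold of 64 KB are assumptions, and retransmission, timers and the receiver window are left out.

```python
from dataclasses import dataclass

MSS = 1460  # bytes; assumed value for illustration


@dataclass
class TcpSender:
    cong_win: float = MSS
    threshold: float = 64 * 1024   # assumed initial Threshold
    state: str = "SS"              # "SS" (slow start) or "CA" (congestion avoidance)
    dup_acks: int = 0

    def on_new_ack(self) -> None:
        """ACK receipt for previously unacked data."""
        self.dup_acks = 0
        if self.state == "SS":
            self.cong_win += MSS
            if self.cong_win > self.threshold:
                self.state = "CA"
        else:
            self.cong_win += MSS * (MSS / self.cong_win)

    def on_duplicate_ack(self) -> None:
        """Count duplicates; the third one is treated as a loss event."""
        self.dup_acks += 1
        if self.dup_acks == 3:
            self.threshold = self.cong_win / 2
            self.cong_win = max(self.threshold, MSS)  # never below 1 MSS
            self.state = "CA"

    def on_timeout(self) -> None:
        """Timeout: back to slow start with a 1-MSS window."""
        self.threshold = self.cong_win / 2
        self.cong_win = MSS
        self.state = "SS"
        self.dup_acks = 0


if __name__ == "__main__":
    s = TcpSender(threshold=4 * MSS)
    for _ in range(4):
        s.on_new_ack()
    print(s.state, s.cong_win / MSS)   # state and window in MSS units
```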

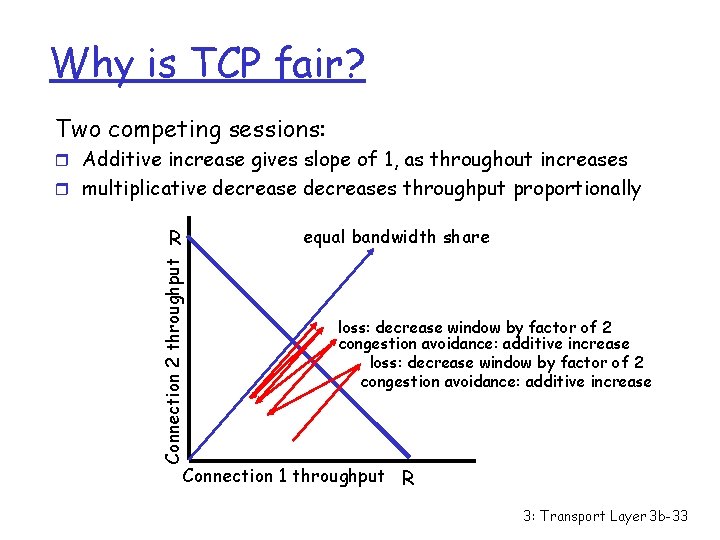

TCP Fairness

TCP's congestion avoidance effect: AIMD (additive increase, multiplicative decrease)

- increase window by 1 per RTT
- decrease window by a factor of 2 on a loss event

Fairness goal: if N TCP sessions share the same bottleneck link, each should get 1/N of the link capacity.

Figure: TCP connection 1 and TCP connection 2 share a bottleneck router of capacity R.

Why is TCP fair?

Two competing sessions:

- additive increase gives a slope of 1 as throughput increases
- multiplicative decrease reduces throughput proportionally

Figure: Connection 2 throughput plotted against Connection 1 throughput (both axes up to R), with the equal-bandwidth-share line; the trajectory alternates between "congestion avoidance: additive increase" and "loss: decrease window by factor of 2".
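The convergence argument can be checked with a toy simulation. It assumes an idealized, synchronized model: both connections have the same RTT, both add one unit per round, and both see a loss (and halve) in any round where their combined rate exceeds the capacity. The function name and the starting rates are made up for illustration.

```python
from typing import Tuple


def aimd_two_flows(x1: float, x2: float, capacity: float,
                   rounds: int) -> Tuple[float, float]:
    """Throughputs of two synchronized AIMD flows after the given rounds."""
    for _ in range(rounds):
        if x1 + x2 > capacity:
            # Shared loss event: multiplicative decrease for both flows.
            x1, x2 = x1 / 2, x2 / 2
        else:
            # No loss: additive increase for both flows.
            x1, x2 = x1 + 1, x2 + 1
    return x1, x2


if __name__ == "__main__":
    a, b = aimd_two_flows(80.0, 10.0, capacity=100.0, rounds=500)
    print(f"flow 1: {a:.3f}  flow 2: {b:.3f}  gap: {abs(a - b):.6f}")
```

Additive increase leaves the gap between the two flows unchanged, while every multiplicative decrease halves it, so the operating point drifts towards the equal-share line.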

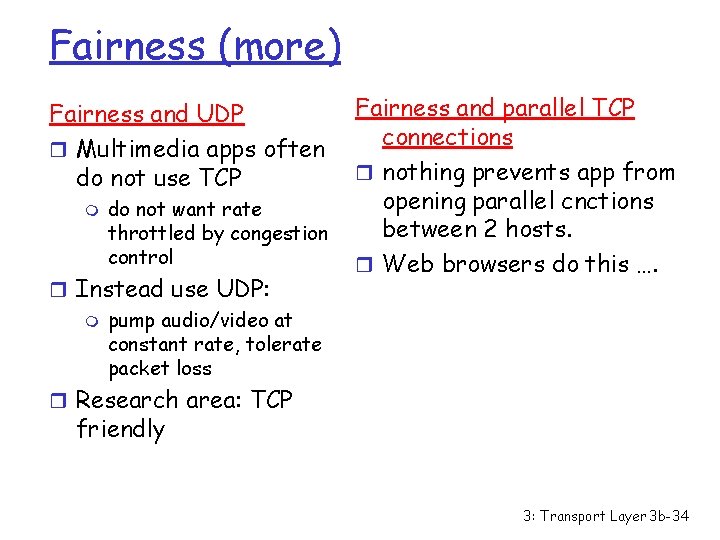

Fairness (more)

Fairness and UDP:

- multimedia apps often do not use TCP
  - do not want rate throttled by congestion control
- instead use UDP:
  - pump audio/video at constant rate, tolerate packet loss

Fairness and parallel TCP connections:

- nothing prevents an app from opening parallel connections between 2 hosts
- Web browsers do this

Research area: TCP friendly.

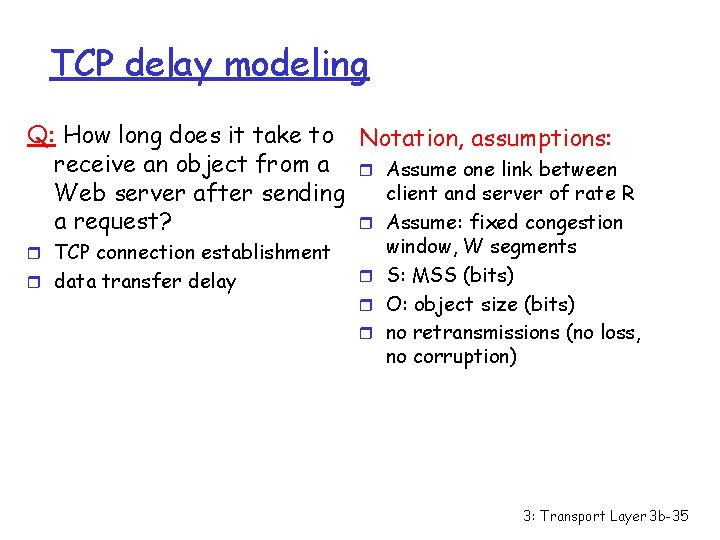

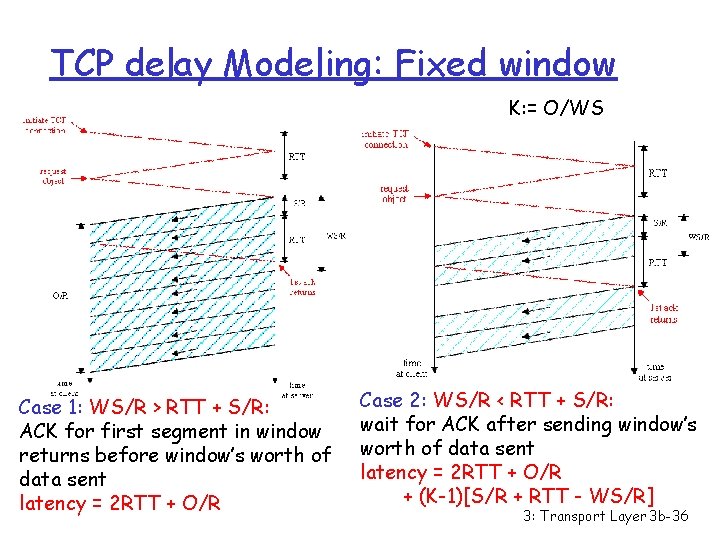

TCP delay modeling

Q: How long does it take to receive an object from a Web server after sending a request?

- TCP connection establishment
- data transfer delay

Notation, assumptions:

- assume one link between client and server, of rate R
- assume a fixed congestion window of W segments
- S: MSS (bits)
- O: object size (bits)
- no retransmissions (no loss, no corruption)

TCP delay modeling: fixed window

K := O/(WS)

Case 1: WS/R > RTT + S/R. The ACK for the first segment in the window returns before a window's worth of data has been sent.

latency = 2 RTT + O/R

Case 2: WS/R < RTT + S/R. The sender waits for an ACK after sending a window's worth of data.

latency = 2 RTT + O/R + (K - 1) [S/R + RTT - WS/R]
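Both cases fit into one short function. This is a sketch under the slide's assumptions (single link, no loss); the function name is hypothetical, K is rounded up to a whole number of windows when O is not a multiple of WS (the slide uses O/WS directly), and the boundary case WS/R = RTT + S/R is treated as case 1.

```python
import math


def fixed_window_latency(O: float, S: float, R: float, W: int, rtt: float) -> float:
    """Latency (seconds) to fetch an object of O bits with a fixed window.

    O: object size (bits), S: MSS (bits), R: link rate (bits/s),
    W: window size (segments), rtt: round-trip time (seconds).
    """
    seg_time = S / R
    stall = seg_time + rtt - W * seg_time   # idle time per window, if positive
    if stall <= 0:
        # Case 1: ACKs return before the window is exhausted; no stalls.
        return 2 * rtt + O / R
    # Case 2: the server idles after each of the first K - 1 windows.
    k = math.ceil(O / (W * S))              # assumption: round up to whole windows
    return 2 * rtt + O / R + (k - 1) * stall


if __name__ == "__main__":
    # Made-up numbers: 100 kbit object, 1 kbit segments, 1 Mbps link, 100 ms RTT.
    for w in (4, 200):
        print(f"W={w:3d}: {fixed_window_latency(100_000, 1000, 1e6, w, 0.1):.3f} s")
```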

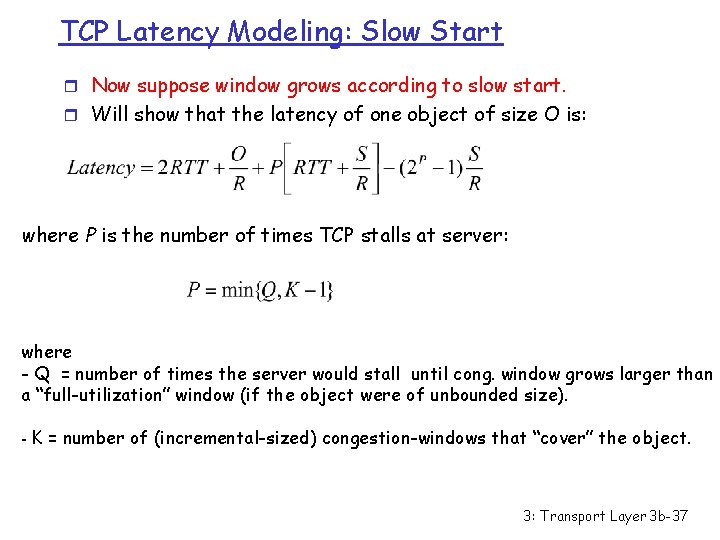

TCP latency modeling: slow start

- Now suppose the window grows according to slow start.
- Will show that the latency of one object of size O is:

latency = 2 RTT + O/R + P [RTT + S/R] - (2^P - 1) S/R

where P is the number of times TCP stalls at the server:

P = min{Q, K - 1}

where

- Q = number of times the server would stall until the congestion window grows larger than a "full-utilization" window (if the object were of unbounded size)
- K = number of (incremental-sized) congestion windows that "cover" the object

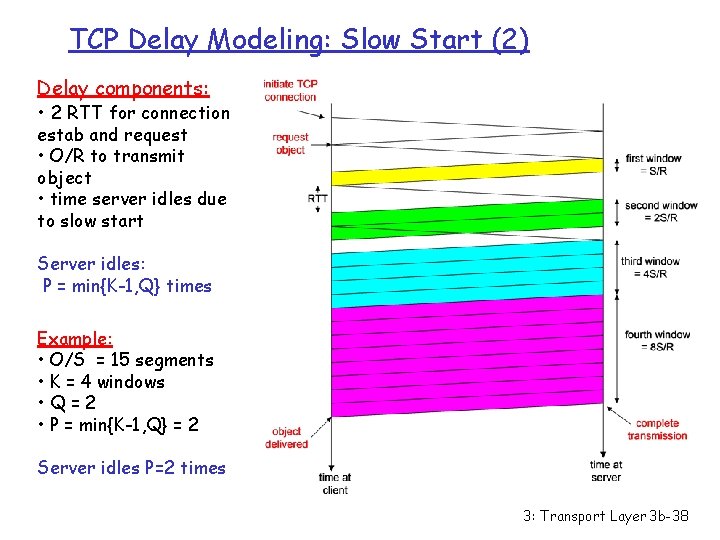

TCP delay modeling: slow start (2)

Delay components:

- 2 RTT for connection establishment and request
- O/R to transmit the object
- time the server idles due to slow start

Server idles: P = min{K-1, Q} times

Example:

- O/S = 15 segments
- K = 4 windows
- Q = 2
- P = min{K-1, Q} = 2

The server idles P = 2 times.
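The example values can be reproduced in a few lines. The sketch assumes the standard textbook form of the model, which is not spelled out in the slide text: windows of 1, 2, 4, ... segments, K the smallest k with (2^k - 1) S >= O, Q the number of windows k for which RTT + S/R - 2^(k-1) S/R >= 0, and latency = 2 RTT + O/R + P (RTT + S/R) - (2^P - 1) S/R. The function name and the choice S/R = 1, RTT = 2 (which yields Q = 2) are illustrative assumptions.

```python
from typing import Tuple


def slow_start_latency(O: float, S: float, R: float,
                       rtt: float) -> Tuple[int, int, int, float]:
    """Return (K, Q, P, latency) for the slow-start delay model."""
    seg_time = S / R
    # K: number of doubling windows (1, 2, 4, ...) needed to cover the object.
    k = 1
    while (2 ** k - 1) * S < O:
        k += 1
    # Q: number of windows after which the server would still have to wait
    # for an ACK if the object were unboundedly large.
    q = 0
    while rtt + seg_time - (2 ** q) * seg_time >= 0:
        q += 1
    p = min(q, k - 1)
    latency = 2 * rtt + O / R + p * (rtt + seg_time) - (2 ** p - 1) * seg_time
    return k, q, p, latency


if __name__ == "__main__":
    # 15 segments of 1 bit each on a 1 bit/s link keeps the arithmetic obvious.
    print(slow_start_latency(O=15, S=1, R=1, rtt=2))
```

With these numbers the server stalls for 2 time units after the first window and 1 after the second, which is exactly the 3 units the formula adds on top of 2 RTT + O/R.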

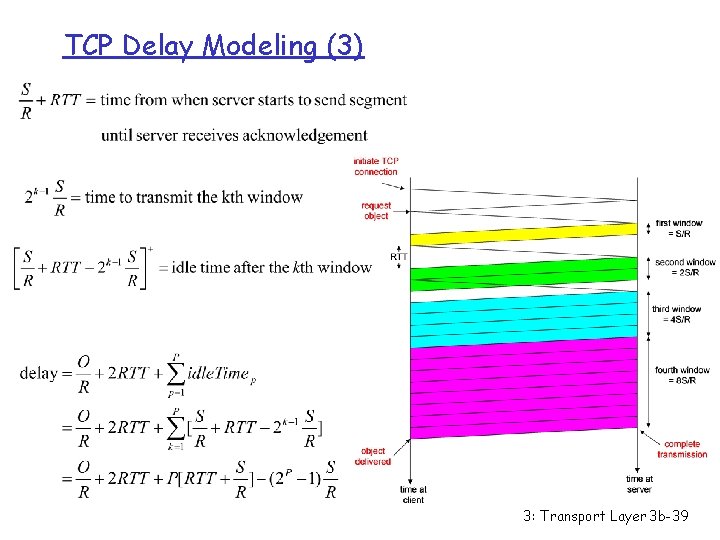

TCP Delay Modeling (3)

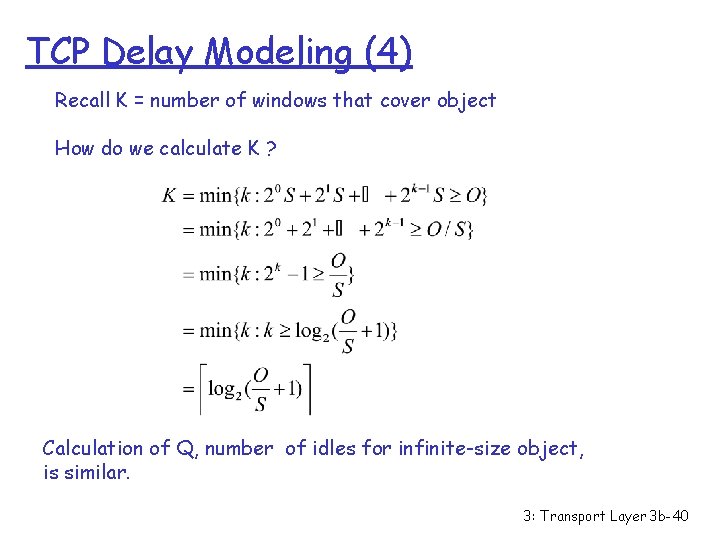

TCP Delay Modeling (4)

Recall: K = number of windows that cover the object.

How do we calculate K?

The calculation of Q, the number of idles for an infinite-size object, is similar.

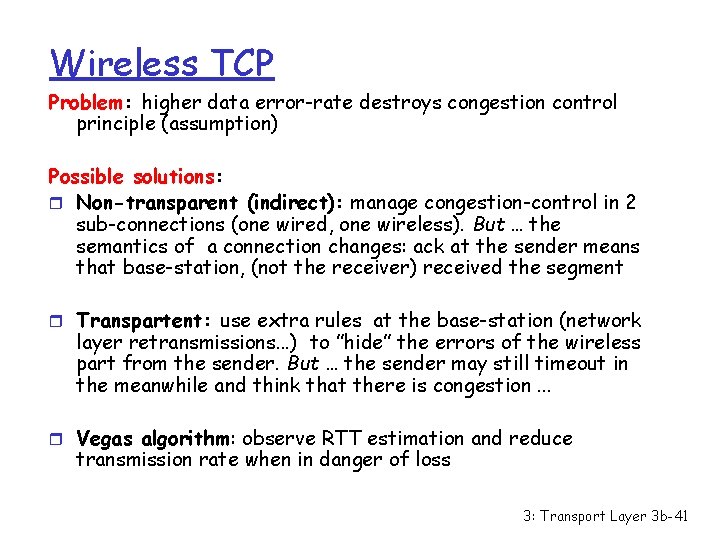

Wireless TCP

Problem: the higher data error rate on wireless links breaks the assumption behind TCP congestion control (that loss signals congestion).

Possible solutions:

- Non-transparent (indirect): manage congestion control in 2 sub-connections (one wired, one wireless). But the semantics of a connection change: an ACK at the sender means that the base station (not the receiver) received the segment.
- Transparent: use extra rules at the base station (network-layer retransmissions, ...) to "hide" the errors of the wireless part from the sender. But the sender may still time out in the meantime and think that there is congestion.
- Vegas algorithm: observe the RTT estimate and reduce the transmission rate when in danger of loss.

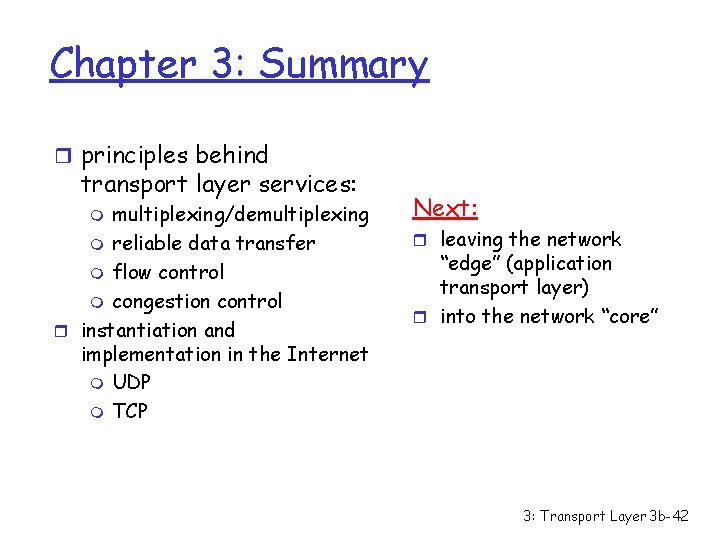

Chapter 3: Summary

- principles behind transport layer services:
  - multiplexing/demultiplexing
  - reliable data transfer
  - flow control
  - congestion control
- instantiation and implementation in the Internet
  - UDP
  - TCP

Next:

- leaving the network "edge" (application, transport layers)
- into the network "core"

- Slides: 42