Chapter 3.3: OS Policies for Virtual Memory

- Slides: 38

Chapter 3.3: OS Policies for Virtual Memory
- Fetch policy
- Placement policy
- Replacement policy
- Resident set management
- Cleaning policy
- Load control

From: Operating Systems by W. Stallings, Prentice-Hall, 1995

Fetch Policy
- Demand paging: pages are fetched when needed (i.e., when a page fault occurs)
  - A process starts with a flurry of page faults; eventually locality takes over
- Prepaging (anticipatory): pages other than the one needed are also brought in
  - Prepaging exploits disk storage characteristics: if pages are stored contiguously, it may be more efficient to fetch them in one go
  - The policy is ineffective if the extra pages are not referenced

Placement Policy
- The placement policy determines where in real memory a piece of a process is to reside
- Placement is only an issue under pure segmentation (see best-fit, first-fit, etc.); with paging it does not matter

Replacement Policy
- All page frames are in use, a page fault has occurred, and the new page must go into a frame
- Which page do you take out?

Replacement Algorithm Objectives
- The page being replaced should be the page least likely to be referenced in the near future
- Because of locality, past reference history is a useful predictor of future references
- Thus most algorithms base their decision on past history

Scope of Replacements
- The set of frames from which these algorithms choose depends on the scope
- Local scope: only frames belonging to the faulting process can be replaced
- Global scope: frames belonging to any process can be replaced
- Some frames are locked and cannot be replaced (e.g., kernel, system buffers)

Replacement Algorithms
- Optimal
- Not-recently-used (NRU)
- First-in, first-out (FIFO)
- Least recently used (LRU)
- Not frequently used (NFU)
- Modified NFU (~LRU)

Optimal Replacement Algorithm
- Replace the page for which the time to the next reference is the longest (i.e., the page needed at the farthest point in the future)
- This algorithm is impossible to implement because the OS would need perfect knowledge of future events
- It is used as a benchmark against which other algorithms are compared

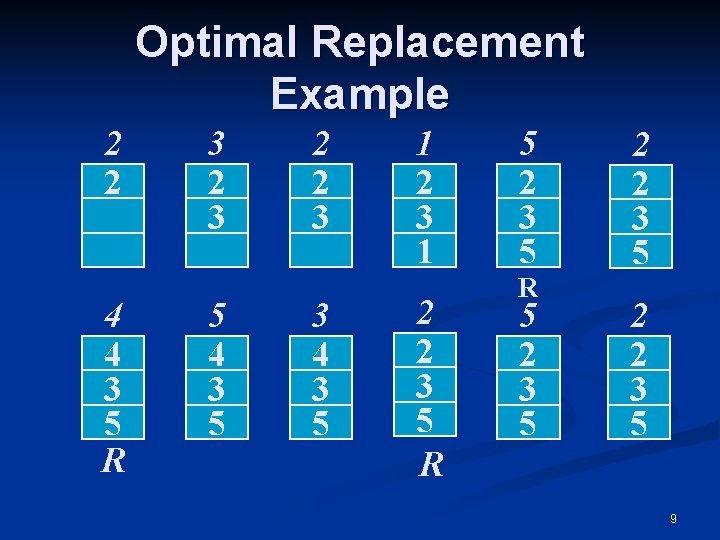

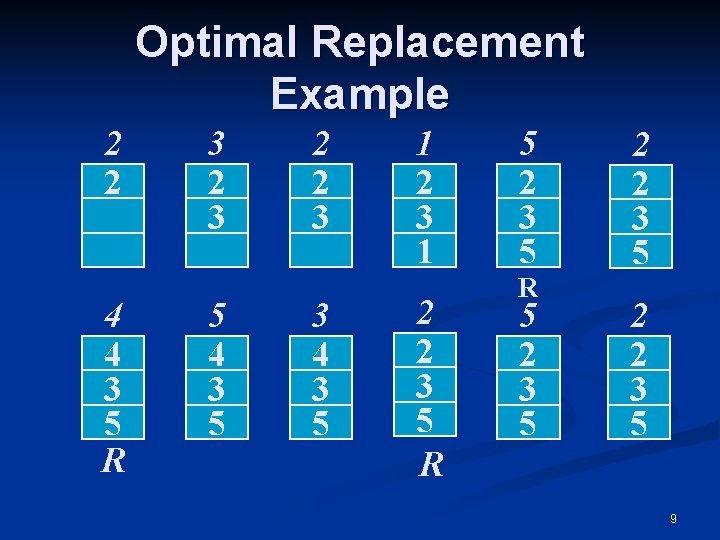

Optimal Replacement Example
[Figure: frame contents after each reference in the page address stream; R marks a replacement]

Optimal Replacement Example (Cont.)
- 1st page fault: page 1 is replaced by page 5 because page 1 does not appear again in the page address stream
- 2nd page fault: page 2 is replaced by page 4 because page 2 will not be referenced until after two other references (pages 5 and 3)
- 3rd page fault: page 4 is replaced by page 2 because page 4 does not appear in the stream any more
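The three replacements described above can be reproduced with a short simulation. This is a minimal sketch, not the textbook's code: the function name `simulate_optimal` is hypothetical, and the reference string `2 3 2 1 5 2 4 5 3 2 5 2` with 3 frames is an assumption chosen because it is consistent with the replacements listed on the slide.

```python
def simulate_optimal(refs, num_frames):
    """Return (loaded_page, evicted_page) pairs for every fault that evicts a page."""
    frames = []
    replacements = []
    for i, page in enumerate(refs):
        if page in frames:
            continue  # hit: nothing to do
        if len(frames) < num_frames:
            frames.append(page)  # initial fill, no eviction needed
            continue
        future = refs[i + 1:]

        def next_use(p):
            # Distance to the next reference; pages never used again sort last.
            return future.index(p) if p in future else float("inf")

        victim = max(frames, key=next_use)  # farthest next use (first one on ties)
        frames[frames.index(victim)] = page
        replacements.append((page, victim))
    return replacements


# Assumed reference string (not recoverable from the slide itself).
refs = [2, 3, 2, 1, 5, 2, 4, 5, 3, 2, 5, 2]
print(simulate_optimal(refs, 3))
```

The simulation needs the whole future of the reference string, which is exactly why the policy cannot be implemented in a real OS and serves only as a yardstick.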

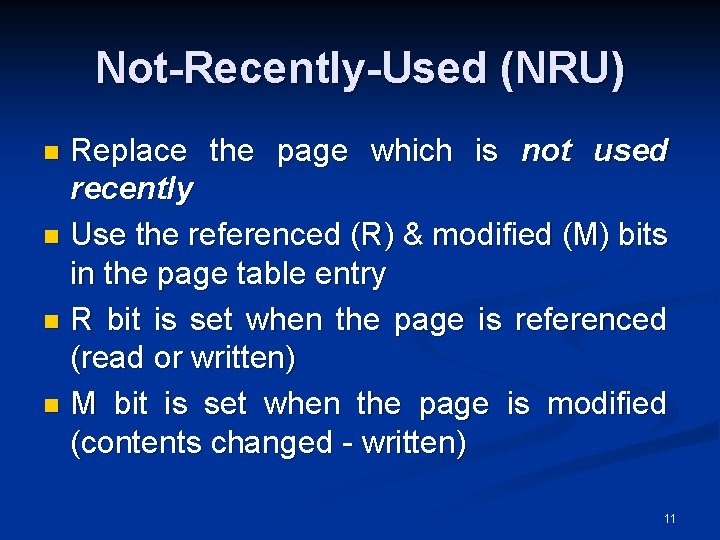

Not-Recently-Used (NRU)
- Replace a page that has not been used recently
- Uses the referenced (R) and modified (M) bits in the page table entry
- The R bit is set when the page is referenced (read or written)
- The M bit is set when the page is modified (written, so its contents change)

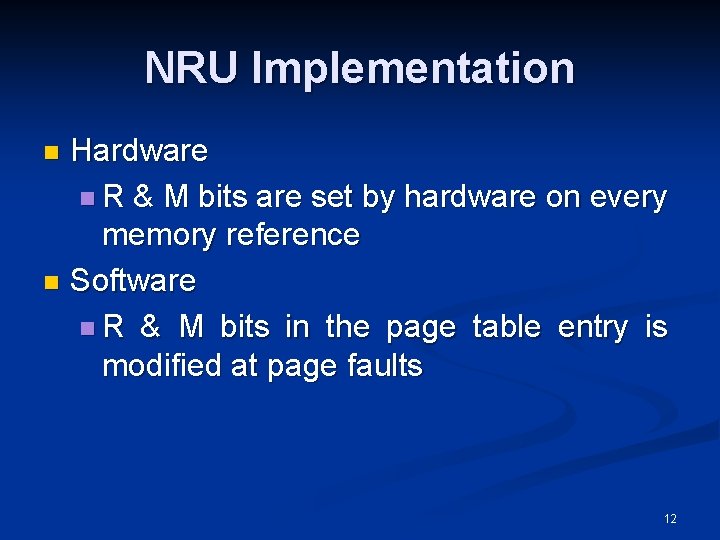

NRU Implementation
- Hardware
  - R & M bits are set by hardware on every memory reference
- Software
  - R & M bits in the page table entry are modified at page faults

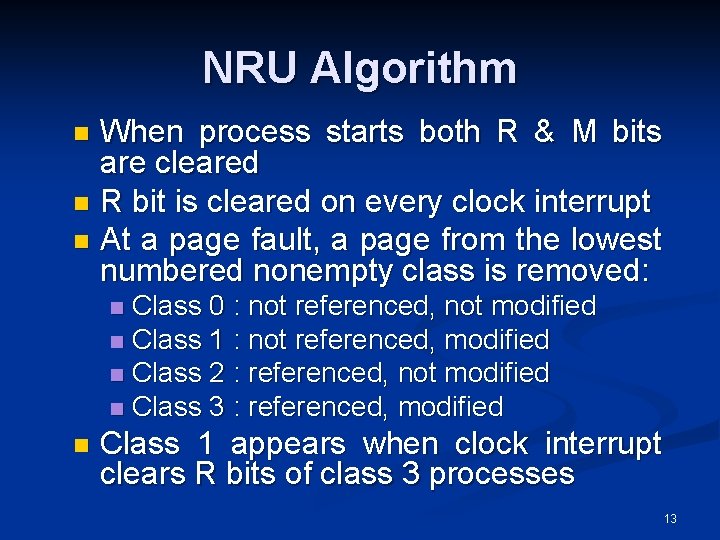

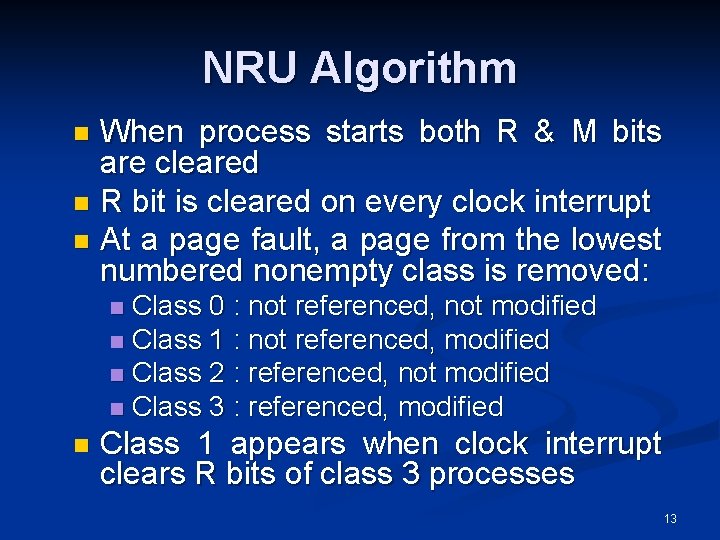

NRU Algorithm
- When a process starts, both the R and M bits of all its pages are cleared
- The R bit is cleared on every clock interrupt
- At a page fault, a page from the lowest-numbered nonempty class is removed:
  - Class 0: not referenced, not modified
  - Class 1: not referenced, modified
  - Class 2: referenced, not modified
  - Class 3: referenced, modified
- Class 1 appears when a clock interrupt clears the R bits of class 3 pages
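The class numbering above is simply the two-bit number formed by R and M. A minimal sketch of victim selection follows; the function names and the page labels are hypothetical, and for determinism it picks the first page in the lowest class rather than an arbitrary one.

```python
def nru_class(referenced, modified):
    """Class number 0..3: R is the high bit, M is the low bit."""
    return 2 * int(referenced) + int(modified)


def pick_nru_victim(pages):
    """pages maps page -> (R, M); return a page from the lowest nonempty class.

    Assumption: ties are broken by dictionary order, not randomly.
    """
    return min(pages, key=lambda p: nru_class(*pages[p]))


def clock_interrupt(pages):
    """Clear every R bit; M bits are kept so dirty pages are still written back."""
    return {p: (False, m) for p, (r, m) in pages.items()}


# Hypothetical page table state.
pages = {"A": (True, True), "B": (True, False), "C": (False, True)}
print(pick_nru_victim(pages))                   # C is in class 1, the lowest present
print(nru_class(*clock_interrupt(pages)["A"]))  # A drops from class 3 to class 1
```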

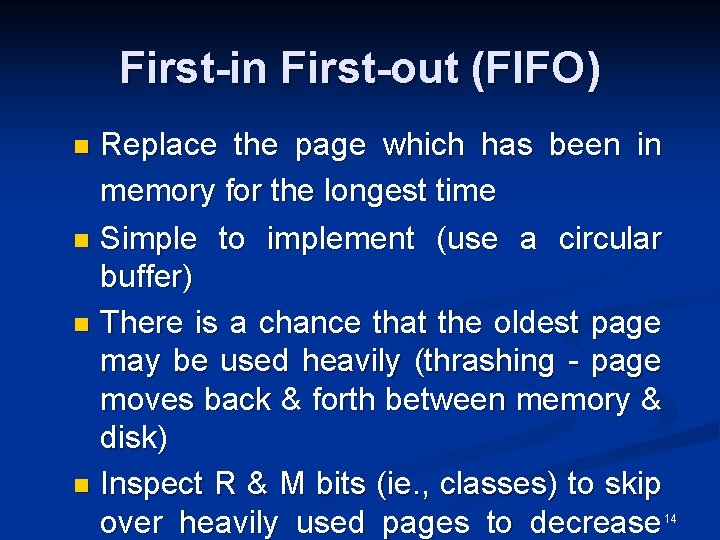

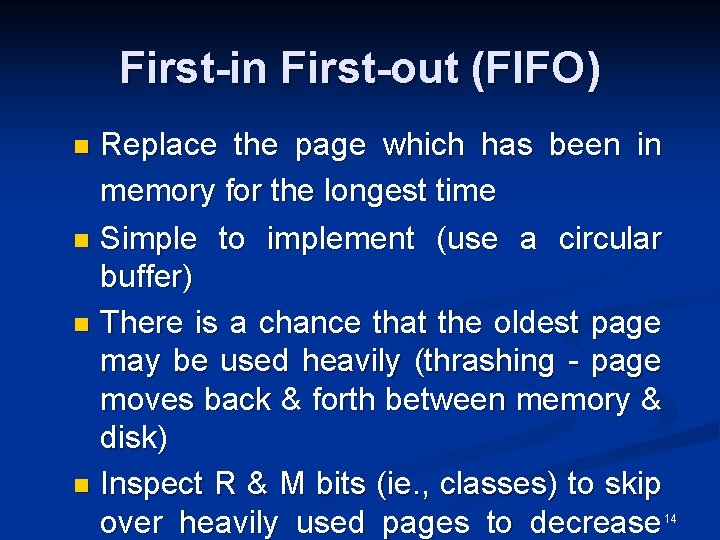

First-in First-out (FIFO)
- Replace the page that has been in memory for the longest time
- Simple to implement (use a circular buffer)
- The oldest page may be heavily used, which can cause thrashing (the page moves back and forth between memory and disk)
- Inspecting the R & M bits (i.e., the NRU classes) lets the algorithm skip over heavily used pages

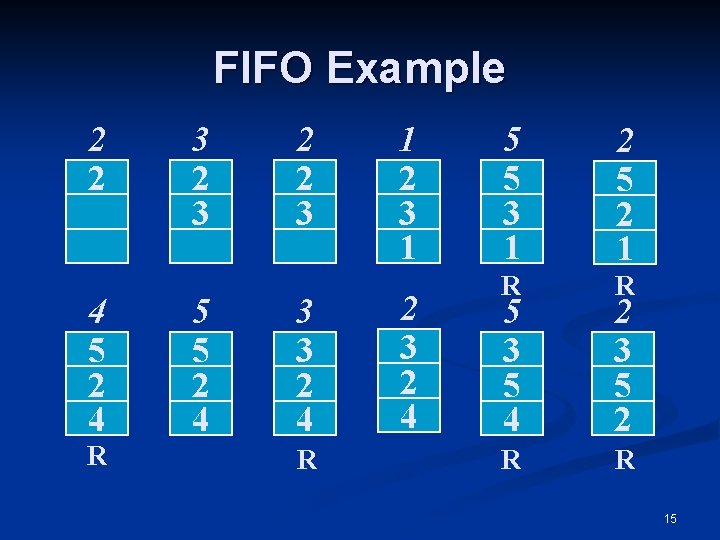

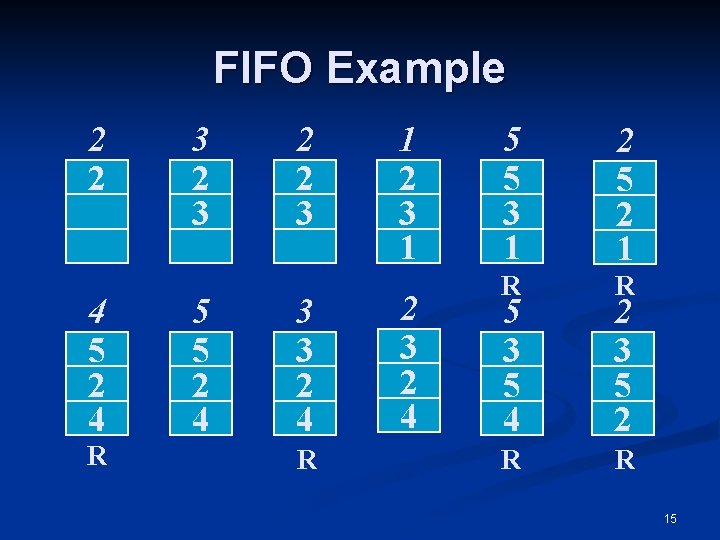

FIFO Example
[Figure: frame contents after each reference in the page address stream; R marks a replacement]
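FIFO can be simulated with a queue of resident pages. The sketch below is illustrative only: `simulate_fifo` is a hypothetical name, and the reference string and the 3-frame allocation are assumptions, not values read from the figure.

```python
from collections import deque


def simulate_fifo(refs, num_frames):
    """Return (loaded_page, evicted_page) pairs for every fault that evicts a page."""
    queue = deque()  # resident pages, oldest on the left
    replacements = []
    for page in refs:
        if page in queue:
            continue  # hit: FIFO does not reorder on a reference
        if len(queue) == num_frames:
            victim = queue.popleft()  # evict the page loaded longest ago
            replacements.append((page, victim))
        queue.append(page)
    return replacements


# Assumed reference string and frame count.
refs = [2, 3, 2, 1, 5, 2, 4, 5, 3, 2, 5, 2]
print(len(simulate_fifo(refs, 3)), "replacements")
```

Note that page 2 is evicted even though it is referenced again almost immediately, which illustrates the weakness mentioned for heavily used pages.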

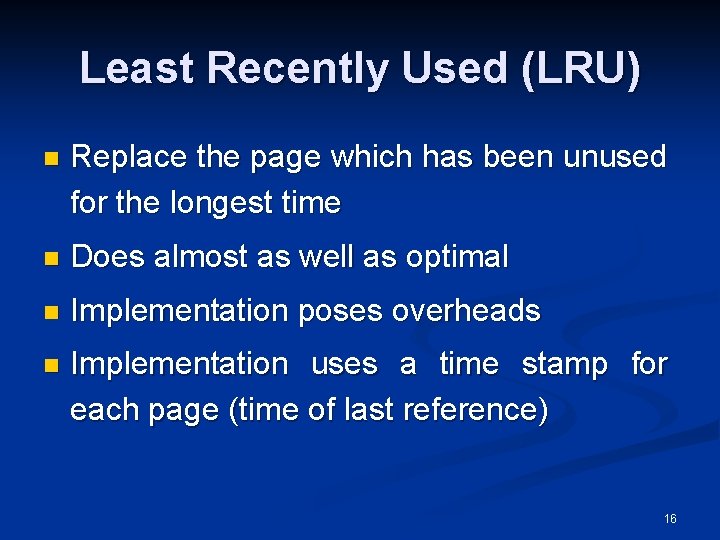

Least Recently Used (LRU)
- Replace the page that has been unused for the longest time
- Does almost as well as optimal
- Implementation imposes significant overhead
- Implementation uses a time stamp for each page (time of last reference)

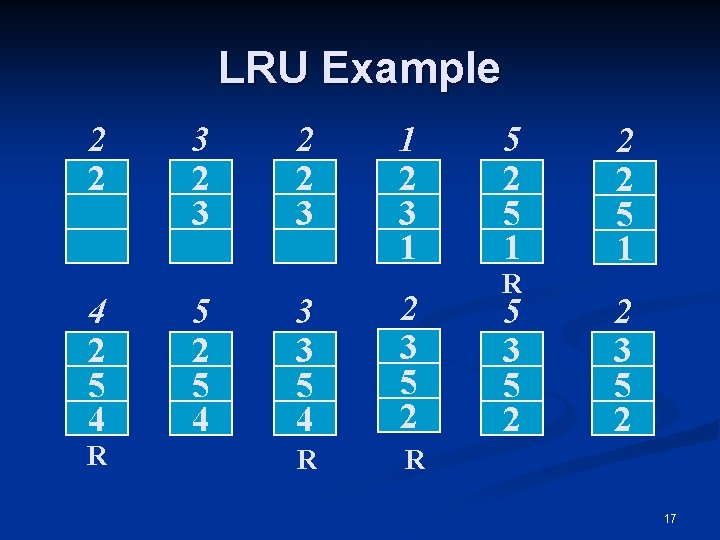

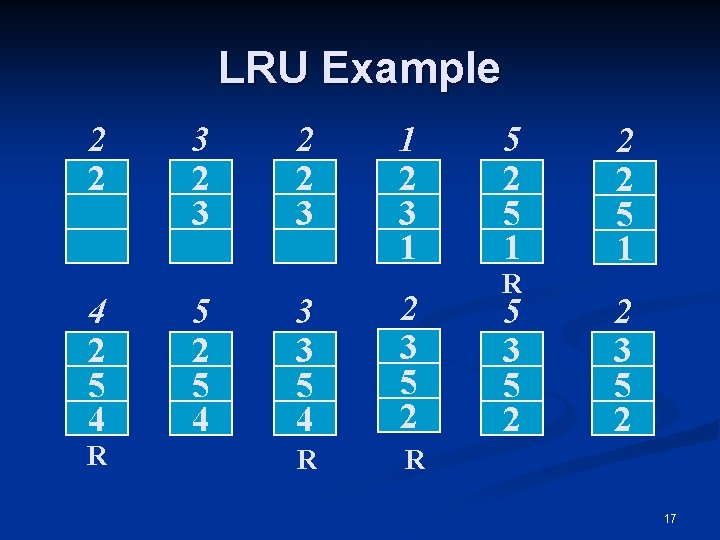

LRU Example
[Figure: frame contents after each reference in the page address stream; R marks a replacement]

LRU Example (Cont.)
- 1st page fault: page 5 replaces page 3 because page 3 hasn't been referenced in the last two references
- 2nd page fault: page 4 replaces page 1 because page 1 hasn't been referenced in the last two references
- 3rd page fault: page 3 replaces page 2 because page 2 hasn't been referenced in the last two references
- 4th page fault: page 2 replaces page 4 because page 4 hasn't been referenced in the last two references
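The four replacements listed above can be checked with a time-stamp based simulation. This is a sketch under assumptions: `simulate_lru` is a hypothetical name, and the reference string `2 3 2 1 5 2 4 5 3 2 5 2` with 3 frames is assumed because it reproduces the replacements on the slide.

```python
def simulate_lru(refs, num_frames):
    """Return (loaded_page, evicted_page) pairs for every fault that evicts a page."""
    last_used = {}  # resident page -> time of its most recent reference
    replacements = []
    for time, page in enumerate(refs):
        if page not in last_used and len(last_used) == num_frames:
            # Evict the resident page with the oldest time stamp.
            victim = min(last_used, key=last_used.get)
            del last_used[victim]
            replacements.append((page, victim))
        last_used[page] = time  # stamp on every reference, hit or miss
    return replacements


# Assumed reference string and frame count.
refs = [2, 3, 2, 1, 5, 2, 4, 5, 3, 2, 5, 2]
print(simulate_lru(refs, 3))
```

Updating a time stamp on every reference is what makes exact LRU costly in practice.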

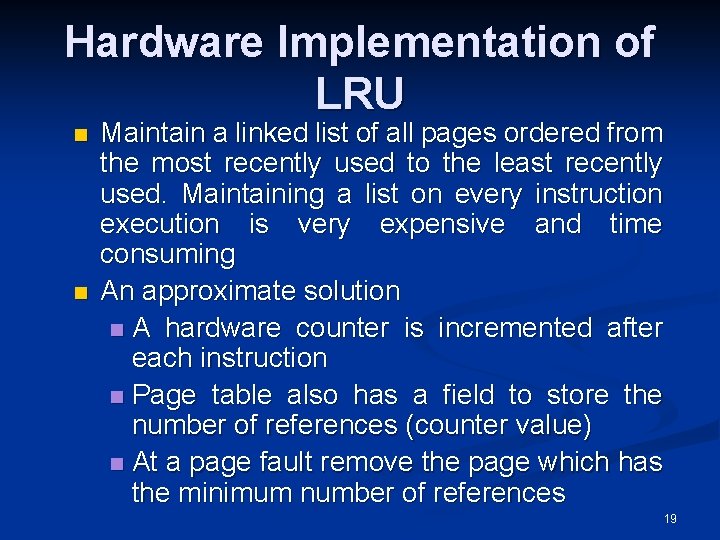

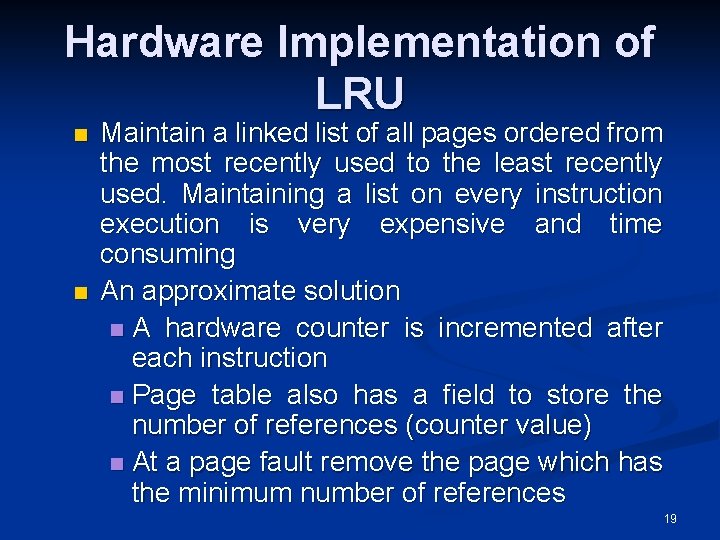

Hardware Implementation of LRU
- Maintain a linked list of all pages ordered from the most recently used to the least recently used
  - Maintaining the list on every instruction execution is very expensive and time consuming
- An approximate solution
  - A hardware counter is incremented after each instruction
  - Each page table entry also has a field that stores the counter value at the page's most recent reference
  - At a page fault, remove the page with the lowest stored counter value

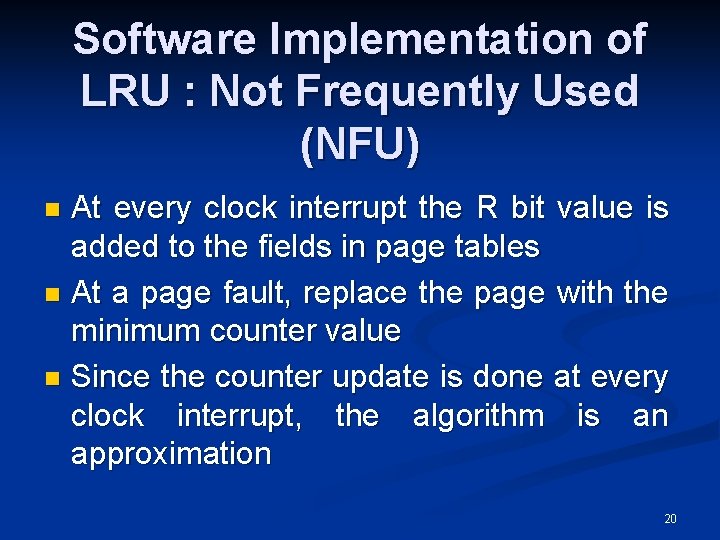

Software Implementation of LRU: Not Frequently Used (NFU)
- At every clock interrupt, each page's R bit (0 or 1) is added to that page's counter field in the page table
- At a page fault, replace the page with the minimum counter value
- Since the counters are updated only at clock interrupts, the algorithm is an approximation

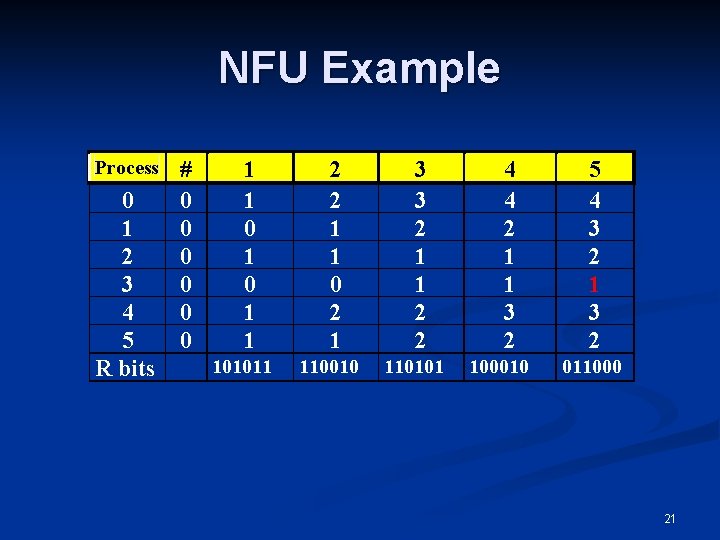

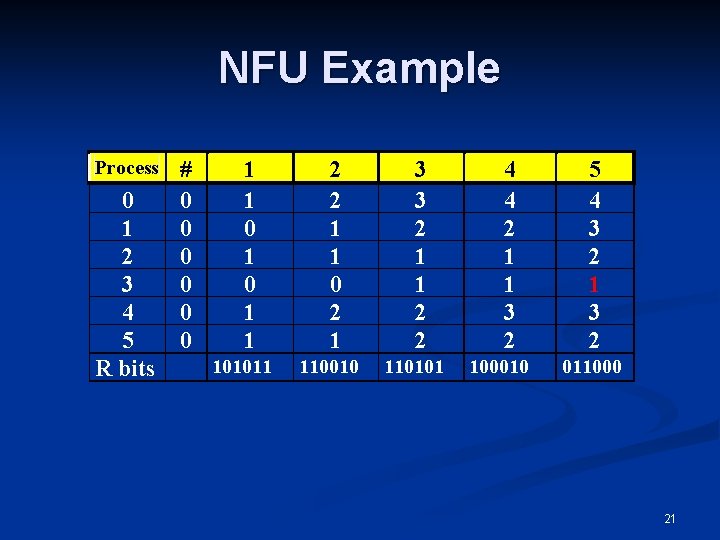

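NFU Example

| Clock tick # | Page 0 | Page 1 | Page 2 | Page 3 | Page 4 | Page 5 | R bits |
|---|---|---|---|---|---|---|---|
| 1 | 1 | 0 | 1 | 0 | 1 | 1 | 101011 |
| 2 | 2 | 1 | 1 | 0 | 2 | 1 | 110010 |
| 3 | 3 | 2 | 1 | 1 | 2 | 2 | 110101 |
| 4 | 4 | 2 | 1 | 1 | 3 | 2 | 100010 |
| 5 | 4 | 3 | 2 | 1 | 3 | 2 | 011000 |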
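The counter values in the NFU example follow from adding each tick's R bits to the running counters. A minimal sketch, with the hypothetical helper name `nfu_tick` and the R-bit strings taken from the example:

```python
def nfu_tick(counters, r_bits):
    """Add each page's R bit (given as a '0'/'1' string) to its counter."""
    return [count + int(bit) for count, bit in zip(counters, r_bits)]


# R bits for pages 0..5 at five successive clock ticks, as in the example.
r_bits_per_tick = ["101011", "110010", "110101", "100010", "011000"]

counters = [0] * 6
for r_bits in r_bits_per_tick:
    counters = nfu_tick(counters, r_bits)
    print(r_bits, counters)

# At a page fault, NFU evicts the page with the smallest counter.
victim = counters.index(min(counters))
print("victim page:", victim)
```

Because counters only ever grow, a page that was heavily used long ago keeps a high count, which is the weakness that aging addresses.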

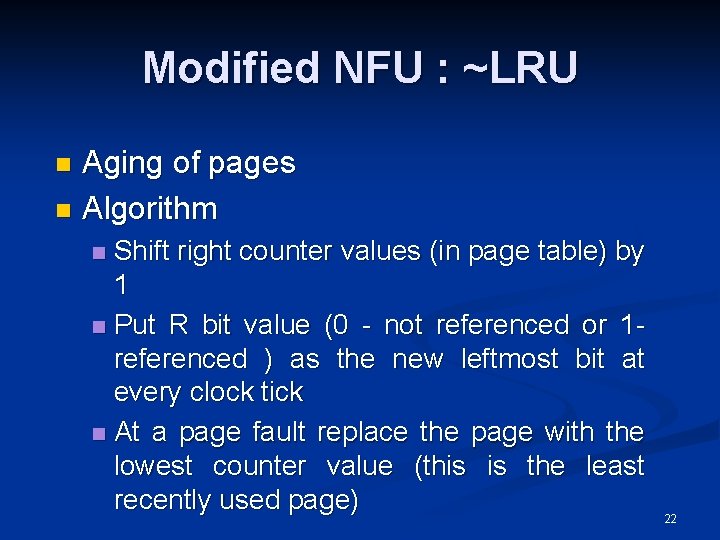

Modified NFU: ~LRU
- Aging of pages
- Algorithm (at every clock tick)
  - Shift each counter value (in the page table) right by 1
  - Put the R bit value (0 = not referenced, 1 = referenced) in as the new leftmost bit
- At a page fault, replace the page with the lowest counter value (this is the least recently used page)

Modified NFU: ~LRU (Cont.)
- Example
  - Suppose the counter is 1 0 1 1 0 and the R bit = 0
  - The new counter becomes 0 1 0 1 1
- Leading zeros represent the number of clock ticks for which the page has not been referenced
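The aging step is a right shift followed by inserting the R bit at the left. A minimal sketch, assuming a 5-bit counter as in the example (the function name `age_counter` and the `width` parameter are hypothetical):

```python
def age_counter(counter, r_bit, width=5):
    """Shift the counter right by one and put r_bit in the leftmost position."""
    return (counter >> 1) | (r_bit << (width - 1))


aged = age_counter(0b10110, 0)
print(format(aged, "05b"))  # counter 10110 with R = 0

aged_referenced = age_counter(0b10110, 1)
print(format(aged_referenced, "05b"))  # same counter if the page had been referenced
```

Because the newest R bit lands in the most significant position, a recent reference outweighs any number of older ones when counters are compared.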

Differences Between NFU & ~LRU
- NFU counts the number of times that a page is accessed in a given period
- ~LRU incorporates a time factor by shifting the counter value right (aging)

Resident Set Management
- Resident set: the set of a process's pages that are in main memory
- The OS must manage the size and allocation policies that affect the resident set

Factors Include
- A smaller resident set per process means more processes fit in memory, so the OS is more likely to find at least one ready process (otherwise swapping must occur)
- If a process's resident set is small, its page fault frequency will be higher
- Increasing the resident set size beyond some limit does not affect the page fault frequency

Resident Set Management Policies
- Fixed allocation policy: each process has a fixed number of pages
- Variable allocation policy: the number of pages per process can change

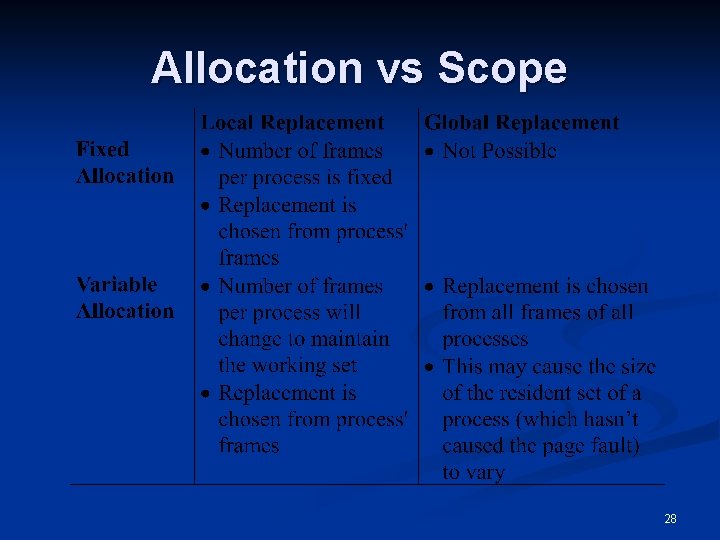

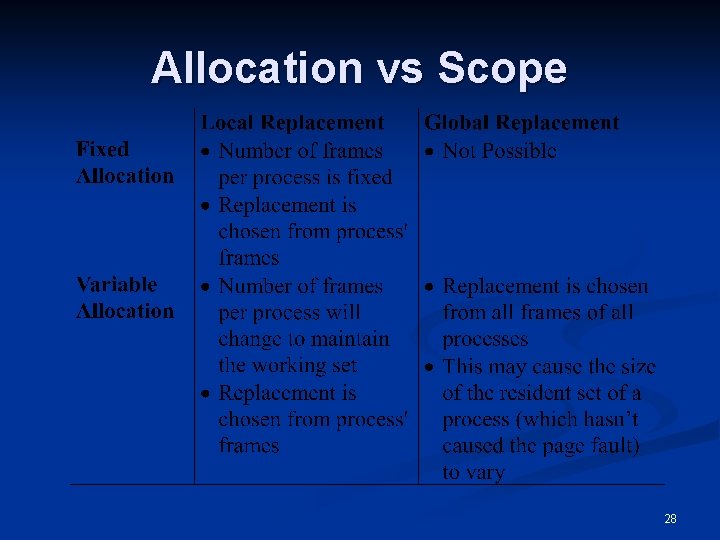

Allocation vs Scope

Fixed Allocation, Local Scope
- The number of frames per process is decided beforehand and cannot be changed
- Too small: high page fault rate, poor performance
- Too large: small number of processes in memory, high processor idle time and/or swapping

Variable Allocation, Global Scope
- Easiest to implement
- Processes with high page fault rates will tend to grow; however, replacement problems exist

Variable Allocation, Local Scope
- Allocate a new process a resident set size
- Use prepaging or demand paging to fill up the allocation
- Select the page to replace from within the faulting process's resident set
- Re-evaluate the allocation occasionally

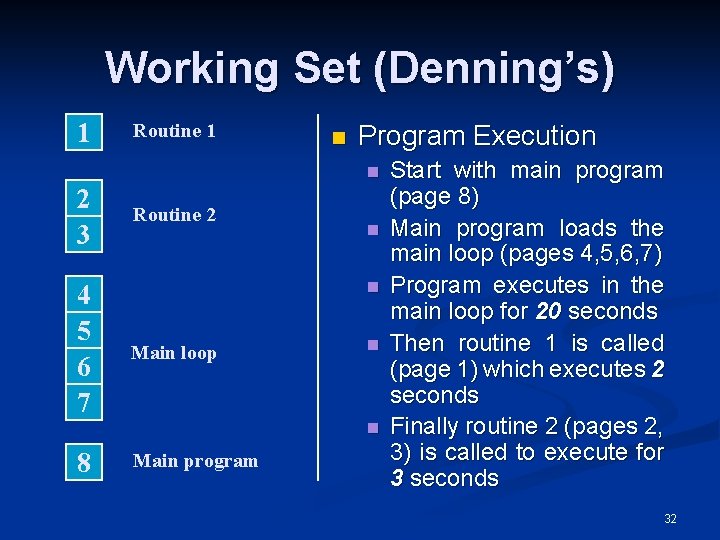

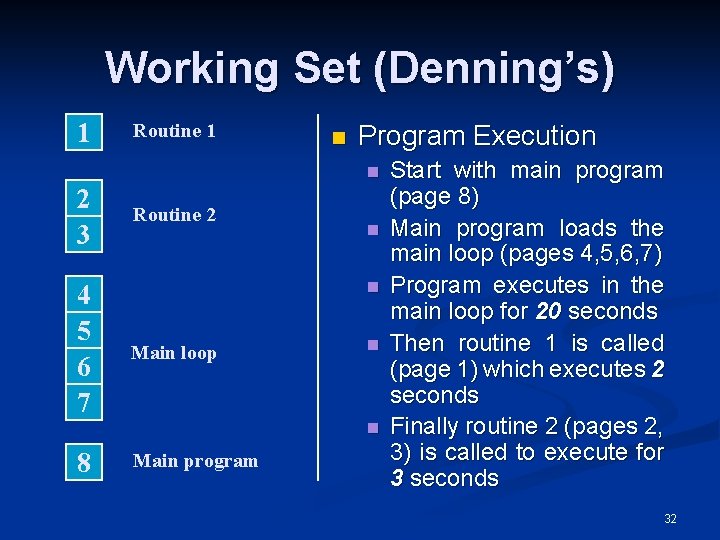

Working Set (Denning's)
[Figure: program layout over pages 1 to 8: routine 1 (page 1), routine 2 (pages 2, 3), main loop (pages 4, 5, 6, 7), main program (page 8)]
- Program execution
  - Start with the main program (page 8)
  - The main program loads the main loop (pages 4, 5, 6, 7)
  - The program executes in the main loop for 20 seconds
  - Then routine 1 (page 1) is called and executes for 2 seconds
  - Finally routine 2 (pages 2, 3) is called and executes for 3 seconds

Working Set (Cont.)
- In the previous example, the process needs pages 4, 5, 6, 7 most of the time (20 seconds out of a total execution time of 25 seconds)
- If these pages are kept in memory, the number of page faults will decrease; otherwise, thrashing may occur
- The set of pages that a process is currently using is called its working set
- Rule: do not run a process if its working set cannot be kept in memory

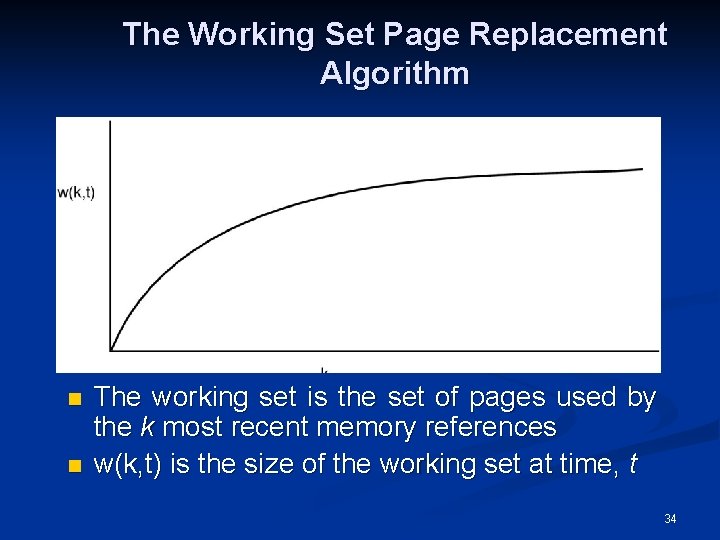

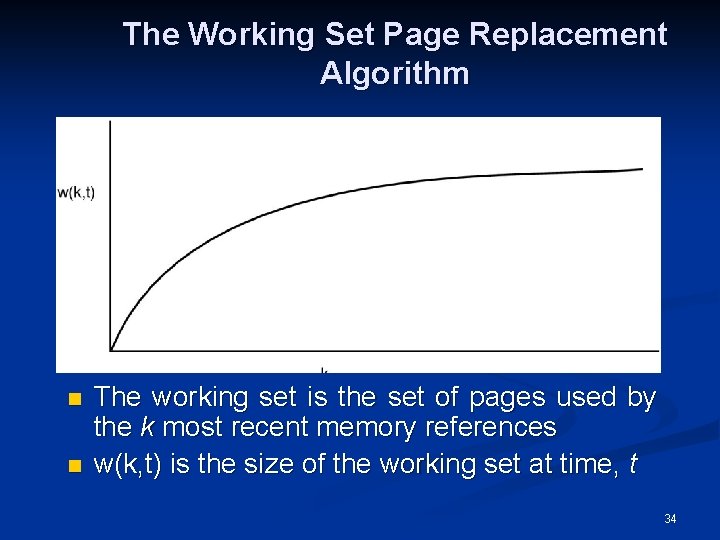

The Working Set Page Replacement Algorithm
- The working set is the set of pages used by the k most recent memory references
- w(k, t) is the size of the working set at time t
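The definition of w(k, t) can be made concrete with a few lines of code. This is a sketch under assumptions: time t is counted in memory references (t references have been made so far), the helper names are hypothetical, and the trace is an invented one loosely modelled on a main loop over pages 4 to 7.

```python
def working_set(k, t, refs):
    """Set of pages touched by the k most recent references, after t references."""
    start = max(0, t - k)
    return set(refs[start:t])


def w(k, t, refs):
    """Size of the working set at time t for window size k."""
    return len(working_set(k, t, refs))


# Hypothetical trace: main program (8), two passes of a loop (4..7), then routine 1 (1).
refs = [8, 4, 5, 6, 7, 4, 5, 6, 7, 1]

print(sorted(working_set(4, 9, refs)), w(4, 9, refs))    # inside the loop
print(sorted(working_set(4, 10, refs)), w(4, 10, refs))  # just after routine 1 is called
```

A larger k captures more of the program's history but also keeps pages that are no longer needed, so the window size is a tuning choice.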

Working Set Implementation
- Use aging as in ~LRU
- A page whose R bit was 1 (referenced) within the last n clock ticks is assumed to be a member of the working set
- The value of n is determined experimentally

Cleaning Policy
- Cleaning policy: deciding when a modified page should be written out to secondary memory
- Demand cleaning: a page is written out only when it has been selected for replacement
  - This means a page fault may require two I/O operations (write out the old page, read in the new one), which severely affects performance

Cleaning Policy (Cont.)
- Precleaning: pages are written out before their frames are needed (so they can be written out in batches)
  - There is no sense in writing out batches of pages only to find them modified again
- Page buffering is a good compromise

Load Control
- Load control: controlling the number of processes that are resident in memory
- Too few processes imply a lack of ready processes, which implies swapping
- Too many processes imply a high page fault frequency, which leads to …