Chapter 2: Processes and Threads

- Slides: 99

- 2.1 Processes
- 2.2 Threads
- 2.3 Interprocess communication
- 2.4 Classical IPC problems
- 2.5 Scheduling

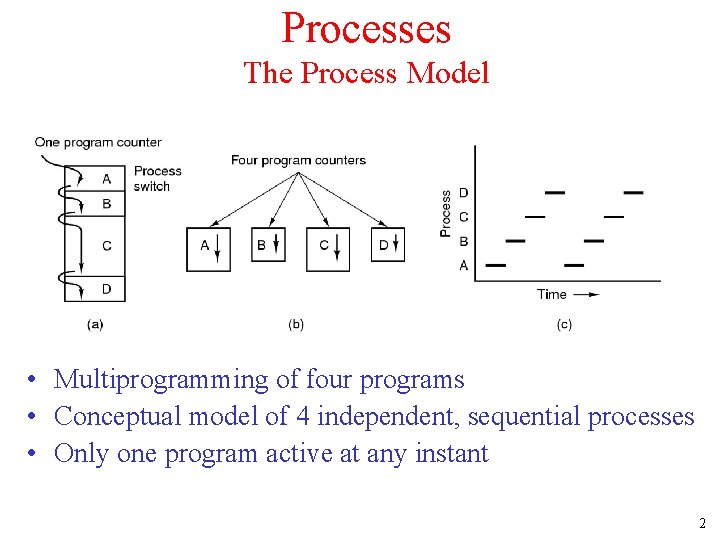

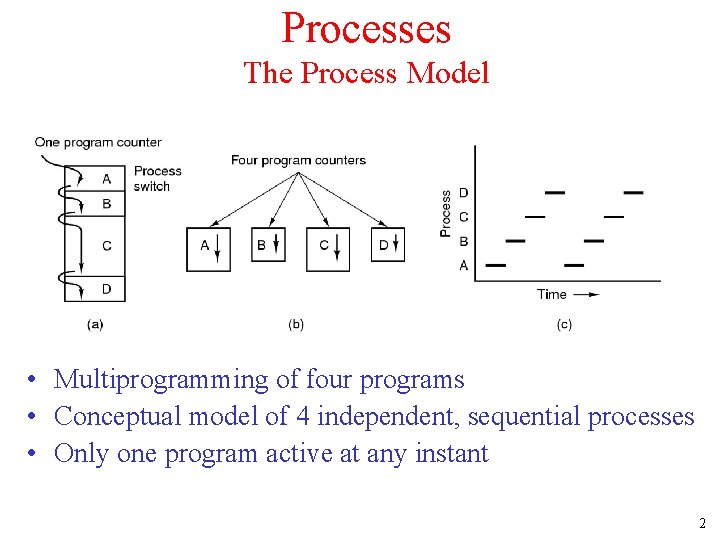

Processes: The Process Model

- Multiprogramming of four programs
- Conceptual model of four independent, sequential processes
- Only one program is active at any instant

Process Creation

Principal events that cause process creation:

1. System initialization
2. Execution of a process creation system call
3. User request to create a new process
4. Initiation of a batch job

Process Termination

Conditions which terminate processes:

1. Normal exit (voluntary)
2. Error exit (voluntary)
3. Fatal error (involuntary)
4. Killed by another process (involuntary)

Process Hierarchies

- A parent creates a child process; child processes can create their own processes
- This forms a hierarchy
  - UNIX calls this a "process group"
- Windows has no concept of a process hierarchy
  - all processes are created equal

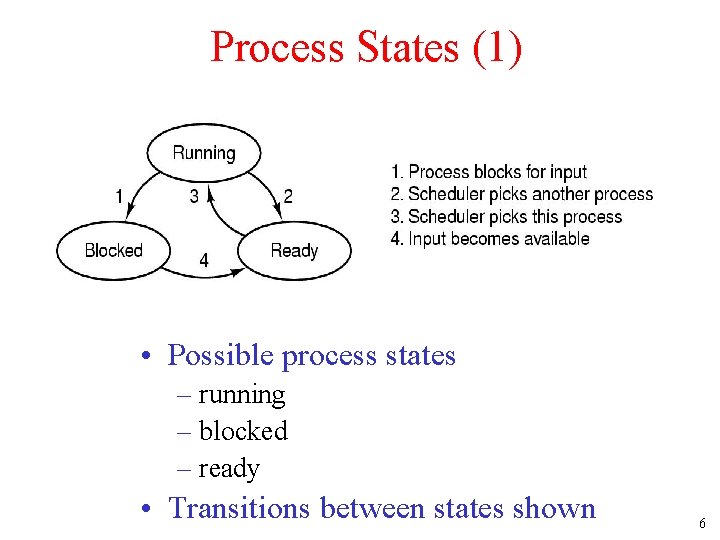

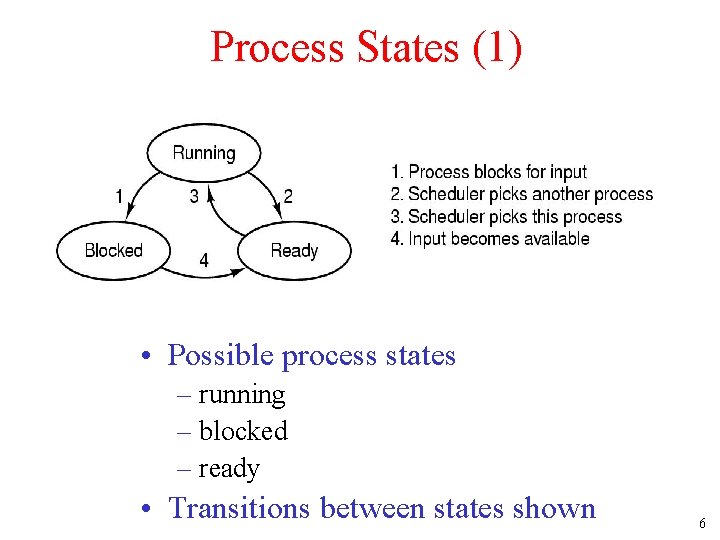

Process States (1)

- Possible process states
  - running
  - blocked
  - ready
- Transitions between these states are shown in the figure
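The three-state model can be written down as a small transition table. The sketch below is illustrative only: the event names (`block`, `preempt`, `dispatch`, `wakeup`) are assumptions chosen for this example, standing for the usual four transitions (a process blocks for input, the scheduler picks another process, the scheduler picks this process, input becomes available).

```python
from enum import Enum


class State(Enum):
    RUNNING = "running"
    READY = "ready"
    BLOCKED = "blocked"


# Legal transitions of the three-state model (event names are hypothetical).
TRANSITIONS = {
    (State.RUNNING, "block"): State.BLOCKED,    # process waits for input
    (State.RUNNING, "preempt"): State.READY,    # scheduler picks another process
    (State.READY, "dispatch"): State.RUNNING,   # scheduler picks this process
    (State.BLOCKED, "wakeup"): State.READY,     # input becomes available
}


def step(state, event):
    """Return the next state, or raise ValueError for an illegal transition."""
    try:
        return TRANSITIONS[(state, event)]
    except KeyError:
        raise ValueError(f"illegal transition: {event!r} from {state.value}") from None
```

Note that a blocked process cannot go straight to running in this model: it must first become ready and then be dispatched.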

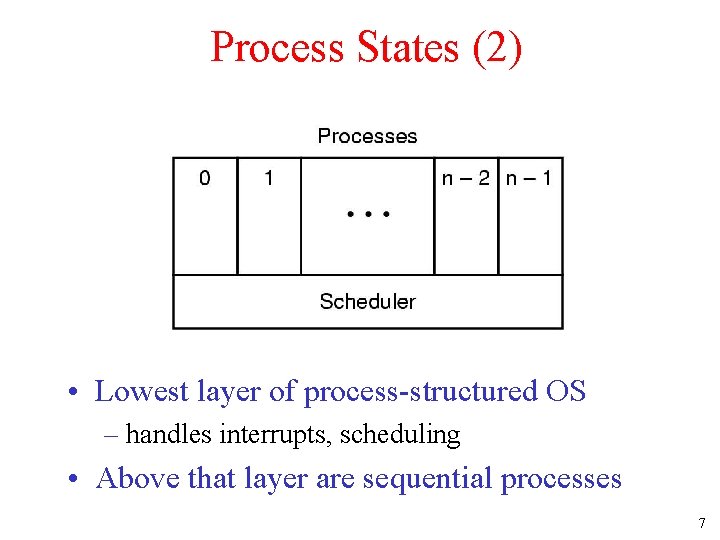

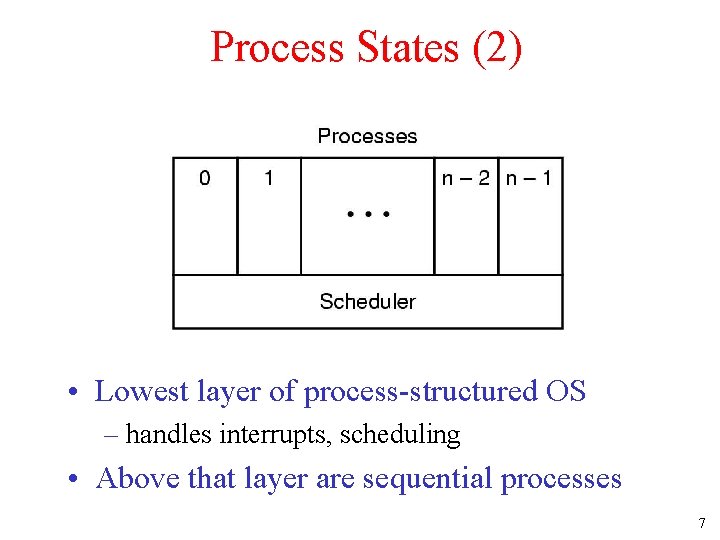

Process States (2)

- The lowest layer of a process-structured OS handles interrupts and scheduling
- Above that layer are sequential processes

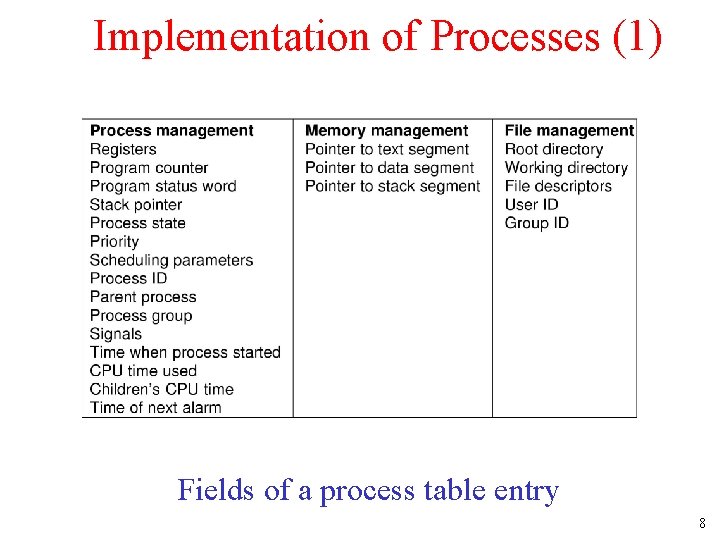

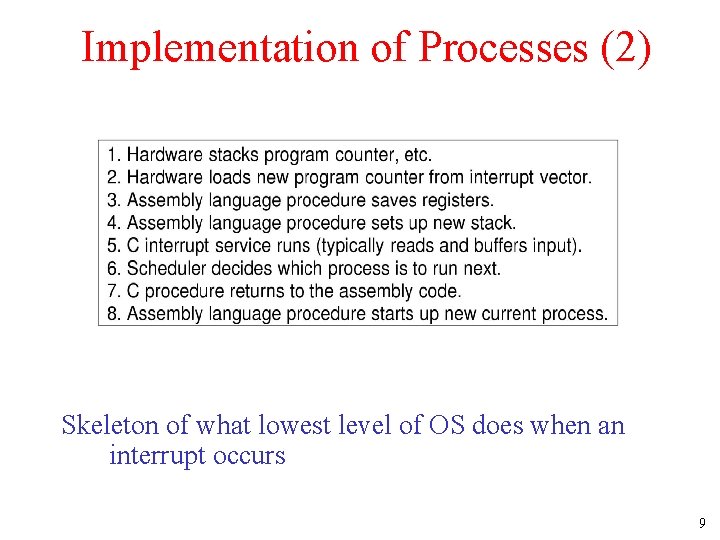

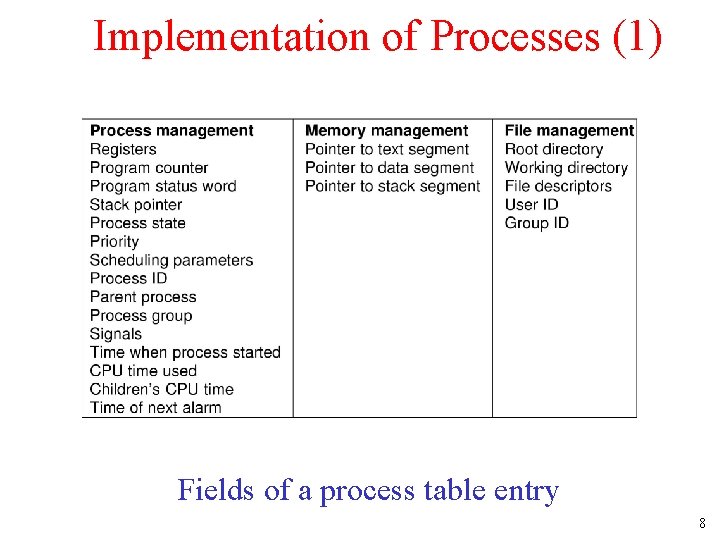

Implementation of Processes (1): Fields of a process table entry

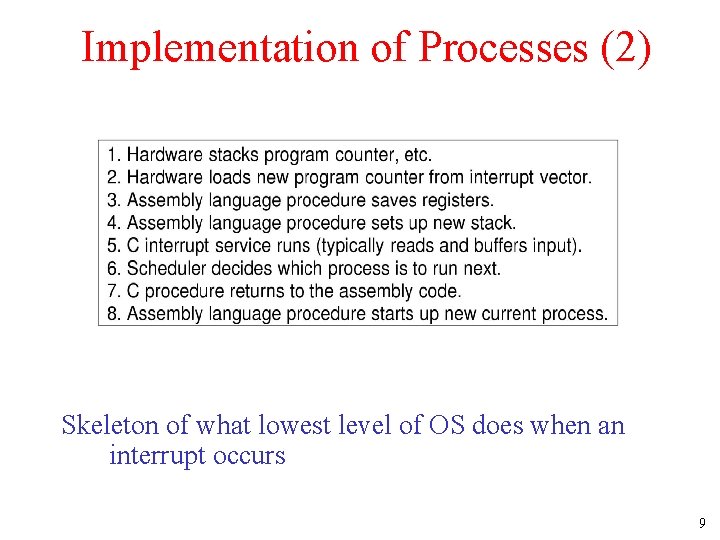

Implementation of Processes (2): Skeleton of what the lowest level of the OS does when an interrupt occurs

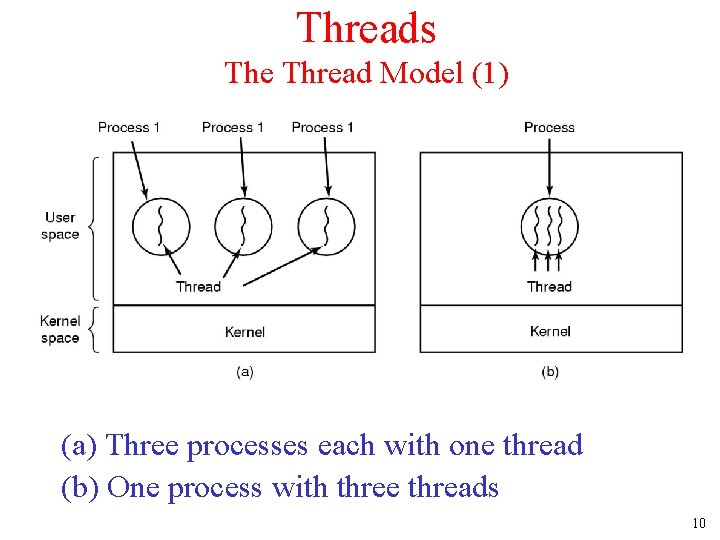

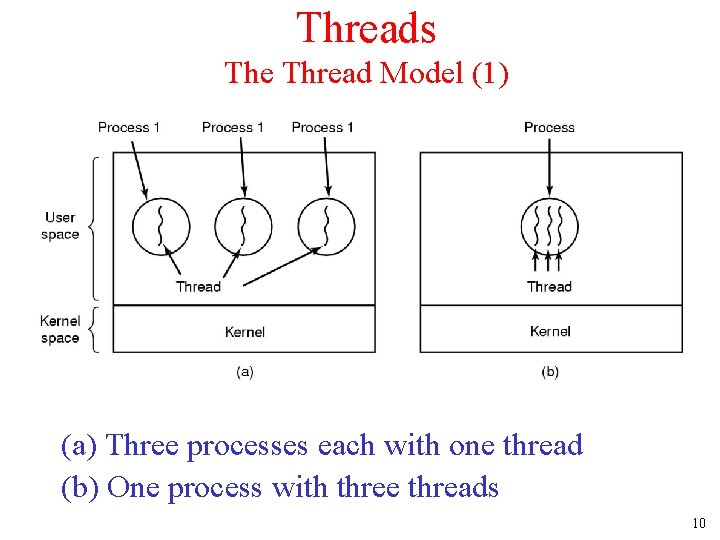

Threads: The Thread Model (1)

(a) Three processes, each with one thread. (b) One process with three threads.

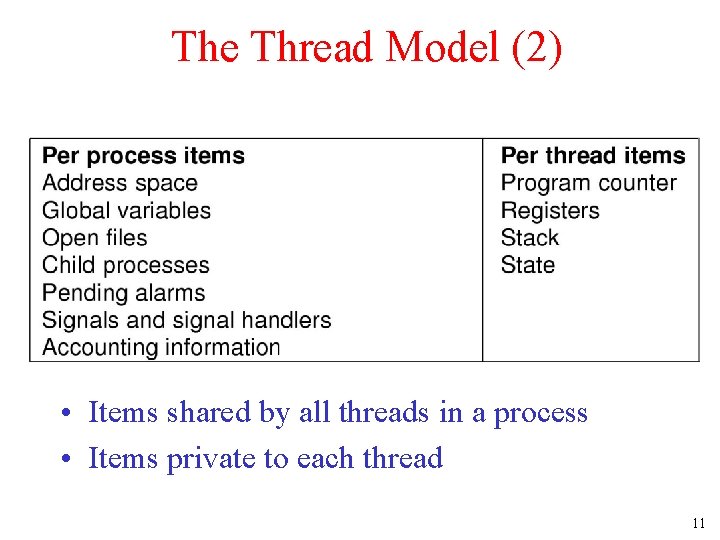

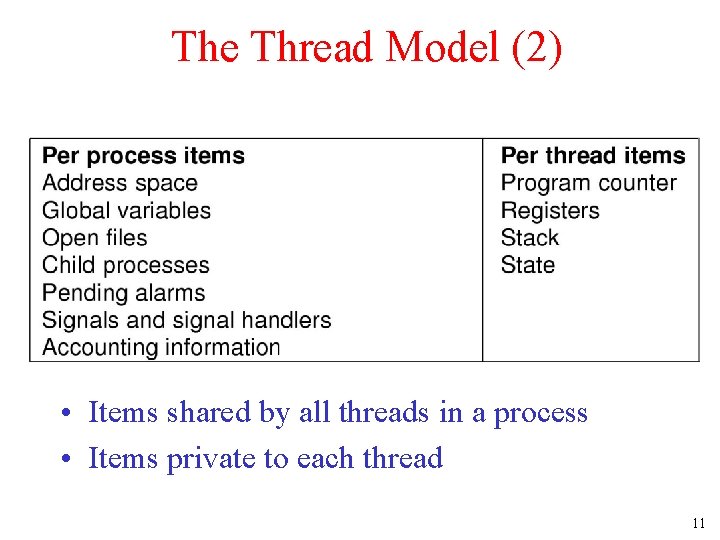

The Thread Model (2)

- Items shared by all threads in a process
- Items private to each thread

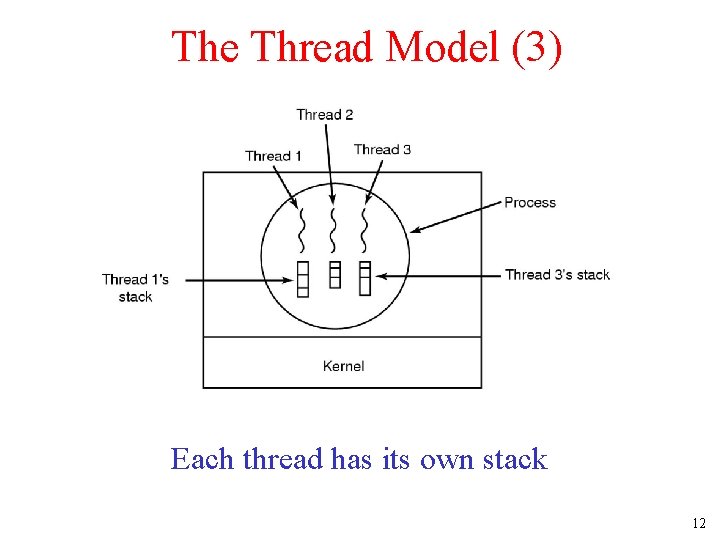

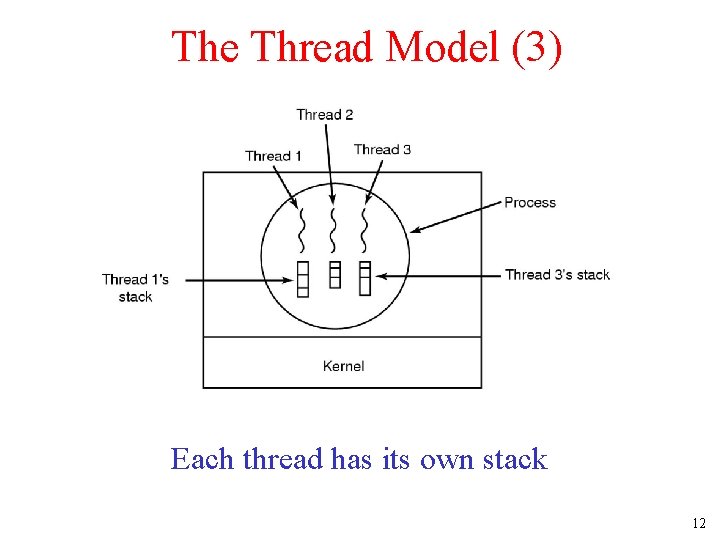

The Thread Model (3): Each thread has its own stack

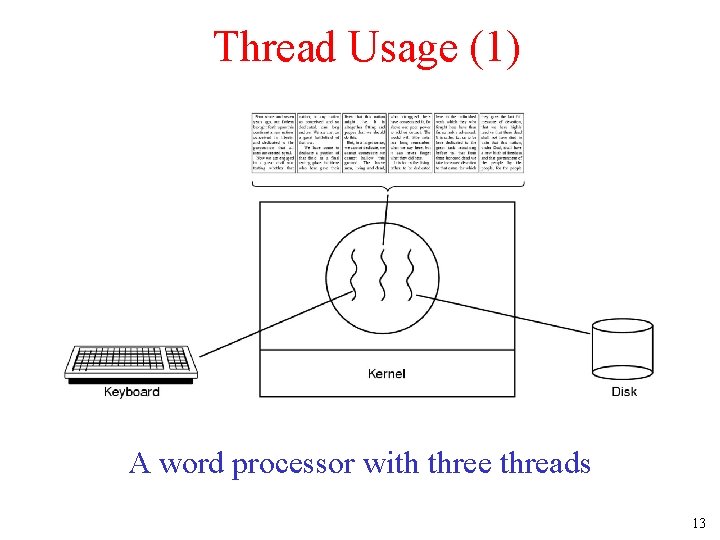

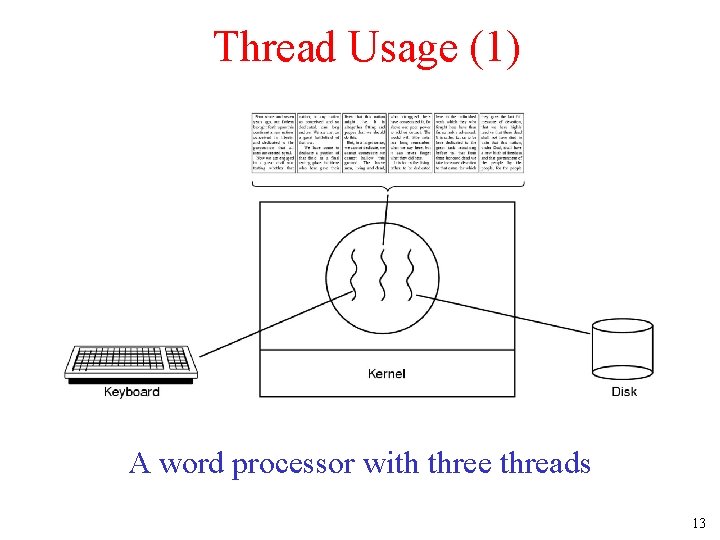

Thread Usage (1): A word processor with three threads

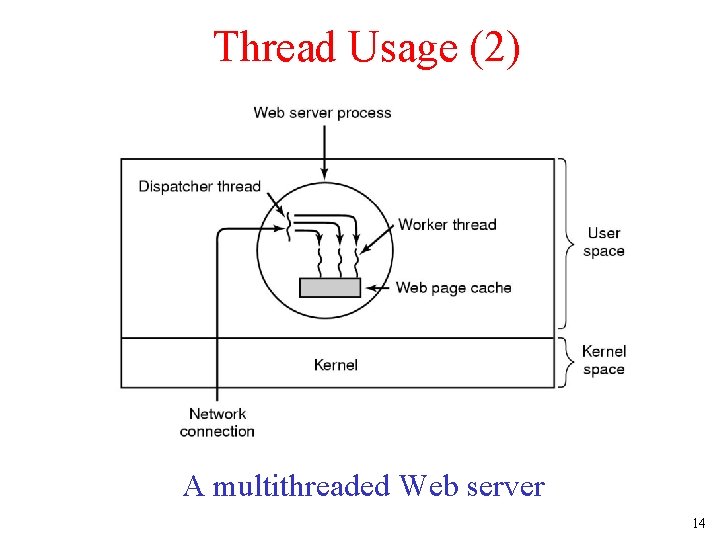

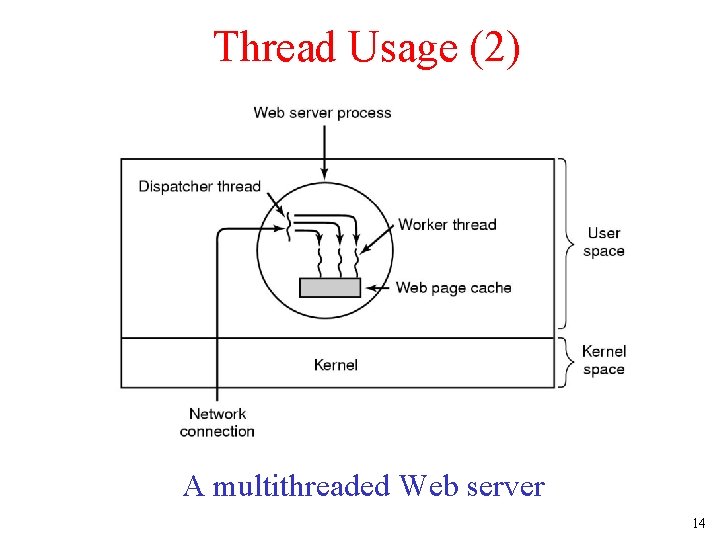

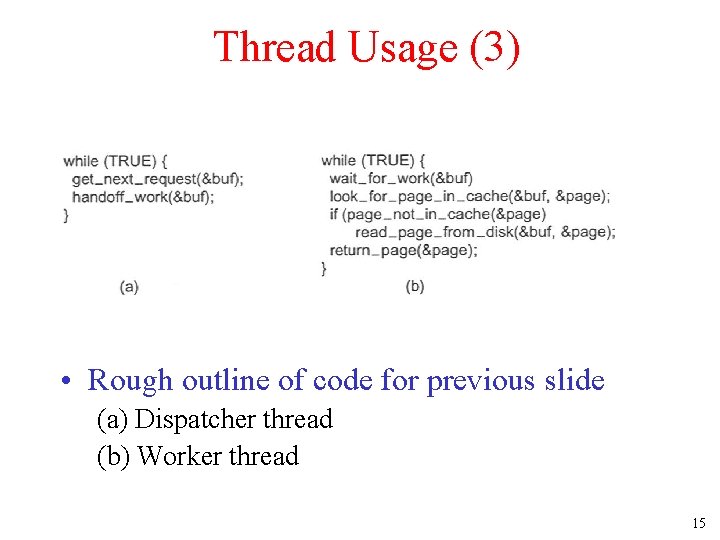

Thread Usage (2): A multithreaded Web server

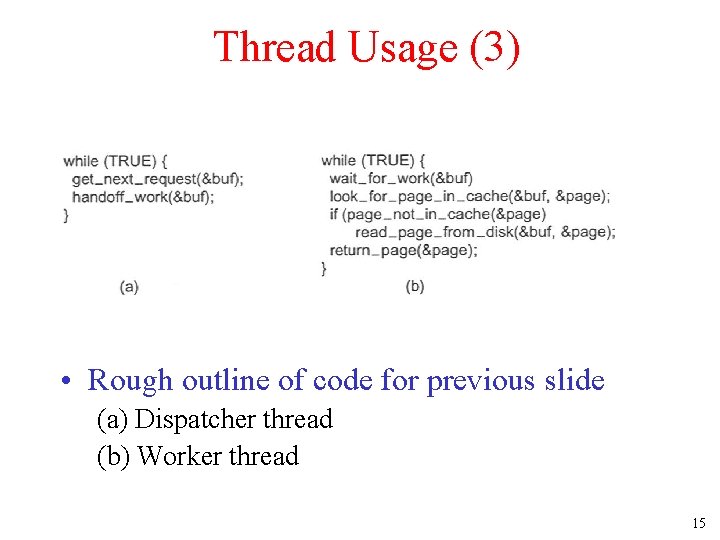

Thread Usage (3): Rough outline of code for the previous slide. (a) Dispatcher thread. (b) Worker thread.

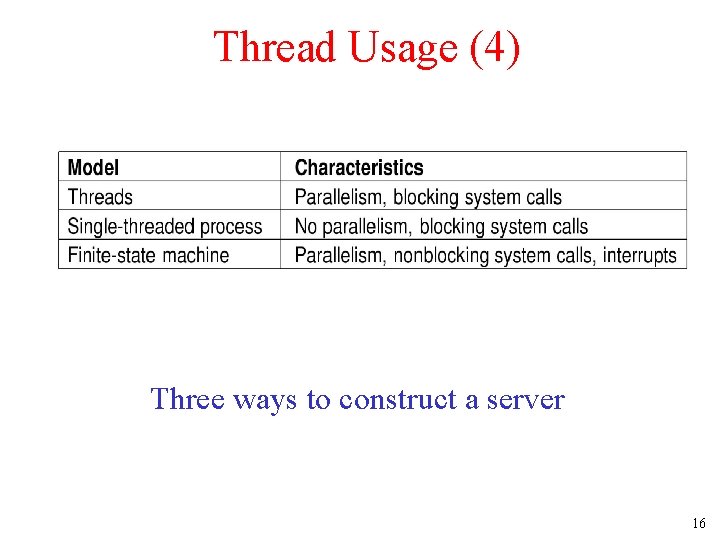

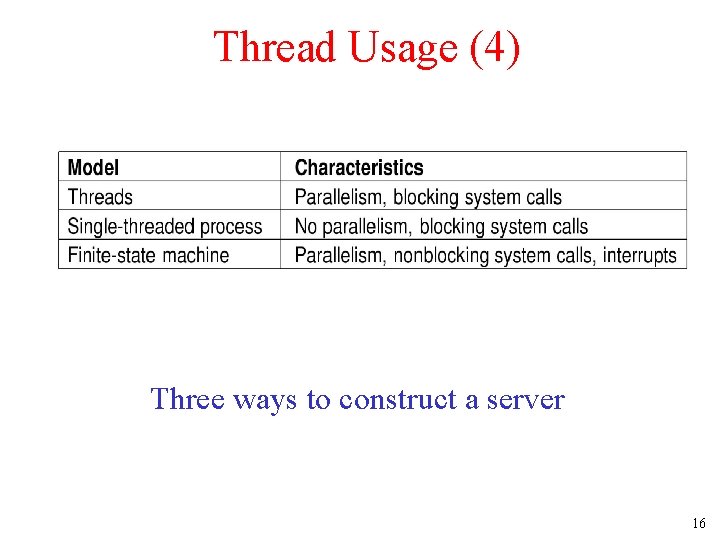

Thread Usage (4): Three ways to construct a server

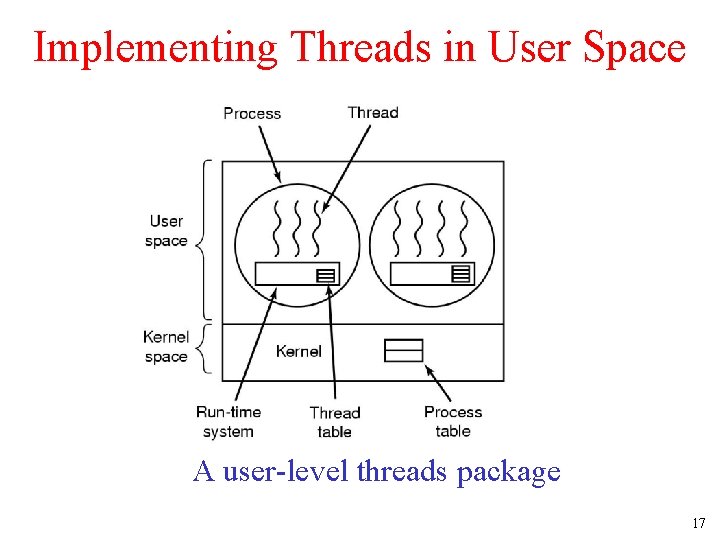

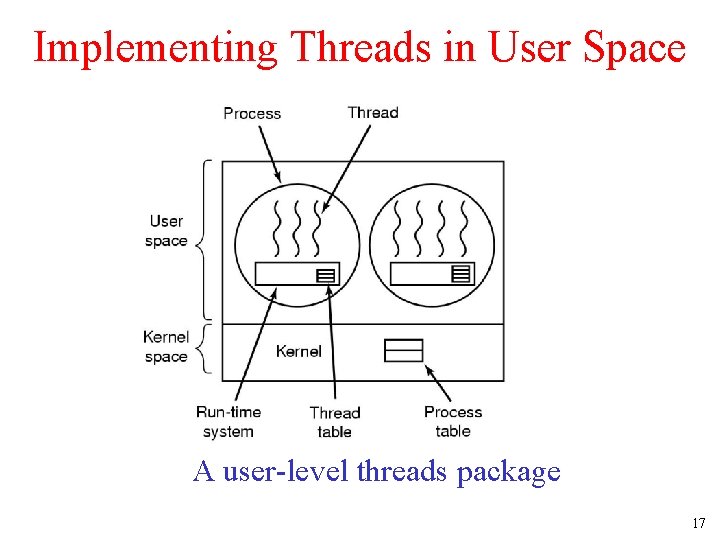

Implementing Threads in User Space: A user-level threads package

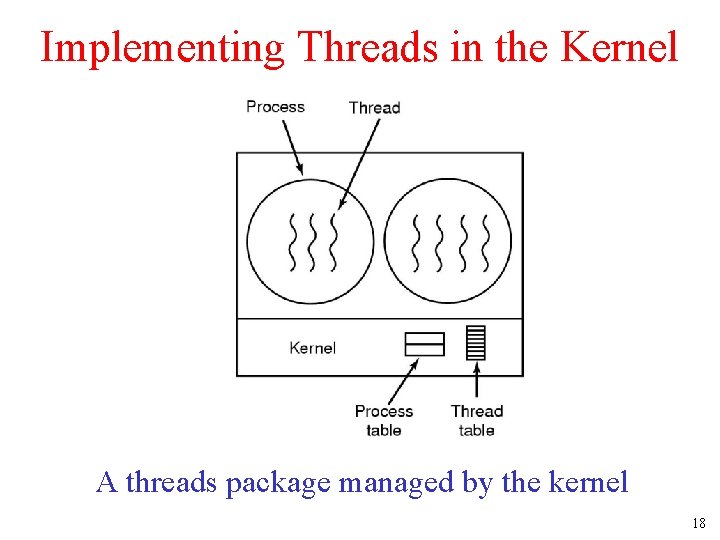

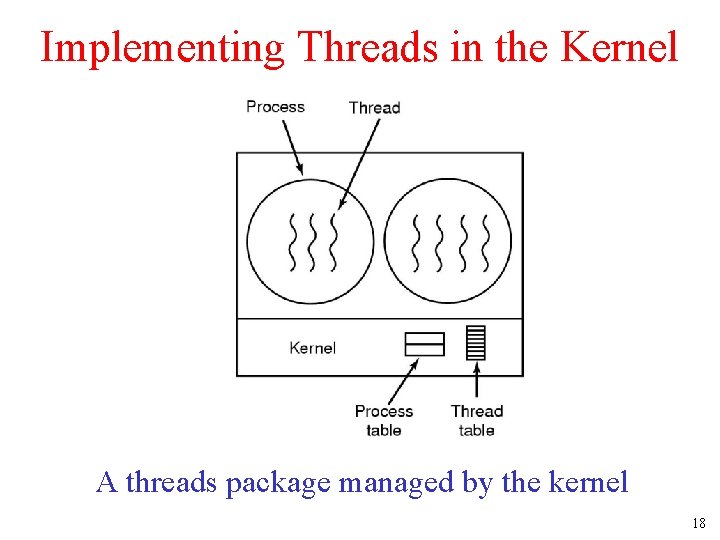

Implementing Threads in the Kernel: A threads package managed by the kernel

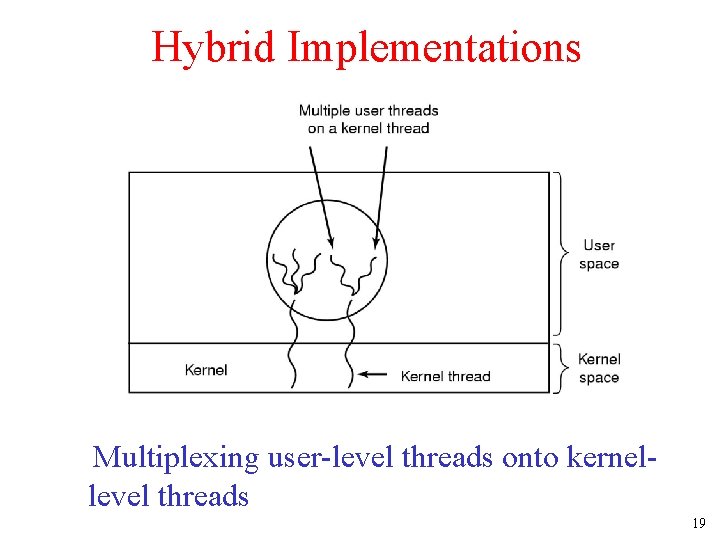

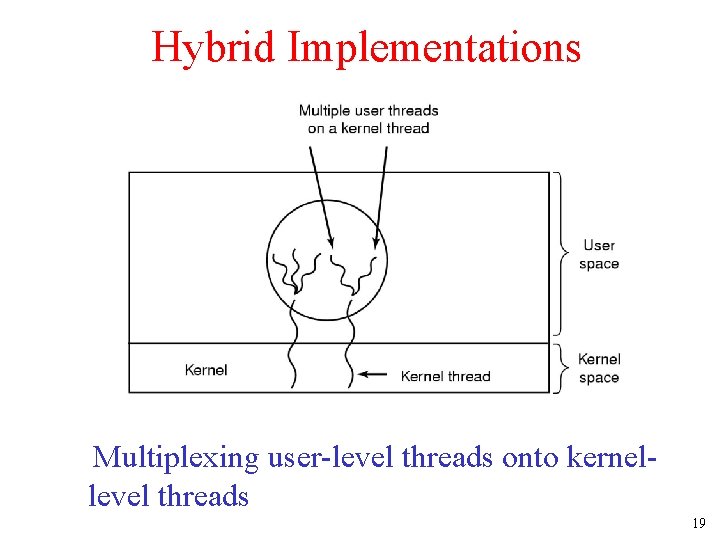

Hybrid Implementations: Multiplexing user-level threads onto kernel-level threads

Scheduler Activations

- Goal
  - mimic the functionality of kernel threads
  - gain the performance of user-space threads
- Avoids unnecessary user/kernel transitions
- Kernel assigns virtual processors to each process
  - lets the runtime system allocate threads to processors
- Problem: fundamental reliance on the kernel (lower layer) calling procedures in user space (higher layer)

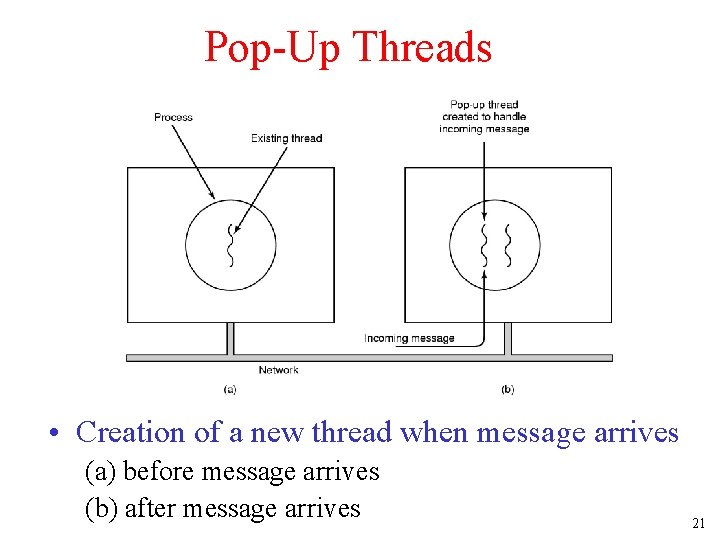

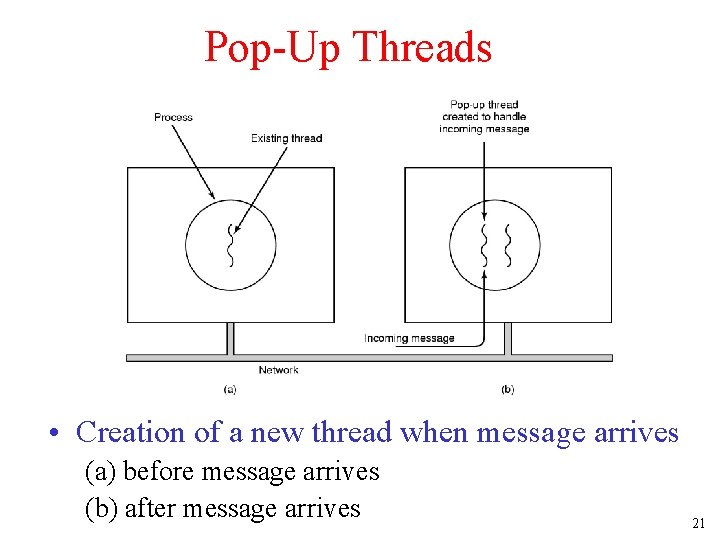

Pop-Up Threads: Creation of a new thread when a message arrives. (a) Before the message arrives. (b) After the message arrives.

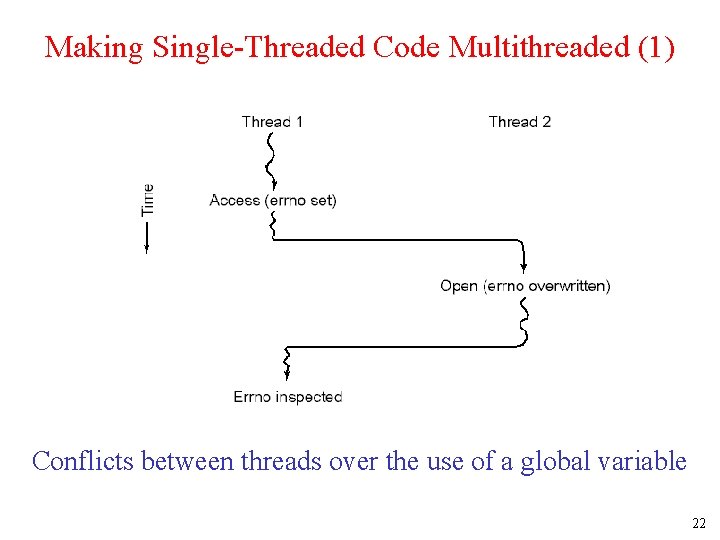

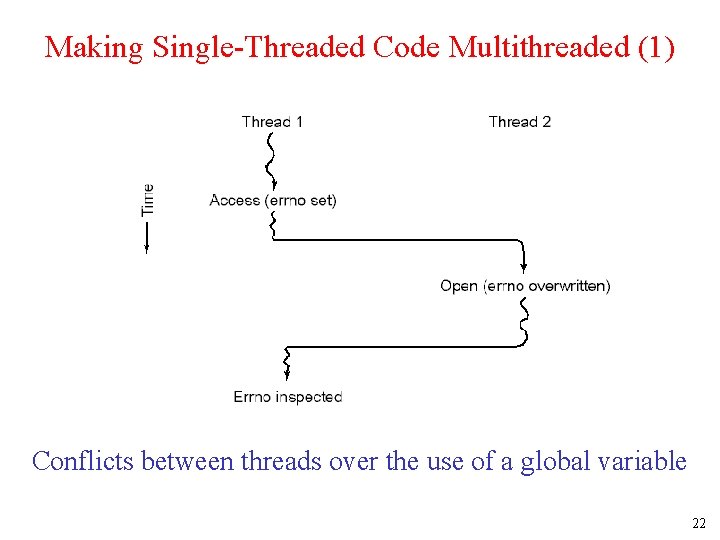

Making Single-Threaded Code Multithreaded (1): Conflicts between threads over the use of a global variable

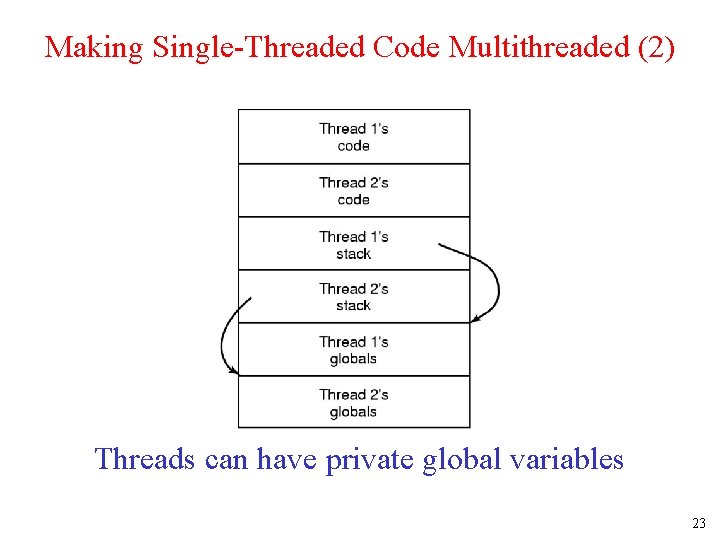

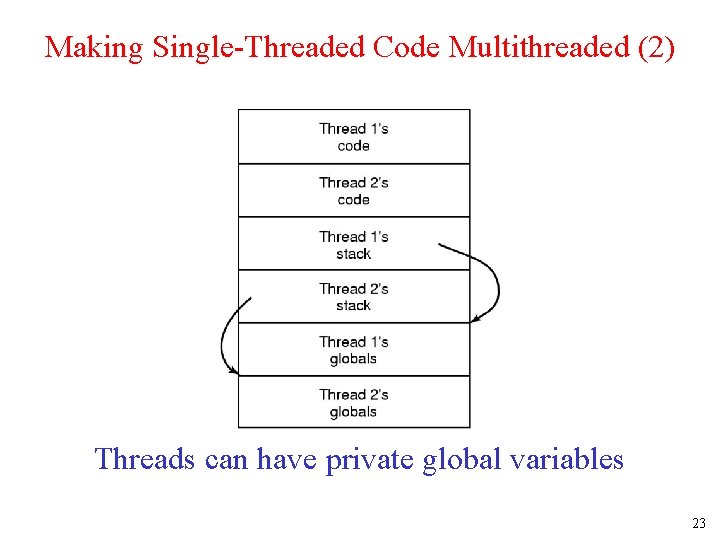

Making Single-Threaded Code Multithreaded (2): Threads can have private global variables

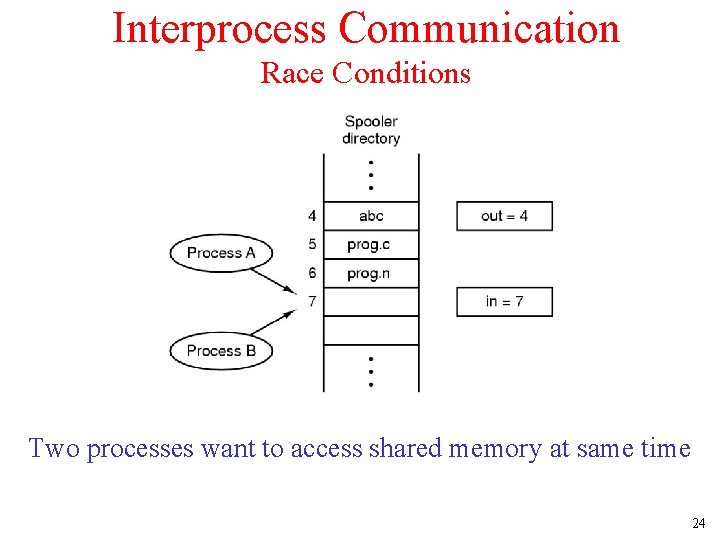

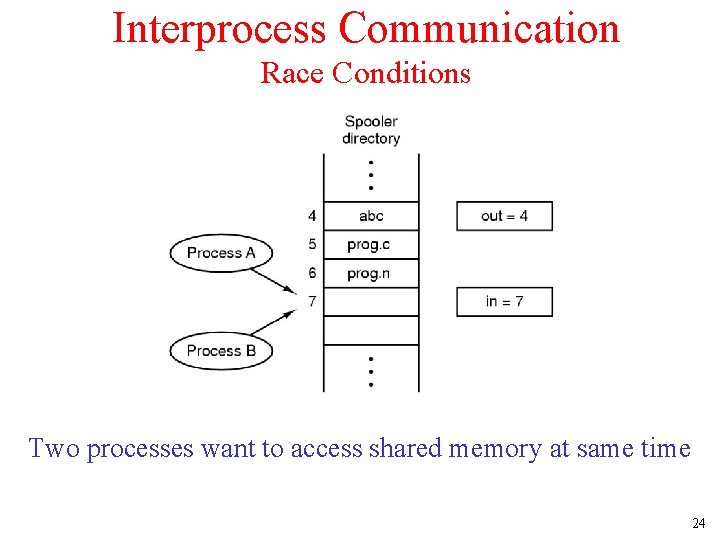

Interprocess Communication: Race Conditions

Two processes want to access shared memory at the same time.

Critical Regions (1)

Four conditions to provide mutual exclusion:

1. No two processes may be simultaneously in their critical regions
2. No assumptions may be made about speeds or numbers of CPUs
3. No process running outside its critical region may block another process
4. No process must wait forever to enter its critical region

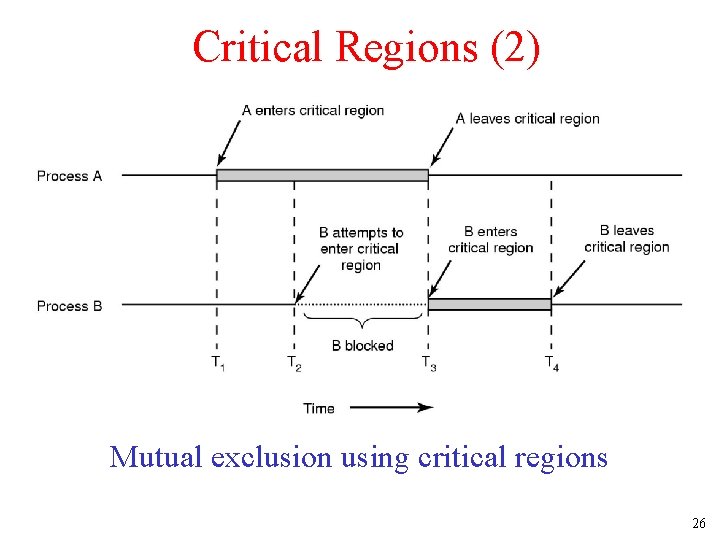

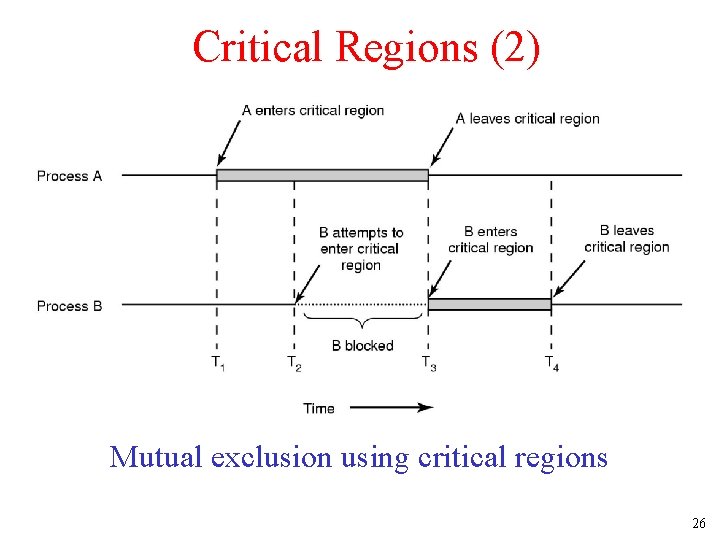

Critical Regions (2): Mutual exclusion using critical regions

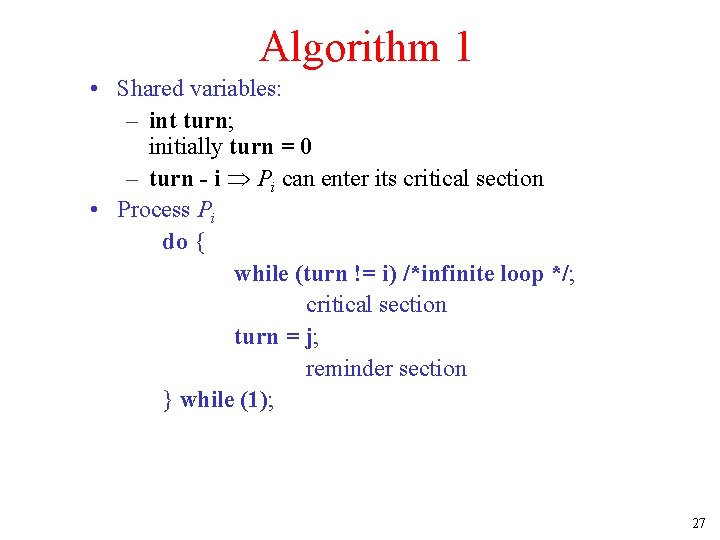

Algorithm 1

- Shared variables:
  - `int turn;` initially `turn = 0`
  - `turn == i` means Pi can enter its critical section
- Process Pi: `do { while (turn != i) /* busy wait */ ; /* critical section */ turn = j; /* remainder section */ } while (1);`

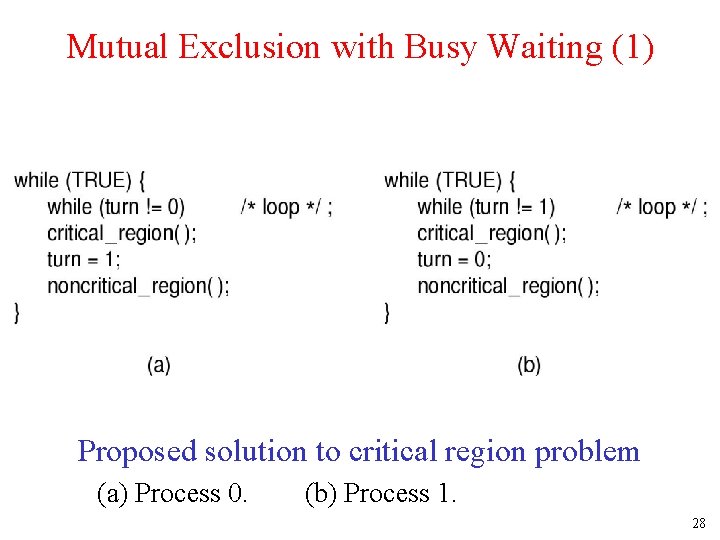

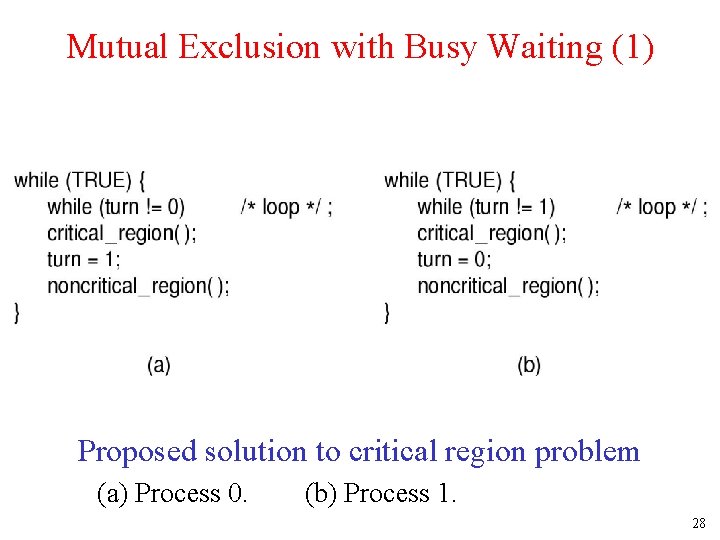

Mutual Exclusion with Busy Waiting (1): Proposed solution to the critical region problem. (a) Process 0. (b) Process 1.

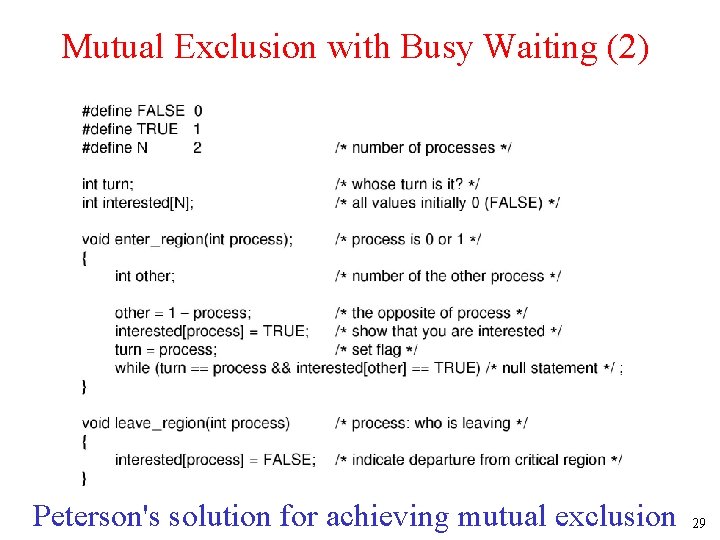

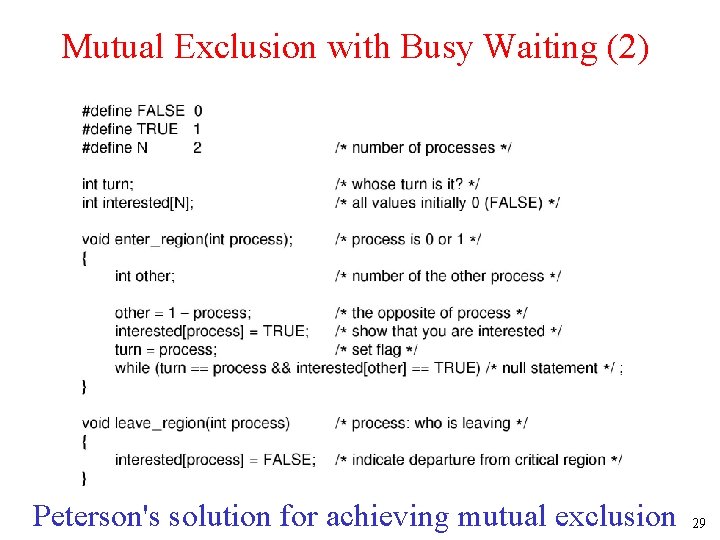

Mutual Exclusion with Busy Waiting (2): Peterson's solution for achieving mutual exclusion

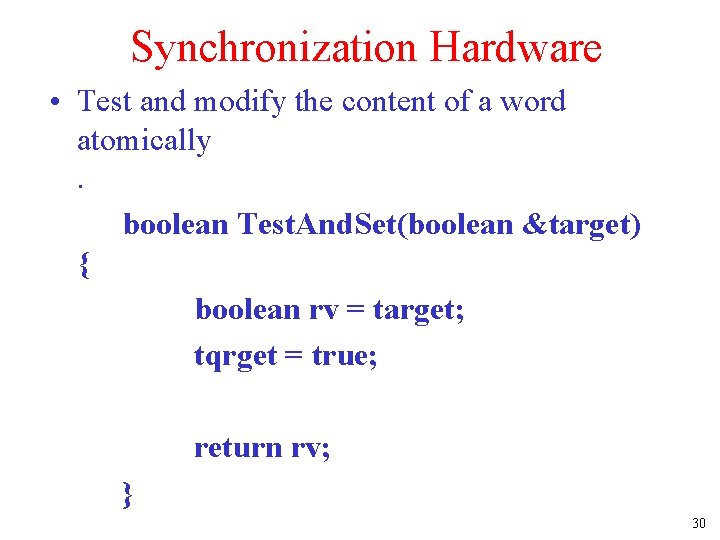

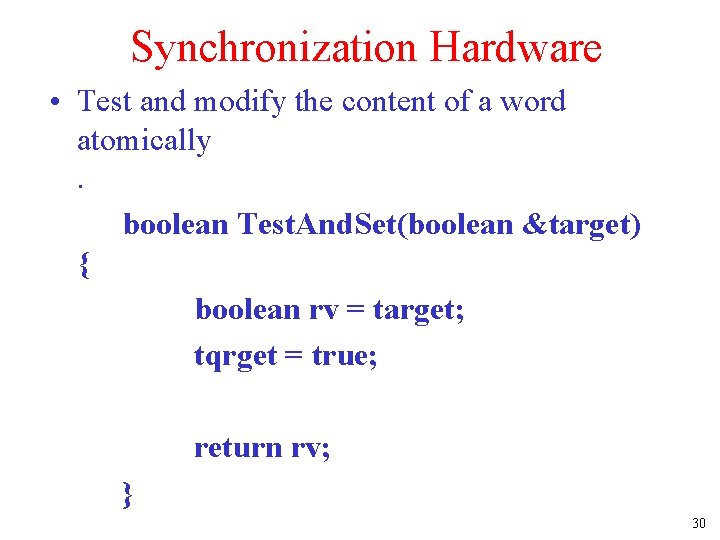

Synchronization Hardware

- Test and modify the content of a word atomically: `boolean TestAndSet(boolean &target) { boolean rv = target; target = true; return rv; }`

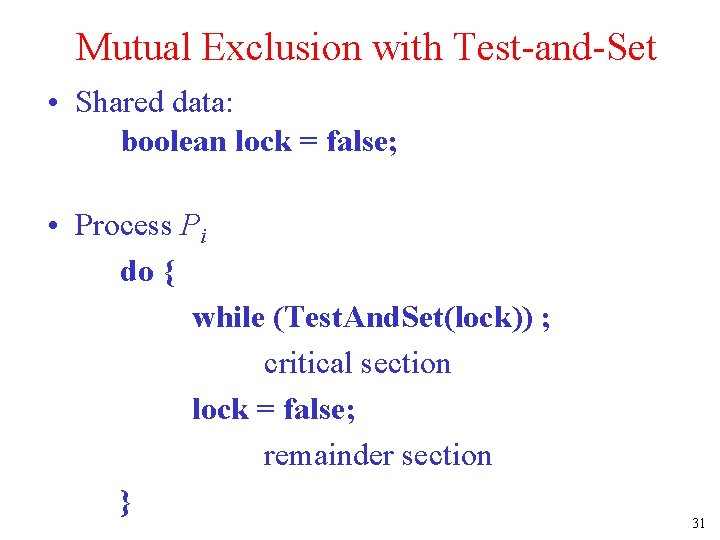

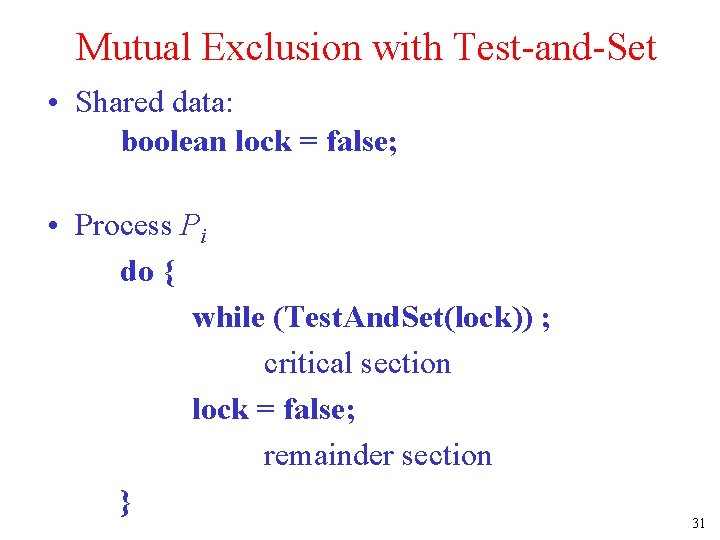

Mutual Exclusion with Test-and-Set

- Shared data: `boolean lock = false;`
- Process Pi: `do { while (TestAndSet(lock)) ; /* critical section */ lock = false; /* remainder section */ } while (1);`
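A spin lock built on test-and-set can be sketched in Python. Python has no hardware test-and-set, so the sketch emulates the instruction's atomicity with an internal `threading.Lock`; the class name `TASLock` and the helper `run_counter` are hypothetical names made up for this illustration, and the `time.sleep(0)` in the spin loop is only there to yield the interpreter to other threads.

```python
import threading
import time


class TASLock:
    """Spin lock in the style of the TestAndSet loop (emulated, not real hardware)."""

    def __init__(self):
        self._flag = False                 # the shared boolean 'lock'
        self._atomic = threading.Lock()    # stands in for hardware atomicity

    def _test_and_set(self):
        # Atomically return the old value and set the flag to True.
        with self._atomic:
            old = self._flag
            self._flag = True
            return old

    def acquire(self):
        while self._test_and_set():        # busy wait until the old value was False
            time.sleep(0)                  # yield; a real TSL loop would just spin

    def release(self):
        self._flag = False                 # lock = false


def run_counter(n_threads=4, n_iters=500):
    """Increment a shared counter under the spin lock and return the final value."""
    lock = TASLock()
    total = 0

    def worker():
        nonlocal total
        for _ in range(n_iters):
            lock.acquire()
            total += 1                     # critical section
            lock.release()

    threads = [threading.Thread(target=worker) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return total
```

If mutual exclusion holds, `run_counter(4, 500)` returns exactly `4 * 500`, because no increment is lost.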

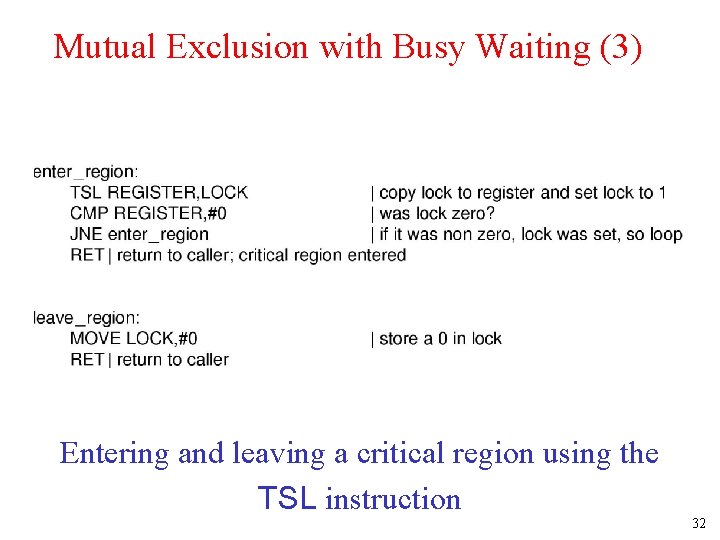

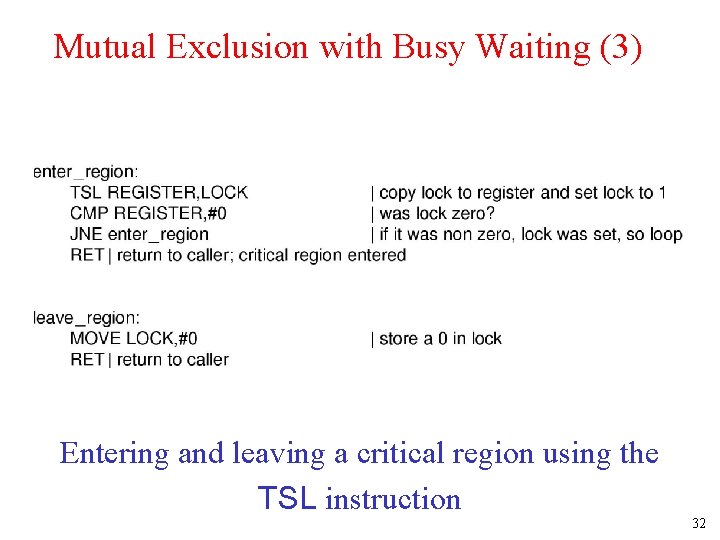

Mutual Exclusion with Busy Waiting (3): Entering and leaving a critical region using the TSL instruction

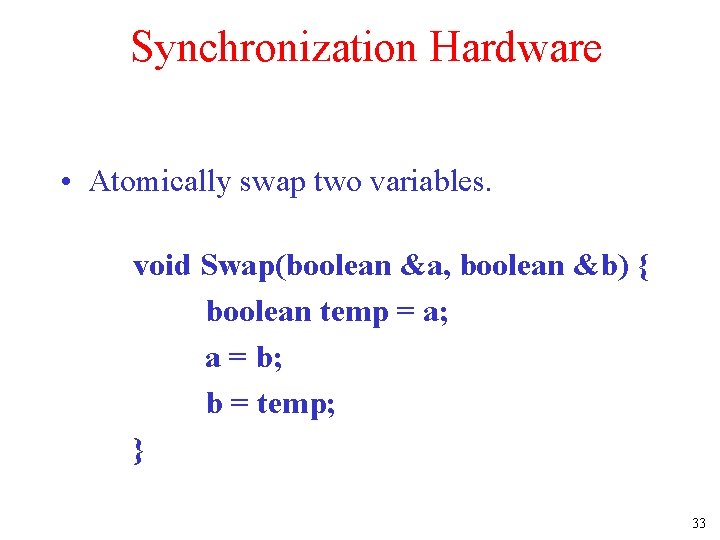

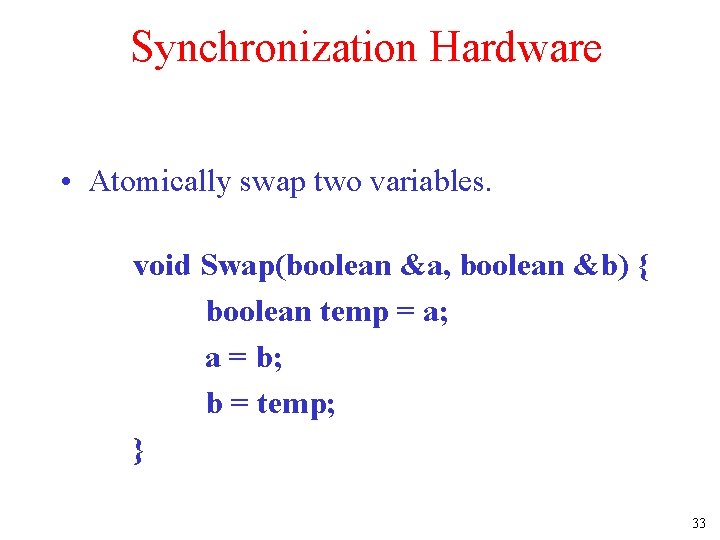

Synchronization Hardware

- Atomically swap two variables: `void Swap(boolean &a, boolean &b) { boolean temp = a; a = b; b = temp; }`

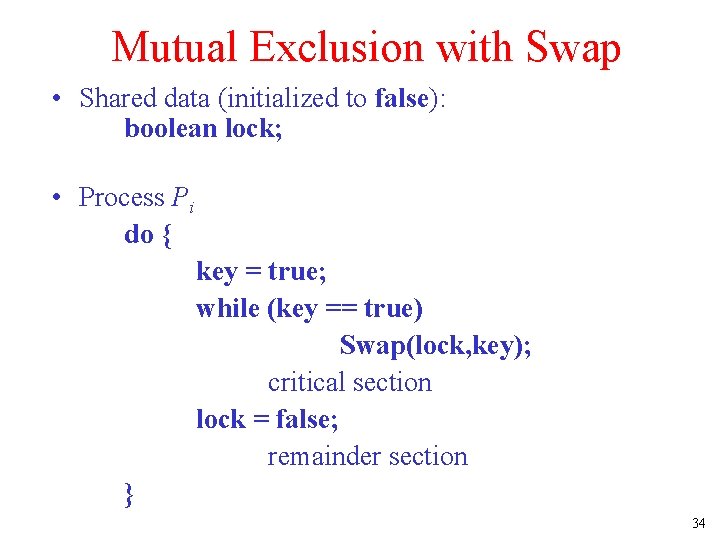

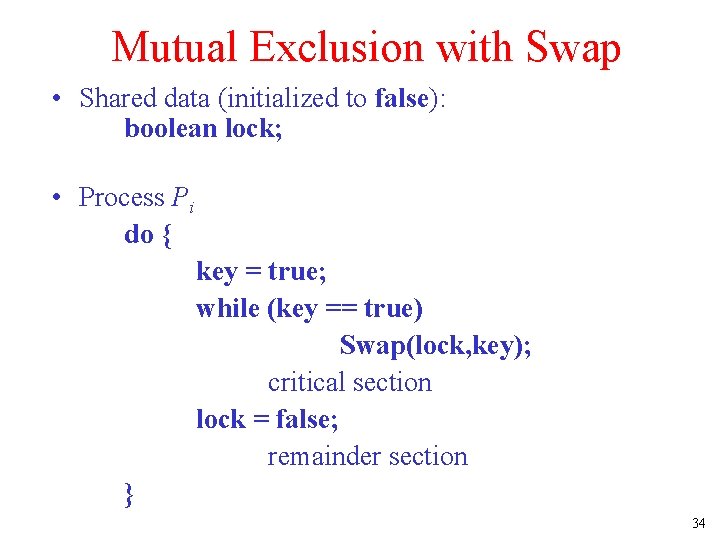

Mutual Exclusion with Swap

- Shared data (initialized to false): `boolean lock;`
- Process Pi: `do { key = true; while (key == true) Swap(lock, key); /* critical section */ lock = false; /* remainder section */ } while (1);`

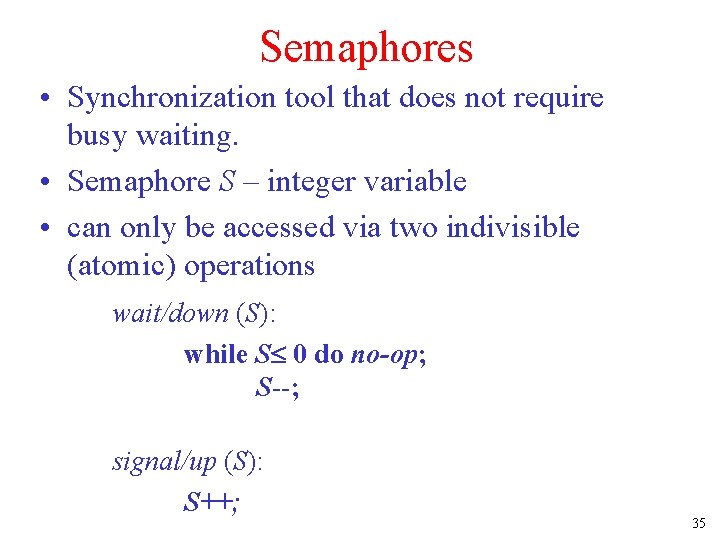

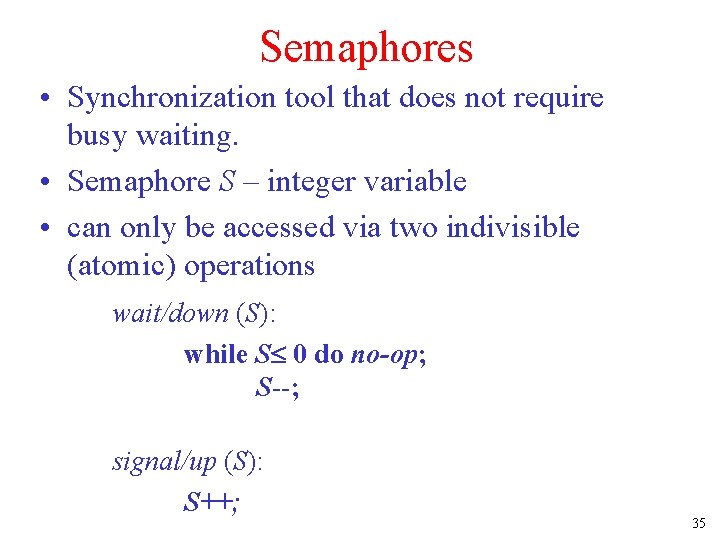

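Semaphores

- Synchronization tool that does not require busy waiting.
- Semaphore S: an integer variable
- It can only be accessed via two indivisible (atomic) operations:
  - wait/down(S): `while (S <= 0) /* no-op */ ; S--;`
  - signal/up(S): `S++;`
- This classical definition of wait still spins; the queue-based implementation on the following slides removes the busy waiting.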

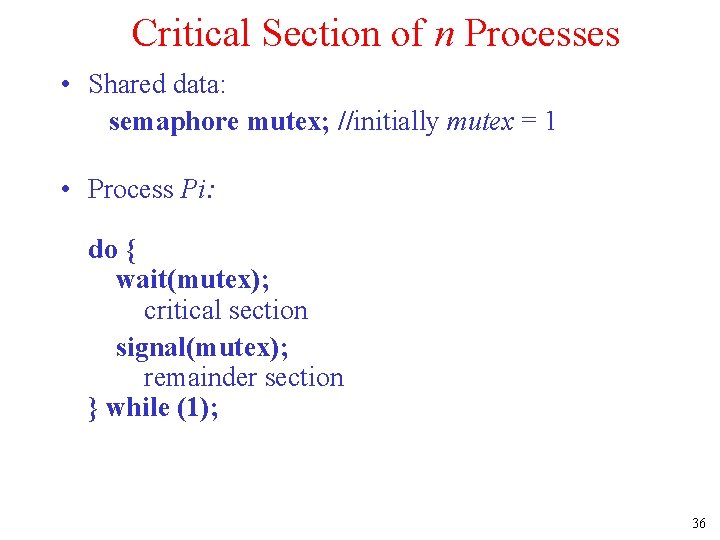

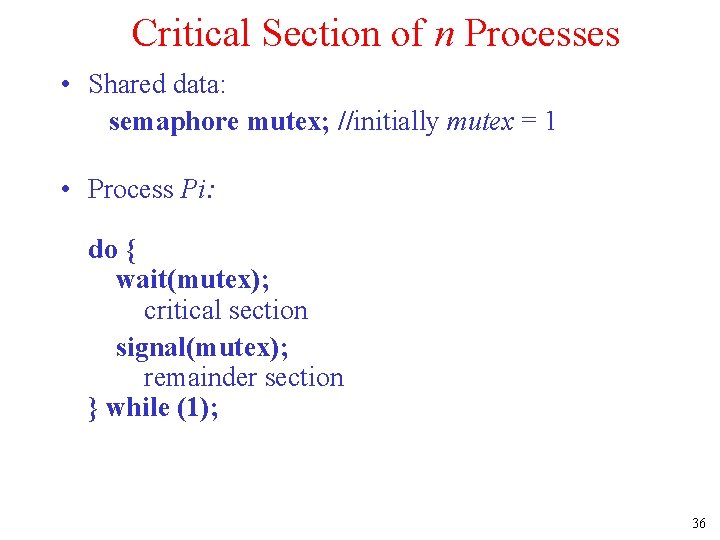

Critical Section of n Processes

- Shared data: `semaphore mutex; // initially mutex = 1`
- Process Pi: `do { wait(mutex); /* critical section */ signal(mutex); /* remainder section */ } while (1);`

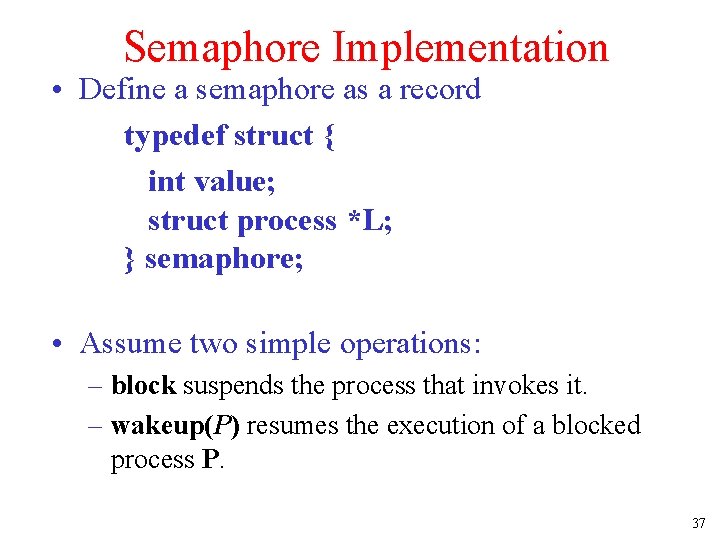

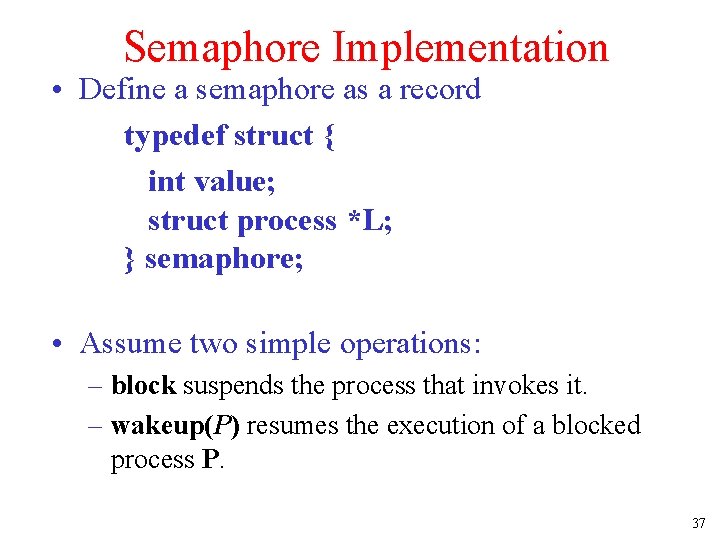

Semaphore Implementation

- Define a semaphore as a record: `typedef struct { int value; struct process *L; } semaphore;`
- Assume two simple operations:
  - `block` suspends the process that invokes it.
  - `wakeup(P)` resumes the execution of a blocked process P.

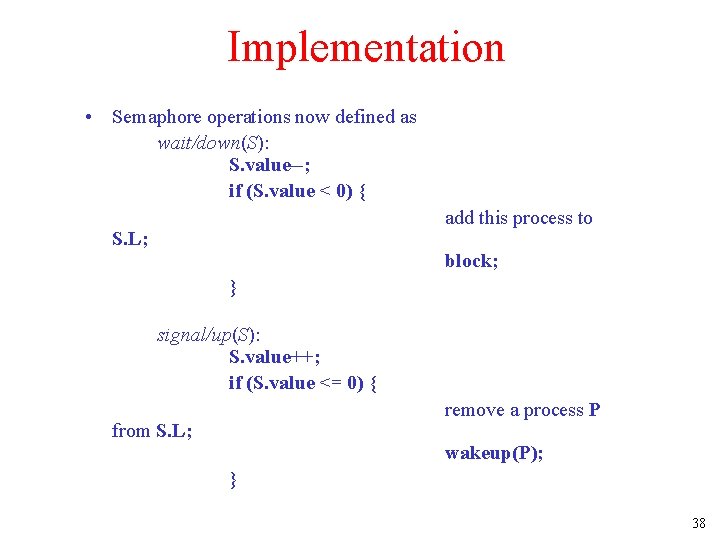

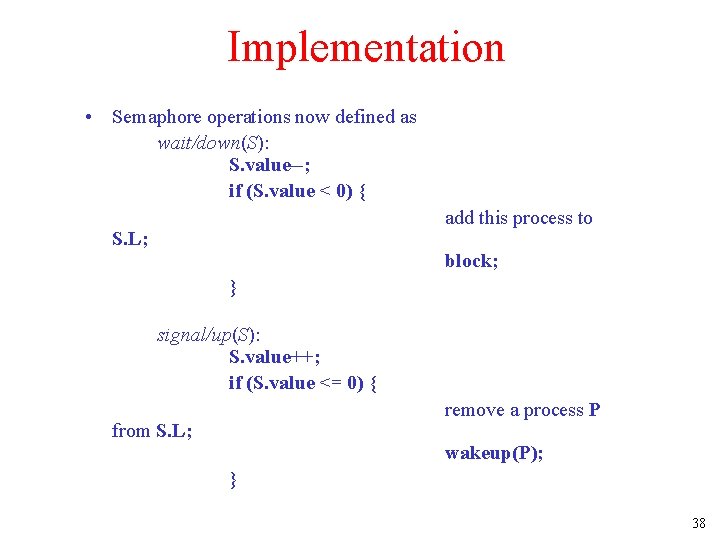

Implementation

- Semaphore operations are now defined as:
  - wait/down(S): `S.value--; if (S.value < 0) { add this process to S.L; block; }`
  - signal/up(S): `S.value++; if (S.value <= 0) { remove a process P from S.L; wakeup(P); }`
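The bookkeeping of the queue-based definition can be traced with a small single-threaded simulation. This is a sketch under assumptions: `SimSemaphore` is a hypothetical name, processes are represented only by string IDs, and blocked processes are woken in FIFO order (the slide only says "remove a process P", so the order is a choice made here).

```python
from collections import deque


class SimSemaphore:
    """Simulates S.value and the waiting list S.L without real blocking."""

    def __init__(self, value):
        self.value = value
        self.waiting = deque()      # the list L of blocked process IDs

    def wait(self, pid):
        """down(S): return True if pid proceeds, False if it is blocked."""
        self.value -= 1
        if self.value < 0:
            self.waiting.append(pid)    # add this process to S.L; block
            return False
        return True

    def signal(self):
        """up(S): return the pid that is woken up, or None if nobody waited."""
        self.value += 1
        if self.value <= 0:
            return self.waiting.popleft()   # remove a process P from S.L; wakeup(P)
        return None
```

A negative `value` has a direct reading in this scheme: its magnitude is the number of processes currently blocked on the semaphore.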

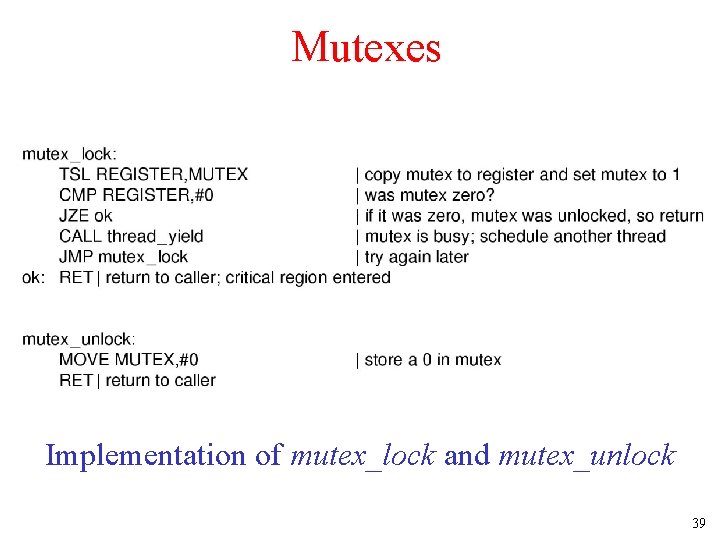

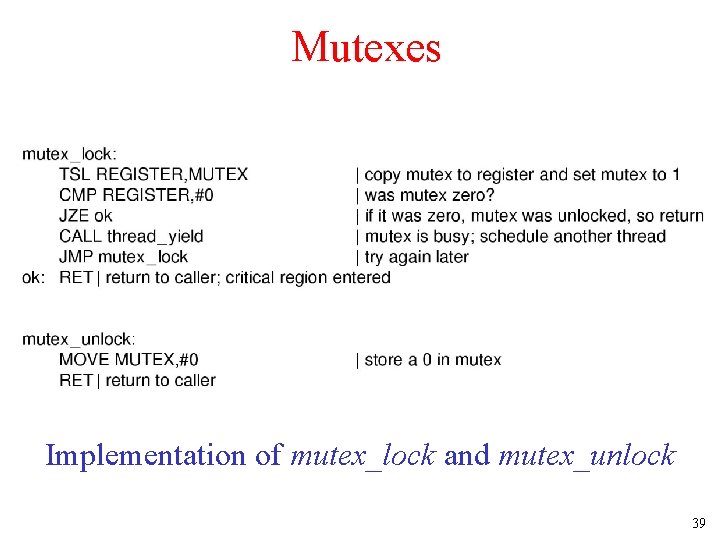

Mutexes: Implementation of mutex_lock and mutex_unlock

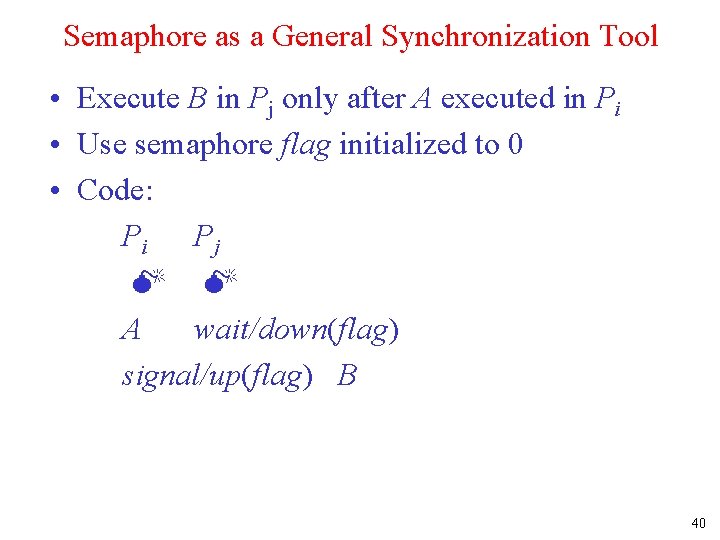

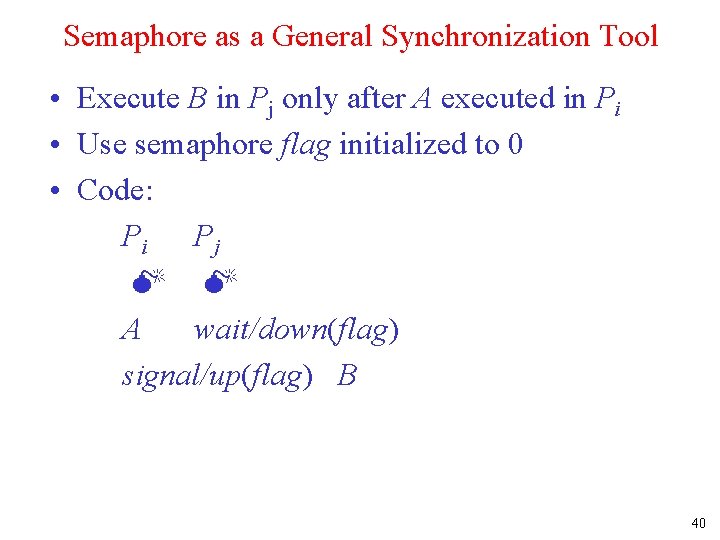

Semaphore as a General Synchronization Tool

- Execute B in Pj only after A has executed in Pi
- Use a semaphore flag initialized to 0
- Code:
  - Pi: `A; signal/up(flag);`
  - Pj: `wait/down(flag); B;`
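The ordering idiom maps directly onto Python's `threading.Semaphore`, where `release()` plays the role of signal/up and `acquire()` the role of wait/down. The function name `run_ordered` and the use of a list as a log are assumptions for this sketch.

```python
import threading


def run_ordered():
    """Run Pi and Pj concurrently and return the order in which A and B executed."""
    flag = threading.Semaphore(0)   # semaphore flag initialized to 0
    log = []

    def p_i():
        log.append("A")             # statement A
        flag.release()              # signal/up(flag)

    def p_j():
        flag.acquire()              # wait/down(flag): blocks until A is done
        log.append("B")             # statement B

    # Start Pj first on purpose: it must still wait for Pi.
    tj = threading.Thread(target=p_j)
    ti = threading.Thread(target=p_i)
    tj.start()
    ti.start()
    tj.join()
    ti.join()
    return log
```

Regardless of which thread the scheduler runs first, the log is always `["A", "B"]`.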

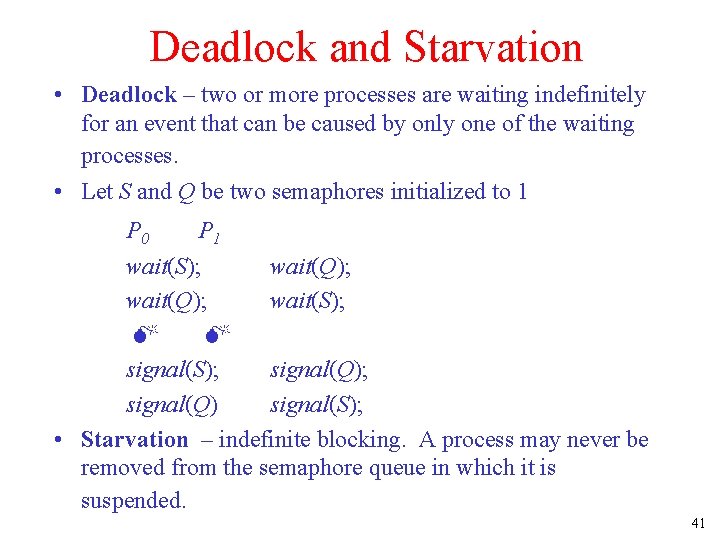

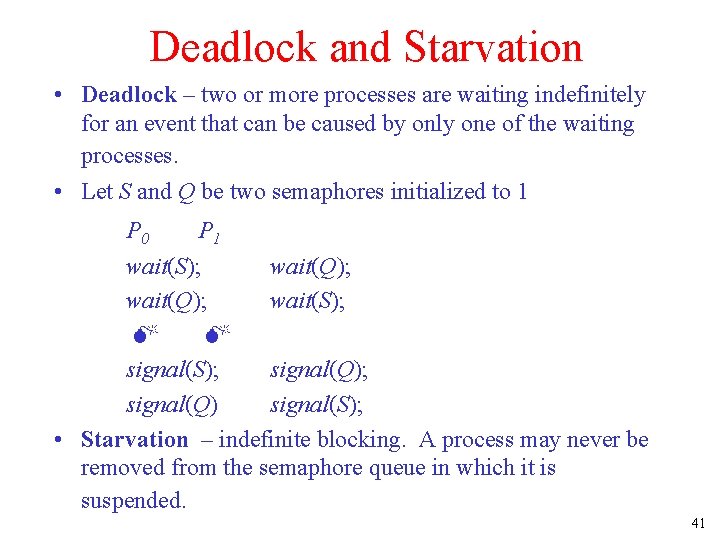

Deadlock and Starvation

- Deadlock: two or more processes are waiting indefinitely for an event that can be caused by only one of the waiting processes.
- Let S and Q be two semaphores initialized to 1:
  - P0: `wait(S); wait(Q); ... signal(S); signal(Q);`
  - P1: `wait(Q); wait(S); ... signal(Q); signal(S);`
- Starvation: indefinite blocking. A process may never be removed from the semaphore queue in which it is suspended.

Two Types of Semaphores

- Counting semaphore: the integer value can range over an unrestricted domain.
- Binary semaphore: the integer value can range only between 0 and 1; can be simpler to implement.
- A counting semaphore S can be implemented using binary semaphores.

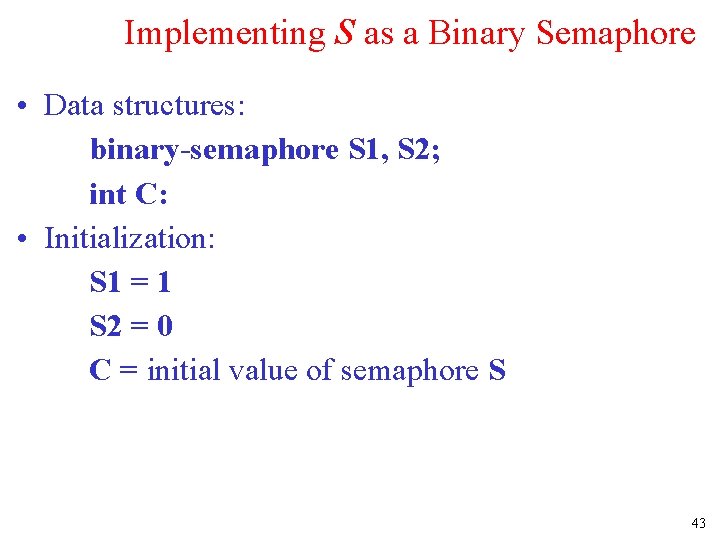

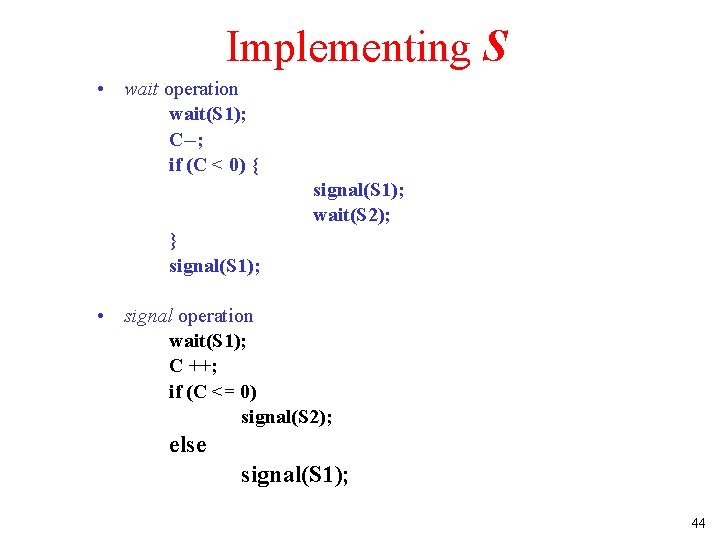

Implementing S as a Binary Semaphore

- Data structures: `binary-semaphore S1, S2; int C;`
- Initialization: `S1 = 1`, `S2 = 0`, `C` = initial value of semaphore S

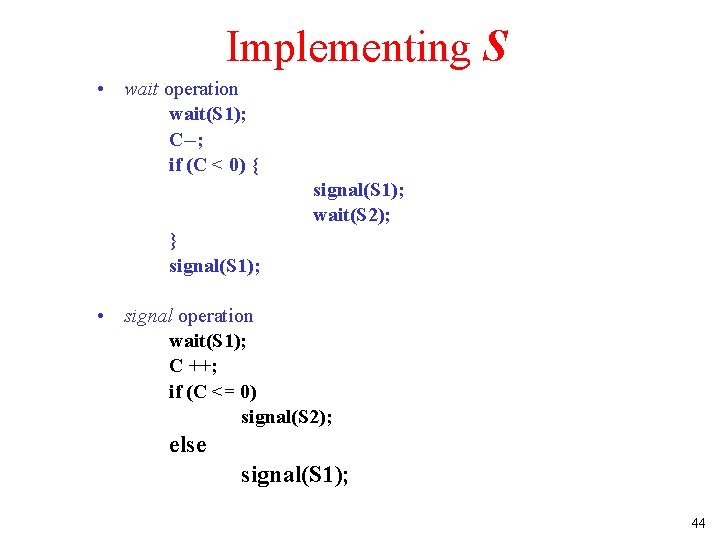

Implementing S

- wait operation: `wait(S1); C--; if (C < 0) { signal(S1); wait(S2); } signal(S1);`
- signal operation: `wait(S1); C++; if (C <= 0) signal(S2); else signal(S1);`
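The construction above can be tried out with Python threads. In this sketch `threading.Semaphore` objects stand in for the binary semaphores S1 and S2 (they are only ever used with values 0 and 1 here); the class name `CountingFromBinary` and the helper `max_concurrency` are hypothetical names for this illustration.

```python
import threading
import time


class CountingFromBinary:
    """Counting semaphore built from two binary semaphores, following the slide."""

    def __init__(self, initial):
        self.s1 = threading.Semaphore(1)   # S1 = 1: protects c
        self.s2 = threading.Semaphore(0)   # S2 = 0: waiters block here
        self.c = initial                   # C = initial value of S

    def wait(self):
        self.s1.acquire()
        self.c -= 1
        if self.c < 0:
            self.s1.release()
            self.s2.acquire()              # block until a signal hands over S1
        self.s1.release()

    def signal(self):
        self.s1.acquire()
        self.c += 1
        if self.c <= 0:
            self.s2.release()              # wake a waiter; it releases S1 for us
        else:
            self.s1.release()


def max_concurrency(limit=2, n_threads=6):
    """Return (peak number of threads inside the guarded region, final C)."""
    sem = CountingFromBinary(limit)
    guard = threading.Lock()
    inside = 0
    peak = 0

    def worker():
        nonlocal inside, peak
        sem.wait()
        with guard:
            inside += 1
            peak = max(peak, inside)
        time.sleep(0.01)                   # hold the resource briefly
        with guard:
            inside -= 1
        sem.signal()

    threads = [threading.Thread(target=worker) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return peak, sem.c
```

The subtle point is that the signaller does not release S1 when it wakes a waiter; the woken waiter's final `signal(S1)` does that, which keeps `C` consistent across the hand-off.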

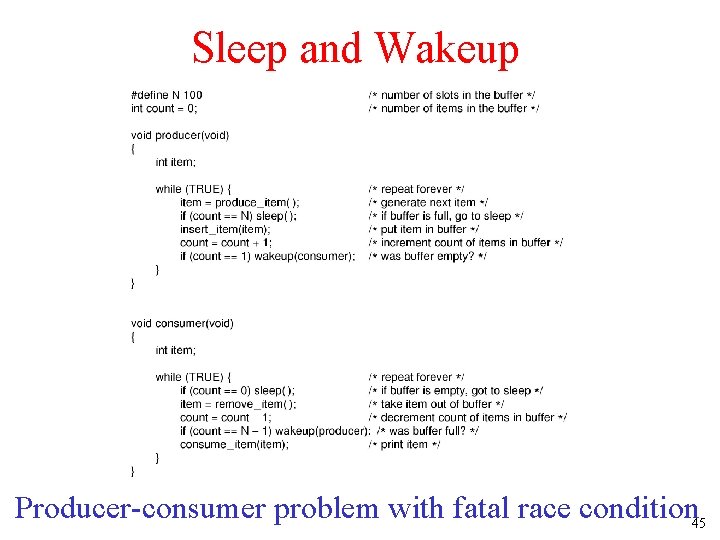

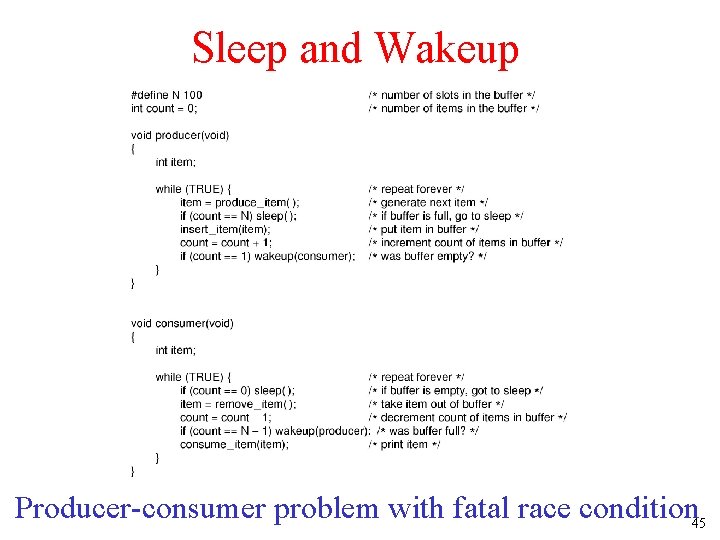

Sleep and Wakeup: Producer-consumer problem with a fatal race condition

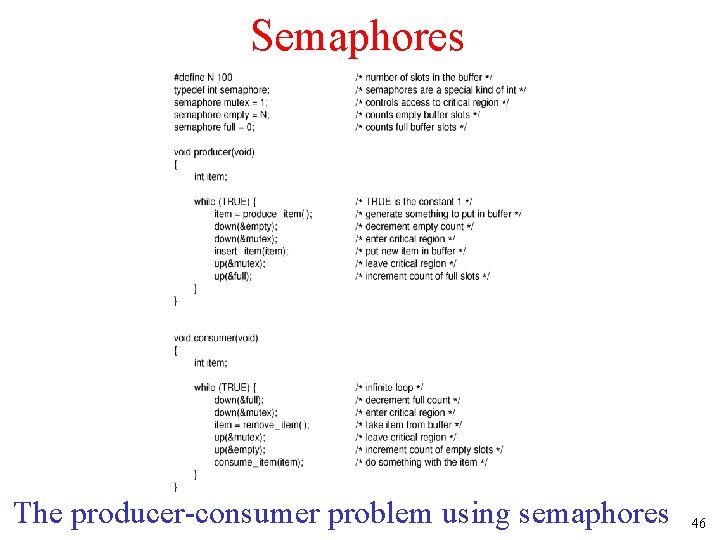

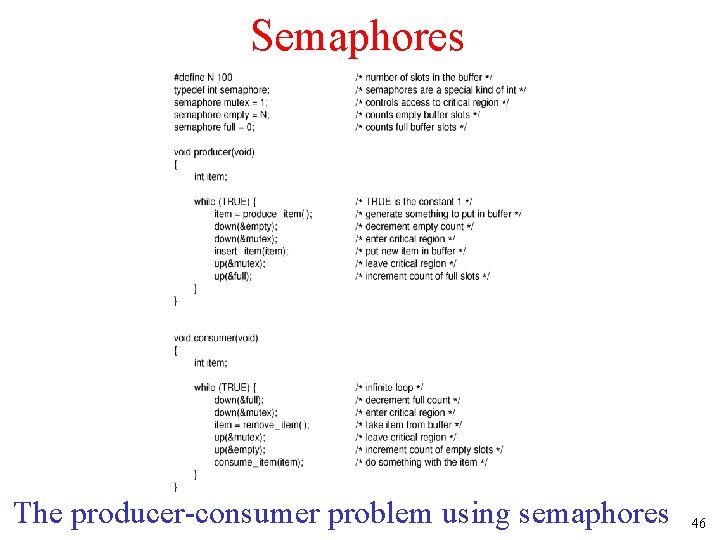

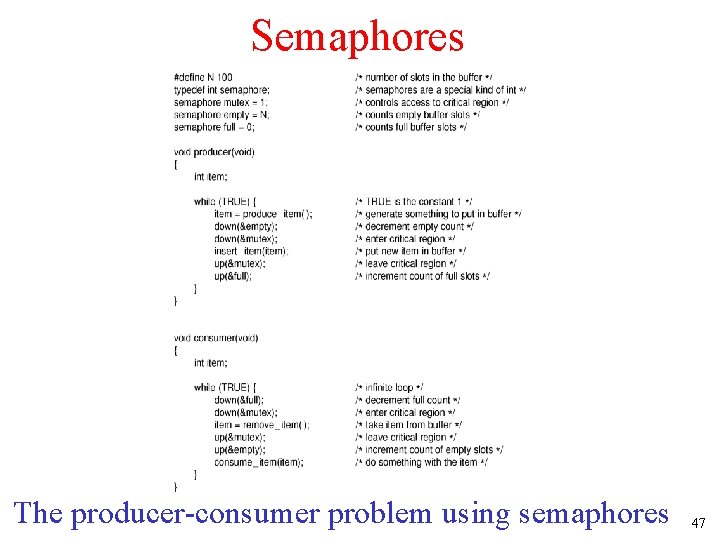

Semaphores: The producer-consumer problem using semaphores

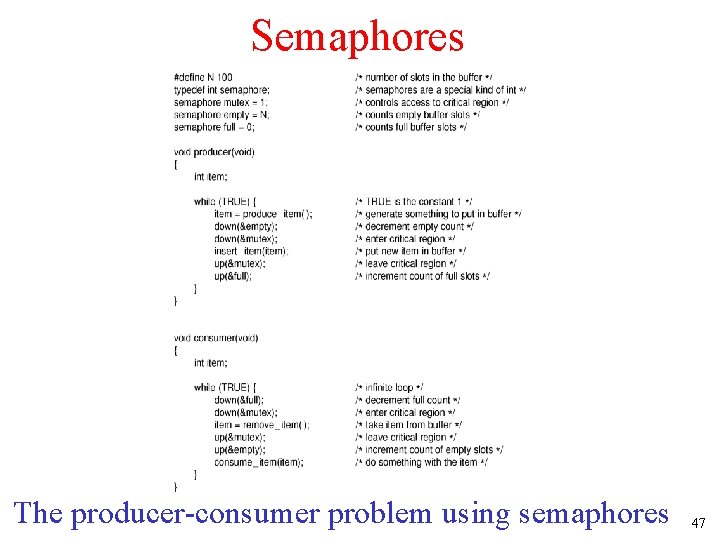

Semaphores: The producer-consumer problem using semaphores
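Since the slide's code is only available as an image, here is an independent sketch of the usual three-semaphore arrangement (`mutex`, `empty`, `full`) in Python. It is not a transcription of the slide; the buffer size `N = 4`, the function name `run_producer_consumer`, and the integer items are assumptions for this example.

```python
import threading
from collections import deque

N = 4  # number of buffer slots (illustrative value)


def run_producer_consumer(n_items=20):
    """Return (items in the order consumed, largest buffer occupancy seen)."""
    mutex = threading.Semaphore(1)   # controls access to the buffer
    empty = threading.Semaphore(N)   # counts empty slots
    full = threading.Semaphore(0)    # counts full slots
    buffer = deque()
    consumed = []
    max_len = 0

    def producer():
        nonlocal max_len
        for item in range(n_items):
            empty.acquire()          # down(empty): wait for a free slot
            mutex.acquire()          # down(mutex): enter critical region
            buffer.append(item)
            max_len = max(max_len, len(buffer))
            mutex.release()          # up(mutex)
            full.release()           # up(full): one more full slot

    def consumer():
        for _ in range(n_items):
            full.acquire()           # down(full): wait for an item
            mutex.acquire()
            item = buffer.popleft()
            mutex.release()
            empty.release()          # up(empty): one more free slot
            consumed.append(item)

    threads = [threading.Thread(target=producer), threading.Thread(target=consumer)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return consumed, max_len
```

The order of the two `acquire` calls matters: taking `mutex` before `empty` in the producer could deadlock when the buffer is full, because the consumer would then be unable to enter the critical region to free a slot.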

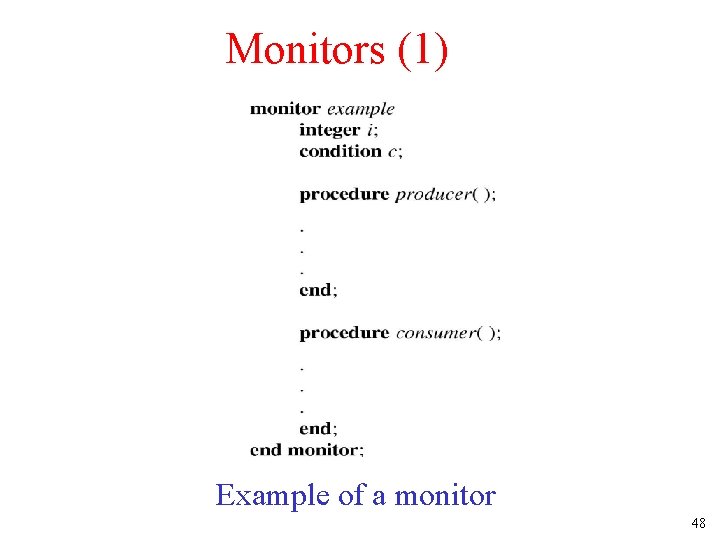

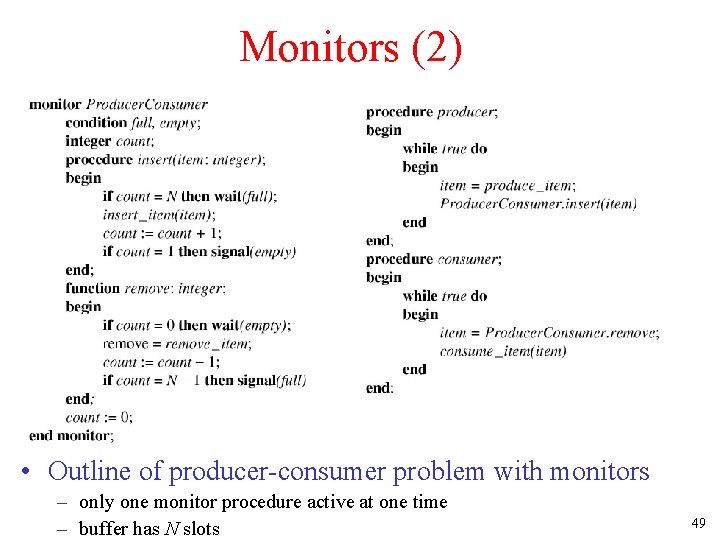

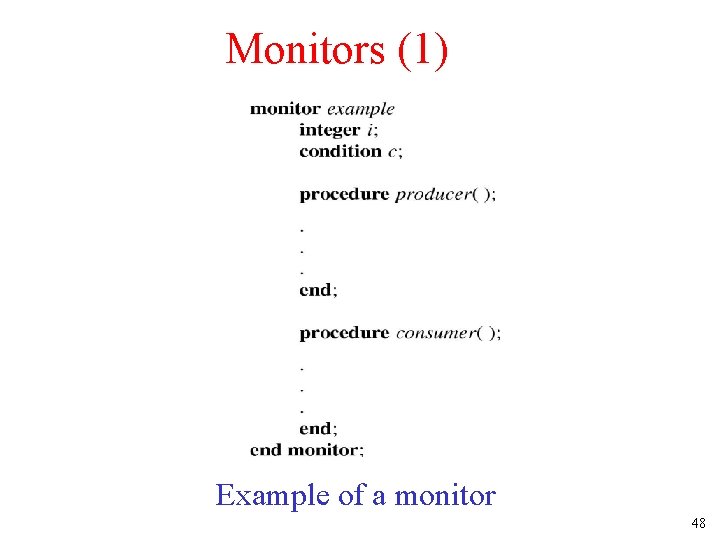

Monitors (1): Example of a monitor

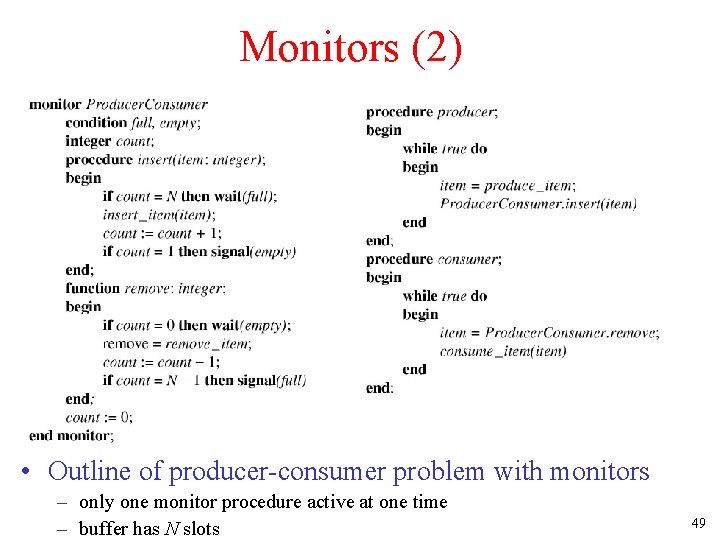

Monitors (2)

- Outline of the producer-consumer problem with monitors
  - only one monitor procedure is active at one time
  - the buffer has N slots

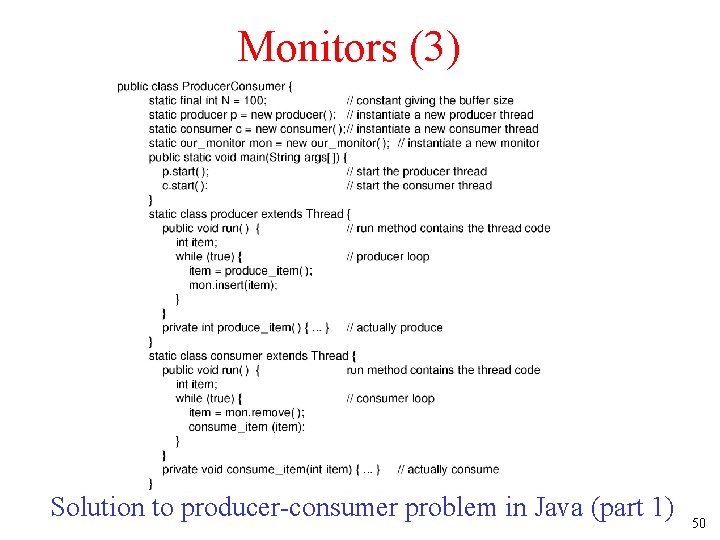

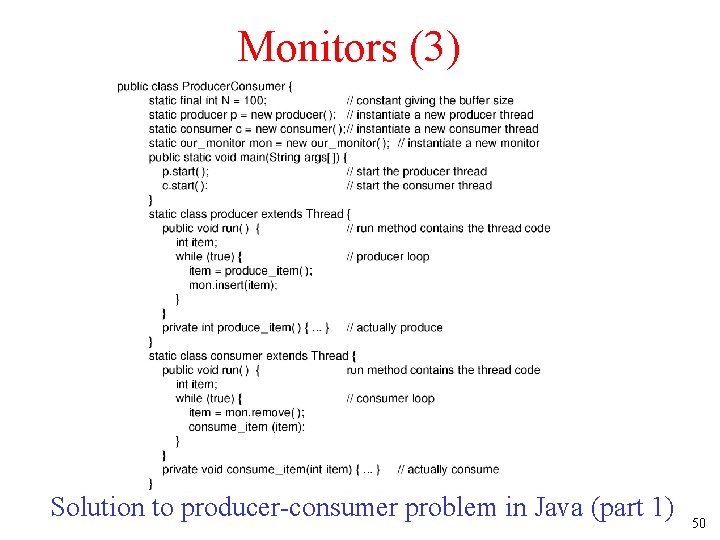

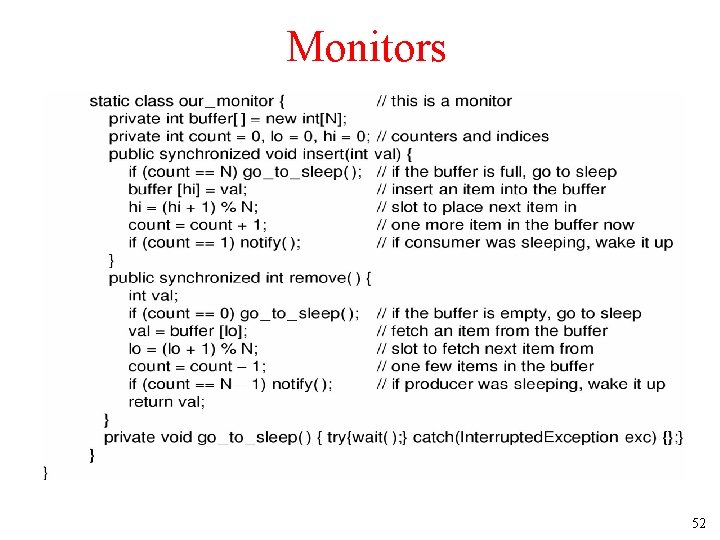

Monitors (3): Solution to the producer-consumer problem in Java (part 1)

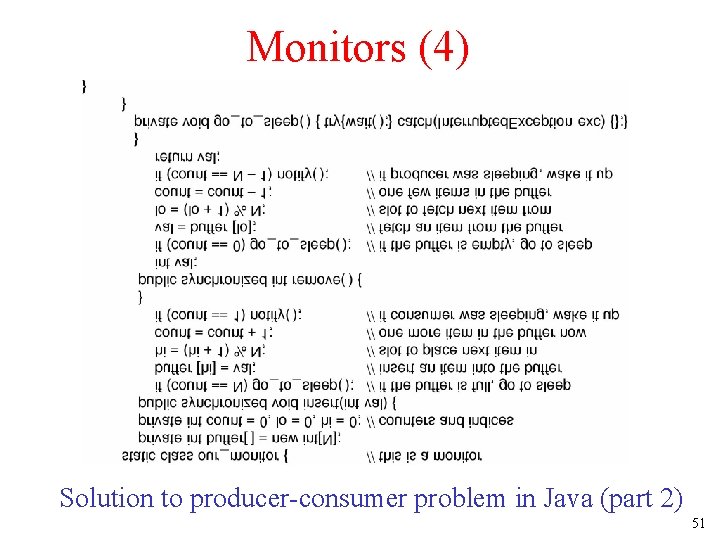

Monitors (4): Solution to the producer-consumer problem in Java (part 2)

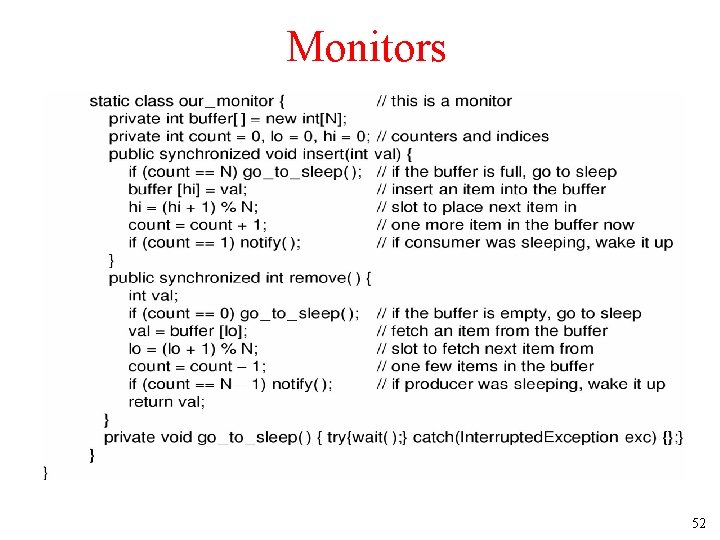

Monitors

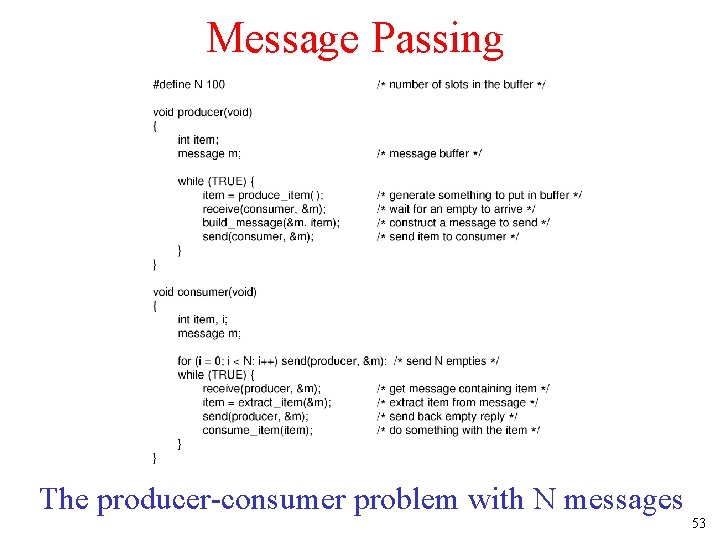

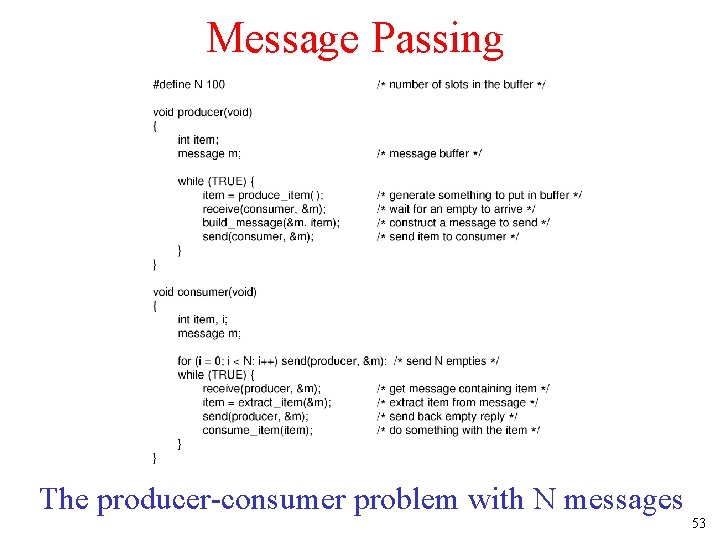

Message Passing: The producer-consumer problem with N messages

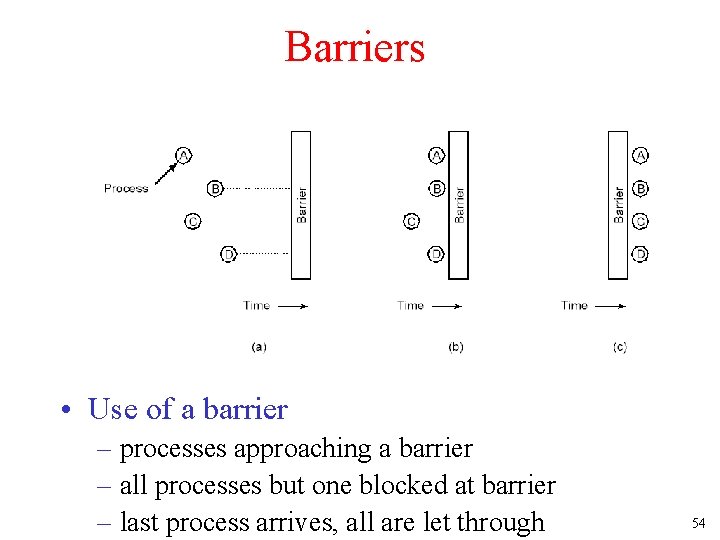

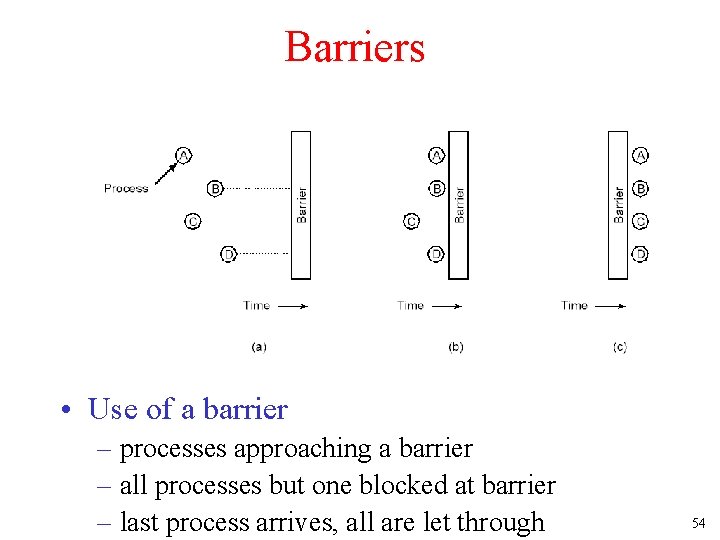

Barriers

- Use of a barrier
  - processes approaching a barrier
  - all processes but one blocked at the barrier
  - last process arrives, all are let through
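The barrier behaviour described above can be observed with `threading.Barrier` from the Python standard library. The helper name `run_barrier` and the log format are assumptions for this sketch.

```python
import threading


def run_barrier(n=4):
    """Run n workers through one barrier and return the event log."""
    barrier = threading.Barrier(n)
    log = []

    def worker(i):
        log.append(("arrive", i))   # process approaches the barrier
        barrier.wait()              # blocks until all n workers have arrived
        log.append(("pass", i))     # everyone is let through together

    threads = [threading.Thread(target=worker, args=(i,)) for i in range(n)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return log
```

In the returned log every `"arrive"` entry precedes every `"pass"` entry, whatever order the threads happen to run in.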

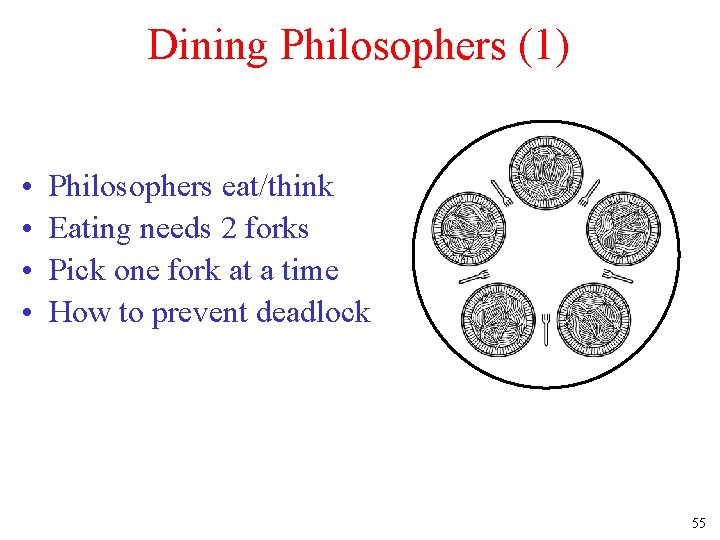

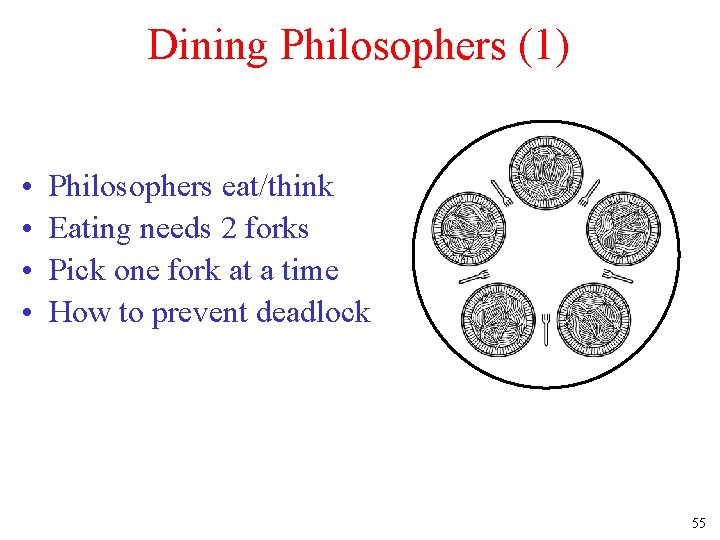

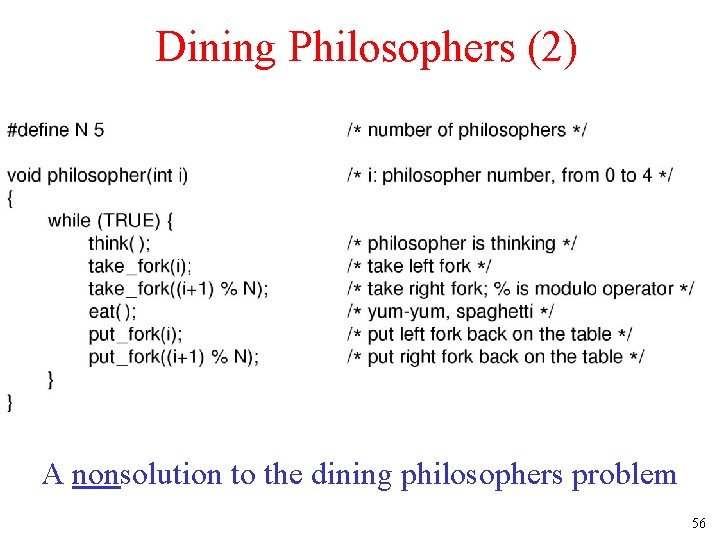

Dining Philosophers (1)

- Philosophers eat/think
- Eating needs 2 forks
- Pick up one fork at a time
- How to prevent deadlock?

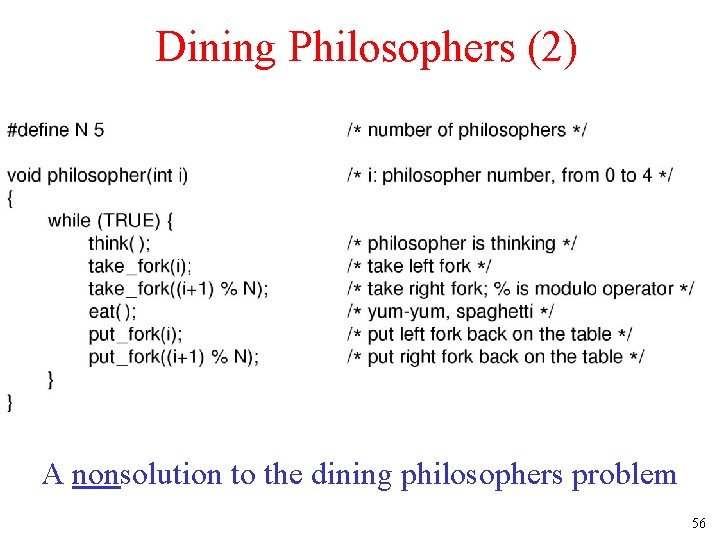

Dining Philosophers (2): A nonsolution to the dining philosophers problem

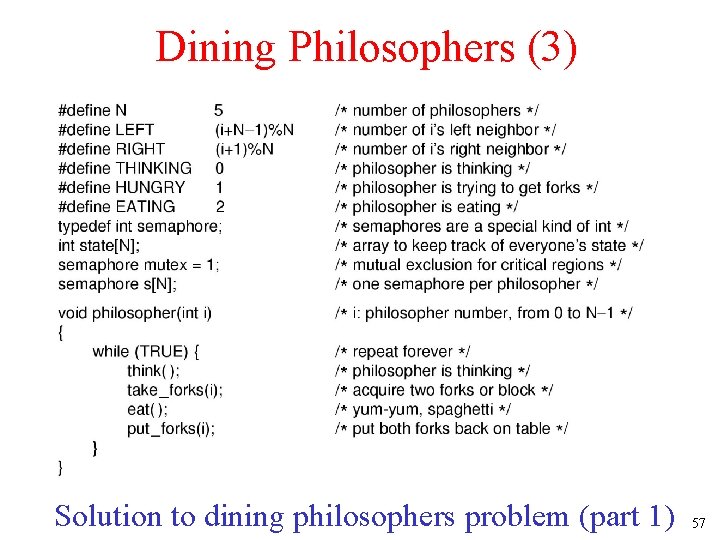

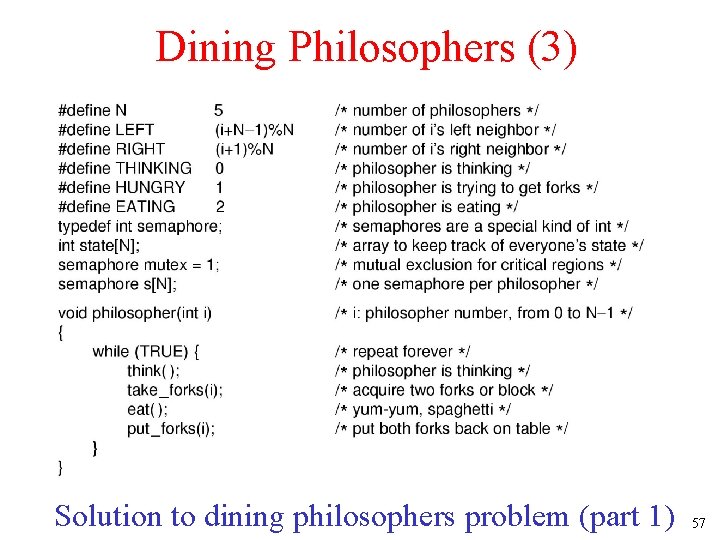

Dining Philosophers (3): Solution to the dining philosophers problem (part 1)

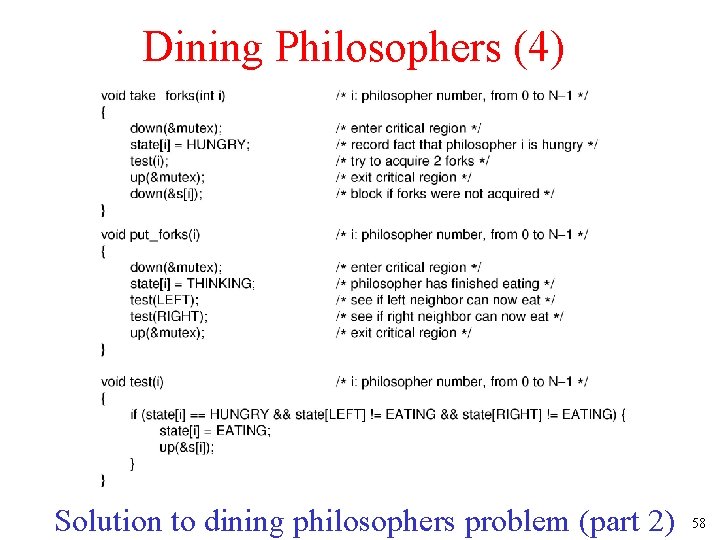

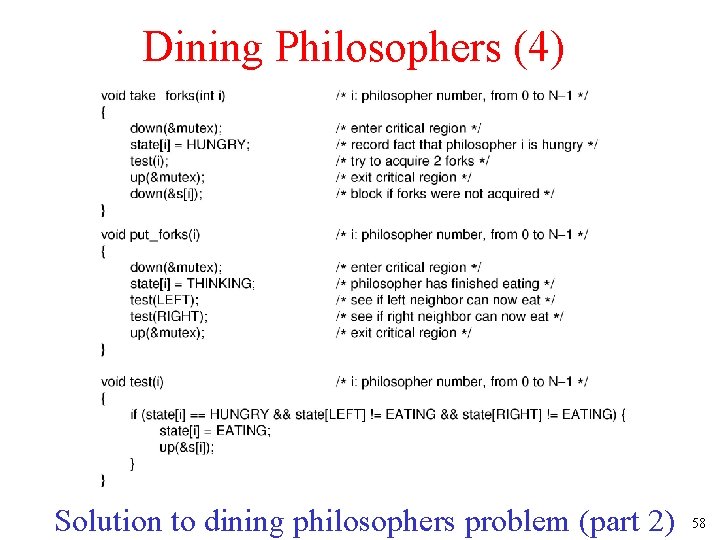

Dining Philosophers (4): Solution to the dining philosophers problem (part 2)

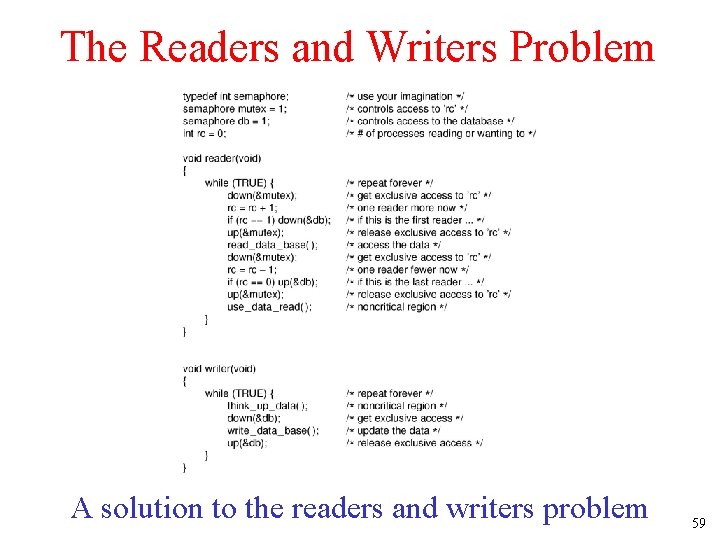

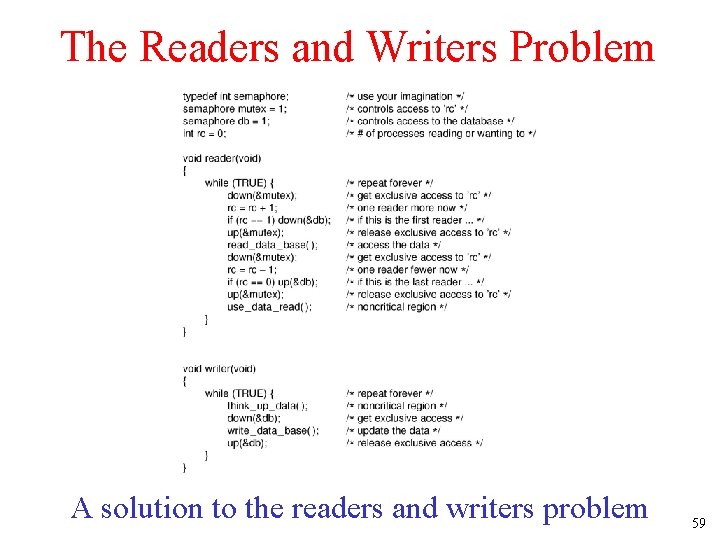

The Readers and Writers Problem: A solution to the readers and writers problem

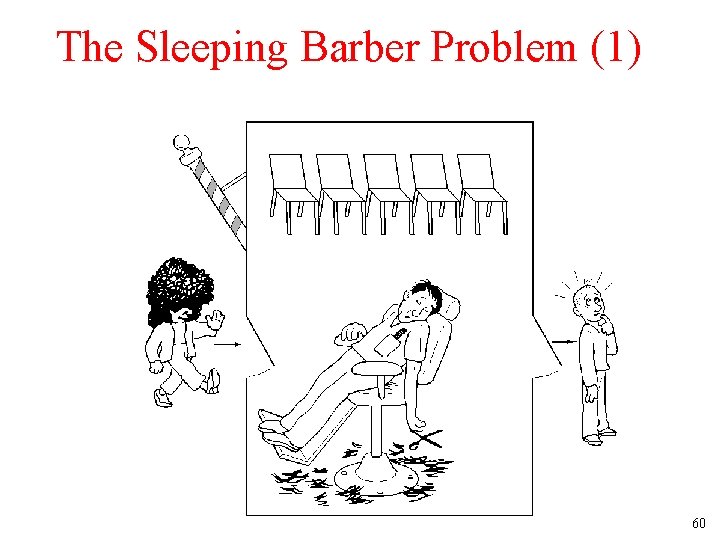

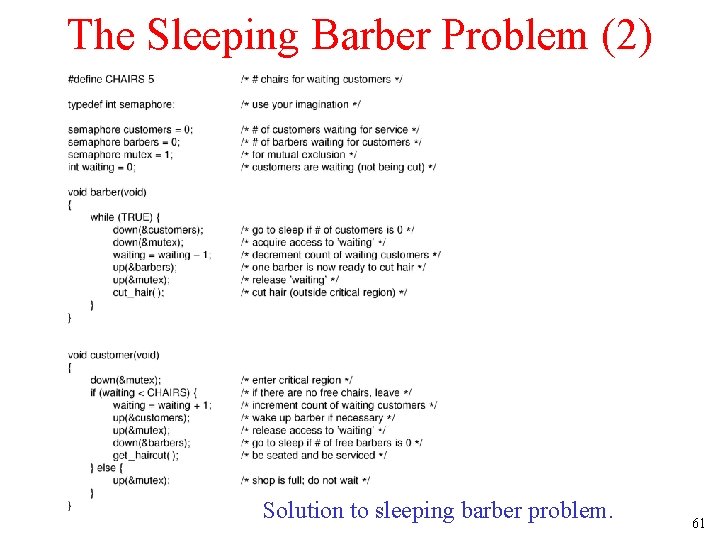

The Sleeping Barber Problem (1)

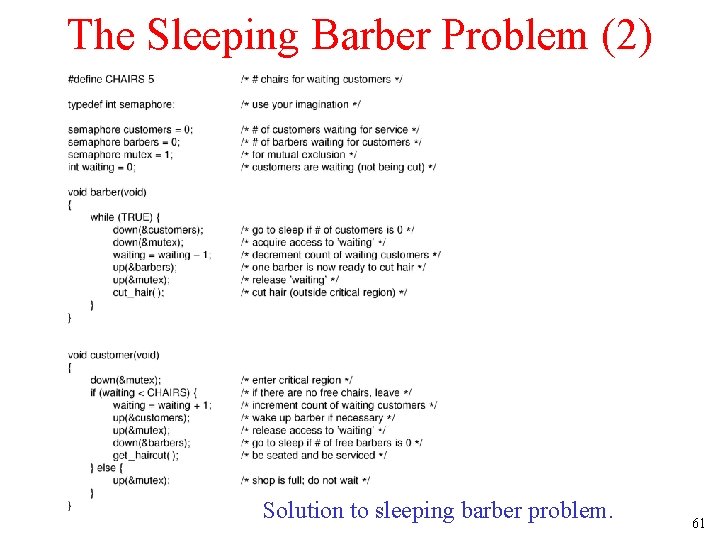

The Sleeping Barber Problem (2): Solution to the sleeping barber problem

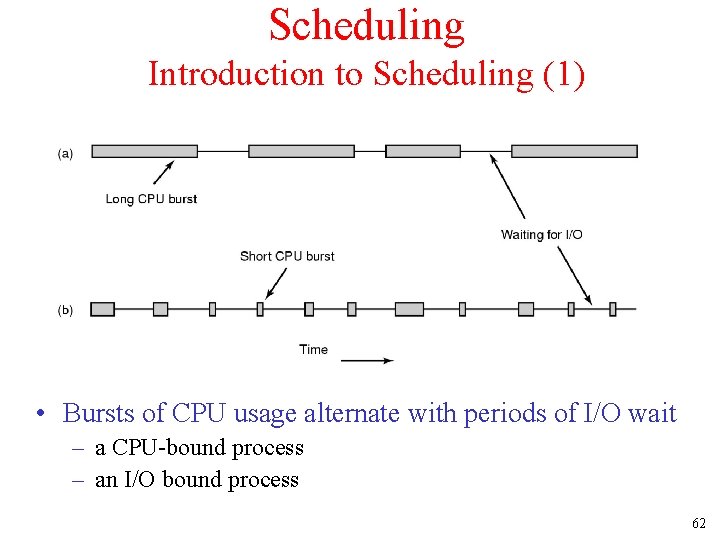

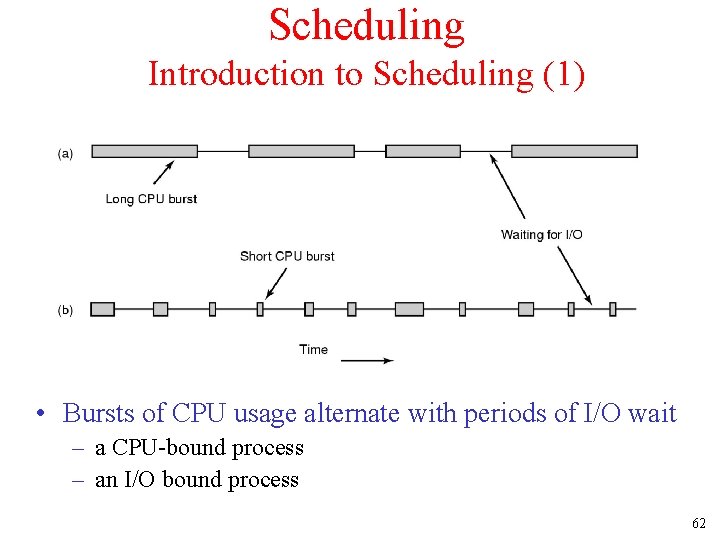

Scheduling: Introduction to Scheduling (1)

- Bursts of CPU usage alternate with periods of I/O wait
  - a CPU-bound process
  - an I/O-bound process

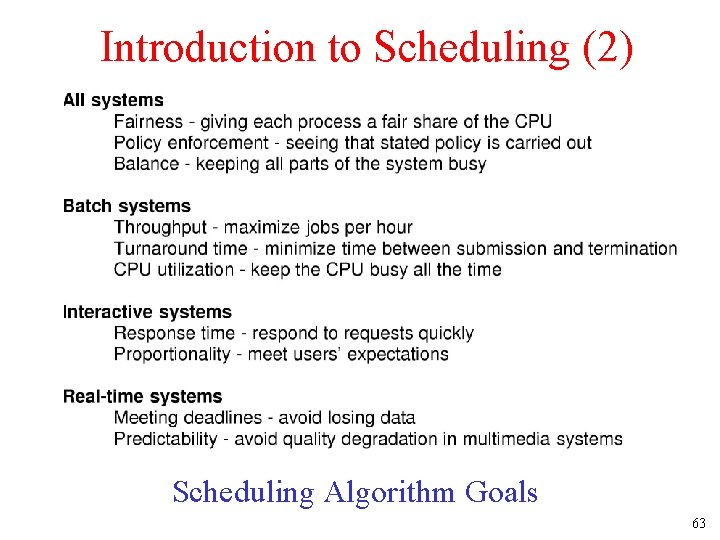

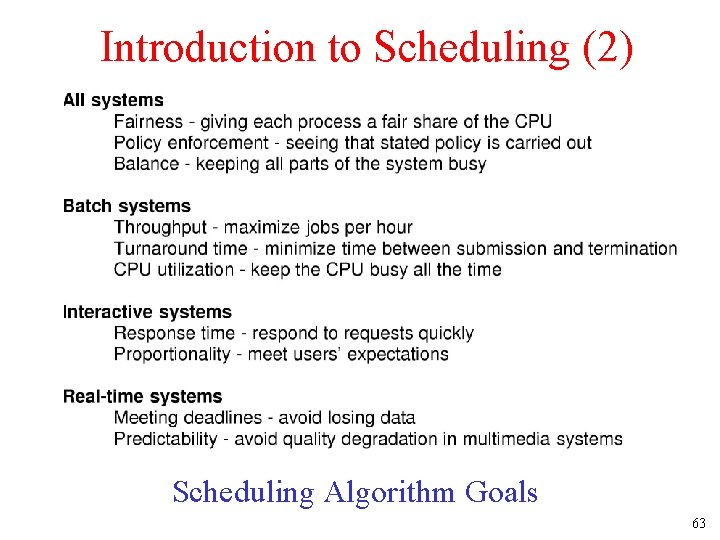

Introduction to Scheduling (2): Scheduling algorithm goals

Histogram of CPU-burst Times

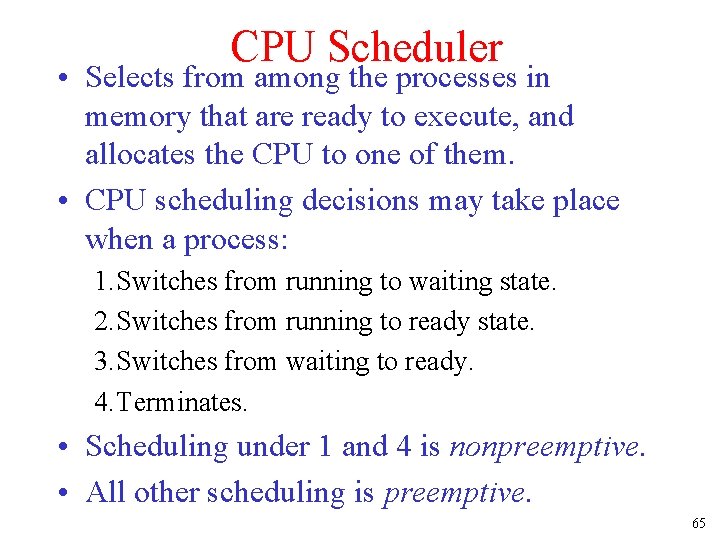

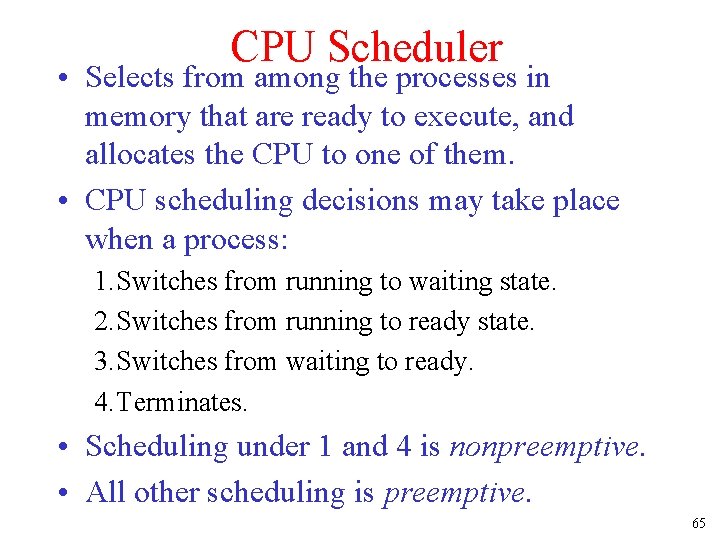

CPU Scheduler

- Selects from among the processes in memory that are ready to execute, and allocates the CPU to one of them.
- CPU scheduling decisions may take place when a process:
  1. Switches from running to waiting state.
  2. Switches from running to ready state.
  3. Switches from waiting to ready.
  4. Terminates.
- Scheduling under 1 and 4 is nonpreemptive.
- All other scheduling is preemptive.

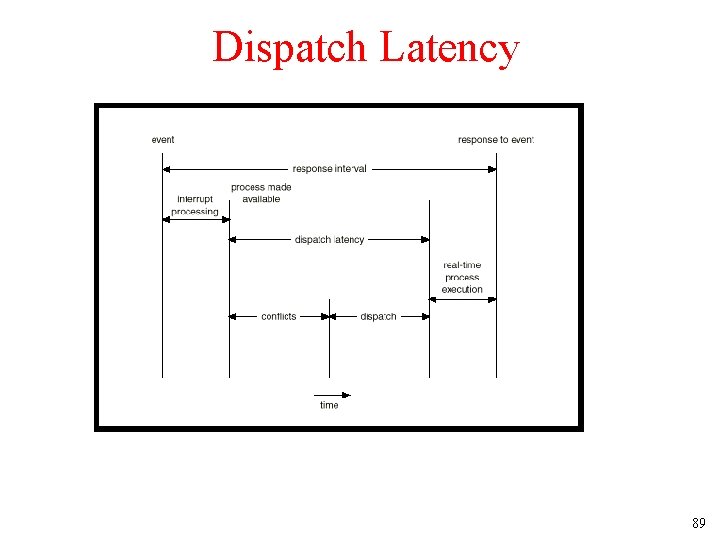

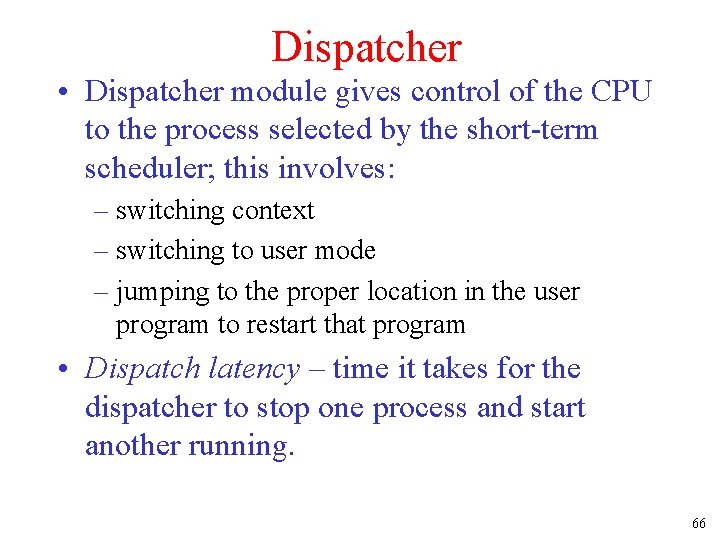

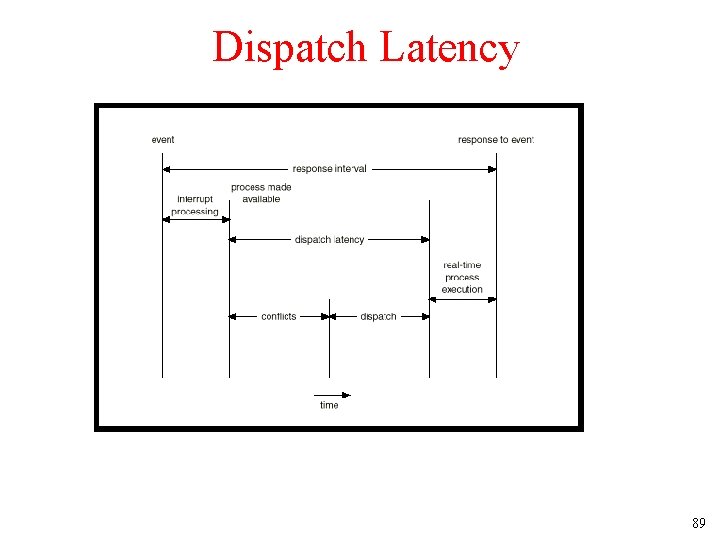

Dispatcher

- The dispatcher module gives control of the CPU to the process selected by the short-term scheduler; this involves:
  - switching context
  - switching to user mode
  - jumping to the proper location in the user program to restart that program
- Dispatch latency: the time it takes for the dispatcher to stop one process and start another running.

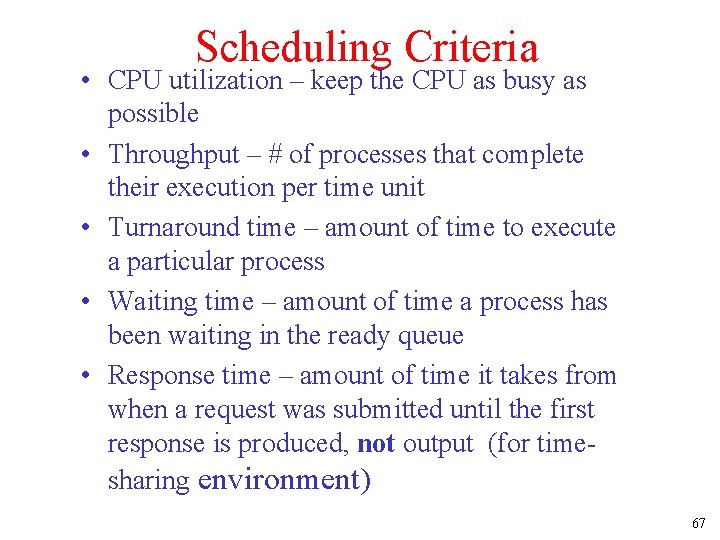

Scheduling Criteria

- CPU utilization: keep the CPU as busy as possible
- Throughput: number of processes that complete their execution per time unit
- Turnaround time: amount of time to execute a particular process
- Waiting time: amount of time a process has been waiting in the ready queue
- Response time: amount of time from when a request was submitted until the first response is produced, not until the output is complete (for time-sharing environments)

Optimization Criteria

- Max CPU utilization
- Max throughput
- Min turnaround time
- Min waiting time
- Min response time

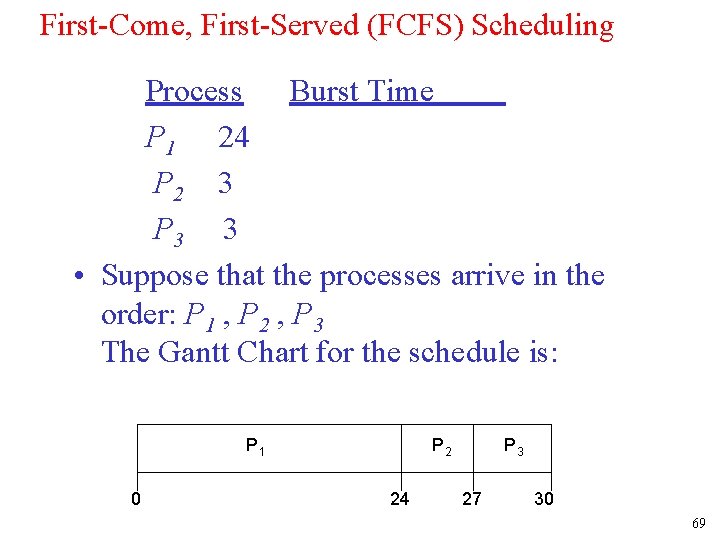

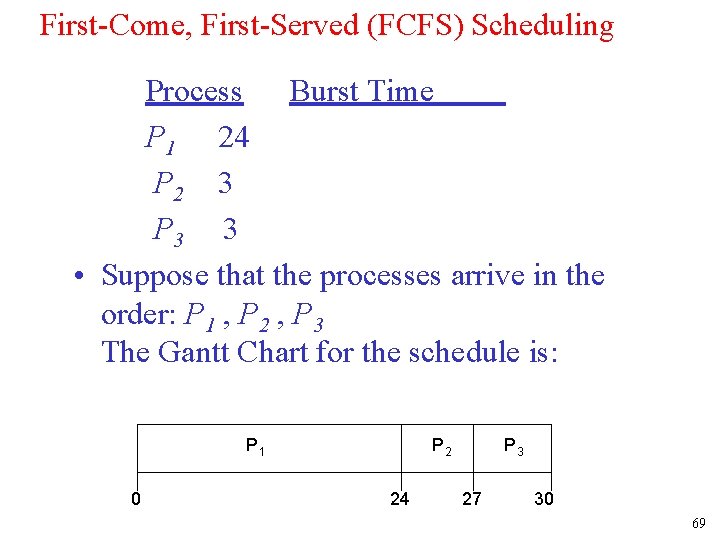

First-Come, First-Served (FCFS) Scheduling

| Process | Burst Time |
|---|---|
| P1 | 24 |
| P2 | 3 |
| P3 | 3 |

- Suppose that the processes arrive in the order P1, P2, P3. The Gantt chart for the schedule is:

| P1 | P2 | P3 |
|---|---|---|
| 0-24 | 24-27 | 27-30 |

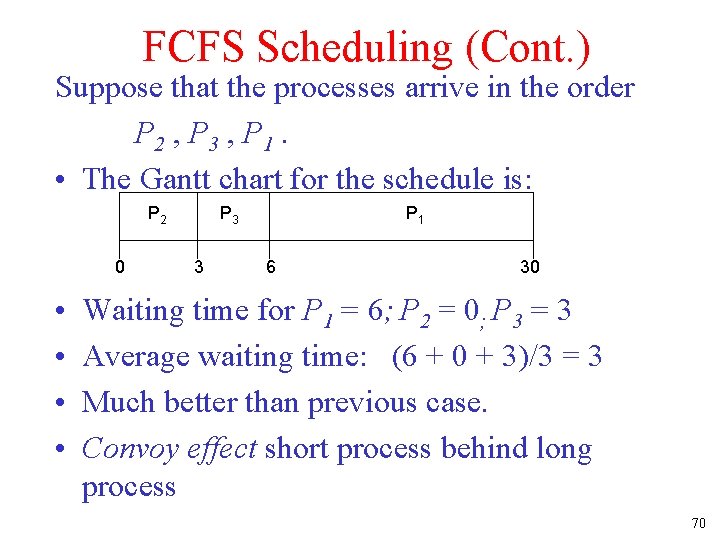

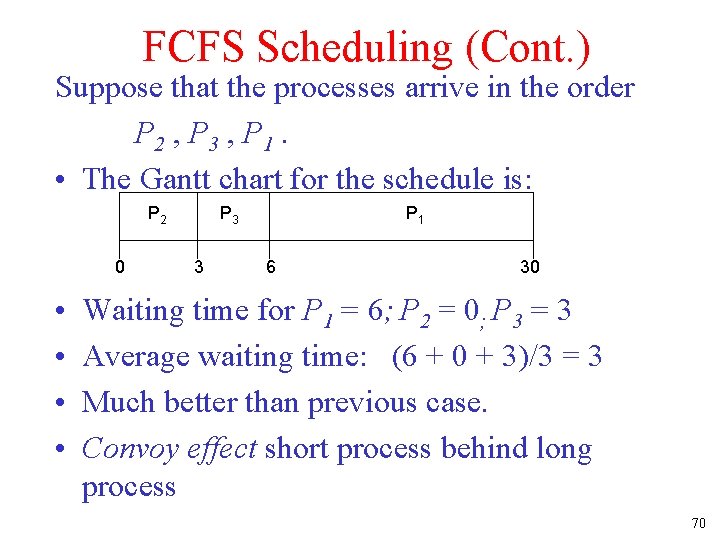

FCFS Scheduling (Cont.)

- Suppose that the processes arrive in the order P2, P3, P1. The Gantt chart for the schedule is:

| P2 | P3 | P1 |
|---|---|---|
| 0-3 | 3-6 | 6-30 |

- Waiting time for P1 = 6; P2 = 0; P3 = 3
- Average waiting time: (6 + 0 + 3)/3 = 3
- Much better than the previous case.
- Convoy effect: short processes wait behind a long process
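The FCFS arithmetic is easy to check mechanically. The sketch below assumes, as the example does, that all processes arrive at time 0 and differ only in their queue order; the function names are hypothetical.

```python
def fcfs_waiting_times(bursts):
    """bursts: list of (name, burst_time) in arrival order, all arriving at time 0."""
    waits = {}
    clock = 0
    for name, burst in bursts:
        waits[name] = clock     # a process waits until everything ahead of it finishes
        clock += burst
    return waits


def average(values):
    values = list(values)
    return sum(values) / len(values)


long_first = fcfs_waiting_times([("P1", 24), ("P2", 3), ("P3", 3)])
short_first = fcfs_waiting_times([("P2", 3), ("P3", 3), ("P1", 24)])
print(long_first, average(long_first.values()))    # order P1, P2, P3
print(short_first, average(short_first.values()))  # order P2, P3, P1
```

With the long job first, the waits are 0, 24 and 27 (average 17); with the short jobs first they are 0, 3 and 6 (average 3), which is the convoy effect in numbers.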

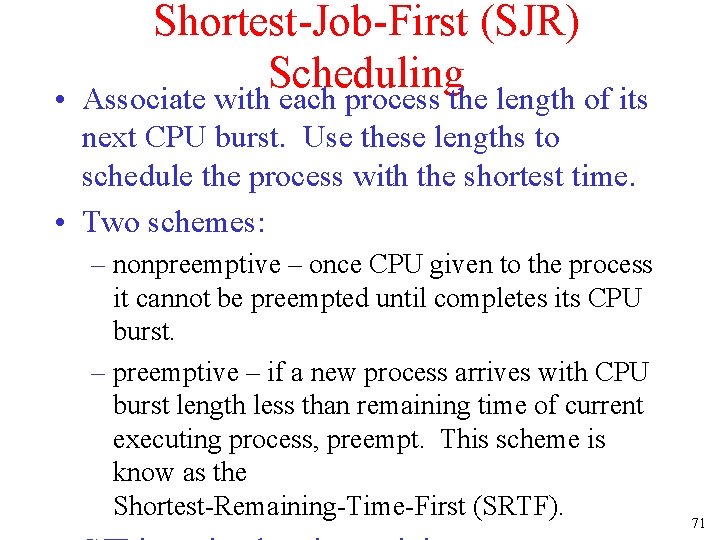

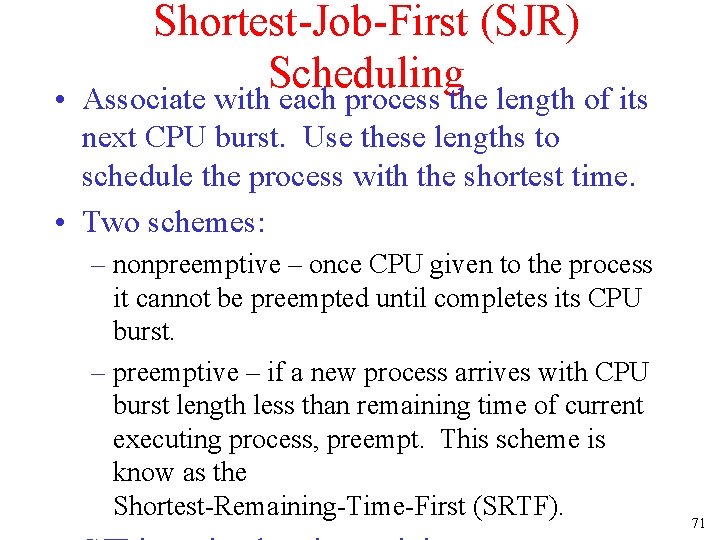

Shortest-Job-First (SJF) Scheduling

- Associate with each process the length of its next CPU burst. Use these lengths to schedule the process with the shortest time.
- Two schemes:
  - nonpreemptive: once the CPU is given to a process, it cannot be preempted until it completes its CPU burst.
  - preemptive: if a new process arrives with a CPU burst length less than the remaining time of the currently executing process, preempt. This scheme is known as Shortest-Remaining-Time-First (SRTF).

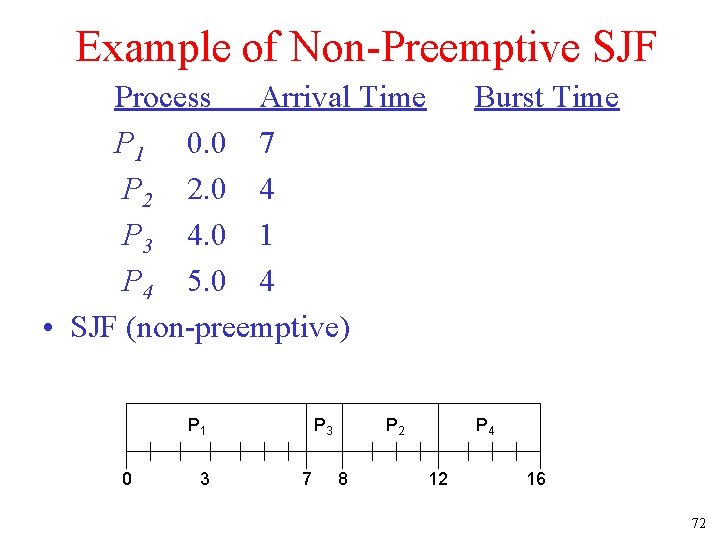

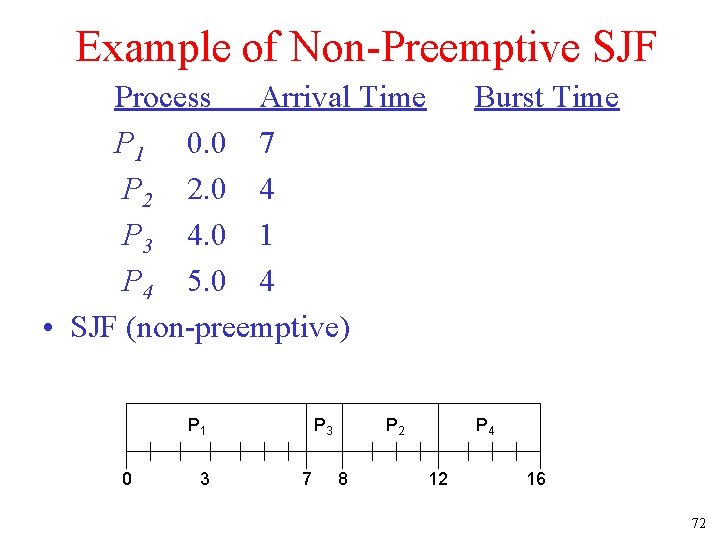

Example of Non-Preemptive SJF

| Process | Arrival Time | Burst Time |
|---|---|---|
| P1 | 0.0 | 7 |
| P2 | 2.0 | 4 |
| P3 | 4.0 | 1 |
| P4 | 5.0 | 4 |

- SJF (non-preemptive) Gantt chart:

| P1 | P3 | P2 | P4 |
|---|---|---|---|
| 0-7 | 7-8 | 8-12 | 12-16 |
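A small simulator reproduces this schedule. It is a sketch under assumptions: the function names are made up for this example, and ties between equal bursts are broken by earlier arrival, a rule the slide does not state.

```python
def sjf_nonpreemptive(procs):
    """procs: list of (name, arrival, burst). Returns a list of (name, start, end)."""
    remaining = sorted(procs, key=lambda p: p[1])   # pending jobs by arrival time
    clock = 0
    schedule = []
    while remaining:
        ready = [p for p in remaining if p[1] <= clock]
        if not ready:
            clock = remaining[0][1]                 # CPU idles until the next arrival
            continue
        # Pick the shortest burst among ready jobs; ties go to the earlier arrival.
        job = min(ready, key=lambda p: (p[2], p[1]))
        remaining.remove(job)
        name, _, burst = job
        schedule.append((name, clock, clock + burst))
        clock += burst                              # runs to completion, no preemption
    return schedule


def average_wait(procs, schedule):
    """Waiting time of a job is its start time minus its arrival time."""
    arrival = {name: arr for name, arr, _ in procs}
    return sum(start - arrival[name] for name, start, _ in schedule) / len(schedule)


procs = [("P1", 0, 7), ("P2", 2, 4), ("P3", 4, 1), ("P4", 5, 4)]
schedule = sjf_nonpreemptive(procs)
print(schedule, average_wait(procs, schedule))
```

The individual waits come out as 0, 6, 3 and 7 for P1 to P4, giving an average waiting time of 4.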

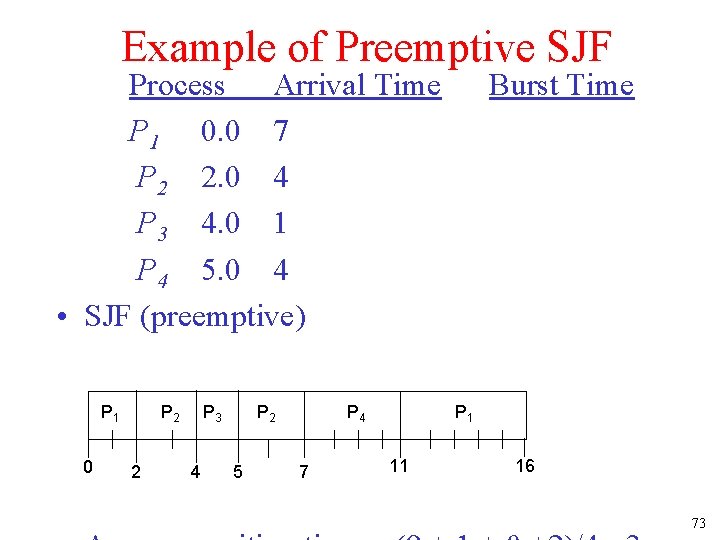

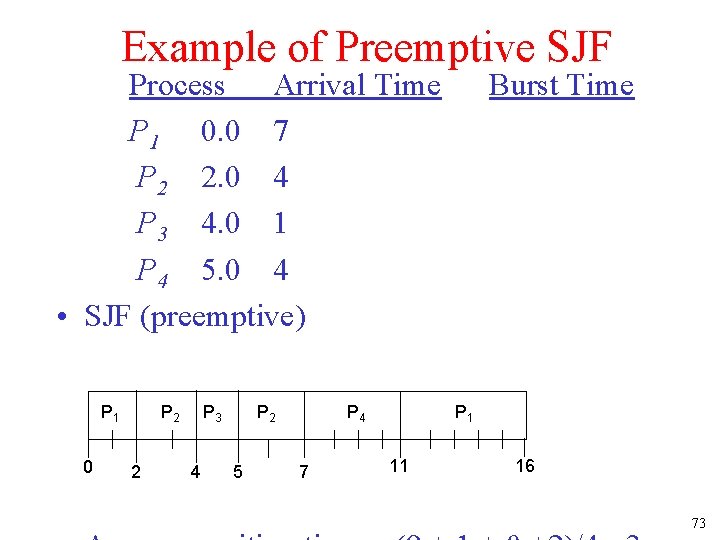

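Example of Preemptive SJF

| Process | Arrival Time | Burst Time |
|---|---|---|
| P1 | 0.0 | 7 |
| P2 | 2.0 | 4 |
| P3 | 4.0 | 1 |
| P4 | 5.0 | 4 |

- SJF (preemptive) Gantt chart:

| P1 | P2 | P3 | P2 | P4 | P1 |
|---|---|---|---|---|---|
| 0-2 | 2-4 | 4-5 | 5-7 | 7-11 | 11-16 |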
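The preemptive variant can be simulated one time unit at a time. This sketch assumes integer arrival and burst times and breaks ties by earlier arrival; the function names `srtf` and `waiting_times` are hypothetical.

```python
def srtf(procs):
    """procs: list of (name, arrival, burst) with integer times.

    Returns merged Gantt segments as a list of (name, start, end).
    """
    remaining = {name: burst for name, _, burst in procs}
    arrival = {name: arr for name, arr, _ in procs}
    clock = 0
    segments = []
    while any(remaining.values()):
        ready = [n for n in remaining if arrival[n] <= clock and remaining[n] > 0]
        if not ready:
            clock += 1                                  # CPU idle for one tick
            continue
        # Shortest remaining time first; ties go to the earlier arrival.
        current = min(ready, key=lambda n: (remaining[n], arrival[n]))
        if segments and segments[-1][0] == current and segments[-1][2] == clock:
            segments[-1] = (current, segments[-1][1], clock + 1)   # extend segment
        else:
            segments.append((current, clock, clock + 1))           # context switch
        remaining[current] -= 1
        clock += 1
    return segments


def waiting_times(procs, segments):
    """Waiting time = completion time - arrival time - burst time."""
    finish = {}
    for name, _, end in segments:
        finish[name] = end          # the last segment of each process wins
    return {name: finish[name] - arr - burst for name, arr, burst in procs}


procs = [("P1", 0, 7), ("P2", 2, 4), ("P3", 4, 1), ("P4", 5, 4)]
segments = srtf(procs)
waits = waiting_times(procs, segments)
print(segments, waits)
```

For this workload the waits are 9, 1, 0 and 2, so the average waiting time drops to 3, compared with 4 for the non-preemptive schedule on the same processes.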

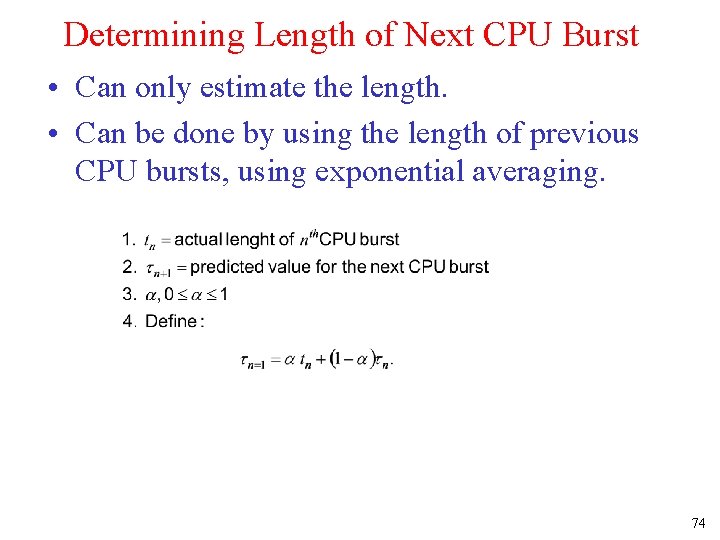

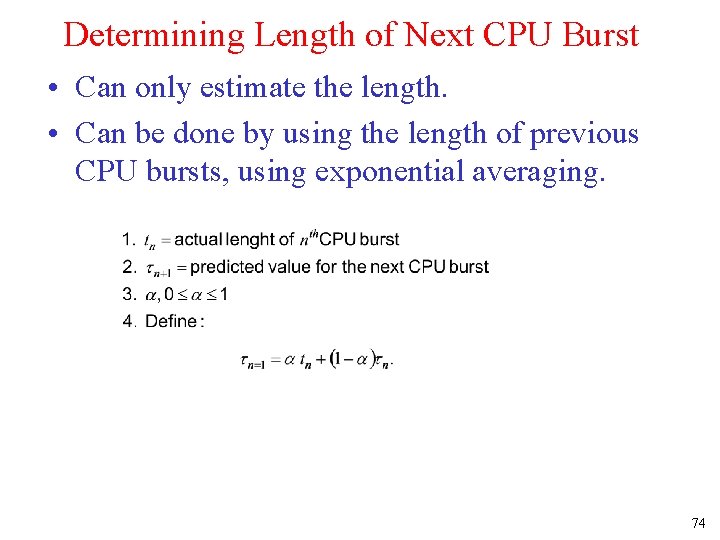

Determining Length of Next CPU Burst

- Can only estimate the length.
- Can be done by using the length of previous CPU bursts, using exponential averaging.

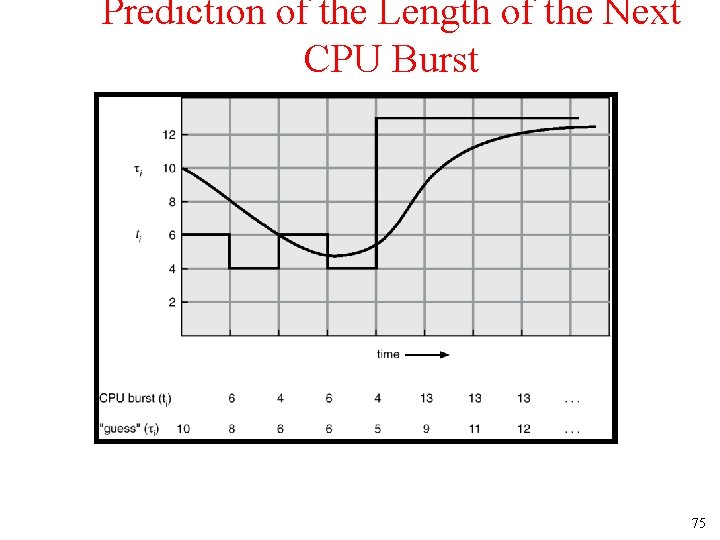

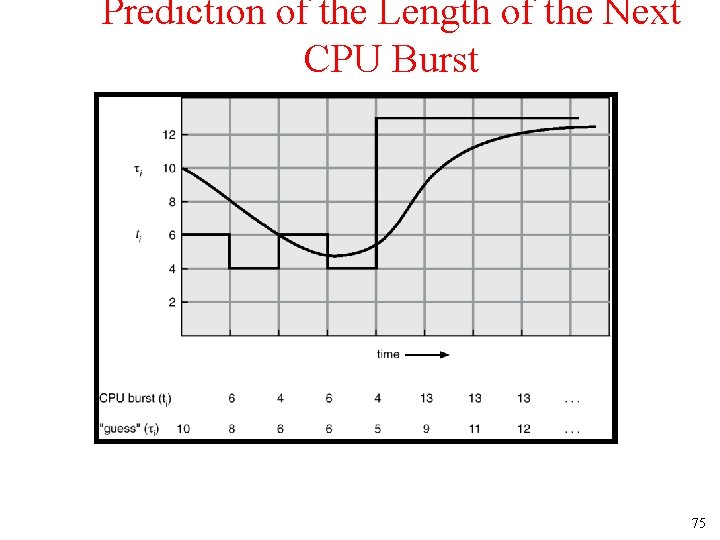

Prediction of the Length of the Next CPU Burst

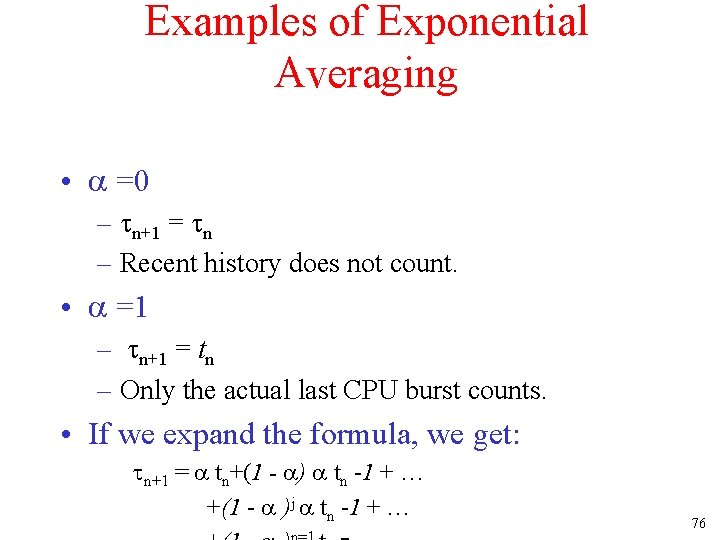

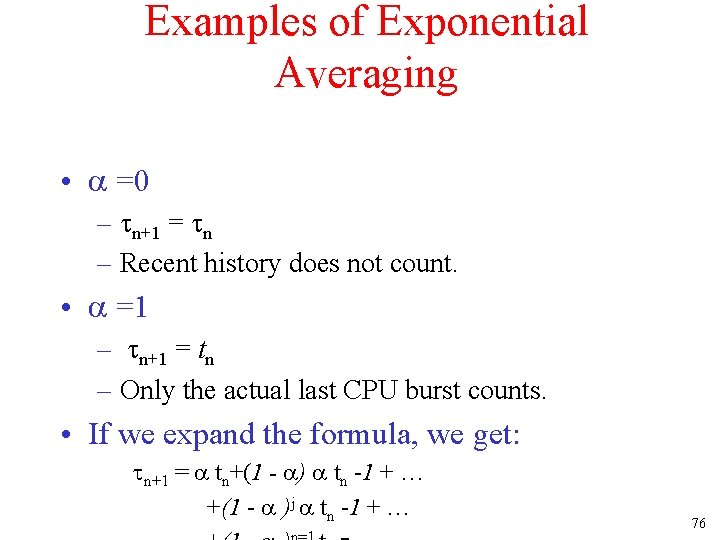

Examples of Exponential Averaging

- $\alpha = 0$
  - $\tau_{n+1} = \tau_n$
  - Recent history does not count.
- $\alpha = 1$
  - $\tau_{n+1} = t_n$
  - Only the actual last CPU burst counts.
- If we expand the formula, we get: $\tau_{n+1} = \alpha t_n + (1 - \alpha)\,\alpha\, t_{n-1} + \dots + (1 - \alpha)^j\,\alpha\, t_{n-j} + \dots$
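The predictor is short enough to run by hand or in code. The sketch below assumes the standard recurrence $\tau_{n+1} = \alpha t_n + (1 - \alpha)\tau_n$, where $t_n$ is the measured length of the n-th burst and $\tau_n$ the prediction for it; the burst sequence, the initial guess `tau0 = 10` and `alpha = 0.5` are illustrative values, not data from the slides.

```python
def predict_bursts(bursts, alpha=0.5, tau0=10.0):
    """Return [tau_0, tau_1, ..., tau_n] for the observed bursts t_0 .. t_{n-1}."""
    tau = tau0
    history = [tau]
    for t in bursts:
        tau = alpha * t + (1 - alpha) * tau   # tau_{n+1} = alpha*t_n + (1-alpha)*tau_n
        history.append(tau)
    return history


observed = [6, 4, 6, 4, 13, 13, 13]           # hypothetical measured CPU bursts
print(predict_bursts(observed))               # alpha = 0.5: blends history and last burst
print(predict_bursts(observed, alpha=0.0))    # prediction never moves from tau0
print(predict_bursts(observed, alpha=1.0))    # prediction equals the last burst
```

With `alpha = 0.5` the predictions lag behind the jump from short to long bursts and then converge toward 13, which is the smoothing behaviour the two limiting cases bracket.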

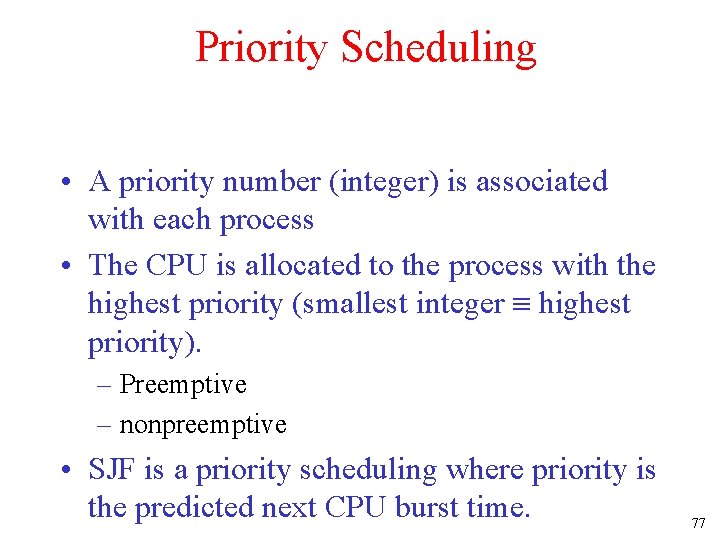

Priority Scheduling

- A priority number (integer) is associated with each process
- The CPU is allocated to the process with the highest priority (smallest integer = highest priority)
  - preemptive
  - nonpreemptive
- SJF is priority scheduling where the priority is the predicted next CPU burst time.

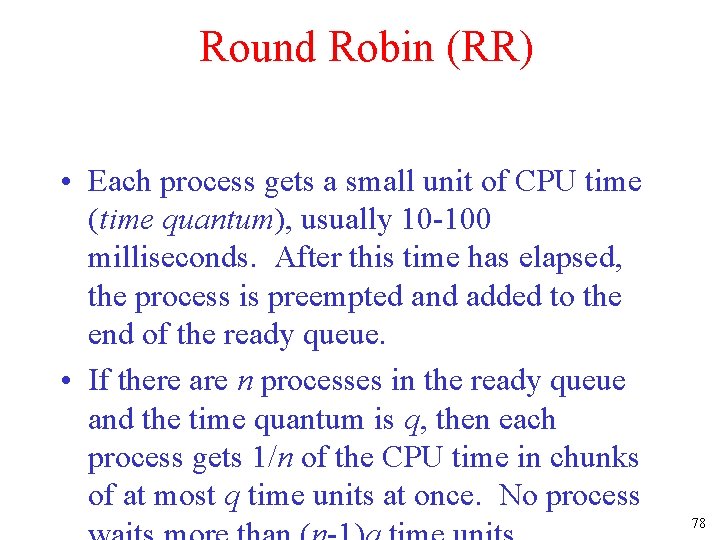

Round Robin (RR)

- Each process gets a small unit of CPU time (time quantum), usually 10-100 milliseconds. After this time has elapsed, the process is preempted and added to the end of the ready queue.
- If there are n processes in the ready queue and the time quantum is q, then each process gets 1/n of the CPU time in chunks of at most q time units at once. No process waits more than (n - 1)q time units.

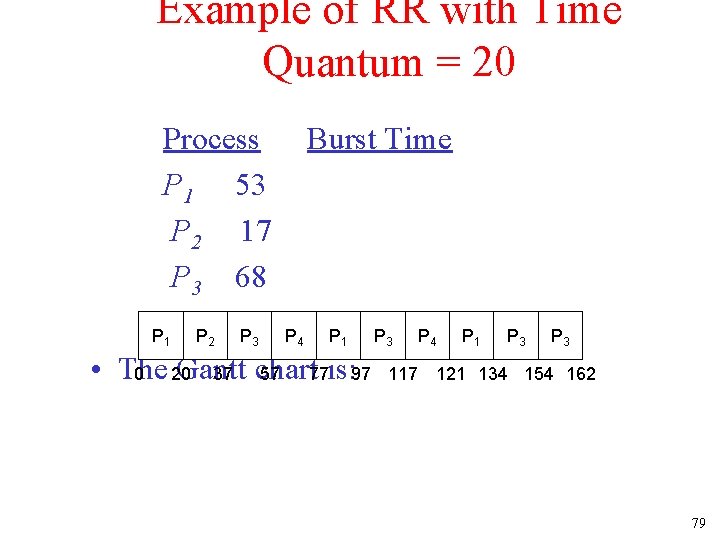

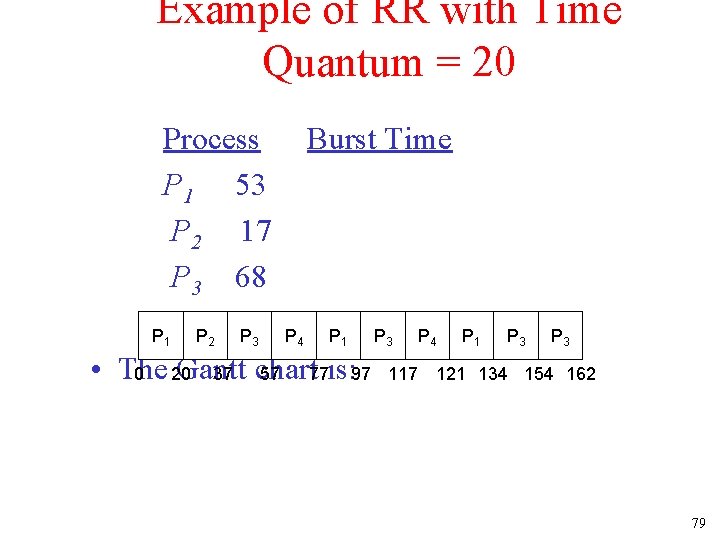

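Example of RR with Time Quantum = 20

| Process | Burst Time |
|---|---|
| P1 | 53 |
| P2 | 17 |
| P3 | 68 |
| P4 | 24 |

- The Gantt chart is:

| P1 | P2 | P3 | P4 | P1 | P3 | P4 | P1 | P3 | P3 |
|---|---|---|---|---|---|---|---|---|---|
| 0-20 | 20-37 | 37-57 | 57-77 | 77-97 | 97-117 | 117-121 | 121-134 | 134-154 | 154-162 |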
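The chart can be regenerated with a queue-based simulation. This sketch assumes all four processes are in the ready queue at time 0 in the order P1 to P4 and ignores context-switch time; the function name `round_robin` is hypothetical.

```python
from collections import deque


def round_robin(bursts, quantum):
    """bursts: list of (name, burst_time), all ready at time 0 in that order.

    Returns the Gantt chart as a list of (name, start, end), one entry per quantum.
    """
    queue = deque(bursts)
    clock = 0
    gantt = []
    while queue:
        name, remaining = queue.popleft()
        run = min(quantum, remaining)           # run a full quantum or until done
        gantt.append((name, clock, clock + run))
        clock += run
        if remaining > run:
            queue.append((name, remaining - run))   # preempted: back of the queue
    return gantt


gantt = round_robin([("P1", 53), ("P2", 17), ("P3", 68), ("P4", 24)], quantum=20)
for entry in gantt:
    print(entry)
```

P3 appears twice in a row at the end because it is the only process left in the queue, so it is simply redispatched for its final 8 time units.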

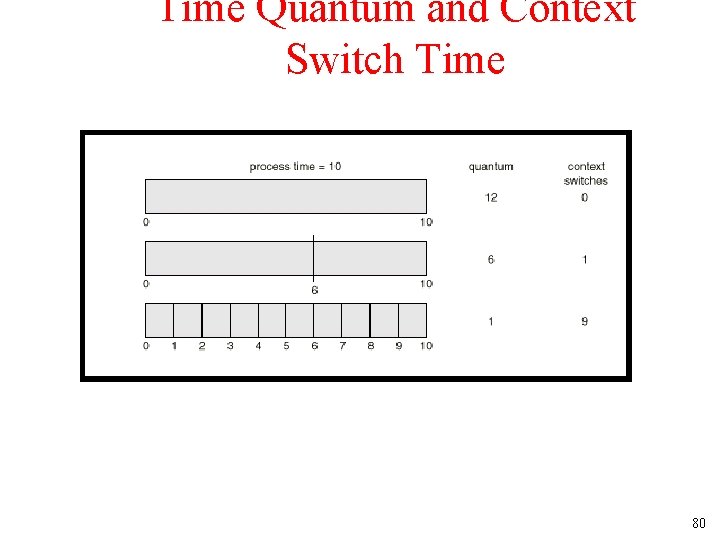

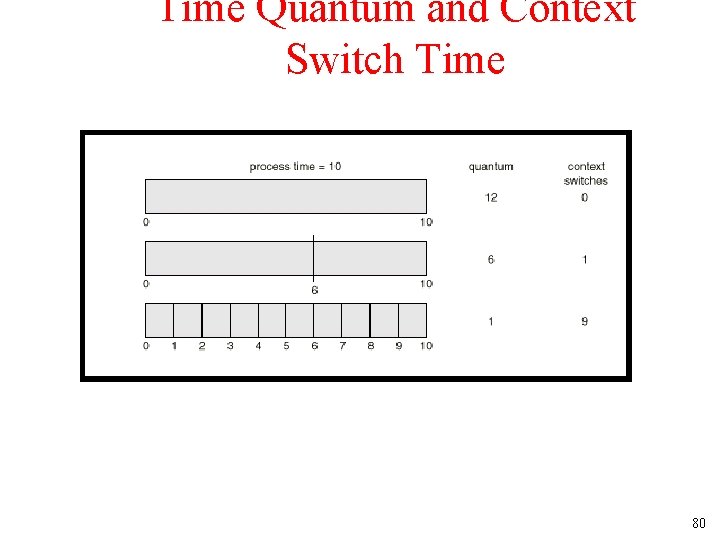

Time Quantum and Context Switch Time

Turnaround Time Varies With The Time Quantum

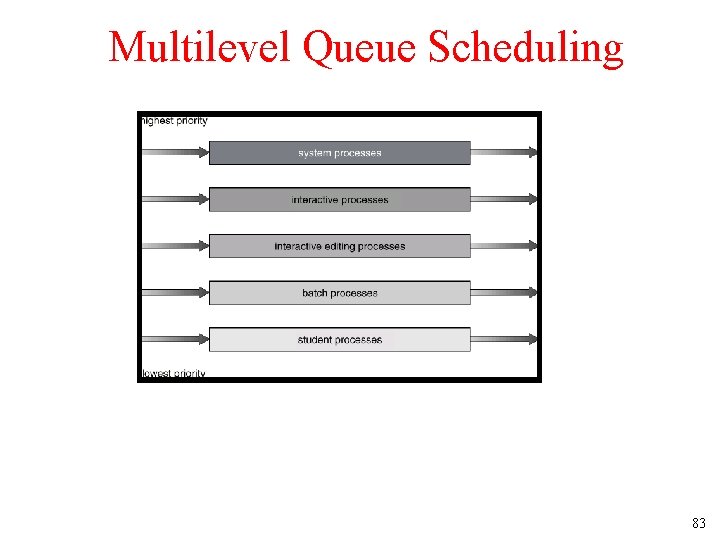

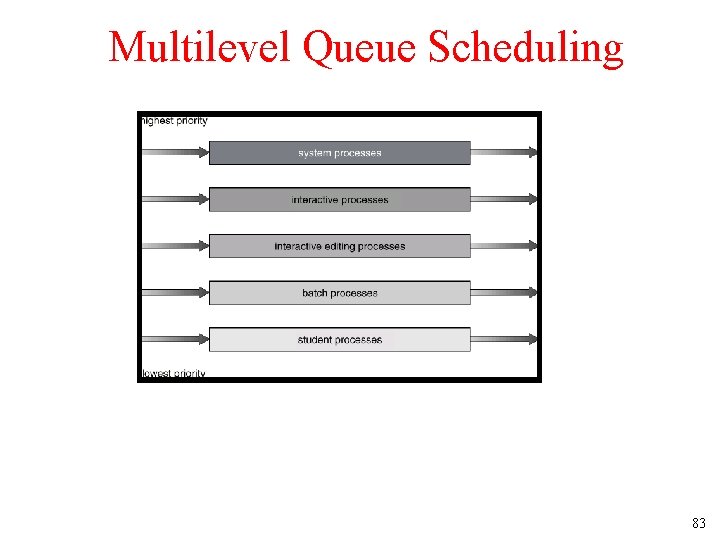

Multilevel Queue

- The ready queue is partitioned into separate queues:
  - foreground (interactive)
  - background (batch)
- Each queue has its own scheduling algorithm:
  - foreground: RR
  - background: FCFS
- Scheduling must be done between the queues.

Multilevel Queue Scheduling

Multilevel Feedback Queue

- A process can move between the various queues; aging can be implemented this way.
- A multilevel-feedback-queue scheduler is defined by the following parameters:
  - number of queues
  - scheduling algorithm for each queue
  - method used to determine when to upgrade a process
  - method used to determine when to demote a process

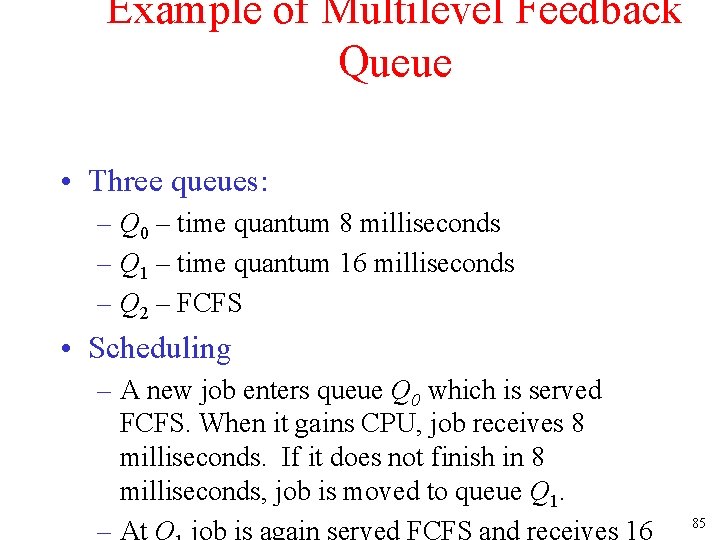

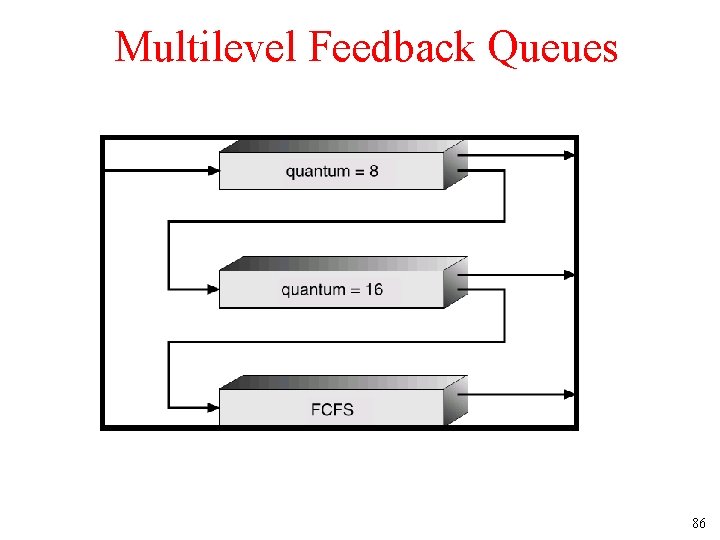

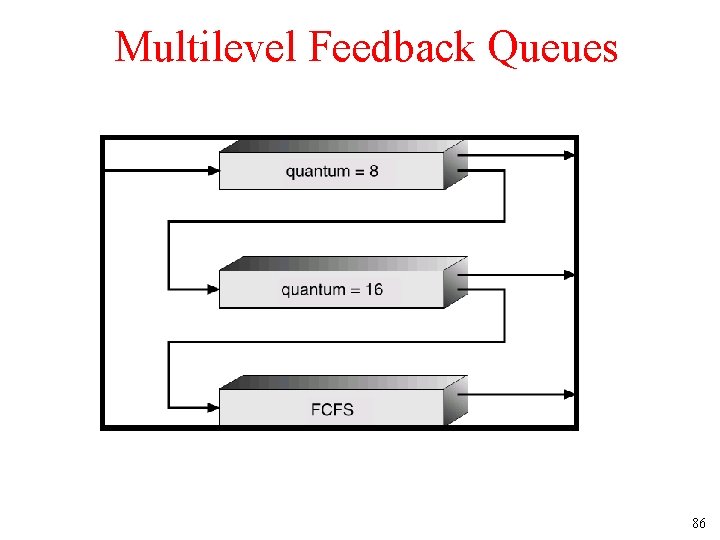

Example of Multilevel Feedback Queue

- Three queues:
  - Q0: time quantum 8 milliseconds
  - Q1: time quantum 16 milliseconds
  - Q2: FCFS
- Scheduling
  - A new job enters queue Q0, which is served FCFS. When it gains the CPU, the job receives 8 milliseconds. If it does not finish in 8 milliseconds, the job is moved to queue Q1.
  - At Q1 the job is again served FCFS and receives 16 milliseconds.

Multilevel Feedback Queues

Multiple-Processor Scheduling

- CPU scheduling is more complex when multiple CPUs are available.
- Homogeneous processors within a multiprocessor.
- Load sharing
- Asymmetric multiprocessing: only one processor accesses the system data structures, alleviating the need for data sharing.

Real-Time Scheduling

- Hard real-time systems: required to complete a critical task within a guaranteed amount of time.
- Soft real-time computing: requires that critical processes receive priority over less fortunate ones.

Dispatch Latency

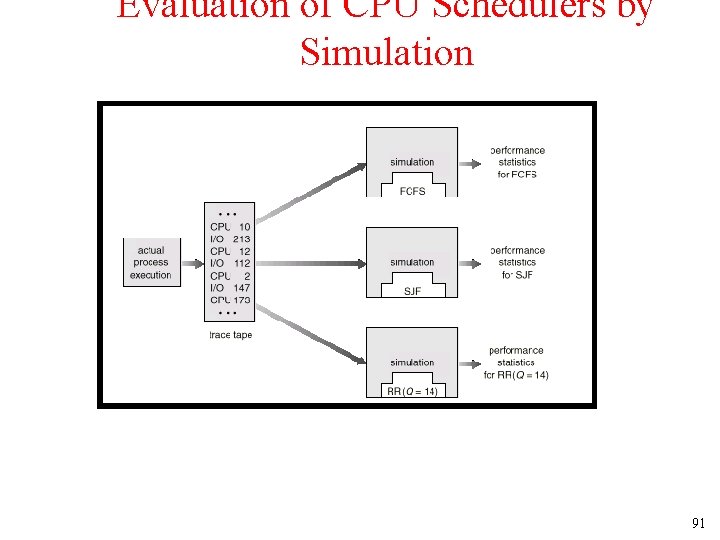

Algorithm Evaluation

- Deterministic modeling: takes a particular predetermined workload and defines the performance of each algorithm for that workload.
- Queueing models
- Implementation

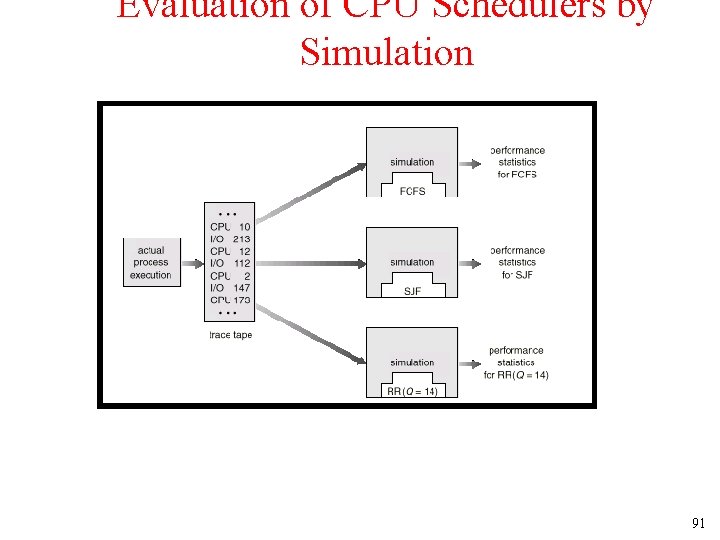

Evaluation of CPU Schedulers by Simulation

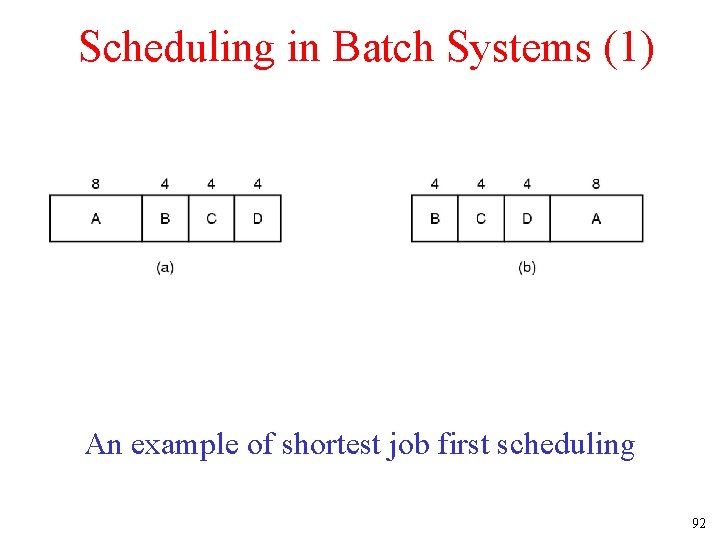

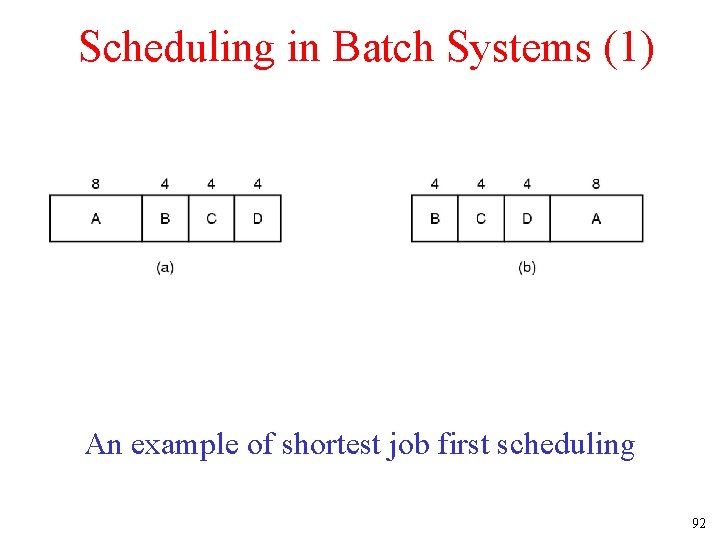

Scheduling in Batch Systems (1): An example of shortest job first scheduling

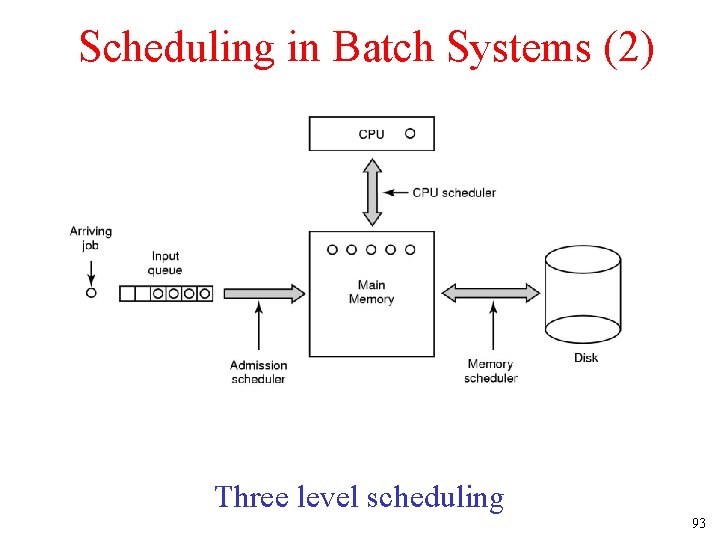

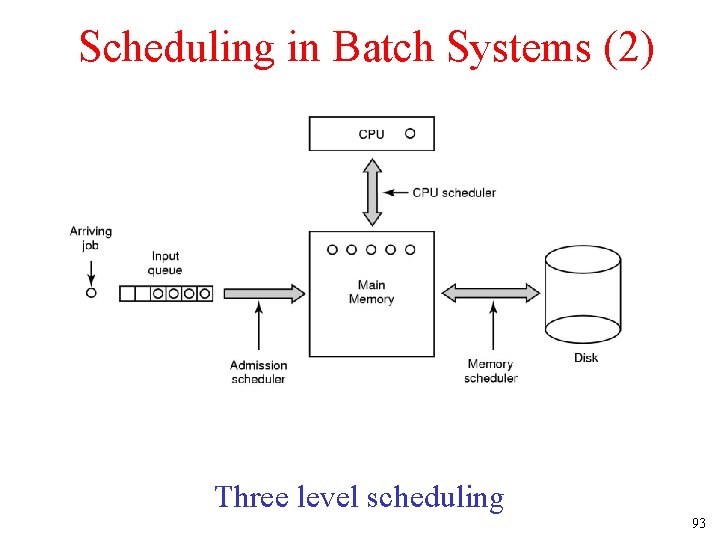

Scheduling in Batch Systems (2): Three-level scheduling

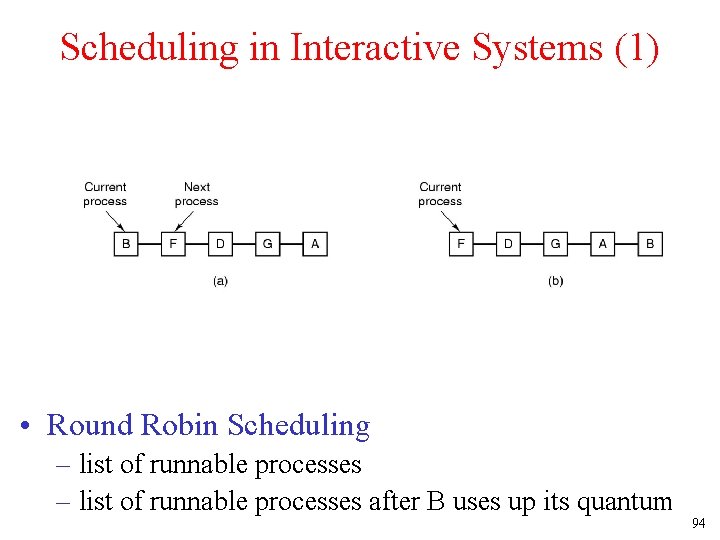

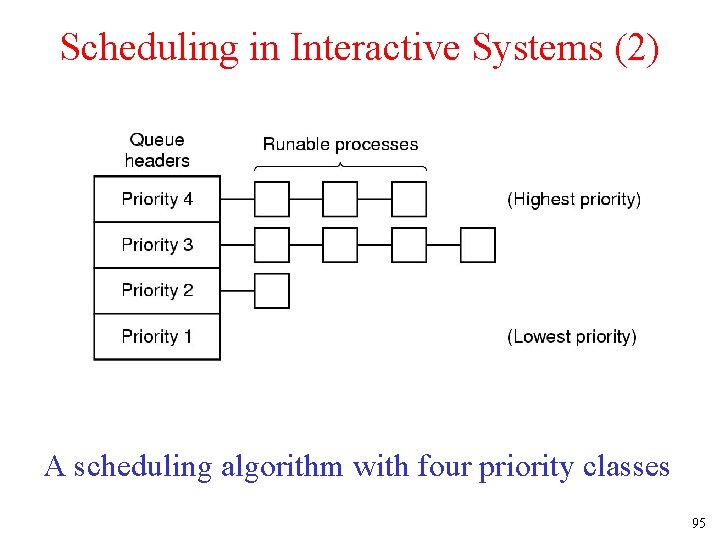

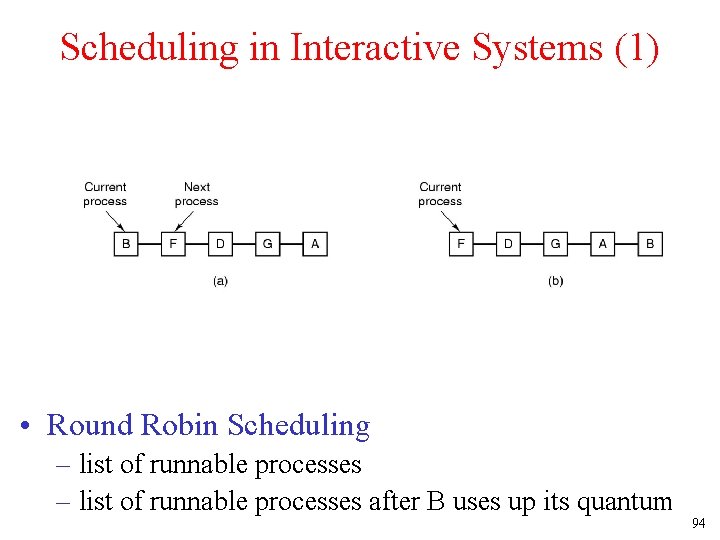

Scheduling in Interactive Systems (1)

- Round Robin Scheduling
  - list of runnable processes after B uses up its quantum

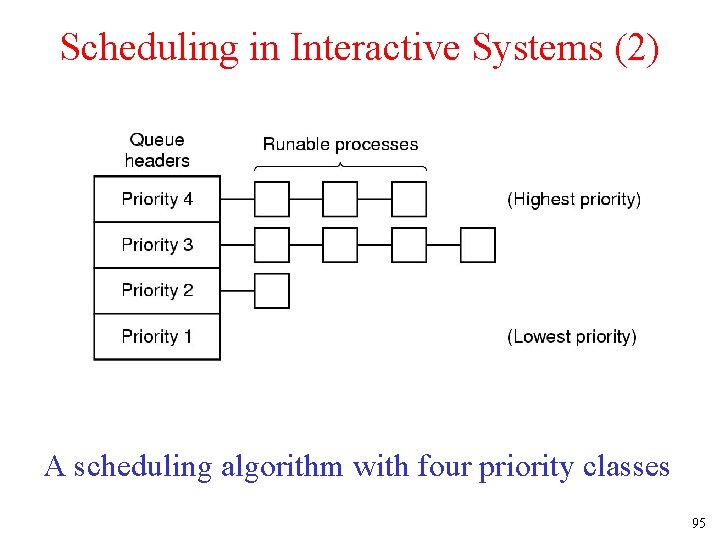

Scheduling in Interactive Systems (2): A scheduling algorithm with four priority classes

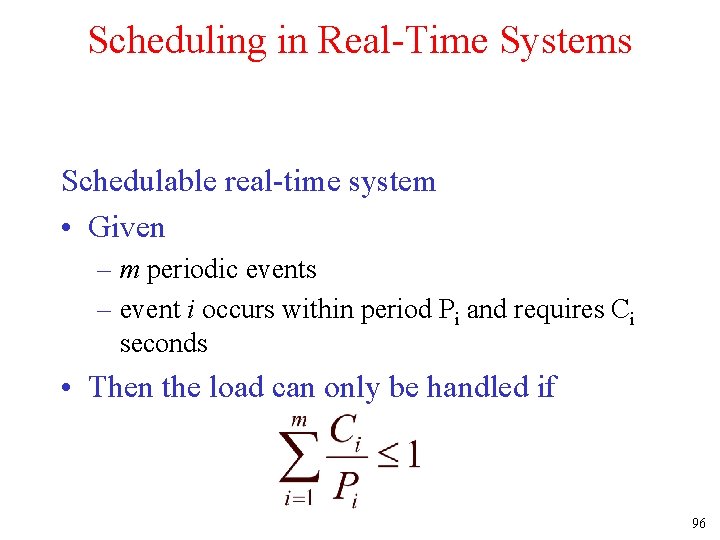

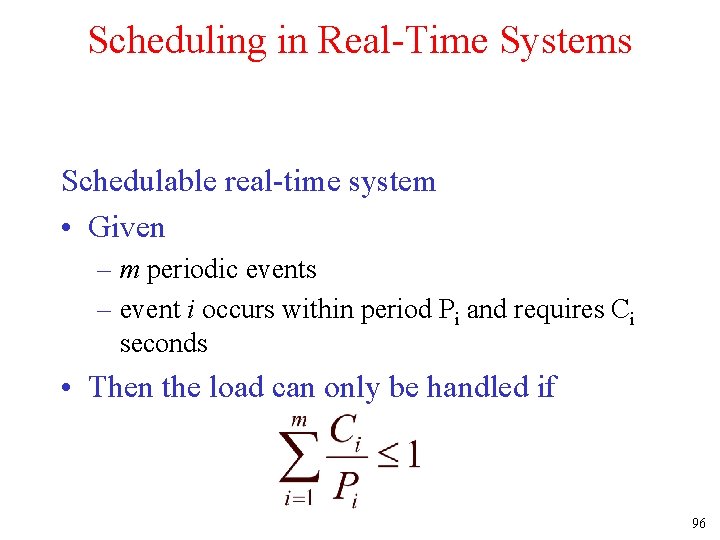

Scheduling in Real-Time Systems

Schedulable real-time system

- Given
  - m periodic events
  - event i occurs within period $P_i$ and requires $C_i$ seconds
- Then the load can only be handled if $\sum_{i=1}^{m} \frac{C_i}{P_i} \le 1$
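The usual schedulability condition for m periodic events is that the total CPU utilization, the sum of C_i / P_i over all events, does not exceed 1. The sketch below checks that condition with exact fractions; the function names and the sample periods and CPU times (in milliseconds) are assumptions chosen for illustration.

```python
from fractions import Fraction


def utilization(events):
    """events: list of (period, cpu_time) pairs in the same time unit (integers)."""
    return sum(Fraction(cpu_time, period) for period, cpu_time in events)


def is_schedulable(events):
    """True if the periodic load fits on one CPU: sum(C_i / P_i) <= 1."""
    return utilization(events) <= 1


# Hypothetical workload: periods of 100, 200 and 500 ms needing 50, 30 and 100 ms.
events = [(100, 50), (200, 30), (500, 100)]
print(utilization(events), is_schedulable(events))

# Adding an event with period 1000 ms that needs 200 ms pushes the load over 1.
overloaded = events + [(1000, 200)]
print(utilization(overloaded), is_schedulable(overloaded))
```

The first workload uses 17/20 of the CPU, so a fourth event with a 1000 ms period could need at most 150 ms before the system stops being schedulable.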

Policy versus Mechanism

- Separate what is allowed to be done from how it is done
  - a process knows which of its child threads are important and need priority
- Scheduling algorithm is parameterized
  - mechanism in the kernel
- Parameters are filled in by user processes
  - policy set by the user process

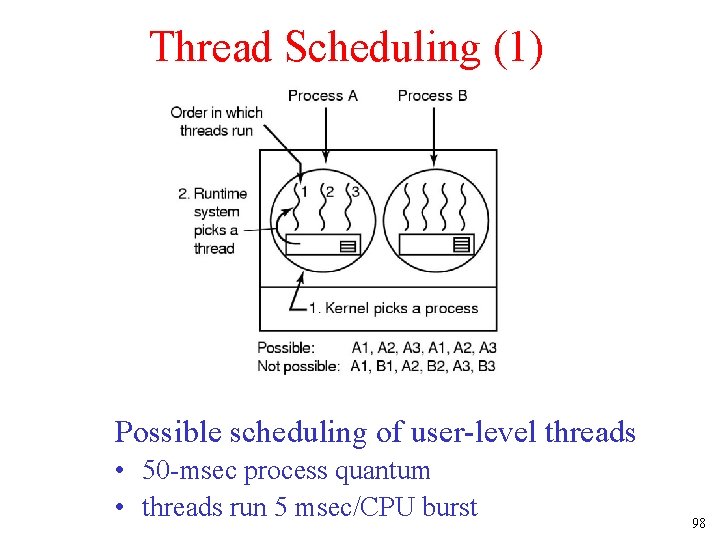

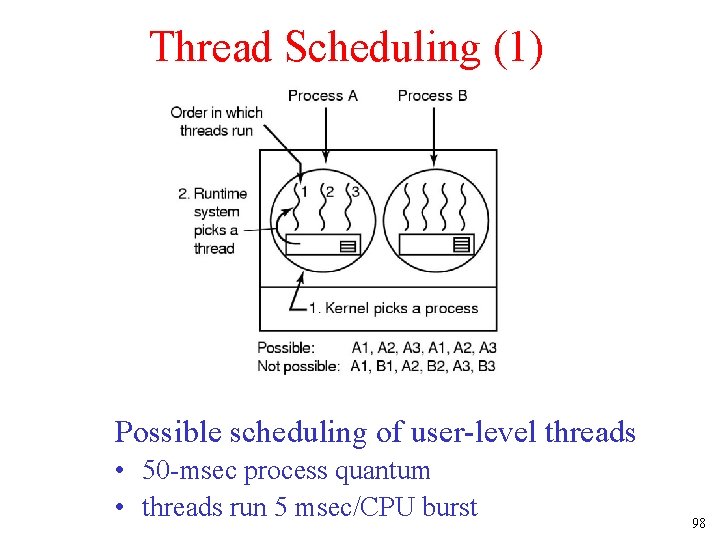

Thread Scheduling (1)

Possible scheduling of user-level threads

- 50-msec process quantum
- threads run 5 msec per CPU burst

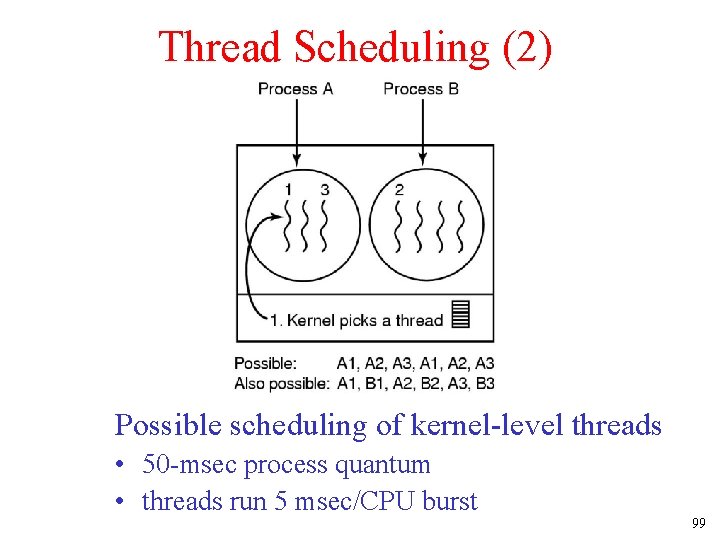

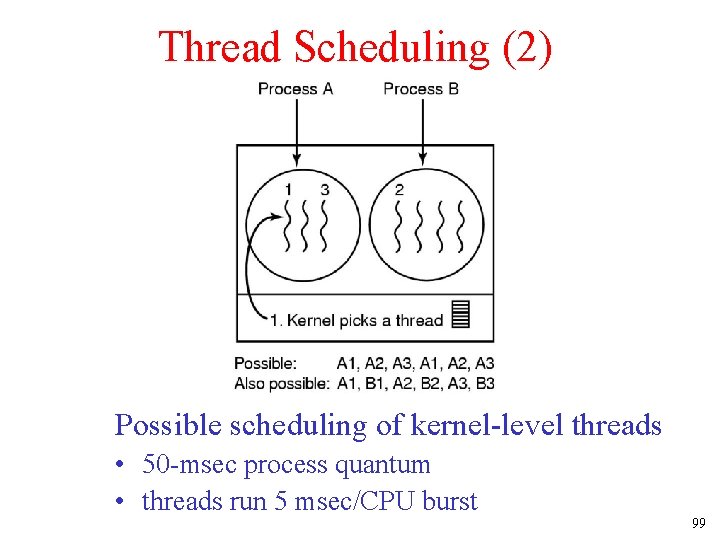

Thread Scheduling (2)

Possible scheduling of kernel-level threads

- 50-msec process quantum
- threads run 5 msec per CPU burst