Chapter 2 Memory Hierarchy Design Introduction Cache performance


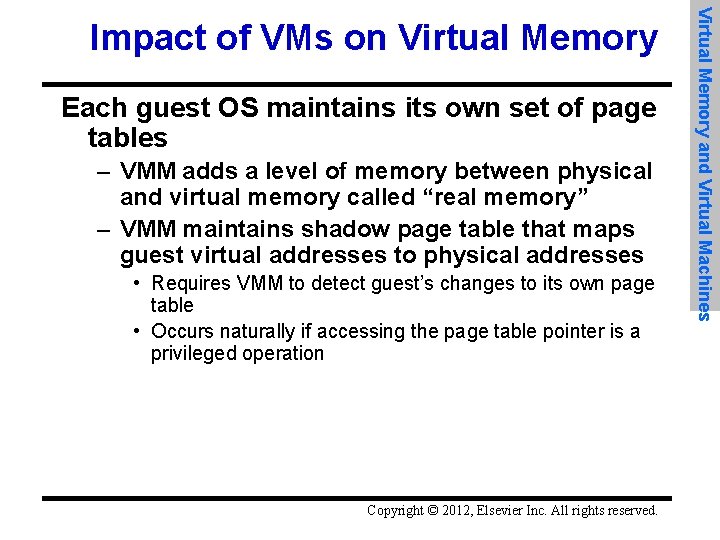

Chapter 2 Memory Hierarchy Design
- Introduction
- Cache performance
- Advanced cache optimizations
- Memory technology and DRAM optimizations
- Virtual machines
- Conclusion

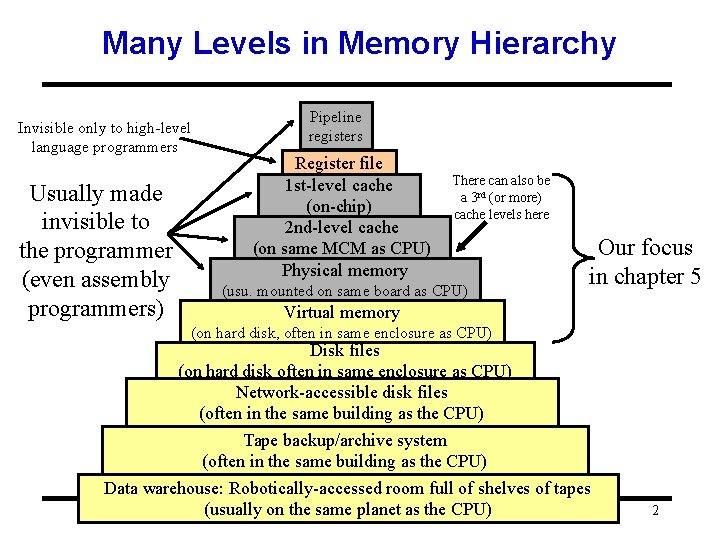

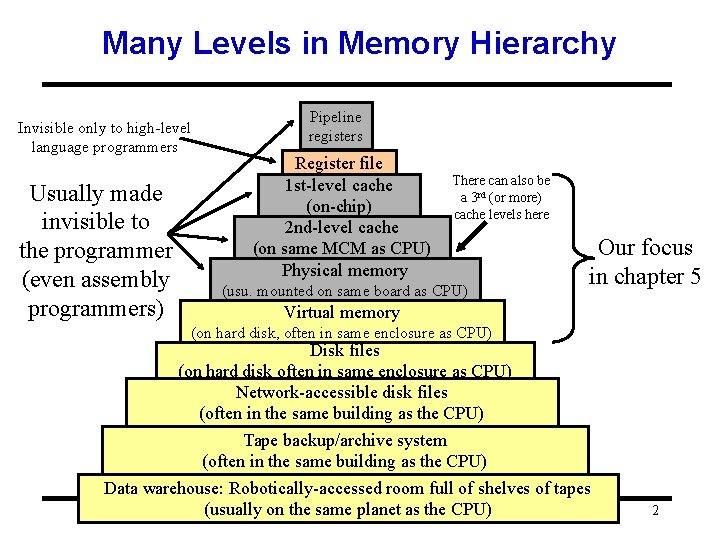

Many Levels in Memory Hierarchy
- Pipeline registers
- Register file
- 1st-level cache (on-chip)
- 2nd-level cache (on the same MCM as the CPU); there can also be 3rd (or more) cache levels here
- Physical memory (usually mounted on the same board as the CPU)
- Virtual memory (on hard disk, often in the same enclosure as the CPU)
- Disk files (on hard disk, often in the same enclosure as the CPU)
- Network-accessible disk files (often in the same building as the CPU)
- Tape backup/archive system (often in the same building as the CPU)
- Data warehouse: robotically accessed room full of shelves of tapes (usually on the same planet as the CPU)

The register file is invisible only to high-level language programmers; the cache levels are usually made invisible to the programmer (even assembly programmers). The cache and memory levels are our focus in this chapter.

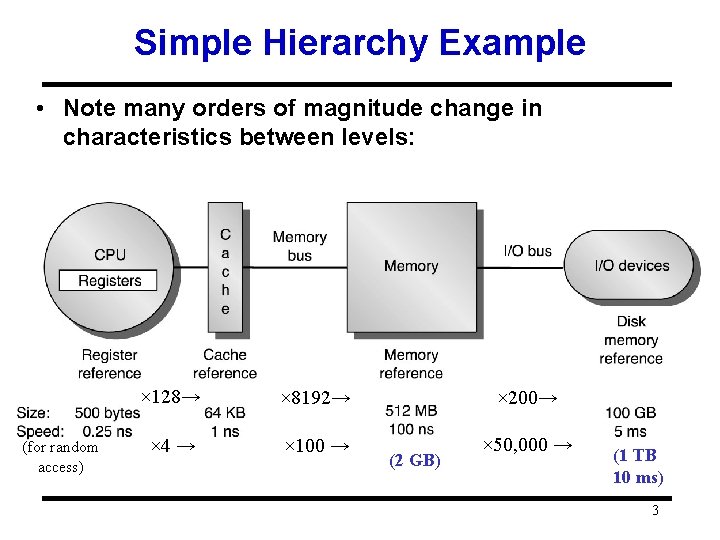

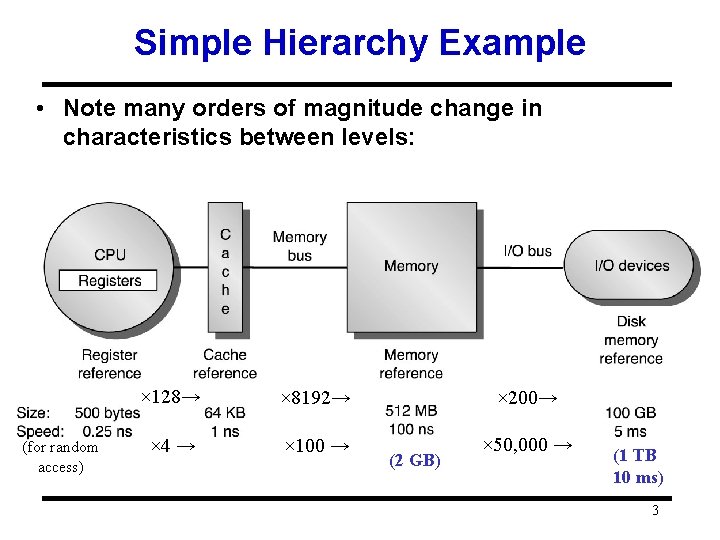

Simple Hierarchy Example
- Note the many orders of magnitude change in characteristics between levels (for random access). Figure: ratios between successive levels of ×128, ×8192, ×200, ×4, ×100, and ×50,000; annotations "2 GB" and "1 TB, 10 ms".

Why More on Memory Hierarchy? Processor-Memory Performance Gap Growing

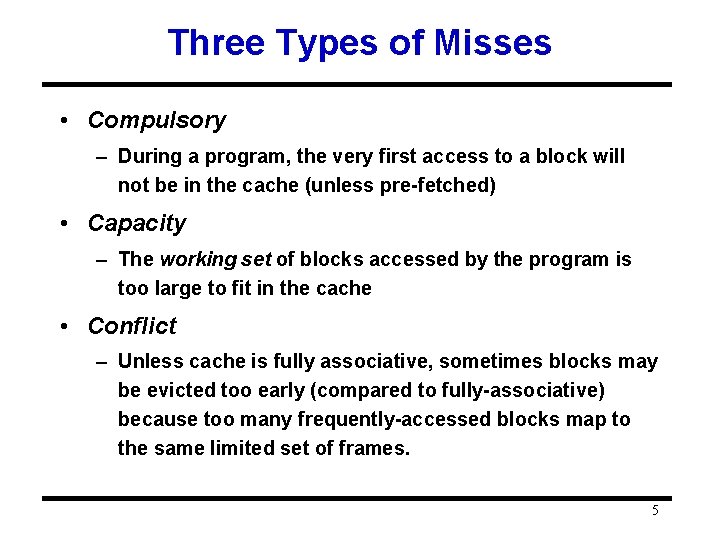

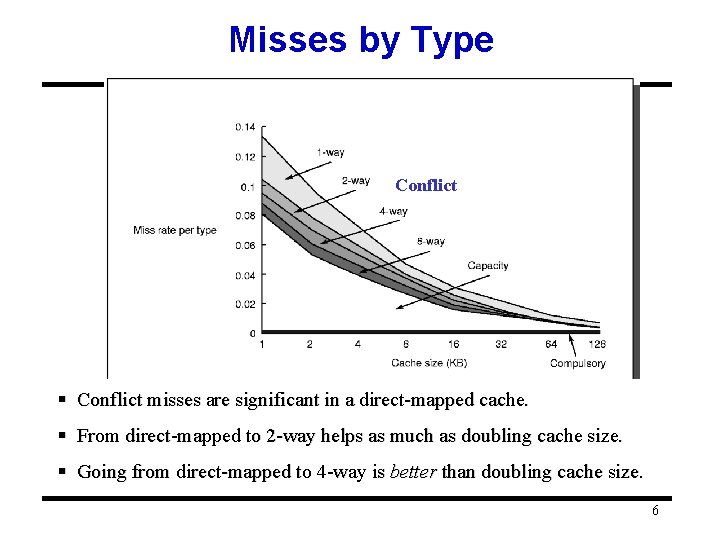

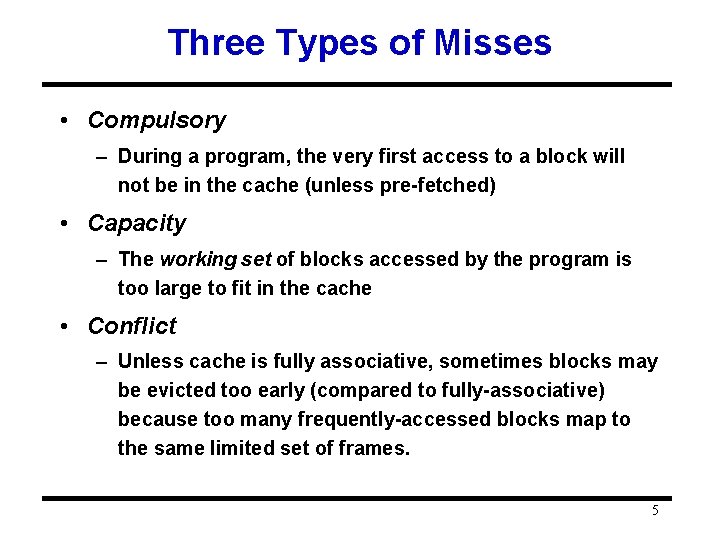

Three Types of Misses
- Compulsory: during a program, the very first access to a block will not be in the cache (unless prefetched).
- Capacity: the working set of blocks accessed by the program is too large to fit in the cache.
- Conflict: unless the cache is fully associative, blocks may be evicted too early (compared to a fully associative cache) because too many frequently accessed blocks map to the same limited set of frames.
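To make the three categories concrete, here is a minimal C sketch of the usual way to classify misses of a direct-mapped cache: a miss is compulsory if the block was never referenced before, capacity if a fully associative LRU cache of the same size would also miss, and conflict otherwise. The cache size (`NBLOCKS`), the trace of block addresses, and the function names are assumptions for illustration only.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical sizes: cache capacity in blocks, max distinct blocks tracked. */
#define NBLOCKS 4
#define MAX_SEEN 1024

typedef struct { long compulsory, capacity, conflict, hits; } MissCounts;

/* Reference fully associative LRU cache of NBLOCKS blocks.
 * Entries start with tag = -1 and time = -1; returns true on a hit. */
static bool fa_access(long fa_tag[], long fa_time[], long block, long now) {
    int victim = 0;
    for (int i = 0; i < NBLOCKS; i++) {
        if (fa_tag[i] == block) { fa_time[i] = now; return true; }
        if (fa_time[i] < fa_time[victim]) victim = i;   /* least recently used */
    }
    fa_tag[victim] = block;
    fa_time[victim] = now;
    return false;
}

/* Classify every miss of a direct-mapped cache with NBLOCKS frames. */
MissCounts classify(const long *trace, size_t n) {
    long dm[NBLOCKS], fa_tag[NBLOCKS], fa_time[NBLOCKS], seen[MAX_SEEN];
    size_t nseen = 0;
    MissCounts c = {0, 0, 0, 0};
    for (int i = 0; i < NBLOCKS; i++) dm[i] = fa_tag[i] = fa_time[i] = -1;

    for (size_t t = 0; t < n; t++) {
        long b = trace[t];
        assert(b >= 0);
        bool fa_hit = fa_access(fa_tag, fa_time, b, (long)t);

        bool first = true;                       /* never referenced before? */
        for (size_t s = 0; s < nseen; s++)
            if (seen[s] == b) { first = false; break; }
        if (first) { assert(nseen < MAX_SEEN); seen[nseen++] = b; }

        long *frame = &dm[b % NBLOCKS];          /* direct-mapped lookup */
        if (*frame == b) { c.hits++; continue; }
        *frame = b;
        if (first)        c.compulsory++;
        else if (!fa_hit) c.capacity++;          /* even full associativity misses */
        else              c.conflict++;          /* only the mapping caused it */
    }
    return c;
}
```

For example, with 4 frames the trace 0, 4, 0, 4 gives 2 compulsory and 2 conflict misses: blocks 0 and 4 collide in the direct-mapped cache, while a fully associative cache of the same size holds both.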

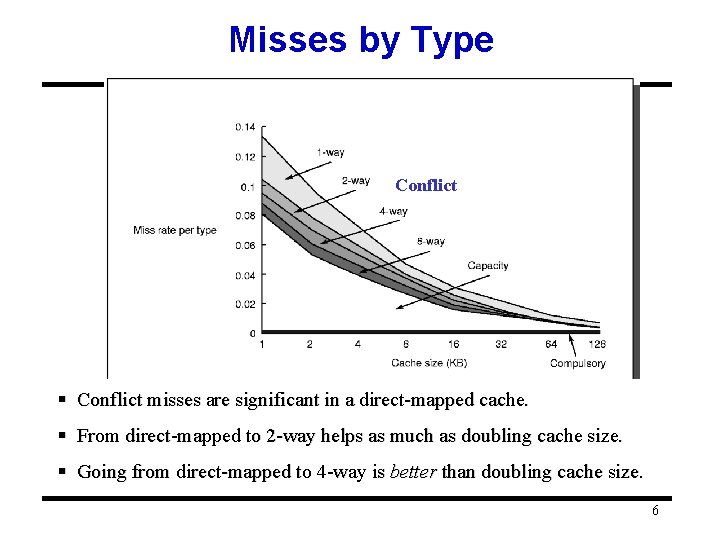

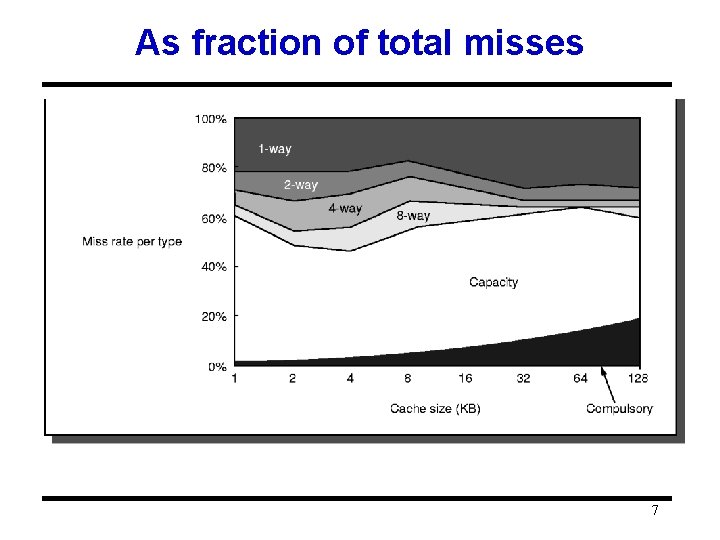

Misses by Type
- Conflict misses are significant in a direct-mapped cache.
- Going from direct-mapped to 2-way helps as much as doubling the cache size.
- Going from direct-mapped to 4-way is better than doubling the cache size.

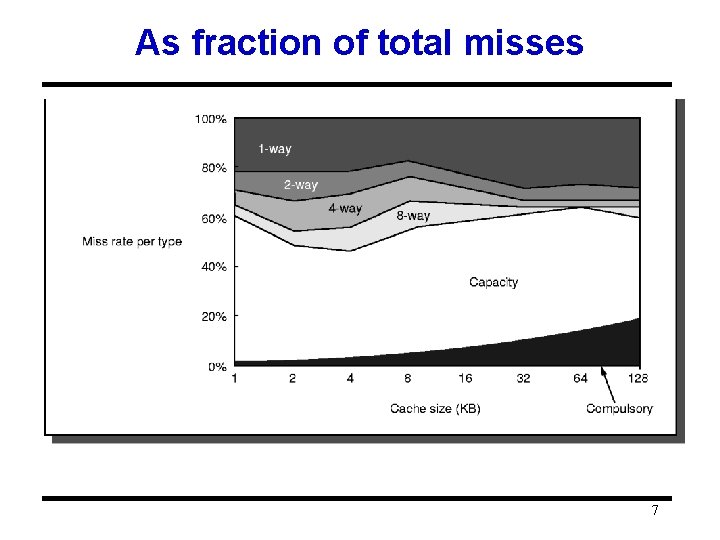

Misses by type, as a fraction of total misses (figure)

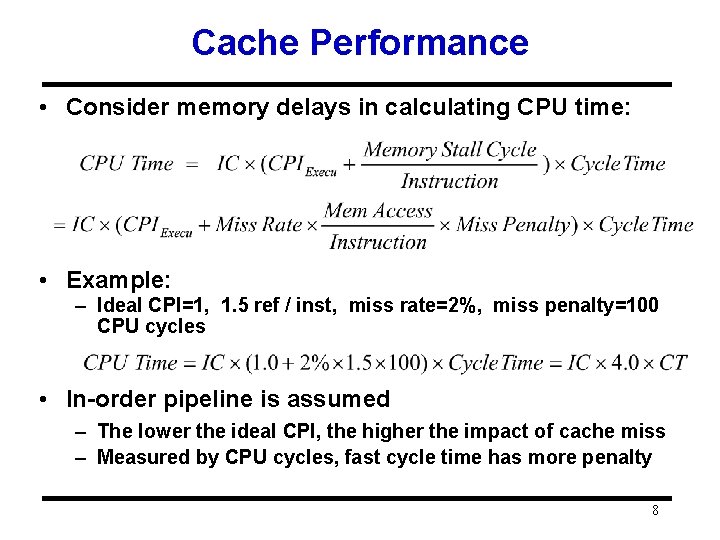

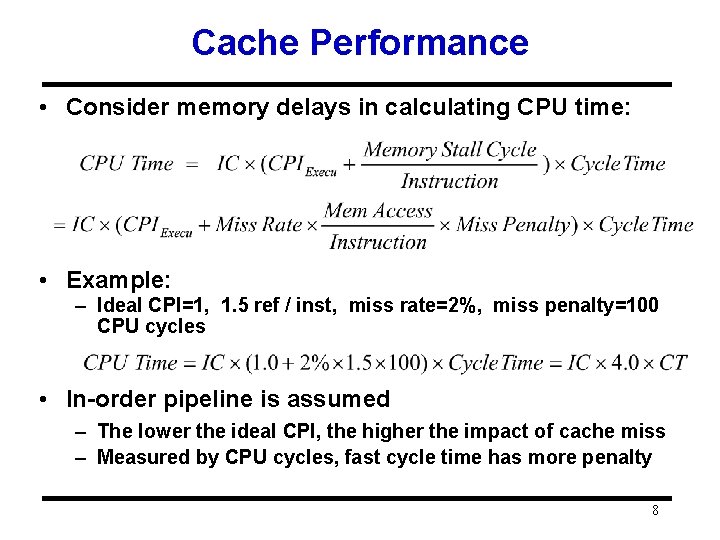

Cache Performance
- Consider memory delays in calculating CPU time:
- Example: ideal CPI = 1, 1.5 references/instruction, miss rate = 2%, miss penalty = 100 CPU cycles
- An in-order pipeline is assumed.
  - The lower the ideal CPI, the larger the relative impact of cache misses.
  - Because the miss penalty is measured in CPU cycles, a faster cycle time means a larger penalty.
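The example's numbers plug into the usual in-order model, CPI = ideal CPI + (references/instruction) × (miss rate) × (miss penalty). This sketch assumes, as the slide does, that the miss penalty is given in CPU cycles; the function name is hypothetical.

```c
#include <assert.h>

/* In-order model: every miss stalls the pipeline for the full penalty.
 * Hit time is assumed to be folded into the ideal CPI. */
double cpi_with_misses(double ideal_cpi, double refs_per_inst,
                       double miss_rate, double miss_penalty_cycles) {
    assert(miss_rate >= 0.0 && miss_rate <= 1.0);
    return ideal_cpi + refs_per_inst * miss_rate * miss_penalty_cycles;
}
```

`cpi_with_misses(1.0, 1.5, 0.02, 100.0)` gives about 4.0: memory stalls add 1.5 × 0.02 × 100 = 3 cycles per instruction, so the CPU runs about 4 times slower than with a perfect cache.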

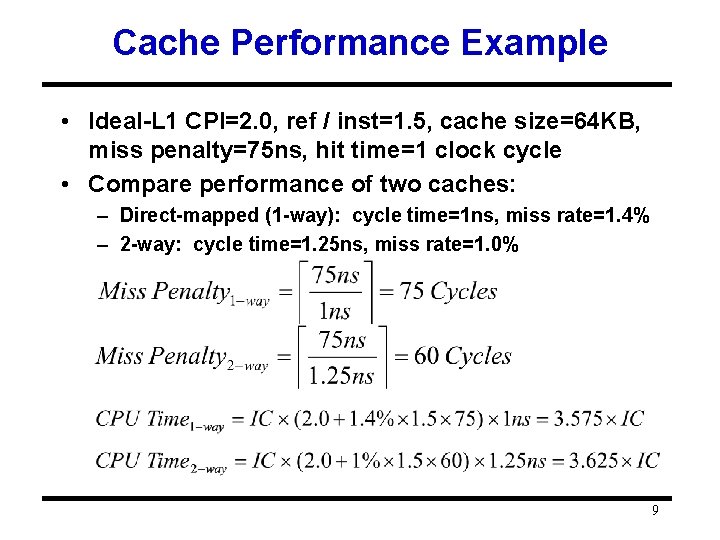

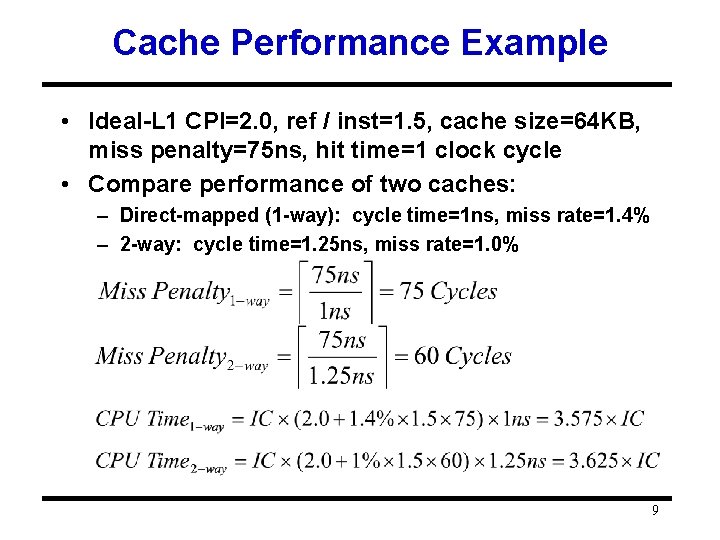

Cache Performance Example
- Ideal-L1 CPI = 2.0, references/instruction = 1.5, cache size = 64 KB, miss penalty = 75 ns, hit time = 1 clock cycle
- Compare the performance of two caches:
  - Direct-mapped (1-way): cycle time = 1 ns, miss rate = 1.4%
  - 2-way: cycle time = 1.25 ns, miss rate = 1.0%
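One way to carry out the comparison is to convert the 75 ns penalty into cycles for each clock, compute CPI, and multiply by the cycle time. This sketch assumes the 1-cycle hit time is already included in the ideal CPI of 2.0 and that all 1.5 references per instruction see the stated miss rate; the function name is illustrative.

```c
#include <assert.h>

/* Time per instruction in ns for an in-order CPU. The miss penalty is a
 * fixed time, so it costs fewer cycles on a slower clock. */
double ns_per_inst(double ideal_cpi, double cycle_ns, double refs_per_inst,
                   double miss_rate, double miss_penalty_ns) {
    assert(cycle_ns > 0.0);
    double penalty_cycles = miss_penalty_ns / cycle_ns;
    double cpi = ideal_cpi + refs_per_inst * miss_rate * penalty_cycles;
    return cpi * cycle_ns;
}
```

Direct-mapped: 2.0 + 1.5 × 1.4% × 75 = 3.575 cycles × 1 ns = 3.575 ns per instruction. 2-way: 2.0 + 1.5 × 1.0% × 60 = 2.9 cycles × 1.25 ns = 3.625 ns. Under these assumptions the direct-mapped cache is slightly faster, because the longer cycle slows every instruction, not just the misses.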

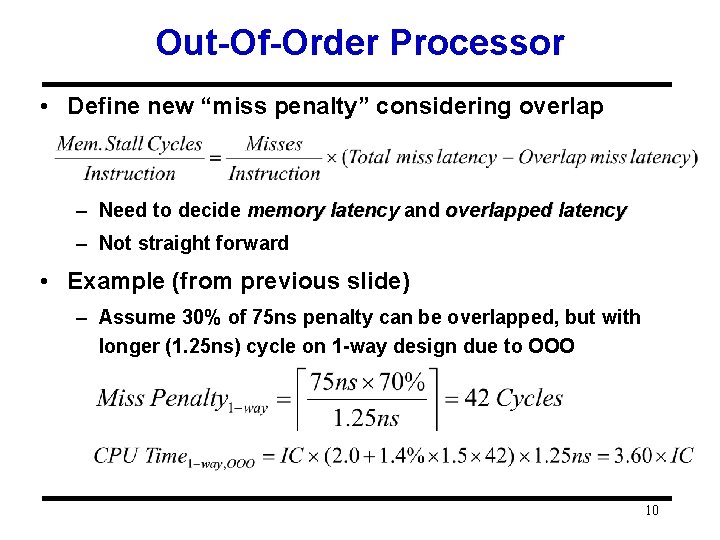

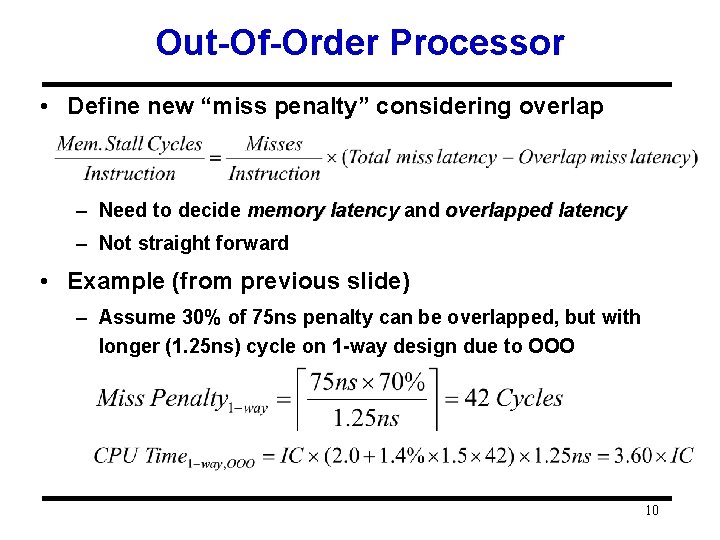

Out-Of-Order Processor
- Define a new "miss penalty" that accounts for overlap.
  - Need to determine both the memory latency and the overlapped latency; this is not straightforward.
- Example (from the previous slide): assume 30% of the 75 ns penalty can be overlapped, but the 1-way design now has the longer (1.25 ns) cycle due to OOO.
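A simple way to model the overlap is to remove the hidden fraction from the miss penalty and keep the rest of the in-order model. This is a sketch under that assumption (a fixed 30% of every miss is hidden, hit time folded into the ideal CPI), not a general out-of-order model; the function name is hypothetical.

```c
#include <assert.h>

/* Only the non-overlapped part of the miss latency stalls the CPU.
 * overlap_fraction is the share of the penalty hidden behind other work. */
double ns_per_inst_ooo(double ideal_cpi, double cycle_ns, double refs_per_inst,
                       double miss_rate, double miss_penalty_ns,
                       double overlap_fraction) {
    assert(overlap_fraction >= 0.0 && overlap_fraction <= 1.0);
    double exposed_ns = miss_penalty_ns * (1.0 - overlap_fraction);
    return ideal_cpi * cycle_ns + refs_per_inst * miss_rate * exposed_ns;
}
```

For the direct-mapped design at 1.25 ns: 2.0 × 1.25 + 1.5 × 1.4% × 52.5 = 3.6025 ns per instruction, slightly less than the in-order 2-way result of 2.0 × 1.25 + 1.5 × 1.0% × 75 = 3.625 ns.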

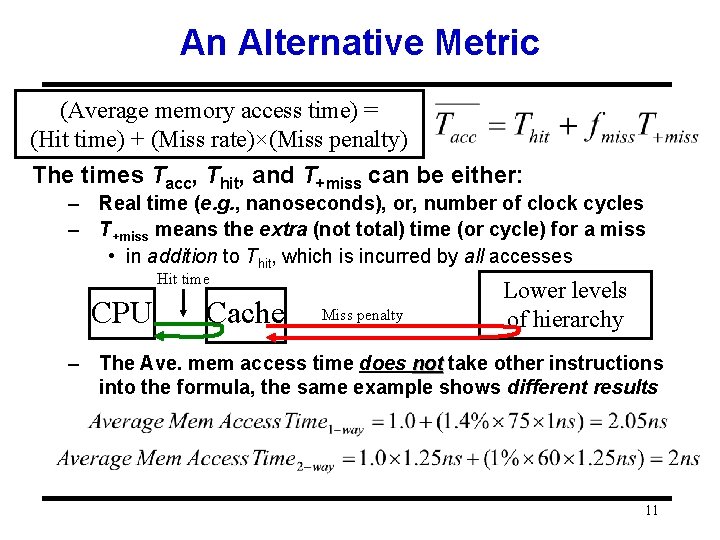

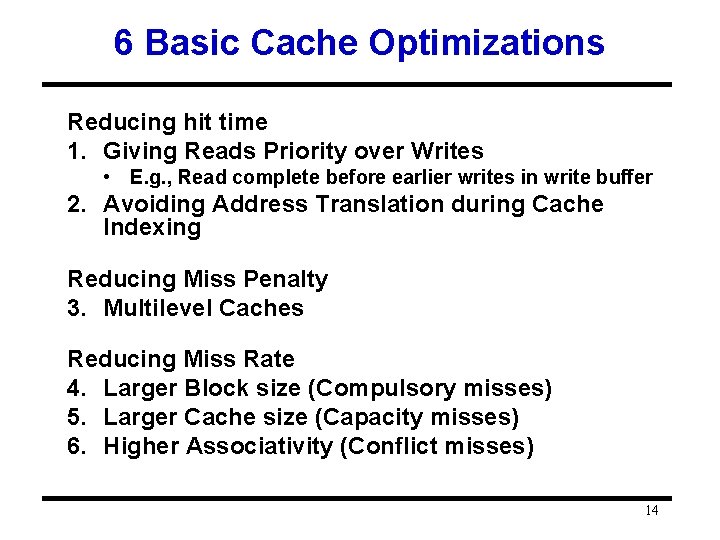

An Alternative Metric
(Average memory access time) = (Hit time) + (Miss rate) × (Miss penalty), i.e., $T_{acc} = T_{hit} + (\text{Miss rate}) \times T_{+miss}$
- The times $T_{acc}$, $T_{hit}$, and $T_{+miss}$ can be either:
  - real time (e.g., nanoseconds) or a number of clock cycles
  - $T_{+miss}$ means the extra (not total) time (or cycles) for a miss, in addition to $T_{hit}$, which is incurred by all accesses
- Figure: CPU → Cache (hit time) → Lower levels of hierarchy (miss penalty)
- The average memory access time does not take other instructions into account, so the same example shows different results.
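Applying the formula to the two caches of the earlier example (hit time = one cycle, i.e., 1 ns and 1.25 ns; $T_{+miss}$ = 75 ns) shows how the two metrics can disagree. The function name is illustrative.

```c
#include <assert.h>

/* T_acc = T_hit + miss_rate * T_+miss, all times in the same unit (ns here). */
double amat(double hit_time, double miss_rate, double extra_miss_time) {
    assert(miss_rate >= 0.0 && miss_rate <= 1.0);
    return hit_time + miss_rate * extra_miss_time;
}
```

Direct-mapped: 1 + 1.4% × 75 = 2.05 ns; 2-way: 1.25 + 1.0% × 75 = 2.00 ns. Average memory access time favors the 2-way cache, whereas CPU time per instruction (3.575 ns vs. 3.625 ns with an ideal CPI of 2.0 and 1.5 references per instruction) favors direct-mapped, because only CPU time charges the longer cycle to non-memory work.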

Another View (Multi-cycle Cache)
- Instead of increasing the cycle time, the 2-way cache can use a two-cycle cache access; then the CPU time becomes:
- In reality, not every 2nd cycle of a 2-cycle load will stall dependent instructions; say only 20% do. (Note: the formula above assumes all of them do.)
- Note that the cycle time always impacts all instructions.

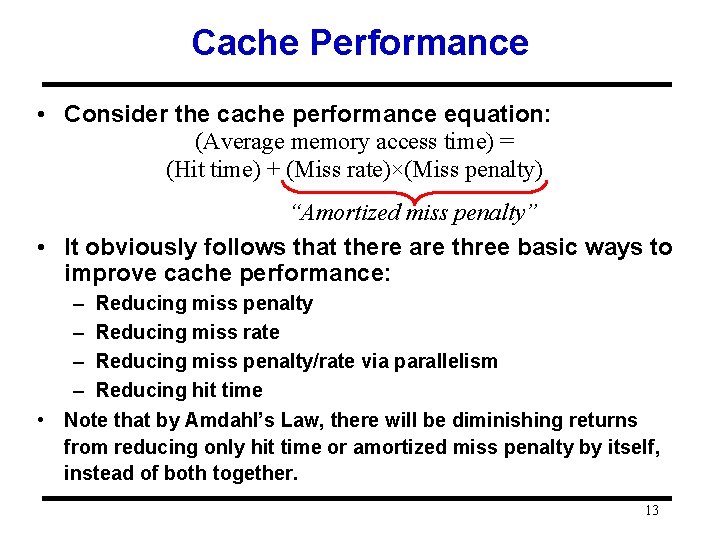

Cache Performance
- Consider the cache performance equation:
  (Average memory access time) = (Hit time) + (Miss rate) × (Miss penalty), where (Miss rate) × (Miss penalty) is the "amortized miss penalty"
- It follows that there are four basic ways to improve cache performance:
  - Reducing miss penalty
  - Reducing miss rate
  - Reducing miss penalty/rate via parallelism
  - Reducing hit time
- Note that by Amdahl's Law, reducing only the hit time or only the amortized miss penalty gives diminishing returns, compared with reducing both together.

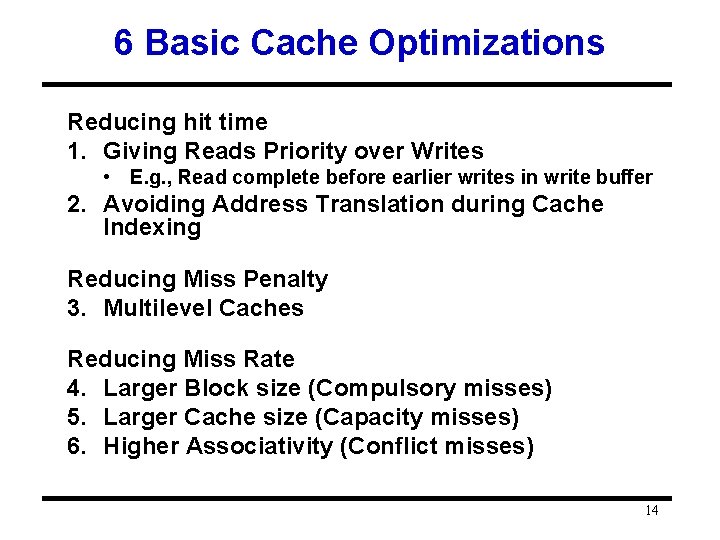

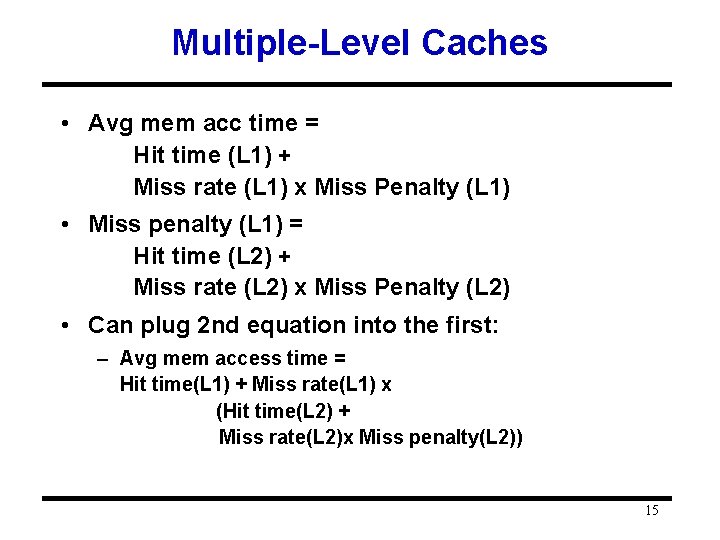

6 Basic Cache Optimizations
- Reducing hit time
  - Avoiding address translation during cache indexing
- Reducing miss penalty
  - Giving reads priority over writes (e.g., a read completes before earlier writes in the write buffer)
  - Multilevel caches
- Reducing miss rate
  - Larger block size (compulsory misses)
  - Larger cache size (capacity misses)
  - Higher associativity (conflict misses)

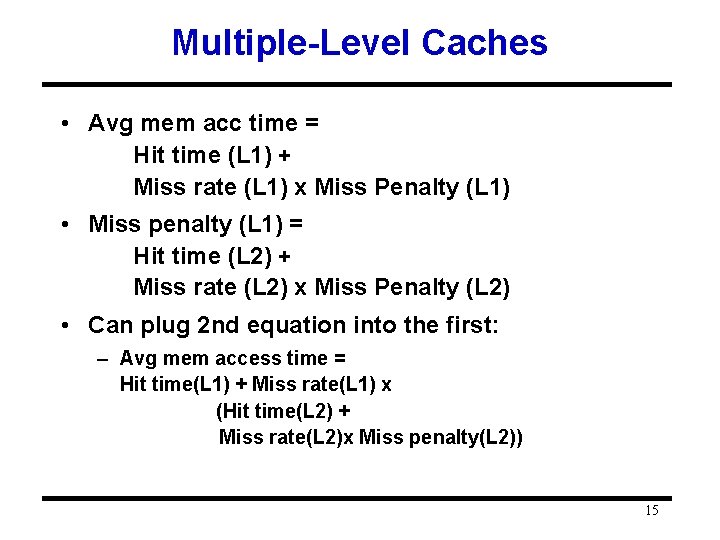

Multiple-Level Caches
- Avg mem access time = Hit time(L1) + Miss rate(L1) × Miss penalty(L1)
- Miss penalty(L1) = Hit time(L2) + Miss rate(L2) × Miss penalty(L2)
- Plugging the 2nd equation into the first:
  - Avg mem access time = Hit time(L1) + Miss rate(L1) × (Hit time(L2) + Miss rate(L2) × Miss penalty(L2))
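The combined formula translates directly into code. The numbers in the usage note are hypothetical, chosen only to show the arithmetic, and the function name is illustrative.

```c
#include <assert.h>

/* AMAT = hit_L1 + miss_L1 * (hit_L2 + local_miss_L2 * penalty_L2).
 * All times in clock cycles; miss_rate_l2 is the local L2 miss rate. */
double amat_two_level(double hit_l1, double miss_rate_l1,
                      double hit_l2, double local_miss_rate_l2,
                      double miss_penalty_l2) {
    assert(miss_rate_l1 >= 0.0 && miss_rate_l1 <= 1.0);
    assert(local_miss_rate_l2 >= 0.0 && local_miss_rate_l2 <= 1.0);
    double miss_penalty_l1 = hit_l2 + local_miss_rate_l2 * miss_penalty_l2;
    return hit_l1 + miss_rate_l1 * miss_penalty_l1;
}
```

With a 1-cycle L1 hit, a 4% L1 miss rate, a 10-cycle L2 hit, a 50% local L2 miss rate, and a 200-cycle L2 miss penalty: 1 + 0.04 × (10 + 0.5 × 200) = 5.4 cycles.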

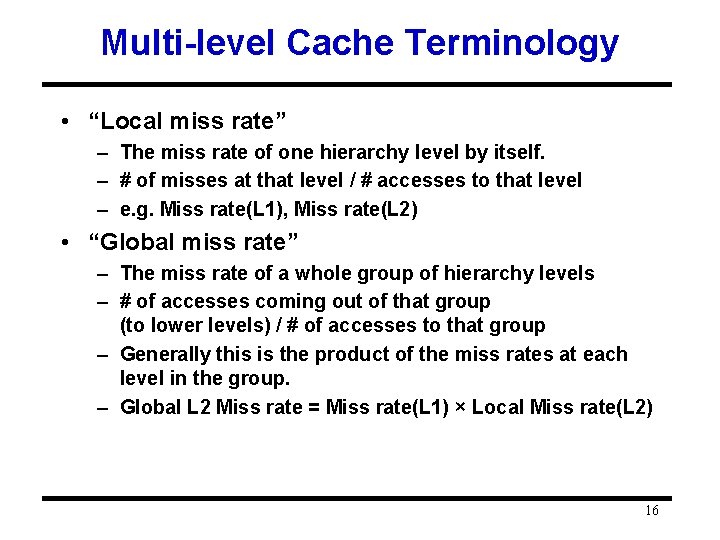

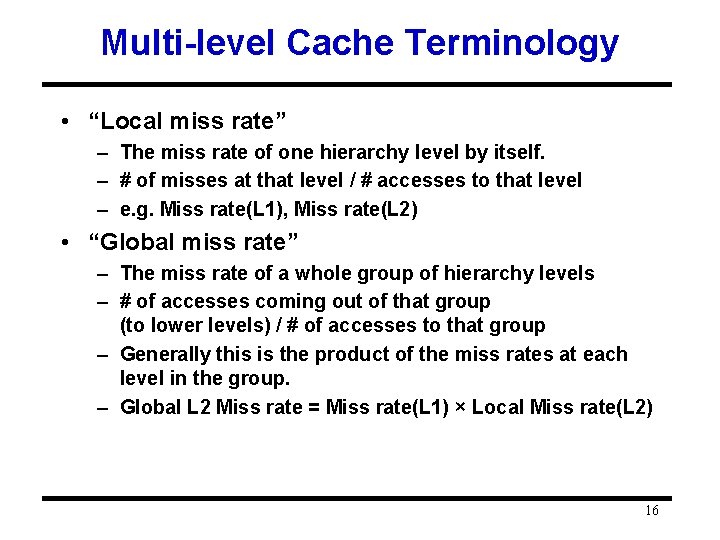

Multi-level Cache Terminology
- "Local miss rate"
  - The miss rate of one hierarchy level by itself.
  - Number of misses at that level / number of accesses to that level
  - e.g., Miss rate(L1), Miss rate(L2)
- "Global miss rate"
  - The miss rate of a whole group of hierarchy levels.
  - Number of accesses coming out of that group (to lower levels) / number of accesses to that group
  - Generally this is the product of the miss rates at each level in the group.
  - Global L2 miss rate = Miss rate(L1) × Local miss rate(L2)
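Computing both rates from raw event counts makes the distinction concrete. The sketch assumes that every L1 miss becomes exactly one L2 access (ignoring prefetches and write-backs); the counts in the usage note are made up, and the type and function names are hypothetical.

```c
#include <assert.h>

typedef struct { double local_l1, local_l2, global_l2; } MissRates;

MissRates miss_rates(long cpu_refs, long l1_misses, long l2_misses) {
    assert(cpu_refs > 0 && l1_misses > 0 && l2_misses <= l1_misses);
    MissRates r;
    r.local_l1 = (double)l1_misses / cpu_refs;   /* L1 accesses = CPU refs */
    r.local_l2 = (double)l2_misses / l1_misses;  /* L2 accesses = L1 misses */
    r.global_l2 = (double)l2_misses / cpu_refs;  /* = local_l1 * local_l2 */
    return r;
}
```

For 1000 CPU references, 40 L1 misses, and 20 L2 misses: local L1 = 4%, local L2 = 20/40 = 50%, global L2 = 20/1000 = 2% = 4% × 50%. A 50% local L2 miss rate looks bad in isolation, yet only 2% of all references reach memory.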

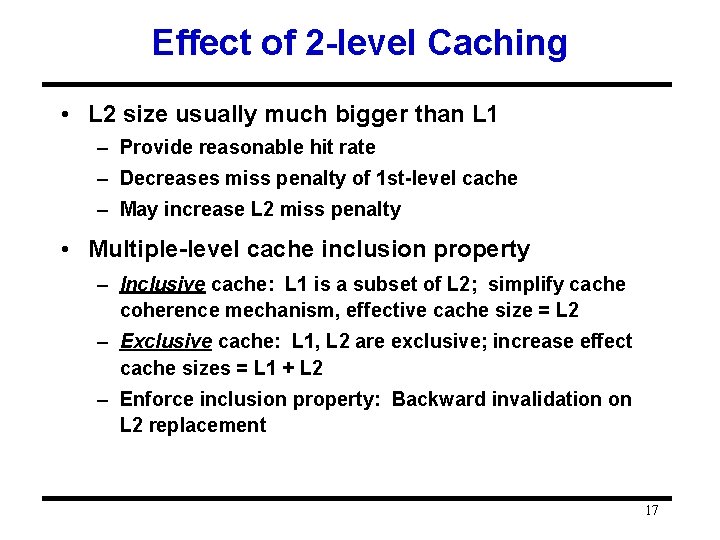

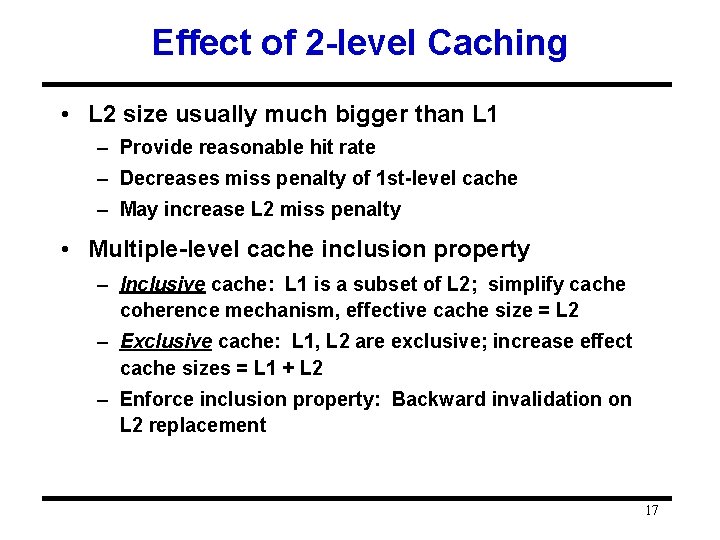

Effect of 2-level Caching
- L2 size is usually much bigger than L1
  - Provides a reasonable hit rate
  - Decreases the miss penalty of the 1st-level cache
  - May increase the L2 miss penalty
- Multiple-level cache inclusion property
  - Inclusive cache: L1 is a subset of L2; simplifies the cache coherence mechanism; effective cache size = L2
  - Exclusive cache: L1 and L2 hold disjoint blocks; increases the effective cache size to L1 + L2
  - Enforcing the inclusion property: backward invalidation on L2 replacement

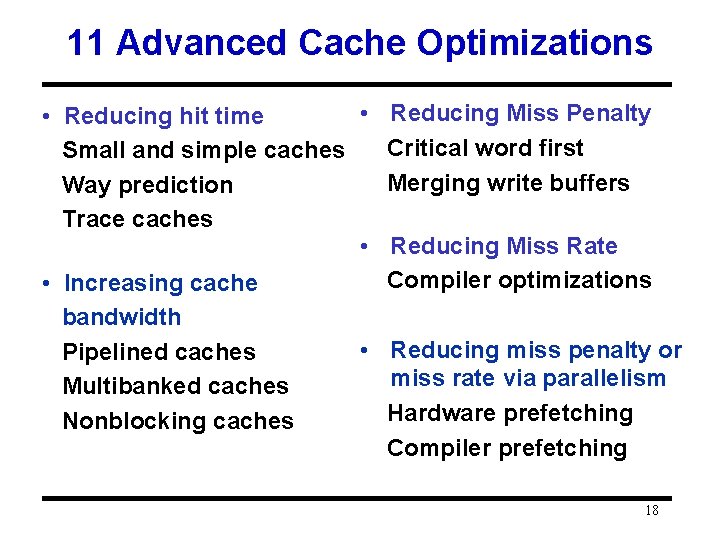

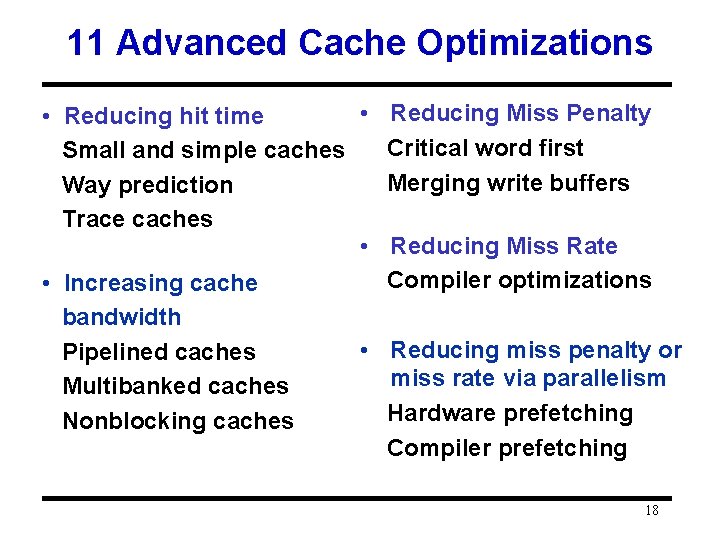

11 Advanced Cache Optimizations
- Reducing hit time
  - Small and simple caches
  - Way prediction
  - Trace caches
- Increasing cache bandwidth
  - Pipelined caches
  - Multibanked caches
  - Nonblocking caches
- Reducing miss penalty
  - Critical word first
  - Merging write buffers
- Reducing miss rate
  - Compiler optimizations
- Reducing miss penalty or miss rate via parallelism
  - Hardware prefetching
  - Compiler prefetching

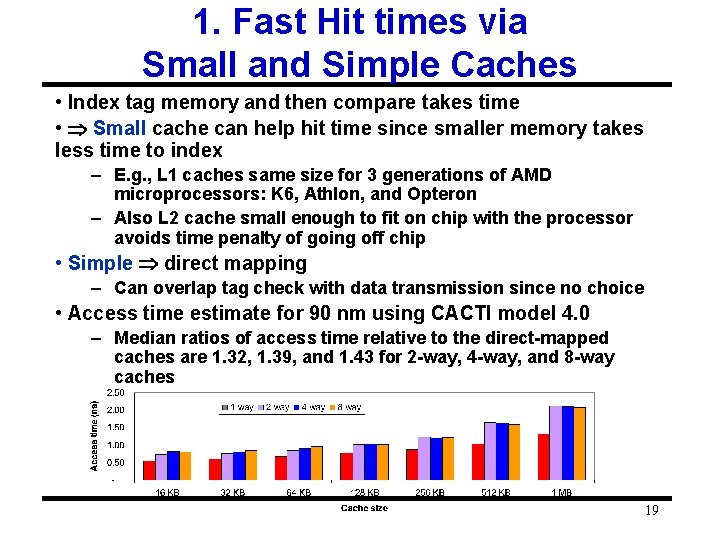

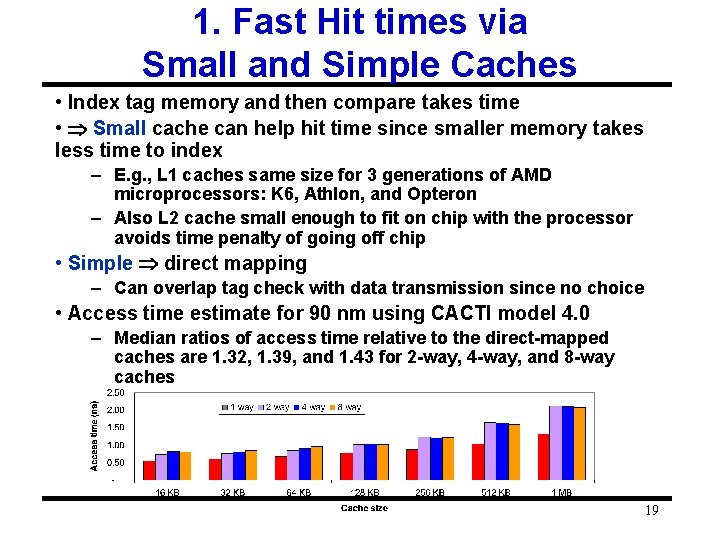

1. Fast Hit Times via Small and Simple Caches
- Indexing the tag memory and then comparing takes time.
- A small cache can help hit time, since a smaller memory takes less time to index.
  - E.g., L1 caches stayed the same size for 3 generations of AMD microprocessors: K6, Athlon, and Opteron.
  - Also, an L2 cache small enough to fit on chip with the processor avoids the time penalty of going off chip.
- Simple direct mapping
  - Can overlap the tag check with data transmission, since there is no choice of block.
- Access time estimates for 90 nm using the CACTI 4.0 model
  - Median ratios of access time relative to direct-mapped caches are 1.32, 1.39, and 1.43 for 2-way, 4-way, and 8-way caches.

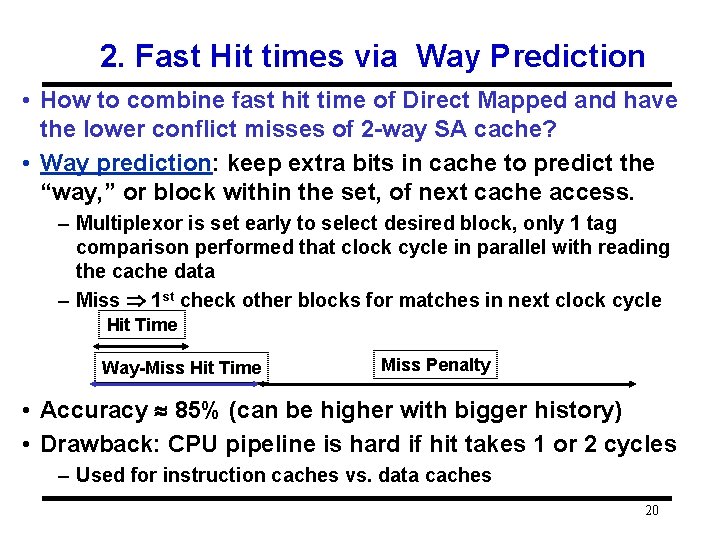

2. Fast Hit Times via Way Prediction
- How can we combine the fast hit time of a direct-mapped cache with the lower conflict misses of a 2-way set-associative cache?
- Way prediction: keep extra bits in the cache to predict the "way," or block within the set, of the next cache access.
  - The multiplexor is set early to select the desired block; only 1 tag comparison is performed that clock cycle, in parallel with reading the cache data.
  - On a way-miss, check the other blocks for matches in the next clock cycle.
- Figure (timing): hit time; way-miss hit time; miss penalty.
- Prediction accuracy is about 85% (can be higher with a bigger history).
- Drawback: the CPU pipeline is harder to design if a hit can take 1 or 2 cycles.
  - Used more for instruction caches than for data caches.
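The lookup sequence can be sketched for a small 2-way set-associative cache in C. The set count, the per-set `pred` field, and the policy of predicting the way used most recently are assumptions for illustration; real predictors may keep more history.

```c
#include <assert.h>

#define SETS 4   /* hypothetical number of sets */

typedef struct {
    unsigned tag[2];
    int valid[2];
    int pred;   /* way predicted for the next access to this set */
    int lru;    /* way to replace on a miss */
} Set;

typedef enum { FAST_HIT, WAY_MISS_HIT, MISS } Outcome;

Outcome cache_access(Set sets[SETS], unsigned block) {
    Set *s = &sets[block % SETS];
    unsigned tag = block / SETS;
    int p = s->pred, o = 1 - p;
    assert(p == 0 || p == 1);

    if (s->valid[p] && s->tag[p] == tag) {   /* 1 tag compare: 1-cycle hit */
        s->lru = o;
        return FAST_HIT;
    }
    if (s->valid[o] && s->tag[o] == tag) {   /* found in the next cycle */
        s->pred = o;                         /* retrain the prediction */
        s->lru = p;
        return WAY_MISS_HIT;
    }
    int v = s->lru;                          /* true miss: fill the LRU way */
    s->tag[v] = tag;
    s->valid[v] = 1;
    s->pred = v;
    s->lru = 1 - v;
    return MISS;
}
```

Accessing blocks 0, 4, 0, 0 (all in set 0) returns MISS, MISS, WAY_MISS_HIT, FAST_HIT: the third access finds block 0 only in the second cycle and retrains the prediction, so the fourth hits in one cycle.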

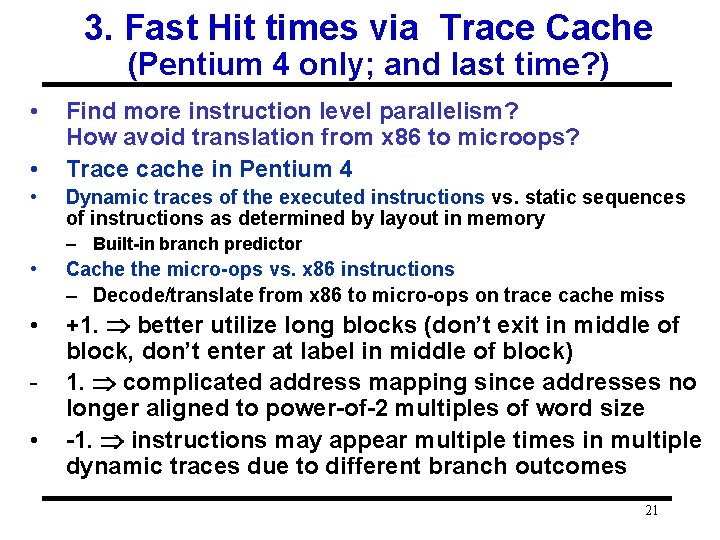

3. Fast Hit Times via Trace Cache (Pentium 4 only; and the last time?)
- Find more instruction-level parallelism? How to avoid translation from x86 to micro-ops?
- Trace cache in Pentium 4:
  - Dynamic traces of the executed instructions vs. static sequences of instructions as determined by layout in memory
    - Built-in branch predictor
  - Cache the micro-ops vs. x86 instructions
    - Decode/translate from x86 to micro-ops on a trace cache miss
- +1: better utilizes long blocks (don't exit in the middle of a block, don't enter at a label in the middle of a block)
- -1: complicated address mapping, since addresses are no longer aligned to power-of-2 multiples of the word size
- -1: instructions may appear multiple times in multiple dynamic traces due to different branch outcomes

4: Increasing Cache Bandwidth by Pipelining
- Pipeline cache access to maintain bandwidth, at the cost of higher latency.
- Instruction cache access pipeline stages:
  - 1: Pentium
  - 2: Pentium Pro through Pentium III
  - 4: Pentium 4
- Costs:
  - greater penalty on mispredicted branches
  - more clock cycles between the issue of the load and the use of the data

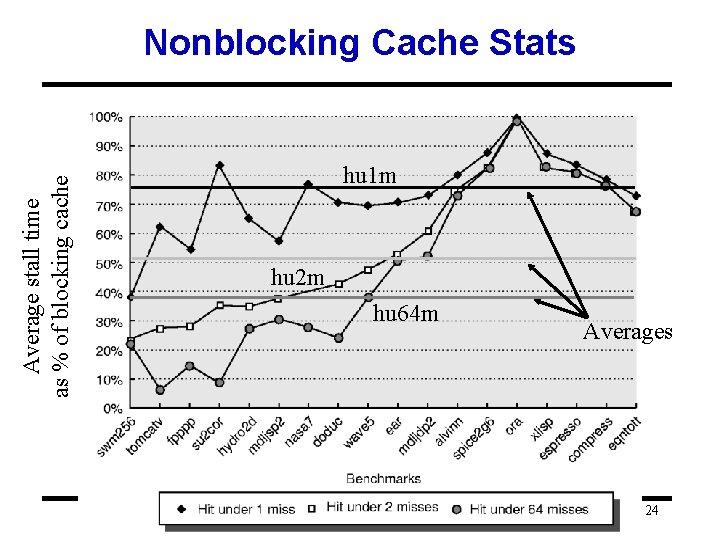

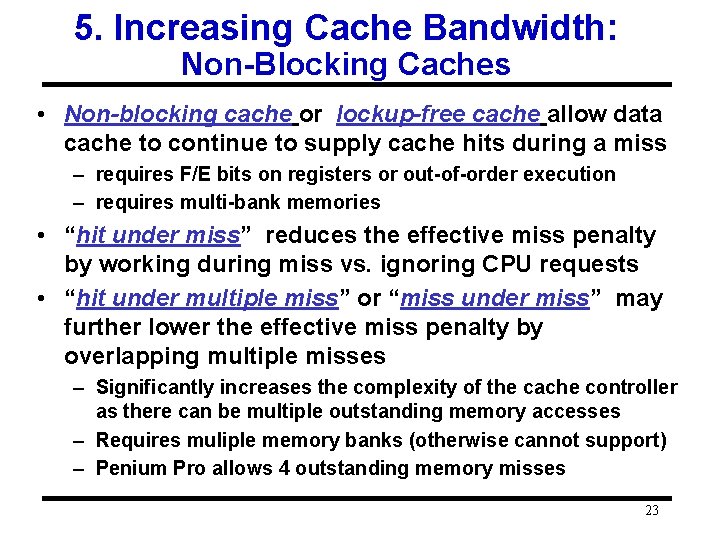

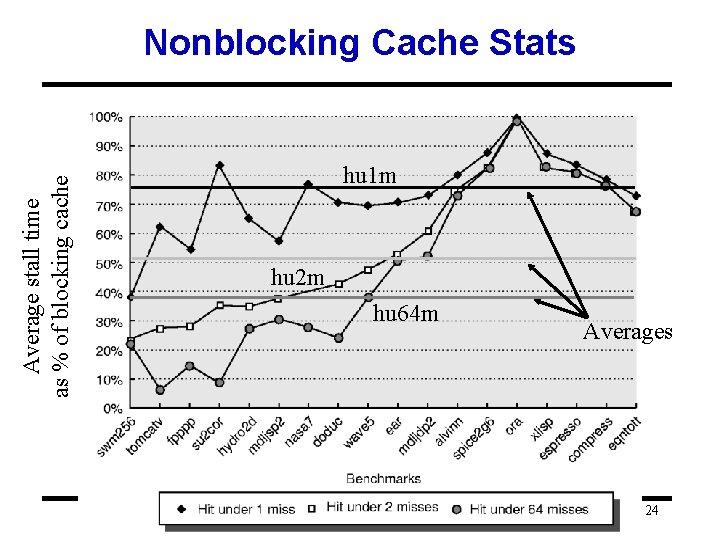

5. Increasing Cache Bandwidth: Non-Blocking Caches
- A non-blocking (lockup-free) cache allows the data cache to continue to supply cache hits during a miss.
  - Requires full/empty (F/E) bits on registers or out-of-order execution
  - Requires multi-bank memories
- "Hit under miss" reduces the effective miss penalty by working during a miss instead of ignoring CPU requests.
- "Hit under multiple miss" or "miss under miss" may further lower the effective miss penalty by overlapping multiple misses.
  - Significantly increases the complexity of the cache controller, as there can be multiple outstanding memory accesses
  - Requires multiple memory banks (otherwise it cannot be supported)
  - The Pentium Pro allows 4 outstanding memory misses.
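Tracking several outstanding misses is what makes "miss under miss" complex. The sketch below keeps a small table of blocks being fetched; MSHR (miss status holding register) is the common name for such entries but does not appear in the slides, and the table size and function names are hypothetical. Hits are served normally and never touch the table.

```c
#include <assert.h>
#include <stdbool.h>

#define MAX_OUTSTANDING 4   /* mirrors the Pentium Pro's 4 outstanding misses */

typedef struct {
    long block[MAX_OUTSTANDING];   /* block address being fetched */
    bool busy[MAX_OUTSTANDING];    /* entry in use */
} MissTable;

typedef enum { NEW_MISS, MERGED_MISS, STALL } MissResult;

/* Called on a cache miss to `block`. */
MissResult record_miss(MissTable *t, long block) {
    int free_slot = -1;
    for (int i = 0; i < MAX_OUTSTANDING; i++) {
        if (t->busy[i] && t->block[i] == block)
            return MERGED_MISS;            /* block already being fetched */
        if (!t->busy[i] && free_slot < 0)
            free_slot = i;
    }
    if (free_slot < 0)
        return STALL;                      /* too many outstanding misses */
    t->busy[free_slot] = true;
    t->block[free_slot] = block;
    return NEW_MISS;
}

/* Called when memory returns `block`: frees its entry. */
void miss_completed(MissTable *t, long block) {
    for (int i = 0; i < MAX_OUTSTANDING; i++)
        if (t->busy[i] && t->block[i] == block) { t->busy[i] = false; return; }
    assert(!"completion for a block that was not outstanding");
}
```

A second miss to a block already being fetched merges with the existing entry; a fifth distinct miss stalls until `miss_completed` frees an entry.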

Nonblocking Cache Stats (figure): average stall time as a percentage of a blocking cache, for hit under 1 miss (hu1m), hit under 2 misses (hu2m), and hit under 64 misses (hu64m), with averages

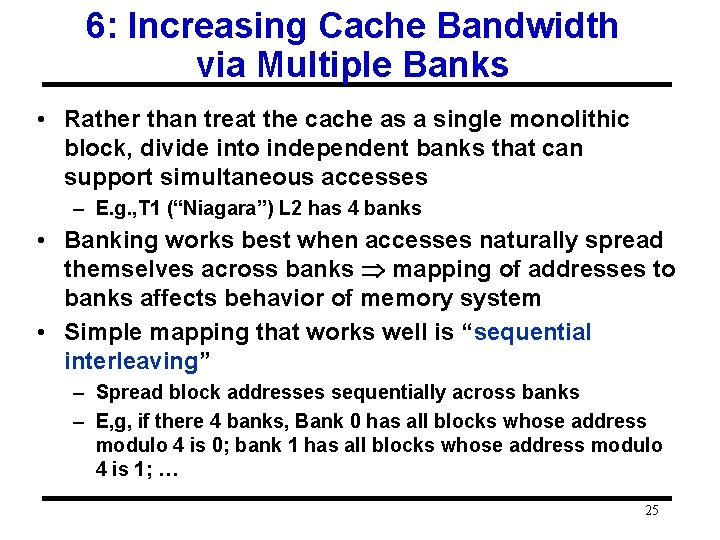

6: Increasing Cache Bandwidth via Multiple Banks
- Rather than treating the cache as a single monolithic block, divide it into independent banks that can support simultaneous accesses.
  - E.g., the T1 ("Niagara") L2 has 4 banks.
- Banking works best when accesses naturally spread themselves across banks, so the mapping of addresses to banks affects the behavior of the memory system.
- A simple mapping that works well is "sequential interleaving":
  - Spread block addresses sequentially across banks.
  - E.g., with 4 banks, bank 0 has all blocks whose address modulo 4 is 0, bank 1 has all blocks whose address modulo 4 is 1, and so on.
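Sequential interleaving is a one-line computation once the byte address is converted to a block address. The block size and bank count are parameters of this sketch, not values from the slides, and the function name is illustrative.

```c
#include <assert.h>

/* Sequential interleaving: consecutive block addresses go to consecutive banks. */
unsigned bank_of(unsigned long byte_addr, unsigned block_bytes, unsigned nbanks) {
    assert(block_bytes > 0 && nbanks > 0);
    unsigned long block_addr = byte_addr / block_bytes;
    return (unsigned)(block_addr % nbanks);
}
```

With 64-byte blocks and 4 banks, byte address 330 lies in block 5, which maps to bank 1; blocks 0 to 3 occupy banks 0 to 3, and block 4 wraps back to bank 0.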

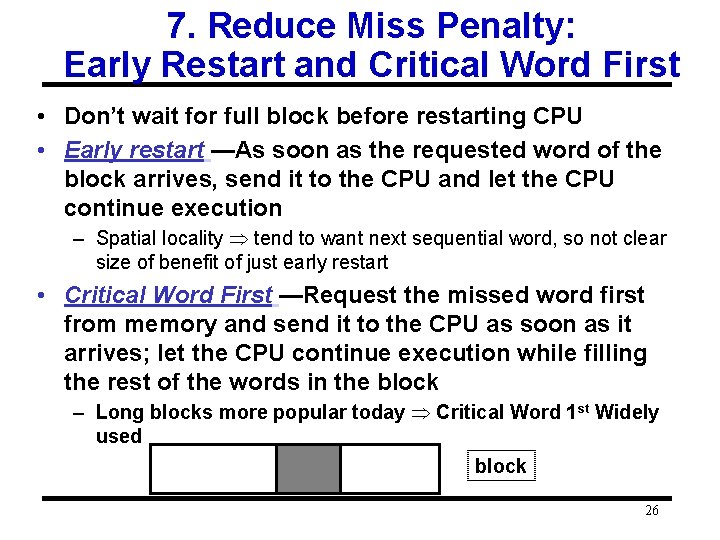

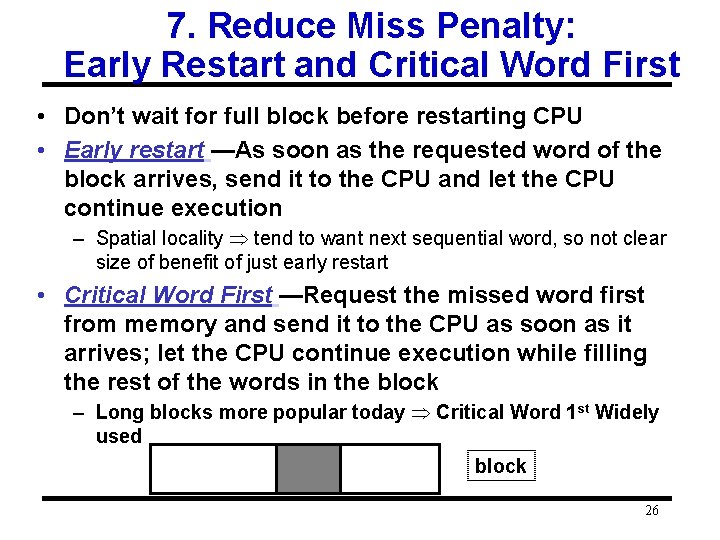

7. Reduce Miss Penalty: Early Restart and Critical Word First
- Don't wait for the full block before restarting the CPU.
- Early restart: as soon as the requested word of the block arrives, send it to the CPU and let the CPU continue execution.
  - Because of spatial locality, the CPU tends to want the next sequential word, so the benefit of early restart alone is not clear.
- Critical word first: request the missed word first from memory and send it to the CPU as soon as it arrives; let the CPU continue execution while filling the rest of the words in the block.
  - Long blocks are more popular today, so critical word first is widely used.
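One common way to order the transfer for critical word first is to start at the missed word and wrap around the block. The sketch assumes that wrap-around order and a block size given in words; the function name is hypothetical.

```c
#include <assert.h>

/* Fill order[] with word indices in the order memory delivers them. */
void critical_word_first_order(int words_per_block, int missed_word, int order[]) {
    assert(missed_word >= 0 && missed_word < words_per_block);
    for (int i = 0; i < words_per_block; i++)
        order[i] = (missed_word + i) % words_per_block;
}
```

For an 8-word block with a miss on word 5, the words arrive in the order 5, 6, 7, 0, 1, 2, 3, 4; the CPU can restart as soon as word 5 is delivered.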

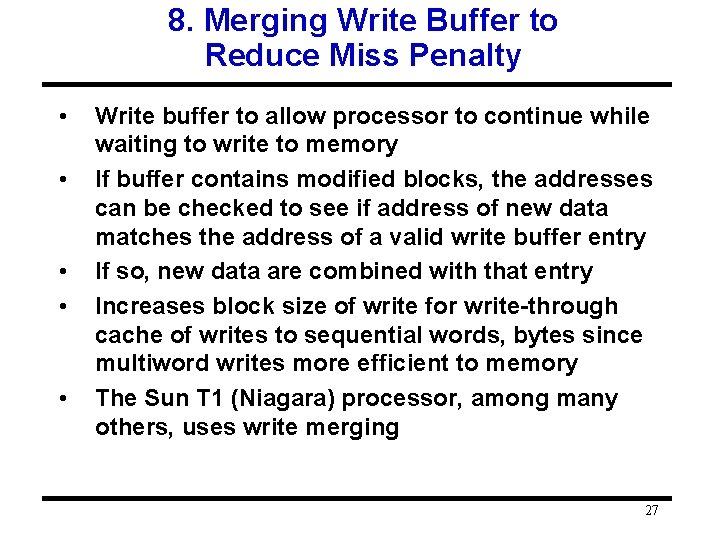

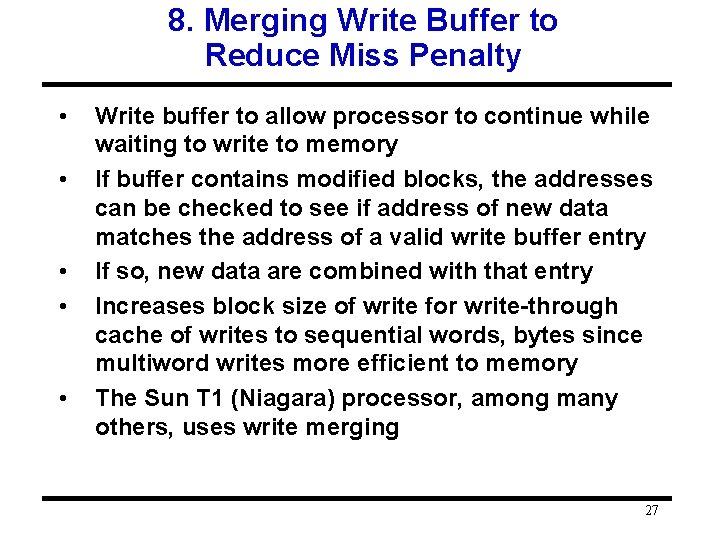

8. Merging Write Buffer to Reduce Miss Penalty
- A write buffer allows the processor to continue while waiting to write to memory.
- If the buffer contains modified blocks, the addresses can be checked to see if the address of new data matches the address of a valid write buffer entry.
- If so, the new data are combined with that entry.
- For a write-through cache, this increases the effective block size of writes to sequential words or bytes, since multiword writes are more efficient for memory.
- The Sun T1 (Niagara) processor, among many others, uses write merging.
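The merge check can be sketched in C as follows. The buffer geometry (4 entries of four 4-byte words each), word-aligned writes, and the function name are assumptions for illustration.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define ENTRIES 4
#define WORDS_PER_ENTRY 4
#define WORD_BYTES 4

typedef struct {
    bool valid;
    uint32_t block;                    /* address / 16 bytes */
    uint8_t mask;                      /* bit w set => word w holds data */
    uint32_t data[WORDS_PER_ENTRY];
} WBEntry;

/* Returns false if the buffer is full and the CPU must stall. */
bool buffer_write(WBEntry wb[ENTRIES], uint32_t addr, uint32_t value) {
    assert(addr % WORD_BYTES == 0);                /* word-aligned writes only */
    uint32_t block = addr / (WORDS_PER_ENTRY * WORD_BYTES);
    unsigned word = (addr / WORD_BYTES) % WORDS_PER_ENTRY;
    int free_slot = -1;
    for (int i = 0; i < ENTRIES; i++) {
        if (wb[i].valid && wb[i].block == block) { /* merge into this entry */
            wb[i].data[word] = value;
            wb[i].mask |= (uint8_t)(1u << word);
            return true;
        }
        if (!wb[i].valid && free_slot < 0) free_slot = i;
    }
    if (free_slot < 0) return false;               /* no match, no room */
    WBEntry *e = &wb[free_slot];
    e->valid = true;
    e->block = block;
    e->mask = (uint8_t)(1u << word);
    e->data[word] = value;
    return true;
}
```

Four writes to the sequential words at addresses 1600, 1604, 1608, and 1612 occupy a single entry with all four valid bits set, instead of four separate entries.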


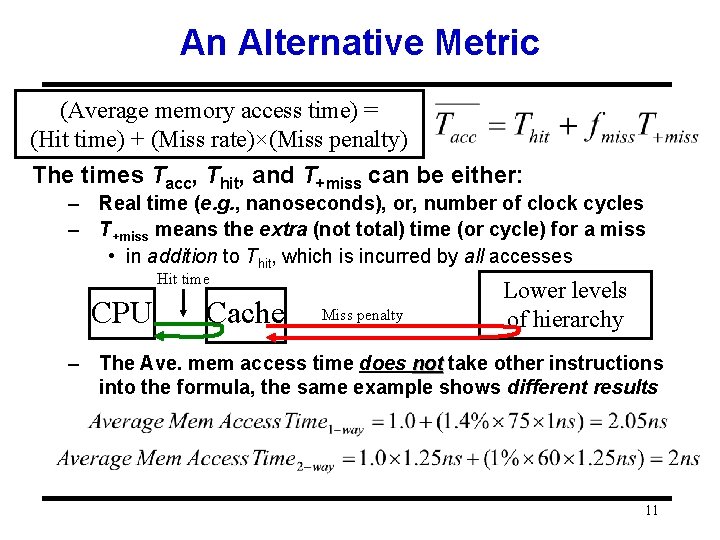

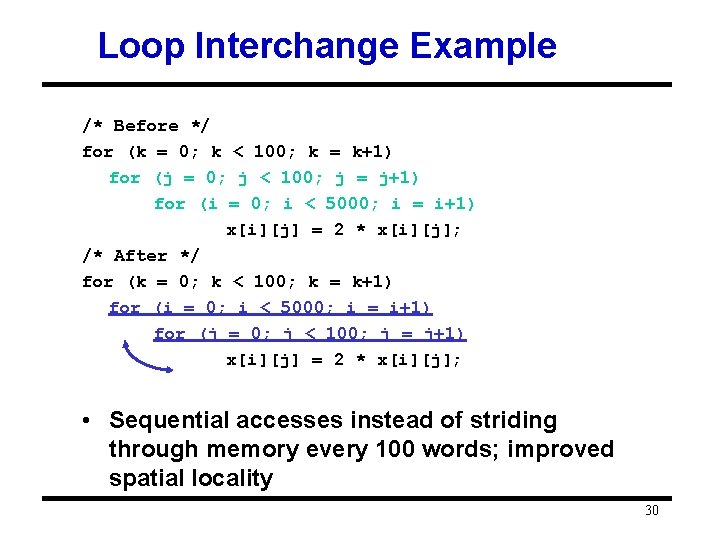

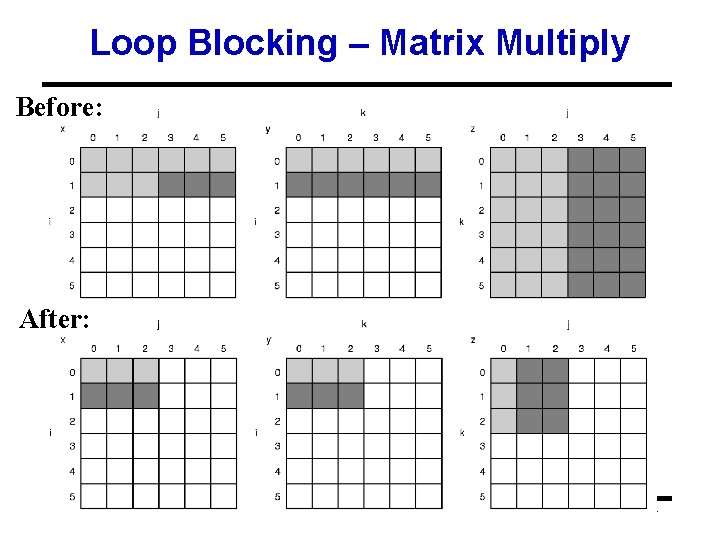

9. Reducing Misses by Compiler Optimizations
- McFarling [1989] reduced cache misses by 75% on an 8 KB direct-mapped cache with 4-byte blocks, in software.
- Instructions
  - Reorder procedures in memory so as to reduce conflict misses
  - Profiling to look at conflicts (using tools they developed)
- Data
  - Merging arrays: improve spatial locality by using a single array of compound elements vs. 2 arrays
  - Loop interchange: change the nesting of loops to access data in the order stored in memory
  - Loop fusion: combine 2 independent loops that have the same looping and some variables in common
  - Blocking: improve temporal locality by accessing "blocks" of data repeatedly vs. going down whole columns or rows


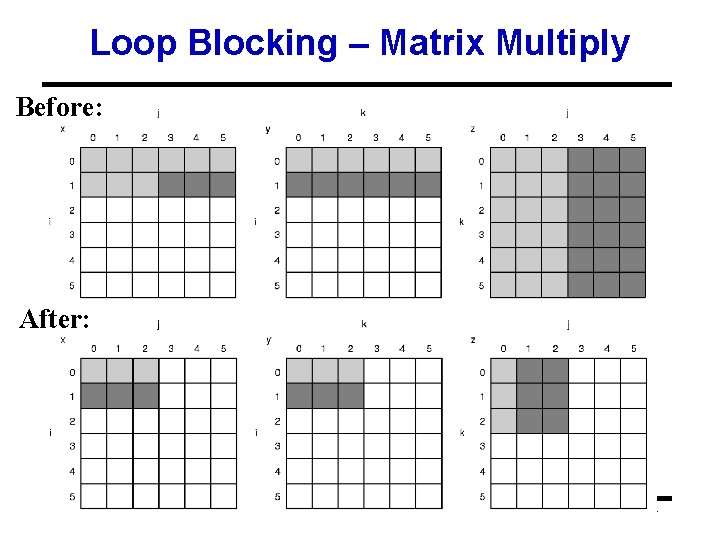

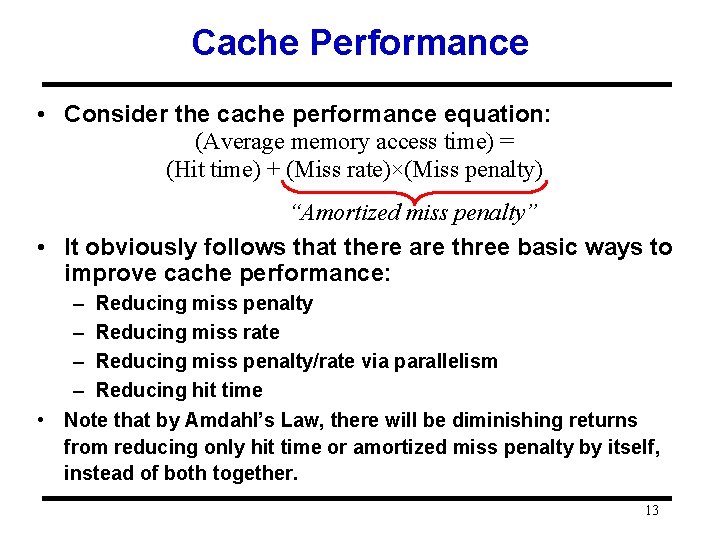

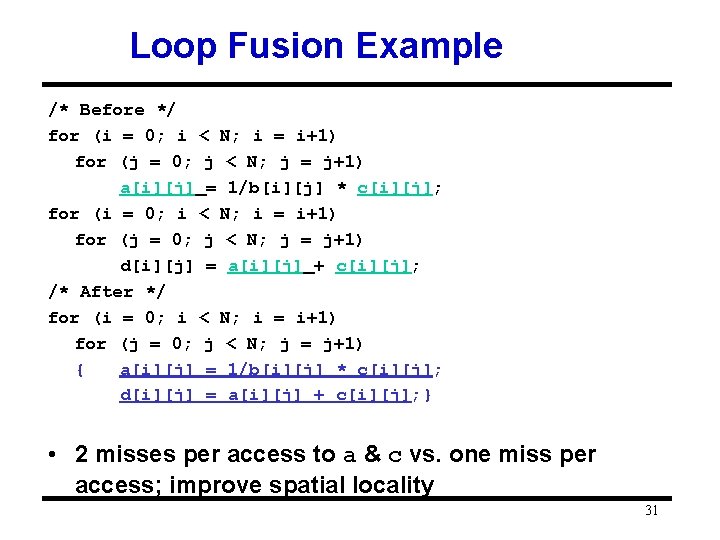

Merging Arrays Example

```c
/* Before: 2 sequential arrays */
int val[SIZE];
int key[SIZE];

/* After: 1 array of structures */
struct merge {
    int val;
    int key;
};
struct merge merged_array[SIZE];
```

- Reduces conflicts between val & key; improves spatial locality when they are accessed in an interleaved fashion.

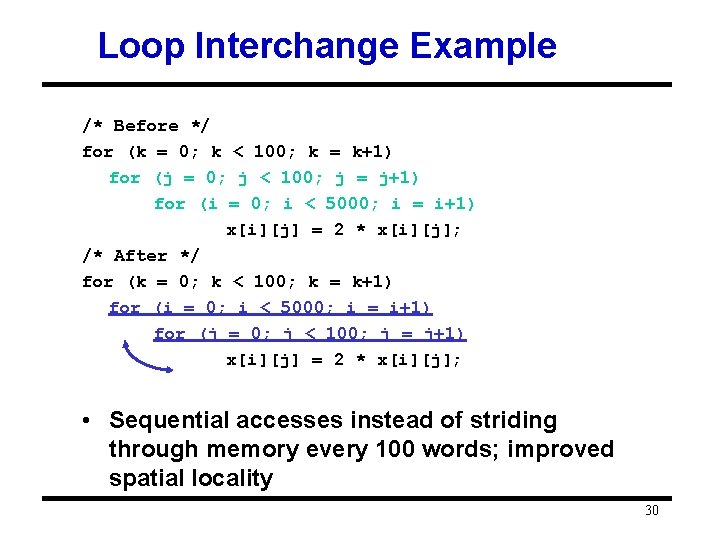

Loop Interchange Example

```c
/* x[5000][100] is stored row-major, as in C */

/* Before */
for (k = 0; k < 100; k = k+1)
    for (j = 0; j < 100; j = j+1)
        for (i = 0; i < 5000; i = i+1)
            x[i][j] = 2 * x[i][j];

/* After */
for (k = 0; k < 100; k = k+1)
    for (i = 0; i < 5000; i = i+1)
        for (j = 0; j < 100; j = j+1)
            x[i][j] = 2 * x[i][j];
```

- Sequential accesses instead of striding through memory every 100 words; improved spatial locality

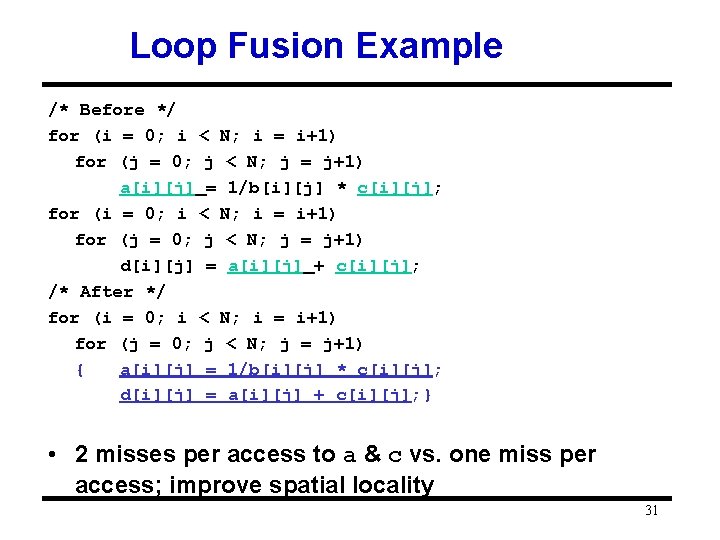

Loop Fusion Example

```c
/* a, b, c, d are assumed to be double[N][N] */

/* Before */
for (i = 0; i < N; i = i+1)
    for (j = 0; j < N; j = j+1)
        a[i][j] = 1/b[i][j] * c[i][j];
for (i = 0; i < N; i = i+1)
    for (j = 0; j < N; j = j+1)
        d[i][j] = a[i][j] + c[i][j];

/* After */
for (i = 0; i < N; i = i+1)
    for (j = 0; j < N; j = j+1) {
        a[i][j] = 1/b[i][j] * c[i][j];
        d[i][j] = a[i][j] + c[i][j];
    }
```

- 2 misses per access to a & c vs. one miss per access; improved temporal locality (a[i][j] and c[i][j] are reused while still in the cache)

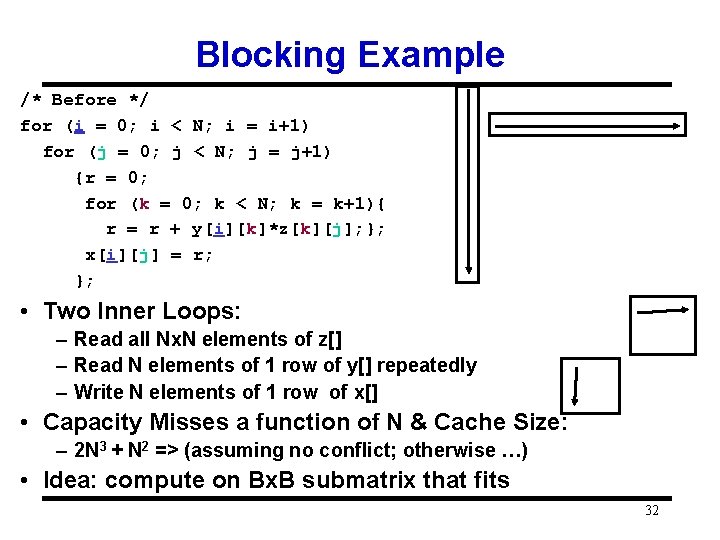

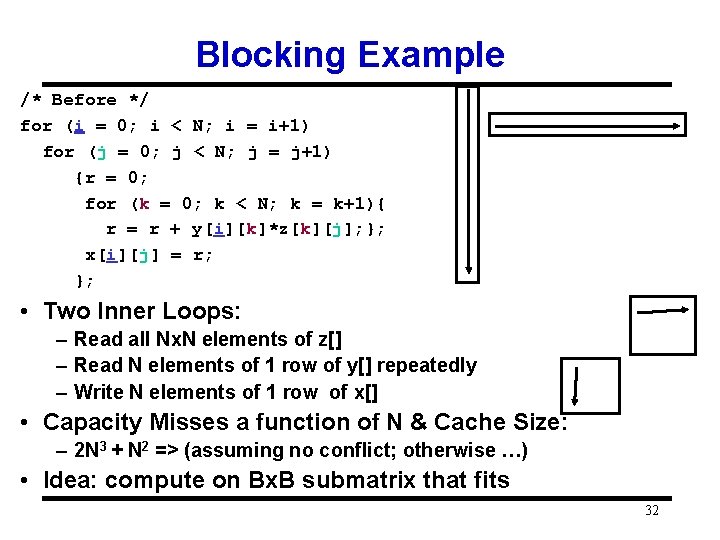

Blocking Example

```c
/* Before */
for (i = 0; i < N; i = i+1)
    for (j = 0; j < N; j = j+1) {
        r = 0;
        for (k = 0; k < N; k = k+1) {
            r = r + y[i][k]*z[k][j];
        }
        x[i][j] = r;
    }
```

- Two inner loops:
  - Read all N×N elements of z[]
  - Read N elements of 1 row of y[] repeatedly
  - Write N elements of 1 row of x[]
- Capacity misses are a function of N & cache size:
  - $2N^3 + N^2$ memory words accessed in the worst case (assuming no conflicts; otherwise …)
- Idea: compute on a B×B submatrix that fits in the cache

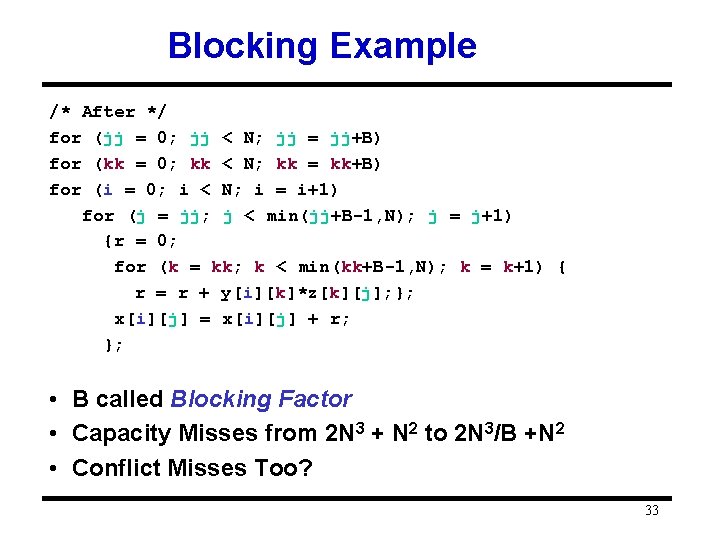

Blocking Example

```c
/* After: x must start zeroed, since it now accumulates partial sums.
   min(a, b) returns the smaller argument (not a standard C function). */
for (jj = 0; jj < N; jj = jj+B)
    for (kk = 0; kk < N; kk = kk+B)
        for (i = 0; i < N; i = i+1)
            for (j = jj; j < min(jj+B, N); j = j+1) {
                r = 0;
                for (k = kk; k < min(kk+B, N); k = k+1) {
                    r = r + y[i][k]*z[k][j];
                }
                x[i][j] = x[i][j] + r;
            }
```

- B is called the blocking factor
- Capacity misses drop from $2N^3 + N^2$ to $2N^3/B + N^2$
- Conflict misses too?
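A compilable version of the blocked loop nest is a convenient way to check that blocking only reorders the work and does not change the result. The sizes `N` and `B` are illustrative (N is deliberately not a multiple of B so the `min` bounds matter), `min_int` is a hypothetical helper, and the naive version is included only for comparison.

```c
#include <assert.h>

#define N 50   /* matrix dimension (illustrative) */
#define B 16   /* blocking factor (illustrative) */

static int min_int(int a, int b) { return a < b ? a : b; }

/* Blocked x = y * z with the slide's loop order (jj, kk, i, j, k). */
void matmul_blocked(double x[N][N], double y[N][N], double z[N][N]) {
    assert(x != y && x != z);            /* x is cleared first, so no aliasing */
    for (int i = 0; i < N; i++)          /* x accumulates partial sums */
        for (int j = 0; j < N; j++)
            x[i][j] = 0.0;
    for (int jj = 0; jj < N; jj += B)
        for (int kk = 0; kk < N; kk += B)
            for (int i = 0; i < N; i++)
                for (int j = jj; j < min_int(jj + B, N); j++) {
                    double r = 0.0;
                    for (int k = kk; k < min_int(kk + B, N); k++)
                        r += y[i][k] * z[k][j];
                    x[i][j] += r;
                }
}

/* Unblocked version from the "Before" slide, for comparison. */
void matmul_naive(double x[N][N], double y[N][N], double z[N][N]) {
    for (int i = 0; i < N; i++)
        for (int j = 0; j < N; j++) {
            double r = 0.0;
            for (int k = 0; k < N; k++)
                r += y[i][k] * z[k][j];
            x[i][j] = r;
        }
}
```

Filling y and z with small integer values (so the floating-point sums are exact regardless of order) and calling both functions should give identical x matrices.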

Loop Blocking – Matrix Multiply (figure panels: Before, After)

Reducing Conflict Misses by Blocking
- Conflict misses in caches that are not fully associative vs. blocking size
  - Lam et al. [1991]: a blocking factor of 24 had a fifth of the misses of a factor of 48, even though both fit in the cache

Summary of Compiler Optimizations to Reduce Cache Misses (by hand)

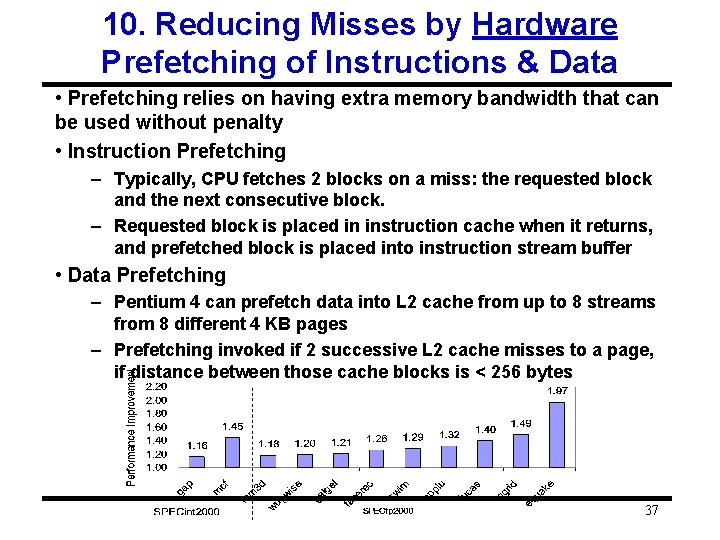

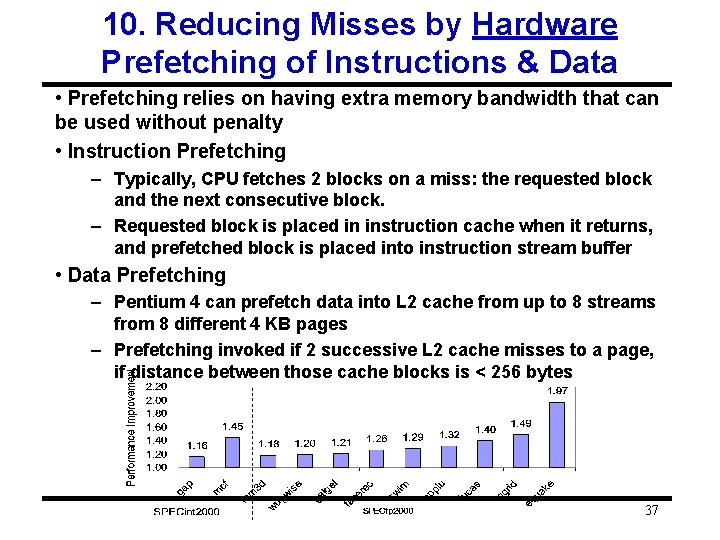

10. Reducing Misses by Hardware Prefetching of Instructions & Data
- Prefetching relies on having extra memory bandwidth that can be used without penalty.
- Instruction prefetching
  - Typically, the CPU fetches 2 blocks on a miss: the requested block and the next consecutive block.
  - The requested block is placed in the instruction cache when it returns, and the prefetched block is placed into the instruction stream buffer.
- Data prefetching
  - The Pentium 4 can prefetch data into the L2 cache from up to 8 streams from 8 different 4 KB pages.
  - Prefetching is invoked if there are 2 successive L2 cache misses to a page and the distance between those cache blocks is < 256 bytes.
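The instruction-prefetch scheme can be sketched as a direct-mapped instruction cache plus a one-block stream buffer. The cache size and names are hypothetical, and the sketch assumes every prefetch completes before its block is needed, which real hardware cannot guarantee.

```c
#include <assert.h>
#include <stdbool.h>

#define FRAMES 16   /* hypothetical direct-mapped I-cache size, in blocks */

typedef struct {
    long frame[FRAMES];   /* block in each frame, -1 if empty */
    long stream;          /* block in the stream buffer, -1 if empty */
    long misses;          /* fetches that had to go to memory */
} ICache;

void icache_init(ICache *c) {
    for (int i = 0; i < FRAMES; i++) c->frame[i] = -1;
    c->stream = -1;
    c->misses = 0;
}

/* Fetch one instruction block; assumes prefetches arrive in time. */
void icache_fetch(ICache *c, long block, bool prefetch) {
    assert(block >= 0);
    long *f = &c->frame[block % FRAMES];
    if (*f == block) return;                 /* ordinary cache hit */
    if (prefetch && c->stream == block) {    /* stream-buffer hit */
        *f = block;
        c->stream = block + 1;               /* prefetch the next block */
        return;
    }
    c->misses++;                             /* requested block from memory */
    *f = block;
    if (prefetch) c->stream = block + 1;     /* plus the next consecutive block */
}
```

Fetching 64 sequential blocks costs 64 misses without the stream buffer but only 1 with it, since each later block is already waiting in the buffer.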

11. Reducing Misses by Software Prefetching Data
- Data prefetch
  - Register prefetch: load data into a register (HP PA-RISC loads)
  - Cache prefetch: load into the cache (MIPS IV, PowerPC, SPARC v9)
  - Special prefetching instructions cannot cause faults; a form of speculative execution
- Issuing prefetch instructions takes time
  - Is the cost of issuing prefetches < the savings in reduced misses?
  - Wider superscalar issue makes finding issue bandwidth for prefetches less difficult
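In C, GCC and Clang expose cache prefetch instructions through the builtin `__builtin_prefetch(addr, rw, locality)`, which GCC documents as not generating faults even if the address is invalid (the address expression itself must still be valid). The loop below is a sketch; the prefetch distance of 16 elements is an arbitrary assumption that would normally be tuned per machine.

```c
#include <assert.h>
#include <stddef.h>

#define PREFETCH_DISTANCE 16   /* elements ahead; hypothetical tuning value */

/* Sum an array while asking the cache to fetch data PREFETCH_DISTANCE
 * elements ahead. __builtin_prefetch is a GCC/Clang extension. */
long sum_with_prefetch(const long *a, size_t n) {
    assert(a != NULL || n == 0);
    long sum = 0;
    for (size_t i = 0; i < n; i++) {
        if (i + PREFETCH_DISTANCE < n)
            __builtin_prefetch(&a[i + PREFETCH_DISTANCE], 0 /* read */, 3 /* high locality */);
        sum += a[i];
    }
    return sum;
}
```

The `if` guard and the prefetch itself are the per-iteration issue overhead the slide warns about; compilers often remove the guard by peeling off the last iterations.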

Compiler Optimization vs. Memory Hierarchy Search
- The compiler tries to figure out memory hierarchy optimizations.
- New approach: "auto-tuners" first run variations of the program on the computer to find the best combinations of optimizations (blocking, padding, …) and algorithms, then produce C code to be compiled for that computer.
- Auto-tuners are targeted to numerical methods
  - E.g., PHiPAC (BLAS), Atlas (BLAS), Sparsity (sparse linear algebra), Spiral (DSP), FFTW

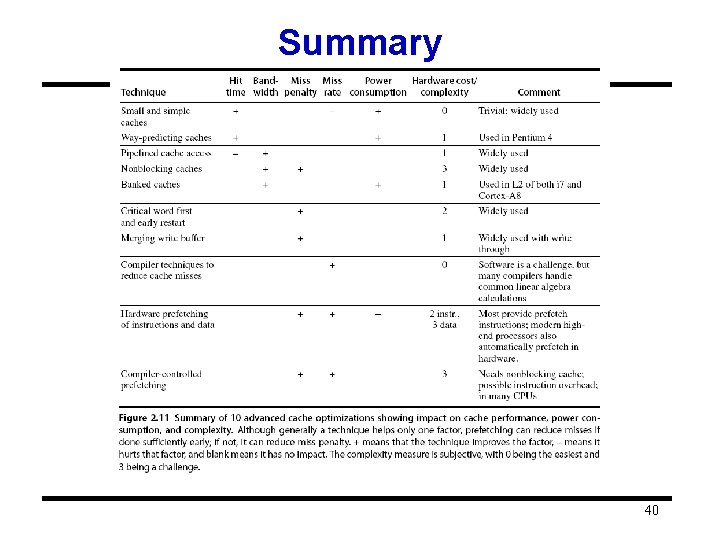

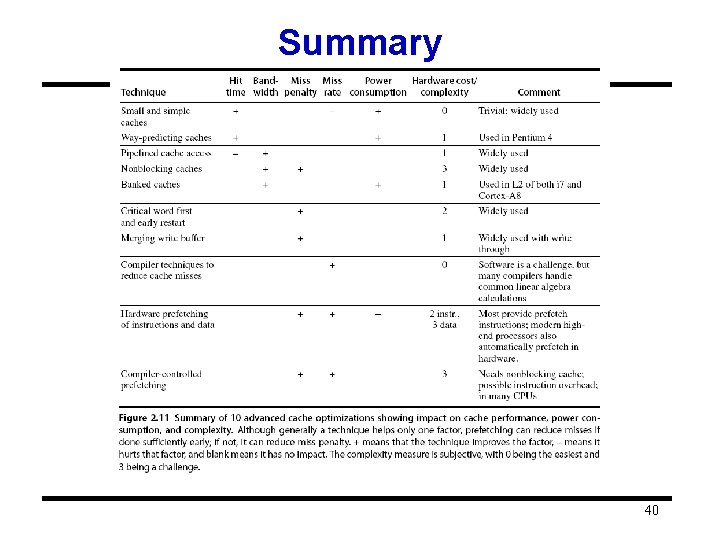

Summary

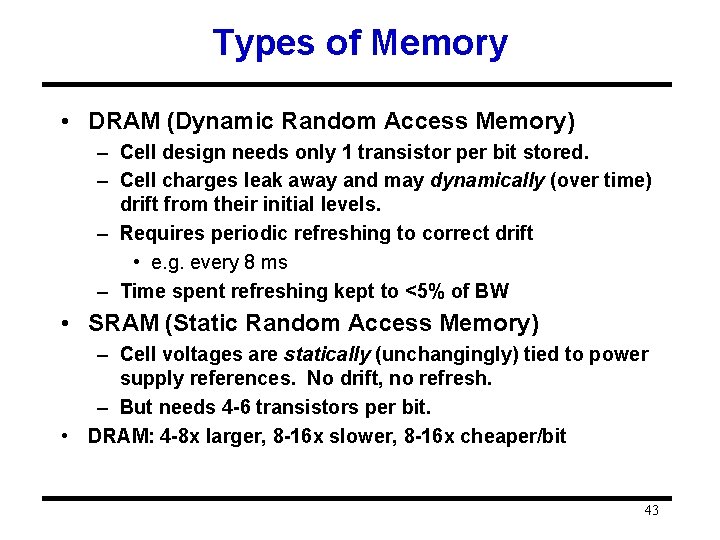

Main Memory
- Some definitions:
  - Bandwidth (bw): bytes read or written per unit time
  - Latency: described by
    - Access time: delay between access initiation & completion
      - For reads: from presenting the address until the result is ready
    - Cycle time: minimum interval between separate requests to memory
  - Address lines: separate bus from CPU to memory to carry addresses (not usually counted in BW figures)
  - RAS (Row Access Strobe)
    - First half of the address, sent first
  - CAS (Column Access Strobe)
    - Second half of the address, sent second

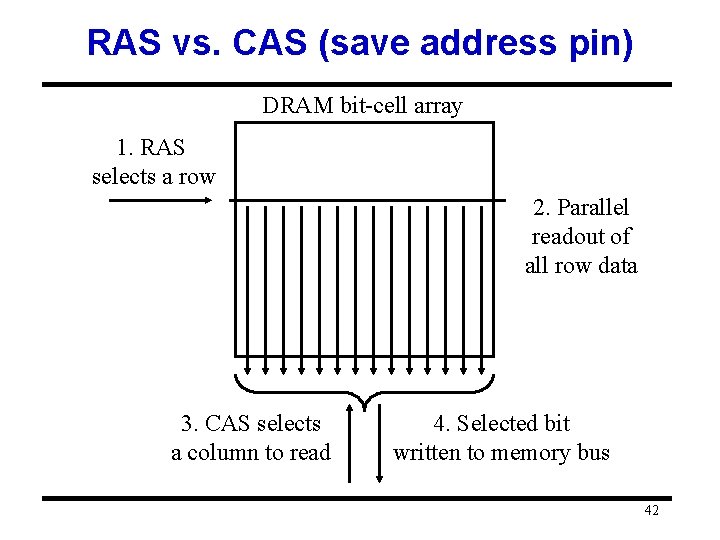

RAS vs. CAS (saves address pins)
- DRAM bit-cell array:
  1. RAS selects a row
  2. Parallel readout of all row data
  3. CAS selects a column to read
  4. Selected bit written to memory bus

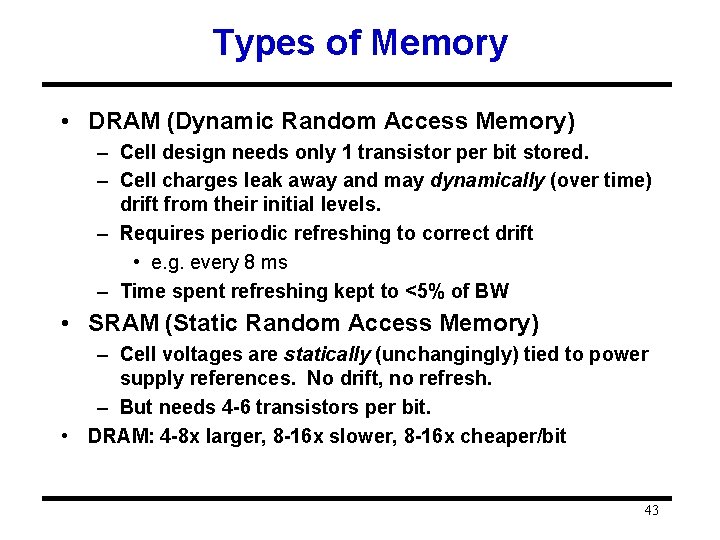

Types of Memory
- DRAM (Dynamic Random Access Memory)
  - Cell design needs only 1 transistor per bit stored.
  - Cell charges leak away and may dynamically (over time) drift from their initial levels.
  - Requires periodic refreshing to correct drift, e.g., every 8 ms
  - Time spent refreshing is kept to < 5% of bandwidth
- SRAM (Static Random Access Memory)
  - Cell voltages are statically (unchangingly) tied to power supply references. No drift, no refresh.
  - But needs 4-6 transistors per bit.
- DRAM vs. SRAM: 4-8x larger capacity, 8-16x slower, 8-16x cheaper per bit

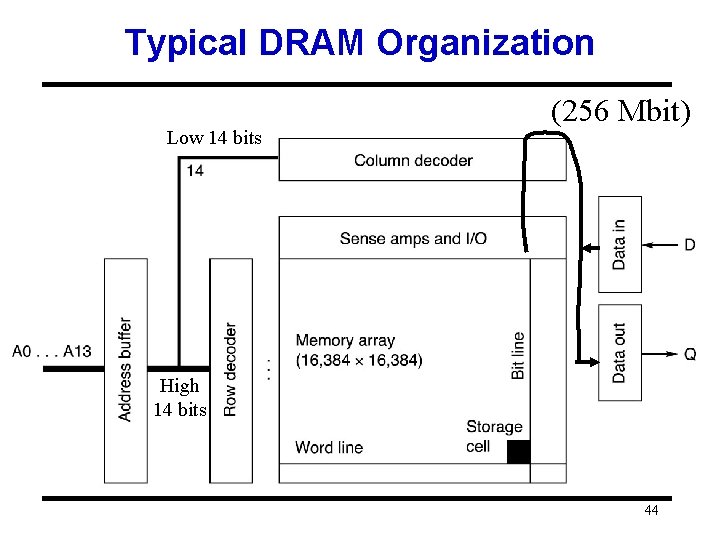

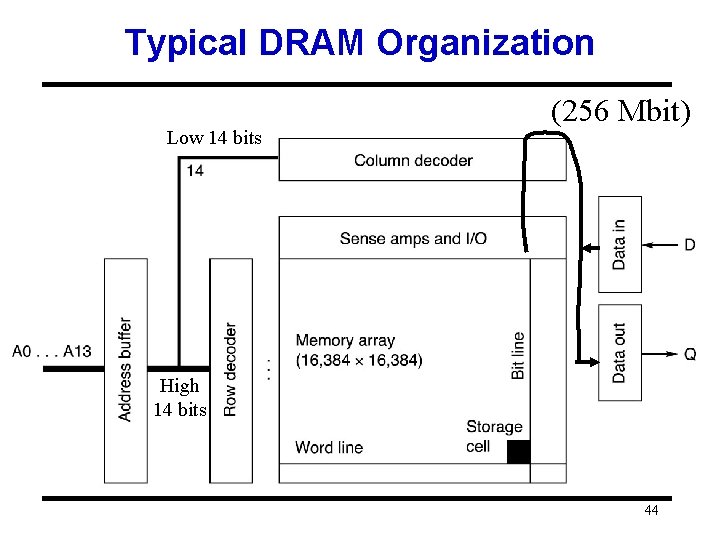

Typical DRAM Organization (figure: 256 Mbit DRAM; address split into high 14 bits and low 14 bits)
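Splitting a cell address into the two halves sent with RAS and CAS is simple bit manipulation. The sketch assumes the high 14 bits select the row and the low 14 bits the column of a 16384 × 16384 array (2^28 bits = 256 Mbit); the type and function names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

#define HALF_BITS 14
#define HALF_MASK ((1u << HALF_BITS) - 1)

typedef struct { uint32_t row, col; } DramAddr;

/* Row goes out first with RAS, column second with CAS, on the same pins. */
DramAddr dram_split(uint32_t cell_addr) {
    assert(cell_addr < (1u << (2 * HALF_BITS)));   /* 28-bit address */
    DramAddr d = { cell_addr >> HALF_BITS, cell_addr & HALF_MASK };
    return d;
}
```

Both halves travel over the same 14 address pins, one after the other, which is the pin saving behind the RAS/CAS scheme.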

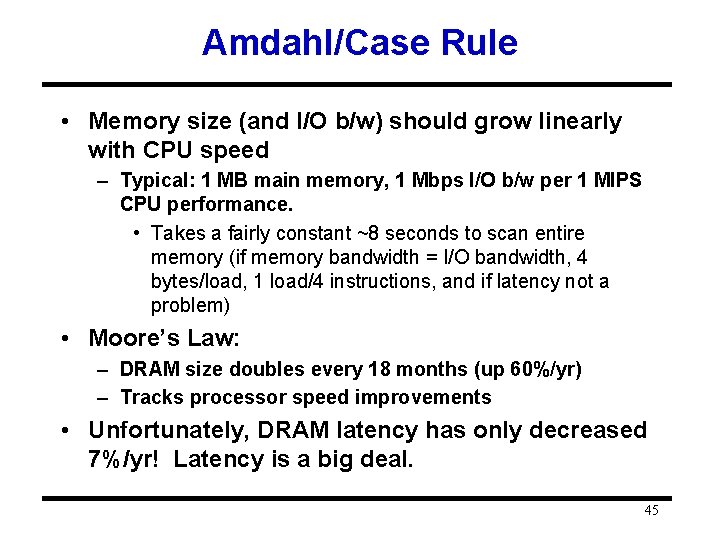

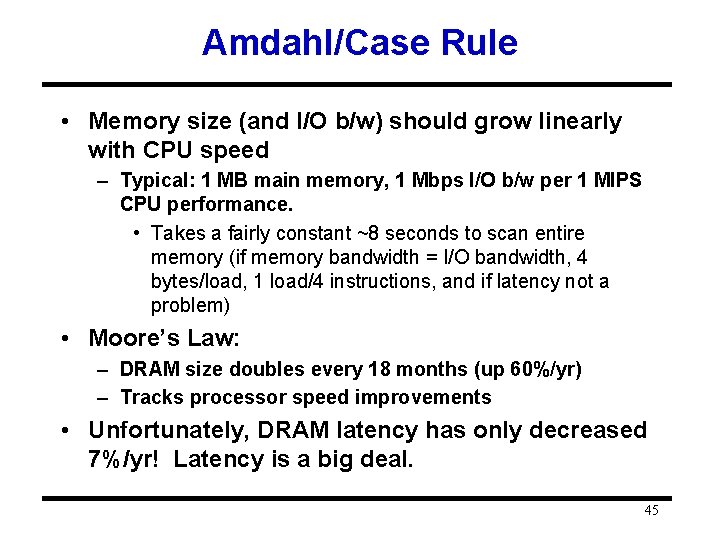

Amdahl/Case Rule
- Memory size (and I/O bandwidth) should grow linearly with CPU speed.
  - Typical: 1 MB main memory and 1 Mbps I/O bandwidth per 1 MIPS of CPU performance.
- It takes a fairly constant ~8 seconds to scan the entire memory (if memory bandwidth = I/O bandwidth, 4 bytes/load, 1 load/4 instructions, and latency is not a problem).
- Moore's Law:
  - DRAM size doubles every 18 months (up 60%/yr)
  - Tracks processor speed improvements
- Unfortunately, DRAM latency has only decreased 7%/yr! Latency is a big deal.
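The ~8 second figure follows from the rule's ratios, since both memory size and bandwidth scale with MIPS. This sketch assumes 1 MB = 10^6 bytes and a bandwidth of 1 Mbit/s per MIPS, as stated in the rule; the function name is illustrative.

```c
#include <assert.h>

/* Time to scan all of memory under the Amdahl/Case ratios. */
double scan_seconds(double mips) {
    assert(mips > 0.0);
    double memory_bits = mips * 1e6 * 8.0;   /* 1 MB (10^6 bytes) per MIPS */
    double bandwidth_bps = mips * 1e6;       /* 1 Mbit/s per MIPS */
    return memory_bits / bandwidth_bps;
}
```

1 MB = 8 Mbit, and 8 Mbit / (1 Mbit/s) = 8 s; scaling both by the MIPS rating leaves the ratio unchanged.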

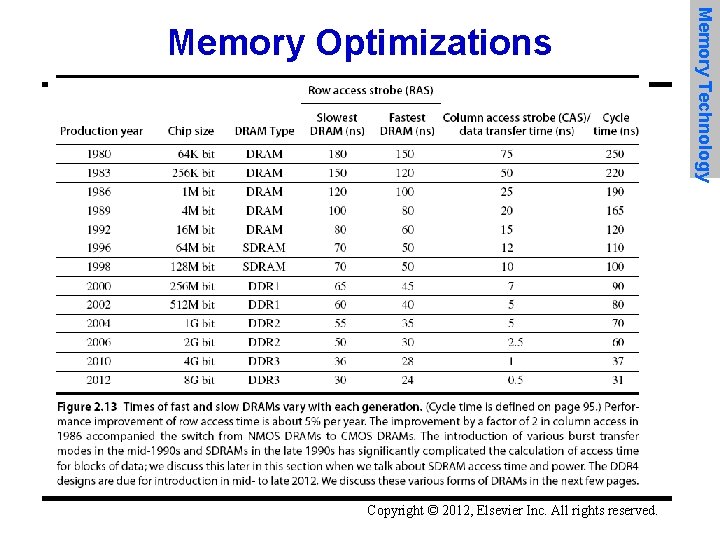

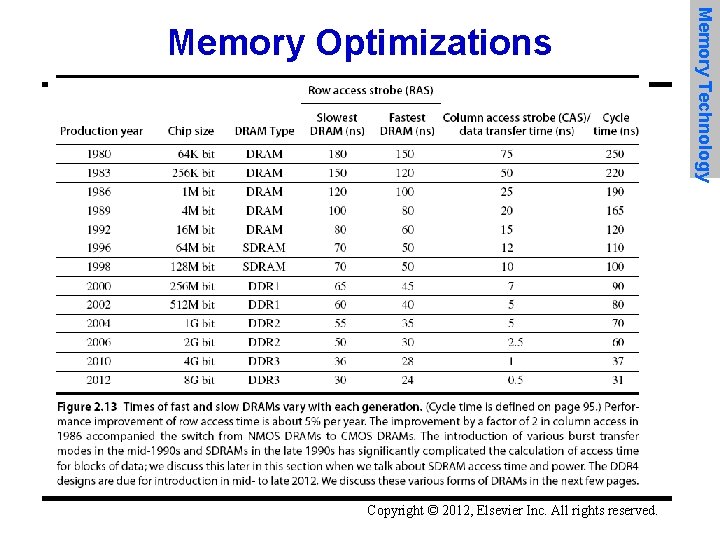

Copyright © 2012, Elsevier Inc. All rights reserved. Memory Technology Memory Optimizations

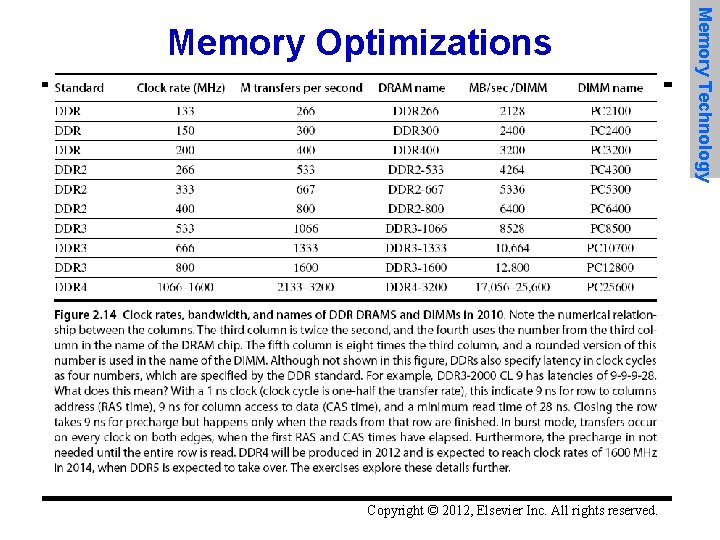

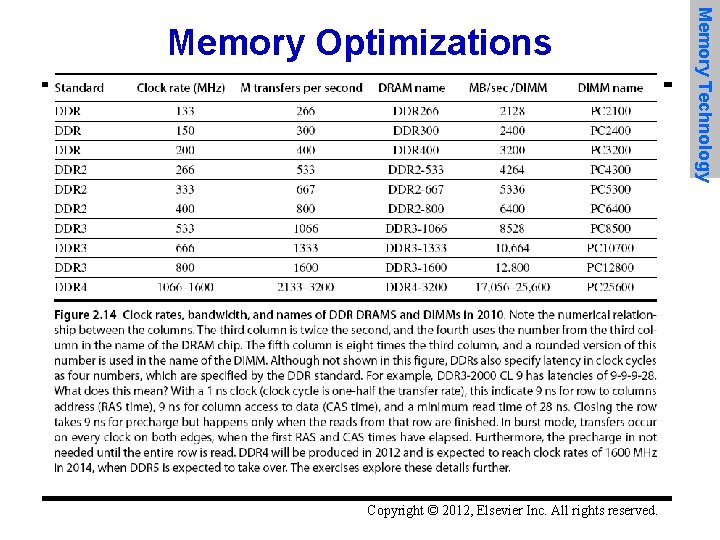


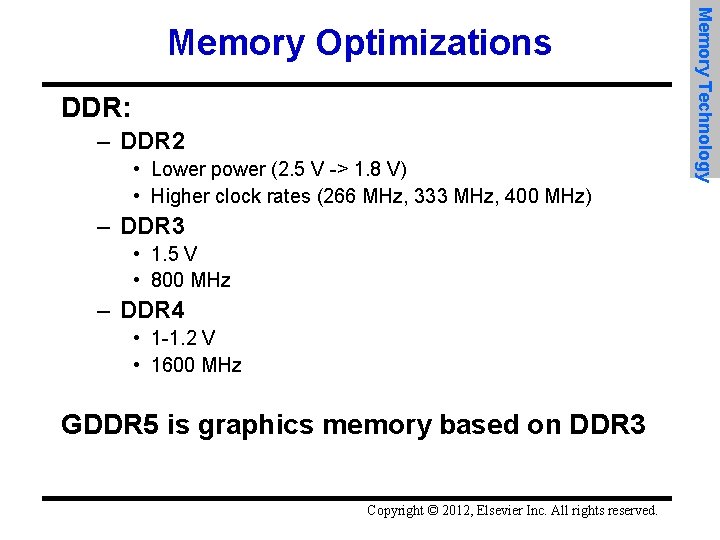

Memory Optimizations
- DDR:
  - DDR2
    - Lower power (2.5 V -> 1.8 V)
    - Higher clock rates (266 MHz, 333 MHz, 400 MHz)
  - DDR3
    - 1.5 V
    - 800 MHz
  - DDR4
    - 1-1.2 V
    - 1600 MHz
- GDDR5 is graphics memory based on DDR3

Memory Optimizations
- Graphics memory:
  - Achieves 2-5X bandwidth per DRAM vs. DDR3
    - Wider interfaces (32 vs. 16 bit)
    - Higher clock rate
      - Possible because they are attached via soldering instead of socketed DIMM modules
- Reducing power in SDRAMs:
  - Lower voltage
  - Low power mode (ignores clock, continues to refresh)

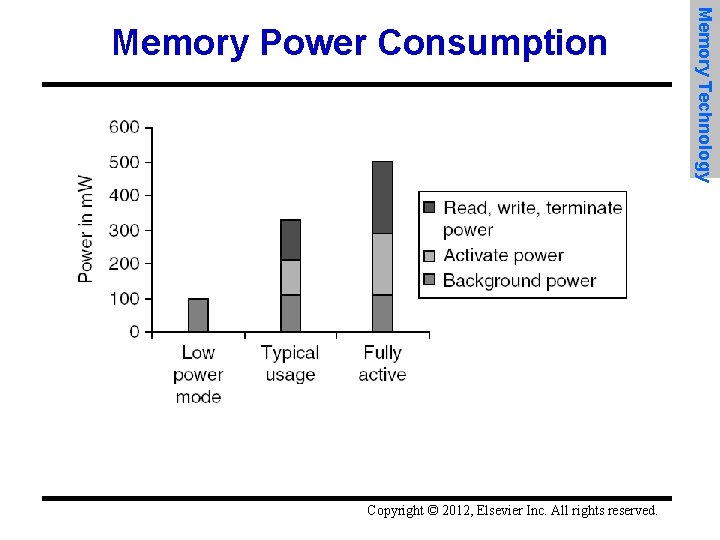

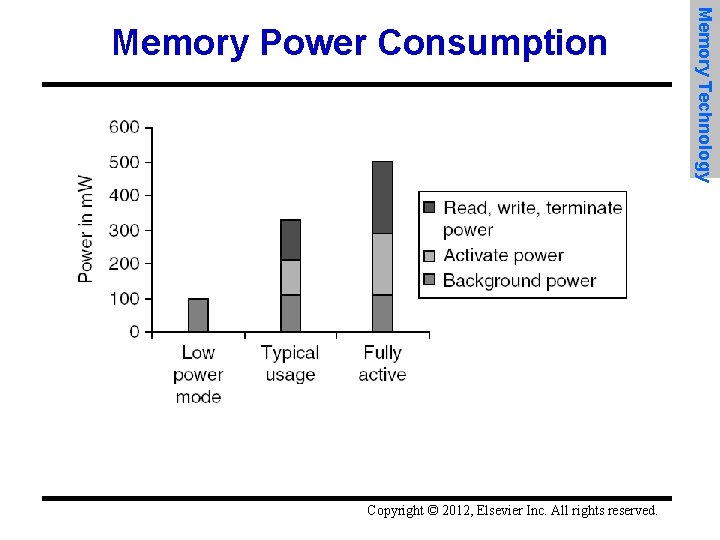

Memory Power Consumption

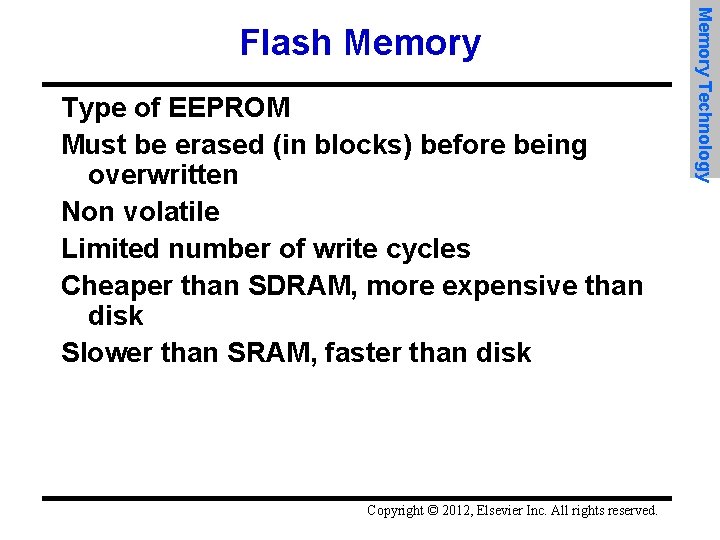

Flash Memory
- Type of EEPROM
- Must be erased (in blocks) before being overwritten
- Nonvolatile
- Limited number of write cycles
- Cheaper than SDRAM, more expensive than disk
- Slower than SRAM, faster than disk

Memory Dependability
- Memory is susceptible to cosmic rays
- Soft errors: dynamic errors
  - Detected and fixed by error correcting codes (ECC)
- Hard errors: permanent errors
  - Use spare rows to replace defective rows
- Chipkill: a RAID-like error recovery technique
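As a small illustration of how ECC corrects a soft error, here is a Hamming(7,4) single-error-correcting code in C. Real DRAM ECC typically uses a wider SEC-DED code (for example 8 check bits per 64 data bits); this 4-bit version is chosen only to keep the example short, and the function names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* Code bit positions 1..7: parity at 1, 2, 4; data at 3, 5, 6, 7. */
static unsigned bit(unsigned v, int pos) { return (v >> pos) & 1u; }

uint8_t hamming_encode(uint8_t data) {          /* data: low 4 bits */
    assert(data < 16);
    unsigned d1 = bit(data, 0), d2 = bit(data, 1), d3 = bit(data, 2), d4 = bit(data, 3);
    unsigned p1 = d1 ^ d2 ^ d4;                 /* covers positions 3, 5, 7 */
    unsigned p2 = d1 ^ d3 ^ d4;                 /* covers positions 3, 6, 7 */
    unsigned p4 = d2 ^ d3 ^ d4;                 /* covers positions 5, 6, 7 */
    /* code bit i (i = 1..7) is stored at bit i of the result */
    return (uint8_t)((p1 << 1) | (p2 << 2) | (d1 << 3) | (p4 << 4) |
                     (d2 << 5) | (d3 << 6) | (d4 << 7));
}

/* Corrects up to one flipped bit and returns the 4 data bits. */
uint8_t hamming_decode(uint8_t code) {
    unsigned s = 0;
    for (int pos = 1; pos <= 7; pos++)
        if (bit(code, pos)) s ^= (unsigned)pos; /* syndrome = XOR of set positions */
    if (s != 0) code ^= (uint8_t)(1u << s);     /* s names the flipped position */
    return (uint8_t)(bit(code, 3) | (bit(code, 5) << 1) |
                     (bit(code, 6) << 2) | (bit(code, 7) << 3));
}
```

Flipping any one of the seven code bits makes the syndrome equal that bit's position, so decoding still returns the original 4 data bits.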

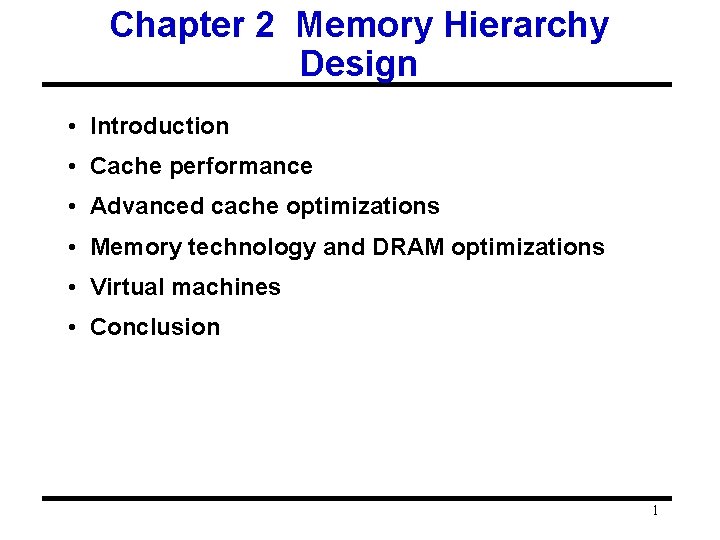

Virtual Memory
- Protection via virtual memory
  - Keeps processes in their own memory space
- Role of architecture:
  - Provide user mode and supervisor mode
  - Protect certain aspects of CPU state
  - Provide mechanisms for switching between user mode and supervisor mode
  - Provide mechanisms to limit memory accesses
  - Provide TLB to translate addresses

Virtual Memory and Virtual Machines
- Supports isolation and security
- Sharing a computer among many unrelated users
- Enabled by the raw speed of processors, making the overhead more acceptable
- Allows different ISAs and operating systems to be presented to user programs
  - "System Virtual Machines"
  - SVM software is called a "virtual machine monitor" or "hypervisor"
  - Individual virtual machines run under the monitor are called "guest VMs"

Impact of VMs on Virtual Memory
- Each guest OS maintains its own set of page tables
  - The VMM adds a level of memory between physical and virtual memory called "real memory"
  - The VMM maintains a shadow page table that maps guest virtual addresses to physical addresses
    - Requires the VMM to detect the guest's changes to its own page table
    - Occurs naturally if accessing the page table pointer is a privileged operation
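Shadow paging can be sketched with single-level page tables: the shadow table is the composition of the guest's table (virtual to "real") and the VMM's map (real to physical), and each trapped guest page-table write updates the matching shadow entry. Table sizes, the `INVALID` marker, and the function names are hypothetical.

```c
#include <assert.h>

#define PAGES 8
#define INVALID (-1)

/* guest_pt: guest virtual page -> real page (maintained by the guest OS)
 * vmm_map:  real page -> physical page   (maintained by the VMM)
 * shadow:   guest virtual page -> physical page (what the hardware uses) */
void rebuild_shadow(const int guest_pt[PAGES], const int vmm_map[PAGES],
                    int shadow[PAGES]) {
    for (int vpn = 0; vpn < PAGES; vpn++) {
        int real = guest_pt[vpn];
        assert(real == INVALID || (real >= 0 && real < PAGES));
        shadow[vpn] = (real == INVALID) ? INVALID : vmm_map[real];
    }
}

/* Called when the VMM traps a guest write to its own page table. */
void guest_pt_write(int guest_pt[PAGES], const int vmm_map[PAGES],
                    int shadow[PAGES], int vpn, int new_real) {
    assert(vpn >= 0 && vpn < PAGES);
    assert(new_real == INVALID || (new_real >= 0 && new_real < PAGES));
    guest_pt[vpn] = new_real;
    shadow[vpn] = (new_real == INVALID) ? INVALID : vmm_map[new_real];
}
```

If the guest maps virtual page 0 to real page 2 and the VMM places real page 2 in physical page 5, the hardware uses a shadow entry 0 → 5 and never sees the guest's real addresses.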