Chapter 2 Agents

- Slides: 20

Intelligent Agents

- An agent is anything that can be viewed as perceiving its environment through sensors and acting upon that environment through effectors
- A rational agent is one that does the right thing
- The problem is deciding how and when to evaluate an agent's success or performance
- Issues:
  - Performance measure
  - Frequency of obtaining performance measures
  - Omniscience

Intelligent Agents

- We are interested in rational agents
- What is rational depends on:
  - The performance measure that defines the degree of success
  - Everything the agent has perceived so far; we call this perceptual history the percept sequence
  - What the agent knows about the environment
  - The actions that the agent can perform

Ideal Rational Agent

An ideal rational agent is one that, for each possible percept sequence, performs whatever action is expected to maximize its performance measure, on the basis of the evidence provided by the percept sequence and whatever built-in knowledge the agent has.

Agent Functions and Programs

- An agent is completely specified by its agent function, which maps percept sequences to actions
- In principle, one could tabulate this function by supplying every possible percept sequence and recording what the agent does; in practice, such a lookup table would usually be immense
- Only one agent function (or a small equivalence class of functions) is rational
- Aim: find a way to implement the rational agent function concisely
- An agent program takes a single percept as input and keeps internal state
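To make the function/program distinction concrete, here is a minimal Python sketch. It assumes a hypothetical two-square vacuum world (locations `"A"` and `"B"`, percepts of the form `(location, status)`), which is not part of the slides. `agent_function` maps a whole percept sequence to an action, `make_agent_program` wraps it in a program that receives one percept per call and stores the history as internal state, and `table_size` counts the rows a full lookup table would need. All names are illustrative.

```python
from typing import Callable, List, Tuple

Percept = Tuple[str, str]  # (location, status), e.g. ("A", "Dirty")


def agent_function(percepts: Tuple[Percept, ...]) -> str:
    """Agent function: maps an entire percept sequence to an action.

    This toy version happens to look only at the latest percept.
    """
    location, status = percepts[-1]
    if status == "Dirty":
        return "Suck"
    return "Right" if location == "A" else "Left"


def make_agent_program(
    f: Callable[[Tuple[Percept, ...]], str]
) -> Callable[[Percept], str]:
    """Agent program: takes one percept per call and keeps the history internally."""
    history: List[Percept] = []  # internal state

    def program(percept: Percept) -> str:
        history.append(percept)
        return f(tuple(history))

    return program


def table_size(num_percepts: int, lifetime: int) -> int:
    """Rows needed to tabulate every percept sequence of length 1..lifetime."""
    return sum(num_percepts ** t for t in range(1, lifetime + 1))


program = make_agent_program(agent_function)
print(program(("A", "Dirty")))  # Suck
print(program(("A", "Clean")))  # Right
print(table_size(4, 3))         # 84
```

With only 4 distinct percepts (two locations, clean or dirty), `table_size(4, 10)` is already 1,398,100 rows, which illustrates why the lookup table becomes immense.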

Table-Driven Agent Programs

- All agents share the same skeleton: they accept percepts from an environment and generate actions. Each uses some internal data structures that are updated as new percepts arrive.
- These data structures are operated on by the agent's decision-making procedures to generate an action choice, which is then passed to the architecture to be executed.
- Table-driven agents use a lookup table to associate perceptions from the environment with possible actions.
- This technique has several drawbacks:
  - The entire percept sequence must be kept in memory
  - Building the table takes a long time
  - The agent has no autonomy
- Other ways to map percepts to actions are:
  - Simple reflex agents
  - Agents that keep track of the world
  - Goal-based agents
  - Utility-based agents
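A table-driven agent can be sketched in a few lines of Python. The table below is a hypothetical fragment for a two-square vacuum world; its keys are complete percept sequences, which is exactly why the agent must remember its whole history and why the table grows so quickly.

```python
from typing import Dict, List, Optional, Tuple

Percept = Tuple[str, str]  # (location, status)


class TableDrivenAgent:
    """Looks up the action for the complete percept sequence seen so far."""

    def __init__(self, table: Dict[Tuple[Percept, ...], str]) -> None:
        self.table = table
        self.percepts: List[Percept] = []  # entire percept sequence, kept in memory

    def __call__(self, percept: Percept) -> Optional[str]:
        self.percepts.append(percept)
        # Returns None when the table has no entry for this sequence.
        return self.table.get(tuple(self.percepts))


# Hypothetical partial table: one entry per percept sequence.
table = {
    (("A", "Clean"),): "Right",
    (("A", "Dirty"),): "Suck",
    (("A", "Dirty"), ("A", "Clean")): "Right",
}

agent = TableDrivenAgent(table)
print(agent(("A", "Dirty")))  # Suck
print(agent(("A", "Clean")))  # Right
print(agent(("B", "Dirty")))  # None: sequence not in the table
```

The `None` result for an unlisted sequence shows the lack of autonomy: the agent can do nothing its designer did not enumerate in advance.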

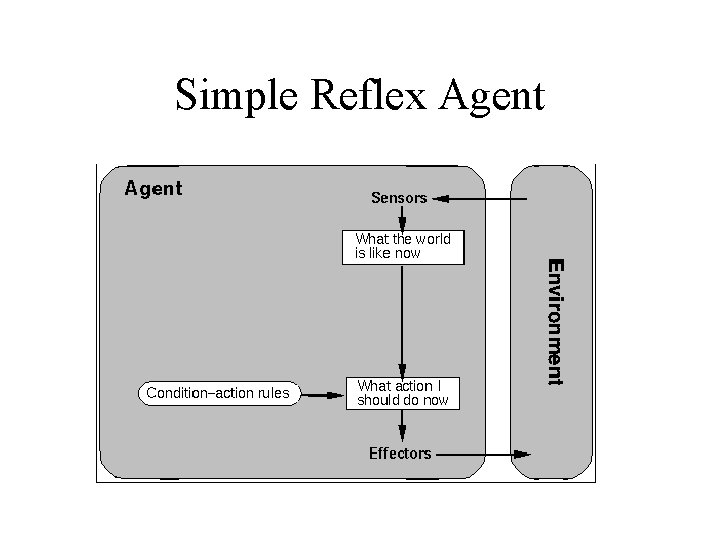

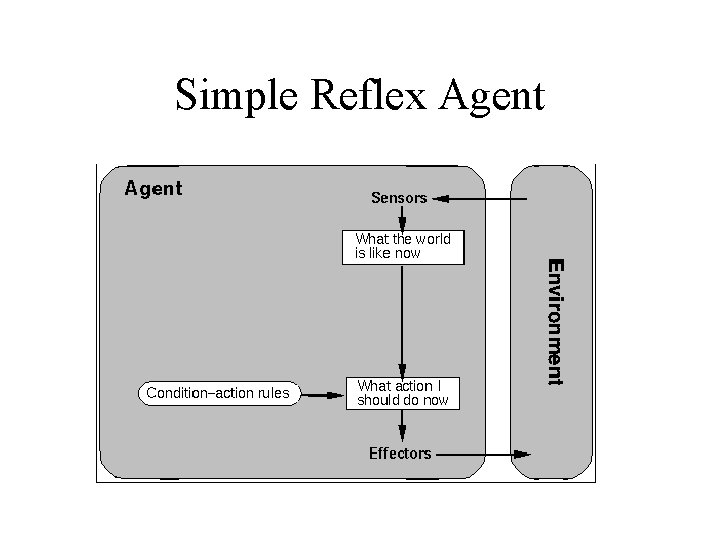

Simple Reflex Agents

- Idea: note certain commonly occurring input/output associations
- Such connections are called condition-action rules
- A simple reflex agent works by finding a rule whose condition matches the current situation and then doing the action associated with that rule

```
Function Simple-Reflex-Agent(percept)
    static: rules    /* set of condition-action rules */
    state  ← Interpret_Input(percept)
    rule   ← Rule_Match(state, rules)
    action ← Rule_Action(rule)
    return action
```
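A minimal Python rendering of `Simple-Reflex-Agent`, assuming a hypothetical vacuum world where the percept already describes the situation. Each condition-action rule is represented here as a `(predicate, action)` pair; that representation is an illustrative choice, not something the slides prescribe.

```python
from typing import Callable, List, Tuple

Percept = Tuple[str, str]                   # (location, status)
State = Tuple[str, str]
Rule = Tuple[Callable[[State], bool], str]  # (condition, action)


def interpret_input(percept: Percept) -> State:
    # In this toy world the percept already is a description of the situation.
    return percept


def rule_match(state: State, rules: List[Rule]) -> Rule:
    # Return the first rule whose condition holds in the current state.
    for rule in rules:
        condition, _ = rule
        if condition(state):
            return rule
    raise LookupError(f"no rule matches {state}")


def rule_action(rule: Rule) -> str:
    return rule[1]


RULES: List[Rule] = [
    (lambda s: s[1] == "Dirty", "Suck"),
    (lambda s: s[0] == "A", "Right"),
    (lambda s: s[0] == "B", "Left"),
]


def simple_reflex_agent(percept: Percept, rules: List[Rule] = RULES) -> str:
    state = interpret_input(percept)
    rule = rule_match(state, rules)
    return rule_action(rule)


print(simple_reflex_agent(("B", "Dirty")))  # Suck
print(simple_reflex_agent(("B", "Clean")))  # Left
```

Rule order acts as conflict resolution: because the `"Dirty"` rule comes first, a dirty square is always cleaned before the agent moves.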

Simple Reflex Agent

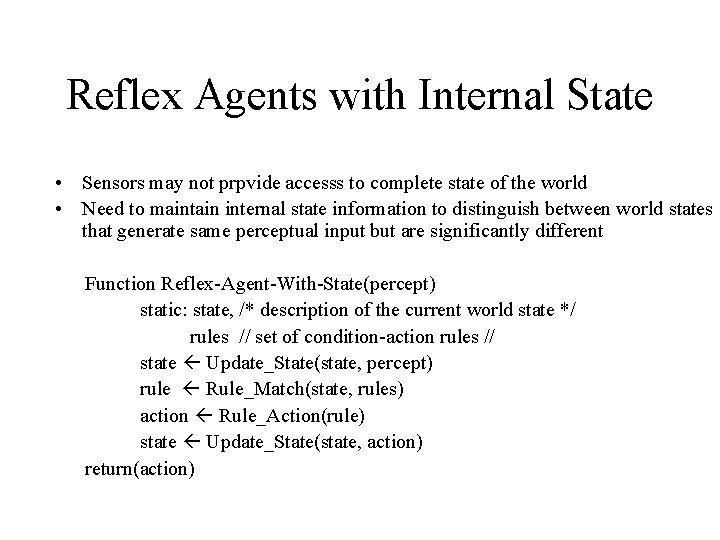

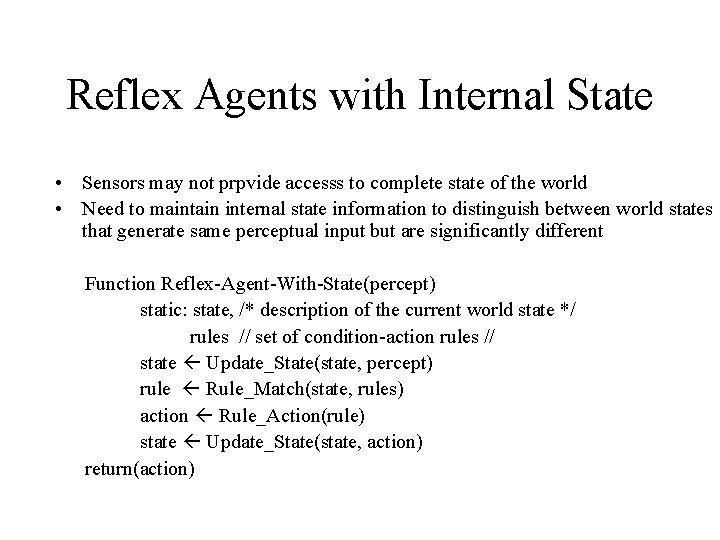

Reflex Agents with Internal State

- Sensors may not provide access to the complete state of the world
- The agent needs to maintain internal state information to distinguish between world states that generate the same perceptual input but are significantly different

```
Function Reflex-Agent-With-State(percept)
    static: state,   /* description of the current world state */
            rules    /* set of condition-action rules */
    state  ← Update_State(state, percept)
    rule   ← Rule_Match(state, rules)
    action ← Rule_Action(rule)
    state  ← Update_State(state, action)
    return action
```
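The following Python sketch follows the same four steps, again assuming a hypothetical two-square vacuum world. The internal state is the last known status of each square; `update_state_from_percept` and `update_state_from_action` play the two roles of `Update_State`, and `rule_action` merges `Rule_Match` and `Rule_Action` for brevity.

```python
from typing import Dict, Tuple

Percept = Tuple[str, str]  # (location, status)


class ReflexAgentWithState:
    """Reflex agent that remembers the last known status of each square."""

    def __init__(self) -> None:
        # Internal state: last known status per location (unknown at start).
        self.world: Dict[str, str] = {"A": "Unknown", "B": "Unknown"}
        self.location = "A"

    def update_state_from_percept(self, percept: Percept) -> None:
        self.location, status = percept
        self.world[self.location] = status

    def update_state_from_action(self, action: str) -> None:
        # Predict the effect of the agent's own action on its world model.
        if action == "Suck":
            self.world[self.location] = "Clean"

    def rule_action(self) -> str:
        # Condition-action rules evaluated against the internal state.
        if self.world[self.location] == "Dirty":
            return "Suck"
        if all(status == "Clean" for status in self.world.values()):
            return "NoOp"
        return "Right" if self.location == "A" else "Left"

    def __call__(self, percept: Percept) -> str:
        self.update_state_from_percept(percept)
        action = self.rule_action()
        self.update_state_from_action(action)
        return action


agent = ReflexAgentWithState()
print(agent(("A", "Dirty")))  # Suck
print(agent(("A", "Clean")))  # Right
print(agent(("B", "Clean")))  # NoOp
```

The percept `("B", "Clean")` produces `NoOp` here only because the agent remembers that A is already clean; a fresh agent given the same percept would move `Left`. That is the "same perceptual input, different world state" case the slide describes.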

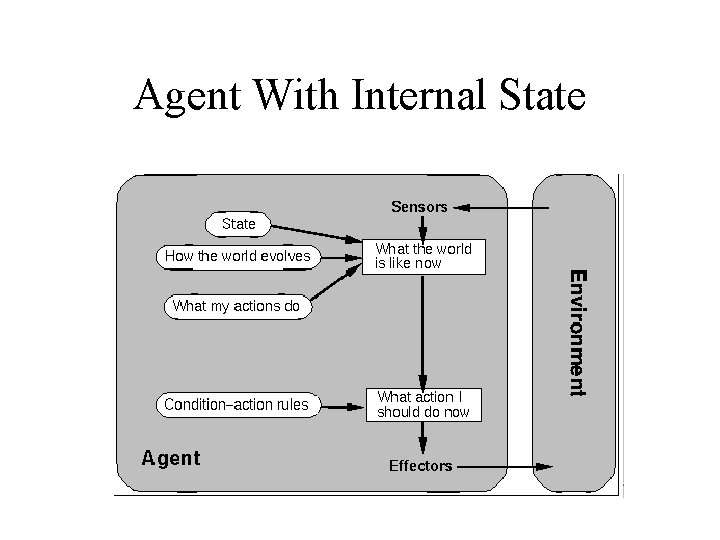

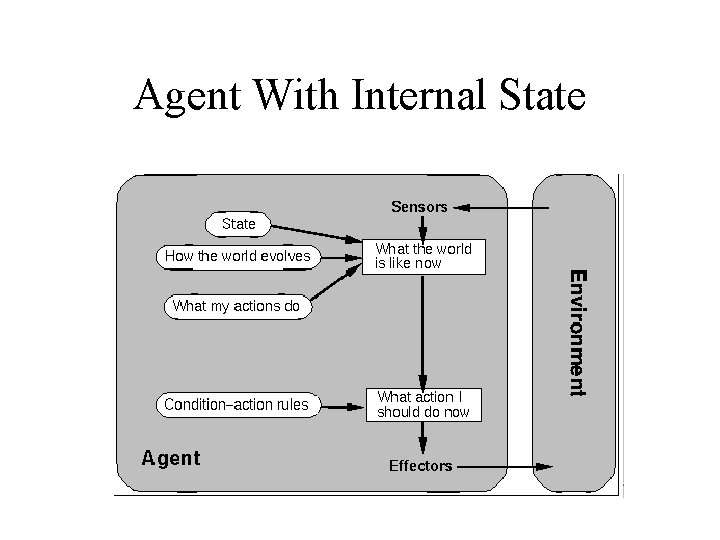

Agent With Internal State

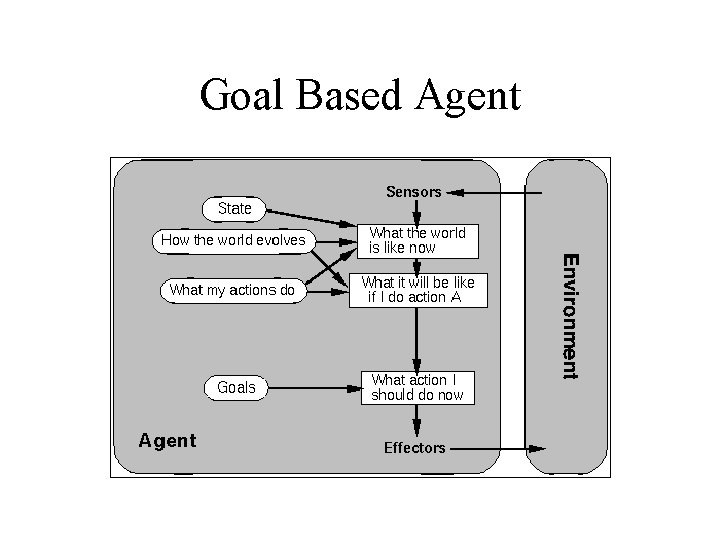

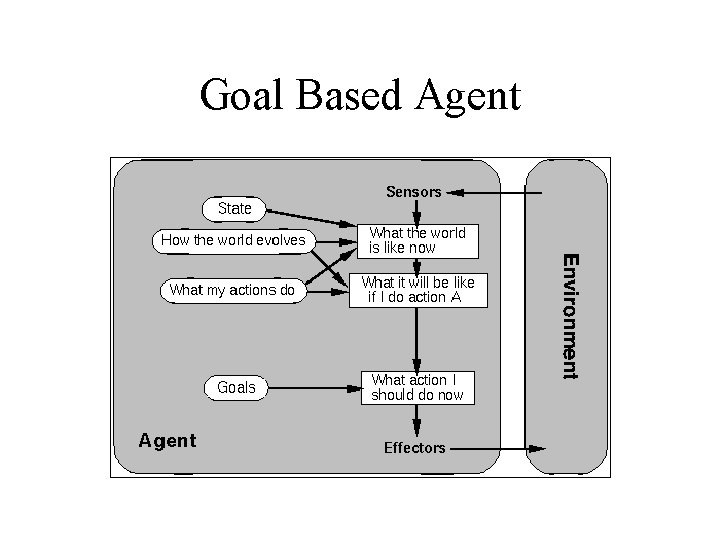

Goal Based Agents

- Knowing the current state of the environment is often not enough to decide what action to take
- The agent also needs goal information
- Combine goal information with the possible actions to choose those that achieve the goal
- Deciding the best action to take is not always easy
- Use search and planning to find action sequences that achieve the goal
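Search is one straightforward way to turn goal information into an action sequence. Below is a minimal breadth-first search sketch in Python; the state graph, state names, and action labels are a hypothetical example, and breadth-first search is only one of many possible search strategies.

```python
from collections import deque
from typing import Dict, List, Optional, Set

# Hypothetical transition model: state -> {action: next_state}
TRANSITIONS: Dict[str, Dict[str, str]] = {
    "Home": {"walk": "Park", "drive": "Mall"},
    "Park": {"walk": "Library"},
    "Mall": {"drive": "Library", "walk": "Cafe"},
    "Library": {"walk": "Cafe"},
    "Cafe": {},
}


def plan(start: str, goal: Set[str]) -> Optional[List[str]]:
    """Breadth-first search for a shortest action sequence reaching a goal state."""
    frontier = deque([(start, [])])
    visited = {start}
    while frontier:
        state, actions = frontier.popleft()
        if state in goal:
            return actions
        for action, nxt in TRANSITIONS.get(state, {}).items():
            if nxt not in visited:
                visited.add(nxt)
                frontier.append((nxt, actions + [action]))
    return None  # goal unreachable


print(plan("Home", {"Cafe"}))  # ['drive', 'walk']
```

Because breadth-first search expands states in order of distance, the first goal state it dequeues is reached by a sequence with the fewest actions.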

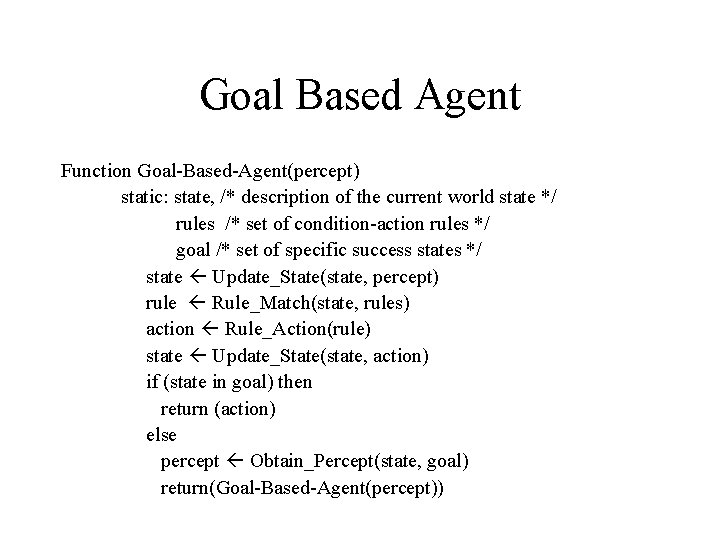

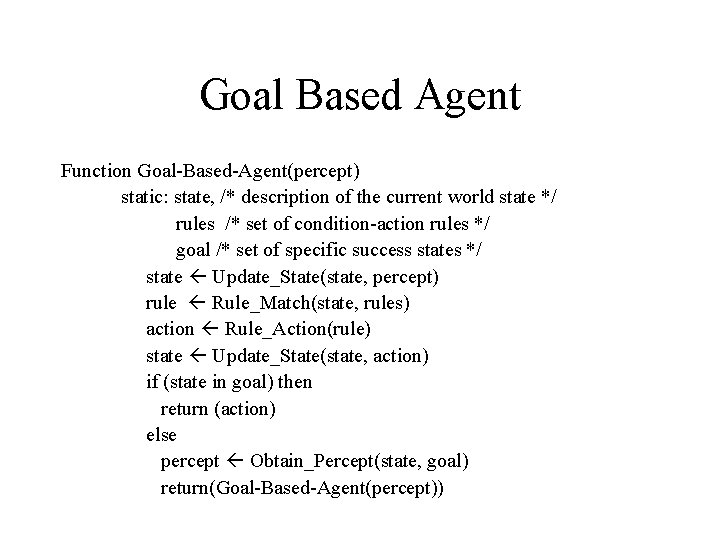

Goal Based Agent

```
Function Goal-Based-Agent(percept)
    static: state,   /* description of the current world state */
            rules,   /* set of condition-action rules */
            goal     /* set of specific success states */
    state  ← Update_State(state, percept)
    rule   ← Rule_Match(state, rules)
    action ← Rule_Action(rule)
    state  ← Update_State(state, action)
    if (state in goal) then
        return action
    else
        percept ← Obtain_Percept(state, goal)
        return Goal-Based-Agent(percept)
```
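One way to read `Goal-Based-Agent` is as a simulate-until-goal loop. The Python sketch below replaces the recursion with a bounded loop, so that a rule set which never reaches the goal cannot recurse forever. It assumes a hypothetical corridor of positions 0 to 4, a percept that reveals the full state (so updating from a percept simply adopts it), and an `Obtain_Percept` that observes the predicted state. For illustration it returns the predicted final state together with the action.

```python
from typing import Callable, List, Optional, Set, Tuple

State = int                                 # position in a hypothetical corridor 0..4
Rule = Tuple[Callable[[State], bool], str]  # (condition, action)

RULES: List[Rule] = [(lambda s: s < 4, "Right"), (lambda s: True, "Stay")]
GOAL: Set[State] = {3, 4}                   # set of success states
EFFECT = {"Right": 1, "Stay": 0}


def rule_match(state: State, rules: List[Rule]) -> Rule:
    # First rule whose condition holds; the last rule is a catch-all.
    return next(rule for rule in rules if rule[0](state))


def goal_based_agent(percept: State, max_steps: int = 100) -> Optional[Tuple[str, State]]:
    """Iterative version of Goal-Based-Agent: the recursion becomes a bounded loop."""
    for _ in range(max_steps):
        state = percept                       # Update_State(state, percept)
        action = rule_match(state, RULES)[1]  # Rule_Match + Rule_Action
        state = state + EFFECT[action]        # Update_State(state, action): predicted result
        if state in GOAL:
            return action, state
        percept = state                       # Obtain_Percept: observe the prediction
    return None                               # no goal state reached within max_steps


print(goal_based_agent(1))  # ('Right', 3)
```

The loop stops at the first state that passes the goal test, here position 3, even though position 4 is also a goal; a plain goal test cannot tell the two apart.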

Goal Based Agent

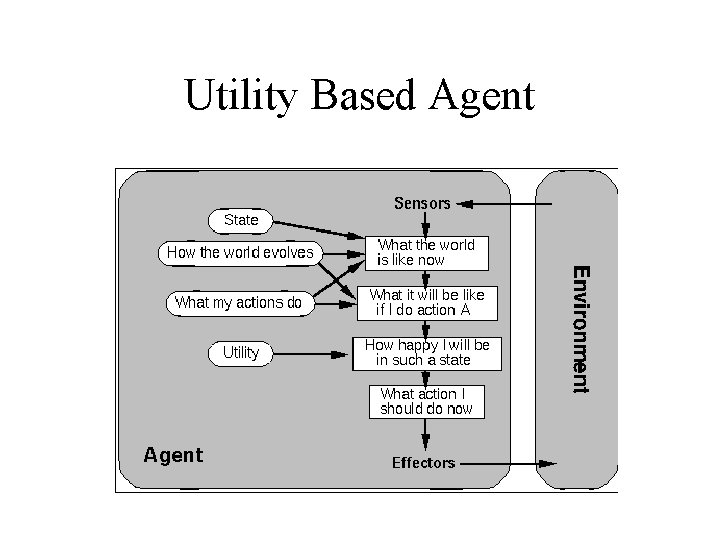

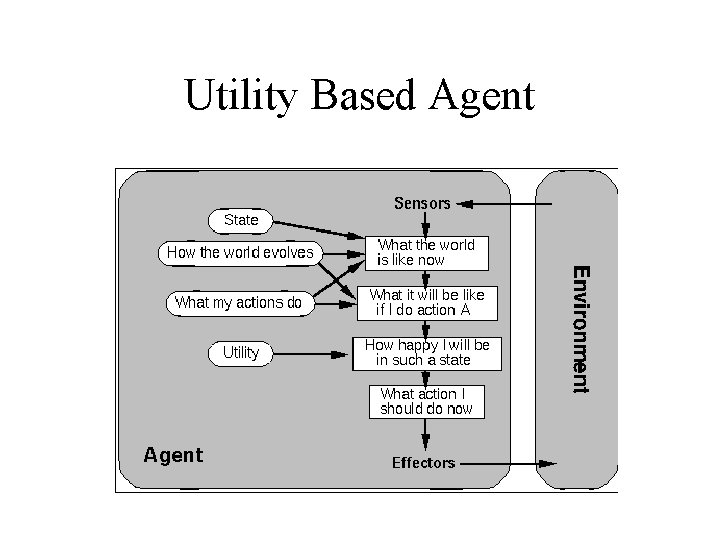

Utility Based Agents

- Goals alone are not enough to generate high-quality behaviour
- In goal-based agents, states are classified only as successful or unsuccessful
- We need a method to distinguish the level of utility, or gain, in a state
- Utility: a function that maps a (successful) state onto a real number describing the associated degree of success
- Utility functions allow for:
  - Specifying tradeoffs between conflicting or alternative goals
  - Specifying a way in which the likelihood of success can be weighed against the importance of alternative goals
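Weighing likelihood of success against importance is commonly done with expected utility: each possible outcome of an action is weighted by its probability, and the agent picks the action with the highest weighted sum. The actions, probabilities, and utility values in this Python sketch are invented purely for illustration.

```python
from typing import Dict, List, Tuple

# Hypothetical outcome model: action -> list of (probability, utility of resulting state)
OUTCOMES: Dict[str, List[Tuple[float, float]]] = {
    "safe_route": [(1.0, 6.0)],                # certain, moderately good
    "fast_route": [(0.7, 10.0), (0.3, -2.0)],  # usually better, sometimes bad
}


def expected_utility(action: str) -> float:
    # Sum of probability-weighted utilities over the action's possible outcomes.
    return sum(p * u for p, u in OUTCOMES[action])


def choose_action() -> str:
    # Pick the action whose expected utility is highest.
    return max(OUTCOMES, key=expected_utility)


print(choose_action())  # fast_route
```

Here `fast_route` scores about 6.4 against 6.0 for `safe_route`. Raising the probability of the bad outcome would tip the choice the other way, which is the kind of tradeoff a binary goal test cannot express.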

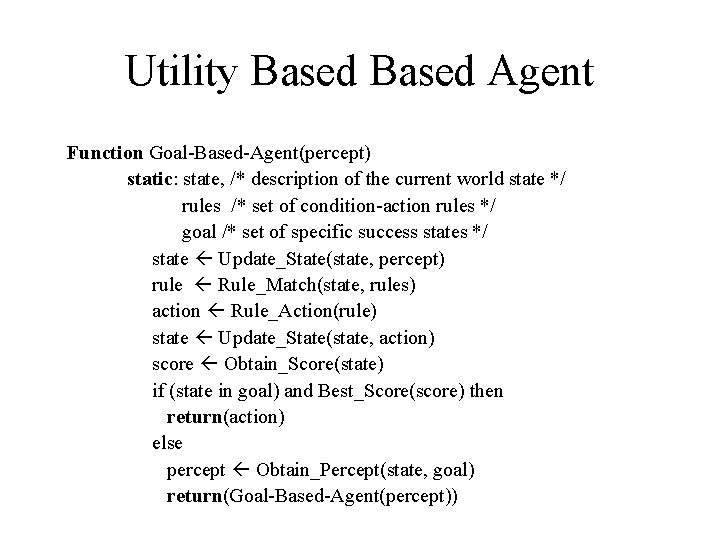

Utility Based Agent

```
Function Utility-Based-Agent(percept)
    static: state,   /* description of the current world state */
            rules,   /* set of condition-action rules */
            goal     /* set of specific success states */
    state  ← Update_State(state, percept)
    rule   ← Rule_Match(state, rules)
    action ← Rule_Action(rule)
    state  ← Update_State(state, action)
    score  ← Obtain_Score(state)
    if (state in goal) and Best_Score(score) then
        return action
    else
        percept ← Obtain_Percept(state, goal)
        return Utility-Based-Agent(percept)
```
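A Python sketch of the same loop with the extra score check, written as a bounded loop instead of recursion. It assumes a hypothetical corridor of positions 0 to 4 with goal states 3 and 4, made-up utility values standing in for `Obtain_Score`, and a hypothetical `best_score` that accepts only the highest utility among goal states. For illustration it returns the predicted final state together with the action.

```python
from typing import Callable, List, Optional, Set, Tuple

State = int                                 # position in a hypothetical corridor 0..4
Rule = Tuple[Callable[[State], bool], str]  # (condition, action)

RULES: List[Rule] = [(lambda s: s < 4, "Right"), (lambda s: True, "Stay")]
GOAL: Set[State] = {3, 4}
EFFECT = {"Right": 1, "Stay": 0}
UTILITY = {0: 0.0, 1: 0.1, 2: 0.2, 3: 0.6, 4: 1.0}  # hypothetical Obtain_Score values


def rule_match(state: State, rules: List[Rule]) -> Rule:
    return next(rule for rule in rules if rule[0](state))


def best_score(score: float) -> bool:
    # Hypothetical Best_Score: the score equals the best utility among goal states.
    return score == max(UTILITY[g] for g in GOAL)


def utility_based_agent(percept: State, max_steps: int = 100) -> Optional[Tuple[str, State]]:
    for _ in range(max_steps):
        state = percept                       # Update_State(state, percept)
        action = rule_match(state, RULES)[1]  # Rule_Match + Rule_Action
        state = state + EFFECT[action]        # Update_State(state, action)
        score = UTILITY[state]                # Obtain_Score(state)
        if state in GOAL and best_score(score):
            return action, state
        percept = state                       # Obtain_Percept: observe the prediction
    return None


print(utility_based_agent(1))  # ('Right', 4)
```

A plain goal test would accept position 3 as soon as it is reached; the score check makes the agent continue to position 4, the goal state with the higher utility.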

Utility Based Agent

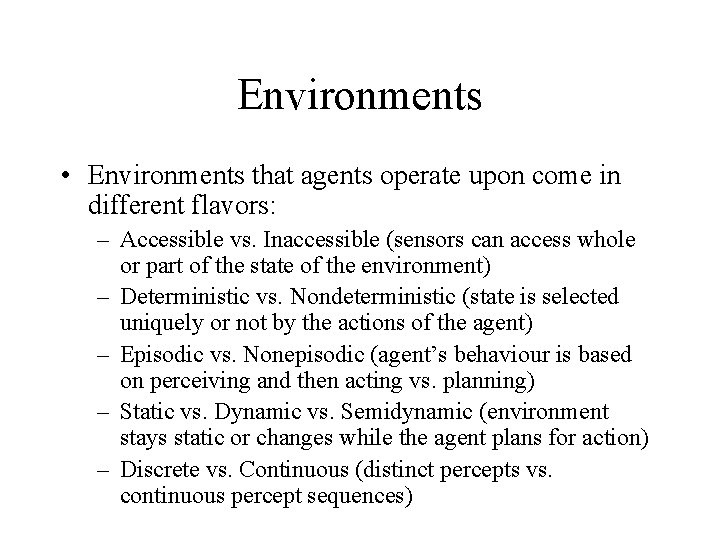

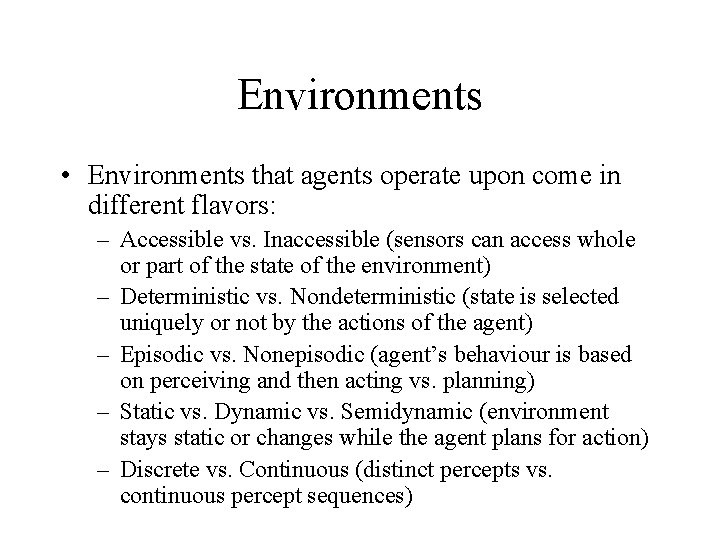

Environments

- Environments that agents operate in come in different flavors:
  - Accessible vs. Inaccessible (the sensors give access to the complete state of the environment, or only to part of it)
  - Deterministic vs. Nondeterministic (the next state is, or is not, uniquely determined by the current state and the agent's actions)
  - Episodic vs. Nonepisodic (the agent's experience is divided into independent perceive-then-act episodes vs. its actions affect later situations, so it must plan ahead)
  - Static vs. Dynamic vs. Semidynamic (the environment stays unchanged or changes while the agent deliberates; in a semidynamic environment the world itself does not change, but the agent's performance score does)
  - Discrete vs. Continuous (a limited number of distinct percepts and actions vs. continuously varying ones)
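The five dimensions can be recorded as a small data structure. This Python sketch is one possible encoding; the field names and the `all_hard_properties` helper are hypothetical, and the two example classifications follow the usual textbook treatment of chess played with a clock and of taxi driving.

```python
from dataclasses import dataclass
from enum import Enum


class Dynamics(Enum):
    STATIC = "static"
    SEMIDYNAMIC = "semidynamic"
    DYNAMIC = "dynamic"


@dataclass(frozen=True)
class EnvironmentType:
    accessible: bool
    deterministic: bool
    episodic: bool
    dynamics: Dynamics
    discrete: bool

    def all_hard_properties(self) -> bool:
        """True if the environment is inaccessible, nondeterministic,
        nonepisodic, dynamic and continuous all at once."""
        return (not self.accessible and not self.deterministic
                and not self.episodic and self.dynamics is Dynamics.DYNAMIC
                and not self.discrete)


chess_with_clock = EnvironmentType(True, True, False, Dynamics.SEMIDYNAMIC, True)
taxi_driving = EnvironmentType(False, False, False, Dynamics.DYNAMIC, False)

print(chess_with_clock.all_hard_properties())  # False
print(taxi_driving.all_hard_properties())      # True
```

Chess with a clock is semidynamic because the board does not change while a player thinks, but the clock, and hence the performance score, keeps running.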

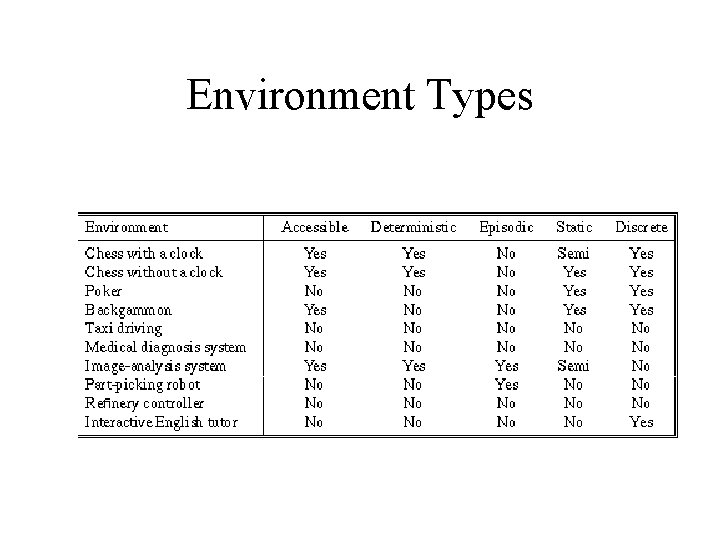

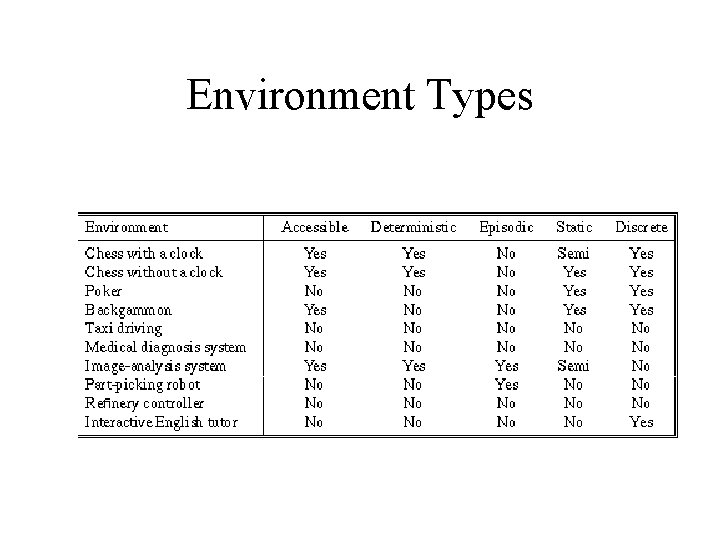

Environment Types

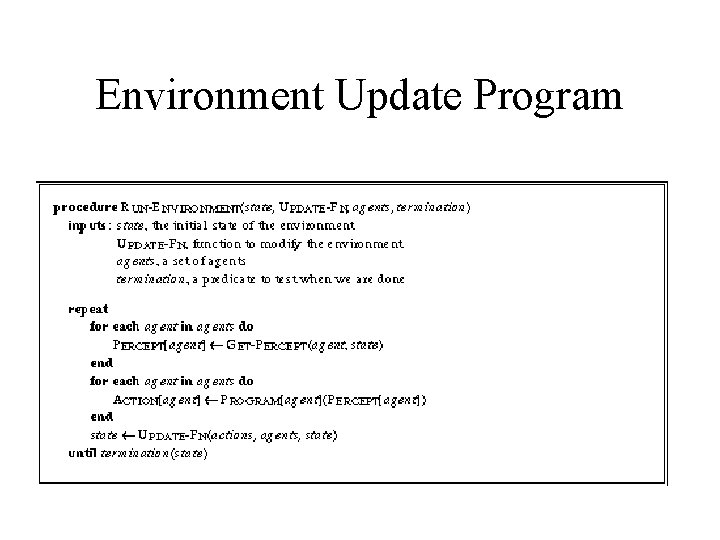

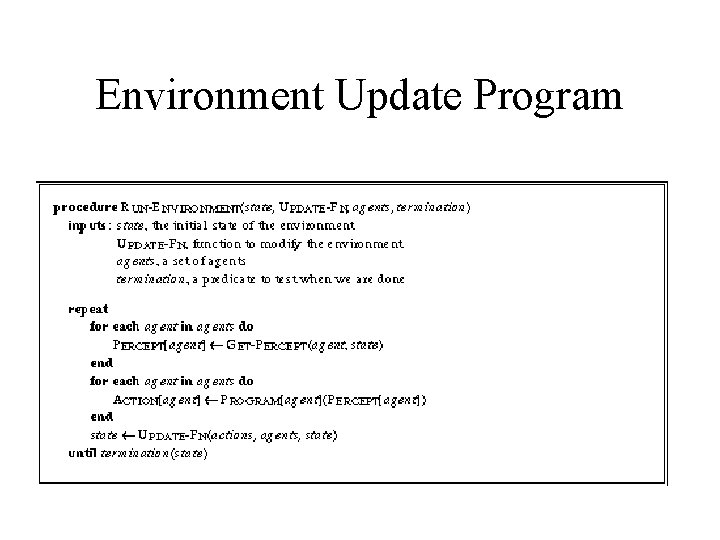

Environment Update Program

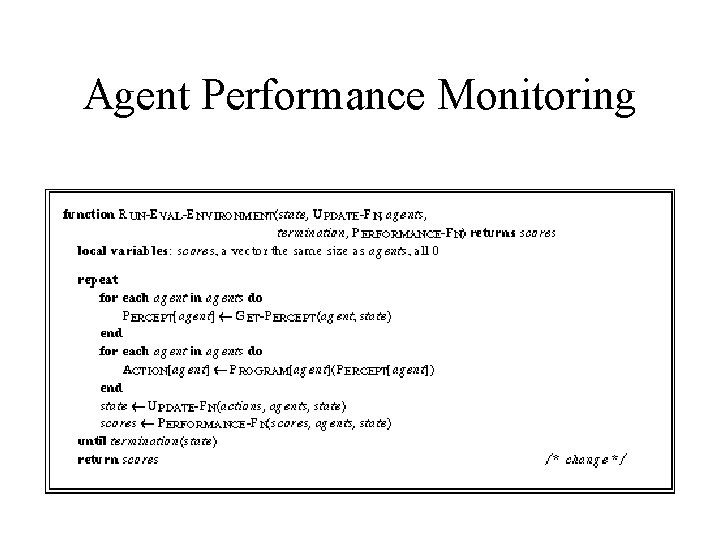

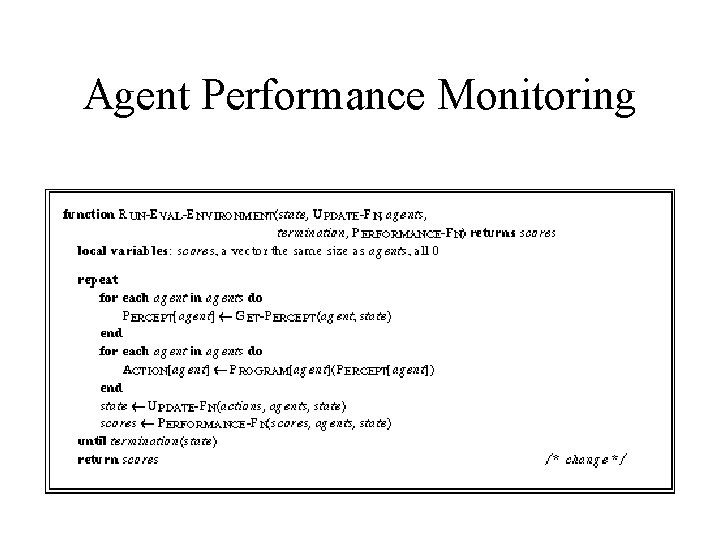

Agent Performance Monitoring