Chapter 2: Agent Architectures and Hierarchical Control

Chapter 2: Agent Architectures and Hierarchical Control
Textbook: Artificial Intelligence: Foundations of Computational Agents, 2nd Edition, David L. Poole and Alan K. Mackworth, Cambridge University Press, 2018.
Asst. Prof. Dr. Anilkumar K. G

Overview
• This topic discusses how an intelligent agent can perceive, reason, and act over time in an environment.
• It presents ways to design agents in terms of hierarchical decompositions, taking into account the knowledge that an agent needs to act intelligently.

Agents
• An agent is something that acts in an environment.
  – An agent can be a person, a robot, a dog, a worm, a computer program, etc.
• Agents interact with the environment through a body.
  – An embodied agent has a physical body.
  – A robot is an artificial purposive embodied agent.
• Purposive agents have preferences.
  – They prefer some states of the world to other states, and they act to try to achieve the states they prefer most.

Agents
• Agents receive information through their sensors.
  – An agent’s actions depend on the information it receives from its sensors.
  – These sensors may, or may not, reflect what is true in the world.
  – Sensors can be noisy, unreliable, or broken.
  – Even when sensors are reliable, there is still ambiguity about the world based on sensor readings.
    • For example, the agent may only know that “sensor s appears to be producing value v.”
  – An agent must act on the information it has available.

Agents
• Robotic agents act in the world through their actuators (also called effectors).
  – Actuators can also be noisy, unreliable, slow, or broken.
  – What an agent controls is the message (command) it sends to its actuators.
  – Agents often carry out actions to find more information about the world,
    • such as opening a cupboard door to find out what is in the cupboard, or giving students a test to determine their knowledge.

Agents
• An agent is something that acts in the world.
• A situated agent perceives, reasons, and acts in time in an environment.
• A purposive agent prefers some states of the world to other states, and acts to achieve the states it prefers.
• Agents interact with the environment through a body.
• An embodied agent has a physical body.
• A robot is an artificial purposive embodied agent.

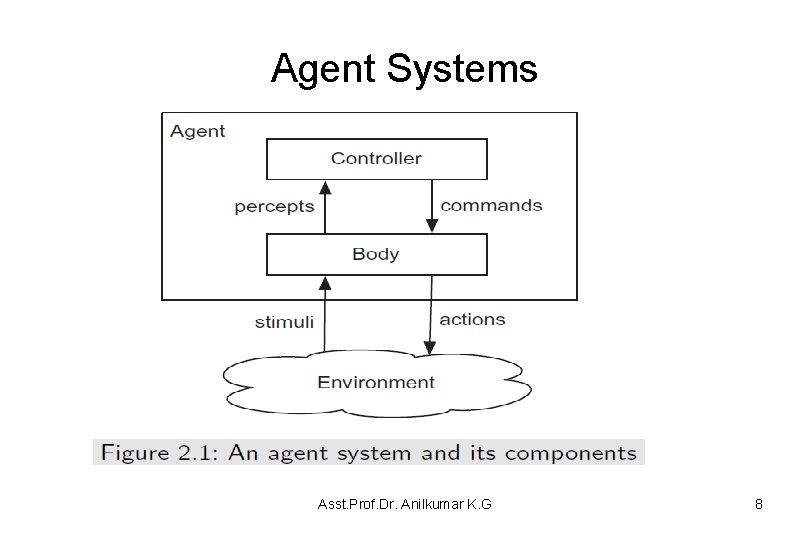

Agent Systems
• Figure 2.1 depicts the general interaction between an agent and its working environment.
  – An agent system is made up of an agent and the environment in which it acts.
  – An agent is made up of a body and a controller.
    • The controller is the brain of the agent.
  – The controller receives percepts from the body and sends commands to the body.
  – A body includes sensors that convert stimuli into percepts and actuators that convert commands into actions.
    • Stimuli include light, sound, words typed on a keyboard, mouse movements, and information obtained from a web page or from a database.


Sensors and Actions
• Common sensors include touch sensors, cameras, infrared sensors, sonar, microphones, keyboards, mice, and XML readers (which extract information from web pages).
• As a prototypical sensor, a camera senses light coming into its lens and converts it into a 2D array of intensity values called pixels.
  – More often, percepts consist of higher-level features such as lines, edges, and depth information.
  – Often the percepts are more specialized, for example, the positions of bright orange dots, the part of the display a student is looking at, or the hand gesture signals given by a human.
• Actions include
  – steering, accelerating wheels, moving links of arms, speaking, displaying information, or sending a POST command to a website.

The Agent Function
• Agents are situated in time: they receive sensory data in time and do actions in time.
• The action that an agent does at a particular time is a function of its inputs.
• Let T be the set of time points.
  – Assume that T is totally ordered and has some metric that can be used to measure the temporal distance between any two time points.
    • Basically, we assume that T can be mapped to some subset of the real line.

The Agent Function
• T is dense if there is always another time point between any two time points;
  – this implies there must be infinitely many time points between any two points.
• T is discrete if there exist only a finite number of time points between any two time points;
  – for example, there is a time point at every hundredth of a second, or every day, or there may be time points whenever interesting events occur.
• Discrete time has the property that, for all times except a last time, there is always a next time.
• Initially, we assume that time is discrete and goes on forever:
  – Thus, for each time there is a next time. We write t + 1 for the next time after time t; the time points do not need to be equally spaced.

The Agent Function
• Let P be the set of all possible percepts.
  – A percept trace is a function from T into P (it specifies what is observed at each time).
  – It is the sequence of all past, present, and future percepts received by the controller.
• Let C be the set of all commands.
  – A command trace is a function from T into C (it specifies the command for each time point).
  – It is the sequence of all past, present, and future commands issued by the controller.
• The commands can be a function of the history of percepts.
  – This gives rise to the concept of a transduction: a function from percept traces into command traces.

The Agent Function
• A transduction is causal if, for all times t, the command at time t depends only on percepts up to t.
• A controller is an implementation of a causal transduction.
• The history of an agent at time t is the percept trace of the agent for all times before or at time t.
• Thus, a causal transduction specifies a function from the agent’s history at time t into the command at time t.
• A causal transduction is a function of an agent’s history.
  – Normally, an agent does not have direct access to its entire history (only part of it is available to the agent, e.g., in its KB).
    • It only has access to what it has remembered at time t (its current memory).
[Slide diagram labels: remembered_value(x), History (KB), Reasoning]
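A minimal Python sketch can make causality concrete. It assumes a hypothetical agent whose percepts are numeric prices and whose commands are `"buy"` or `"wait"`; the names `causal_command` and `run_transduction` and the 0.9 factor are illustrative choices, not from the textbook. The command at each time is computed from the whole history up to that time and nothing later.

```python
from typing import List, Sequence

def causal_command(history: Sequence[float]) -> str:
    """Hypothetical causal transduction step: the command at time t is
    computed only from the percepts p_0, ..., p_t (the history at time t)."""
    current = history[-1]
    average = sum(history) / len(history)
    # Issue "buy" when the current percept is well below the historical average.
    return "buy" if current < 0.9 * average else "wait"

def run_transduction(percept_trace: Sequence[float]) -> List[str]:
    """Map a finite prefix of a percept trace to the corresponding command trace.
    At time t only percept_trace[:t + 1] is visible, so the mapping is causal."""
    return [causal_command(percept_trace[: t + 1]) for t in range(len(percept_trace))]

commands = run_transduction([100.0, 102.0, 98.0, 85.0, 101.0])
print(commands)  # ['wait', 'wait', 'wait', 'buy', 'wait']
```

Because each command only sees `percept_trace[:t + 1]`, changing a later percept can never change an earlier command. This version rereads the entire history at every step, which a real agent usually cannot do.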

The Agent Functions
• The memory or belief state of an agent at time t encodes all of the agent's history that it has access to.
  – An agent has access only to the part of its history that it has encoded in its belief state (memory).
  – The belief state of an agent encapsulates the information about its past that it can use for current and future actions.
  – At any time, an agent has access to its belief state and its percepts.
• The belief state can contain any information, subject to the agent’s memory and processing limitations.

The Agent Functions
• Some instances of belief state include the following:
  – For an agent that follows a fixed sequence of instructions, the belief state may be a counter that records its current position in the sequence.
  – The belief state can contain specific facts that are useful,
    • for example, where the delivery robot left the parcel in order to go and get the key, or where it has already checked for the key.
  – The belief state could encode a model or a partial model of the state of the world.
    • An agent could maintain its best guess about the current state of the world or could have a probability distribution over possible world states.
  – The belief state could be a representation of the dynamics of the world.
    • From its percepts, an agent could then reason about what is true in the world.

The Agent Functions
• A controller maintains the agent’s belief state and determines what command to issue at each time.
• A belief state transition function for discrete time is a function $\mathit{remember}: S \times P \to S$,
  – where S is the set of belief states and P is the set of percepts; $s_{t+1} = \mathit{remember}(s_t, p_t)$ means that $s_{t+1}$ is the new belief state following belief state $s_t$ when $p_t$ is observed.
• A command function is a function $\mathit{command}: S \times P \to C$,
  – where S is the set of belief states, P is the set of percepts, and C is the set of possible commands; $c_t = \mathit{command}(s_t, p_t)$ means that the controller issues command $c_t$ when the belief state is $s_t$ and $p_t$ is observed.
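The two functions can be sketched in Python, assuming a hypothetical agent whose percepts are numbers and whose belief state is a pair (running total, count) of the percepts seen so far. The functions `remember` and `command` follow the signatures remember: S × P → S and command: S × P → C; the `"buy"`/`"wait"` rule is purely illustrative.

```python
from typing import List, NamedTuple

class Belief(NamedTuple):
    """Hypothetical belief state: a compact summary of past percepts."""
    total: float = 0.0
    count: int = 0

def remember(s: Belief, p: float) -> Belief:
    """Belief-state transition function S x P -> S: s_{t+1} = remember(s_t, p_t)."""
    return Belief(s.total + p, s.count + 1)

def command(s: Belief, p: float) -> str:
    """Command function S x P -> C: c_t = command(s_t, p_t)."""
    # Average over the remembered percepts plus the current one (defined even at t = 0).
    average = (s.total + p) / (s.count + 1)
    return "buy" if p < 0.9 * average else "wait"

def run_controller(percepts: List[float]) -> List[str]:
    """At each time: issue a command from (s_t, p_t), then update the belief state."""
    s = Belief()  # initial belief state s_0
    commands = []
    for p in percepts:
        commands.append(command(s, p))
        s = remember(s, p)
    return commands

print(run_controller([100.0, 102.0, 98.0, 85.0, 101.0]))  # ['wait', 'wait', 'wait', 'buy', 'wait']
```

The belief state here is only two numbers, yet the commands equal those of a rule that averages the full percept history; choosing what to remember is what lets a controller with bounded memory implement such a transduction.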

The Agent Functions
• The belief-state transition function and the command function together specify a causal transduction for an agent.
  – That is, the next command is computed only from the current percepts and the current belief state, which summarizes the past.
• If there is a finite number of possible belief states, the controller is called a finite state controller or a finite state machine.
  – At every time, a controller has to decide:
    • What should it do?
    • What should it remember? (How should it update its memory?)
  – as a function of its percepts and its memory.
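As an illustration, here is a hypothetical finite state controller for a simple delivery task, written in Python. The belief states, percepts, and commands are invented for the example; because there are only two belief states, both `remember` and `command` can be written as finite lookup tables, which is exactly what makes this a finite state machine.

```python
from typing import Dict, List, Tuple

# Hypothetical finite state controller for a simple delivery task.
# Belief states S = {"fetching", "delivering"}; percepts P = {"none", "at_parcel", "at_goal"}.
Key = Tuple[str, str]  # (belief state, percept)

REMEMBER: Dict[Key, str] = {  # belief-state transition function S x P -> S
    ("fetching", "none"): "fetching",
    ("fetching", "at_parcel"): "delivering",
    ("fetching", "at_goal"): "fetching",
    ("delivering", "none"): "delivering",
    ("delivering", "at_parcel"): "delivering",
    ("delivering", "at_goal"): "fetching",
}

COMMAND: Dict[Key, str] = {  # command function S x P -> C
    ("fetching", "none"): "go_to_parcel",
    ("fetching", "at_parcel"): "pick_up",
    ("fetching", "at_goal"): "go_to_parcel",
    ("delivering", "none"): "go_to_goal",
    ("delivering", "at_parcel"): "go_to_goal",
    ("delivering", "at_goal"): "put_down",
}

def run(percepts: List[str], state: str = "fetching") -> Tuple[List[str], str]:
    """Issue one command per percept; return the commands and the final belief state."""
    commands = []
    for p in percepts:
        commands.append(COMMAND[(state, p)])
        state = REMEMBER[(state, p)]
    return commands, state

cmds, final_state = run(["none", "at_parcel", "none", "at_goal", "none"])
print(cmds)         # ['go_to_parcel', 'pick_up', 'go_to_goal', 'put_down', 'go_to_parcel']
print(final_state)  # fetching
```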

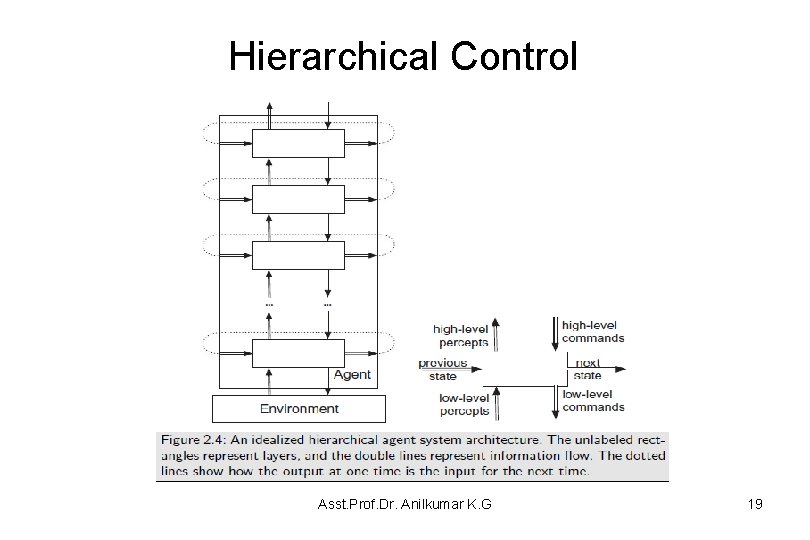

Hierarchical Control
• A hierarchical agent architecture is depicted in Figure 2.4.
  – Each layer sees the layers below it as a virtual body from which it gets percepts and to which it sends commands.
  – The lower-level controllers can run much faster, react to the world more quickly, and deliver a simpler view of the world to the higher-level controllers.
• There can be multiple features passed from layer to layer and between states at different times.
  – There are three types of inputs to each layer at each time:
    (1) the features that come from the belief state;
    (2) the features representing the percepts from the layer below in the hierarchy;
    (3) the features representing the commands from the layer above in the hierarchy.
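The idea that each layer treats the layers below as a virtual body can be sketched with two toy Python layers. Everything here is hypothetical: a fast low layer that moves a one-dimensional position toward a setpoint, and a slow high layer whose belief state is a list of setpoints. The high layer never touches the world; it only sends setpoints down and reads positions up, and the low layer runs several ticks for each high-layer step.

```python
from typing import List, Optional

class LowLayer:
    """Hypothetical fast layer: moves a 1-D position one unit per tick toward a setpoint."""

    def __init__(self) -> None:
        self.position = 0  # this layer's belief state

    def step(self, setpoint: int) -> int:
        """Command from above: a setpoint. Percept sent up: the current position."""
        if self.position < setpoint:
            self.position += 1
        elif self.position > setpoint:
            self.position -= 1
        return self.position

class HighLayer:
    """Hypothetical slow layer: works through a list of setpoints (its belief state)."""

    def __init__(self, targets: List[int]) -> None:
        self.targets = list(targets)

    def step(self, position: int) -> Optional[int]:
        """Percept from below: a position. Command sent down: the current setpoint."""
        if self.targets and position == self.targets[0]:
            self.targets.pop(0)  # current setpoint reached
        return self.targets[0] if self.targets else None

def run(targets: List[int], ticks_per_step: int = 3, max_steps: int = 20) -> List[int]:
    """Run the two-layer hierarchy; the low layer ticks several times per high-layer step."""
    high, low = HighLayer(targets), LowLayer()
    position = low.position
    trace = []
    for _ in range(max_steps):
        setpoint = high.step(position)
        if setpoint is None:  # plan finished
            break
        for _ in range(ticks_per_step):
            position = low.step(setpoint)
            trace.append(position)
    return trace

print(run([2, 1]))  # [1, 2, 2, 1, 1, 1]
```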


Hierarchical Control
• An implementation of a layer specifies how the outputs of a layer are a function of its inputs.
• To implement a controller, each input to a layer must get its value from somewhere.
  – High-level reasoning, carried out in the higher layers, is often discrete and qualitative.
  – Low-level reasoning, carried out in the lower layers, is often continuous and quantitative.
  – A controller that reasons in terms of both discrete and continuous values is called a hybrid system.

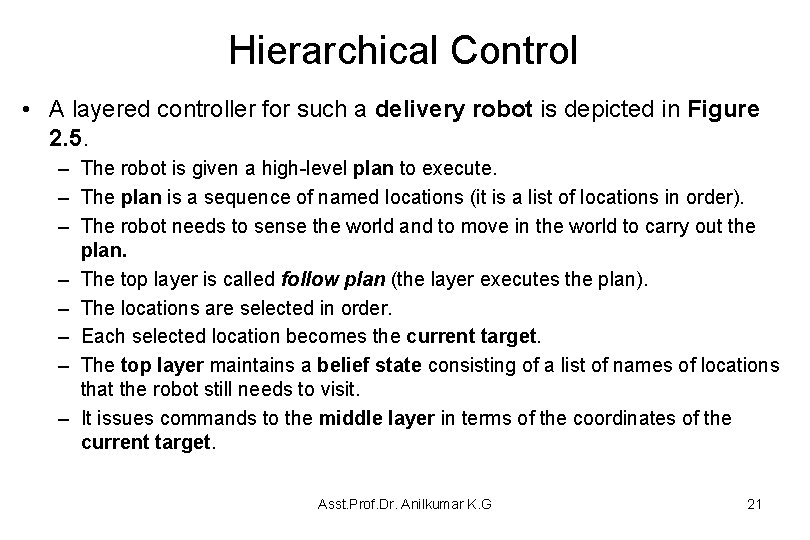

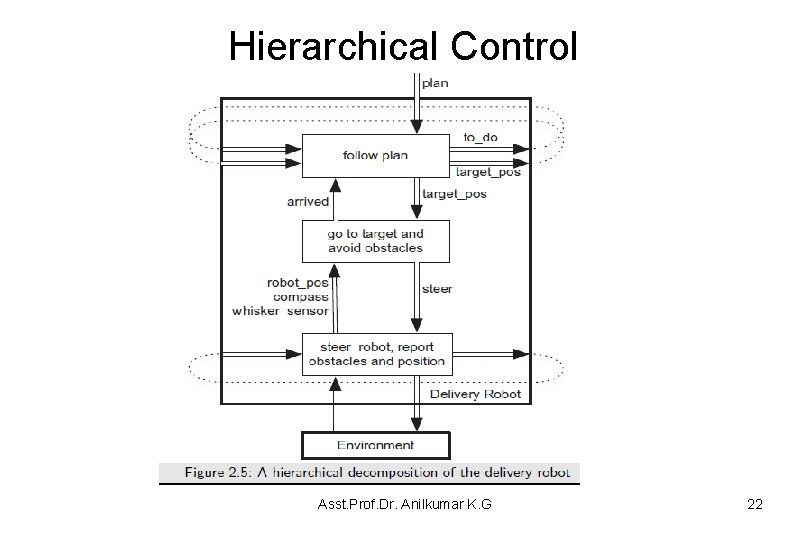

Hierarchical Control
• A layered controller for a delivery robot is depicted in Figure 2.5.
  – The robot is given a high-level plan to execute.
  – The plan is a sequence of named locations (a list of locations to visit in order).
  – The robot needs to sense the world and to move in the world to carry out the plan.
  – The top layer, called follow plan, executes the plan.
  – The locations are selected in order.
  – Each selected location becomes the current target.
  – The top layer maintains a belief state consisting of a list of names of locations that the robot still needs to visit.
  – It issues commands to the middle layer in terms of the coordinates of the current target.
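One step of the follow plan layer can be sketched as follows, assuming a hypothetical table `LOCATIONS` from location names to (x, y) coordinates and a boolean `arrived` percept from the middle layer. The belief state is the list of locations still to visit; when the middle layer reports arrival the head of the list is dropped, and the coordinates of the new head are sent down as the target. The timeout command is omitted for brevity.

```python
from typing import Dict, List, Optional, Tuple

Coord = Tuple[float, float]

# Hypothetical map from location names to (x, y) coordinates.
LOCATIONS: Dict[str, Coord] = {"mail": (10.0, 5.0), "o103": (4.0, 8.0), "goal": (0.0, 0.0)}

def follow_plan(to_do: List[str], arrived: bool) -> Tuple[List[str], Optional[Coord]]:
    """One step of the top layer.

    to_do: belief state, the names of the locations still to visit, in order.
    arrived: percept from the middle layer.
    Returns the new belief state and the target coordinates to send down
    (None once the plan is finished).
    """
    if arrived and to_do:
        to_do = to_do[1:]  # current target reached: move on to the next location
    if not to_do:
        return to_do, None
    return to_do, LOCATIONS[to_do[0]]

plan = ["mail", "o103", "goal"]
plan, target = follow_plan(plan, arrived=False)  # still heading to "mail"
plan, target = follow_plan(plan, arrived=True)   # "mail" reached
print(plan, target)  # ['o103', 'goal'] (4.0, 8.0)
```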


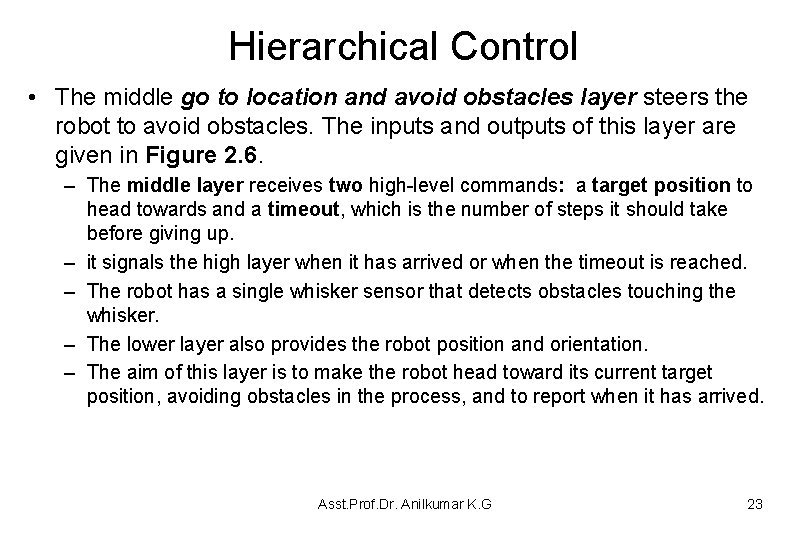

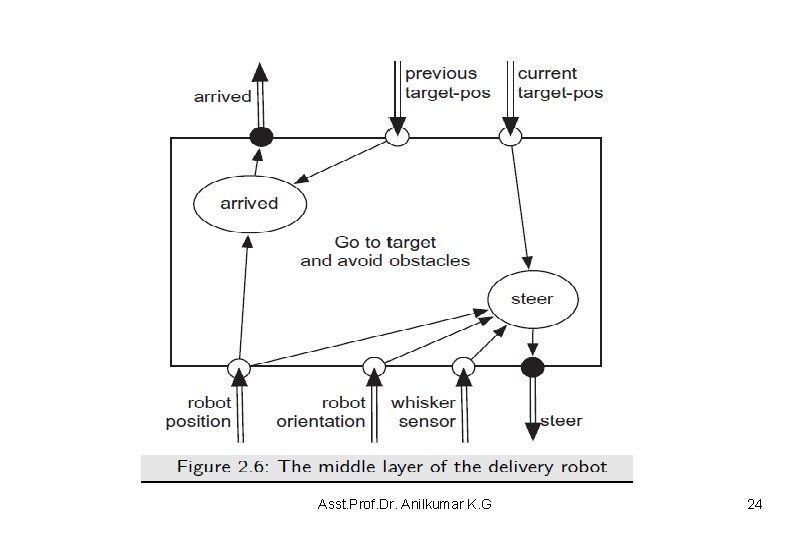

Hierarchical Control
• The middle layer, go to location and avoid obstacles, steers the robot to avoid obstacles. The inputs and outputs of this layer are given in Figure 2.6.
  – The middle layer receives two high-level commands: a target position to head towards and a timeout, which is the number of steps it should take before giving up.
  – It signals the top layer when it has arrived or when the timeout is reached.
  – The robot has a single whisker sensor that detects obstacles touching the whisker.
  – The lower layer also provides the robot position and orientation.
  – The aim of this layer is to make the robot head toward its current target position, avoiding obstacles in the process, and to report when it has arrived.
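A possible steering rule for this layer is sketched below in Python. It is an assumption for illustration, not necessarily the rule the textbook uses: when the whisker is touched the robot turns left; otherwise it compares the bearing to the target with its own orientation and turns toward the target, going straight when the difference is within a tolerance. The 11-degree default tolerance and the convention that headings are measured in degrees counter-clockwise from the x-axis are hypothetical.

```python
import math
from typing import Tuple

def steer(robot_pos: Tuple[float, float], robot_dir: float,
          target_pos: Tuple[float, float], whisker_on: bool,
          tolerance_deg: float = 11.0) -> str:
    """Return 'left', 'right' or 'straight' (a hypothetical steering rule).

    robot_dir is the heading in degrees, counter-clockwise from the x-axis.
    """
    if whisker_on:
        return "left"  # obstacle reflex (assumption): always turn left when touched
    dx = target_pos[0] - robot_pos[0]
    dy = target_pos[1] - robot_pos[1]
    bearing = math.degrees(math.atan2(dy, dx))
    # Signed angle from the heading to the target, normalised to [-180, 180).
    diff = (bearing - robot_dir + 180.0) % 360.0 - 180.0
    if abs(diff) <= tolerance_deg:
        return "straight"
    return "left" if diff > 0 else "right"

print(steer((0.0, 0.0), 0.0, (0.0, 10.0), False))    # left
print(steer((0.0, 0.0), 350.0, (10.0, 0.0), False))  # straight (wraps around 0 degrees)
```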


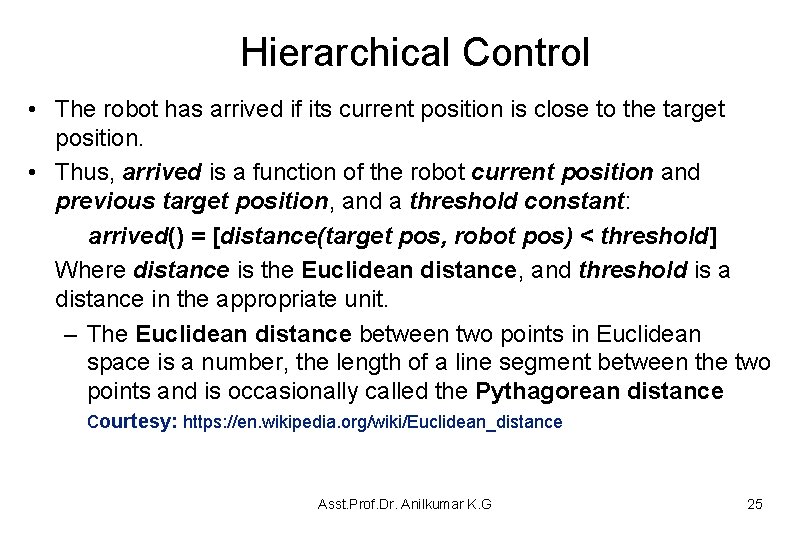

Hierarchical Control
• The robot has arrived if its current position is close to the target position.
• Thus, arrived is a function of the robot's current position, the previous target position, and a threshold constant:
  $\mathit{arrived}() = \big(\mathit{distance}(\mathit{target\_pos}, \mathit{robot\_pos}) < \mathit{threshold}\big)$
  where distance is the Euclidean distance, and threshold is a distance in the appropriate unit.
  – The Euclidean distance between two points in Euclidean space is the length of the line segment between them; it is occasionally called the Pythagorean distance (courtesy: https://en.wikipedia.org/wiki/Euclidean_distance).
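The arrival test translates almost directly into Python. This sketch passes the positions explicitly (on the slide they are inputs of the layer, so `arrived()` takes no arguments) and uses the standard library's `math.dist`, available since Python 3.8, for the Euclidean distance. The default threshold of 1.0 is a hypothetical value; a real robot would choose it from its units and positioning accuracy.

```python
import math
from typing import Sequence

def arrived(target_pos: Sequence[float], robot_pos: Sequence[float],
            threshold: float = 1.0) -> bool:
    """True when the robot is strictly within `threshold` of the target."""
    return math.dist(target_pos, robot_pos) < threshold

print(arrived((10.0, 5.0), (9.5, 5.2)))  # True  (distance is about 0.54)
print(arrived((10.0, 5.0), (7.0, 1.0)))  # False (distance is exactly 5.0)
```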

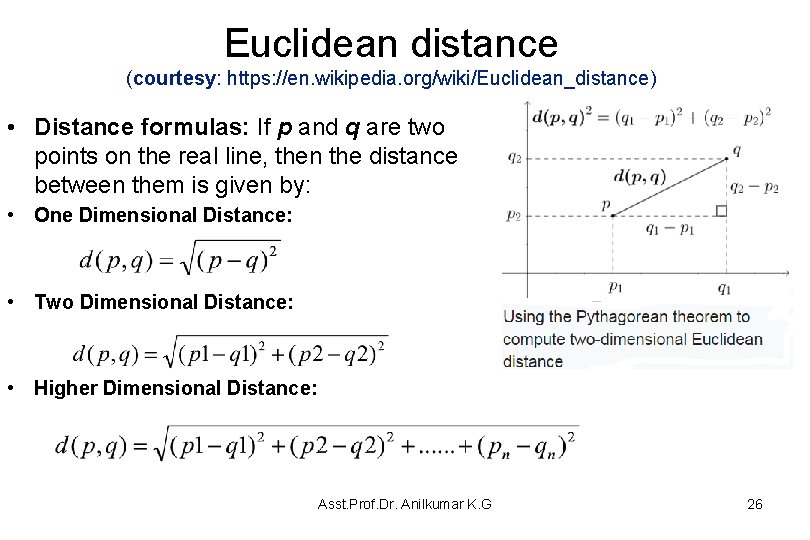

Euclidean distance (courtesy: https://en.wikipedia.org/wiki/Euclidean_distance)
• Distance formulas:
  – One dimension: if p and q are two points on the real line, the distance between them is $d(p, q) = |p - q|$.
  – Two dimensions: for $p = (p_1, p_2)$ and $q = (q_1, q_2)$, $d(p, q) = \sqrt{(q_1 - p_1)^2 + (q_2 - p_2)^2}$.
  – Higher dimensions: for $p = (p_1, \dots, p_n)$ and $q = (q_1, \dots, q_n)$, $d(p, q) = \sqrt{\sum_{i=1}^{n} (q_i - p_i)^2}$.
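In any number of dimensions, the Euclidean distance is the square root of the sum of squared coordinate differences, and the one- and two-dimensional formulas are special cases. A short Python sketch implementing that sum directly (the helper name `euclidean` is hypothetical) makes this easy to check:

```python
import math
from typing import Sequence

def euclidean(p: Sequence[float], q: Sequence[float]) -> float:
    """Euclidean distance: square root of the sum of squared coordinate differences."""
    if len(p) != len(q):
        raise ValueError("points must have the same dimension")
    return math.sqrt(sum((b - a) ** 2 for a, b in zip(p, q)))

print(euclidean([3.0], [-1.0]))                     # 4.0  (one dimension: |3 - (-1)|)
print(euclidean([0.0, 0.0], [3.0, 4.0]))            # 5.0  (two dimensions: sqrt(9 + 16))
print(euclidean([1.0, 2.0, 3.0], [4.0, 6.0, 3.0]))  # 5.0  (three dimensions: sqrt(9 + 16 + 0))
```

For one-dimensional points the sum has a single term, so the square root reduces to the absolute difference.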

Agents Modeling the World
• A model of a world is a representation of the state of the world at a particular time and/or the dynamics of the world.
  – One method is for the agent to maintain its belief about the world and update it based on its commands.
  – This approach requires a model of both the state of the world and the dynamics of the world.
    • Given the state at time t and the dynamics, the state at time t + 1 can be predicted. This process is known as dead reckoning.
  – For example, a robot could maintain an estimate of its position and update it based on its actions.
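Dead reckoning can be sketched as a belief update that uses only the commands and a dynamics model, never the sensors. The sketch assumes a hypothetical robot whose commands are (speed, turn_rate) pairs applied for one time step, with a simplified model in which the robot first turns and then moves straight; real dynamics are more complicated.

```python
import math
from typing import Iterable, NamedTuple, Tuple

class Pose(NamedTuple):
    x: float
    y: float
    heading: float  # radians, counter-clockwise from the x-axis

def predict(pose: Pose, speed: float, turn_rate: float, dt: float = 1.0) -> Pose:
    """Predict the pose at t + 1 from the pose at t and the command (speed, turn_rate).
    Simplified model: turn first, then move straight along the new heading."""
    heading = pose.heading + turn_rate * dt
    return Pose(pose.x + speed * dt * math.cos(heading),
                pose.y + speed * dt * math.sin(heading),
                heading)

def dead_reckon(start: Pose, commands: Iterable[Tuple[float, float]]) -> Pose:
    """Update the position estimate from commands alone (no sensor readings)."""
    pose = start
    for speed, turn_rate in commands:
        pose = predict(pose, speed, turn_rate)
    return pose

estimate = dead_reckon(Pose(0.0, 0.0, 0.0), [(1.0, 0.0), (1.0, math.pi / 2), (1.0, 0.0)])
print(round(estimate.x, 6), round(estimate.y, 6))  # 1.0 2.0
```

Any error in the dynamics model accumulates from step to step, which is why such estimates drift unless they are corrected using percepts.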

Embedded and Simulated Agents
• There are a number of ways an agent’s controller can be used:
  – An embedded agent is one that is run in the real world, where the actions are carried out in a real domain.
  – A simulated agent is one that is run with a simulated body and environment;
    • that is, where a program takes in the commands and returns appropriate percepts.
• An agent system model is where there are models of the controller, the body, and the environment that can answer questions about how the agent will behave.
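The simulated case can be sketched in Python: a program plays the role of the body and environment, taking in commands and returning percepts. The corridor world, the command names, and the wall-touch percept are hypothetical. Because the controller only sees percepts and issues commands, the same controller function could, in principle, be connected to a real body instead.

```python
from typing import List

class CorridorSim:
    """Hypothetical simulated body and environment: a robot in a 1-D corridor."""

    def __init__(self, length: int = 5) -> None:
        self.length = length
        self.position = 0

    def step(self, command: str) -> bool:
        """Carry out a command; return the percept (True if touching the far wall)."""
        if command == "forward" and self.position < self.length:
            self.position += 1
        return self.position == self.length

def controller(touching_wall: bool) -> str:
    """A trivial memoryless controller: drive forward until the wall is felt."""
    return "stop" if touching_wall else "forward"

def simulate(sim: CorridorSim, steps: int) -> List[str]:
    """Connect the controller to the simulator: commands in, percepts out."""
    percept = False  # assumed initial percept
    commands = []
    for _ in range(steps):
        command = controller(percept)
        commands.append(command)
        percept = sim.step(command)
    return commands

sim = CorridorSim(length=3)
print(simulate(sim, 6))  # ['forward', 'forward', 'forward', 'stop', 'stop', 'stop']
```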

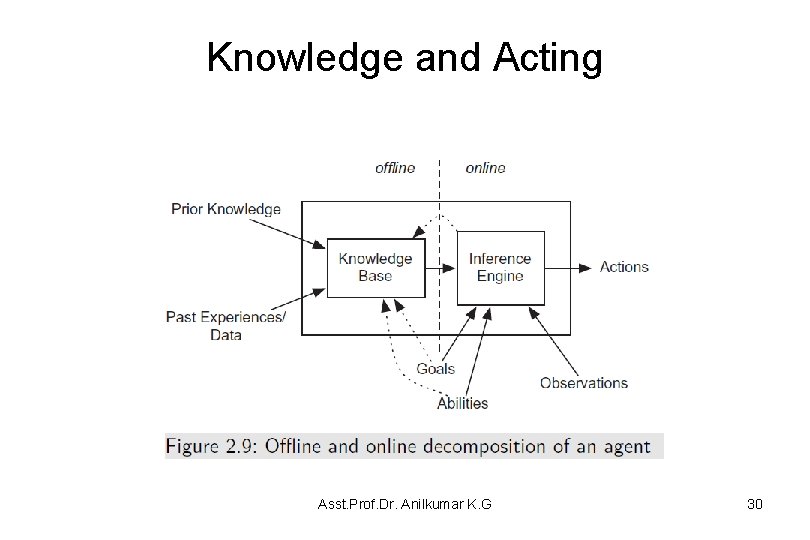

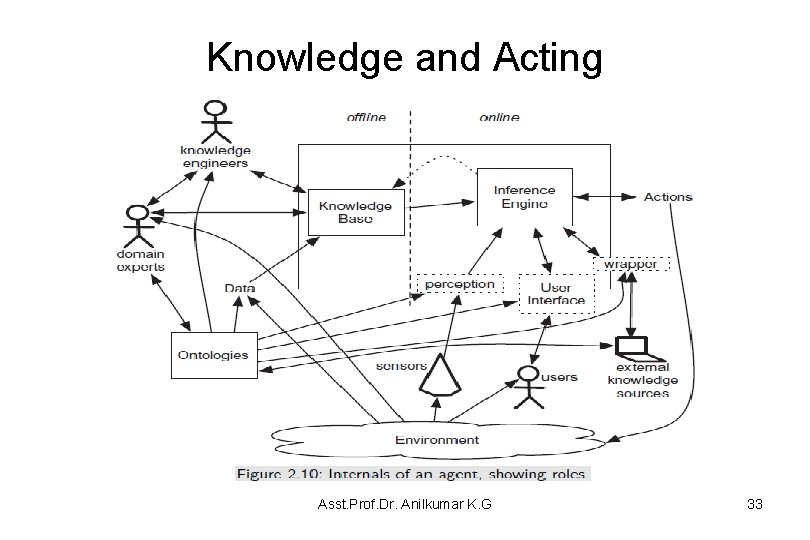

Knowledge and Acting
• Knowledge is the information about a domain that is used for acting (solving problems) in that domain.
  – An intelligent agent requires some internal representation of its belief state.
  – A knowledge-based system is a system that uses knowledge about a domain to act or to solve problems.
• Figure 2.9 shows a knowledge-based agent.
  – A knowledge base (KB) is built offline and is used online to produce actions.
  – An intelligent agent requires both hierarchical organization and knowledge bases.


Knowledge and Acting
• Online, when the agent is acting, the agent uses its KB, its current observations of the world, and its goals and abilities to choose what to do next and to update its KB.
  – The KB is its long-term memory, where it keeps the knowledge that is needed to act in the future.
    • This knowledge comes from prior knowledge and is combined with what is learned from data and past experiences.
  – The belief state is the short-term memory, which maintains the model of the current environment needed between time steps.

Knowledge and Acting
• Offline, before the agent has to act, it uses prior knowledge and past experiences to update its KB (this is called learning) with knowledge that is useful for acting online.
  – The role of the offline computation is to make the online computation more efficient or effective.
• Goals can be given offline, online, or both, depending on the agent.
  – For example, a delivery robot could have general goals of keeping the lab clean and not damaging itself or other objects, but it could get other delivery goals at runtime.
• Figure 2.10 shows more detail of the interface between the agent and the world.


Design Time and Offline Computation
• The KB required for online computation can be built initially at design time and then augmented (extended with more knowledge) offline by the agent.
  – The KB is typically built offline from a combination of expert knowledge and data.
  – The KB is usually built before the agent knows the particulars of the environment in which it must act.
  – Maintaining and tuning the KB is often part of the online computation.

Online Computation
• Online, the information about the particular situation becomes available, and the agent has to act.
• The information includes
  – the observations of the domain and the preferences or goals.
    • The agent can get observations from sensors, users, websites, etc.
• During online computation, an agent can take advantage of particular goals and particular observations.
  – For example, a medical agent has the details of a particular patient only online, while it can acquire knowledge about how diseases and symptoms interact offline. This means that the computation about a particular patient can only be done online.
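The offline/online split in the medical example can be illustrated with a deliberately toy Python sketch. Assumptions: the "KB" is just a count of symptoms per disease, built offline from hypothetical past cases, and online the agent scores diseases against one patient's observed symptoms. This is not a real diagnostic method; it only shows which part of the computation can happen before a particular patient is seen.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, Set, Tuple

def build_kb(cases: Iterable[Tuple[str, Set[str]]]) -> Dict[str, Counter]:
    """Offline: summarise past cases as symptom counts per disease."""
    kb: Dict[str, Counter] = defaultdict(Counter)
    for disease, symptoms in cases:
        kb[disease].update(symptoms)
    return kb

def diagnose(kb: Dict[str, Counter], observed: Set[str]) -> str:
    """Online: pick the disease whose past cases most often showed this patient's symptoms."""
    scores = {d: sum(counts[s] for s in observed) for d, counts in kb.items()}
    return max(scores, key=scores.get)

# Hypothetical past cases, available before any particular patient is seen.
CASES = [("flu", {"fever", "cough"}), ("flu", {"fever", "aches"}),
         ("cold", {"cough", "sneezing"}), ("cold", {"sneezing"})]
KB = build_kb(CASES)                        # offline
print(diagnose(KB, {"sneezing", "cough"}))  # cold (online, for one patient)
print(diagnose(KB, {"fever"}))              # flu
```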

Exercises
• Complete Exercise 2.1 (page 71).
