Chapter 14 Evaluation in Healthcare Education

An Evaluation Is:
• The final component of:
– the education process
– the nursing process
– the decision-making process
• Because these processes are cyclic, evaluation serves as a bridge at the end of one process that guides the direction of the next process.

Definition of Evaluation
• Gathering, summarizing, interpreting, and using data to determine the extent to which an action was successful
• A systematic process by which the worth or value of something (in this case, teaching and learning) is judged

Evaluation
• Evaluations are not intended to be generalizable; they are conducted to determine the effectiveness of a specific intervention in a specific setting with an identified individual or group.

• What is the relationship between evaluation, evidence-based practice (EBP), and practice-based evidence (PBE)?

Evidence-Based Practice (EBP)
• EBP has evolved and expanded over decades and can be defined as the conscientious use of current best evidence in making decisions about patient care (Melnyk & Fineout-Overholt, 2005, p. 6).
• It includes the results of systematically conducted evaluations from research.

Practice-Based Evidence (PBE)
• PBE is just beginning to be defined; it includes the results of systematically conducted evaluations from practice and clinical experience rather than from research.

The Difference between Assessment and Evaluation
• Assessment = input
• Evaluation = output

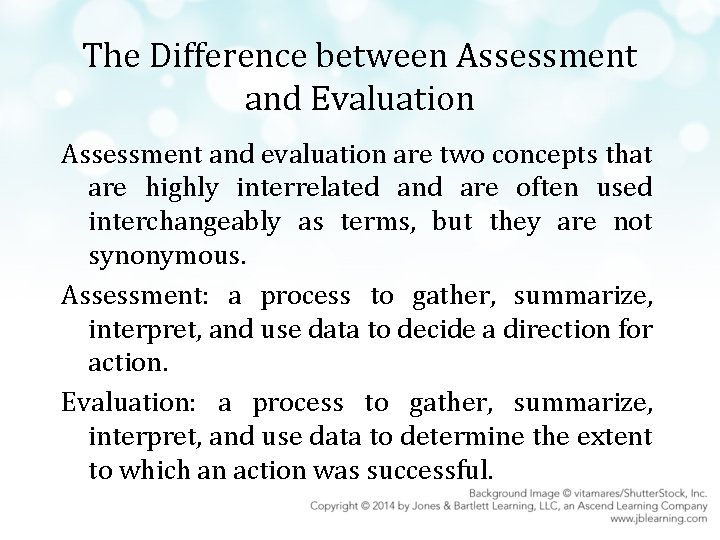

The Difference between Assessment and Evaluation
Assessment and evaluation are highly interrelated concepts, and the terms are often used interchangeably, but they are not synonymous.
• Assessment: a process to gather, summarize, interpret, and use data to decide a direction for action.
• Evaluation: a process to gather, summarize, interpret, and use data to determine the extent to which an action was successful.

Formative and Summative Assessment
• Formative assessment
– Formative assessment is the set of formal and informal assessment methods that teachers use during the learning process. It is part of the instructional process, and its objective is to enhance students' understanding and competency by modifying teaching and learning methods.

• Formative assessment attempts to provide direct and detailed feedback to both teachers and students about each student's performance and learning. It is a continuous process that observes students' needs and progress throughout the learning process.
• The goal of formative assessment is to monitor student learning to provide ongoing feedback that instructors can use to improve their teaching and students can use to improve their learning.

• More specifically, formative assessments:
– help students identify their strengths and weaknesses and target areas that need work
– help faculty recognize where students are struggling and address problems immediately

• Formative assessments are generally low stakes, which means that they have low or no point value. Examples of formative assessments include asking students to:
– draw a concept map in class to represent their understanding of a topic
– submit one or two sentences identifying the main point of a lecture
– turn in a research proposal for early feedback

• Summative assessment
– Summative assessment refers to the evaluation of students that focuses on results. It is part of the grading process and is given periodically, usually at the conclusion of a course, term, or unit. Its purpose is to check students' knowledge, i.e., the extent to which they have learned the material taught to them.

• Summative assessment also seeks to evaluate the effectiveness of the course or program and to check learning progress. The scores, grades, or percentages obtained act as indicators of the quality of the curriculum and form a basis for rankings in schools.

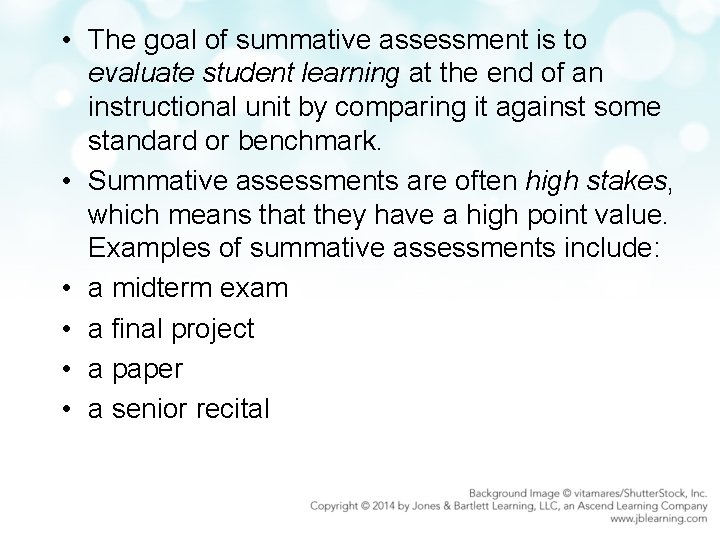

• The goal of summative assessment is to evaluate student learning at the end of an instructional unit by comparing it against some standard or benchmark.
• Summative assessments are often high stakes, which means that they have a high point value. Examples of summative assessments include:
– a midterm exam
– a final project
– a paper
– a senior recital

• Information from summative assessments can be used formatively when students or faculty use it to guide their efforts and activities in subsequent courses.

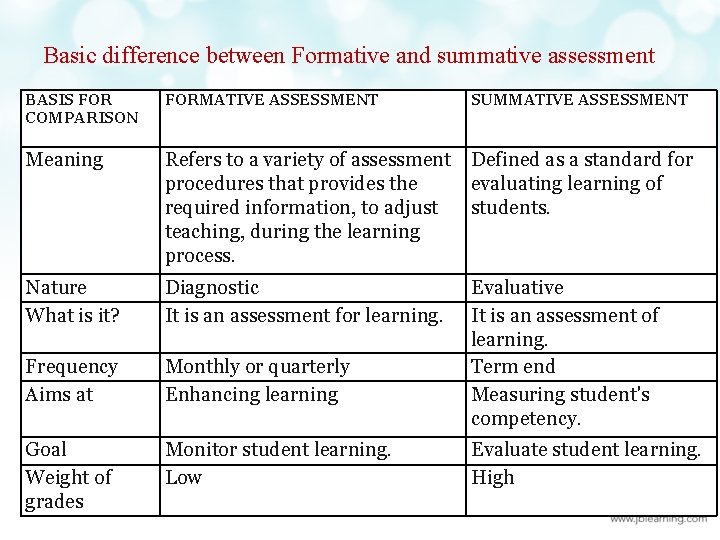

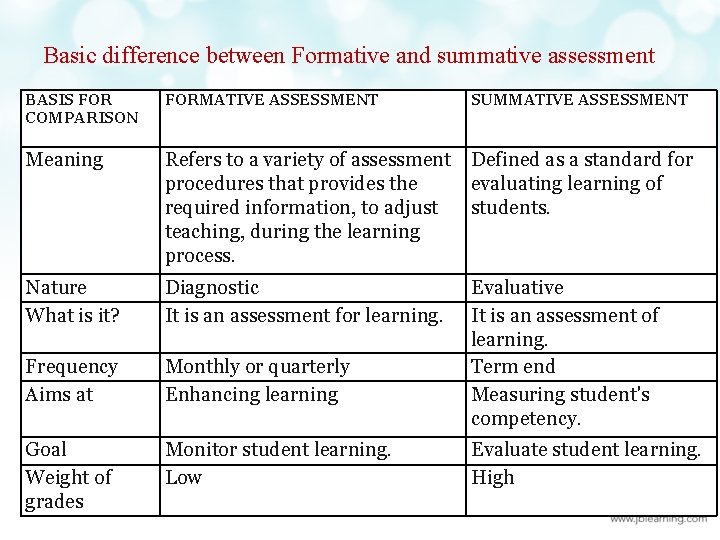

Basic Differences between Formative and Summative Assessment

| Basis for comparison | Formative assessment | Summative assessment |
|---|---|---|
| Meaning | Refers to a variety of assessment procedures that provide the information required to adjust teaching during the learning process. | Defined as a standard for evaluating students' learning. |
| Nature | Diagnostic | Evaluative |
| What is it? | An assessment for learning | An assessment of learning |
| Frequency | Monthly or quarterly | Term end |
| Aims at | Enhancing learning | Measuring students' competency |
| Goal | Monitor student learning | Evaluate student learning |
| Weight of grades | Low | High |

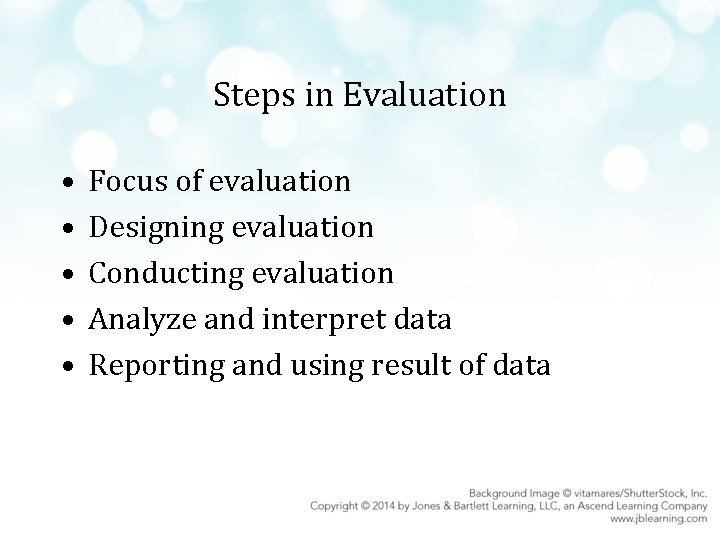

Steps in Evaluation
• Focus of the evaluation
• Designing the evaluation
• Conducting the evaluation
• Analyzing and interpreting data
• Reporting and using the results

STEP ONE Focus of evaluation

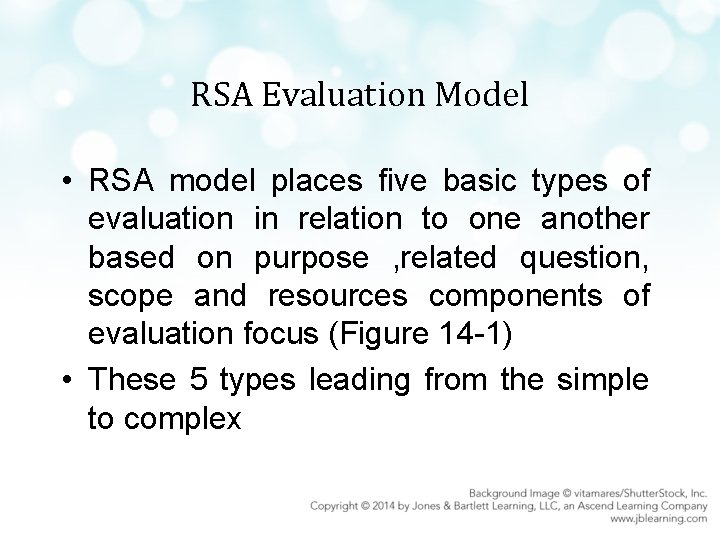

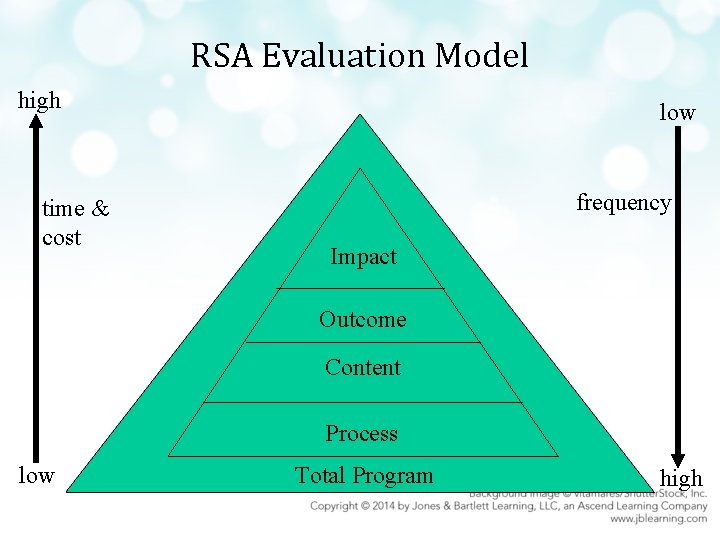

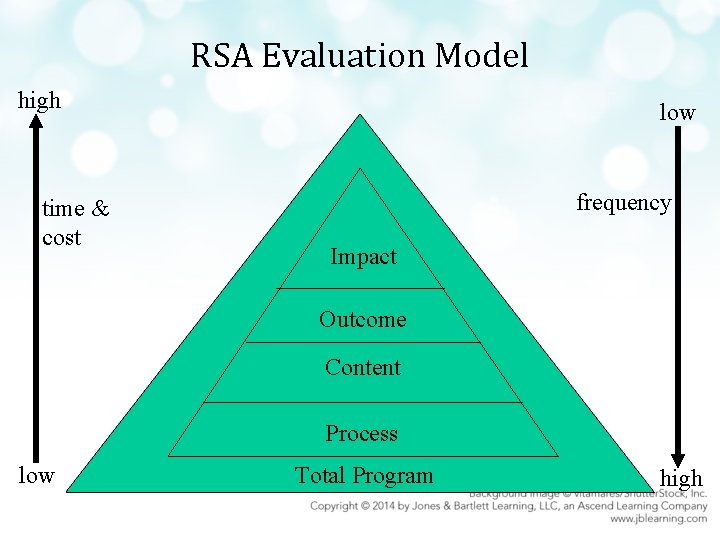

RSA Evaluation Model
• The RSA model places five basic types of evaluation in relation to one another based on the purpose, related questions, scope, and resources components of evaluation focus (Figure 14-1).
• These five types progress from simple to complex.

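[Figure 14-1: RSA Evaluation Model. Process, content, outcome, impact, and total program evaluation; time and cost rise from low to high, and frequency falls from high to low, moving from process toward total program evaluation.]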

Process (Formative) Evaluation
• Purpose: to make adjustments as soon as they are needed during the education process
• Scope: limited to a specific learning experience; frequent; concurrent with learning

Content Evaluation
• Purpose: to determine whether learners have acquired the knowledge and skills just taught
• Scope: limited to a specific learning experience and objectives; conducted immediately after education is completed (short term)

Outcome (Summative) Evaluation
• Purpose: to determine the effects of teaching
• Scope: broader, more long term, and less frequent than content evaluation

Impact Evaluation
• Purpose: to determine the relative effects of education on the institution or community
• Scope: broad, complex, sophisticated, and long term; occurs infrequently

Total Program Evaluation
• Purpose: to determine the extent to which the total program meets or exceeds its long-term goals
• Scope: broad, long term, and strategic; lengthy, and therefore conducted infrequently

STEP TWO Designing the evaluation

Designing the Evaluation
An important question to answer when designing an evaluation is "How rigorous should the evaluation be?" All evaluations should be systematic and carefully planned and structured before they are conducted. The evaluation design can be structured from a research perspective.

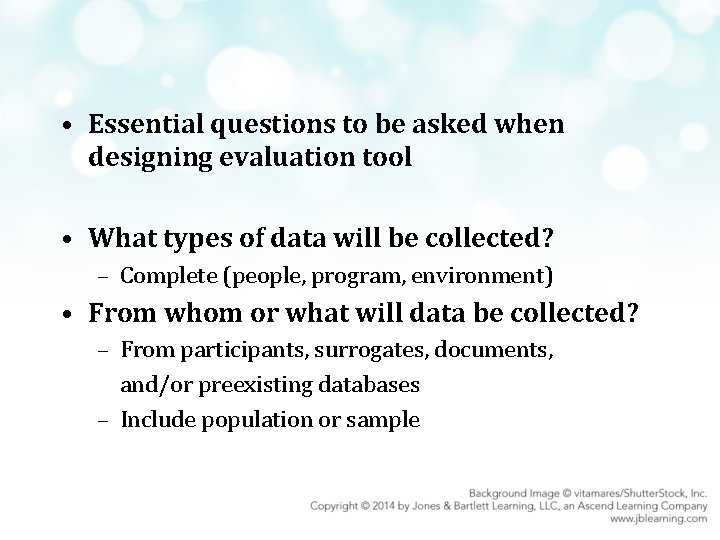

Essential questions to ask when designing an evaluation tool:
• What types of data will be collected?
– Complete data (people, program, environment)
• From whom or what will data be collected?
– From participants, surrogates, documents, and/or preexisting databases
– Include the population or a sample

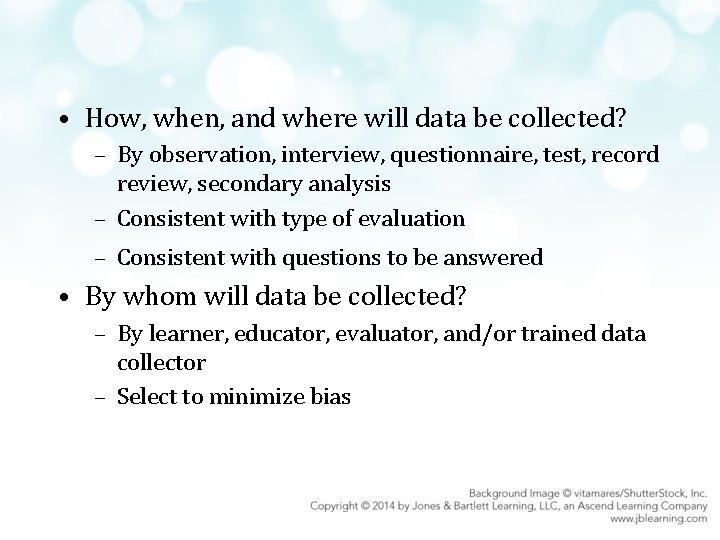

• How, when, and where will data be collected?
– By observation, interview, questionnaire, test, record review, or secondary analysis
– Consistent with the type of evaluation
– Consistent with the questions to be answered
• By whom will data be collected?
– By the learner, educator, evaluator, and/or a trained data collector
– Select data collectors to minimize bias

Evaluation Barriers
• Lack of clarity
– Resolve by clearly describing the five evaluation components.
– Specify and operationally define terms.
• Lack of ability
– Resolve by making the necessary resources available.
– Solicit support from experts.

• Fear of punishment or loss of self-esteem
– Resolve by being aware that fear exists among those being evaluated.
– Focus on data and results without personalizing or blaming.
– Point out achievements.
– Encourage ongoing effort.
– COMMUNICATE!

Selecting an Evaluation Instrument
• Identify existing instruments through a literature search and a review of similar evaluations conducted in the past.
• Critique potential instruments for:
– Fit with the definitions of the factors to be measured
– Evidence of reliability and validity, especially with a similar population
– Appropriateness for those being evaluated
– Affordability and feasibility

STEP THREE Conducting an evaluation

When conducting an evaluation:
• Conduct a pilot test first.
– Assess the feasibility of conducting the full evaluation as planned.
– Assess the reliability and validity of instruments.
• Include extra time.
– Be prepared for unexpected delays.
• Keep a sense of humor!

STEP FOUR Data Analysis and Interpretation

Data Analysis and Interpretation
The purpose of conducting data analysis is twofold:
1. To organize data so that they provide meaningful information, such as through the use of tables and graphs
2. To provide answers to evaluation questions
Data can be quantitative and/or qualitative in nature.
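As a small illustration of the two purposes of data analysis, the following minimal Python sketch organizes a set of quantitative scores into a table and then answers one sample evaluation question: what share of learners reached a passing benchmark? Everything in it is a hypothetical assumption for illustration only: the learner IDs, the pre- and post-education quiz scores, the 80-point benchmark, and the helper names `build_table` and `share_meeting_benchmark`. None of these come from the chapter.

```python
from statistics import mean

# Hypothetical pre- and post-education quiz scores (0-100) per learner.
# IDs, scores, and the benchmark below are illustrative assumptions only.
scores = {
    "L01": (55, 85),
    "L02": (60, 75),
    "L03": (70, 90),
    "L04": (45, 80),
}
BENCHMARK = 80  # assumed passing standard for the post-test


def build_table(data):
    """Purpose 1 (hypothetical helper): organize raw scores into rows,
    adding the change from pre-test to post-test for each learner."""
    rows = []
    for learner, (pre, post) in sorted(data.items()):
        rows.append({"learner": learner, "pre": pre, "post": post,
                     "change": post - pre})
    return rows


def share_meeting_benchmark(rows, benchmark):
    """Purpose 2 (hypothetical helper): answer an evaluation question,
    here the fraction of learners whose post-test score met the benchmark."""
    met = sum(1 for row in rows if row["post"] >= benchmark)
    return met / len(rows)


rows = build_table(scores)

# Print the organized data as a simple text table.
print(f"{'Learner':<8}{'Pre':>5}{'Post':>6}{'Change':>8}")
for row in rows:
    print(f"{row['learner']:<8}{row['pre']:>5}{row['post']:>6}{row['change']:>8}")

print(f"Mean change: {mean(r['change'] for r in rows):.1f} points")
print(f"Met benchmark: {share_meeting_benchmark(rows, BENCHMARK):.0%}")
```

With these invented scores, three of the four learners meet the benchmark and the mean change is 25 points. The sketch covers only quantitative data. Qualitative data, such as open-ended learner comments, would be organized differently.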

STEP FIVE Reporting and using the results

Reporting and Using Evaluation Results
• Be audience-focused.
– Begin with a one-page executive summary.
– Use a format and language that are clear to the audience.
– Present results in person and in writing.
– Provide specific recommendations.
• Stick to the evaluation purpose.
– Directly answer the questions asked.

• Use data as intended.
– Maintain consistency between the results and the interpretation of the results.
– Identify limitations.

Summary of Evaluation Process
• The process of evaluation in healthcare education is to gather, summarize, interpret, and use data to determine the extent to which an educational activity is efficient, effective, and useful to learners, teachers, and sponsors.
• Each aspect of the evaluation process is important, but all of them are meaningless unless the results of evaluation are used to guide future action in planning and carrying out interventions.
