Chapter 13 Instruction-Level Parallelism and Superscalar Processors Yonsei University


- Slides: 78


Contents
- Overview
- Design Issues
- Pentium II
- PowerPC
- MIPS R10000
- UltraSPARC-II
- IA-64/Merced

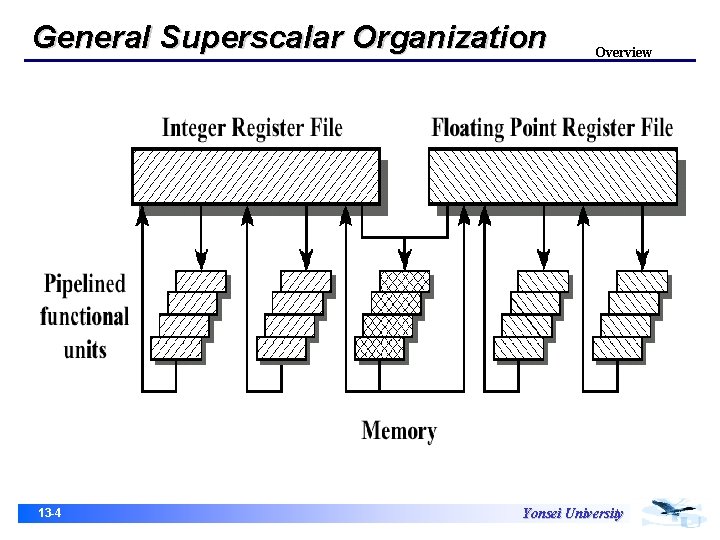

Overview
- The essence of the superscalar approach is the ability to execute instructions independently in different pipelines
- The concept can be further exploited by allowing instructions to be executed in an order different from the program order

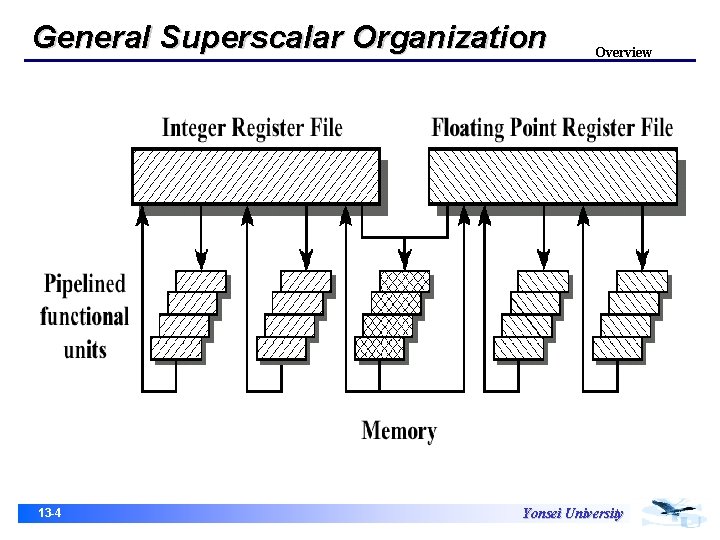

Overview: General Superscalar Organization

![Reported Speedups](https://slidetodoc.com/presentation_image_h2/4798dfd159d756c006180f99eec21597/image-5.jpg)

Overview: Reported Speedups

| Reference | Speedup |
| --- | --- |
| [TJAD70] | 1.8 |
| [KUCK72] | 8 |
| [WEIS84] | 1.58 |
| [ACOS86] | 2.7 |
| [SOHI90] | 1.8 |
| [SMIT89] | 2.3 |
| [JOUP89b] | 2.2 |
| [LEE91] | 7 |

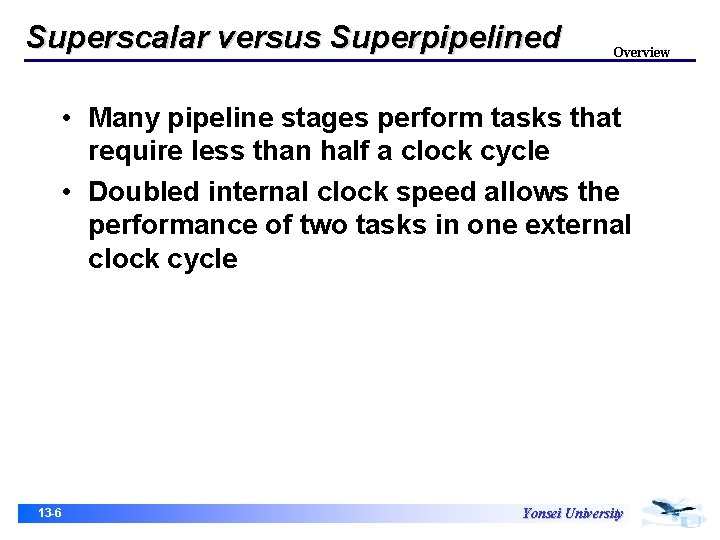

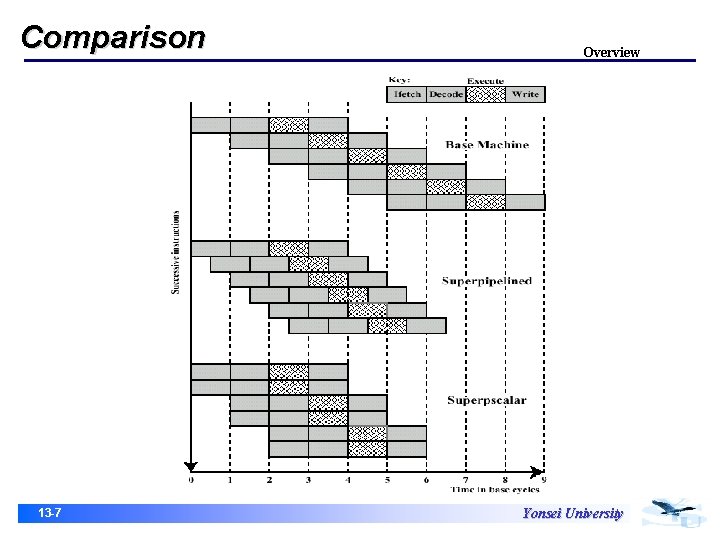

Overview: Superscalar versus Superpipelined
- Many pipeline stages perform tasks that require less than half a clock cycle
- A doubled internal clock speed allows two tasks to be performed in one external clock cycle

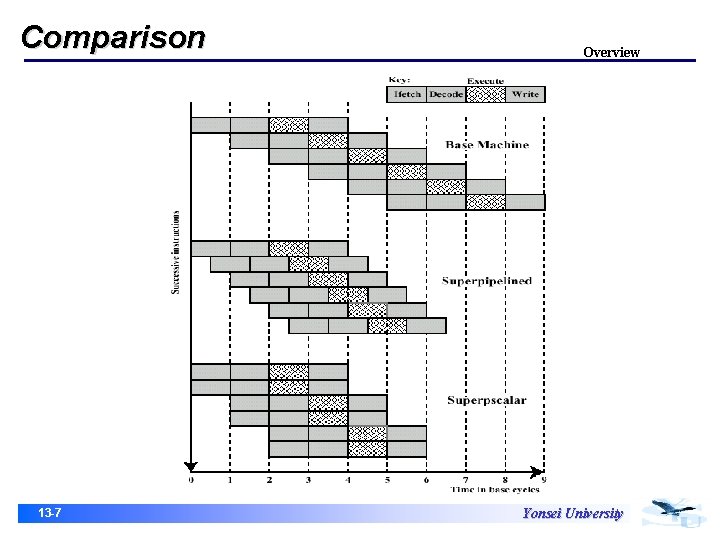

Overview: Comparison

Overview: Superscalar versus Superpipelined
- Superpipeline
  - The function performed in each stage can be split into 2 nonoverlapping parts, each of which can execute in half a clock cycle
  - A superpipeline implementation that behaves in this fashion is said to be of degree 2
- Superscalar
  - Capable of executing 2 instances of each stage in parallel
- The superpipelined processor falls behind the superscalar processor at the start of the program and at each branch target
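The half-cycle lag of the superpipelined design can be checked with an idealized timing model. The sketch below is not from the slides: it assumes no hazards of any kind, a `k`-stage base pipeline, and hypothetical function names. Times are measured in base clock cycles.

```python
import math

def base_time(n, k):
    """Cycles to run n instructions on a k-stage scalar pipeline."""
    return k + (n - 1)

def superscalar_time(n, k, m):
    """Degree-m superscalar: m instructions enter the pipeline together each cycle."""
    return k + math.ceil(n / m) - 1

def superpipelined_time(n, k, m):
    """Degree-m superpipeline: one instruction enters every 1/m of a base cycle."""
    return k + (n - 1) / m

# Six instructions, four stages, degree 2.
print(base_time(6, 4), superscalar_time(6, 4, 2), superpipelined_time(6, 4, 2))
```

Under these assumptions the two degree-2 designs differ by half a base cycle for this run, because the superpipelined machine starts its instructions one at a time rather than in pairs.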

Overview: Limitations
- The superscalar approach depends on the ability to execute multiple instructions in parallel.
- The term instruction-level parallelism refers to the degree to which, on average, the instructions of a program can be executed in parallel.
- A combination of compiler-based optimization and hardware techniques can be used to maximize instruction-level parallelism.

Overview: Limitations
- Five limitations
  - True data dependency
  - Procedural dependency
  - Resource conflicts
  - Output dependency
  - Antidependency

Overview: True Data Dependency

    add r1, r2
    move r3, r1

- The second instruction needs data produced by the first instruction.

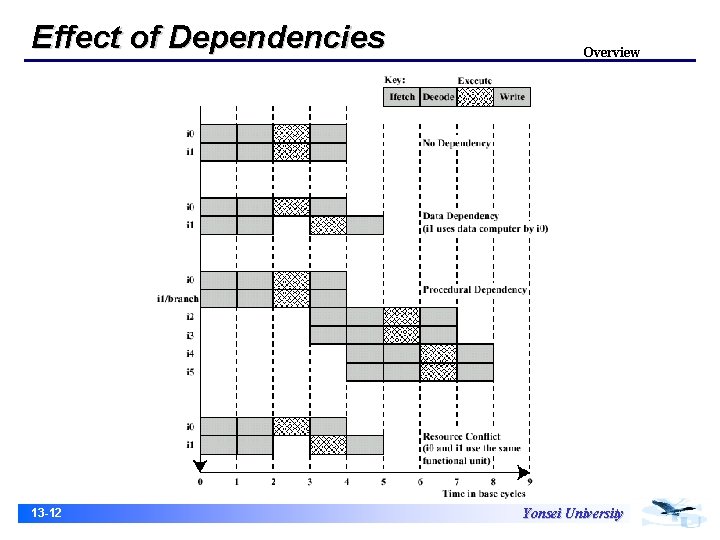

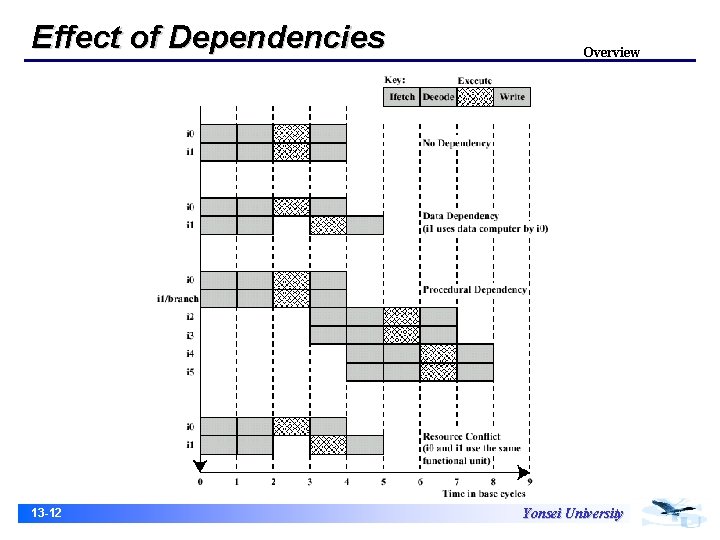

Overview: Effect of Dependencies

Overview: True Data Dependency
- With no dependency, two instructions can be fetched and executed in parallel
- If there is a data dependency between the first and second instructions, the second instruction is delayed as many clock cycles as required to remove the dependency

Overview: True Data Dependency

    load r1, eff
    move r3, r1

- A typical RISC processor takes two or more cycles to perform a load from memory because of the delay of an off-chip memory or cache access
- One way to compensate for this delay is for the compiler to reorder instructions so that one or more subsequent instructions that do not depend on the memory load can begin flowing through the pipeline
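The benefit of reordering around a load can be illustrated with a small stall counter. This is a simplified sketch, not a model of any real processor: it assumes in-order issue of one instruction per cycle, a load latency of two cycles, a latency of one cycle for everything else, and a hypothetical third instruction (`add r4, r5`) that does not depend on the load.

```python
def stall_cycles(program, load_latency=2):
    """Count stall cycles for in-order, one-instruction-per-cycle issue.

    program: list of (op, dest, sources). A result becomes available
    `latency` cycles after its producer issues.
    """
    ready_at = {}  # register -> cycle at which its value is available
    cycle = 0
    stalls = 0
    for op, dest, sources in program:
        earliest = max([ready_at.get(reg, 0) for reg in sources], default=0)
        if earliest > cycle:
            stalls += earliest - cycle  # wait for the operand
            cycle = earliest
        latency = load_latency if op == "load" else 1
        ready_at[dest] = cycle + latency
        cycle += 1
    return stalls

original = [("load", "r1", []), ("move", "r3", ["r1"]), ("add", "r4", ["r5"])]
reordered = [("load", "r1", []), ("add", "r4", ["r5"]), ("move", "r3", ["r1"])]
print(stall_cycles(original), stall_cycles(reordered))
```

Moving the independent `add` between the load and its consumer fills the load delay, so the dependent `move` no longer waits.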

Overview: Procedural Dependencies
- The instructions following a branch (taken or not taken) have a procedural dependency on the branch and cannot be executed until the branch is executed.
- This type of procedural dependency also affects a scalar pipeline. The consequence for a superscalar pipeline is more severe, because a greater magnitude of opportunity is lost with each delay.

Overview: Resource Conflict
- A resource conflict is a competition of two or more instructions for the same resource at the same time.
- Resource conflicts can be overcome by duplication of resources

Design Issues: Instruction-Level Parallelism
- Instruction-level parallelism exists when instructions in a sequence are independent and thus can be executed in parallel by overlapping

    Independent:            Dependent:
    Load R1 <- R2           Add R3 <- R3, "1"
    Add R3 <- R3, "1"       Add R4 <- R3, R2
    Add R4 <- R4, R2        Store [R4] <- R0

- Instruction-level parallelism is determined by the frequency of true data dependencies and procedural dependencies in the code
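One rough way to quantify this is to divide the instruction count by the length of the longest chain of true data dependencies. The sketch below applies that to the two three-instruction sequences on this slide. The function name and the tuple encoding are illustrative assumptions, and the model ignores procedural dependencies and resource limits.

```python
def dependency_depth(program):
    """Length of the longest chain of true (read-after-write) dependencies.

    program: list of (dest, sources); dest is None for a store.
    """
    depth_of_writer = {}  # register -> chain depth of its most recent writer
    longest = 0
    for dest, sources in program:
        depth = 1 + max([depth_of_writer.get(reg, 0) for reg in sources], default=0)
        if dest is not None:
            depth_of_writer[dest] = depth
        longest = max(longest, depth)
    return longest

independent = [("R1", ["R2"]), ("R3", ["R3"]), ("R4", ["R4", "R2"])]
dependent = [("R3", ["R3"]), ("R4", ["R3", "R2"]), (None, ["R4", "R0"])]

for name, seq in (("independent", independent), ("dependent", dependent)):
    print(name, len(seq) / dependency_depth(seq))
```

A ratio of 3 means all three instructions could overlap; a ratio of 1 means they must run one after another.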

Design Issues: Machine Parallelism
- Machine parallelism is a measure of the ability of the processor to take advantage of instruction-level parallelism
- Machine parallelism is determined by the number of instructions that can be fetched and executed at the same time (the number of parallel pipelines) and by the speed and sophistication of the mechanisms that the processor uses to find independent instructions

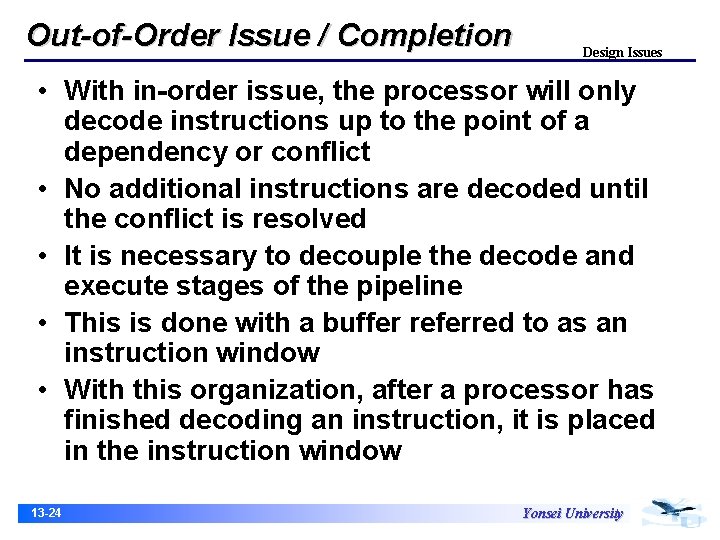

Design Issues: Instruction-Issue Policy
- Instruction-issue policy refers to the protocol used to issue instructions
- Three types of orderings
  - The order in which instructions are fetched
  - The order in which instructions are executed
  - The order in which instructions update the contents of register and memory locations
- Superscalar instruction-issue policies
  - In-order issue with in-order completion
  - In-order issue with out-of-order completion
  - Out-of-order issue with out-of-order completion

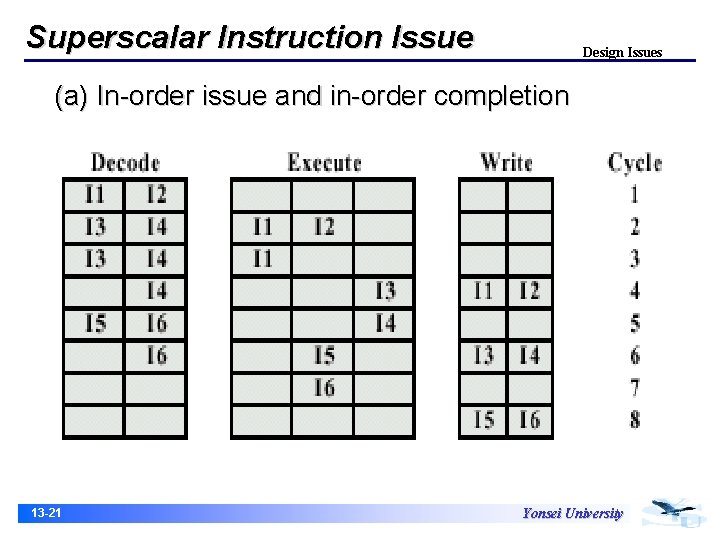

Design Issues: In-Order Issue / In-Order Completion
- The simplest instruction-issue policy is to issue instructions in the exact order that would be achieved by sequential execution (in-order issue) and to write results in that same order (in-order completion)

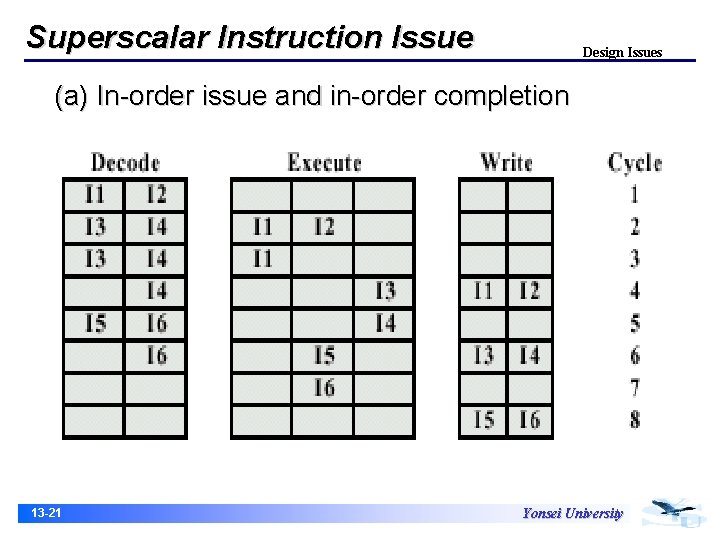

Design Issues: Superscalar Instruction Issue (a) In-order issue and in-order completion

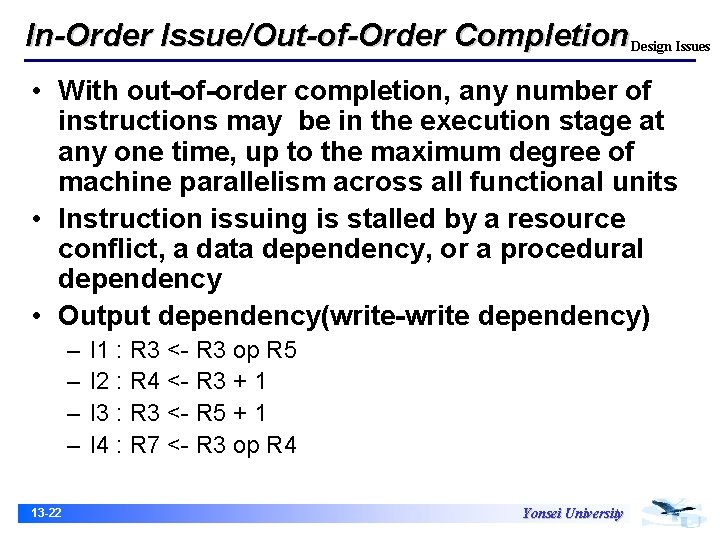

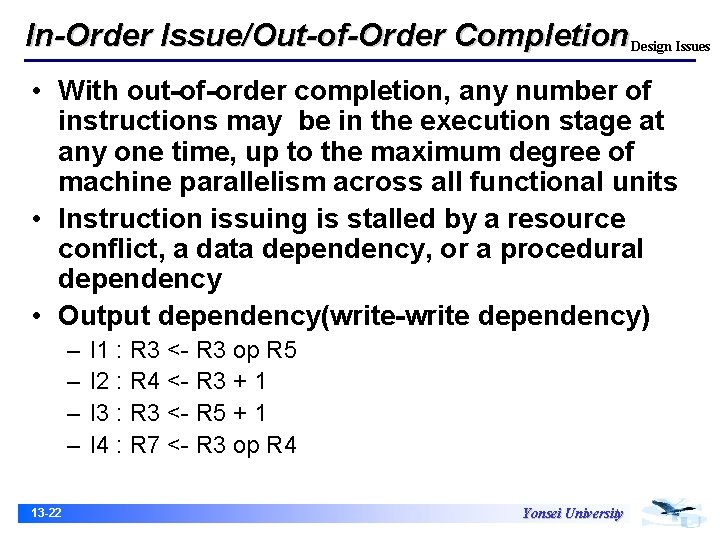

Design Issues: In-Order Issue / Out-of-Order Completion
- With out-of-order completion, any number of instructions may be in the execution stage at any one time, up to the maximum degree of machine parallelism across all functional units
- Instruction issuing is stalled by a resource conflict, a data dependency, or a procedural dependency
- Output dependency (write-write dependency)
  - I1: R3 <- R3 op R5
  - I2: R4 <- R3 + 1
  - I3: R3 <- R5 + 1
  - I4: R7 <- R3 op R4

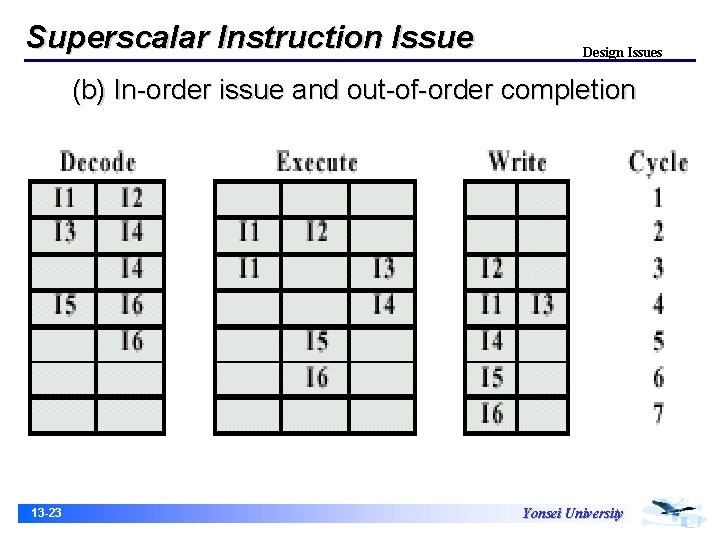

Design Issues: Superscalar Instruction Issue (b) In-order issue and out-of-order completion

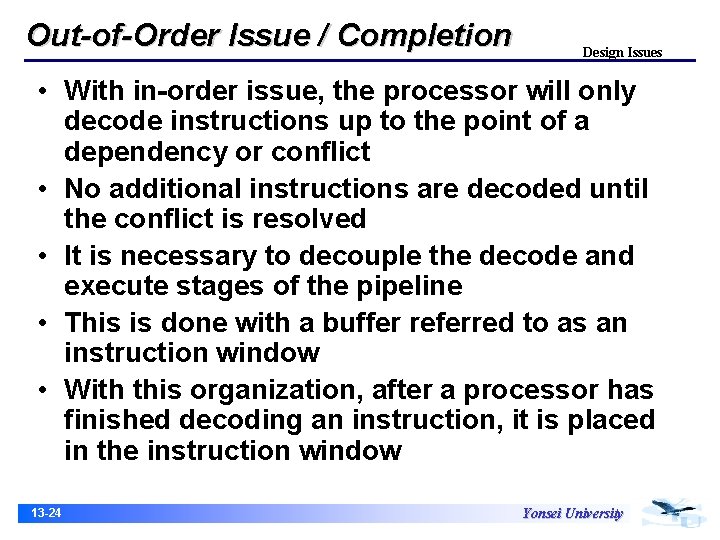

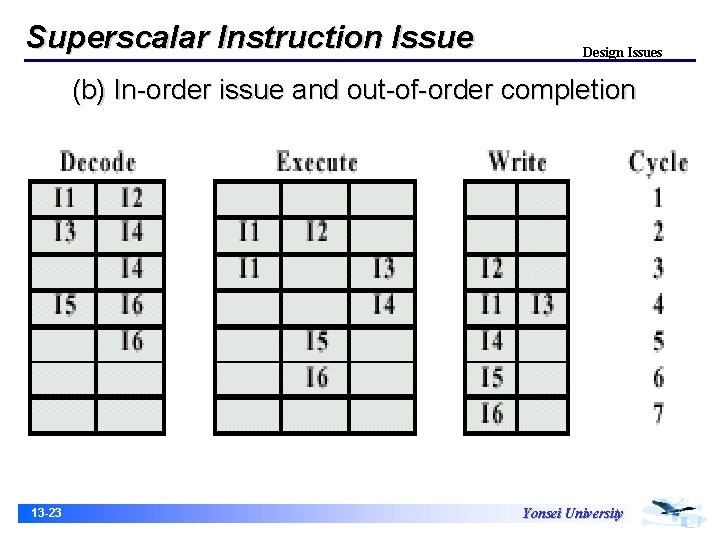

Design Issues: Out-of-Order Issue / Completion
- With in-order issue, the processor will only decode instructions up to the point of a dependency or conflict
- No additional instructions are decoded until the conflict is resolved
- To allow out-of-order issue, it is necessary to decouple the decode and execute stages of the pipeline
- This is done with a buffer referred to as an instruction window
- With this organization, after the processor has finished decoding an instruction, the instruction is placed in the instruction window

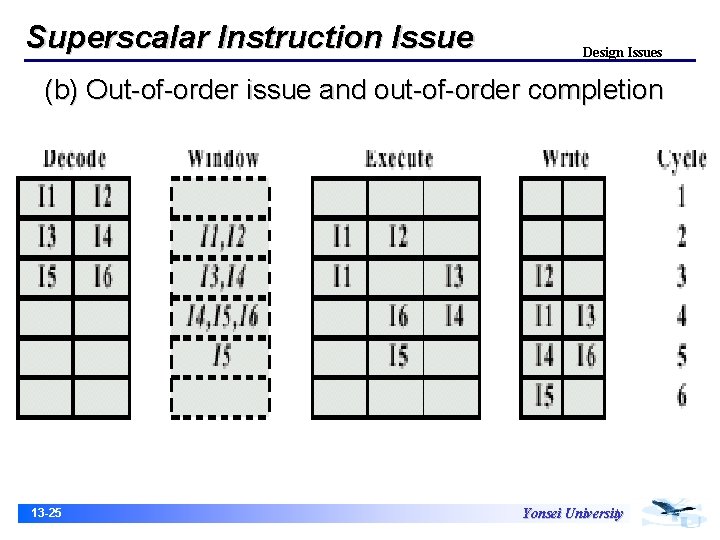

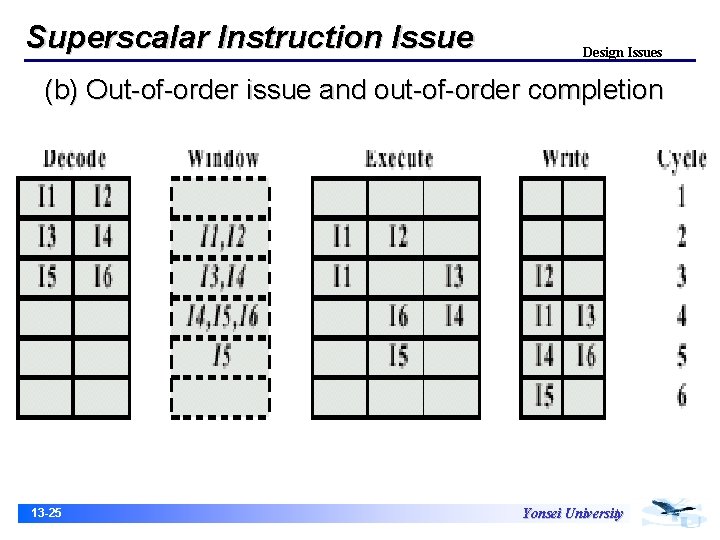

Design Issues: Superscalar Instruction Issue (c) Out-of-order issue and out-of-order completion

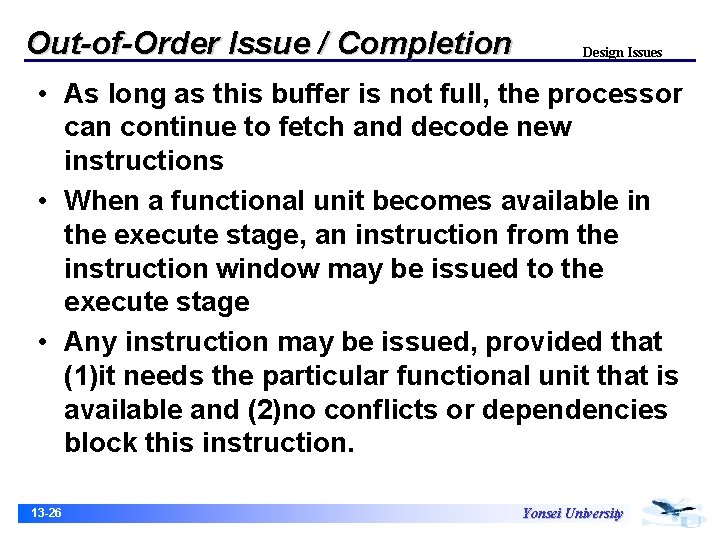

Design Issues: Out-of-Order Issue / Completion
- As long as this buffer is not full, the processor can continue to fetch and decode new instructions
- When a functional unit becomes available in the execute stage, an instruction from the instruction window may be issued to the execute stage
- Any instruction may be issued, provided that (1) it needs the particular functional unit that is available and (2) no conflicts or dependencies block this instruction.

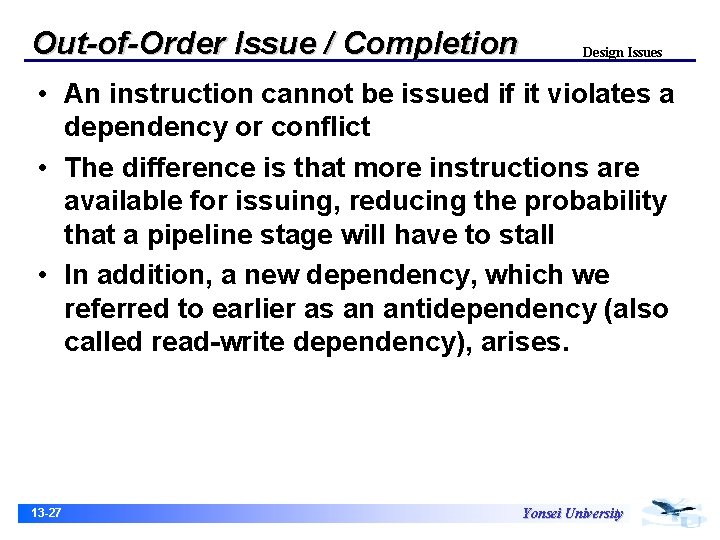

Design Issues: Out-of-Order Issue / Completion
- As with in-order issue, an instruction cannot be issued if it violates a dependency or conflict
- The difference is that more instructions are available for issuing, reducing the probability that a pipeline stage will have to stall
- In addition, a new dependency arises, which we referred to earlier as an antidependency (also called a read-write dependency).

Design Issues: Out-of-Order Issue / Completion
- Code fragment
  - I1: R3 <- R3 op R5
  - I2: R4 <- R3 + 1
  - I3: R3 <- R5 + 1
  - I4: R7 <- R3 op R4
- The term antidependency is used because the constraint is similar to that of a true data dependency, but reversed
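The dependencies in the I1 to I4 fragment can be listed mechanically. The following is a minimal sketch, assuming each instruction is encoded as a hypothetical `(name, destination, sources)` tuple; it tracks the most recent writer of each register and the readers since that write.

```python
def classify_dependencies(program):
    """Return (kind, earlier, later) triples for a straight-line program.

    kind is "true" (read after write), "output" (write after write)
    or "anti" (write after read).
    """
    last_writer = {}  # register -> name of the most recent writer
    readers = {}      # register -> instructions that read it since that write
    found = []
    for name, dest, sources in program:
        for reg in dict.fromkeys(sources):  # de-duplicate, keep order
            if reg in last_writer:
                found.append(("true", last_writer[reg], name))
            readers.setdefault(reg, []).append(name)
        if dest in last_writer:
            found.append(("output", last_writer[dest], name))
        for reader in readers.get(dest, []):
            if reader != name:
                found.append(("anti", reader, name))
        last_writer[dest] = name
        readers[dest] = []
    return found

fragment = [
    ("I1", "R3", ["R3", "R5"]),
    ("I2", "R4", ["R3"]),
    ("I3", "R3", ["R5"]),
    ("I4", "R7", ["R3", "R4"]),
]
for dep in classify_dependencies(fragment):
    print(dep)
```

For this fragment the sketch reports the true dependencies of I2 on I1 and of I4 on I2 and I3, the output dependency between I1 and I3, and the antidependency of I3 on I2: I3 must not overwrite R3 before I2 has read it.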

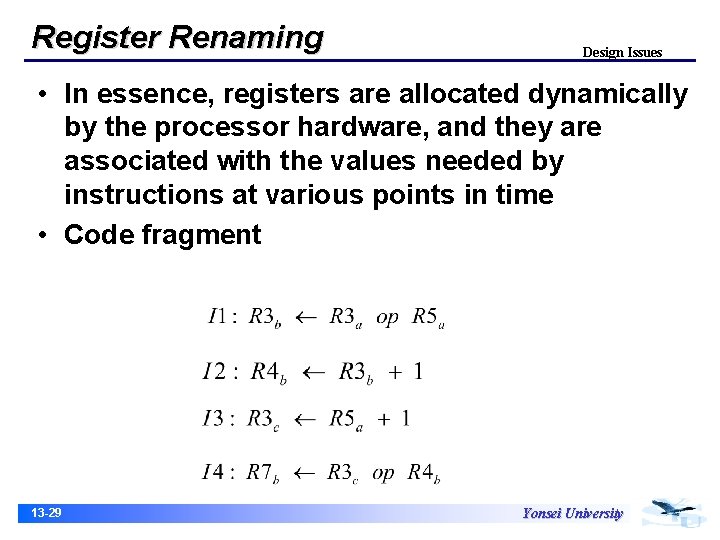

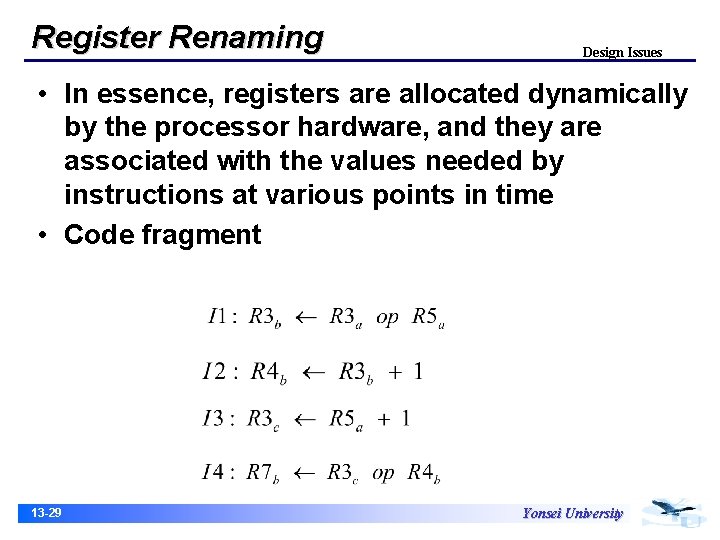

Design Issues: Register Renaming
- In essence, registers are allocated dynamically by the processor hardware, and they are associated with the values needed by instructions at various points in time
- Code fragment
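Renaming can be sketched in a few lines: every write to an architectural register allocates a fresh version, and every read uses the newest version. The example below applies this to the I1 to I4 fragment used on the earlier slides. The letter suffixes (`R3a`, `R3b`, ...) and the tuple encoding are illustrative assumptions, and real hardware draws from a finite pool of physical registers rather than an unbounded set of names.

```python
def rename(program):
    """Give every register write a fresh name to remove output and antidependencies.

    program: list of (name, dest, sources). Supports up to 26 versions per register.
    """
    version = {}  # architectural register -> index of its newest version

    def current(reg):
        return reg + chr(ord("a") + version.get(reg, 0))

    renamed = []
    for name, dest, sources in program:
        new_sources = [current(reg) for reg in sources]  # read newest versions first
        version[dest] = version.get(dest, 0) + 1         # then allocate a new one
        renamed.append((name, current(dest), new_sources))
    return renamed

fragment = [
    ("I1", "R3", ["R3", "R5"]),
    ("I2", "R4", ["R3"]),
    ("I3", "R3", ["R5"]),
    ("I4", "R7", ["R3", "R4"]),
]
for name, dest, sources in rename(fragment):
    print(name, dest, "<-", ", ".join(sources))
```

After renaming, I3 writes `R3c` while I2 still reads `R3b`, so I3 no longer has to wait for I2 or I1. Only the true dependencies remain.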

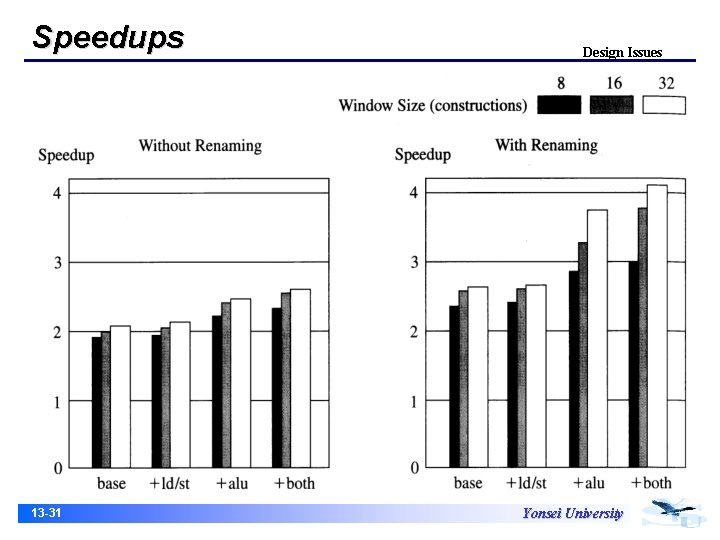

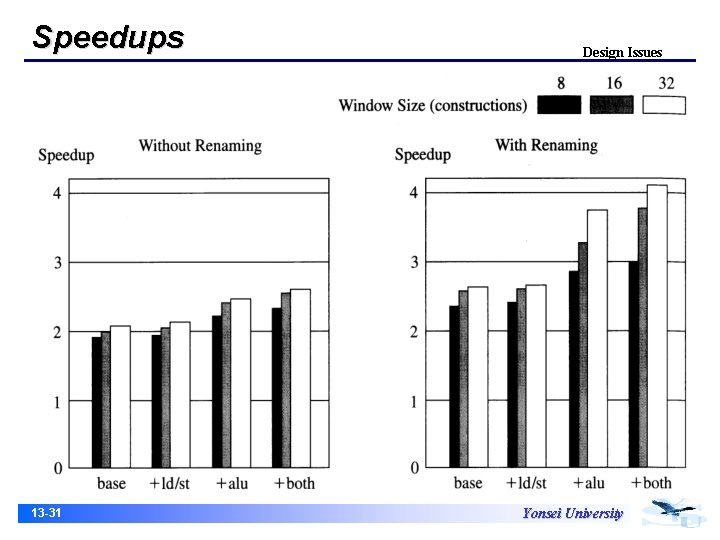

Design Issues: Machine Parallelism
- Three hardware techniques can be used in a superscalar processor to enhance performance: duplication of resources, out-of-order issue, and renaming.
- The base machine does not duplicate any of the functional units, but it can issue instructions out of order.

Design Issues: Speedups

Design Issues: Branch Prediction
- Intel 80486: the processor fetches both the next sequential instruction after a branch and, speculatively, the branch target instruction. Because there are two pipeline stages between prefetch and execution, this strategy incurs a two-cycle delay when the branch is taken
- With the advent of RISC machines, the delayed branch strategy was explored
- This allows the processor to calculate the result of conditional branch instructions before any unusable instructions have been prefetched
- With this method, the processor always executes the single instruction that immediately follows the branch

Design Issues: Branch Prediction
- The delayed branch strategy has less appeal for superscalar machines. The reason is that multiple instructions need to execute in the delay slot, raising several problems relating to instruction dependencies
- Thus, superscalar machines have returned to the pre-RISC technique of branch prediction

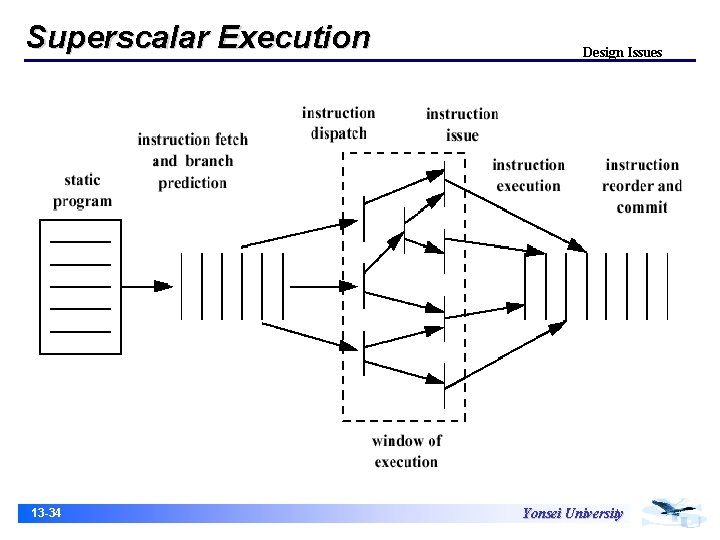

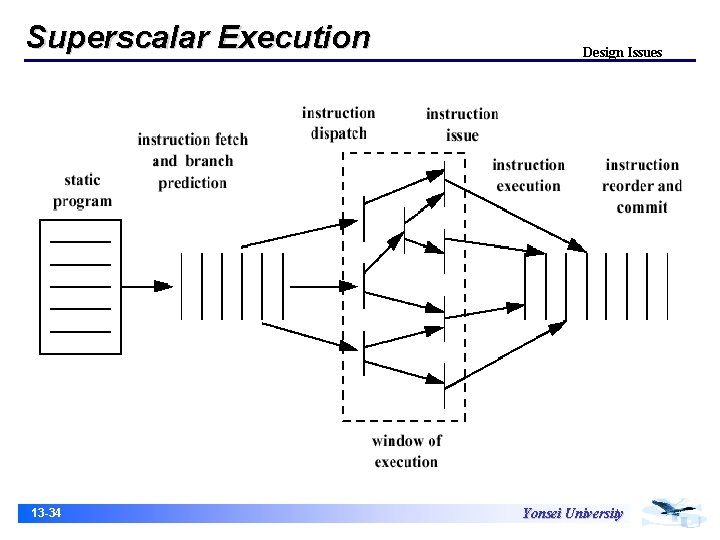

Design Issues: Superscalar Execution

Design Issues: Superscalar Implementation
- Instruction fetch strategies that simultaneously fetch multiple instructions.
- Logic for determining true dependencies involving register values, and mechanisms for communicating these values to where they are needed during execution.
- Mechanisms for initiating, or issuing, multiple instructions in parallel.

Design Issues: Superscalar Implementation
- Resources for parallel execution of multiple instructions, including multiple pipelined functional units and memory hierarchies capable of simultaneously servicing multiple memory references.
- Mechanisms for committing the process state in correct order.

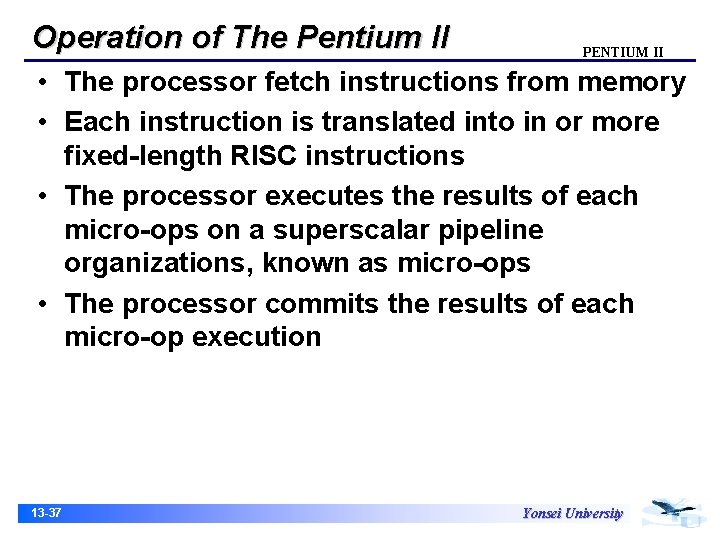

Pentium II: Operation of the Pentium II
- The processor fetches instructions from memory
- Each instruction is translated into one or more fixed-length RISC instructions, known as micro-ops
- The processor executes the micro-ops on a superscalar pipeline organization
- The processor commits the results of each micro-op execution

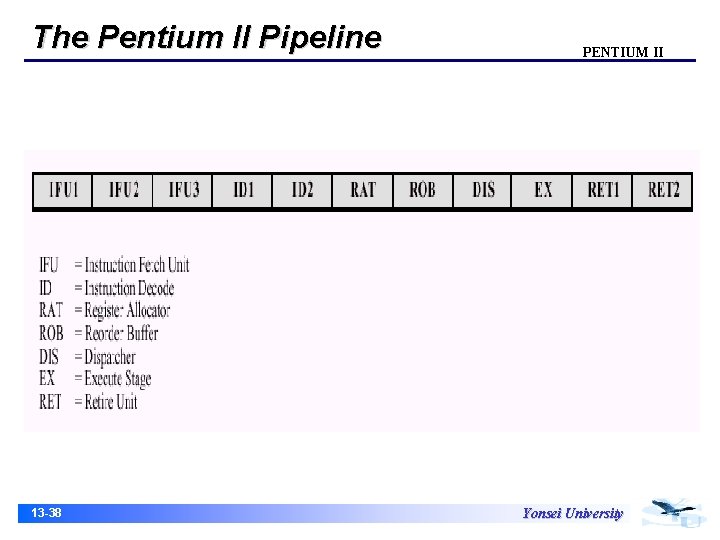

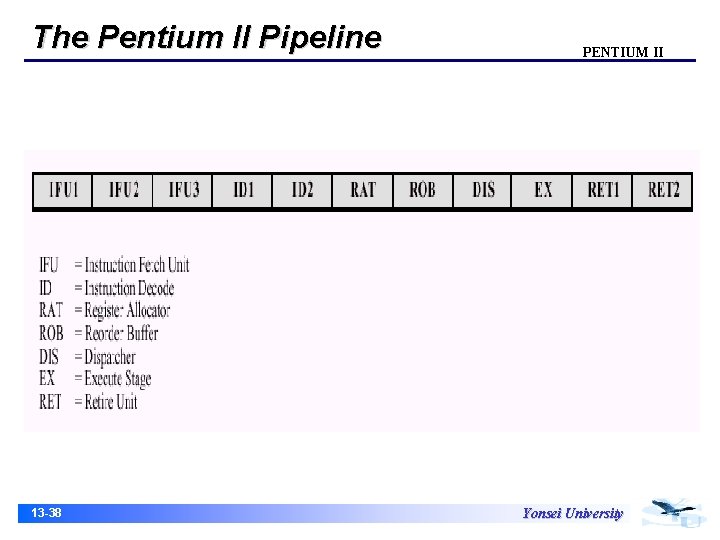

Pentium II: The Pentium II Pipeline

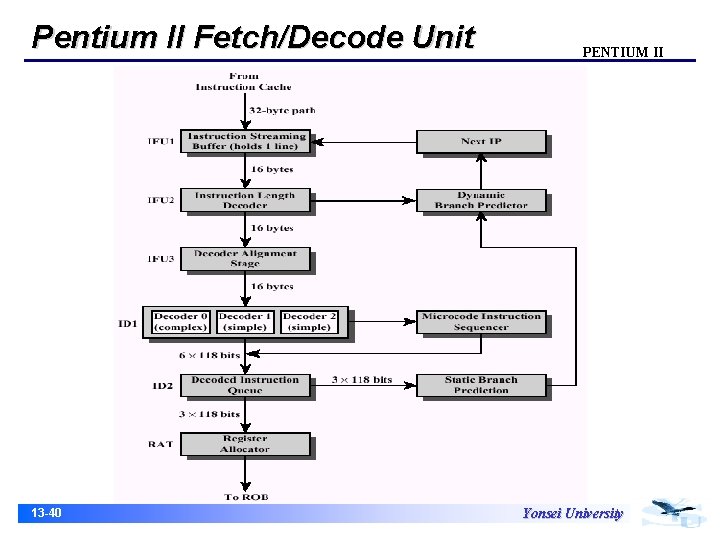

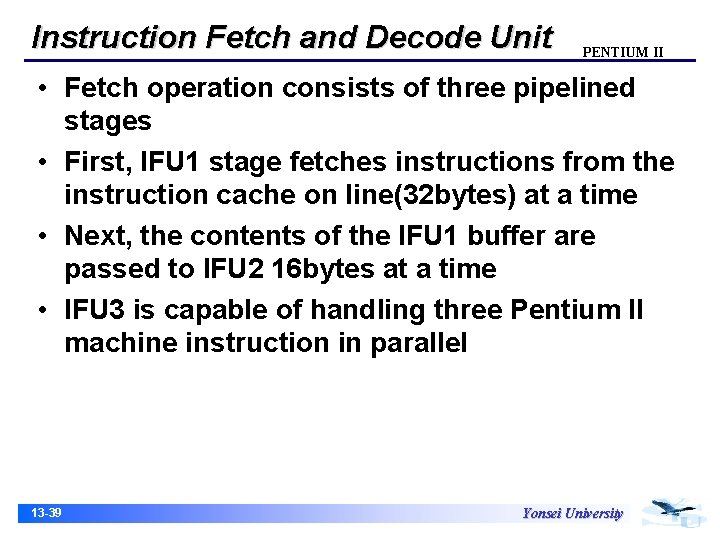

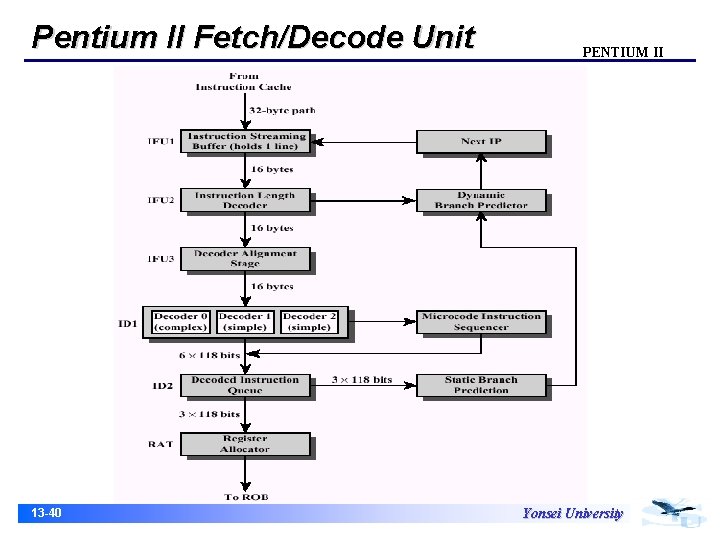

Pentium II: Instruction Fetch and Decode Unit
- The fetch operation consists of three pipelined stages
- First, the IFU1 stage fetches instructions from the instruction cache one line (32 bytes) at a time
- Next, the contents of the IFU1 buffer are passed to IFU2 16 bytes at a time
- IFU3 is capable of handling three Pentium II machine instructions in parallel

Pentium II: Pentium II Fetch/Decode Unit

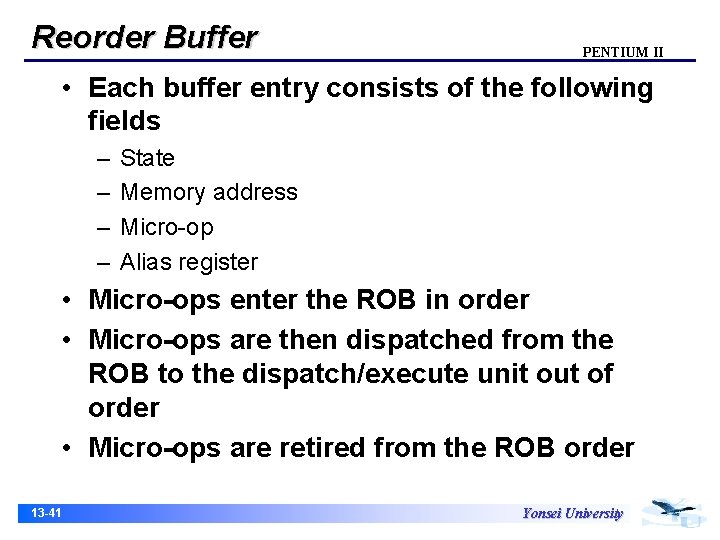

Pentium II: Reorder Buffer
- Each buffer entry consists of the following fields
  - State
  - Memory address
  - Micro-op
  - Alias register
- Micro-ops enter the reorder buffer (ROB) in order
- Micro-ops are then dispatched from the ROB to the dispatch/execute unit out of order
- Micro-ops are retired from the ROB in order
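The in-order entry, out-of-order completion, and in-order retirement of a reorder buffer can be sketched as a queue. This is a generic illustration with hypothetical names, not the Pentium II's actual entry format: each entry here carries only a name and a done flag.

```python
from collections import deque

class ReorderBuffer:
    """Entries enter in program order and retire only from the head."""

    def __init__(self):
        self.entries = deque()  # oldest micro-op first

    def allocate(self, name):
        """Add a micro-op at the tail, in program order."""
        self.entries.append({"name": name, "done": False})

    def complete(self, name):
        """Mark a micro-op as executed; this may happen in any order."""
        for entry in self.entries:
            if entry["name"] == name:
                entry["done"] = True
                return
        raise KeyError(name)

    def retire(self):
        """Remove finished micro-ops from the head; stop at the first unfinished one."""
        retired = []
        while self.entries and self.entries[0]["done"]:
            retired.append(self.entries.popleft()["name"])
        return retired

rob = ReorderBuffer()
for uop in ("u1", "u2", "u3"):
    rob.allocate(uop)
rob.complete("u3")
print(rob.retire())  # u3 has executed but waits behind u1 and u2
```

A micro-op that finishes early stays in the buffer until every older micro-op has also finished, which keeps the committed state in program order.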

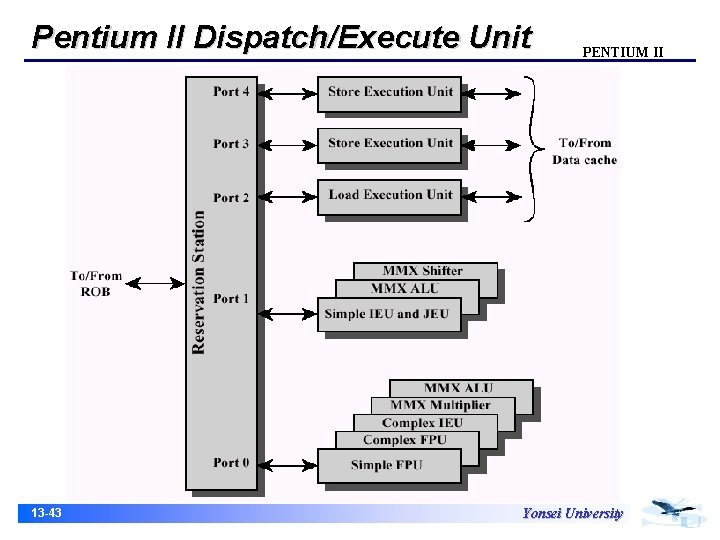

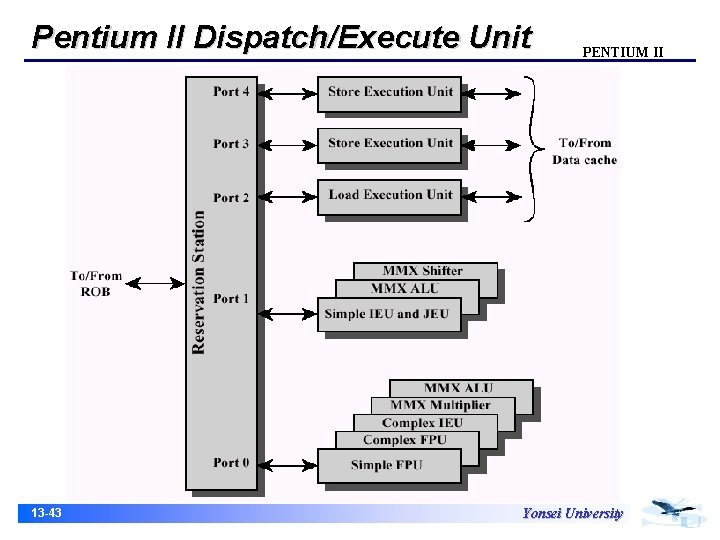

Pentium II: Dispatch/Execute Unit
- The reservation station (RS) is responsible for retrieving micro-ops from the ROB, dispatching these for execution, and recording the results back in the ROB
- Five ports attach the RS to the five execution units
- Once execution is complete, the appropriate entry in the ROB is updated and the execution unit is available for another micro-op

Pentium II: Pentium II Dispatch/Execute Unit

Pentium II: Retire Unit
- The retire unit (RU) works off the reorder buffer to commit the results of instruction execution
- The RU must take into account branch mispredictions and micro-ops that have executed but for which preceding branches have not yet been validated

Pentium II: Branch Prediction
- The Pentium II uses a dynamic prediction strategy
- A branch target buffer (BTB) is maintained that caches information about recently encountered branch instructions
- Once the instruction is executed, the history portion of the appropriate entry is updated to reflect the result of the branch instruction
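The slide does not give the format of the history field, so the sketch below substitutes a generic two-bit saturating counter per entry. It should be read as an illustration of dynamic prediction with a BTB in general, not as the Pentium II's scheme; the class and method names are hypothetical.

```python
class BranchTargetBuffer:
    """BTB with a 2-bit saturating counter as the history field (an assumption)."""

    def __init__(self):
        self.entries = {}  # branch address -> [counter in 0..3, last taken target]

    def predict(self, address):
        """Return (predict_taken, target); unknown branches are predicted not taken."""
        entry = self.entries.get(address)
        if entry is None:
            return (False, None)
        counter, target = entry
        return (counter >= 2, target)

    def update(self, address, taken, target):
        """After the branch executes, move the history toward the actual outcome."""
        entry = self.entries.setdefault(address, [1, target])
        if taken:
            entry[0] = min(3, entry[0] + 1)
            entry[1] = target
        else:
            entry[0] = max(0, entry[0] - 1)

btb = BranchTargetBuffer()
for outcome in (True, True, False, True):
    print(btb.predict(0x40), "actual:", outcome)
    btb.update(0x40, outcome, 0x80)
```

With a two-bit counter, a single not-taken outcome in a mostly taken loop branch does not flip the prediction, which is the usual motivation for keeping more than one bit of history.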

PowerPC: PowerPC 601
- Dispatch Unit
- Instruction Pipelines

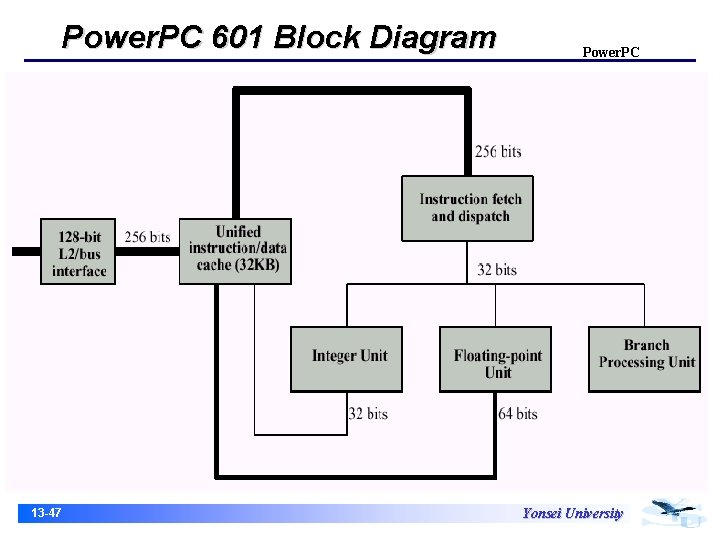

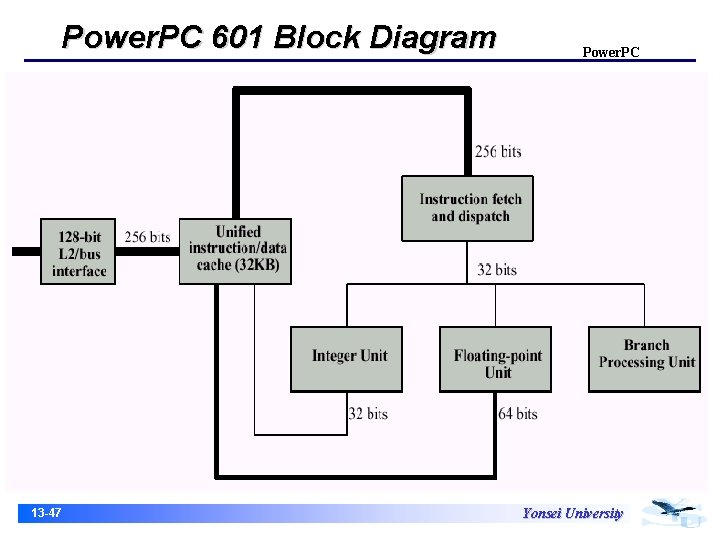

PowerPC: PowerPC 601 Block Diagram

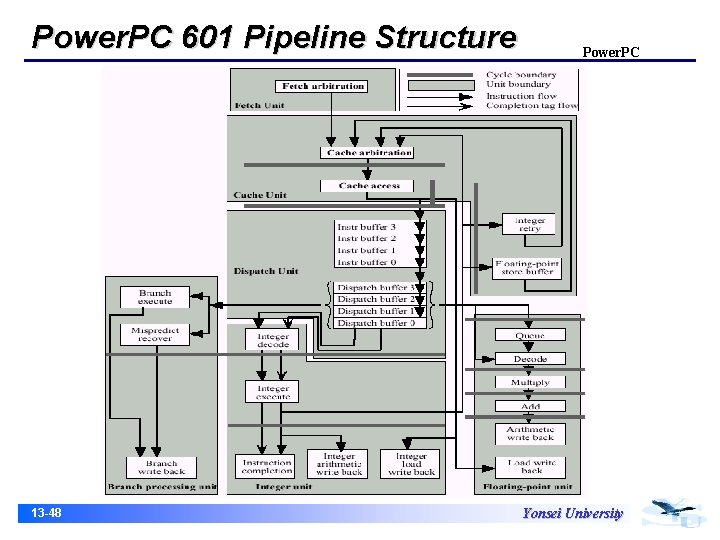

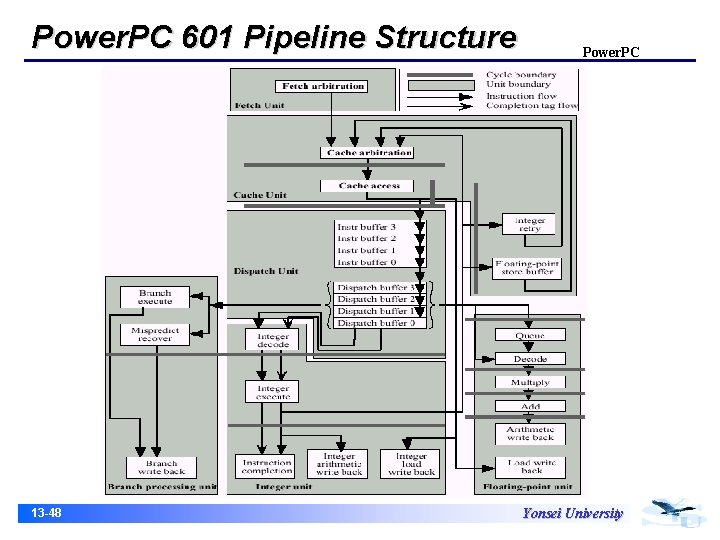

PowerPC: PowerPC 601 Pipeline Structure

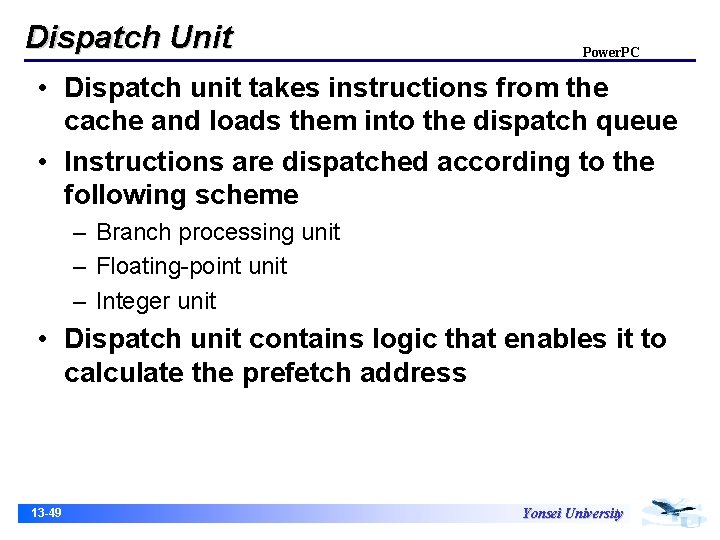

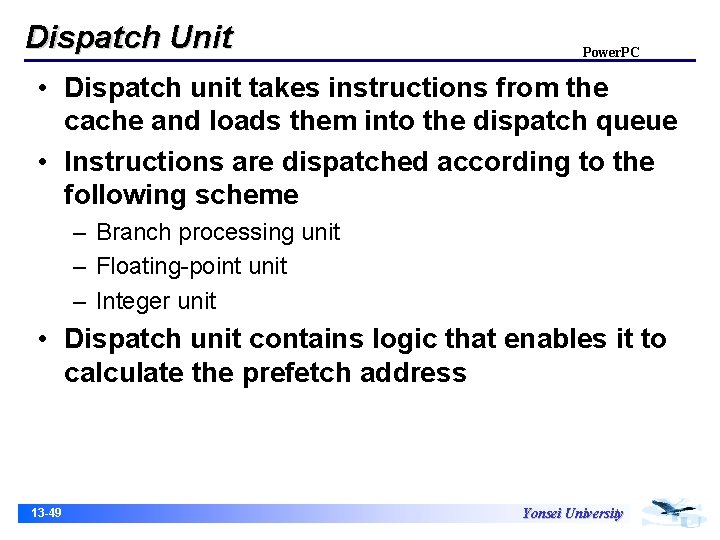

PowerPC: Dispatch Unit
- The dispatch unit takes instructions from the cache and loads them into the dispatch queue
- Instructions are dispatched according to the following scheme
  - Branch processing unit
  - Floating-point unit
  - Integer unit
- The dispatch unit contains logic that enables it to calculate the prefetch address

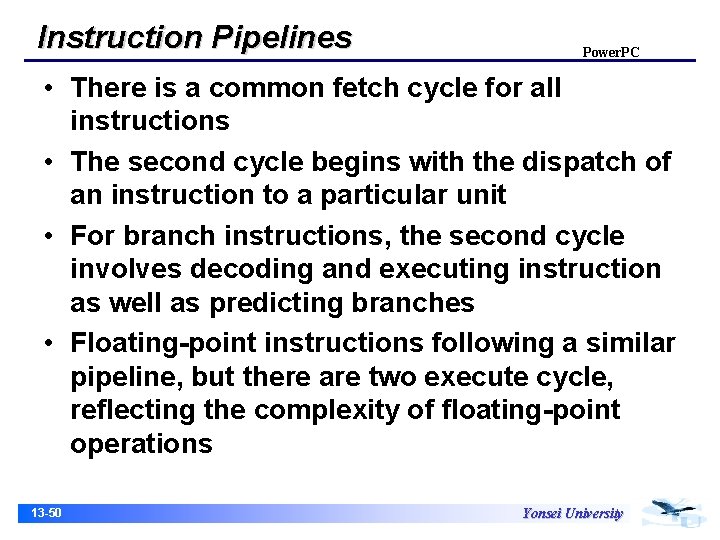

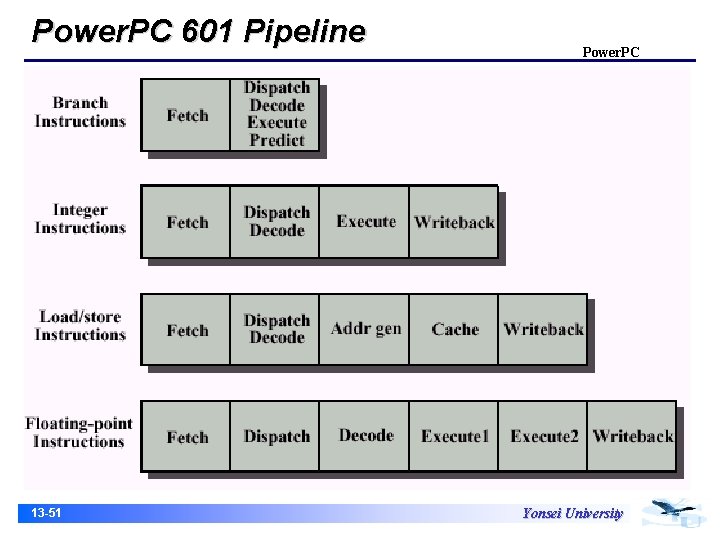

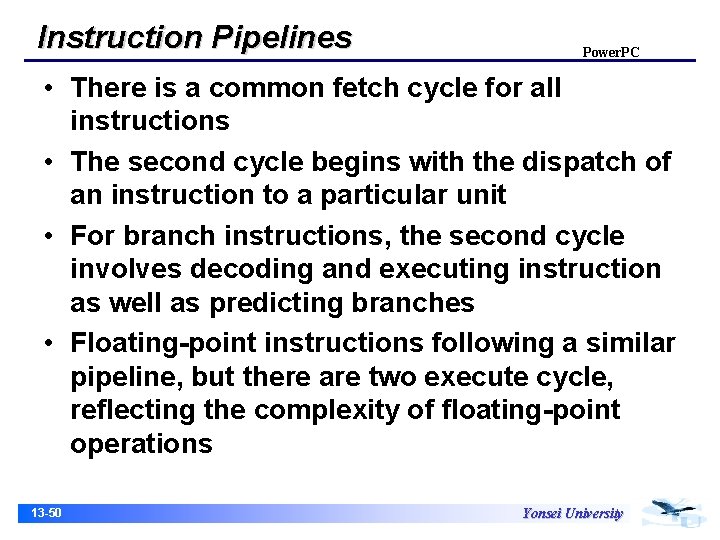

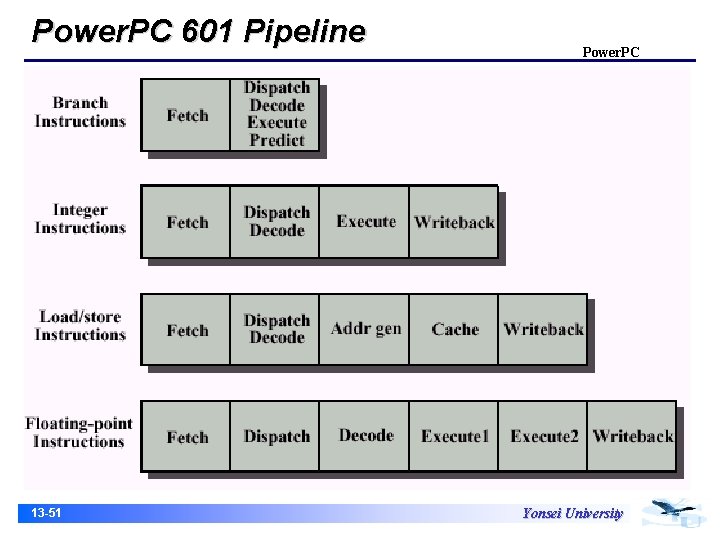

PowerPC: Instruction Pipelines
- There is a common fetch cycle for all instructions
- The second cycle begins with the dispatch of an instruction to a particular unit
- For branch instructions, the second cycle involves decoding and executing the instruction as well as predicting branches
- Floating-point instructions follow a similar pipeline, but there are two execute cycles, reflecting the complexity of floating-point operations

PowerPC: PowerPC 601 Pipeline

PowerPC: Instruction Pipeline
- The compiler can transform the sequence

    compare
    branch
    …

  to the sequence

    compare
    …
    branch
    …

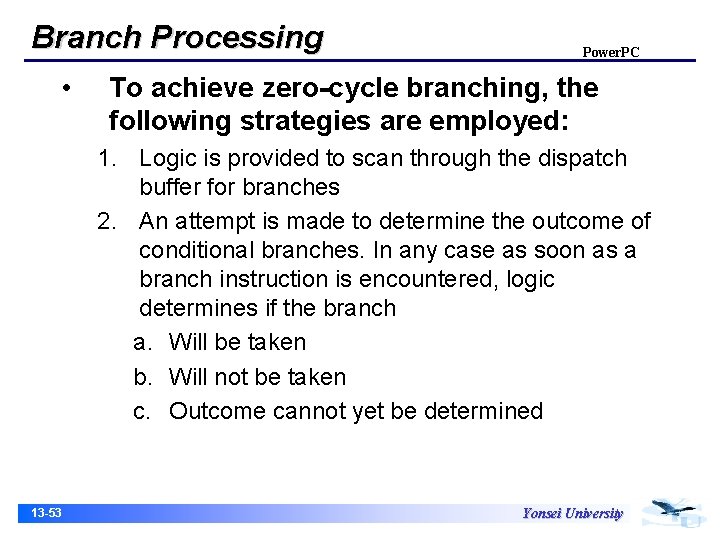

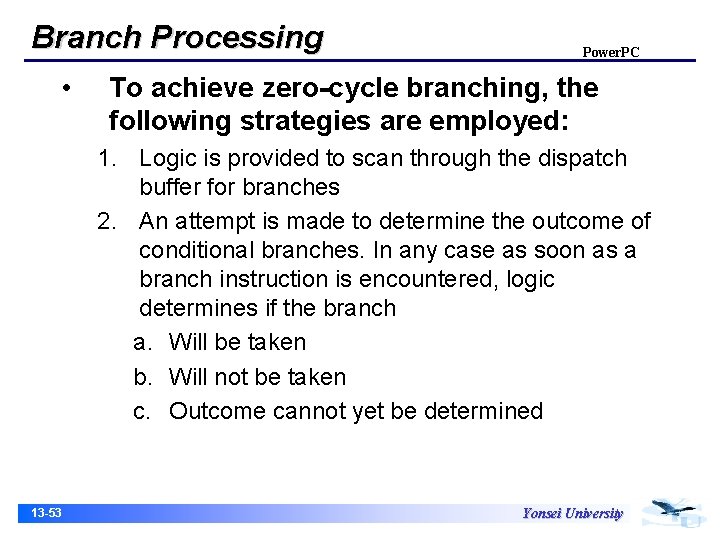

PowerPC: Branch Processing
- To achieve zero-cycle branching, the following strategies are employed:
  1. Logic is provided to scan through the dispatch buffer for branches
  2. An attempt is made to determine the outcome of conditional branches. In any case, as soon as a branch instruction is encountered, logic determines whether the branch
     a. Will be taken
     b. Will not be taken
     c. Has an outcome that cannot yet be determined

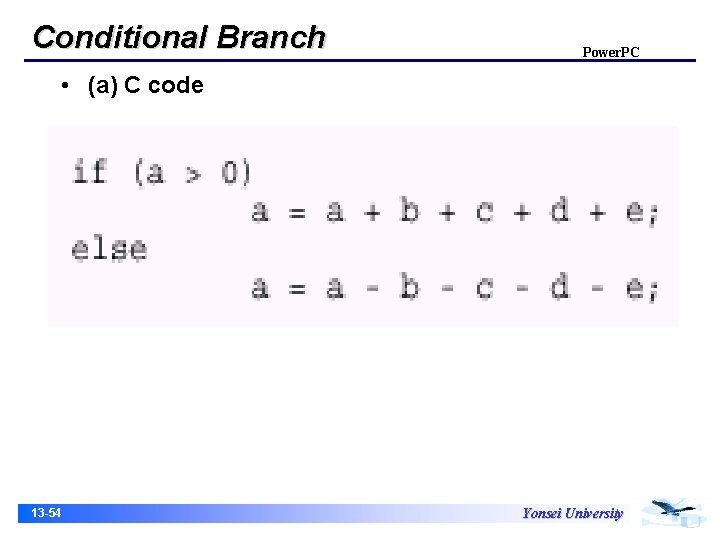

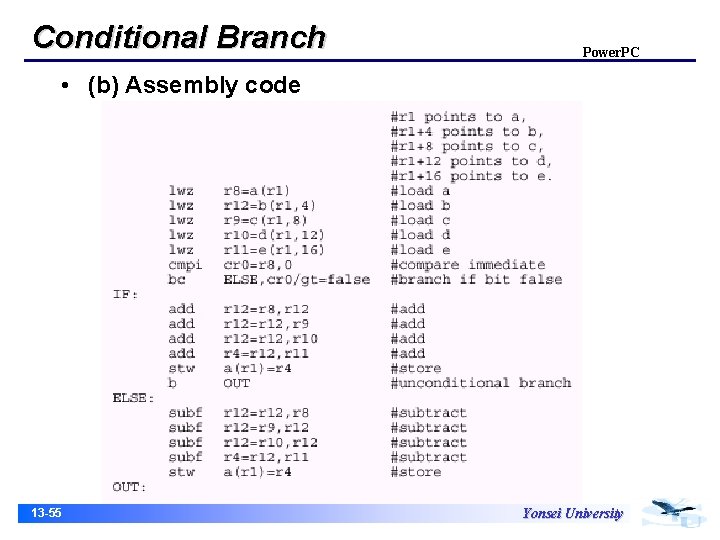

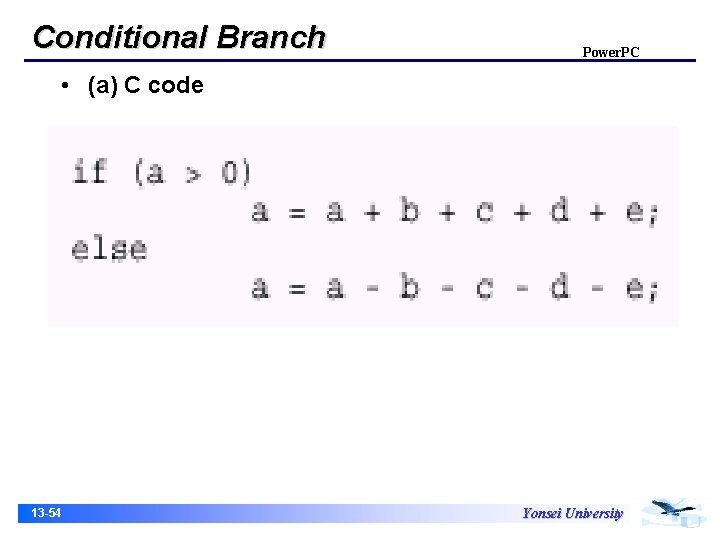

PowerPC: Conditional Branch (a) C code

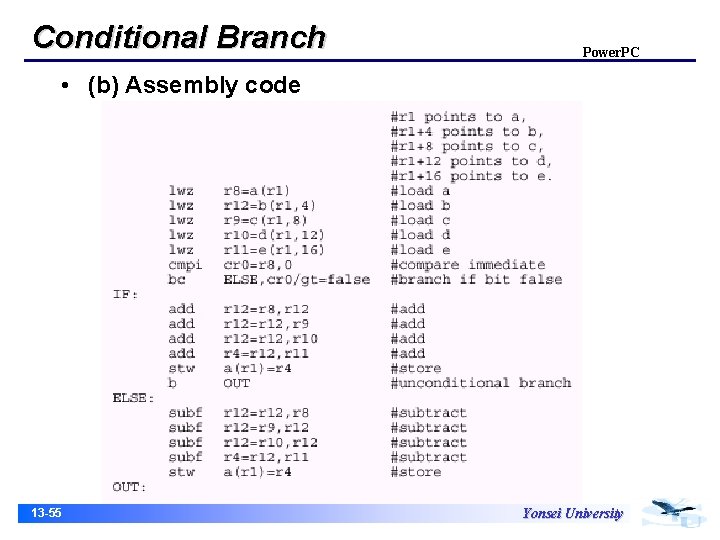

PowerPC: Conditional Branch (b) Assembly code

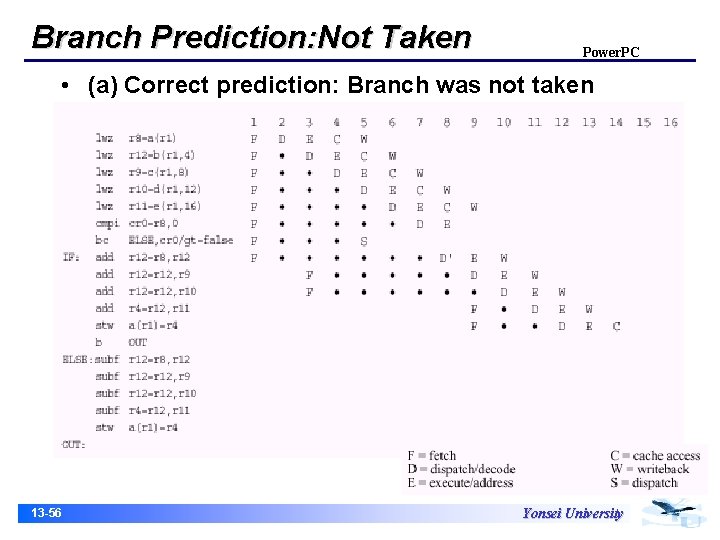

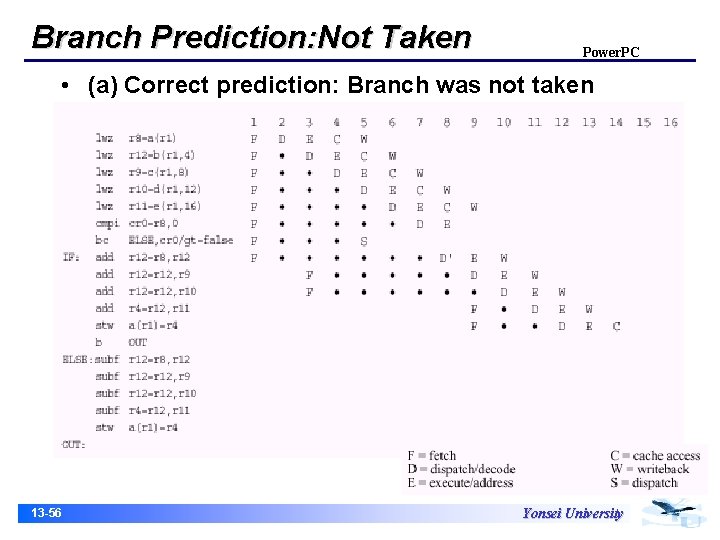

PowerPC: Branch Prediction: Not Taken (a) Correct prediction: branch was not taken

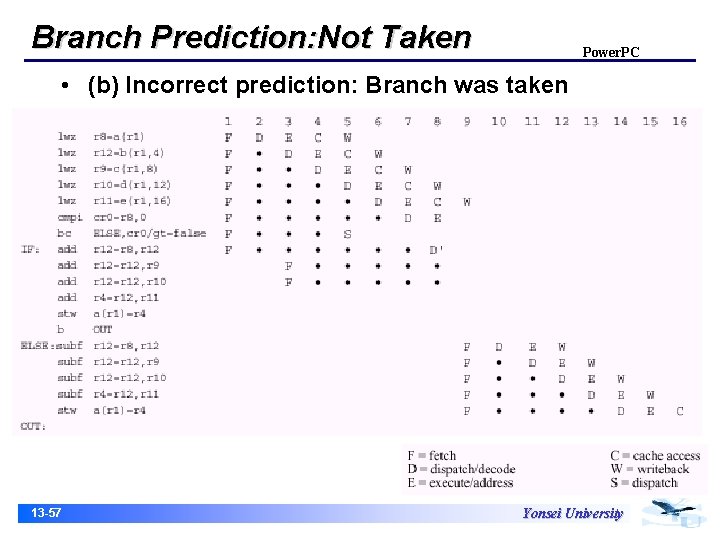

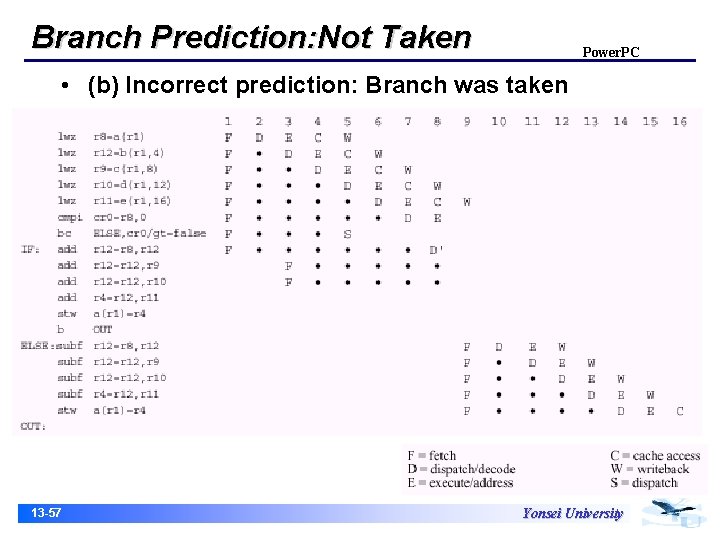

PowerPC: Branch Prediction: Not Taken (b) Incorrect prediction: branch was taken

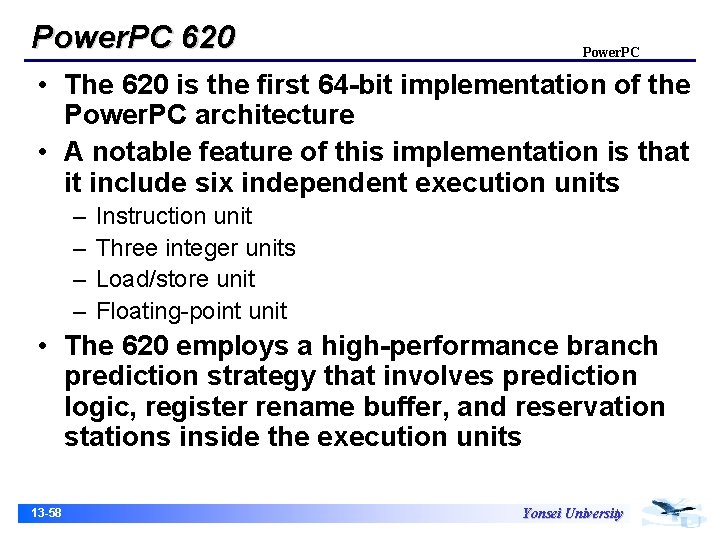

PowerPC: PowerPC 620
- The 620 is the first 64-bit implementation of the PowerPC architecture
- A notable feature of this implementation is that it includes six independent execution units
  - Instruction unit
  - Three integer units
  - Load/store unit
  - Floating-point unit
- The 620 employs a high-performance branch prediction strategy that involves prediction logic, register rename buffers, and reservation stations inside the execution units

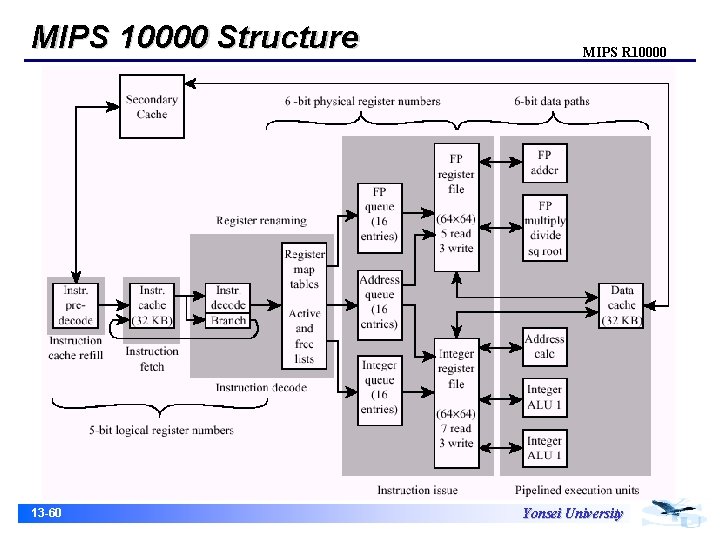

MIPS R10000
- The MIPS R10000, which has evolved from the MIPS R4000 and uses the same instruction set, is a rather clean, straightforward implementation of superscalar design principles

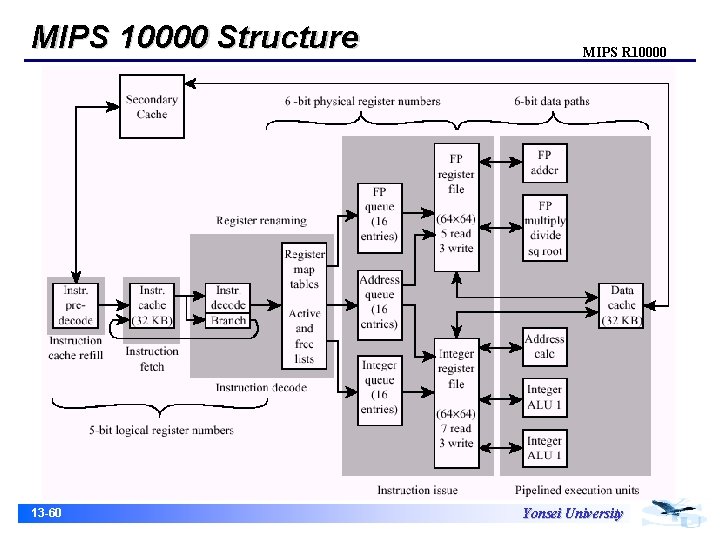

MIPS R10000: MIPS R10000 Structure

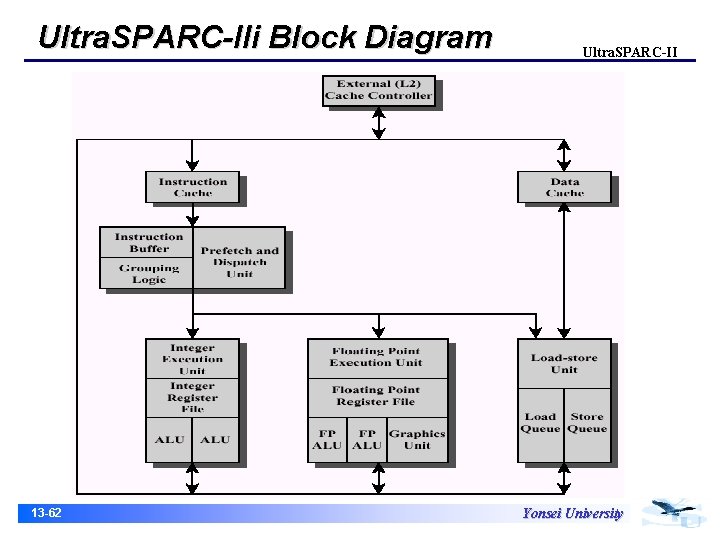

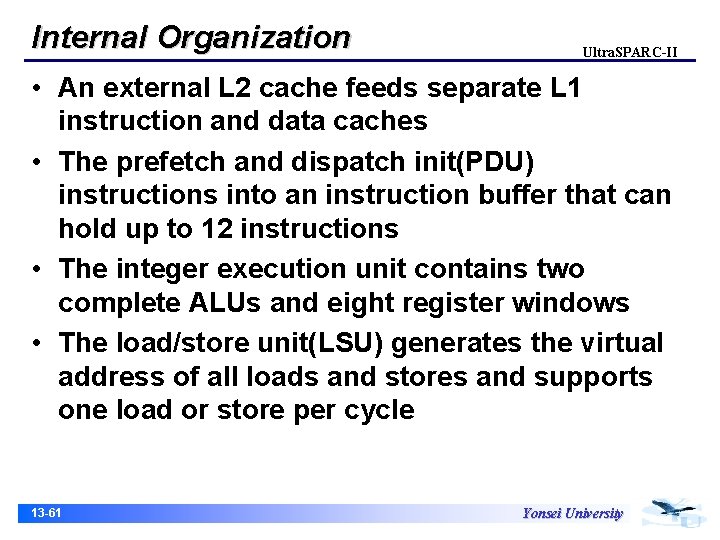

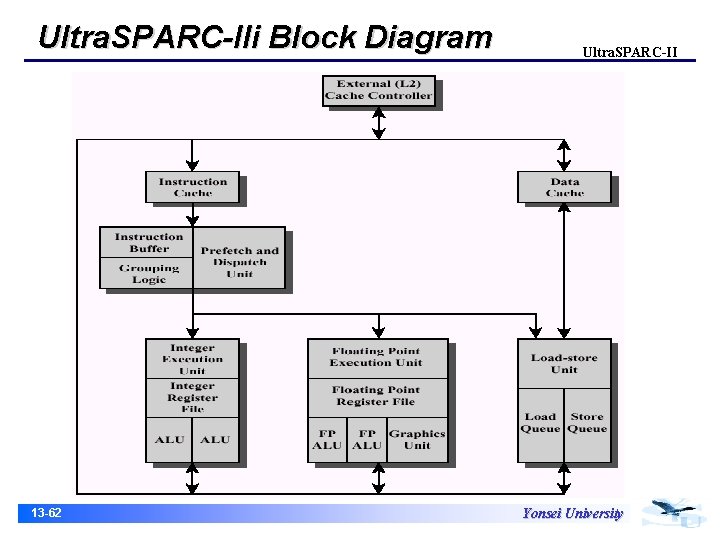

UltraSPARC-II: Internal Organization
- An external L2 cache feeds separate L1 instruction and data caches
- The prefetch and dispatch unit (PDU) fetches instructions into an instruction buffer that can hold up to 12 instructions
- The integer execution unit contains two complete ALUs and eight register windows
- The load/store unit (LSU) generates the virtual address of all loads and stores and supports one load or store per cycle

UltraSPARC-II: UltraSPARC-IIi Block Diagram

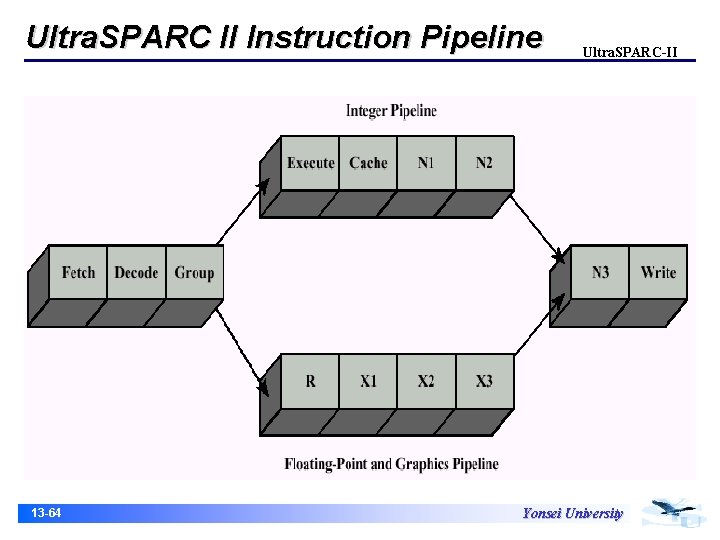

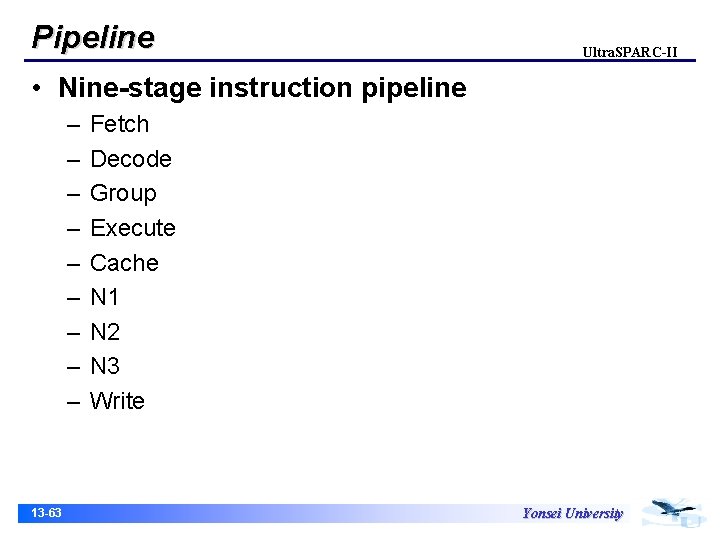

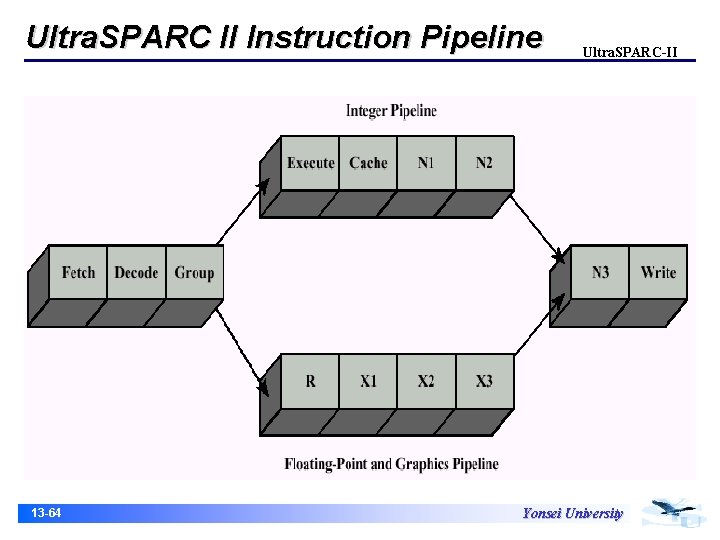

UltraSPARC-II: Pipeline
- Nine-stage instruction pipeline
  - Fetch
  - Decode
  - Group
  - Execute
  - Cache
  - N1
  - N2
  - N3
  - Write

UltraSPARC-II: UltraSPARC-II Instruction Pipeline

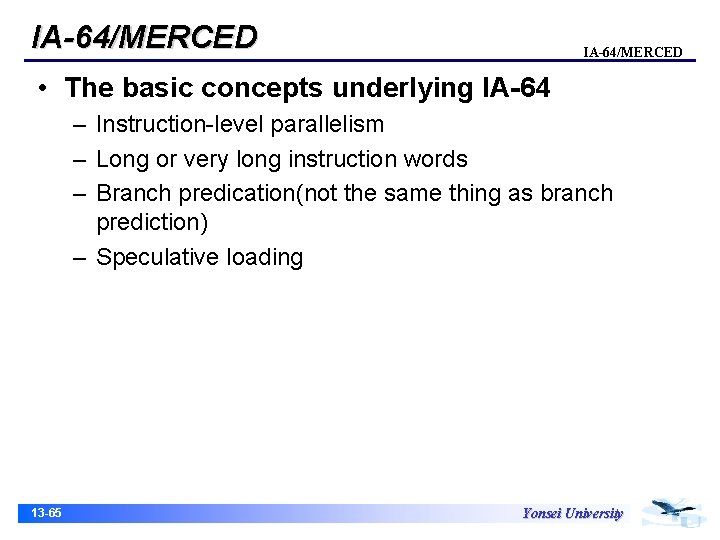

IA-64/Merced
- The basic concepts underlying IA-64
  - Instruction-level parallelism
  - Long or very long instruction words
  - Branch predication (not the same thing as branch prediction)
  - Speculative loading

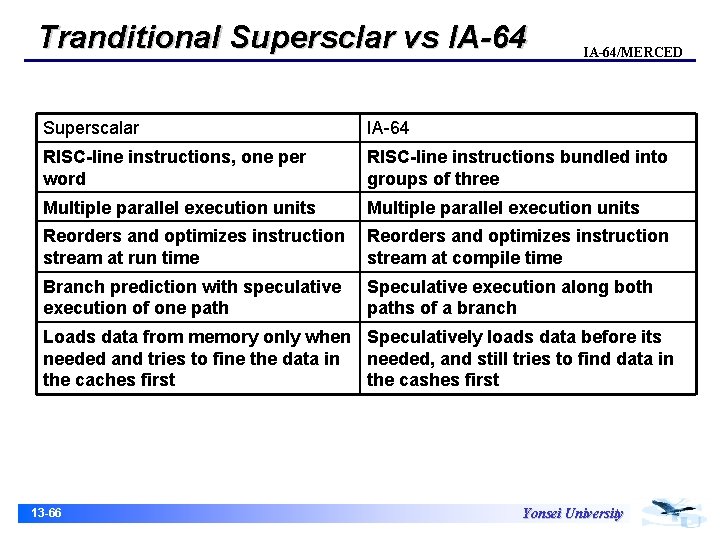

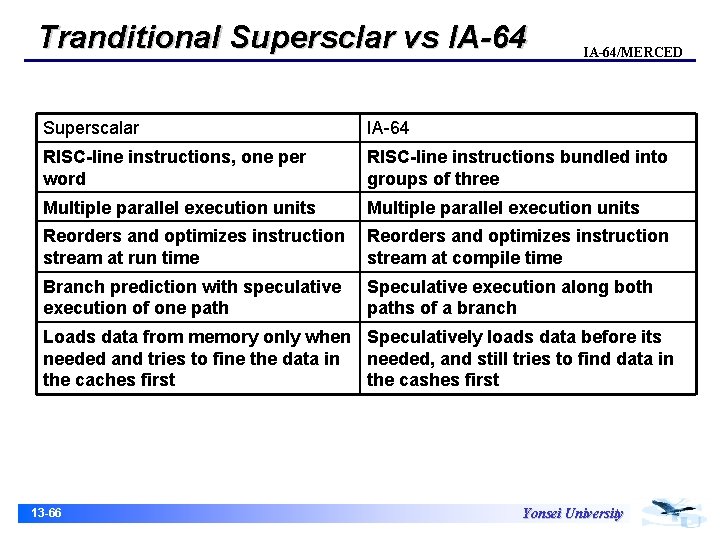

IA-64/Merced: Traditional Superscalar vs IA-64

| Superscalar | IA-64 |
| --- | --- |
| RISC-like instructions, one per word | RISC-like instructions bundled into groups of three |
| Multiple parallel execution units | Multiple parallel execution units |
| Reorders and optimizes instruction stream at run time | Reorders and optimizes instruction stream at compile time |
| Branch prediction with speculative execution of one path | Speculative execution along both paths of a branch |
| Loads data from memory only when needed, and tries to find the data in the caches first | Speculatively loads data before it is needed, and still tries to find data in the caches first |

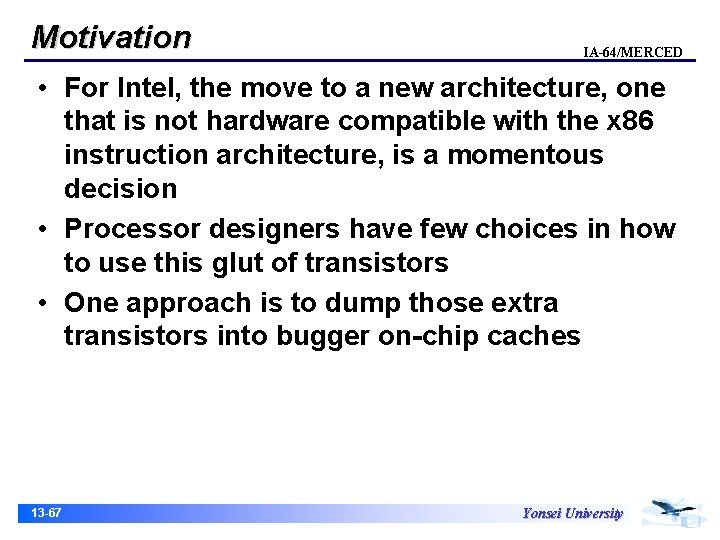

IA-64/Merced: Motivation
- For Intel, the move to a new architecture, one that is not hardware compatible with the x86 instruction architecture, is a momentous decision
- Processor designers have few choices in how to use this glut of transistors
- One approach is to dump those extra transistors into bigger on-chip caches

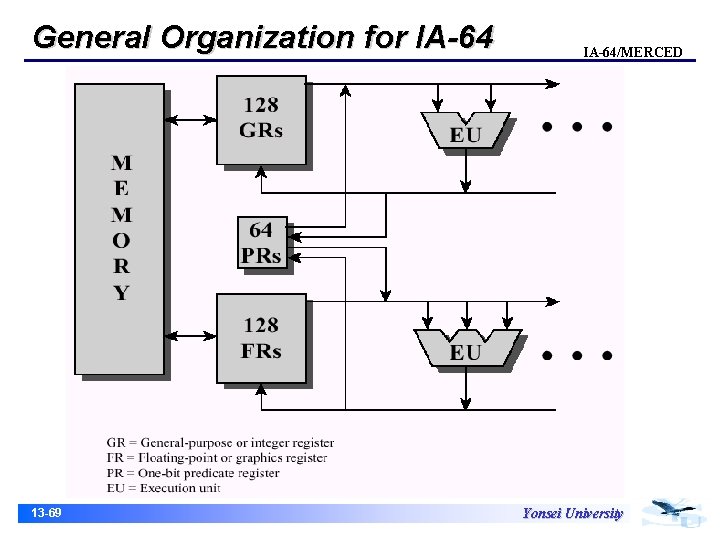

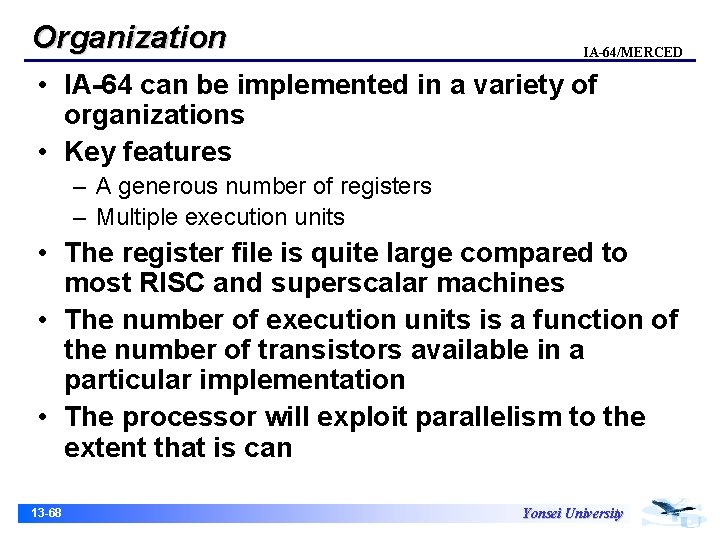

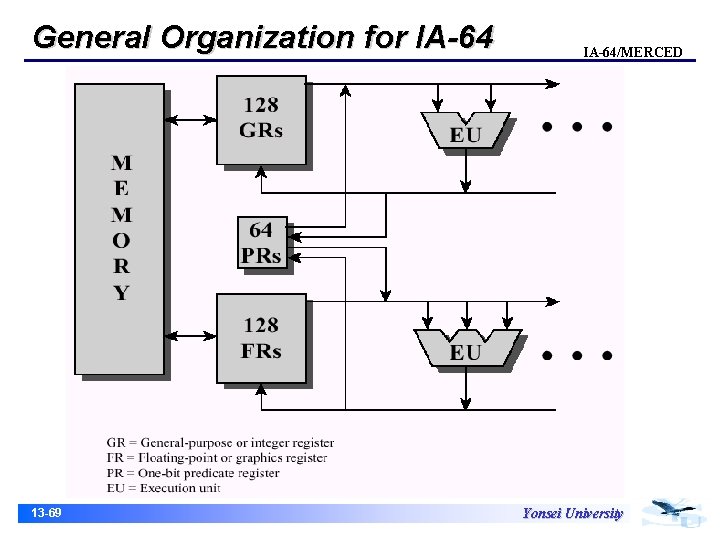

IA-64/Merced: Organization
- IA-64 can be implemented in a variety of organizations
- Key features
  - A generous number of registers
  - Multiple execution units
- The register file is quite large compared to most RISC and superscalar machines
- The number of execution units is a function of the number of transistors available in a particular implementation
- The processor will exploit parallelism to the extent that it can

IA-64/Merced: General Organization for IA-64

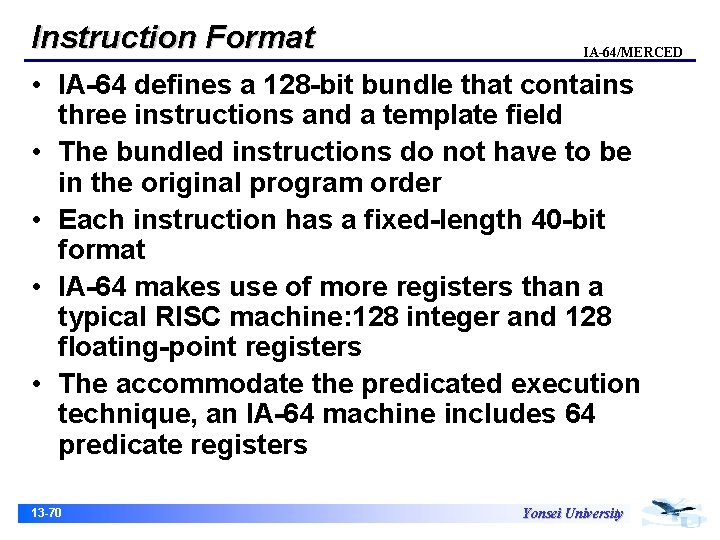

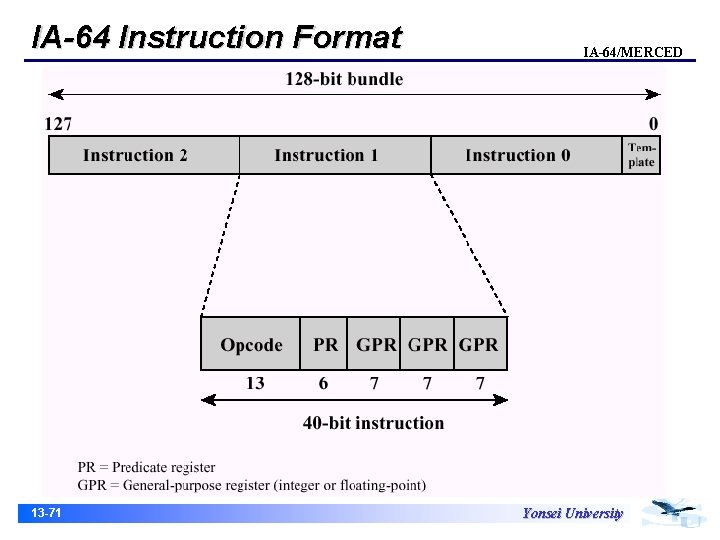

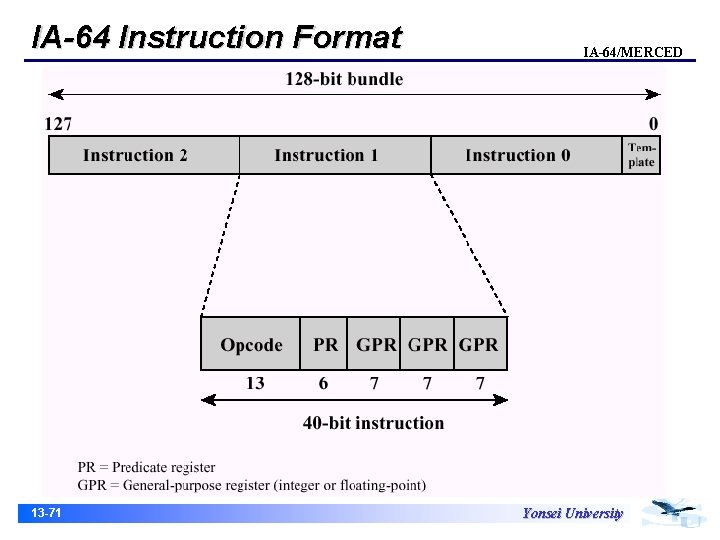

IA-64/Merced: Instruction Format
- IA-64 defines a 128-bit bundle that contains three instructions and a template field
- The bundled instructions do not have to be in the original program order
- Each instruction has a fixed-length 40-bit format
- IA-64 makes use of more registers than a typical RISC machine: 128 integer and 128 floating-point registers
- To accommodate the predicated execution technique, an IA-64 machine includes 64 predicate registers
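Packing three instructions and a template into one 128-bit bundle can be sketched with integer shifts. Note the layout assumption: the slide's 40-bit instruction length would leave 8 bits for the template, whereas the published Itanium encoding uses three 41-bit slots plus a 5-bit template in the low-order bits. The sketch below assumes the published layout; the function names and the slot values are hypothetical.

```python
TEMPLATE_BITS = 5   # assumption: published Itanium layout, not the slide's figures
SLOT_BITS = 41
assert TEMPLATE_BITS + 3 * SLOT_BITS == 128

def pack_bundle(template, slots):
    """Pack a template and three instruction slots into one 128-bit integer."""
    if not 0 <= template < (1 << TEMPLATE_BITS):
        raise ValueError("template does not fit")
    if len(slots) != 3:
        raise ValueError("a bundle holds exactly three instructions")
    bundle = template
    for index, slot in enumerate(slots):
        if not 0 <= slot < (1 << SLOT_BITS):
            raise ValueError("instruction does not fit in a slot")
        bundle |= slot << (TEMPLATE_BITS + index * SLOT_BITS)
    return bundle

def unpack_bundle(bundle):
    """Split a 128-bit bundle back into (template, [slot0, slot1, slot2])."""
    template = bundle & ((1 << TEMPLATE_BITS) - 1)
    mask = (1 << SLOT_BITS) - 1
    slots = [(bundle >> (TEMPLATE_BITS + i * SLOT_BITS)) & mask for i in range(3)]
    return template, slots

bundle = pack_bundle(0x10, [0x123, 0x456, 0x789])
print(hex(bundle), unpack_bundle(bundle))
```

Changing the two constants to 8 and 40 gives the layout implied by the slide; either way the fields sum to 128 bits.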

IA-64/Merced: IA-64 Instruction Format

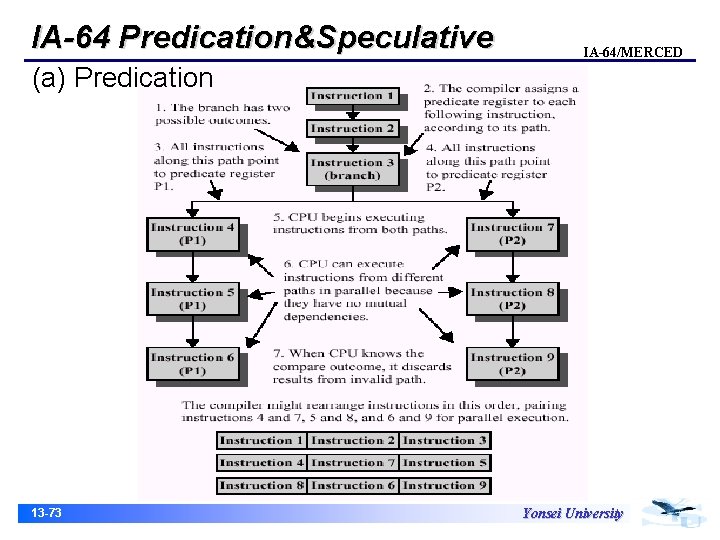

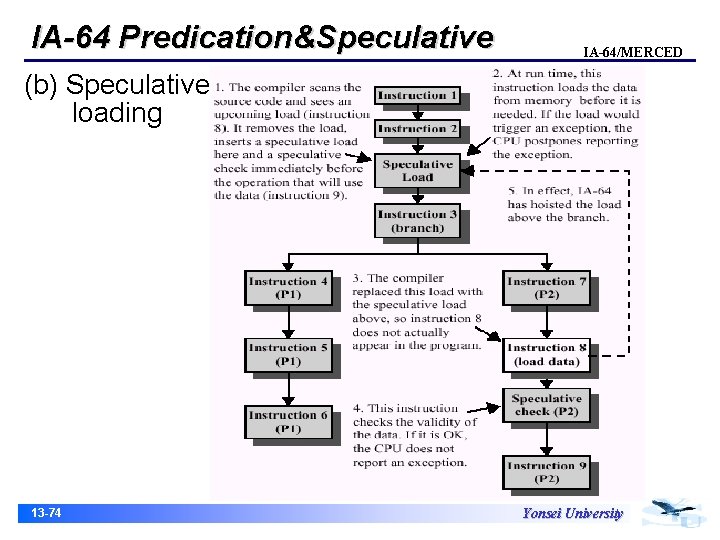

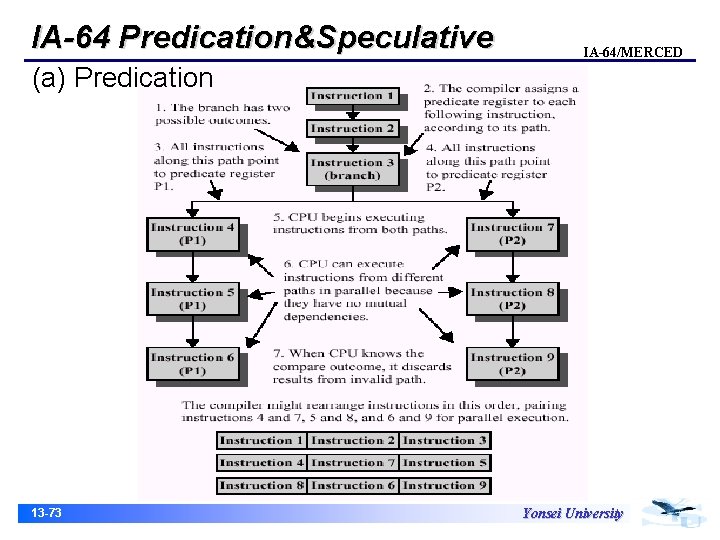

IA-64/Merced: Predicated Execution
- Predication is a technique whereby the compiler determines which instructions may execute in parallel
- Instead of relying on branch prediction, an IA-64 compiler does the following
  1. At the if point in the program, insert a compare that creates two predicates
  2. Augment each instruction in the then path with a reference to one predicate register, and each instruction in the else path with a reference to the other
  3. The processor executes instructions along both paths
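The three steps above can be sketched as a toy interpreter in which every instruction carries a predicate name and its result is committed only when that predicate is true. The predicate names `p1` and `p2`, the registers, and the example `if a == b` statement are all illustrative assumptions.

```python
def execute(instructions, predicates, registers):
    """Run every instruction, committing a result only if its predicate holds."""
    for predicate, dest, value in instructions:
        result = value                 # work is done on both paths
        if predicates[predicate]:      # but only the true path is committed
            registers[dest] = result
    return registers

def if_converted(a, b):
    """if a == b: x, y = 1, 10  else: x, y = 2, 20   (written without a branch)."""
    predicates = {"p1": a == b, "p2": a != b}  # step 1: one compare, two predicates
    code = [
        ("p1", "x", 1),    # step 2: then-path instructions reference p1
        ("p1", "y", 10),
        ("p2", "x", 2),    # else-path instructions reference p2
        ("p2", "y", 20),
    ]
    return execute(code, predicates, {"x": 0, "y": 0})  # step 3: run both paths

print(if_converted(3, 3), if_converted(3, 4))
```

Because no branch remains in the instruction stream, there is nothing to mispredict; the cost is that work on the path not taken is performed and then discarded.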

IA-64/Merced: IA-64 Predication and Speculative Loading (a) Predication

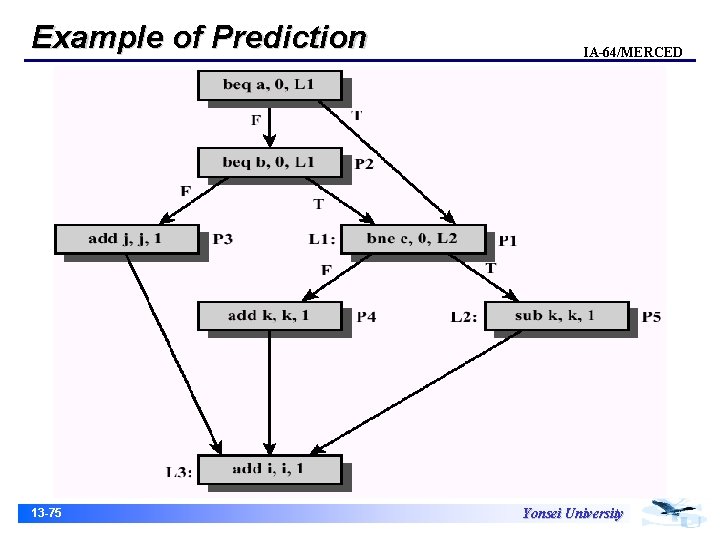

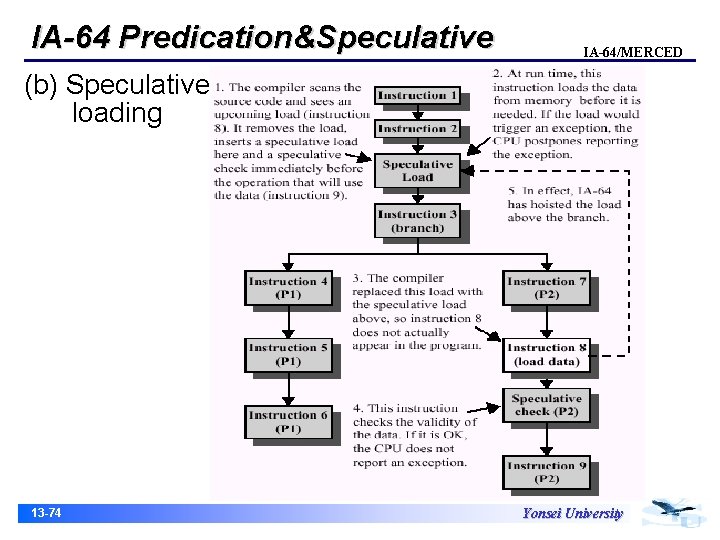

IA-64/Merced: IA-64 Predication and Speculative Loading (b) Speculative loading

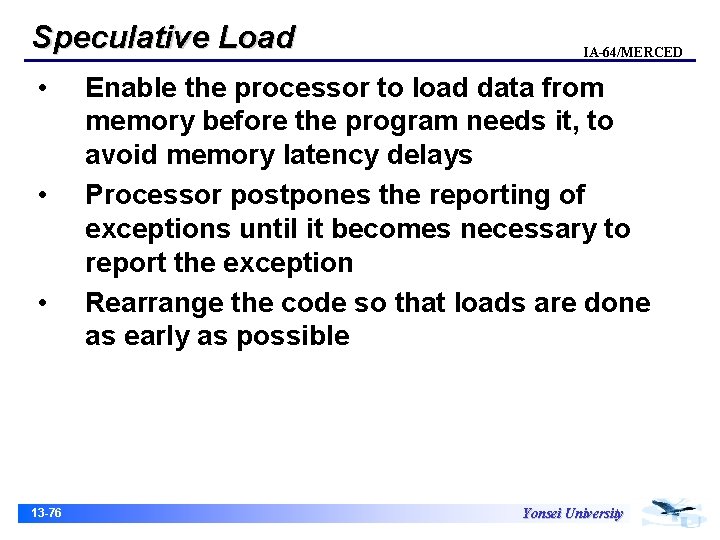

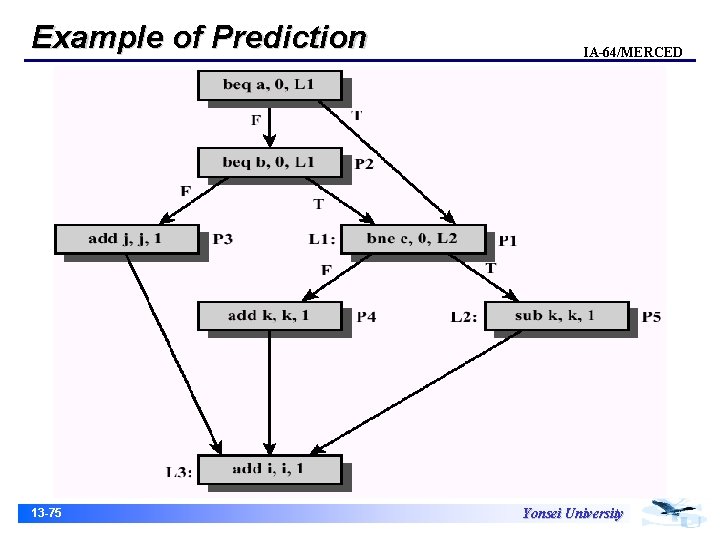

IA-64/Merced: Example of Predication

IA-64/Merced: Speculative Load
- Enables the processor to load data from memory before the program needs it, to avoid memory latency delays
- The processor postpones the reporting of exceptions until it becomes necessary to report them
- The code is rearranged so that loads are done as early as possible

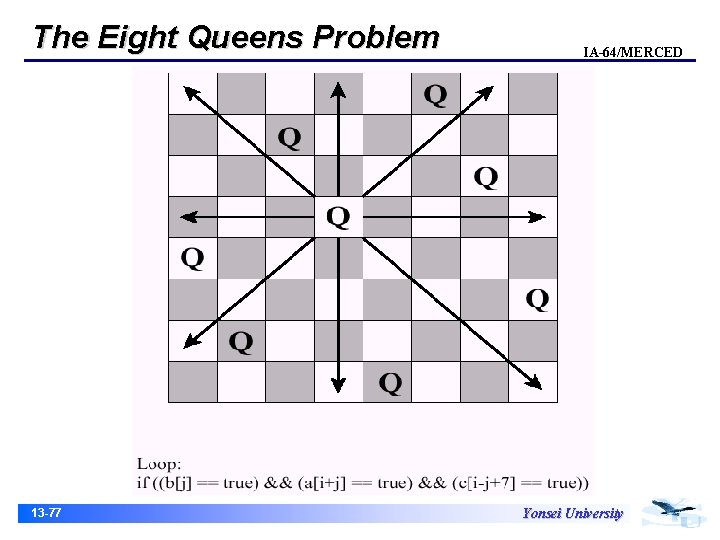

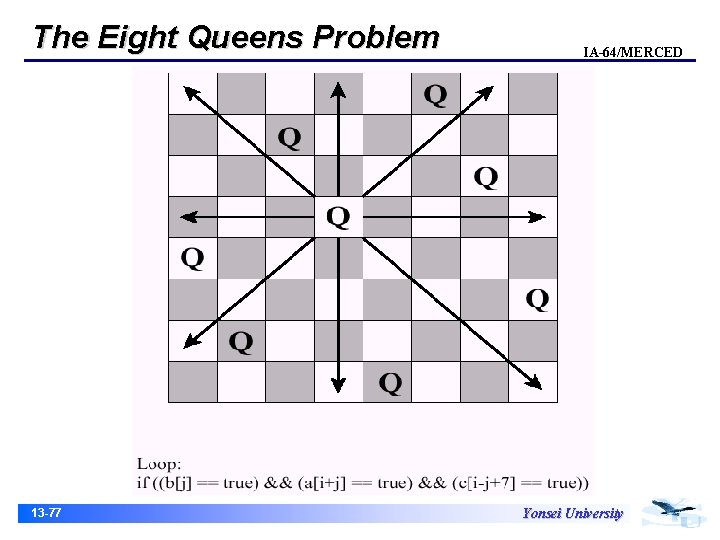

IA-64/Merced: The Eight Queens Problem

IA-64/Merced: Speculative Load
- A load instruction in the original program is replaced by two instructions
  - A speculative load executes the memory fetch and performs exception detection, but does not deliver the exception
  - A checking instruction remains in the place of the original load and delivers exceptions
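The split into a speculative load and a check can be modelled with a marker value that stands in for a deferred exception. This is a conceptual sketch only: memory is a plain dictionary, a missing key plays the role of a faulting address, and the names `speculative_load`, `check`, and `DeferredFault` are hypothetical.

```python
class DeferredFault:
    """Marker kept in place of a value when a speculative load faults."""

    def __init__(self, address):
        self.address = address

def speculative_load(memory, address):
    """Perform the fetch early; on a fault, record it instead of raising."""
    if address not in memory:
        return DeferredFault(address)
    return memory[address]

def check(value):
    """Placed where the original load was; delivers any deferred exception."""
    if isinstance(value, DeferredFault):
        raise MemoryError(f"invalid address {value.address:#x}")
    return value

memory = {0x10: 7}
early = speculative_load(memory, 0x20)  # hoisted above a branch; faults silently
needed = False                          # the guarded path turns out not to run
if needed:
    print(check(early))                 # the exception would surface only here
print(check(speculative_load(memory, 0x10)))
```

If the program never reaches the check, the deferred fault is never reported, which is what makes it safe to hoist the load above the branch that guards it.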