Chapter 12 Pipelining: Strategies, Performance, Hazards

- Slides: 44


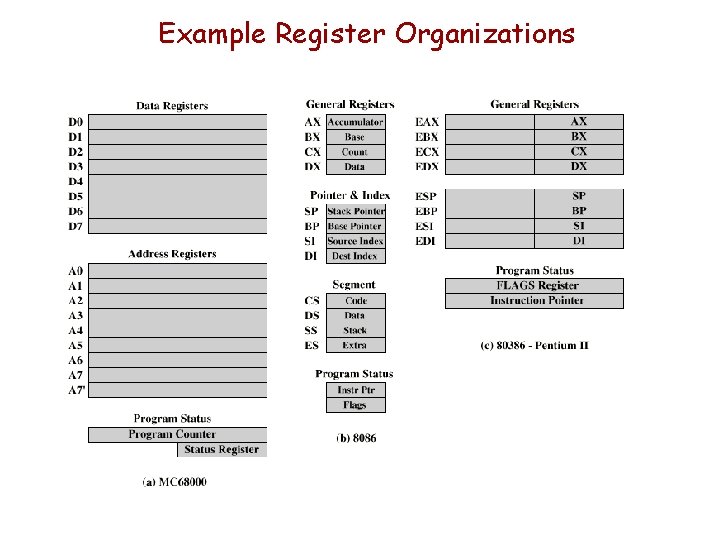

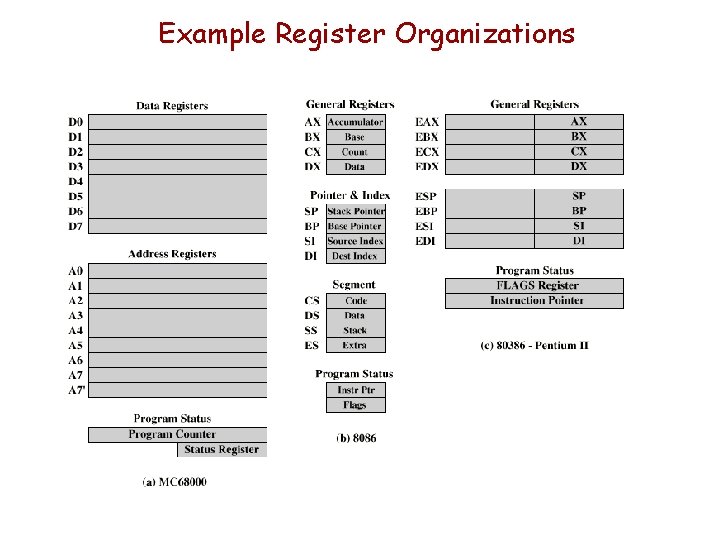

Example Register Organizations

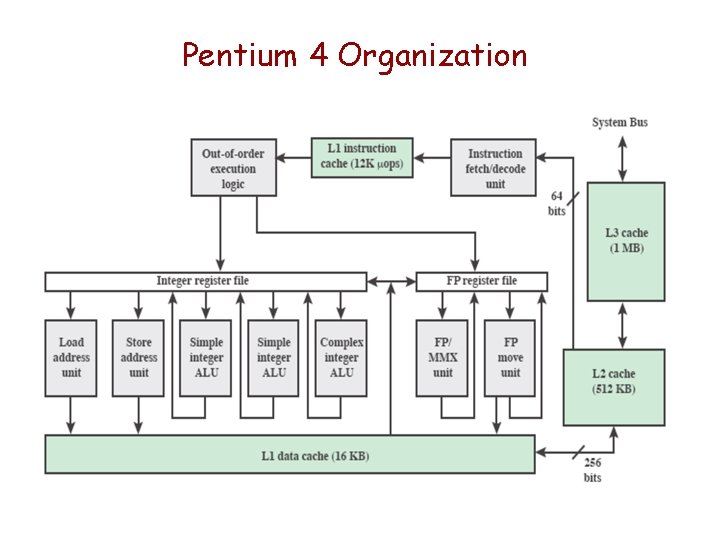

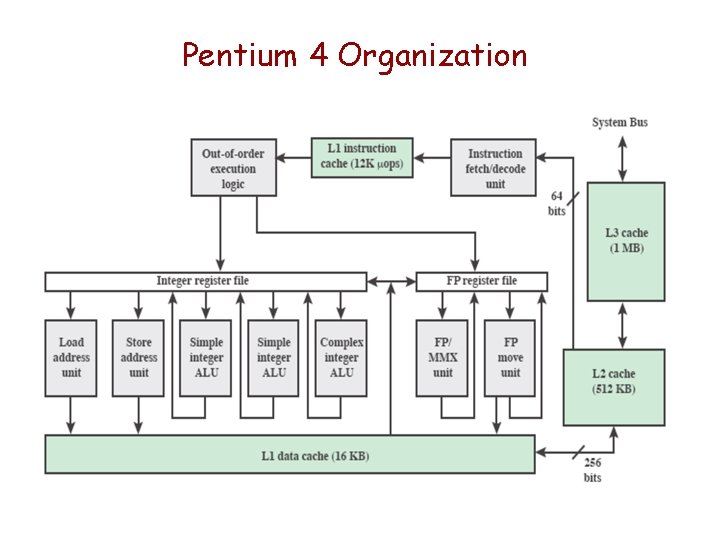

Pentium 4 Organization

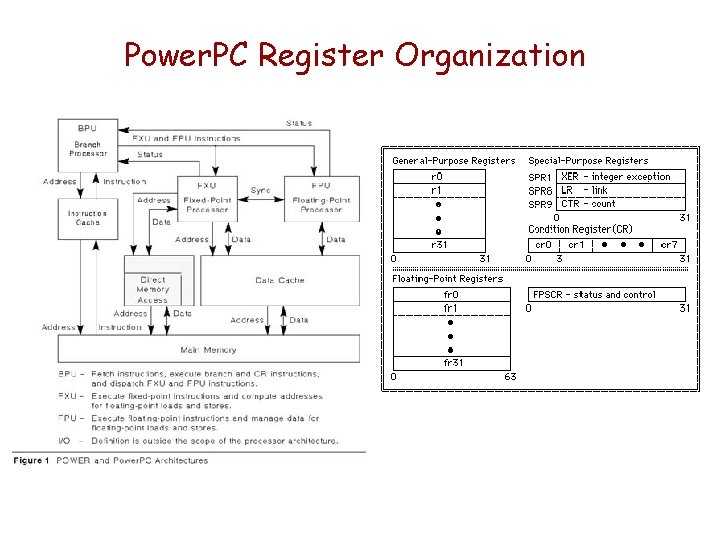

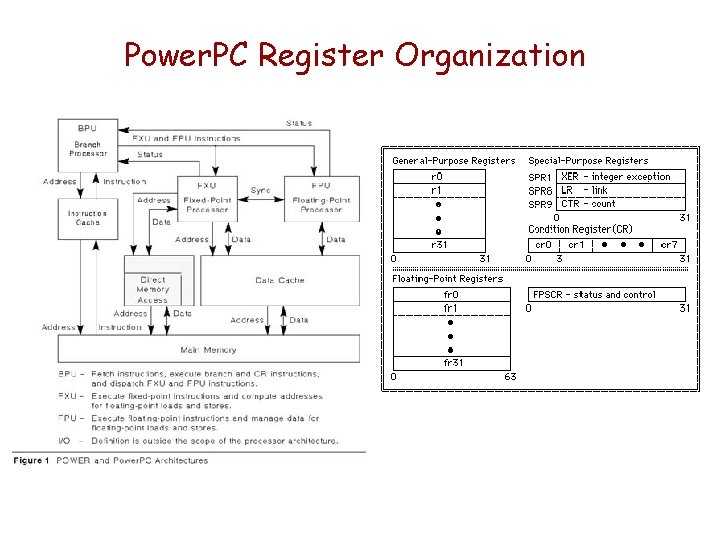

PowerPC Register Organization

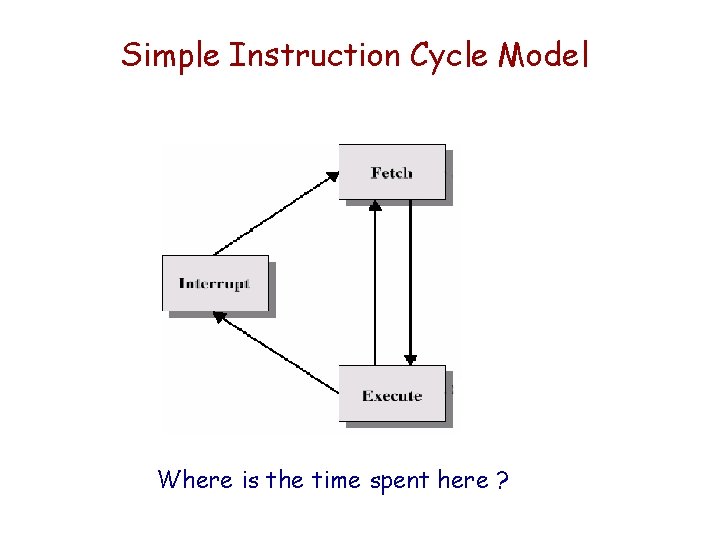

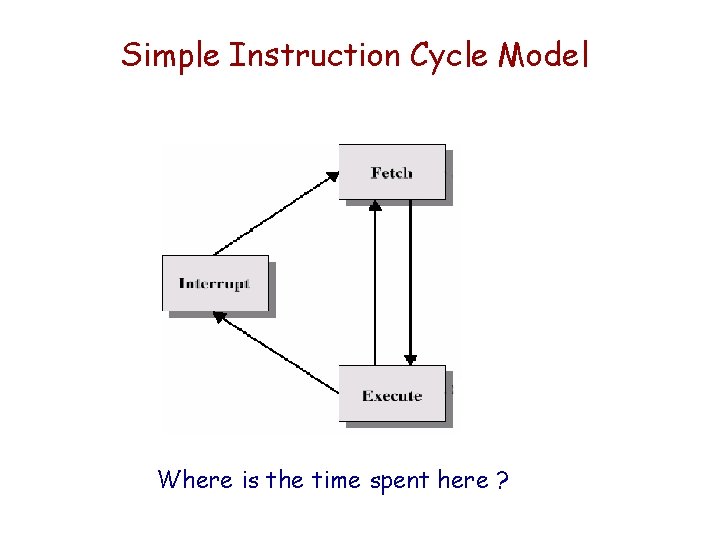

Simple Instruction Cycle Model

Where is the time spent here?

Faster Processing

Faster processing can be achieved through:

- A faster cycle time
- Dividing the cycle into more states
- Implementing parallelism

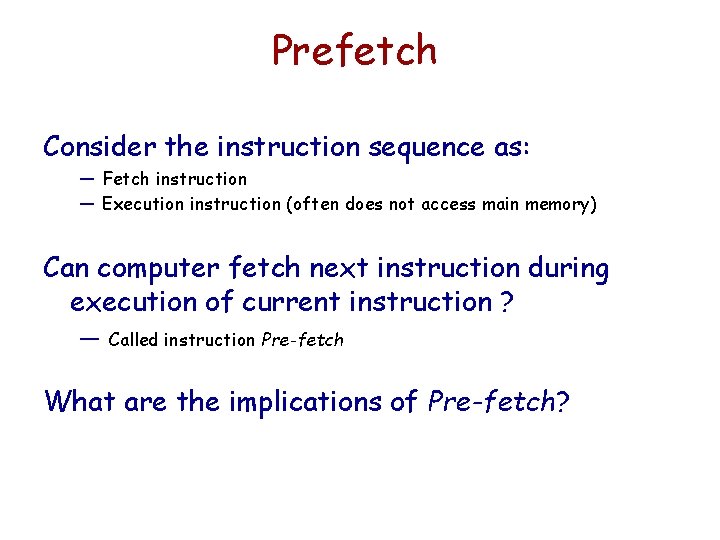

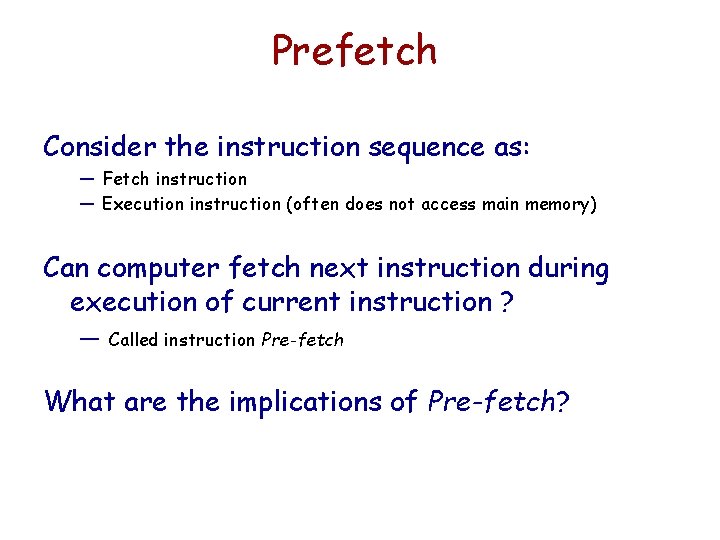

Prefetch

Consider the instruction cycle as two parts:

- Fetch instruction
- Execute instruction (execution often does not access main memory)

Can the computer fetch the next instruction while the current instruction is executing? Doing so is called instruction prefetch.

What are the implications of prefetch?

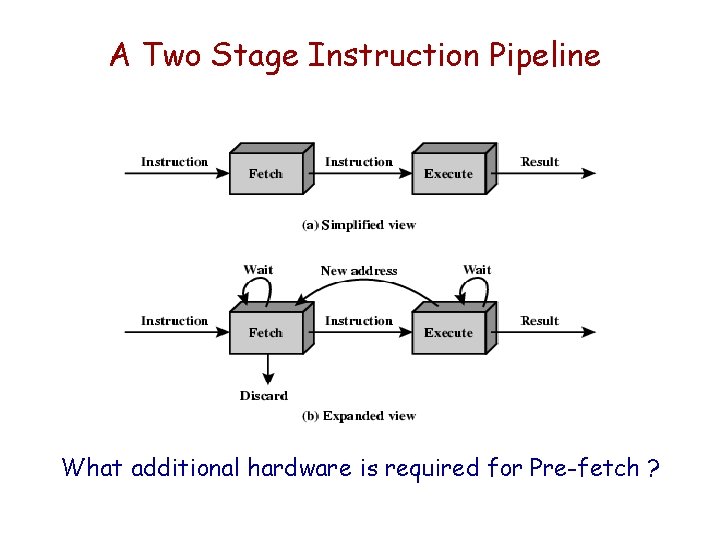

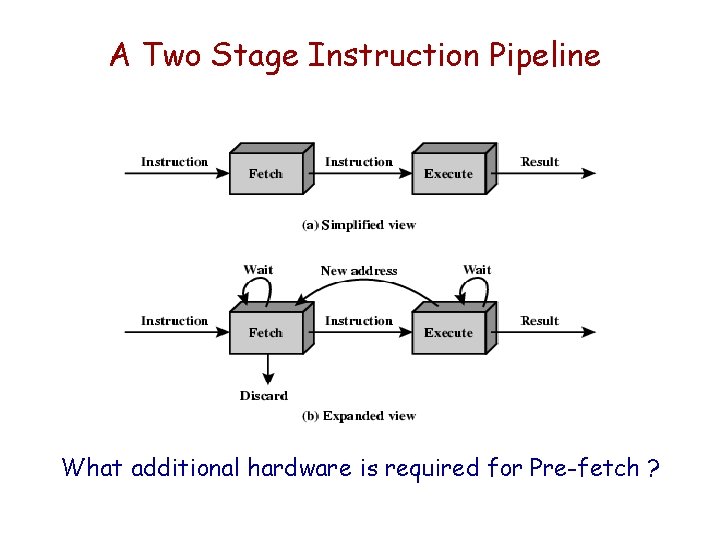

A Two-Stage Instruction Pipeline

What additional hardware is required for prefetch?

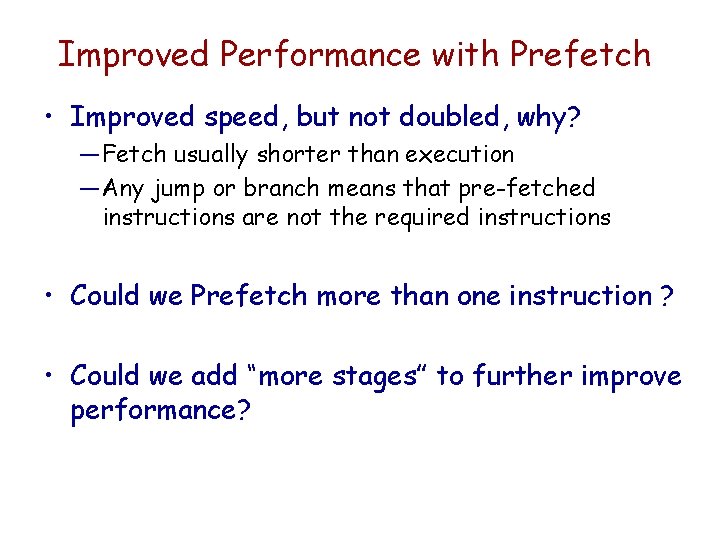

Improved Performance with Prefetch

- Speed improves, but it does not double. Why?
  - Fetch is usually shorter than execution, so the fetch stage sits idle part of the time
  - Any jump or branch means that the prefetched instructions are not the required instructions
- Could we prefetch more than one instruction?
- Could we add more stages to improve performance further?
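Why prefetch does not double the speed can be sketched with a small timing model. It assumes (our assumption, not from the slides) a fetch unit and an execute unit with fixed per-instruction times, a single prefetch buffer, and that a taken branch discards the prefetched instruction so the target is fetched only after the branch finishes executing. The function `run_two_stage` and its parameters are hypothetical.

```python
def run_two_stage(n, fetch, execute, taken_branches=frozenset(), prefetch=True):
    """Total time to run n instructions on a two-stage (fetch/execute) machine.

    Hypothetical model: fixed fetch and execute times, a one-instruction
    prefetch buffer, and taken_branches holding the indices of instructions
    that are taken branches (their prefetched successor is discarded).
    """
    prev_fetch_end = prev_exec_start = prev_exec_end = 0
    for i in range(n):
        if not prefetch or (i - 1) in taken_branches:
            # No overlap: fetch only after the previous instruction executes.
            fetch_start = prev_exec_end
        else:
            # Prefetch: fetch as soon as the fetch unit is free and the
            # buffer has been emptied by the previous instruction.
            fetch_start = max(prev_fetch_end, prev_exec_start)
        fetch_end = fetch_start + fetch
        exec_start = max(fetch_end, prev_exec_end)
        exec_end = exec_start + execute
        prev_fetch_end, prev_exec_start, prev_exec_end = fetch_end, exec_start, exec_end
    return prev_exec_end


if __name__ == "__main__":
    n, f, e = 100, 1, 2  # fetch shorter than execute
    plain = run_two_stage(n, f, e, prefetch=False)
    overlapped = run_two_stage(n, f, e)
    with_branch = run_two_stage(n, f, e, taken_branches={49})
    print(plain, overlapped, with_branch)  # 300 201 202
    print(f"speedup: {plain / overlapped:.2f}")  # speedup: 1.49
```

In this model the time per instruction is bounded by the longer of the two stages, so the speedup stays below 2, and each taken branch costs roughly one extra fetch time.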

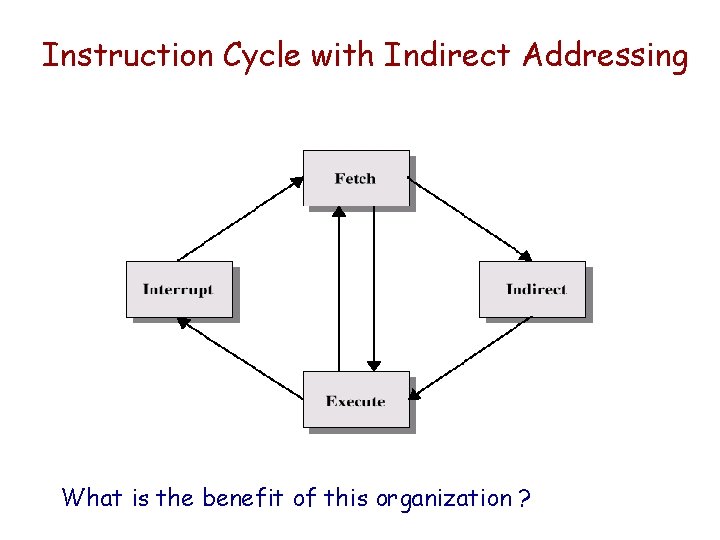

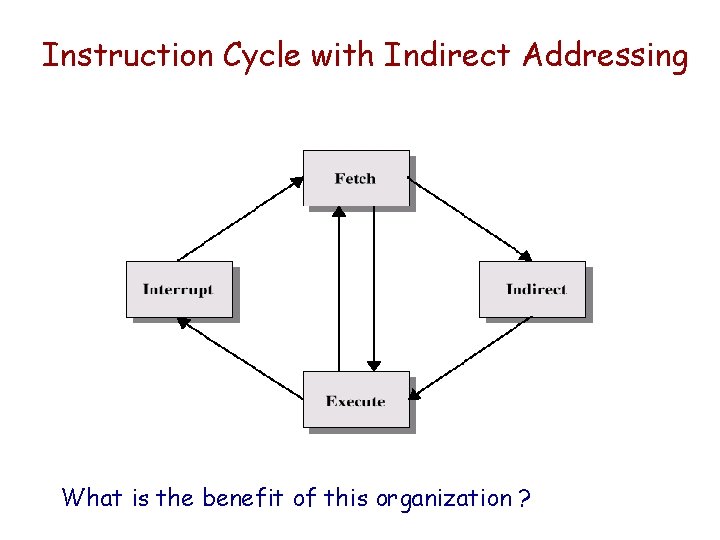

Instruction Cycle with Indirect Addressing

What is the benefit of this organization?

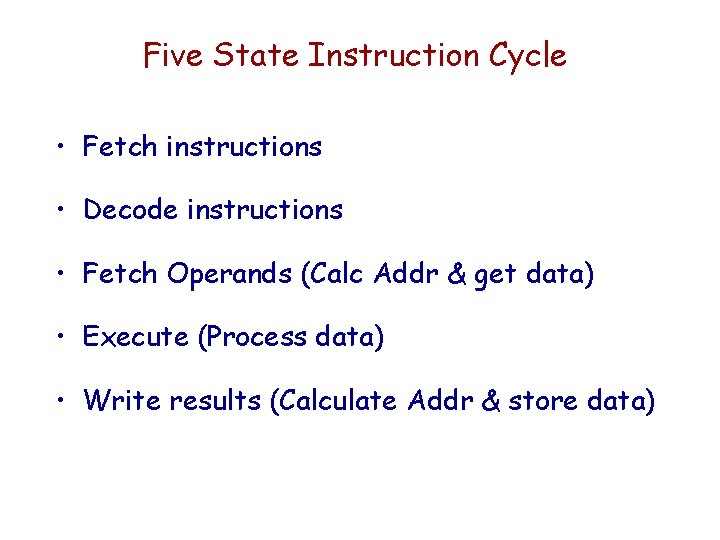

Five-State Instruction Cycle

- Fetch instruction
- Decode instruction
- Fetch operands (calculate address and get data)
- Execute (process data)
- Write results (calculate address and store data)

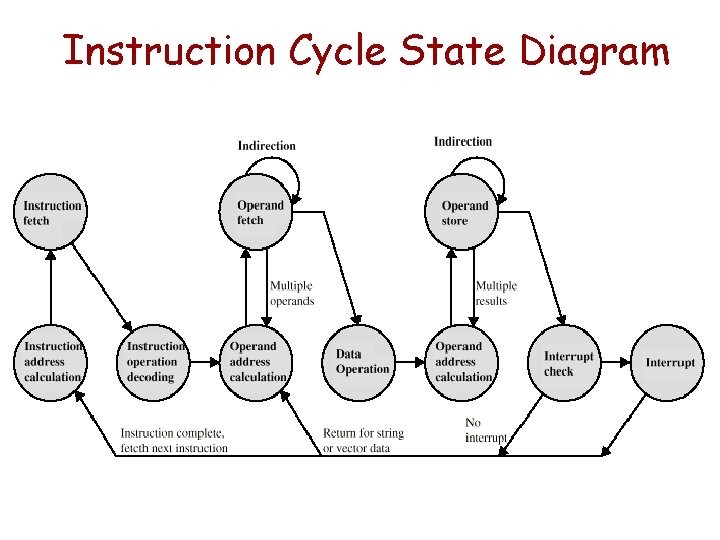

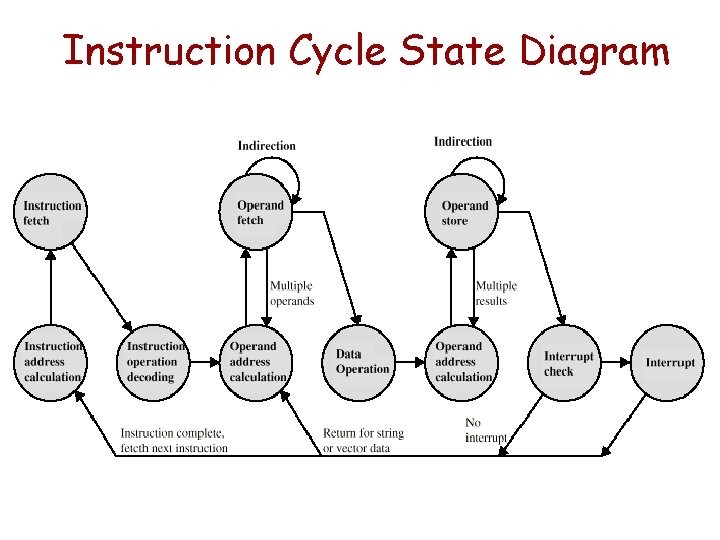

Instruction Cycle State Diagram

Pipelining

Consider the instruction cycle as a sequence of stages:

- Fetch Instruction (FI)
- Decode Instruction (DI)
- Calculate Operand Addresses (CO)
- Fetch Operands (FO)
- Execute Instruction (EI)
- Write Operand (WO)
- Check for Interrupt (CI)

Think of it as an “assembly line” of operations: we can begin the next instruction’s sequence before the previous one has finished. For example, we can fetch the next instruction while the present one is being decoded. This overlapping is pipelining.

Pipeline “Stations”

Let’s define a possible set of pipeline stations:

- Fetch Instruction (FI)
- Decode Instruction (DI)
- Calculate Operand Addresses (CO)
- Fetch Operands (FO)
- Execute Instruction (EI)
- Write Operand (WO)

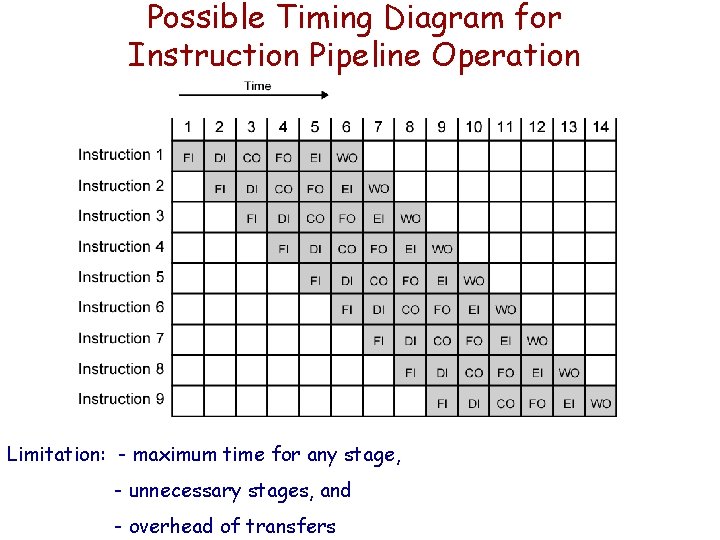

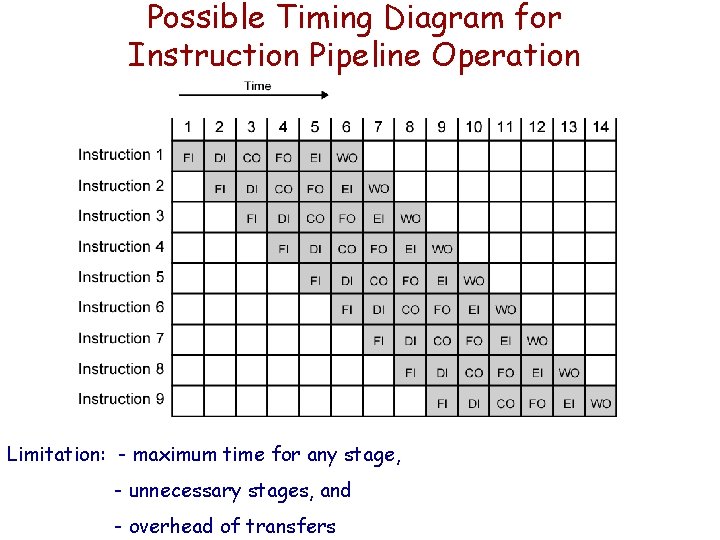

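Possible Timing Diagram for Instruction Pipeline Operation

Limitations:

- The time unit must accommodate the slowest stage (the maximum time of any stage)
- Not every instruction needs every stage, so some stages are unnecessary for some instructions
- Transferring data between stages adds overhead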
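An idealized timing diagram for the six stations can be printed with a short sketch. It assumes every stage takes exactly one time unit and that no hazards occur (both simplifying assumptions); `timing_diagram` and `print_diagram` are hypothetical helper names.

```python
STAGES = ["FI", "DI", "CO", "FO", "EI", "WO"]


def timing_diagram(n_instructions, stages=STAGES):
    """Rows of stage names per time unit for an ideal pipeline (no hazards).

    Instruction i (0-based) enters FI at time unit i + 1 and moves one
    stage per time unit, so the whole run needs k + n - 1 time units.
    """
    k = len(stages)
    total = k + n_instructions - 1
    rows = []
    for i in range(n_instructions):
        row = [""] * total
        for s, name in enumerate(stages):
            row[i + s] = name
        rows.append(row)
    return rows


def print_diagram(rows):
    total = len(rows[0])
    print("     " + "".join(f"{t:>4}" for t in range(1, total + 1)))
    for i, row in enumerate(rows, start=1):
        print(f"I{i:<3} " + "".join(f"{cell:>4}" for cell in row))


if __name__ == "__main__":
    # 9 instructions finish in 6 + 9 - 1 = 14 time units.
    print_diagram(timing_diagram(9))
```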

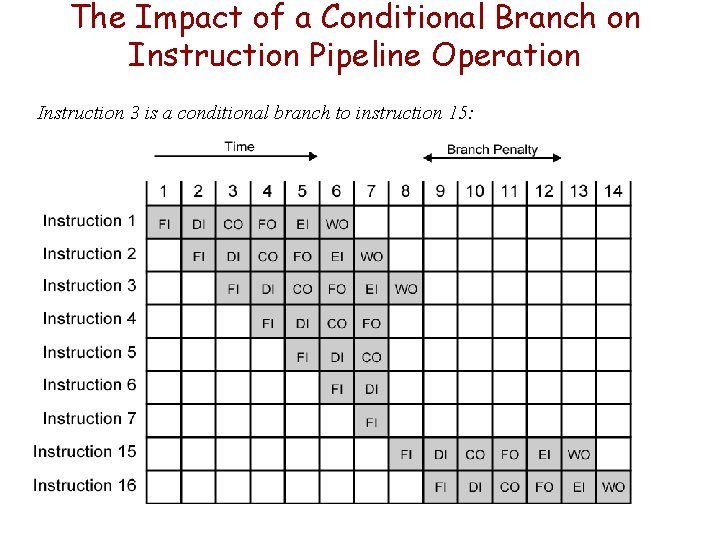

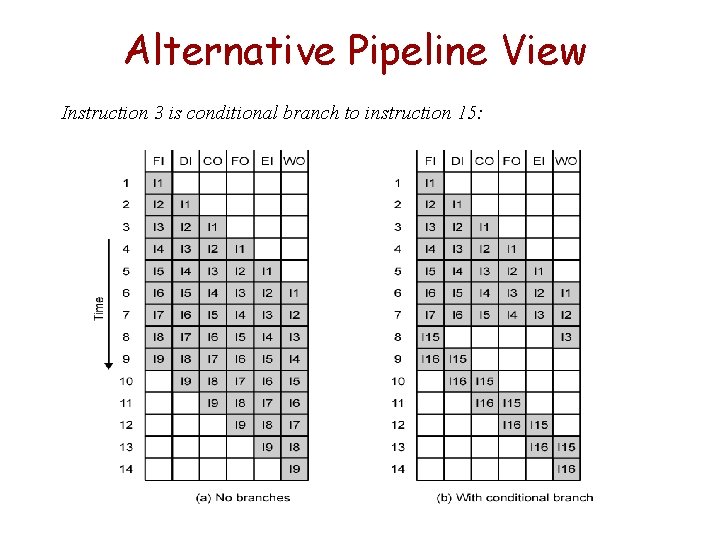

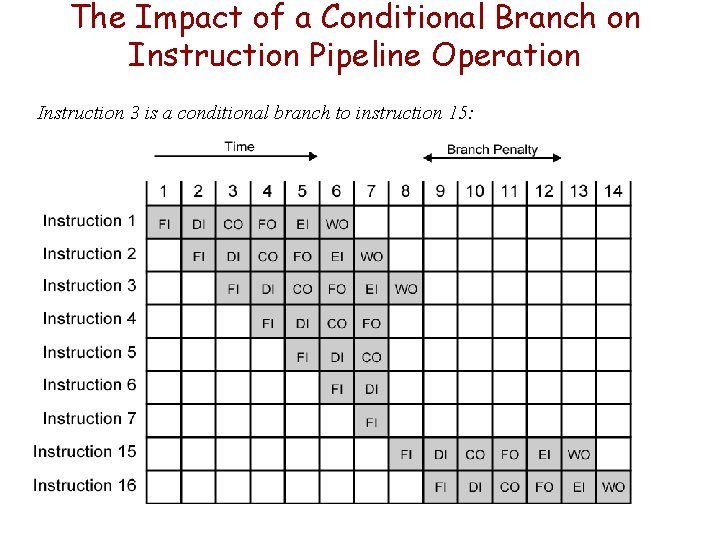

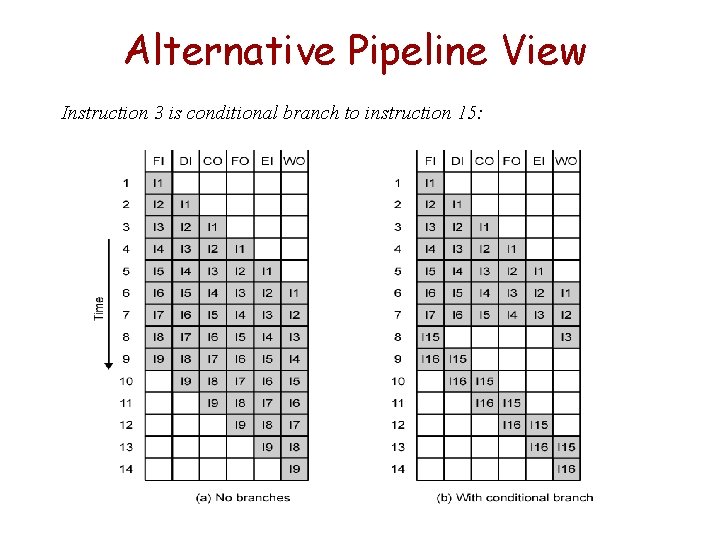

The Impact of a Conditional Branch on Instruction Pipeline Operation

Instruction 3 is a conditional branch to instruction 15.

Alternative Pipeline View

Instruction 3 is a conditional branch to instruction 15.
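The cost of the taken branch from instruction 3 to instruction 15 can be sketched as follows. Assumptions (ours): one stage per time unit, the branch is resolved at the end of EI, the instructions fetched behind it in the meantime are flushed, and the target enters FI in the next time unit. `fetch_times` is a hypothetical helper.

```python
STAGES = ["FI", "DI", "CO", "FO", "EI", "WO"]


def fetch_times(executed, taken, stages=STAGES, resolve_stage="EI"):
    """Map each executed instruction label to the time unit it enters FI.

    Instructions fetched after a taken branch, before it resolves, are
    flushed; the target enters FI the unit after resolve_stage ends.
    """
    resolve_offset = stages.index(resolve_stage)
    times = {}
    t = 1
    for label in executed:
        times[label] = t
        # After a taken branch, skip the slots wasted on flushed instructions.
        t += (resolve_offset + 1) if label in taken else 1
    return times


if __name__ == "__main__":
    k = len(STAGES)
    fi = fetch_times([1, 2, 3, 15, 16], taken={3})
    done = {label: t + k - 1 for label, t in fi.items()}
    print(fi)    # {1: 1, 2: 2, 3: 3, 15: 8, 16: 9}
    print(done)  # {1: 6, 2: 7, 3: 8, 15: 13, 16: 14}
```

Under these assumptions instructions 4 through 7 are flushed, instruction 15 enters the pipeline at time unit 8, and no instruction completes during time units 9 through 12.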

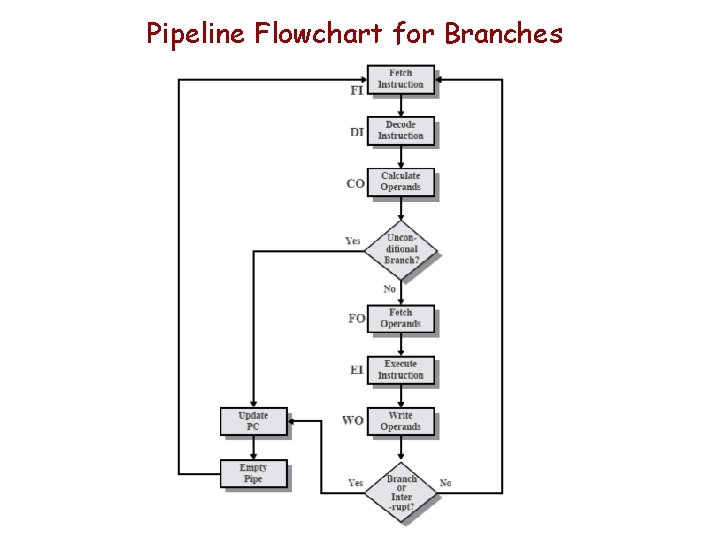

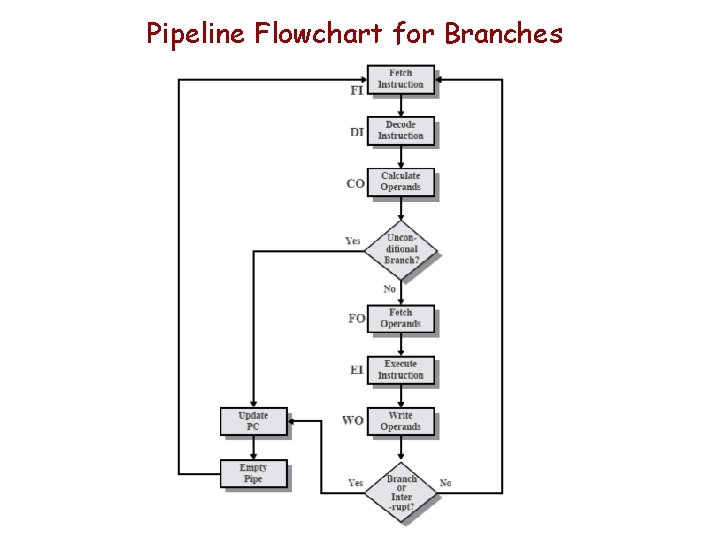

Pipeline Flowchart for Branches

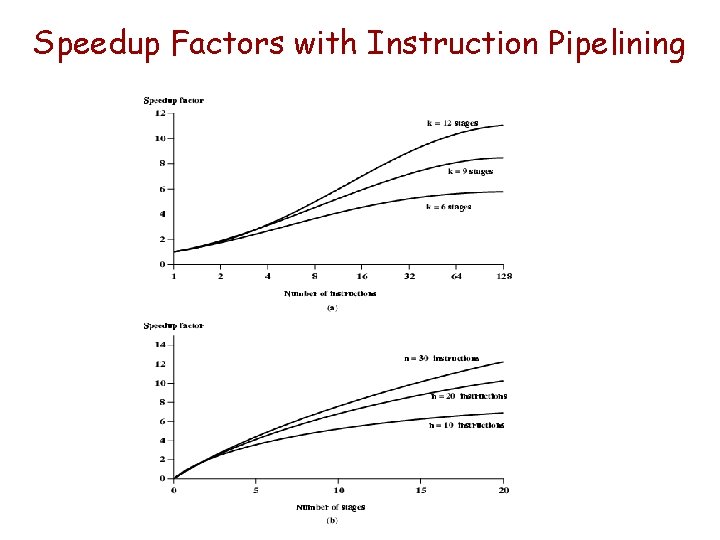

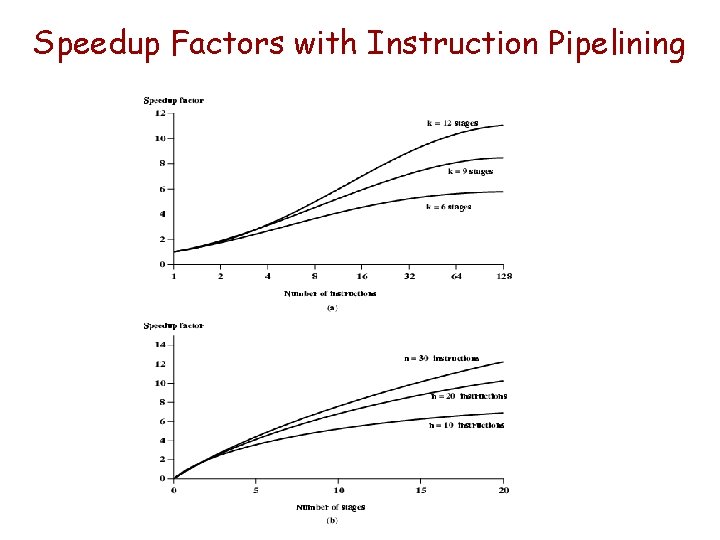

Speedup Factors with Instruction Pipelining
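Under the usual idealized assumptions (k stages of equal duration τ, n instructions, no hazards), a pipeline needs (k + n - 1)τ, whereas a non-pipelined processor needs nkτ, so the speedup is S_k = nk / (k + n - 1), which approaches k as n grows. A minimal sketch of that arithmetic (function names are hypothetical):

```python
def pipeline_time(k, n, tau=1.0):
    """Time for n instructions on an ideal k-stage pipeline with stage time tau."""
    return (k + n - 1) * tau


def speedup(k, n):
    """Ideal speedup of a k-stage pipeline over a non-pipelined processor."""
    return (n * k) / (k + n - 1)


if __name__ == "__main__":
    for k in (6, 12):
        row = [f"{speedup(k, n):5.2f}" for n in (1, 10, 100, 1000)]
        print(f"k={k:>2}:", " ".join(row))
```

The speedup is 1 for a single instruction and only nears k for long instruction streams, which is why hazards that break the stream matter so much.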

Pipeline Hazards

Types of pipeline hazards:

- Structural (or resource)
- Data
- Control

Structural Hazards

Structural hazards occur when instructions in the pipeline need the same resource at the same time, for example:

- Memory
- CPU resources (such as the ALU)
- Etc.

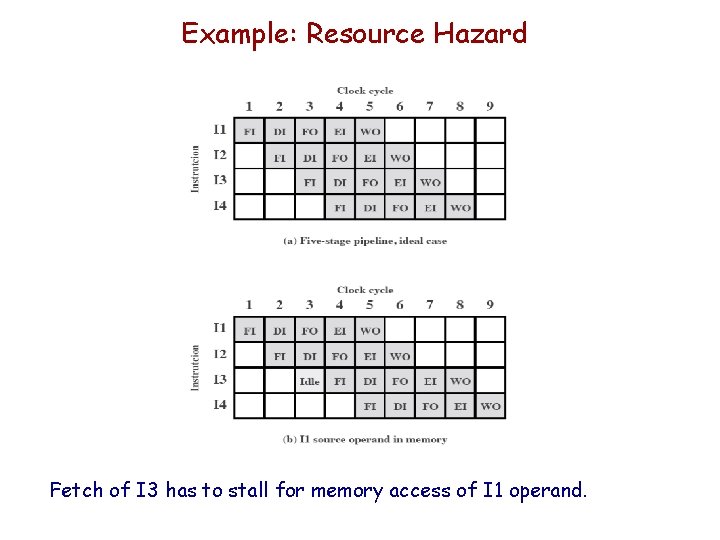

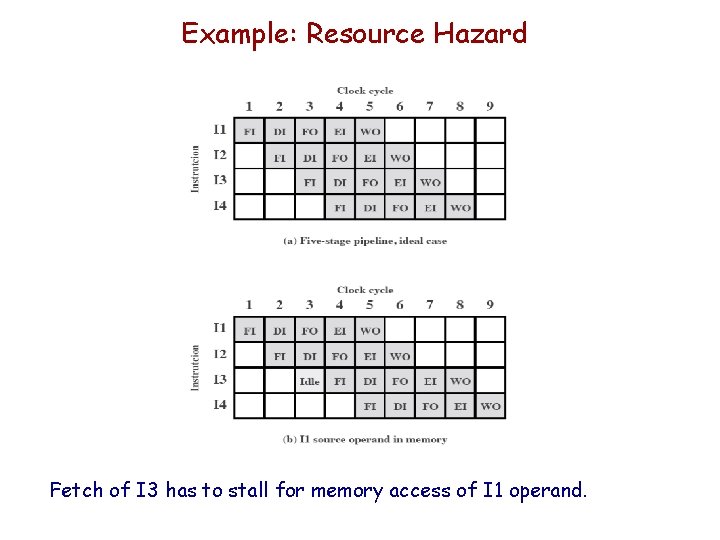

Example: Resource Hazard

The fetch of I3 has to stall while memory is busy fetching the operand of I1.
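The resource hazard above can be sketched as a scheduler for a single memory port. Assumptions (ours): a simplified five-stage pipeline (FI, DI, FO, EI, WO), one stage per time unit, FI always uses memory, FO uses memory only for a memory operand, and older instructions claim the port first. `fetch_schedule` is a hypothetical helper.

```python
STAGES = ["FI", "DI", "FO", "EI", "WO"]  # assumed simplified five-stage pipeline
FO_OFFSET = STAGES.index("FO")


def fetch_schedule(mem_operand):
    """Return the FI time unit of each instruction with one memory port.

    mem_operand[i] is True when instruction i reads its operand from memory
    during FO. Instruction fetch (FI) always uses memory. Older instructions
    claim the port first, so a younger fetch stalls until the port is free.
    """
    busy = set()  # time units in which the single memory port is in use
    times = []
    earliest = 1
    for uses_memory in mem_operand:
        t = earliest
        while t in busy:  # structural hazard: port taken by an older FO
            t += 1
        busy.add(t)
        if uses_memory:
            busy.add(t + FO_OFFSET)
        times.append(t)
        earliest = t + 1
    return times


if __name__ == "__main__":
    # I1 has a memory operand; I2 to I4 use registers only.
    print(fetch_schedule([True, False, False, False]))  # [1, 2, 4, 5]
```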

Data Hazards

Data hazards occur when there is a conflict in the access of an operand location:

- a memory location, or
- a register

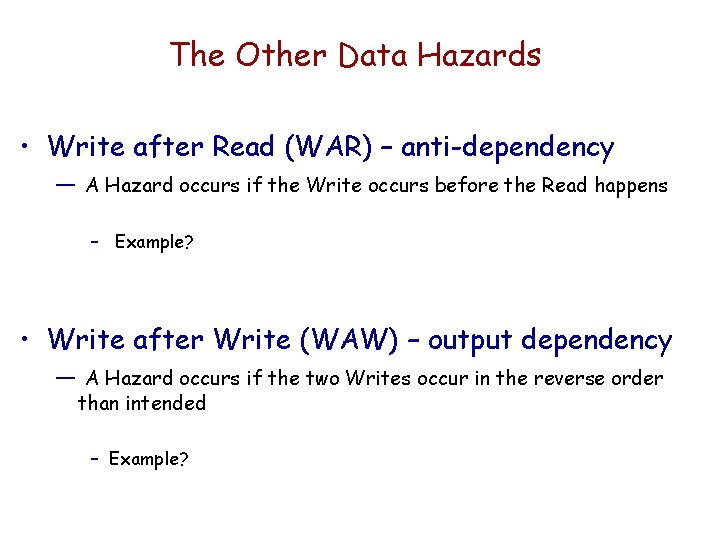

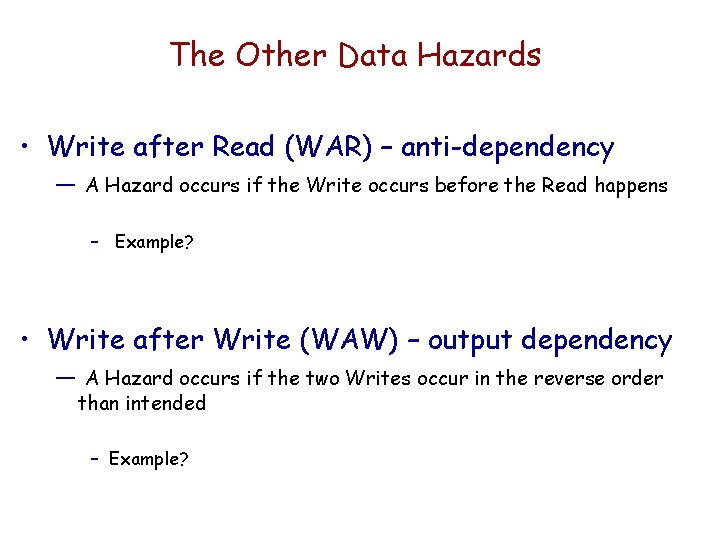

Types of Data Hazards

- Read after Write (RAW), or true dependency
  - A hazard occurs if the read happens before the write is complete
- Write after Read (WAR), or anti-dependency
  - A hazard occurs if the write happens before the read
- Write after Write (WAW), or output dependency
  - A hazard occurs if the two writes happen in the reverse of the intended order
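The three dependency types can be detected by comparing register read and write sets. This is a sketch under stated assumptions: each instruction is modelled as a hypothetical `(reads, writes)` pair of register names, flags written by x86 ADD/SUB are ignored, and a dependency only becomes a hazard if the pipeline lets the accesses happen out of order.

```python
def dependencies(first, second):
    """Classify dependencies between two instructions in program order.

    Each instruction is a (reads, writes) pair of register-name sets.
    """
    reads1, writes1 = first
    reads2, writes2 = second
    found = []
    if writes1 & reads2:
        found.append("RAW")  # true dependency
    if reads1 & writes2:
        found.append("WAR")  # anti-dependency
    if writes1 & writes2:
        found.append("WAW")  # output dependency
    return found


if __name__ == "__main__":
    add = ({"EAX", "EBX"}, {"EAX"})  # ADD EAX, EBX  (EAX <- EAX + EBX)
    sub = ({"ECX", "EAX"}, {"ECX"})  # SUB ECX, EAX  (ECX <- ECX - EAX)
    mov = ({"EDX"}, {"EBX"})         # MOV EBX, EDX  (EBX <- EDX)
    print(dependencies(add, sub))  # ['RAW']
    print(dependencies(add, mov))  # ['WAR']
    print(dependencies(add, add))  # ['RAW', 'WAR', 'WAW']
```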

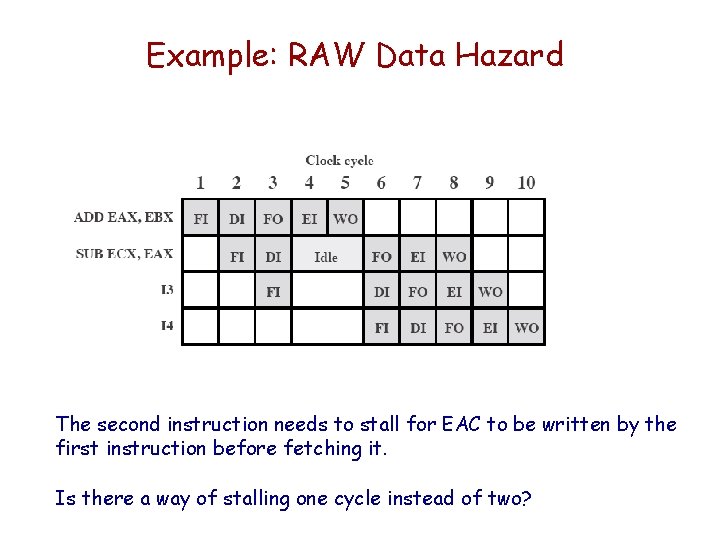

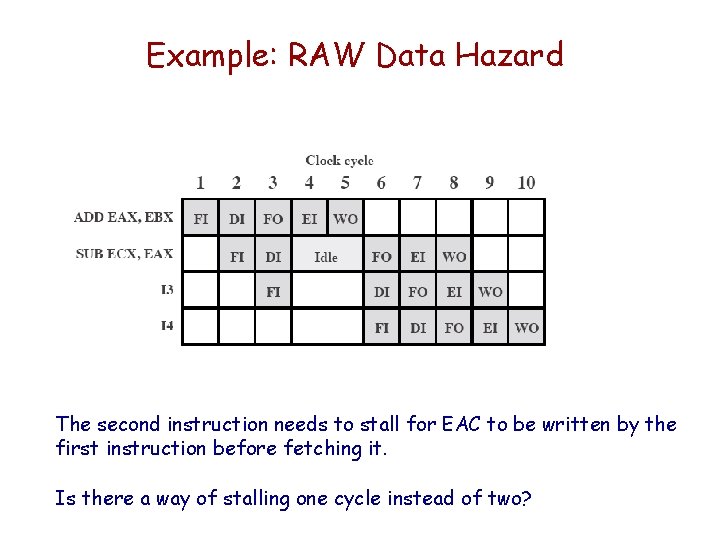

Example: RAW Data Hazard

The second instruction must stall until EAX has been written by the first instruction before it can fetch that operand. Is there a way of stalling one cycle instead of two?
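The stall count in this example can be reproduced with a sketch, assuming a five-stage pipeline (FI, DI, FO, EI, WO) with one stage per clock, where the producer writes the register in WO and the consumer reads it in FO. The `same_cycle_read` flag is a hypothetical hardware assumption: a register file written in the first half of a clock and read in the second half.

```python
STAGES = ["FI", "DI", "FO", "EI", "WO"]  # assumed five-stage pipeline


def raw_stall_cycles(distance=1, same_cycle_read=False):
    """Stall cycles for a consumer that reads, in FO, a register the producer writes in WO.

    distance is how many instructions after the producer the consumer is
    fetched (1 = the very next instruction). With same_cycle_read=True the
    register file is assumed to allow a read in the same clock as the write.
    """
    write_cycle = 1 + STAGES.index("WO")            # producer fetched in cycle 1
    read_cycle = 1 + distance + STAGES.index("FO")  # consumer's unstalled FO cycle
    earliest_read = write_cycle if same_cycle_read else write_cycle + 1
    return max(0, earliest_read - read_cycle)


if __name__ == "__main__":
    print(raw_stall_cycles())                      # 2
    print(raw_stall_cycles(same_cycle_read=True))  # 1
    print(raw_stall_cycles(distance=3))            # 0
```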

The Other Data Hazards

- Write after Read (WAR), or anti-dependency
  - A hazard occurs if the write happens before the read. Example?
- Write after Write (WAW), or output dependency
  - A hazard occurs if the two writes happen in the reverse of the intended order. Example?

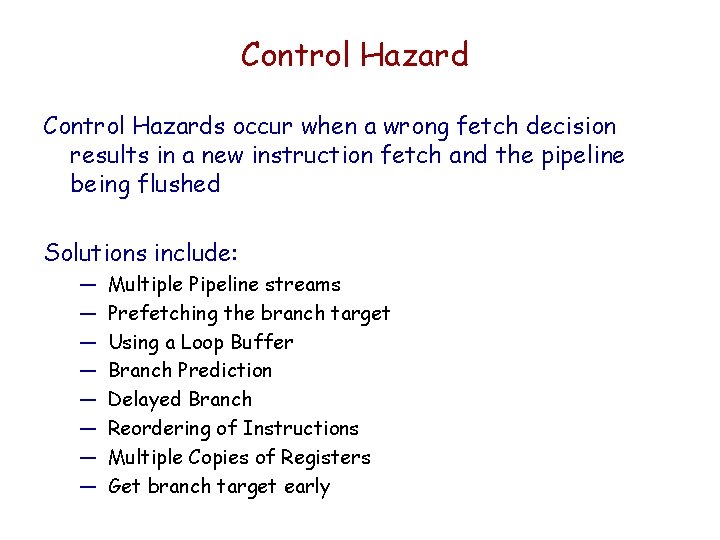

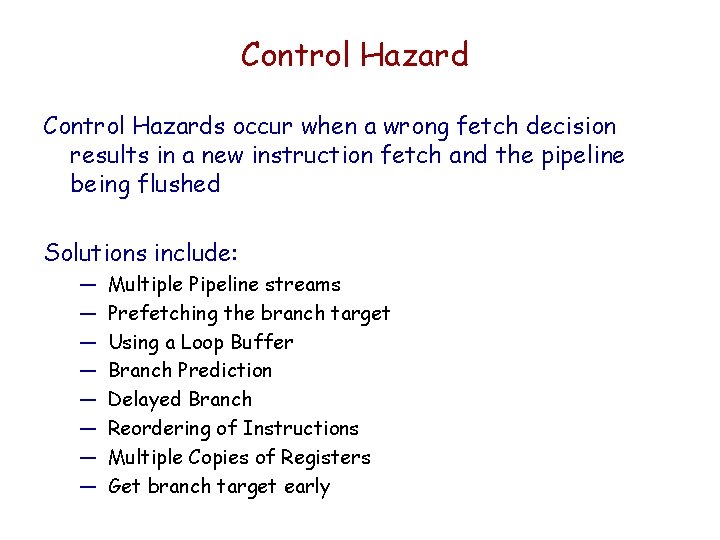

Control Hazards

Control hazards occur when a wrong fetch decision (typically at a branch) means that the instructions already in the pipeline must be discarded, flushing the pipeline, and a new instruction fetched. Solutions include:

- Multiple pipeline streams
- Prefetching the branch target
- Using a loop buffer
- Branch prediction
- Delayed branch
- Reordering of instructions
- Multiple copies of registers
- Getting the branch target early

Multiple Streams

- Have two pipelines
- Prefetch each path of a branch into a separate pipeline
- Use the pipeline for the path actually taken

Challenges:

- Leads to bus and register contention
- Multiple branches lead to further pipelines being needed

Prefetch Branch Target

- The target of the branch is prefetched in addition to the instructions following the branch
- Keep the target until the branch is executed

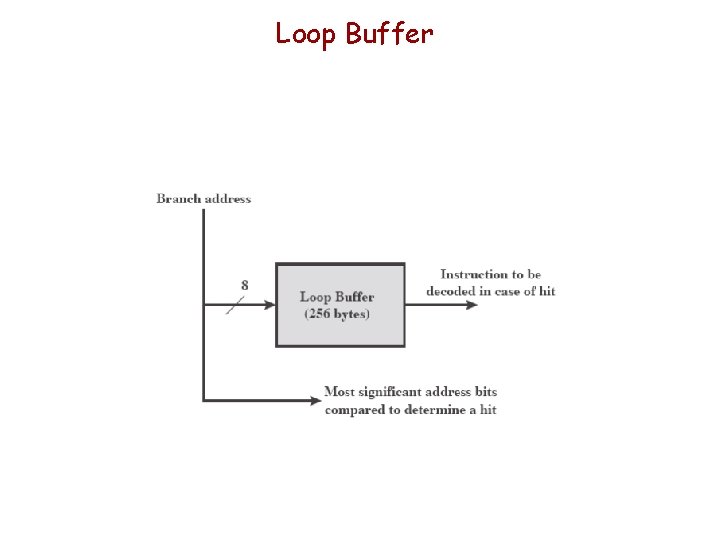

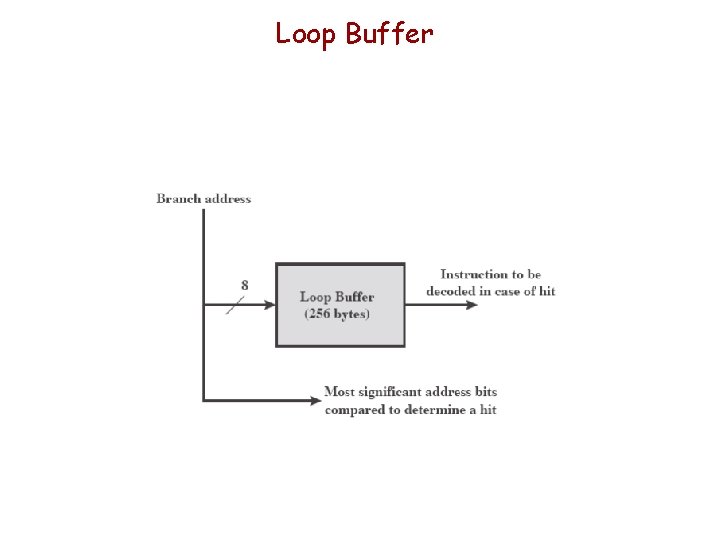

Using a Loop Buffer

Keep a small, fast memory that holds the n most recently fetched instructions, perhaps already decoded. This buffer is likely to contain loops that are executed repeatedly, so their instructions need not be fetched from memory again.

Loop Buffer
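The benefit of a loop buffer depends on whether the loop fits in it. The sketch below is a hypothetical model, not a description of any specific processor: the buffer is a FIFO of recently fetched addresses, a fetch whose address is in the buffer is a hit, and the loop body is a made-up run of consecutive addresses.

```python
from collections import deque


class LoopBuffer:
    """FIFO of the most recently fetched instruction addresses (hypothetical model)."""

    def __init__(self, size):
        self.addresses = deque(maxlen=size)  # oldest entry drops out when full
        self.hits = 0
        self.misses = 0

    def fetch(self, address):
        if address in self.addresses:
            self.hits += 1  # served from the buffer, no memory access
        else:
            self.misses += 1  # fetch from memory and keep a copy
            self.addresses.append(address)


def run_loop(buffer_size, body_length, iterations, start=100):
    buf = LoopBuffer(buffer_size)
    for _ in range(iterations):
        for address in range(start, start + body_length):
            buf.fetch(address)
    return buf.hits, buf.misses


if __name__ == "__main__":
    print(run_loop(16, 8, 10))  # (72, 8): loop fits, only the first pass misses
    print(run_loop(4, 8, 10))   # (0, 80): loop too big, FIFO evicts before reuse
```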

Branch Prediction

- Predict branch never taken
- Predict branch always taken
- Predict by opcode
- Use a taken/not-taken switch
- Maintain a branch history table
- Get help from the compiler

Predict Branch Taken / Not Taken

- Predict never taken
  - Assume that the jump will not happen
  - Always fetch the next instruction
- Predict always taken
  - Assume that the jump will happen
  - Always fetch the target instruction

Which is better? Consider possible page faults.

Branch Prediction by Opcode / Switch

- Predict by opcode
  - Some instructions are more likely to result in a jump than others
  - Can achieve up to 75% success with this strategy
- Taken/not-taken switch
  - Based on previous history
  - Good for loops
  - Perhaps good to match programmer style
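The static strategies and a one-bit taken/not-taken switch (predict whatever the branch did last time) can be compared on an outcome trace. The trace is made-up data for illustration: a hypothetical loop-closing branch that is taken 9 times and then falls through, with the loop executed 3 times. Class names are hypothetical.

```python
class NeverTaken:
    def predict(self):
        return False

    def update(self, taken):
        pass


class AlwaysTaken:
    def predict(self):
        return True

    def update(self, taken):
        pass


class LastOutcome:
    """One-bit taken/not-taken switch: predict what the branch did last time."""

    def __init__(self, initial=True):
        self.last = initial

    def predict(self):
        return self.last

    def update(self, taken):
        self.last = taken


def count_correct(predictor, outcomes):
    correct = 0
    for taken in outcomes:
        if predictor.predict() == taken:
            correct += 1
        predictor.update(taken)
    return correct


if __name__ == "__main__":
    trace = ([True] * 9 + [False]) * 3  # hypothetical loop-closing branch
    for p in (NeverTaken(), AlwaysTaken(), LastOutcome()):
        print(type(p).__name__, count_correct(p, trace), "/", len(trace))
    # prints 3, 27 and 25 correct out of 30
```

The one-bit switch mispredicts at each loop exit and again on each re-entry after the first, because the exit outcome flips its single bit.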

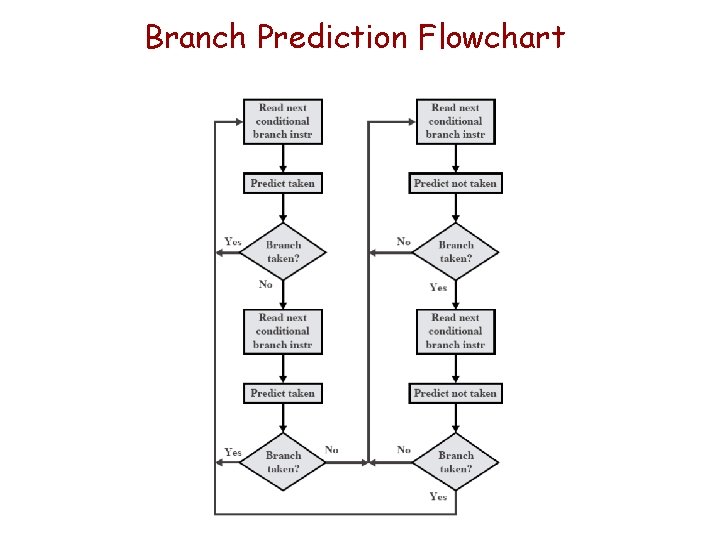

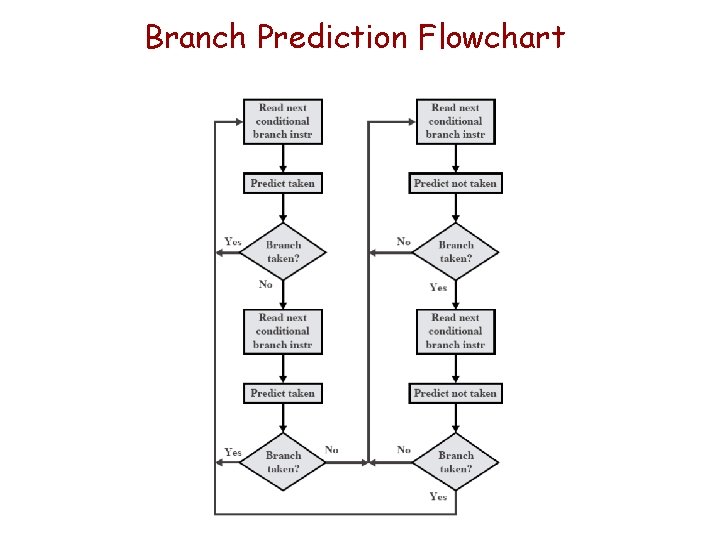

Branch Prediction Flowchart

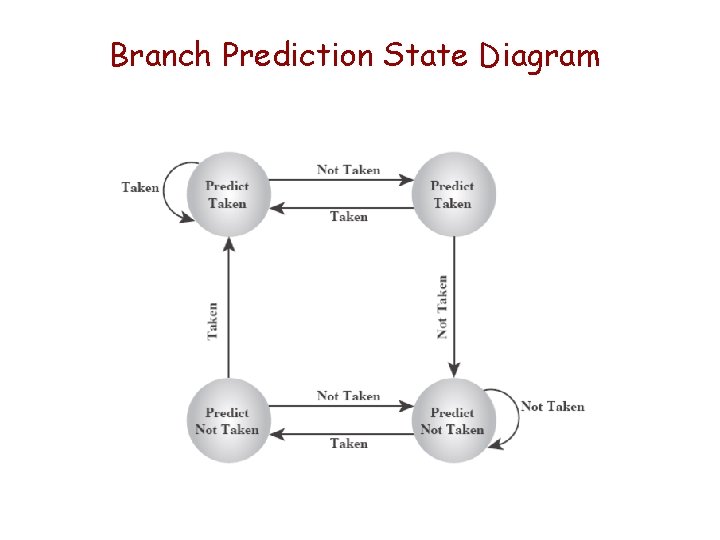

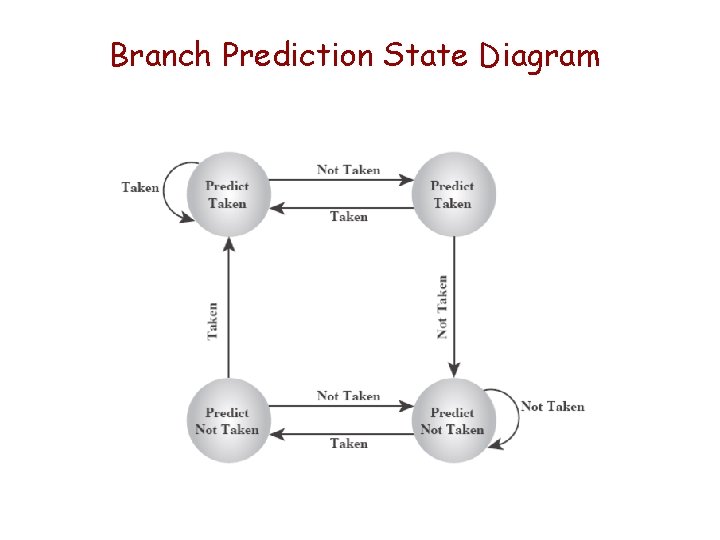

Branch Prediction State Diagram
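A state diagram with two bits of history keeps a single unusual outcome from flipping the prediction immediately. A common realization is the two-bit saturating counter sketched below; whether its transitions match the figure exactly is an assumption, since textbook diagrams differ in detail. The class name and the hypothetical loop trace are ours.

```python
class TwoBitPredictor:
    """Two-bit saturating counter: states 0-1 predict not taken, 2-3 predict taken."""

    def __init__(self, state=3):
        self.state = state  # start in "strongly taken" (an arbitrary choice)

    def predict(self):
        return self.state >= 2

    def update(self, taken):
        if taken:
            self.state = min(self.state + 1, 3)
        else:
            self.state = max(self.state - 1, 0)


def count_correct(predictor, outcomes):
    correct = 0
    for taken in outcomes:
        correct += int(predictor.predict() == taken)
        predictor.update(taken)
    return correct


if __name__ == "__main__":
    trace = ([True] * 9 + [False]) * 3  # hypothetical loop-closing branch
    print(count_correct(TwoBitPredictor(), trace), "/", len(trace))  # 27 / 30
```

A single not-taken outcome at loop exit only moves the counter from 3 to 2, so the prediction is still "taken" when the loop is entered again; two consecutive wrong predictions are needed to change it.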

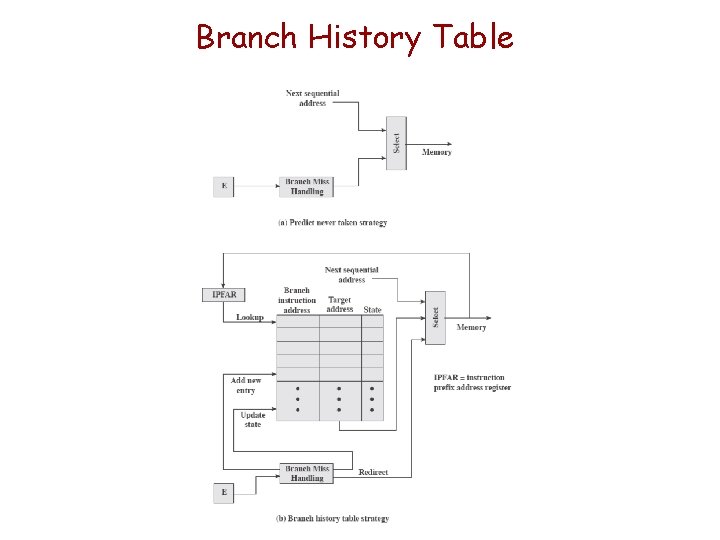

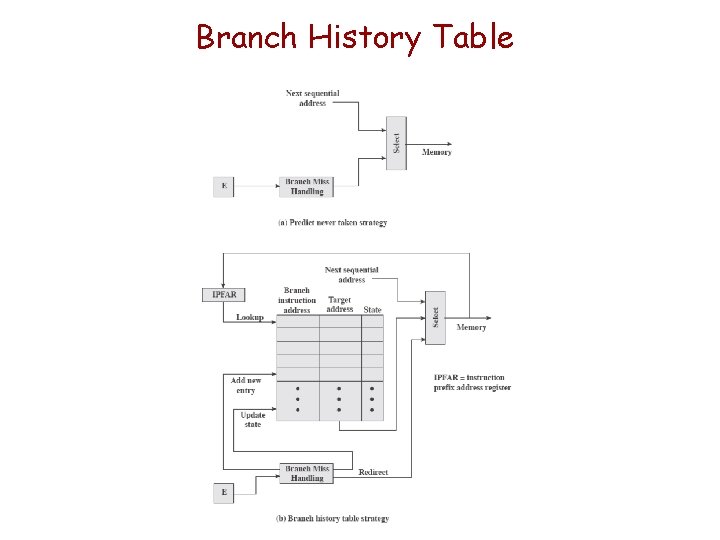

Maintain Branch Table

- Perhaps maintain a small cache (table) in which each entry holds three fields:
  - Address of the branch
  - History of branching
  - Target of the branch

Branch History Table
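A branch history table with the three fields above can be sketched as a lookup keyed by branch address. Assumptions (ours): the history field is a two-bit counter, instructions are one address unit long, and the table is an unbounded dict, whereas a real table is a small cache with limited entries. The class and method names are hypothetical.

```python
class BranchHistoryTable:
    """Hypothetical branch history table: branch address -> [history, target].

    history is a two-bit saturating counter (>= 2 means predict taken).
    """

    def __init__(self):
        self.entries = {}

    def next_fetch(self, pc):
        """Address to fetch after the instruction at pc."""
        entry = self.entries.get(pc)
        if entry is not None and entry[0] >= 2:
            return entry[1]  # predicted taken: fetch the stored target
        return pc + 1        # no entry or predicted not taken: fall through

    def update(self, pc, taken, target):
        """Record the resolved outcome of the branch at pc."""
        history, _ = self.entries.get(pc, (1, target))  # new entries start weakly not taken
        history = min(history + 1, 3) if taken else max(history - 1, 0)
        self.entries[pc] = [history, target]


if __name__ == "__main__":
    bht = BranchHistoryTable()
    print(bht.next_fetch(40))  # 41: unknown branch, fall through
    bht.update(40, taken=True, target=10)
    print(bht.next_fetch(40))  # 10: now predicted taken
```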

Delayed Branch

With a delayed branch, the branch is moved ahead of “independent instructions” that preceded it (instructions whose results the branch does not depend on). The instructions that now follow the branch can then be executed while the branch target is being determined. What would it take to actually do this?
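One simple version of the reordering a compiler might perform is sketched below, under stated assumptions: each instruction is a hypothetical `(text, reads, writes)` tuple, the last entry is the branch, the hardware always executes the one instruction after the branch (the delay slot), and only the instruction immediately before the branch is considered for moving. The mnemonics are illustrative, not a real instruction set.

```python
NOP = ("NOP", frozenset(), frozenset())


def fill_delay_slot(block):
    """Move the instruction just before a branch into the branch delay slot.

    The move is only safe if the branch does not depend on that
    instruction and vice versa; otherwise a NOP fills the slot.
    """
    *body, branch = block
    _, b_reads, b_writes = branch
    if body:
        _, reads, writes = body[-1]
        independent = not (writes & b_reads or reads & b_writes or writes & b_writes)
        if independent:
            return body[:-1] + [branch, body[-1]]
    return body + [branch, NOP]


if __name__ == "__main__":
    program = [
        ("LOAD X, rA", frozenset(), frozenset({"rA"})),
        ("ADD 1, rA", frozenset({"rA"}), frozenset({"rA"})),
        ("JUMP 105", frozenset(), frozenset()),
    ]
    for text, _, _ in fill_delay_slot(program):
        print(text)  # LOAD X, rA / JUMP 105 / ADD 1, rA
```

A conditional branch that tests the register written just before it cannot take that instruction into its delay slot, so this sketch falls back to a NOP; a real compiler could search further back for an independent instruction.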

Instruction Reordering

Instruction reordering rearranges instructions judiciously, without changing the program’s results, so that data hazards can be eliminated. How can this be implemented?
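The effect of reordering can be measured with a small timing model. Assumptions (ours): an in-order five-stage pipeline (FI, DI, FO, EI, WO), one stage per time unit, registers read in FO and written in WO, and a consumer held back until its FO lands after the producer’s WO. The instruction tuples and `completion_time` are hypothetical; the reordered version only moves independent loads.

```python
def completion_time(program):
    """Time unit in which the last instruction finishes WO.

    An instruction reads registers in FO (its FI + 2), and a result
    written in WO (producer FI + 4) is readable from the next unit.
    """
    ready = {}  # register -> first time unit its new value can be read
    fi = 0
    for reads, writes in program:
        fi += 1
        for reg in reads:
            if reg in ready:
                fi = max(fi, ready[reg] - 2)  # stall so that FO >= ready
        for reg in writes:
            ready[reg] = fi + 5
    return fi + 4


if __name__ == "__main__":
    load_r1 = (set(), {"r1"})
    add_r2 = ({"r1"}, {"r2"})  # needs r1 from the load
    load_r3 = (set(), {"r3"})
    load_r4 = (set(), {"r4"})
    print(completion_time([load_r1, add_r2, load_r3, load_r4]))  # 10 (2 stall cycles)
    print(completion_time([load_r1, load_r3, load_r4, add_r2]))  # 8 (no stalls)
```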

Multiple Copies of Registers

Having multiple copies of the registers, perhaps as many as one set for each stage, can eliminate many data hazards. How would you implement this?
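One common way to realize multiple register copies is register renaming, which is a swapped-in technique here rather than the slide’s per-stage scheme: every write gets a fresh copy of its register, and later reads refer to the newest copy. This removes WAR and WAW dependencies while keeping true (RAW) ones. The instruction sequence, the `(dest, sources)` form, and the `R3.1`-style copy names are hypothetical; constant operands are omitted.

```python
def rename(program):
    """Give each register write a fresh copy; reads use the latest copy.

    program is a list of (dest, sources) with architectural register names.
    """
    version = {}  # architectural register -> latest copy number

    def current(reg):
        return f"{reg}.{version.get(reg, 0)}"

    renamed = []
    for dest, srcs in program:
        new_srcs = [current(s) for s in srcs]  # read before allocating the new copy
        version[dest] = version.get(dest, 0) + 1
        renamed.append((current(dest), new_srcs))
    return renamed


if __name__ == "__main__":
    prog = [
        ("R3", ["R3", "R5"]),  # R3 <- R3 op R5
        ("R4", ["R3"]),        # R4 <- R3 + 1
        ("R3", ["R5"]),        # R3 <- R5 + 1  (WAR with I2, WAW with I1)
        ("R7", ["R3", "R4"]),  # R7 <- R3 op R4
    ]
    for dest, srcs in rename(prog):
        print(dest, "<-", srcs)
```

After renaming, the third instruction writes `R3.2` while the second still reads `R3.1`, so the two can no longer conflict.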

Get Branch Target Early

The branch target is often available before the end of the pipeline; e.g., a JMP has it available as soon as the source operand stage is completed. There is no need to wait until the write-back stage completes to begin fetching the next instruction. What would it take to implement this?
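How much resolving a branch earlier helps can be estimated with the ideal six-stage timing. Assumption (ours): a taken branch’s target is fetched in the time unit after the branch completes the stage where its target is known, so each taken branch wastes one slot per stage before that one. `run_time` is a hypothetical helper.

```python
STAGES = ["FI", "DI", "CO", "FO", "EI", "WO"]


def run_time(n_instructions, taken_branches, resolve_stage):
    """Time units for an ideal six-stage pipeline with taken branches.

    n_instructions counts only instructions that complete; each taken
    branch adds a penalty equal to the index of resolve_stage.
    """
    k = len(STAGES)
    penalty = STAGES.index(resolve_stage)
    return k + n_instructions - 1 + taken_branches * penalty


if __name__ == "__main__":
    # Five completed instructions with one taken branch, resolved at different stages.
    for stage in ("WO", "EI", "FO", "CO", "DI"):
        print(stage, run_time(5, 1, stage))
```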

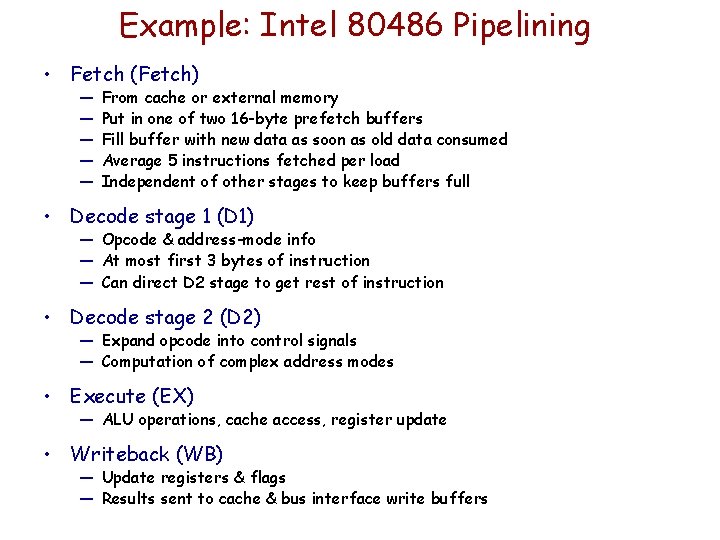

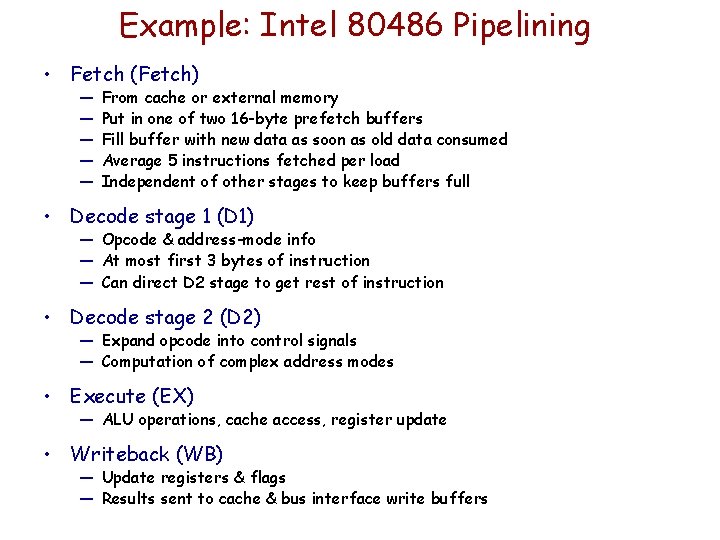

Example: Intel 80486 Pipelining

- Fetch
  - From cache or external memory
  - Put in one of two 16-byte prefetch buffers
  - Fill a buffer with new data as soon as the old data are consumed
  - Average of 5 instructions fetched per load
  - Independent of other stages, to keep the buffers full
- Decode stage 1 (D1)
  - Opcode and address-mode information
  - At most the first 3 bytes of the instruction
  - Can direct the D2 stage to get the rest of the instruction
- Decode stage 2 (D2)
  - Expand opcode into control signals
  - Computation of complex addressing modes
- Execute (EX)
  - ALU operations, cache access, register update
- Writeback (WB)
  - Update registers and flags
  - Results sent to cache and bus interface write buffers

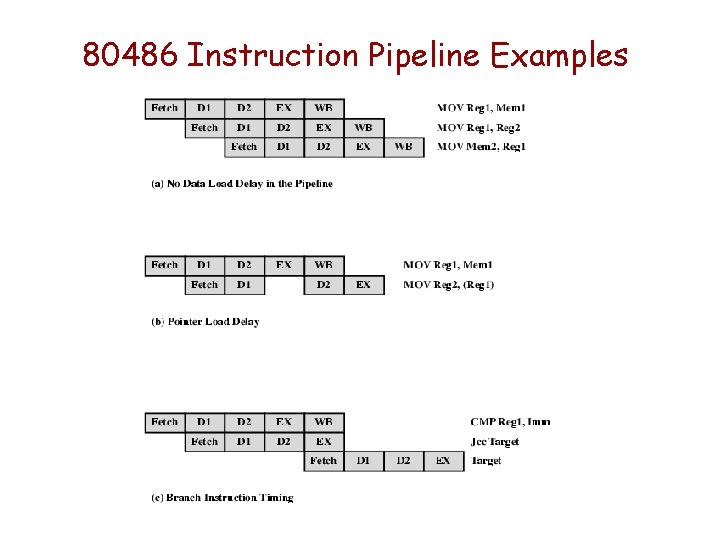

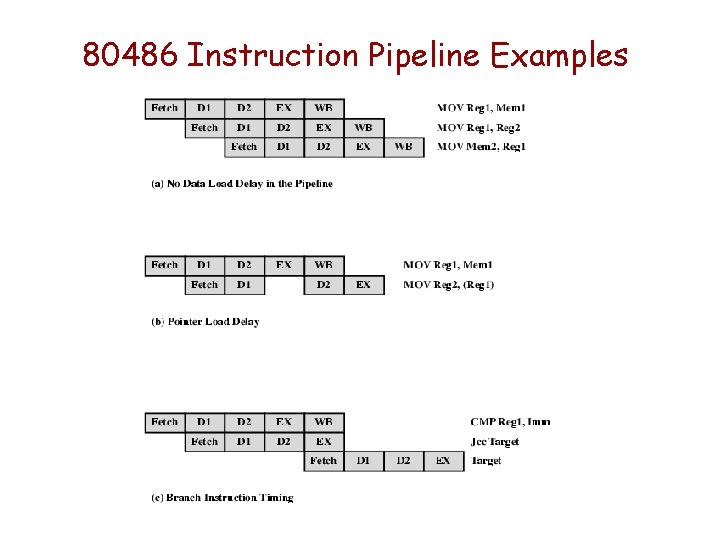

80486 Instruction Pipeline Examples