CHAPTER 12 ADVANCED INTELLIGENT SYSTEMS 2005 Prentice Hall

- Slides: 20

CHAPTER 12 ADVANCED INTELLIGENT SYSTEMS © 2005 Prentice Hall, Decision Support Systems and Intelligent Systems, 7th Edition, Turban, Aronson, and Liang

Learning Objectives
- Understand second-generation intelligent systems.
- Learn the basic concepts and applications of case-based systems.
- Understand the uses of artificial neural networks.
- Examine the advantages and disadvantages of artificial neural networks.
- Learn about genetic algorithms.
- Examine theories and applications of fuzzy knowledge.

Machine Learning
- Acquisition of knowledge through historical examples
- Different from the way that humans learn
- Implicitly induces expert knowledge from history
- Implications of system success and failure unclear
- Manipulation of symbols instead of numbers

Methods of Machine Learning
- Supervised learning
  - Induce knowledge from known outcomes
  - New cases used to modify existing theories
  - Statistical methods
  - Rule induction
  - Case-based reasoning and inference
  - Neural computing
  - Genetic algorithms leading to survival of the fittest
- Unsupervised learning
  - Determine knowledge from data with unknown outcomes
  - Clustering data into similar groups
  - Neural computing
  - Genetic algorithms leading to survival of the fittest

Case Reasoning
- Case base used for decision-making
- Effective when rule-based reasoning is not
- Case
  - Primary knowledge element
  - Ossified (inflexible)
  - Paradigmatic (classic)
  - Stories

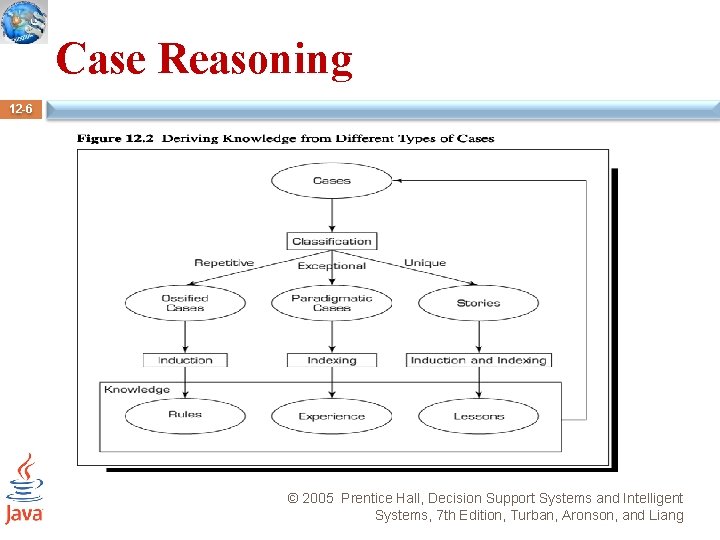

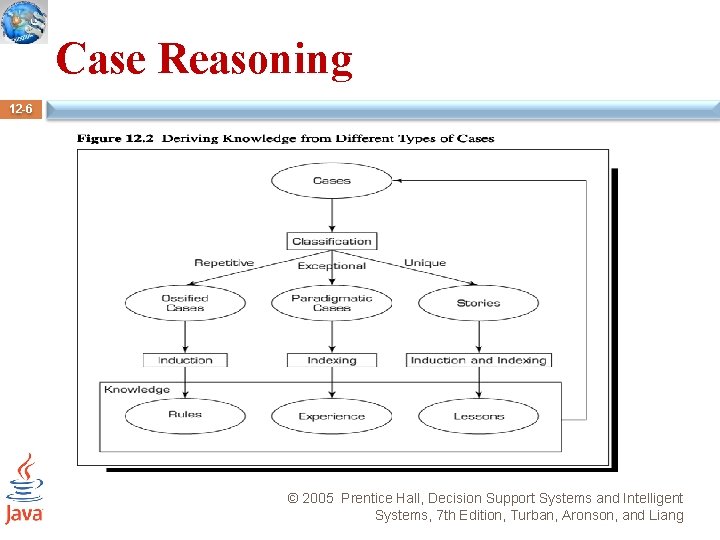

Case Reasoning

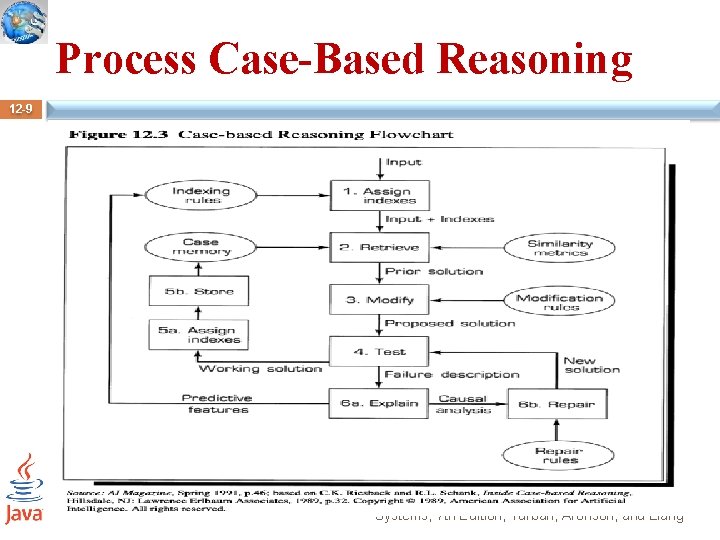

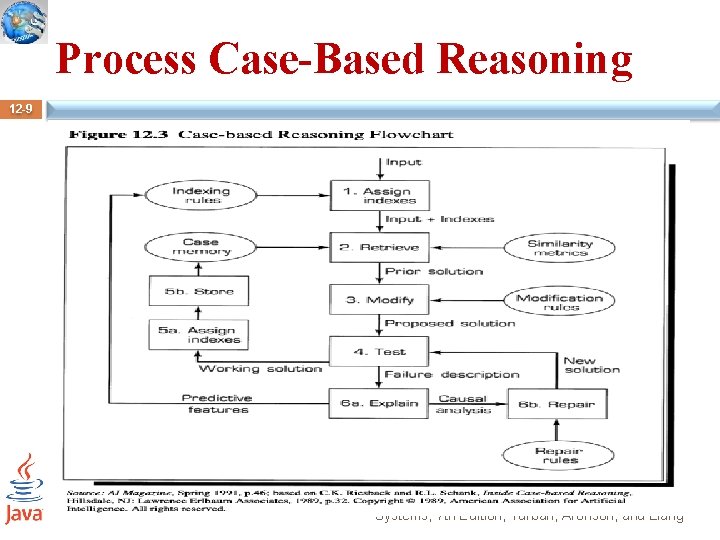

Process Case-Based Reasoning
- Features assigned as character indexes
  - Indexing rules identify input features
- Indexes used to retrieve similar cases from memory
  - Episodic case memories
  - Similarity metrics applied
- Old solution adjusted to fit new case
  - Modification rules
- Solution tested
  - If successful, assigned value and stored
  - If failure, explain, repair, test
- Alter plan to fit situation
  - Rules for permissible alterations
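The retrieve, adapt, test, and store cycle listed above can be sketched in a few lines of Python. This is a minimal illustration, not the textbook's implementation: the `Case` class, the inverse-distance `similarity` metric, the feature names, and the `adapt` and `test` callbacks are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Case:
    features: dict   # indexed input features (hypothetical numeric values)
    solution: str    # solution that worked for this case


def similarity(a, b):
    """Assumed metric: inverse Euclidean distance over shared feature keys."""
    keys = a.keys() & b.keys()
    if not keys:
        return 0.0
    distance = sum((a[k] - b[k]) ** 2 for k in keys) ** 0.5
    return 1.0 / (1.0 + distance)


def retrieve(case_base, query):
    """Return the stored case most similar to the query features."""
    return max(case_base, key=lambda case: similarity(case.features, query))


def solve(case_base, query, adapt, test):
    """Retrieve the closest case, adapt its solution, test it, store on success."""
    best = retrieve(case_base, query)
    proposed = adapt(best.solution, query)
    if test(proposed, query):
        case_base.append(Case(dict(query), proposed))
    return proposed


# Hypothetical help desk case base with two numeric features per case.
case_base = [
    Case({"cpu_load": 0.2, "memory_use": 0.3}, "no action needed"),
    Case({"cpu_load": 0.9, "memory_use": 0.8}, "restart service"),
]
query = {"cpu_load": 0.85, "memory_use": 0.9}
answer = solve(case_base, query,
               adapt=lambda solution, q: solution,  # reuse the old solution unchanged
               test=lambda solution, q: True)       # assume the solution worked
```

A real system would replace the two lambdas with modification rules and an actual test of the proposed solution; on failure it would explain and repair rather than simply skip the store step.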

For example, customers of Cognitive Systems Inc. use such an approach for help desk applications. One customer has a 50,000-query case library. New cases can be matched quickly against the 50,000 samples in the library, providing automatic answers to queries with more than 90 percent accuracy. For more on case-based reasoning,

Process Case-Based Reasoning

Case Reasoning Success Factors
- Specific business objectives
- Knowledge should directly support end users
- Appropriate design
- Updatable
- Measurable metrics
- User accessible
- Expandable across enterprise

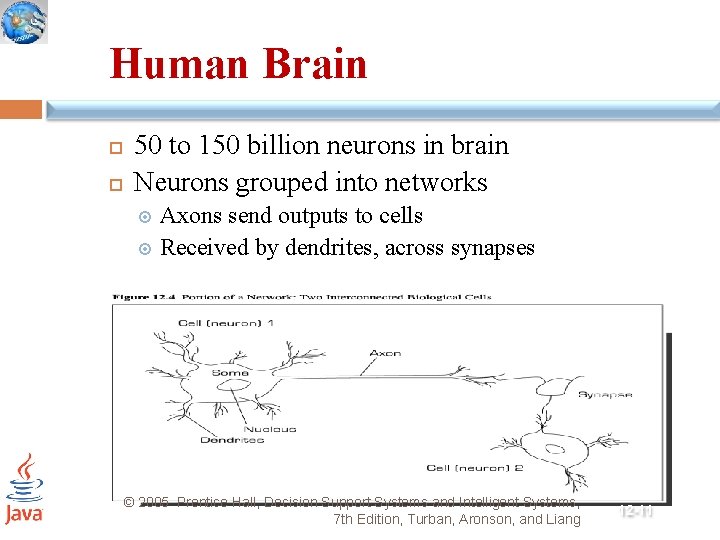

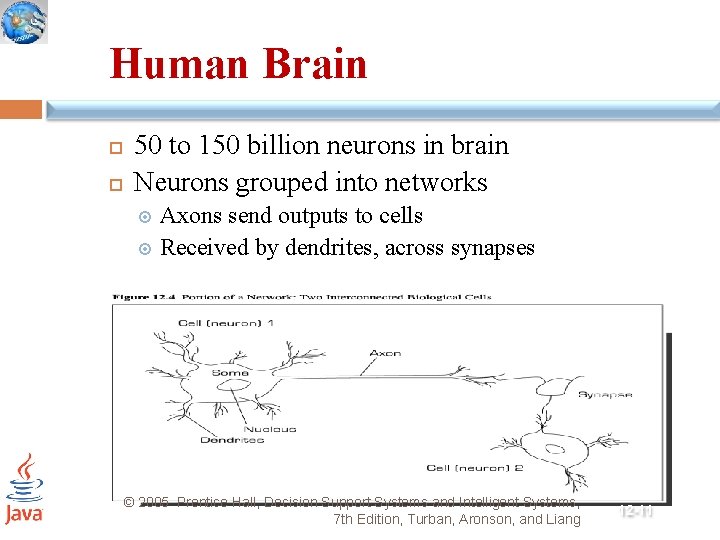

Human Brain
- 50 to 150 billion neurons in brain
- Neurons grouped into networks
- Axons send outputs to cells
- Received by dendrites, across synapses

Neural Networks
- Attempts to mimic brain functions
  - Analogy, not accurate model
- Artificial neurons connected in network
  - Organized by topologies
- Structure
  - Three or more layers
    - Input, intermediate (one or more hidden layers), output
  - Receives modifiable signals

Processing of Neural Networks
- Processing elements are neurons
  - Allows for parallel processing
- Each input is single attribute
- Connection weight
  - Adjustable mathematical value of input
- Summation function
  - Weighted sum of input elements
  - Internal stimulation
- Transfer function
  - Relation between internal activation and output
  - Sigmoid/transfer function
  - Threshold value
- Outputs are problem solution
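As a rough illustration of the summation and transfer functions above, a single processing element can be written as follows. The function names and the choice to subtract the threshold before applying the sigmoid are assumptions for this sketch; other formulations compare the output against the threshold instead.

```python
import math


def sigmoid(x):
    """Sigmoid transfer function: maps any activation into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def neuron_output(inputs, weights, threshold=0.0):
    """Weighted sum of the inputs (summation function), then the transfer function."""
    activation = sum(x * w for x, w in zip(inputs, weights))
    return sigmoid(activation - threshold)


# Two input attributes with hypothetical connection weights.
output = neuron_output([1.0, 0.5], [0.4, 0.6])  # activation = 0.4 + 0.3 = 0.7
```

With zero activation the sigmoid returns exactly 0.5, which is why a threshold or bias term is usually needed to shift the decision point.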

Architecture of Neural Networks
- Feedforward with backpropagation
  - Neurons link output in one layer to input in next
  - No feedback
- Associative memory system
  - Correlates input data with stored information
  - May have incomplete inputs
  - Detects similarities
- Recurrent structure
  - Activities go through network multiple times to produce output

Network Learning
- Learning algorithms
- Supervised
  - Connection weights derived from known cases
  - Pattern recognition combined with weighting changes
  - Back error propagation
    - Easy implementation
    - Multiple hidden layers
    - Adjust learning rate and momentum
    - Known patterns compared to output, which allows for weight adjustment
    - Established error tolerance
- Unsupervised
  - Only stimuli shown to network
  - Humans assign meanings and determine usefulness
  - Adaptive resonance theory
  - Kohonen self-organizing feature maps
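The supervised loop described above (compare output with known patterns, adjust weights using a learning rate and momentum, stop at an error tolerance) can be sketched with a single sigmoid neuron. This is a deliberate simplification: it uses the delta rule on one neuron rather than full back error propagation through hidden layers, and the parameter values, function names, and the logical OR training set are assumptions chosen for illustration.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def predict(weights, inputs):
    """Output of one sigmoid neuron; the last weight acts as a bias."""
    x = list(inputs) + [1.0]
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)))


def train(samples, learning_rate=0.5, momentum=0.5, tolerance=0.2, max_epochs=10000):
    """Delta-rule training with momentum until every error is within tolerance."""
    n_inputs = len(samples[0][0])
    weights = [0.0] * (n_inputs + 1)
    velocity = [0.0] * (n_inputs + 1)   # previous weight changes, for momentum
    for epoch in range(1, max_epochs + 1):
        worst_error = 0.0
        for inputs, target in samples:
            x = list(inputs) + [1.0]
            out = predict(weights, inputs)
            error = target - out                 # known pattern vs. actual output
            worst_error = max(worst_error, abs(error))
            delta = error * out * (1.0 - out)    # error times sigmoid derivative
            for i, xi in enumerate(x):
                velocity[i] = momentum * velocity[i] + learning_rate * delta * xi
                weights[i] += velocity[i]
        if worst_error <= tolerance:
            return weights, epoch
    return weights, max_epochs


# Known cases: the logical OR function.
or_samples = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
weights, epochs_used = train(or_samples)
```

A single neuron can only learn linearly separable patterns such as OR; problems like XOR need at least one hidden layer and the full backpropagation procedure.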

Development of Systems
- Collect data
  - The more, the better
- Separate data into training set to adjust weights
- Divide into test sets for network validation
  - Contains routine and problematic cases
- Select network topology
  - Determine input, output, and hidden nodes, and hidden layers
- Select learning algorithm and connection weights
- Iterative training until network achieves preset error level
- Black box testing to verify inputs produce appropriate outputs
- Implementation
  - Integration with other systems
  - User training
  - Monitoring and feedback
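The data-separation step can be illustrated with a small helper that shuffles the collected cases and holds back a fraction for validation. The function name, the 20 percent test fraction, and the fixed seed are assumptions for this sketch, not values from the slides.

```python
import random


def split_data(cases, test_fraction=0.2, seed=0):
    """Shuffle the cases and return (training_set, test_set)."""
    shuffled = list(cases)
    random.Random(seed).shuffle(shuffled)   # seeded so the split is repeatable
    n_test = max(1, int(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]


# Ten hypothetical case identifiers.
cases = list(range(10))
training_set, test_set = split_data(cases)
```

The training set is used to adjust the weights; the test set is held back so that black box testing uses cases the network has not seen.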

Genetic Algorithms
- Computer programs that apply processes of evolution
  - Self-organized
  - Adaptable
- Fitness function
  - Viability of candidate solutions
  - Measured by objective obtained
- Iterative process
  - Candidate solutions combine to produce generations
  - Reproduction, crossover, mutation

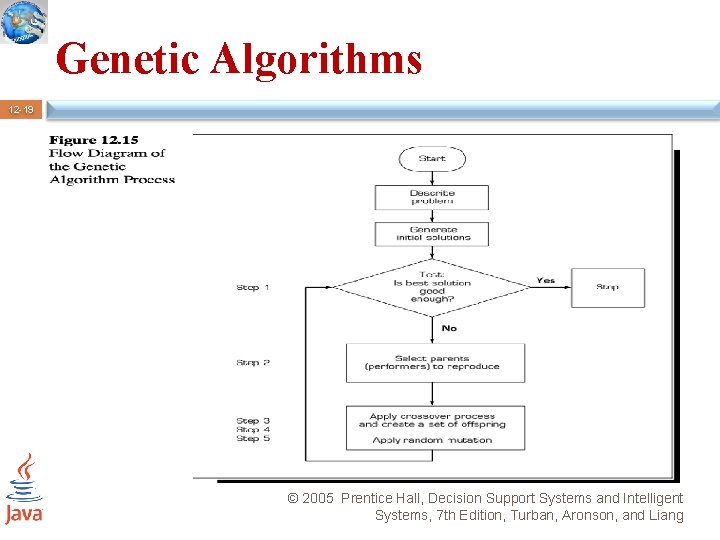

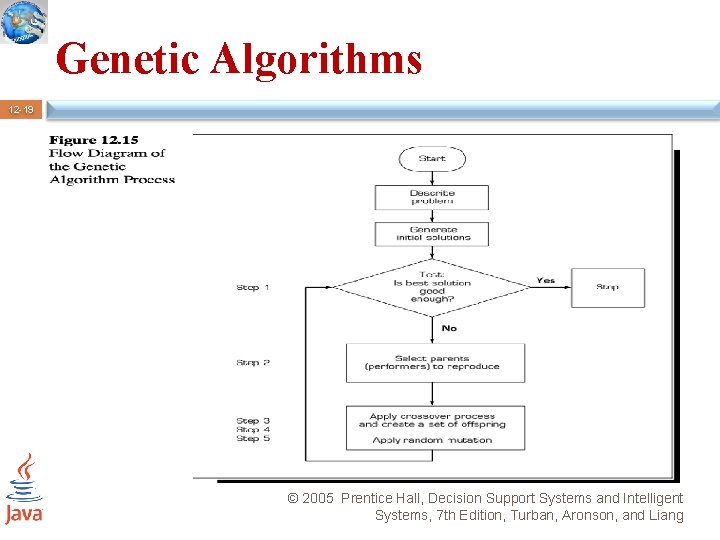

Genetic Algorithms
- Establish problem
- Parameters
  - Number of initial solutions, number of offspring (children), number of parents and offspring for each generation, mutation level, probability distribution of crossover point occurrence
- Generate initial set of solutions
- Compute fitness functions
- Total all fitness functions
- Compare each solution's fitness function to total
- Apply crossover
- Apply random mutation
- Repeat until good enough solution or no improvement
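The steps above can be sketched as a small genetic algorithm. Everything specific here is an assumption for illustration: the toy fitness function (count of 1 bits), the parameter values, single-point crossover with a uniformly random crossover point, and the reading of "compare each solution's fitness function to total" as fitness-proportional (roulette wheel) selection.

```python
import random


def fitness(bits):
    """Toy objective: the number of 1 bits in the candidate solution."""
    return sum(bits)


def select(population, scores, rng):
    """Pick a parent with probability equal to its share of total fitness."""
    if sum(scores) == 0:
        return rng.choice(population)
    return rng.choices(population, weights=scores, k=1)[0]


def evolve(n_bits=12, pop_size=20, generations=40, mutation_rate=0.02, seed=1):
    rng = random.Random(seed)
    # Generate the initial set of solutions.
    population = [[rng.randint(0, 1) for _ in range(n_bits)] for _ in range(pop_size)]
    best = max(population, key=fitness)
    for _ in range(generations):
        if fitness(best) == n_bits:          # good enough solution found
            break
        scores = [fitness(s) for s in population]
        children = []
        while len(children) < pop_size:
            mother = select(population, scores, rng)
            father = select(population, scores, rng)
            point = rng.randint(1, n_bits - 1)           # crossover point
            child = mother[:point] + father[point:]      # apply crossover
            child = [1 - b if rng.random() < mutation_rate else b
                     for b in child]                     # apply random mutation
            children.append(child)
        population = children
        best = max(population + [best], key=fitness)     # remember best so far
    return best


best_solution = evolve()
```

Keeping the best solution seen so far is a convenience added for this sketch; it guarantees the returned solution is never worse than the best member of the initial population.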

Genetic Algorithms

Fuzzy Logic
- Mathematical theory of fuzzy sets
  - Imprecise (vague) thinking
  - Describes human perception
- Continuous logic
  - Not 100% true or false, black or white
- Fuzzy neural networks
  - Fuzzification
    - Fuzzy logic applied to input and output used to create model
  - Defuzzification
    - Model converted back to original input, output scales
    - Output becomes input for another intelligent system
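Fuzzification and defuzzification can be illustrated with a small example. The temperature sets, the triangular membership functions, the fan-speed outputs, and the weighted-average defuzzification method are all assumptions chosen for this sketch; the slides do not prescribe a particular method.

```python
def triangular(x, left, peak, right):
    """Degree of membership (0 to 1) in a triangular fuzzy set."""
    if x <= left or x >= right:
        return 0.0
    if x <= peak:
        return (x - left) / (peak - left)
    return (right - x) / (right - peak)


def fuzzify(temperature):
    """Map a crisp temperature (degrees C) to membership in three fuzzy sets."""
    return {
        "cold": triangular(temperature, 0, 10, 20),
        "warm": triangular(temperature, 10, 20, 30),
        "hot": triangular(temperature, 20, 30, 40),
    }


# Hypothetical crisp output (fan speed in percent) associated with each set.
FAN_SPEED = {"cold": 0.0, "warm": 50.0, "hot": 100.0}


def defuzzify(memberships):
    """Weighted average of the output values, back on the original scale."""
    total = sum(memberships.values())
    if total == 0:
        return 0.0
    return sum(degree * FAN_SPEED[name] for name, degree in memberships.items()) / total


memberships = fuzzify(15)          # partly cold and partly warm
fan_speed = defuzzify(memberships)
```

A temperature of 15 degrees is neither fully cold nor fully warm, so it belongs to both sets with degree 0.5, and the defuzzified fan speed falls halfway between the two associated outputs.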