Chapter 11: Writing Parallel Programs (Principles of Parallel Programming)

- Slides: 16

Chapter 11: Writing Parallel Programs. From Principles of Parallel Programming, First Edition, by Calvin Lin and Lawrence Snyder. Copyright © 2009 Pearson Education, Inc. Publishing as Pearson Addison-Wesley.

How to start writing programs
- Try simple programs first
- Build upon them to include the desired features
- Access a good timer
- Verify improved performance
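
A minimal Python sketch of this workflow, under assumptions of our own: the task (summing a list), the helper names `sequential_sum`, `parallel_sum`, and `timed`, and the chunking scheme are all illustrative, not from the text. The simple sequential version comes first, the parallel version is built on top of it, and the two results are checked for agreement before any timing is trusted. `time.perf_counter` serves as the timer. In CPython the global interpreter lock usually keeps threads from speeding up a pure-Python CPU-bound loop, so this shows the workflow rather than a real speedup.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def sequential_sum(data):
    # Step 1: the simple version that is easy to get right.
    return sum(data)


def parallel_sum(data, workers=4):
    # Step 2: build on the simple version. Split the data into contiguous
    # chunks and let each worker run sequential_sum on one chunk.
    chunk = max(1, (len(data) + workers - 1) // workers)
    pieces = [data[i:i + chunk] for i in range(0, len(data), chunk)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(sequential_sum, pieces))
    return sum(partials)


def timed(fn, *args):
    # perf_counter is a monotonic, high-resolution clock for short intervals.
    start = time.perf_counter()
    result = fn(*args)
    return result, time.perf_counter() - start


if __name__ == "__main__":
    data = list(range(1_000_000))
    seq_result, seq_time = timed(sequential_sum, data)
    par_result, par_time = timed(parallel_sum, data)
    # Verify correctness before looking at performance.
    assert seq_result == par_result
    print(f"sequential {seq_time:.4f}s, parallel {par_time:.4f}s")
```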

Optimizing compilers
- Often remove code that does not contribute to a result
  - To keep the computation you are timing, you may have to store or print its result
- May streamline functions

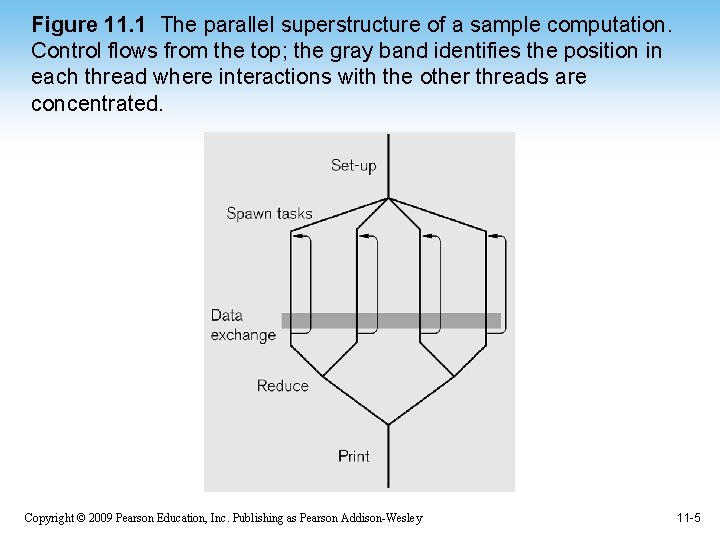

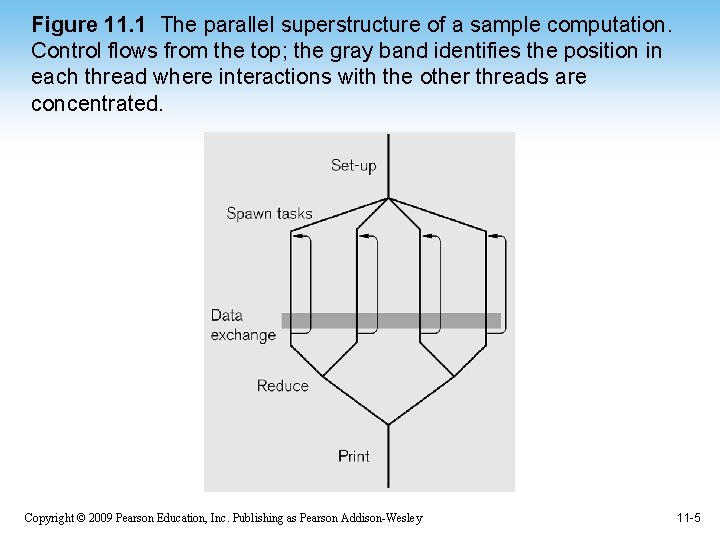

Program design
- Build the parallel structure first
- Then insert the functional components
- This macro view often exposes much of the available parallelism
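
One way to read this advice, sketched in Python under our own assumptions: `run_parallel` is a hypothetical skeleton that owns the parallel structure (creating threads, giving each thread its own result slot, joining, and combining), while `setup`, `work`, and `combine` are plug-in points for the functional components. The structure is first exercised with stubs, then a real component (a block-partitioned sum, also hypothetical) is inserted without touching the skeleton.

```python
import threading


def run_parallel(num_threads, setup, work, combine):
    """Parallel superstructure: set up shared data, run work() in each
    thread, wait for all threads, then combine their partial results."""
    shared = setup(num_threads)
    results = [None] * num_threads

    def thread_body(tid):
        # Each thread writes only its own slot, so no lock is needed.
        results[tid] = work(tid, num_threads, shared)

    threads = [threading.Thread(target=thread_body, args=(t,))
               for t in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return combine(results)


# Stage 1: check the structure with stub components that only report
# which thread ran.
stub = run_parallel(
    4,
    setup=lambda p: None,
    work=lambda tid, p, shared: tid,
    combine=lambda parts: parts,
)


# Stage 2: insert real functional components; the skeleton is unchanged.
def make_data(p):
    return list(range(1, 101))


def partial_sum(tid, p, data):
    # Block partition: thread tid sums its contiguous slice of the data.
    size = (len(data) + p - 1) // p
    return sum(data[tid * size:(tid + 1) * size])


total = run_parallel(4, make_data, partial_sum, sum)
print(stub, total)
```

Because the skeleton never changes between the two stages, a bug that appears in stage 2 can be attributed to the inserted components rather than to the thread structure.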

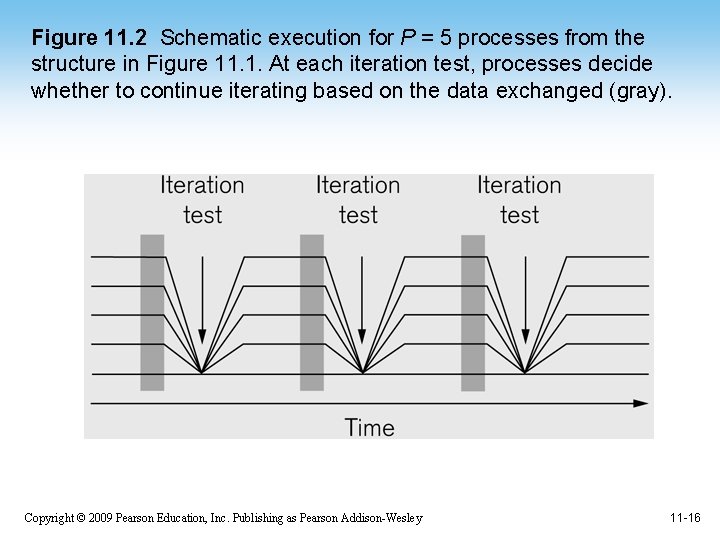

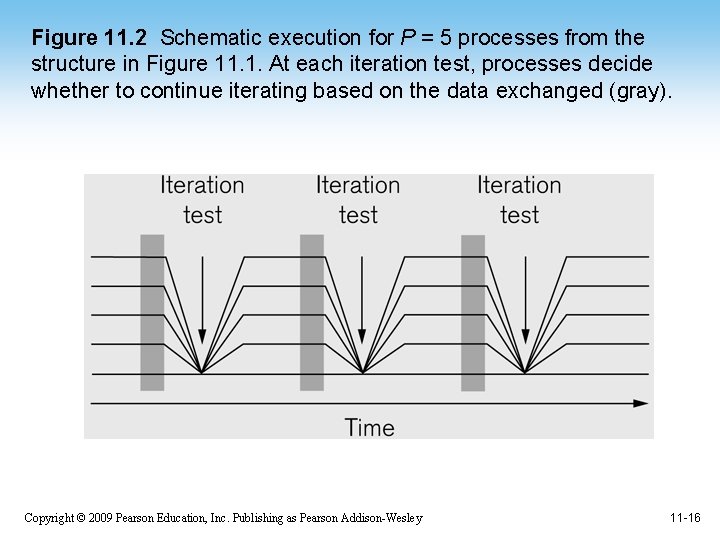

Figure 11.1 The parallel superstructure of a sample computation. Control flows from the top; the gray band identifies the position in each thread where interactions with the other threads are concentrated.

Write extra code
- To ensure correctness:
  - Write test hooks to control the processing order
  - Consider a wide variety of inputs
  - Use print routines to inspect values
  - Implement checkpointing for long-running programs
- Use enough data
  - If n data items are required for the sequential program, use tn items for t threads
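
A sketch of a processing-order test hook, assuming the parallel program combines per-thread partial results. The `order` parameter of `combine_partials` is a hypothetical hook: in production the order is whatever order the threads happen to finish in, but a test can force a specific order to reproduce it. `check_order_independence` (also hypothetical) tries every order, which is feasible only for a few threads. Floating-point addition is not associative, so an order-dependent answer is a real risk in parallel reductions.

```python
import itertools
import math


def combine_partials(partials, order=None):
    """Combine per-thread partial results.

    order is a test hook (hypothetical): None means the natural order;
    a test passes an explicit sequence of indices to force an order.
    """
    if order is None:
        order = range(len(partials))
    total = 0.0
    for i in order:
        total += partials[i]
    return total


def check_order_independence(partials, rel_tol=1e-9):
    # Compare every processing order against the natural order and
    # return the orders whose result differs.
    reference = combine_partials(partials)
    bad = []
    for perm in itertools.permutations(range(len(partials))):
        result = combine_partials(partials, perm)
        if not math.isclose(result, reference, rel_tol=rel_tol):
            bad.append(perm)
    return bad


# Small integer-valued partials combine identically in any order...
assert check_order_independence([1.0, 2.0, 3.0, 4.0]) == []
# ...but with mixed magnitudes, rounding makes some orders disagree.
print(check_order_independence([1e16, 1.0, -1e16, 1.0]))
```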

Major projects
- Seek algorithms of interest
- These are often expressed in the PRAM model, which is inadequate because it treats every memory access as unit cost
- Identify computational assumptions
- Create test cases
- Measure overall performance
- Revise the program to improve performance
- Report results

Benchmark programs
- https://www.nasa.gov/Resources/Software/swdescriptions.html
- Well written
- Compare your implementation against them

Process
- Review a program in a benchmark suite
- Locate papers reporting experiments with it
- Develop a similar program
- Do similar testing
- Determine why your results vary
- Report the differences

Solve a new problem in parallel
- Review the literature on a computation
- Parallelize some of the computation (the kernel)
- Analyze performance
- Look for improvements
- Report results

Efficiency considerations
- How does the parallel program perform compared with the sequential one?
- What to measure:
  - Initialization?
  - Cleanup?
  - One-time costs
- Amdahl's law
  - Sequential costs (such as initialization) reduce speedup
  - Limit I/O
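
Amdahl's law makes the effect of sequential costs concrete. In a common formulation (our notation, not the slide's), if a fraction s of the one-processor running time T_1 cannot be parallelized, then with P processors T_P >= s*T_1 + (1 - s)*T_1/P, so the speedup is at most 1 / (s + (1 - s)/P), which never exceeds 1/s. For example, with s = 0.1 and P = 8 the bound is 1/0.2125, about 4.7. The hypothetical helper below evaluates this bound.

```python
def amdahl_speedup(serial_fraction, processors):
    """Upper bound on speedup when serial_fraction of the one-processor
    running time cannot be parallelized (Amdahl's law):
        S(P) = 1 / (s + (1 - s) / P)
    """
    if not 0.0 <= serial_fraction <= 1.0:
        raise ValueError("serial_fraction must be in [0, 1]")
    if processors < 1:
        raise ValueError("processors must be >= 1")
    return 1.0 / (serial_fraction + (1.0 - serial_fraction) / processors)


# With 10% sequential work (e.g., initialization), the bound approaches
# but never reaches 10, however many processors are added.
for p in (1, 2, 8, 64, 1024):
    print(p, round(amdahl_speedup(0.10, p), 2))
```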

Fair comparisons
- Run on the same hardware
- Use a consistent language and compiler
- Consider optimization settings
- Consider the input data
- Consider the load on the machine (for repeatable results)
- Obtain many results to minimize variation
- Report the average or median
- Run the program beforehand to warm up the computer (cause pages to load)
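
A minimal Python benchmarking harness reflecting several of these points, under our own assumptions: the name `benchmark` and the defaults of one warm-up run and 11 timed runs are illustrative choices, not from the text. The warm-up runs are not timed, so one-time effects such as page loading fall outside the measurement, and both the mean and the median of the timed runs are reported.

```python
import statistics
import time


def benchmark(fn, *args, warmup=1, repeats=11):
    """Time fn(*args): run it `warmup` times untimed, then time `repeats`
    runs and summarize them."""
    if repeats < 1:
        raise ValueError("repeats must be >= 1")
    for _ in range(warmup):
        fn(*args)  # warm caches, load pages, trigger lazy initialization
    times = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn(*args)
        times.append(time.perf_counter() - start)
    return {
        "median": statistics.median(times),  # robust to occasional slow runs
        "mean": statistics.mean(times),
        "min": min(times),
        "max": max(times),
        "runs": repeats,
    }


if __name__ == "__main__":
    data = list(range(200_000))
    print(benchmark(sorted, data))
```

The median is less sensitive than the mean to a few runs disturbed by other load on the machine; a large gap between `min` and `max` is itself a sign that the measurements are not yet repeatable.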

Carefully consider speedup
- Compiler optimizations
- Cache sizes
- Clock speeds
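
Speedup is the sequential time divided by the parallel time, and efficiency is speedup divided by the number of processors P. The hypothetical helper below computes both and flags results worth a second look; the 50% efficiency threshold is an arbitrary choice of ours. A speedup greater than P often signals a cache effect (P processors together have more cache than one) or a sequential baseline compiled with weaker optimization settings. Times from machines with different clock speeds distort the ratio and should not be mixed.

```python
def speedup_report(t_sequential, t_parallel, processors):
    """Return (speedup, efficiency, notes) from measured times.

    t_sequential should come from a sequential program compiled with the
    same optimization settings and run on the same hardware as the
    parallel one; otherwise the ratio is misleading.
    """
    speedup = t_sequential / t_parallel
    efficiency = speedup / processors
    notes = []
    if speedup > processors:
        notes.append("superlinear: check cache effects or an "
                     "under-optimized sequential baseline")
    if efficiency < 0.5:  # arbitrary threshold (assumption)
        notes.append("low efficiency: look for sequential phases "
                     "or communication overhead")
    return speedup, efficiency, notes


print(speedup_report(10.0, 2.0, 4))
```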

Develop a deep understanding of program behavior
- Understand the program's individual phases
- How does each phase scale?
- What are the bottlenecks of each phase?
- How much parallelism is available in each phase?
- How many resources (e.g., memory) are used?
- What trade-offs can be made to improve performance?
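
Studying phases separately requires measuring them separately. The sketch below uses a hypothetical `PhaseTimer` class that accumulates wall-clock time per named phase through a context manager; the phase names and the workload are placeholders of ours.

```python
import time
from collections import defaultdict
from contextlib import contextmanager


class PhaseTimer:
    """Accumulates wall-clock time for each named phase."""

    def __init__(self):
        self.totals = defaultdict(float)

    @contextmanager
    def phase(self, name):
        start = time.perf_counter()
        try:
            yield
        finally:
            # Recorded even if the phase raises, so partial runs still report.
            self.totals[name] += time.perf_counter() - start

    def report(self):
        whole = sum(self.totals.values())
        # Largest phase first: the likeliest bottleneck.
        for name, t in sorted(self.totals.items(), key=lambda kv: -kv[1]):
            share = t / whole if whole else 0.0
            print(f"{name:>12}: {t:.4f}s ({share:.0%})")


timer = PhaseTimer()
with timer.phase("initialize"):
    data = [i * i for i in range(100_000)]
with timer.phase("compute"):
    total = sum(data)
with timer.phase("cleanup"):
    del data
timer.report()
```

Running the same program at several thread counts and comparing the per-phase totals shows which phases scale and which remain sequential bottlenecks.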

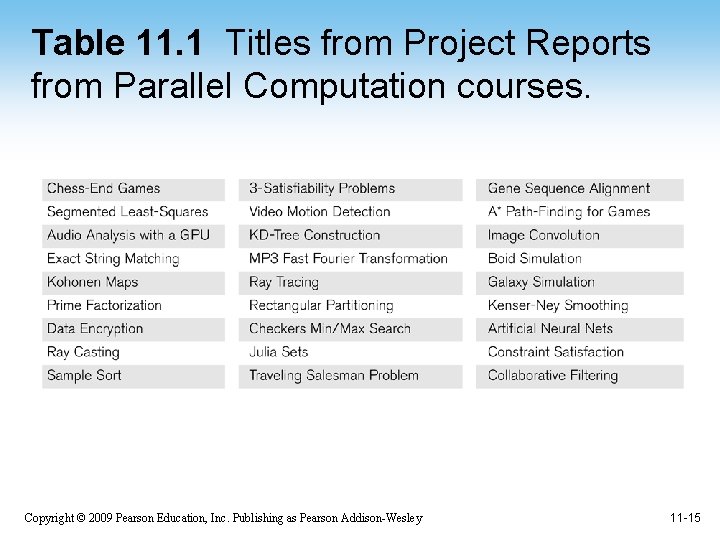

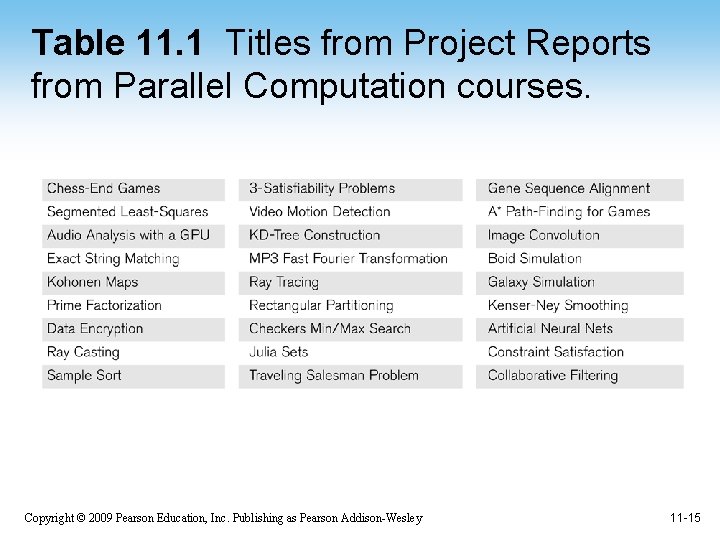

Table 11.1 Titles from Project Reports from Parallel Computation courses.

Figure 11.2 Schematic execution for P = 5 processes from the structure in Figure 11.1. At each iteration test, processes decide whether to continue iterating based on the data exchanged (gray).
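
The iterate, exchange, and test structure of Figures 11.1 and 11.2 can be sketched in Python with one thread per process and `threading.Barrier` marking the interaction band. The computation is an assumption of ours, chosen only to have something to iterate: each of the P = 5 threads repeatedly replaces its value with the average of itself and its two ring neighbors. After the exchange, every thread applies the same termination test to the same exchanged data (the largest change), so all threads stop on the same iteration; if they disagreed, some would wait at a barrier forever. Double buffering keeps reads of old values separate from writes of new ones.

```python
import threading


def iterate_until_converged(initial, tol=1e-9, max_iters=10_000):
    """One thread per element of `initial` (P = len(initial) >= 1).
    Returns (final values, number of iterations)."""
    p = len(initial)
    buffers = [list(map(float, initial)), [0.0] * p]  # double buffering
    change = [0.0] * p
    iterations = [0] * p
    barrier = threading.Barrier(p)

    def worker(t):
        src, dst = 0, 1
        for it in range(1, max_iters + 1):
            # Local computation: read neighbors from src, write own slot of dst.
            old = buffers[src]
            new = (old[(t - 1) % p] + old[t] + old[(t + 1) % p]) / 3.0
            buffers[dst][t] = new
            change[t] = abs(new - old[t])
            barrier.wait()  # exchange: all new values and changes are visible
            # Same test on the same data, so every thread reaches the same decision.
            done = max(change) < tol
            iterations[t] = it
            barrier.wait()  # no thread overwrites change[] until all have read it
            if done:
                return
            src, dst = dst, src

    threads = [threading.Thread(target=worker, args=(t,)) for t in range(p)]
    for th in threads:
        th.start()
    for th in threads:
        th.join()
    # Iteration k wrote buffer 1 when k is odd and buffer 0 when k is even.
    return buffers[iterations[0] % 2], iterations[0]


values, iters = iterate_until_converged([0.0, 0.0, 0.0, 0.0, 10.0])
print(iters, [round(v, 6) for v in values])
```

Ring averaging preserves the sum of the values, so for this input every thread's value converges toward the mean, 2.0.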