
- Slides: 26

Chapter 11 Experimental Designs PowerPoint presentation developed by: Sarah E. Bledsoe & E. Roberto Orellana

Overview § Introduction to Experimental Designs § Quasi-experimental Designs § Pre-experimental Pilot Studies § Additional Threats to the Validity of Experimental and Quasi-experimental Results § Practical Pitfalls in Carrying Out Experiments and Quasi-experiments in Social Agencies § Qualitative Techniques for Avoiding or Alleviating Practical Pitfalls

Introduction to Experimental Designs § The best causal evidence comes from designs with strong internal validity § Experimental designs are the strongest designs, giving social work researchers and evidence-based practitioners greater confidence in causal inferences based on study findings

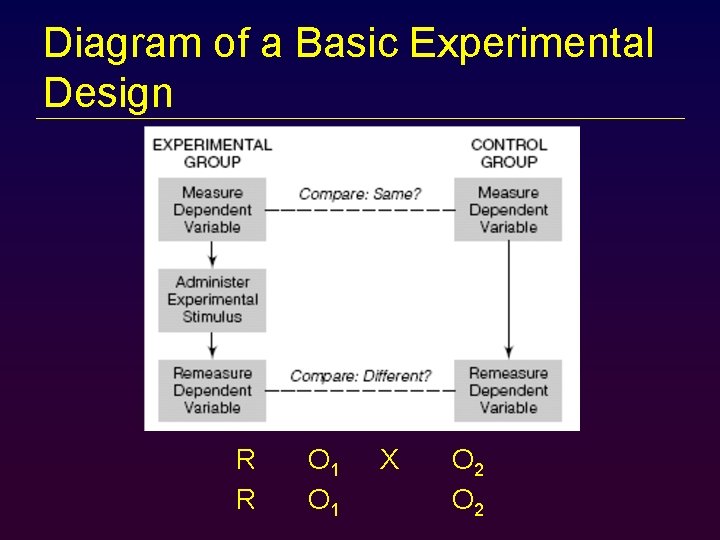

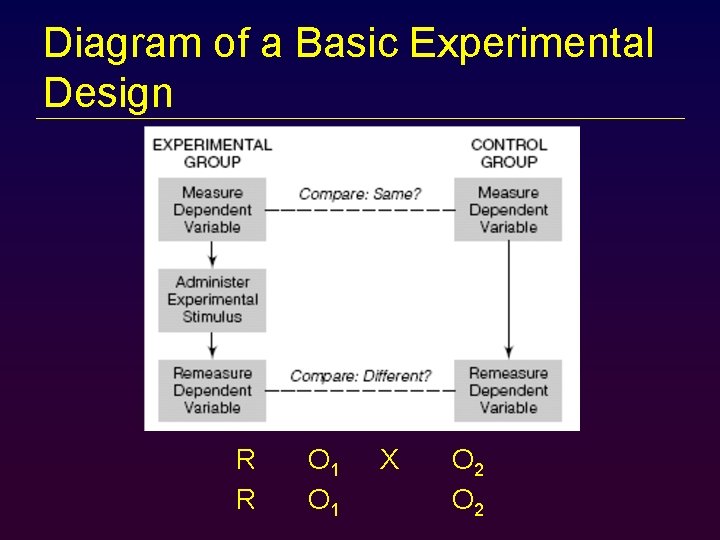

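Diagram of a Basic Experimental Design: R O1 X O2 (experimental group); R O1 O2 (control group), where R = random assignment, O = observation, and X = intervention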

Randomization § Controls for selection bias in experimental designs § Participants are divided into groups using procedures based on probability theory § Improves the likelihood that the control group represents what the experimental group would look like had it not been exposed to the experimental stimulus
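The probability-based division described on this slide can be sketched in a few lines. This is a minimal illustration, assuming a simple shuffle-and-split into two equal-sized groups; the function name `randomly_assign` and the baseline scores are hypothetical. Because assignment ignores every participant characteristic, pre-existing differences (here, a made-up baseline score) should tend to be similar across groups, though any single split will not match exactly.

```python
import random
from statistics import mean

def randomly_assign(participants, seed=None):
    """Shuffle participants and split them into two groups of (nearly) equal size.

    Returns (experimental, control). The optional seed only makes the shuffle
    reproducible so the assignment can be audited later.
    """
    rng = random.Random(seed)
    shuffled = list(participants)  # copy so the caller's data is untouched
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]

if __name__ == "__main__":
    # Hypothetical participants with a pre-existing characteristic (a baseline score)
    rng = random.Random(1)
    baseline = {f"P{i:02d}": rng.randint(10, 40) for i in range(40)}
    experimental, control = randomly_assign(baseline, seed=42)
    print("Experimental baseline mean:", round(mean(baseline[p] for p in experimental), 1))
    print("Control baseline mean:     ", round(mean(baseline[p] for p in control), 1))
```

With an odd number of participants, the control group in this sketch receives the extra person.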

Matching § Procedure whereby pairs of subjects are matched on the basis of their similarities on one or more variables, and one member of the pair is assigned to the experimental group and the other to the control group § Combining matching with randomization in experimental designs increases the likelihood that the two groups will be comparable § Quota Matrix
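Matching combined with randomization can be sketched as follows, assuming a single matching variable (for example, a baseline score). Participants are sorted so neighbours are most alike, paired off, and a random draw decides which member of each pair receives the intervention. The function name and data are hypothetical; matching on several variables at once, which the quota matrix addresses, is not shown.

```python
import random

def matched_pair_assignment(scores, seed=None):
    """Pair participants with similar scores, then randomly split each pair.

    scores: dict mapping participant ID -> value of the matching variable.
    Returns (experimental, control, unmatched); unmatched holds the leftover
    participant when the number of participants is odd.
    """
    rng = random.Random(seed)
    # Sort by the matching variable so adjacent participants are the most similar
    ordered = sorted(scores, key=scores.get)
    experimental, control, unmatched = [], [], []
    for i in range(0, len(ordered) - 1, 2):
        pair = [ordered[i], ordered[i + 1]]
        rng.shuffle(pair)  # random draw decides which member gets the intervention
        experimental.append(pair[0])
        control.append(pair[1])
    if len(ordered) % 2:
        unmatched.append(ordered[-1])
    return experimental, control, unmatched

if __name__ == "__main__":
    # Hypothetical baseline depression scores
    scores = {"A": 10, "B": 30, "C": 11, "D": 29, "E": 50}
    print(matched_pair_assignment(scores, seed=0))
```

Randomizing within each pair keeps the benefit of matching (similar groups on the matched variable) while still leaving unmeasured characteristics to chance.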

Providing Services to Control Groups § Ethical considerations often require that services be provided to control groups § Often, control groups receive usual care in place of the experimental intervention − Compares the experimental intervention to treatment as usual § Control group participants may also be given priority on a waitlist

Quasi-experimental Designs § Design that attempts to control for threats to internal validity and thus permits causal inferences but is distinguished from true experiments primarily by the lack of random assignment of subjects § Useful when random assignment to a control group is not feasible § When conducted properly, can achieve a reasonable degree of internal validity § Three common quasi-experimental designs will be examined: − Nonequivalent comparison group designs − Simple time-series designs − Multiple time-series designs

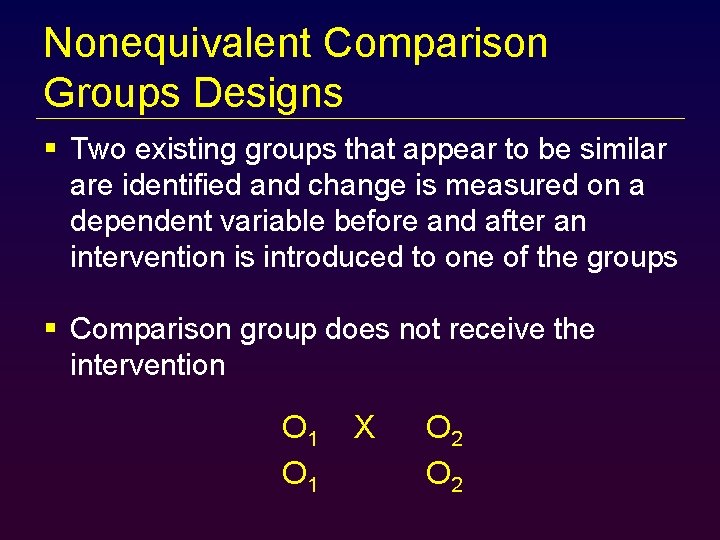

Nonequivalent Comparison Groups Designs § Two existing groups that appear to be similar are identified and change is measured on a dependent variable before and after an intervention is introduced to one of the groups § Comparison group does not receive the intervention: O1 X O2 (experimental group); O1 O2 (comparison group)
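One simple way to summarize the O1 X O2 / O1 O2 comparison is to subtract the comparison group's average pre-to-post change from the experimental group's. The sketch below assumes each participant's pretest and posttest are listed in the same order; the function names and scores are hypothetical. Because the groups were not randomly assigned, a difference here can still reflect preexisting group differences rather than the intervention.

```python
from statistics import mean

def mean_change(pretest, posttest):
    """Average posttest-minus-pretest change for one group (paired by position)."""
    if len(pretest) != len(posttest):
        raise ValueError("pretest and posttest must have the same length")
    return mean(post - pre for pre, post in zip(pretest, posttest))

def change_difference(exp_pre, exp_post, cmp_pre, cmp_post):
    """Experimental group's mean change minus the comparison group's mean change."""
    return mean_change(exp_pre, exp_post) - mean_change(cmp_pre, cmp_post)

if __name__ == "__main__":
    # Hypothetical symptom scores (lower is better), not data from any study
    exp_pre, exp_post = [20, 22, 19, 25], [14, 15, 13, 18]
    cmp_pre, cmp_post = [21, 23, 20, 24], [19, 21, 19, 22]
    print(change_difference(exp_pre, exp_post, cmp_pre, cmp_post))  # prints -4.75
```

The comparison group's change (here, some improvement without the intervention) is what the design uses to estimate how much the experimental group would have changed anyway.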

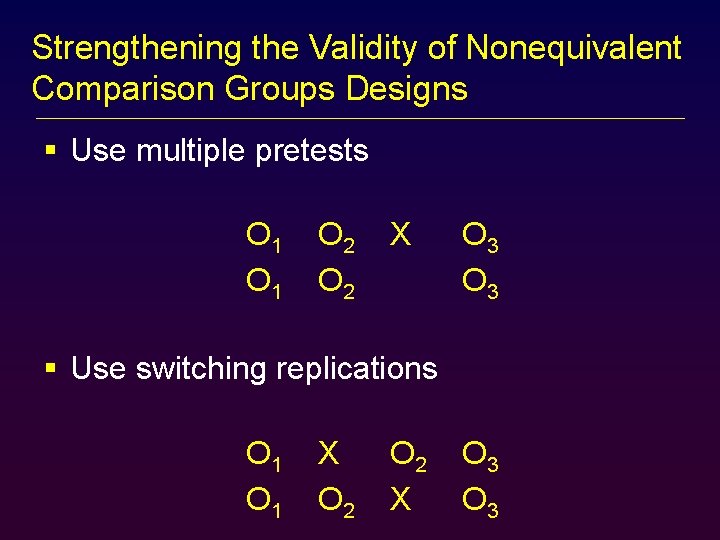

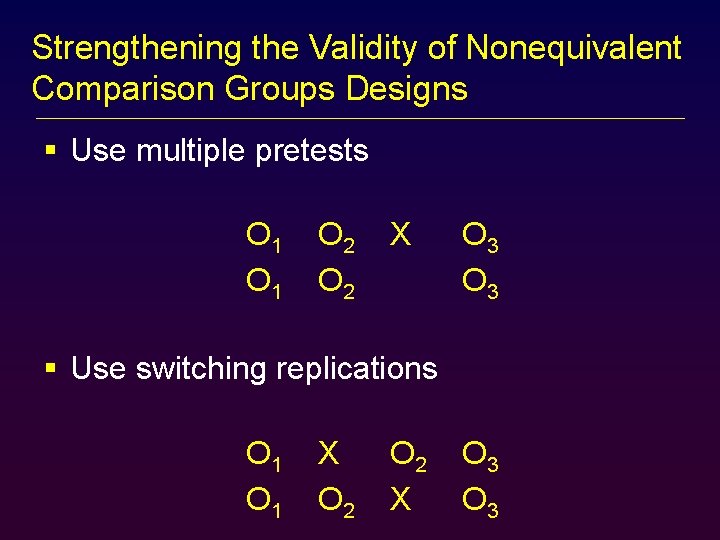

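Strengthening the Validity of Nonequivalent Comparison Groups Designs § Use multiple pretests: O1 O2 X O3 (experimental group); O1 O2 O3 (comparison group) § Use switching replications: O1 X O2 O3 (first group); O1 O2 X O3 (second group, which receives the intervention later)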

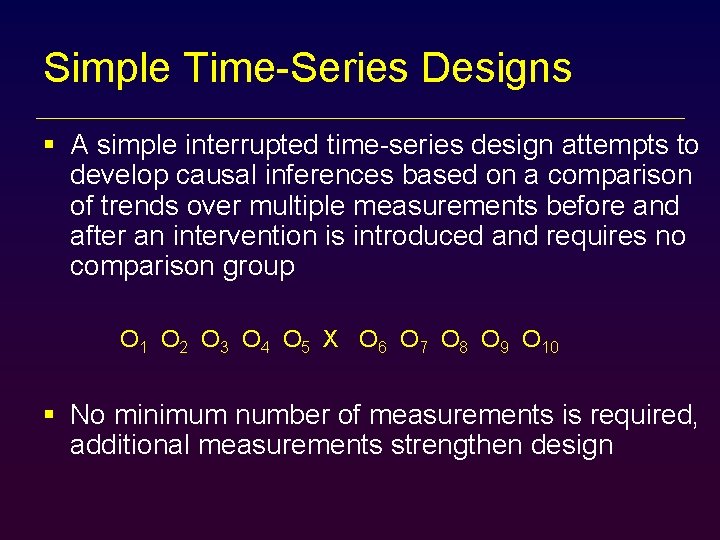

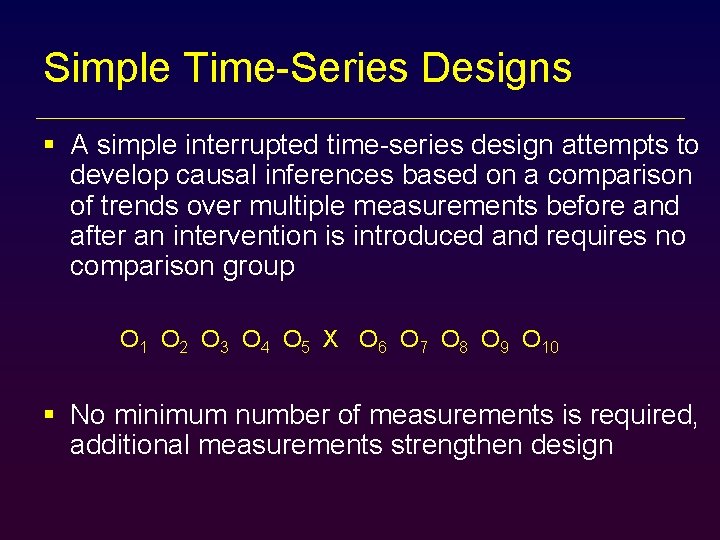

Simple Time-Series Designs § A simple interrupted time-series design attempts to develop causal inferences based on a comparison of trends over multiple measurements before and after an intervention is introduced and requires no comparison group: O1 O2 O3 O4 O5 X O6 O7 O8 O9 O10 § Although no minimum number of measurements is required, additional measurements strengthen the design
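The comparison of trends before and after X can be illustrated by fitting a straight line to the baseline observations (O1 to O5), projecting it forward, and measuring how far the post-intervention observations (O6 to O10) depart from that projection. This is a simplified sketch with hypothetical names and data; real time-series analyses usually also account for autocorrelation between successive measurements, which this code ignores.

```python
from statistics import mean

def fit_line(xs, ys):
    """Ordinary least-squares intercept and slope for y = intercept + slope * x."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return y_bar - slope * x_bar, slope

def post_intervention_departure(series, n_pre):
    """Average gap between observed post-intervention values and the values
    projected forward from the pre-intervention trend.

    series: observations O1..On in time order; X is introduced after the
    first n_pre observations (at least two are needed to fit a trend).
    """
    if n_pre < 2 or n_pre >= len(series):
        raise ValueError("need at least two pre- and one post-intervention observation")
    times = list(range(1, len(series) + 1))
    intercept, slope = fit_line(times[:n_pre], series[:n_pre])
    projected = [intercept + slope * t for t in times[n_pre:]]
    return mean(obs - proj for obs, proj in zip(series[n_pre:], projected))

if __name__ == "__main__":
    # Hypothetical weekly incident counts: O1-O5, then X, then O6-O10
    counts = [30, 31, 29, 32, 30, 24, 23, 22, 24, 23]
    print(round(post_intervention_departure(counts, n_pre=5), 2))
```

With only five baseline points the projected trend is fragile; more baseline measurements give a steadier estimate of what would have happened without the intervention, which is why additional measurements strengthen the design.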

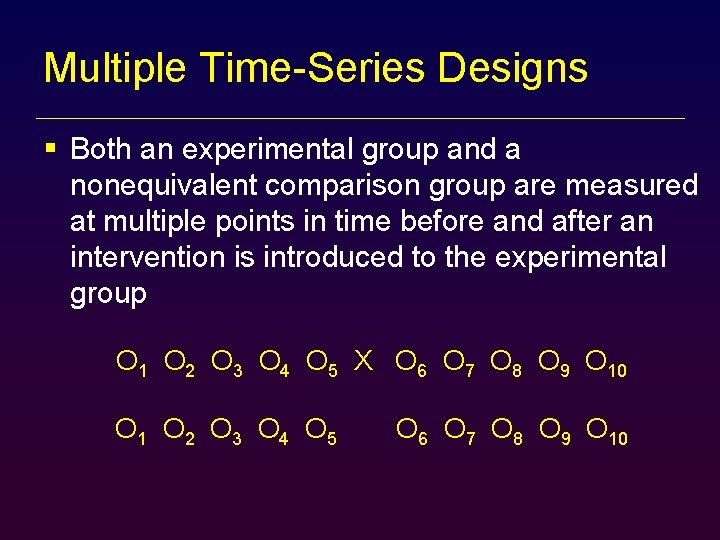

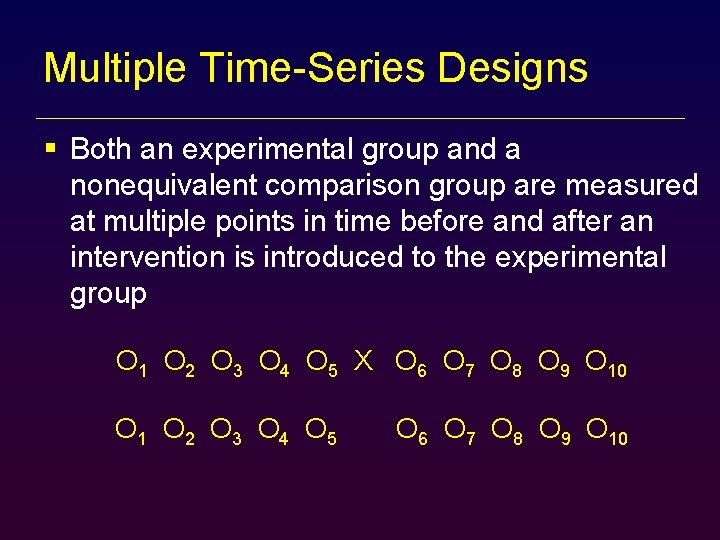

Multiple Time-Series Designs § Both an experimental group and a nonequivalent comparison group are measured at multiple points in time before and after an intervention is introduced to the experimental group: O1 O2 O3 O4 O5 X O6 O7 O8 O9 O10 (experimental group); O1 O2 O3 O4 O5 O6 O7 O8 O9 O10 (comparison group)
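Adding the comparison series lets a change in the experimental series be judged against whatever change occurred without the intervention (for example, an outside event affecting both groups). The sketch below uses a deliberately simple summary, the shift in mean level from the pre- to the post-intervention period, and ignores trends; the function names and data are hypothetical.

```python
from statistics import mean

def level_shift(series, n_pre):
    """Mean of post-intervention observations minus mean of pre-intervention ones."""
    return mean(series[n_pre:]) - mean(series[:n_pre])

def compare_series(experimental, comparison, n_pre):
    """Shift in the experimental series beyond the shift seen in the comparison series.

    Both series must be measured at the same time points; only the experimental
    group receives X after the first n_pre observations.
    """
    if len(experimental) != len(comparison):
        raise ValueError("series must cover the same time points")
    return level_shift(experimental, n_pre) - level_shift(comparison, n_pre)

if __name__ == "__main__":
    # Hypothetical counts for ten time points in each group
    experimental = [30, 31, 29, 32, 30, 24, 23, 22, 24, 23]
    comparison = [28, 29, 28, 30, 29, 27, 28, 27, 28, 27]
    print(round(compare_series(experimental, comparison, n_pre=5), 2))
```

If both series dropped by similar amounts after the intervention point, the comparison would suggest history rather than the intervention as the explanation.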

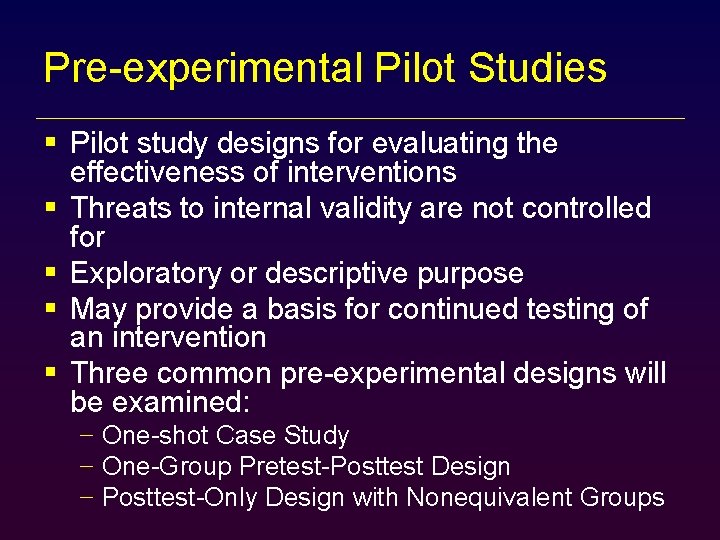

Pre-experimental Pilot Studies § Pilot study designs for evaluating the effectiveness of interventions § Threats to internal validity are not controlled for § Exploratory or descriptive purpose § May provide a basis for continued testing of an intervention § Three common pre-experimental designs will be examined: − One-shot Case Study − One-Group Pretest-Posttest Design − Posttest-Only Design with Nonequivalent Groups

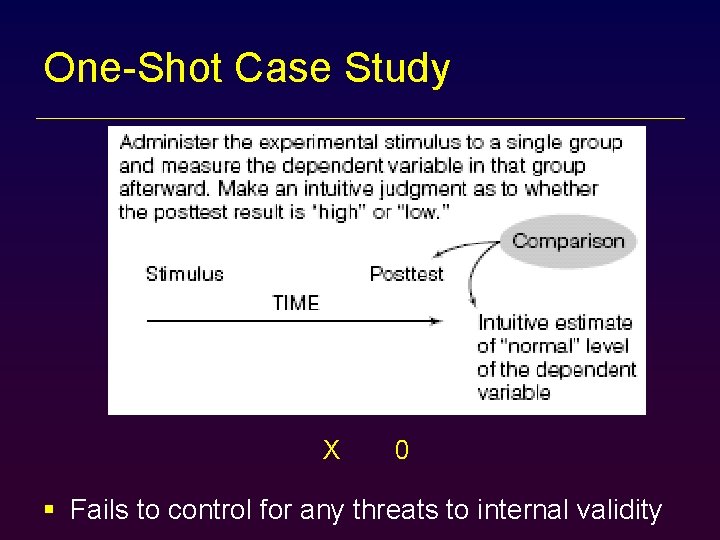

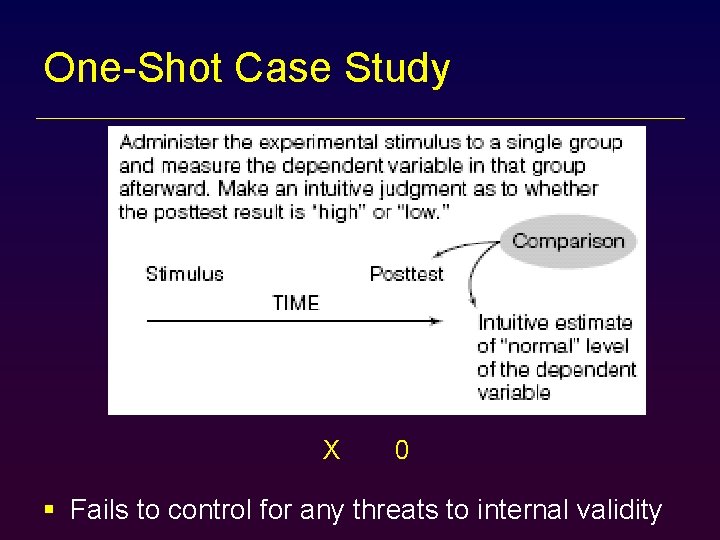

One-Shot Case Study: X O § Fails to control for any threats to internal validity

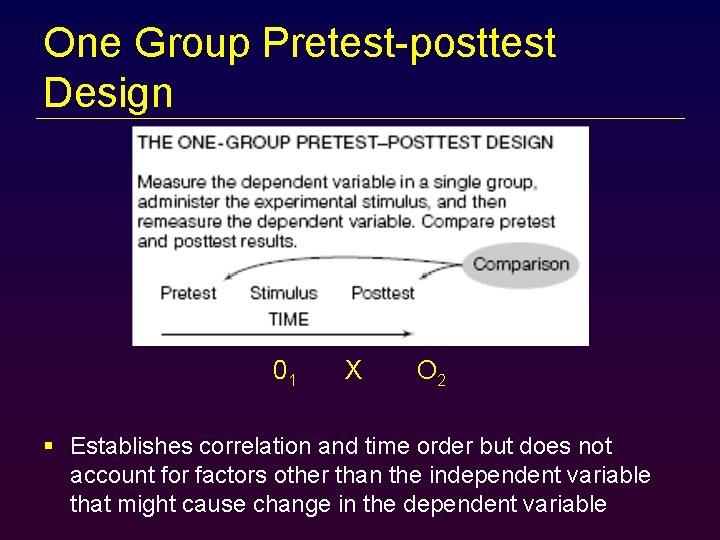

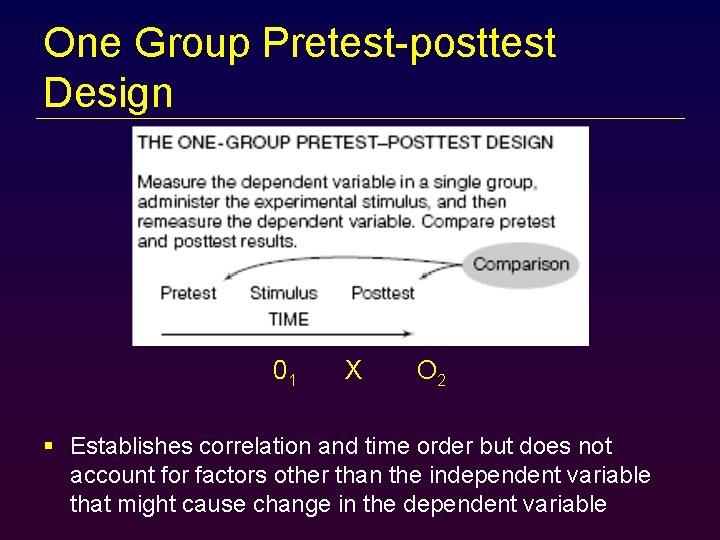

One-Group Pretest-Posttest Design: O1 X O2 § Establishes correlation and time order but does not account for factors other than the independent variable that might cause change in the dependent variable

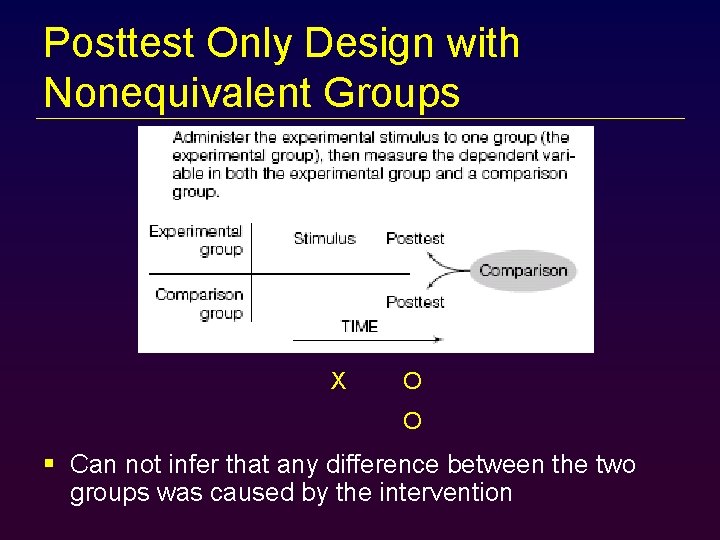

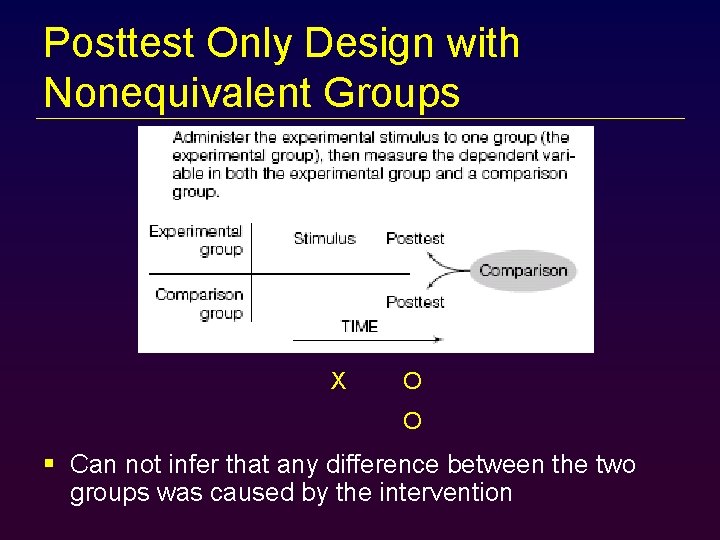

Posttest-Only Design with Nonequivalent Groups: X O (experimental group); O (comparison group) § One cannot infer that any difference between the two groups was caused by the intervention

Additional Threats to the Validity of Experimental & Quasi-experimental Findings § Measurement Bias § Research Reactivity § Diffusion or Imitation of Treatments § Compensatory Equalization, Compensatory Rivalry, or Resentful Demoralization § Attrition (or Experimental Mortality)

Measurement Bias § The credibility of study conclusions can be compromised if measures are biased § Bias can be avoided by using blind raters who are unaware of the hypotheses and of whether a participant has received the experimental intervention
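One practical way to keep raters blind is to hand them only coded, shuffled case identifiers while a staff member who does no rating holds the key linking codes to participants and conditions. The sketch below is a hypothetical illustration of that bookkeeping; the function name, the "R001"-style codes, and the data are assumptions, not a procedure from the source.

```python
import random

def blind_rating_packet(assignments, seed=None):
    """Replace participant IDs with neutral codes so raters cannot see group membership.

    assignments: dict mapping participant ID -> group label.
    Returns (codes_for_raters, key): raters receive only the codes; the key
    linking each code to (participant ID, group) stays with non-rating staff.
    """
    rng = random.Random(seed)
    ids = list(assignments)
    rng.shuffle(ids)  # shuffling keeps list order from hinting at group membership
    key = {}
    for n, pid in enumerate(ids, start=1):
        key[f"R{n:03d}"] = (pid, assignments[pid])
    return list(key), key

if __name__ == "__main__":
    groups = {"P1": "experimental", "P2": "control", "P3": "experimental", "P4": "control"}
    codes, key = blind_rating_packet(groups, seed=7)
    print(codes)  # what the raters see
```

The codes carry no information about condition, so ratings can later be un-blinded with the key for analysis.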

Research Reactivity § Refers to changes in outcome data that are caused by researchers or research procedures rather than the independent variable − Measurement Bias − Experimental Demand Characteristics − Experimenter Expectancies − Obtrusive Observation − Novelty and Disruption Effects − Placebo Effect

Diffusion and Imitation of Treatments § A threat to the validity of an evaluation of an intervention’s effectiveness that occurs when practitioners who are supposed to provide routine services to a comparison group implement aspects of the experimental group’s intervention in ways that tend to diminish the planned differences in the interventions received by the groups being compared

Compensatory Equalization, Compensatory Rivalry, or Resentful Demoralization § In Compensatory Equalization, comparison-condition practitioners compensate for differences between treatment groups by providing enhanced services that go beyond the routine-treatment regimen for their clients § In Compensatory Rivalry, comparison-condition practitioners compete with therapists in the experimental group; their extra efforts might improve the effectiveness of routine treatment § In Resentful Demoralization, practitioners or clients in the comparison condition become resentful and demoralized because they did not receive special training or treatment; their decline in confidence or motivation may explain their inferior outcomes

Attrition § A threat to the validity of an experiment that occurs when participants drop out of an experiment before it is completed § Strategies to minimize attrition: − Reimbursement − Avoid intervention or research procedures that disappoint participants − Utilize tracking methods
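Tracking attrition separately for each group helps show whether dropout is uneven, since a large gap between groups can leave the remaining participants no longer comparable. The sketch below assumes simple enrollment and completion counts per group; the function name and numbers are hypothetical.

```python
def attrition_report(enrolled, completed):
    """Attrition rate per group and the gap between the highest and lowest rate.

    enrolled, completed: dicts mapping group name -> number of participants.
    """
    rates = {g: (enrolled[g] - completed[g]) / enrolled[g] for g in enrolled}
    gap = max(rates.values()) - min(rates.values())  # differential attrition
    return rates, gap

if __name__ == "__main__":
    # Hypothetical counts
    rates, gap = attrition_report({"experimental": 50, "control": 50},
                                  {"experimental": 35, "control": 46})
    for group, rate in rates.items():
        print(f"{group}: {rate:.0%} dropped out")
    print(f"differential attrition: {gap:.0%}")
```

Reporting the per-group rates, not just the overall rate, makes uneven dropout visible early enough to strengthen reimbursement or tracking efforts.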

Pitfalls in Carrying Out Experiments & Quasi-experiments in Social Service Agencies § Social work experiments tend to take place in agency settings where administrators are not researchers, may not understand the requisites of experimental and quasi-experimental designs, and may even resent and attempt to undermine the demands of the research design

Pitfalls in Carrying Out Experiments & Quasi-experiments in Social Service Agencies § Four practical pitfalls commonly encountered when implementing research in social service agencies are: − Fidelity of the intervention − Contamination of the control condition − Resistance to the case assignment protocol − Client recruitment and retention

Mechanisms for Avoiding or Alleviating Practical Pitfalls § Engage agency staff in research design and enlist their support from its inception § Interact with agency staff § Monitor implementation of the research protocol § Locate experimental and control conditions in separate buildings or agencies § Develop a treatment manual § Recruit clients on an ongoing basis § Conduct a pilot study

Qualitative Techniques for Avoiding or Alleviating Practical Pitfalls § Ethnographic shadowing § Observation during training, group supervision, & staff meetings § Informal interviews with agency staff § Videotaping or audiotaping sessions § Practitioner activity logs and event logs § Focus groups § Snowball sampling § Open-ended interviews with clients § Content analysis of agency documents and manuals