Chapter 10 Virtual Memory Operating System Concepts 10

- Slides: 32

Chapter 10: Virtual Memory. Operating System Concepts – 10th Edition. Silberschatz, Galvin and Gagne © 2018

Chapter 10: Virtual Memory

- Background
- Demand Paging
- Copy-on-Write
- Page Replacement
- Allocation of Frames
- Thrashing
- Memory-Mapped Files
- Allocating Kernel Memory
- Other Considerations
- Operating-System Examples

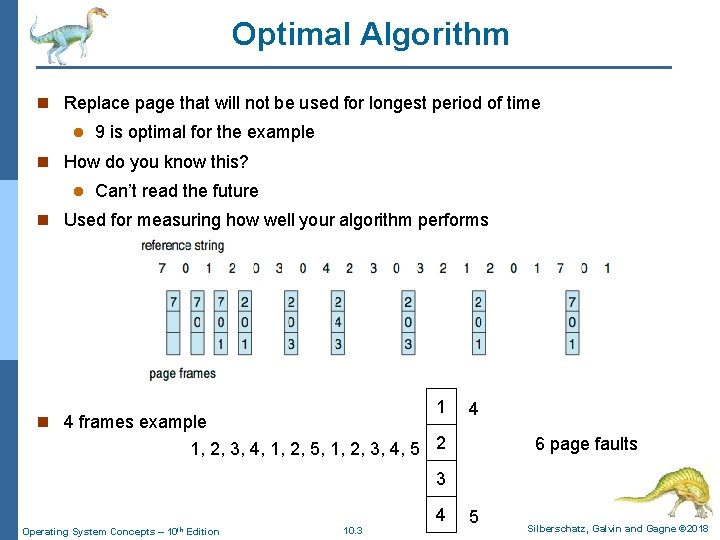

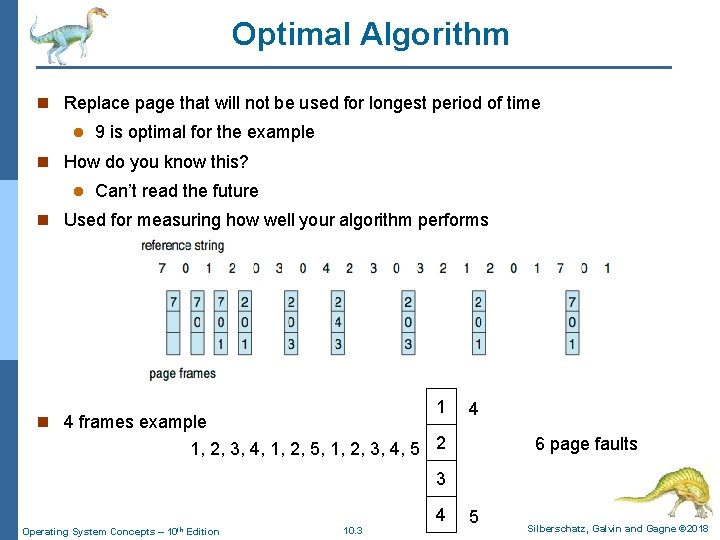

Optimal Algorithm

- Replace the page that will not be used for the longest period of time
  - 9 page faults is optimal for the example
- How do you know this?
  - You can't read the future
- Used for measuring how well your algorithm performs
- 4 frames example: reference string 1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5
  - 6 page faults
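
The 4-frame example above can be checked with a short simulation. This is a minimal sketch, not the textbook's code: the function name `opt_faults` is hypothetical, and it cheats by looking ahead in the reference string, which a real OS cannot do.

```python
def opt_faults(refs, num_frames):
    """Count page faults under the optimal (farthest-future-use) policy."""
    frames = []
    faults = 0
    for i, page in enumerate(refs):
        if page in frames:
            continue  # hit: nothing to do
        faults += 1
        if len(frames) < num_frames:
            frames.append(page)  # a free frame is still available
            continue
        future = refs[i + 1:]

        def next_use(p):
            # Distance to the next reference; pages never used again sort last.
            return future.index(p) if p in future else len(future)

        victim = max(frames, key=next_use)
        frames[frames.index(victim)] = page
    return faults


refs = [1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5]
print(opt_faults(refs, 4))  # 6
```

Because the policy needs the whole future reference string, it is only usable offline as a yardstick for other algorithms.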

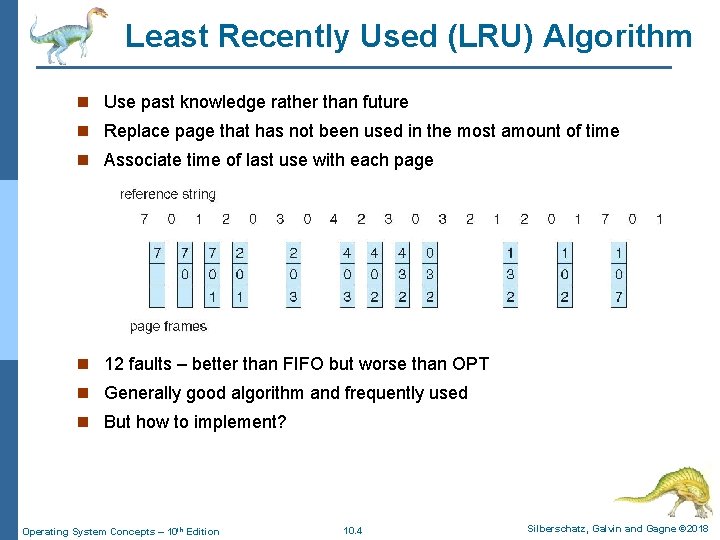

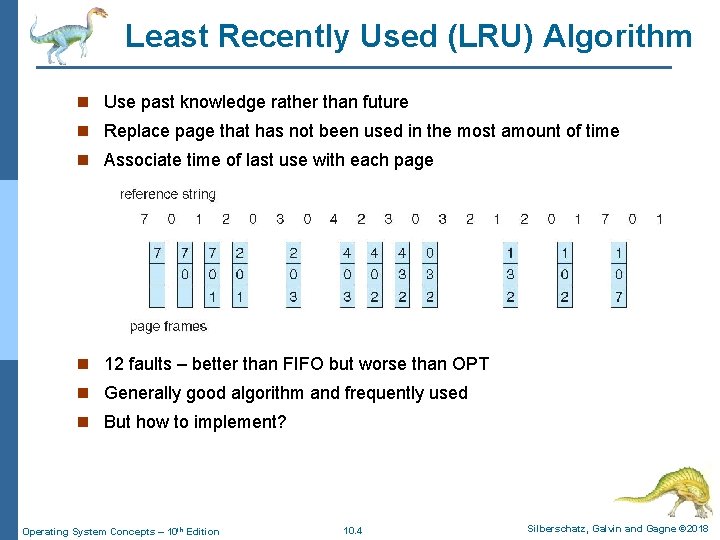

Least Recently Used (LRU) Algorithm

- Use past knowledge rather than future
- Replace the page that has not been used for the longest period of time
- Associate time of last use with each page
- 12 faults – better than FIFO but worse than OPT
- Generally good algorithm and frequently used
- But how to implement?

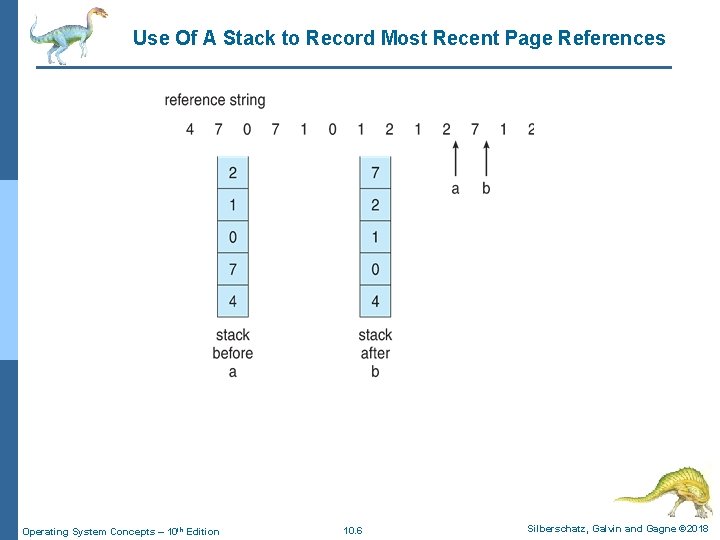

LRU Algorithm (Cont.)

- Counter implementation
  - Every page entry has a counter; every time the page is referenced through this entry, copy the clock into the counter
  - When a page needs to be replaced, look at the counters to find the smallest value
    - Search through the table needed
- Stack implementation
  - Keep a stack of page numbers in doubly linked form
  - Page referenced:
    - move it to the top
    - requires up to 6 pointers to be changed
  - But each update is more expensive
  - No search for replacement
- LRU and OPT are cases of stack algorithms that don't have Belady's Anomaly
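
The stack implementation can be sketched with Python's `OrderedDict`, which is backed by a doubly linked list. This is an illustrative sketch under assumptions: the name `lru_faults` is hypothetical, and the reference string is the 4-frame example used for the optimal algorithm, not the example behind the "12 faults" figure.

```python
from collections import OrderedDict


def lru_faults(refs, num_frames):
    """Count page faults under LRU using a stack of page numbers."""
    stack = OrderedDict()  # last key = top of stack (most recently used)
    faults = 0
    for page in refs:
        if page in stack:
            stack.move_to_end(page)  # referenced: move it to the top
            continue
        faults += 1
        if len(stack) == num_frames:
            stack.popitem(last=False)  # bottom of the stack is the LRU page
        stack[page] = True
    return faults


refs = [1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5]
print(lru_faults(refs, 3))  # 10
print(lru_faults(refs, 4))  # 8
```

The victim is always at the bottom of the stack, so no search is needed at replacement time; the cost is paid on every reference instead.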

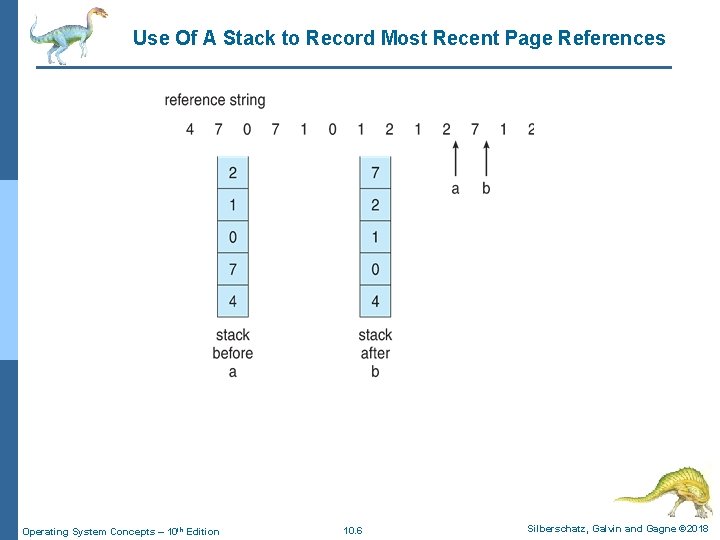

Use of a Stack to Record Most Recent Page References

LRU Approximation Algorithms

- LRU needs special hardware and is still slow
- Reference bit
  - With each page associate a bit, initially = 0
  - When the page is referenced, the bit is set to 1
  - Replace any page with reference bit = 0 (if one exists)
    - We do not know the order, however

LRU Approximation Algorithms

- Additional-Reference-Bits Algorithm
  - Keep an 8-bit byte for each page in a table in memory
  - At regular intervals, a timer interrupt transfers control to the OS
  - The OS shifts the ref bit for each page into the high-order bit of its byte, shifting the other bits right by 1 bit and discarding the low-order bit
  - Shift register contains 00000000: page not used for eight time periods
  - Shift register contains 11111111: page used at least once in each of the eight time periods
  - A page with a history register value of 11000100 has been used more recently than one with 01110111
  - Interpret the 8-bit byte as an unsigned integer
  - The page with the lowest number is the LRU page
  - If the numbers are not unique, then
    - replace all pages with the smallest value, or
    - use a FIFO selection among them
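
The shift-and-compare step can be sketched in a few lines. This is a minimal illustration, assuming the history bytes live in a plain dictionary; the names `age` and `pick_victim` are hypothetical.

```python
def age(history, ref_bit):
    """Shift the reference bit into the high-order bit of an 8-bit history."""
    return ((ref_bit << 7) | (history >> 1)) & 0xFF


def pick_victim(histories):
    """Return the page whose history byte, read as an unsigned int, is lowest."""
    return min(histories, key=histories.get)


histories = {"A": 0b11000100, "B": 0b01110111}
print(pick_victim(histories))            # B
print(format(age(0b11000100, 0), "08b"))  # 01100010
```

Page A was referenced in the most recent period (high-order bit set), so it compares higher than B even though B has more bits set overall.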

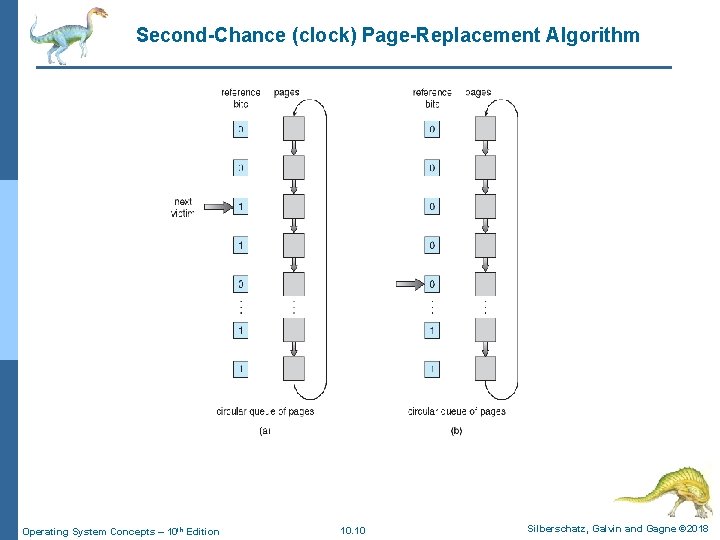

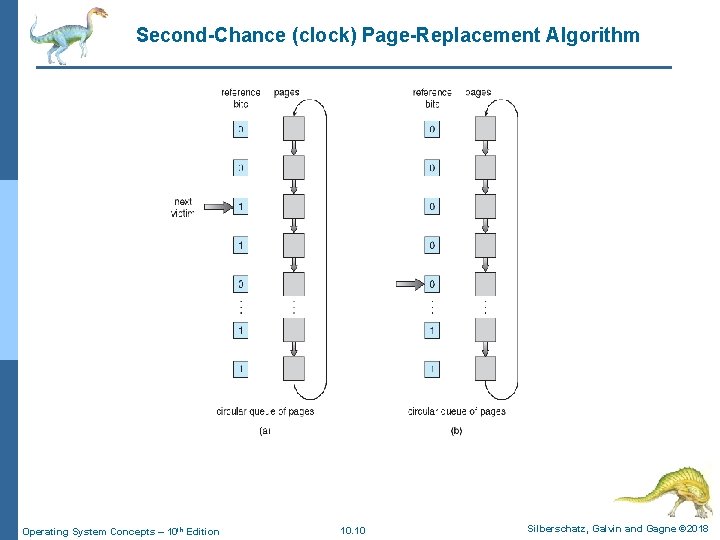

Second-Chance (Clock) Page-Replacement Algorithm

- Replace an old page, not the oldest page
- Generally FIFO, plus hardware-provided reference bit
- Clock replacement: arrange physical pages in a circle, with a clock hand
  1. Hardware keeps a reference bit per physical page frame
  2. Hardware sets the ref bit on each reference
     - If the ref bit isn't set, the page has not been referenced in a long time
     - Nachos: ref bit in TLB, copied to page table on replace
  3. On page fault:
     - Advance clock hand (not real time)
     - Check reference bit
       - Reference bit = 0 -> replace page
       - Reference bit = 1 -> set reference bit to 0, leave page in memory, go on

Second-Chance (Clock) Page-Replacement Algorithm

Second-Chance (Clock) Algorithm

- Will it always find a page or loop infinitely?
  - Even if all ref bits are set, it will eventually loop around, clearing all ref bits -> FIFO
- What if the hand is moving slowly?
  - Not many page faults and/or pages are found quickly
- What if the hand is moving quickly?
  - Lots of page faults and/or lots of reference bits set
- One way to view the clock algorithm: crude partitioning of pages into two categories, young and old
  - Why not partition into more than 2 groups?
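
The clock sweep, including the case where every reference bit is set, can be sketched as follows. This is a simplified model under assumptions: the class name `Clock` is hypothetical, the reference bit is set in software on each access (real hardware would do this), and a newly loaded page starts with its bit set.

```python
class Clock:
    def __init__(self, num_frames):
        self.pages = [None] * num_frames  # page held by each frame
        self.ref = [0] * num_frames       # reference bit per frame
        self.hand = 0

    def access(self, page):
        """Return True on a page fault, False on a hit."""
        if page in self.pages:
            self.ref[self.pages.index(page)] = 1
            return False
        # Sweep: clear set bits (second chance) until a frame with ref == 0.
        # Terminates within one full lap because every visited bit is cleared.
        while self.ref[self.hand] == 1:
            self.ref[self.hand] = 0
            self.hand = (self.hand + 1) % len(self.pages)
        self.pages[self.hand] = page
        self.ref[self.hand] = 1
        self.hand = (self.hand + 1) % len(self.pages)
        return True


c = Clock(3)
print([c.access(p) for p in [1, 2, 3, 4, 2, 5]])
print(c.pages)  # [4, 2, 5]
```

When page 4 arrives all bits are set, so the hand clears them all and evicts page 1 (plain FIFO). Page 2 is then re-referenced and survives the next sweep, while page 3 is evicted.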

Nth Chance Algorithm

- Don't throw a page out until the hand has swept by N times
- OS keeps a counter per page: # of sweeps
- On page fault, OS checks ref bit:
  - 1 => clear ref and also clear counter, go on
  - 0 => increment counter; if < N, go on, else replace page

Nth Chance Algorithm

- How do we pick N?
- Why pick large N?
  - Better approximation to LRU
- Why pick small N?
  - More efficient; otherwise might have to look a long way to find a free page
- Dirty pages have to be written back to disk when replaced
- It takes extra overhead to replace a dirty page, so give dirty pages an extra chance before replacing?
- Common approach:
  - Clean pages: use N = 1
  - Dirty pages: use N = 2 (and write back to disk when N = 1)
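
The victim search with separate limits for clean and dirty pages might look like the sketch below. The frame representation (a dict with `ref`, `dirty`, and `sweeps` keys) and the function name are assumptions for illustration; the write-back that would start when a dirty page's counter reaches 1 is only noted in a comment.

```python
def nth_chance_victim(frames, hand, n_clean=1, n_dirty=2):
    """Return (victim_index, new_hand) using the Nth chance rule."""
    while True:
        frame = frames[hand]
        if frame["ref"]:
            # Referenced since the last sweep: clear ref and counter, go on.
            frame["ref"] = 0
            frame["sweeps"] = 0
        else:
            frame["sweeps"] += 1
            limit = n_dirty if frame["dirty"] else n_clean
            if frame["sweeps"] >= limit:
                return hand, (hand + 1) % len(frames)
            # A real system would start writing a dirty page back here.
        hand = (hand + 1) % len(frames)


frames = [
    {"ref": 0, "dirty": True, "sweeps": 0},
    {"ref": 1, "dirty": False, "sweeps": 0},
    {"ref": 0, "dirty": False, "sweeps": 0},
]
print(nth_chance_victim(frames, 0))  # (2, 0)
```

The dirty page in frame 0 is passed over once, the referenced page in frame 1 has its bit cleared, and the clean, unreferenced page in frame 2 is chosen.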

LRU Approximation

- State per page table entry
- To summarize, many machines maintain four bits per page table entry:
  - Ref: set when page is referenced, cleared by clock algorithm
  - Modified: set when page is modified, cleared when page is written to disk
  - Valid: OK for program to reference this page
  - Read-only: OK for program to read page, but not to modify it (for example, for catching modifications to code pages)

LRU Approximation

- Do we really need a hardware-supported “modified” bit?
- No. Can emulate it (BSD UNIX). Keep two sets of books:
  - (i) Pages the user program can access without taking a fault
  - (ii) Pages in memory
- Set (i) is a subset of set (ii)
- Initially, mark all pages as read-only, even data pages
- On write, trap to OS
  - OS sets modified bit, and marks page as read-write
- When page comes back in from disk, mark read-only

LRU Approximation

- Do we really need a hardware-supported “ref” bit?
- No. Can emulate it, exactly the same as before:
  - (i) Mark all pages as invalid, even if in memory
  - (ii) On read to invalid page, trap to OS
  - (iii) OS sets ref bit, and marks page read-only
  - (iv) On write, set ref and modified bit, and mark page read-write
  - (v) When clock hand passes by, reset ref bit and mark page as invalid
- But remember, clock is just an approximation of LRU
- Can we do a better approximation, given that we have to take page faults on some reads and writes to collect ref information?
- Need to identify an old page, not the oldest page!
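
The software emulation of the ref and modified bits amounts to a small state machine driven by protection faults. The sketch below is illustrative only: the `Page` class, the protection strings, and the handler names are hypothetical and do not correspond to any real kernel interface.

```python
class Page:
    def __init__(self):
        self.prot = "invalid"   # protection the hardware sees
        self.ref = False        # software-maintained reference bit
        self.modified = False   # software-maintained modified bit


def on_trap(page, is_write):
    """Fault handler for a page that is in memory but protected."""
    page.ref = True
    if is_write:
        page.modified = True
        page.prot = "read-write"
    elif page.prot == "invalid":
        page.prot = "read-only"


def clock_hand_passes(page):
    """Reset the ref bit and force the next access to trap again."""
    page.ref = False
    page.prot = "invalid"


p = Page()
on_trap(p, is_write=False)
print(p.prot, p.ref, p.modified)  # read-only True False
on_trap(p, is_write=True)
print(p.prot, p.ref, p.modified)  # read-write True True
```

The price of this emulation is one extra trap on the first read and the first write after each pass of the clock hand.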

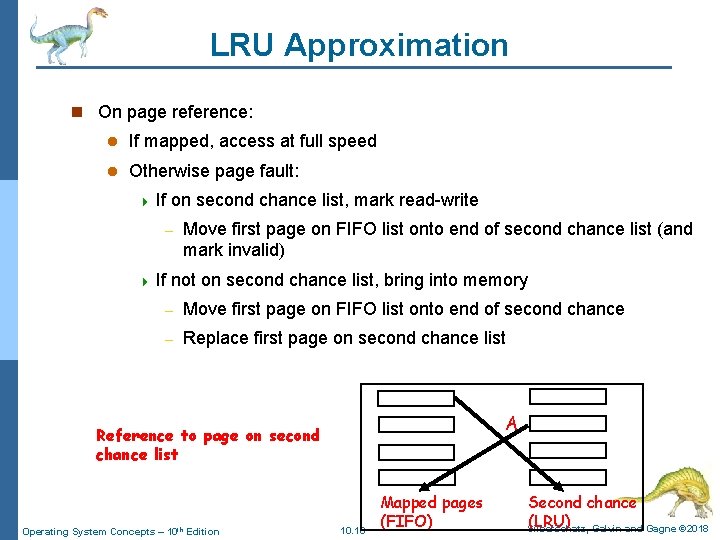

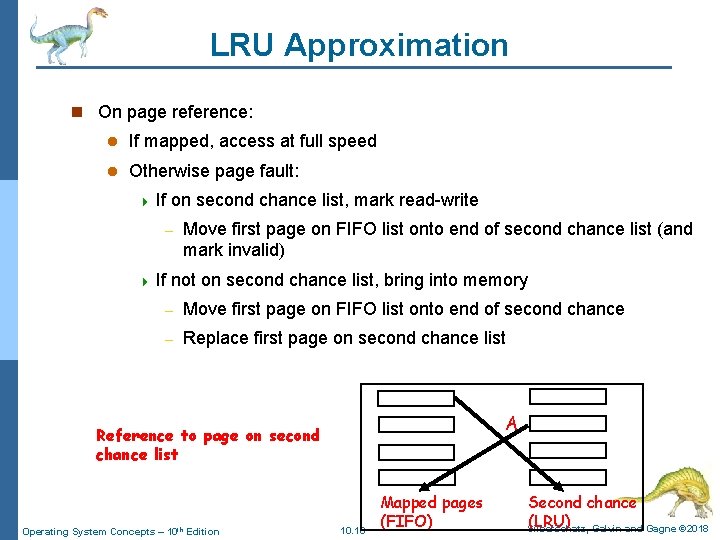

LRU Approximation

- VAX/VMS didn't have a ref or modified bit, so it had to come up with some solution
- The idea was to split memory into two parts, mapped and unmapped:
  - (i) Directly accessible to program (marked as read-write), managed FIFO
  - (ii) Second-chance list (marked as invalid, but in memory), managed as pure LRU

LRU Approximation

- On page reference:
  - If mapped, access at full speed
  - Otherwise page fault:
    - If on second-chance list, mark read-write
      - Move first page on FIFO list onto end of second-chance list (and mark invalid)
    - If not on second-chance list, bring into memory
      - Move first page on FIFO list onto end of second-chance list
      - Replace first page on second-chance list
- Figure: mapped pages (FIFO) and second-chance list (LRU), showing a reference to a page on the second-chance list

LRU Approximation

- How many pages for the second-chance list?
  - If 0, FIFO
  - If all, LRU, but page fault on every page reference
- Pick an intermediate value. Result is:
  - (+) Few disk accesses (a page only goes to disk if it is unused for a long time)
  - (–) Increased overhead trapping to OS (software/hardware tradeoff)
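
The two-list scheme and the effect of the second-chance list size can be sketched as below. This is a simplified model, not VMS code: the class name and the "hit" / "soft fault" / "hard fault" labels are assumptions, and page protection is implied by list membership rather than modeled explicitly.

```python
from collections import OrderedDict, deque


class SecondChanceList:
    def __init__(self, mapped_size, second_size):
        self.mapped = deque()        # FIFO of directly accessible pages
        self.second = OrderedDict()  # in memory but marked invalid, oldest first
        self.mapped_size = mapped_size
        self.second_size = second_size

    def access(self, page):
        """Return 'hit', 'soft fault' (no disk I/O) or 'hard fault' (disk read)."""
        if page in self.mapped:
            return "hit"
        if page in self.second:
            del self.second[page]    # reclaimed from the second-chance list
            kind = "soft fault"
        else:
            kind = "hard fault"      # must be brought in from disk
        self.mapped.append(page)
        if len(self.mapped) > self.mapped_size:
            # Move first page on the FIFO list onto the end of the second-chance list.
            self.second[self.mapped.popleft()] = True
            if len(self.second) > self.second_size:
                self.second.popitem(last=False)  # replace first page on the list
        return kind


s = SecondChanceList(mapped_size=2, second_size=1)
print([s.access(p) for p in [1, 2, 3, 1, 4, 1]])
```

Page 1 is pushed off the FIFO list when page 3 arrives, but the next reference to it is only a soft fault because it is still on the second-chance list.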

LRU Approximation

- Does a software-loaded TLB need a ref bit?
- What if we have a software-loaded TLB (as in Nachos)? Two options:
  1. Hardware sets ref bit in TLB; when a TLB entry is replaced, software copies the ref bit back to the page table
  2. Software manages TLB entries as a FIFO list; everything not in the TLB is on the second-chance list, managed as strict LRU

LRU Approximation

- Core map
- Page tables map virtual page # -> physical page #
- Do we need the reverse, physical page # -> virtual page #?
  - Yes. The clock algorithm runs through page frames. What if it ran through page tables?
    - (i) Many more entries
    - (ii) What if there is sharing?

Enhanced Second-Chance Algorithm

- Improve algorithm by using reference bit and modify bit (if available) in concert
- Take ordered pair (reference, modify)
  1. (0, 0) neither recently used nor modified – best page to replace
  2. (0, 1) not recently used but modified – not quite as good, must write out before replacement
  3. (1, 0) recently used but clean – probably will be used again soon
  4. (1, 1) recently used and modified – probably will be used again soon and need to write out before replacement
- When page replacement is called for, use the clock scheme but with the four classes: replace a page in the lowest non-empty class
  - Might need to search the circular queue several times
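
Choosing a victim from the lowest non-empty class can be sketched in one pass over the frames. This is a deliberate simplification: the function name is hypothetical, and unlike a real clock sweep it does not clear reference bits as the hand moves.

```python
def enhanced_victim(frames, hand):
    """frames: list of (reference, modify) bit pairs.

    Returns the index of the first frame, in clock order starting at hand,
    that belongs to the lowest non-empty class.
    """
    n = len(frames)
    order = [(hand + k) % n for k in range(n)]
    # Class number: (0,0) -> 0, (0,1) -> 1, (1,0) -> 2, (1,1) -> 3.
    return min(order, key=lambda i: 2 * frames[i][0] + frames[i][1])


frames = [(1, 1), (0, 1), (1, 0), (0, 1)]
print(enhanced_victim(frames, hand=2))  # 3
```

No frame is in class (0, 0), so the search settles for class (0, 1) and picks the first such frame after the hand, which must be written out before it is reused.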

Counting Algorithms

- Keep a counter of the number of references that have been made to each page
  - Not common
- Least Frequently Used (LFU) Algorithm: replaces the page with the smallest count
- Most Frequently Used (MFU) Algorithm: replaces the page with the largest count, based on the argument that the page with the smallest count was probably just brought in and has yet to be used
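
The two counting policies differ only in which end of the count ordering they evict. A minimal sketch, assuming reference counts are kept in a `Counter` and using hypothetical function names:

```python
from collections import Counter


def lfu_victim(counts):
    """Least Frequently Used: evict the page with the smallest count."""
    return min(counts, key=counts.get)


def mfu_victim(counts):
    """Most Frequently Used: evict the page with the largest count."""
    return max(counts, key=counts.get)


counts = Counter([1, 2, 1, 3, 1, 2])  # page 1: 3 refs, page 2: 2, page 3: 1
print(lfu_victim(counts))  # 3
print(mfu_victim(counts))  # 1
```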

Page-Buffering Algorithms

- Keep a pool of free frames, always
  - Then a frame is available when needed, not searched for at fault time
  - Read page into free frame, then select a victim to evict and add to the free pool
  - When convenient, evict the victim
- Possibly, keep a list of modified pages
  - When the backing store is otherwise idle, write pages there and set them to non-dirty
- Possibly, keep free frame contents intact and note what is in them
  - If referenced again before being reused, no need to load contents again from disk
  - Generally useful to reduce the penalty if the wrong victim frame was selected

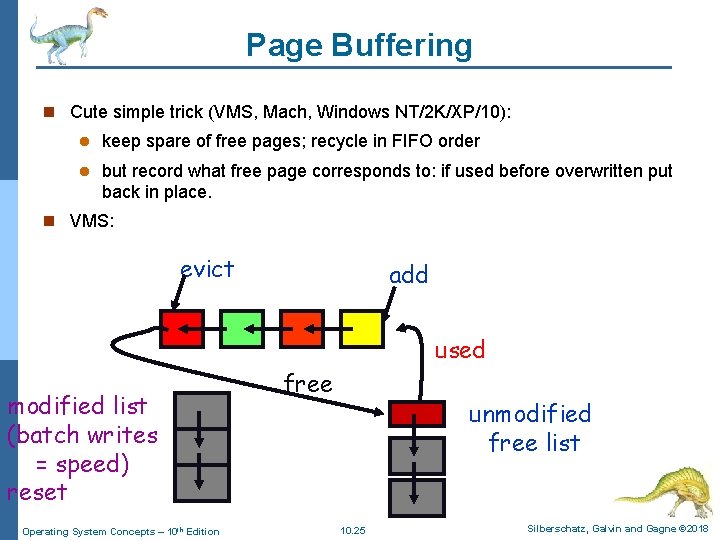

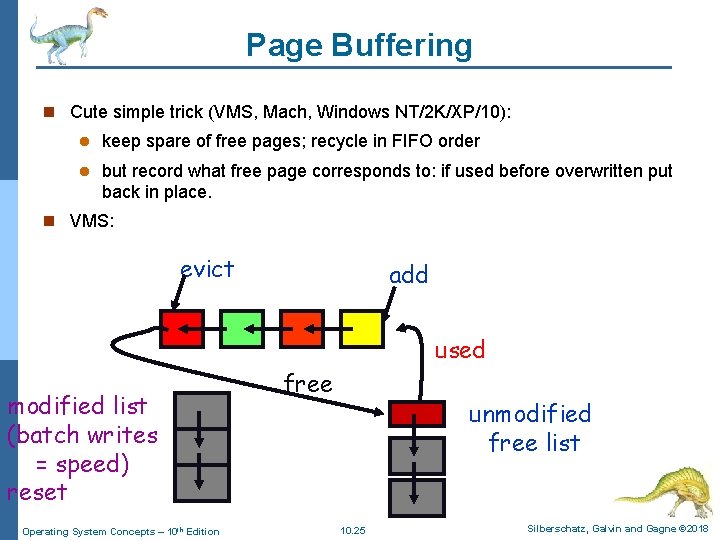

Page Buffering

- Cute simple trick (VMS, Mach, Windows NT/2K/XP/10):
  - Keep a spare pool of free pages; recycle in FIFO order
  - But record what each free page corresponds to: if it is used before being overwritten, put it back in place
- VMS (figure): evicted pages are added to an unmodified free list or to a modified list (batch writes = speed)

Applications and Page Replacement

- All of these algorithms have the OS guessing about future page access
- Some applications have better knowledge, e.g., databases
- Memory-intensive applications can cause double buffering
  - OS keeps a copy of the page in memory as an I/O buffer
  - Application keeps the page in memory for its own work
- Operating system can give direct access to the disk, getting out of the way of the applications
  - Raw disk mode
- Bypasses buffering, locking, etc.

Allocation of Frames

- Each process needs a minimum number of frames
  - Defined by the instruction-set architecture
- Example: IBM 370 – 6 pages to handle SS MOVE instruction:
  - instruction is 6 bytes, might span 2 pages
  - 2 pages to handle from
  - 2 pages to handle to
- Maximum of course is total frames in the system
- Two major allocation schemes
  - fixed allocation
  - priority allocation
- Many variations

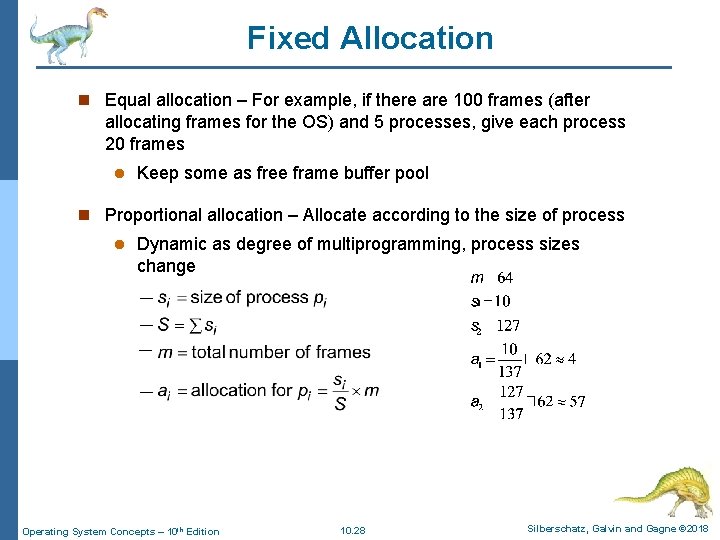

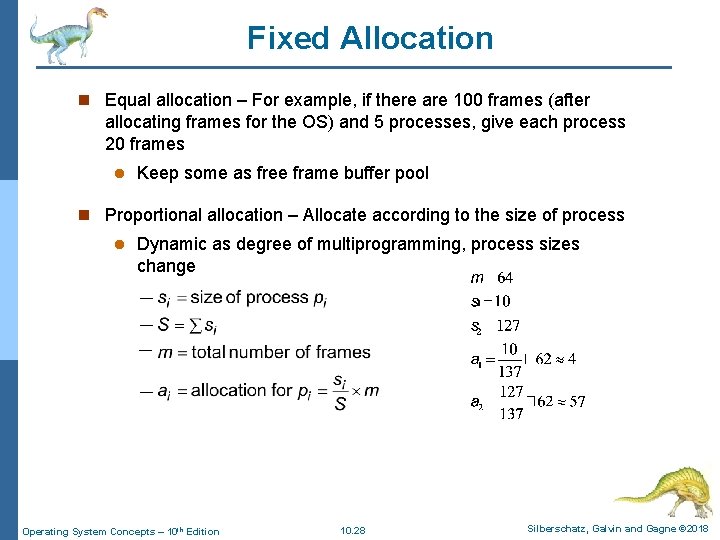

Fixed Allocation

- Equal allocation – for example, if there are 100 frames (after allocating frames for the OS) and 5 processes, give each process 20 frames
  - Keep some as free frame buffer pool
- Proportional allocation – allocate according to the size of the process
  - Dynamic, as degree of multiprogramming and process sizes change
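
The slide text gives no formula for proportional allocation. A common formulation, assumed here, gives process i with size s_i a share a_i = (s_i / S) × m, where S is the sum of all process sizes and m is the number of available frames. The function names and the 62-frame, two-process example are illustrative values, not taken from the slide text.

```python
def equal_allocation(total_frames, num_processes):
    """Every process gets the same number of frames."""
    return total_frames // num_processes


def proportional_allocation(total_frames, sizes):
    """Frames per process in proportion to process size, rounded down."""
    total_size = sum(sizes)
    return [size * total_frames // total_size for size in sizes]


print(equal_allocation(100, 5))                 # 20
print(proportional_allocation(62, [10, 127]))   # [4, 57]
```

Rounding down leaves one frame unassigned in the second example; leftovers like this can go to the free frame buffer pool.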

Priority Allocation

- Use a proportional allocation scheme using priorities rather than size
- If process Pi generates a page fault,
  - select for replacement one of its frames, or
  - select for replacement a frame from a process with lower priority number

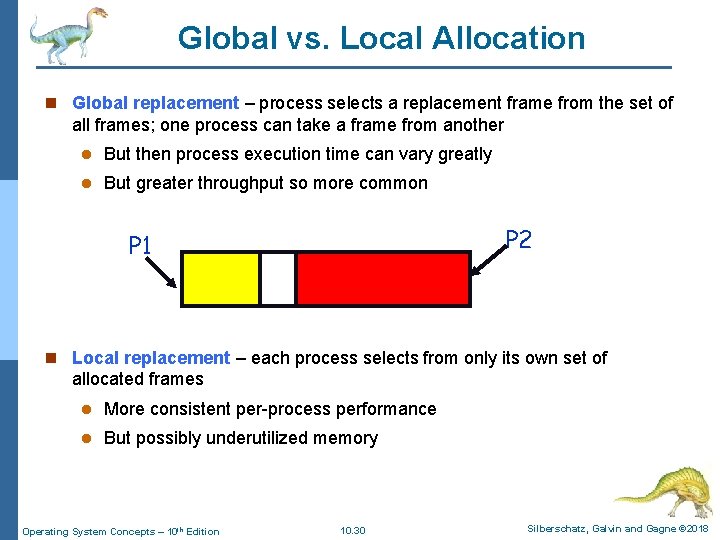

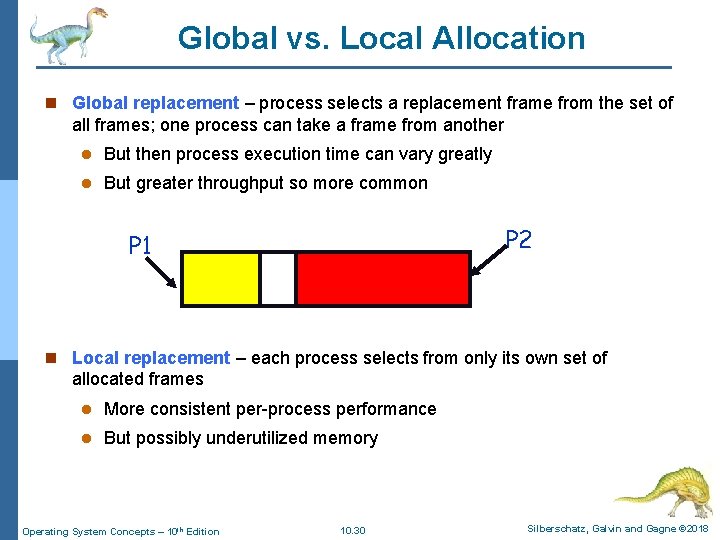

Global vs. Local Allocation

- Global replacement – process selects a replacement frame from the set of all frames; one process can take a frame from another
  - But then process execution time can vary greatly
  - But greater throughput, so more common
- Local replacement – each process selects from only its own set of allocated frames
  - More consistent per-process performance
  - But possibly underutilized memory

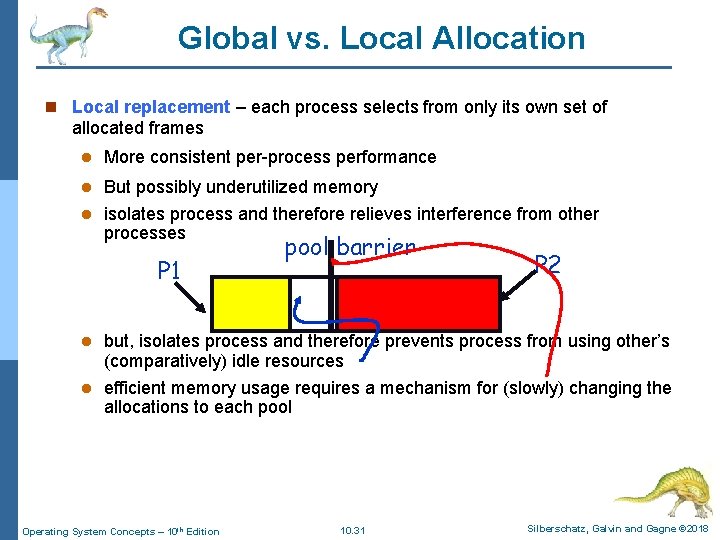

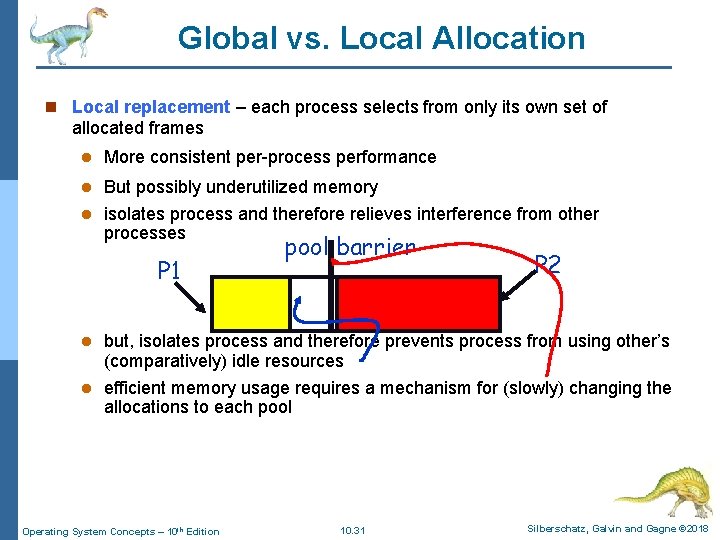

Global vs. Local Allocation

- Local replacement – each process selects from only its own set of allocated frames
  - More consistent per-process performance
  - But possibly underutilized memory
  - Isolates the process and therefore relieves interference from other processes
  - But isolates the process and therefore prevents it from using another's (comparatively) idle resources
  - Efficient memory usage requires a mechanism for (slowly) changing the allocations to each pool

Non-Uniform Memory Access

- So far all memory accessed equally
- Many systems are NUMA – speed of access to memory varies
  - Consider system boards containing CPUs and memory, interconnected over a system bus
- Optimal performance comes from allocating memory “close to” the CPU on which the thread is scheduled
  - And modifying the scheduler to schedule the thread on the same system board when possible
  - Solved by Solaris by creating lgroups
    - Structure to track CPU / memory low-latency groups
    - Used by scheduler and pager
    - When possible, schedule all threads of a process and allocate all memory for that process within the lgroup