CHAPTER 10. MULTICOLLINEARITY: WHAT HAPPENS IF THE REGRESSORS ARE CORRELATED?

Assumption 10 of the CLRM

No multicollinearity among the regressors in the regression model.

The questions in multicollinearity:

1. What is the nature of multicollinearity?
2. Is multicollinearity really a problem?
3. What are its practical consequences?
4. How does one detect it?
5. What remedial measures can be taken to alleviate the problem of multicollinearity?

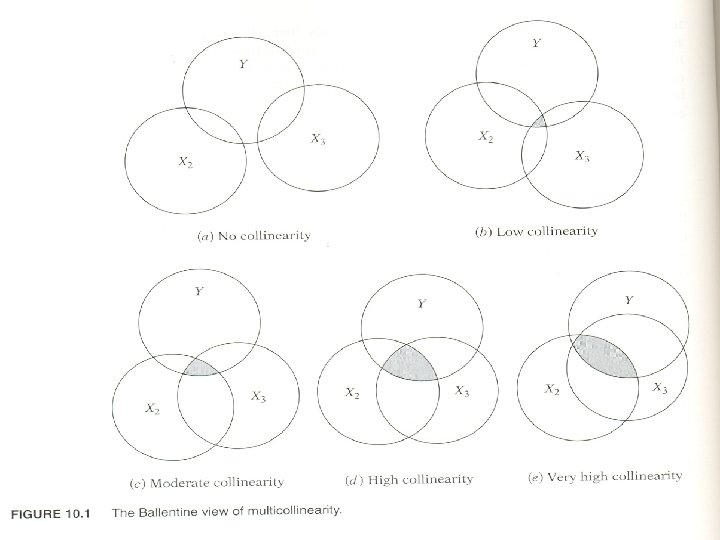

Why Multicollinearity?

- If multicollinearity is perfect, the regression coefficients of the X variables are indeterminate and their standard errors are infinite.
- If multicollinearity is less than perfect, the regression coefficients, although determinate, possess large standard errors, which means the coefficients cannot be estimated with great precision or accuracy.
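
Both cases can be illustrated with a small simulation. This is a minimal sketch on made-up data, not an example from the slides: the helper `ols_standard_errors`, the seed, the sample size, and the coefficients 1, 2, 3 are all assumptions chosen for illustration. With an exact linear relation between the regressors, the design matrix loses a rank, so X'X cannot be inverted. With a near-exact relation, the estimates exist but their standard errors are far larger than with unrelated regressors.

```python
import numpy as np

def ols_standard_errors(X, y):
    """OLS coefficient standard errors for a design matrix X that includes an intercept."""
    n, k = X.shape
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    sigma2 = resid @ resid / (n - k)           # unbiased estimate of the error variance
    cov = sigma2 * np.linalg.inv(X.T @ X)      # requires X'X to be nonsingular
    return np.sqrt(np.diag(cov))

rng = np.random.default_rng(0)                 # fixed seed; simulated data only
n = 50
x2 = rng.normal(size=n)
noise = rng.normal(size=n)

# Perfect collinearity: x3 is an exact multiple of x2, so X has only 2 independent columns.
X_perfect = np.column_stack([np.ones(n), x2, 2.0 * x2])
rank_perfect = np.linalg.matrix_rank(X_perfect)

# Near-perfect collinearity: x3 is almost, but not exactly, a multiple of x2.
x3_near = 2.0 * x2 + 0.01 * rng.normal(size=n)
X_near = np.column_stack([np.ones(n), x2, x3_near])

# Benchmark: x3 drawn independently of x2.
x3_indep = rng.normal(size=n)
X_indep = np.column_stack([np.ones(n), x2, x3_indep])

y_near = 1.0 + 2.0 * x2 + 3.0 * x3_near + noise
y_indep = 1.0 + 2.0 * x2 + 3.0 * x3_indep + noise

se_near = ols_standard_errors(X_near, y_near)
se_indep = ols_standard_errors(X_indep, y_indep)

print("rank of X under perfect collinearity:", rank_perfect)
print("s.e. of the x2 coefficient, near-collinear x3:", se_near[1])
print("s.e. of the x2 coefficient, independent x3:   ", se_indep[1])
```

The error variance is the same in both designs; only the correlation between the regressors differs, so the gap in standard errors is attributable to collinearity alone.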

Additional Sources of Multicollinearity

- Data collection method: sampling over a limited range of the values taken by the regressors in the population.
- Constraints on the model or in the population being sampled. For example, in the regression of electricity consumption on income (X2) and house size (X3), there is a physical constraint in the population in that families with higher incomes generally have larger homes.
- Model specification: employing an incorrect model.
- An overdetermined model: one in which the number of explanatory variables exceeds the number of observations.
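
The last source can be checked numerically. The sketch below uses simulated data with hypothetical sizes (5 observations, 8 regressors), both chosen only for illustration. When there are more explanatory variables than observations, the rank of the data matrix cannot exceed the number of observations, so some columns must be exact linear combinations of others, and X'X has eigenvalues that are zero up to rounding error.

```python
import numpy as np

rng = np.random.default_rng(2)       # fixed seed; simulated data only
n_obs, n_regressors = 5, 8           # hypothetical sizes: more regressors than observations

X = rng.normal(size=(n_obs, n_regressors))
rank = np.linalg.matrix_rank(X)      # cannot exceed n_obs

# X'X is 8 x 8 but has rank at most 5, so its smallest eigenvalues are numerically zero.
eigenvalues = np.linalg.eigvalsh(X.T @ X)
ratio_smallest_to_largest = abs(eigenvalues.min()) / eigenvalues.max()

print("rank of X:", rank, "with", n_regressors, "regressors")
print("smallest/largest eigenvalue of X'X:", ratio_smallest_to_largest)
```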

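Consequences of Multicollinearity

- Large variances and covariances of the OLS estimators, which make precise estimation difficult.
- Wider confidence intervals, leading to more ready acceptance of the “zero null hypothesis” (that the true population coefficient is zero).
- Statistically insignificant t ratios for one or more coefficients.
- A high R-squared despite the insignificant t ratios.
- OLS estimators and their standard errors that can be sensitive to small changes in the data.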
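
The first consequence can be made concrete for a model with two regressors, X2 and X3, using the standard OLS variance expressions. The notation is assumed here rather than taken from the slides: lowercase x denotes deviations from the sample mean, r_23 is the sample correlation between X2 and X3, and sigma^2 is the error variance.

```latex
\operatorname{var}(\hat\beta_2) = \frac{\sigma^2}{\sum x_{2i}^2 \,\bigl(1 - r_{23}^2\bigr)},
\qquad
\operatorname{var}(\hat\beta_3) = \frac{\sigma^2}{\sum x_{3i}^2 \,\bigl(1 - r_{23}^2\bigr)},
\qquad
\operatorname{cov}(\hat\beta_2, \hat\beta_3) =
  \frac{-\,r_{23}\,\sigma^2}{\bigl(1 - r_{23}^2\bigr)\sqrt{\sum x_{2i}^2}\,\sqrt{\sum x_{3i}^2}}
```

As r_23 approaches 1 in absolute value, the factor 1/(1 - r_23^2) grows without bound, and so do the variances. For example, r_23 = 0.95 gives 1/(1 - 0.9025), so each variance is roughly 10 times what it would be with uncorrelated regressors. Larger variances mean wider confidence intervals and smaller t ratios, which is how the remaining consequences follow.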

How to Detect Multicollinearity

- High R-squared but few significant t ratios.
- High pairwise correlations among regressors.
- Auxiliary regressions: regress each X on the remaining X variables and examine the resulting R-squared and F values.
- Eigenvalues and condition index.
- Tolerance and variance inflation factor (VIF).
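
Several of these diagnostics can be computed in a few lines. The following is a minimal sketch on simulated data; the function names, the seed, and the data-generating choices are assumptions made for illustration. The VIF of regressor j is 1/(1 - R_j^2), where R_j^2 comes from the auxiliary regression of X_j on the other regressors, and tolerance is its reciprocal. The condition index is taken here as the square root of the ratio of the largest to the smallest eigenvalue of the regressors' correlation matrix, which is one of several scaling conventions in use.

```python
import numpy as np

def vif_by_auxiliary_regression(X):
    """VIF_j = 1 / (1 - R_j^2), with R_j^2 from regressing column j on the other columns."""
    n, k = X.shape
    vifs = np.empty(k)
    for j in range(k):
        target = X[:, j]
        others = np.column_stack([np.ones(n), np.delete(X, j, axis=1)])
        coef, *_ = np.linalg.lstsq(others, target, rcond=None)
        resid = target - others @ coef
        r2 = 1.0 - (resid @ resid) / np.sum((target - target.mean()) ** 2)
        vifs[j] = 1.0 / (1.0 - r2)
    return vifs

def condition_index(X):
    """sqrt(max eigenvalue / min eigenvalue) of the regressors' correlation matrix."""
    eigenvalues = np.linalg.eigvalsh(np.corrcoef(X, rowvar=False))
    return np.sqrt(eigenvalues.max() / eigenvalues.min())

rng = np.random.default_rng(1)                 # fixed seed; simulated data only
n = 200
x2 = rng.normal(size=n)
x3 = x2 + 0.1 * rng.normal(size=n)             # strongly correlated with x2
x4 = rng.normal(size=n)                        # generated independently of x2 and x3
X = np.column_stack([x2, x3, x4])

pairwise_corr = np.corrcoef(X, rowvar=False)   # pairwise correlations among regressors
vif = vif_by_auxiliary_regression(X)
tolerance = 1.0 / vif
ci = condition_index(X)

print("pairwise correlations:\n", np.round(pairwise_corr, 3))
print("VIF:", np.round(vif, 2))
print("tolerance:", np.round(tolerance, 4))
print("condition index:", round(float(ci), 2))
```

A commonly cited rule of thumb treats a VIF above 10 as a sign of serious collinearity. Thresholds of this kind are conventions, not formal tests.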

Remedial Measures

- A priori information.
- Combining cross-sectional and time-series data (pooling), which yields panel data.
- Dropping one or more variables, while taking care not to introduce specification bias or error.
- Transformation of variables, such as differencing, lagging, and ratio transformation.
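
The differencing remedy can be illustrated with two simulated series that share a time trend. This is a sketch under assumed data-generating choices (linear trends with slopes 1.0 and 0.5, unit-variance noise, 100 periods), not an example from the slides. In levels the common trend makes the two regressors highly correlated. After first differencing, the trend reduces to a constant and the correlation largely disappears.

```python
import numpy as np

rng = np.random.default_rng(3)                  # fixed seed; simulated data only
t = np.arange(100, dtype=float)

# Two regressors that move together only because both trend upward over time.
x2 = 1.0 * t + rng.normal(size=t.size)
x3 = 0.5 * t + rng.normal(size=t.size)

r_levels = np.corrcoef(x2, x3)[0, 1]

# First differences: x_t - x_{t-1}. The linear trend becomes a constant.
dx2 = np.diff(x2)
dx3 = np.diff(x3)
r_diff = np.corrcoef(dx2, dx3)[0, 1]

print("correlation in levels:           ", round(float(r_levels), 3))
print("correlation in first differences:", round(float(r_diff), 3))
```

Differencing is not free: it costs one observation and can introduce serial correlation into the transformed error term, so the remedy should be weighed against those effects.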