Chapter 1 Introduction to Digital and Analog Communication

- Slides: 21

Chapter 1 Introduction to Digital and Analog Communication Systems (Sections 1 – 9)

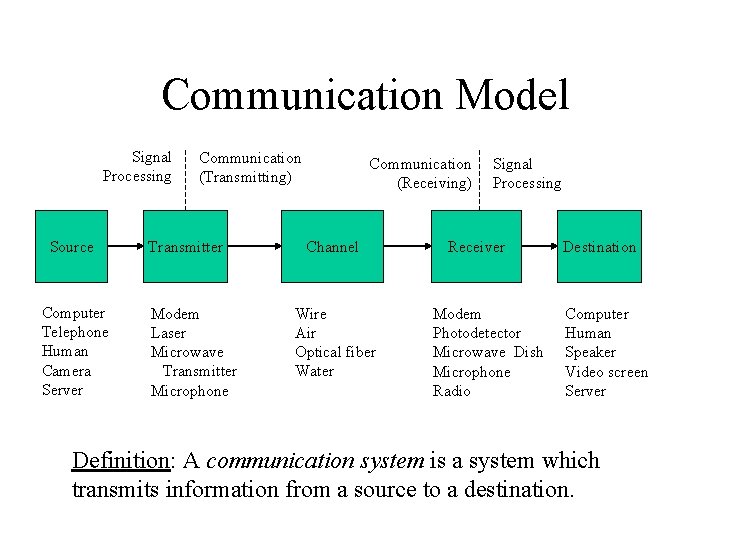

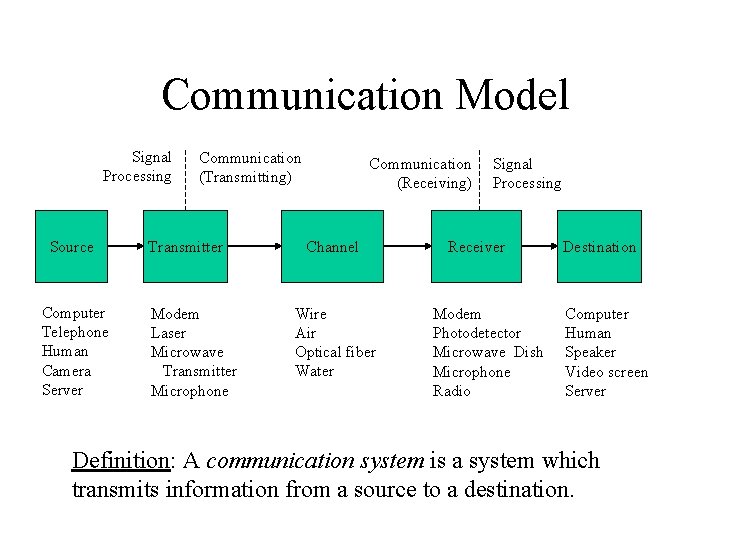

Communication Model

- Source: computer, telephone, human, camera, server
- Signal processing (transmitting side)
- Transmitter: modem, laser, microwave transmitter, microphone
- Channel: wire, air, optical fiber, water
- Receiver: modem, photodetector, microwave dish, microphone, radio
- Signal processing (receiving side)
- Destination: computer, human, speaker, video screen, server

Definition: A communication system is a system which transmits information from a source to a destination.

Goals of ESE 471

- Understand how communication systems work.
- Develop mathematical models for methods and components of communication systems.
- Analyze performance of communication systems and methods.
- Understand practical systems in use.

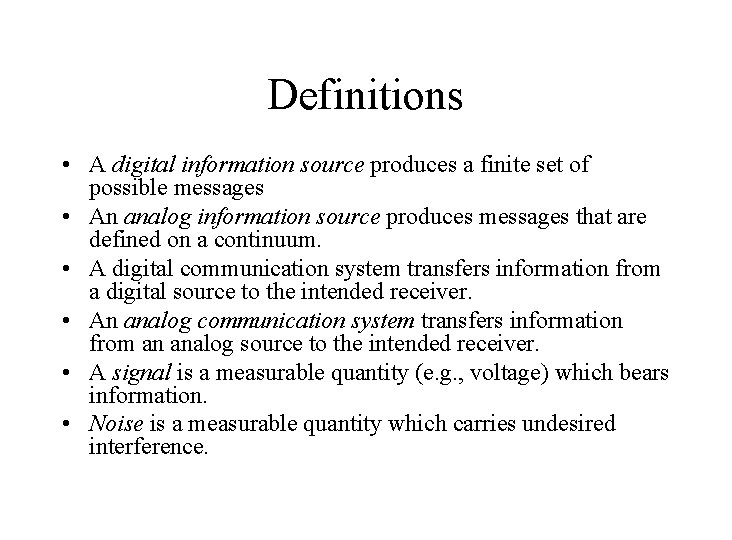

Definitions

- A digital information source produces a finite set of possible messages.
- An analog information source produces messages that are defined on a continuum.
- A digital communication system transfers information from a digital source to the intended receiver.
- An analog communication system transfers information from an analog source to the intended receiver.
- A signal is a measurable quantity (e.g., voltage) which bears information.
- Noise is a measurable quantity which carries undesired interference.

Why Digital?

- Less expensive circuits
- Privacy and security
- Small signals (less power)
- Converged multimedia
- Error correction and reduction

Why Not Digital?

- More bandwidth
- Synchronization in electrical circuits
- Approximated information

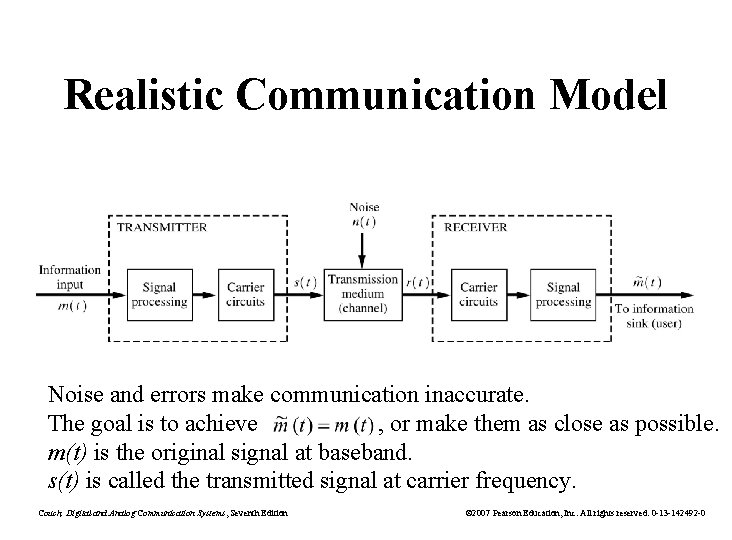

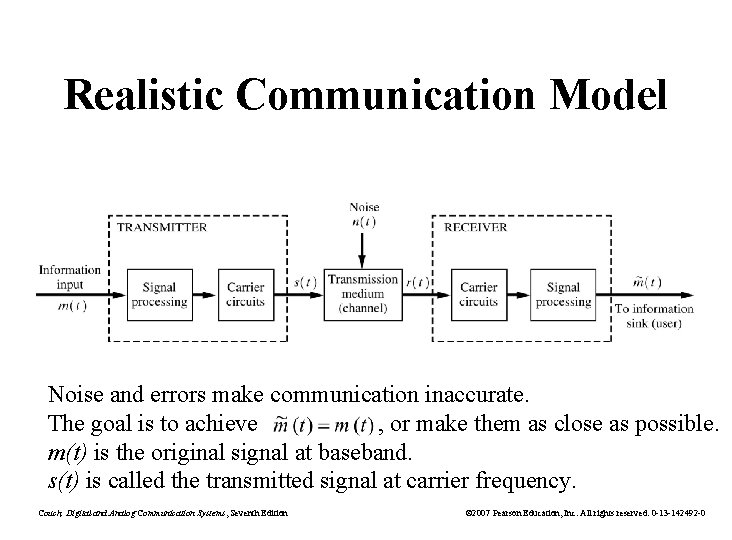

Realistic Communication Model

Noise and errors make communication inaccurate. The goal is for the recovered message at the receiver output, $\tilde{m}(t)$, to equal $m(t)$, or to make them as close as possible.

- $m(t)$ is the original signal at baseband.
- $s(t)$ is the transmitted signal at the carrier frequency.

Couch, Digital and Analog Communication Systems, Seventh Edition © 2007 Pearson Education, Inc. All rights reserved. 0-13-142492-0

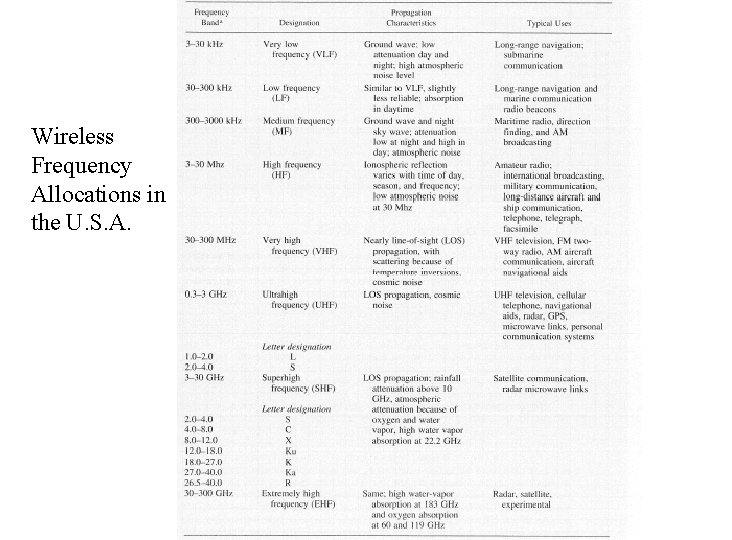

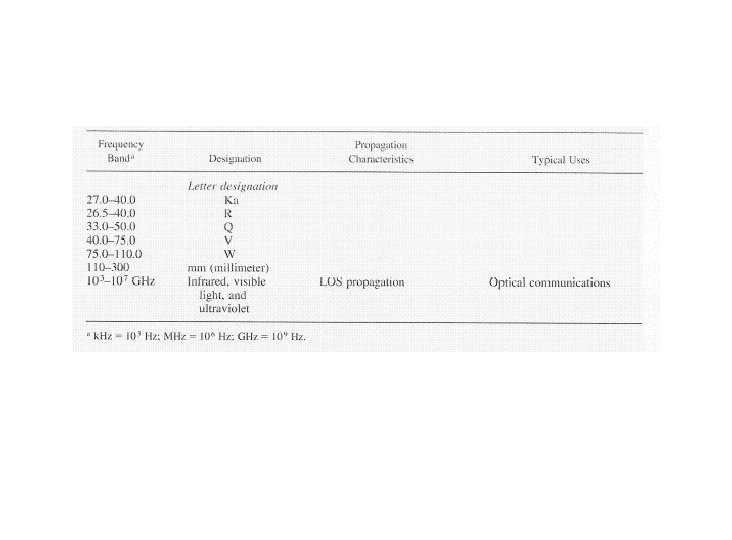

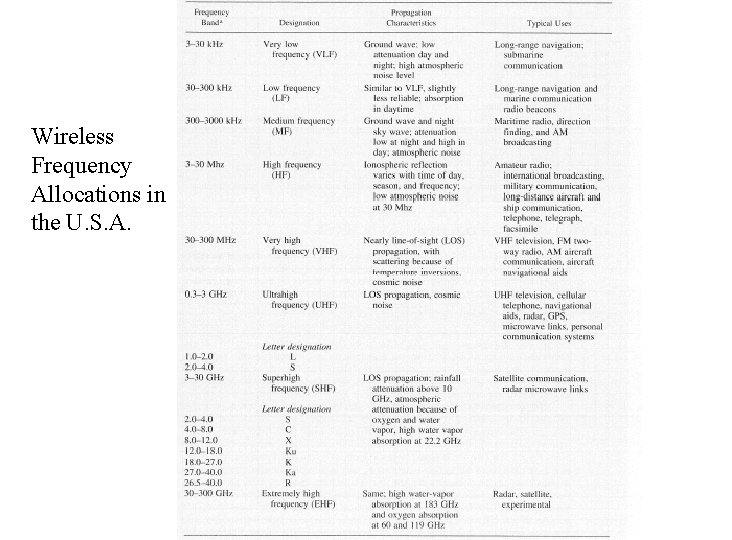

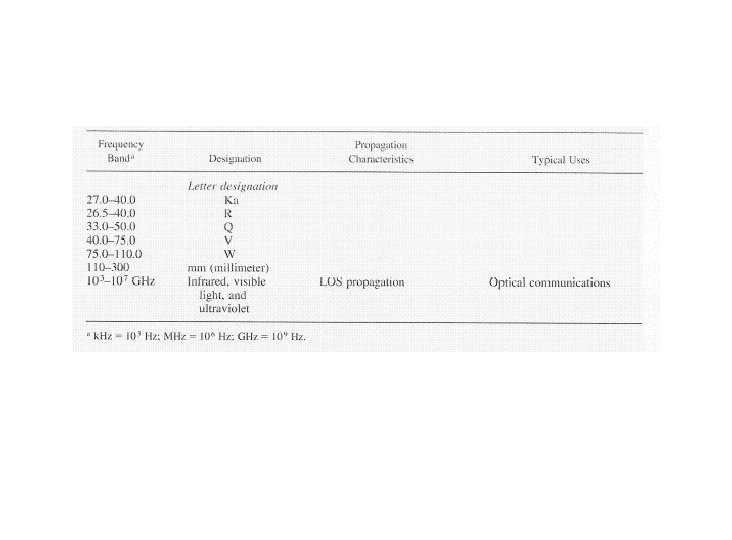

Wireless Frequency Allocations in the U.S.A.

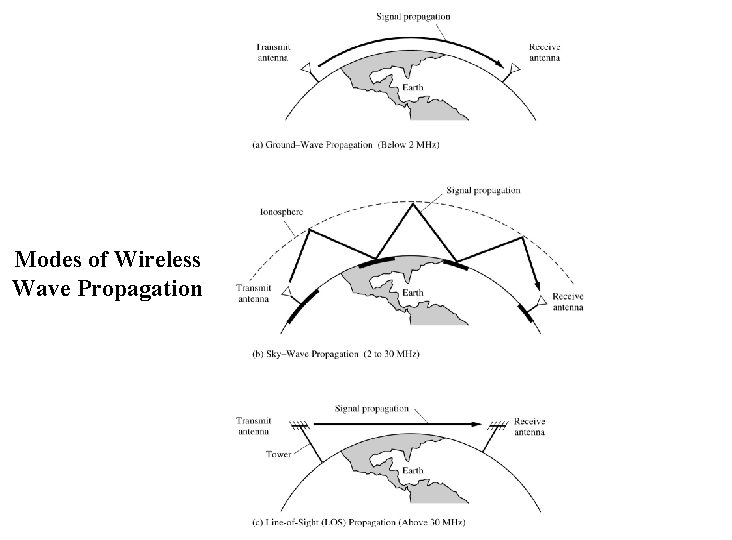

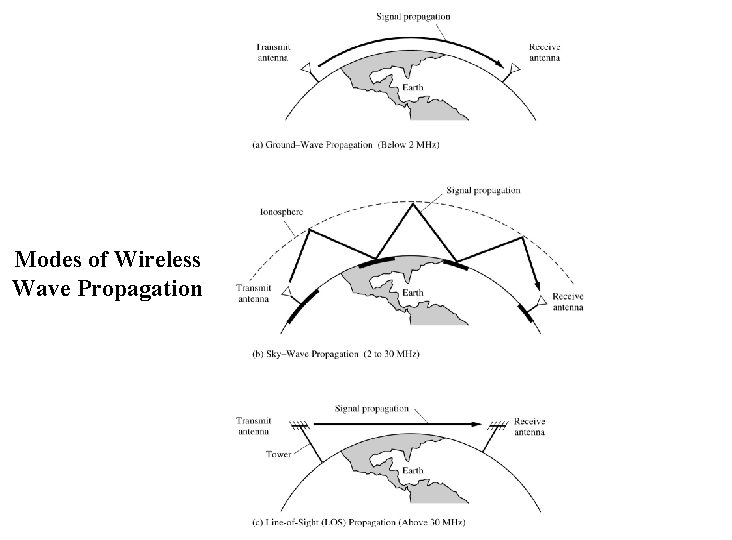

Modes of Wireless Wave Propagation

Information

- Electronic communication systems are inherently analog.
- The process of communication is inherently discrete.
- Limitations:
  - Noise or uncertainty
  - Changes in system characteristics

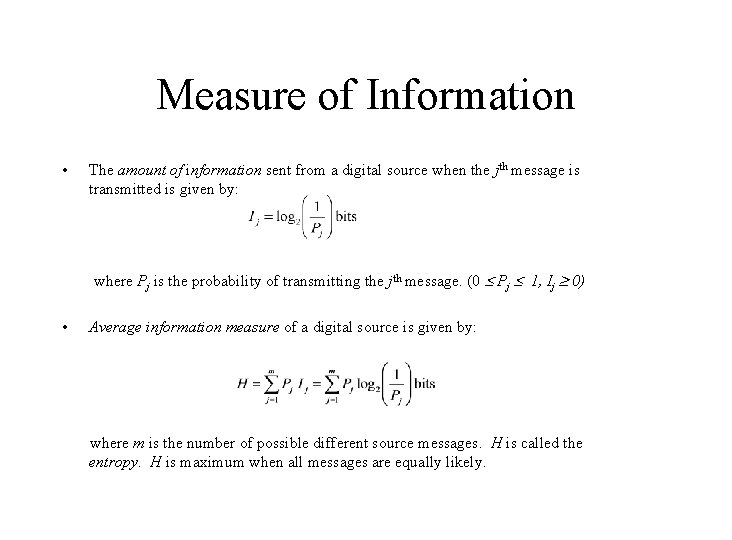

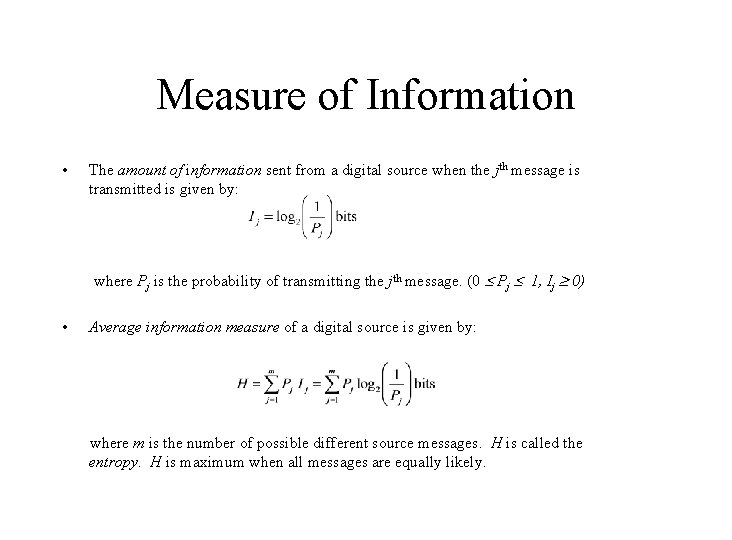

Measure of Information

- The amount of information sent from a digital source when the $j$th message is transmitted is given by

  $$I_j = \log_2\!\left(\frac{1}{P_j}\right) \text{ bits},$$

  where $P_j$ is the probability of transmitting the $j$th message ($0 \le P_j \le 1$, $I_j \ge 0$).
- The average information measure of a digital source is given by

  $$H = \sum_{j=1}^{m} P_j I_j = \sum_{j=1}^{m} P_j \log_2\!\left(\frac{1}{P_j}\right) \text{ bits},$$

  where $m$ is the number of possible different source messages. $H$ is called the entropy. $H$ is maximum when all messages are equally likely.
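The two measures on this slide can be tried numerically. The following is a minimal Python sketch, assuming the standard base-2 definitions $I_j = \log_2(1/P_j)$ and $H = \sum_j P_j I_j$; the function names and the two example distributions are hypothetical and chosen only for illustration.

```python
import math


def information_bits(p):
    """Information (in bits) carried by a message that occurs with probability p."""
    if not 0 < p <= 1:
        raise ValueError("probability must be in (0, 1]")
    return math.log2(1 / p)


def entropy_bits(probs):
    """Average information H (in bits) of a source with the given message probabilities."""
    if abs(sum(probs) - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    # Messages with zero probability never occur and contribute nothing.
    return sum(p * information_bits(p) for p in probs if p > 0)


# Hypothetical sources over the same four-message alphabet.
uniform = [0.25, 0.25, 0.25, 0.25]
skewed = [0.7, 0.1, 0.1, 0.1]

h_uniform = entropy_bits(uniform)
h_skewed = entropy_bits(skewed)
print(f"uniform source: H = {h_uniform:.3f} bits")
print(f"skewed source:  H = {h_skewed:.3f} bits")
```

The uniform source reaches the maximum of $\log_2 4 = 2$ bits, and the skewed source comes out lower, consistent with the statement that $H$ is largest when all messages are equally likely.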

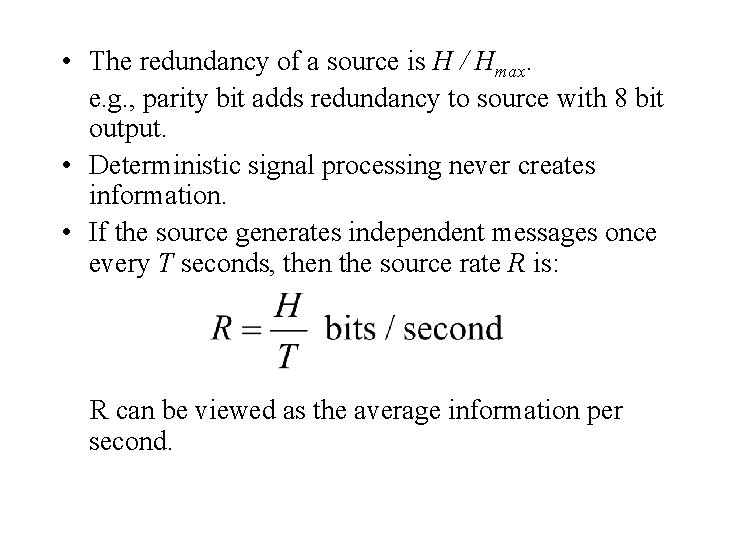

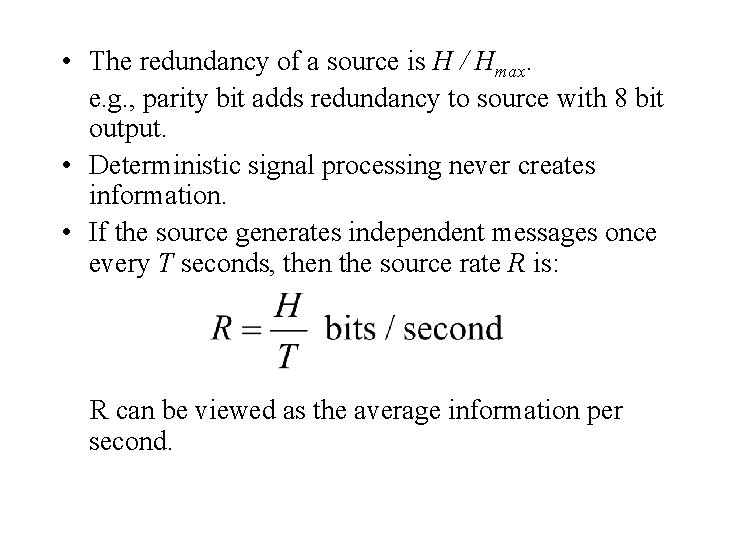

- The redundancy of a source is $1 - H/H_{\max}$, where $H/H_{\max}$ is the relative entropy of the source; e.g., a parity bit adds redundancy to a source with 8-bit output.
- Deterministic signal processing never creates information.
- If the source generates independent messages once every $T$ seconds, then the source rate $R$ is

  $$R = \frac{H}{T} \text{ bits/s}.$$

  $R$ can be viewed as the average information per second.
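As a small numerical illustration of the source rate, the sketch below assumes the usual relation $R = H/T$ for a source that emits one independent message every $T$ seconds. The function name and the numbers (2 bits per message, one message per millisecond) are hypothetical.

```python
def source_rate_bps(entropy_bits, period_s):
    """Average information rate R = H / T in bits per second."""
    if period_s <= 0:
        raise ValueError("message period must be positive")
    return entropy_bits / period_s


# Hypothetical source: H = 2 bits per message, one message every 1 ms.
rate = source_rate_bps(entropy_bits=2.0, period_s=1e-3)
print(f"R = {rate:.0f} bits/s")
```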

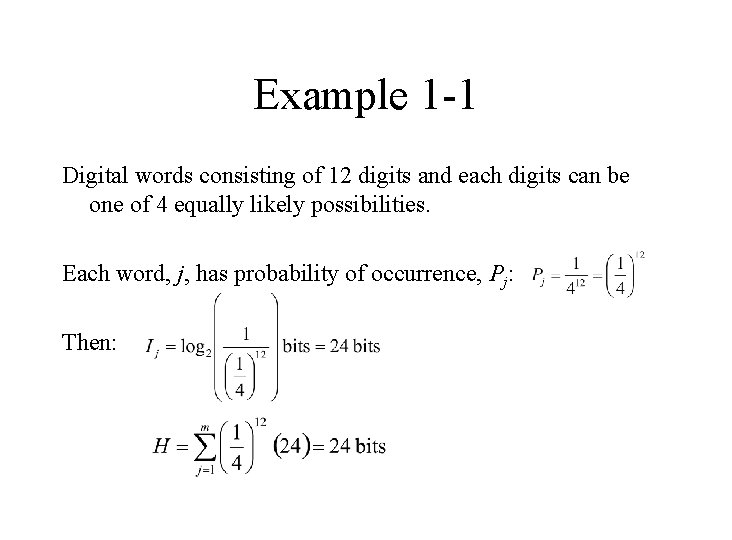

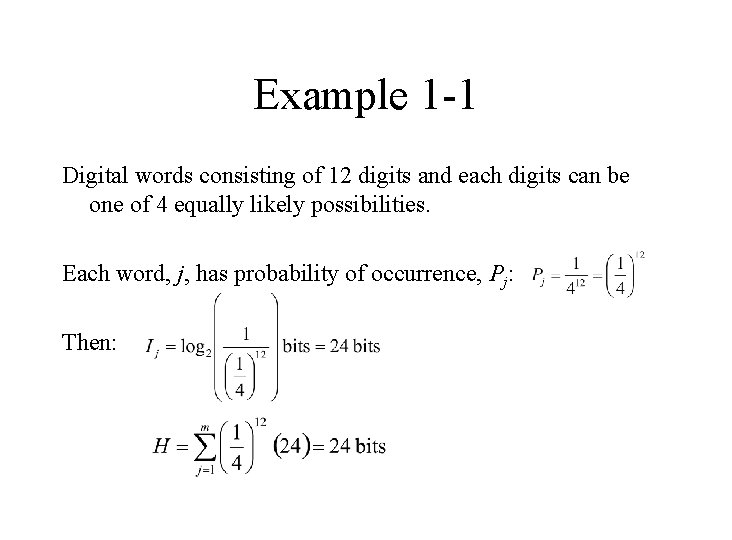

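Example 1-1

Digital words consist of 12 digits, and each digit can be one of 4 equally likely possibilities. Assuming the digits are independent, each word, $j$, has probability of occurrence $P_j$:

$$P_j = \left(\frac{1}{4}\right)^{12}$$

Then:

$$I_j = \log_2\!\left(\frac{1}{P_j}\right) = \log_2\!\left(4^{12}\right) = 24 \text{ bits}$$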
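The arithmetic in this example can be checked with a few lines of Python. This is a sketch under the assumption that the 12 digits are independent, so that every one of the $4^{12}$ words is equally likely; the constant and variable names are illustrative only.

```python
import math

DIGITS_PER_WORD = 12
LEVELS_PER_DIGIT = 4

# With independent, equally likely digits, every word is equally likely.
num_words = LEVELS_PER_DIGIT ** DIGITS_PER_WORD
p_word = 1 / num_words

# Information per word: log2(1 / P_j) = log2(number of words).
info_bits = math.log2(num_words)

print(f"number of possible words: {num_words}")
print(f"P_j = {p_word:.3e}")
print(f"I_j = {info_bits:.0f} bits")
```

Each digit contributes $\log_2 4 = 2$ bits, so 12 digits give 24 bits per word.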

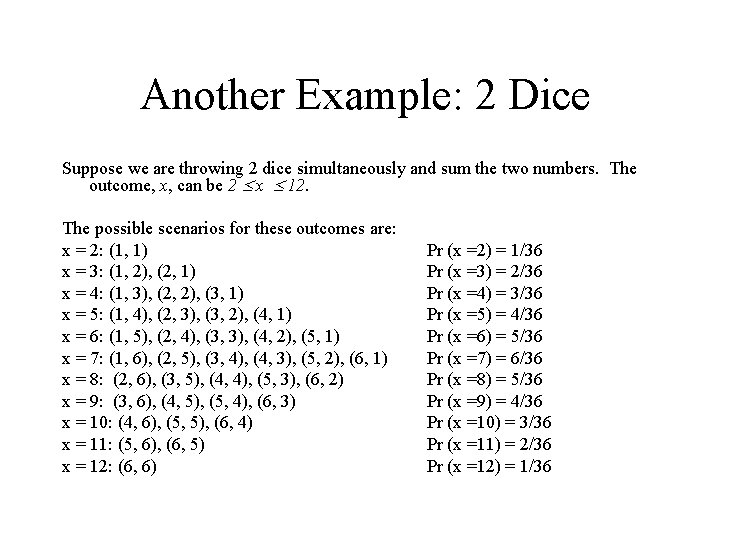

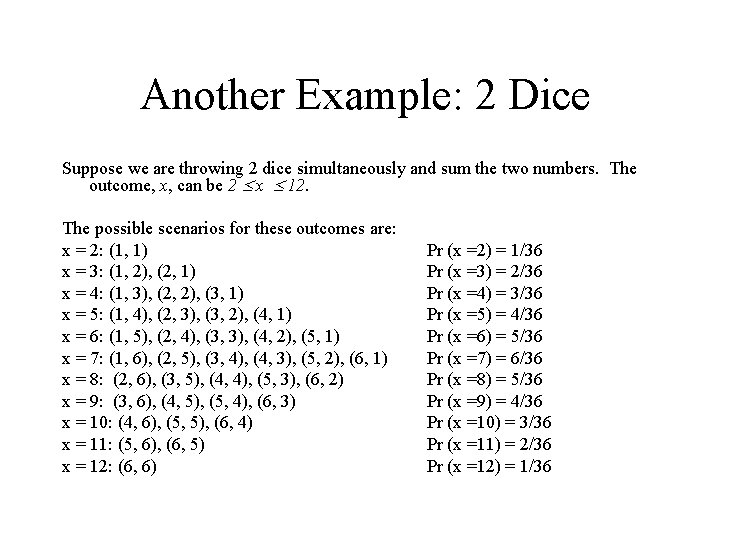

Another Example: 2 Dice

Suppose we throw 2 dice simultaneously and sum the two numbers. The outcome, $x$, satisfies $2 \le x \le 12$. The possible scenarios for these outcomes, and the resulting probabilities, are:

| $x$ | Scenarios | $\Pr(x)$ |
|---|---|---|
| 2 | (1, 1) | 1/36 |
| 3 | (1, 2), (2, 1) | 2/36 |
| 4 | (1, 3), (2, 2), (3, 1) | 3/36 |
| 5 | (1, 4), (2, 3), (3, 2), (4, 1) | 4/36 |
| 6 | (1, 5), (2, 4), (3, 3), (4, 2), (5, 1) | 5/36 |
| 7 | (1, 6), (2, 5), (3, 4), (4, 3), (5, 2), (6, 1) | 6/36 |
| 8 | (2, 6), (3, 5), (4, 4), (5, 3), (6, 2) | 5/36 |
| 9 | (3, 6), (4, 5), (5, 4), (6, 3) | 4/36 |
| 10 | (4, 6), (5, 5), (6, 4) | 3/36 |
| 11 | (5, 6), (6, 5) | 2/36 |
| 12 | (6, 6) | 1/36 |
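The probabilities above can be reproduced by brute-force enumeration of all 36 equally likely ordered rolls. The following Python sketch assumes two fair six-sided dice; the variable names are illustrative.

```python
from collections import Counter
from fractions import Fraction
from itertools import product

# Enumerate every ordered pair (first die, second die) and count each sum.
counts = Counter(a + b for a, b in product(range(1, 7), repeat=2))

# Exact probabilities; Fraction reduces them, so 2/36 is stored as 1/18.
probs = {x: Fraction(n, 36) for x, n in sorted(counts.items())}

for x in probs:
    print(f"Pr(x = {x:2d}) = {counts[x]}/36")
```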

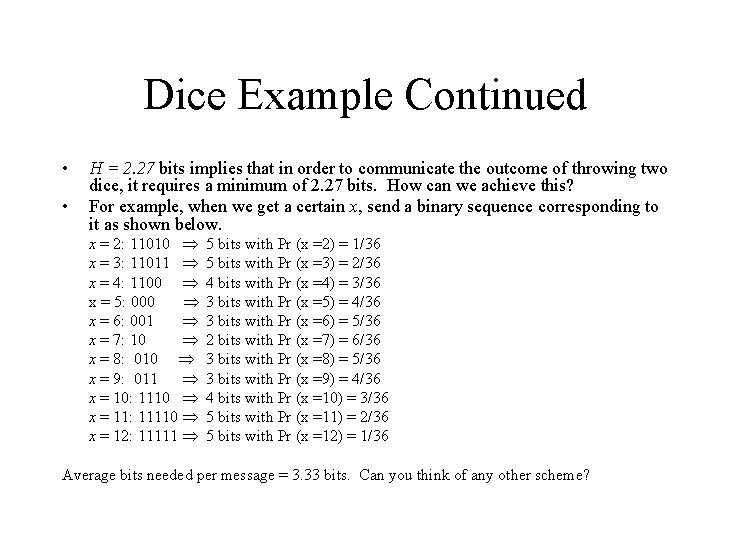

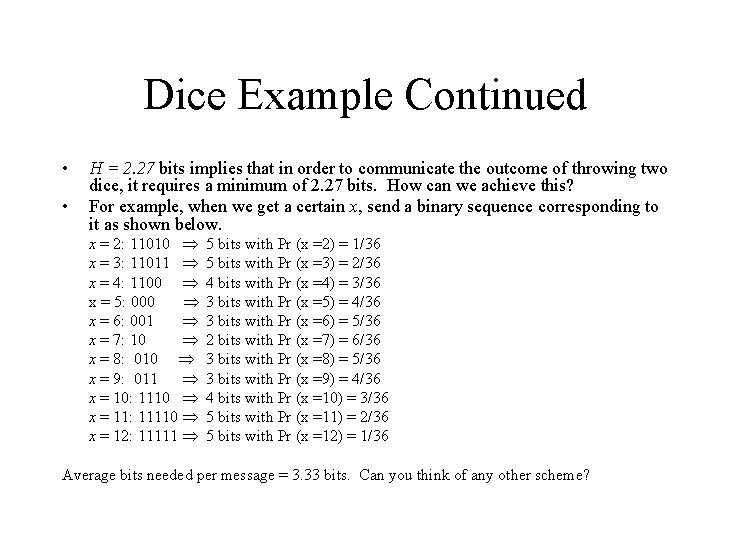

Dice Example Continued

- $H = 3.27$ bits implies that communicating the outcome of throwing two dice requires a minimum of 3.27 bits per throw on average.
- How can we achieve this? For example, when we get a certain $x$, send a binary sequence corresponding to it as shown below.

| $x$ | Binary sequence | Bits | $\Pr(x)$ |
|---|---|---|---|
| 2 | 11010 | 5 | 1/36 |
| 3 | 11011 | 5 | 2/36 |
| 4 | 1100 | 4 | 3/36 |
| 5 | 000 | 3 | 4/36 |
| 6 | 001 | 3 | 5/36 |
| 7 | 10 | 2 | 6/36 |
| 8 | 010 | 3 | 5/36 |
| 9 | 011 | 3 | 4/36 |
| 10 | 1110 | 4 | 3/36 |
| 11 | 11110 | 5 | 2/36 |
| 12 | 11111 | 5 | 1/36 |

Average bits needed per message = 3.33 bits. Can you think of any other scheme?
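One possible answer to the closing question is a Huffman code. The sketch below is an illustration, not part of the original slides: it computes the entropy of the two-dice sum, checks the average length of the code words listed above, and compares it with the code-word lengths produced by the standard Huffman merge procedure. All function and variable names are hypothetical.

```python
import heapq
import math
from fractions import Fraction

# Number of ways (out of 36) to roll each sum x with two fair dice.
WAYS = {2: 1, 3: 2, 4: 3, 5: 4, 6: 5, 7: 6, 8: 5, 9: 4, 10: 3, 11: 2, 12: 1}
PROBS = {x: Fraction(n, 36) for x, n in WAYS.items()}

# Code words as listed in the example.
SLIDE_CODE = {
    2: "11010", 3: "11011", 4: "1100", 5: "000", 6: "001", 7: "10",
    8: "010", 9: "011", 10: "1110", 11: "11110", 12: "11111",
}


def entropy_bits(probs):
    """Entropy H = sum of P * log2(1 / P), in bits."""
    return sum(float(p) * math.log2(1 / float(p)) for p in probs.values())


def average_length(lengths, probs):
    """Expected number of bits per message for the given code-word lengths."""
    return sum(probs[x] * lengths[x] for x in probs)


def is_prefix_free(code):
    """True if no code word is a prefix of a different code word."""
    words = list(code.values())
    return not any(a != b and b.startswith(a) for a in words for b in words)


def huffman_lengths(probs):
    """Code-word length per symbol from the Huffman merge procedure."""
    # Heap entries: (probability, unique tie-breaker, symbols in this subtree).
    heap = [(p, i, [x]) for i, (x, p) in enumerate(probs.items())]
    heapq.heapify(heap)
    lengths = {x: 0 for x in probs}
    tie = len(heap)
    while len(heap) > 1:
        p1, _, s1 = heapq.heappop(heap)
        p2, _, s2 = heapq.heappop(heap)
        for x in s1 + s2:
            lengths[x] += 1  # each merge adds one bit to every symbol below it
        heapq.heappush(heap, (p1 + p2, tie, s1 + s2))
        tie += 1
    return lengths


h = entropy_bits(PROBS)
slide_avg = average_length({x: len(w) for x, w in SLIDE_CODE.items()}, PROBS)
huffman_avg = average_length(huffman_lengths(PROBS), PROBS)

print(f"entropy H            = {h:.4f} bits")
print(f"listed code average  = {float(slide_avg):.4f} bits")
print(f"Huffman code average = {float(huffman_avg):.4f} bits")
print(f"listed code is prefix-free: {is_prefix_free(SLIDE_CODE)}")
```

The listed code is prefix-free, so a receiver can decode a stream of outcomes without separators. Its average length is 120/36 ≈ 3.33 bits, a Huffman code reaches 119/36 ≈ 3.31 bits, and both stay above the entropy of about 3.27 bits, as expected for any symbol-by-symbol code.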

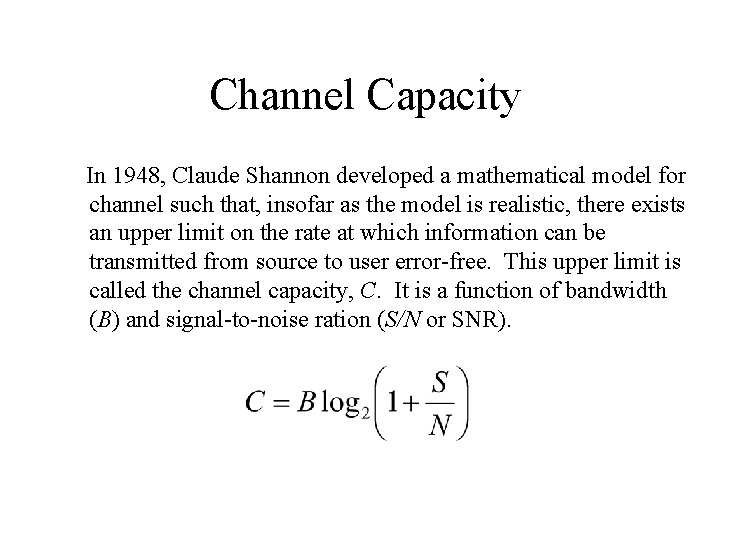

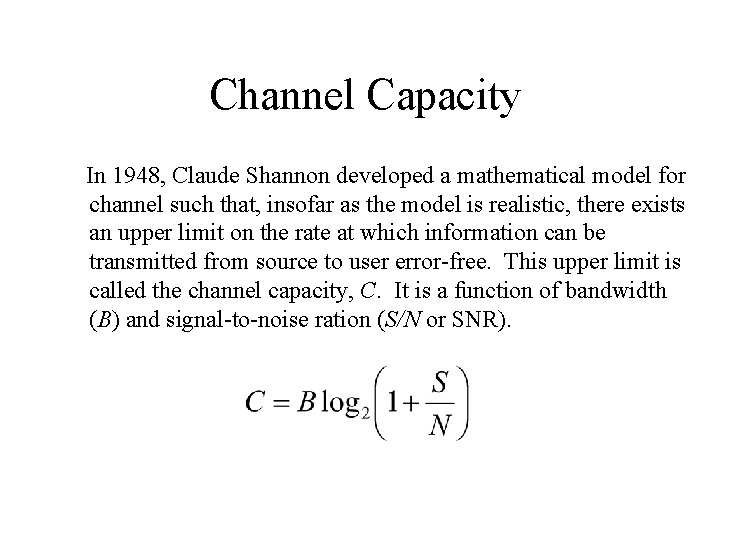

Channel Capacity

In 1948, Claude Shannon developed a mathematical model for the channel such that, insofar as the model is realistic, there exists an upper limit on the rate at which information can be transmitted error-free from source to user. This upper limit is called the channel capacity, C. It is a function of bandwidth (B) and signal-to-noise ratio (S/N or SNR).
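The capacity expression itself is not written out in the text above. Assuming it is the usual Shannon-Hartley form for a band-limited channel with additive white Gaussian noise, $C = B \log_2(1 + S/N)$, a minimal Python sketch looks like this. The function names and the example channel (3 kHz bandwidth, 30 dB SNR) are hypothetical.

```python
import math


def db_to_linear(snr_db):
    """Convert a signal-to-noise ratio from decibels to a linear power ratio."""
    return 10 ** (snr_db / 10)


def channel_capacity_bps(bandwidth_hz, snr_linear):
    """Shannon-Hartley capacity C = B * log2(1 + S/N), in bits per second."""
    if bandwidth_hz <= 0:
        raise ValueError("bandwidth must be positive")
    if snr_linear < 0:
        raise ValueError("S/N must be non-negative")
    return bandwidth_hz * math.log2(1 + snr_linear)


# Hypothetical channel: B = 3 kHz, S/N = 30 dB (a power ratio of 1000).
capacity = channel_capacity_bps(3000.0, db_to_linear(30.0))
print(f"C = {capacity / 1000:.1f} kbit/s")
```

Under these assumptions the capacity comes out near 29.9 kbit/s. Capacity grows linearly with bandwidth but only logarithmically with S/N, which is why added bandwidth helps more than added power at high SNR.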

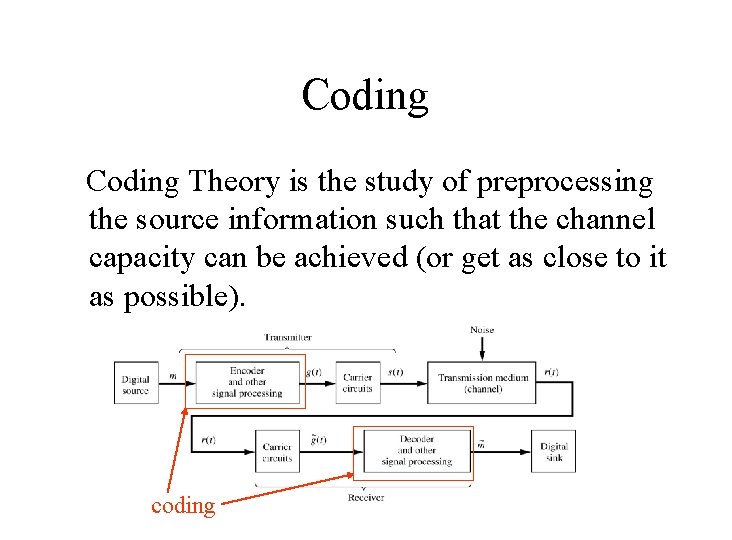

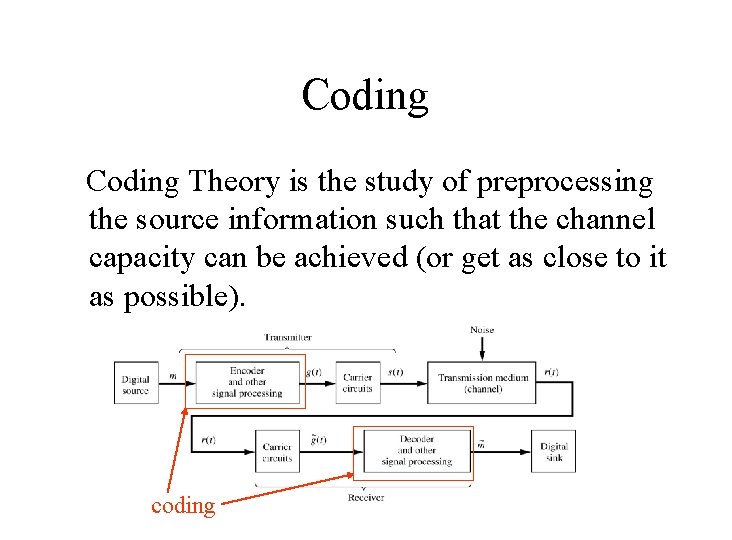

Coding Theory is the study of preprocessing the source information such that the channel capacity can be achieved (or approached as closely as possible).