Chapter 1 Fundamentals of Computer Design EENG633 1

- Slides: 42

A bit of history

- 1945: no stored-program computers
- Today, less than $1,000 will buy a personal computer more powerful than a computer bought in 1980 for $1 million.
- What has contributed to this rapid increase?
  - Technology
  - Computer design

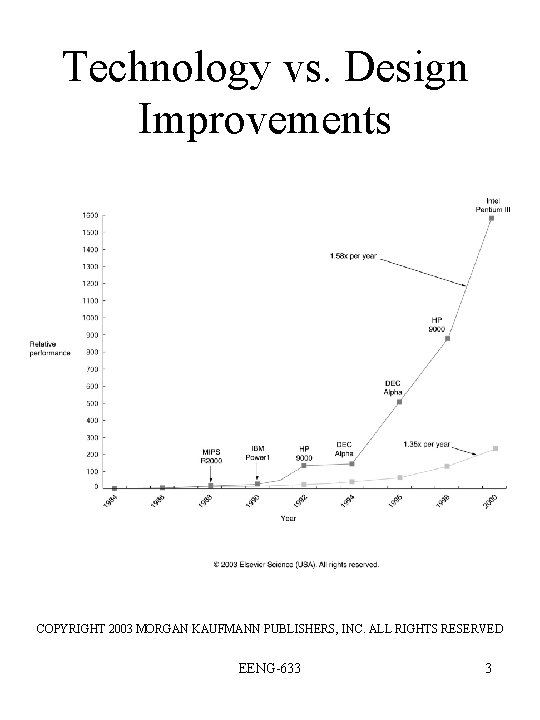

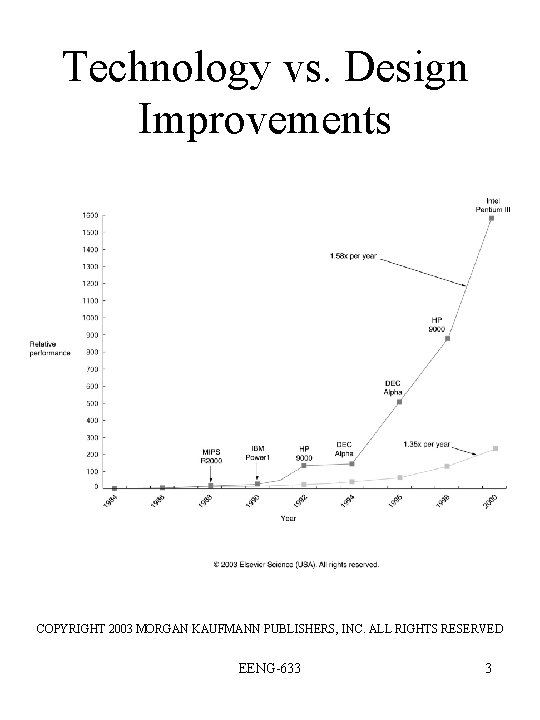

Technology vs. Design Improvements (figure copyright 2003 Morgan Kaufmann Publishers, Inc. All rights reserved.)

Course Emphasis

- “by 2001, the difference between the highest-performance microprocessors and what would have been obtained by relying solely on technology, including improved circuit design, was about a factor of 15”
- In this course we will discuss:
  - Architectural design techniques used
  - Associated compiler improvements
  - Quantitative approach to computer design and analysis

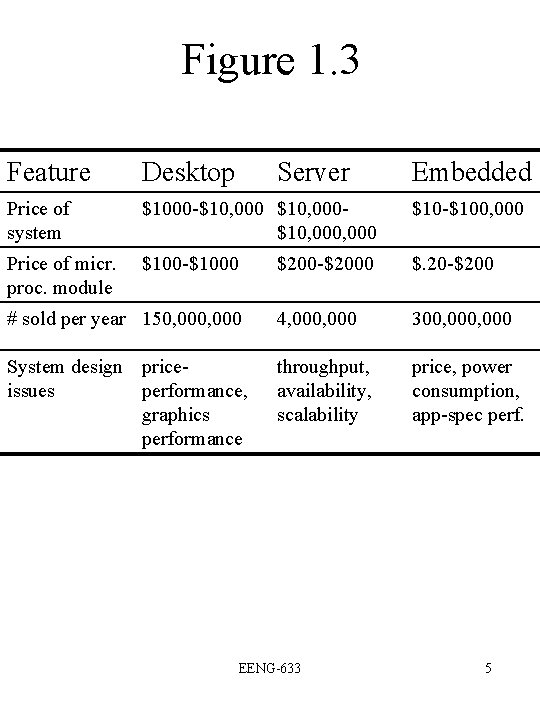

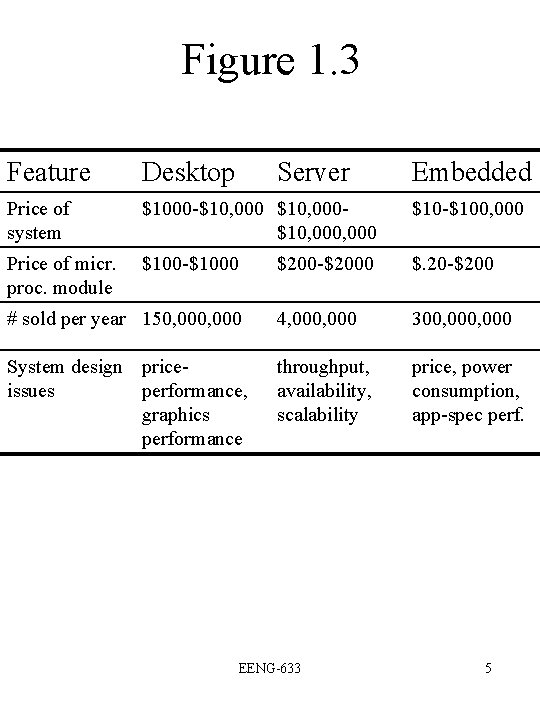

Figure 1.3

| Feature | Desktop | Server | Embedded |
|---|---|---|---|
| Price of system | $1000 to $10,000 | $10,000 to $10,000,000 | $10 to $100,000 |
| Price of microprocessor module | $100 to $1000 | $200 to $2000 | $0.20 to $200 |
| Number sold per year | 150,000 | 4,000 | 300,000 |
| System design issues | price-performance, graphics performance | throughput, availability, scalability | price, power consumption, application-specific performance |

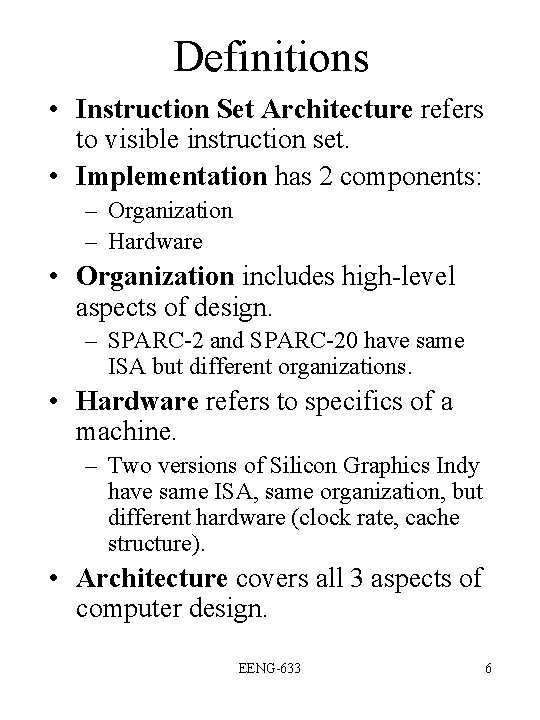

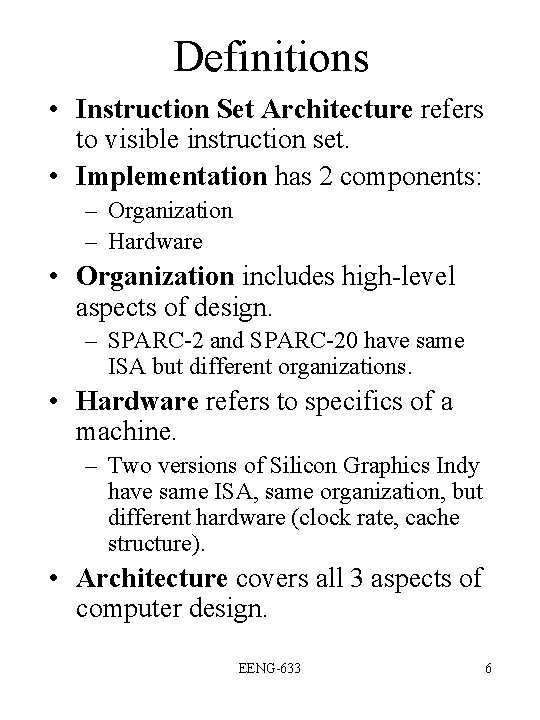

Definitions

- Instruction set architecture (ISA) refers to the visible instruction set.
- Implementation has 2 components:
  - Organization
  - Hardware
- Organization includes the high-level aspects of a design.
  - SPARC-2 and SPARC-20 have the same ISA but different organizations.
- Hardware refers to the specifics of a machine.
  - Two versions of the Silicon Graphics Indy have the same ISA and the same organization, but different hardware (clock rate, cache structure).
- Architecture covers all 3 aspects of computer design: ISA, organization, and hardware.

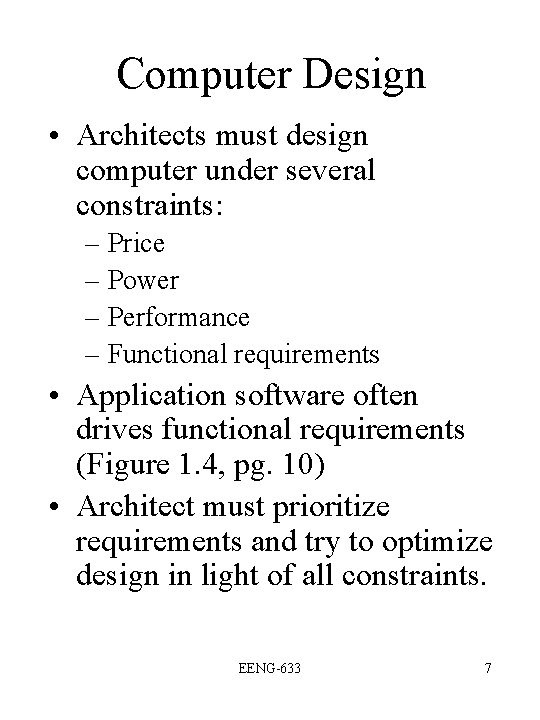

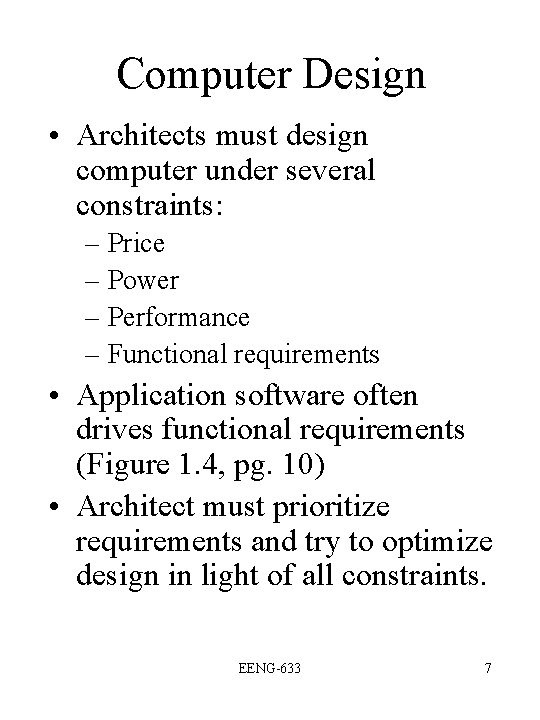

Computer Design

- Architects must design a computer under several constraints:
  - Price
  - Power
  - Performance
  - Functional requirements
- Application software often drives functional requirements (Figure 1.4, pg. 10).
- The architect must prioritize requirements and try to optimize the design in light of all constraints.

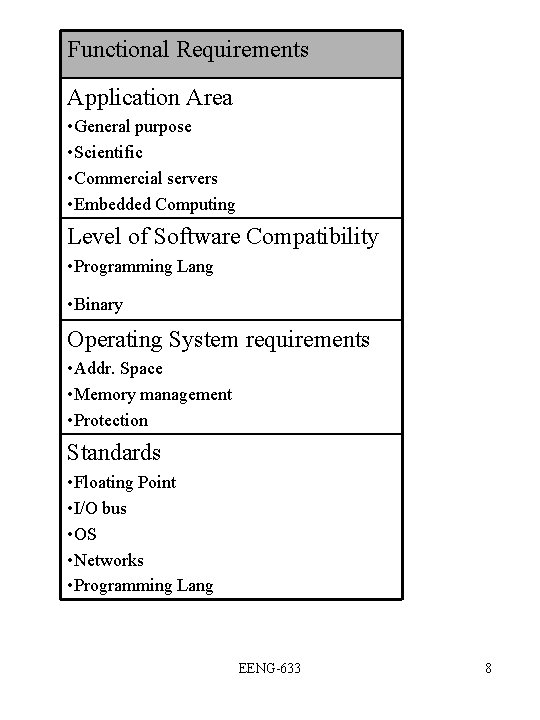

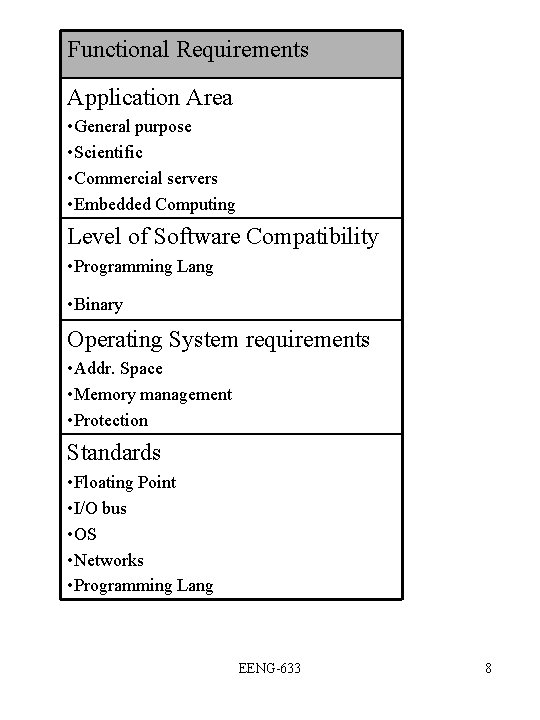

Functional Requirements

- Application area
  - General purpose
  - Scientific
  - Commercial servers
  - Embedded computing
- Level of software compatibility
  - Programming language
  - Binary
- Operating system requirements
  - Address space
  - Memory management
  - Protection
- Standards
  - Floating point
  - I/O bus
  - OS
  - Networks
  - Programming languages

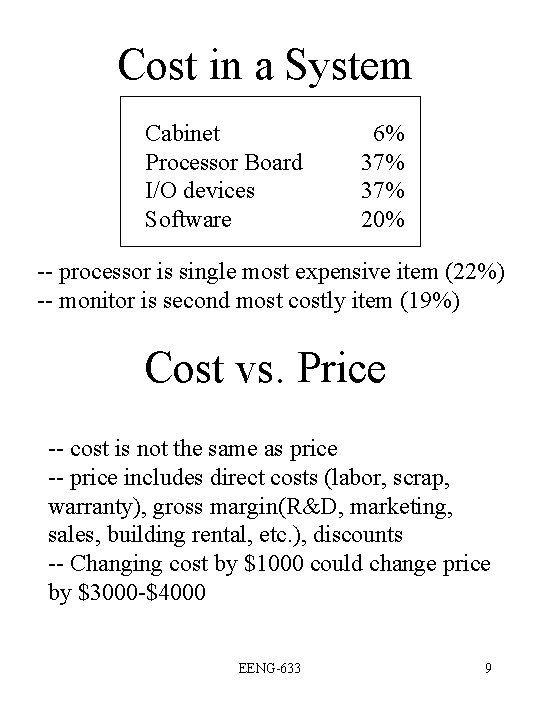

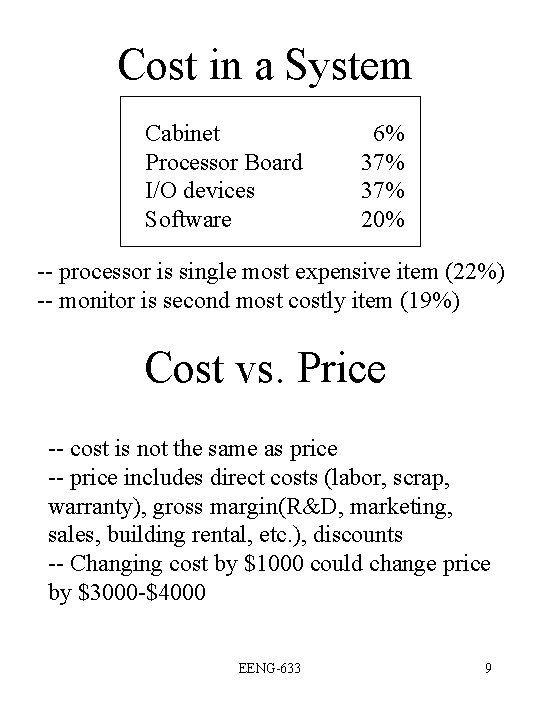

Cost in a System

| Component | Share of system cost |
|---|---|
| Cabinet | 6% |
| Processor board | 37% |
| I/O devices | 37% |
| Software | 20% |

- The processor is the single most expensive item (22%).
- The monitor is the second most costly item (19%).

Cost vs. Price

- Cost is not the same as price.
- Price includes direct costs (labor, scrap, warranty), gross margin (R&D, marketing, sales, building rental, etc.), and discounts.
- Changing cost by $1000 could change price by $3000 to $4000.

Performance

- When we say one computer has better performance than another, what do we mean?
- Different people may mean different things:
  - Single user
  - Manager of a large, multi-user system

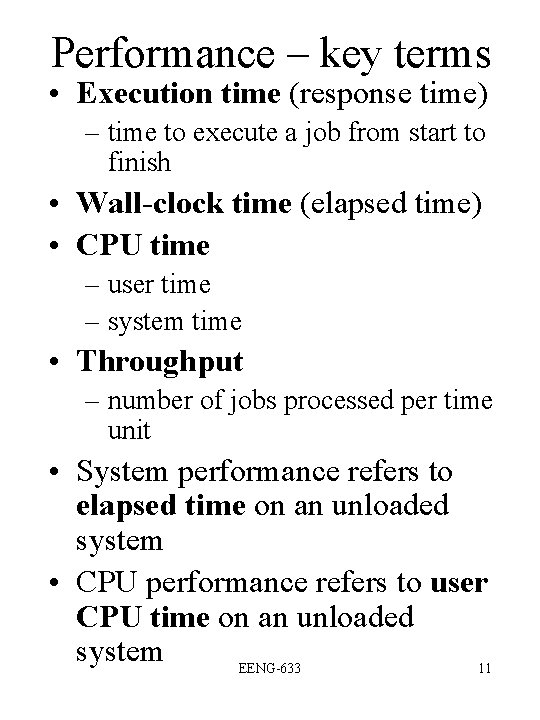

Performance – key terms

- Execution time (response time): time to execute a job from start to finish
- Wall-clock time (elapsed time)
- CPU time
  - user time
  - system time
- Throughput: number of jobs processed per time unit
- System performance refers to elapsed time on an unloaded system.
- CPU performance refers to user CPU time on an unloaded system.

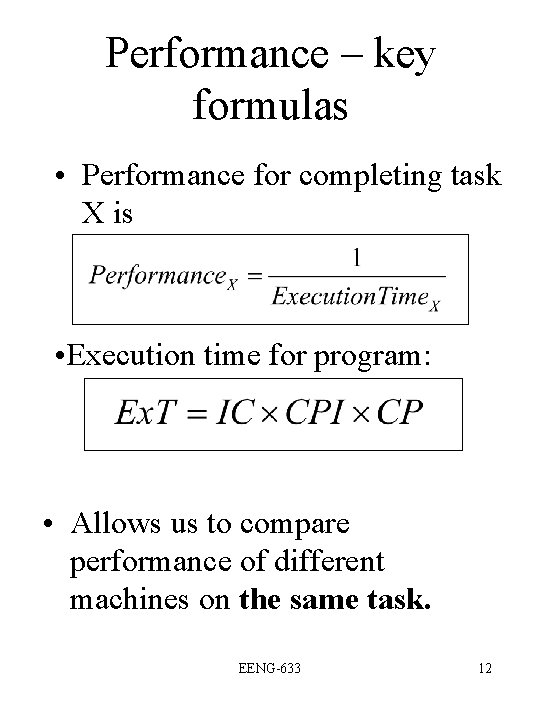

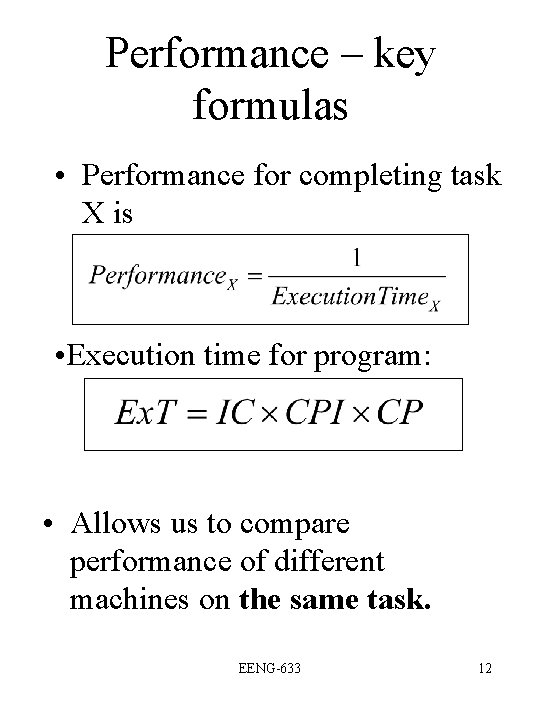

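Performance – key formulas

- Performance for completing task X is $\text{Performance}_X = \dfrac{1}{\text{ExT}_X}$, where $\text{ExT}_X$ is the execution time of X.
- Execution time for a program: $\text{ExT} = IC \times CPI \times CP$, where IC is the instruction count, CPI is the average number of clock cycles per instruction, and CP is the clock period.
- This allows us to compare the performance of different machines on the same task.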

Improving Performance

- Improving performance is a hardware designer's main goal. Given the previous formulas, how can a designer improve performance?

If only it were that simple...

- Unfortunately, these factors are NOT independent.
  - Changing the instruction set to lower the instruction count may lead to an organization with a slower clock cycle time.
  - A small IC may not give the fastest program, because complex instructions require more clock cycles.
- There are many tradeoffs when designing for better performance.

Performance Equation

- IC is a function of the instruction set architecture and compiler technology.
- CPI is primarily a function of the implementation.
- CP is primarily a function of the hardware technology.
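As a rough illustration of how the three factors combine, the sketch below computes execution time as IC × CPI × CP and compares two machines. The function names and all sample numbers are assumptions made up for this example; they are not values from the course.

```python
def execution_time(instruction_count, cpi, clock_period_s):
    """Execution time in seconds: IC x CPI x CP."""
    return instruction_count * cpi * clock_period_s


def performance(exec_time_s):
    """Performance is the reciprocal of execution time."""
    return 1.0 / exec_time_s


# Hypothetical machines running the same program with the same clock period.
time_a = execution_time(instruction_count=2_000_000, cpi=1.5, clock_period_s=2e-9)
time_b = execution_time(instruction_count=2_000_000, cpi=2.0, clock_period_s=2e-9)

# Performance ratio of A to B equals the inverse ratio of execution times.
relative_performance = performance(time_a) / performance(time_b)

print(f"ExT A = {time_a * 1e3:.1f} ms, ExT B = {time_b * 1e3:.1f} ms")
print(f"A is {relative_performance:.2f} times faster than B")
```

Because IC and CP are identical here, the speedup reduces to the ratio of the two CPI values.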

Frequency vs. Period

- Execution time is based on the clock period.
- We are often given the clock rate (frequency) in MHz. What is MHz?
- What is the relationship between clock rate (f) and clock period (cp)?
- Example
  - Clock rate: 500 MHz
  - What’s the clock period?
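The clock period is the reciprocal of the clock rate, and 1 MHz is 10^6 cycles per second. A minimal sketch of the 500 MHz example follows; the helper name `clock_period_seconds` is an assumption for illustration.

```python
MHZ = 1e6  # 1 MHz = one million cycles per second


def clock_period_seconds(clock_rate_hz):
    """Clock period (seconds per cycle) is the reciprocal of the clock rate."""
    return 1.0 / clock_rate_hz


period_s = clock_period_seconds(500 * MHZ)
period_ns = period_s * 1e9  # convert seconds to nanoseconds

print(f"500 MHz -> clock period of {period_ns:.1f} ns")
```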

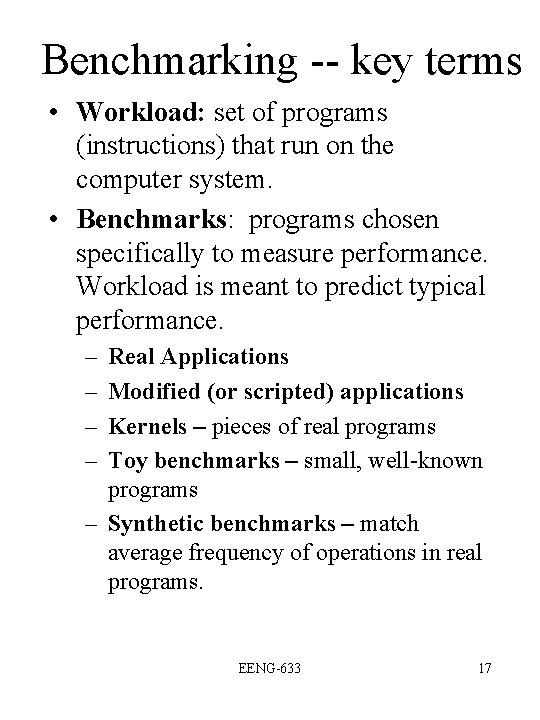

Benchmarking: key terms

- Workload: set of programs (instructions) that run on the computer system.
- Benchmarks: programs chosen specifically to measure performance. The workload is meant to predict typical performance.
  - Real applications
  - Modified (or scripted) applications
  - Kernels: pieces of real programs
  - Toy benchmarks: small, well-known programs
  - Synthetic benchmarks: match the average frequency of operations in real programs

Benchmark suites

- A benchmark suite is a collection of benchmark programs that covers a variety of applications.
  - E.g., SPEC92
- Advantage: the weakness of one benchmark is lessened by the presence of the other benchmarks.
- When we have several benchmarks, we need to summarize the performance of the entire suite to determine which system has better performance.

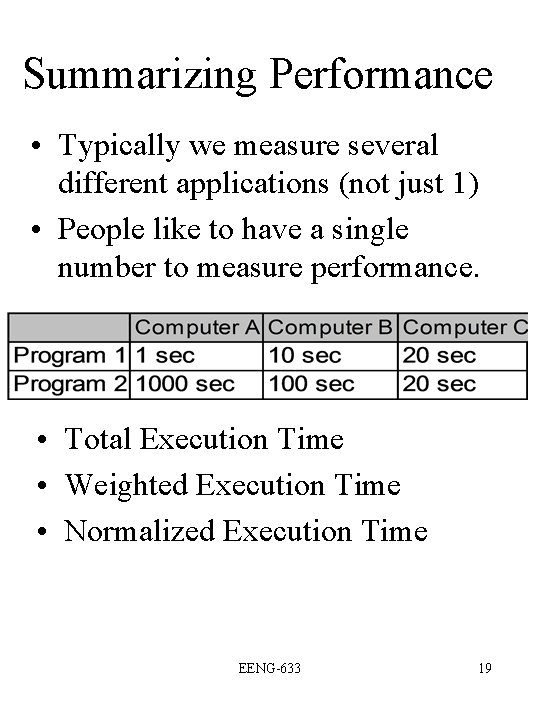

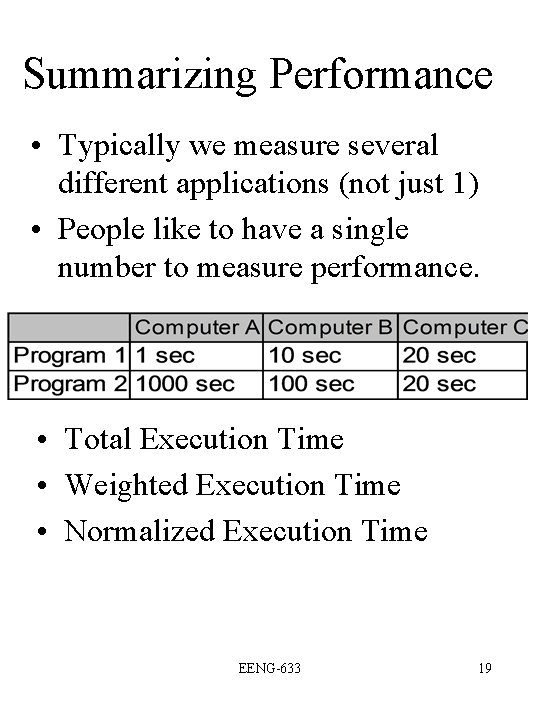

Summarizing Performance

- Typically we measure several different applications (not just 1).
- People like to have a single number to measure performance.
- Total Execution Time
- Weighted Execution Time
- Normalized Execution Time

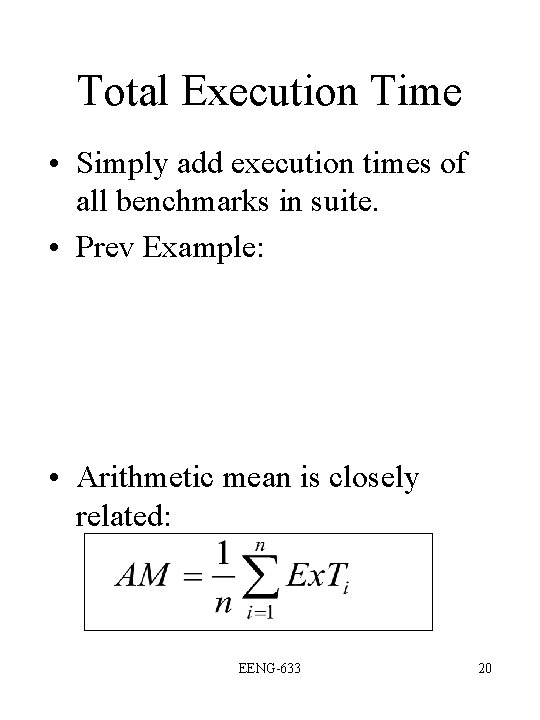

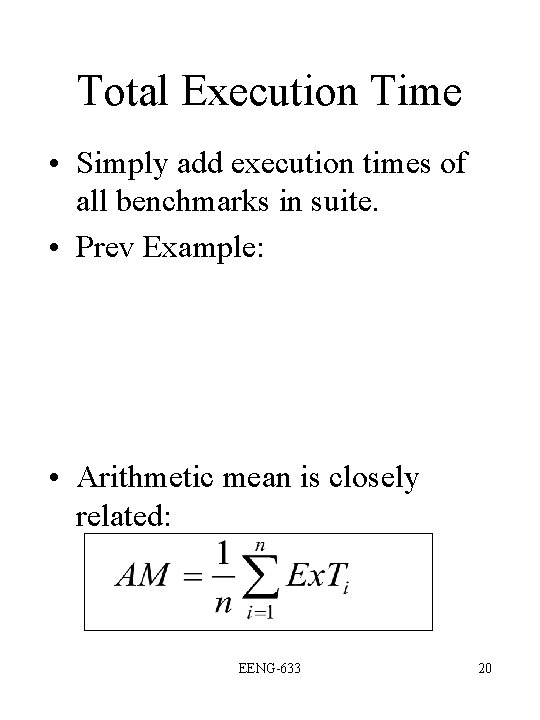

Total Execution Time

- Simply add the execution times of all benchmarks in the suite.
- Previous example:
- The arithmetic mean is closely related: $\dfrac{1}{n}\sum_{i=1}^{n} \text{ExT}_i$

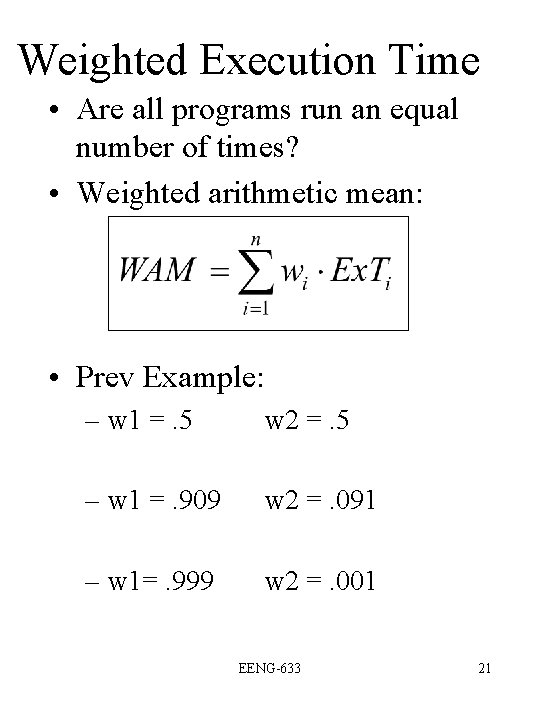

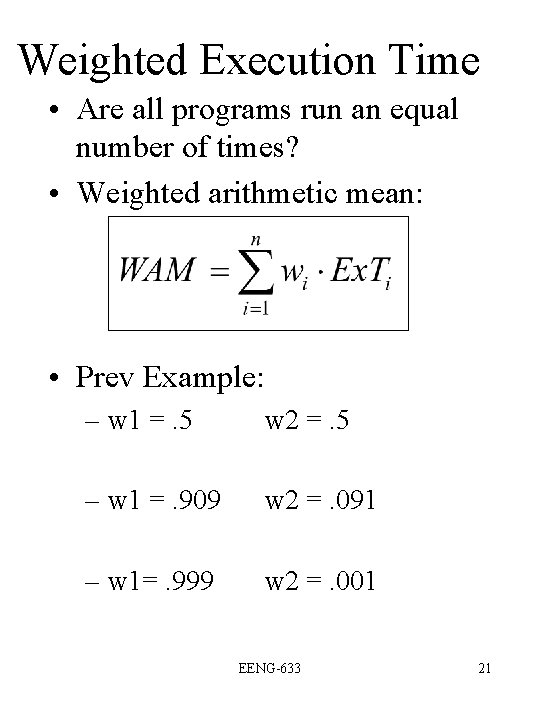

Weighted Execution Time

- Are all programs run an equal number of times?
- Weighted arithmetic mean: $\sum_{i=1}^{n} w_i \times \text{ExT}_i$, where the weights $w_i$ sum to 1.
- Previous example:
  - $w_1 = 0.5$, $w_2 = 0.5$
  - $w_1 = 0.909$, $w_2 = 0.091$
  - $w_1 = 0.999$, $w_2 = 0.001$
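A small sketch of the arithmetic and weighted arithmetic means may help here. The three weight pairs are the ones listed on the slide, but the execution times for machines A, B, and C are assumed values chosen for illustration, since the data of the previous example is not reproduced in the text.

```python
def arithmetic_mean(times):
    """Plain average of the execution times."""
    return sum(times) / len(times)


def weighted_mean(times, weights):
    """Weighted arithmetic mean; the weights are expected to sum to 1."""
    if abs(sum(weights) - 1.0) > 1e-6:
        raise ValueError("weights must sum to 1")
    return sum(w * t for w, t in zip(weights, times))


# Assumed execution times in seconds for two programs on three machines.
times = {"A": [1.0, 1000.0], "B": [10.0, 100.0], "C": [20.0, 20.0]}

for weights in ([0.5, 0.5], [0.909, 0.091], [0.999, 0.001]):
    summary = {m: round(weighted_mean(t, weights), 2) for m, t in times.items()}
    print(weights, summary)
```

With equal weights the weighted mean is the same as the arithmetic mean; as more weight shifts to the first program, the machine that runs that program quickly looks better.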

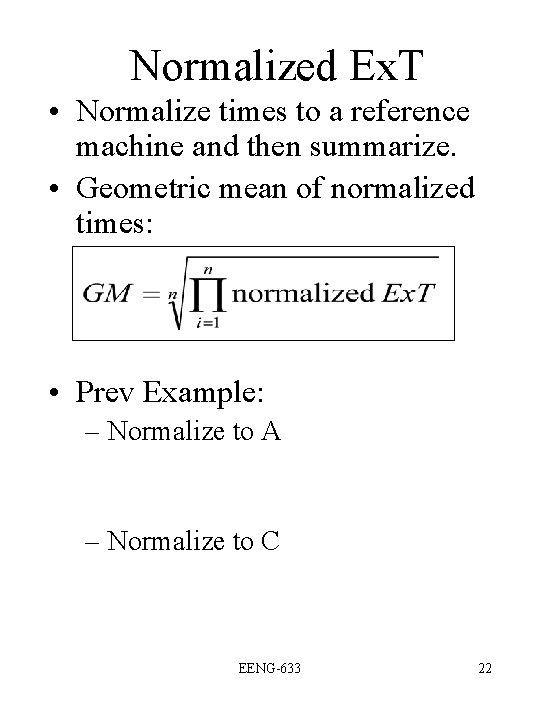

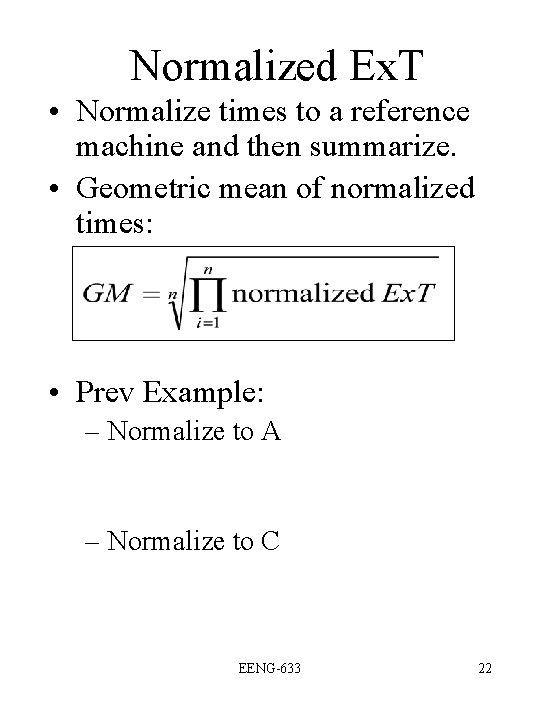

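Normalized ExT

- Normalize times to a reference machine and then summarize.
- Geometric mean of normalized times: $\sqrt[n]{\prod_{i=1}^{n} \text{ExT ratio}_i}$, where $\text{ExT ratio}_i$ is the execution time of program $i$ divided by its time on the reference machine.
- Previous example:
  - Normalize to A
  - Normalize to C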
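The following sketch shows the geometric mean of normalized times and checks that the relative ranking does not depend on the reference machine. The execution times are assumed values for illustration, not the data of the previous example.

```python
import math


def geometric_mean(values):
    """n-th root of the product of n values."""
    return math.prod(values) ** (1.0 / len(values))


def normalized_times(times, reference_times):
    """Divide each program's time by its time on the reference machine."""
    return [t / r for t, r in zip(times, reference_times)]


# Assumed execution times in seconds for two programs on three machines.
times = {"A": [1.0, 1000.0], "B": [10.0, 100.0], "C": [20.0, 20.0]}

for reference in ("A", "C"):
    gm = {
        machine: geometric_mean(normalized_times(t, times[reference]))
        for machine, t in times.items()
    }
    print(f"normalized to {reference}:", {m: round(v, 3) for m, v in gm.items()})

# The ratio between two machines' geometric means is the same for any reference.
ratio_ref_a = (geometric_mean(normalized_times(times["B"], times["A"]))
               / geometric_mean(normalized_times(times["C"], times["A"])))
ratio_ref_c = (geometric_mean(normalized_times(times["B"], times["C"]))
               / geometric_mean(normalized_times(times["C"], times["C"])))
```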

Pros and Cons of GM

- Pros:
  - GM is independent of the running times of the individual programs.
  - GM is independent of the base machine.
- Cons:
  - Does not predict execution time.
  - Encourages hardware and software designers to focus on the benchmarks that are easiest to improve rather than the slowest ones.

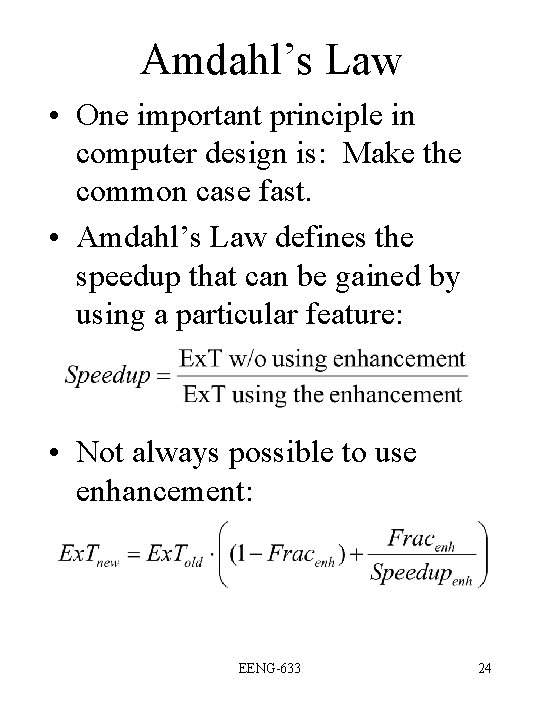

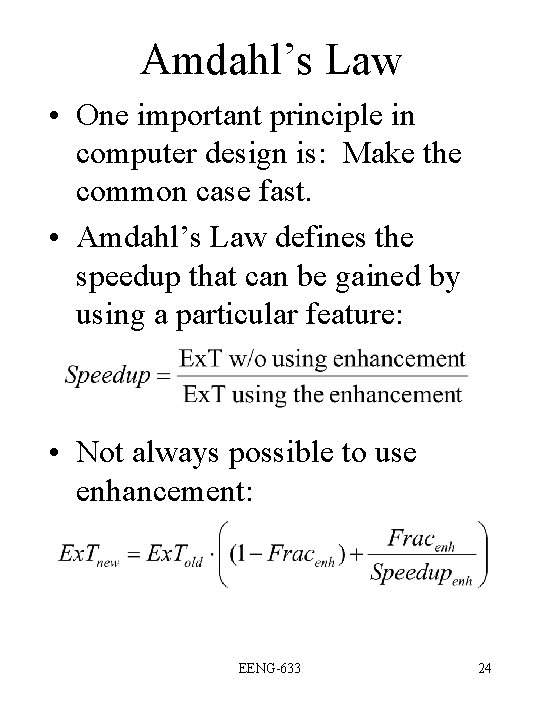

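Amdahl’s Law

- One important principle in computer design is: make the common case fast.
- Amdahl’s Law defines the speedup that can be gained by using a particular feature: $\text{Speedup} = \dfrac{\text{ExT without the enhancement}}{\text{ExT with the enhancement}}$
- It is not always possible to use the enhancement: $\text{Speedup}_{overall} = \dfrac{1}{(1 - F_{enh}) + F_{enh} / S_{enh}}$, where $F_{enh}$ is the fraction of the original execution time that can use the enhancement and $S_{enh}$ is the speedup of that fraction.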

Speedup

- Speedup = old ExT / new ExT

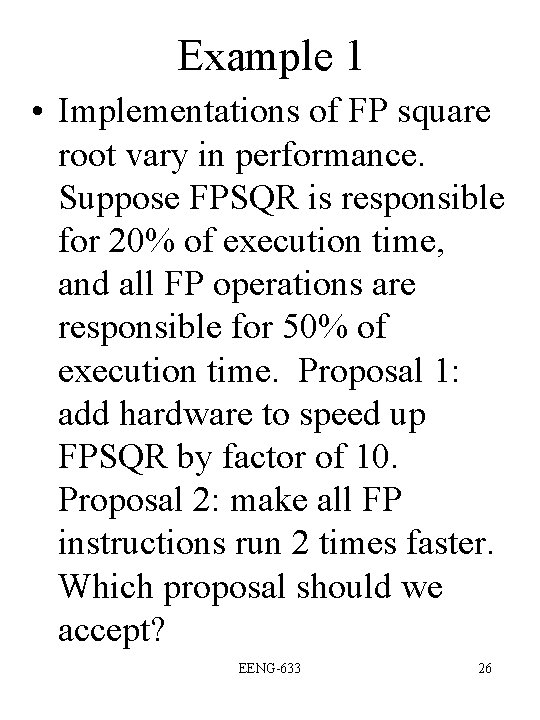

Example 1

- Implementations of FP square root vary in performance. Suppose FPSQR is responsible for 20% of execution time, and all FP operations are responsible for 50% of execution time.
  - Proposal 1: add hardware to speed up FPSQR by a factor of 10.
  - Proposal 2: make all FP instructions run 2 times faster.
- Which proposal should we accept?

Solution

- $\text{Speedup}_{FPSQR}$:
- $\text{Speedup}_{FP}$:
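One way to check the two proposals from Example 1 is to apply Amdahl's Law, speedup = 1 / ((1 - F) + F / S), directly. The sketch below does that; the function name `amdahl_speedup` is an assumption for illustration, while the fractions and speedup factors come from the example.

```python
def amdahl_speedup(fraction_enhanced, speedup_enhanced):
    """Overall speedup when only part of the execution time is enhanced."""
    return 1.0 / ((1.0 - fraction_enhanced) + fraction_enhanced / speedup_enhanced)


# Proposal 1: FPSQR is 20% of execution time and becomes 10 times faster.
speedup_fpsqr = amdahl_speedup(fraction_enhanced=0.20, speedup_enhanced=10.0)

# Proposal 2: all FP operations are 50% of execution time and become 2 times faster.
speedup_fp = amdahl_speedup(fraction_enhanced=0.50, speedup_enhanced=2.0)

print(f"Speedup with faster FPSQR: {speedup_fpsqr:.2f}")  # about 1.22
print(f"Speedup with faster FP:    {speedup_fp:.2f}")     # about 1.33
```

Under these numbers, speeding up all FP operations gives the slightly larger overall gain, because it applies to a larger fraction of the execution time.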

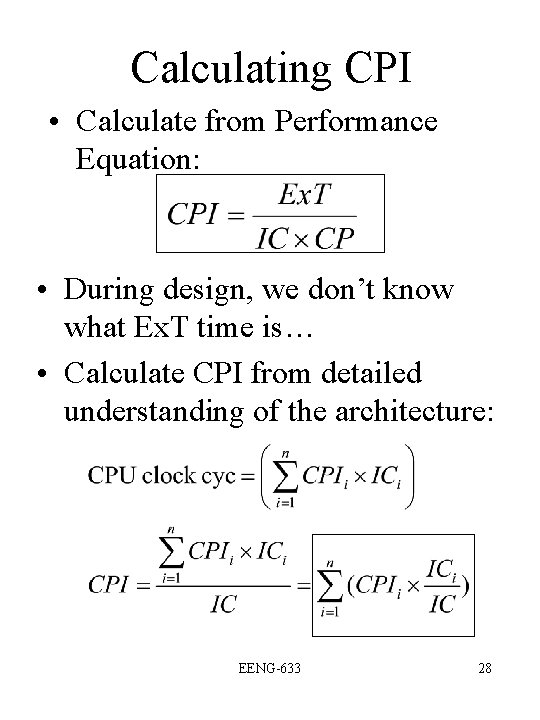

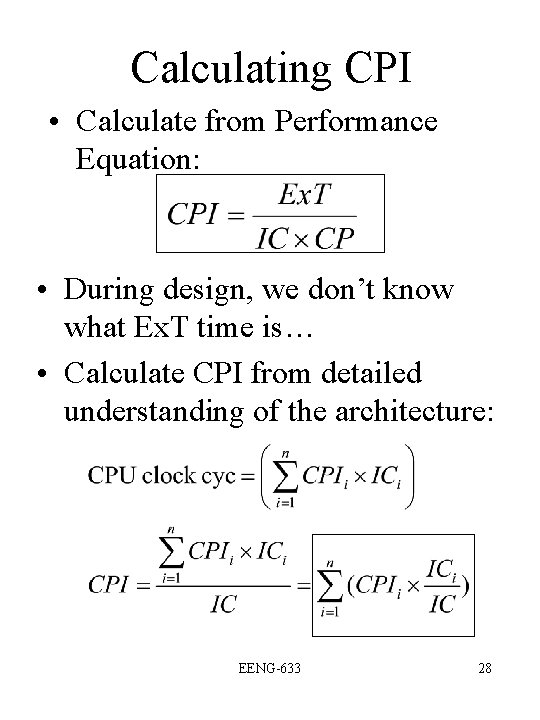

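Calculating CPI

- Calculate from the performance equation: $CPI = \dfrac{\text{ExT}}{IC \times CP}$
- During design, we don’t know what the ExT is…
- Calculate CPI from a detailed understanding of the architecture: $CPI = \dfrac{\sum_{i} CPI_i \times IC_i}{IC}$, where $CPI_i$ and $IC_i$ are the cycles per instruction and the instruction count for instruction class $i$.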

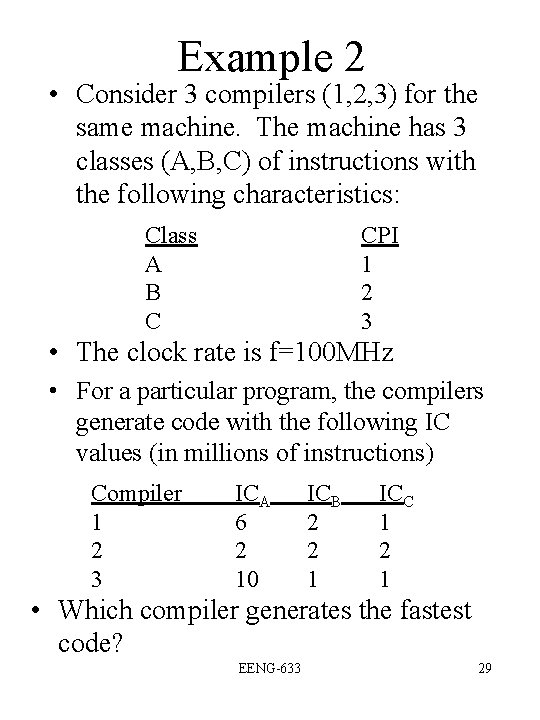

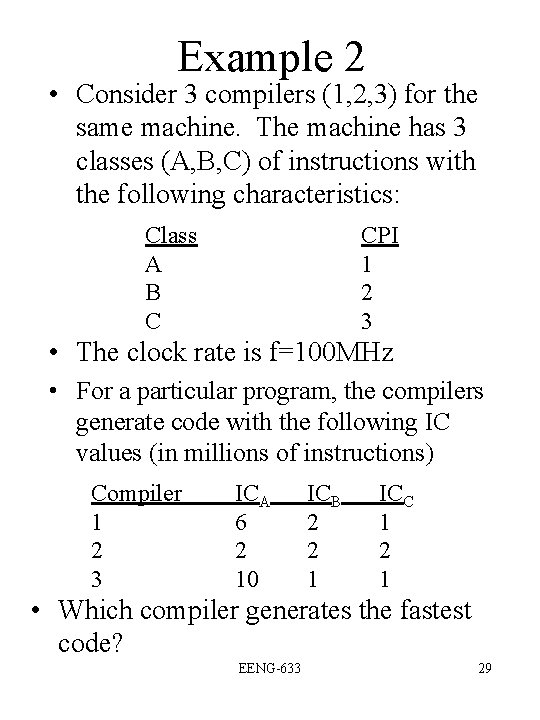

Example 2

- Consider 3 compilers (1, 2, 3) for the same machine. The machine has 3 classes (A, B, C) of instructions with the following characteristics:

| Class | A | B | C |
|---|---|---|---|
| CPI | 1 | 2 | 3 |

- The clock rate is f = 100 MHz.
- For a particular program, the compilers generate code with the following IC values (in millions of instructions):

| Compiler | 1 | 2 | 3 |
|---|---|---|---|
| $IC_A$ | 6 | 2 | 10 |
| $IC_B$ | 2 | 2 | 1 |
| $IC_C$ | 1 | 2 | 1 |

- Which compiler generates the fastest code?

Solution

- First, calculate the CPI for each compiler:
- Execution time for the 3 compilers:
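The two steps of the Example 2 solution can be sketched as follows: the average CPI is the cycle total divided by the instruction total, and the execution time is IC × CPI divided by the clock rate. The data comes from the example; the dictionary layout and function names are assumptions for illustration.

```python
CPI_BY_CLASS = {"A": 1, "B": 2, "C": 3}
CLOCK_RATE_HZ = 100e6  # f = 100 MHz

# Instruction counts in millions, per compiler and instruction class.
IC_MILLIONS = {
    1: {"A": 6, "B": 2, "C": 1},
    2: {"A": 2, "B": 2, "C": 2},
    3: {"A": 10, "B": 1, "C": 1},
}


def average_cpi(ic_by_class):
    """Weighted CPI: total cycles divided by total instructions."""
    total_cycles = sum(CPI_BY_CLASS[c] * n for c, n in ic_by_class.items())
    return total_cycles / sum(ic_by_class.values())


def execution_time_s(ic_by_class):
    """ExT = IC x CPI x CP, with CP = 1 / clock rate."""
    total_instructions = sum(ic_by_class.values()) * 1e6
    return total_instructions * average_cpi(ic_by_class) / CLOCK_RATE_HZ


for compiler, ic in IC_MILLIONS.items():
    print(f"compiler {compiler}: CPI = {average_cpi(ic):.2f}, "
          f"ExT = {execution_time_s(ic):.2f} s")

fastest = min(IC_MILLIONS, key=lambda c: execution_time_s(IC_MILLIONS[c]))
print(f"fastest code: compiler {fastest}")
```

Compiler 3 has the lowest CPI but also the most instructions, so the lowest CPI alone does not identify the fastest code.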

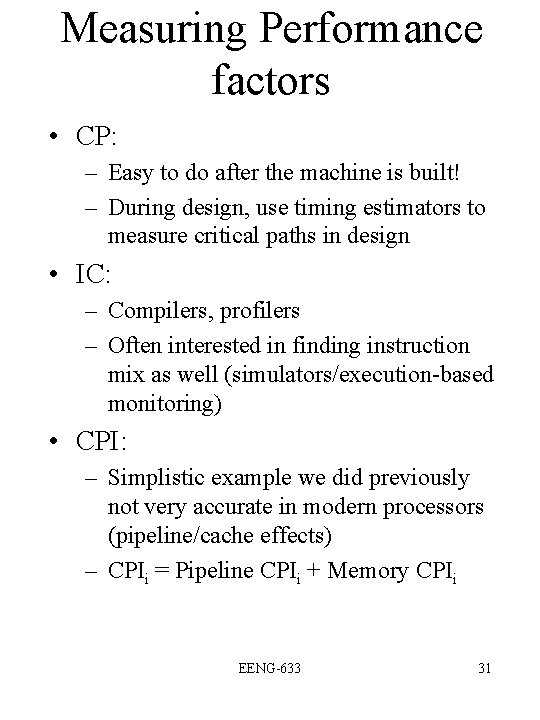

Measuring Performance Factors

- CP:
  - Easy to do after the machine is built!
  - During design, use timing estimators to measure the critical paths in the design.
- IC:
  - Compilers, profilers
  - Often interested in finding the instruction mix as well (simulators/execution-based monitoring)
- CPI:
  - The simplistic example we did previously is not very accurate for modern processors (pipeline/cache effects).
  - $CPI_i = \text{Pipeline } CPI_i + \text{Memory } CPI_i$

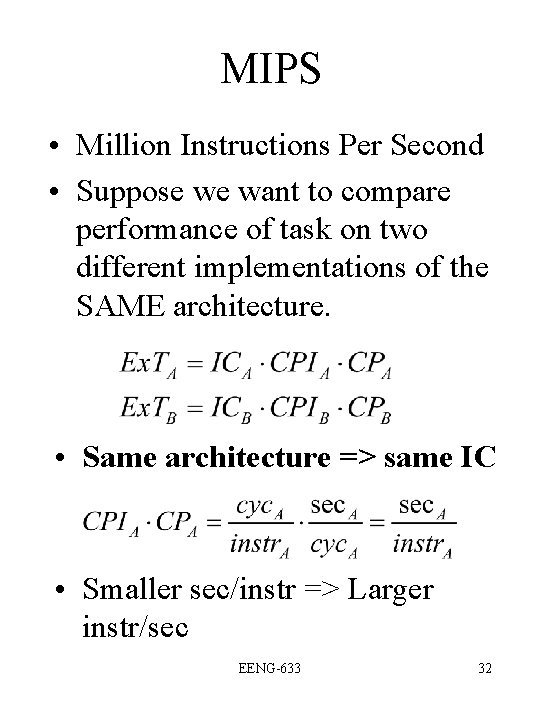

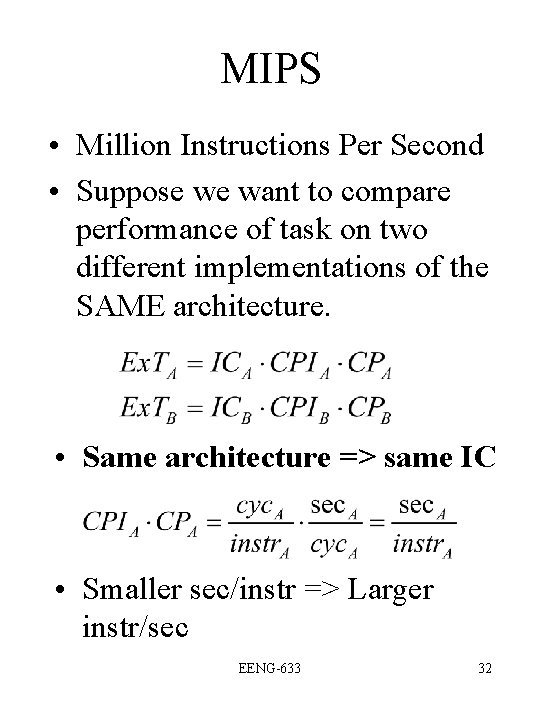

MIPS

- Million Instructions Per Second
- Suppose we want to compare the performance of a task on two different implementations of the SAME architecture.
- Same architecture => same IC
- Smaller sec/instr => larger instr/sec

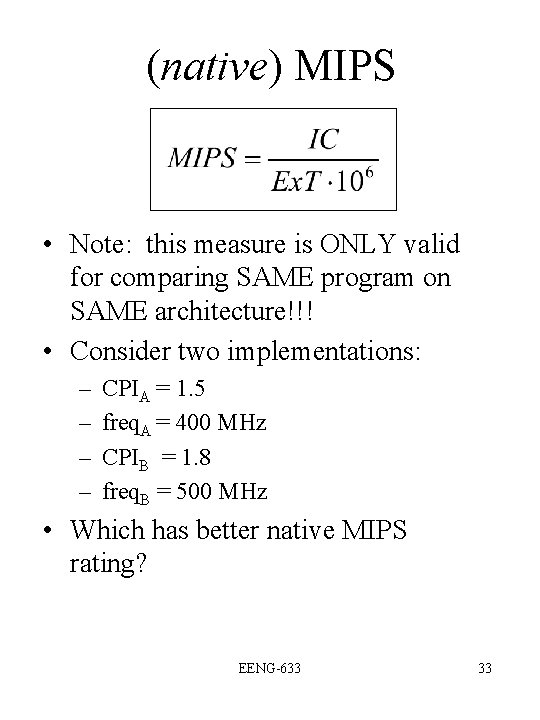

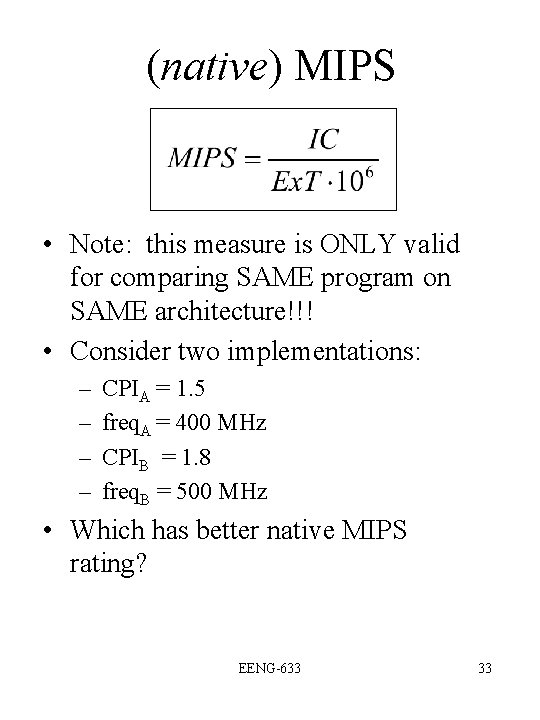

(native) MIPS

- Note: this measure is ONLY valid for comparing the SAME program on the SAME architecture!
- Consider two implementations:
  - $CPI_A = 1.5$, $freq_A = 400$ MHz
  - $CPI_B = 1.8$, $freq_B = 500$ MHz
- Which has the better native MIPS rating?

Solution

- Native $\text{MIPS}_A$:
- Native $\text{MIPS}_B$:
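Since MIPS = IC / (ExT × 10^6) and ExT = IC × CPI / clock rate, the native MIPS rating reduces to clock rate / (CPI × 10^6). A minimal sketch for the two implementations above, with an assumed helper name `native_mips`:

```python
def native_mips(clock_rate_hz, cpi):
    """Native MIPS = clock rate / (CPI x 10^6)."""
    return clock_rate_hz / (cpi * 1e6)


mips_a = native_mips(clock_rate_hz=400e6, cpi=1.5)
mips_b = native_mips(clock_rate_hz=500e6, cpi=1.8)

print(f"Native MIPS A: {mips_a:.1f}")  # about 266.7
print(f"Native MIPS B: {mips_b:.1f}")  # about 277.8
```

Implementation B has the higher CPI, but its faster clock more than compensates, so it has the better native MIPS rating.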

MIPS, MOPS and other FLOPS

- MIPS: Millions of Instructions Per Second
- MOPS: Millions of Operations Per Second
- FLOPS: Floating-point Operations Per Second

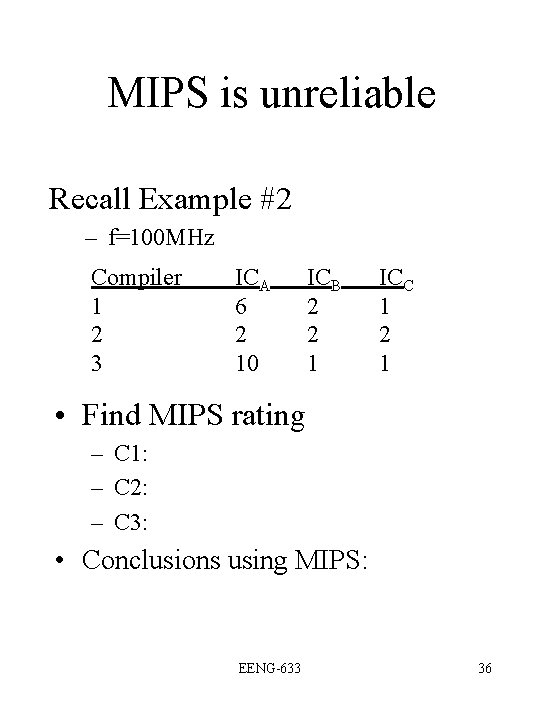

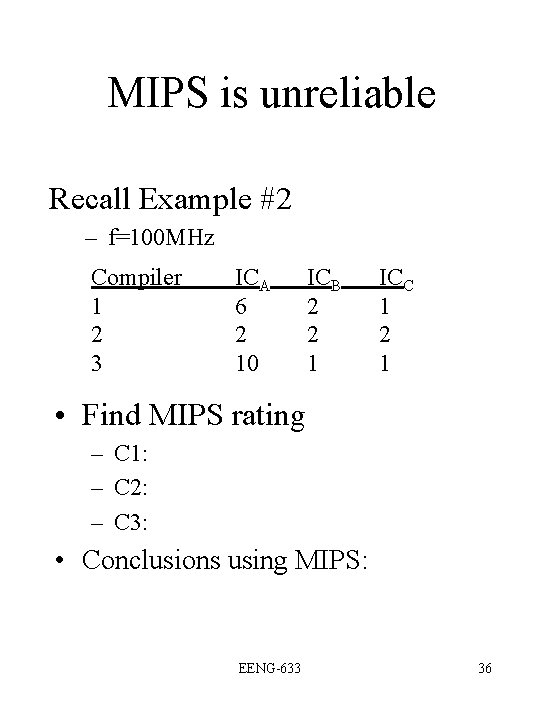

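MIPS is unreliable

Recall Example 2, with f = 100 MHz and the following IC values (in millions of instructions):

| Compiler | 1 | 2 | 3 |
|---|---|---|---|
| $IC_A$ | 6 | 2 | 10 |
| $IC_B$ | 2 | 2 | 1 |
| $IC_C$ | 1 | 2 | 1 |

- Find the MIPS rating:
  - C1:
  - C2:
  - C3:
- Conclusions using MIPS: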
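To see why the MIPS rating can mislead, the sketch below computes both the MIPS rating and the execution time for the three compilers, using the class CPIs from Example 2 (A = 1, B = 2, C = 3). The data layout and function names are assumptions for illustration.

```python
CPI_BY_CLASS = {"A": 1, "B": 2, "C": 3}
CLOCK_RATE_HZ = 100e6  # f = 100 MHz

# Instruction counts in millions, per compiler and instruction class.
IC_MILLIONS = {
    1: {"A": 6, "B": 2, "C": 1},
    2: {"A": 2, "B": 2, "C": 2},
    3: {"A": 10, "B": 1, "C": 1},
}


def execution_time_s(ic_by_class):
    """Total clock cycles divided by the clock rate."""
    cycles = sum(CPI_BY_CLASS[c] * n for c, n in ic_by_class.items()) * 1e6
    return cycles / CLOCK_RATE_HZ


def mips_rating(ic_by_class):
    """MIPS = instruction count / (execution time x 10^6)."""
    instructions = sum(ic_by_class.values()) * 1e6
    return instructions / (execution_time_s(ic_by_class) * 1e6)


mips = {c: mips_rating(ic) for c, ic in IC_MILLIONS.items()}
ext = {c: execution_time_s(ic) for c, ic in IC_MILLIONS.items()}

best_by_mips = max(mips, key=mips.get)
best_by_time = min(ext, key=ext.get)

for c in IC_MILLIONS:
    print(f"C{c}: {mips[c]:.1f} MIPS, ExT = {ext[c]:.2f} s")
print(f"highest MIPS: C{best_by_mips}, shortest ExT: C{best_by_time}")
```

The compiler with the highest MIPS rating is the one whose code takes longest to run, because it executes many more (cheap) instructions.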

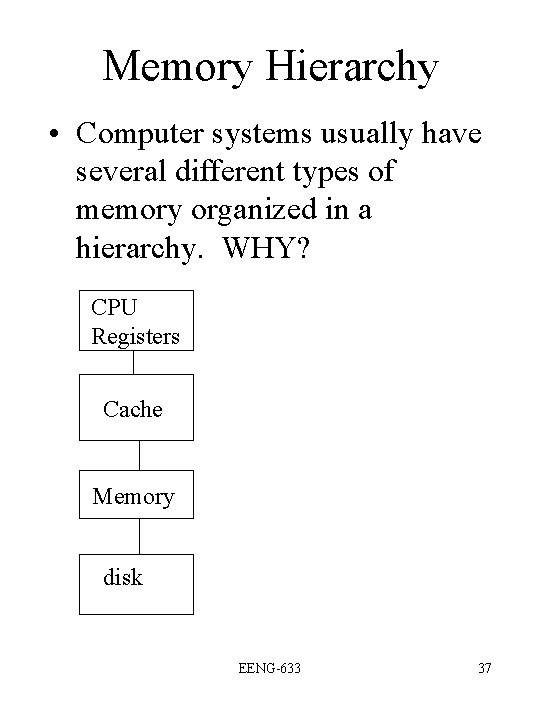

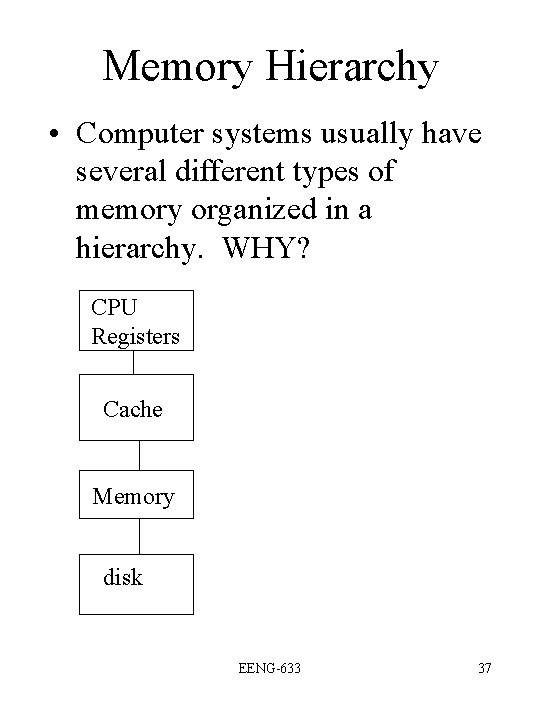

Memory Hierarchy

- Computer systems usually have several different types of memory organized in a hierarchy. WHY?
- Levels of the hierarchy: CPU registers, cache, memory, disk

Locality

- Locality of reference: programs tend to reuse data and instructions that they have used recently.
- Two types of locality:
  - Temporal: recently accessed items are likely to be accessed again in the near future.
  - Spatial: items whose addresses are near one another tend to be referenced close together in time.
- Do we expect instructions or data to have a higher degree of locality?

Key Terms

- Cache hit: the CPU finds the requested data in the cache.
- Cache miss: the requested data is not in the cache.
- Miss rate: fraction of cache accesses that result in a miss.
- Block: amount of data transferred between cache and memory.
- Miss penalty: extra time taken to get the requested block into the cache.
- Page fault: the requested data is not in memory.

Example 4

- Suppose the cache is 10 times faster than main memory and that the cache can be used 90% of the time. How much speedup do we gain from using the cache?
- Using Amdahl’s Law:
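Example 4 can be checked with Amdahl's Law, taking the enhanced fraction as 0.9 and the speedup of that fraction as 10. This is a minimal sketch; the function name is an assumption for illustration.

```python
def amdahl_speedup(fraction_enhanced, speedup_enhanced):
    """Overall speedup = 1 / ((1 - F) + F / S)."""
    return 1.0 / ((1.0 - fraction_enhanced) + fraction_enhanced / speedup_enhanced)


# The cache is 10 times faster than main memory and usable 90% of the time.
cache_speedup = amdahl_speedup(fraction_enhanced=0.90, speedup_enhanced=10.0)

print(f"Speedup from using the cache: {cache_speedup:.2f}")  # about 5.26
```

The remaining 10% of accesses that still go to main memory limit the overall gain to well below the factor of 10.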

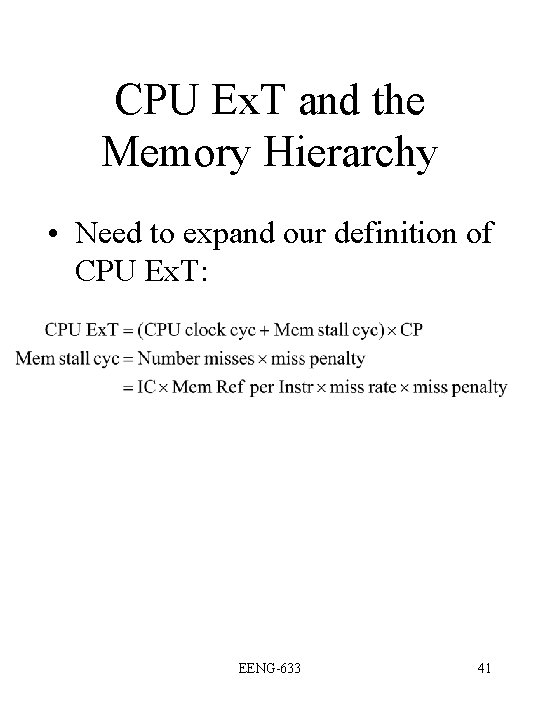

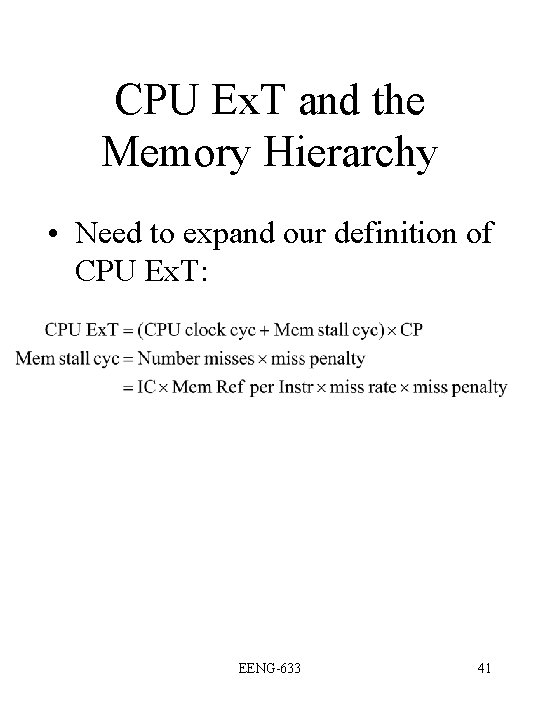

CPU ExT and the Memory Hierarchy

- Need to expand our definition of CPU ExT: $\text{CPU ExT} = (\text{CPU clock cycles} + \text{memory stall cycles}) \times CP$

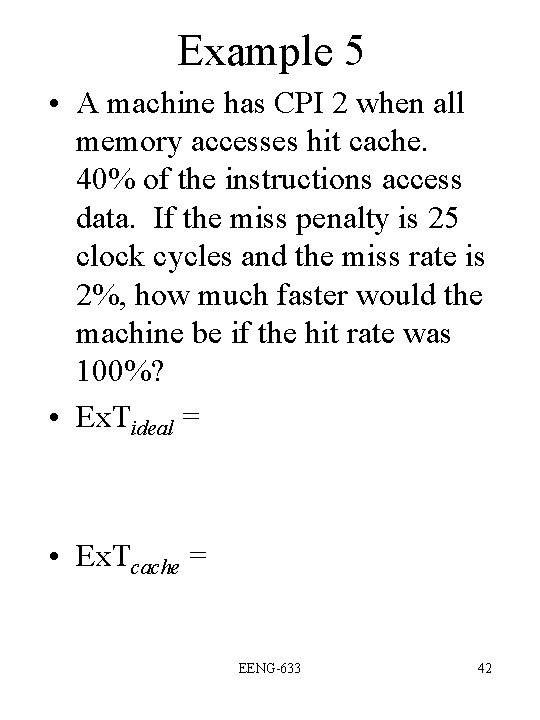

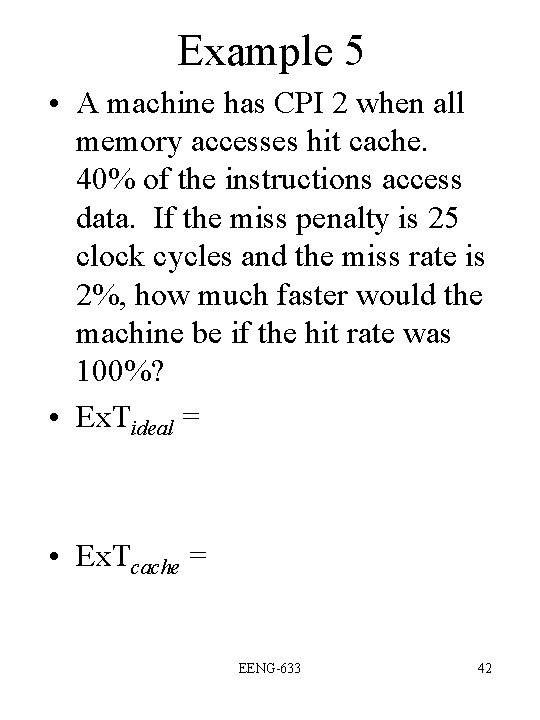

Example 5

- A machine has a CPI of 2 when all memory accesses hit in the cache. 40% of the instructions access data. If the miss penalty is 25 clock cycles and the miss rate is 2%, how much faster would the machine be if the hit rate were 100%?
- $\text{ExT}_{ideal}$ =
- $\text{ExT}_{cache}$ =
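One way to work Example 5 is to add the memory stall cycles per instruction to the base CPI. The sketch below assumes that every instruction makes one instruction fetch in addition to any data access (so 1.4 memory accesses per instruction) and that the 2% miss rate applies to both kinds of access; the example does not state this, so treat it as an assumption. IC and CP are the same in both cases and cancel out of the ratio.

```python
def cpi_with_memory_stalls(base_cpi, accesses_per_instruction, miss_rate, miss_penalty):
    """Base CPI plus the average memory stall cycles per instruction."""
    stall_cycles = accesses_per_instruction * miss_rate * miss_penalty
    return base_cpi + stall_cycles


base_cpi = 2.0  # CPI when every access hits in the cache

# Assumption: 1 instruction fetch per instruction + 0.4 data accesses.
accesses_per_instruction = 1.0 + 0.40

cpi_cache = cpi_with_memory_stalls(
    base_cpi, accesses_per_instruction, miss_rate=0.02, miss_penalty=25
)

# ExT_cache / ExT_ideal; instruction count and clock period cancel.
speedup_if_all_hits = cpi_cache / base_cpi

print(f"CPI with cache misses: {cpi_cache:.2f}")
print(f"Machine with a 100% hit rate is {speedup_if_all_hits:.2f} times faster")
```

If only data accesses were counted (0.4 accesses per instruction), the stall contribution and the resulting ratio would be smaller.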