Ch. 7.1 Overview of Retrieval Model

- Slides: 23

Ch. 7.1 – Overview of Retrieval Model. Information Retrieval in Practice. All slides ©Addison Wesley, 2008

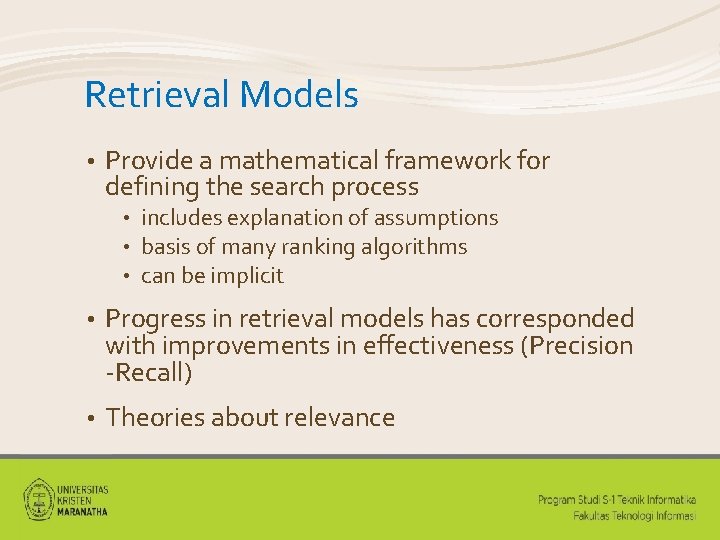

Retrieval Models

- Provide a mathematical framework for defining the search process
  - includes explanation of assumptions
  - basis of many ranking algorithms
  - can be implicit
- Progress in retrieval models has corresponded with improvements in effectiveness (precision-recall)
- Theories about relevance

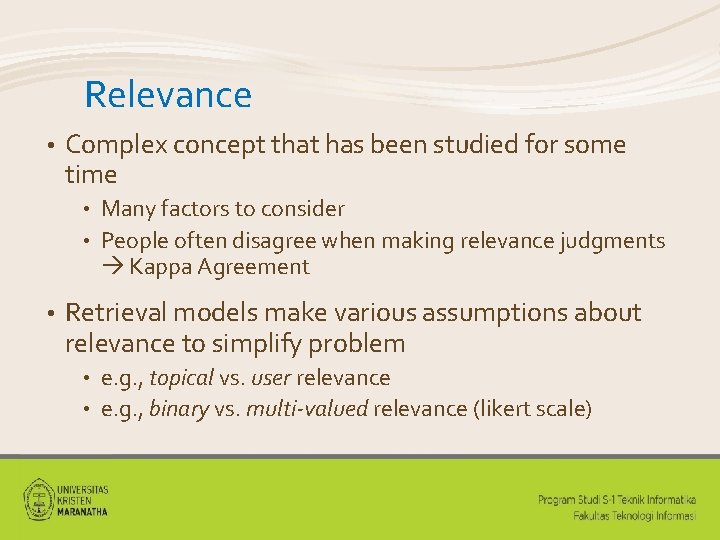

Relevance

- Complex concept that has been studied for some time
  - Many factors to consider
  - People often disagree when making relevance judgments (Kappa agreement)
- Retrieval models make various assumptions about relevance to simplify the problem
  - e.g., topical vs. user relevance
  - e.g., binary vs. multi-valued relevance (Likert scale)

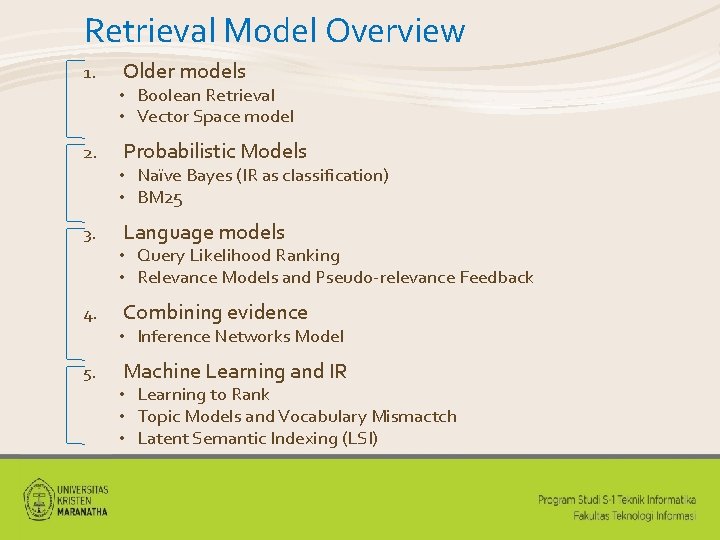

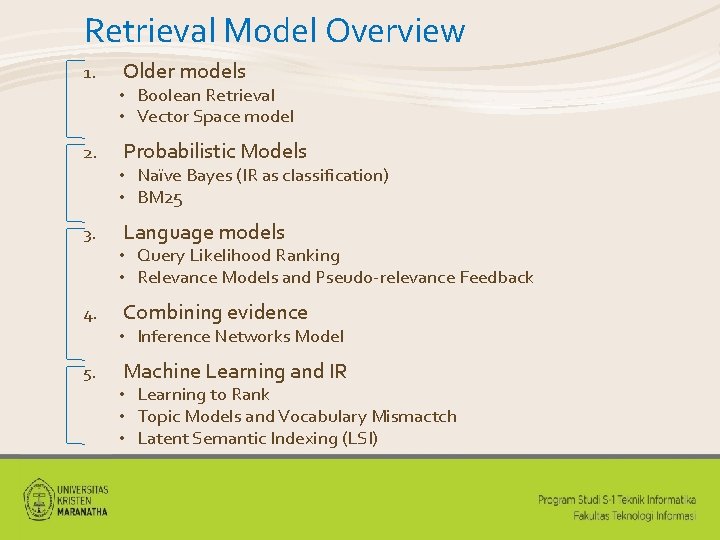

Retrieval Model Overview

1. Older models
   - Boolean Retrieval
   - Vector Space model
2. Probabilistic Models
   - Naïve Bayes (IR as classification)
   - BM25
3. Language models
   - Query Likelihood Ranking
   - Relevance Models and Pseudo-relevance Feedback
4. Combining evidence
   - Inference Networks Model
5. Machine Learning and IR
   - Learning to Rank
   - Topic Models and Vocabulary Mismatch
   - Latent Semantic Indexing (LSI)

Boolean Retrieval

- Two possible outcomes for query processing
  - TRUE and FALSE
  - “exact-match” retrieval
  - simplest form of ranking
- Query usually specified using Boolean operators
  - AND, OR, NOT
  - proximity operators also used
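A minimal sketch of exact-match retrieval, assuming a tiny invented collection and a plain dictionary as the inverted index (the documents and the helper name `postings` are hypothetical, not from the slides). AND, OR and NOT map onto set intersection, union and complement; a document is either in the result set (TRUE) or not (FALSE).

```python
# Toy collection (hypothetical documents, invented for this sketch).
docs = {
    1: "president lincoln biography",
    2: "lincoln automobile dealer",
    3: "president lincoln gettysburg address",
    4: "used car prices",
}

# Inverted index: term -> set of ids of the documents containing that term.
index = {}
for doc_id, text in docs.items():
    for term in text.split():
        index.setdefault(term, set()).add(doc_id)

all_ids = set(docs)


def postings(term):
    """Return the set of documents containing `term` (empty if unseen)."""
    return index.get(term, set())


# lincoln AND NOT (automobile OR car)
# AND = intersection, OR = union, NOT = complement within the collection.
vehicle = postings("automobile") | postings("car")
result = postings("lincoln") & (all_ids - vehicle)

print(sorted(postings("lincoln")))  # documents matching "lincoln" alone
print(sorted(result))               # documents surviving the NOT clause
```

There is no score attached to any document in `result`: every member matches equally, which is why the slides call this the simplest form of ranking.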

Boolean Retrieval

- Advantages
  - Results are predictable, relatively easy to explain
  - Many different features can be incorporated
  - Efficient processing since many documents can be eliminated from search
- Disadvantages
  - Effectiveness depends entirely on user
  - Simple queries usually don’t work well
  - Complex queries are difficult

Searching by Numbers

- Sequence of queries driven by number of retrieved documents
  - e.g. “lincoln” search of news articles
  - president AND lincoln AND NOT (automobile OR car)
  - president AND lincoln AND biography AND life AND birthplace AND gettysburg AND NOT (automobile OR car)
  - president AND lincoln AND (biography OR life OR birthplace OR gettysburg) AND NOT (automobile OR car)
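The query sequence above can be sketched as follows, assuming a hypothetical six-document collection in which each document is reduced to its set of terms. The counts are only illustrative; the point is how the size of the result set drives the next reformulation (too many hits, then too few, then a compromise).

```python
# Hypothetical mini-collection: each document is reduced to its set of terms.
docs = [
    {"president", "lincoln", "biography", "life"},
    {"president", "lincoln", "gettysburg"},
    {"president", "lincoln", "automobile"},
    {"president", "lincoln", "biography", "life", "birthplace", "gettysburg"},
    {"president", "lincoln", "election"},
    {"lincoln", "car", "dealer"},
]

TOPIC = {"biography", "life", "birthplace", "gettysburg"}
VEHICLE = {"automobile", "car"}


def base(d):
    """president AND lincoln AND NOT (automobile OR car)"""
    return {"president", "lincoln"} <= d and d.isdisjoint(VEHICLE)


queries = [
    ("base query", base),
    # AND over all topic terms: very restrictive.
    ("base AND all topic terms", lambda d: base(d) and TOPIC <= d),
    # OR over the topic terms: a middle ground.
    ("base AND any topic term", lambda d: base(d) and not d.isdisjoint(TOPIC)),
]

counts = [sum(1 for d in docs if matches(d)) for _, matches in queries]
for (label, _), n in zip(queries, counts):
    print(f"{label}: {n} documents")
```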

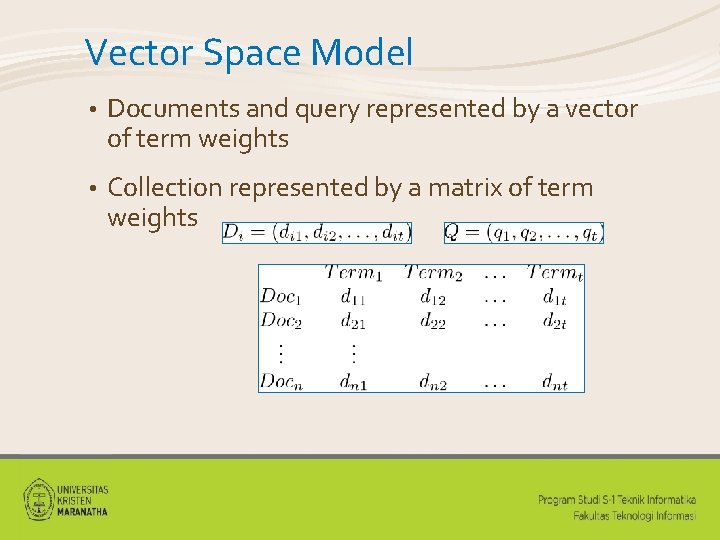

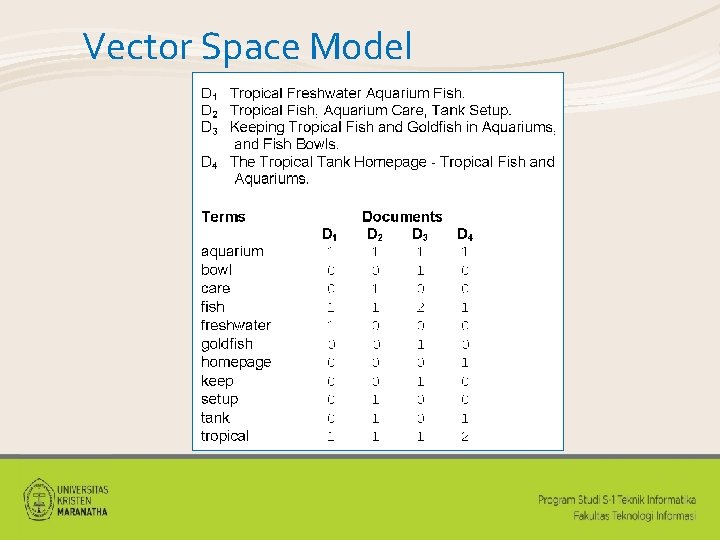

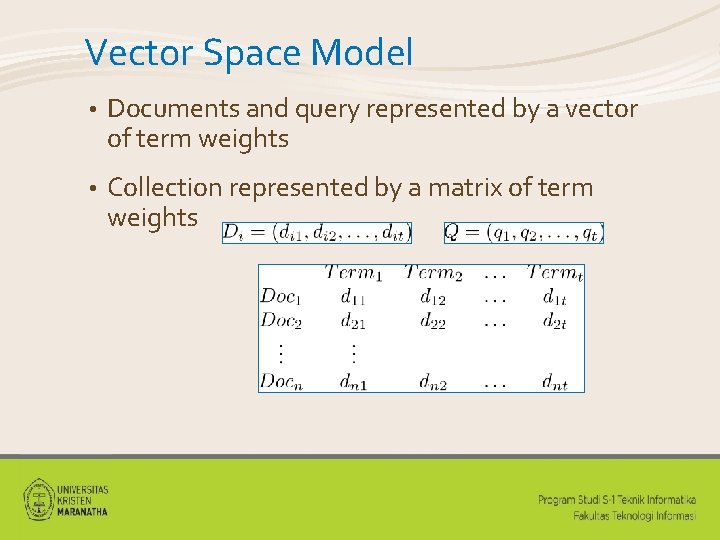

Vector Space Model

- Documents and query represented by a vector of term weights
- Collection represented by a matrix of term weights
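As a rough illustration, the sketch below builds such a matrix for three invented documents, using raw term counts as the weights (an assumption made only to keep the example short; any weighting scheme could fill the cells). The names `vocab` and `to_vector` are hypothetical.

```python
# Three invented documents; raw term counts stand in for term weights.
docs = ["tropical fish tank", "fish aquarium fish", "tropical aquarium"]

# One dimension per distinct term in the collection.
vocab = sorted({term for text in docs for term in text.split()})


def to_vector(text):
    """Map a text to a vector with one weight per vocabulary term."""
    tokens = text.split()
    return [tokens.count(term) for term in vocab]


# The collection is a matrix: one row per document, one column per term.
matrix = [to_vector(text) for text in docs]

# The query lives in the same space as the documents.
query_vector = to_vector("tropical fish")

print(vocab)
for row in matrix:
    print(row)
print(query_vector)
```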

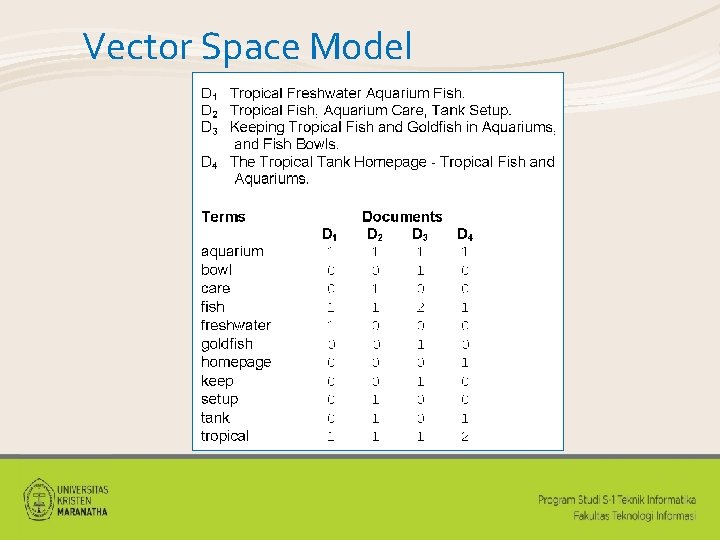

Vector Space Model

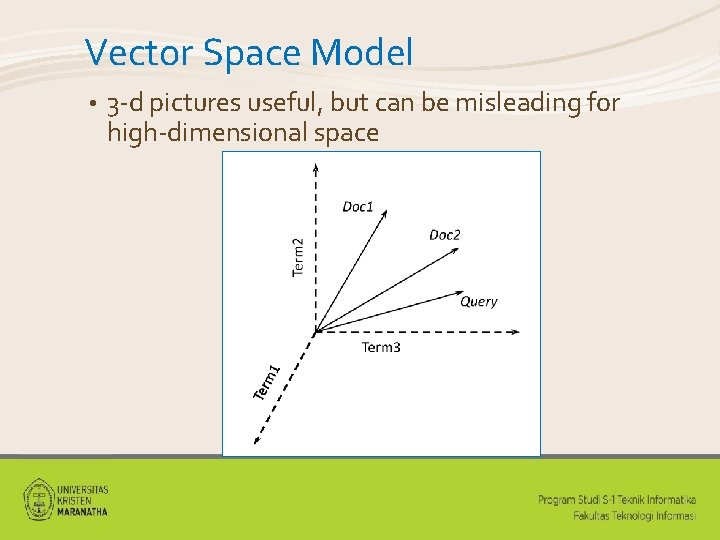

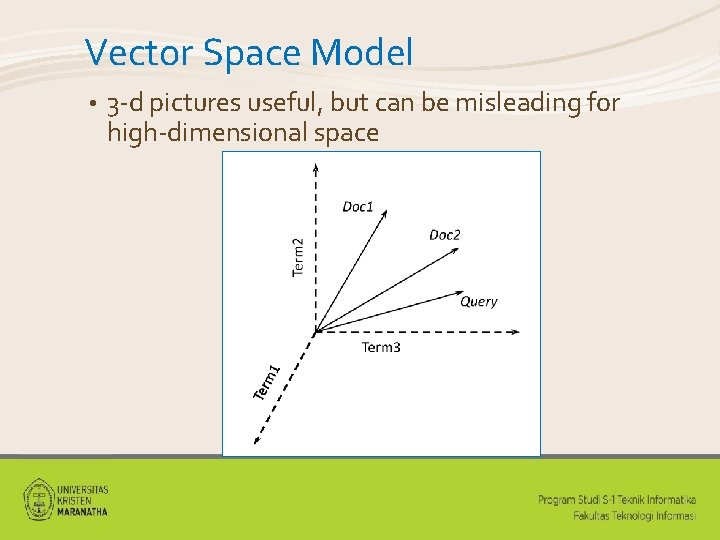

Vector Space Model

- 3-D pictures useful, but can be misleading for high-dimensional space

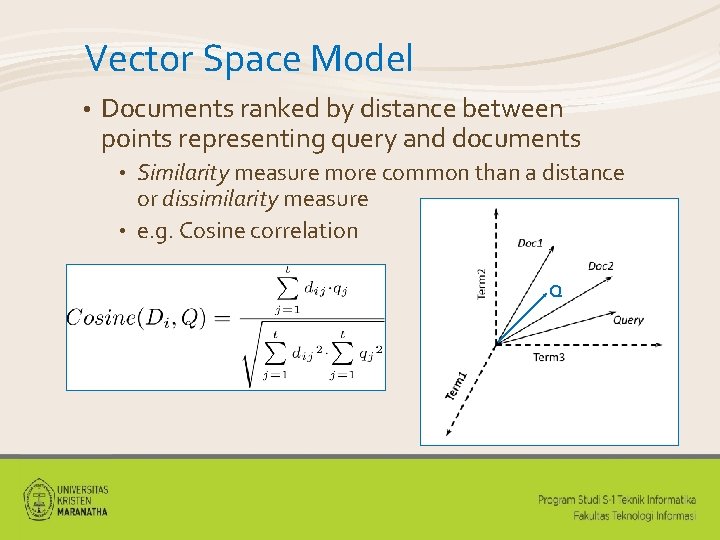

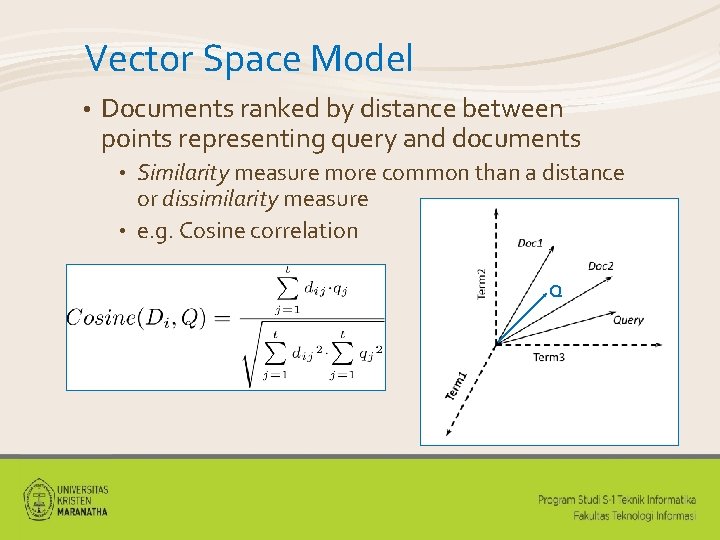

Vector Space Model

- Documents ranked by distance between points representing query and documents
  - Similarity measure more common than a distance or dissimilarity measure
  - e.g. Cosine correlation

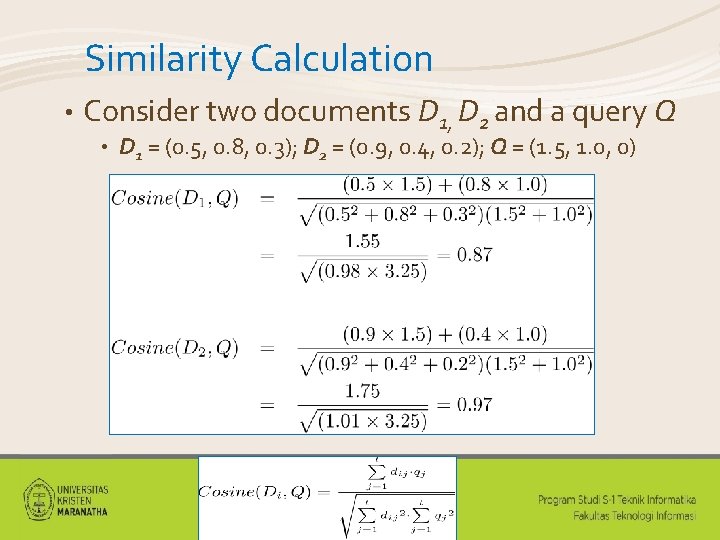

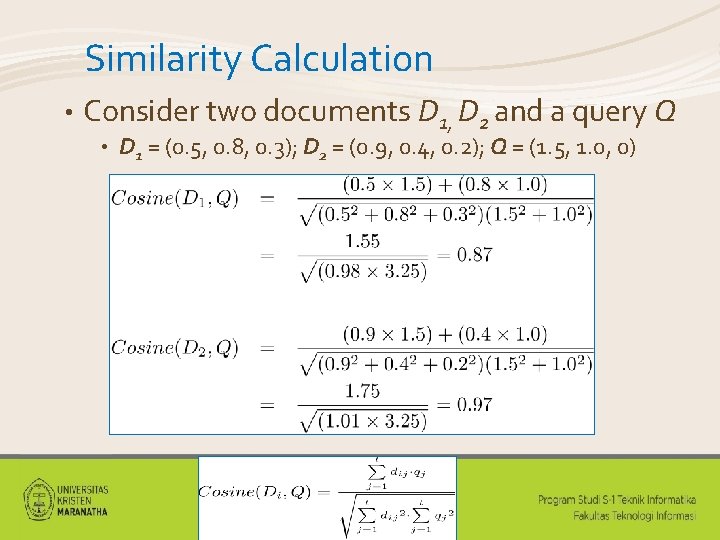

Similarity Calculation

- Consider two documents D1, D2 and a query Q
  - D1 = (0.5, 0.8, 0.3); D2 = (0.9, 0.4, 0.2); Q = (1.5, 1.0, 0)
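The calculation for these three vectors can be sketched as below, assuming the cosine measure is the dot product divided by the product of the two vector lengths. The function name `cosine` is just a label chosen for this sketch.

```python
import math


def cosine(x, y):
    """Cosine of the angle between x and y: dot product over product of lengths."""
    dot = sum(a * b for a, b in zip(x, y))
    len_x = math.sqrt(sum(a * a for a in x))
    len_y = math.sqrt(sum(b * b for b in y))
    return dot / (len_x * len_y)


D1 = (0.5, 0.8, 0.3)
D2 = (0.9, 0.4, 0.2)
Q = (1.5, 1.0, 0.0)

print(round(cosine(D1, Q), 2))  # 0.87
print(round(cosine(D2, Q), 2))  # 0.97
```

D2 ranks above D1 even though D1 has the larger weight on the second term, because D2 points in a direction closer to Q overall.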

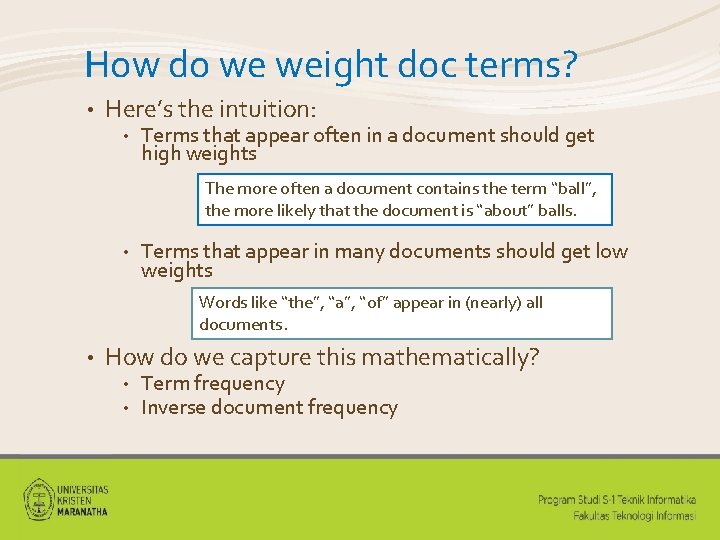

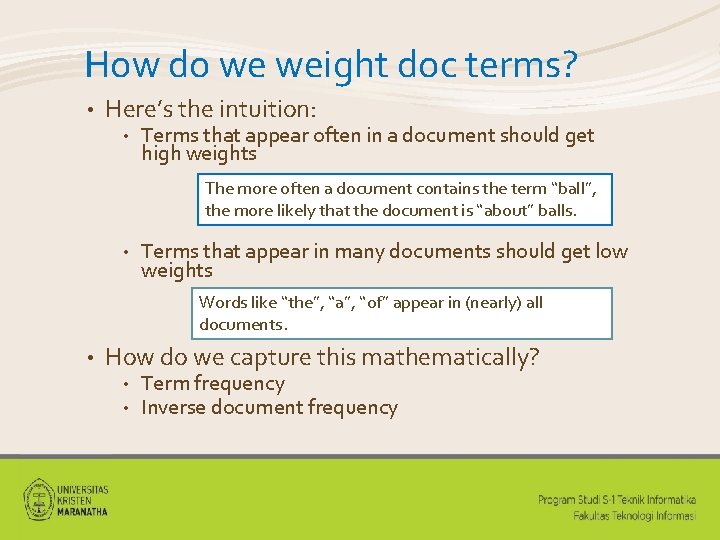

How do we weight doc terms?

- Here’s the intuition:
  - Terms that appear often in a document should get high weights. The more often a document contains the term “ball”, the more likely that the document is “about” balls.
  - Terms that appear in many documents should get low weights. Words like “the”, “a”, “of” appear in (nearly) all documents.
- How do we capture this mathematically?
  - Term frequency
  - Inverse document frequency

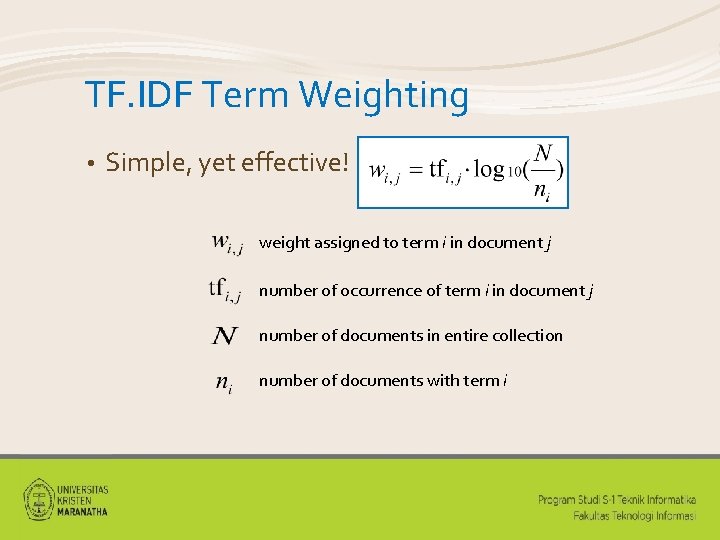

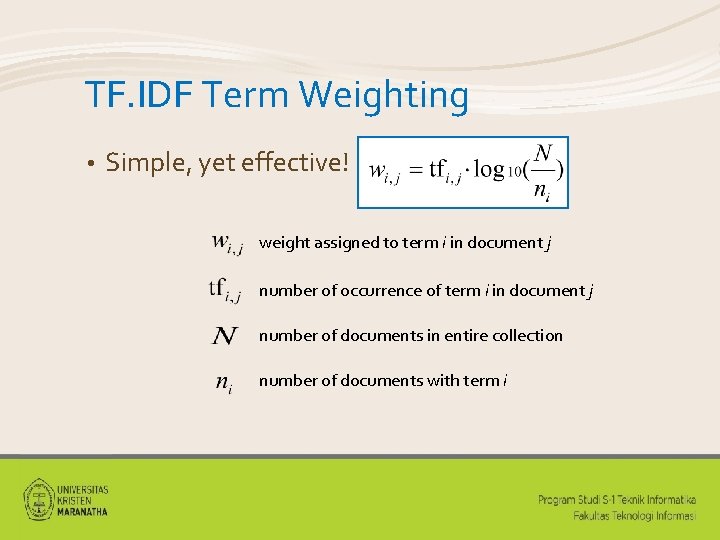

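TF.IDF Term Weighting

- Simple, yet effective!

$$w_{i,j} = \mathrm{tf}_{i,j} \cdot \log \frac{N}{n_i}$$

- $w_{i,j}$: weight assigned to term i in document j
- $\mathrm{tf}_{i,j}$: number of occurrences of term i in document j
- $N$: number of documents in entire collection
- $n_i$: number of documents with term i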

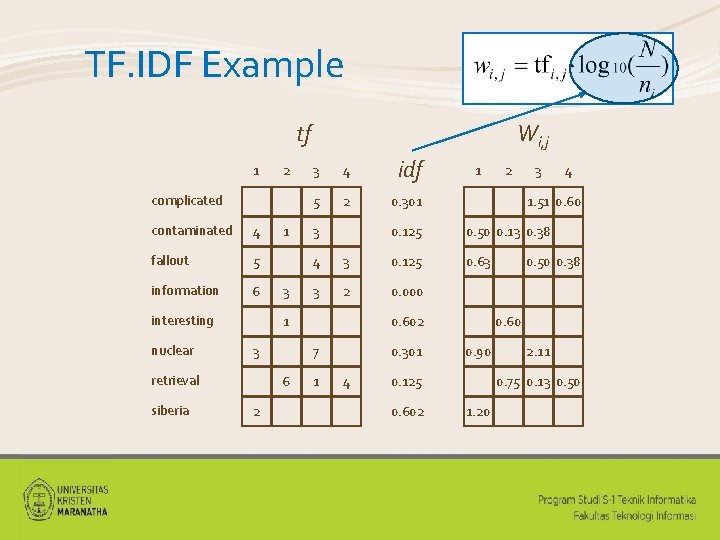

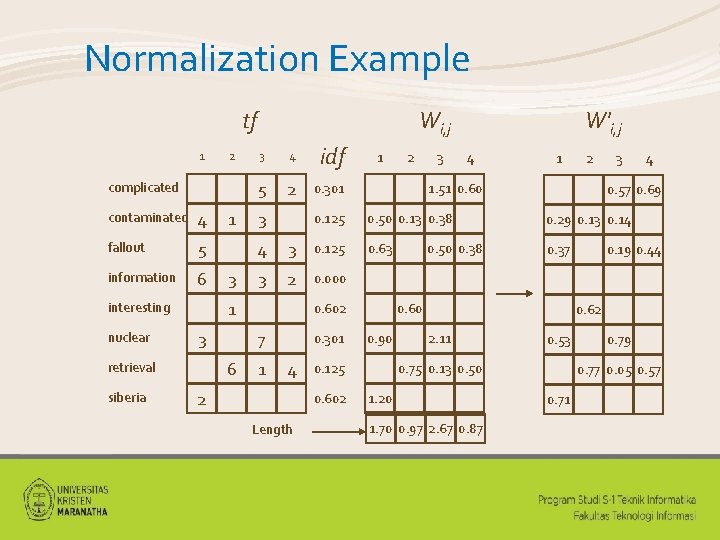

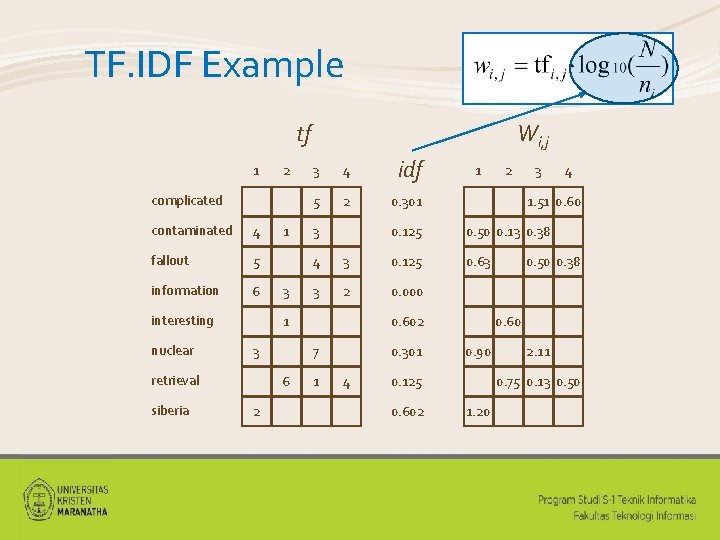

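TF.IDF Example

| Term | tf: Doc 1 | tf: Doc 2 | tf: Doc 3 | tf: Doc 4 | idf | W: Doc 1 | W: Doc 2 | W: Doc 3 | W: Doc 4 |
|---|---|---|---|---|---|---|---|---|---|
| complicated | | | 5 | 2 | 0.301 | | | 1.51 | 0.60 |
| contaminated | 4 | 1 | 3 | | 0.125 | 0.50 | 0.13 | 0.38 | |
| fallout | 5 | | 4 | 3 | 0.125 | 0.63 | | 0.50 | 0.38 |
| information | 6 | 3 | 3 | 2 | 0.000 | | | | |
| interesting | | 1 | | | 0.602 | | 0.60 | | |
| nuclear | 3 | | 7 | | 0.301 | 0.90 | | 2.11 | |
| retrieval | | 6 | 1 | 4 | 0.125 | | 0.75 | 0.13 | 0.50 |
| siberia | 2 | | | | 0.602 | 1.20 | | | |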
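The example weights can be recomputed with a short sketch, assuming the tf counts as read from the example and a base-10 logarithm for idf (the idf values shown, such as 0.301 and 0.602, match log10 of 2 and 4). The slide values appear to multiply by an idf already rounded to three decimals, so a few cells can differ from this computation in the last digit.

```python
import math

N = 4  # number of documents in the collection

# Term frequencies per document (Doc 1..Doc 4), as read from the example.
tf = {
    "complicated":  [0, 0, 5, 2],
    "contaminated": [4, 1, 3, 0],
    "fallout":      [5, 0, 4, 3],
    "information":  [6, 3, 3, 2],
    "interesting":  [0, 1, 0, 0],
    "nuclear":      [3, 0, 7, 0],
    "retrieval":    [0, 6, 1, 4],
    "siberia":      [2, 0, 0, 0],
}


def idf(counts):
    """log10(N / n_i), where n_i is the number of documents containing the term."""
    n_i = sum(1 for c in counts if c > 0)
    return math.log10(N / n_i)


# w[i][j] = tf[i][j] * idf[i]
weights = {term: [c * idf(counts) for c in counts] for term, counts in tf.items()}

for term, row in weights.items():
    cells = "  ".join(f"{w:5.2f}" for w in row)
    print(f"{term:<13} idf={idf(tf[term]):.3f}  {cells}")
```

Note that "information" occurs in every document, so its idf is 0 and it contributes nothing to any document vector, however often it occurs.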

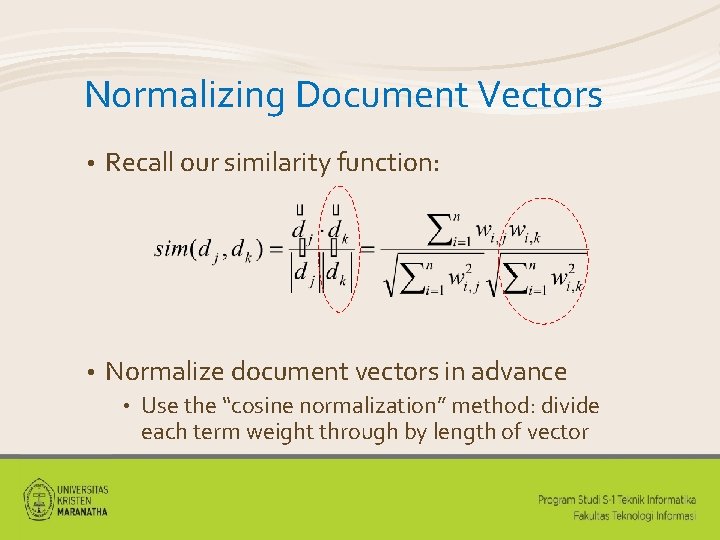

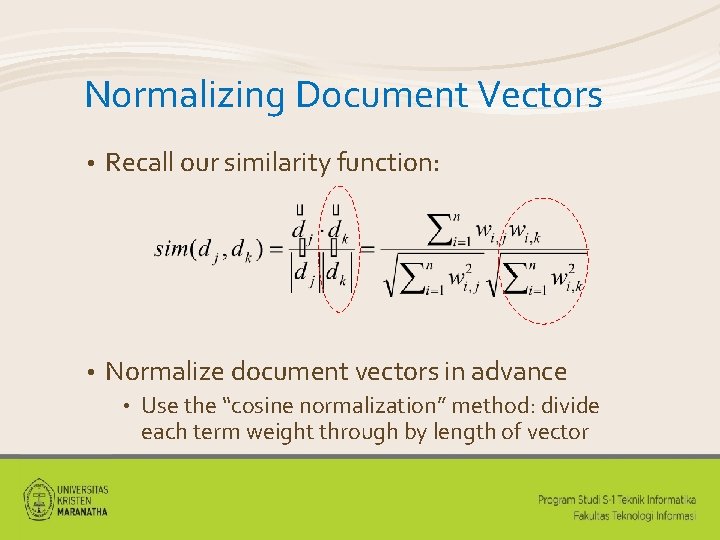

Normalizing Document Vectors

- Recall our similarity function:
- Normalize document vectors in advance
- Use the “cosine normalization” method: divide each term weight through by length of vector

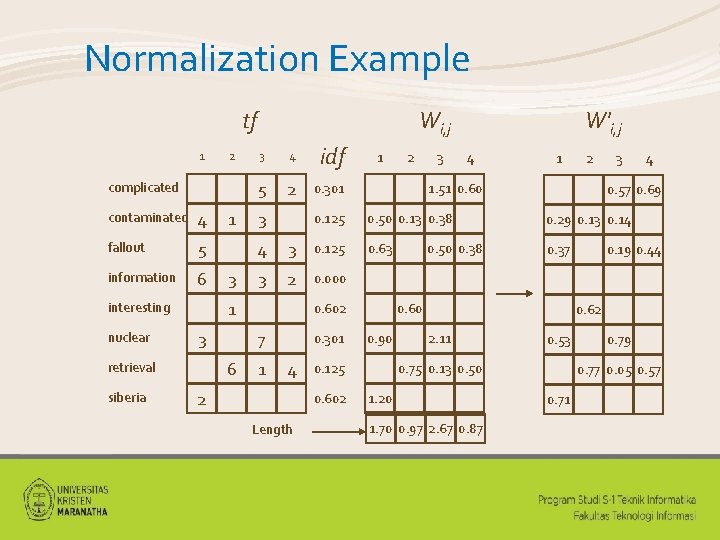

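Normalization Example

| Term | tf: Doc 1 | tf: Doc 2 | tf: Doc 3 | tf: Doc 4 | idf | W: Doc 1 | W: Doc 2 | W: Doc 3 | W: Doc 4 | W': Doc 1 | W': Doc 2 | W': Doc 3 | W': Doc 4 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| complicated | | | 5 | 2 | 0.301 | | | 1.51 | 0.60 | | | 0.57 | 0.69 |
| contaminated | 4 | 1 | 3 | | 0.125 | 0.50 | 0.13 | 0.38 | | 0.29 | 0.13 | 0.14 | |
| fallout | 5 | | 4 | 3 | 0.125 | 0.63 | | 0.50 | 0.38 | 0.37 | | 0.19 | 0.44 |
| information | 6 | 3 | 3 | 2 | 0.000 | | | | | | | | |
| interesting | | 1 | | | 0.602 | | 0.60 | | | | 0.62 | | |
| nuclear | 3 | | 7 | | 0.301 | 0.90 | | 2.11 | | 0.53 | | 0.79 | |
| retrieval | | 6 | 1 | 4 | 0.125 | | 0.75 | 0.13 | 0.50 | | 0.77 | 0.05 | 0.57 |
| siberia | 2 | | | | 0.602 | 1.20 | | | | 0.71 | | | |
| Length | | | | | | 1.70 | 0.97 | 2.67 | 0.87 | | | | |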
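A small sketch of the normalization step, assuming the unnormalized W columns as read from the example (term order: complicated, contaminated, fallout, information, interesting, nuclear, retrieval, siberia). Each vector is divided by its Euclidean length; results may differ from the slide in the last digit because the slide works from rounded intermediate values.

```python
import math

# Unnormalized tf.idf weights per document, one entry per term, as read
# from the example (0.0 where the term does not occur in the document).
W = {
    1: [0.00, 0.50, 0.63, 0.0, 0.00, 0.90, 0.00, 1.20],
    2: [0.00, 0.13, 0.00, 0.0, 0.60, 0.00, 0.75, 0.00],
    3: [1.51, 0.38, 0.50, 0.0, 0.00, 2.11, 0.13, 0.00],
    4: [0.60, 0.00, 0.38, 0.0, 0.00, 0.00, 0.50, 0.00],
}


def length(vector):
    """Euclidean length: square root of the sum of squared weights."""
    return math.sqrt(sum(w * w for w in vector))


def normalize(vector):
    """Cosine normalization: divide every weight by the vector length."""
    n = length(vector)
    return [w / n for w in vector]


lengths = {doc: length(vec) for doc, vec in W.items()}
W_norm = {doc: normalize(vec) for doc, vec in W.items()}

for doc in W:
    cells = "  ".join(f"{w:.2f}" for w in W_norm[doc])
    print(f"Doc {doc}: length={lengths[doc]:.2f}  {cells}")
```

After this step every document vector has length 1, so a long document no longer scores higher merely because it contains more term occurrences.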

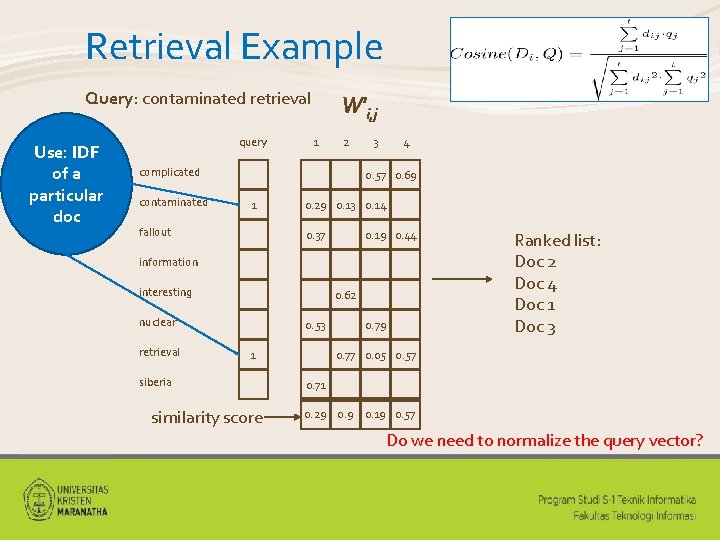

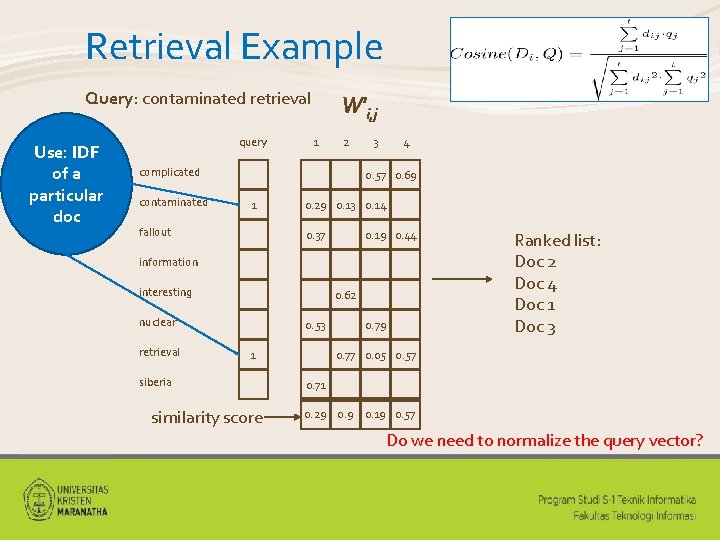

Retrieval Example

Query: contaminated retrieval

Use: IDF of a particular doc

| Term | query | W': Doc 1 | W': Doc 2 | W': Doc 3 | W': Doc 4 |
|---|---|---|---|---|---|
| complicated | | | | 0.57 | 0.69 |
| contaminated | 1 | 0.29 | 0.13 | 0.14 | |
| fallout | | 0.37 | | 0.19 | 0.44 |
| information | | | | | |
| interesting | | | 0.62 | | |
| nuclear | | 0.53 | | 0.79 | |
| retrieval | 1 | | 0.77 | 0.05 | 0.57 |
| siberia | | 0.71 | | | |
| similarity score | | 0.29 | 0.90 | 0.19 | 0.57 |

Ranked list: Doc 2, Doc 4, Doc 1, Doc 3

Do we need to normalize the query vector?
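One way to explore the closing question is the sketch below, which scores the query against the normalized document vectors by inner product, once with the raw query weights and once with a unit-length query. The W' values are as read from the example; the names `score` and `rank` are hypothetical helpers.

```python
import math

# Normalized document vectors (W'), stored sparsely: only non-zero terms.
W_norm = {
    1: {"contaminated": 0.29, "fallout": 0.37, "nuclear": 0.53, "siberia": 0.71},
    2: {"contaminated": 0.13, "interesting": 0.62, "retrieval": 0.77},
    3: {"complicated": 0.57, "contaminated": 0.14, "fallout": 0.19,
        "nuclear": 0.79, "retrieval": 0.05},
    4: {"complicated": 0.69, "fallout": 0.44, "retrieval": 0.57},
}


def score(query, doc_vector):
    """Inner product of the query and one document vector."""
    return sum(w * doc_vector.get(term, 0.0) for term, w in query.items())


def rank(query):
    """Return (document ids by descending score, score per document)."""
    scores = {doc: score(query, vec) for doc, vec in W_norm.items()}
    return sorted(scores, key=scores.get, reverse=True), scores


query = {"contaminated": 1.0, "retrieval": 1.0}

# Same query scaled to unit length.
q_len = math.sqrt(sum(w * w for w in query.values()))
unit_query = {term: w / q_len for term, w in query.items()}

ranking, scores = rank(query)
unit_ranking, unit_scores = rank(unit_query)

print(ranking, {d: round(s, 2) for d, s in scores.items()})
print(unit_ranking, {d: round(s, 2) for d, s in unit_scores.items()})
```

Normalizing the query divides every score by the same constant, so the scores change but the ranked list does not. That suggests query normalization matters only when scores from different queries are compared.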

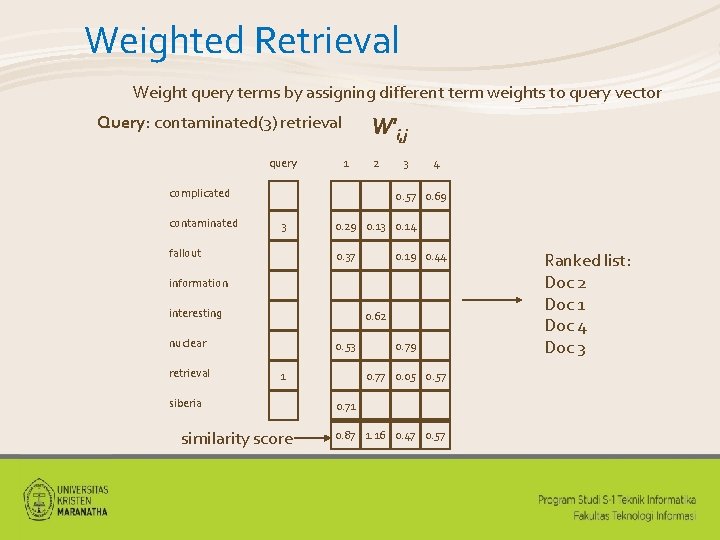

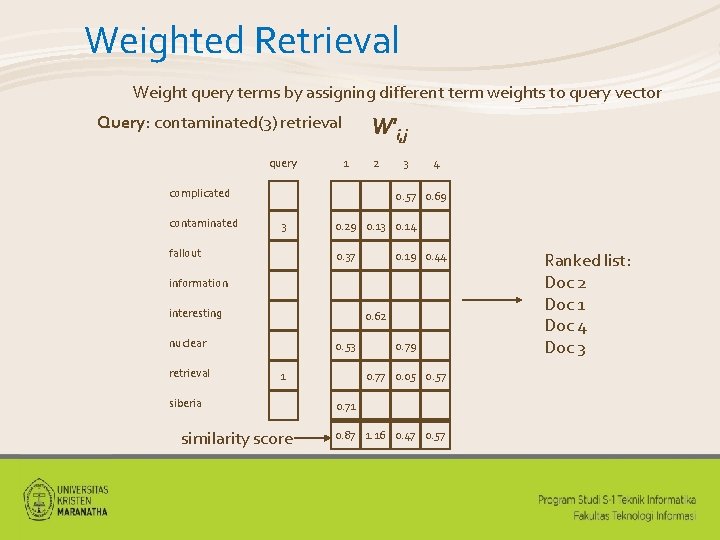

Weighted Retrieval

Weight query terms by assigning different term weights to query vector

Query: contaminated(3) retrieval

| Term | query | W': Doc 1 | W': Doc 2 | W': Doc 3 | W': Doc 4 |
|---|---|---|---|---|---|
| complicated | | | | 0.57 | 0.69 |
| contaminated | 3 | 0.29 | 0.13 | 0.14 | |
| fallout | | 0.37 | | 0.19 | 0.44 |
| information | | | | | |
| interesting | | | 0.62 | | |
| nuclear | | 0.53 | | 0.79 | |
| retrieval | 1 | | 0.77 | 0.05 | 0.57 |
| siberia | | 0.71 | | | |
| similarity score | | 0.87 | 1.16 | 0.47 | 0.57 |

Ranked list: Doc 2, Doc 1, Doc 4, Doc 3
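The effect of the query weight can be checked with a short sketch that keeps only the two rows the query touches (W' values as read from the example). The function `ranked` is a hypothetical helper taking one weight per query term.

```python
# W' values for the two query terms, Doc 1..Doc 4 (0.0 where the term is absent).
contaminated = [0.29, 0.13, 0.14, 0.00]
retrieval = [0.00, 0.77, 0.05, 0.57]


def ranked(w_contaminated, w_retrieval):
    """Score each document by the weighted inner product and rank by score."""
    scores = [
        w_contaminated * c + w_retrieval * r
        for c, r in zip(contaminated, retrieval)
    ]
    order = sorted(range(1, 5), key=lambda doc: scores[doc - 1], reverse=True)
    return order, [round(s, 2) for s in scores]


print(ranked(1, 1))  # ([2, 4, 1, 3], [0.29, 0.9, 0.19, 0.57])
print(ranked(3, 1))  # ([2, 1, 4, 3], [0.87, 1.16, 0.47, 0.57])
```

Tripling the weight on "contaminated" moves Doc 1 above Doc 4: Doc 4 does not contain the term at all, so its score stays at 0.57 while Doc 1 rises from 0.29 to 0.87.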

Vector Space Model

- Advantages
  - Simple computational framework for ranking
  - Any similarity measure or term weighting scheme could be used
- Disadvantages
  - Assumption of term independence
  - No predictions about techniques for effective ranking

Summary

- Boolean retrieval is powerful in the hands of a trained searcher
- Ranked retrieval is preferred in other circumstances
- Key ideas in the vector space model
  - Goal: find documents most similar to the query
  - Geometric interpretation: measure similarity in terms of angles between vectors in high-dimensional space
  - Document weights are some combination of TF, DF, and length
  - Length normalization is critical
  - Similarity is calculated via the inner product

What’s the point?

- Information seeking behavior is incredibly complex
- In order to build actual systems, we must make many simplifications
  - Absolutely unrealistic assumptions!
  - But the resulting systems are nevertheless useful
- Know what these limitations are!

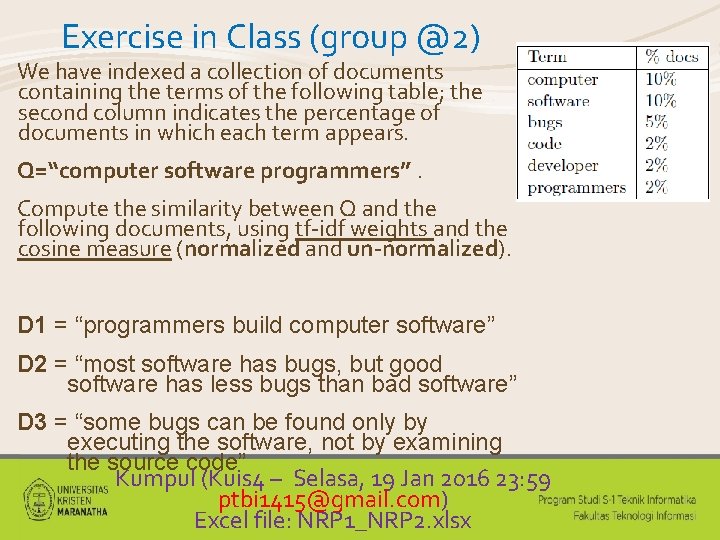

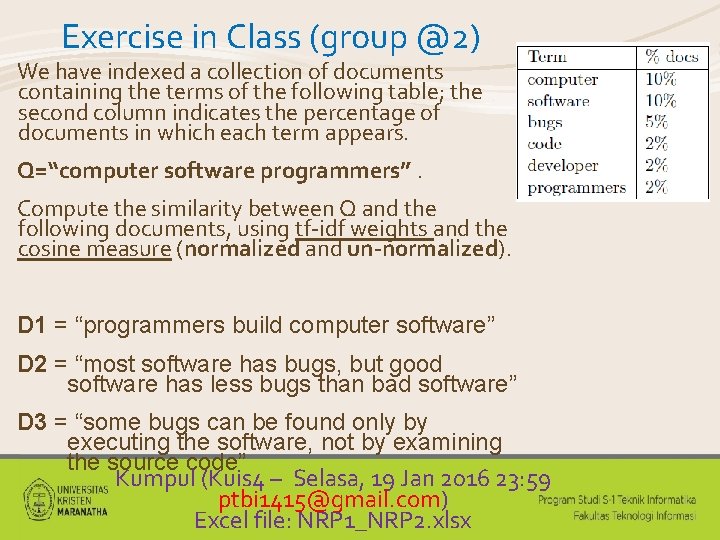

Exercise in Class (groups of 2)

We have indexed a collection of documents containing the terms of the following table; the second column indicates the percentage of documents in which each term appears.

Q = “computer software programmers”. Compute the similarity between Q and the following documents, using tf-idf weights and the cosine measure (normalized and un-normalized).

- D1 = “programmers build computer software”
- D2 = “most software has bugs, but good software has less bugs than bad software”
- D3 = “some bugs can be found only by executing the software, not by examining the source code”

Submit (Quiz 4, Tuesday, 19 Jan 2016 23:59, ptbi1415@gmail.com). Excel file: NRP1_NRP2.xlsx