CGS 3763 Operating Systems Concepts Spring 2013 Dan

- Slides: 23

CGS 3763 Operating Systems Concepts, Spring 2013

- Dan C. Marinescu
- Office: HEC 304
- Office hours: M-Wd 11:30 - 12:30 AM

Lecture 9 – Monday, January 28, 2013

- Last time:
- Today: Thread coordination, Locks
- Next time:

Thread coordination

- Critical section: code that accesses a shared resource.
- Race condition: two or more threads access shared data and the result depends on the order in which the threads access the shared data.
- Mutual exclusion: only one thread should execute a critical section at any one time.
- Scheduling algorithms: decide which thread to choose when multiple threads are in a RUNNABLE state.
  - FIFO: first in, first out
  - LIFO: last in, first out
  - Priority scheduling
  - EDF: earliest deadline first
- Preemption: the ability to stop a running activity and start another one with a higher priority.
- Side effects of thread coordination:
  - Deadlock
  - Priority inversion: a lower priority activity is allowed to run before one with a higher priority.
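The four scheduling policies listed above differ only in which attribute of a RUNNABLE thread they compare. A minimal sketch follows; the `RunnableThread` record, its fields, the `pick_next` helper, and the sample values are all hypothetical and serve only as illustration. It assumes that a larger number means a higher priority.

```python
from dataclasses import dataclass


@dataclass
class RunnableThread:
    name: str
    arrival: int    # time at which the thread became RUNNABLE
    priority: int   # assumption: a larger number means a higher priority
    deadline: int   # absolute deadline, used only by EDF


def pick_next(runnable, policy):
    """Return the thread that the given policy would run next."""
    if policy == "FIFO":        # oldest arrival first
        return min(runnable, key=lambda t: t.arrival)
    if policy == "LIFO":        # newest arrival first
        return max(runnable, key=lambda t: t.arrival)
    if policy == "PRIORITY":    # highest priority first
        return max(runnable, key=lambda t: t.priority)
    if policy == "EDF":         # earliest deadline first
        return min(runnable, key=lambda t: t.deadline)
    raise ValueError(f"unknown policy: {policy}")


# Made-up sample data for illustration only.
runnable = [
    RunnableThread("T1", arrival=0, priority=1, deadline=50),
    RunnableThread("T2", arrival=1, priority=5, deadline=30),
    RunnableThread("T3", arrival=2, priority=3, deadline=10),
]
```

With this sample data, FIFO picks T1, LIFO and EDF pick T3, and priority scheduling picks T2.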

Solutions to thread coordination problems must satisfy a set of conditions

1. Safety: the required condition will never be violated.
2. Liveness: the system should eventually progress irrespective of contention.
3. Freedom from starvation: no process should be denied progress forever. That is, every process should make progress in a finite time.
4. Bounded wait: every process is assured of not more than a fixed number of overtakes by other processes in the system before it makes progress.
5. Fairness: dependent on the scheduling algorithm.
   - FIFO: no process will ever overtake another process.
   - LRU: the process which received the service least recently gets the service next.

For example, for the mutual exclusion problem the solution should guarantee:

- Safety: the mutual exclusion property is never violated.
- Liveness: some thread that wants the shared resource will access it in a finite time.
- Freedom from starvation: every thread that wants the shared resource will access it in a finite time.
- Bounded wait: a thread will access the shared resource after at most a fixed number of accesses by other threads.

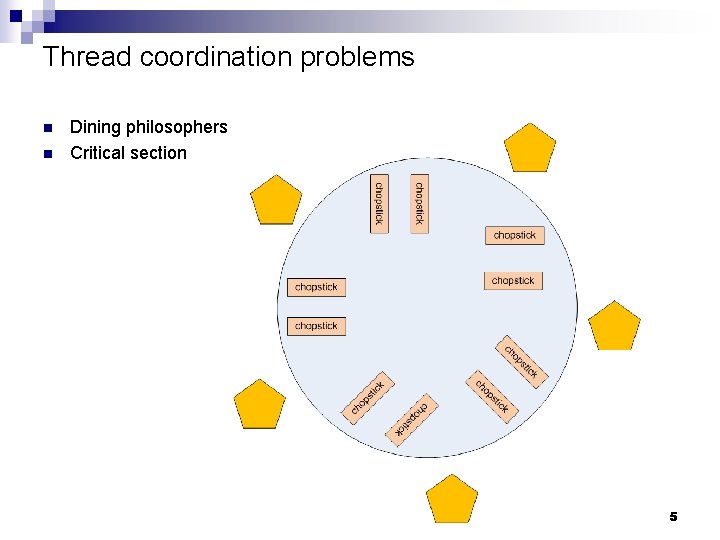

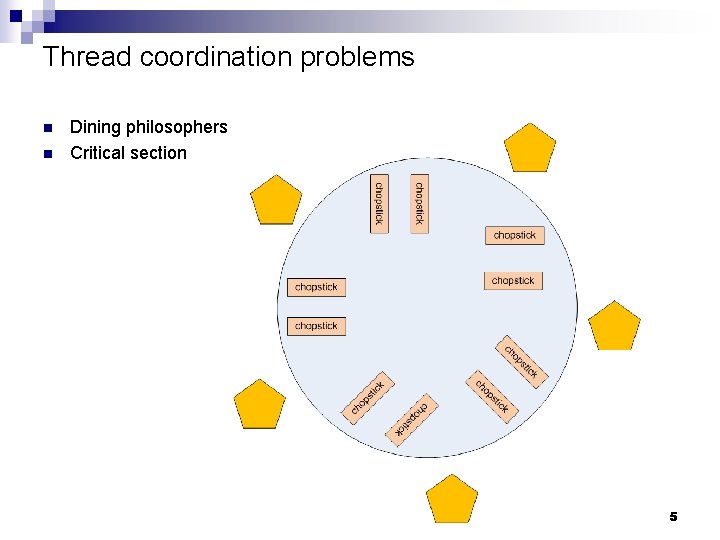

Thread coordination problems

- Dining philosophers
- Critical section

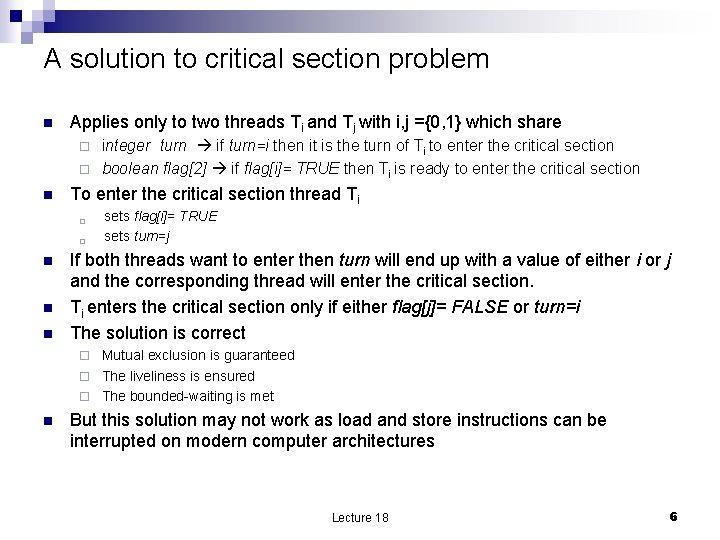

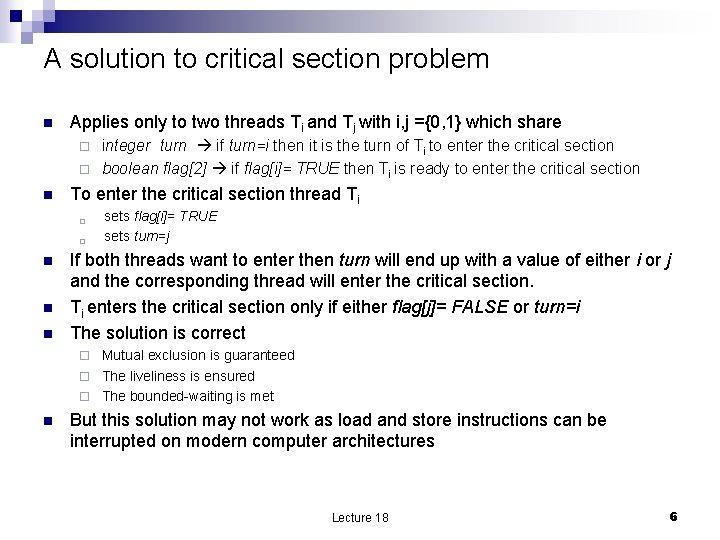

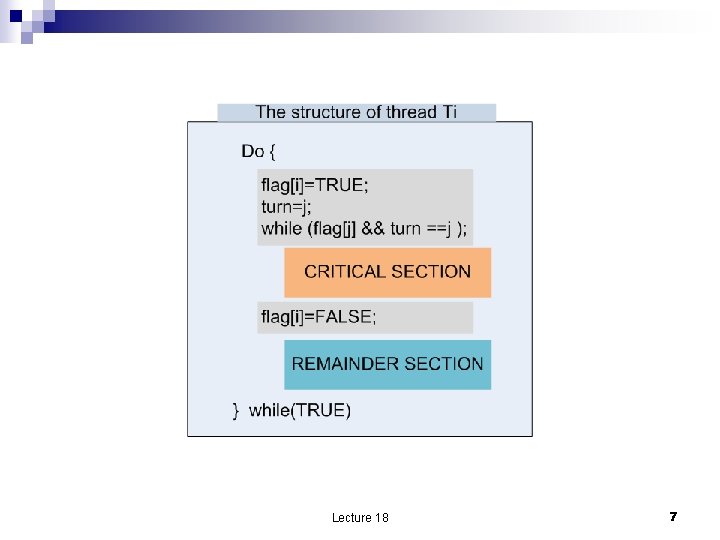

A solution to the critical section problem

- Applies only to two threads Ti and Tj, with i, j ∈ {0, 1}, which share:
  - integer turn: if turn = i then it is the turn of Ti to enter the critical section.
  - boolean flag[2]: if flag[i] = TRUE then Ti is ready to enter the critical section.
- To enter the critical section thread Ti:
  - sets flag[i] = TRUE
  - sets turn = j
- If both threads want to enter then turn will end up with a value of either i or j and the corresponding thread will enter the critical section.
- Ti enters the critical section only if either flag[j] = FALSE or turn = i.
- The solution is correct:
  - Mutual exclusion is guaranteed.
  - Liveness is ensured.
  - The bounded-waiting requirement is met.
- But this solution may not work on modern computer architectures: it assumes that load and store instructions are atomic and take effect in program order, which compilers and processors do not guarantee.
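The two-thread protocol above is commonly known as Peterson's solution. The sketch below follows the slide's `flag` and `turn` variables; the helper names `enter`, `leave`, `worker`, and `run` are hypothetical. It assumes CPython, where the interpreter happens to execute these loads and stores in program order. The exit step, which sets `flag[i]` back to FALSE, is not on the slide and is added here as an assumption.

```python
import threading
import time

flag = [False, False]   # flag[i] is True when thread i is ready to enter
turn = 0                # whose turn it is when both threads are ready
counter = 0             # shared data touched only inside the critical section


def enter(i):
    """Entry protocol for thread i; the other thread is j = 1 - i."""
    global turn
    j = 1 - i
    flag[i] = True
    turn = j
    # Ti may proceed only if flag[j] is False or turn == i.
    while flag[j] and turn == j:
        time.sleep(0)   # yield the interpreter so the other thread can run


def leave(i):
    """Exit protocol (assumption, not on the slide): Ti is no longer ready."""
    flag[i] = False


def worker(i, rounds):
    global counter
    for _ in range(rounds):
        enter(i)
        value = counter         # critical section: read-modify-write
        counter = value + 1
        leave(i)


def run(rounds=200):
    """Run both threads and return the final value of the shared counter."""
    global counter
    counter = 0
    threads = [threading.Thread(target=worker, args=(i, rounds)) for i in (0, 1)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter
```

If mutual exclusion holds, `run(rounds)` returns `2 * rounds` because no increment is lost. In a language with a weaker memory model the same code would need memory fences or atomic variables.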


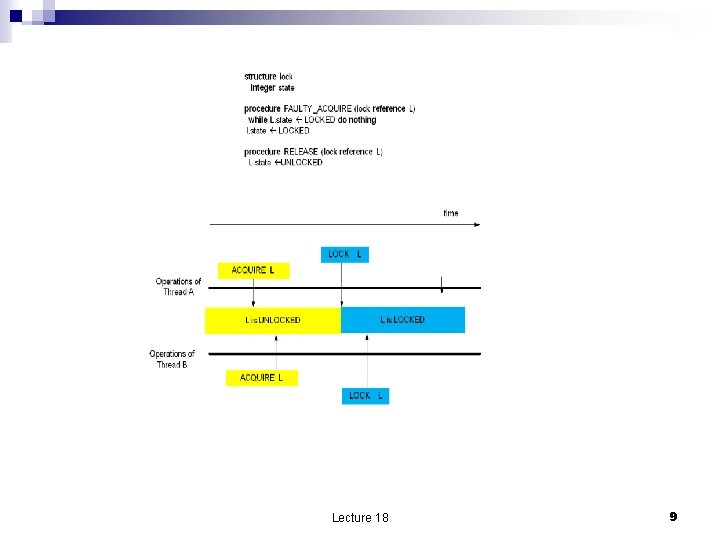

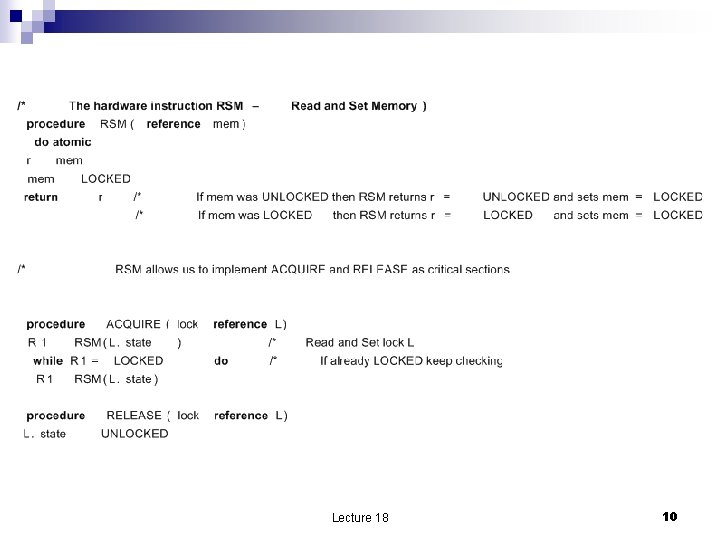

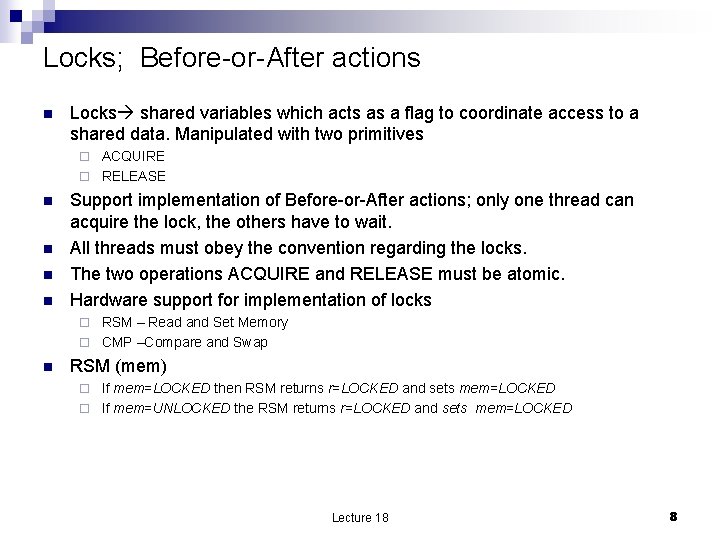

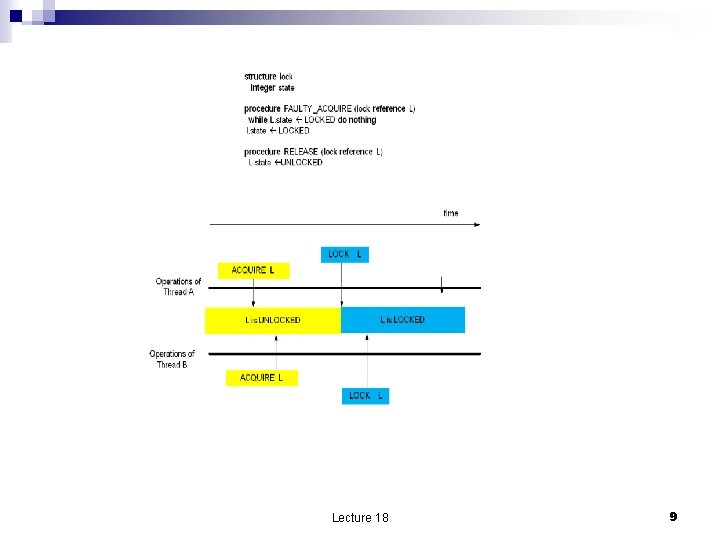

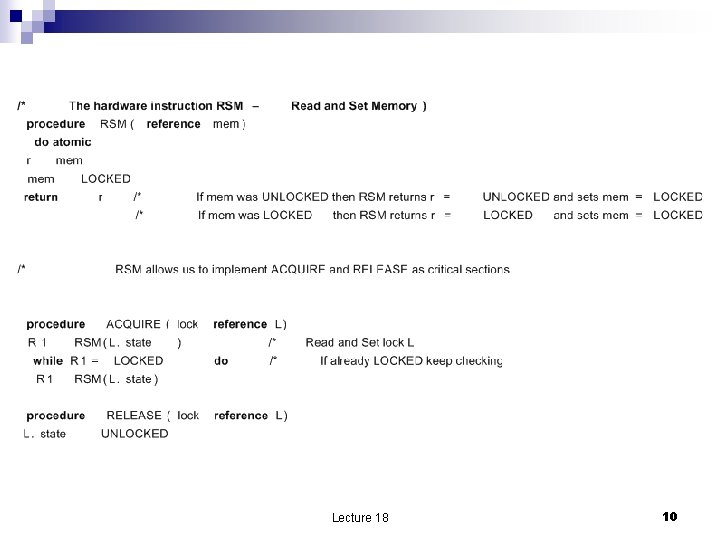

Locks; Before-or-After actions

- Lock: a shared variable that acts as a flag to coordinate access to shared data. Manipulated with two primitives:
  - ACQUIRE
  - RELEASE
- Locks support the implementation of Before-or-After actions; only one thread can acquire the lock, the others have to wait.
- All threads must obey the convention regarding the locks.
- The two operations ACQUIRE and RELEASE must be atomic.
- Hardware support for the implementation of locks:
  - RSM: Read and Set Memory
  - CMP: Compare and Swap
- RSM(mem):
  - If mem = LOCKED then RSM returns r = LOCKED and sets mem = LOCKED.
  - If mem = UNLOCKED then RSM returns r = UNLOCKED and sets mem = LOCKED.
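ACQUIRE can be built as a loop around RSM: a thread owns the lock only when RSM reports that the previous value was UNLOCKED. Python has no RSM instruction, so the sketch below simulates one. The `Cell` class, its private `threading.Lock`, and the `count_with_lock` demo are assumptions made for illustration. The private lock stands in for the atomicity that real hardware would provide.

```python
import threading
import time

LOCKED, UNLOCKED = 1, 0


class Cell:
    """One memory cell with a simulated atomic RSM instruction."""

    def __init__(self):
        self.value = UNLOCKED
        self._atomic = threading.Lock()   # stand-in for hardware atomicity

    def rsm(self):
        """Atomically return the previous value and set the cell to LOCKED."""
        with self._atomic:
            old = self.value
            self.value = LOCKED
            return old

    def store(self, value):
        """Atomic store, so a release cannot interleave with an rsm()."""
        with self._atomic:
            self.value = value


def acquire(cell):
    while cell.rsm() == LOCKED:   # the lock was already held: try again
        time.sleep(0)             # yield instead of spinning hot


def release(cell):
    cell.store(UNLOCKED)


def count_with_lock(n_threads=4, rounds=200):
    """Increment a shared total under the lock; return the final total."""
    lock, total = Cell(), [0]

    def worker():
        for _ in range(rounds):
            acquire(lock)
            total[0] = total[0] + 1   # before-or-after action
            release(lock)

    threads = [threading.Thread(target=worker) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return total[0]
```

Only the caller that sees `UNLOCKED` returned by `rsm()` holds the lock; every other caller sees `LOCKED` and retries.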


Deadlocks

- Happen quite often in real life and the proposed solutions are not always logical: "When two trains approach each other at a crossing, both shall come to a full stop and neither shall start up again until the other has gone." A pearl from Kansas legislation.
- Deadlocked jury.
- Deadlocked legislative body.

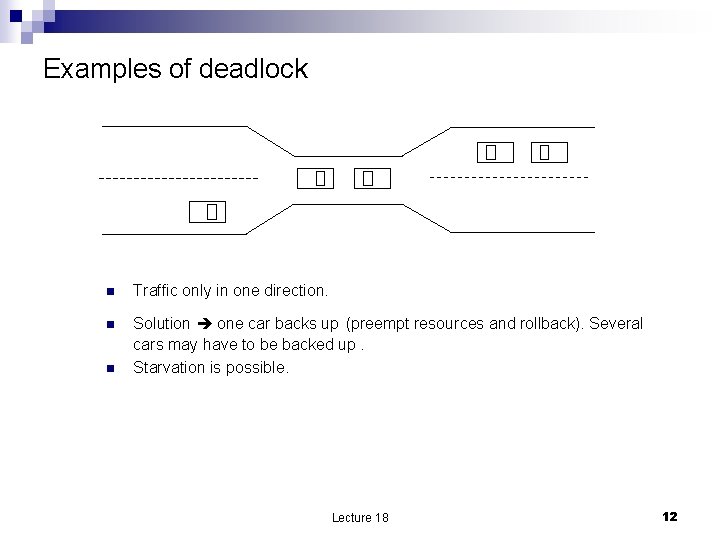

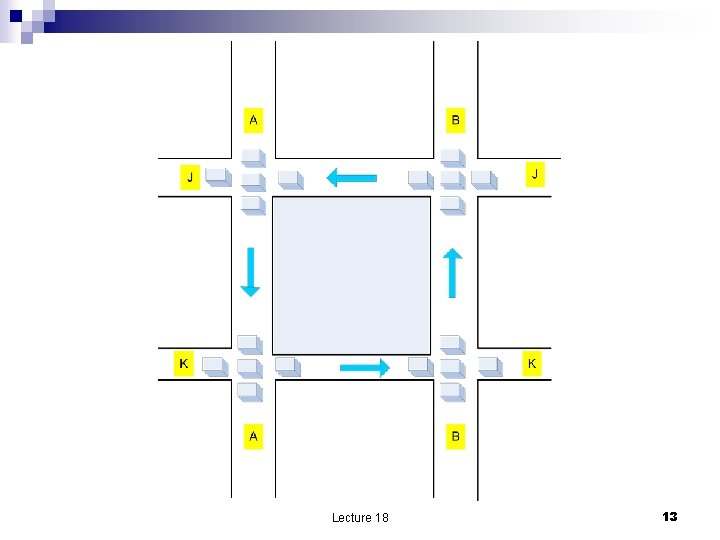

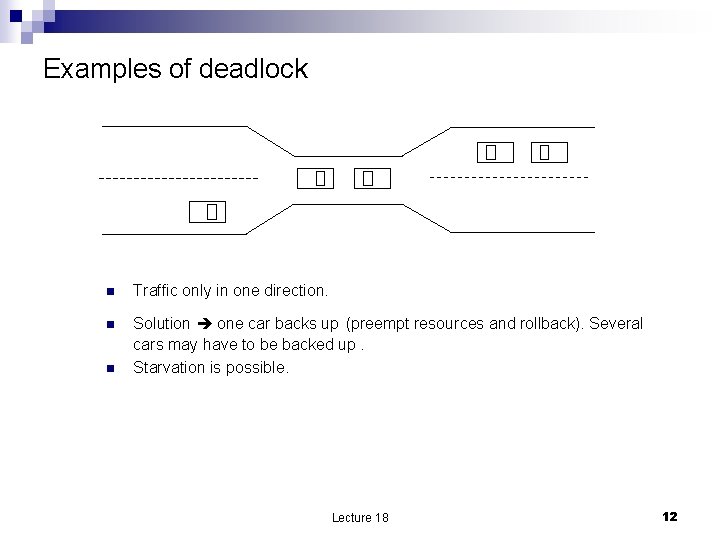

Examples of deadlock

- Traffic only in one direction.
- Solution: one car backs up (preempt resources and rollback).
- Several cars may have to be backed up.
- Starvation is possible.

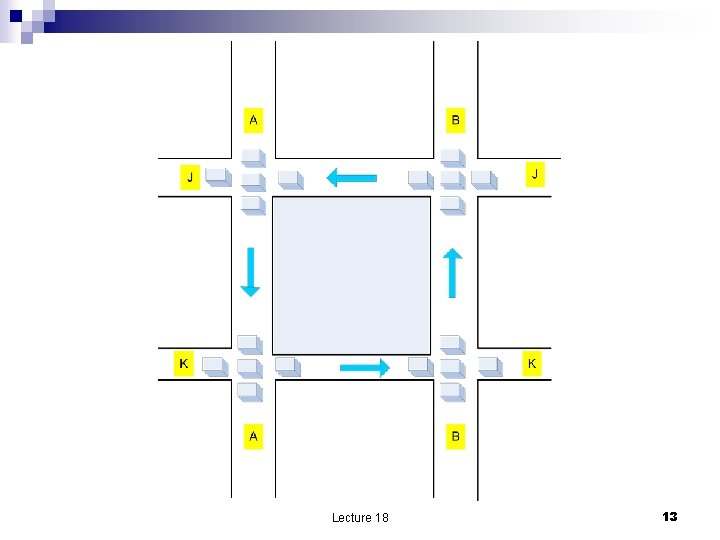


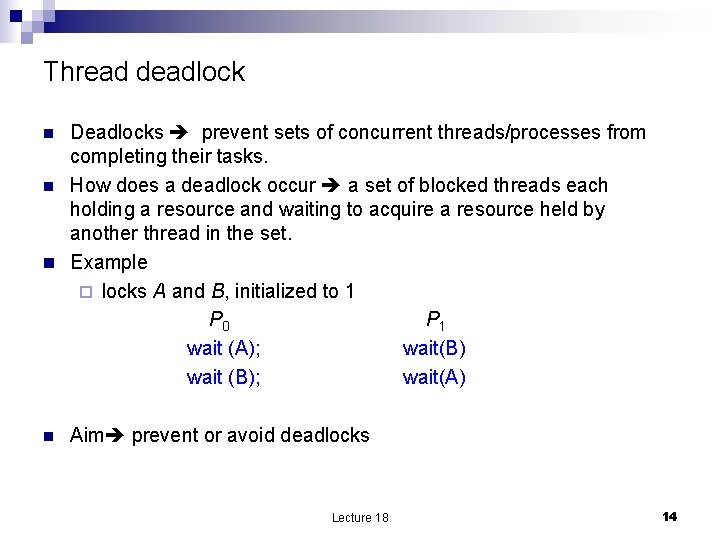

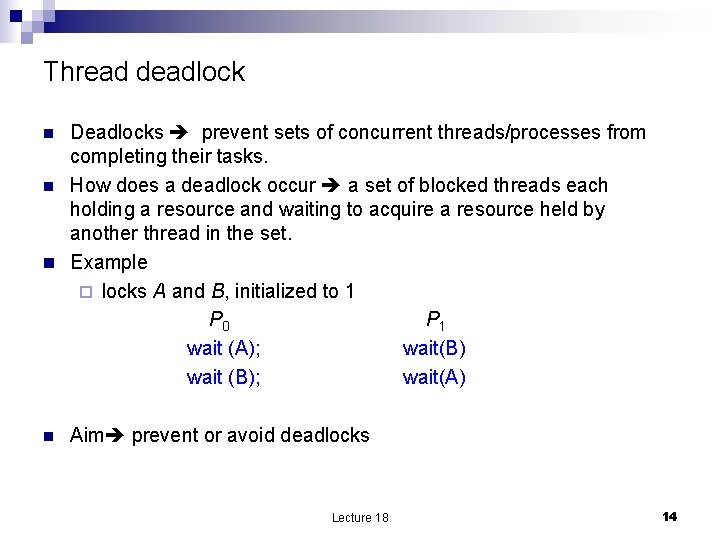

Thread deadlock

- Deadlocks prevent sets of concurrent threads/processes from completing their tasks.
- How does a deadlock occur? A set of blocked threads, each holding a resource and waiting to acquire a resource held by another thread in the set.
- Example: locks A and B, initialized to 1
  - P0: wait(A); wait(B)
  - P1: wait(B); wait(A)
- Aim: prevent or avoid deadlocks.
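The P0/P1 example can be reproduced safely by giving the second acquisition a timeout, so the program reports the deadlock instead of hanging. This is a sketch: the function names, the barrier, and the timeout value are assumptions for illustration. The barrier forces the unlucky interleaving every time. The second function shows one common way to avoid the problem, which is to acquire the locks in the same order in every thread.

```python
import threading


def opposite_order(timeout=0.2):
    """P0 takes A then B, P1 takes B then A: neither gets its second lock."""
    a, b = threading.Lock(), threading.Lock()
    barrier = threading.Barrier(2)
    got_second = {}

    def proc(name, first, second):
        with first:
            barrier.wait()                        # both threads now hold their first lock
            ok = second.acquire(timeout=timeout)  # without the timeout this would block forever
            if ok:
                second.release()
            got_second[name] = ok
            barrier.wait()                        # keep holding `first` until both attempts are over

    threads = [
        threading.Thread(target=proc, args=("P0", a, b)),
        threading.Thread(target=proc, args=("P1", b, a)),
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return got_second


def same_order():
    """Both threads take A before B, so a circular wait cannot form."""
    a, b = threading.Lock(), threading.Lock()
    finished = []

    def proc(name):
        with a:
            with b:
                finished.append(name)

    threads = [threading.Thread(target=proc, args=(n,)) for n in ("P0", "P1")]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return sorted(finished)
```

`opposite_order()` reports that neither thread obtained its second lock, while `same_order()` lets both threads finish.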

System model

- Resource types R1, R2, ..., Rm (CPU cycles, memory space, I/O devices).
- Each resource type Ri has Wi instances.
- Resource access model:
  - request
  - use
  - release

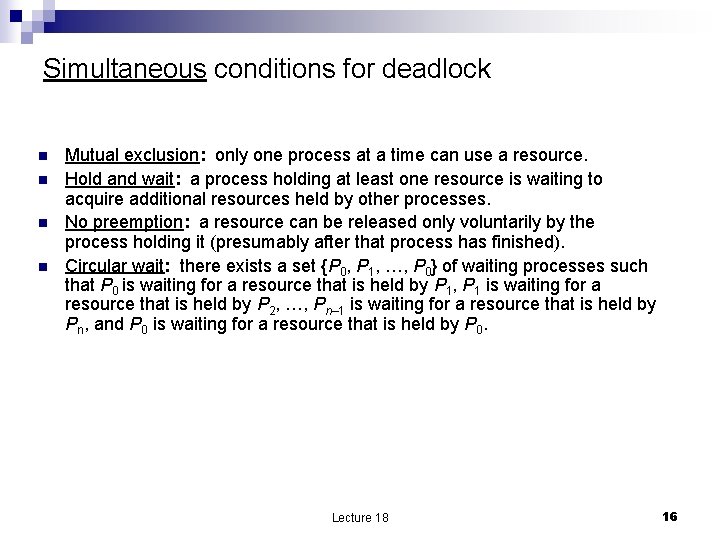

Simultaneous conditions for deadlock

- Mutual exclusion: only one process at a time can use a resource.
- Hold and wait: a process holding at least one resource is waiting to acquire additional resources held by other processes.
- No preemption: a resource can be released only voluntarily by the process holding it (presumably after that process has finished).
- Circular wait: there exists a set {P0, P1, …, Pn} of waiting processes such that P0 is waiting for a resource that is held by P1, P1 is waiting for a resource that is held by P2, …, Pn–1 is waiting for a resource that is held by Pn, and Pn is waiting for a resource that is held by P0.

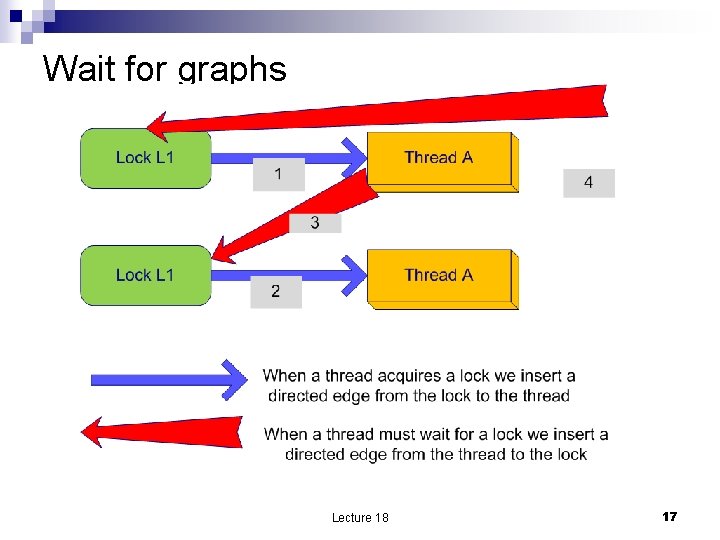

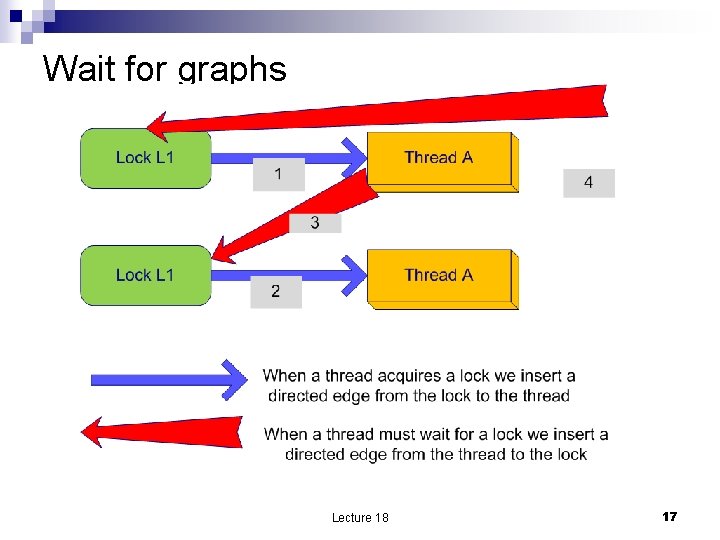

Wait-for graphs

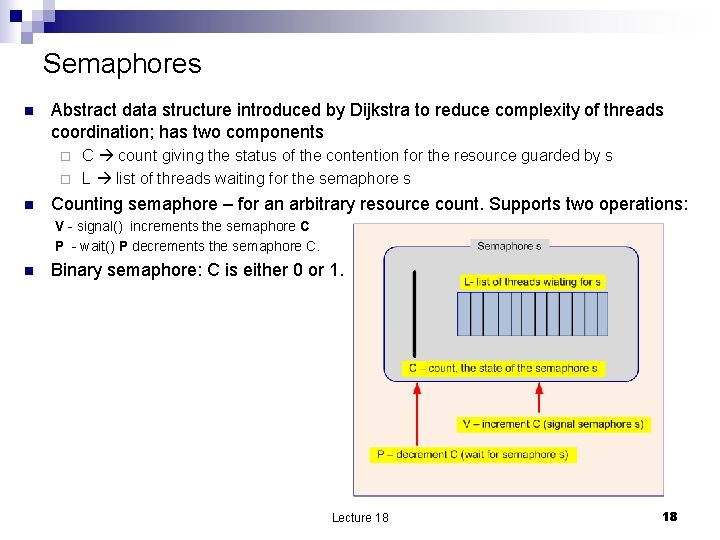

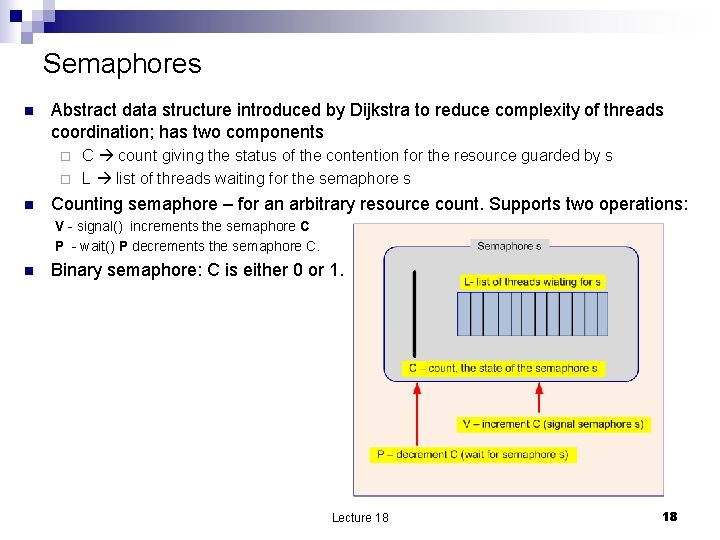
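In a wait-for graph, the circular wait condition shows up as a cycle. A minimal sketch of a cycle check follows, assuming the graph is given as a dictionary that maps each process to the processes it is waiting for. The `find_cycle` name and this representation are hypothetical. When every resource type has a single instance, a cycle means deadlock; with multiple instances, a cycle is necessary but not sufficient.

```python
def find_cycle(waits_for):
    """Return one cycle as a list of processes, or None if there is none.

    waits_for maps a process to the processes that hold resources it needs.
    """
    WHITE, GREY, BLACK = 0, 1, 2   # unvisited, on the current path, finished
    color = {}

    def visit(p, path):
        color[p] = GREY
        path.append(p)
        for q in waits_for.get(p, ()):
            state = color.get(q, WHITE)
            if state == GREY:                     # back edge: q is on the current path
                return path[path.index(q):] + [q]
            if state == WHITE:
                found = visit(q, path)
                if found:
                    return found
        path.pop()
        color[p] = BLACK
        return None

    for p in list(waits_for):
        if color.get(p, WHITE) == WHITE:
            cycle = visit(p, [])
            if cycle:
                return cycle
    return None
```

For example, `find_cycle({"P0": ["P1"], "P1": ["P2"], "P2": ["P0"]})` returns the cycle `["P0", "P1", "P2", "P0"]`, and a graph with no circular wait returns `None`.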

Semaphores

- Abstract data structure introduced by Dijkstra to reduce the complexity of thread coordination; a semaphore s has two components:
  - C: a count giving the status of the contention for the resource guarded by s
  - L: a list of threads waiting for the semaphore s
- Counting semaphore: for an arbitrary resource count. Supports two operations:
  - V, signal(): increments the semaphore count C
  - P, wait(): decrements the semaphore count C
- Binary semaphore: C is either 0 or 1.

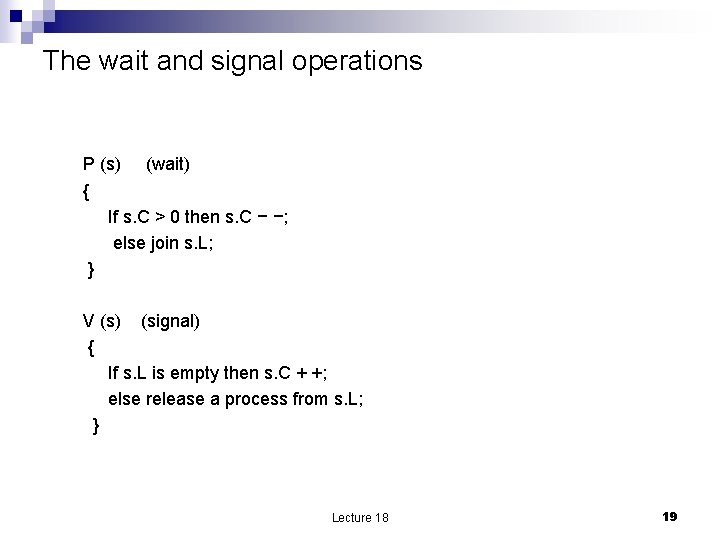

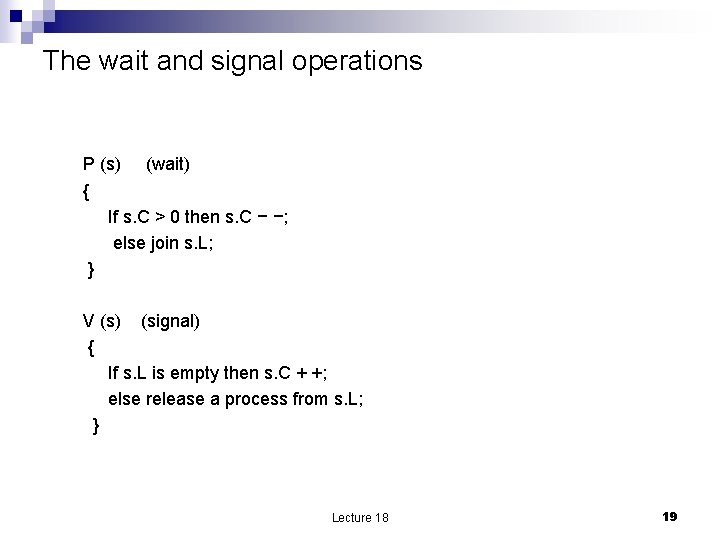

The wait and signal operations

P(s) (wait) {
    if s.C > 0 then s.C--;
    else join s.L;
}

V(s) (signal) {
    if s.L is empty then s.C++;
    else release a process from s.L;
}
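The pseudocode above maps almost line for line onto a small class. The sketch below keeps the slide's names `C`, `L`, `P`, and `V`. The internal mutex and the per-waiter `threading.Event` are assumptions made so that the operations are atomic and a waiting thread can actually block. The standard library already provides `threading.Semaphore`, so this is for illustration only.

```python
import threading
from collections import deque


class Semaphore:
    def __init__(self, count=1):
        self.C = count                    # count of available units
        self.L = deque()                  # waiting threads, one Event per waiter
        self._mutex = threading.Lock()    # makes P and V atomic (assumption)

    def P(self):
        """wait(): take a unit if one is free, otherwise join the list L."""
        with self._mutex:
            if self.C > 0:
                self.C -= 1
                return
            wakeup = threading.Event()
            self.L.append(wakeup)
        wakeup.wait()                     # block outside the mutex until V releases us

    def V(self):
        """signal(): free a unit, or hand it straight to the first waiter."""
        with self._mutex:
            if not self.L:
                self.C += 1
            else:
                self.L.popleft().set()    # release one thread from L
```

When a waiter exists, `V` does not increment `C`: the unit passes directly to the released thread, as in the pseudocode.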

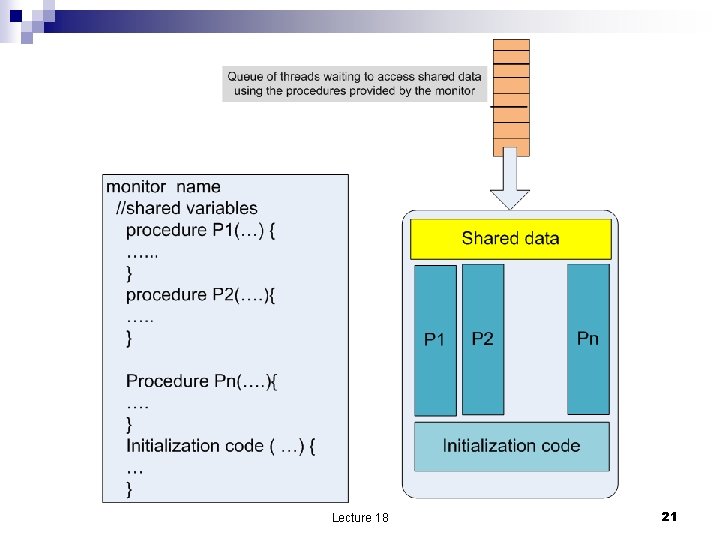

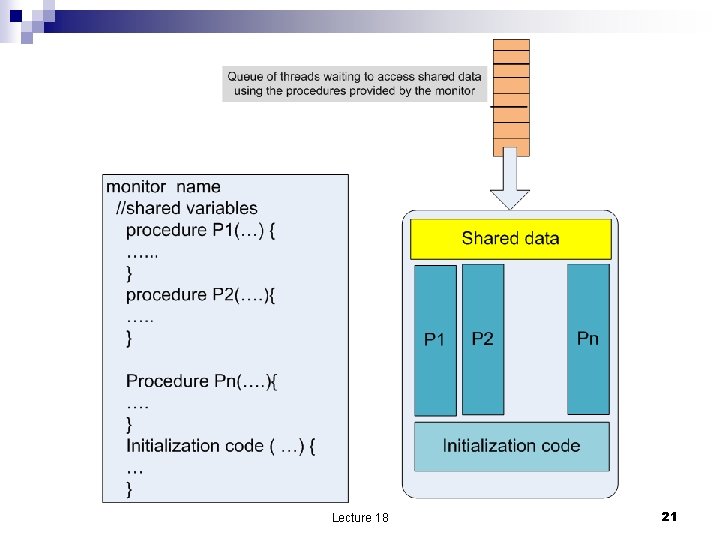

Monitors

- Semaphores can be used incorrectly:
  - multiple threads may be allowed to enter the critical section guarded by the semaphore
  - may cause deadlocks
- Threads may access the shared data directly without checking the semaphore.
- Solution: encapsulate shared data with access methods to operate on them.
- Monitor: an abstract data type that allows access to shared data with specific methods that guarantee mutual exclusion.
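Python has no monitor construct, but the idea can be approximated with a class that keeps the shared data private and takes one lock inside every access method. The `CounterMonitor` class and the `hammer` helper below are hypothetical examples, a sketch rather than a full monitor with condition variables.

```python
import threading


class CounterMonitor:
    """Shared counter that can be reached only through its methods."""

    def __init__(self):
        self._lock = threading.Lock()   # one lock guards every method
        self._value = 0                 # shared data, private by convention

    def increment(self):
        with self._lock:                # mutual exclusion is built into the method
            self._value += 1

    def value(self):
        with self._lock:
            return self._value


def hammer(monitor, n_threads=4, rounds=1000):
    """Call increment() from several threads and return the final value."""
    def worker():
        for _ in range(rounds):
            monitor.increment()

    threads = [threading.Thread(target=worker) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return monitor.value()
```

Callers never touch the lock, so they cannot forget to acquire or release it. This is the misuse the monitor is meant to rule out.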


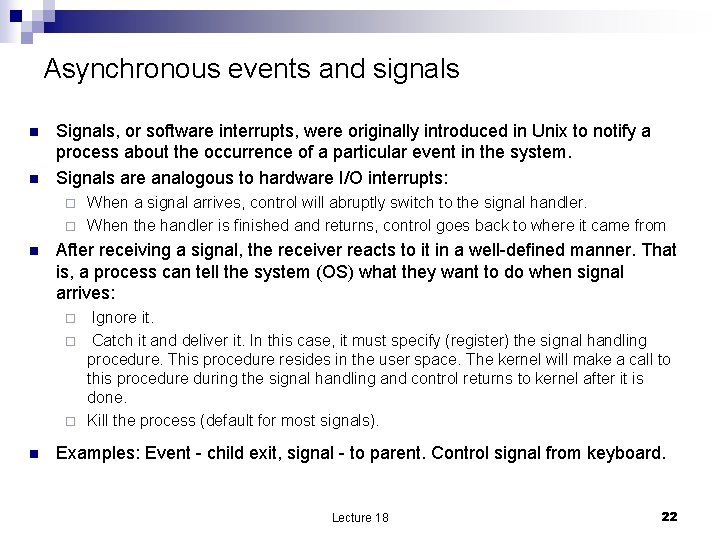

Asynchronous events and signals

- Signals, or software interrupts, were originally introduced in Unix to notify a process about the occurrence of a particular event in the system.
- Signals are analogous to hardware I/O interrupts:
  - When a signal arrives, control abruptly switches to the signal handler.
  - When the handler finishes and returns, control goes back to where it came from.
- After receiving a signal, the receiver reacts to it in a well-defined manner. That is, a process can tell the system (OS) what it wants to do when a signal arrives:
  - Ignore it.
  - Catch it. In this case, the process must specify (register) the signal handling procedure. This procedure resides in user space. The kernel makes a call to this procedure during signal handling and control returns to the kernel after it is done.
  - Kill the process (default for most signals).
- Examples:
  - A child exits and a signal is sent to the parent.
  - A control signal from the keyboard.
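The "catch" and "ignore" choices can be tried with Python's standard `signal` module. The sketch below assumes a Unix-like system, since `SIGUSR1` does not exist on Windows, and it must run in the main thread of the process. The names `on_signal`, `send_to_self`, and `demo` are hypothetical.

```python
import os
import signal
import time

received = []   # signal numbers seen by the handler


def on_signal(signum, frame):
    """User-space handler registered by the process."""
    received.append(signum)


def send_to_self(signum, wait=0.5):
    """Send signum to this process; report whether the handler ran in time."""
    seen = len(received)
    os.kill(os.getpid(), signum)
    deadline = time.monotonic() + wait
    while len(received) == seen and time.monotonic() < deadline:
        time.sleep(0.01)
    return len(received) > seen


def demo():
    previous = signal.signal(signal.SIGUSR1, on_signal)    # catch: register a handler
    try:
        caught = send_to_self(signal.SIGUSR1)
        signal.signal(signal.SIGUSR1, signal.SIG_IGN)      # ignore: the signal is discarded
        ignored = not send_to_self(signal.SIGUSR1)
    finally:
        signal.signal(signal.SIGUSR1, previous)            # restore the earlier disposition
    return caught, ignored
```

The third choice, leaving the default action in place, would terminate the process for `SIGUSR1`, so the sketch does not exercise it.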

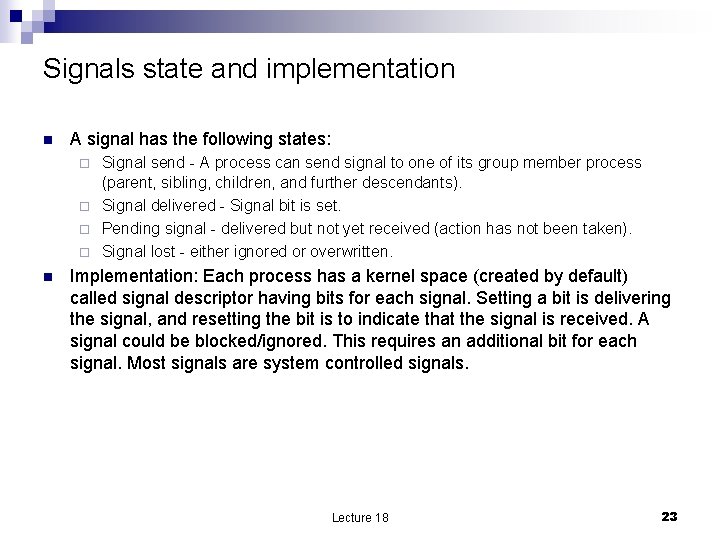

Signals state and implementation

- A signal has the following states:
  - Signal sent: a process can send a signal to a process in its group (parent, siblings, children, and further descendants).
  - Signal delivered: the signal bit is set.
  - Pending signal: delivered but not yet received (action has not been taken).
  - Signal lost: either ignored or overwritten.
- Implementation: each process has a kernel-space structure (created by default), called the signal descriptor, with a bit for each signal. Setting a bit delivers the signal, and resetting the bit indicates that the signal has been received. A signal can be blocked/ignored; this requires an additional bit for each signal. Most signals are system-controlled signals.
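The bit-per-signal bookkeeping described above can be modelled with two integers used as bit masks. This is a toy model, not how any particular kernel lays out its data. The `SignalDescriptor` class, its method names, and the `lost` counter are assumptions for illustration.

```python
class SignalDescriptor:
    """Toy per-process signal state: one pending bit and one blocked bit per signal."""

    def __init__(self):
        self.pending = 0   # bit k set: signal k delivered but not yet received
        self.blocked = 0   # bit k set: signal k is currently blocked
        self.lost = 0      # deliveries that overwrote an already pending signal

    def deliver(self, signo):
        bit = 1 << signo
        if self.pending & bit:
            self.lost += 1             # one bit cannot count repeated deliveries
        self.pending |= bit

    def block(self, signo):
        self.blocked |= 1 << signo

    def unblock(self, signo):
        self.blocked &= ~(1 << signo)

    def receive(self):
        """Reset and return the lowest pending, unblocked signal, or None."""
        ready = self.pending & ~self.blocked
        if ready == 0:
            return None
        signo = (ready & -ready).bit_length() - 1   # index of the lowest set bit
        self.pending &= ~(1 << signo)
        return signo
```

Delivering the same signal twice before it is received leaves a single pending bit, which is the "overwritten" case of a lost signal. A blocked signal stays pending until it is unblocked.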