
CERES: Cognitive Expertise through Repetition-Enhanced Simulation
HPT&E Technical Review
Decision Making & Expertise Development
Paul J. Reber, Ph.D., Northwestern University
Feb 20, 2019

Objective
• Accelerate the development of expertise in a cognitive skill by training with large numbers of repetitions in a simulation-based protocol
• Target domain: reading and understanding topographic maps

Background
• Training typically provides very few repetitions of connecting a complex topographic map to the world around the trainee
  – Rules are explained and taught in the classroom
  – Expertise develops slowly through experience
• Research foundations: implicit learning depends on practice
  – Eventually produces automatic, habit-like execution of learned skills
• Applications: land navigation, decision-making from topographic features

Overall Approach
• Develop map training protocol with naïve NU community participants
  – Underway
• Field test with military personnel
  – Software testing, SME feedback
  – NU NROTC personnel
  – Field testing (e.g., Quantico)
  – Quantify training benefit on orienteering assessment within Land Navigation training

Training Approach
• Procedural content
  – Random world surfaces
  – Topographic map/video pairings
  – STATE software (Charles River Analytics, Niehaus)
• Training protocol
  – Identify facing orientation on the map from the video
  – 30 s trials with feedback
  – Repeated experience with each map until it is understood
• Assessment approach
  – Pre/post training, 10 trials without feedback
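Performance on the orientation task is reported later as "average degrees of error." A minimal sketch of how such a score could be computed, assuming each response and each true facing direction is a compass heading in degrees and that error is the smallest absolute angle between them (so answering 350° when the truth is 10° counts as 20°, not 340°). The function names are hypothetical and are not taken from the STATE software.

```python
from statistics import mean


def angular_error(response_deg: float, true_deg: float) -> float:
    """Smallest absolute difference between two headings, in degrees (0 to 180).

    ASSUMPTION: headings are compass bearings in degrees; wrap-around at 360 is handled.
    """
    diff = (response_deg - true_deg) % 360.0
    return min(diff, 360.0 - diff)


def mean_angular_error(responses, truths) -> float:
    """Average degrees of error over a block of trials (e.g. a 10-trial pre/post test)."""
    if len(responses) != len(truths):
        raise ValueError("responses and truths must have the same length")
    return mean(angular_error(r, t) for r, t in zip(responses, truths))


if __name__ == "__main__":
    print(angular_error(350, 10))   # 20.0
    print(angular_error(90, 270))   # 180.0
    print(mean_angular_error([0, 45, 180], [10, 30, 170]))  # approximately 11.67
```

Taking the minimum of the two wrap-around distances keeps the score bounded at 180°, so a response that is nearly correct but on the other side of north is not penalized as a large error.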

Map Training
• Explicit Training
• Pretest
• High-repetition Training
  – Advance to new map when accuracy is high
  – Increase difficulty of task
• Posttest
• Questionnaire
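The high-repetition phase keeps a trainee on one map until accuracy is high, then advances to a new map and raises difficulty. The slides do not give the exact rule, so the following is only a hypothetical sketch: it assumes "high accuracy" means the mean angular error over the last few trials falls below a threshold, and that difficulty steps up by one level per new map. The class name, window size, and threshold are all illustrative assumptions.

```python
from collections import deque


class MapScheduler:
    """Hypothetical high-repetition scheduler: repeat one map until recent accuracy is high."""

    def __init__(self, window: int = 3, error_threshold_deg: float = 20.0) -> None:
        self.window = window                            # ASSUMED: recent trials evaluated
        self.error_threshold_deg = error_threshold_deg  # ASSUMED: "high accuracy" cutoff
        self.map_index = 0                              # which map is currently in use
        self.difficulty = 1                             # ASSUMED: abstract difficulty level
        self.recent = deque(maxlen=window)              # keeps only the last `window` errors

    def record_trial(self, error_deg: float) -> bool:
        """Log one trial's angular error; return True when the trainee advances to a new map."""
        self.recent.append(error_deg)
        window_full = len(self.recent) == self.window
        if window_full and sum(self.recent) / self.window < self.error_threshold_deg:
            self.map_index += 1   # move on to a new map
            self.difficulty += 1  # ASSUMED: difficulty steps up with each new map
            self.recent.clear()   # accuracy must be re-established on the new map
            return True
        return False


if __name__ == "__main__":
    s = MapScheduler()
    print([s.record_trial(e) for e in [40, 15, 10, 12, 30]])  # [False, False, False, True, False]
    print(s.map_index, s.difficulty)  # 1 2
```

Evaluating a short sliding window rather than a single trial keeps one lucky guess from advancing the trainee, while still letting the number of trials per map vary with how quickly each map is learned.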

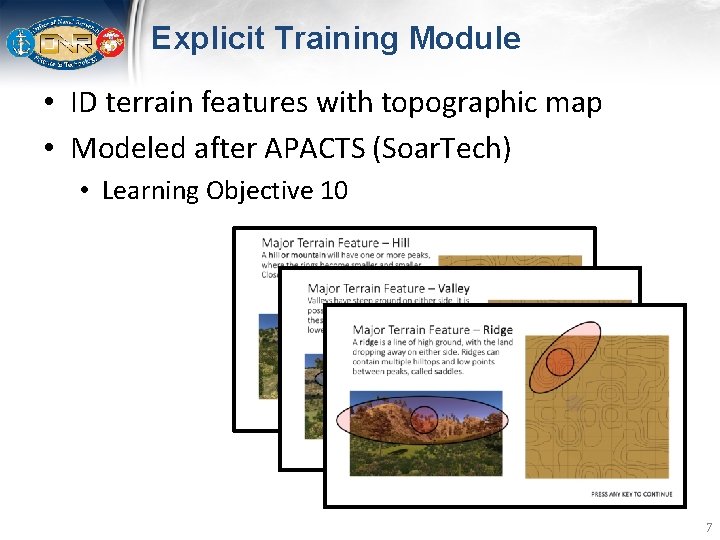

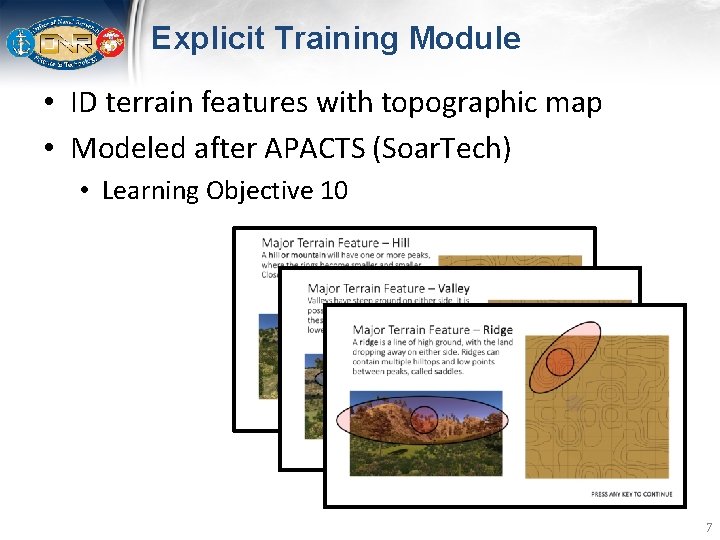

Explicit Training Module
• Identify terrain features on a topographic map
• Modeled after APACTS (SoarTech)
• Learning Objective 10

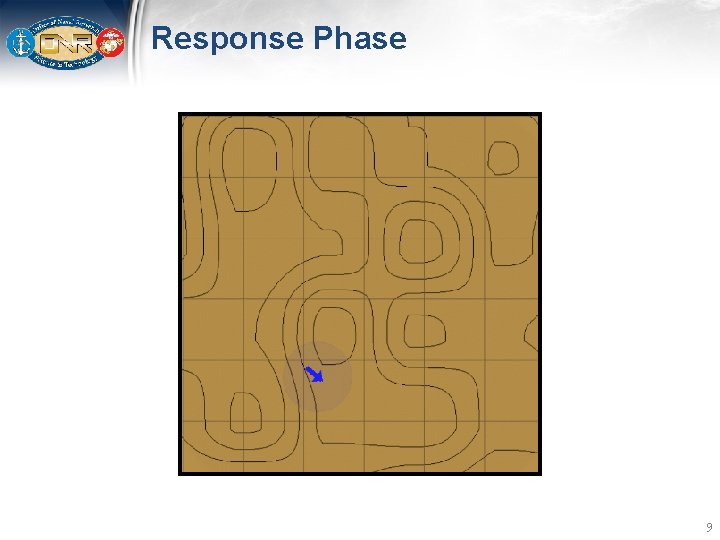

High-Repetition Map Training
Where are you facing on the map?
[Panels: Topographic Map | First-person View]

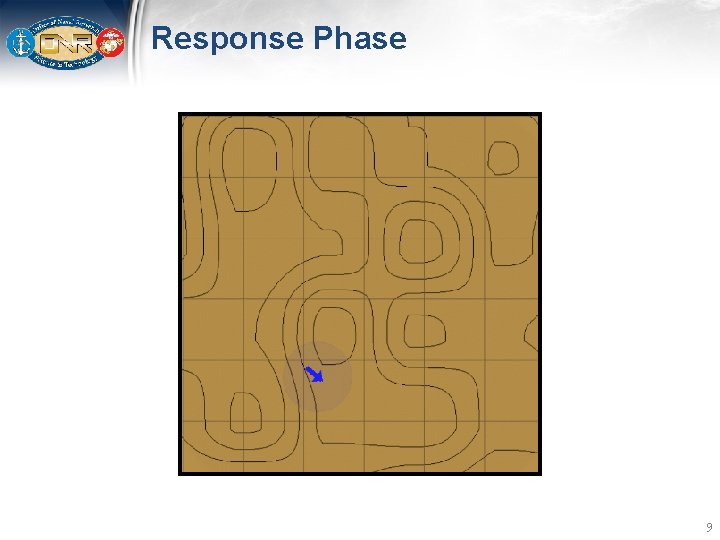

Response Phase

Feedback Phase

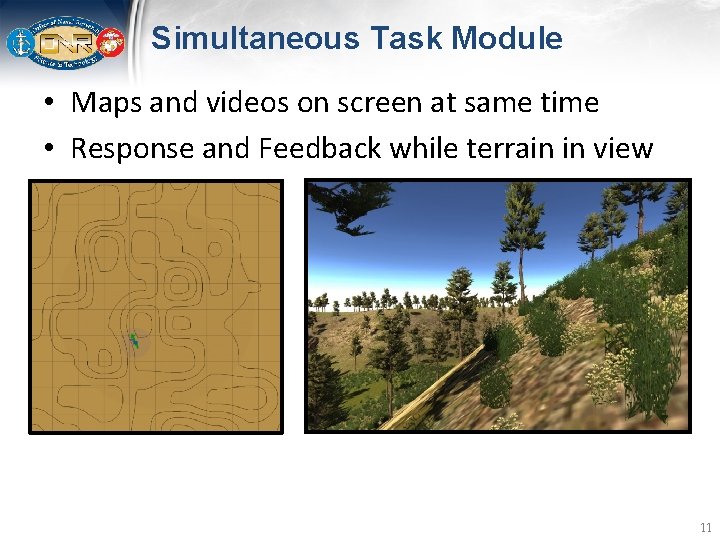

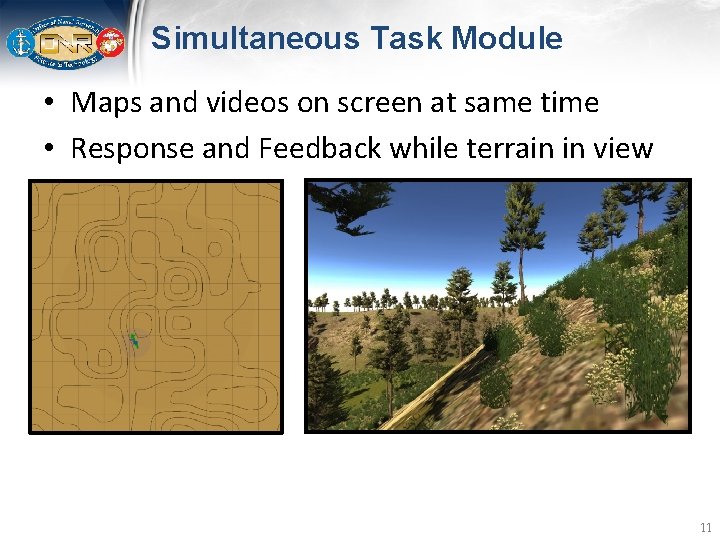

Simultaneous Task Module
• Maps and videos on screen at the same time
• Response and feedback while terrain is in view

Sequential Task Module
• Map and video presented separately
• Must hold terrain info in working memory
Phases: Study, Compare, Decide, Feedback

Sequential Task Module
Phases: Study, Compare, Decide, Feedback

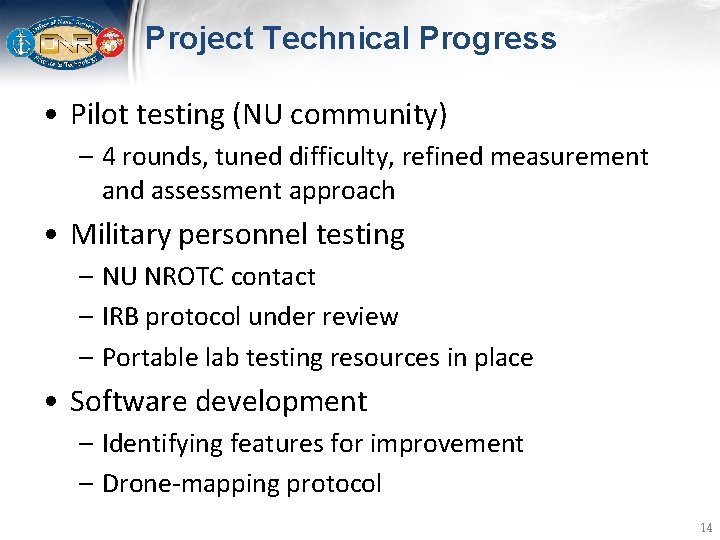

Project Technical Progress
• Pilot testing (NU community)
  – 4 rounds; tuned difficulty, refined measurement and assessment approach
• Military personnel testing
  – NU NROTC contact
  – IRB protocol under review
  – Portable lab testing resources in place
• Software development
  – Identifying features for improvement
  – Drone-mapping protocol

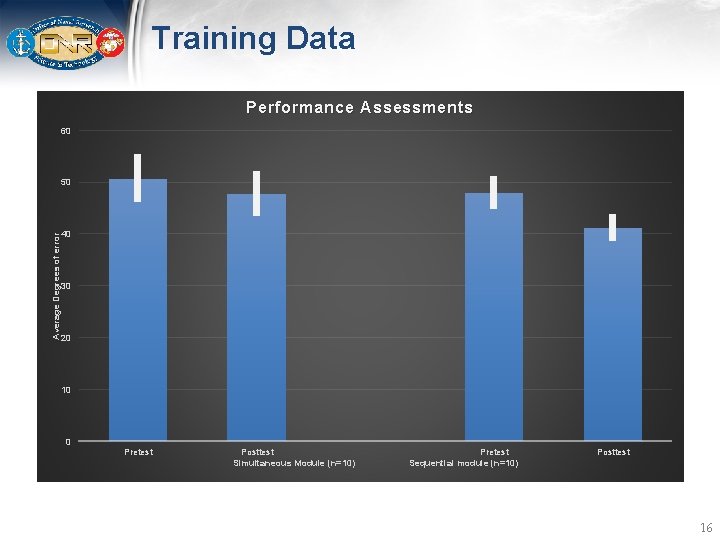

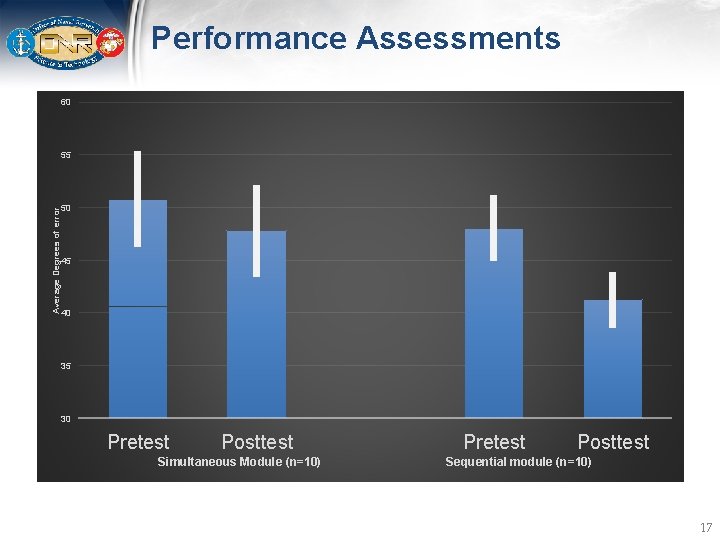

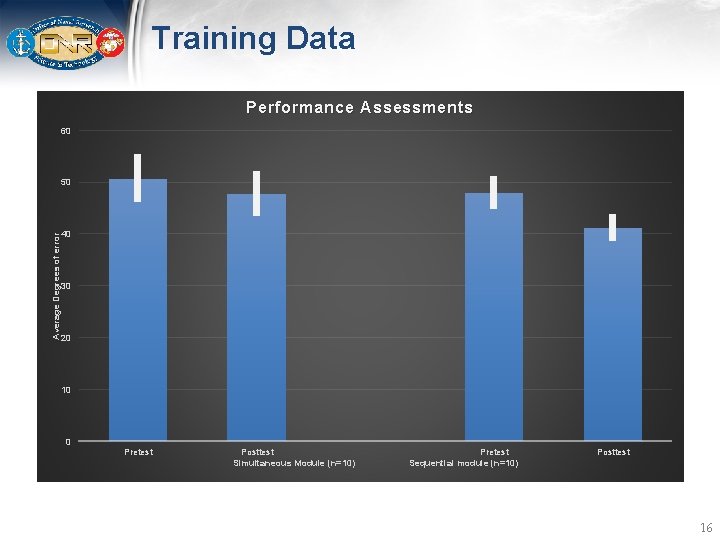

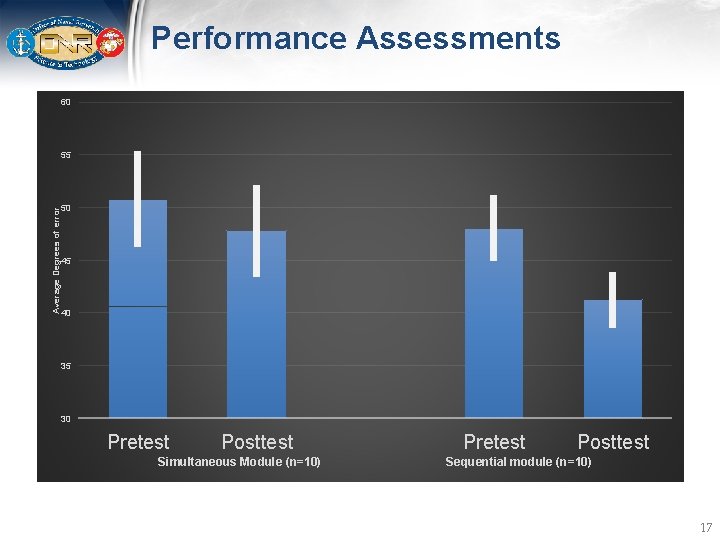

Training Data
• Explicit Training (~8 minutes)
• Simultaneous Task Module
  – 3–4 terrain decisions per minute (~150 total)
  – ~10 trials/map on average
• Sequential Task Module
  – 1–2 terrain decisions per minute (~81 total)
  – ~9 trials/map on average
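The approximate figures on this slide also imply how long each module ran and roughly how many maps a trainee saw. A back-of-envelope sketch, assuming each trial is one terrain decision; the helper name is hypothetical, and the outputs are estimates derived from the slide's rounded numbers, not separately reported results.

```python
from typing import Tuple


def implied_session(total_decisions: float, rate_low: float, rate_high: float,
                    trials_per_map: float) -> Tuple[float, float, float]:
    """Return (shortest_minutes, longest_minutes, approx_maps) implied by slide figures.

    ASSUMPTION: one trial == one terrain decision.
    """
    shortest = total_decisions / rate_high  # session length at the faster decision rate
    longest = total_decisions / rate_low    # session length at the slower decision rate
    maps = total_decisions / trials_per_map
    return shortest, longest, maps


simultaneous = implied_session(150, 3, 4, 10)  # (37.5, 50.0, 15.0)
sequential = implied_session(81, 1, 2, 9)      # (40.5, 81.0, 9.0)
print(simultaneous, sequential)
```

On these assumptions, the Simultaneous module covers roughly 15 maps in about 38–50 minutes, and the Sequential module roughly 9 maps in about 41–81 minutes; the slower decision rate in the Sequential module is consistent with the added working-memory demand.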

Training Data: Performance Assessments
[Figure: average degrees of error at pretest and posttest, Simultaneous Module (n=10) and Sequential Module (n=10); y-axis 0–60 degrees]

Performance Assessments
[Figure: average degrees of error at pretest and posttest, Simultaneous Module (n=10) and Sequential Module (n=10); y-axis 30–60 degrees]

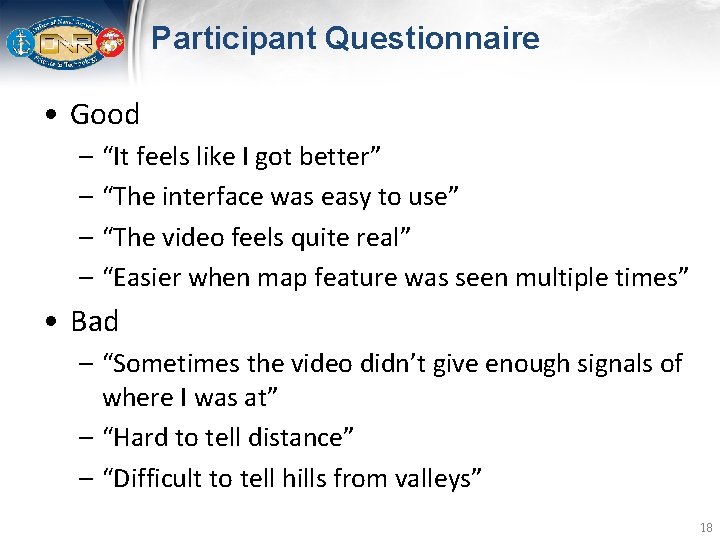

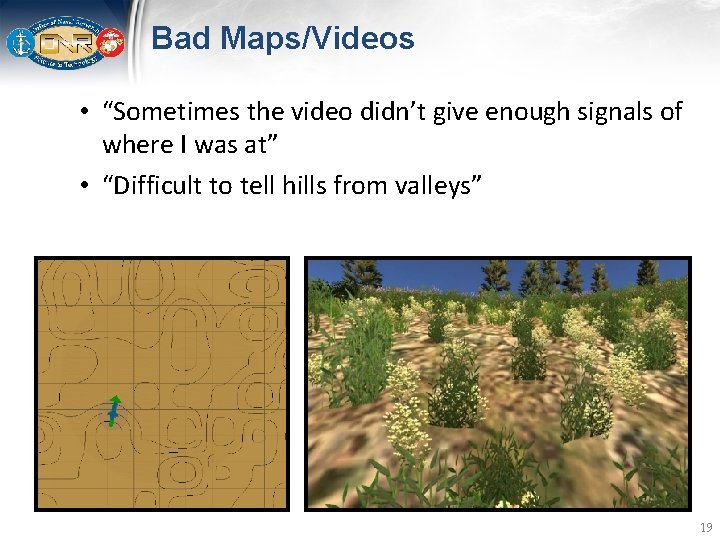

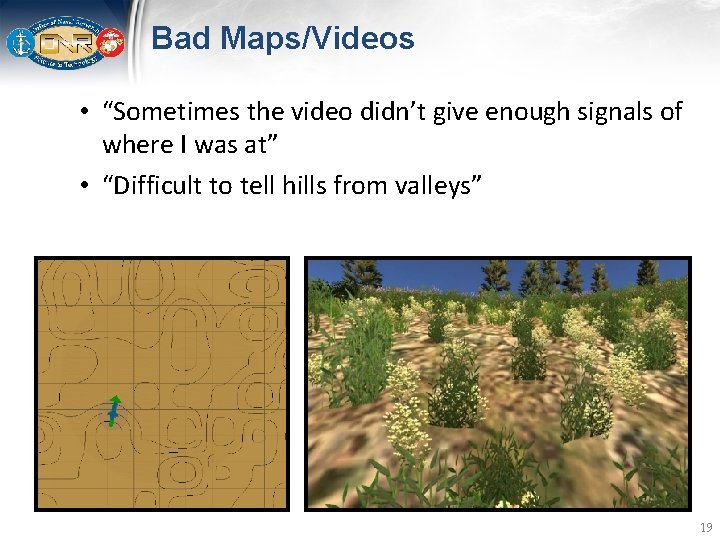

Participant Questionnaire
• Good
  – “It feels like I got better”
  – “The interface was easy to use”
  – “The video feels quite real”
  – “Easier when map feature was seen multiple times”
• Bad
  – “Sometimes the video didn’t give enough signals of where I was at”
  – “Hard to tell distance”
  – “Difficult to tell hills from valleys”

Bad Maps/Videos
• “Sometimes the video didn’t give enough signals of where I was at”
• “Difficult to tell hills from valleys”

Issues and Opportunities
• Issue: map quality
  – Artificial topo surfaces are difficult to understand and not particularly realistic
  – Course of action: software improvements to enhance procedural content generation; integration of real-world maps via drone
• Opportunities for collaboration
  – Ongoing Land Navigation training development; decision-making based on topographic map features

Way Forward
• Continuing pilot data collection (NU)
  – Extending training to multiple hours
  – Improving all stimuli
• Engagement with instructors and trainees
  – SME feedback
  – Trainee experience testing
• Applied field testing
  – Pending IRB approval, protocol stabilization, and site selection
  – Summer 2019

Acknowledgements
• Northwestern University
  – Marcia Grabowecky, Ph.D., Kevin Schmidt, Brooke Feinstein, Ben Reuveni, Catherine Han, Ken Paller, Ph.D., Mark Beeman, Ph.D., Satoru Suzuki, Ph.D.
  – Captain Adam M. North, USMC, Marine Officer Instructor/Assistant Professor of Naval Science, NROTC Chicago Consortium
• Charles River Analytics
  – James Niehaus, Ph.D., Paul Woodall, William Manning

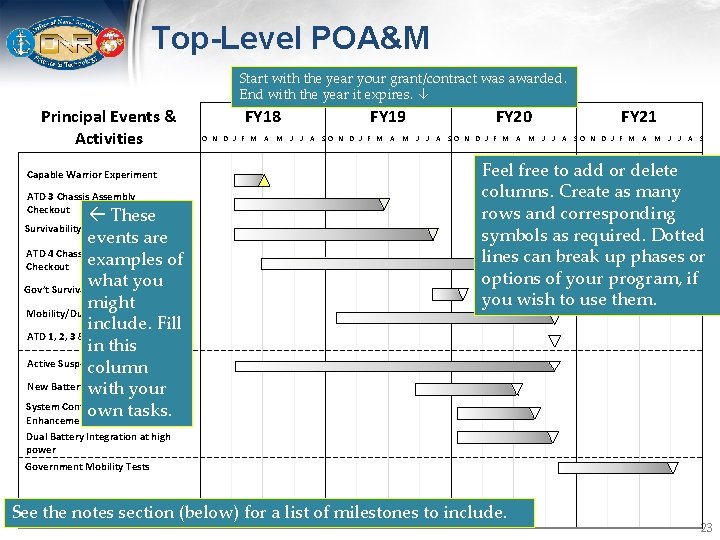

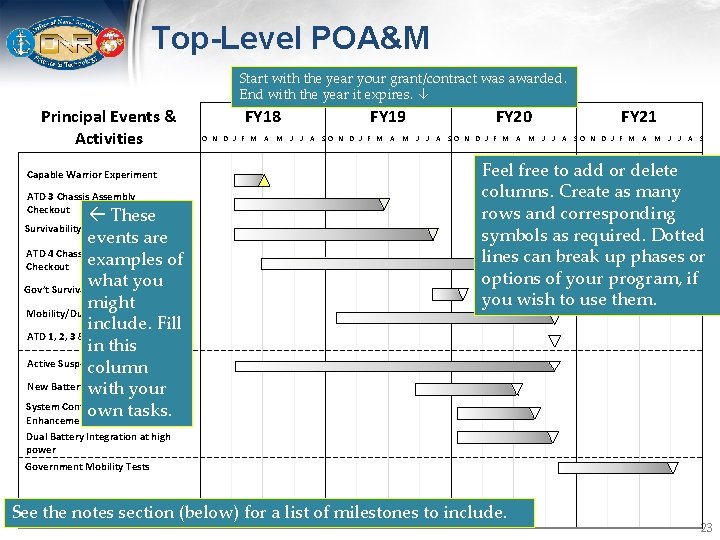

Top-Level POA&M
[Figure: schedule timeline, FY18–FY21, by month]

Backup Slides

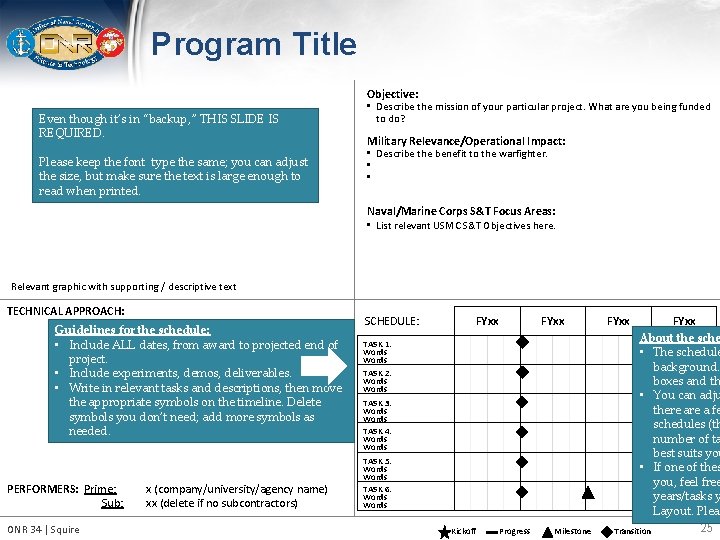

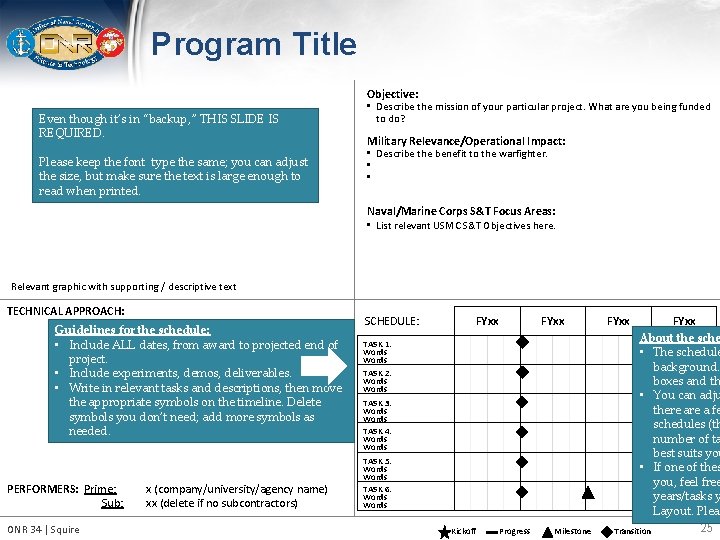

Program Title
ONR 34 | Squire

Transition