CENG 450 Computer Systems Architecture Lecture 2 Amirali


- Slides: 58

CENG 450 Computer Systems & Architecture Lecture 2 Amirali Baniasadi amirali@ece.uvic.ca

Outline

- Power & Cost
- Performance measurement
- Amdahl's Law
- Benchmarks

History 1. “Big Iron” Computers: Used vacuum tubes, electric relays and bulk magnetic storage devices. No microprocessors. No memory. Example: ENIAC (1945), IBM Mark 1 (1944)

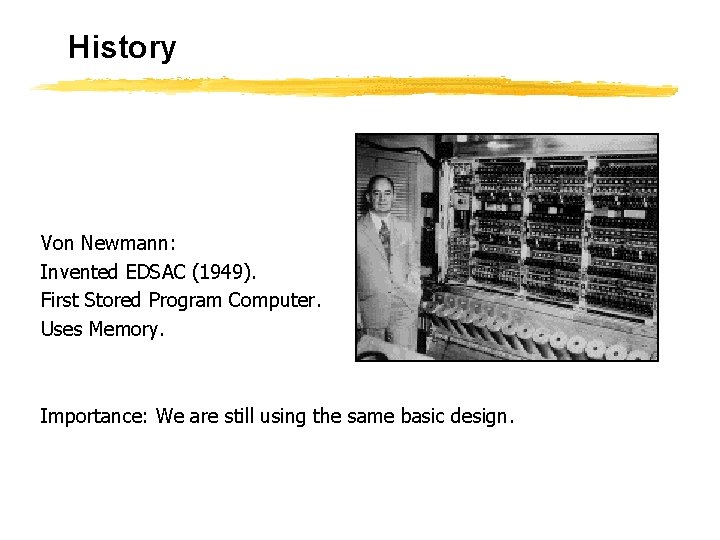

History Von Neumann: Described the stored-program design (1945) used by EDSAC (1949), one of the first stored-program computers. Programs and data share the same memory. Importance: We are still using the same basic design.

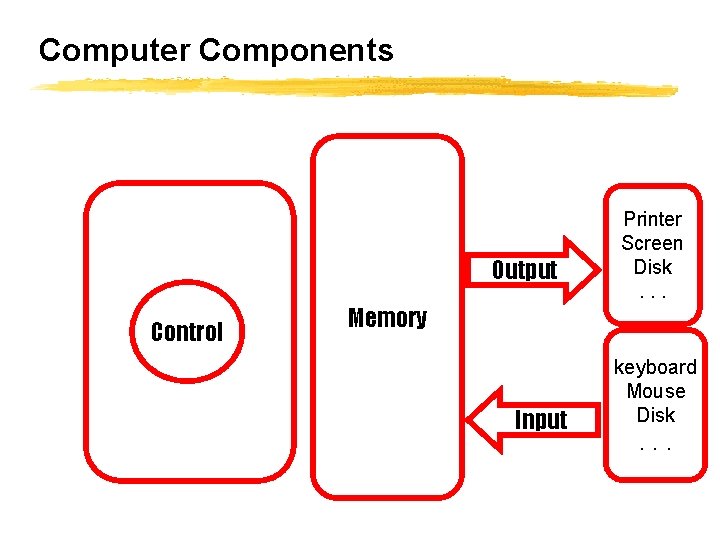

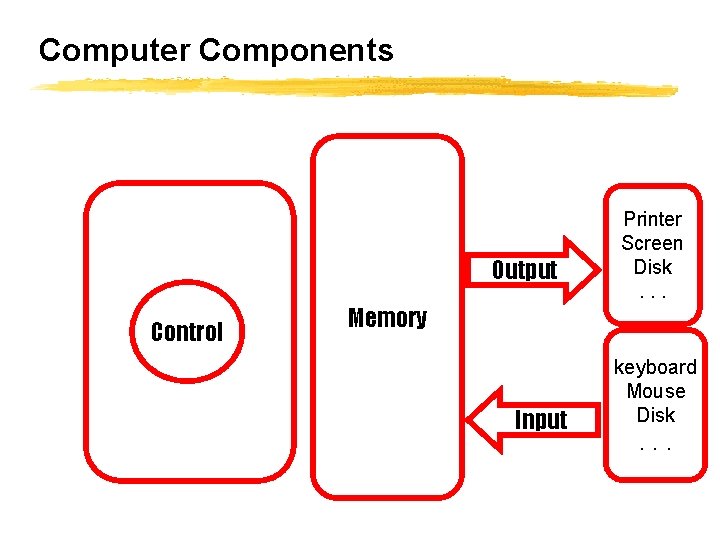

Computer Components

- Processor (CPU), including control
- Memory
- Input: keyboard, mouse, disk, ...
- Output: printer, screen, disk, ...

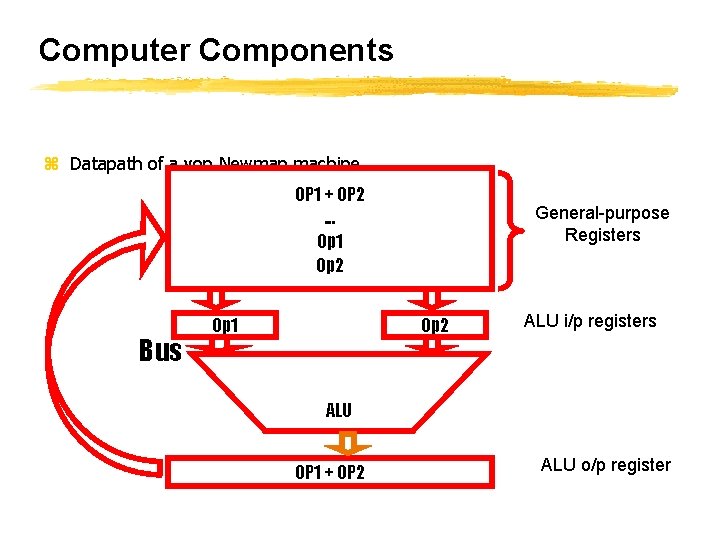

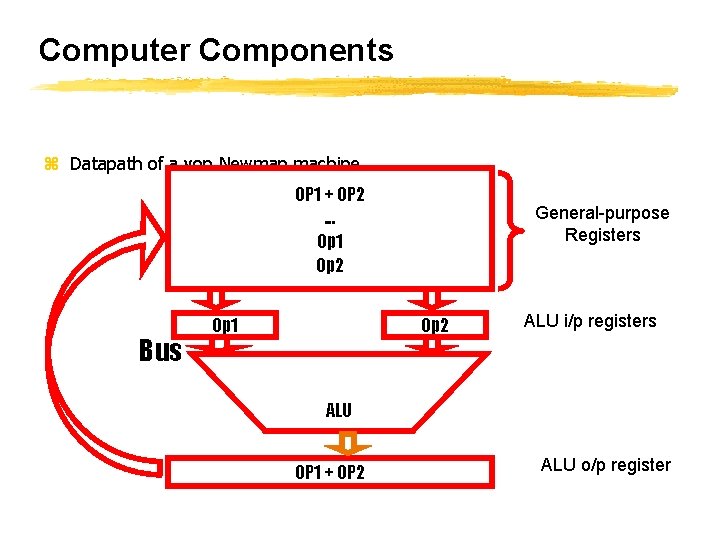

Computer Components

- Datapath of a von Neumann machine: operands Op1 and Op2 travel over the bus from the general-purpose registers to the ALU input registers; the ALU computes Op1 + Op2 and places the result in the ALU output register.

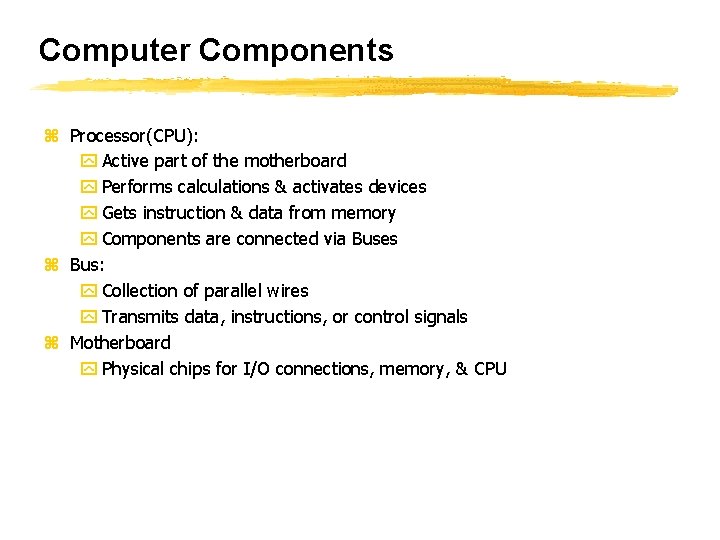

Computer Components

- Processor (CPU):
  - Active part of the motherboard
  - Performs calculations & activates devices
  - Gets instructions & data from memory
  - Components are connected via buses
- Bus:
  - Collection of parallel wires
  - Transmits data, instructions, or control signals
- Motherboard:
  - Physical chips for I/O connections, memory, & CPU

Computer Components

- CPU consists of:
  - Datapath (ALU + registers):
    - Performs arithmetic & logical operations
  - Control (CU):
    - Controls the datapath, memory, & I/O devices
    - Sends signals that determine operations of datapath, memory, input & output

Technology Change

- Technology changes rapidly
  - HW
    - Vacuum tubes: electron-emitting devices
    - Transistors: on-off switches controlled by electricity
    - Integrated circuits (ICs, chips): combine thousands of transistors
    - Very Large-Scale Integration (VLSI): combines millions of transistors
    - What next?
  - SW
    - Machine language: zeros and ones
    - Assembly language: mnemonics
    - High-level languages: English-like
    - Artificial intelligence languages: functions & logic predicates
    - Object-oriented programming: objects & operations on objects

Moore’s Prediction

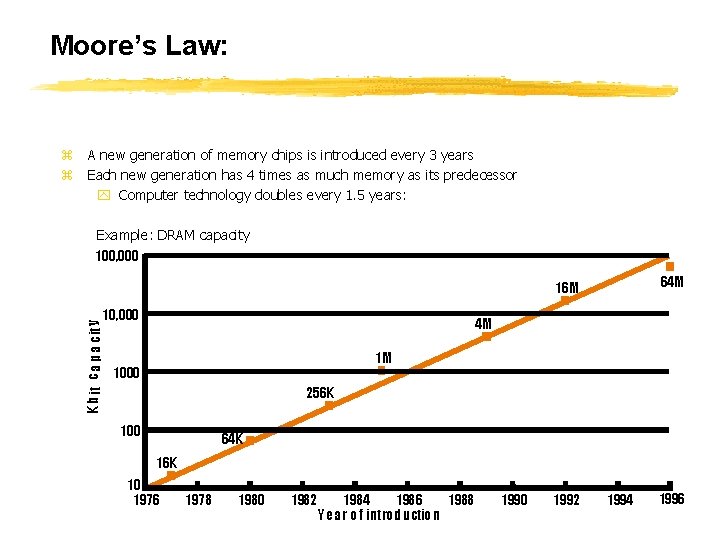

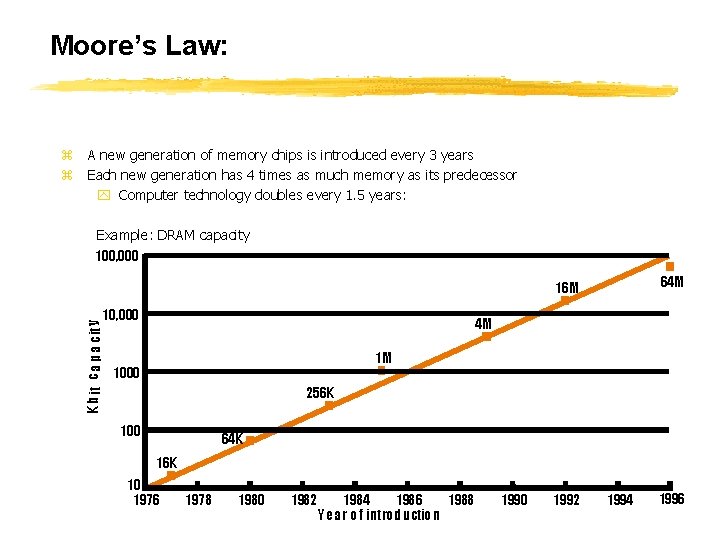

Moore’s Law:

- A new generation of memory chips is introduced every 3 years
- Each new generation has 4 times as much memory as its predecessor
  - In other words, capacity doubles about every 1.5 years
- Example: DRAM capacity

Figure: DRAM capacity (Kbit, log scale) versus year of introduction, 1976 to 1996, rising from 16K to 64M.

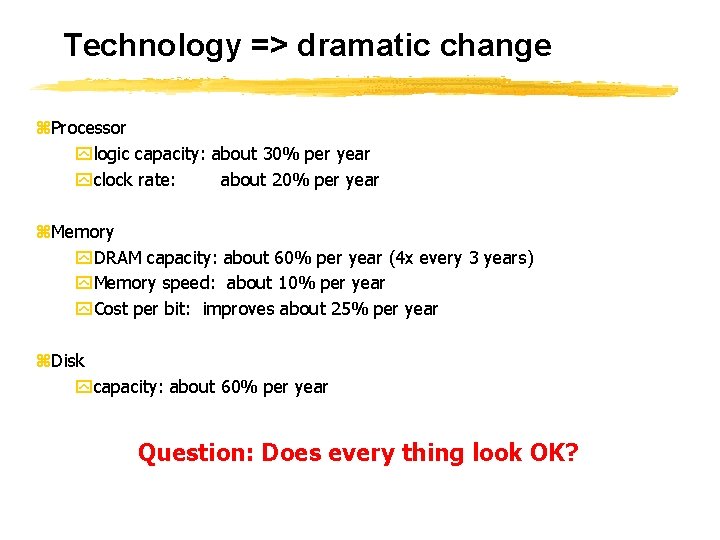

Technology => dramatic change

- Processor
  - Logic capacity: about 30% per year
  - Clock rate: about 20% per year
- Memory
  - DRAM capacity: about 60% per year (4x every 3 years)
  - Memory speed: about 10% per year
  - Cost per bit: improves about 25% per year
- Disk
  - Capacity: about 60% per year

Question: Does everything look OK?
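The annual rates on this slide compound, which is what makes the gap between them matter. The sketch below is only an illustration: the helper name `growth_factor` and the 3-year and 10-year horizons are assumptions, while the rates are the ones listed above.

```python
def growth_factor(rate_per_year: float, years: float) -> float:
    """Total improvement after compounding an annual rate for `years` years."""
    return (1.0 + rate_per_year) ** years


# Annual improvement rates taken from the slide.
rates = {
    "processor logic capacity": 0.30,
    "processor clock rate": 0.20,
    "DRAM capacity": 0.60,
    "memory speed": 0.10,
    "disk capacity": 0.60,
}

for name, rate in rates.items():
    three = growth_factor(rate, 3)
    ten = growth_factor(rate, 10)
    print(f"{name}: x{three:.2f} after 3 years, x{ten:.1f} after 10 years")
```

Compounding 60% per year for three years gives roughly the 4x per generation quoted for DRAM, while memory speed at 10% per year falls steadily behind the processor rates.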

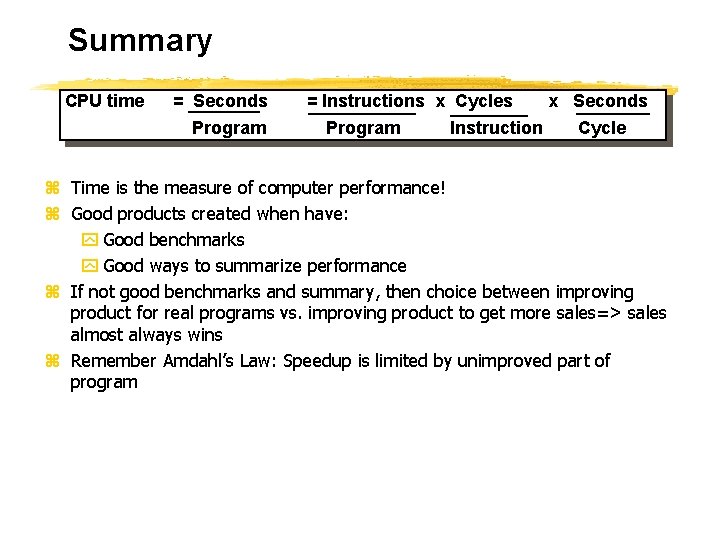

Software Evolution

- Machine language
- Assembly language
- High-level languages
- Subroutine libraries
- There is a large gap between what is convenient for computers & what is convenient for humans
- Translation/interpretation is needed between the two

![Language Evolution swap int v int k int temp vk vk Language Evolution swap (int v[], int k) { int temp = v[k]; v[k] =](https://slidetodoc.com/presentation_image_h/b5668a81b8a1e0f2b1b6ae79abf48c09/image-14.jpg)

Language Evolution

High-level language program (in C):

    void swap(int v[], int k)
    {
        int temp = v[k];   /* save v[k] */
        v[k] = v[k+1];     /* move v[k+1] down */
        v[k+1] = temp;     /* store the saved value */
    }

Assembly language program (for MIPS):

    swap: muli $2, $5, 4
          add  $2, $4, $2
          lw   $15, 0($2)
          lw   $18, 4($2)
          sw   $18, 0($2)
          sw   $15, 4($2)
          jr   $31

Binary machine language program (for MIPS): the assembler encodes each of the seven instructions above as one 32-bit word.

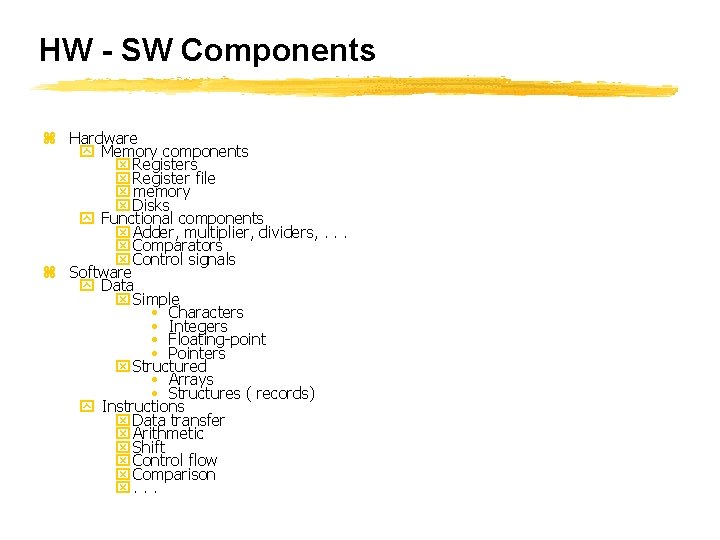

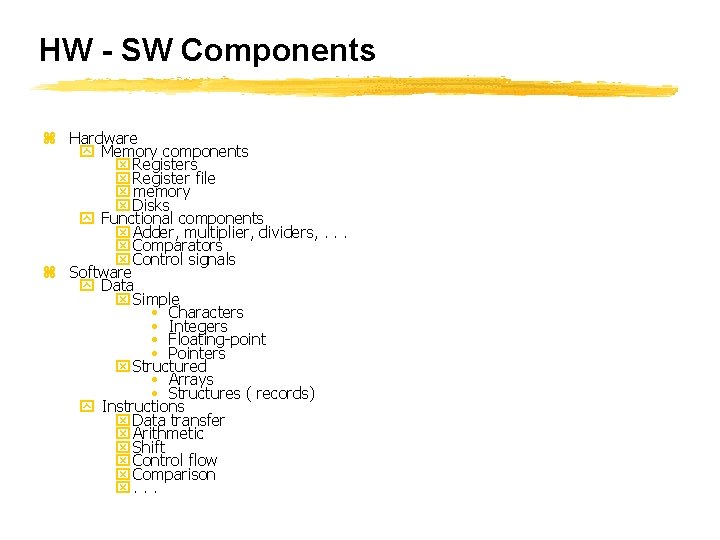

HW - SW Components

- Hardware
  - Memory components
    - Register file
    - Memory
    - Disks
  - Functional components
    - Adders, multipliers, dividers, ...
    - Comparators
  - Control signals
- Software
  - Data
    - Simple: characters, integers, floating-point, pointers
    - Structured: arrays, structures (records)
  - Instructions
    - Data transfer
    - Arithmetic
    - Shift
    - Control flow
    - Comparison
    - ...

Things You Will Learn

- Assembly language introduction/review
- How to analyze program performance
- How to design processor components
- How to enhance processor performance (caches, pipelines, parallel processors, multiprocessors)

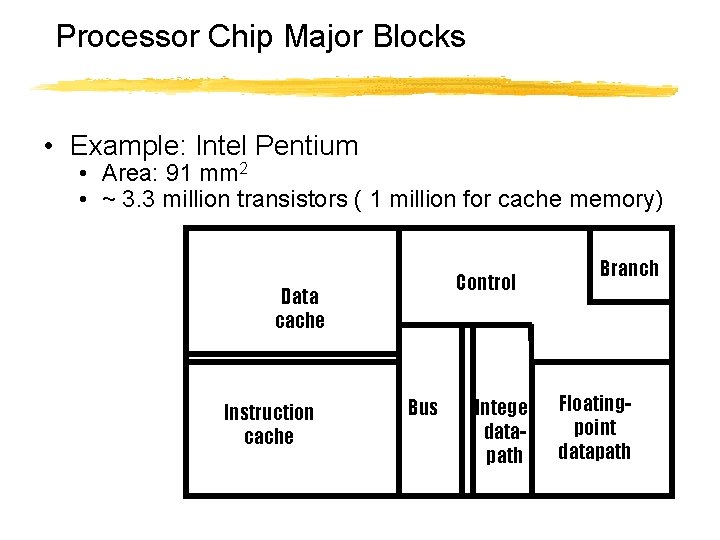

The Processor Chip

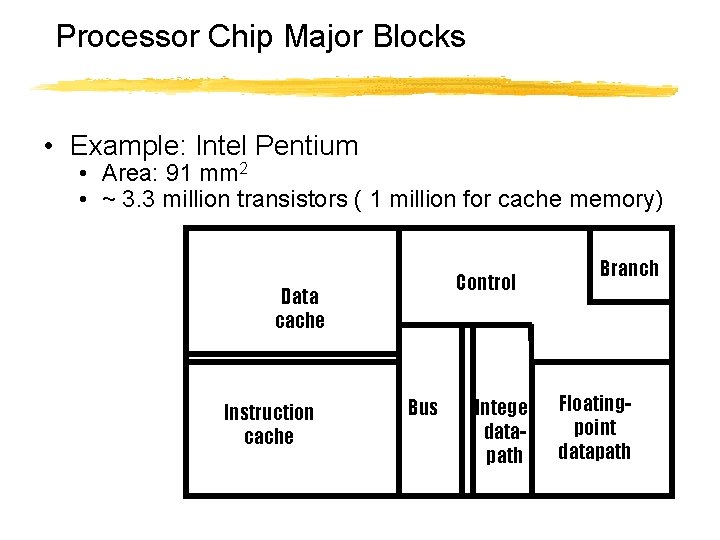

Processor Chip Major Blocks

- Example: Intel Pentium
- Area: 91 mm²
- ~3.3 million transistors (1 million for cache memory)
- Major blocks: control, data cache, instruction cache, bus, integer datapath, branch, floating-point datapath

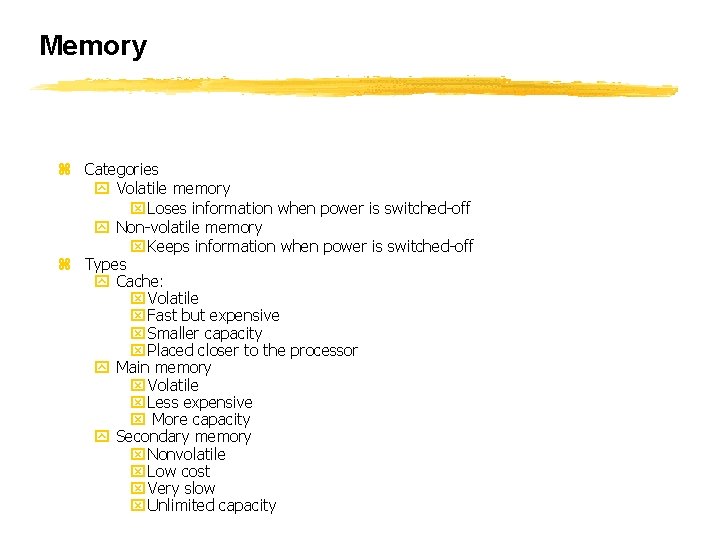

Memory

- Categories
  - Volatile memory
    - Loses information when power is switched off
  - Non-volatile memory
    - Keeps information when power is switched off
- Types
  - Cache:
    - Volatile
    - Fast but expensive
    - Smaller capacity
    - Placed closer to the processor
  - Main memory
    - Volatile
    - Less expensive
    - More capacity
  - Secondary memory
    - Non-volatile
    - Low cost
    - Very slow
    - Very large (effectively unlimited) capacity

Input-Output (I/O)

- I/O devices have the hardest organization
  - Wide range of speeds
    - Graphics vs. keyboard
  - Wide range of requirements
    - Speed
    - Standard
    - Cost ...
  - Least amount of research done in this area

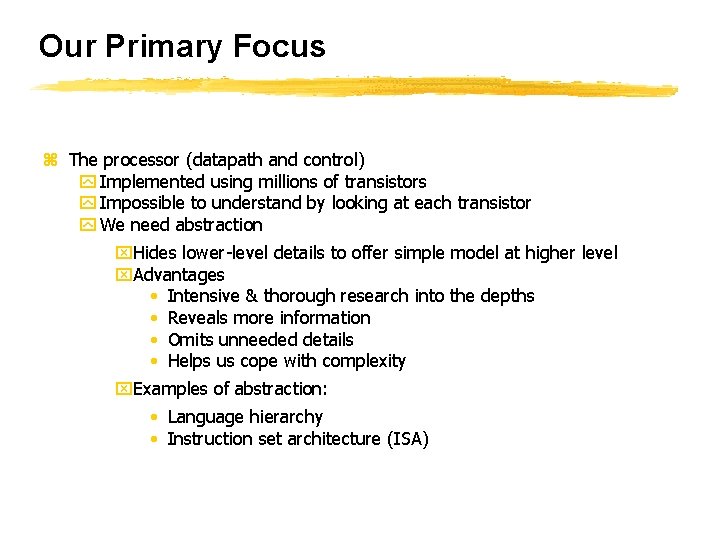

Our Primary Focus

- The processor (datapath and control)
  - Implemented using millions of transistors
  - Impossible to understand by looking at each transistor
  - We need abstraction
    - Hides lower-level details to offer a simple model at a higher level
    - Advantages
      - Intensive & thorough research into the depths
      - Reveals more information
      - Omits unneeded details
      - Helps us cope with complexity
    - Examples of abstraction:
      - Language hierarchy
      - Instruction set architecture (ISA)

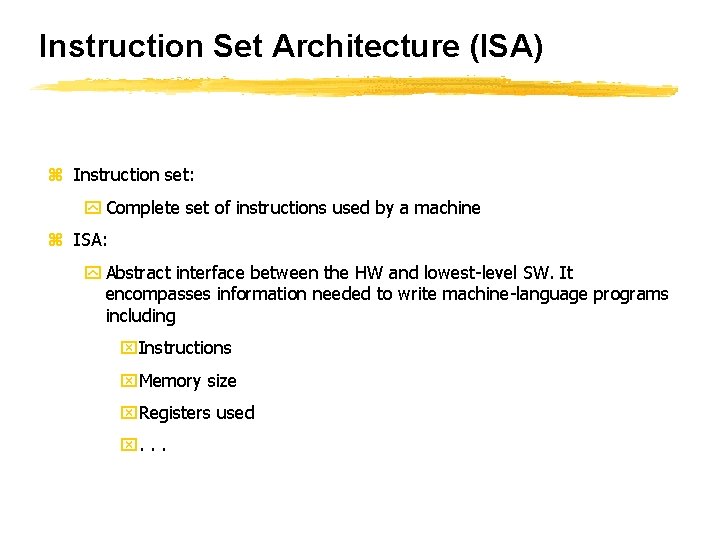

Instruction Set Architecture (ISA)

- Instruction set:
  - Complete set of instructions used by a machine
- ISA:
  - Abstract interface between the HW and the lowest-level SW. It encompasses the information needed to write machine-language programs, including:
    - Instructions
    - Memory size
    - Registers used
    - ...

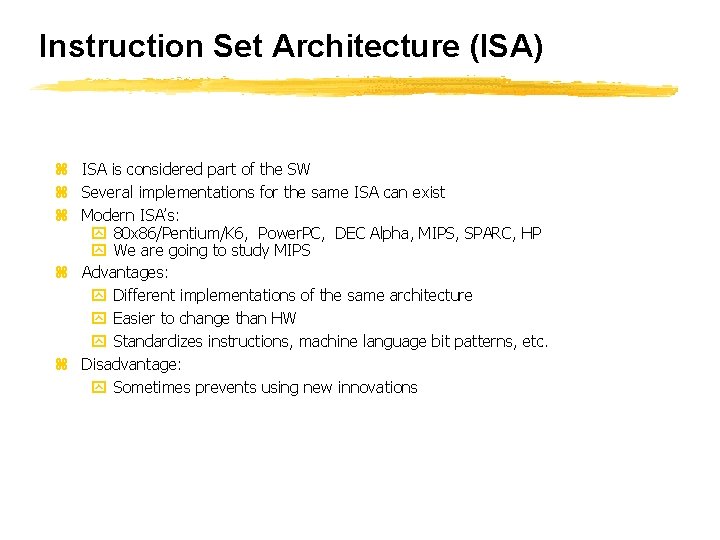

Instruction Set Architecture (ISA)

- ISA is considered part of the SW
- Several implementations of the same ISA can exist
- Modern ISAs:
  - 80x86/Pentium/K6, PowerPC, DEC Alpha, MIPS, SPARC, HP
  - We are going to study MIPS
- Advantages:
  - Different implementations of the same architecture
  - Easier to change than HW
  - Standardizes instructions, machine language bit patterns, etc.
- Disadvantage:
  - Sometimes prevents using new innovations

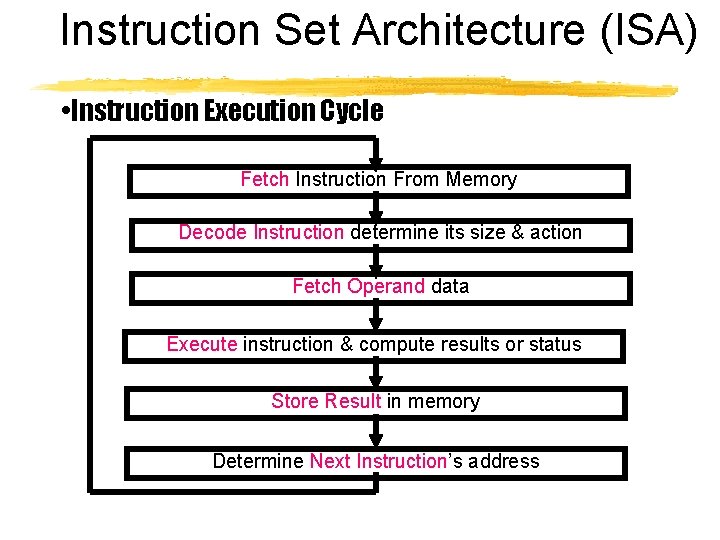

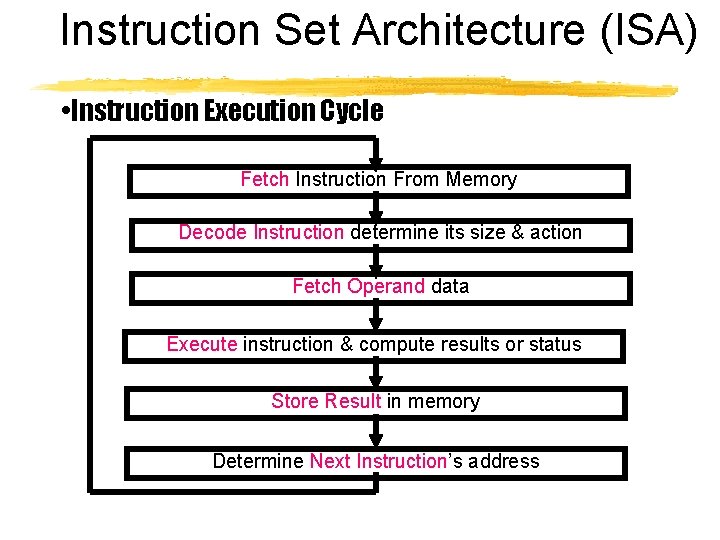

Instruction Set Architecture (ISA)

Instruction Execution Cycle:

1. Fetch instruction from memory
2. Decode instruction: determine its size & action
3. Fetch operand data
4. Execute instruction & compute results or status
5. Store result in memory
6. Determine next instruction's address
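The six steps of the execution cycle can be sketched as a loop over a toy machine. Everything about this machine is an assumption made for illustration (the tuple instruction format, the three opcodes, memory-to-memory operands); it is not MIPS and not a model from the course.

```python
def run(program, memory):
    """Execute a toy program and return the final memory contents.

    Each instruction is a hypothetical tuple: (opcode, dest, src1, src2),
    where dest/src1/src2 are indices into `memory`.
    """
    pc = 0  # program counter: index of the next instruction
    while pc < len(program):
        instruction = program[pc]                # 1. fetch instruction
        opcode, dest, src1, src2 = instruction   # 2. decode
        if opcode == "halt":
            break
        a, b = memory[src1], memory[src2]        # 3. fetch operands
        if opcode == "add":                      # 4. execute
            result = a + b
        elif opcode == "sub":
            result = a - b
        else:
            raise ValueError(f"unknown opcode: {opcode}")
        memory[dest] = result                    # 5. store result
        pc += 1                                  # 6. determine next address
    return memory


program = [("add", 2, 0, 1), ("sub", 0, 2, 0), ("halt", 0, 0, 0)]
print(run(program, [2, 3, 0]))
```

A real processor performs the same steps in hardware, and a branch instruction would change step 6 by writing a different value into the program counter.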

What Should We Learn?

- A specific ISA (MIPS)
- Performance issues: vocabulary and motivation
- Instruction-level parallelism
- How to use pipelining to improve performance
- Exploiting instruction-level parallelism with a software approach
- Memory: caches and virtual memory
- I/O

What is Expected From You?

- Read textbook & readings!
- Be up-to-date!
- Come back with your input & questions for discussion!
- Appreciate and participate in teamwork!

Power?

- Everything is done by tiny switches
- Their charge represents logic values
- Changing charge → energy
- Power: energy over time
- Devices are non-ideal: power → heat
- Excess heat → circuits break down
- Need to keep power within acceptable limits

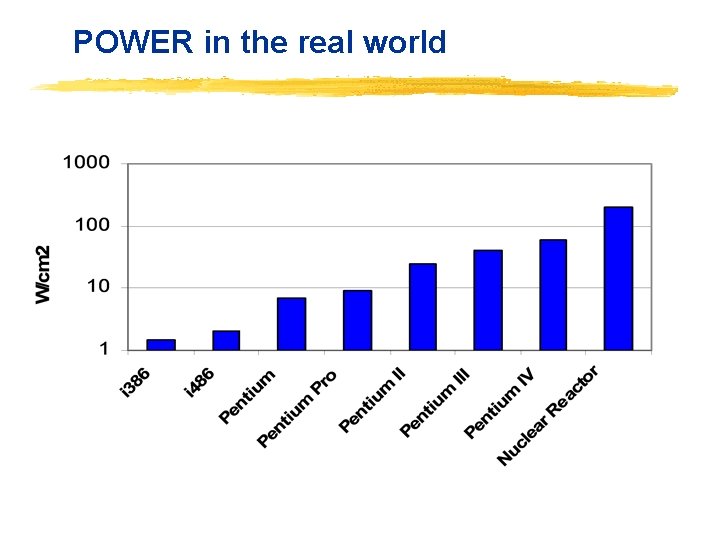

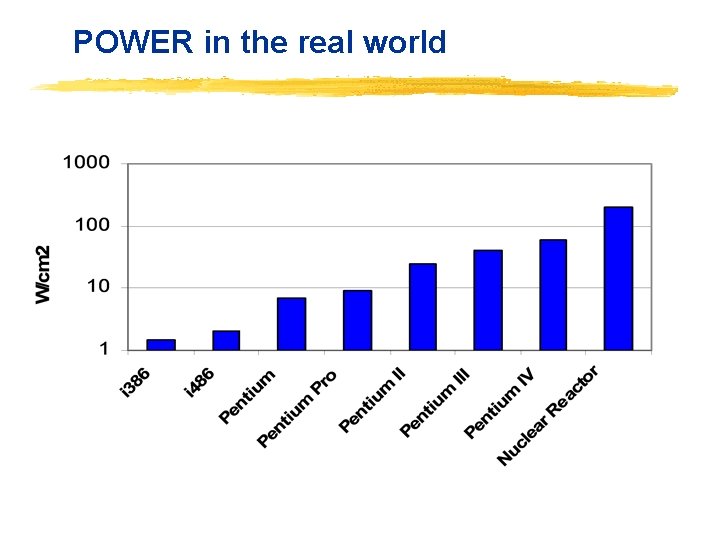

POWER in the real world

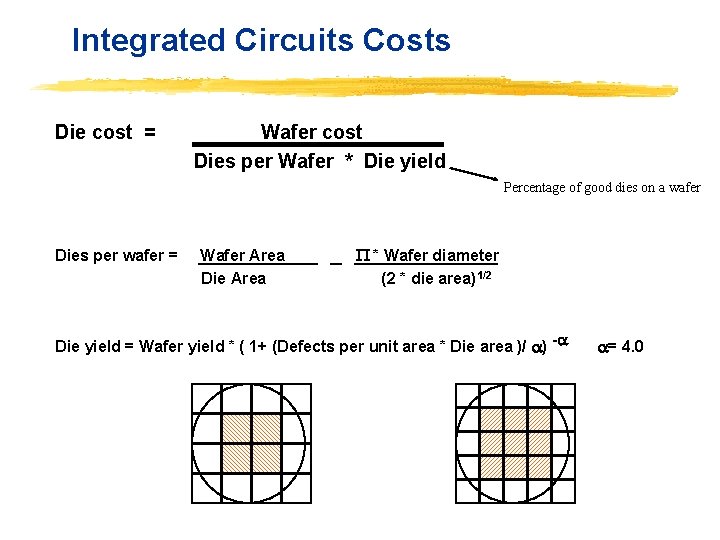

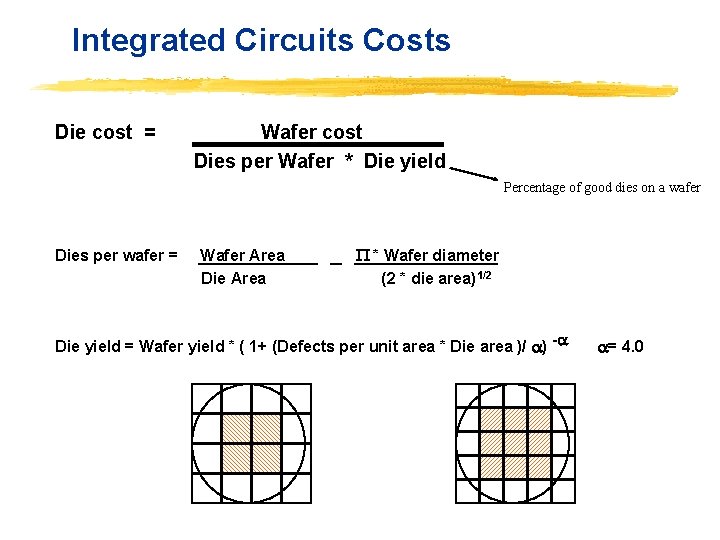

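Integrated Circuits Costs

$$\text{Die cost} = \frac{\text{Wafer cost}}{\text{Dies per wafer} \times \text{Die yield}}$$

Die yield is the percentage of good dies on a wafer.

$$\text{Dies per wafer} = \frac{\text{Wafer area}}{\text{Die area}} - \frac{\pi \times \text{Wafer diameter}}{(2 \times \text{Die area})^{1/2}}$$

$$\text{Die yield} = \text{Wafer yield} \times \left(1 + \frac{\text{Defects per unit area} \times \text{Die area}}{\alpha}\right)^{-\alpha}, \qquad \alpha = 4.0$$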

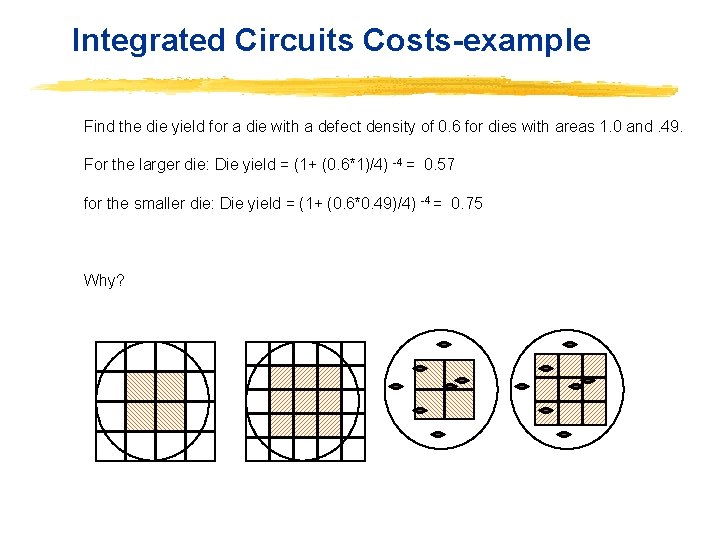

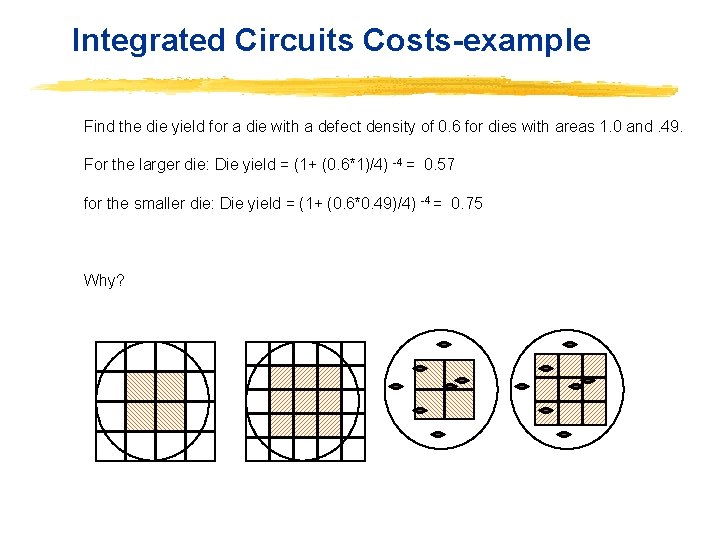

Integrated Circuits Costs: example

Find the die yield for a defect density of 0.6 and dies with areas 1.0 and 0.49.

For the larger die:

$$\text{Die yield} = \left(1 + \frac{0.6 \times 1}{4}\right)^{-4} = 0.57$$

For the smaller die:

$$\text{Die yield} = \left(1 + \frac{0.6 \times 0.49}{4}\right)^{-4} = 0.75$$

Why?
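The two yields in this example can be checked with a few lines of code. This is a minimal sketch of the cost-model formulas, assuming a wafer yield of 1.0 (as the worked example implicitly does) and α = 4.0; the function and parameter names are illustrative.

```python
import math


def dies_per_wafer(wafer_diameter: float, die_area: float) -> float:
    """Wafer area over die area, minus the partial dies lost along the edge."""
    wafer_area = math.pi * (wafer_diameter / 2) ** 2
    edge_loss = math.pi * wafer_diameter / math.sqrt(2 * die_area)
    return wafer_area / die_area - edge_loss


def die_yield(defect_density: float, die_area: float,
              wafer_yield: float = 1.0, alpha: float = 4.0) -> float:
    """Fraction of good dies; wafer_yield=1.0 is an assumption."""
    return wafer_yield * (1 + defect_density * die_area / alpha) ** (-alpha)


for area in (1.0, 0.49):
    print(f"die area {area}: yield = {die_yield(0.6, area):.2f}")
```

The smaller die yields better because each die covers less area and is therefore less likely to contain a defect, and more of the smaller dies also fit on a wafer.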

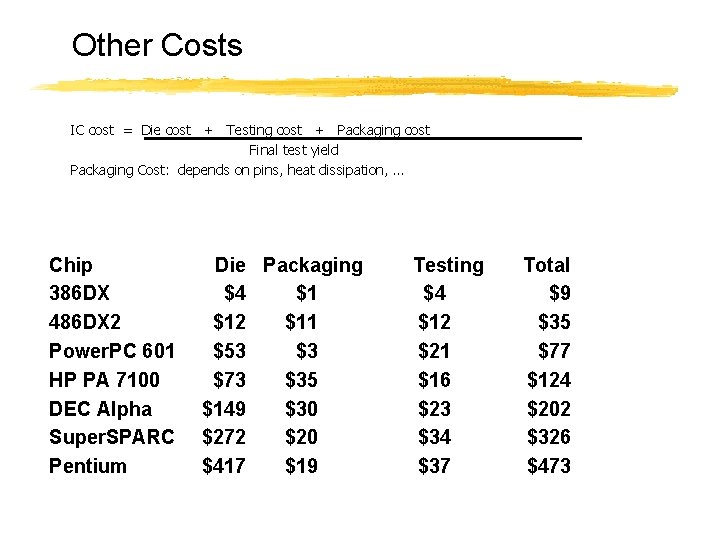

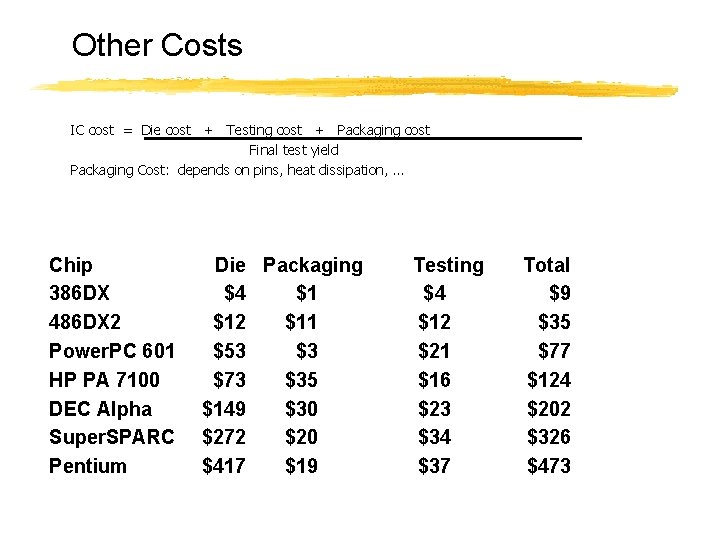

Other Costs

$$\text{IC cost} = \frac{\text{Die cost} + \text{Testing cost} + \text{Packaging cost}}{\text{Final test yield}}$$

Packaging cost depends on pins, heat dissipation, ...

| Chip | Die | Packaging | Testing | Total |
|---|---|---|---|---|
| 386DX | $4 | $1 | $4 | $9 |
| 486DX2 | $12 | $11 | $12 | $35 |
| PowerPC 601 | $53 | $3 | $21 | $77 |
| HP PA 7100 | $73 | $35 | $16 | $124 |
| DEC Alpha | $149 | $30 | $23 | $202 |
| SuperSPARC | $272 | $20 | $34 | $326 |
| Pentium | $417 | $19 | $37 | $473 |
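As a quick check of the IC cost formula, the sketch below recomputes two totals from the chip table. It assumes a final test yield of 1.0, which is what makes each listed total equal the plain sum of die, packaging, and testing cost; the function name is illustrative.

```python
def ic_cost(die_cost: float, testing_cost: float, packaging_cost: float,
            final_test_yield: float = 1.0) -> float:
    """IC cost = (die + testing + packaging) / final test yield."""
    return (die_cost + testing_cost + packaging_cost) / final_test_yield


print(ic_cost(4, 4, 1))       # 386DX row: die $4, testing $4, packaging $1
print(ic_cost(417, 37, 19))   # Pentium row: die $417, testing $37, packaging $19
```

A final test yield below 1.0 raises the cost of every good chip, because the packaged parts that fail the final test still have to be paid for.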

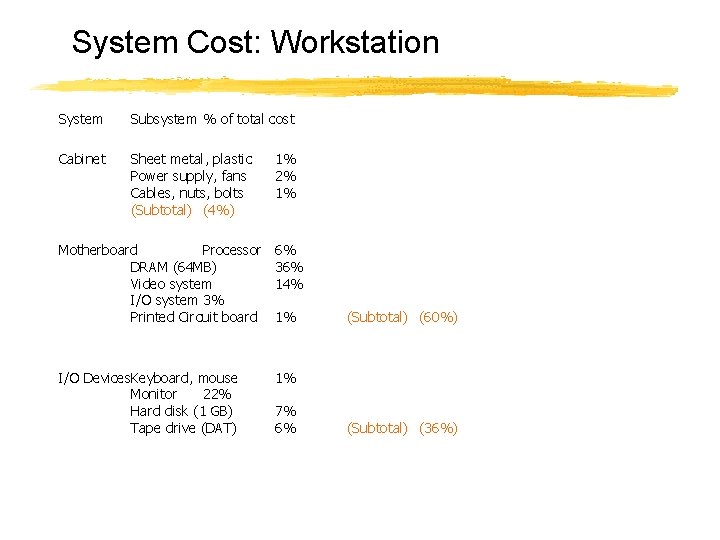

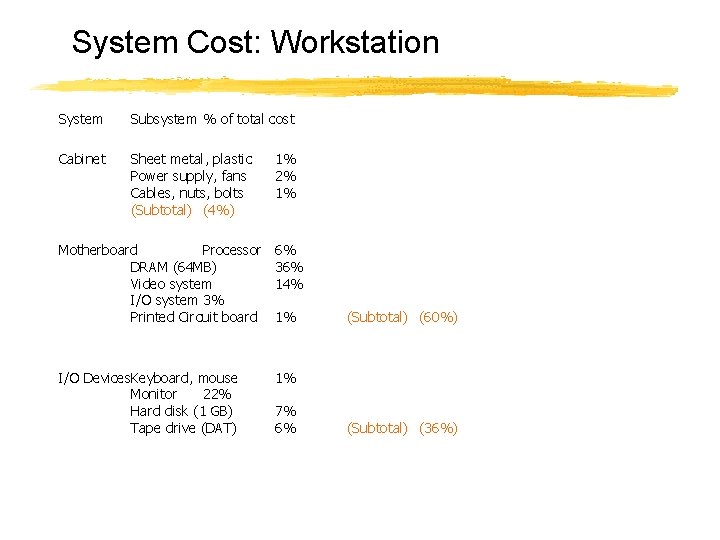

System Cost: Workstation

| System | Subsystem | % of total cost |
|---|---|---|
| Cabinet | Sheet metal, plastic | 1% |
| | Power supply, fans | 2% |
| | Cables, nuts, bolts | 1% |
| | (Subtotal) | (4%) |
| Motherboard | Processor | 6% |
| | DRAM (64 MB) | 36% |
| | Video system | 14% |
| | I/O system | 3% |
| | Printed circuit board | 1% |
| | (Subtotal) | (60%) |
| I/O devices | Keyboard, mouse | 1% |
| | Monitor | 22% |
| | Hard disk (1 GB) | 7% |
| | Tape drive (DAT) | 6% |
| | (Subtotal) | (36%) |

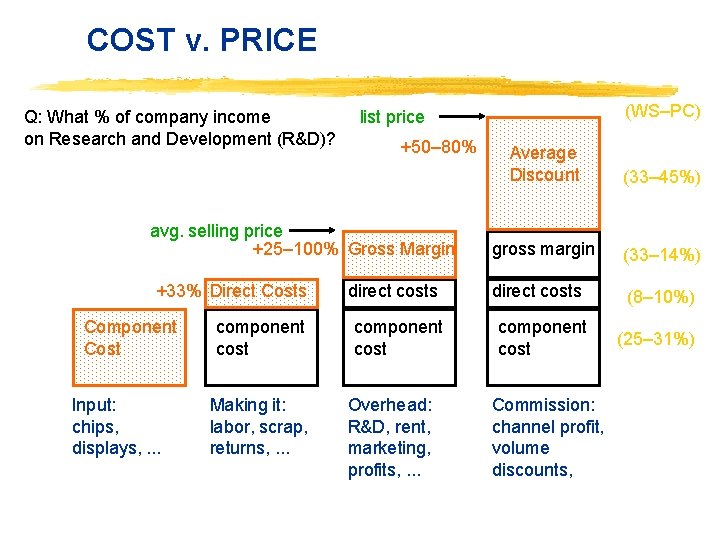

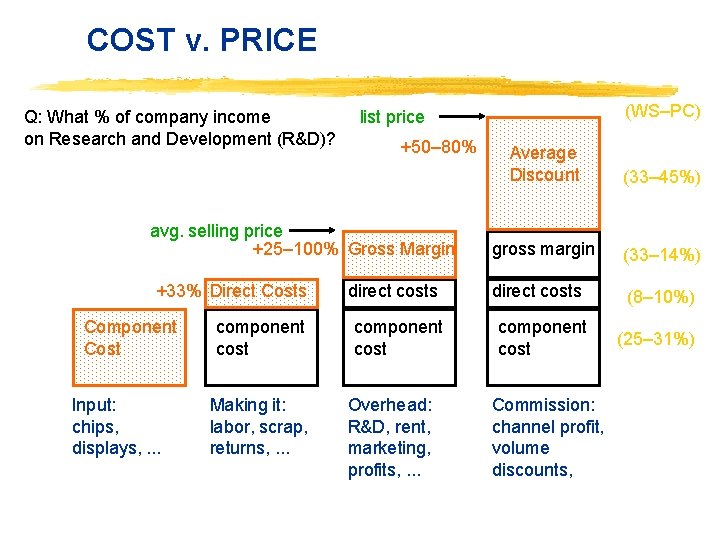

COST v. PRICE

Q: What % of company income goes to Research and Development (R&D)?

Building up from component cost to list price (percentages in parentheses are shares of list price, given as a workstation–PC range):

- Component cost (25–31%). Input: chips, displays, ...
- Direct costs, +33% (8–10%). Making it: labor, scrap, returns, ...
- Gross margin, +25–100% (33–14%). Overhead: R&D, rent, marketing, profits, ...
- Average discount, +50–80% (33–45%). Commission: channel profit, volume discounts, ...

Component cost + direct costs + gross margin = average selling price; average selling price + average discount = list price.

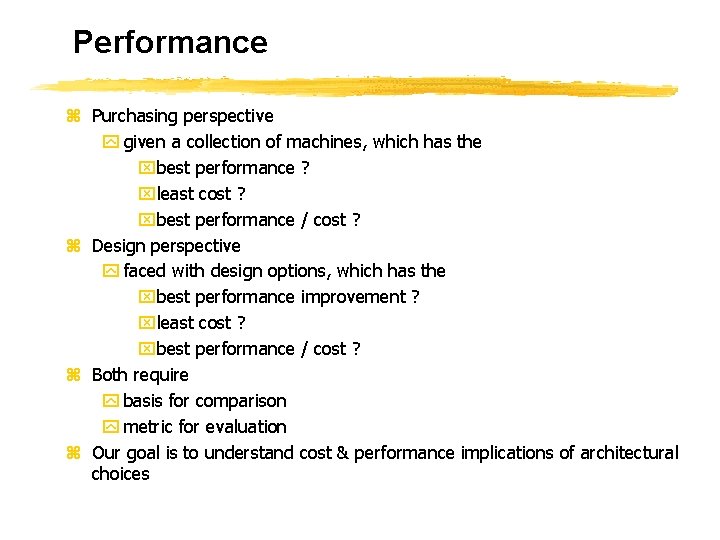

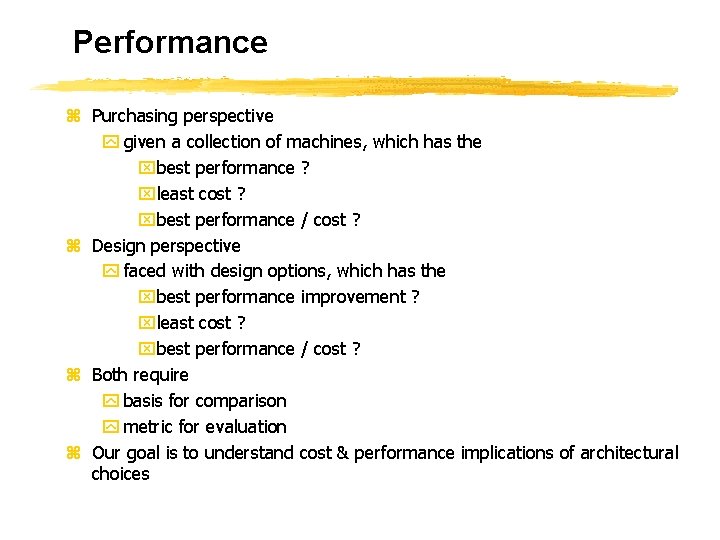

Performance

- Purchasing perspective
  - Given a collection of machines, which has the
    - best performance?
    - least cost?
    - best performance / cost?
- Design perspective
  - Faced with design options, which has the
    - best performance improvement?
    - least cost?
    - best performance / cost?
- Both require
  - a basis for comparison
  - a metric for evaluation
- Our goal is to understand the cost & performance implications of architectural choices

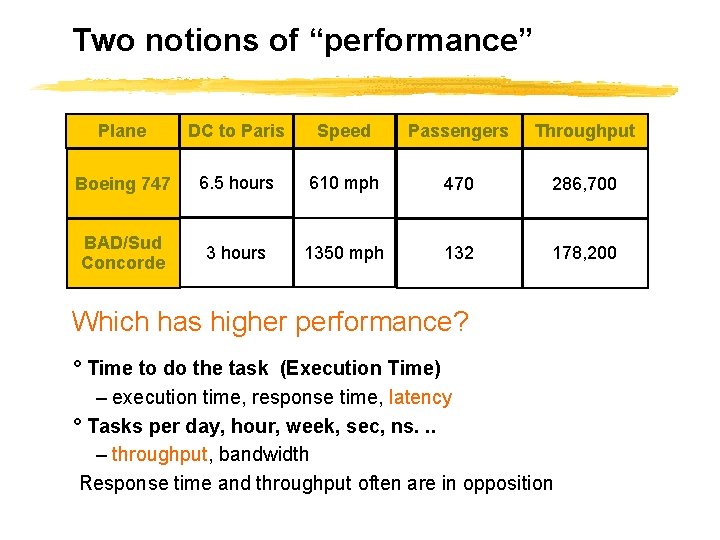

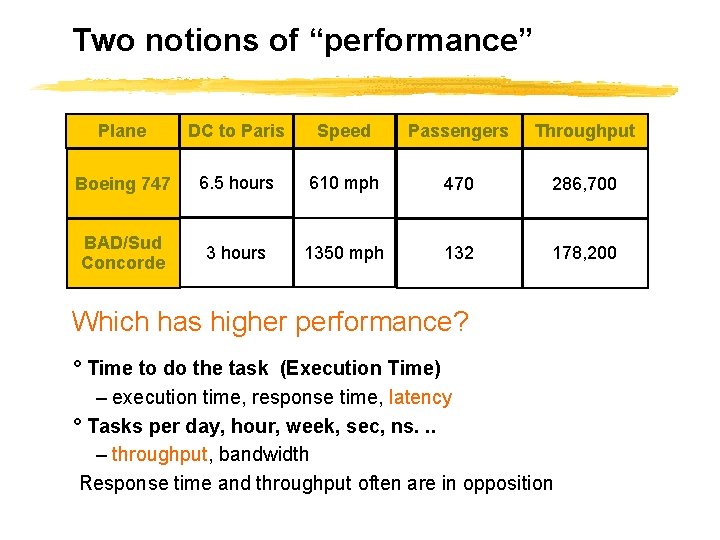

Two notions of “performance”

| Plane | DC to Paris | Speed | Passengers | Throughput (pmph) |
|---|---|---|---|---|
| Boeing 747 | 6.5 hours | 610 mph | 470 | 286,700 |
| BAC/Sud Concorde | 3 hours | 1350 mph | 132 | 178,200 |

Which has higher performance?

- Time to do the task (execution time)
  - execution time, response time, latency
- Tasks per day, hour, week, sec, ns, ...
  - throughput, bandwidth

Response time and throughput are often in opposition.

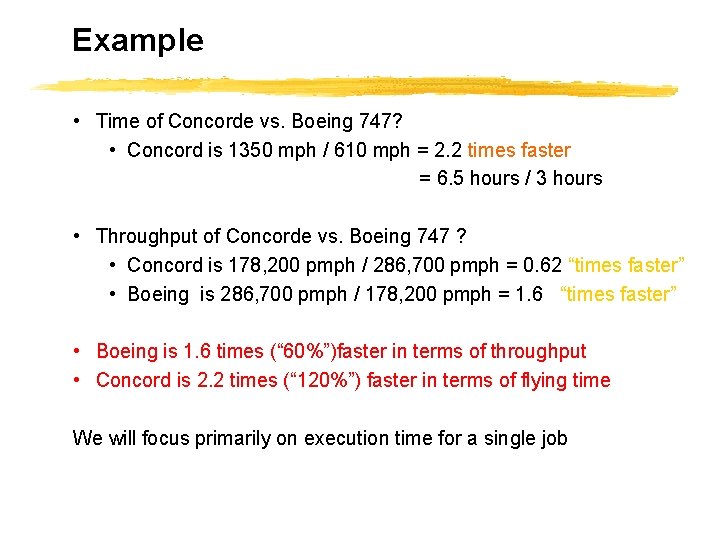

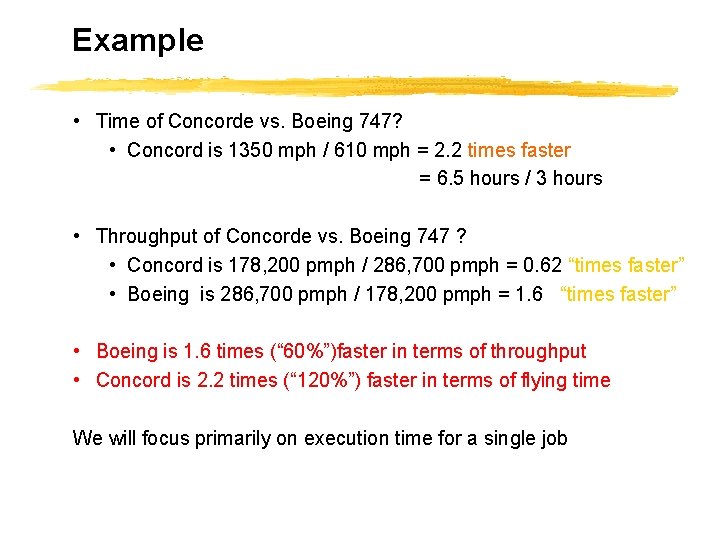

Example

- Time of Concorde vs. Boeing 747?
  - Concorde is 1350 mph / 610 mph = 2.2 times faster (= 6.5 hours / 3 hours)
- Throughput of Concorde vs. Boeing 747?
  - Concorde is 178,200 pmph / 286,700 pmph = 0.62 “times faster”
  - Boeing is 286,700 pmph / 178,200 pmph = 1.6 “times faster”
- Boeing is 1.6 times (“60%”) faster in terms of throughput
- Concorde is 2.2 times (“120%”) faster in terms of flying time

We will focus primarily on execution time for a single job.
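The ratios in this example follow directly from the plane data. The short sketch below recomputes them; the dictionary layout and the function name `throughput_pmph` are illustrative choices, and throughput is taken as passengers times speed (passenger-miles per hour).

```python
planes = {
    "Boeing 747": {"hours": 6.5, "mph": 610, "passengers": 470},
    "Concorde": {"hours": 3.0, "mph": 1350, "passengers": 132},
}


def throughput_pmph(plane: dict) -> int:
    """Passenger-miles per hour: passengers carried times cruising speed."""
    return plane["passengers"] * plane["mph"]


boeing, concorde = planes["Boeing 747"], planes["Concorde"]

# Latency view: the ratio of execution (flying) times.
time_ratio = boeing["hours"] / concorde["hours"]

# Throughput view: the ratio of passenger-miles per hour.
throughput_ratio = throughput_pmph(boeing) / throughput_pmph(concorde)

print(f"Concorde is {time_ratio:.1f}x faster in flying time")
print(f"Boeing is {throughput_ratio:.1f}x faster in throughput")
```

Which plane "wins" depends entirely on whether the metric is time per task or tasks per unit time.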

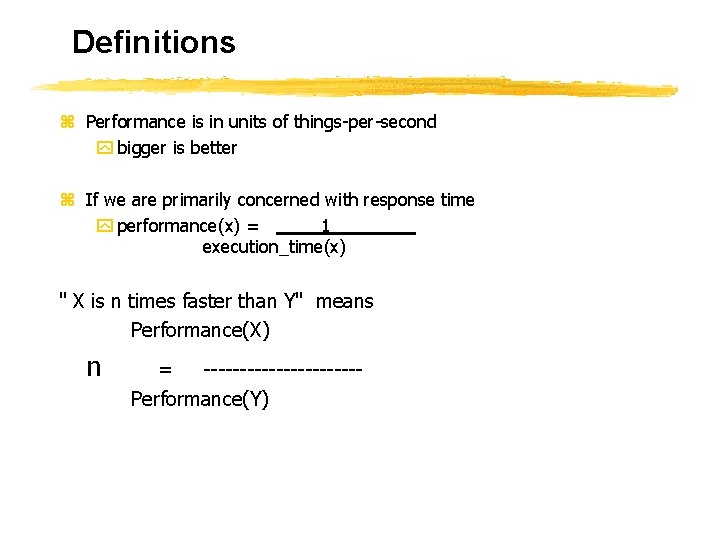

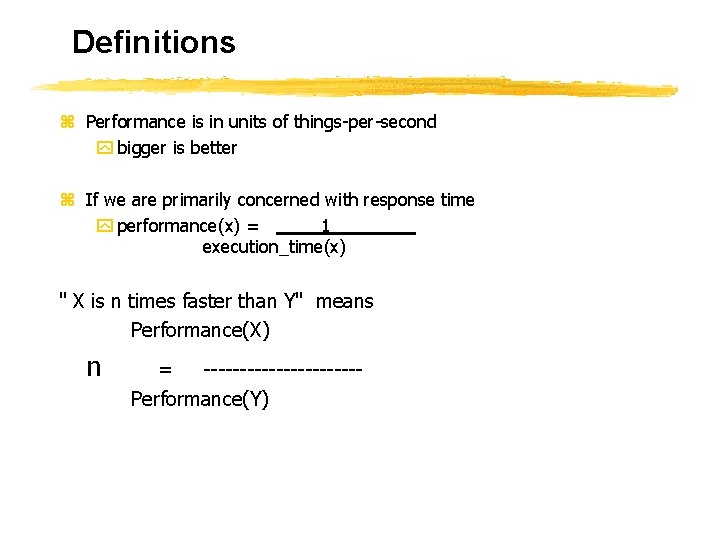

Definitions

- Performance is in units of things-per-second
  - bigger is better
- If we are primarily concerned with response time:

$$\text{performance}(x) = \frac{1}{\text{execution\_time}(x)}$$

- "X is n times faster than Y" means

$$n = \frac{\text{Performance}(X)}{\text{Performance}(Y)} = \frac{\text{execution\_time}(Y)}{\text{execution\_time}(X)}$$

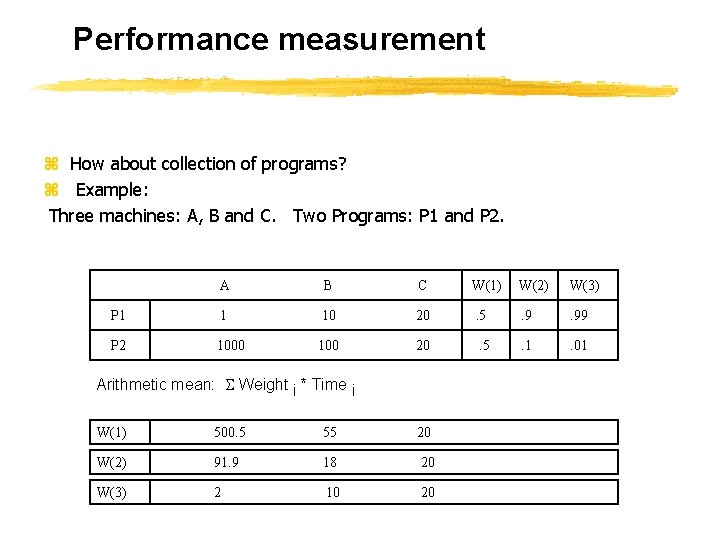

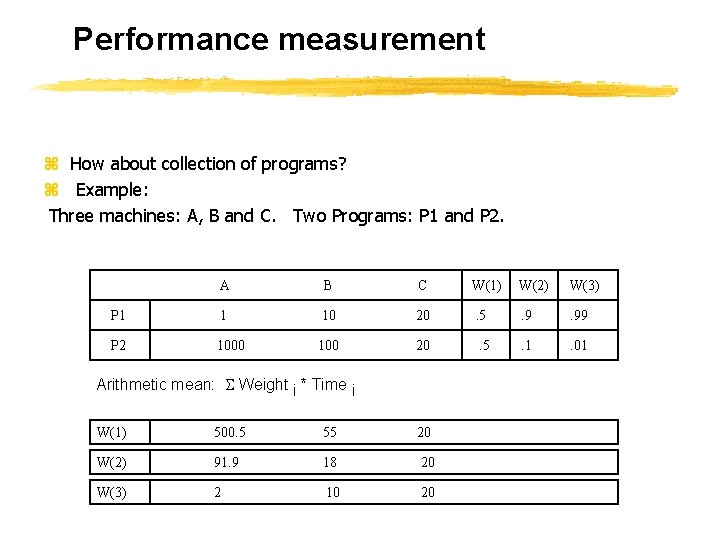

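Performance measurement

- How about a collection of programs?
- Example: three machines (A, B, and C) and two programs (P1 and P2). Execution times are listed per machine, with three alternative weightings W(1), W(2), W(3) of the two programs.

| | A | B | C | W(1) | W(2) | W(3) |
|---|---|---|---|---|---|---|
| P1 | 1 | 10 | 20 | 0.5 | 0.909 | 0.999 |
| P2 | 1000 | 100 | 20 | 0.5 | 0.091 | 0.001 |

Weighted arithmetic mean: $\sum_i \text{Weight}_i \times \text{Time}_i$

| | A | B | C |
|---|---|---|---|
| W(1) | 500.5 | 55 | 20 |
| W(2) | 91.9 | 18 | 20 |
| W(3) | 2 | 10 | 20 |

Performance measurement

- Other option: geometric means (self-study, pages 37-39 of the textbook)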
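The weighted arithmetic means can be recomputed as a sanity check. The sketch below assumes the weight pairs (0.5, 0.5), (0.909, 0.091), and (0.999, 0.001) for (P1, P2); these are the values that reproduce the listed means, and the variable names are illustrative.

```python
# Execution times of programs P1 and P2 on each machine.
times = {"A": (1, 1000), "B": (10, 100), "C": (20, 20)}

# Assumed weightings (P1 weight, P2 weight); each pair sums to 1.
weightings = {
    "W(1)": (0.5, 0.5),
    "W(2)": (0.909, 0.091),
    "W(3)": (0.999, 0.001),
}


def weighted_mean(weights, values):
    """Weighted arithmetic mean: sum of weight_i * time_i."""
    return sum(w * v for w, v in zip(weights, values))


for label, weights in weightings.items():
    row = {m: round(weighted_mean(weights, t), 2) for m, t in times.items()}
    print(label, row)
```

The ranking of the three machines changes with the weighting, which is why a single summary number has to state the workload it assumes.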

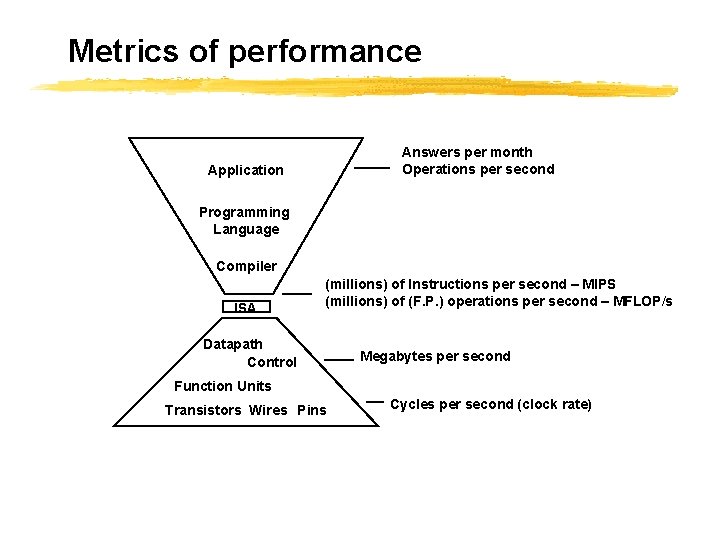

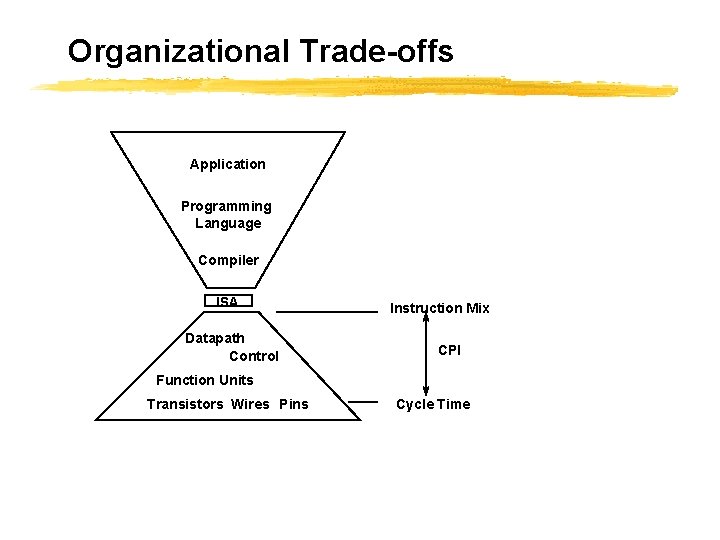

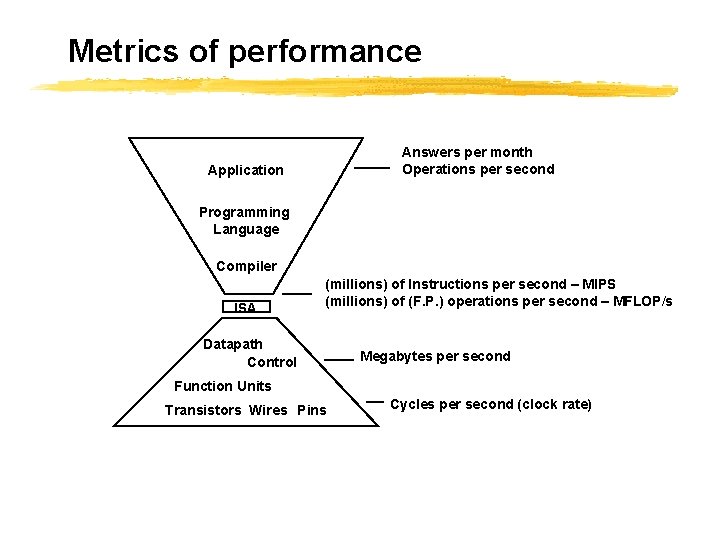

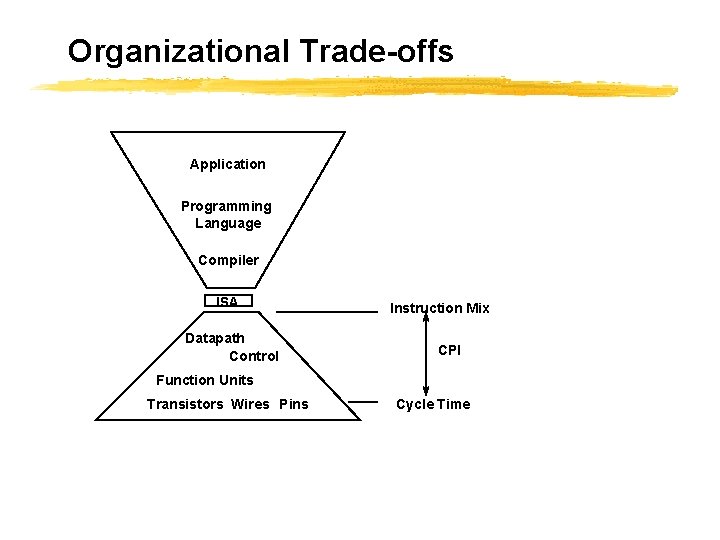

Metrics of performance

- Application: answers per month, operations per second
- Programming language, compiler, ISA: (millions of) instructions per second (MIPS); (millions of) floating-point operations per second (MFLOP/s)
- Datapath, control: megabytes per second
- Function units; transistors, wires, pins: cycles per second (clock rate)

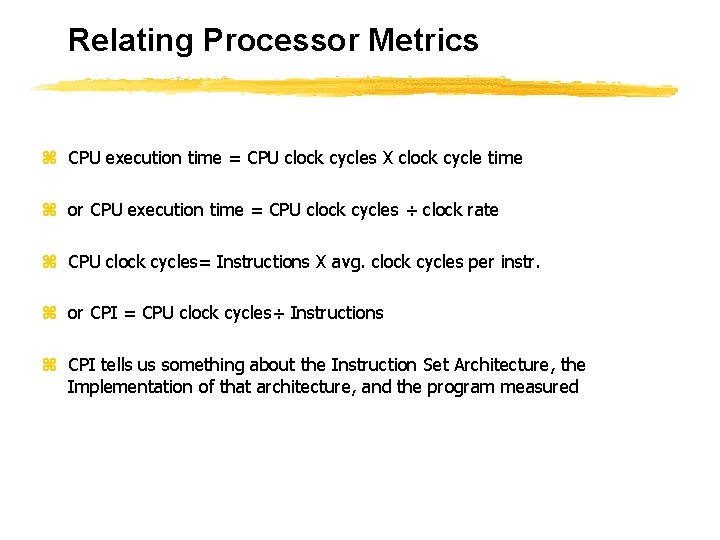

Relating Processor Metrics

- CPU execution time = CPU clock cycles × clock cycle time
- or CPU execution time = CPU clock cycles ÷ clock rate
- CPU clock cycles = Instructions × avg. clock cycles per instruction (CPI)
- or CPI = CPU clock cycles ÷ Instructions
- CPI tells us something about the instruction set architecture, the implementation of that architecture, and the program measured
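These relations chain together into a single calculation. The sketch below is an illustration only: the instruction count, CPI, and clock rate are made-up inputs, and the function names are assumptions.

```python
def cpu_clock_cycles(instruction_count: float, cpi: float) -> float:
    """CPU clock cycles = instructions x average cycles per instruction."""
    return instruction_count * cpi


def cpu_time(instruction_count: float, cpi: float, clock_rate_hz: float) -> float:
    """CPU execution time = CPU clock cycles / clock rate."""
    return cpu_clock_cycles(instruction_count, cpi) / clock_rate_hz


# Hypothetical program: 1 billion instructions, CPI of 2.2, 500 MHz clock.
seconds = cpu_time(1e9, 2.2, 500e6)
print(f"CPU time: {seconds:.2f} s")
```

Halving the CPI or doubling the clock rate would each halve the CPU time, provided the other two factors stay fixed.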

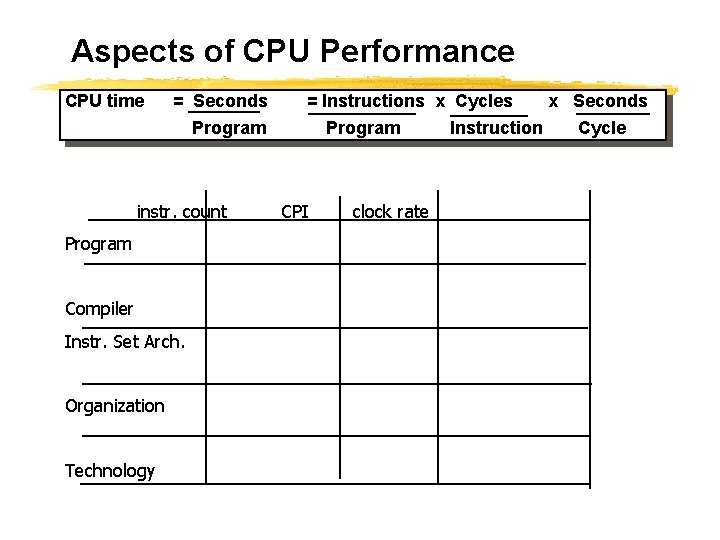

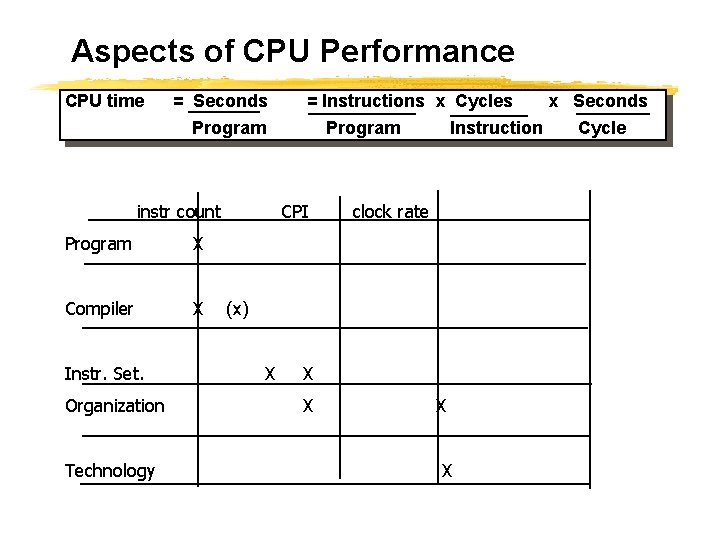

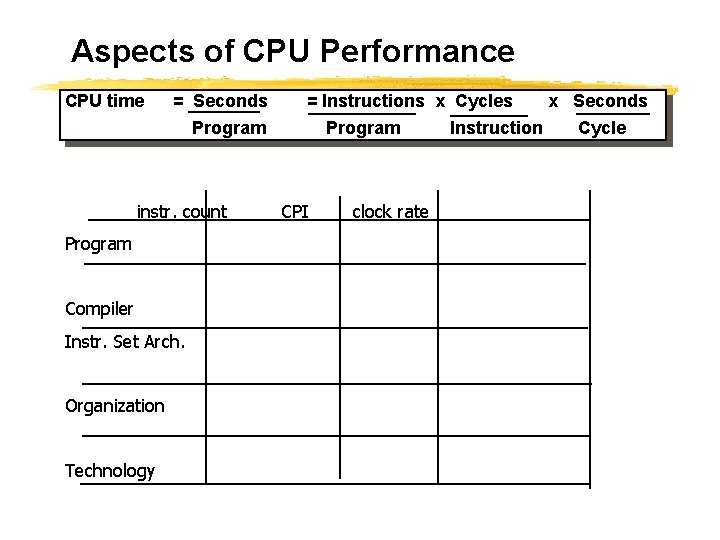

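Aspects of CPU Performance

$$\text{CPU time} = \frac{\text{Seconds}}{\text{Program}} = \frac{\text{Instructions}}{\text{Program}} \times \frac{\text{Cycles}}{\text{Instruction}} \times \frac{\text{Seconds}}{\text{Cycle}}$$

| | Instr. count | CPI | Clock rate |
|---|---|---|---|
| Program | | | |
| Compiler | | | |
| Instr. Set Arch. | | | |
| Organization | | | |
| Technology | | | |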

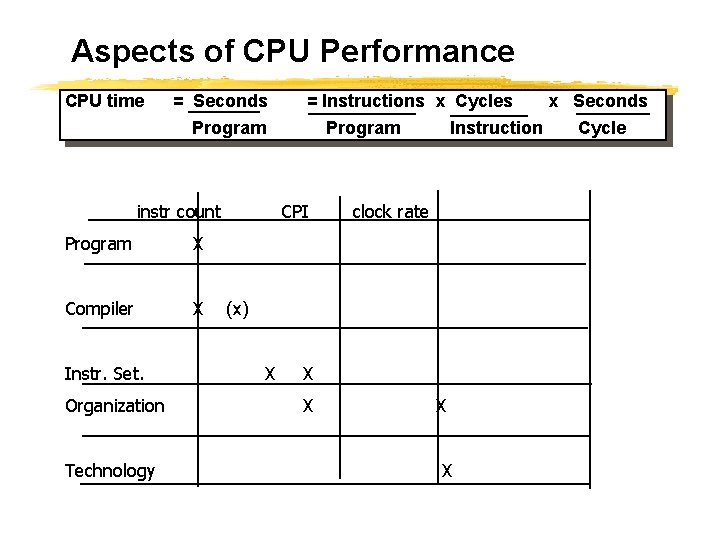

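Aspects of CPU Performance

$$\text{CPU time} = \frac{\text{Seconds}}{\text{Program}} = \frac{\text{Instructions}}{\text{Program}} \times \frac{\text{Cycles}}{\text{Instruction}} \times \frac{\text{Seconds}}{\text{Cycle}}$$

| | Instr. count | CPI | Clock rate |
|---|---|---|---|
| Program | X | | |
| Compiler | X | (X) | |
| Instr. Set | X | X | |
| Organization | | X | X |
| Technology | | | X |

Organizational Trade-offs

Figure: the abstraction stack (Application, Programming Language, Compiler, ISA, Datapath and Control, Function Units, Transistors/Wires/Pins) annotated with the three quantities traded off across it: instruction mix, CPI, and cycle time.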

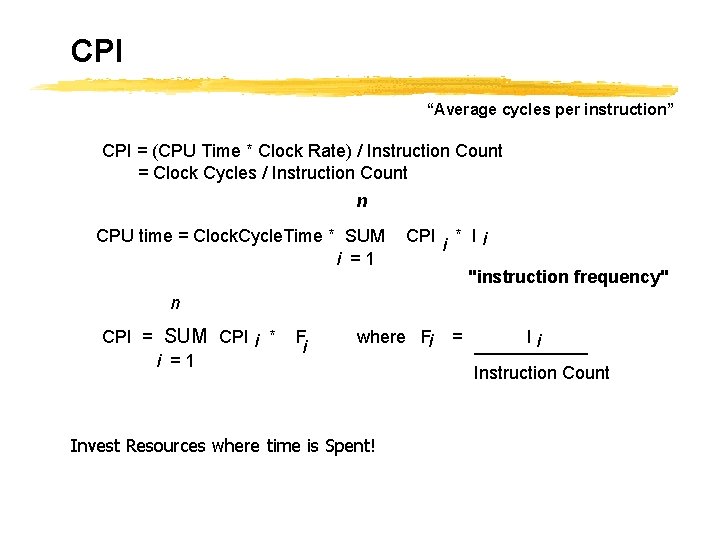

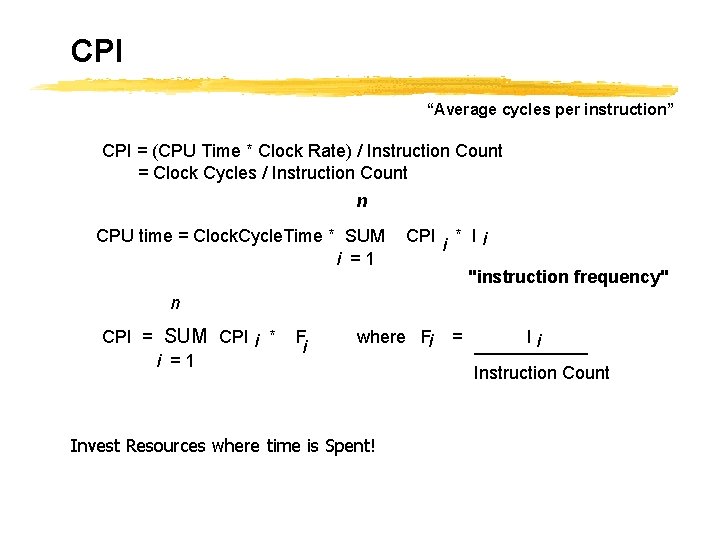

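CPI: “Average cycles per instruction”

$$\text{CPI} = \frac{\text{CPU time} \times \text{Clock rate}}{\text{Instruction count}} = \frac{\text{Clock cycles}}{\text{Instruction count}}$$

$$\text{CPU time} = \text{Clock cycle time} \times \sum_{i=1}^{n} \text{CPI}_i \times I_i$$

In terms of "instruction frequency":

$$\text{CPI} = \sum_{i=1}^{n} \text{CPI}_i \times F_i \qquad \text{where } F_i = \frac{I_i}{\text{Instruction count}}$$

Invest resources where time is spent!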
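The two CPI formulas can be sketched from per-class instruction counts. The counts, cycle costs, and clock cycle time below are hypothetical, chosen only so the arithmetic is easy to follow; `I_i` and `CPI_i` appear as the two elements of each tuple.

```python
# Hypothetical instruction classes: (instruction count I_i, cycles CPI_i).
classes = [(50, 1), (20, 5), (10, 3), (20, 2)]


def average_cpi(classes) -> float:
    """CPI = sum(CPI_i * I_i) / instruction count."""
    total_instructions = sum(count for count, _ in classes)
    total_cycles = sum(count * cycles for count, cycles in classes)
    return total_cycles / total_instructions


def cpu_time(classes, clock_cycle_time: float) -> float:
    """CPU time = clock cycle time * sum(CPI_i * I_i)."""
    return clock_cycle_time * sum(count * cycles for count, cycles in classes)


print(average_cpi(classes))
print(cpu_time(classes, clock_cycle_time=2e-9))  # assumed 2 ns cycle
```

Dividing each count by the total gives the frequencies F_i, so the weighted sum over frequencies yields the same CPI.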

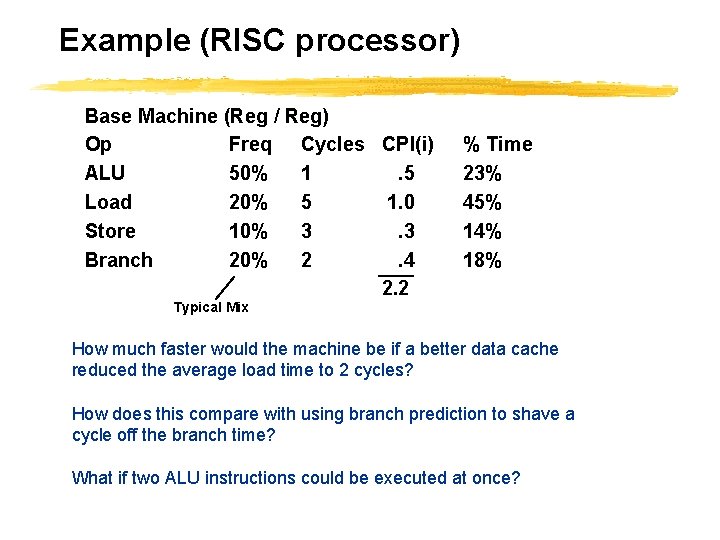

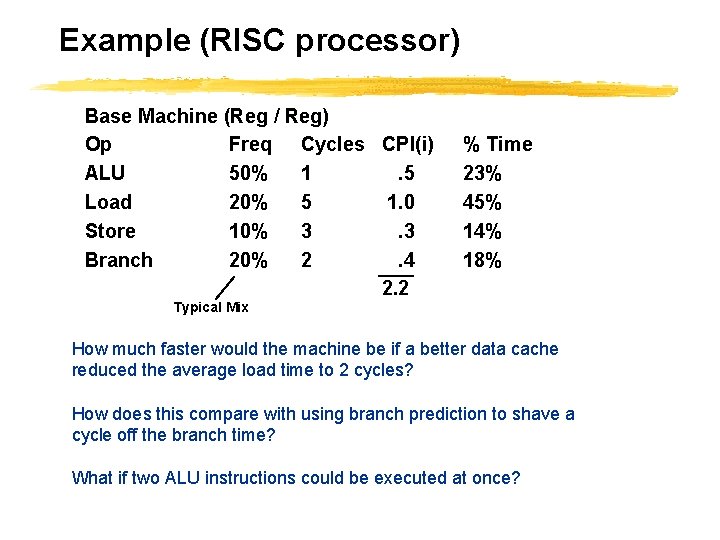

Example (RISC processor)

Base machine (Reg / Reg), typical mix:

| Op | Freq | Cycles | CPI(i) | % Time |
|---|---|---|---|---|
| ALU | 50% | 1 | 0.5 | 23% |
| Load | 20% | 5 | 1.0 | 45% |
| Store | 10% | 3 | 0.3 | 14% |
| Branch | 20% | 2 | 0.4 | 18% |
| Total | | | 2.2 | |

- How much faster would the machine be if a better data cache reduced the average load time to 2 cycles?
- How does this compare with using branch prediction to shave a cycle off the branch time?
- What if two ALU instructions could be executed at once?
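One way to work through the three questions is to recompute the overall CPI with a single entry changed and take the ratio to the base CPI of 2.2. The sketch below assumes instruction count and clock rate stay fixed, and it models "two ALU instructions at once" as an effective 0.5 cycles per ALU instruction, which is an interpretation rather than something the slide states.

```python
# Base machine: operation -> (frequency, cycles).
BASE_MIX = {"ALU": (0.5, 1), "Load": (0.2, 5), "Store": (0.1, 3), "Branch": (0.2, 2)}


def overall_cpi(mix) -> float:
    """CPI = sum over operation classes of frequency * cycles."""
    return sum(freq * cycles for freq, cycles in mix.values())


def speedup_if(op: str, new_cycles: float) -> float:
    """Speedup when one class changes its cycle count, all else fixed."""
    changed = dict(BASE_MIX)
    changed[op] = (BASE_MIX[op][0], new_cycles)
    return overall_cpi(BASE_MIX) / overall_cpi(changed)


print(f"loads in 2 cycles:     {speedup_if('Load', 2):.3f}x")
print(f"branches in 1 cycle:   {speedup_if('Branch', 1):.3f}x")
print(f"two ALU ops per cycle: {speedup_if('ALU', 0.5):.3f}x")
```

Under these assumptions the better data cache helps most, because loads account for the largest share of execution time.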

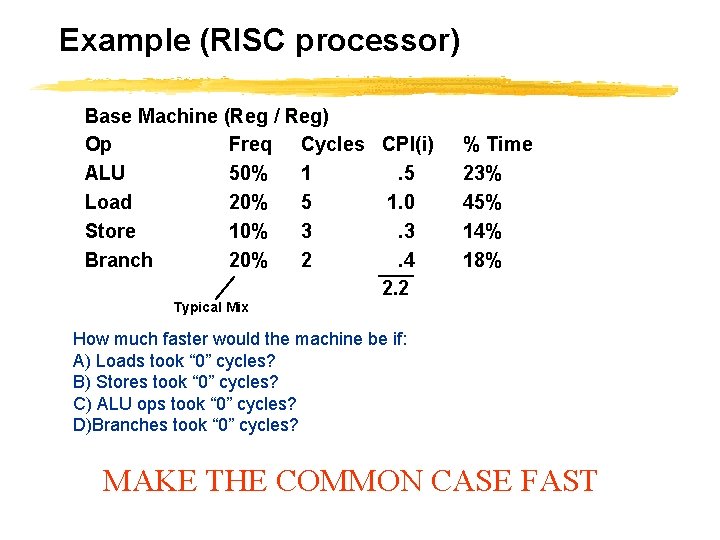

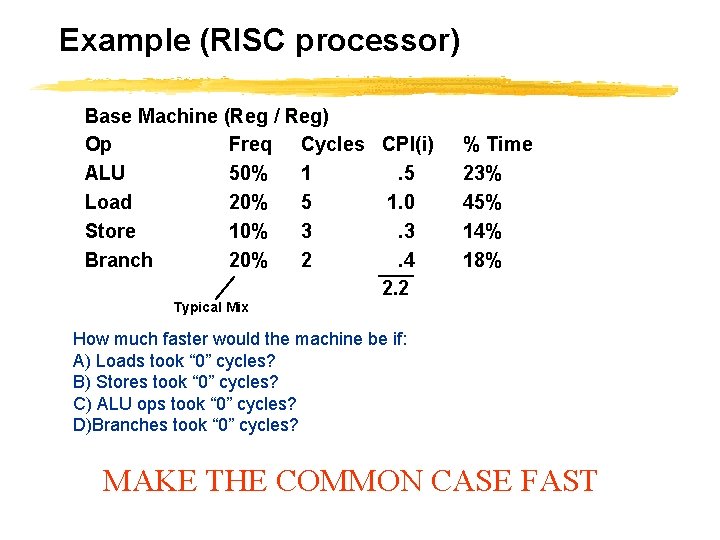

Example (RISC processor)

Base machine (Reg / Reg), typical mix:

| Op | Freq | Cycles | CPI(i) | % Time |
|---|---|---|---|---|
| ALU | 50% | 1 | 0.5 | 23% |
| Load | 20% | 5 | 1.0 | 45% |
| Store | 10% | 3 | 0.3 | 14% |
| Branch | 20% | 2 | 0.4 | 18% |
| Total | | | 2.2 | |

How much faster would the machine be if:

- A) Loads took “0” cycles?
- B) Stores took “0” cycles?
- C) ALU ops took “0” cycles?
- D) Branches took “0” cycles?

MAKE THE COMMON CASE FAST
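Making one class free removes its CPI contribution entirely, so the best possible speedup from improving that class alone is the base CPI divided by what remains. A minimal sketch, using the CPI(i) contributions from the example and an illustrative function name:

```python
# CPI contribution of each operation class on the base machine.
CPI_CONTRIBUTION = {"ALU": 0.5, "Load": 1.0, "Store": 0.3, "Branch": 0.4}
BASE_CPI = sum(CPI_CONTRIBUTION.values())


def speedup_if_free(op: str) -> float:
    """Upper bound on speedup when one class takes zero cycles."""
    return BASE_CPI / (BASE_CPI - CPI_CONTRIBUTION[op])


for op in ("Load", "Store", "ALU", "Branch"):
    print(f"{op} takes 0 cycles: {speedup_if_free(op):.2f}x")
```

Even an infinitely fast store unit gains little, while loads offer the largest bound, which is the point of "make the common case fast".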

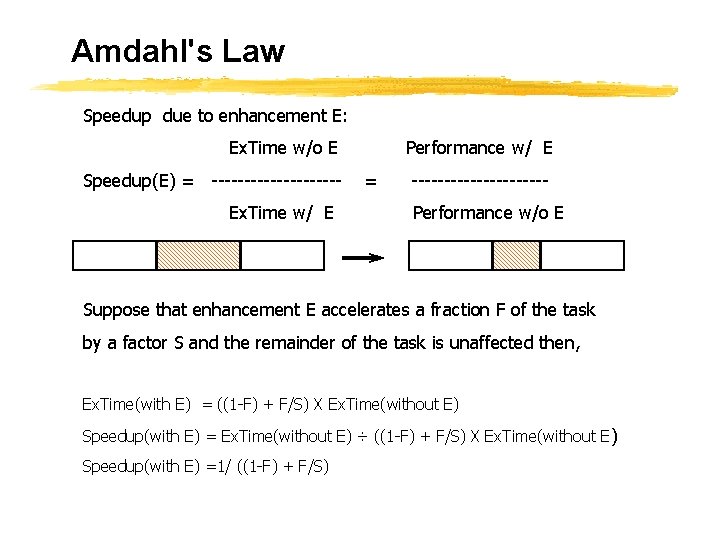

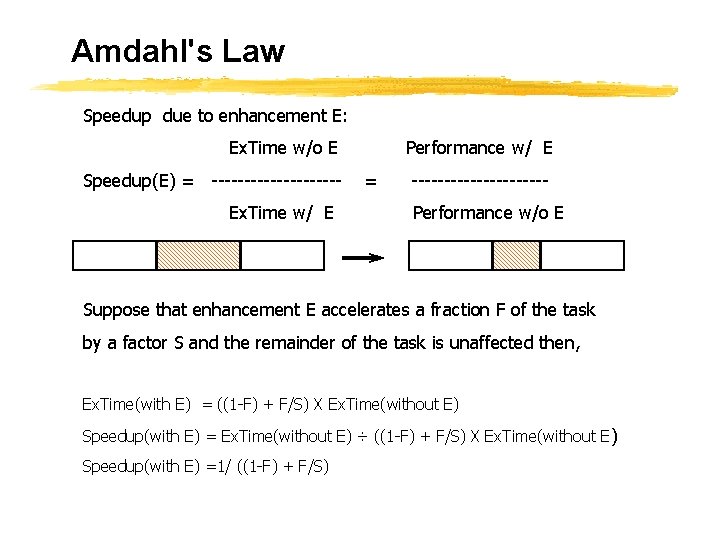

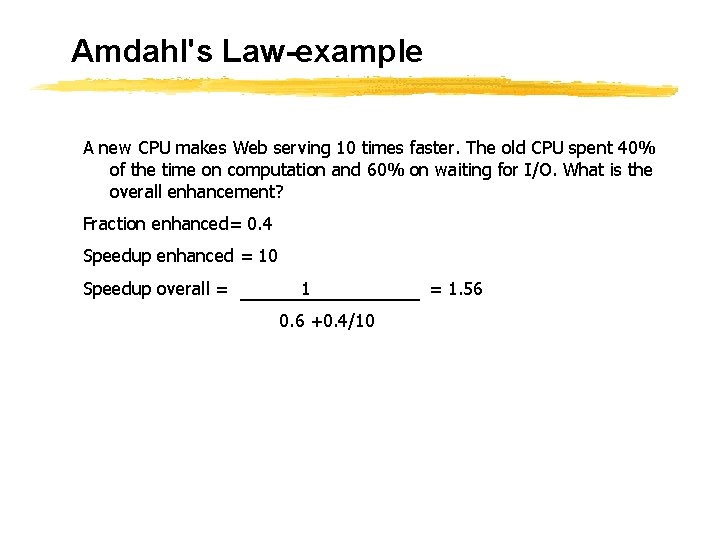

Amdahl's Law

Speedup due to enhancement E:

$$\text{Speedup}(E) = \frac{\text{ExTime w/o } E}{\text{ExTime w/ } E} = \frac{\text{Performance w/ } E}{\text{Performance w/o } E}$$

Suppose that enhancement E accelerates a fraction F of the task by a factor S, and the remainder of the task is unaffected. Then:

$$\text{ExTime}(\text{with } E) = \left((1-F) + \frac{F}{S}\right) \times \text{ExTime}(\text{without } E)$$

$$\text{Speedup}(\text{with } E) = \frac{\text{ExTime}(\text{without } E)}{\left((1-F) + \frac{F}{S}\right) \times \text{ExTime}(\text{without } E)} = \frac{1}{(1-F) + \frac{F}{S}}$$

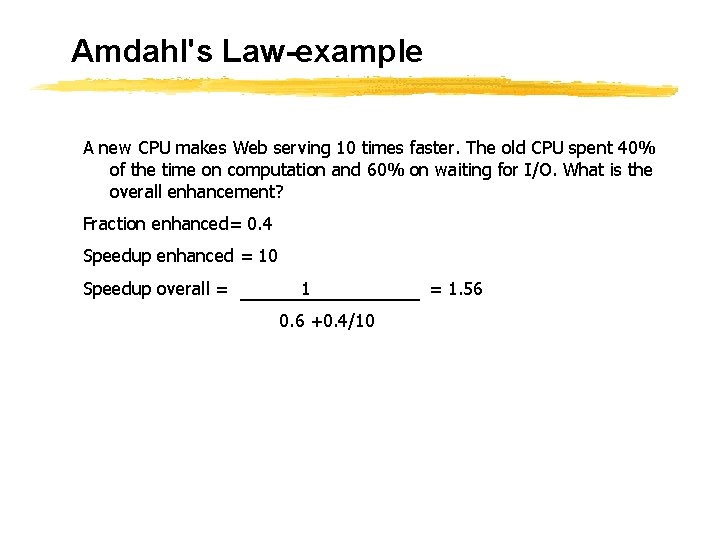

Amdahl's Law: example

A new CPU is 10 times faster on the computation in a Web-serving application. The old CPU spent 40% of the time on computation and 60% waiting for I/O. What is the overall speedup?

Fraction enhanced = 0.4, Speedup enhanced = 10

$$\text{Speedup}_{\text{overall}} = \frac{1}{0.6 + \frac{0.4}{10}} = \frac{1}{0.64} = 1.56$$
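The Web-serving example can be reproduced with a direct transcription of Amdahl's Law. The function name is illustrative; `fraction` and `factor` correspond to F and S.

```python
def amdahl_speedup(fraction: float, factor: float) -> float:
    """Overall speedup when `fraction` of the time is sped up by `factor`."""
    return 1.0 / ((1.0 - fraction) + fraction / factor)


# Web-serving example: 40% computation, made 10 times faster.
print(f"{amdahl_speedup(0.4, 10):.2f}")

# Even an arbitrarily fast CPU cannot beat 1 / (1 - F).
print(f"{amdahl_speedup(0.4, 1e12):.2f}")
```

The second call shows the limit: with 60% of the time spent waiting for I/O, no CPU improvement can push the overall speedup past about 1.67.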

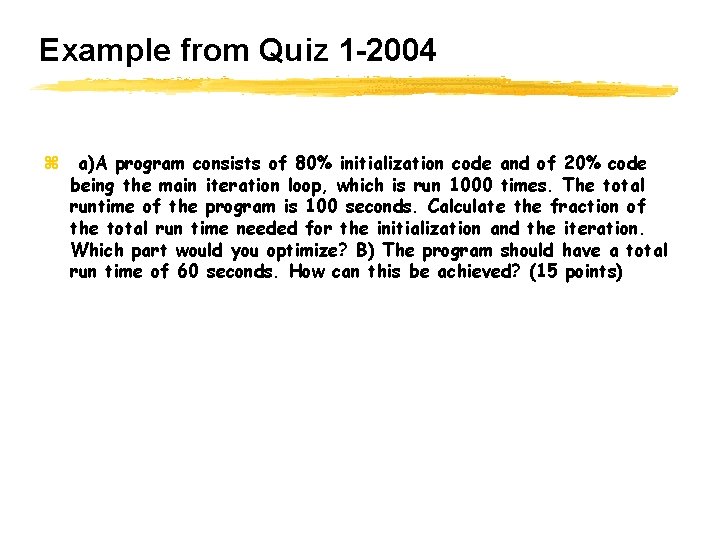

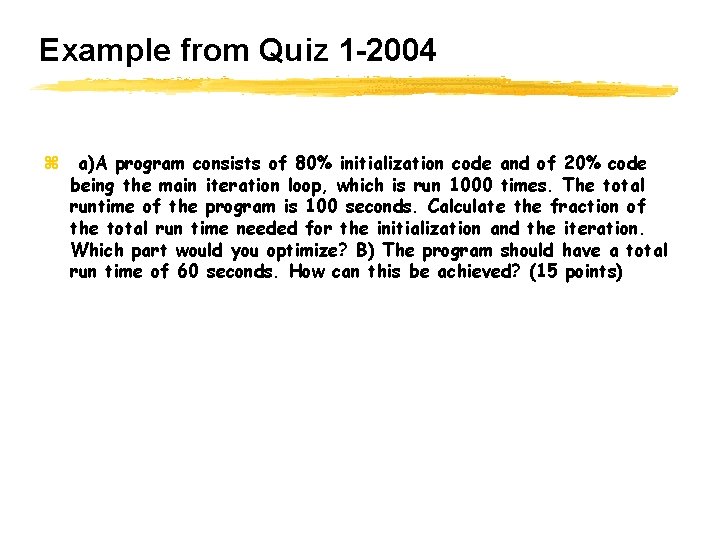

Example from Quiz 1, 2004

- a) A program consists of 80% initialization code and 20% code that forms the main iteration loop, which is run 1000 times. The total runtime of the program is 100 seconds. Calculate the fraction of the total run time needed for the initialization and for the iteration. Which part would you optimize?
- b) The program should have a total run time of 60 seconds. How can this be achieved? (15 points)
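One possible reading of this question can be worked through numerically. The sketch assumes that the 80%/20% split refers to static code size, that every unit of code takes the same time per execution, and that only the loop is optimized in part b); none of these assumptions is confirmed by the quiz text.

```python
INIT_SHARE, LOOP_SHARE = 0.8, 0.2   # share of the static code
ITERATIONS = 1000                   # times the loop body runs
TOTAL_SECONDS = 100.0
TARGET_SECONDS = 60.0

# Work executed, in units of "whole program's code run once".
init_work = INIT_SHARE
loop_work = LOOP_SHARE * ITERATIONS

# Part a): split the measured runtime in proportion to the work executed.
init_time = TOTAL_SECONDS * init_work / (init_work + loop_work)
loop_time = TOTAL_SECONDS - init_time

# Part b): speedup the loop needs for the whole program to hit the target.
required_loop_speedup = loop_time / (TARGET_SECONDS - init_time)

print(f"initialization: {init_time:.2f} s, loop: {loop_time:.2f} s")
print(f"loop must run {required_loop_speedup:.2f}x faster")
```

Under this reading, the initialization is a negligible part of the runtime, so the loop is the part to optimize.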

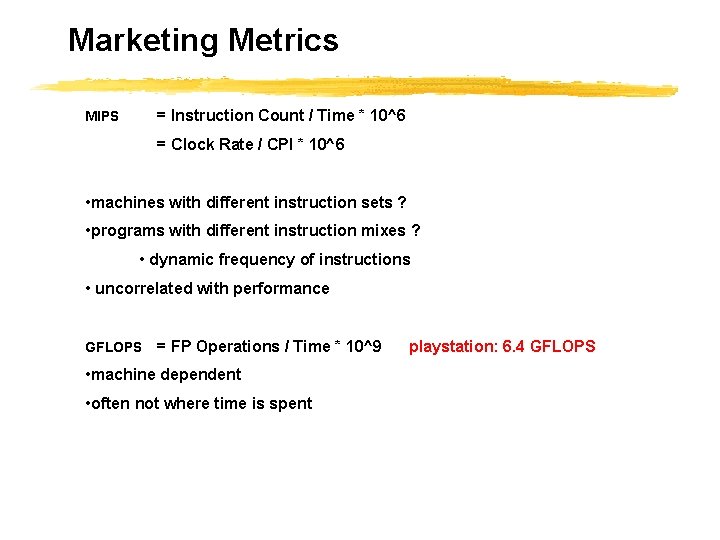

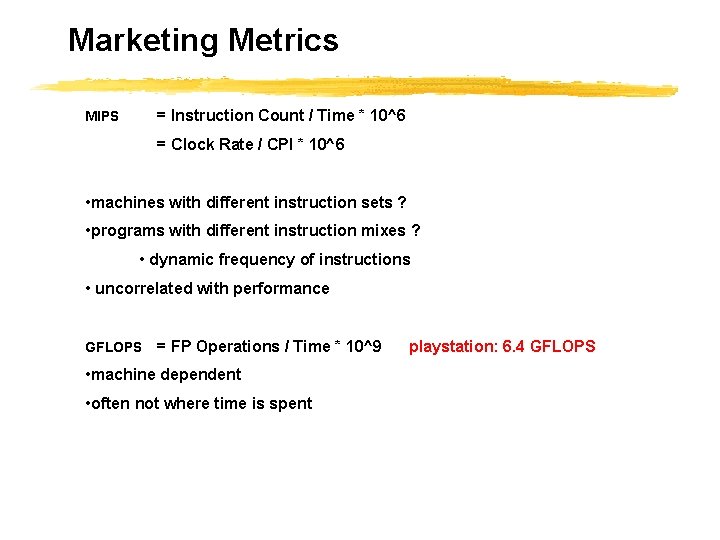

Marketing Metrics

$$\text{MIPS} = \frac{\text{Instruction count}}{\text{Time} \times 10^6} = \frac{\text{Clock rate}}{\text{CPI} \times 10^6}$$

- Machines with different instruction sets?
- Programs with different instruction mixes?
- Dynamic frequency of instructions
- Uncorrelated with performance

$$\text{GFLOPS} = \frac{\text{FP operations}}{\text{Time} \times 10^9}$$

- Machine dependent
- Often not where time is spent

PlayStation: 6.4 GFLOPS
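A small sketch shows why MIPS can mislead when machines have different instruction sets. The two machines and all their numbers are hypothetical; only the MIPS formulas come from the slide.

```python
def mips(instruction_count: float, seconds: float) -> float:
    """MIPS = instruction count / (time * 10^6)."""
    return instruction_count / (seconds * 1e6)


def mips_from_clock(clock_rate_hz: float, cpi: float) -> float:
    """MIPS = clock rate / (CPI * 10^6)."""
    return clock_rate_hz / (cpi * 1e6)


# Hypothetical: the same program compiled for two different ISAs.
# Machine X needs fewer, more complex instructions than machine Y.
x_instructions, x_seconds = 1.0e9, 4.0
y_instructions, y_seconds = 1.5e9, 5.0

print(f"X: {mips(x_instructions, x_seconds):.0f} MIPS in {x_seconds} s")
print(f"Y: {mips(y_instructions, y_seconds):.0f} MIPS in {y_seconds} s")
```

In this made-up case, machine Y reports the higher MIPS rating yet takes longer to finish the program, which is why execution time remains the measure to trust.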

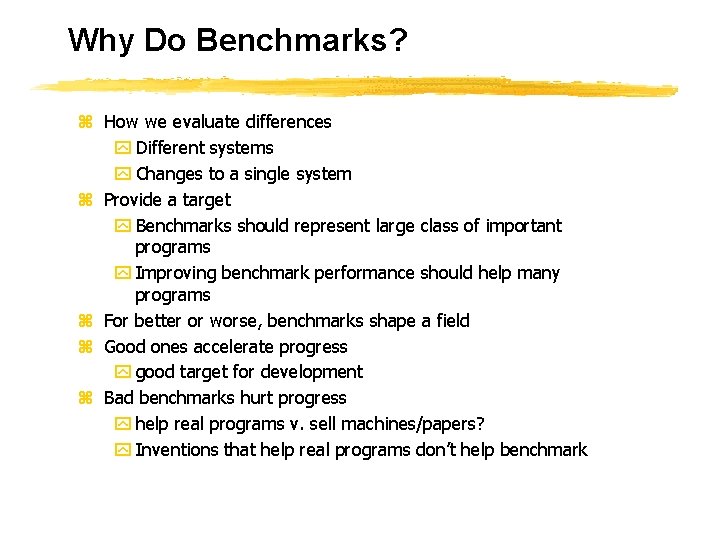

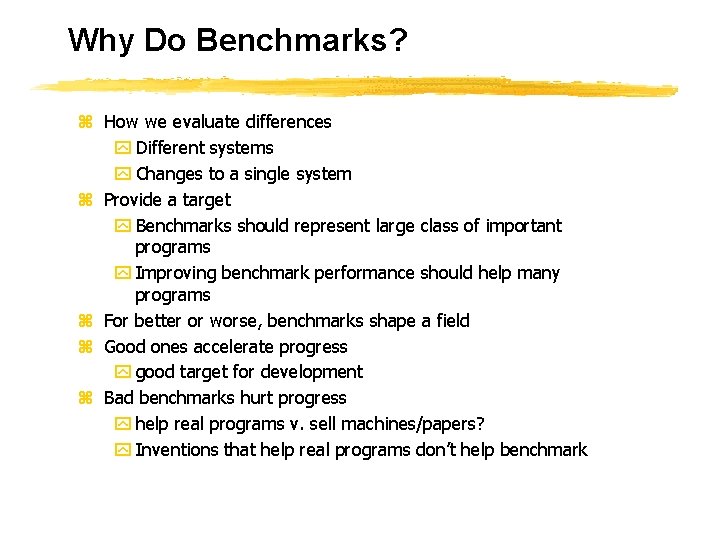

Why Do Benchmarks?

- How we evaluate differences
  - Different systems
  - Changes to a single system
- Provide a target
  - Benchmarks should represent a large class of important programs
  - Improving benchmark performance should help many programs
- For better or worse, benchmarks shape a field
- Good ones accelerate progress
  - Good target for development
- Bad benchmarks hurt progress
  - Help real programs v. sell machines/papers?
  - Inventions that help real programs don’t help the benchmark

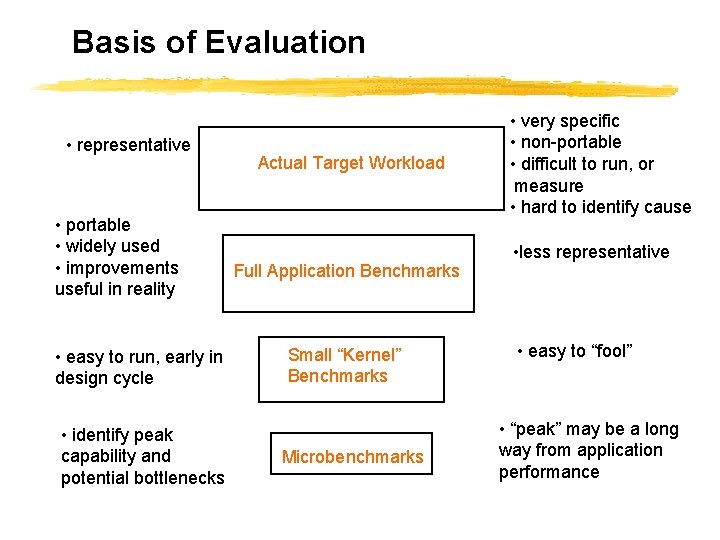

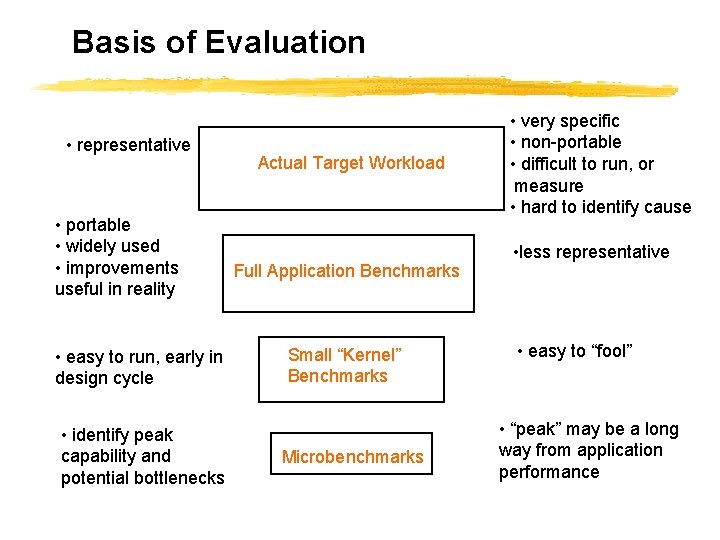

Basis of Evaluation

| Benchmark type | Pros | Cons |
|---|---|---|
| Actual target workload | Representative | Very specific; non-portable; difficult to run or measure; hard to identify cause |
| Full application benchmarks | Portable; widely used; improvements useful in reality | Less representative |
| Small “kernel” benchmarks | Easy to run, early in design cycle | Easy to “fool” |
| Microbenchmarks | Identify peak capability and potential bottlenecks | “Peak” may be a long way from application performance |

Successful Benchmark: SPEC

- 1987: RISC industry mired in “bench marketing” (“That is an 8 MIPS machine, but they claim 10 MIPS!”)
- EE Times + 5 companies band together to form the Systems Performance Evaluation Committee (SPEC) in 1988: Sun, MIPS, HP, Apollo, DEC
- Create a standard list of programs, inputs, and reporting: some real programs, includes OS calls, some I/O

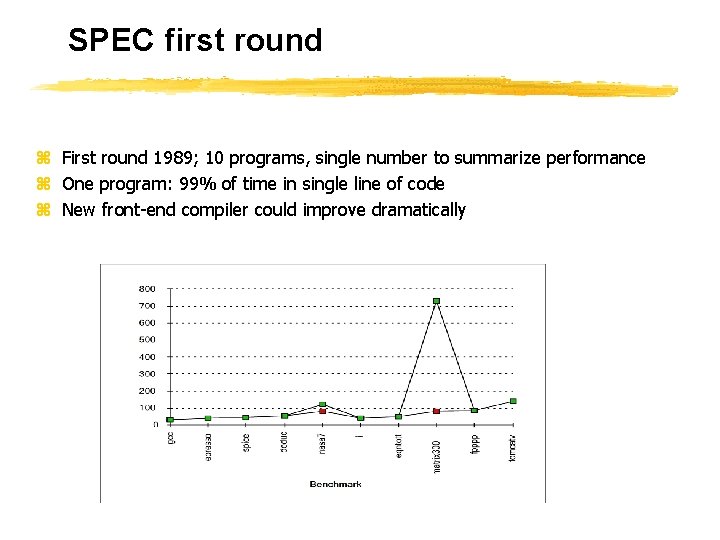

SPEC first round

- First round 1989: 10 programs, single number to summarize performance
- One program: 99% of time in a single line of code
- A new compiler front end could improve that result dramatically

SPEC 95

- Eighteen application benchmarks (with inputs) reflecting a technical computing workload
- Eight integer
  - go, m88ksim, gcc, compress, li, ijpeg, perl, vortex
- Ten floating-point intensive
  - tomcatv, swim, su2cor, hydro2d, mgrid, applu, turb3d, apsi, fpppp, wave5
- Must run with standard compiler flags
  - Eliminates special undocumented incantations that may not even generate working code for real programs

Summary

$$\text{CPU time} = \frac{\text{Seconds}}{\text{Program}} = \frac{\text{Instructions}}{\text{Program}} \times \frac{\text{Cycles}}{\text{Instruction}} \times \frac{\text{Seconds}}{\text{Cycle}}$$

- Time is the measure of computer performance!
- Good products are created when we have:
  - Good benchmarks
  - Good ways to summarize performance
- Without good benchmarks and summaries, the choice is between improving the product for real programs and improving it to get more sales; sales almost always wins
- Remember Amdahl’s Law: speedup is limited by the unimproved part of the program

Readings & More… Reminder: READ: TEXTBOOK: Chapter 1 pages 1 to 47 Moore paper (posted on course web site).