CDPFS: A Centralized Dynamic Parallel Flow Scheduling Algorithm


CDPFS: A Centralized Dynamic Parallel Flow Scheduling Algorithm to Improve Load Balancing on BCube Topology for Data Center Network. Wei-Kang Chung (1) and Sun-Yuan Hsieh (1, 2). (1) Department of Computer Science and Information Engineering, (2) Institute of Medical Informatics, National Cheng Kung University, No. 1, University Road, Tainan 701, Taiwan. Presenter: Wei-Kang Chung (鍾煒康). Advisor: Sun-Yuan Hsieh (謝孫源).

Outline: Introduction; Related Work; The BCube Structure; BCube Source Routing; The Proposed Algorithm (SP and NSP Graph, CDPFS for Single-Path, CDPFSMP for Multi-Path); Simulation Architecture and Results; Conclusion

Introduction (1/2). A data center is a pool of resources (computational, storage) interconnected by a communication network. The Data Center Network (DCN) interconnects all of the data center resources and needs to be scalable and efficient enough to connect tens or even hundreds of thousands of servers, to handle the growing demands of cloud computing (MapReduce, big-data applications). The links in the network core suffer significant bandwidth oversubscription. Significant research has been done on designing data center network topologies to improve the performance of data centers.

Introduction (2/2). A favorable data center network architecture offers high scalability, efficient link utilization, and high fault tolerance. A data traffic flow (network flow) is a sequence of packets that share common values in a subset of their header fields, e.g., the 5-tuple (src IP, dst IP, src port, dst port, protocol); 90% of the flows on DCNs use TCP. Routing algorithms on DCNs can be designed as centralized vs. distributed, static vs. adaptive, or hybrid.

Types of Data Center Network Topologies: fixed topologies, either tree-based (three-tier tree; fat tree, e.g. PortLand and Hedera; Clos network, e.g. VL2) or recursive (DCell, FiConn, MDCube, BCube); and flexible topologies, either fully optical (OSA) or hybrid (c-Through, Helios).

The BCube Structure

The BCube Addressing

The BCube(4, 1) Structure [figure: level-0 switches <0, 0> to <0, 3> each connect one BCube_0 (servers 00 to 03, 10 to 13, 20 to 23, 30 to 33); level-1 switches <1, 0> to <1, 3> connect the BCube_0 units]. Connecting rule: the i-th server in the j-th BCube_{k-1} connects to the j-th port of the i-th level-k switch.
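A minimal sketch of the connecting rule, assuming addresses are digit strings a_k ... a_0 with the leftmost character as the highest level, and n ≤ 10 so each digit is one character. The names `bcube_servers` and `level_switch` are hypothetical. A server's level-i switch is identified by the address with digit a_i removed, and the port is a_i itself; for k = 1 this gives the rule above.

```python
def bcube_servers(n, k):
    """All n**(k+1) server addresses of BCube(n, k), as digit strings."""
    addrs = [""]
    for _ in range(k + 1):
        addrs = [a + str(d) for a in addrs for d in range(n)]
    return addrs


def level_switch(server, level):
    """Return ((level, switch_id), port) for the server's level-`level` link.

    switch_id is the address with digit a_level removed; the port number is
    the removed digit (assumes one character per digit, i.e. n <= 10).
    """
    pos = len(server) - 1 - level          # string index of digit a_level
    switch_id = server[:pos] + server[pos + 1:]
    return (level, switch_id), int(server[pos])


# Server 23 is server 3 of BCube_0 number 2: it sits on switch <0, 2>
# (port 3) and on port 2 of level-1 switch <1, 3>.
print(level_switch("23", 0))  # ((0, '2'), 3)
print(level_switch("23", 1))  # ((1, '3'), 2)
```

Filtering `bcube_servers(4, 1)` by `level_switch(s, 1)[0] == (1, "3")` yields servers 03, 13, 23, 33, the same members as switch <1, 3>'s MAC table on the forwarding slide.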

The BCube(4, 2) Structure [figure: level-2 switches <2, 00>, <2, 01>, <2, 02>, <2, 03>, …, <2, 33> connect four BCube_1 units]

The BSR (BCube Source Routing) Algorithm for BCube(4, 3): correct the digits sequentially [figure: iterations 1 to 5]. Shift right; choose an available neighbor on level 2; at the last iteration, correct the neighbor's value to the destination value on level 2.
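The digit-correction idea can be sketched as follows. This is a hedged illustration, not the authors' code: `bcube_route` and `rotated_order` are hypothetical names, levels are numbered from the rightmost digit, and treating "shifting right" as rotating the level order to `[i, i-1, ..., 0, k, ..., i+1]` is our reading (it follows the permutation rotation used by BCube's BuildPathSet). The detour case on a level whose digit already matches the destination is not covered.

```python
def bcube_route(src, dst, order=None):
    """Servers visited from src to dst, correcting one differing digit per hop.

    `order` lists digit levels (0 = rightmost digit) in correction order; by
    default the differing levels are corrected from the highest level down.
    """
    width = len(src)
    if order is None:
        order = range(width - 1, -1, -1)
    cur, path = list(src), [src]
    for lvl in order:
        pos = width - 1 - lvl                  # string index of digit a_lvl
        if cur[pos] != dst[pos]:
            cur[pos] = dst[pos]                # one hop through a level-lvl switch
            path.append("".join(cur))
    return path


def rotated_order(i, k):
    """Level order [i, i-1, ..., 0, k, k-1, ..., i+1]: [k, ..., 0] rotated."""
    return [(i - j) % (k + 1) for j in range(k + 1)]


# One path per differing level from 1211 to 3222 (levels 3, 1 and 0 differ).
for i in (3, 1, 0):
    print(bcube_route("1211", "3222", rotated_order(i, 3)))
```

For this pair the three paths have no intermediate server in common, which is the property that makes rotated orders useful for building parallel paths.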

Forwarding with BCube Header [figure: in BCube(4, 1), a packet from source server 20 to destination server 03 goes through switch <0, 2> to server 23, then through switch <1, 3> to server 03. Switch <0, 2>'s MAC table holds MAC 20, 21, 22, 23 and switch <1, 3>'s holds MAC 03, 13, 23, 33. The BCube header (src 20, dst 03) stays fixed while the MAC addresses change per hop: MAC 20 to MAC 23, then MAC 23 to MAC 03.]

Load Balancing Issue: load balancing aims to optimize link utilization, maximize throughput, minimize transmission time, and avoid overloading any single resource. [figure: usage count per link ID compared with the optimal distribution]

Our Load Balancing Approach: for each flow, 1. build the SP and NSP graphs (Algorithm 1); 2. run CDPFS or CDPFSMP (Algorithm 2, or Algorithms 4 and 5), using the LDF method on the global congestion values of links (greedy strategy); 3. run Two_Decision_Find_Path (Algorithm 3) on the actual congestion values of links. [figure: number of flows per link ID against the link threshold (capacity), with an overestimated region marked]

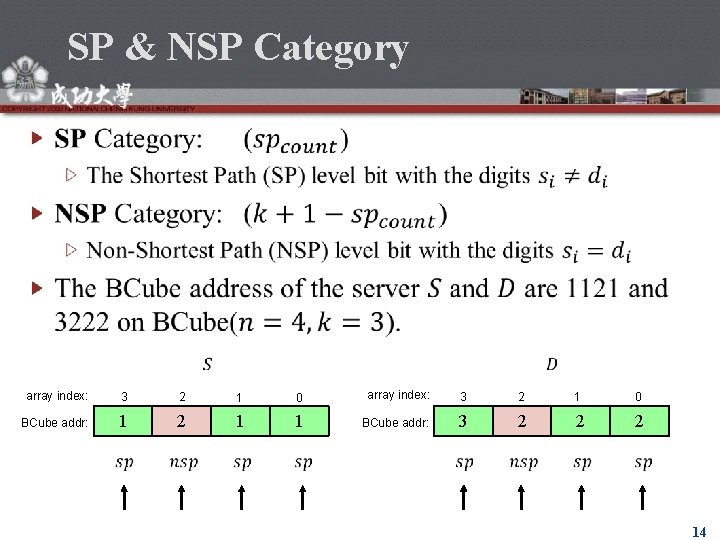

SP & NSP Category [figure: two BCube addresses, 1211 and 3222, with digits shown by array index 3, 2, 1, 0]

Algo 1: Create SP Path Set, based on the permutation algorithm of B. R. Heap, "Permutations by interchanges," Comput. J., vol. 6, pp. 293-294, 1963.
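A minimal sketch of how Heap's algorithm can enumerate shortest paths: each ordering of the h differing digit levels gives one shortest path of h hops, so there are h! of them. Taking their union as the SP graph is our reading of the slides, and the names `heap_permutations` and `shortest_paths` are hypothetical.

```python
def heap_permutations(items):
    """All permutations of `items`, generated by Heap's algorithm (1963)."""
    a = list(items)
    out = []

    def generate(m):
        if m <= 1:
            out.append(tuple(a))
            return
        generate(m - 1)
        for i in range(m - 1):
            # Even m: swap the i-th and last element; odd m: swap first and last.
            if m % 2 == 0:
                a[i], a[m - 1] = a[m - 1], a[i]
            else:
                a[0], a[m - 1] = a[m - 1], a[0]
            generate(m - 1)

    generate(len(a))
    return out


def shortest_paths(src, dst):
    """One shortest path per ordering of the differing digit levels."""
    width = len(src)
    diff = [lvl for lvl in range(width)
            if src[width - 1 - lvl] != dst[width - 1 - lvl]]
    paths = []
    for order in heap_permutations(diff):
        cur, path = list(src), [src]
        for lvl in order:                      # fix one digit per hop
            cur[width - 1 - lvl] = dst[width - 1 - lvl]
            path.append("".join(cur))
        paths.append(path)
    return paths


print(len(shortest_paths("1211", "3222")))  # 6, since levels 3, 1 and 0 differ
```

Heap's algorithm produces each new permutation with a single swap, which keeps the enumeration cheap when h grows.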

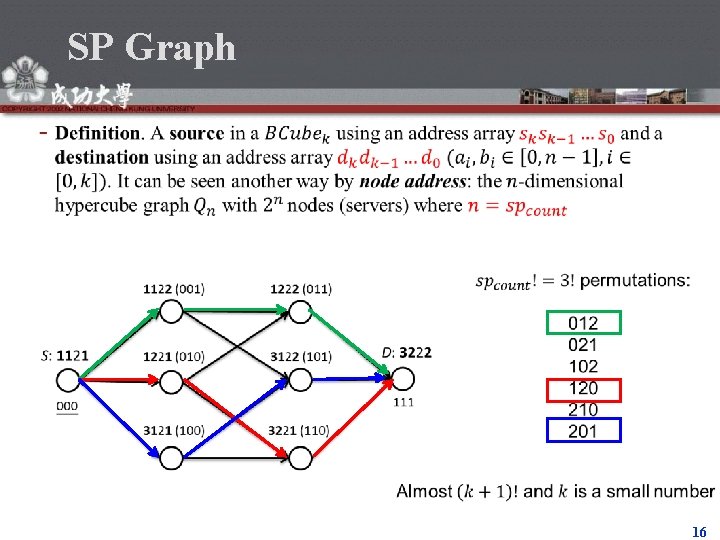

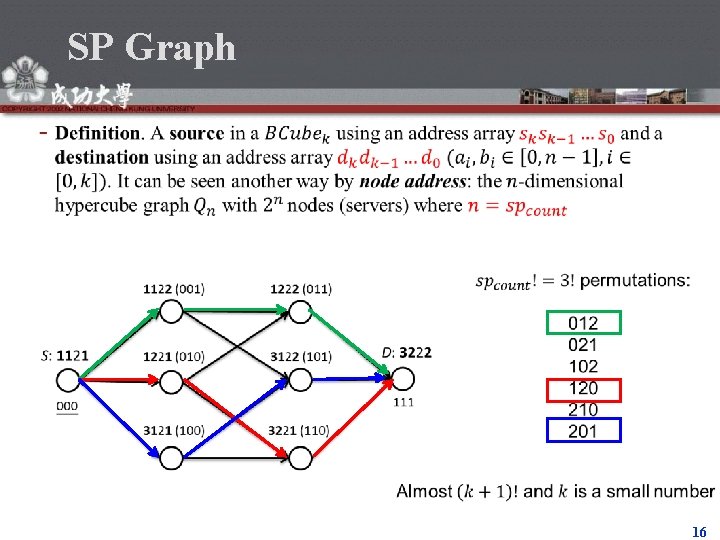

SP Graph

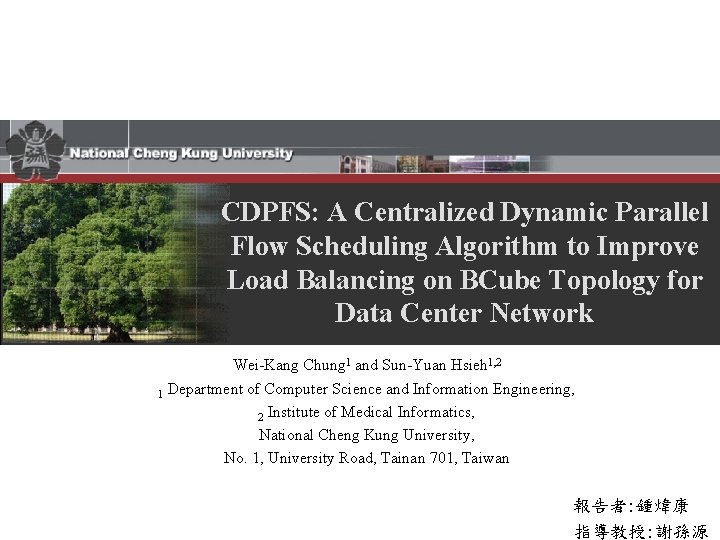

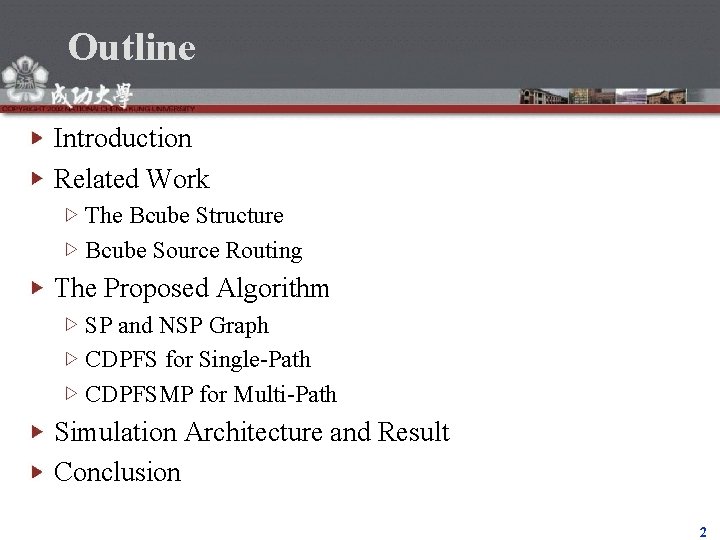

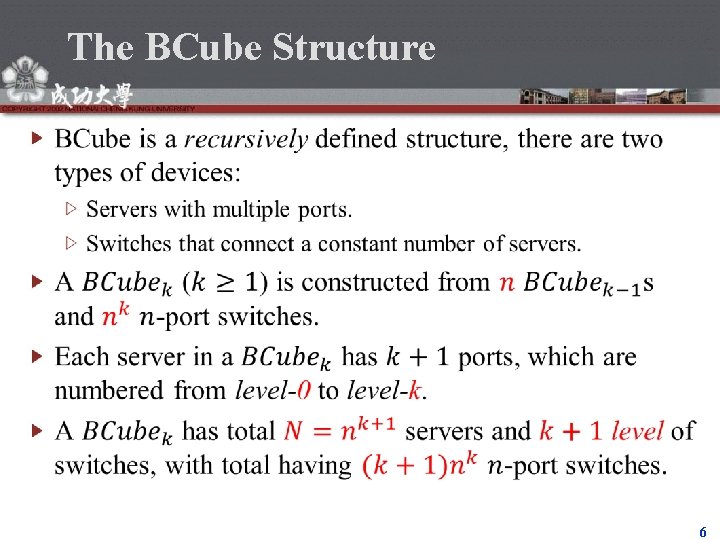

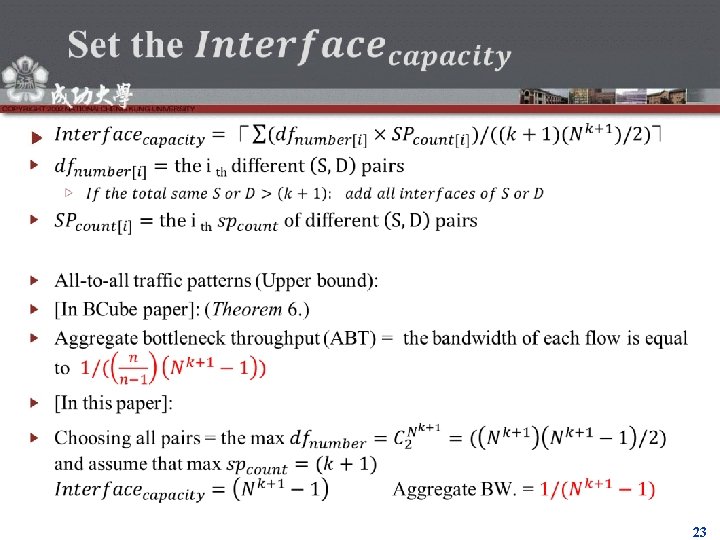

![NSP Graph](https://slidetodoc.com/presentation_image_h/b70aaacc1ee647b5dfbd4a56be6cf385/image-17.jpg)

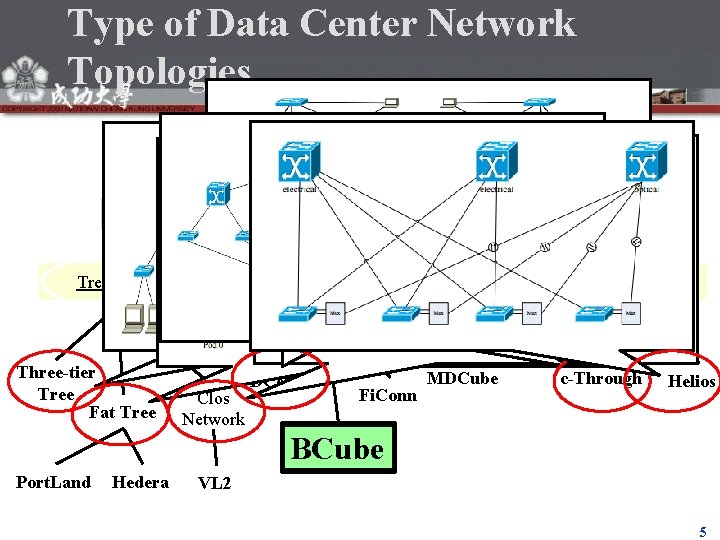

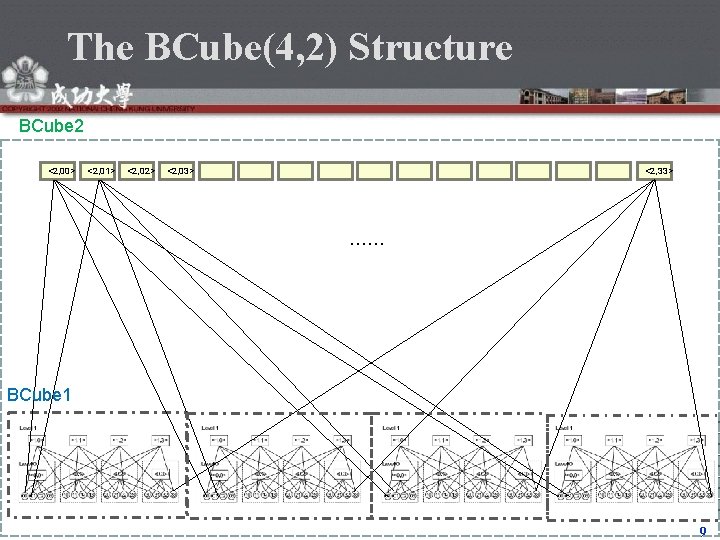

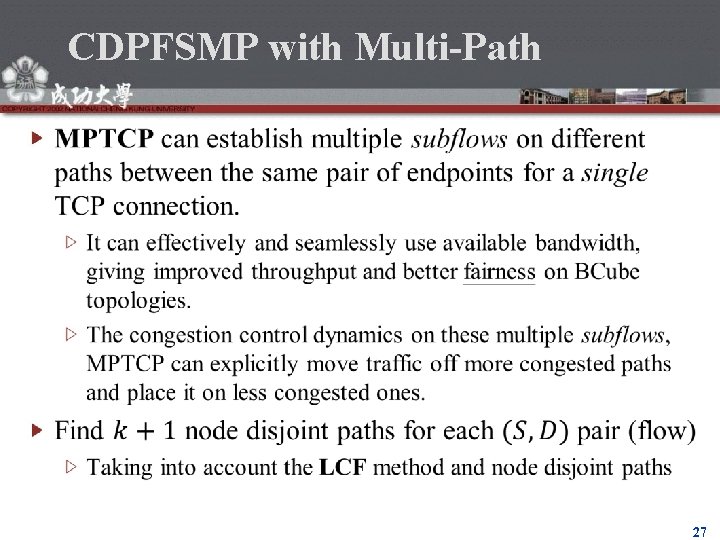

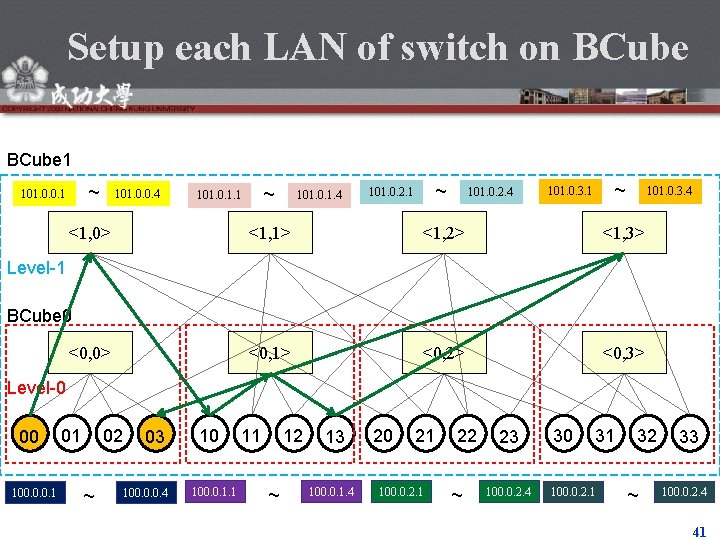

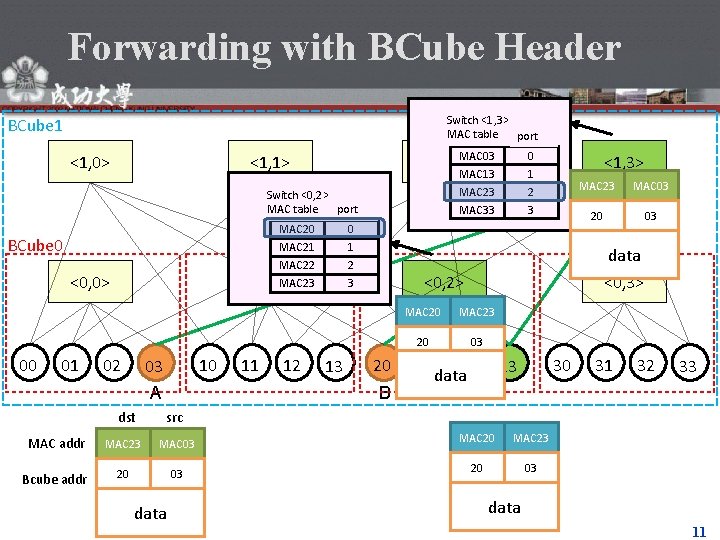

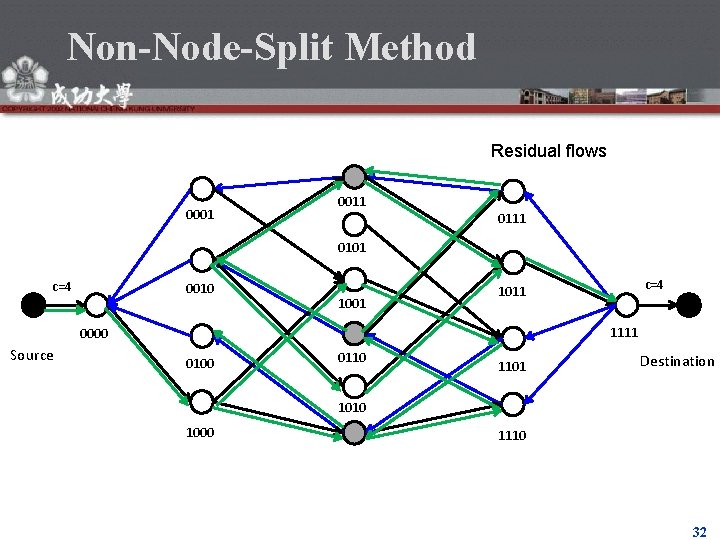

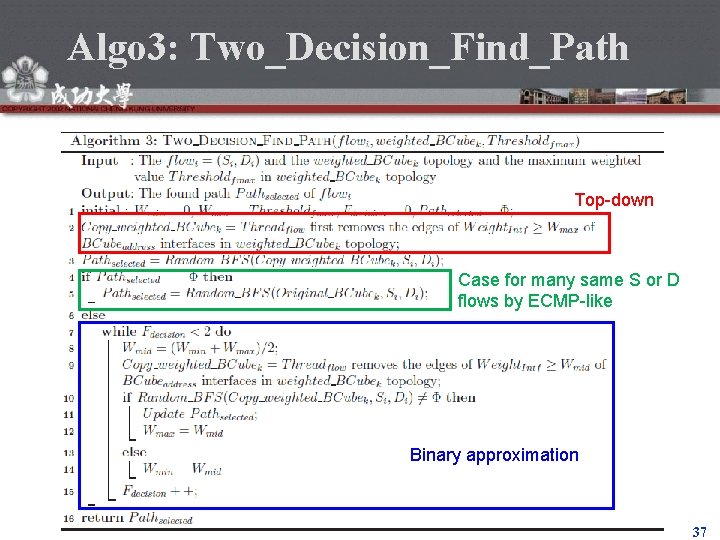

NSP Graph [figure: nodes with address values [0..n-1]: 1101, 1102, 1201, 3101, 3202, 1111, 1131, 1122, 1221, 3122, 3121, 3221, 1132, 1231, 3131, 3232]

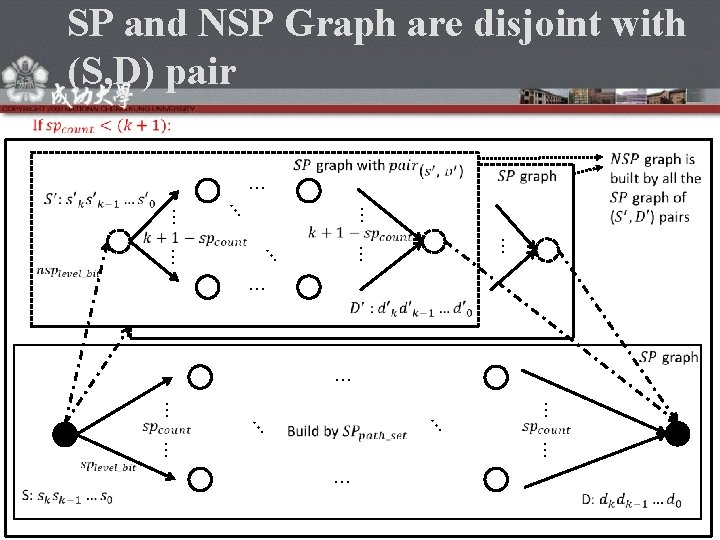

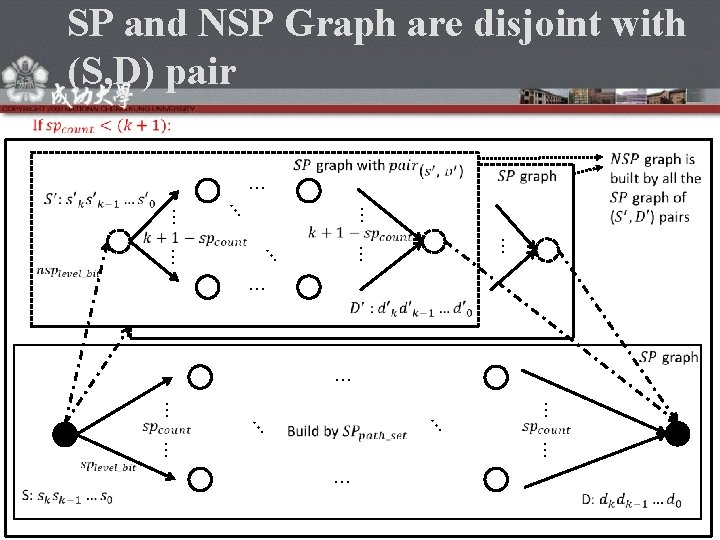

For a given (S, D) pair, the SP and NSP graphs are disjoint.

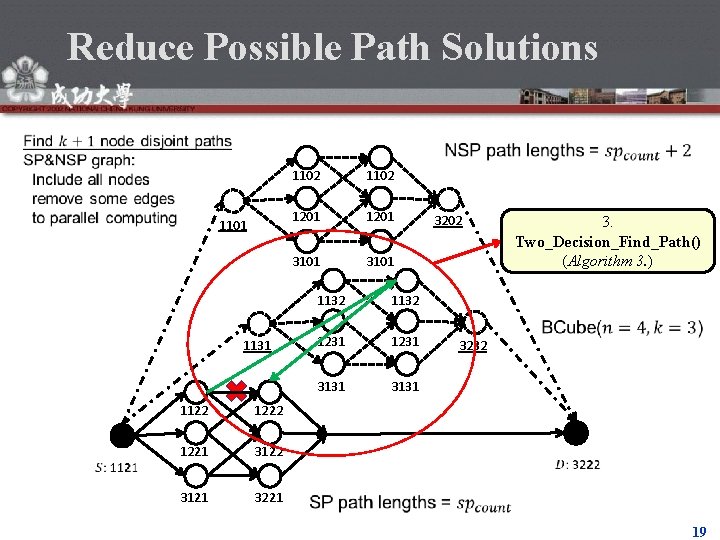

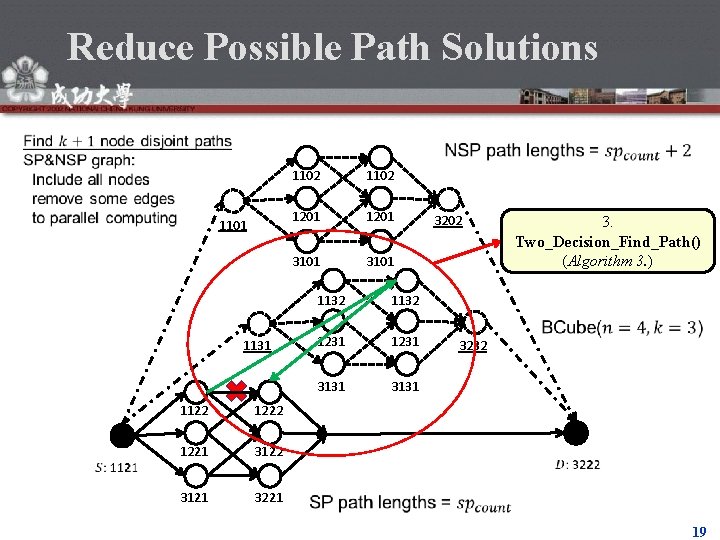

Reduce Possible Path Solutions [figure: candidate nodes 1101, 1102, 1201, 3101, 1131, 1122, 1221, 3122, 3121, 3221, 1132, 1231, 3131, 3202, 3232], followed by step 3, Two_Decision_Find_Path() (Algorithm 3)

The First, Intermediate and Last Forwarders [figure: servers with 4-digit binary addresses 0000 to 1111 between the source and the destination, grouped into the first forwarders, the intermediate forwarders, and the last forwarders]

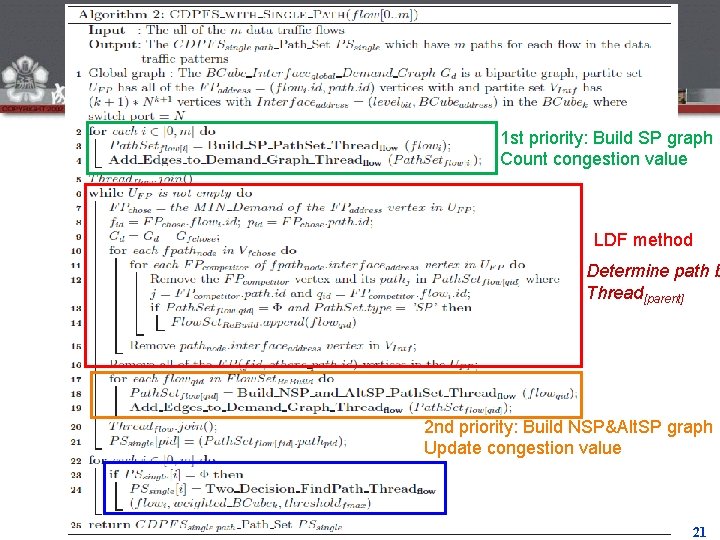

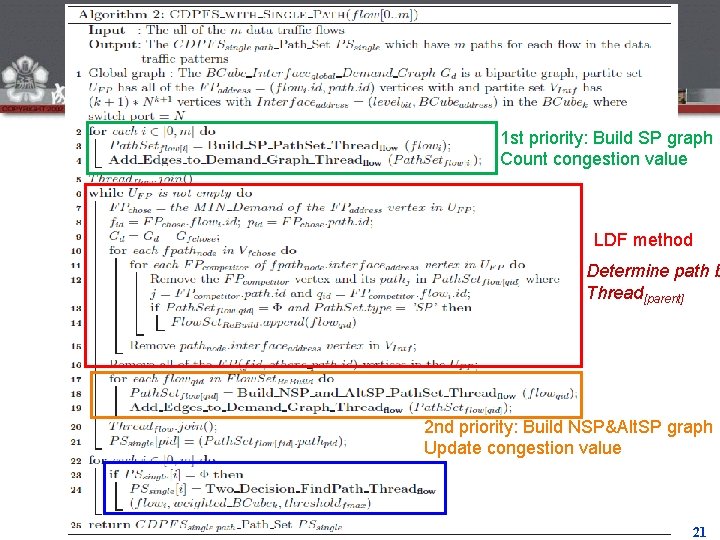

Algo 2: 1st priority: build the SP graph, count congestion values, apply the LDF method, and determine the path by Thread[parent]. 2nd priority: build the NSP and alternative SP graphs, and update congestion values.

Mapping the links of a path to interface addresses [figure: example path drawn over 4-digit binary server addresses]


Least Demand First (LDF) method

Two cases for the LDF method
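The slides name the ingredients of LDF (a global congestion value per link, a greedy choice, then a congestion update) but not the exact rule, so the following is only a sketch under stated assumptions. We assume (1) the global congestion of a link counts every candidate path of every flow that uses it, which overestimates the final load; (2) "least demand" means the candidate with the lowest bottleneck congestion, ties broken by total congestion; and (3) once a flow is fixed, its unchosen candidates are withdrawn. `ldf_schedule` is a hypothetical name.

```python
from collections import Counter


def ldf_schedule(candidates):
    """Pick one path per flow, greedily, from global link congestion values.

    candidates: dict mapping a flow id to a list of candidate paths; each
    path is a non-empty tuple of link ids. Returns flow id -> chosen path.
    """
    # Assumed global congestion value: each candidate path of each flow adds 1
    # to every link it uses (an overestimate of the final link load).
    congestion = Counter(link for paths in candidates.values()
                         for path in paths for link in path)
    chosen = {}
    for flow, paths in candidates.items():
        # Assumed "least demand": lowest bottleneck congestion, then lowest total.
        best = min(range(len(paths)),
                   key=lambda i: (max(congestion[link] for link in paths[i]),
                                  sum(congestion[link] for link in paths[i])))
        # The flow is now fixed, so withdraw its unchosen candidates.
        for i, path in enumerate(paths):
            if i != best:
                congestion.subtract(path)
        chosen[flow] = paths[best]
    return chosen


flows = {"f1": [(1, 2), (3, 4)], "f2": [(1, 3), (5, 6)]}
print(ldf_schedule(flows))  # {'f1': (1, 2), 'f2': (5, 6)}
```

After f1 takes links 1 and 2, link 1 still carries a congestion value of 2, so f2 avoids it and the two flows end up on disjoint links.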

The example of CDPFS [figure: pairs (2, 4), (3, 6), (8, 10), (1, 7), (4, 5), (6, 8), (1, 6), (2, 7), (3, 4), (1, 9), (3, 5), (2, 5), (4, 10), and (1, 8), scheduled over IDs numbered 1 to 10]

CDPFSMP with Multi-Path

Algo 4: build the SP and NSP graphs, count congestion values, apply the bottom-up LCF method, and determine the path by Thread[child].

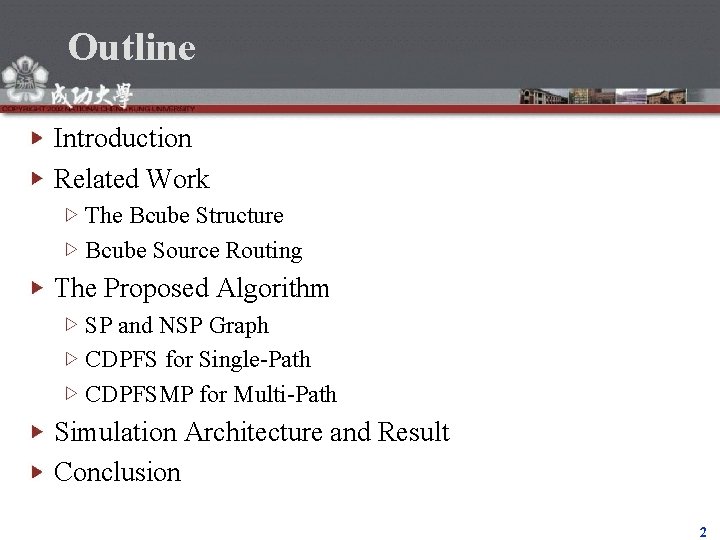

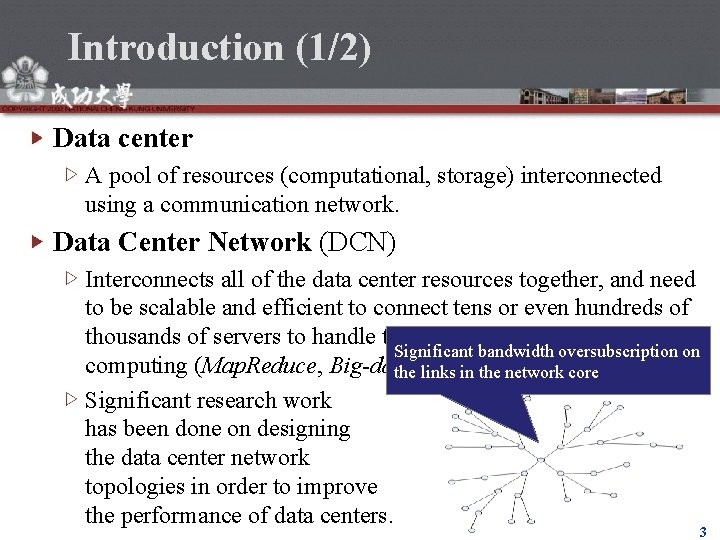

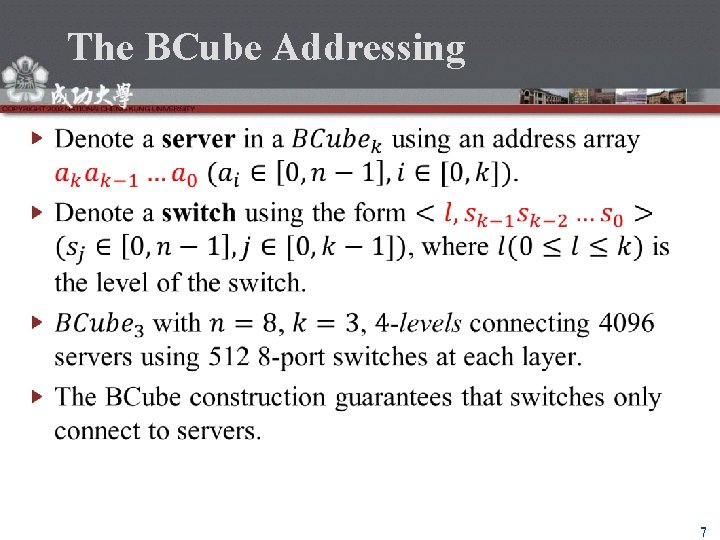

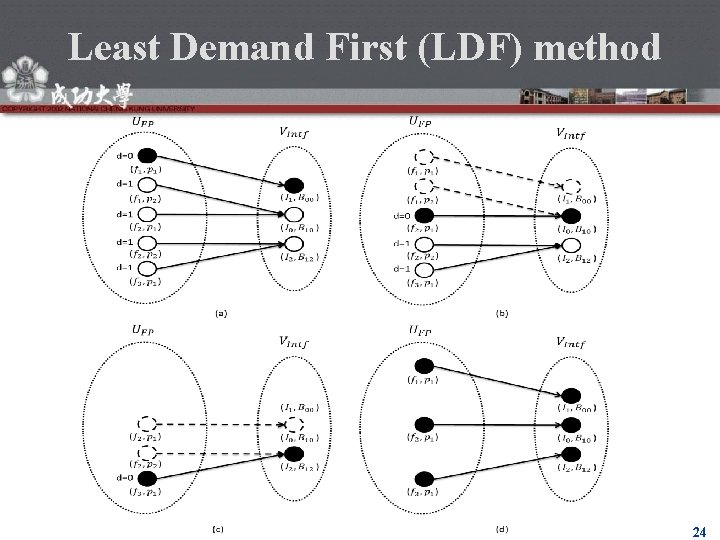

![Algo 5: Build Flow[i]Network by W-bounded non-node-split MF method](https://slidetodoc.com/presentation_image_h/b70aaacc1ee647b5dfbd4a56be6cf385/image-29.jpg)

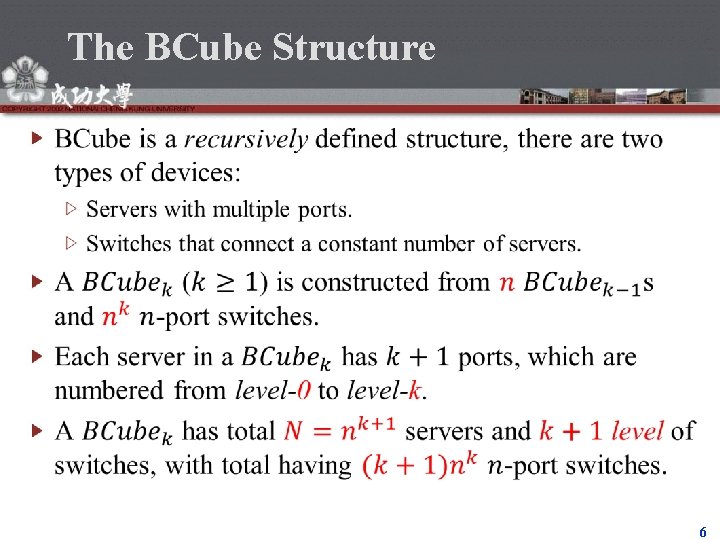

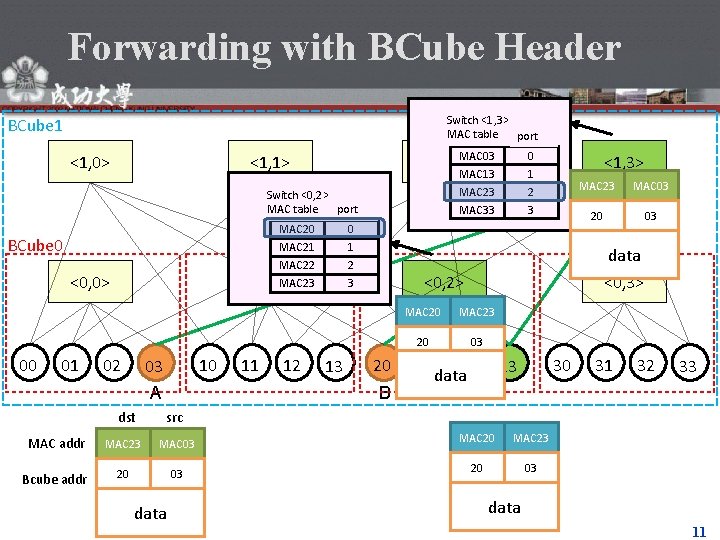

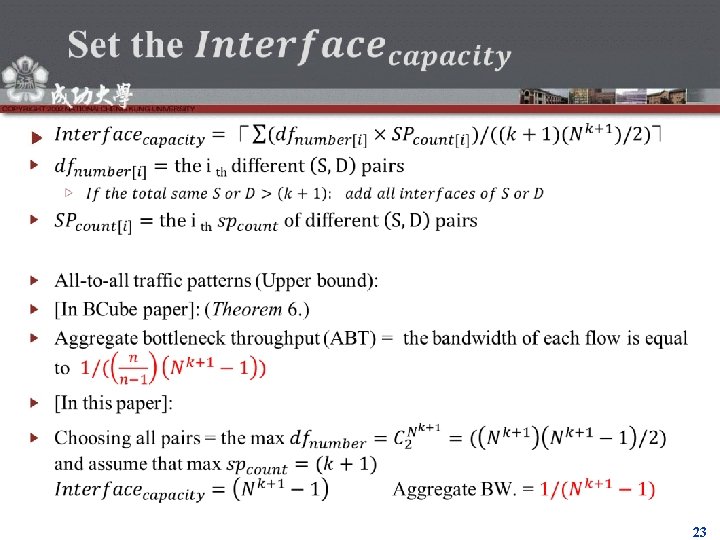

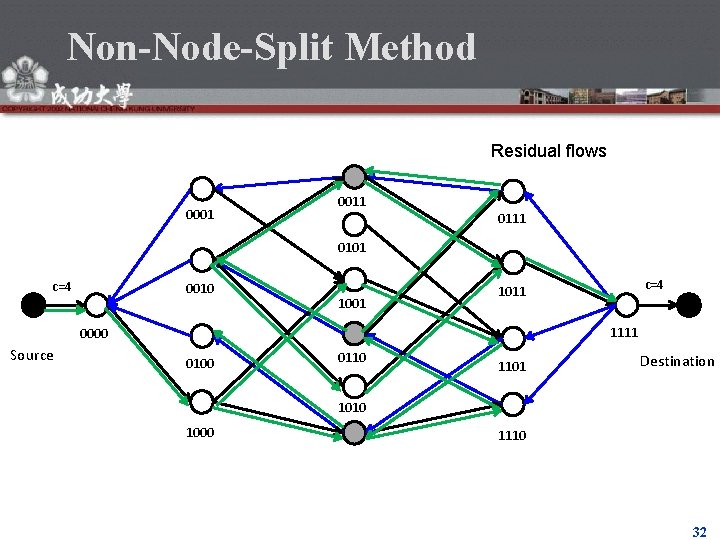

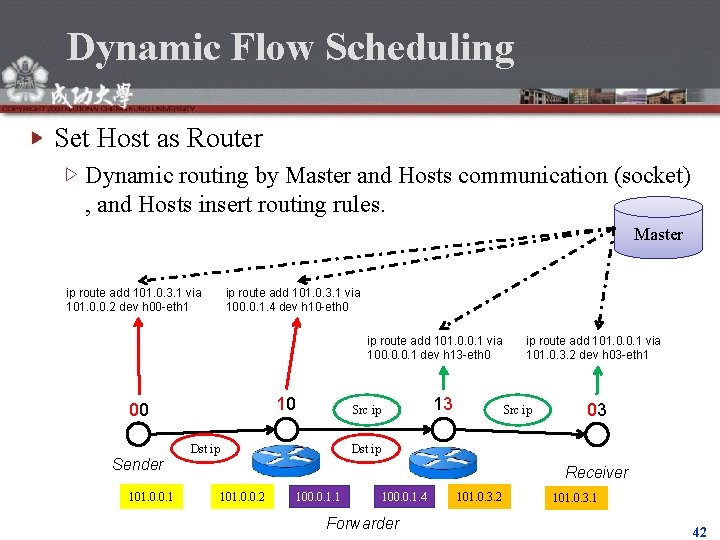

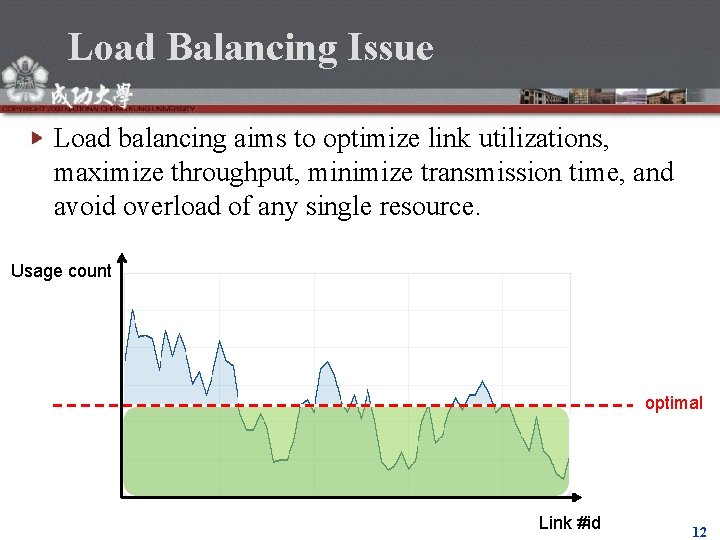

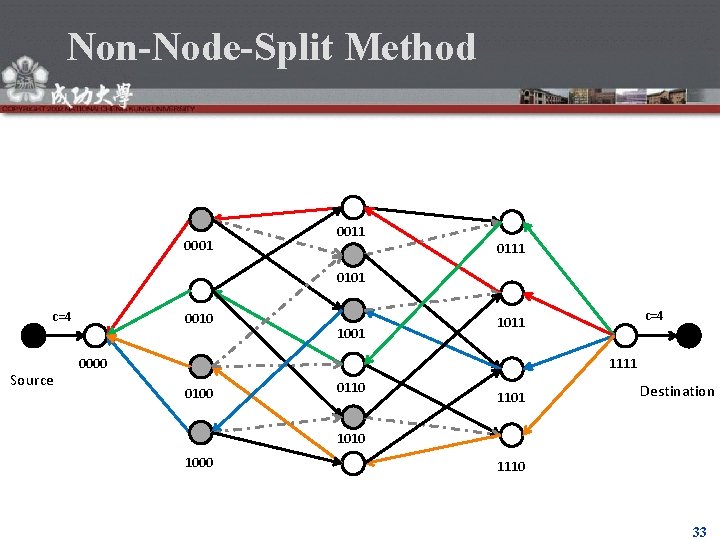

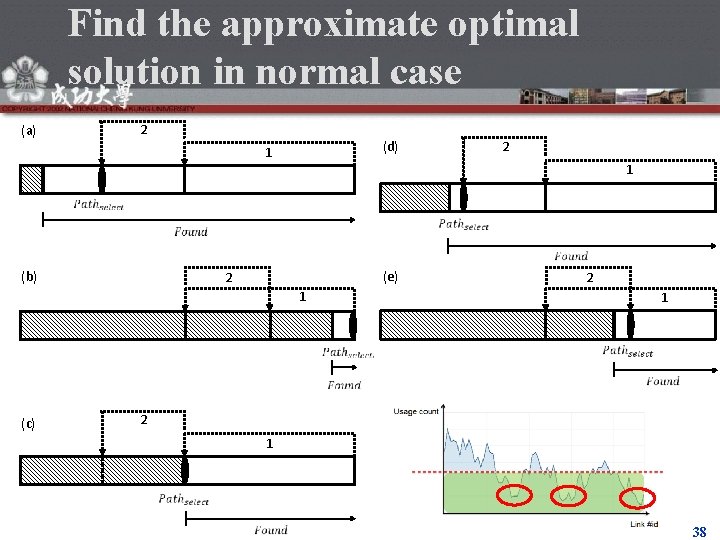

Algo 5: Build Flow[i]Network by the W-bounded non-node-split MF (max-flow) method, with one case for SP paths and one for NSP paths.

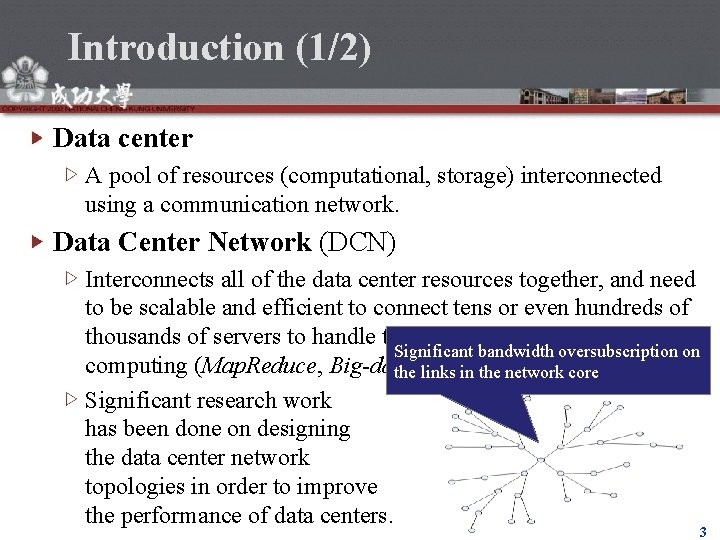

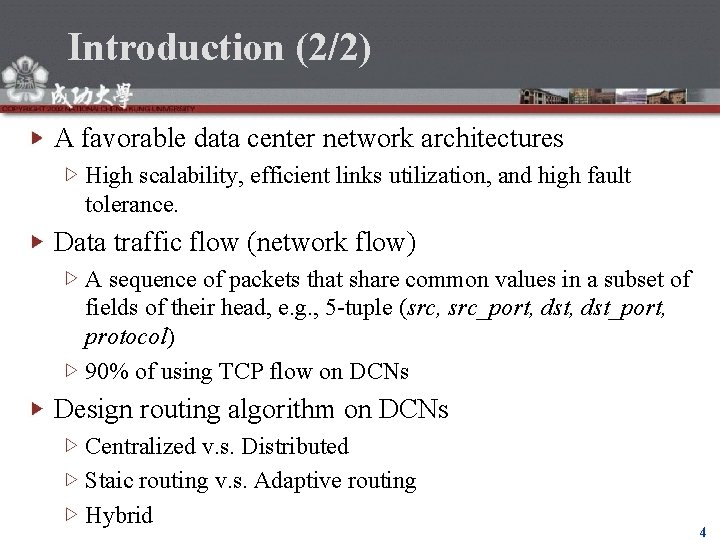

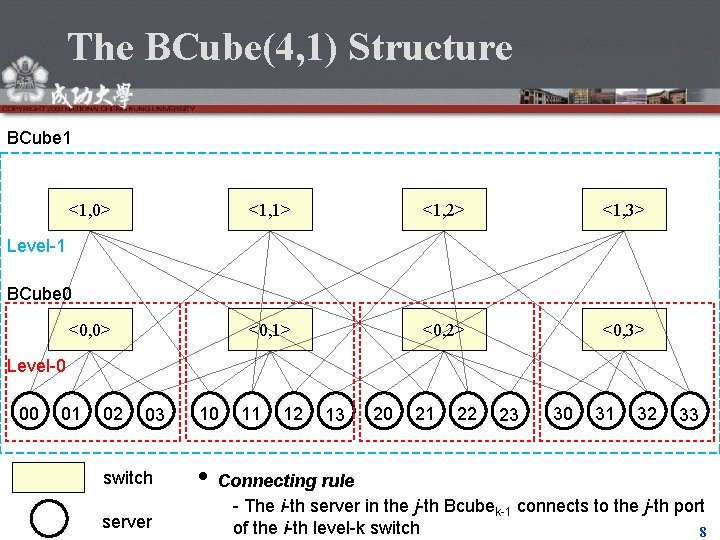

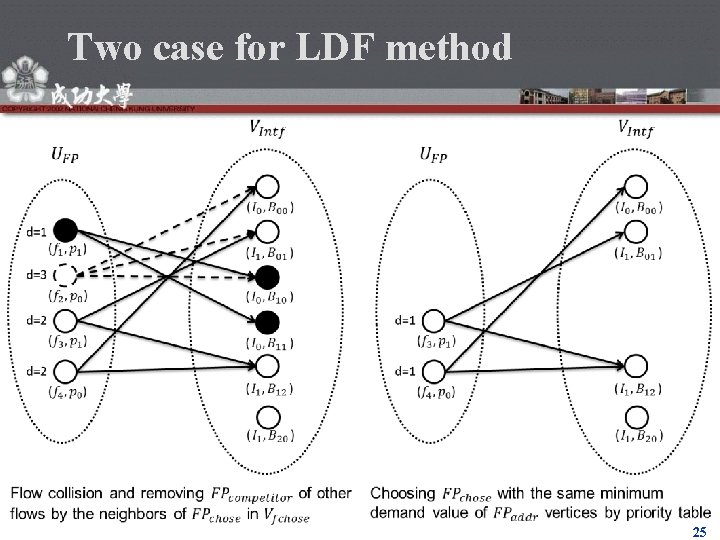

![Flow[i]Network: node-disjoint max flow](https://slidetodoc.com/presentation_image_h/b70aaacc1ee647b5dfbd4a56be6cf385/image-30.jpg)

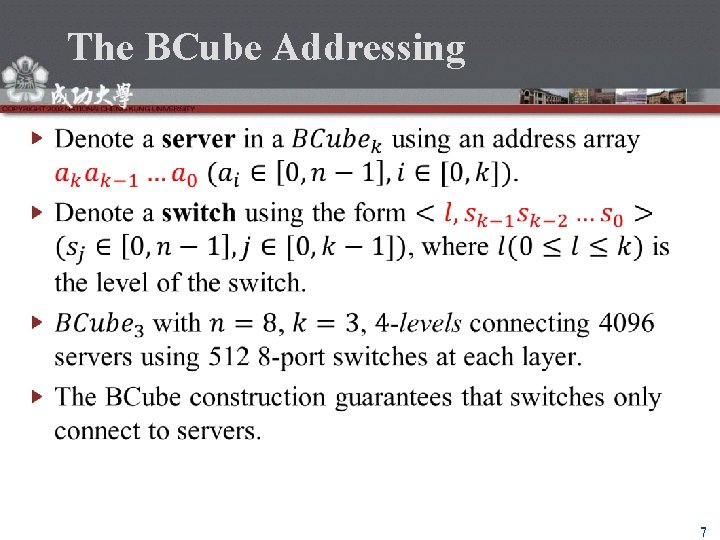

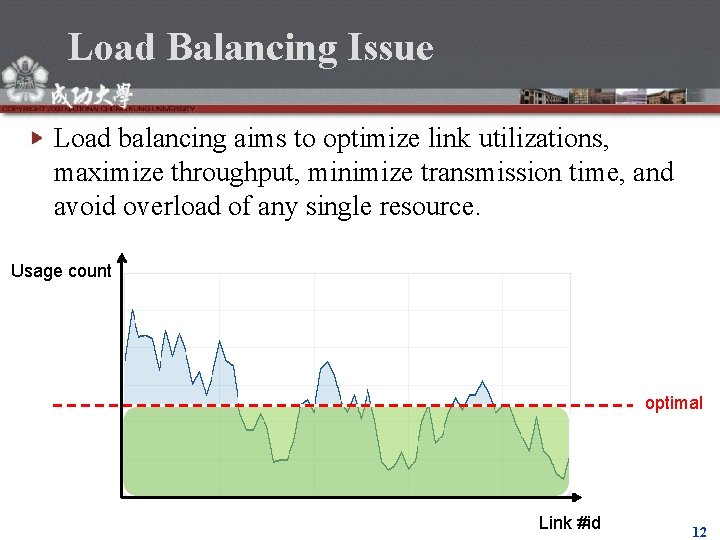

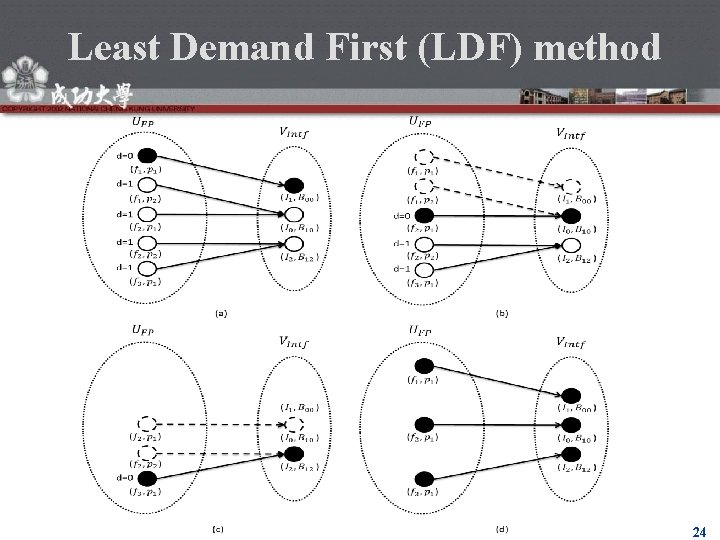

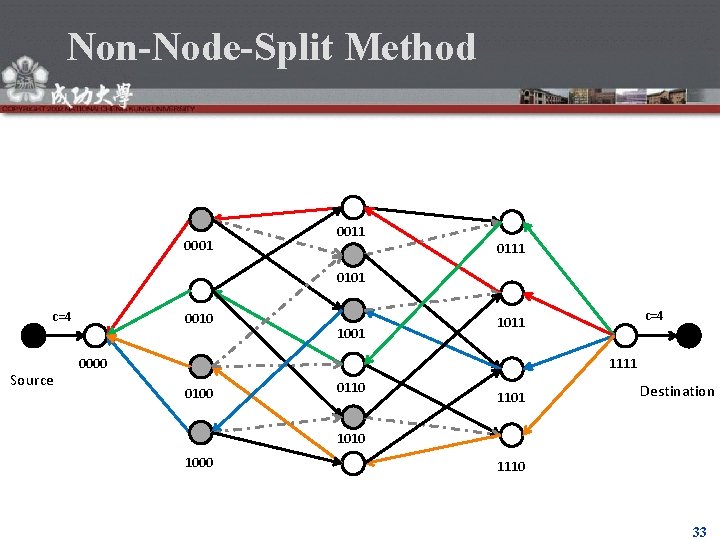

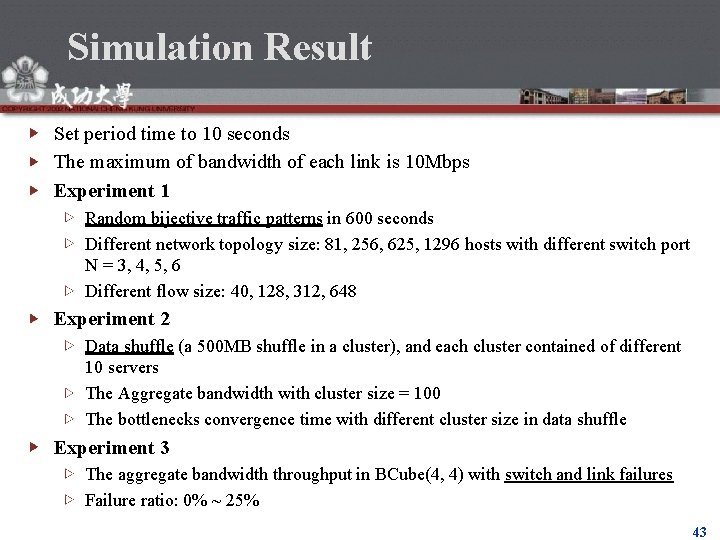

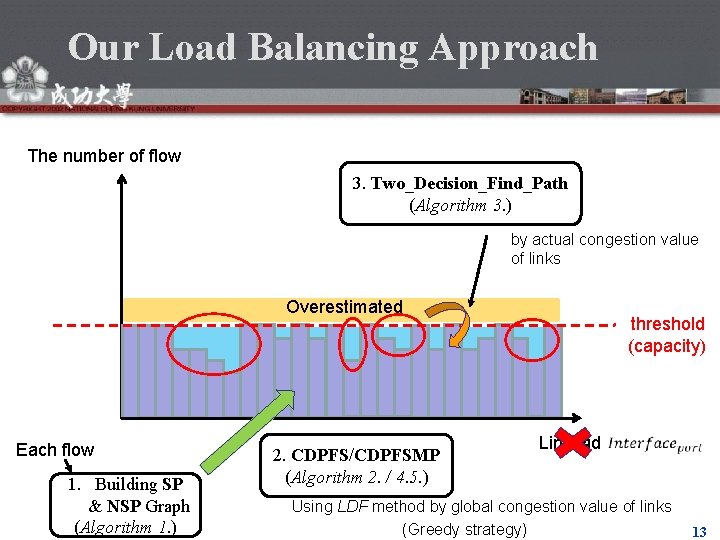

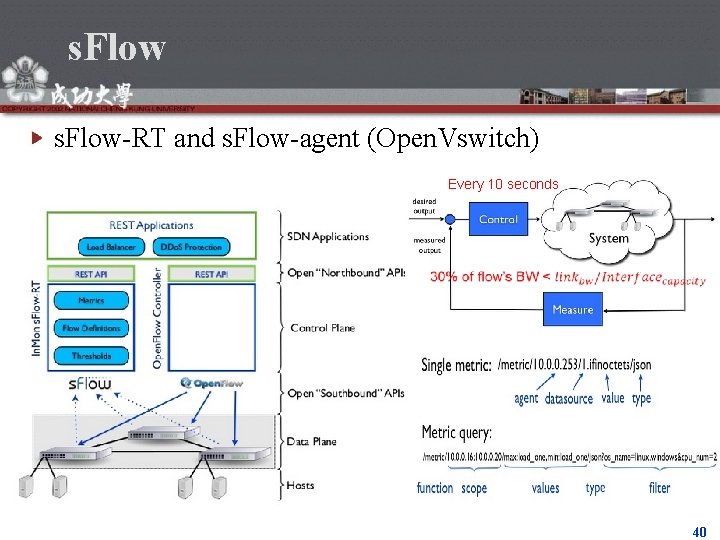

Flow[i]Network: a node-disjoint max-flow network in which s and t are the source and the sink, respectively, with c(v) = 1 for each v ∈ V and c(e) = 1 for each e ∈ E. [figure: example with nodes 1101, 1121, 3202, and 3222, with edges labeled c = 1 and c = 3]
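A minimal sketch of the node-disjoint max-flow model with c(v) = 1 and c(e) = 1, assuming a directed edge list. It uses the textbook construction (split every vertex other than s and t into an "in" and an "out" copy joined by a unit-capacity arc) with Edmonds-Karp BFS augmentation; it is the standard baseline, not the authors' W-bounded non-node-split method, and `node_disjoint_paths` is a hypothetical name.

```python
from collections import defaultdict, deque


def node_disjoint_paths(edges, s, t):
    """Max number of node-disjoint s-t paths in a directed graph.

    Every edge and every node other than s and t has capacity 1; node
    capacities are enforced by splitting v into (v, "in") and (v, "out").
    """
    cap = defaultdict(int)       # residual capacity of each split-graph arc
    adj = defaultdict(set)       # split-graph neighbours (both arc directions)

    def add_arc(u, v):
        cap[(u, v)] += 1
        adj[u].add(v)
        adj[v].add(u)            # reverse arc, residual capacity starts at 0

    def entry(v):
        return (v, "in")

    def exit_(v):
        return (v, "in") if v in (s, t) else (v, "out")

    for v in {x for edge in edges for x in edge} - {s, t}:
        add_arc(entry(v), exit_(v))          # c(v) = 1
    for u, v in edges:
        add_arc(exit_(u), entry(v))          # c(e) = 1

    source, sink = entry(s), entry(t)
    flow = 0
    while True:
        parent = {source: None}              # BFS tree (Edmonds-Karp)
        queue = deque([source])
        while queue and sink not in parent:
            u = queue.popleft()
            for v in adj[u]:
                if v not in parent and cap[(u, v)] > 0:
                    parent[v] = u
                    queue.append(v)
        if sink not in parent:
            return flow
        v = sink
        while parent[v] is not None:         # push one unit along the path
            u = parent[v]
            cap[(u, v)] -= 1
            cap[(v, u)] += 1
            v = u
        flow += 1


# Two edge-disjoint routes s-a-c-d-t and s-b-c-e-t exist, but both use node c.
bottleneck = [("s", "a"), ("s", "b"), ("a", "c"), ("b", "c"),
              ("c", "d"), ("c", "e"), ("d", "t"), ("e", "t")]
print(node_disjoint_paths(bottleneck, "s", "t"))  # 1
```

Splitting doubles the vertex count, which is the overhead a non-node-split search tries to avoid on large BCube graphs.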

Non-Node-Split Method: 1. We run the BFS over edges rather than vertices, so the same vertex may be visited repeatedly. 2. We maintain a table that records intermediate nodes together with their residual edge type. [figure: example network between the source and the destination, grouped into first, intermediate, and last forwarders, with two edges labeled c = 4]
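The two rules above can be illustrated by a search that keeps the original vertices and records, for each visit, which "side" of the vertex the search is on. This is a hedged sketch of one way to get node-disjoint paths without splitting vertices, assuming unit capacities and a directed edge list; the state encoding and the name `node_disjoint_paths_no_split` are ours, and the authors' table layout may differ. A vertex can be reached twice (once per side), matching the remark that the same vertex may be visited repeatedly.

```python
from collections import defaultdict, deque


def node_disjoint_paths_no_split(edges, s, t):
    """Count node-disjoint s-t paths with BFS states (vertex, side).

    side "in": we entered the vertex along an edge; side "out": we may leave
    it along an edge. A vertex already carrying flow can only be left from
    "in" backwards along its incoming flow edge, and "out" may step to "in".
    """
    out_adj = defaultdict(list)
    for u, v in edges:
        out_adj[u].append(v)
    flow_edges = set()                 # directed edges carrying one unit
    flow_in = defaultdict(set)         # flow_in[v] = tails of flow edges into v
    count = 0
    while True:
        start = (s, "out")
        parent = {start: None}         # table: state -> predecessor state
        queue = deque([start])
        goal = None
        while queue and goal is None:
            v, side = queue.popleft()
            moves = []
            if side == "in":
                if flow_in[v]:         # residual type "reverse": undo inflow
                    moves.extend((u, "out") for u in flow_in[v])
                else:                  # unused vertex: pass straight through
                    moves.append((v, "out"))
            else:
                moves.extend((x, "in") for x in out_adj[v]
                             if (v, x) not in flow_edges)
                if v != s and flow_in[v]:
                    moves.append((v, "in"))   # undo v's internal unit of flow
            for nxt in moves:
                if nxt not in parent:
                    parent[nxt] = (v, side)
                    if nxt[0] == t:
                        goal = nxt
                        break
                    queue.append(nxt)
        if goal is None:
            return count
        state = goal
        while parent[state] is not None:      # apply the augmenting path
            (pv, pside), (nv, _) = parent[state], state
            if pv != nv and pside == "out":   # forward edge pv -> nv
                flow_edges.add((pv, nv))
                flow_in[nv].add(pv)
            elif pv != nv:                    # reverse move cancels nv -> pv
                flow_edges.discard((nv, pv))
                flow_in[pv].discard(nv)
            state = parent[state]
        count += 1


reroute = [("s", "a"), ("a", "b"), ("b", "t"), ("s", "c"),
           ("c", "b"), ("a", "d"), ("d", "t")]
print(node_disjoint_paths_no_split(reroute, "s", "t"))  # 2
```

In the example the first BFS picks s-a-b-t; the second one enters b, walks back to a over the residual edge and reroutes, ending with s-a-d-t and s-c-b-t.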

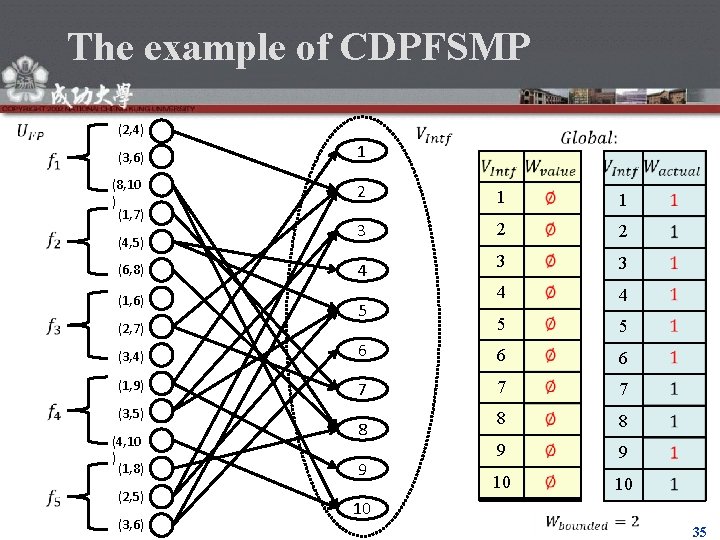

Non-Node-Split Method: residual flows [figure: residual flows between the source and the destination over 4-digit binary server addresses, with two edges labeled c = 4]

Non-Node-Split Method [figure: continuation of the example between the source and the destination, with two edges labeled c = 4]

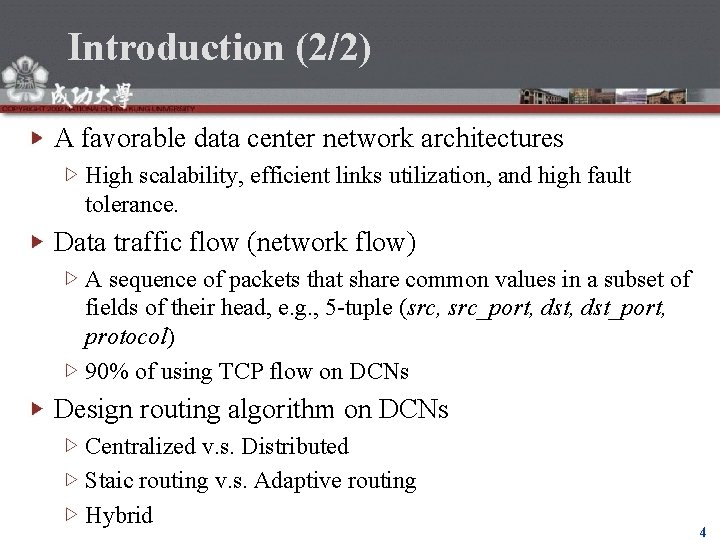

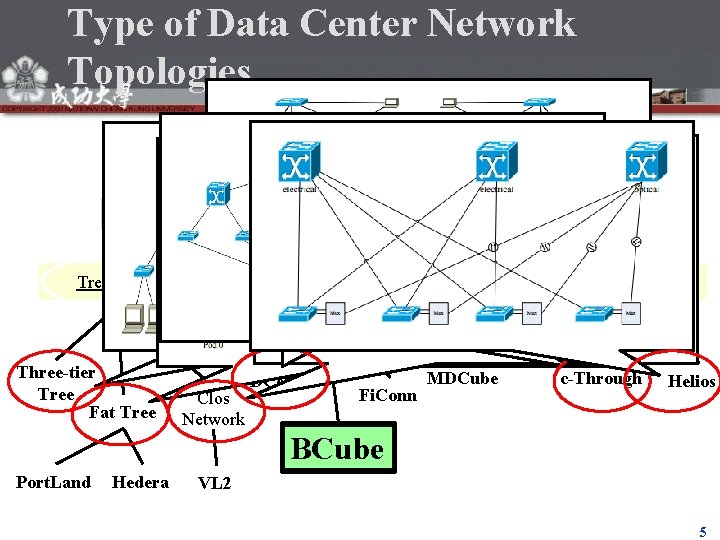

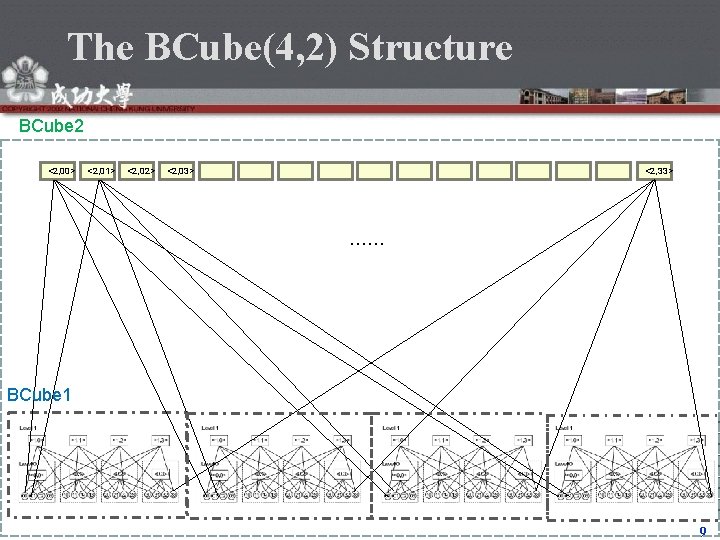

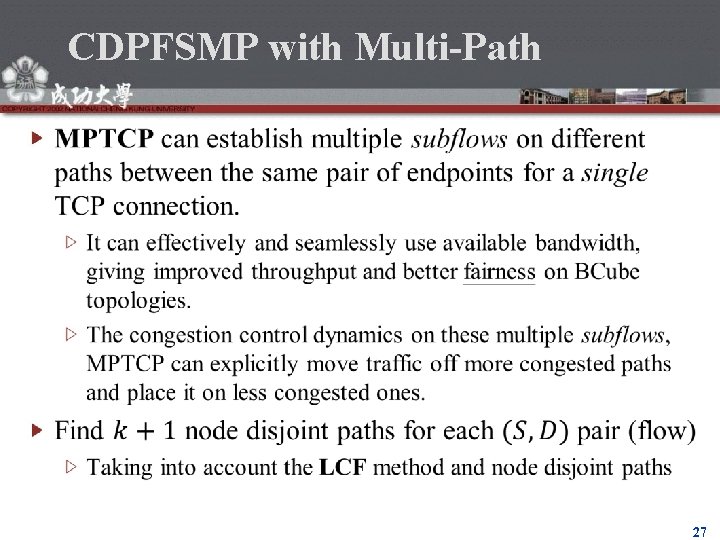

![Probability model of CDPFSMP for adding edges to Flow[i]Network](https://slidetodoc.com/presentation_image_h/b70aaacc1ee647b5dfbd4a56be6cf385/image-34.jpg)

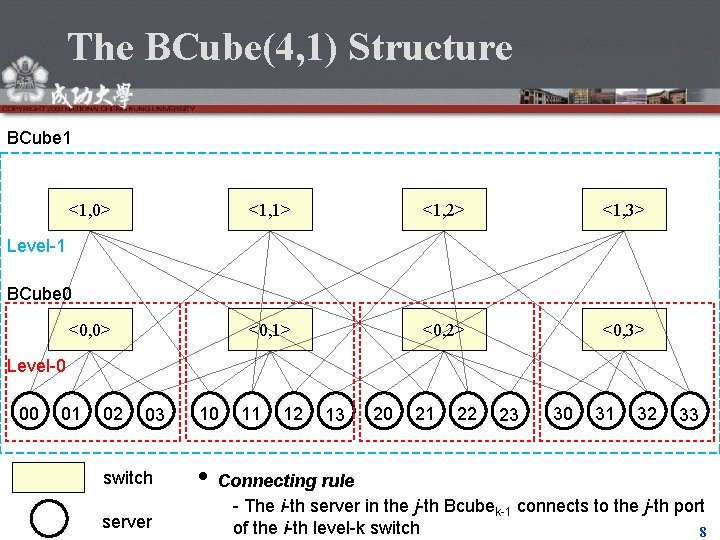

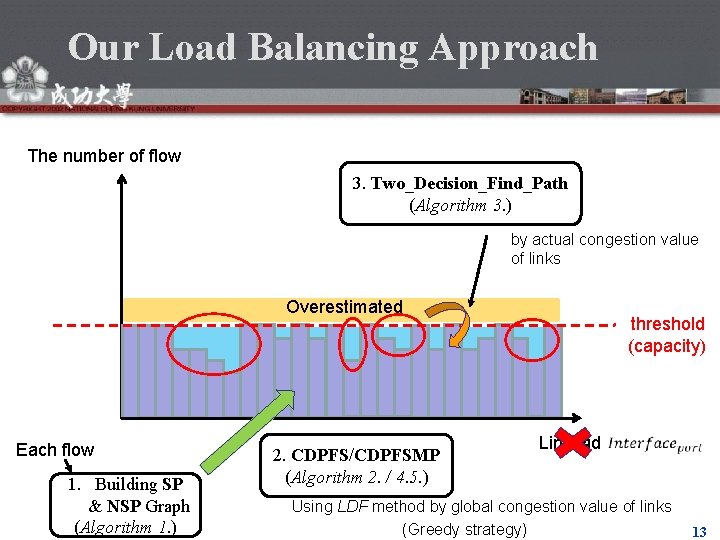

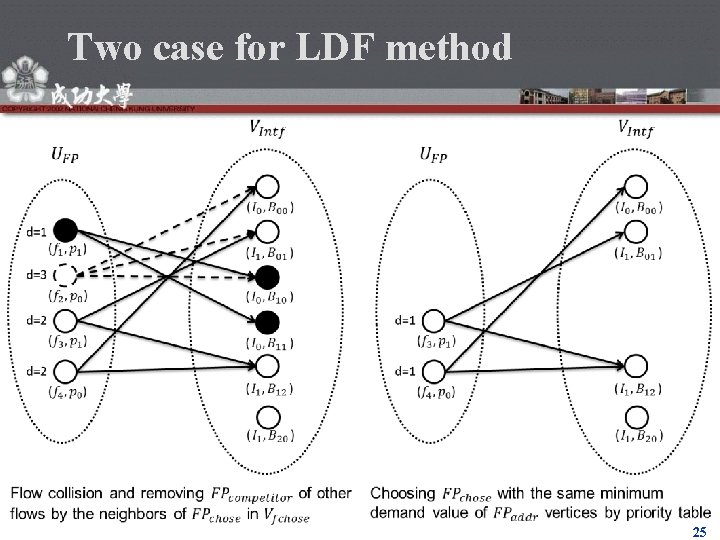

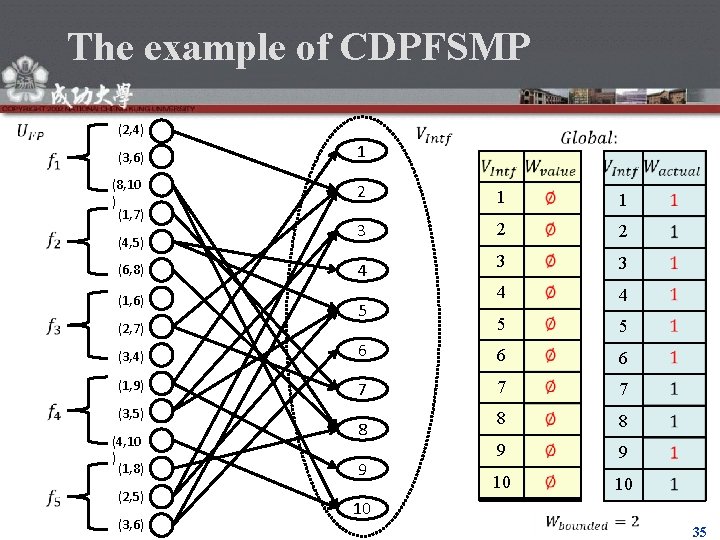

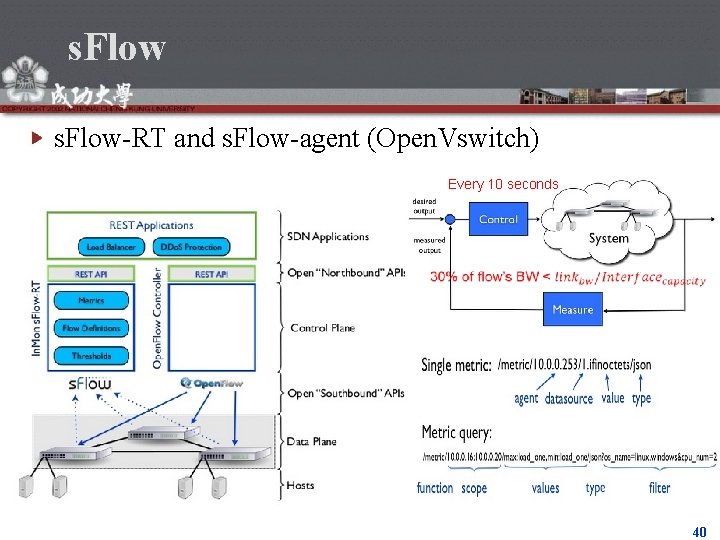

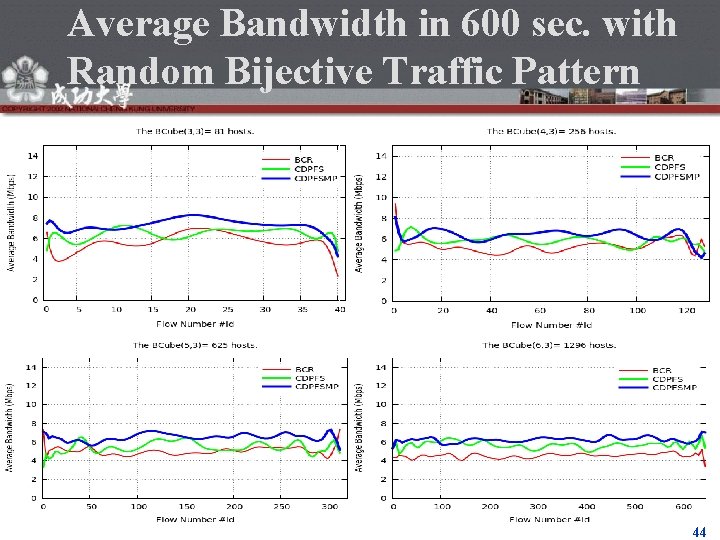

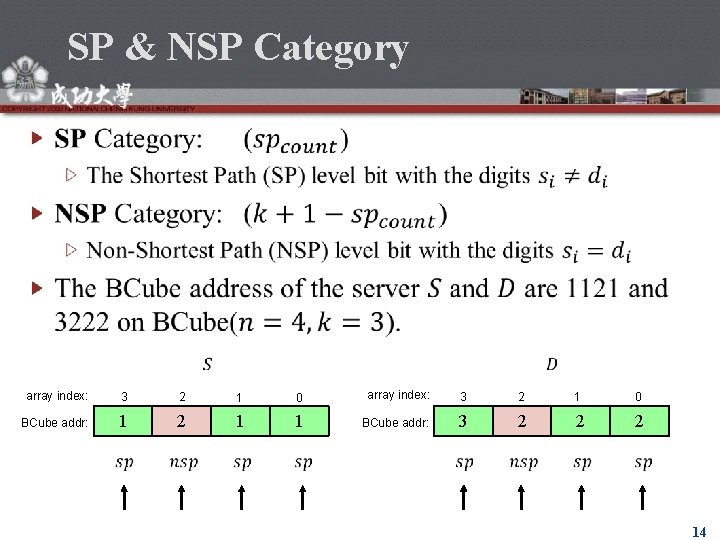

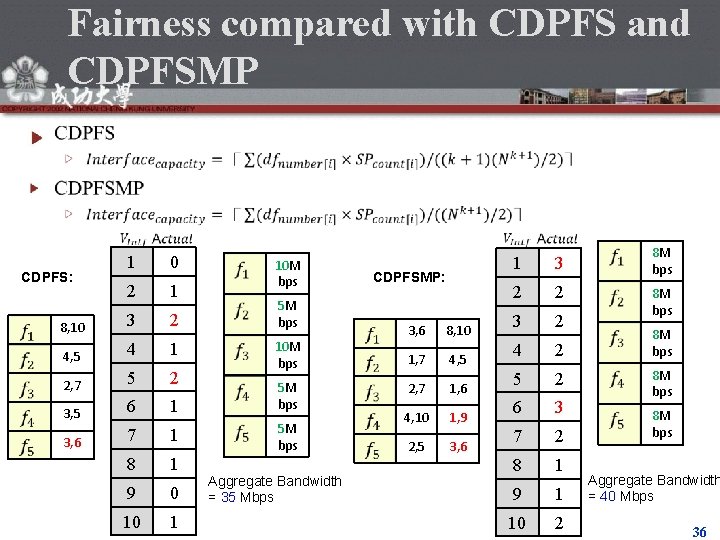

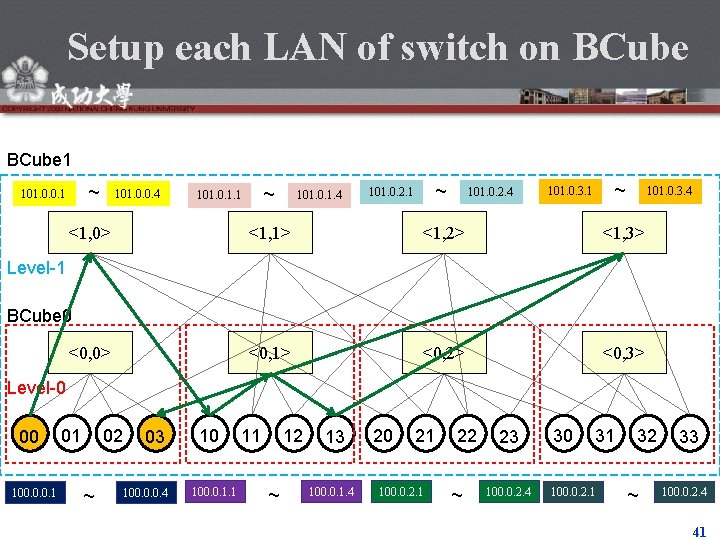

Probability model that lets CDPFSMP add edges to Flow[i]Network quickly

The example of CDPFSMP [figure: pairs (2, 4), (3, 6), (8, 10), (1, 7), (4, 5), (6, 8), (1, 6), (2, 7), (3, 4), (1, 9), (3, 5), (4, 10), (1, 8), and (2, 5), scheduled with multiple paths over IDs numbered 1 to 10]

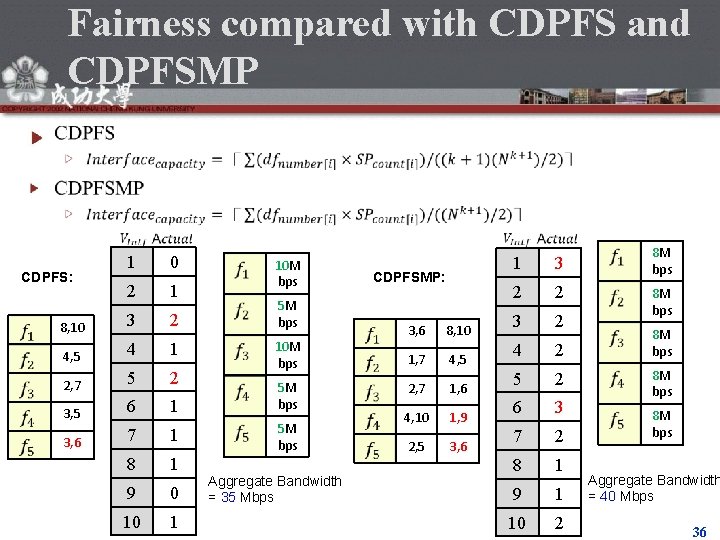

Fairness: CDPFS compared with CDPFSMP. CDPFS: pairs (8, 10), (4, 5), (2, 7), (3, 5), (3, 6); usage counts for IDs 1 to 10 are 0, 1, 2, 1, 2, 1, 1, 1, 0, 1; flow rates of 5 Mbps and 10 Mbps; aggregate bandwidth = 35 Mbps. CDPFSMP: pairs (3, 6), (8, 10), (1, 7), (4, 5), (2, 7), (1, 6), (4, 10), (1, 9), (2, 5), (3, 6); usage counts for IDs 1 to 10 are 3, 2, 2, 2, 2, 3, 2, 1, 1, 2; flow rates of 8 Mbps; aggregate bandwidth = 40 Mbps.

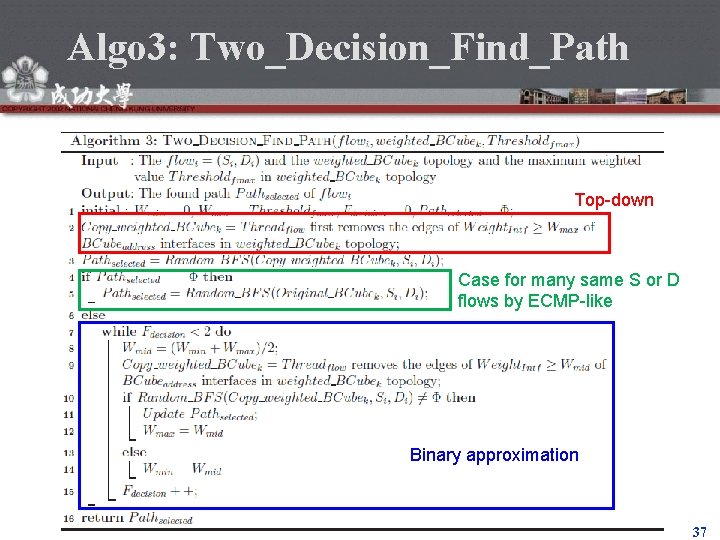

Algo 3: Two_Decision_Find_Path: a top-down method; the case of many flows sharing the same S or D is handled by an ECMP-like binary approximation.
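For context on "ECMP-like": standard ECMP hashes header fields such as the 5-tuple, so all packets of a flow stay on one of the equal-cost paths while different flows spread across them. The sketch below shows only that conventional baseline, not the authors' binary approximation; `FiveTuple` and `ecmp_pick` are hypothetical names, and CRC32 is an arbitrary choice of stable hash.

```python
import zlib
from typing import NamedTuple


class FiveTuple(NamedTuple):
    src_ip: str
    dst_ip: str
    src_port: int
    dst_port: int
    protocol: str


def ecmp_pick(flow: FiveTuple, num_paths: int) -> int:
    """Index of the equal-cost path for this flow (stable across calls)."""
    key = "|".join(map(str, flow)).encode()
    return zlib.crc32(key) % num_paths


f = FiveTuple("101.0.0.1", "101.0.3.1", 5000, 80, "tcp")
print(ecmp_pick(f, 4))  # same index every time for this flow
```

Hashing is oblivious to link load, so many flows with the same source or destination can still collide on one path, which is the situation the slide singles out.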

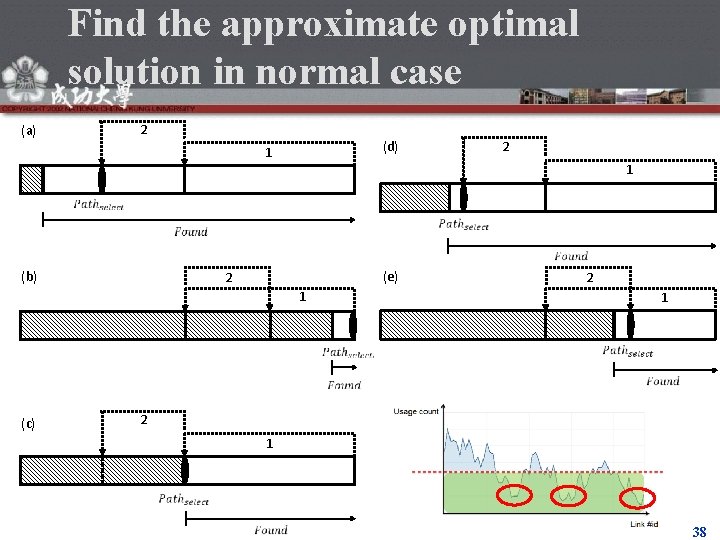

Finding the approximately optimal solution in the normal case [figure: panels (a) to (e) with edge labels 1 and 2]

The Architecture of BCube_k with Master and Monitor

sFlow-RT and sFlow agents (Open vSwitch), with a 10-second period

Setup of each switch LAN on BCube_1 [figure: BCube(4, 1)]. Level-1 switches <1, 0>, <1, 1>, <1, 2>, <1, 3>: LANs 101.0.0.1 to 101.0.0.4, 101.0.1.1 to 101.0.1.4, 101.0.2.1 to 101.0.2.4, and 101.0.3.1 to 101.0.3.4. Level-0 switches <0, 0> to <0, 3> (servers 00 to 03, 10 to 13, 20 to 23, 30 to 33): LANs 100.0.0.1 to 100.0.0.4, 100.0.1.1 to 100.0.1.4, 100.0.2.1 to 100.0.2.4, and 100.0.3.1 to 100.0.3.4.

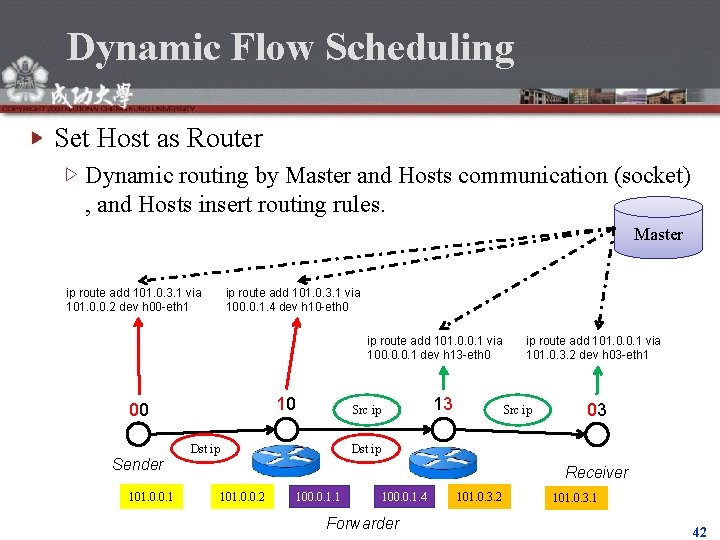

Dynamic Flow Scheduling: each host is set up as a router. Routing is dynamic: the Master communicates with the hosts over sockets, and the hosts insert the routing rules. [figure: sender 00 (101.0.0.1), forwarders 10 (101.0.0.2, 100.0.1.1) and 13 (100.0.1.4, 101.0.3.2), receiver 03 (101.0.3.1)] Rules:
- `ip route add 101.0.3.1 via 101.0.0.2 dev h00-eth1`
- `ip route add 101.0.3.1 via 100.0.1.4 dev h10-eth0`
- `ip route add 101.0.0.1 via 100.0.1.1 dev h13-eth0`
- `ip route add 101.0.0.1 via 101.0.3.2 dev h03-eth1`
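A minimal sketch of how a Master could derive such rules from a server path in BCube(4, 1). It assumes the LAN layout of the setup slide: server a1a0's level-0 NIC (eth0) is 100.0.a1.(a0+1) on switch <0, a1>, and its level-1 NIC (eth1) is 101.0.a0.(a1+1) on switch <1, a0>. The helpers `interface_ip`, `hop_level`, and `route_commands` are hypothetical; they only format strings and do not run `ip`.

```python
def interface_ip(server, level):
    """IP of server "a1a0"'s level-`level` NIC under the assumed LAN layout."""
    a1, a0 = int(server[0]), int(server[1])
    if level == 0:
        return f"100.0.{a1}.{a0 + 1}"      # LAN of switch <0, a1>, port a0
    return f"101.0.{a0}.{a1 + 1}"          # LAN of switch <1, a0>, port a1


def hop_level(u, v):
    """Level of the switch joining two neighboring servers (differing digit)."""
    return 1 if u[0] != v[0] else 0


def route_commands(path):
    """`ip route add` rules steering traffic along `path` (list of servers).

    The last hop needs no rule because the destination NIC is on-link.
    """
    dst_ip = interface_ip(path[-1], hop_level(path[-2], path[-1]))
    rules = []
    for u, v in zip(path[:-2], path[1:-1]):
        lvl = hop_level(u, v)
        rules.append(f"ip route add {dst_ip} via {interface_ip(v, lvl)} "
                     f"dev h{u}-eth{lvl}")
    return rules


for rule in route_commands(["00", "10", "13", "03"]):
    print(rule)
```

For the path 00, 10, 13, 03 this prints the first two rules of the slide, the ones installed on h00 and h10.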

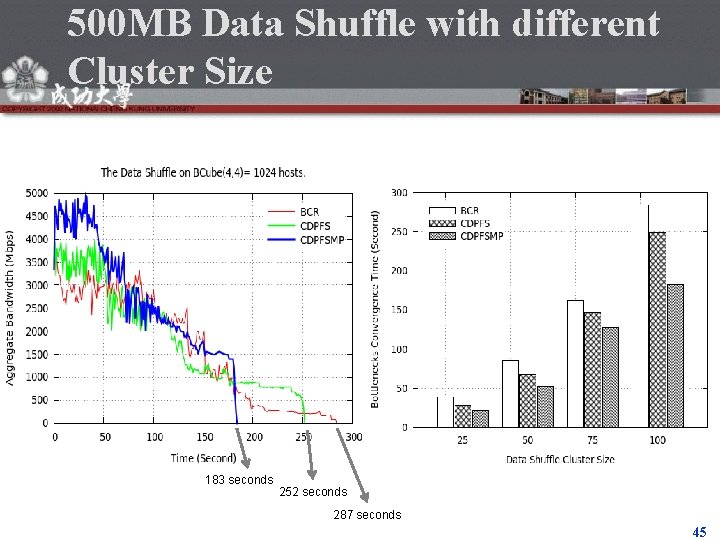

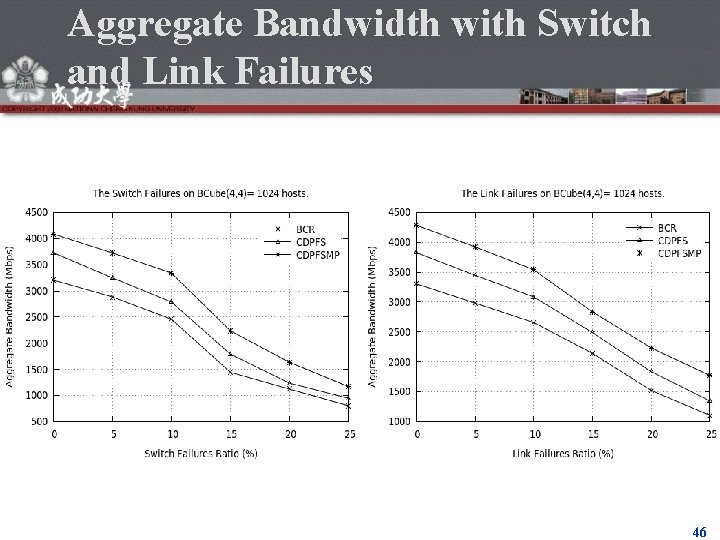

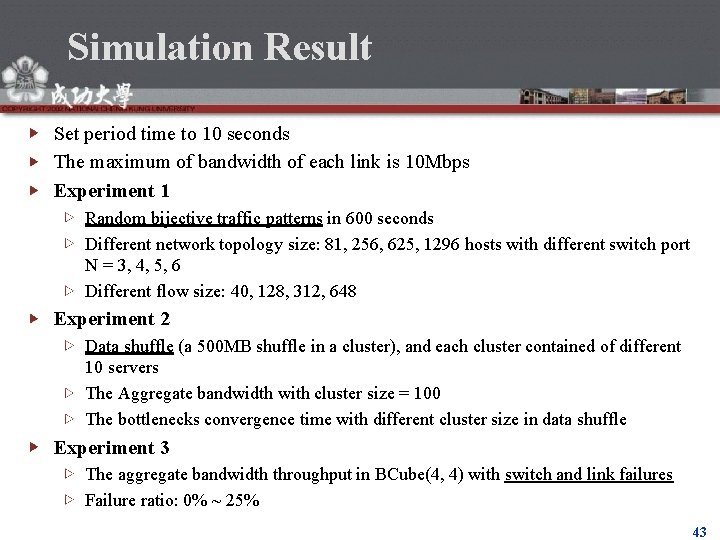

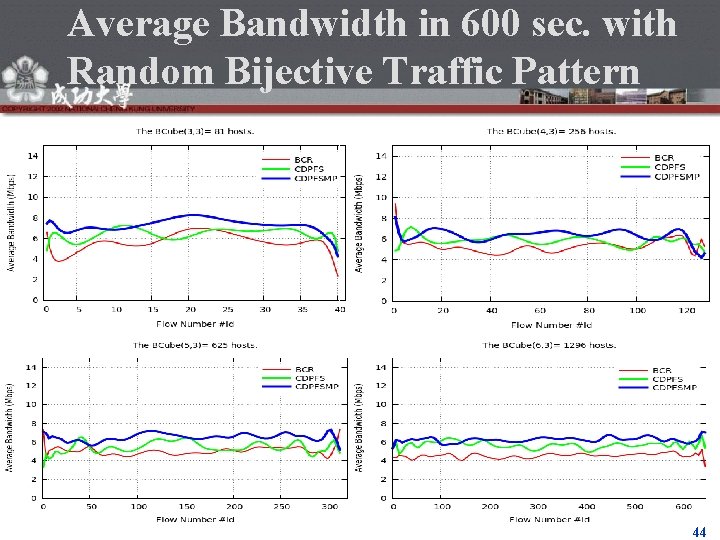

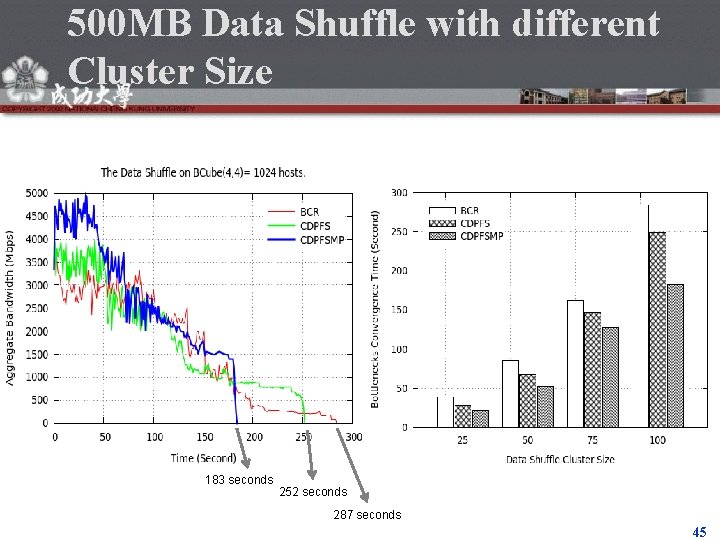

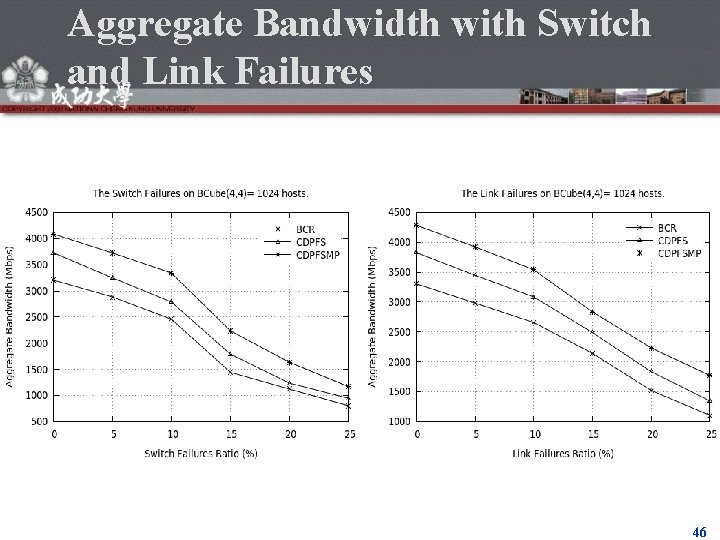

Simulation Results. Setup: the period is set to 10 seconds, and the maximum bandwidth of each link is 10 Mbps.
- Experiment 1: random bijective traffic patterns over 600 seconds; different network sizes of 81, 256, 625, and 1296 hosts with switch port counts N = 3, 4, 5, 6; different numbers of flows: 40, 128, 312, 648.
- Experiment 2: data shuffle (a 500 MB shuffle within a cluster, each cluster containing 10 different servers); the aggregate bandwidth with cluster size = 100, and the bottleneck convergence time for different cluster sizes in the data shuffle.
- Experiment 3: the aggregate bandwidth throughput in BCube(4, 4) with switch and link failures, at failure ratios from 0% to 25%.
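The host counts in Experiment 1 equal N^4 (81 = 3^4, 256 = 4^4, 625 = 5^4, 1296 = 6^4), consistent with BCube(N, 3), which has N^(3+1) servers, and each flow count equals ⌊hosts / 2⌋. One way to produce a traffic pattern with exactly those counts, assuming (our assumption) that each host takes part in at most one flow, is to shuffle the hosts and pair them up; `bcube_hosts` and `random_pairing` are hypothetical names.

```python
import random


def bcube_hosts(n, k):
    """All n**(k+1) BCube(n, k) host addresses as digit strings (n <= 10)."""
    hosts = [""]
    for _ in range(k + 1):
        hosts = [h + str(d) for h in hosts for d in range(n)]
    return hosts


def random_pairing(hosts, rng):
    """Random (src, dst) flows in which every host appears at most once."""
    shuffled = hosts[:]
    rng.shuffle(shuffled)
    # Consecutive shuffled hosts form a flow; an odd host out is left idle.
    return [(shuffled[i], shuffled[i + 1])
            for i in range(0, len(shuffled) - 1, 2)]


for n in (3, 4, 5, 6):
    flows = random_pairing(bcube_hosts(n, 3), random.Random(0))
    print(n, len(flows))  # 40, 128, 312, 648 flows respectively
```

Passing a seeded `random.Random` keeps a run reproducible, which helps when comparing CDPFS, CDPFSMP, and BSR on the same pattern.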

Average Bandwidth over 600 Seconds with a Random Bijective Traffic Pattern

500 MB Data Shuffle with Different Cluster Sizes [figure: times of 183 seconds, 252 seconds, and 287 seconds]

Aggregate Bandwidth with Switch and Link Failures

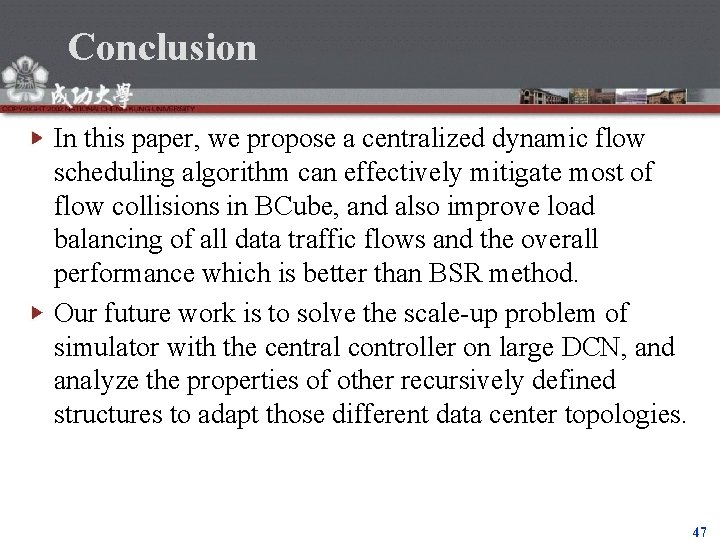

Conclusion: In this paper, we propose a centralized dynamic flow scheduling algorithm that effectively mitigates most flow collisions in BCube and improves the load balancing of all data traffic flows, giving better overall performance than the BSR method. Our future work is to solve the scale-up problem of the simulator with a central controller on large DCNs, and to analyze the properties of other recursively defined structures in order to adapt the approach to different data center topologies.