CART (classification and regression trees) & Random forests

- Slides: 14

CART (classification and regression trees) & Random forests. Partly based on the Statistical Learning course by Trevor Hastie and Rob Tibshirani.

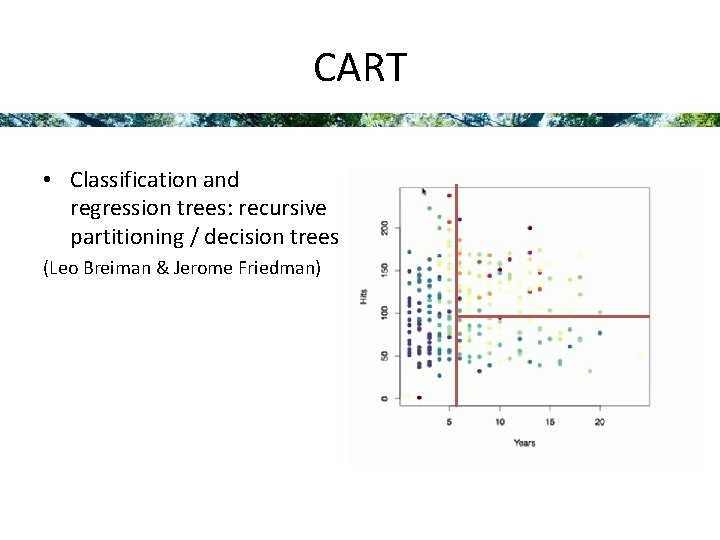

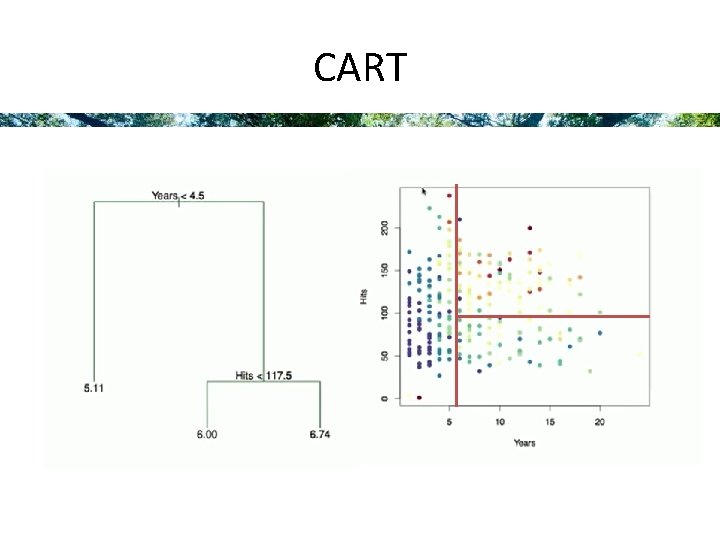

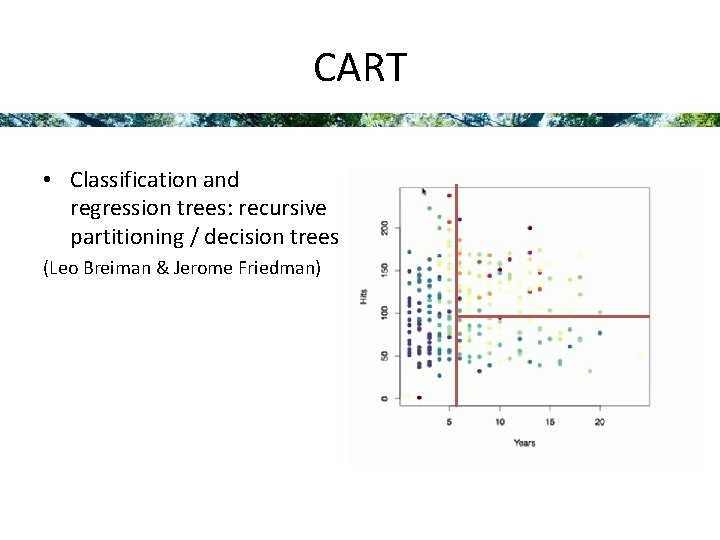

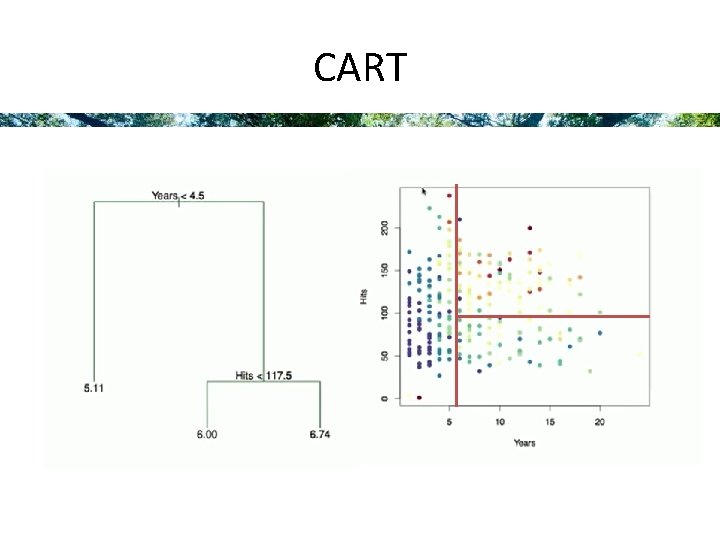

CART

- Classification and regression trees: recursive partitioning, i.e. decision trees (Leo Breiman & Jerome Friedman)

CART

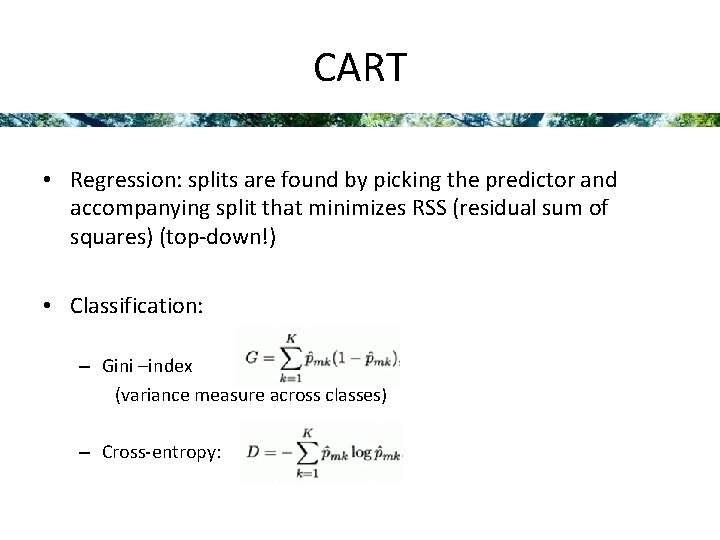

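CART

- Regression: splits are found top-down (greedy recursive binary splitting). At each step, pick the predictor and accompanying cut point that minimize the RSS (residual sum of squares), $\sum_{j}\sum_{i \in R_j} (y_i - \hat{y}_{R_j})^2$, where $\hat{y}_{R_j}$ is the mean response of the training observations in region $R_j$.
- Classification: split on a measure of node impurity, where $\hat{p}_{mk}$ is the proportion of training observations in node $m$ that belong to class $k$:
  - Gini index (a measure of total variance across the $K$ classes): $G = \sum_{k=1}^{K} \hat{p}_{mk}(1 - \hat{p}_{mk})$
  - Cross-entropy: $D = -\sum_{k=1}^{K} \hat{p}_{mk} \log \hat{p}_{mk}$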
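A minimal pure-Python sketch of these split criteria, assuming a small in-memory dataset stored as lists of rows. The function names (`gini`, `cross_entropy`, `rss`, `best_split`) are hypothetical illustrations, not the API of `rpart` or any other package. `best_split` only performs the greedy search for one split; a full CART implementation applies it recursively to each child node.

```python
import math
from collections import Counter


def gini(labels):
    """Gini index of a node: sum over classes k of p_k * (1 - p_k)."""
    n = len(labels)
    return sum((c / n) * (1 - c / n) for c in Counter(labels).values())


def cross_entropy(labels):
    """Cross-entropy of a node: -sum over classes k of p_k * log(p_k).
    Classes absent from the node (p_k = 0) contribute 0 and are not counted."""
    n = len(labels)
    return -sum((c / n) * math.log(c / n) for c in Counter(labels).values())


def rss(values):
    """Residual sum of squares around the mean of a node's responses."""
    if not values:
        return 0.0
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values)


def best_split(X, y):
    """Greedy search over every predictor j and cut point s for the split
    x_j < s versus x_j >= s that minimizes the summed RSS of the two children.
    Returns (rss, j, s)."""
    best = None
    for j in range(len(X[0])):
        values = sorted(set(row[j] for row in X))
        # Candidate cut points: midpoints between consecutive distinct values.
        for lo, hi in zip(values, values[1:]):
            s = (lo + hi) / 2
            left = [yi for row, yi in zip(X, y) if row[j] < s]
            right = [yi for row, yi in zip(X, y) if row[j] >= s]
            total = rss(left) + rss(right)
            if best is None or total < best[0]:
                best = (total, j, s)
    return best


# Toy data: two predictors, four observations.
X = [[1, 5], [2, 3], [3, 8], [4, 1]]
y = [1.0, 1.2, 3.9, 4.1]
print(best_split(X, y))  # splits predictor 0 at 2.5
print(gini(["a", "a", "b", "b"]), cross_entropy(["a", "a", "b", "b"]))
```

Both impurity measures are 0 for a pure node and largest when the classes are evenly mixed, which is why either can drive the classification splits.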

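CART

- Predicting: pass a test observation down the tree, following the splits, and predict with the mean (regression) or majority vote (classification) of the training observations in the terminal node where it ends up.
- To avoid overfitting (low bias but high variance), the tree needs to be pruned, using cost complexity pruning: grow a large tree, then place a penalty $\alpha$ on the total number of terminal nodes $|T|$, i.e. choose the subtree $T$ that minimizes $\sum_{m=1}^{|T|}\sum_{i:\, x_i \in R_m} (y_i - \hat{y}_{R_m})^2 + \alpha |T|$ (regression case), and use cross-validation to find the optimal value of the penalty parameter $\alpha$.
- Pruning a large tree is preferred to growing smaller trees, because a good split might follow a split that does not look very informative on its own.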
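A sketch of prediction and of the cost-complexity criterion, assuming trees are stored as nested dicts (a hypothetical representation, not how `rpart` stores them) and assuming the candidate subtrees are already given. A real implementation would generate the candidates by weakest-link pruning and pick $\alpha$ by cross-validation; neither step is shown here.

```python
def predict(node, x):
    """Pass observation x down the tree, following the splits, to a terminal node."""
    while "value" not in node:
        node = node["left"] if x[node["feature"]] < node["threshold"] else node["right"]
    return node["value"]


def n_leaves(node):
    """|T|: the number of terminal nodes."""
    if "value" in node:
        return 1
    return n_leaves(node["left"]) + n_leaves(node["right"])


def training_rss(tree, X, y):
    return sum((yi - predict(tree, xi)) ** 2 for xi, yi in zip(X, y))


def select_subtree(subtrees, X, y, alpha):
    """Among the candidate subtrees, return the one minimizing RSS + alpha * |T|."""
    return min(subtrees, key=lambda t: training_rss(t, X, y) + alpha * n_leaves(t))


X = [[1, 5], [2, 3], [3, 8], [4, 1]]
y = [1.0, 1.2, 3.9, 4.1]

# Each leaf stores the mean response of the training observations that reach it.
full = {"feature": 0, "threshold": 2.5,
        "left": {"value": 1.1},
        "right": {"feature": 1, "threshold": 4.5,
                  "left": {"value": 4.1}, "right": {"value": 3.9}}}
two_leaves = {"feature": 0, "threshold": 2.5,
              "left": {"value": 1.1}, "right": {"value": 4.0}}
root_only = {"value": 2.55}

for alpha in (0.0, 0.1, 10.0):
    chosen = select_subtree([full, two_leaves, root_only], X, y, alpha)
    print(alpha, n_leaves(chosen))  # larger alpha -> fewer terminal nodes
```

With $\alpha = 0$ the largest tree wins; as $\alpha$ grows, each extra terminal node must buy a larger drop in RSS to be kept.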

CART

Pros:
- Intuitive to interpret, which appeals to applied researchers
- Do not rely on common assumptions such as multivariate normality or homogeneity of variance
- Automatically detect nonlinear relationships and interactions

Cons:
- Prone to overfitting
- Do not predict as well as other common (machine learning) methods

Random forests

- Instead of growing 1 tree, grow an ensemble of trees and aggregate their predictions
- Goal: reduce overfitting and improve prediction
- Cost: loss of interpretability
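A standard variance identity (not stated on the slides) shows why averaging many trees helps and why the trees should also be decorrelated. Assume each of the $B$ tree predictions $\hat f_b(x)$ has variance $\sigma^2$ and every pair of trees has correlation $\rho$:

$$
\operatorname{Var}\!\left(\frac{1}{B}\sum_{b=1}^{B}\hat f_b(x)\right)
= \frac{1}{B^2}\left(B\sigma^2 + B(B-1)\,\rho\sigma^2\right)
= \rho\sigma^2 + \frac{1-\rho}{B}\,\sigma^2
$$

Growing more trees only shrinks the second term; the first term, $\rho\sigma^2$, remains however large $B$ is, so lowering the correlation between trees is what makes a large ensemble pay off.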

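Random forests

- 2 tricks:
  1. Every tree is grown on a bootstrap sample of the data: n observations drawn with replacement, so each tree sees on average about 2/3 (≈ 63%) of the distinct observations. Growing trees on bootstrap samples and aggregating them is also referred to as bagging (bootstrap aggregating).
  2. At every node, only a random subset of m of the p predictors is considered for partitioning, which decorrelates the trees.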
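The two tricks can be sketched with the standard library alone. The helper names below are hypothetical. The sketch also checks the "about 2/3" figure: the expected share of distinct rows in a bootstrap sample is $1 - (1 - 1/n)^n$, which tends to $1 - 1/e \approx 0.632$.

```python
import math
import random


def bootstrap_indices(n, rng):
    """Bootstrap sample: n row indices drawn with replacement."""
    return [rng.randrange(n) for _ in range(n)]


def out_of_bag(n, in_bag):
    """Rows never drawn into the bootstrap sample (this tree's OOB rows)."""
    drawn = set(in_bag)
    return [i for i in range(n) if i not in drawn]


def candidate_predictors(p, m, rng):
    """Random subset of m of the p predictors; redrawn at every node."""
    return rng.sample(range(p), m)


rng = random.Random(42)
n = 10_000
in_bag = bootstrap_indices(n, rng)
oob = out_of_bag(n, in_bag)

unique_share = len(set(in_bag)) / n
print(round(unique_share, 3), round(1 - math.exp(-1), 3))  # both close to 0.632
print(candidate_predictors(10, 3, rng))  # 3 distinct indices out of 0..9
```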

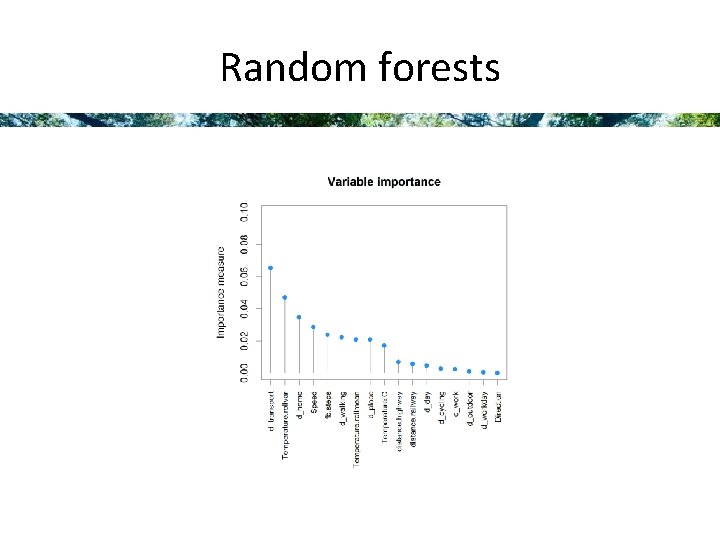

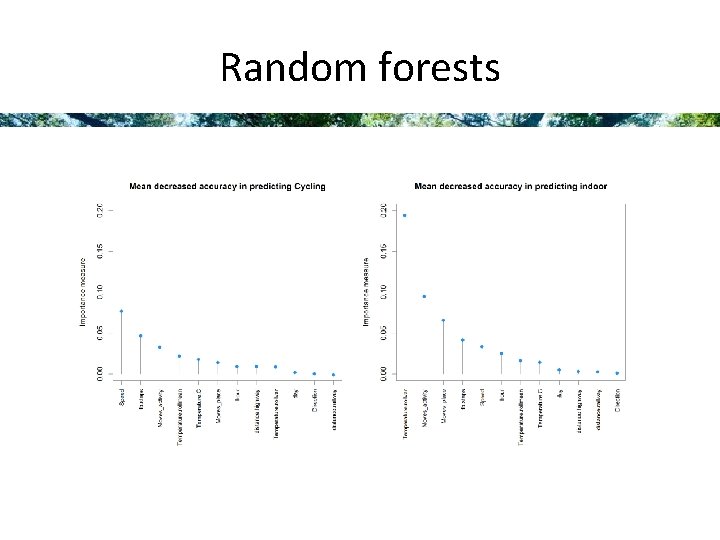

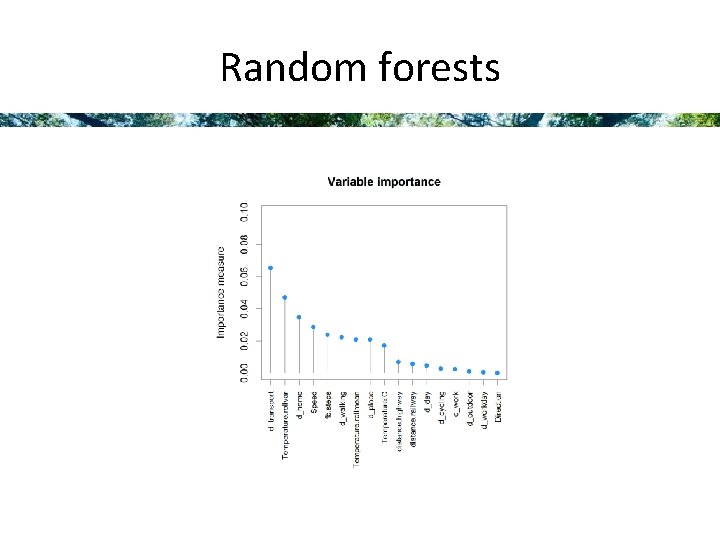

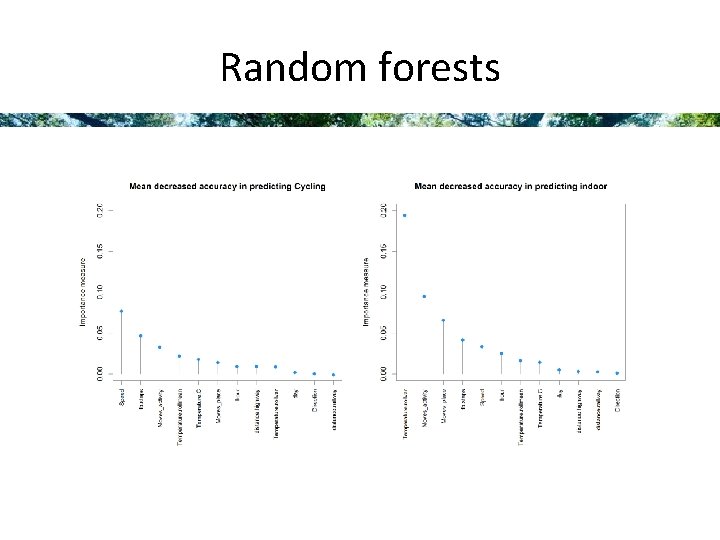

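Random forests

- Additional benefits:
  - Because of point 1, 'test errors' come for free: each observation is out of bag (OOB) for roughly 1/3 of the trees, so predicting it with only those trees gives an OOB error estimate without a separate test set.
  - Because of point 2, an indication of variable (predictor) importance can be obtained.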
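A compact sketch of OOB error and permutation importance. Several assumptions keep it short. The base learners are depth-1 regression trees (stumps), so "a random subset of m predictors at every node" reduces to one subset per stump. Importance is measured by shuffling one predictor's column and recomputing the OOB error, a simplified variant of the permutation importance used in Breiman's random forests, which permutes within each tree's own OOB sample. The impurity-based importance measure is not shown. All function names and the toy data are hypothetical.

```python
import random


def fit_stump(X, y, rows, features):
    """Regression stump: best single split x_j < s over the candidate `features`,
    fitted on the (bootstrap) `rows`. Returns (j, s, left_mean, right_mean)."""
    best = None
    for j in features:
        pairs = sorted((X[i][j], y[i]) for i in rows)
        n = len(pairs)
        total = sum(v for _, v in pairs)
        total_sq = sum(v * v for _, v in pairs)
        s_left = sq_left = 0.0
        for k in range(1, n):  # the first k sorted pairs go left
            v = pairs[k - 1][1]
            s_left += v
            sq_left += v * v
            if pairs[k - 1][0] == pairs[k][0]:
                continue  # cannot cut between equal predictor values
            s_right, sq_right = total - s_left, total_sq - sq_left
            rss = (sq_left - s_left ** 2 / k) + (sq_right - s_right ** 2 / (n - k))
            if best is None or rss < best[0]:
                cut = (pairs[k - 1][0] + pairs[k][0]) / 2
                best = (rss, j, cut, s_left / k, s_right / (n - k))
    if best is None:  # no valid split: predict the sample mean everywhere
        mean = sum(y[i] for i in rows) / len(rows)
        return (features[0], float("inf"), mean, mean)
    return best[1:]


def predict_stump(stump, x):
    j, cut, left_mean, right_mean = stump
    return left_mean if x[j] < cut else right_mean


def fit_forest(X, y, n_trees, m, rng):
    """Each stump uses a bootstrap sample (point 1) and m random predictors (point 2)."""
    n, p = len(X), len(X[0])
    forest = []
    for _ in range(n_trees):
        in_bag = [rng.randrange(n) for _ in range(n)]
        stump = fit_stump(X, y, in_bag, rng.sample(range(p), m))
        forest.append((stump, set(in_bag)))
    return forest


def oob_mse(forest, X, y):
    """Predict each row using only the trees for which it was out of bag."""
    sq_err, count = 0.0, 0
    for i, (x, yi) in enumerate(zip(X, y)):
        preds = [predict_stump(st, x) for st, bag in forest if i not in bag]
        if preds:  # a row that is in-bag for every tree gets no OOB prediction
            sq_err += (yi - sum(preds) / len(preds)) ** 2
            count += 1
    return sq_err / count


def permutation_importance(forest, X, y, j, rng):
    """Increase in OOB MSE after shuffling predictor j's column."""
    column = [row[j] for row in X]
    rng.shuffle(column)
    X_perm = [row[:j] + [c] + row[j + 1:] for row, c in zip(X, column)]
    return oob_mse(forest, X_perm, y) - oob_mse(forest, X, y)


# Toy data: y depends on predictor 0 only; predictor 1 is noise.
rng = random.Random(0)
X = [[rng.random(), rng.random()] for _ in range(200)]
y = [3.0 * (row[0] > 0.5) + rng.gauss(0, 0.3) for row in X]
forest = fit_forest(X, y, n_trees=100, m=1, rng=rng)
print("OOB MSE:", oob_mse(forest, X, y))
print("importance:", [permutation_importance(forest, X, y, j, rng) for j in range(2)])
```

Shuffling the informative predictor should raise the OOB error much more than shuffling the noise predictor.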


Random forests

- Tuning parameters: the number of trees to be grown and m, the number of predictors considered at each node (common defaults: $\sqrt{p}$ for classification and $p/3$ for regression)
- Use cross-validation to determine m
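A sketch of choosing m by k-fold cross-validation. `default_mtry` follows the common defaults above (R's `randomForest` uses floor(sqrt(p)) and max(floor(p/3), 1)). `cv_error` is a hypothetical user-supplied callable that would fit a forest and score the held-out fold. The `toy_cv_error` used in the demo is a placeholder curve, not real model output.

```python
import math
import random


def default_mtry(p, task):
    """Common defaults for m: floor(sqrt(p)) for classification,
    floor(p / 3) for regression, and never fewer than 1."""
    if task == "classification":
        return max(1, math.floor(math.sqrt(p)))
    return max(1, p // 3)


def kfold_indices(n, k, rng):
    """Shuffle row indices 0..n-1 and deal them into k folds."""
    idx = list(range(n))
    rng.shuffle(idx)
    return [idx[f::k] for f in range(k)]


def choose_m(candidates, n, k, cv_error, rng):
    """Return the m with the lowest mean validation error over k folds.
    cv_error(m, train_idx, test_idx) is a hypothetical callable that fits a
    forest with m predictors per node on train_idx and scores it on test_idx."""
    folds = kfold_indices(n, k, rng)

    def mean_error(m):
        errors = []
        for f, test_idx in enumerate(folds):
            train_idx = [i for g, fold in enumerate(folds) if g != f for i in fold]
            errors.append(cv_error(m, train_idx, test_idx))
        return sum(errors) / len(errors)

    return min(candidates, key=mean_error)


p = 16
print(default_mtry(p, "classification"), default_mtry(p, "regression"))  # 4 5


# Placeholder standing in for "fit a forest, score the held-out fold":
# NOT real model output, just a toy error curve with its minimum at m = 3.
def toy_cv_error(m, train_idx, test_idx):
    return (m - 3) ** 2


print(choose_m(range(1, p + 1), n=100, k=5, cv_error=toy_cv_error, rng=random.Random(0)))  # 3
```

Sharing one fold assignment across all candidate values of m keeps the comparison between candidates fair.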

R packages

- `rpart`
- `tree`
- `randomForest`
- `caret`

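Nested data and trees

- Prediction: OK
- With nested (multilevel) data, the trees in a random forest are highly correlated, and the OOB error estimates and variable importance measures are biased: an OOB observation usually has members of its own cluster in the bootstrap sample, so its "out of bag" prediction is not truly out of sample.
- CART is likely to prefer variables with more distinct values (more candidate split points), which biases splits towards level-1 variables.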
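A small simulation sketch of both issues, under assumed toy settings (50 clusters of 10 rows; a pure-noise response with a 2-valued and a 100-valued predictor). These settings and the helper names are hypothetical. Part 1 draws an ordinary row-level bootstrap and measures how often an OOB row still has a cluster-mate in the bootstrap sample. Part 2 checks how often the best achievable RSS reduction favours the many-valued predictor, even though neither predictor carries any signal.

```python
import random


def share_oob_with_cluster_in_bag(cluster_of, rng):
    """Draw one bootstrap sample of rows (ignoring the nesting) and return the
    share of OOB rows whose cluster still has a member in the sample."""
    n = len(cluster_of)
    drawn = {rng.randrange(n) for _ in range(n)}
    clusters_in_bag = {cluster_of[i] for i in drawn}
    oob = [i for i in range(n) if i not in drawn]
    return sum(cluster_of[i] in clusters_in_bag for i in oob) / len(oob)


def best_split_gain(x, y):
    """Largest drop in RSS achievable with a single split on predictor x."""
    pairs = sorted(zip(x, y))
    n = len(pairs)
    total = sum(v for _, v in pairs)
    total_sq = sum(v * v for _, v in pairs)
    parent_rss = total_sq - total ** 2 / n
    best = 0.0
    s_left = sq_left = 0.0
    for k in range(1, n):  # the first k sorted pairs go left
        v = pairs[k - 1][1]
        s_left += v
        sq_left += v * v
        if pairs[k - 1][0] == pairs[k][0]:
            continue  # no cut point between equal values
        s_right, sq_right = total - s_left, total_sq - sq_left
        child_rss = (sq_left - s_left ** 2 / k) + (sq_right - s_right ** 2 / (n - k))
        best = max(best, parent_rss - child_rss)
    return best


rng = random.Random(0)

# 1) 50 clusters of 10 rows each.
clusters = [c for c in range(50) for _ in range(10)]
share = share_oob_with_cluster_in_bag(clusters, rng)
print("OOB rows with a cluster-mate in the bootstrap sample:", share)  # close to 1

# 2) Pure-noise response: neither predictor is informative.
reps, wins = 200, 0
for _ in range(reps):
    y = [rng.gauss(0, 1) for _ in range(100)]
    x_few = [rng.randrange(2) for _ in range(100)]  # 2 values: 1 candidate split
    x_many = [rng.random() for _ in range(100)]  # 100 values: 99 candidate splits
    wins += best_split_gain(x_many, y) > best_split_gain(x_few, y)
print("share of runs where the many-valued noise predictor wins:", wins / reps)
```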