Capers Jones & Associates LLC: SOFTWARE PROCESS ASSESSMENTS: HISTORY AND USAGE

- Slides: 71

Capers Jones & Associates LLC

SOFTWARE PROCESS ASSESSMENTS: HISTORY AND USAGE

Capers Jones, President and Chief Scientist Emeritus of Software Productivity Research LLC

Quality seminar: talk 3

Web: www.spr.com

Email: Capers.Jones3@gmail.com

Copyright © 2011 by Capers Jones. All Rights Reserved. June 11, 2011. Assessments 1

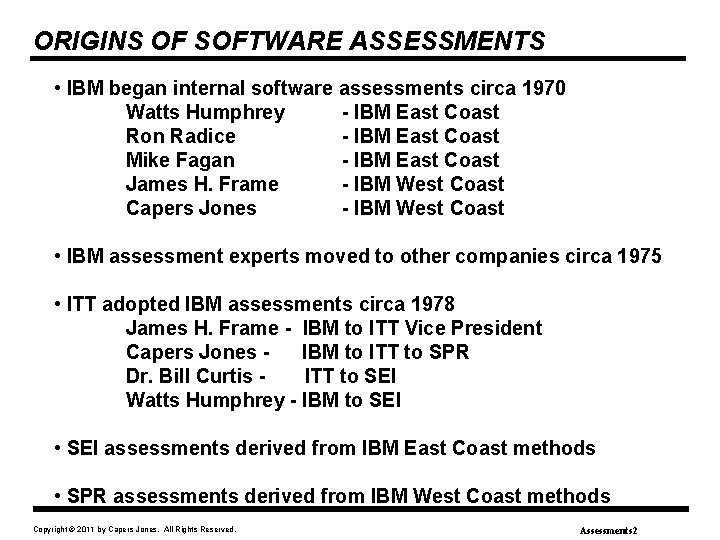

ORIGINS OF SOFTWARE ASSESSMENTS

- IBM began internal software assessments circa 1970:
  - Watts Humphrey: IBM East Coast
  - Ron Radice: IBM East Coast
  - Mike Fagan: IBM East Coast
  - James H. Frame: IBM West Coast
  - Capers Jones: IBM West Coast
- IBM assessment experts moved to other companies circa 1975
- ITT adopted IBM assessments circa 1978:
  - James H. Frame: IBM to ITT Vice President
  - Capers Jones: IBM to ITT to SPR
  - Dr. Bill Curtis: ITT to SEI
  - Watts Humphrey: IBM to SEI
- SEI assessments derived from IBM East Coast methods
- SPR assessments derived from IBM West Coast methods

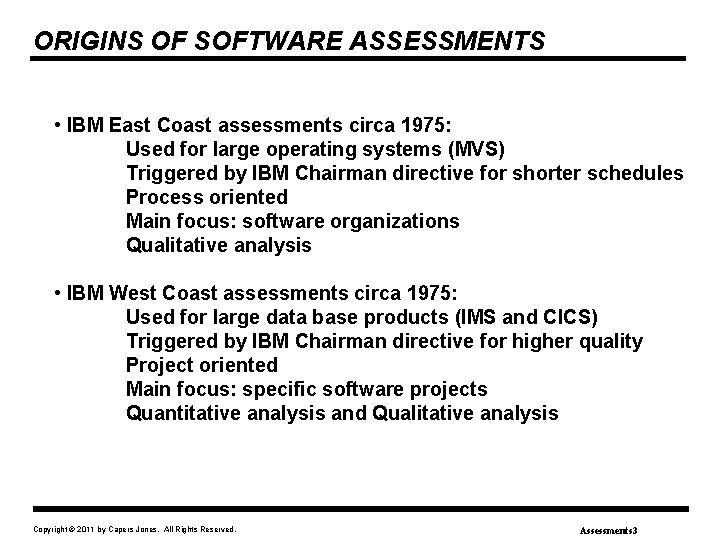

ORIGINS OF SOFTWARE ASSESSMENTS (cont.)

- IBM East Coast assessments circa 1975:
  - Used for large operating systems (MVS)
  - Triggered by an IBM Chairman directive for shorter schedules
  - Process oriented
  - Main focus: software organizations
  - Qualitative analysis
- IBM West Coast assessments circa 1975:
  - Used for large database products (IMS and CICS)
  - Triggered by an IBM Chairman directive for higher quality
  - Project oriented
  - Main focus: specific software projects
  - Quantitative and qualitative analysis

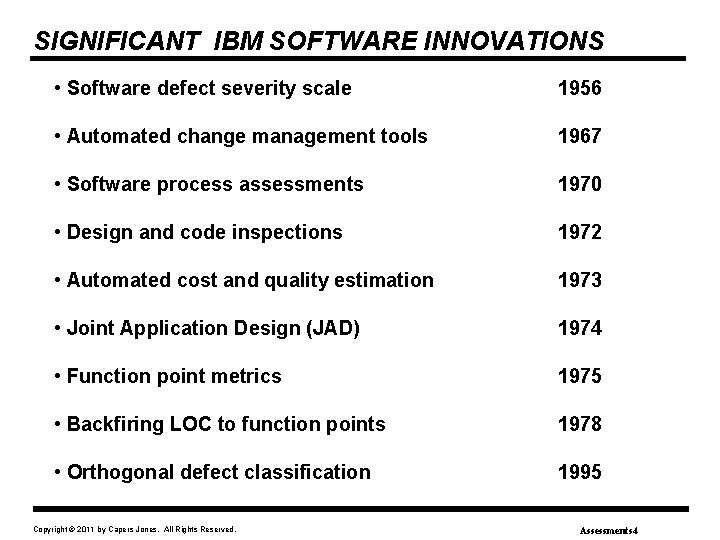

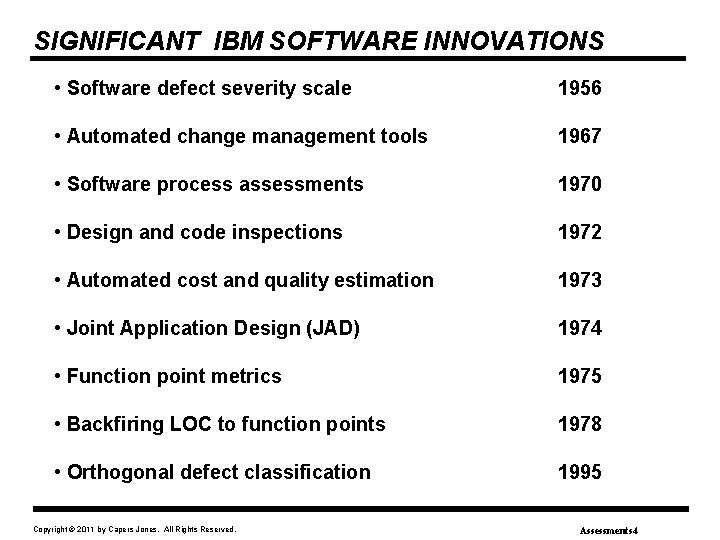

SIGNIFICANT IBM SOFTWARE INNOVATIONS

- Software defect severity scale: 1956
- Automated change management tools: 1967
- Software process assessments: 1970
- Design and code inspections: 1972
- Automated cost and quality estimation: 1973
- Joint Application Design (JAD): 1974
- Function point metrics: 1975
- Backfiring LOC to function points: 1978
- Orthogonal defect classification: 1995

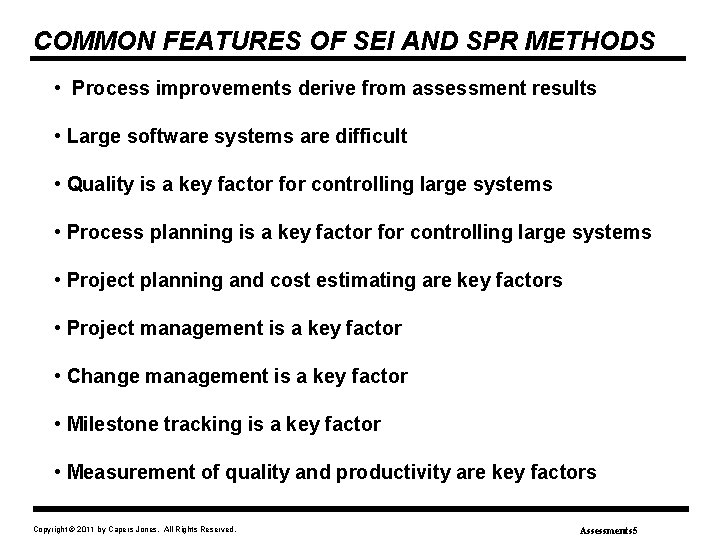

COMMON FEATURES OF SEI AND SPR METHODS

- Process improvements derive from assessment results
- Large software systems are difficult
- Quality is a key factor for controlling large systems
- Process planning is a key factor for controlling large systems
- Project planning and cost estimating are key factors
- Project management is a key factor
- Change management is a key factor
- Milestone tracking is a key factor
- Measurement of quality and productivity is a key factor

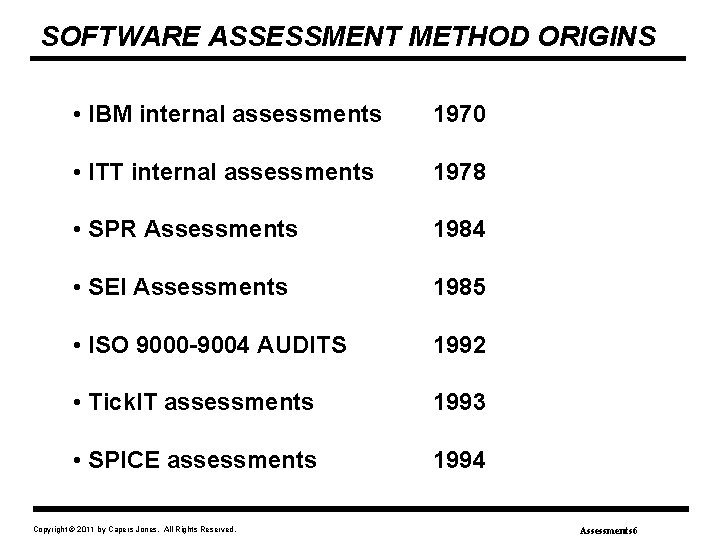

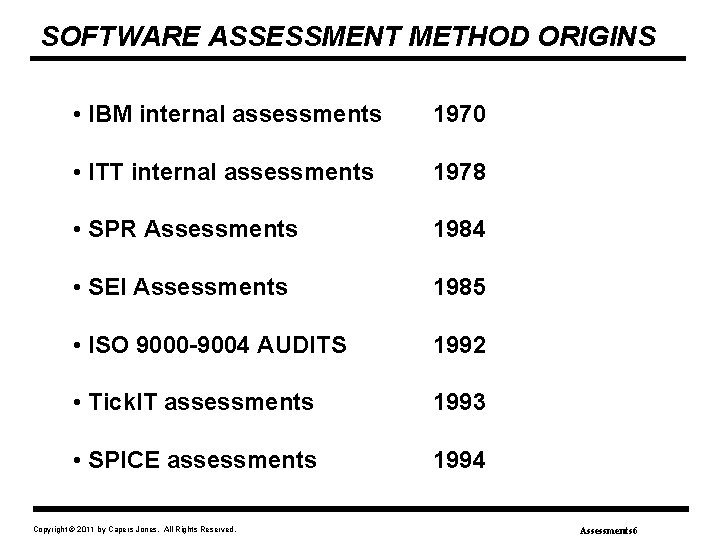

SOFTWARE ASSESSMENT METHOD ORIGINS

- IBM internal assessments: 1970
- ITT internal assessments: 1978
- SPR assessments: 1984
- SEI assessments: 1985
- ISO 9000-9004 audits: 1992
- TickIT assessments: 1993
- SPICE assessments: 1994

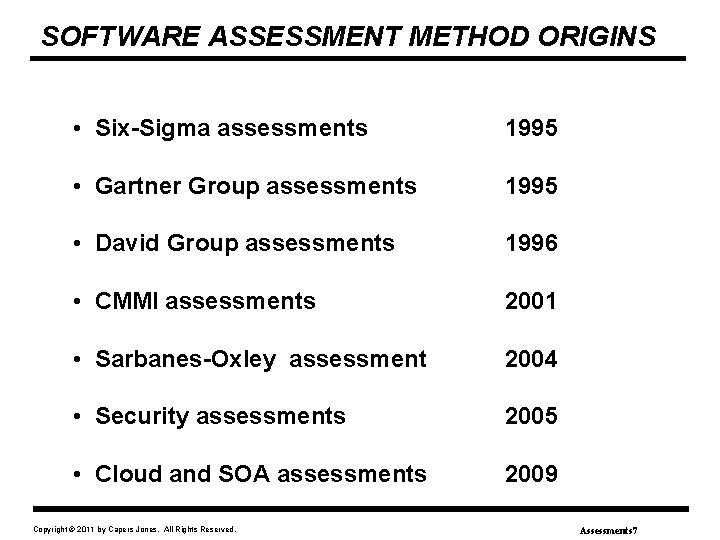

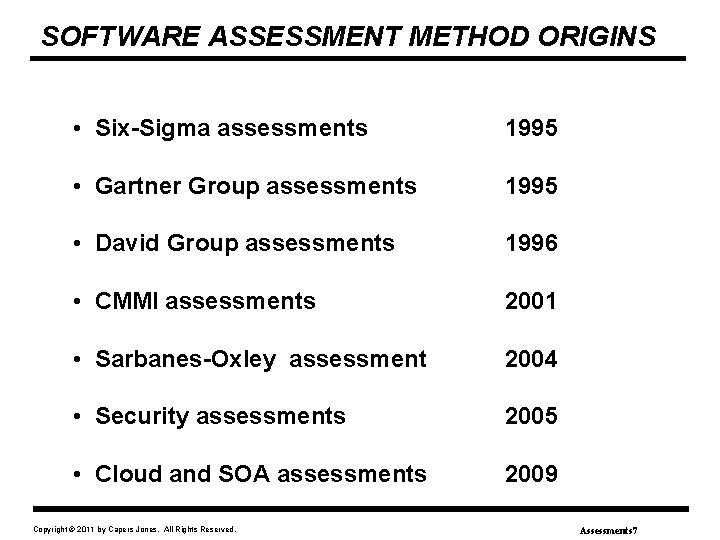

SOFTWARE ASSESSMENT METHOD ORIGINS (cont.)

- Six Sigma assessments: 1995
- Gartner Group assessments: 1995
- David Group assessments: 1996
- CMMI assessments: 2001
- Sarbanes-Oxley assessments: 2004
- Security assessments: 2005
- Cloud and SOA assessments: 2009

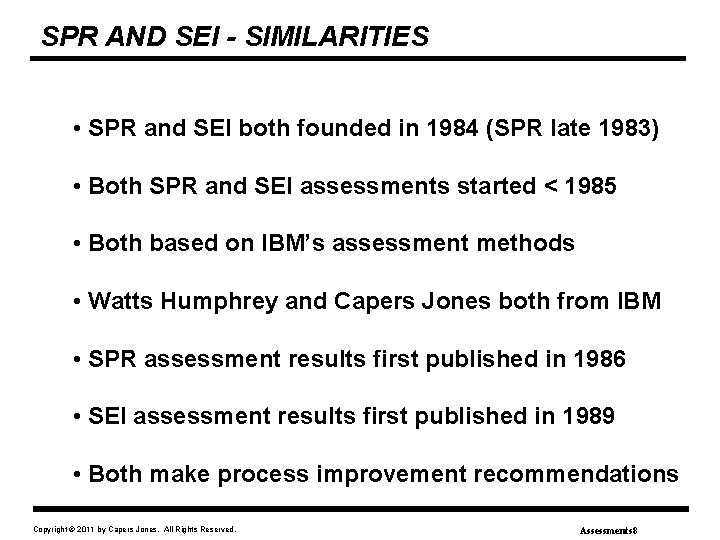

SPR AND SEI: SIMILARITIES

- SPR and SEI were both founded in 1984 (SPR in late 1983)
- Both SPR and SEI assessments started before 1985
- Both are based on IBM's assessment methods
- Watts Humphrey and Capers Jones both came from IBM
- SPR assessment results were first published in 1986
- SEI assessment results were first published in 1989
- Both make process improvement recommendations

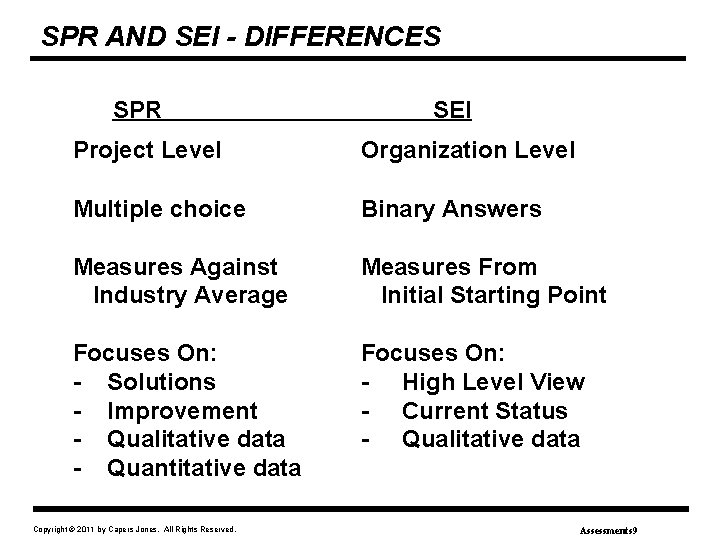

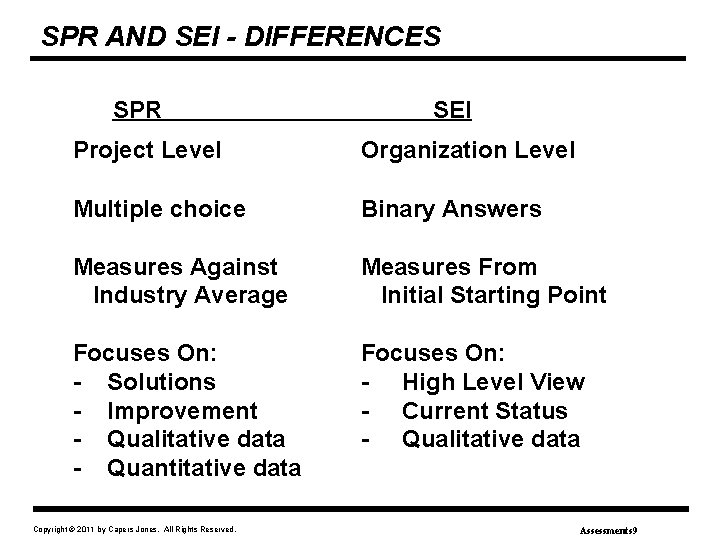

SPR AND SEI: DIFFERENCES

| SPR | SEI |
|---|---|
| Project level | Organization level |
| Multiple-choice answers | Binary answers |
| Measures against industry average | Measures from initial starting point |
| Focuses on: solutions, improvement, qualitative data, quantitative data | Focuses on: high-level view, current status, qualitative data |

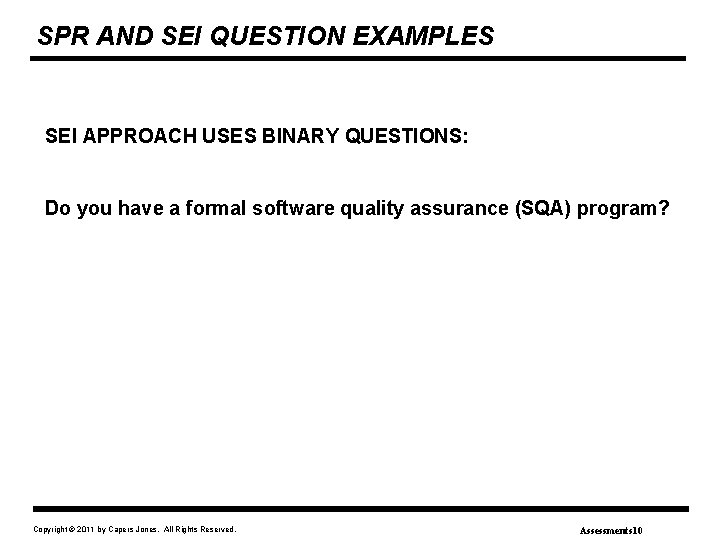

SPR AND SEI QUESTION EXAMPLES

The SEI approach uses binary (yes/no) questions:

Do you have a formal software quality assurance (SQA) program?

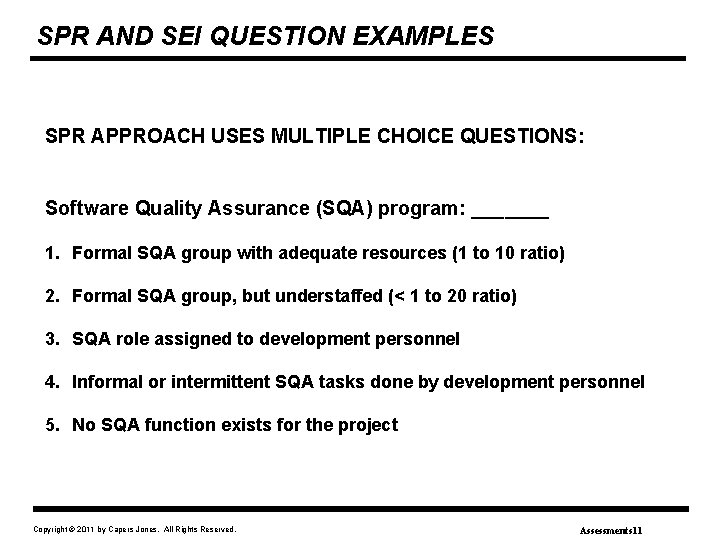

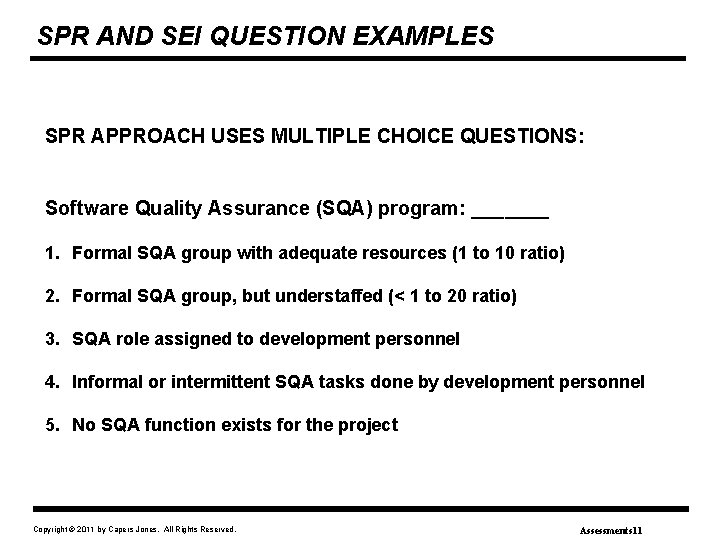

SPR AND SEI QUESTION EXAMPLES (cont.)

The SPR approach uses multiple-choice questions:

Software Quality Assurance (SQA) program: _______

1. Formal SQA group with adequate resources (1 to 10 ratio)
2. Formal SQA group, but understaffed (< 1 to 20 ratio)
3. SQA role assigned to development personnel
4. Informal or intermittent SQA tasks done by development personnel
5. No SQA function exists for the project

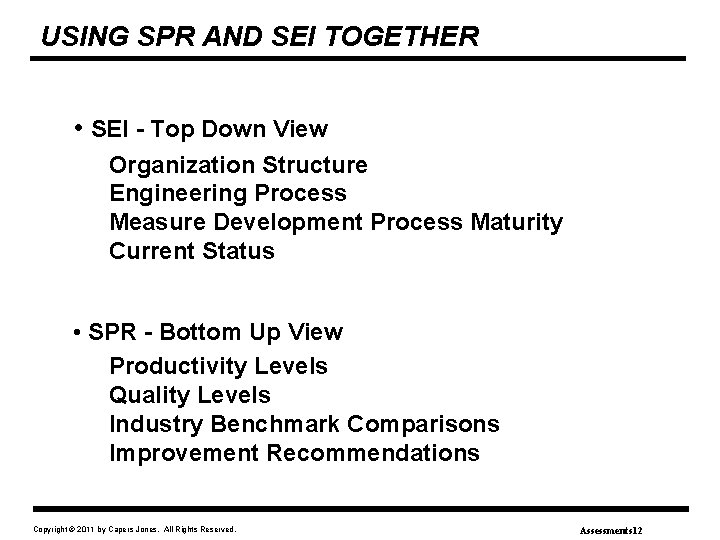

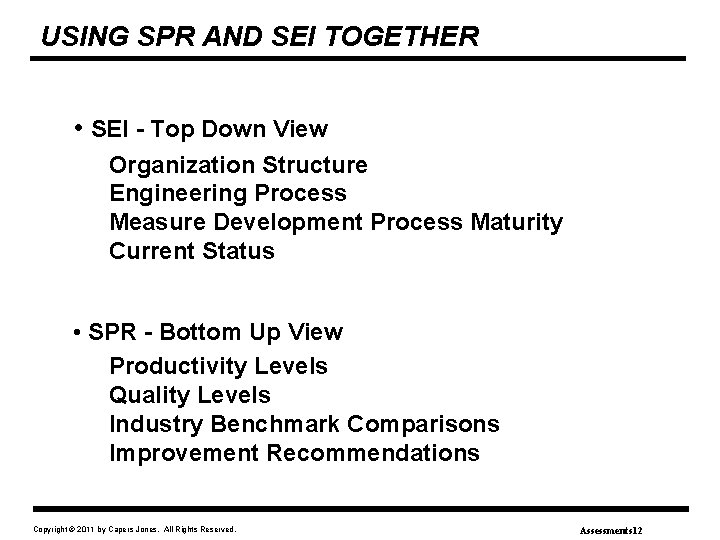

USING SPR AND SEI TOGETHER

- SEI: top-down view
  - Organization structure
  - Engineering process
  - Measure development process maturity
  - Current status
- SPR: bottom-up view
  - Productivity levels
  - Quality levels
  - Industry benchmark comparisons
  - Improvement recommendations

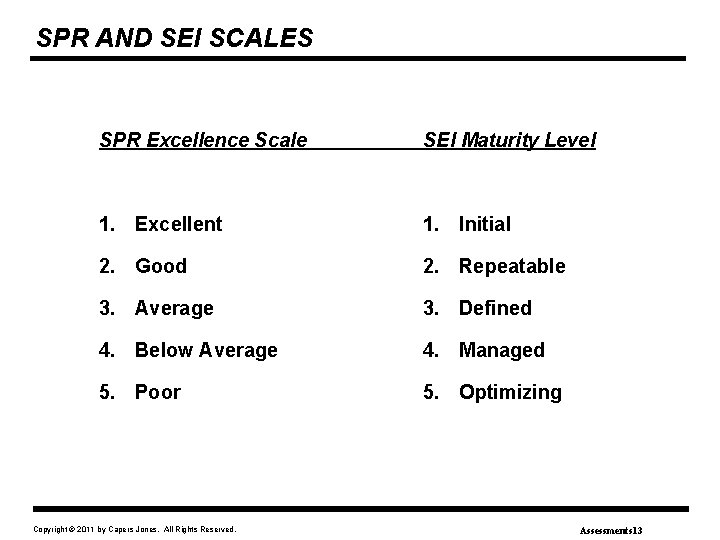

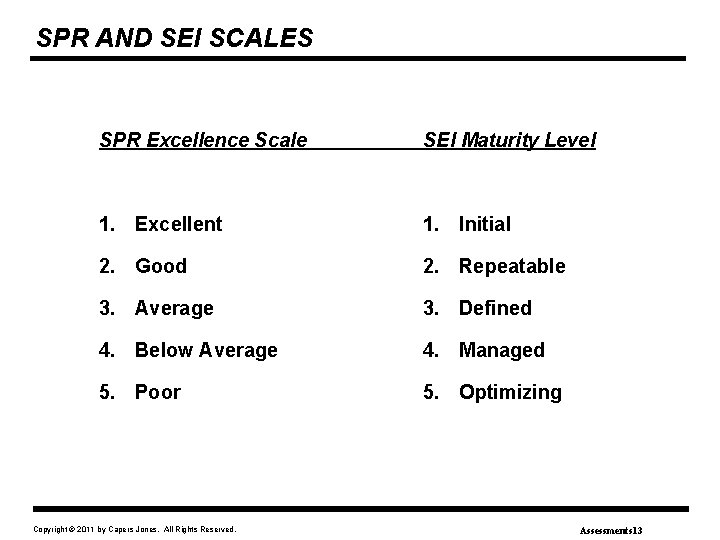

SPR AND SEI SCALES

| SPR excellence scale | SEI maturity level |
|---|---|
| 1. Excellent | 1. Initial |
| 2. Good | 2. Repeatable |
| 3. Average | 3. Defined |
| 4. Below average | 4. Managed |
| 5. Poor | 5. Optimizing |
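The two scales run in opposite directions: on the SPR scale 1 is the best rating, while on the SEI scale 5 is. A minimal Python sketch, assuming only the labels above, that re-expresses both on a common "higher is better" direction. The helper names are hypothetical, and the sketch aligns direction only; it is not a crosswalk between SPR and SEI ratings.

```python
# Hypothetical helpers: both scales run 1..5, but SPR 1 = best and SEI 5 = best.
# This only aligns direction; it is NOT an equivalence between SPR and SEI ratings.
SPR_SCALE = {1: "Excellent", 2: "Good", 3: "Average", 4: "Below average", 5: "Poor"}
SEI_SCALE = {1: "Initial", 2: "Repeatable", 3: "Defined", 4: "Managed", 5: "Optimizing"}


def spr_goodness(score: int) -> int:
    """Return an SPR score re-expressed so that higher means better (1..5)."""
    if score not in SPR_SCALE:
        raise ValueError(f"SPR score must be 1-5, got {score}")
    return 6 - score  # invert: 1 (Excellent) -> 5, 5 (Poor) -> 1


def sei_goodness(level: int) -> int:
    """SEI levels already increase with maturity; just validate the input."""
    if level not in SEI_SCALE:
        raise ValueError(f"SEI level must be 1-5, got {level}")
    return level


print(spr_goodness(1), SPR_SCALE[1])
print(sei_goodness(5), SEI_SCALE[5])
```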

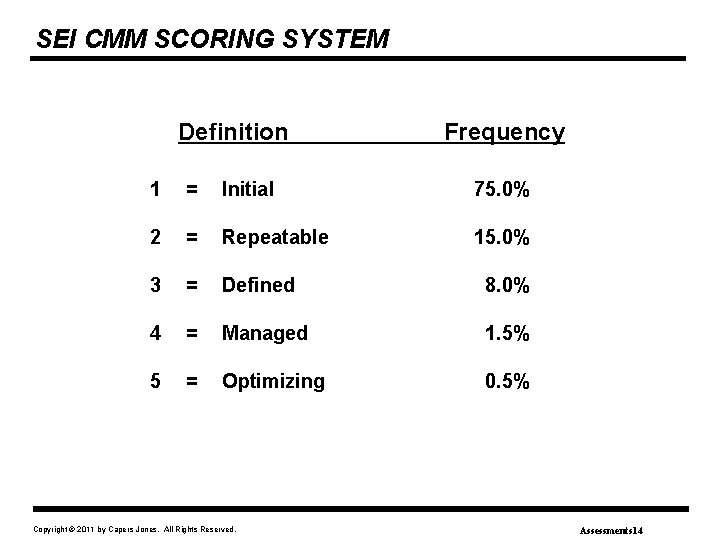

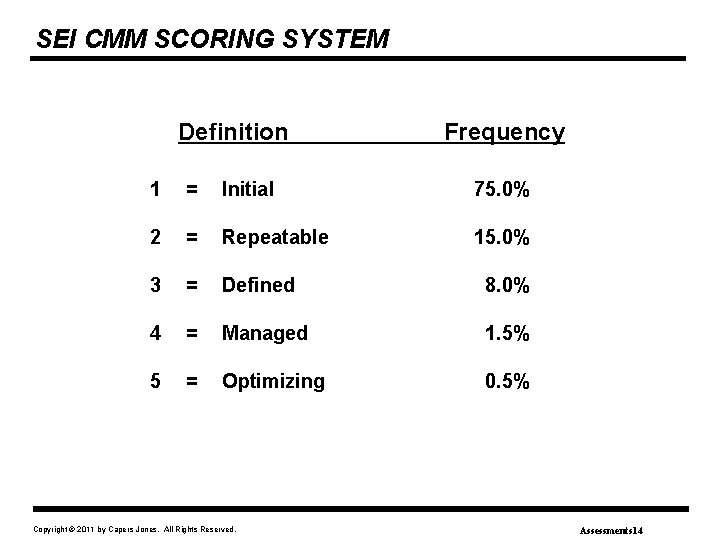

SEI CMM SCORING SYSTEM

| Definition | Frequency |
|---|---|
| 1 = Initial | 75.0% |
| 2 = Repeatable | 15.0% |
| 3 = Defined | 8.0% |
| 4 = Managed | 1.5% |
| 5 = Optimizing | 0.5% |
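Read cumulatively, these frequencies show how few organizations had moved past Level 1: 25% were at Level 2 or above and 10% at Level 3 or above. The short Python sketch below reproduces that arithmetic from the table; the function name is illustrative only.

```python
# SEI CMM level frequencies from the table (percent of assessed organizations).
SEI_FREQUENCY = {
    1: 75.0,  # Initial
    2: 15.0,  # Repeatable
    3: 8.0,   # Defined
    4: 1.5,   # Managed
    5: 0.5,   # Optimizing
}


def share_at_or_above(level: int) -> float:
    """Percent of assessed organizations rated at `level` or higher."""
    return sum(pct for lvl, pct in SEI_FREQUENCY.items() if lvl >= level)


# The distribution should account for every organization.
assert abs(sum(SEI_FREQUENCY.values()) - 100.0) < 1e-9

for level in range(1, 6):
    print(f"Level {level} or above: {share_at_or_above(level):.1f}%")
```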

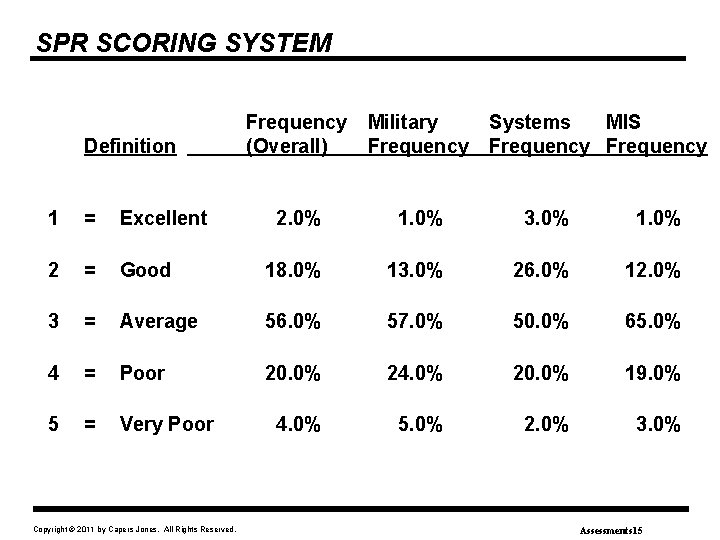

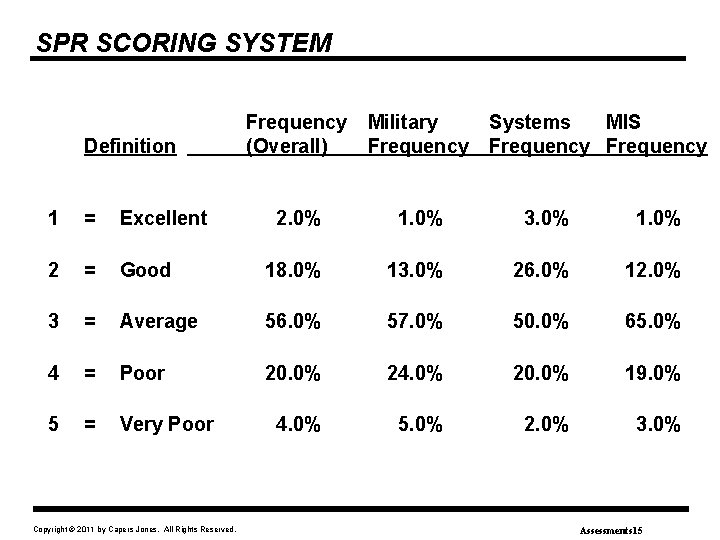

SPR SCORING SYSTEM

| Definition | Frequency (overall) | Military frequency | Systems frequency | MIS frequency |
|---|---|---|---|---|
| 1 = Excellent | 2.0% | 1.0% | 3.0% | |
| 2 = Good | 18.0% | 13.0% | 26.0% | 12.0% |
| 3 = Average | 56.0% | 57.0% | 50.0% | 65.0% |
| 4 = Poor | 20.0% | 24.0% | 20.0% | 19.0% |
| 5 = Very poor | 4.0% | 5.0% | 2.0% | 3.0% |
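The overall column can be summarized as a frequency-weighted mean score. A minimal Python sketch, assuming the Excellent value of 2.0% belongs to the overall column (it is the value that makes that column total 100%). With these figures the mean is 3.06, just on the "poor" side of 3 = Average, and only 20% of results are better than average. Function names are illustrative.

```python
# Overall SPR frequency column (percent of results per score, 1 = Excellent).
SPR_OVERALL = {1: 2.0, 2: 18.0, 3: 56.0, 4: 20.0, 5: 4.0}


def mean_score(freq: dict[int, float]) -> float:
    """Frequency-weighted mean score on the 1 (best) .. 5 (worst) scale."""
    total = sum(freq.values())
    return sum(score * pct for score, pct in freq.items()) / total


def share_better_than_average(freq: dict[int, float]) -> float:
    """Percent of results scoring 1 or 2, i.e. better than Average."""
    return freq[1] + freq[2]


print(f"Mean SPR score: {mean_score(SPR_OVERALL):.2f}")
print(f"Better than average: {share_better_than_average(SPR_OVERALL):.1f}%")
```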

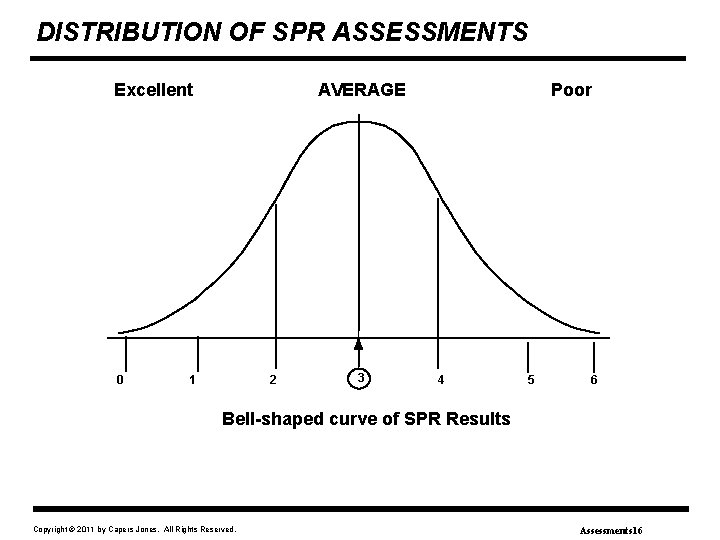

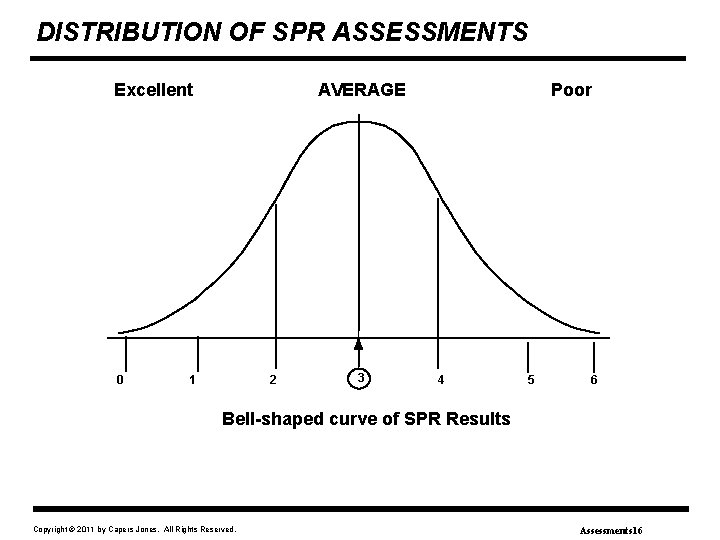

DISTRIBUTION OF SPR ASSESSMENTS

[Figure: bell-shaped curve of SPR results, on a scale running from Excellent through Average to Poor]

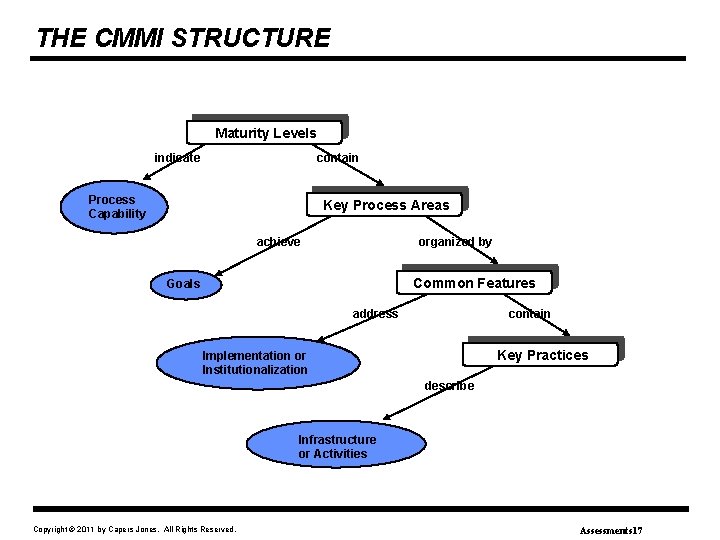

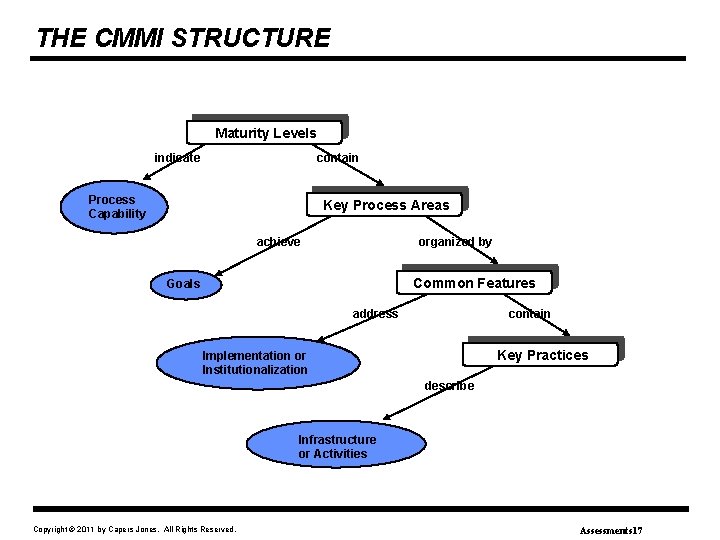

THE CMMI STRUCTURE

- Maturity levels indicate process capability and contain key process areas
- Key process areas achieve goals and are organized by common features
- Common features address implementation or institutionalization and contain key practices
- Key practices describe infrastructure or activities

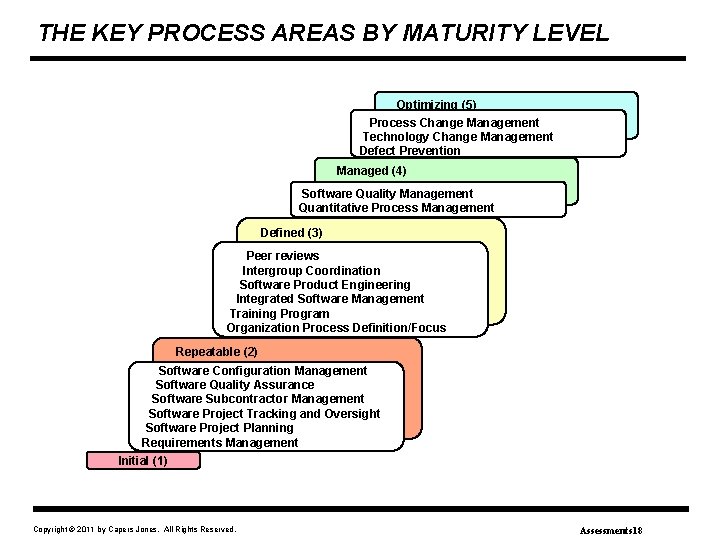

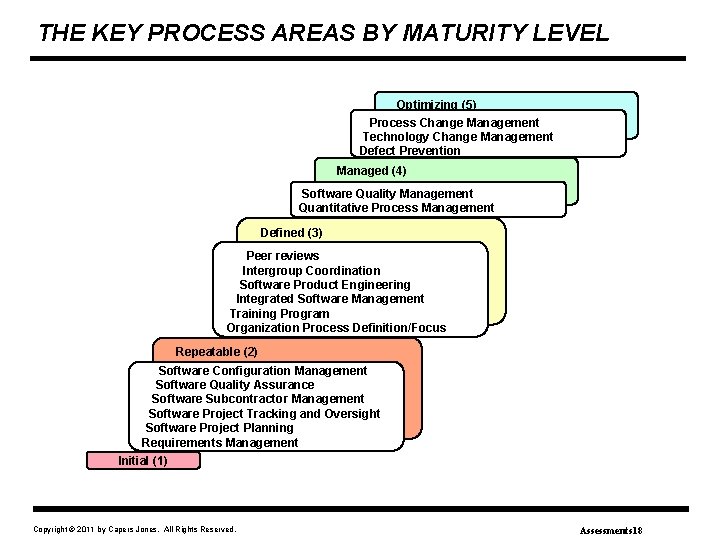

THE KEY PROCESS AREAS BY MATURITY LEVEL

- Optimizing (5): Process Change Management; Technology Change Management; Defect Prevention
- Managed (4): Software Quality Management; Quantitative Process Management
- Defined (3): Peer Reviews; Intergroup Coordination; Software Product Engineering; Integrated Software Management; Training Program; Organization Process Definition/Focus
- Repeatable (2): Software Configuration Management; Software Quality Assurance; Software Subcontractor Management; Software Project Tracking and Oversight; Software Project Planning; Requirements Management
- Initial (1): no key process areas
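In the staged model, a maturity level is reached only when the key process areas of that level and of every level below it are satisfied. The Python sketch below encodes the KPA list that way. The rating rule is a simplification of a real appraisal, the helper names are hypothetical, and "Organization Process Definition/Focus" is kept as a single entry exactly as the slide groups it.

```python
# KPAs per maturity level, as listed on the slide (Level 1 has none).
KPAS_BY_LEVEL: dict[int, list[str]] = {
    1: [],
    2: [
        "Requirements Management",
        "Software Project Planning",
        "Software Project Tracking and Oversight",
        "Software Subcontractor Management",
        "Software Quality Assurance",
        "Software Configuration Management",
    ],
    3: [
        "Organization Process Definition/Focus",
        "Training Program",
        "Integrated Software Management",
        "Software Product Engineering",
        "Intergroup Coordination",
        "Peer Reviews",
    ],
    4: ["Quantitative Process Management", "Software Quality Management"],
    5: ["Defect Prevention", "Technology Change Management", "Process Change Management"],
}


def kpas_required(level: int) -> list[str]:
    """All KPAs needed for `level`: that level's KPAs plus every lower level's."""
    if level not in KPAS_BY_LEVEL:
        raise ValueError("level must be 1-5")
    return [kpa for lvl in range(1, level + 1) for kpa in KPAS_BY_LEVEL[lvl]]


def achieved_level(satisfied: set[str]) -> int:
    """Highest level whose KPAs, and all lower levels' KPAs, are satisfied."""
    level = 1
    for lvl in range(2, 6):
        if all(kpa in satisfied for kpa in KPAS_BY_LEVEL[lvl]):
            level = lvl
        else:
            break  # a gap at this level caps the rating, whatever lies above it
    return level


print(len(kpas_required(3)))
print(achieved_level(set(kpas_required(2))))
```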

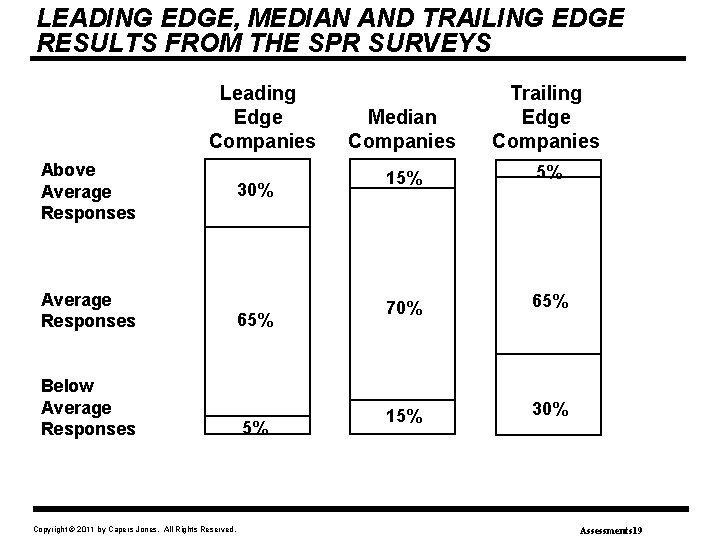

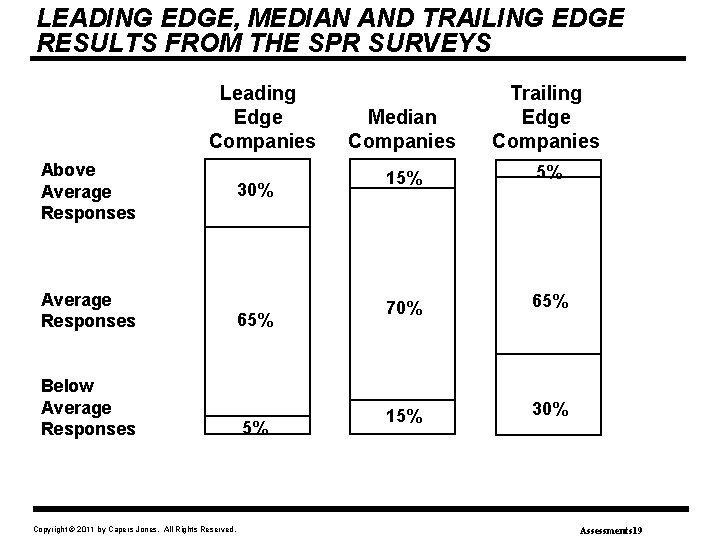

LEADING EDGE, MEDIAN AND TRAILING EDGE RESULTS FROM THE SPR SURVEYS

| | Above average responses | Average responses | Below average responses |
|---|---|---|---|
| Leading edge companies | 30% | 65% | 5% |
| Median companies | 15% | 70% | 15% |
| Trailing edge companies | 5% | 65% | 30% |

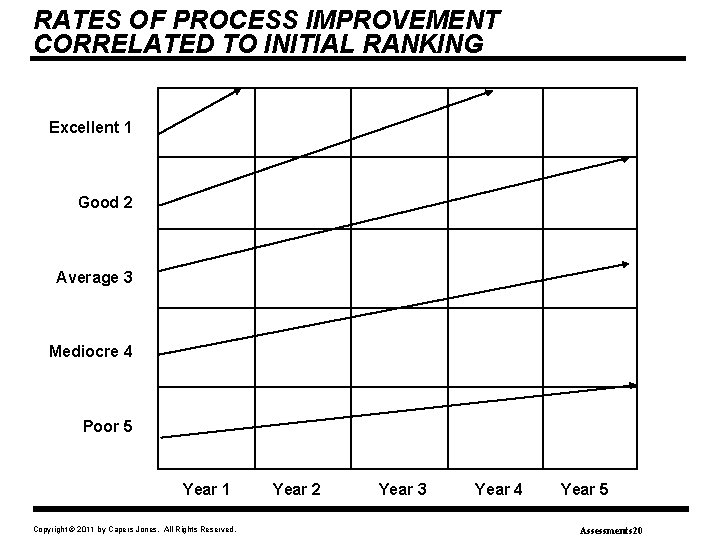

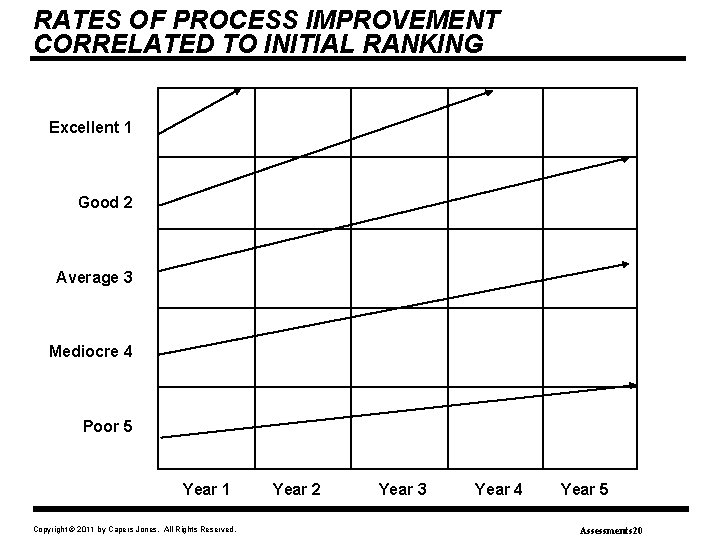

RATES OF PROCESS IMPROVEMENT CORRELATED TO INITIAL RANKING

[Figure: initial ranking (1 = Excellent, 2 = Good, 3 = Average, 4 = Mediocre, 5 = Poor) plotted over Years 1 to 5]

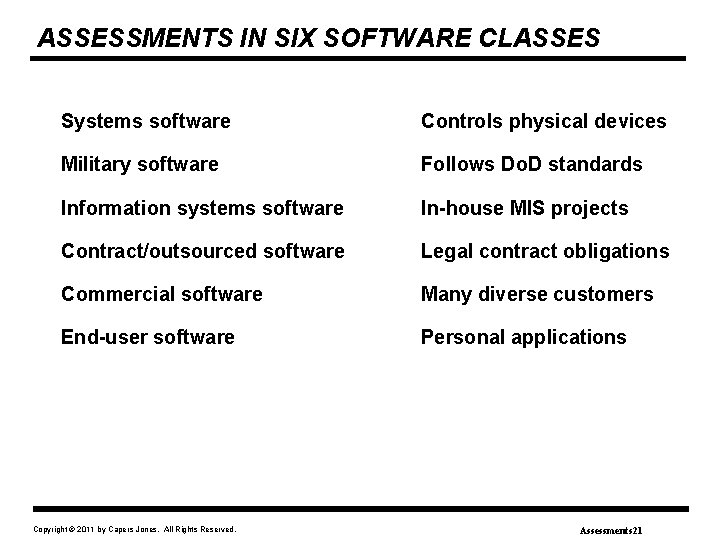

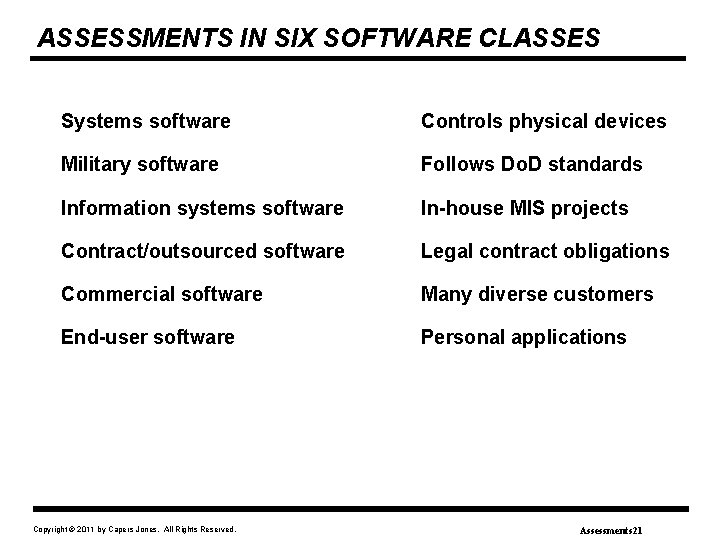

ASSESSMENTS IN SIX SOFTWARE CLASSES

- Systems software: controls physical devices
- Military software: follows DoD standards
- Information systems software: in-house MIS projects
- Contract/outsourced software: legal contract obligations
- Commercial software: many diverse customers
- End-user software: personal applications

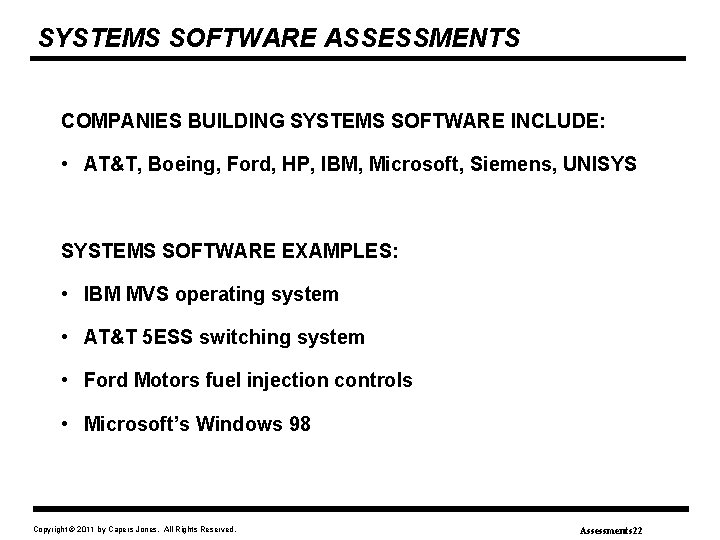

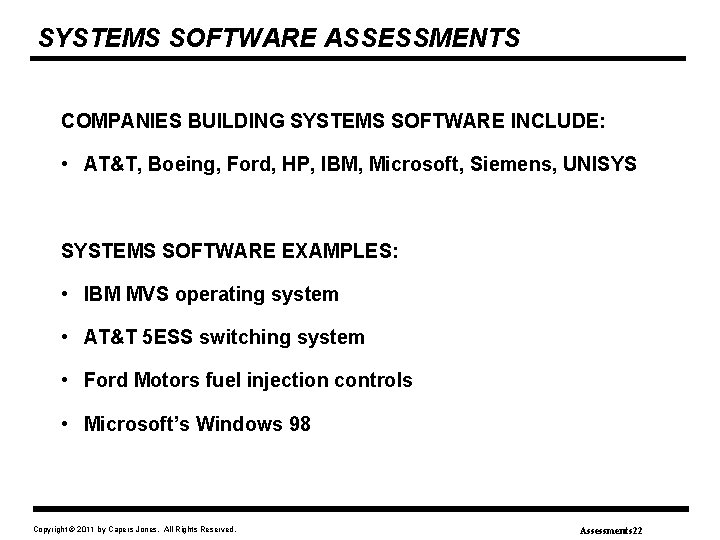

SYSTEMS SOFTWARE ASSESSMENTS

Companies building systems software include:

- AT&T, Boeing, Ford, HP, IBM, Microsoft, Siemens, UNISYS

Systems software examples:

- IBM MVS operating system
- AT&T 5ESS switching system
- Ford Motors fuel injection controls
- Microsoft’s Windows 98

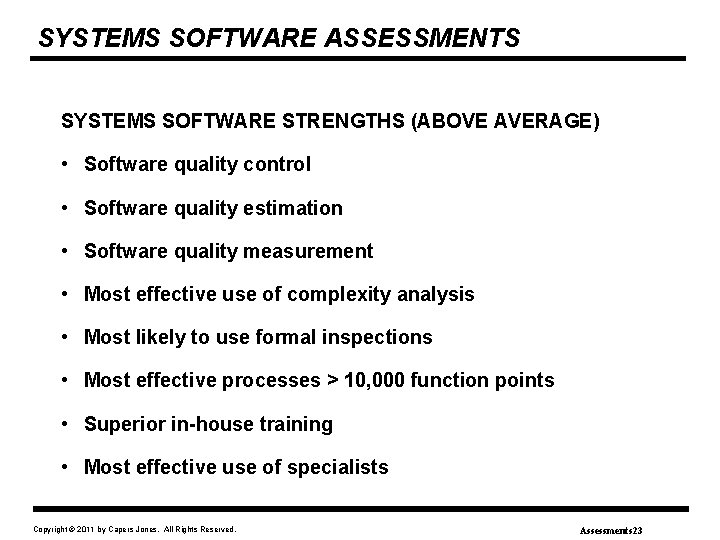

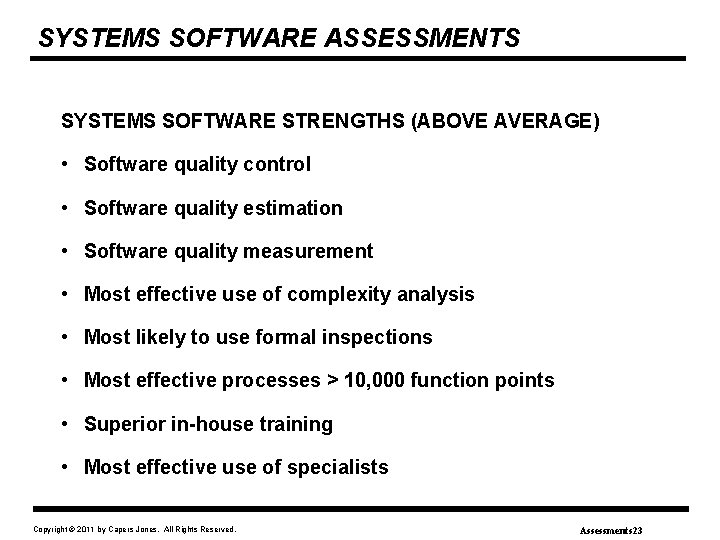

SYSTEMS SOFTWARE ASSESSMENTS

Systems software strengths (above average):

- Software quality control
- Software quality estimation
- Software quality measurement
- Most effective use of complexity analysis
- Most likely to use formal inspections
- Most effective processes above 10,000 function points
- Superior in-house training
- Most effective use of specialists

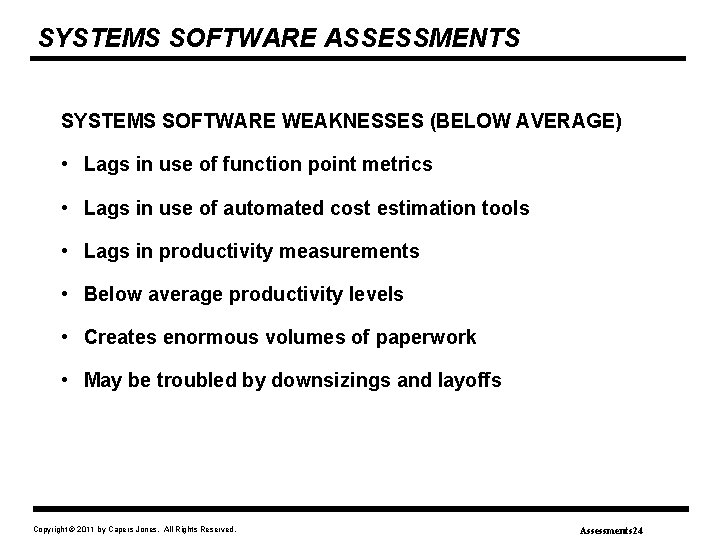

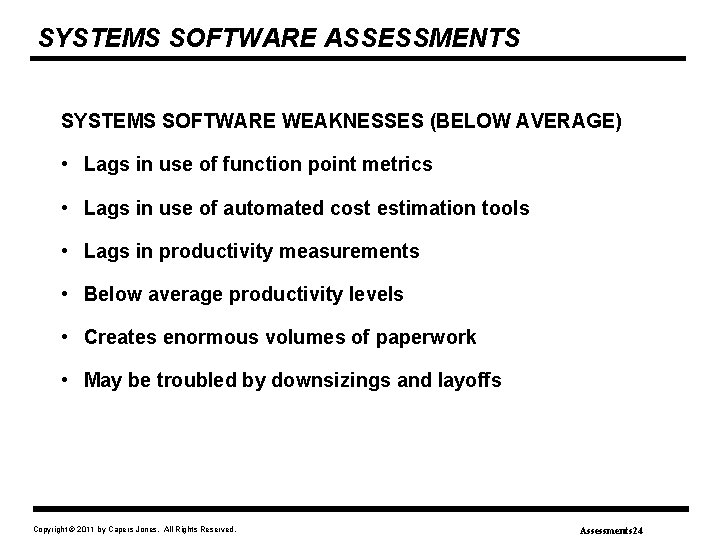

SYSTEMS SOFTWARE ASSESSMENTS

Systems software weaknesses (below average):

- Lags in use of function point metrics
- Lags in use of automated cost estimation tools
- Lags in productivity measurements
- Below average productivity levels
- Creates enormous volumes of paperwork
- May be troubled by downsizings and layoffs

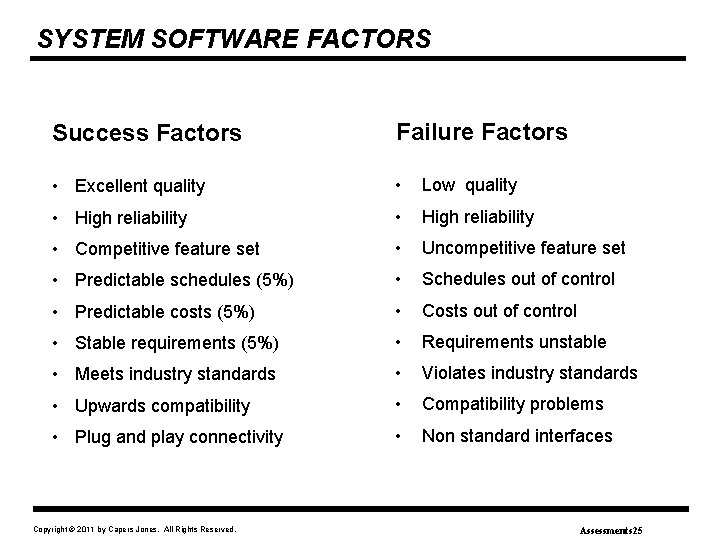

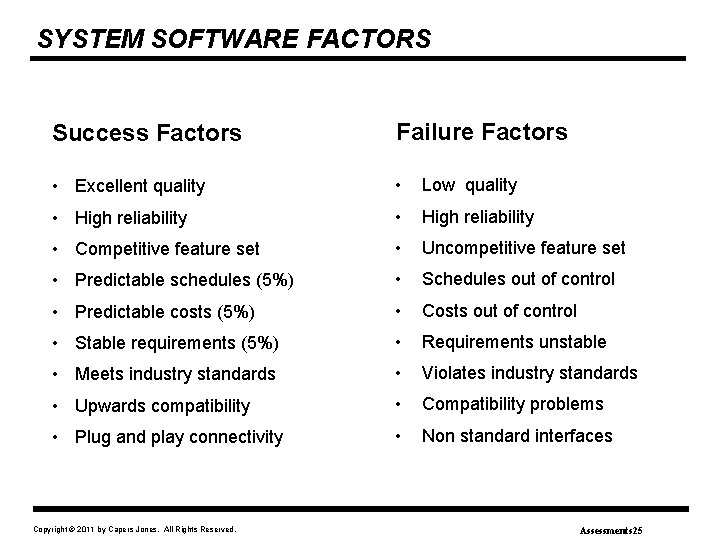

SYSTEMS SOFTWARE FACTORS

| Success factors | Failure factors |
|---|---|
| Excellent quality | Low quality |
| High reliability | |
| Competitive feature set | Uncompetitive feature set |
| Predictable schedules (5%) | Schedules out of control |
| Predictable costs (5%) | Costs out of control |
| Stable requirements (5%) | Requirements unstable |
| Meets industry standards | Violates industry standards |
| Upwards compatibility | Compatibility problems |
| Plug and play connectivity | Nonstandard interfaces |

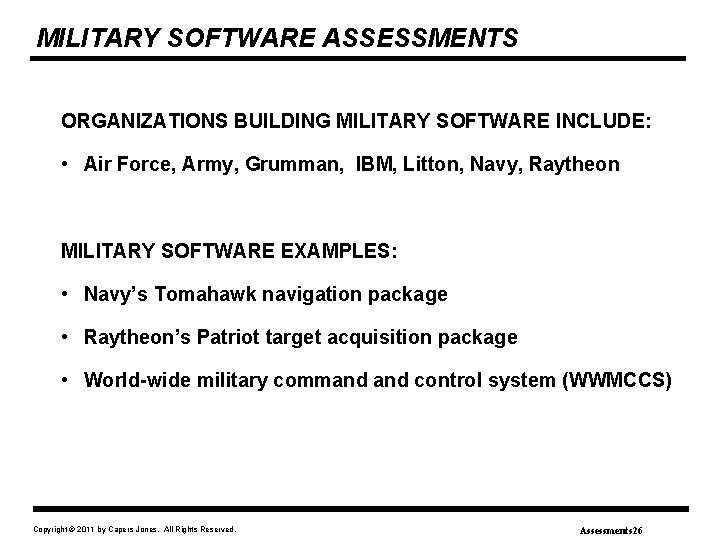

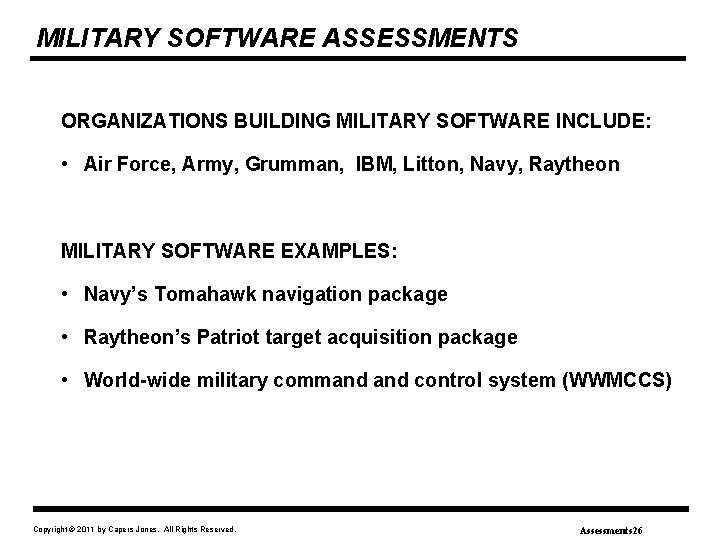

MILITARY SOFTWARE ASSESSMENTS

Organizations building military software include:

- Air Force, Army, Grumman, IBM, Litton, Navy, Raytheon

Military software examples:

- Navy’s Tomahawk navigation package
- Raytheon’s Patriot target acquisition package
- Worldwide Military Command and Control System (WWMCCS)

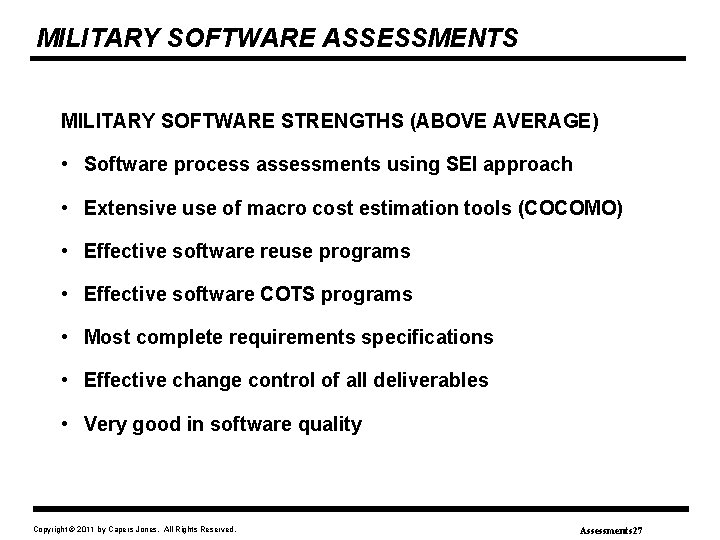

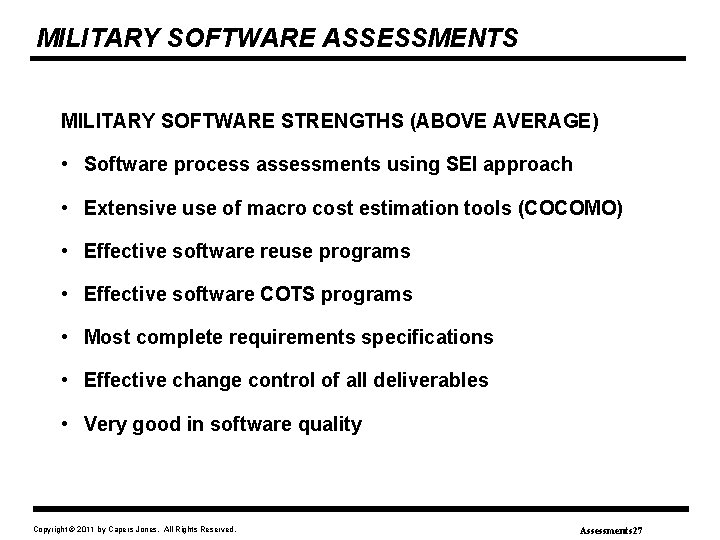

MILITARY SOFTWARE ASSESSMENTS

Military software strengths (above average):

- Software process assessments using the SEI approach
- Extensive use of macro cost estimation tools (COCOMO)
- Effective software reuse programs
- Effective software COTS programs
- Most complete requirements specifications
- Effective change control of all deliverables
- Very good software quality

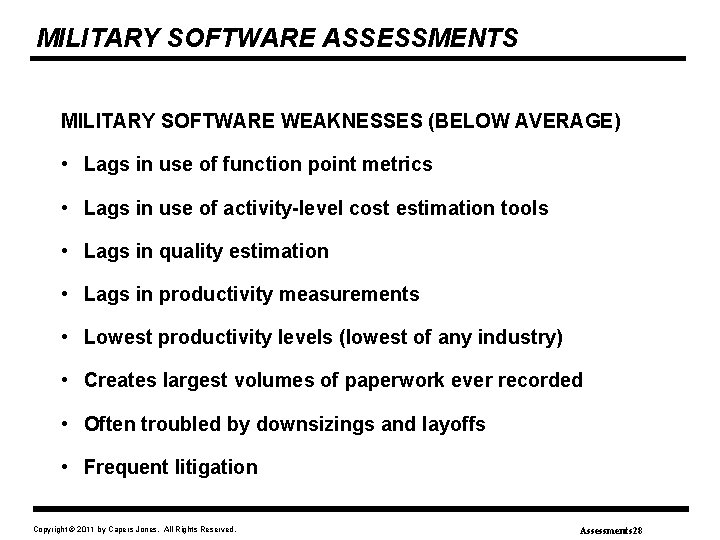

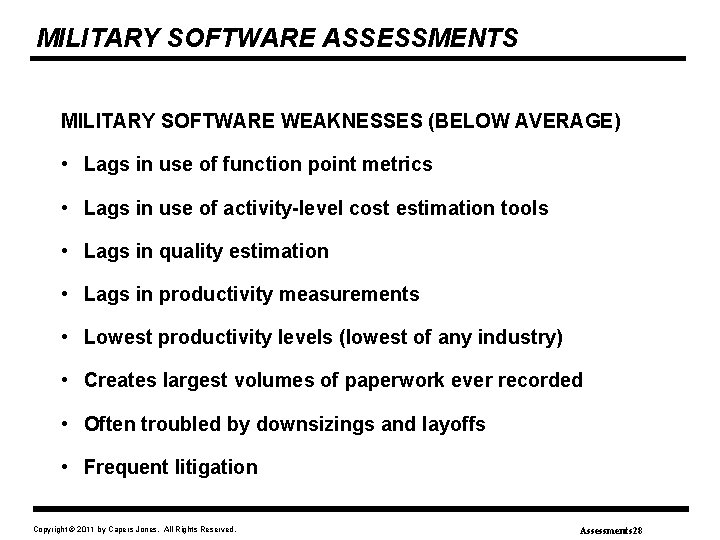

MILITARY SOFTWARE ASSESSMENTS

Military software weaknesses (below average):

- Lags in use of function point metrics
- Lags in use of activity-level cost estimation tools
- Lags in quality estimation
- Lags in productivity measurements
- Lowest productivity levels (lowest of any industry)
- Creates largest volumes of paperwork ever recorded
- Often troubled by downsizings and layoffs
- Frequent litigation

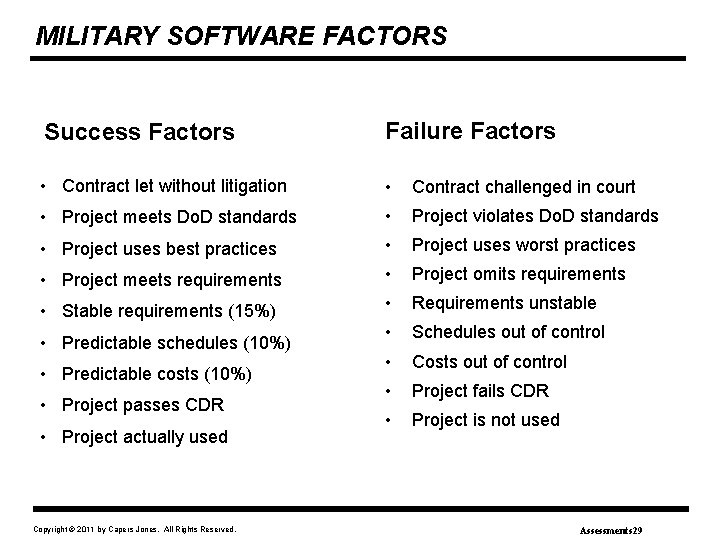

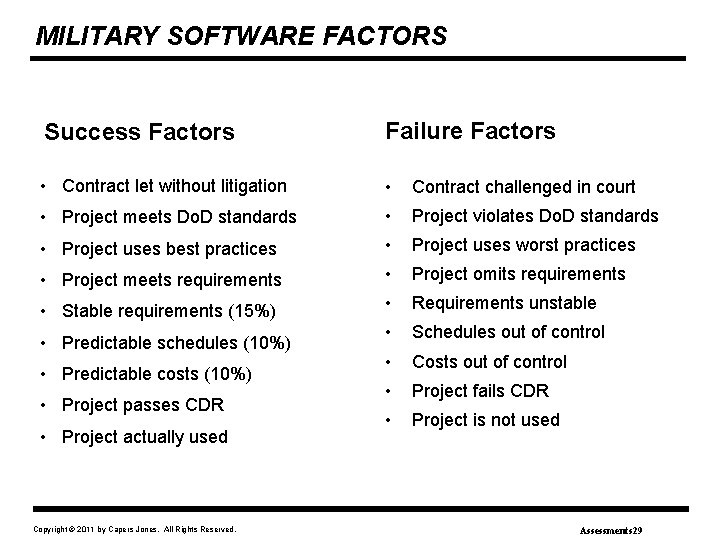

MILITARY SOFTWARE FACTORS

| Success factors | Failure factors |
|---|---|
| Contract let without litigation | Contract challenged in court |
| Project meets DoD standards | Project violates DoD standards |
| Project uses best practices | Project uses worst practices |
| Project meets requirements | Project omits requirements |
| Stable requirements (15%) | Requirements unstable |
| Predictable schedules (10%) | Schedules out of control |
| Predictable costs (10%) | Costs out of control |
| Project passes CDR | Project fails CDR |
| Project actually used | Project is not used |

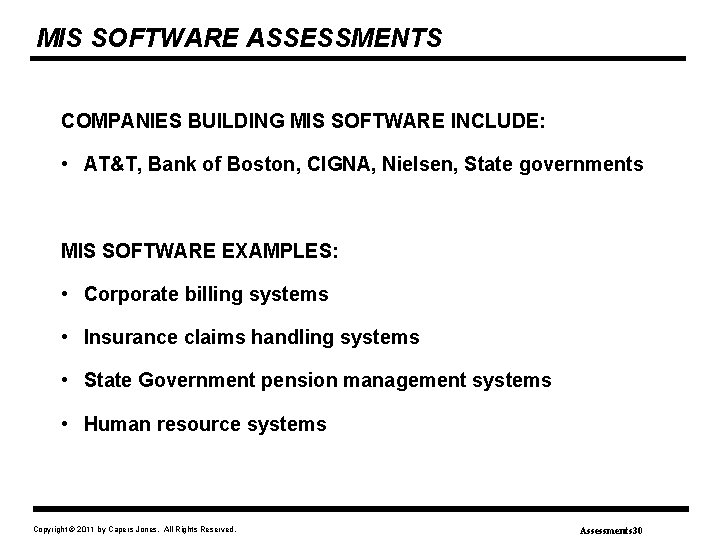

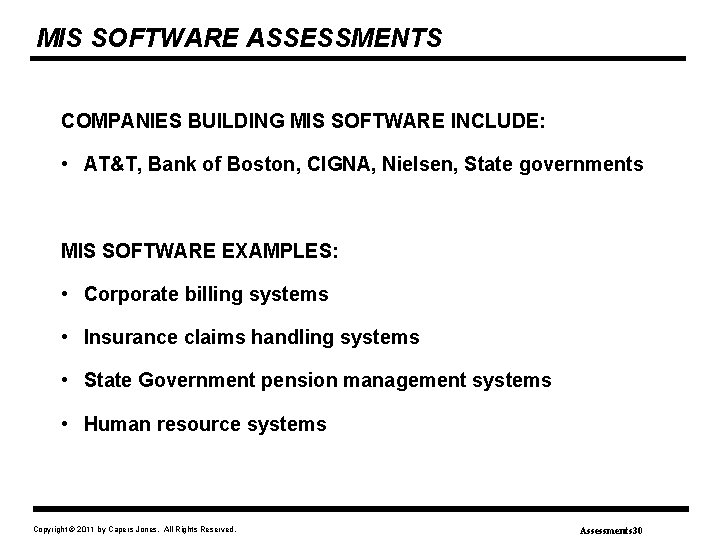

MIS SOFTWARE ASSESSMENTS

Companies building MIS software include:

- AT&T, Bank of Boston, CIGNA, Nielsen, state governments

MIS software examples:

- Corporate billing systems
- Insurance claims handling systems
- State government pension management systems
- Human resource systems

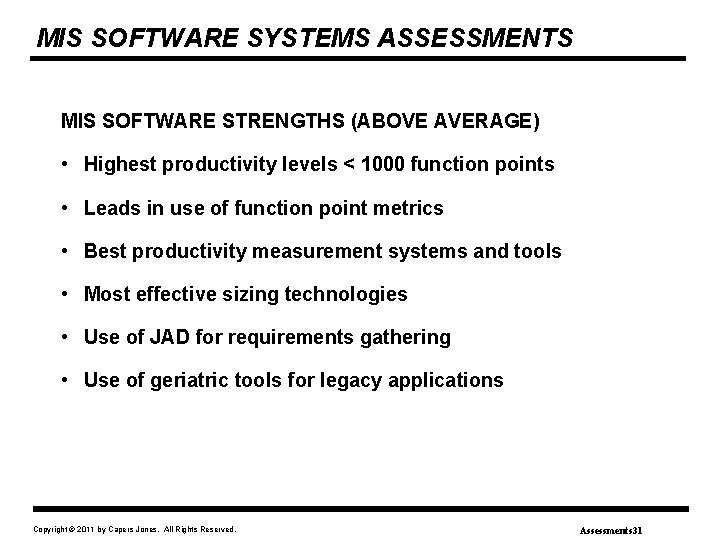

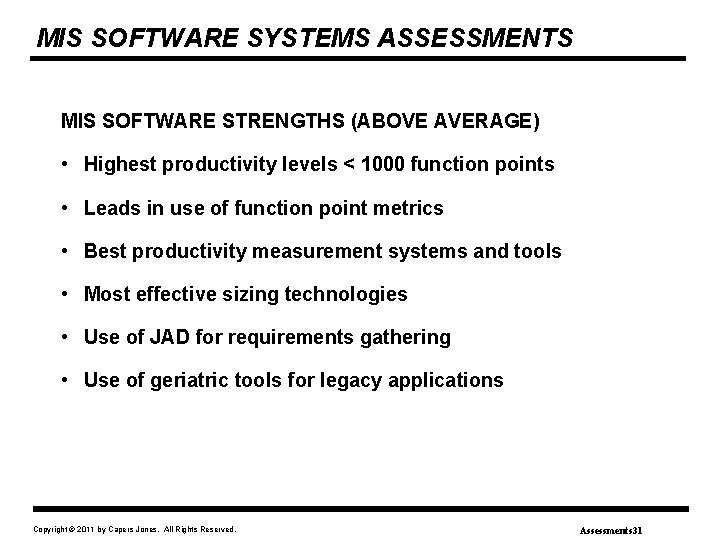

MIS SOFTWARE ASSESSMENTS

MIS software strengths (above average):

- Highest productivity levels below 1,000 function points
- Leads in use of function point metrics
- Best productivity measurement systems and tools
- Most effective sizing technologies
- Use of JAD for requirements gathering
- Use of geriatric tools for legacy applications

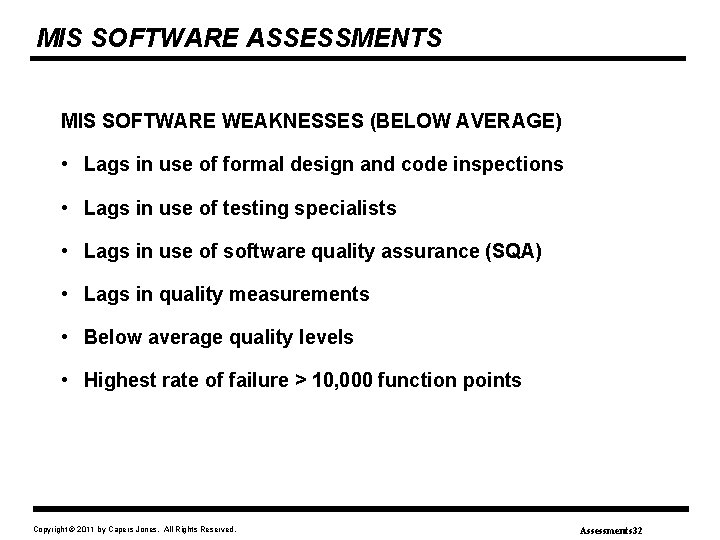

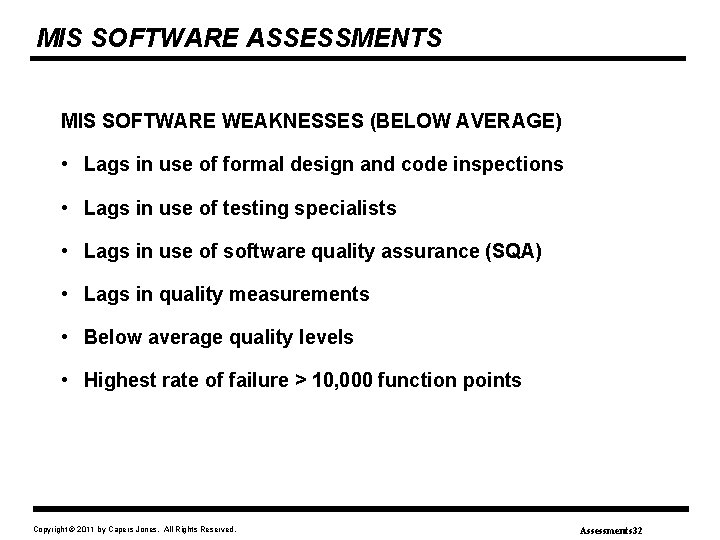

MIS SOFTWARE ASSESSMENTS

MIS software weaknesses (below average):

- Lags in use of formal design and code inspections
- Lags in use of testing specialists
- Lags in use of software quality assurance (SQA)
- Lags in quality measurements
- Below average quality levels
- Highest rate of failure above 10,000 function points

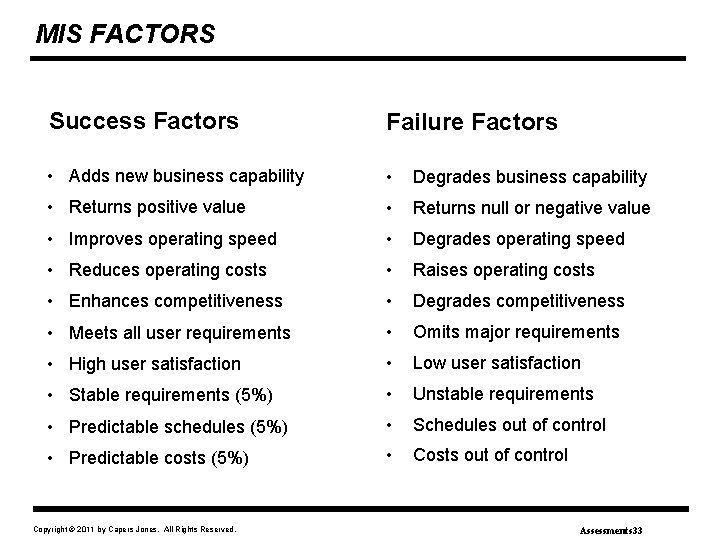

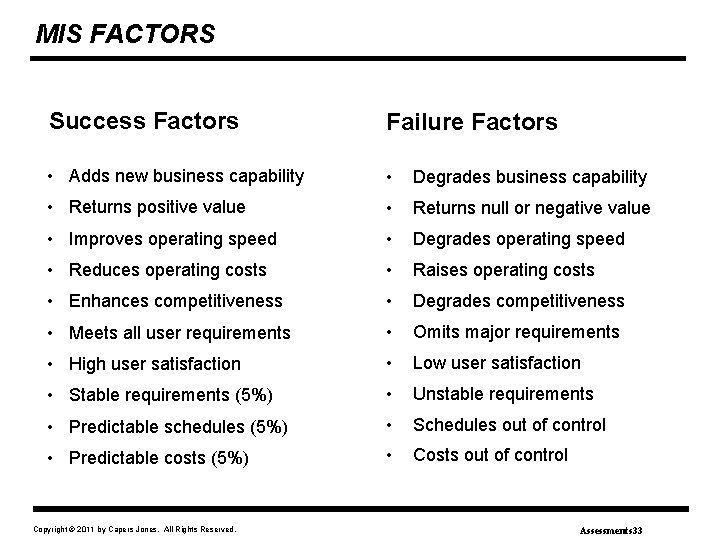

MIS FACTORS

| Success factors | Failure factors |
|---|---|
| Adds new business capability | Degrades business capability |
| Returns positive value | Returns null or negative value |
| Improves operating speed | Degrades operating speed |
| Reduces operating costs | Raises operating costs |
| Enhances competitiveness | Degrades competitiveness |
| Meets all user requirements | Omits major requirements |
| High user satisfaction | Low user satisfaction |
| Stable requirements (5%) | Unstable requirements |
| Predictable schedules (5%) | Schedules out of control |
| Predictable costs (5%) | Costs out of control |

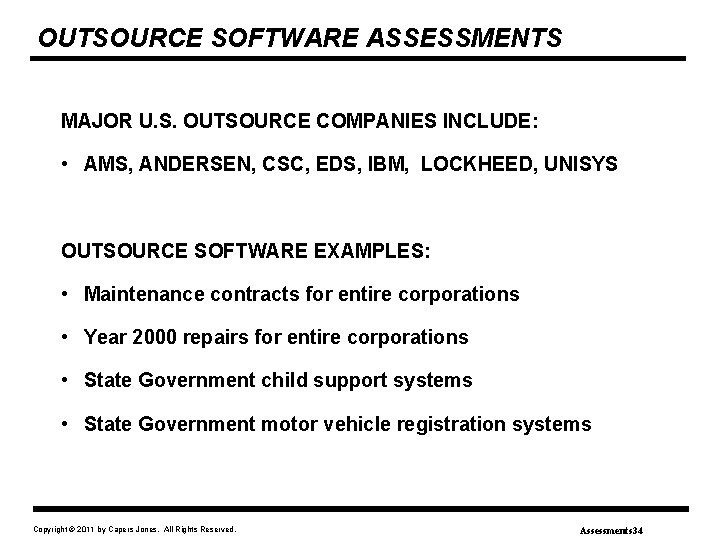

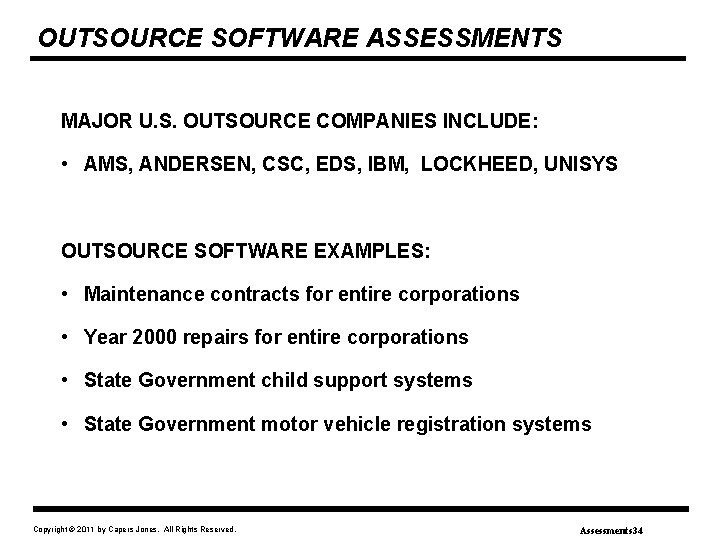

OUTSOURCE SOFTWARE ASSESSMENTS

Major U.S. outsource companies include:

- AMS, Andersen, CSC, EDS, IBM, Lockheed, UNISYS

Outsource software examples:

- Maintenance contracts for entire corporations
- Year 2000 repairs for entire corporations
- State government child support systems
- State government motor vehicle registration systems

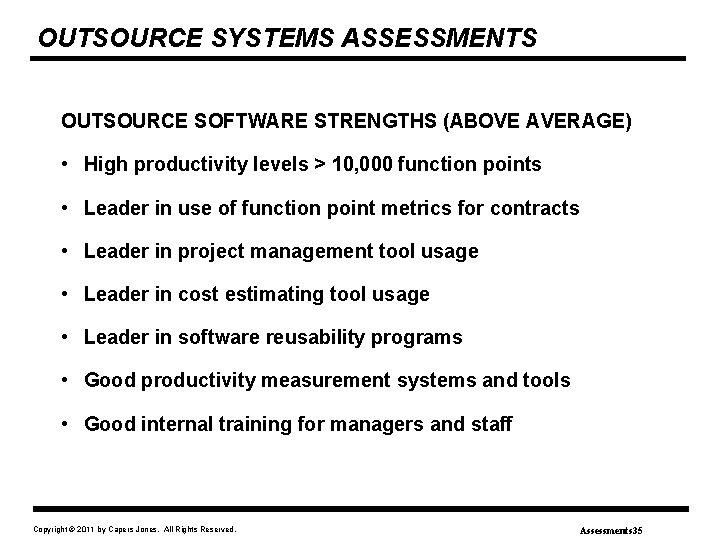

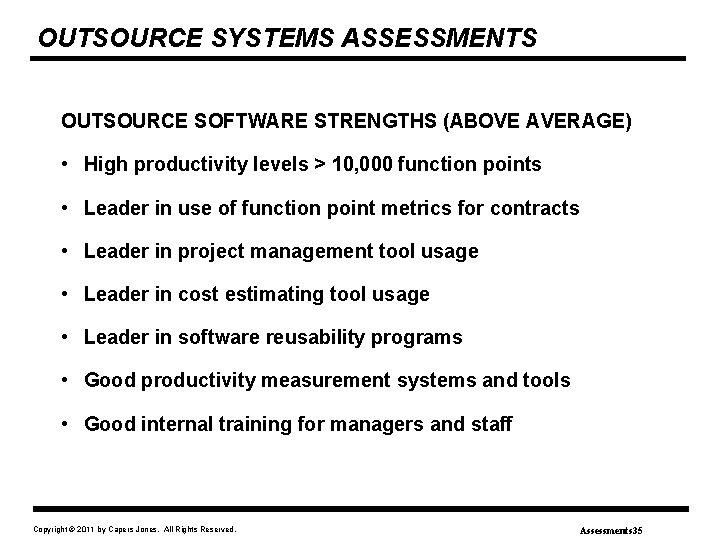

OUTSOURCE SOFTWARE ASSESSMENTS

Outsource software strengths (above average):

- High productivity levels above 10,000 function points
- Leader in use of function point metrics for contracts
- Leader in project management tool usage
- Leader in cost estimating tool usage
- Leader in software reusability programs
- Good productivity measurement systems and tools
- Good internal training for managers and staff

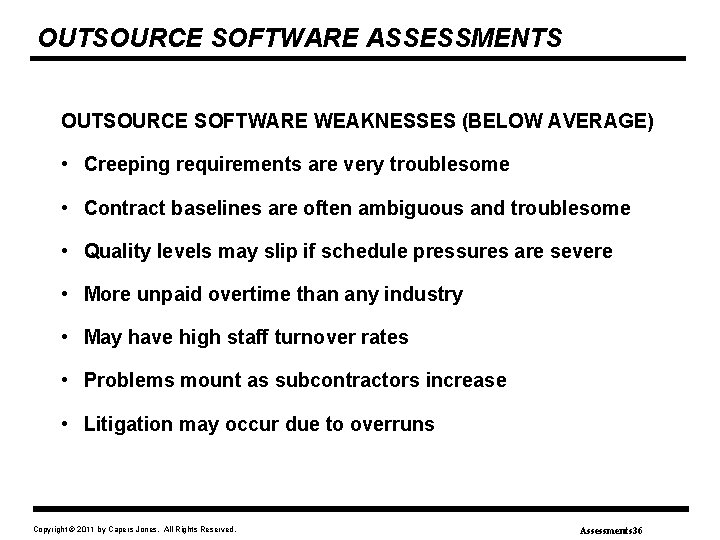

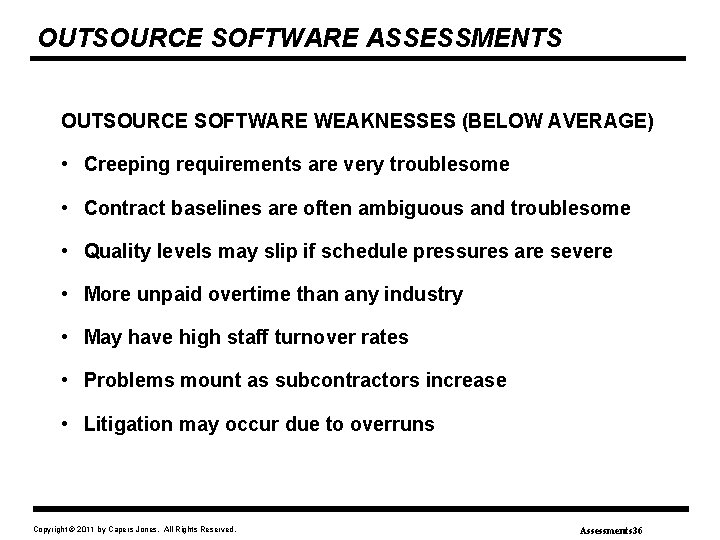

OUTSOURCE SOFTWARE ASSESSMENTS

Outsource software weaknesses (below average):

- Creeping requirements are very troublesome
- Contract baselines are often ambiguous and troublesome
- Quality levels may slip if schedule pressures are severe
- More unpaid overtime than any other industry
- May have high staff turnover rates
- Problems mount as subcontractors increase
- Litigation may occur due to overruns

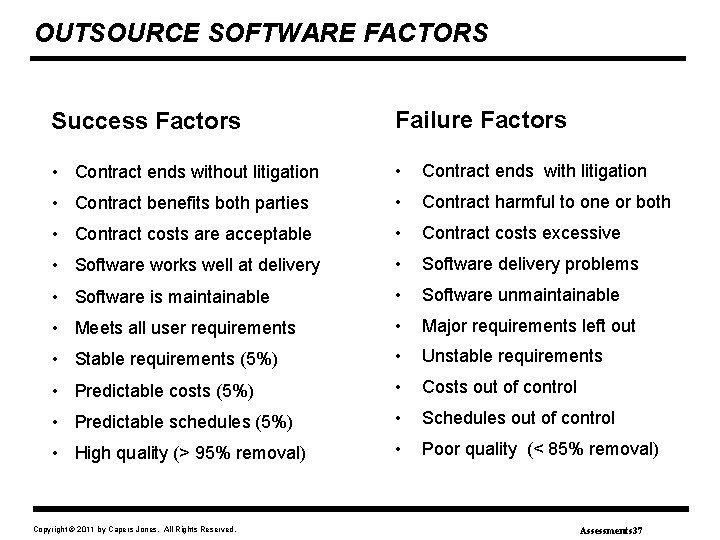

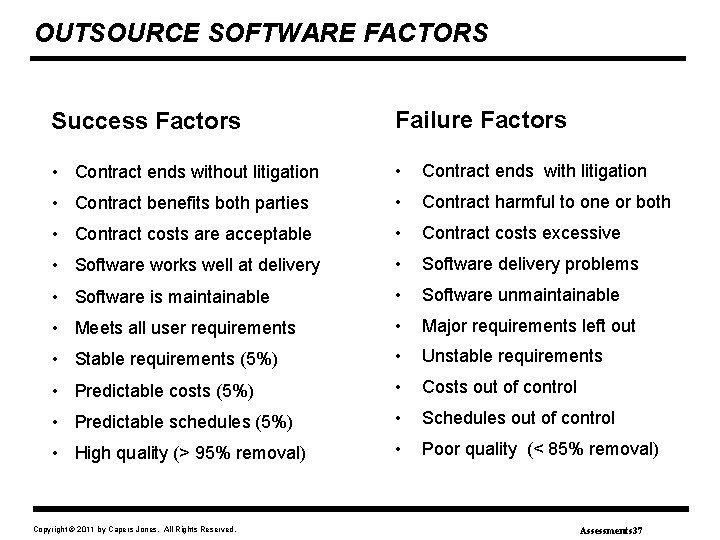

OUTSOURCE SOFTWARE FACTORS

| Success factors | Failure factors |
|---|---|
| Contract ends without litigation | Contract ends with litigation |
| Contract benefits both parties | Contract harmful to one or both |
| Contract costs are acceptable | Contract costs excessive |
| Software works well at delivery | Software delivery problems |
| Software is maintainable | Software unmaintainable |
| Meets all user requirements | Major requirements left out |
| Stable requirements (5%) | Unstable requirements |
| Predictable costs (5%) | Costs out of control |
| Predictable schedules (5%) | Schedules out of control |
| High quality (> 95% removal) | Poor quality (< 85% removal) |
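The quality thresholds above (> 95% and < 85% removal) are easiest to apply as a defect removal efficiency (DRE) percentage. A minimal Python sketch, assuming DRE is computed as defects removed before release divided by all defects, where post-release defects are those users report within an agreed window after delivery (90 days is a common convention). The function names and sample counts are hypothetical.

```python
def defect_removal_efficiency(removed_before_release: int, found_after_release: int) -> float:
    """Percent of all known defects that were removed before release.

    Assumption: `found_after_release` counts defects reported by users within
    an agreed window after delivery (often the first 90 days).
    """
    total = removed_before_release + found_after_release
    if total == 0:
        raise ValueError("no defects recorded")
    return 100.0 * removed_before_release / total


def quality_band(dre: float) -> str:
    """Classify a DRE value using the thresholds from the outsourcing slide."""
    if dre > 95.0:
        return "high quality"
    if dre < 85.0:
        return "poor quality"
    return "between the slide's thresholds"


print(quality_band(defect_removal_efficiency(960, 40)))   # DRE 96.0%
print(quality_band(defect_removal_efficiency(800, 200)))  # DRE 80.0%
```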

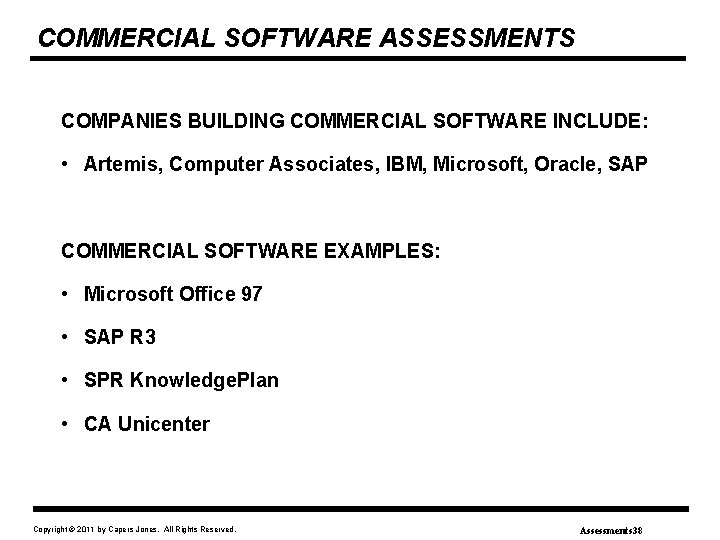

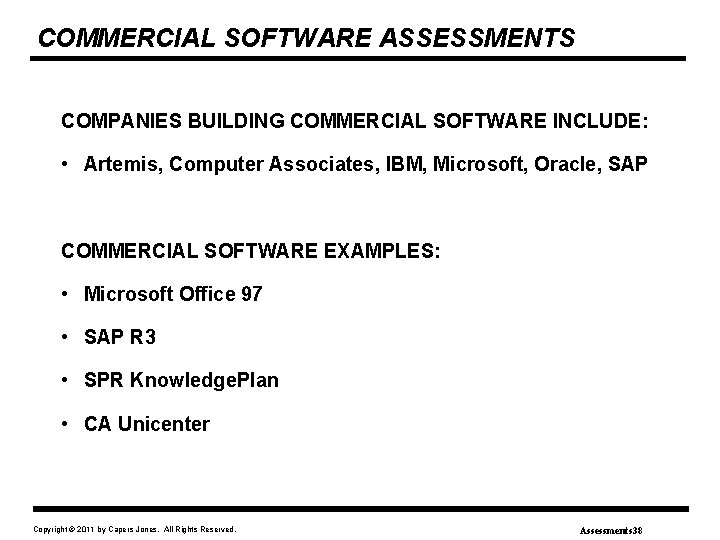

COMMERCIAL SOFTWARE ASSESSMENTS

Companies building commercial software include:

- Artemis, Computer Associates, IBM, Microsoft, Oracle, SAP

Commercial software examples:

- Microsoft Office 97
- SAP R/3
- SPR KnowledgePlan
- CA Unicenter

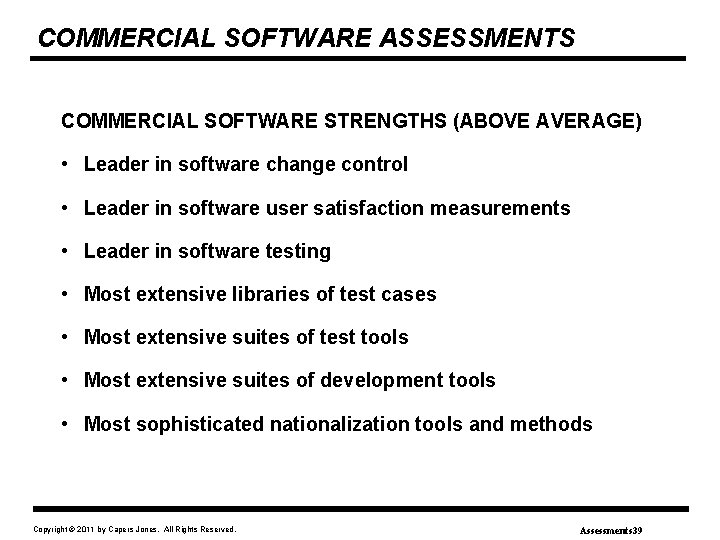

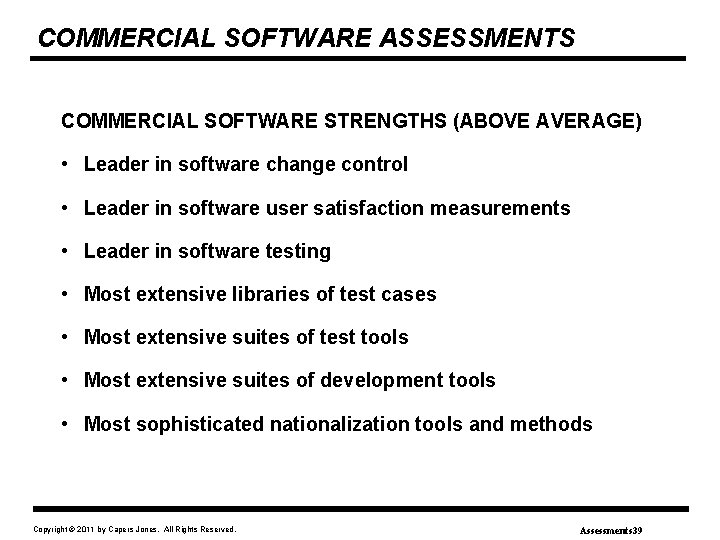

COMMERCIAL SOFTWARE ASSESSMENTS

Commercial software strengths (above average):

- Leader in software change control
- Leader in software user satisfaction measurements
- Leader in software testing
- Most extensive libraries of test cases
- Most extensive suites of test tools
- Most extensive suites of development tools
- Most sophisticated nationalization tools and methods

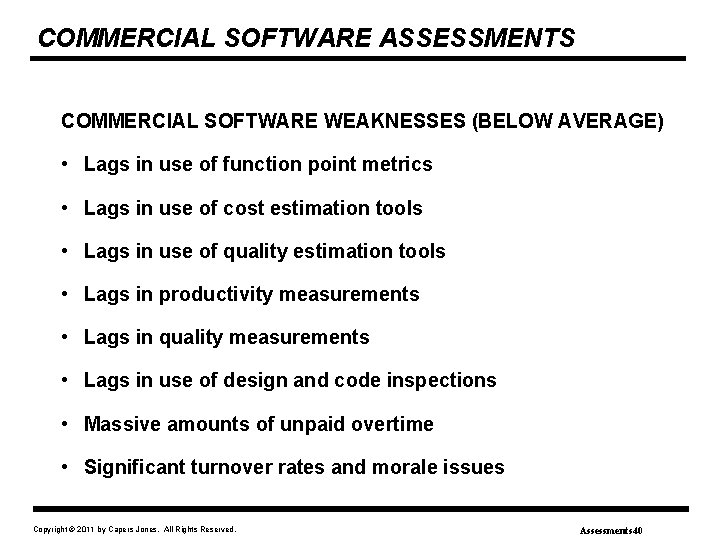

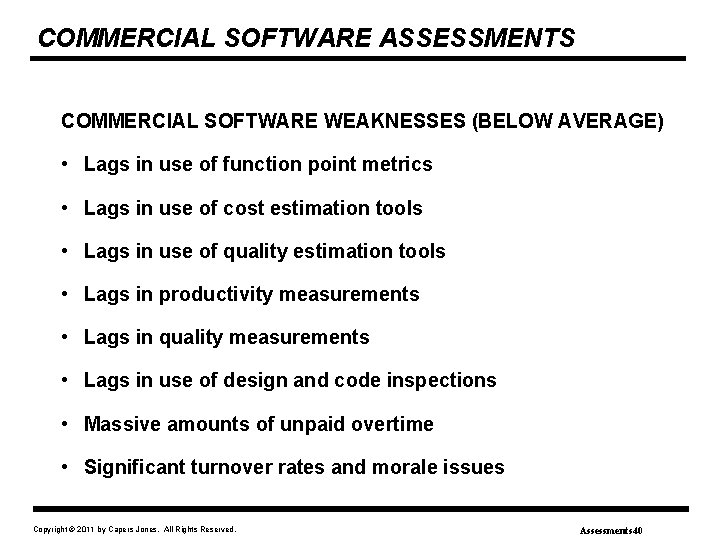

COMMERCIAL SOFTWARE ASSESSMENTS

Commercial software weaknesses (below average):

- Lags in use of function point metrics
- Lags in use of cost estimation tools
- Lags in use of quality estimation tools
- Lags in productivity measurements
- Lags in quality measurements
- Lags in use of design and code inspections
- Massive amounts of unpaid overtime
- Significant turnover rates and morale issues

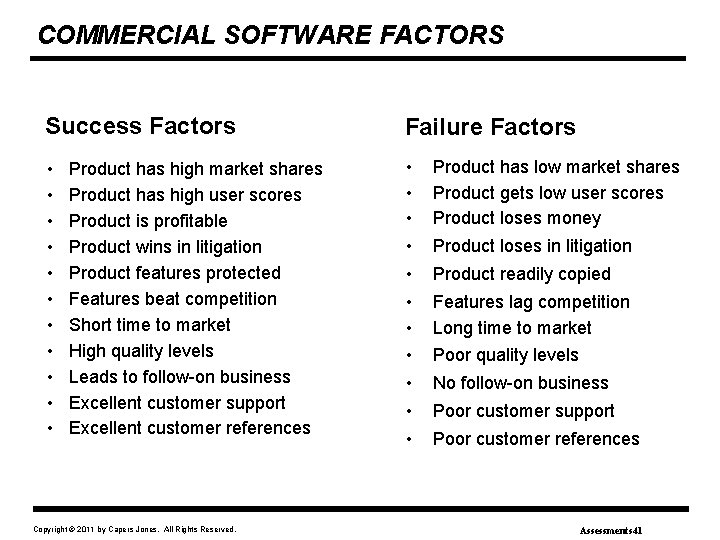

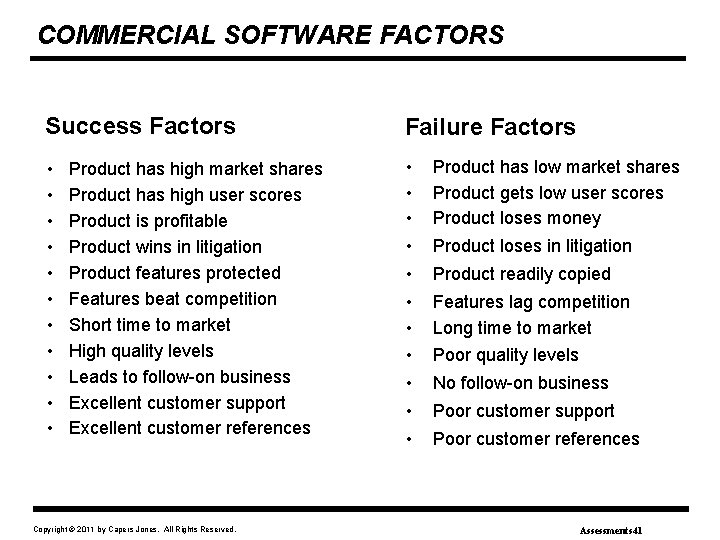

COMMERCIAL SOFTWARE FACTORS

| Success factors | Failure factors |
|---|---|
| Product has high market share | Product has low market share |
| Product has high user scores | Product gets low user scores |
| Product is profitable | Product loses money |
| Product wins in litigation | Product loses in litigation |
| Product features protected | Product readily copied |
| Features beat competition | Features lag competition |
| Short time to market | Long time to market |
| High quality levels | Poor quality levels |
| Leads to follow-on business | No follow-on business |
| Excellent customer support | Poor customer support |
| Excellent customer references | Poor customer references |

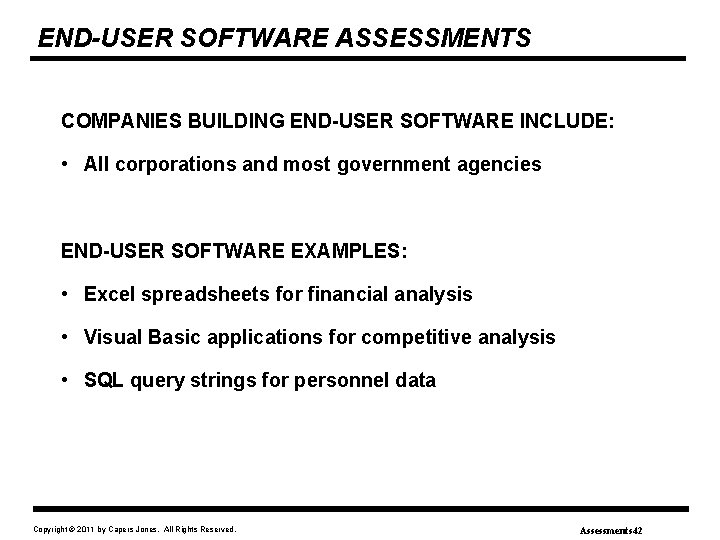

END-USER SOFTWARE ASSESSMENTS

Companies building end-user software include:

- All corporations and most government agencies

End-user software examples:

- Excel spreadsheets for financial analysis
- Visual Basic applications for competitive analysis
- SQL query strings for personnel data

END-USER SOFTWARE ASSESSMENTS

End-user software strengths (above average):

- Highest productivity levels below 100 function points

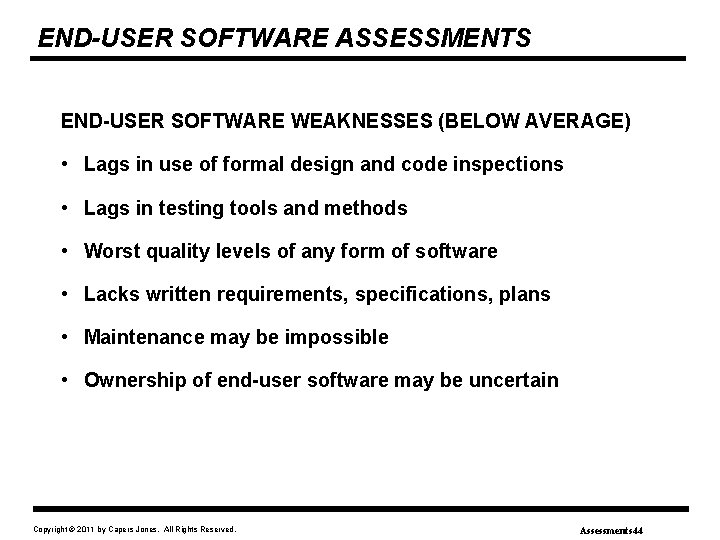

END-USER SOFTWARE ASSESSMENTS

End-user software weaknesses (below average):

- Lags in use of formal design and code inspections
- Lags in testing tools and methods
- Worst quality levels of any form of software
- Lacks written requirements, specifications, and plans
- Maintenance may be impossible
- Ownership of end-user software may be uncertain

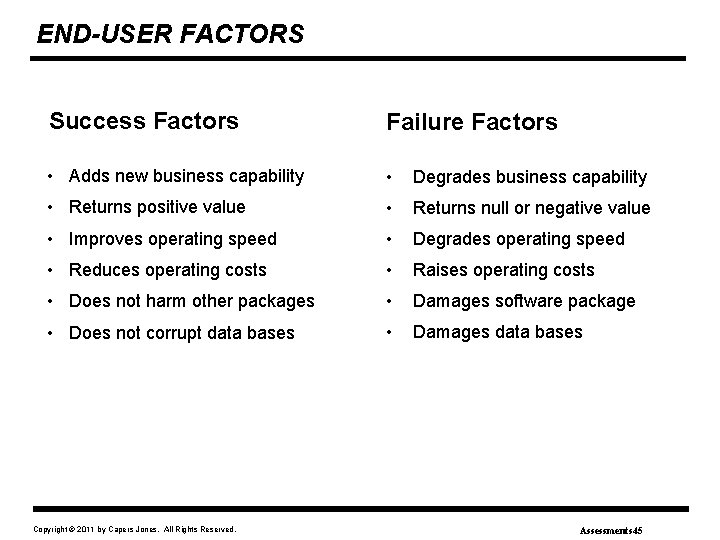

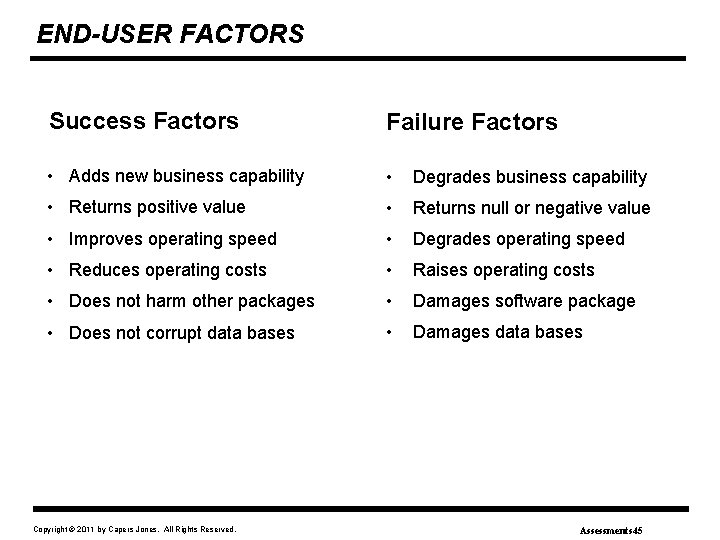

END-USER FACTORS

| Success factors | Failure factors |
|---|---|
| Adds new business capability | Degrades business capability |
| Returns positive value | Returns null or negative value |
| Improves operating speed | Degrades operating speed |
| Reduces operating costs | Raises operating costs |
| Does not harm other packages | Damages software packages |
| Does not corrupt databases | Damages databases |

FACTORS THAT INFLUENCE SOFTWARE

- Management factors
- Social factors
- Technology factors
- Combinations of all factors

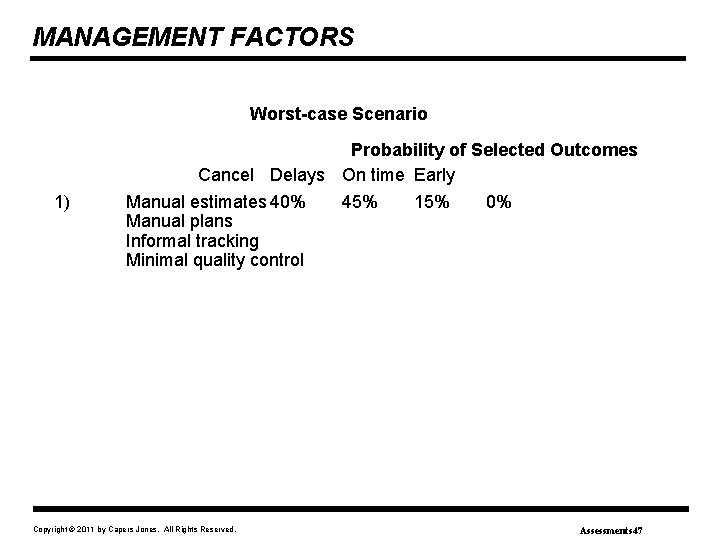

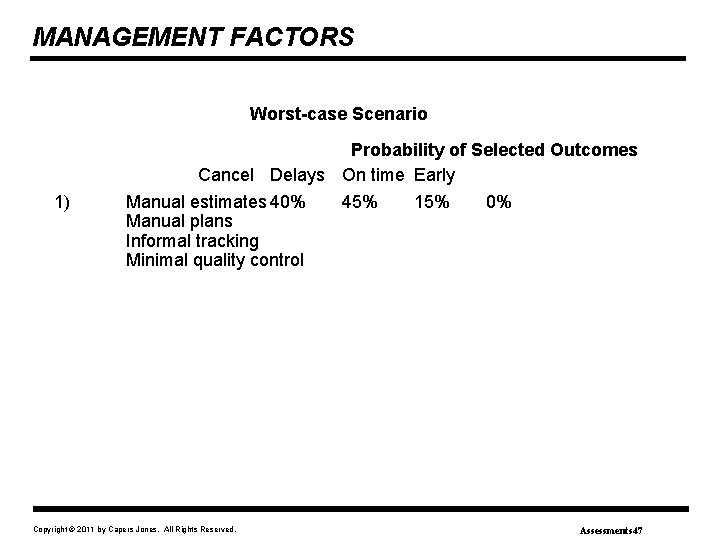

MANAGEMENT FACTORS: WORST-CASE SCENARIO

Probability of selected outcomes:

| Scenario | Estimates | Plans | Tracking | Quality control | Cancel | Delays | On time | Early |
|---|---|---|---|---|---|---|---|---|
| 1 | Manual | Manual | Informal | Minimal | 40% | 45% | 15% | 0% |

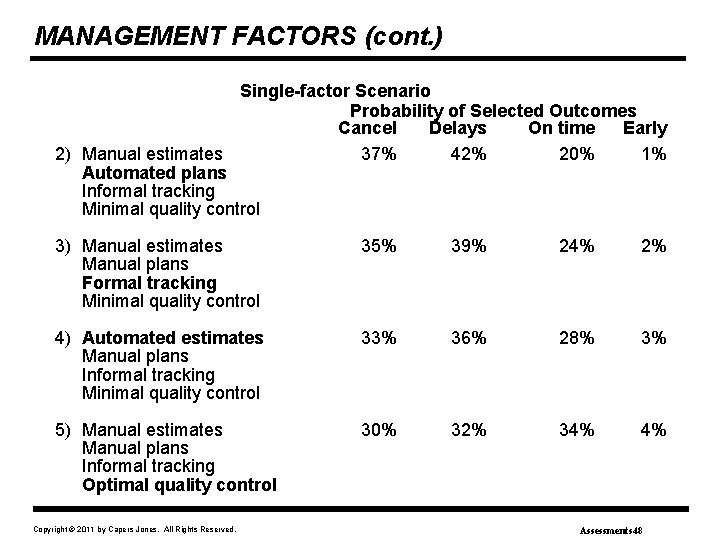

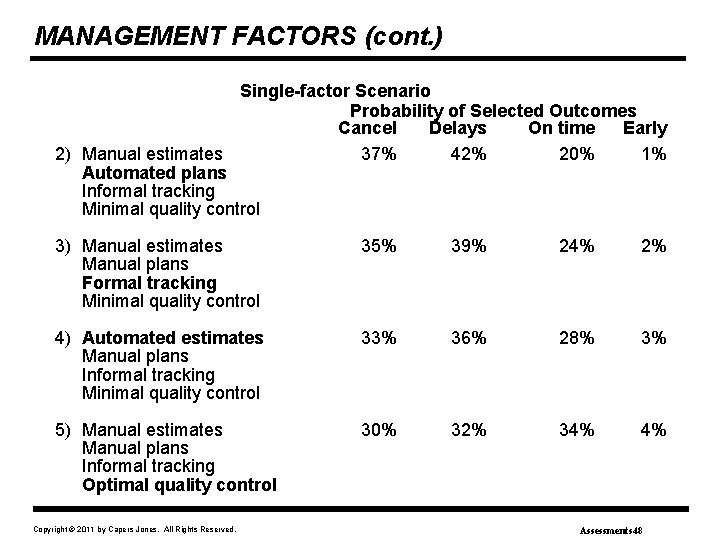

MANAGEMENT FACTORS (cont.): SINGLE-FACTOR SCENARIOS

Probability of selected outcomes:

| Scenario | Estimates | Plans | Tracking | Quality control | Cancel | Delays | On time | Early |
|---|---|---|---|---|---|---|---|---|
| 2 | Manual | Automated | Informal | Minimal | 37% | 42% | 20% | 1% |
| 3 | Manual | Manual | Formal | Minimal | 35% | 39% | 24% | 2% |
| 4 | Automated | Manual | Informal | Minimal | 33% | 36% | 28% | 3% |
| 5 | Manual | Manual | Informal | Optimal | 30% | 32% | 34% | 4% |

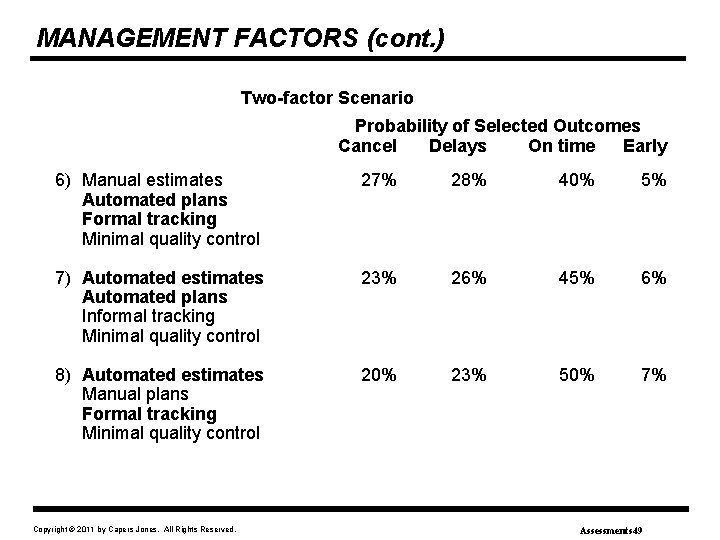

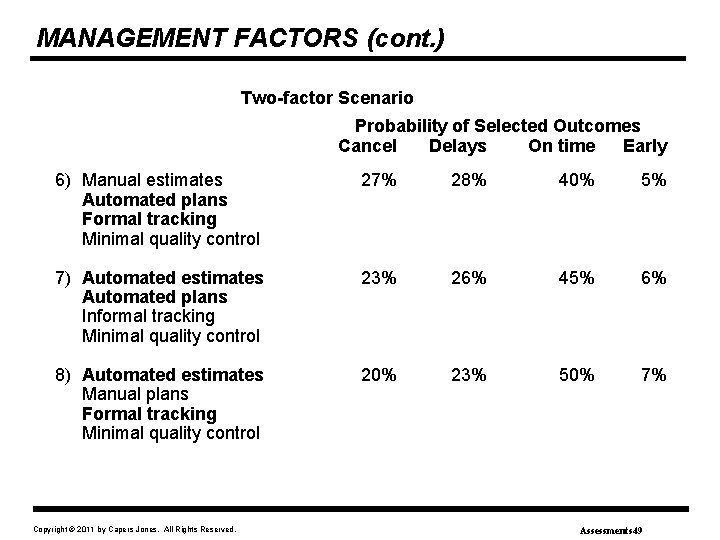

MANAGEMENT FACTORS (cont.): TWO-FACTOR SCENARIOS

Probability of selected outcomes:

| Scenario | Estimates | Plans | Tracking | Quality control | Cancel | Delays | On time | Early |
|---|---|---|---|---|---|---|---|---|
| 6 | Manual | Automated | Formal | Minimal | 27% | 28% | 40% | 5% |
| 7 | Automated | Automated | Informal | Minimal | 23% | 26% | 45% | 6% |
| 8 | Automated | Manual | Formal | Minimal | 20% | 23% | 50% | 7% |

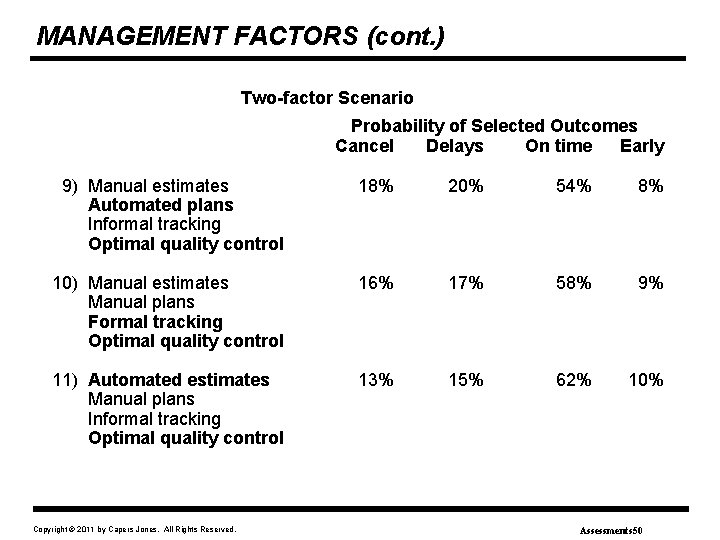

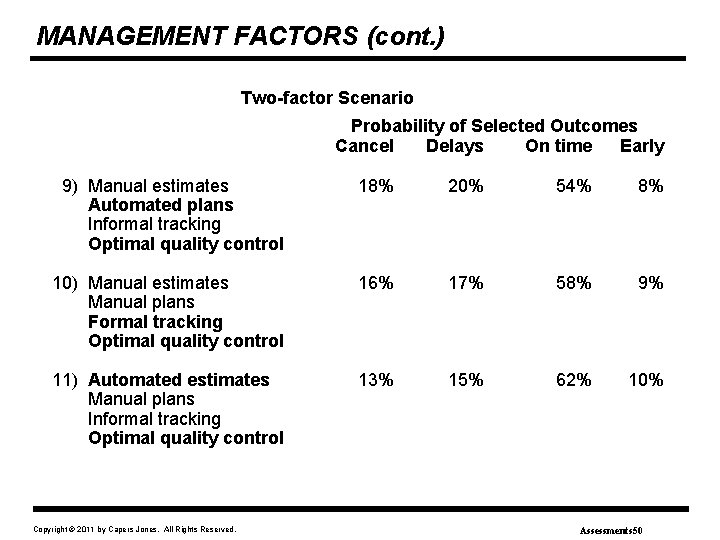

MANAGEMENT FACTORS (cont.): TWO-FACTOR SCENARIOS

Probability of selected outcomes:

| Scenario | Estimates | Plans | Tracking | Quality control | Cancel | Delays | On time | Early |
|---|---|---|---|---|---|---|---|---|
| 9 | Manual | Automated | Informal | Optimal | 18% | 20% | 54% | 8% |
| 10 | Manual | Manual | Formal | Optimal | 16% | 17% | 58% | 9% |
| 11 | Automated | Manual | Informal | Optimal | 13% | 15% | 62% | 10% |

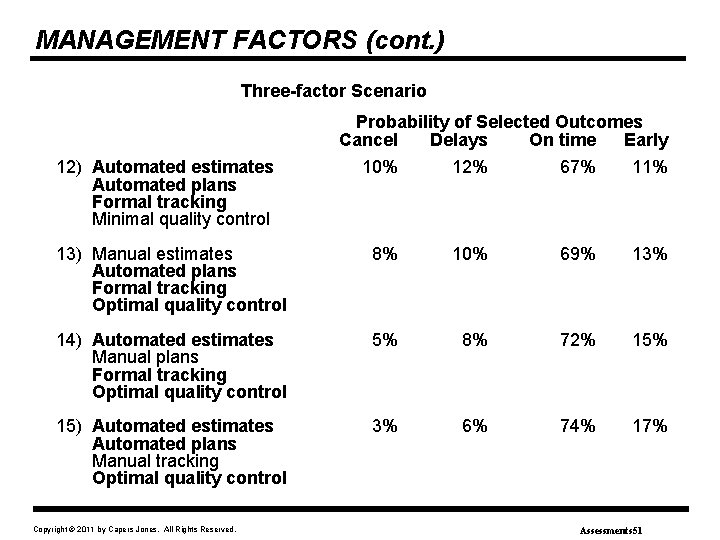

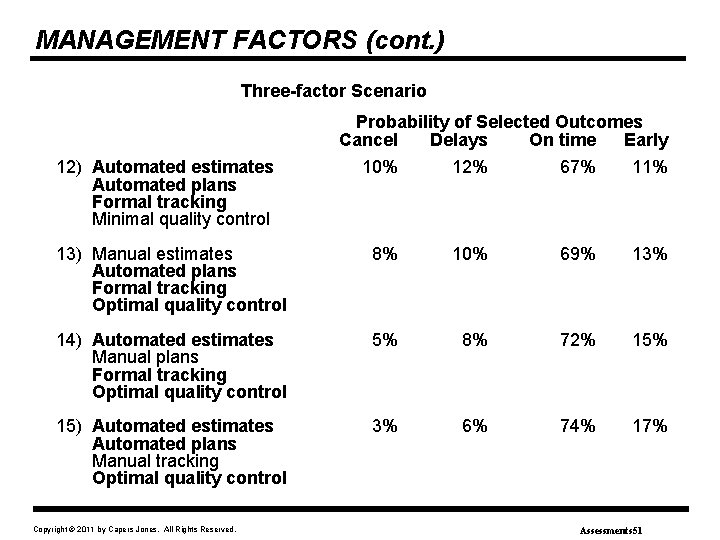

MANAGEMENT FACTORS (cont.): THREE-FACTOR SCENARIOS

Probability of selected outcomes:

| Scenario | Estimates | Plans | Tracking | Quality control | Cancel | Delays | On time | Early |
|---|---|---|---|---|---|---|---|---|
| 12 | Automated | Automated | Formal | Minimal | 10% | 12% | 67% | 11% |
| 13 | Manual | Automated | Formal | Optimal | 8% | 10% | 69% | 13% |
| 14 | Automated | Manual | Formal | Optimal | 5% | 8% | 72% | |
| 15 | Automated | Automated | Informal | Optimal | 3% | 6% | 74% | 17% |

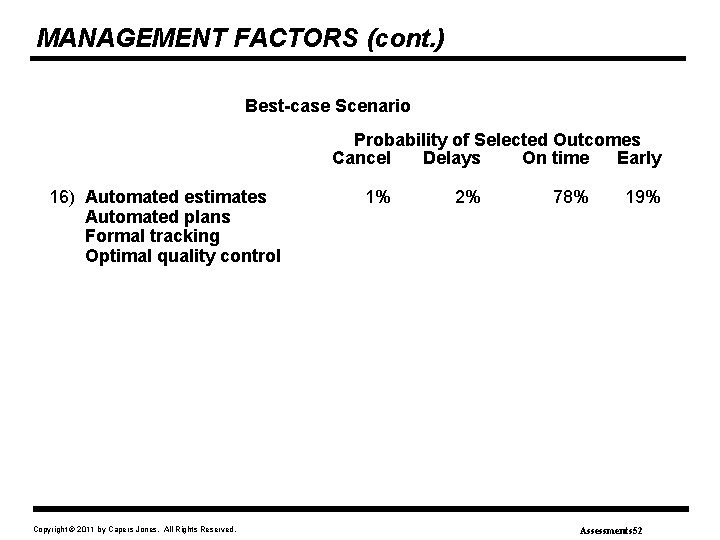

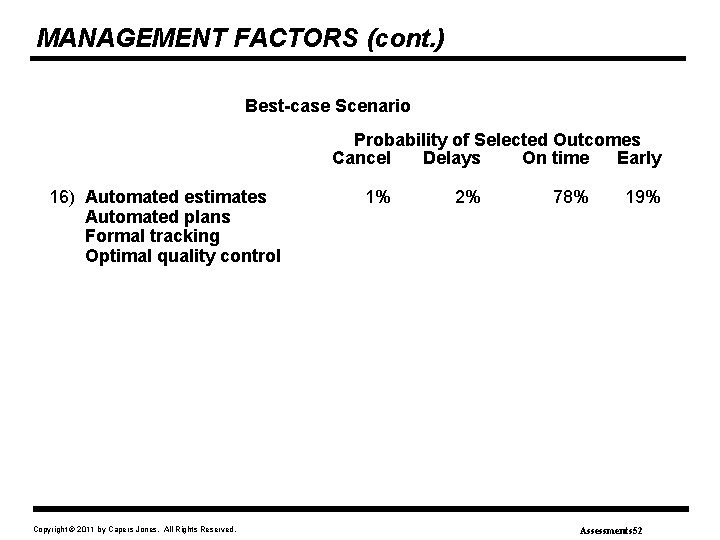

MANAGEMENT FACTORS (cont.): BEST-CASE SCENARIO

Probability of selected outcomes:

| Scenario | Estimates | Plans | Tracking | Quality control | Cancel | Delays | On time | Early |
|---|---|---|---|---|---|---|---|---|
| 16 | Automated | Automated | Formal | Optimal | 1% | 2% | 78% | 19% |
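The 16 management scenarios are every combination of four two-valued factors, so they fit naturally into a lookup table keyed by the set of improved factors. A Python sketch with two labeled assumptions: scenario 15's "Manual tracking" is treated as informal tracking (the only combination otherwise missing), and scenario 14's Early value, which the slide does not show, is inferred as the remainder to 100%. The names are hypothetical.

```python
# Improved ("good") setting of each management factor.
FACTORS = ("automated estimates", "automated plans", "formal tracking", "optimal quality control")

# (cancel, delays, on time, early) in percent, keyed by the set of improved factors.
SCENARIOS: dict[frozenset[str], tuple[int, int, int, int]] = {
    frozenset(): (40, 45, 15, 0),
    frozenset({"automated plans"}): (37, 42, 20, 1),
    frozenset({"formal tracking"}): (35, 39, 24, 2),
    frozenset({"automated estimates"}): (33, 36, 28, 3),
    frozenset({"optimal quality control"}): (30, 32, 34, 4),
    frozenset({"automated plans", "formal tracking"}): (27, 28, 40, 5),
    frozenset({"automated estimates", "automated plans"}): (23, 26, 45, 6),
    frozenset({"automated estimates", "formal tracking"}): (20, 23, 50, 7),
    frozenset({"automated plans", "optimal quality control"}): (18, 20, 54, 8),
    frozenset({"formal tracking", "optimal quality control"}): (16, 17, 58, 9),
    frozenset({"automated estimates", "optimal quality control"}): (13, 15, 62, 10),
    frozenset({"automated estimates", "automated plans", "formal tracking"}): (10, 12, 67, 11),
    frozenset({"automated plans", "formal tracking", "optimal quality control"}): (8, 10, 69, 13),
    # Assumption: Early is not shown for scenario 14; inferred as 100 - (5 + 8 + 72).
    frozenset({"automated estimates", "formal tracking", "optimal quality control"}): (5, 8, 72, 15),
    # Assumption: scenario 15's "Manual tracking" read as informal tracking.
    frozenset({"automated estimates", "automated plans", "optimal quality control"}): (3, 6, 74, 17),
    frozenset(FACTORS): (1, 2, 78, 19),
}


def outcome(*improved: str) -> tuple[int, int, int, int]:
    """Look up (cancel, delays, on time, early) for a set of improved factors."""
    unknown = set(improved) - set(FACTORS)
    if unknown:
        raise ValueError(f"unknown factor(s): {unknown}")
    return SCENARIOS[frozenset(improved)]


# Sanity checks: all 16 combinations present and each row totals 100%.
assert len(SCENARIOS) == 2 ** len(FACTORS)
assert all(sum(row) == 100 for row in SCENARIOS.values())

# Single-factor effect on the on-time probability versus the worst case.
baseline = outcome()[2]
for factor in FACTORS:
    print(f"{factor}: +{outcome(factor)[2] - baseline} points on time")
```

Run against these figures, optimal quality control gives the largest single-factor gain (+19 points on time, versus +13 for automated estimates, +9 for formal tracking, and +5 for automated plans).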

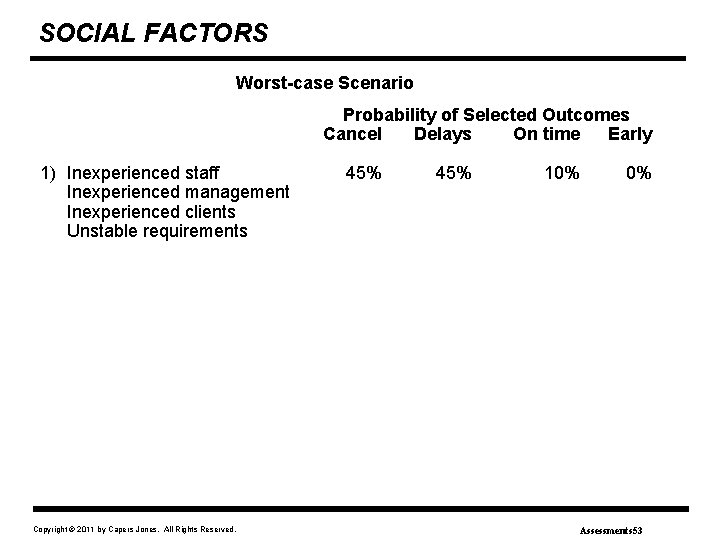

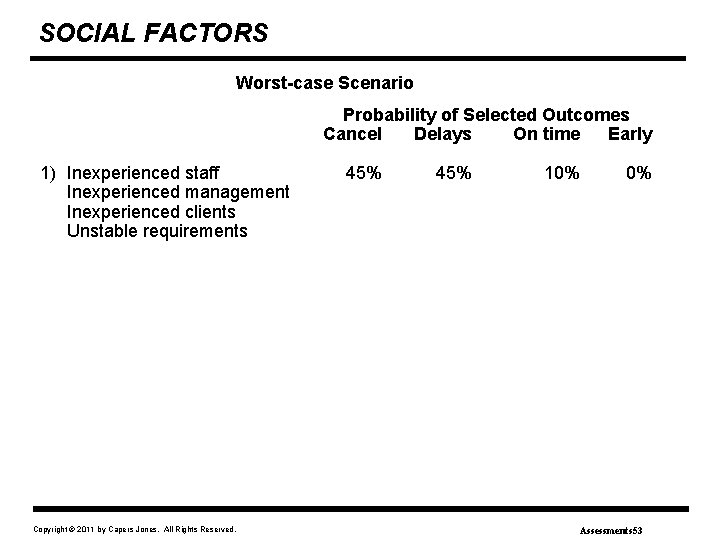

SOCIAL FACTORS: WORST-CASE SCENARIO

Probability of selected outcomes:

| Scenario | Management | Staff | Clients | Requirements | Cancel | Delays | On time | Early |
|---|---|---|---|---|---|---|---|---|
| 1 | Inexperienced | Inexperienced | Inexperienced | Unstable | | 45% | 10% | 0% |

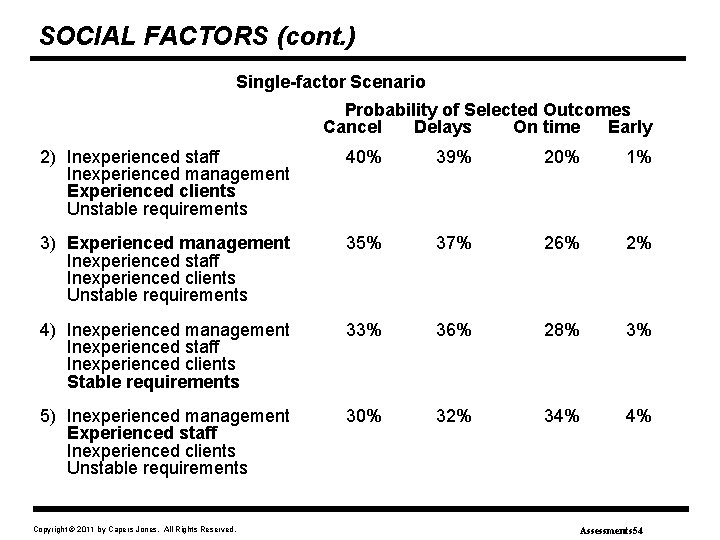

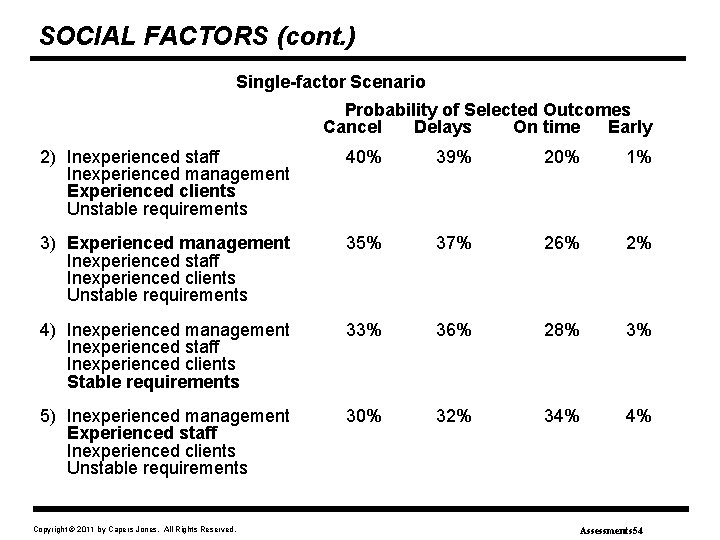

SOCIAL FACTORS (cont.): SINGLE-FACTOR SCENARIOS

Probability of selected outcomes:

| Scenario | Management | Staff | Clients | Requirements | Cancel | Delays | On time | Early |
|---|---|---|---|---|---|---|---|---|
| 2 | Inexperienced | Inexperienced | Experienced | Unstable | 40% | 39% | 20% | 1% |
| 3 | Experienced | Inexperienced | Inexperienced | Unstable | 35% | 37% | 26% | 2% |
| 4 | Inexperienced | Inexperienced | Inexperienced | Stable | 33% | 36% | 28% | 3% |
| 5 | Inexperienced | Experienced | Inexperienced | Unstable | 30% | 32% | 34% | 4% |

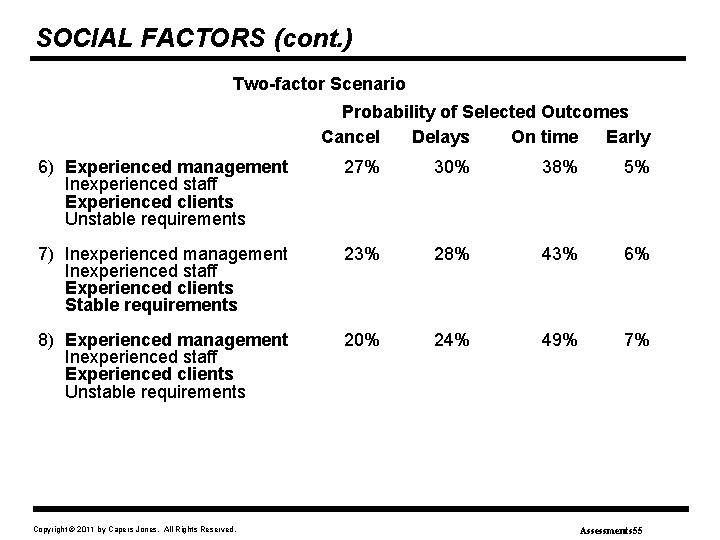

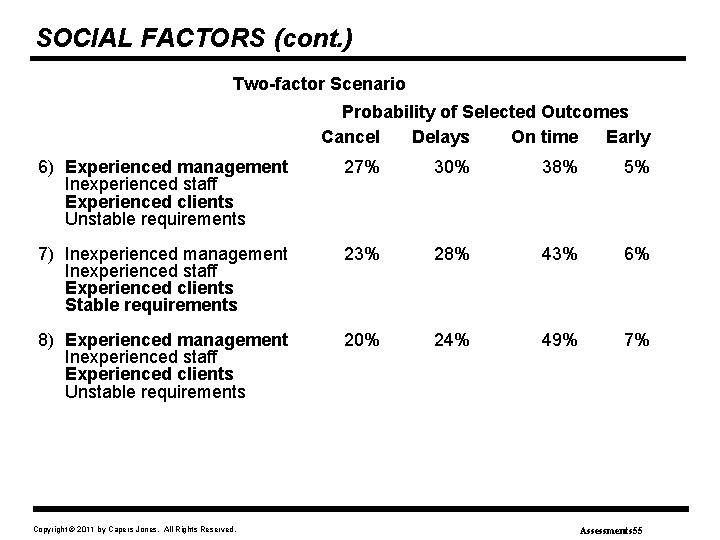

SOCIAL FACTORS (cont.): TWO-FACTOR SCENARIOS

Probability of selected outcomes:

| Scenario | Management | Staff | Clients | Requirements | Cancel | Delays | On time | Early |
|---|---|---|---|---|---|---|---|---|
| 6 | Experienced | Inexperienced | Experienced | Unstable | 27% | 30% | 38% | 5% |
| 7 | Inexperienced | Inexperienced | Experienced | Stable | 23% | 28% | 43% | 6% |
| 8 | Experienced | Inexperienced | Experienced | Unstable | 20% | 24% | 49% | 7% |

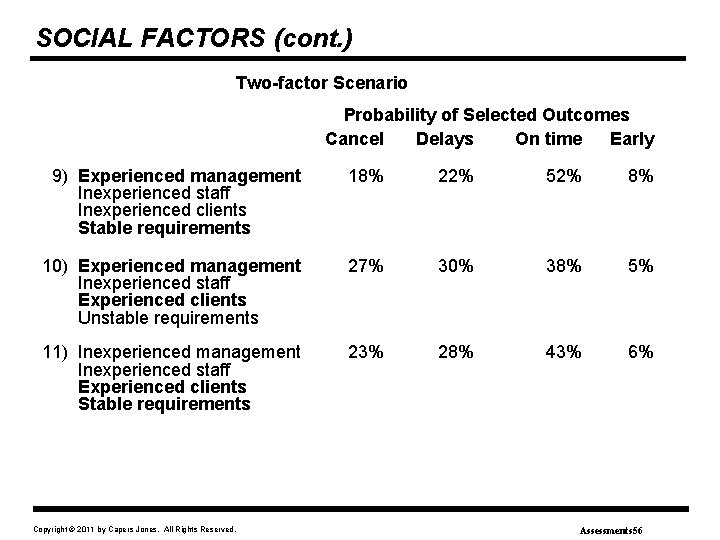

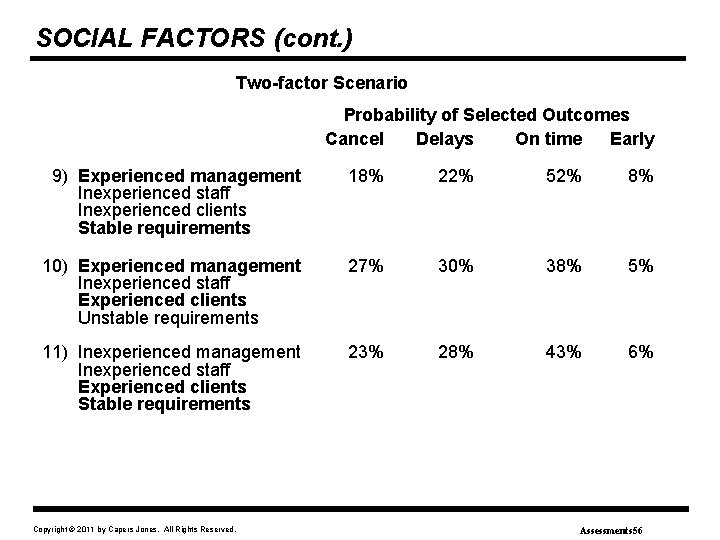

SOCIAL FACTORS (cont.): TWO-FACTOR SCENARIOS

Probability of selected outcomes:

| Scenario | Management | Staff | Clients | Requirements | Cancel | Delays | On time | Early |
|---|---|---|---|---|---|---|---|---|
| 9 | Experienced | Inexperienced | Inexperienced | Stable | 18% | 22% | 52% | 8% |
| 10 | Experienced | Inexperienced | Experienced | Unstable | 27% | 30% | 38% | 5% |
| 11 | Inexperienced | Inexperienced | Experienced | Stable | 23% | 28% | 43% | 6% |

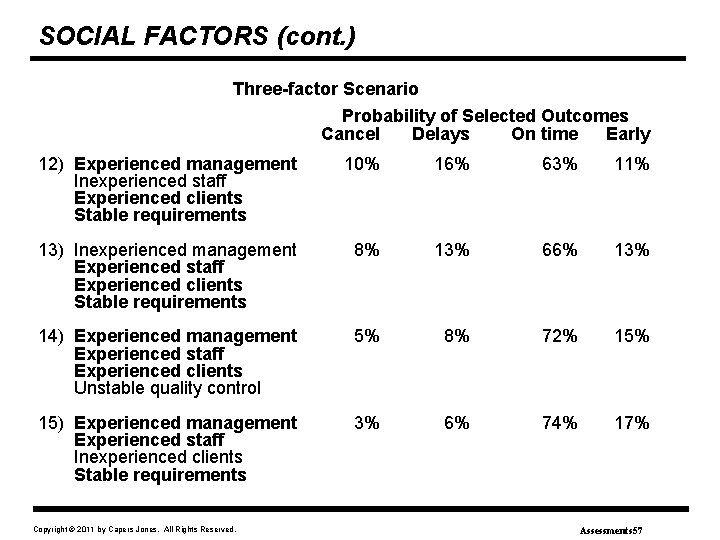

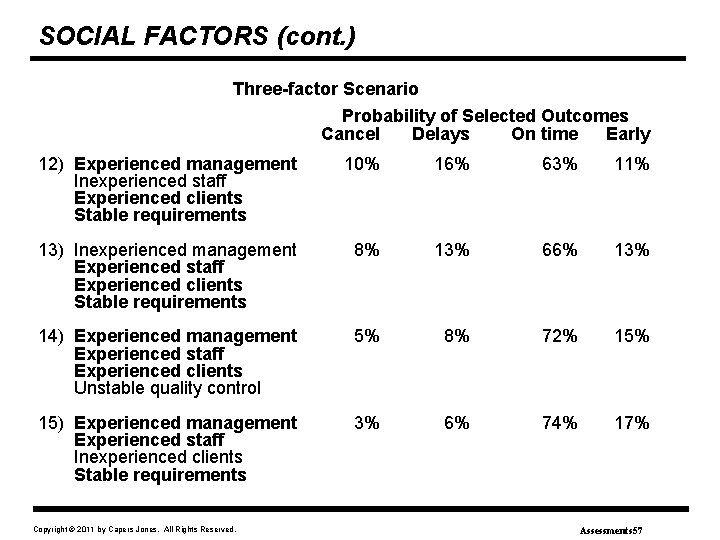

SOCIAL FACTORS (cont.): THREE-FACTOR SCENARIOS

Probability of selected outcomes:

| Scenario | Management | Staff | Clients | Requirements | Cancel | Delays | On time | Early |
|---|---|---|---|---|---|---|---|---|
| 12 | Experienced | Inexperienced | Experienced | Stable | 10% | 16% | 63% | 11% |
| 13 | Inexperienced | Experienced | Experienced | Stable | 8% | 13% | 66% | 13% |
| 14 | Experienced | Experienced | Experienced | Unstable | 5% | 8% | 72% | |
| 15 | Experienced | Experienced | Inexperienced | Stable | 3% | 6% | 74% | 17% |

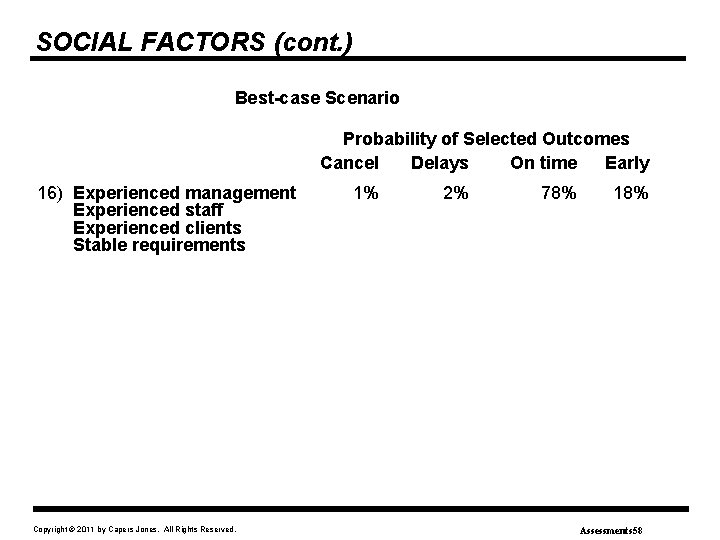

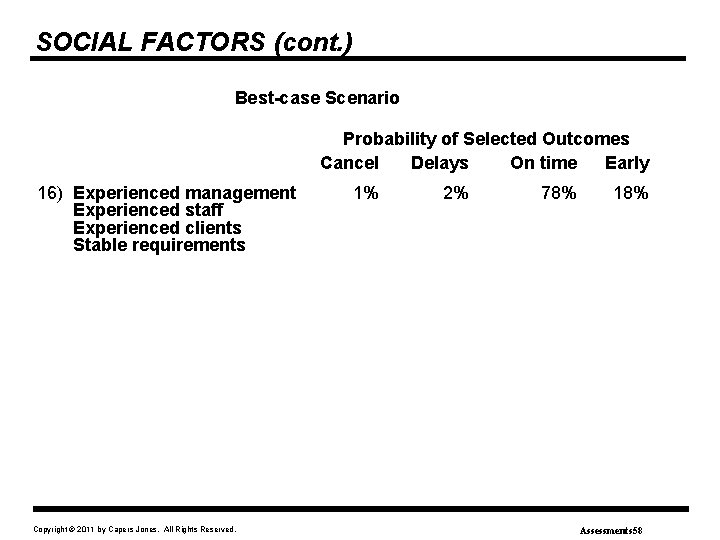

SOCIAL FACTORS (cont.): BEST-CASE SCENARIO

Probability of selected outcomes:

| Scenario | Management | Staff | Clients | Requirements | Cancel | Delays | On time | Early |
|---|---|---|---|---|---|---|---|---|
| 16 | Experienced | Experienced | Experienced | Stable | 1% | 2% | 78% | 18% |

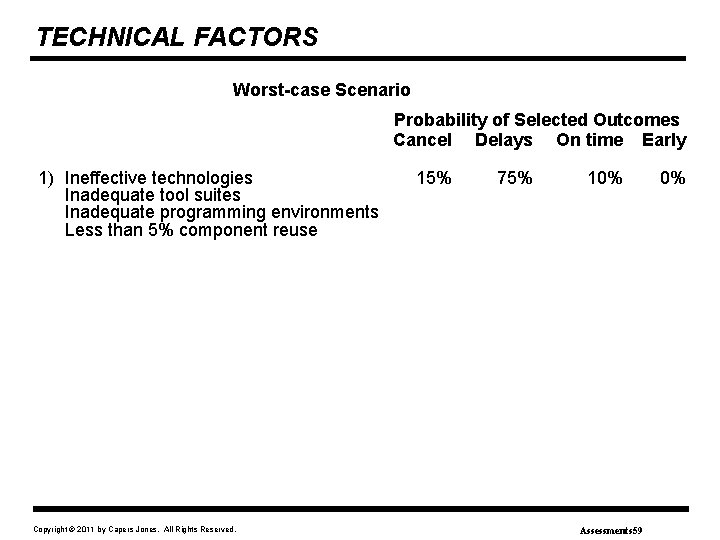

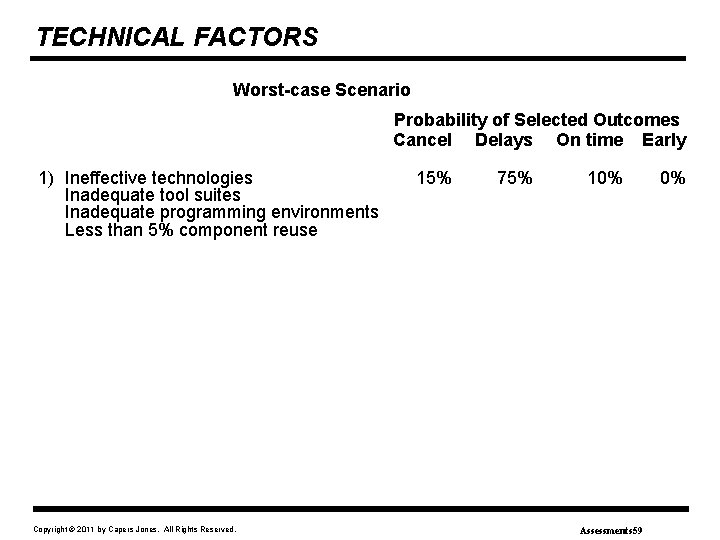

TECHNICAL FACTORS: WORST-CASE SCENARIO

Probability of selected outcomes:

| Scenario | Technologies | Tool suites | Programming environments | Component reuse | Cancel | Delays | On time | Early |
|---|---|---|---|---|---|---|---|---|
| 1 | Ineffective | Inadequate | Inadequate | Less than 5% | 15% | 75% | 10% | 0% |

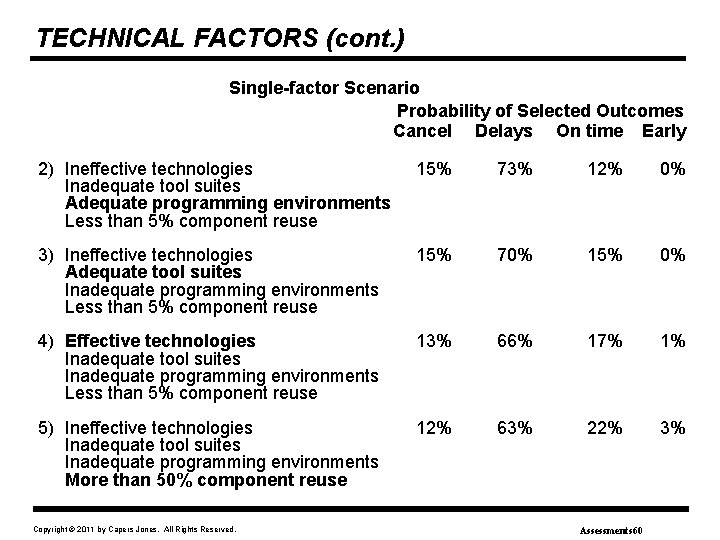

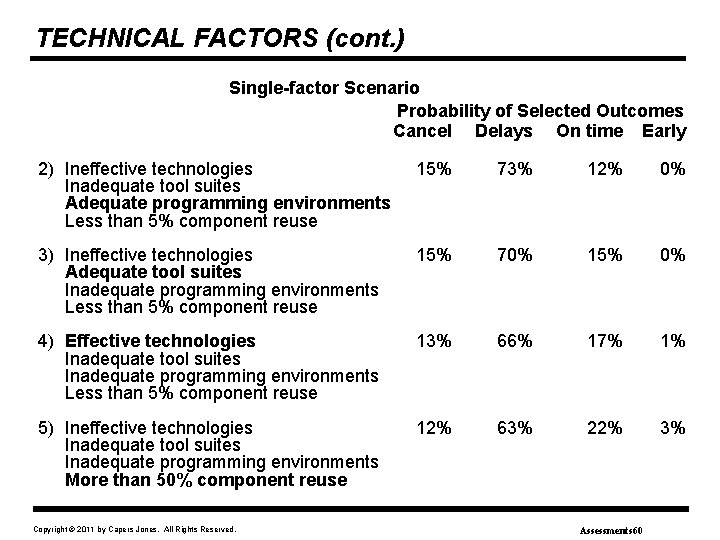

TECHNICAL FACTORS (cont.): SINGLE-FACTOR SCENARIOS

Probability of selected outcomes:

| Scenario | Technologies | Tool suites | Programming environments | Component reuse | Cancel | Delays | On time | Early |
|---|---|---|---|---|---|---|---|---|
| 2 | Ineffective | Inadequate | Adequate | Less than 5% | 15% | 73% | 12% | 0% |
| 3 | Ineffective | Adequate | Inadequate | Less than 5% | 15% | 70% | 15% | 0% |
| 4 | Effective | Inadequate | Inadequate | Less than 5% | 13% | 66% | 17% | 1% |
| 5 | Ineffective | Inadequate | Inadequate | More than 50% | 12% | 63% | 22% | 3% |

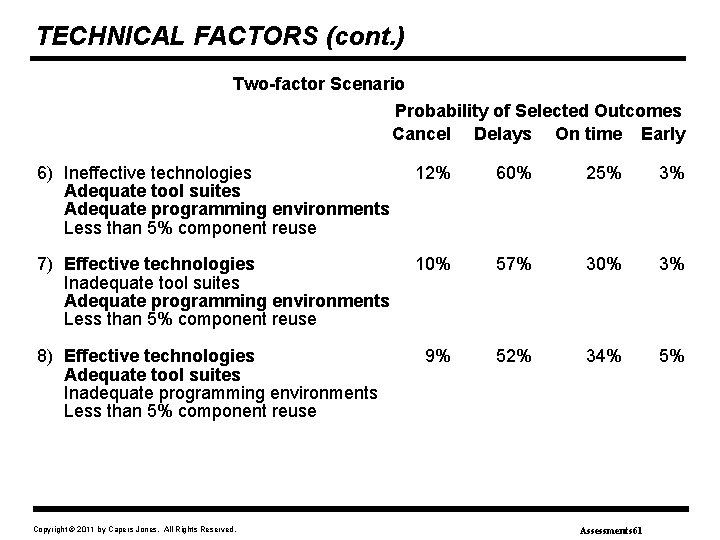

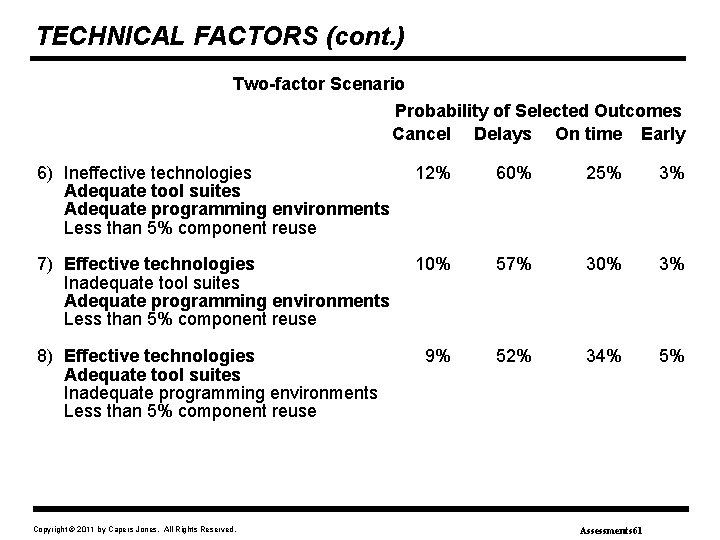

TECHNICAL FACTORS (cont.): TWO-FACTOR SCENARIOS

Probability of selected outcomes:

| Scenario | Technologies | Tool suites | Programming environments | Component reuse | Cancel | Delays | On time | Early |
|---|---|---|---|---|---|---|---|---|
| 6 | Ineffective | Adequate | Adequate | Less than 5% | 12% | 60% | 25% | 3% |
| 7 | Effective | Inadequate | Adequate | Less than 5% | 10% | 57% | 30% | 3% |
| 8 | Effective | Adequate | Inadequate | Less than 5% | 9% | 52% | 34% | 5% |

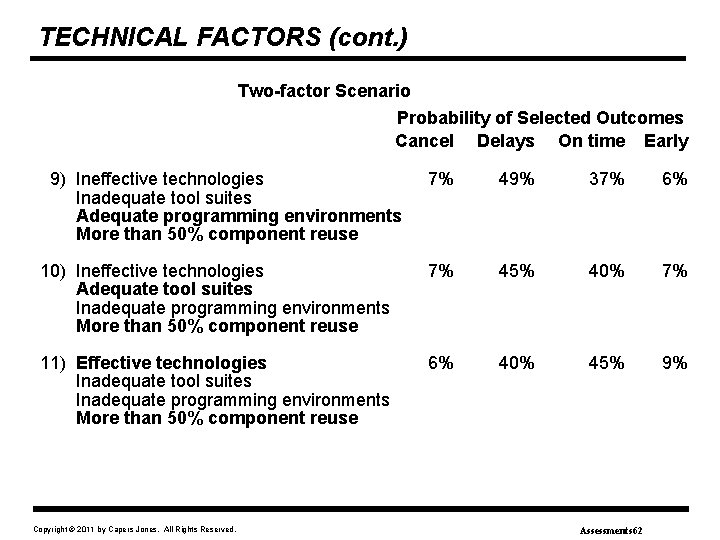

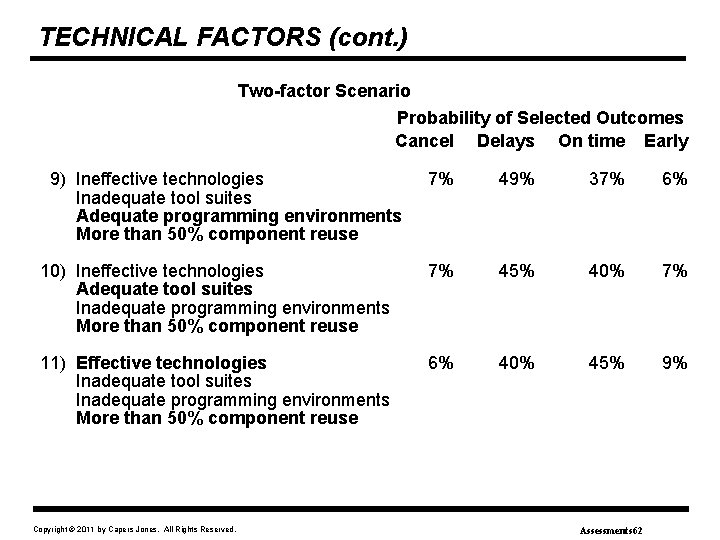

TECHNICAL FACTORS (cont.): TWO-FACTOR SCENARIOS

Probability of selected outcomes:

| Scenario | Technologies | Tool suites | Programming environments | Component reuse | Cancel | Delays | On time | Early |
|---|---|---|---|---|---|---|---|---|
| 9 | Ineffective | Inadequate | Adequate | More than 50% | 7% | 49% | 37% | 6% |
| 10 | Ineffective | Adequate | Inadequate | More than 50% | 7% | 45% | 40% | 7% |
| 11 | Effective | Inadequate | Inadequate | More than 50% | 6% | 40% | 45% | 9% |

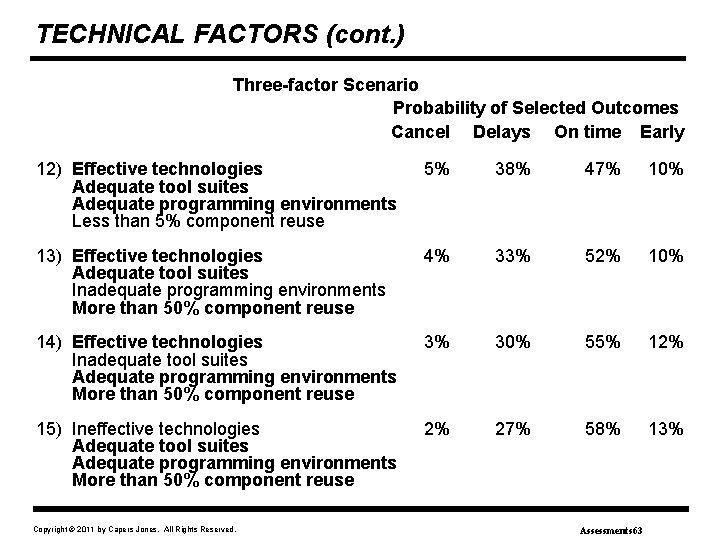

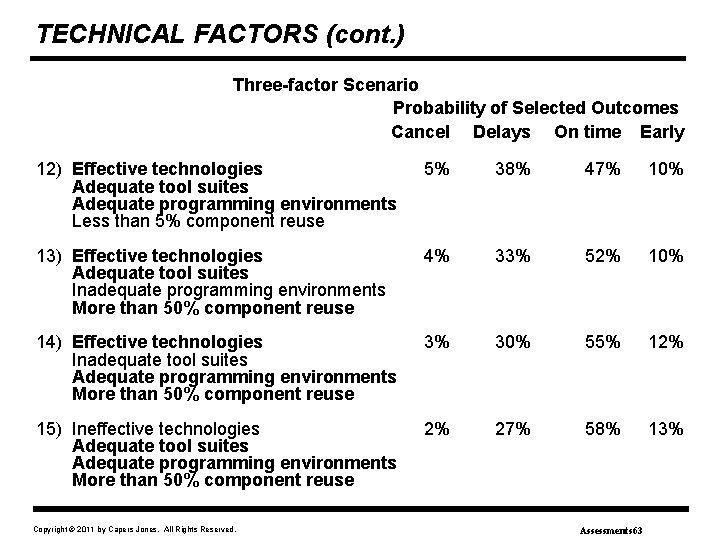

TECHNICAL FACTORS (cont.): THREE-FACTOR SCENARIOS

Probability of selected outcomes:

| Scenario | Technologies | Tool suites | Programming environments | Component reuse | Cancel | Delays | On time | Early |
|---|---|---|---|---|---|---|---|---|
| 12 | Effective | Adequate | Adequate | Less than 5% | 5% | 38% | 47% | 10% |
| 13 | Effective | Adequate | Inadequate | More than 50% | 4% | 33% | 52% | 10% |
| 14 | Effective | Inadequate | Adequate | More than 50% | 3% | 30% | 55% | 12% |
| 15 | Ineffective | Adequate | Adequate | More than 50% | 2% | 27% | 58% | 13% |

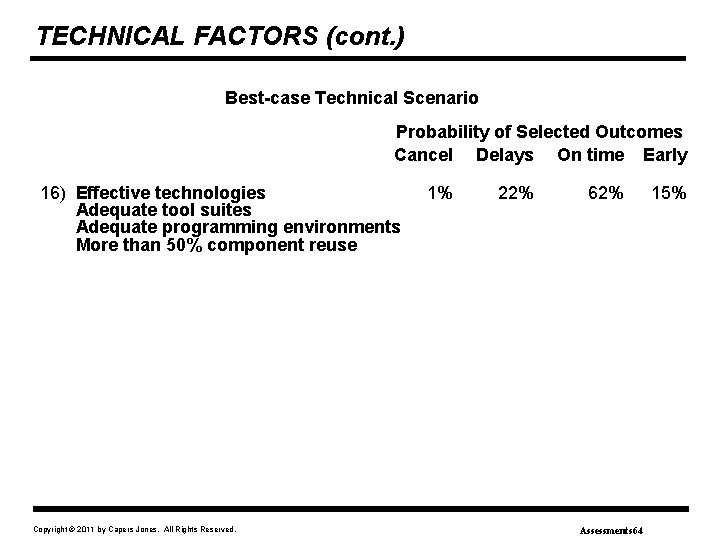

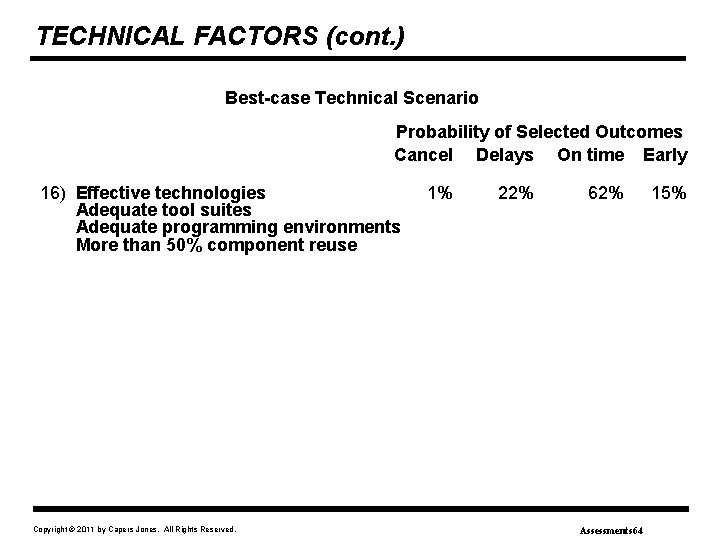

TECHNICAL FACTORS (cont.): BEST-CASE TECHNICAL SCENARIO

Probability of selected outcomes:

| Scenario | Technologies | Tool suites | Programming environments | Component reuse | Cancel | Delays | On time | Early |
|---|---|---|---|---|---|---|---|---|
| 16 | Effective | Adequate | Adequate | More than 50% | 1% | 22% | 62% | 15% |
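The same single-factor comparison can be made for the technical factors. This Python sketch ranks the single-factor technical scenarios by their on-time gain over the worst case, using only the slide figures; some technical rows (scenario 4, for example) do not total exactly 100%, so treat the ranking as approximate. Names are hypothetical.

```python
# Technical outcomes from the slides: (cancel, delays, on time, early) in percent.
WORST_CASE = (15, 75, 10, 0)
SINGLE_FACTOR = {
    "adequate programming environments": (15, 73, 12, 0),
    "adequate tool suites": (15, 70, 15, 0),
    "effective technologies": (13, 66, 17, 1),
    "more than 50% component reuse": (12, 63, 22, 3),
}


def on_time_gain(row: tuple[int, int, int, int]) -> int:
    """Change in on-time probability, in percentage points, vs. the worst case."""
    return row[2] - WORST_CASE[2]


# Largest gain first.
ranked = sorted(SINGLE_FACTOR, key=lambda f: on_time_gain(SINGLE_FACTOR[f]), reverse=True)
for factor in ranked:
    print(f"{factor}: +{on_time_gain(SINGLE_FACTOR[factor])} points on time")
```

With these figures, component reuse above 50% ranks first (+12 points), ahead of effective technologies (+7), adequate tool suites (+5), and adequate programming environments (+2).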

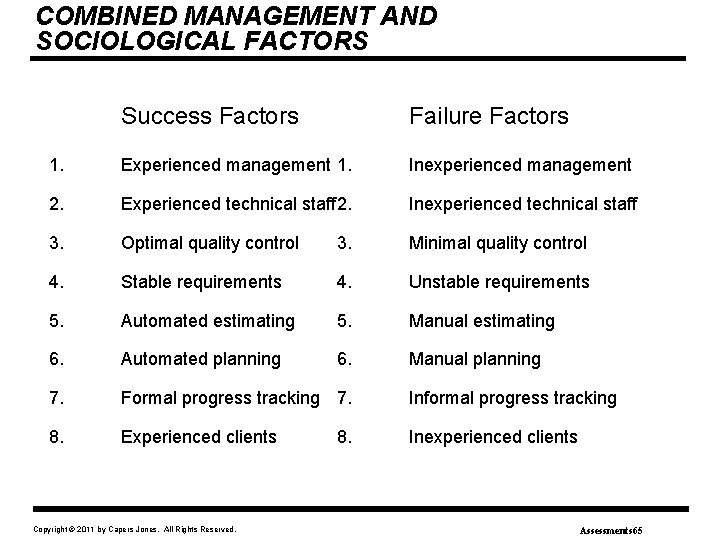

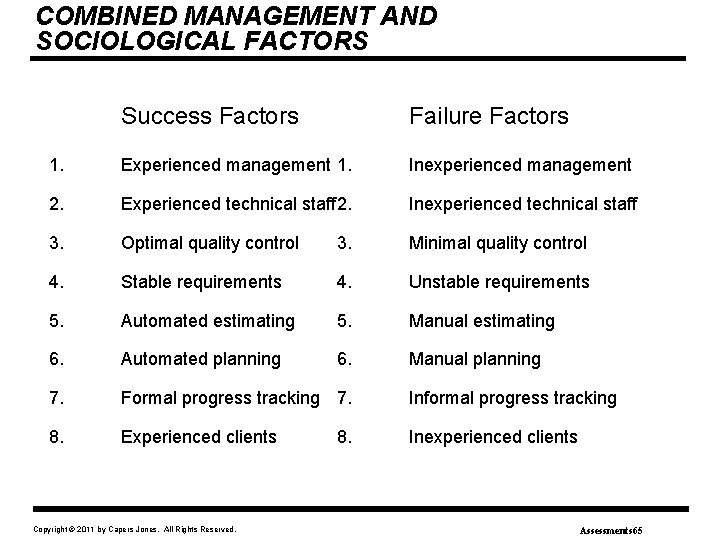

COMBINED MANAGEMENT AND SOCIOLOGICAL FACTORS

| | Success factors | Failure factors |
|---|---|---|
| 1 | Experienced management | Inexperienced management |
| 2 | Experienced technical staff | Inexperienced technical staff |
| 3 | Optimal quality control | Minimal quality control |
| 4 | Stable requirements | Unstable requirements |
| 5 | Automated estimating | Manual estimating |
| 6 | Automated planning | Manual planning |
| 7 | Formal progress tracking | Informal progress tracking |
| 8 | Experienced clients | Inexperienced clients |

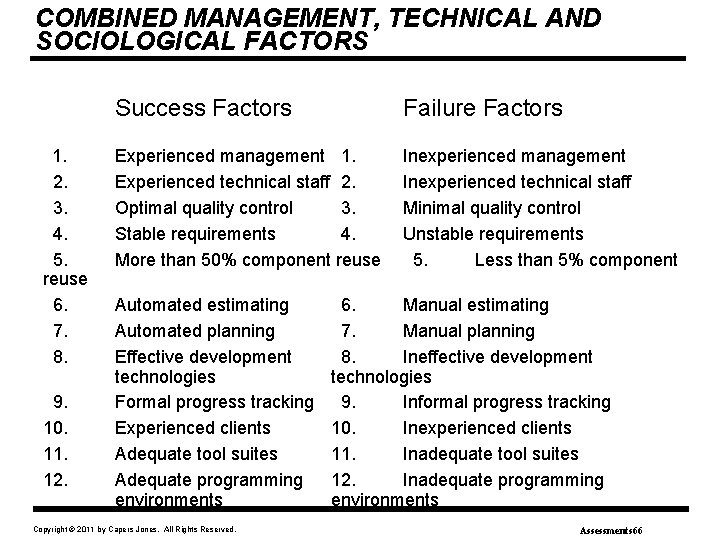

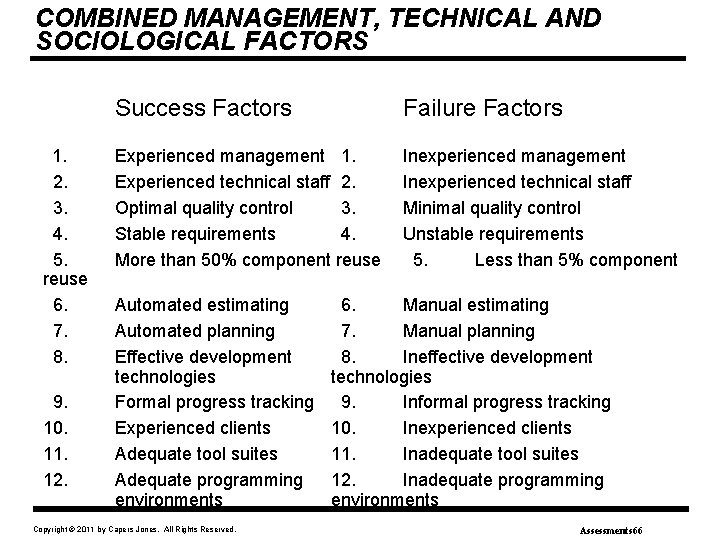

COMBINED MANAGEMENT, TECHNICAL AND SOCIOLOGICAL FACTORS

| | Success factors | Failure factors |
|---|---|---|
| 1 | Experienced management | Inexperienced management |
| 2 | Experienced technical staff | Inexperienced technical staff |
| 3 | Optimal quality control | Minimal quality control |
| 4 | Stable requirements | Unstable requirements |
| 5 | More than 50% component reuse | Less than 5% component reuse |
| 6 | Automated estimating | Manual estimating |
| 7 | Automated planning | Manual planning |
| 8 | Effective development technologies | Ineffective development technologies |
| 9 | Formal progress tracking | Informal progress tracking |
| 10 | Experienced clients | Inexperienced clients |
| 11 | Adequate tool suites | Inadequate tool suites |
| 12 | Adequate programming environments | Inadequate programming environments |

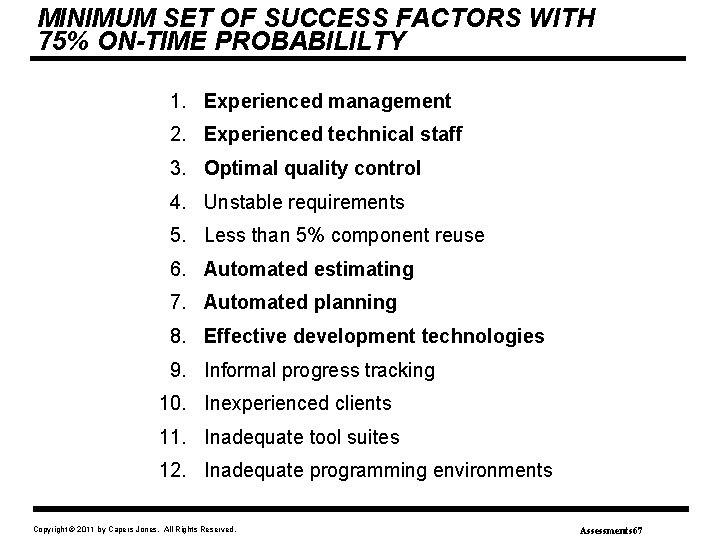

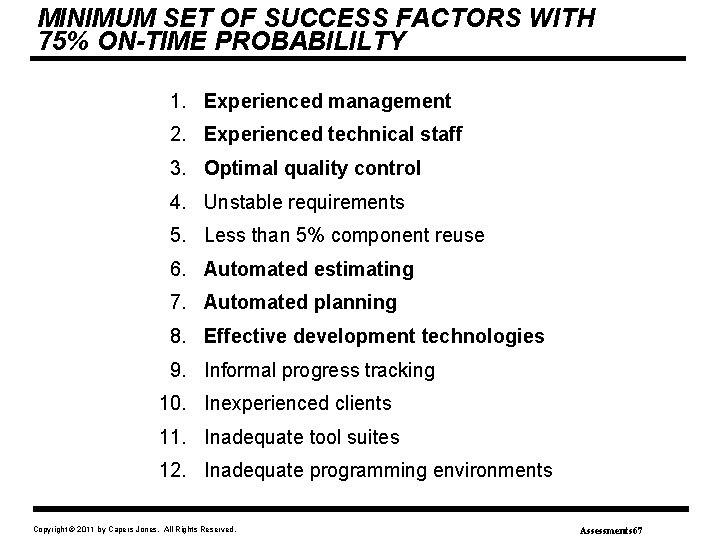

MINIMUM SET OF SUCCESS FACTORS WITH 75% ON-TIME PROBABILITY

1. Experienced management
2. Experienced technical staff
3. Optimal quality control
4. Unstable requirements
5. Less than 5% component reuse
6. Automated estimating
7. Automated planning
8. Effective development technologies
9. Informal progress tracking
10. Inexperienced clients
11. Inadequate tool suites
12. Inadequate programming environments

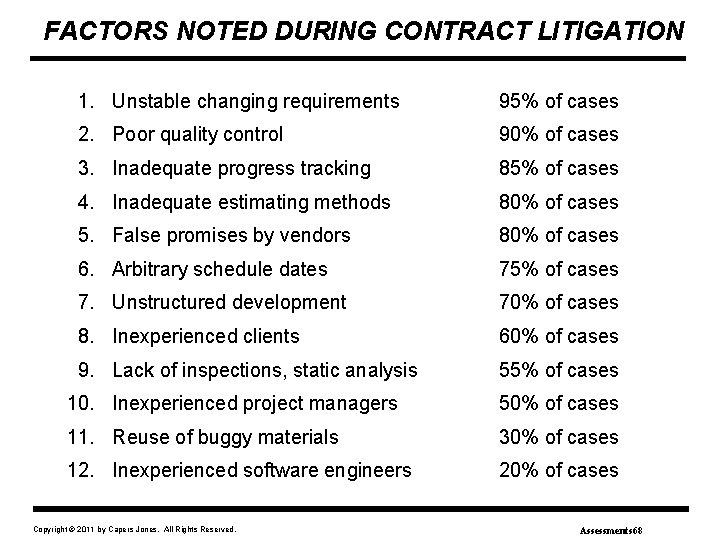

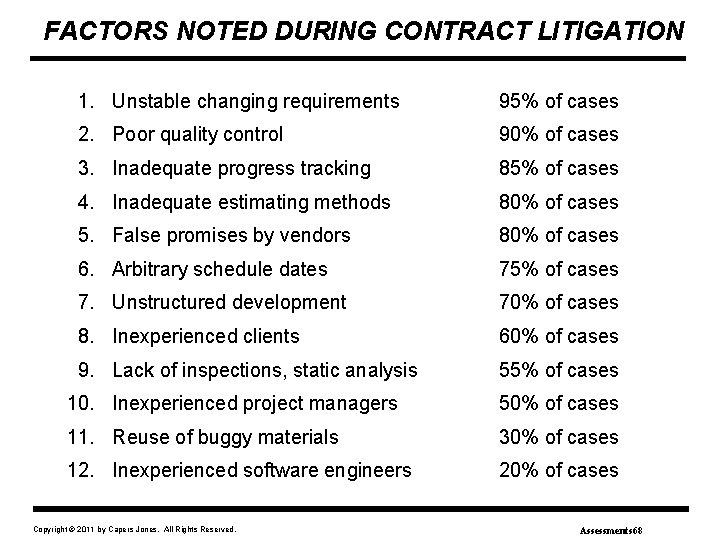

FACTORS NOTED DURING CONTRACT LITIGATION

1. Unstable, changing requirements: 95% of cases
2. Poor quality control: 90% of cases
3. Inadequate progress tracking: 85% of cases
4. Inadequate estimating methods: 80% of cases
5. False promises by vendors: 80% of cases
6. Arbitrary schedule dates: 75% of cases
7. Unstructured development: 70% of cases
8. Inexperienced clients: 60% of cases
9. Lack of inspections and static analysis: 55% of cases
10. Inexperienced project managers: 50% of cases
11. Reuse of buggy materials: 30% of cases
12. Inexperienced software engineers: 20% of cases

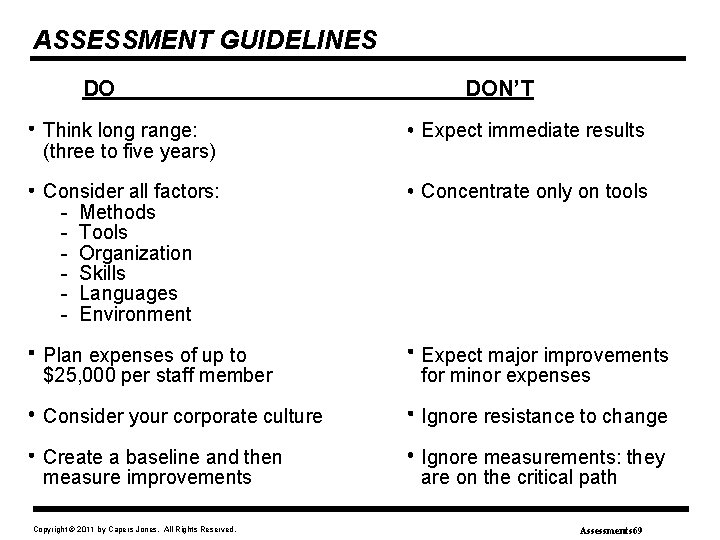

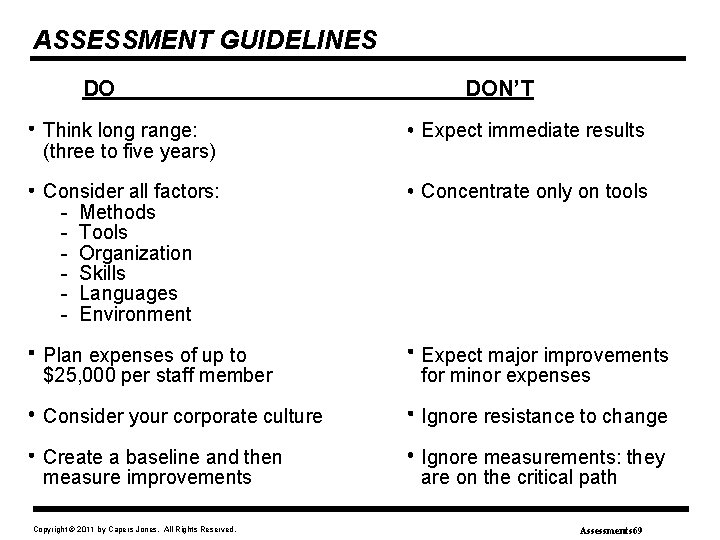

ASSESSMENT GUIDELINES

| Do | Don't |
|---|---|
| Think long range (three to five years) | Expect immediate results |
| Consider all factors: methods, tools, organization, skills, languages, environment | Concentrate only on tools |
| Plan expenses of up to $25,000 per staff member | Expect major improvements for minor expenses |
| Consider your corporate culture | Ignore resistance to change |
| Create a baseline and then measure improvements | Ignore measurements: they are on the critical path |

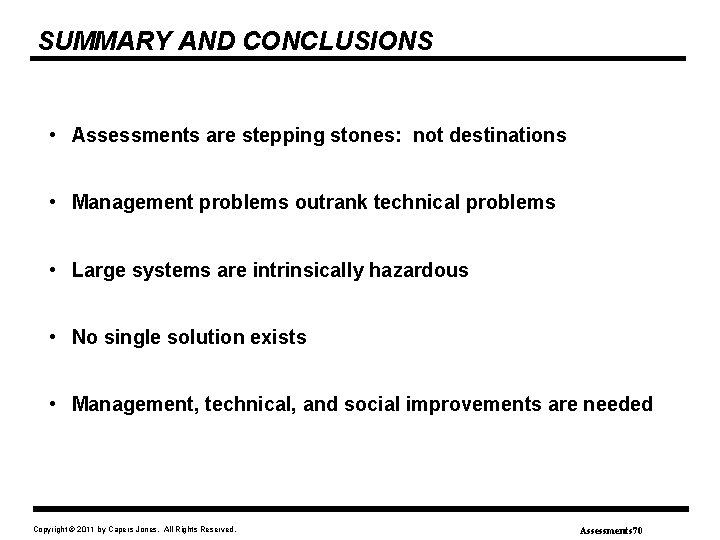

SUMMARY AND CONCLUSIONS

- Assessments are stepping stones, not destinations
- Management problems outrank technical problems
- Large systems are intrinsically hazardous
- No single solution exists
- Management, technical, and social improvements are needed

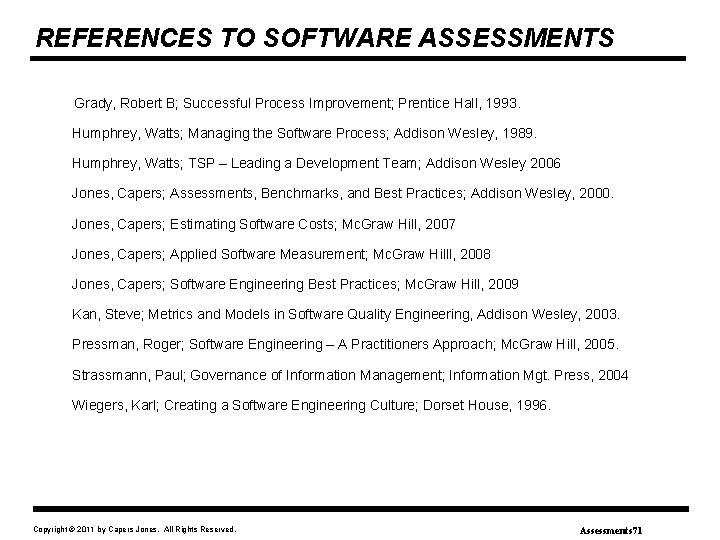

REFERENCES TO SOFTWARE ASSESSMENTS

- Grady, Robert B.; Successful Process Improvement; Prentice Hall, 1993.
- Humphrey, Watts; Managing the Software Process; Addison Wesley, 1989.
- Humphrey, Watts; TSP: Leading a Development Team; Addison Wesley, 2006.
- Jones, Capers; Assessments, Benchmarks, and Best Practices; Addison Wesley, 2000.
- Jones, Capers; Estimating Software Costs; McGraw-Hill, 2007.
- Jones, Capers; Applied Software Measurement; McGraw-Hill, 2008.
- Jones, Capers; Software Engineering Best Practices; McGraw-Hill, 2009.
- Kan, Steve; Metrics and Models in Software Quality Engineering; Addison Wesley, 2003.
- Pressman, Roger; Software Engineering: A Practitioner's Approach; McGraw-Hill, 2005.
- Strassmann, Paul; Governance of Information Management; Information Mgt. Press, 2004.
- Wiegers, Karl; Creating a Software Engineering Culture; Dorset House, 1996.