
- Slides: 85

Capers Jones & Associates LLC

SOFTWARE DEFECT REMOVAL: THE STATE OF THE ART IN 2011

Capers Jones, President, Capers Jones & Associates LLC; Chief Scientist Emeritus, SPR LLC

Quality Seminar: talk 1

http://www.spr.com

Capers.Jones3@gmail.com

June 11, 2011

BASIC DEFINITIONS

- DEFECT: An error in a software deliverable that would cause the software to either stop or produce incorrect results if it is not removed.
- DEFECT POTENTIAL: The predicted sum of errors in requirements, specifications, source code, documents, and bad fix categories.
- DEFECT REMOVAL: The set of static and dynamic methods applied to software to find and remove bugs or errors.
- UNREPORTED DEFECTS: Defects excluded from formal counts of bugs. Usually desk checking, unit test, and other “private” forms of defect removal.

Copyright © 2011 by Capers Jones. All Rights Reserved.

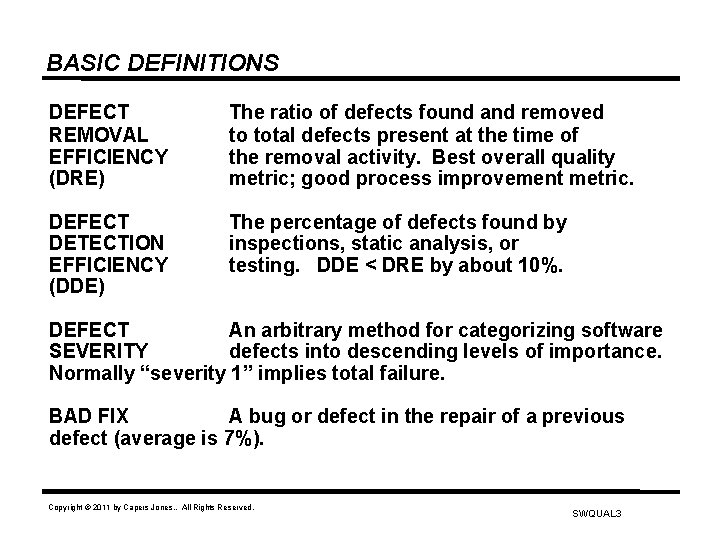

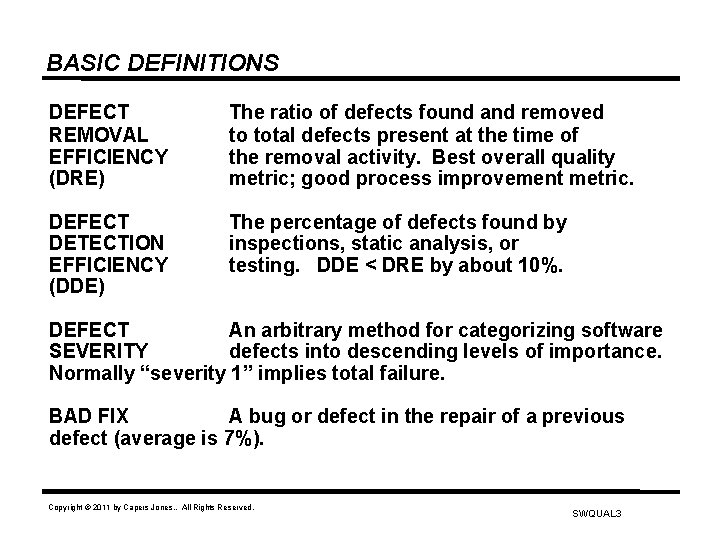

BASIC DEFINITIONS

- DEFECT REMOVAL EFFICIENCY (DRE): The ratio of defects found and removed to total defects present at the time of the removal activity. Best overall quality metric; good process improvement metric.
- DEFECT DETECTION EFFICIENCY (DDE): The percentage of defects found by inspections, static analysis, or testing. DDE < DRE by about 10%.
- DEFECT SEVERITY: An arbitrary method for categorizing software defects into descending levels of importance. Normally “severity 1” implies total failure.
- BAD FIX: A bug or defect in the repair of a previous defect (average is 7%).
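The DRE definition above reduces to a single ratio. The following is a minimal sketch, not taken from the slides: the function names and the sample counts (90 removed, 10 remaining) are illustrative assumptions, and only the 7% average bad-fix rate comes from the definitions.

```python
def removal_efficiency(removed: int, remaining: int) -> float:
    """Share of the defects present that the activity found and removed."""
    present = removed + remaining
    if present == 0:
        raise ValueError("no defects present; efficiency is undefined")
    return removed / present


def expected_bad_fixes(repairs: int, bad_fix_rate: float = 0.07) -> float:
    """Expected new defects injected by repairs (7% is the slide's average)."""
    return repairs * bad_fix_rate


# Hypothetical activity: 90 defects removed, 10 still latent afterwards.
dre = removal_efficiency(removed=90, remaining=10)
print(f"DRE = {dre:.0%}")
print(f"Expected bad fixes from 90 repairs: {expected_bad_fixes(90):.1f}")
```

Treating the bad-fix rate as a flat multiplier on repairs is a simplification; the per-stage slides later give ranges rather than a single value.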

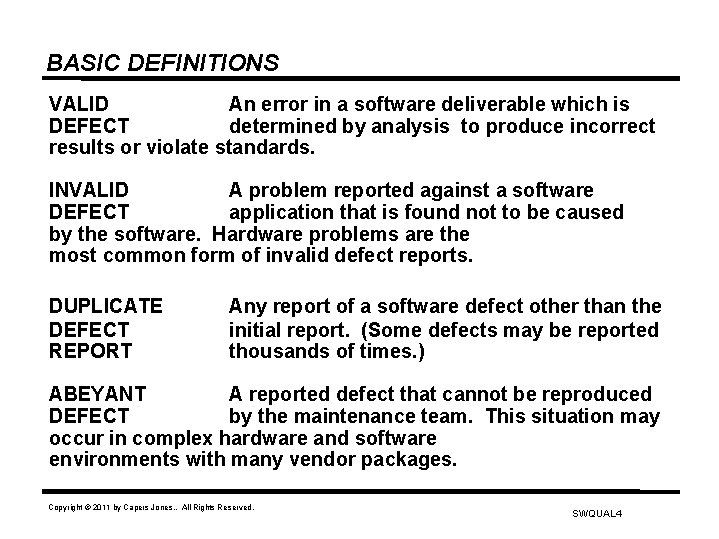

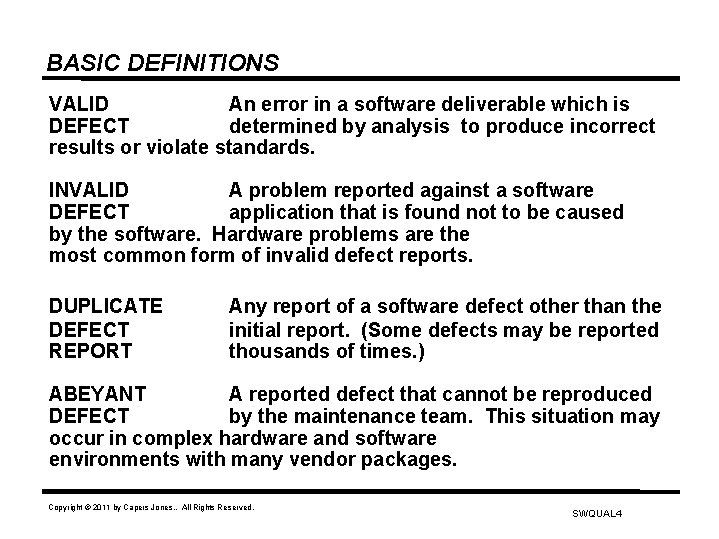

BASIC DEFINITIONS

- VALID DEFECT: An error in a software deliverable which is determined by analysis to produce incorrect results or violate standards.
- INVALID DEFECT: A problem reported against a software application that is found not to be caused by the software. Hardware problems are the most common form of invalid defect reports.
- DUPLICATE DEFECT REPORT: Any report of a software defect other than the initial report. (Some defects may be reported thousands of times.)
- ABEYANT DEFECT: A reported defect that cannot be reproduced by the maintenance team. This situation may occur in complex hardware and software environments with many vendor packages.

BASIC DEFINITIONS

- STATIC ANALYSIS: Defect removal methods that do not utilize execution of the software. Examples include design and code inspections, audits, and automated static analysis.
- DESIGN INSPECTION: A formal, manual method in which a team of design personnel, including a moderator and recorder, examines specifications.
- CODE INSPECTION: A formal method in which a team of programmers and SQA personnel, including a moderator and recorder, examines source code.
- AUDIT: A formal review of the process of software development, tools used, and records kept. Purpose is to ascertain good practices.

BASIC DEFINITIONS

- DYNAMIC ANALYSIS: Methods of defect removal that involve executing software in order to determine its properties under usage conditions.
- TESTING: Executing software in a controlled manner in order to judge its behavior against predetermined results.
- TEST CASE: A set of formal initial conditions and expected results against which software execution patterns can be evaluated.
- FALSE POSITIVE: A defect report made by mistake. Not a real defect. (Common with automated tests and automated static analysis.)

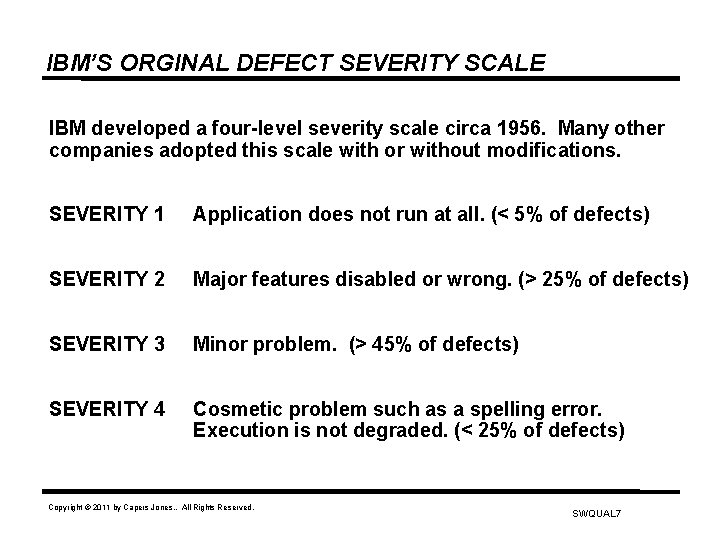

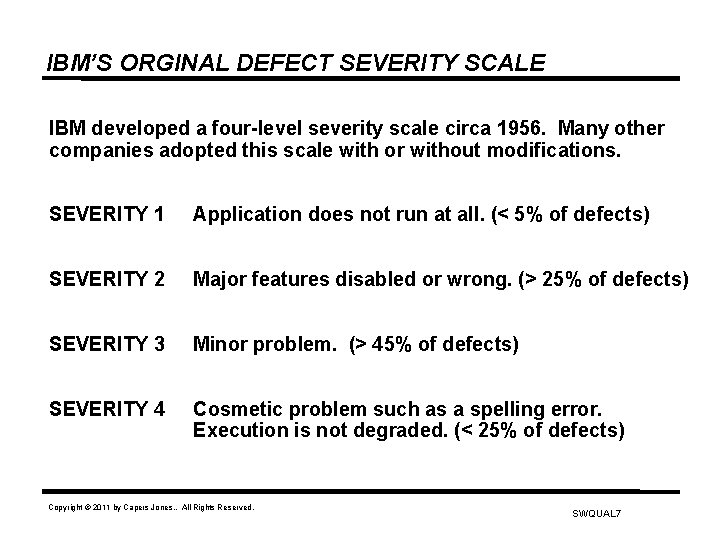

IBM’S ORIGINAL DEFECT SEVERITY SCALE

IBM developed a four-level severity scale circa 1956. Many other companies adopted this scale with or without modifications.

- SEVERITY 1: Application does not run at all. (< 5% of defects)
- SEVERITY 2: Major features disabled or wrong. (> 25% of defects)
- SEVERITY 3: Minor problem. (> 45% of defects)
- SEVERITY 4: Cosmetic problem such as a spelling error. Execution is not degraded. (< 25% of defects)

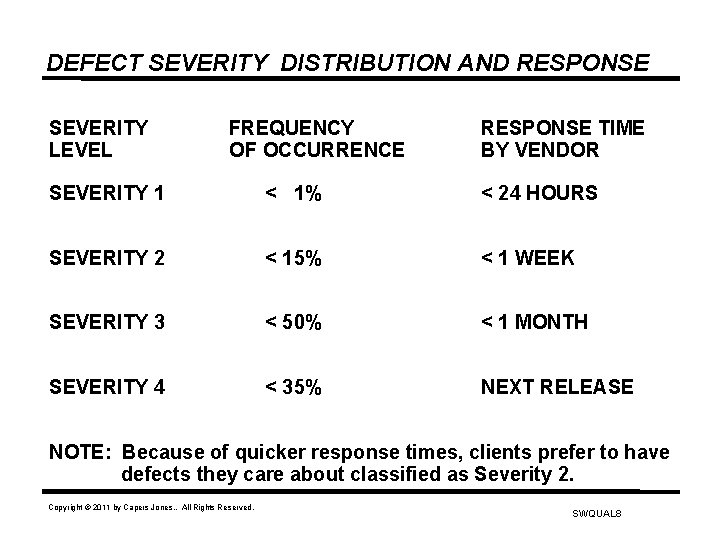

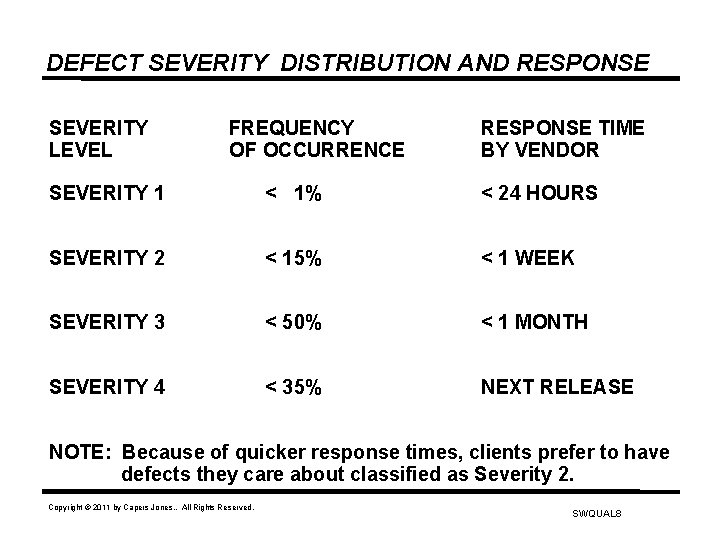

DEFECT SEVERITY DISTRIBUTION AND RESPONSE

| Severity level | Frequency of occurrence | Response time by vendor |
|---|---|---|
| Severity 1 | < 1% | < 24 hours |
| Severity 2 | < 15% | < 1 week |
| Severity 3 | < 50% | < 1 month |
| Severity 4 | < 35% | Next release |

NOTE: Because of quicker response times, clients prefer to have defects they care about classified as Severity 2.

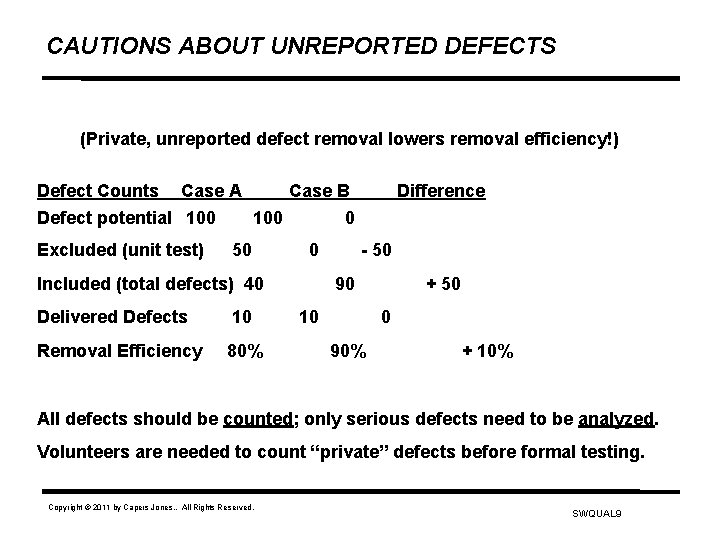

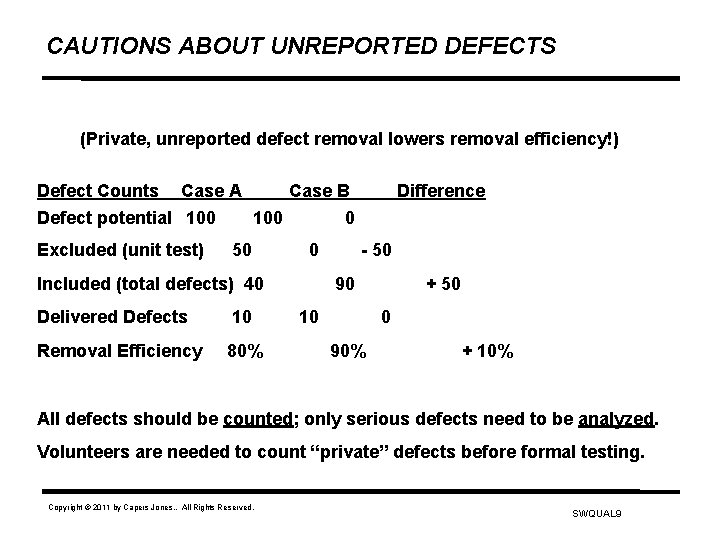

CAUTIONS ABOUT UNREPORTED DEFECTS

(Private, unreported defect removal lowers removal efficiency!)

| Defect counts | Case A | Case B | Difference |
|---|---|---|---|
| Defect potential | 100 | 100 | 0 |
| Excluded (unit test) | 50 | 0 | - 50 |
| Included (total defects) | 40 | 90 | + 50 |
| Delivered defects | 10 | 10 | 0 |
| Removal efficiency | 80% | 90% | + 10% |

All defects should be counted; only serious defects need to be analyzed. Volunteers are needed to count “private” defects before formal testing.
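The two cases on this slide can be checked with a few lines of arithmetic. This is a sketch under the assumption that measured efficiency is computed only from defects that were formally counted plus those delivered; the function name is illustrative.

```python
def measured_efficiency(counted_removed: int, delivered: int) -> float:
    """Removal efficiency as seen by formal counts only."""
    return counted_removed / (counted_removed + delivered)


# Case A: 50 unit-test defects were removed privately and never counted.
case_a = measured_efficiency(counted_removed=40, delivered=10)
# Case B: the same project with every removed defect counted.
case_b = measured_efficiency(counted_removed=90, delivered=10)

print(f"Case A: {case_a:.0%}, Case B: {case_b:.0%}")
```

The same 10 defects are delivered in both cases; only the bookkeeping differs, which is why the slide asks for private defects to be counted.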

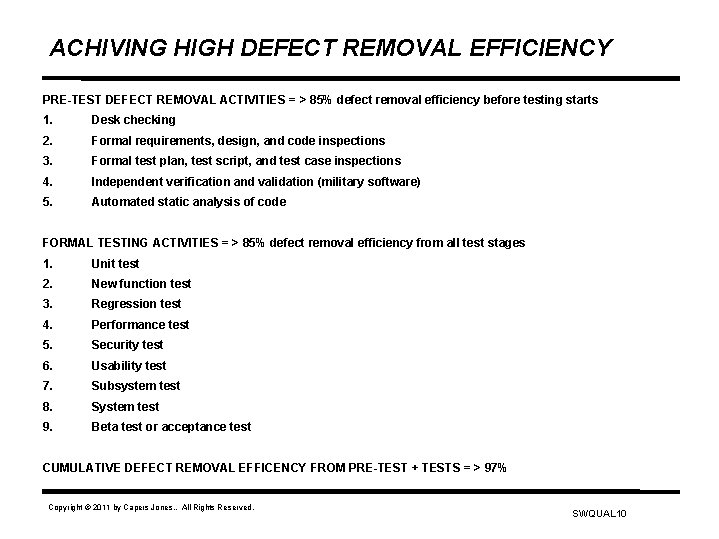

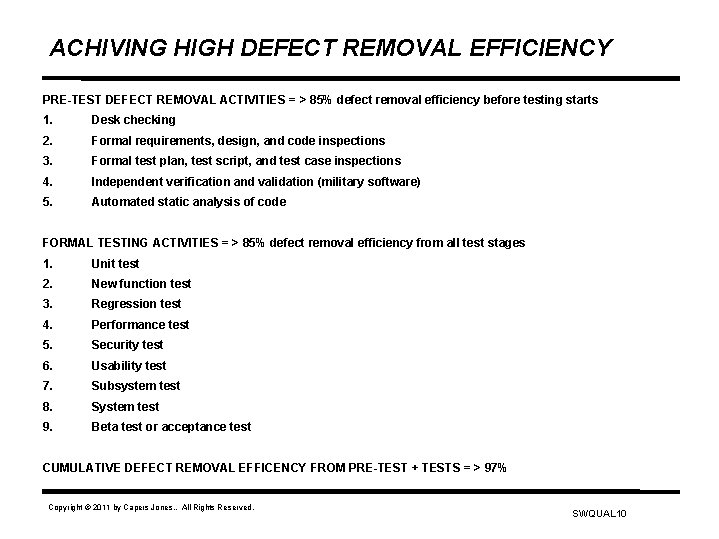

ACHIEVING HIGH DEFECT REMOVAL EFFICIENCY

PRE-TEST DEFECT REMOVAL ACTIVITIES: > 85% defect removal efficiency before testing starts

1. Desk checking
2. Formal requirements, design, and code inspections
3. Formal test plan, test script, and test case inspections
4. Independent verification and validation (military software)
5. Automated static analysis of code

FORMAL TESTING ACTIVITIES: > 85% defect removal efficiency from all test stages

1. Unit test
2. New function test
3. Regression test
4. Performance test
5. Security test
6. Usability test
7. Subsystem test
8. System test
9. Beta test or acceptance test

CUMULATIVE DEFECT REMOVAL EFFICIENCY FROM PRE-TEST + TESTS: > 97%
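One way to see how two 85% phases reach a cumulative figure above 97% is to treat them as filters in series. The sketch below assumes each phase removes a fixed share of the defects that reach it and ignores bad-fix injection; that model is an assumption for illustration, not something the slide states.

```python
from typing import Iterable


def cumulative_efficiency(stage_efficiencies: Iterable[float]) -> float:
    """Combined efficiency of removal stages applied one after another."""
    escaping = 1.0
    for efficiency in stage_efficiencies:
        escaping *= 1.0 - efficiency  # share of defects that slip through
    return 1.0 - escaping


# Pre-test removal at 85%, then all test stages together at 85%.
total = cumulative_efficiency([0.85, 0.85])
print(f"Cumulative efficiency: {total:.2%}")
```

Under these assumptions 15% of defects escape pre-test removal and 15% of those escape testing, so roughly 2.25% are delivered, which is consistent with the slide's "> 97%".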

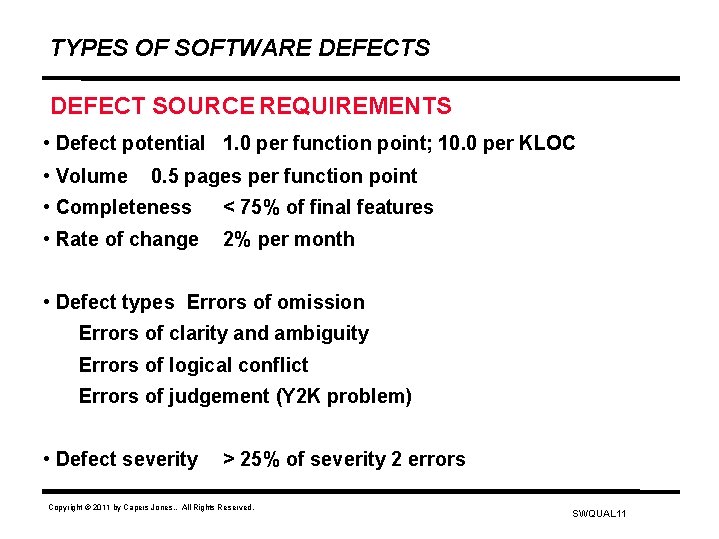

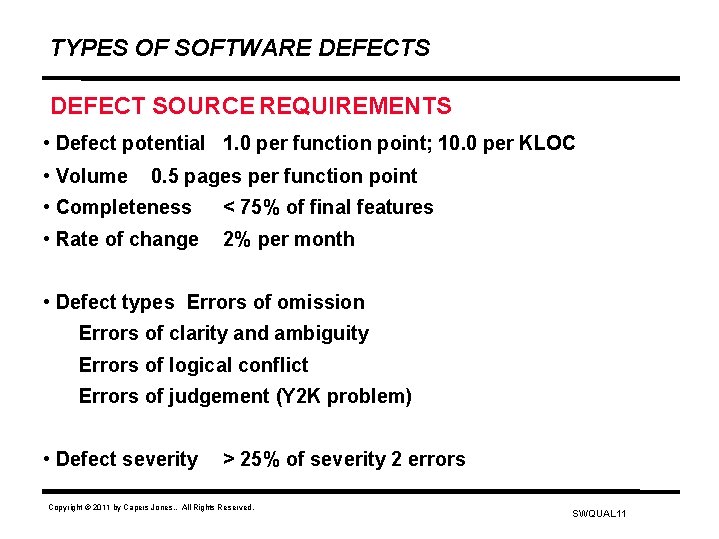

TYPES OF SOFTWARE DEFECTS

Defect source: REQUIREMENTS

- Defect potential: 1.0 per function point; 10.0 per KLOC
- Volume: 0.5 pages per function point
- Completeness: < 75% of final features
- Rate of change: 2% per month
- Defect types: errors of omission; errors of clarity and ambiguity; errors of logical conflict; errors of judgement (Y2K problem)
- Defect severity: > 25% of severity 2 errors

TYPES OF SOFTWARE DEFECTS

Defect source: DESIGN

- Defect potential: 1.25 per function point; 12.5 per KLOC
- Volume: 2.5 pages per function point (in total)
- Completeness: < 65% of final features
- Rate of change: 2% per month
- Defect types: errors of omission; errors of clarity and ambiguity; errors of logical conflict; errors of architecture and structure
- Defect severity: > 25% of severity 2 errors

TYPES OF SOFTWARE DEFECTS

Defect source: CODE

- Defect potential: 1.75 per function point; 17.5 per KLOC
- Volume: varies by programming language
- Completeness: 100% of final features
- Dead code: > 10%; grows larger over time
- Rate of change: 5% per month
- Defect types: errors of control flow; errors of memory management; errors of complexity and structure
- Defect severity: > 50% of severity 1 errors

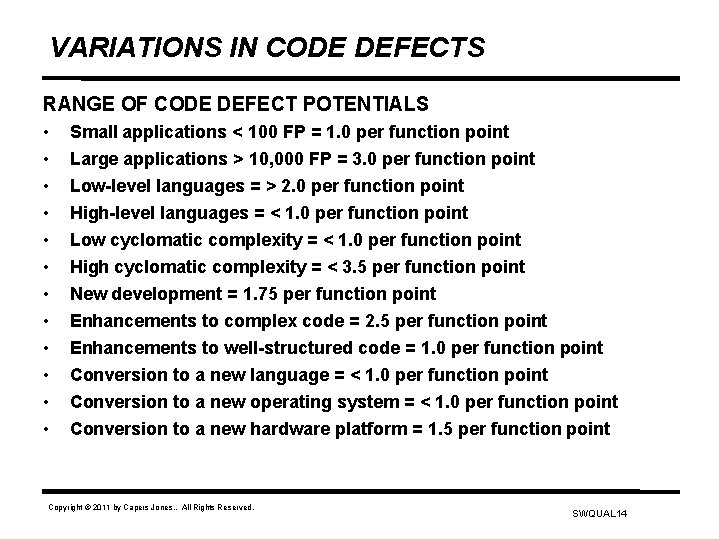

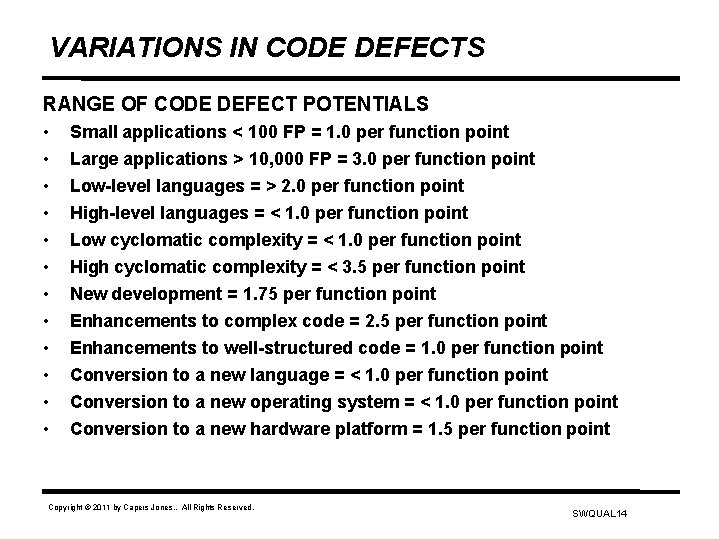

VARIATIONS IN CODE DEFECTS

RANGE OF CODE DEFECT POTENTIALS

- Small applications < 100 FP = 1.0 per function point
- Large applications > 10,000 FP = 3.0 per function point
- Low-level languages = > 2.0 per function point
- High-level languages = < 1.0 per function point
- Low cyclomatic complexity = < 1.0 per function point
- High cyclomatic complexity = < 3.5 per function point
- New development = 1.75 per function point
- Enhancements to complex code = 2.5 per function point
- Enhancements to well-structured code = 1.0 per function point
- Conversion to a new language = < 1.0 per function point
- Conversion to a new operating system = < 1.0 per function point
- Conversion to a new hardware platform = 1.5 per function point

EXAMPLES OF TYPICAL CODE DEFECTS

SOURCES: SANS INSTITUTE AND MITRE (www.SANS.org and www.CWE-MITRE.org)

- Errors in SQL queries
- Failure to validate inputs
- Failure to validate outputs
- Race conditions
- Leaks from error messages
- Unconstrained memory buffers
- Loss of state data
- Incorrect branches; hazardous paths
- Careless initialization and shutdown
- Errors in calculations and algorithms
- Hard coding of variable items
- Reusing code without validation or context checking
- Changing code without changing comments that explain code

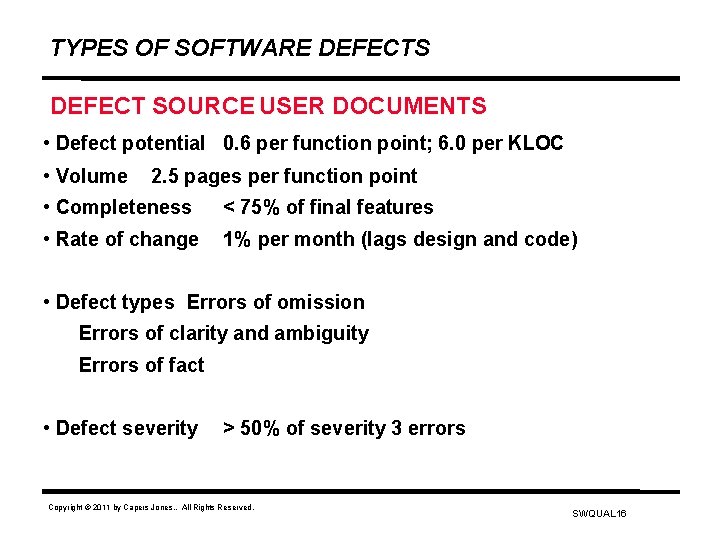

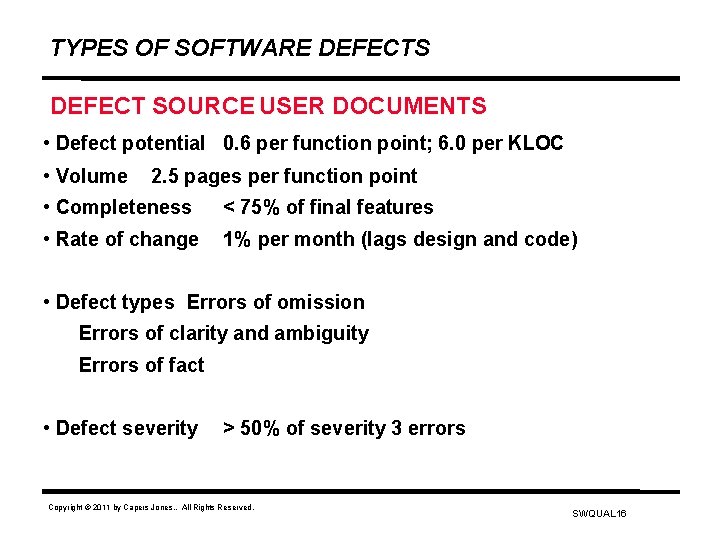

TYPES OF SOFTWARE DEFECTS

Defect source: USER DOCUMENTS

- Defect potential: 0.6 per function point; 6.0 per KLOC
- Volume: 2.5 pages per function point
- Completeness: < 75% of final features
- Rate of change: 1% per month (lags design and code)
- Defect types: errors of omission; errors of clarity and ambiguity; errors of fact
- Defect severity: > 50% of severity 3 errors

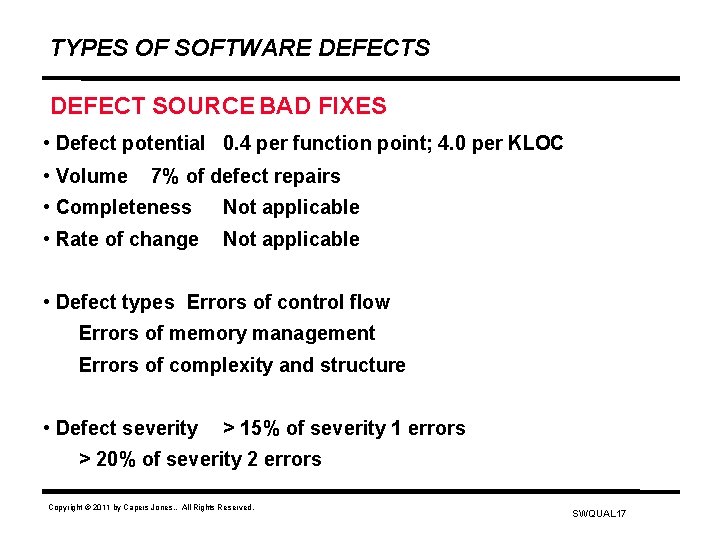

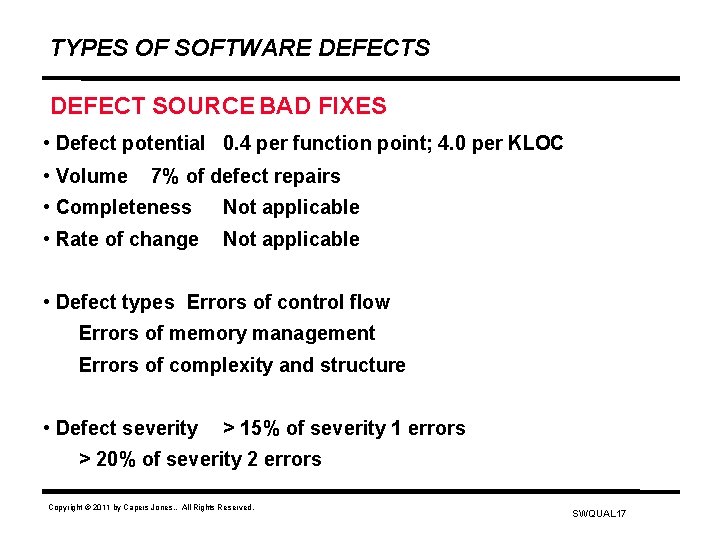

TYPES OF SOFTWARE DEFECTS

Defect source: BAD FIXES

- Defect potential: 0.4 per function point; 4.0 per KLOC
- Volume: 7% of defect repairs
- Completeness: not applicable
- Rate of change: not applicable
- Defect types: errors of control flow; errors of memory management; errors of complexity and structure
- Defect severity: > 15% of severity 1 errors; > 20% of severity 2 errors
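The per-function-point potentials quoted for requirements, design, code, user documents, and bad fixes correspond to the five categories named in the DEFECT POTENTIAL definition, so they can be summed into an overall estimate. The sketch below does that for a hypothetical 1,000 function point application; the size and the helper name are assumptions, while the rates are the ones on the slides.

```python
# Defect potentials per function point, as quoted on the defect-source slides.
DEFECT_POTENTIAL_PER_FP = {
    "requirements": 1.0,
    "design": 1.25,
    "code": 1.75,
    "user documents": 0.6,
    "bad fixes": 0.4,
}


def defect_potential(function_points: float) -> dict:
    """Predicted defects by source for an application of the given size."""
    return {
        source: rate * function_points
        for source, rate in DEFECT_POTENTIAL_PER_FP.items()
    }


by_source = defect_potential(1000)  # hypothetical application size
total_defects = sum(by_source.values())

for source, count in by_source.items():
    print(f"{source:15s} {count:8.0f}")
print(f"{'total':15s} {total_defects:8.0f}")
```

With these rates the total comes to about 5.0 defects per function point. Test-case defects, covered on the following slides, are tracked separately and are not included here.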

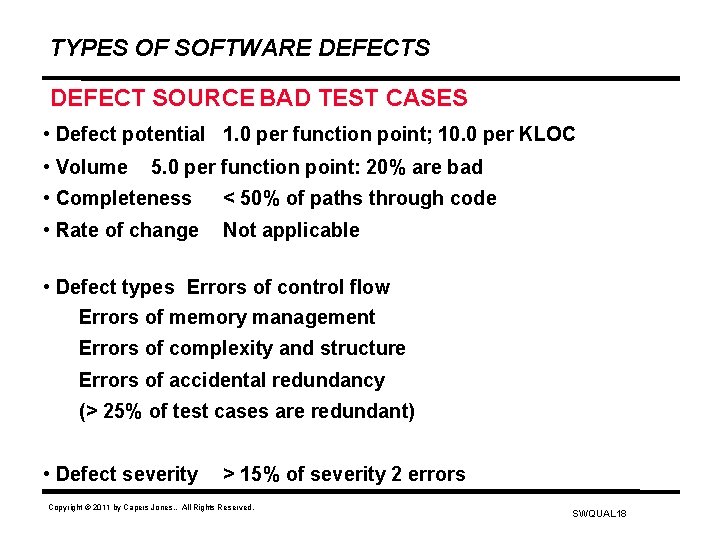

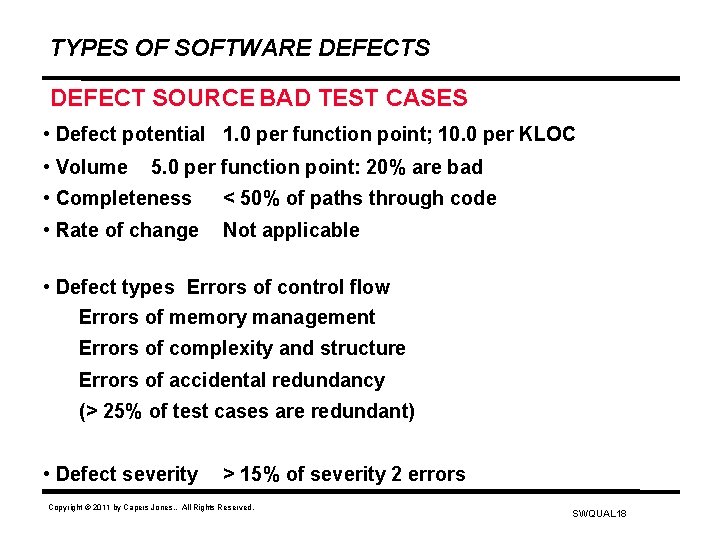

TYPES OF SOFTWARE DEFECTS

Defect source: BAD TEST CASES

- Defect potential: 1.0 per function point; 10.0 per KLOC
- Volume: 5.0 per function point; 20% are bad
- Completeness: < 50% of paths through code
- Rate of change: not applicable
- Defect types: errors of control flow; errors of memory management; errors of complexity and structure; errors of accidental redundancy (> 25% of test cases are redundant)
- Defect severity: > 15% of severity 2 errors

TYPES OF SOFTWARE DEFECTS

Defect source: AUTOMATIC TEST CASES

- Defect potential: 0.5 per function point; 5.0 per KLOC
- Volume: 5.0 per function point; 15% are bad
- Completeness: < 75% of paths through code
- Rate of change: 2% per month
- Defect types: errors of control flow; errors of omission (not in design); errors of complexity and structure; errors of accidental redundancy (> 25% of test cases are redundant)
- Defect severity: > 15% of severity 2 errors

FORMS OF SOFTWARE DEFECT REMOVAL

STATIC ANALYSIS

- Requirement inspections
- Design inspections
- Code inspections
- Automated static analysis
- Informal peer reviews
- Personal desk checking
- Test plan inspections
- Test case inspections
- Document editing
- Independent verification and validation (IV&V)
- Independent audits

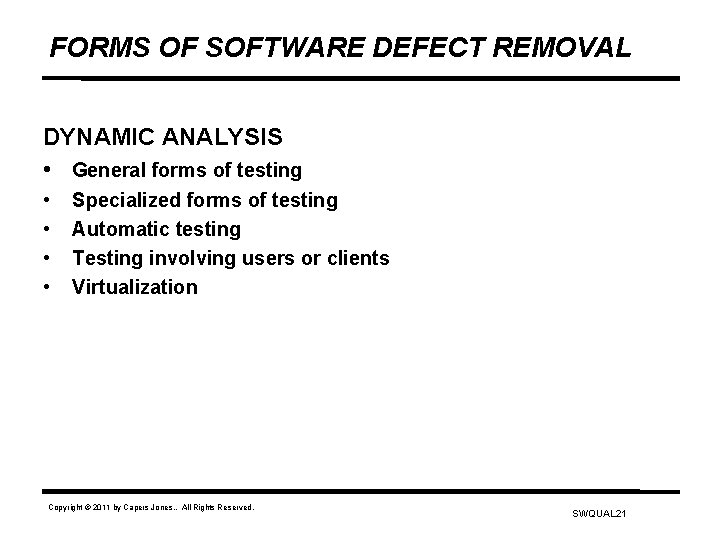

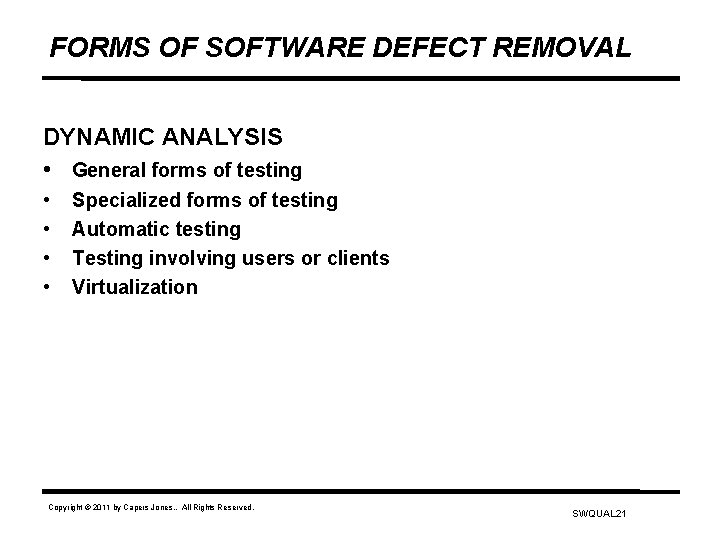

FORMS OF SOFTWARE DEFECT REMOVAL

DYNAMIC ANALYSIS

- General forms of testing
- Specialized forms of testing
- Automatic testing
- Testing involving users or clients
- Virtualization

FORMS OF SOFTWARE TESTING

GENERAL FORMS OF SOFTWARE TESTING

- Subroutine testing
- Extreme Programming (XP) testing
- Unit testing
- Component testing
- New function testing
- Regression testing
- Integration testing
- System testing

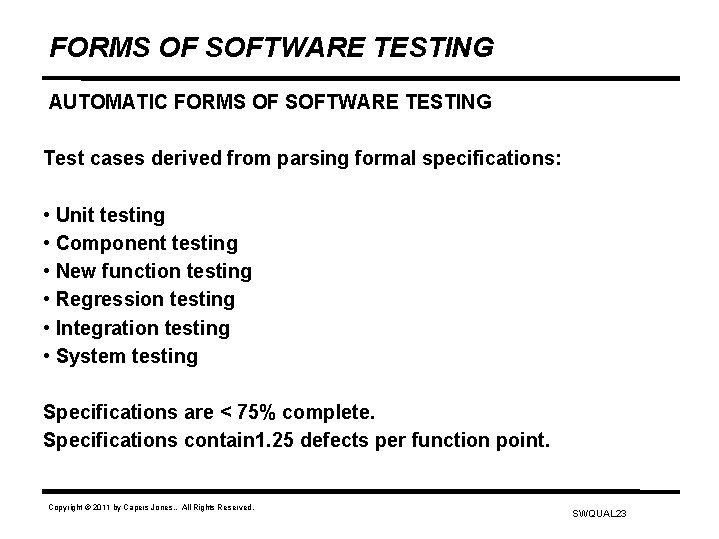

FORMS OF SOFTWARE TESTING

AUTOMATIC FORMS OF SOFTWARE TESTING

Test cases derived from parsing formal specifications:

- Unit testing
- Component testing
- New function testing
- Regression testing
- Integration testing
- System testing

Specifications are < 75% complete. Specifications contain 1.25 defects per function point.

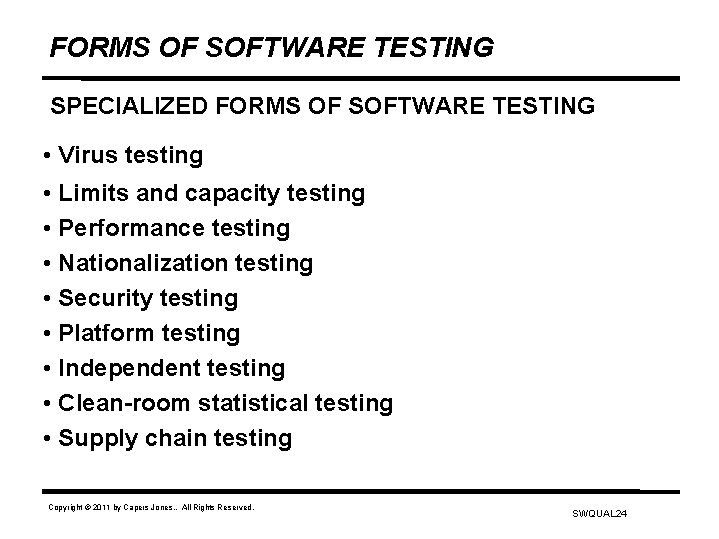

FORMS OF SOFTWARE TESTING

SPECIALIZED FORMS OF SOFTWARE TESTING

- Virus testing
- Limits and capacity testing
- Performance testing
- Nationalization testing
- Security testing
- Platform testing
- Independent testing
- Clean-room statistical testing
- Supply chain testing

FORMS OF SOFTWARE TESTING WITH USERS

- Pre-purchase testing
- Acceptance testing
- External Beta testing
- Usability testing
- Laboratory testing

FORMS OF DEFECT REMOVAL: SOFTWARE STATIC ANALYSIS

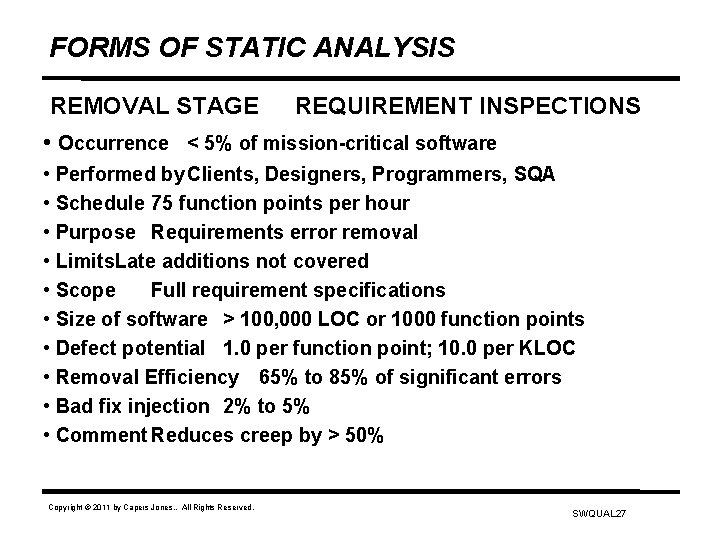

FORMS OF STATIC ANALYSIS

Removal stage: REQUIREMENT INSPECTIONS

- Occurrence: < 5% of mission-critical software
- Performed by: Clients, Designers, Programmers, SQA
- Schedule: 75 function points per hour
- Purpose: Requirements error removal
- Limits: Late additions not covered
- Scope: Full requirement specifications
- Size of software: > 100,000 LOC or 1000 function points
- Defect potential: 1.0 per function point; 10.0 per KLOC
- Removal efficiency: 65% to 85% of significant errors
- Bad fix injection: 2% to 5%
- Comment: Reduces creep by > 50%

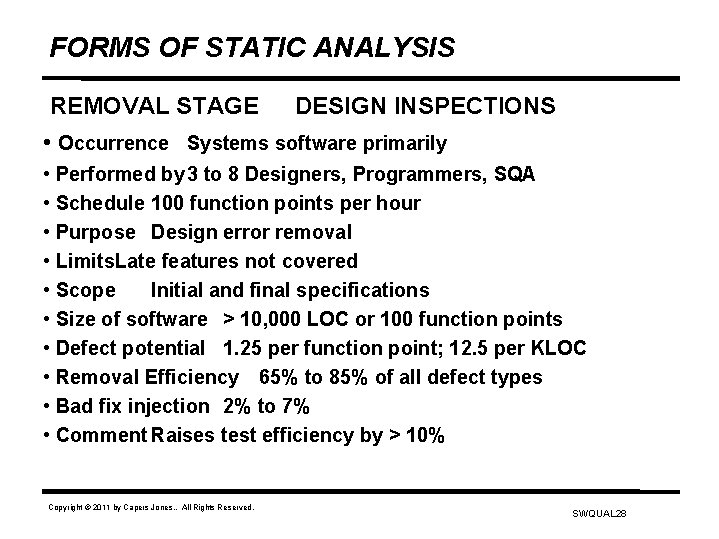

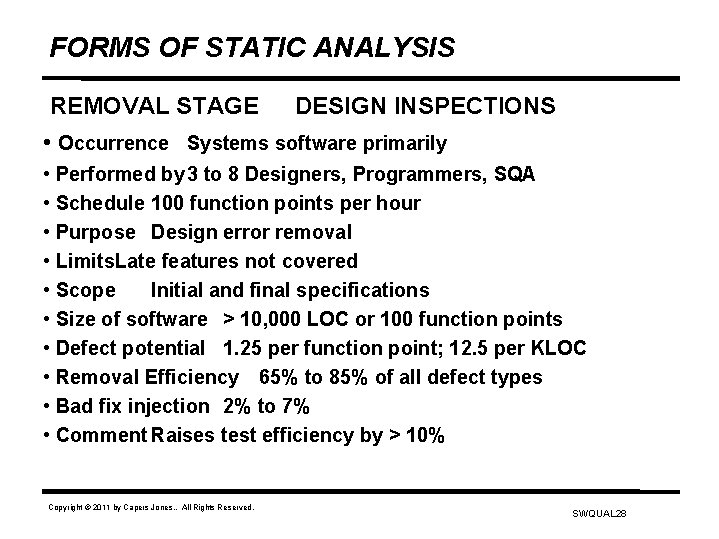

FORMS OF STATIC ANALYSIS

Removal stage: DESIGN INSPECTIONS

- Occurrence: Systems software primarily
- Performed by: 3 to 8 Designers, Programmers, SQA
- Schedule: 100 function points per hour
- Purpose: Design error removal
- Limits: Late features not covered
- Scope: Initial and final specifications
- Size of software: > 10,000 LOC or 100 function points
- Defect potential: 1.25 per function point; 12.5 per KLOC
- Removal efficiency: 65% to 85% of all defect types
- Bad fix injection: 2% to 7%
- Comment: Raises test efficiency by > 10%

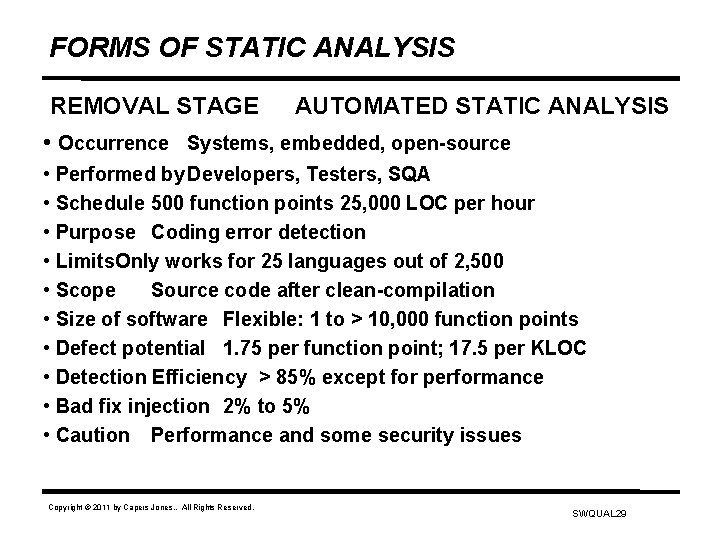

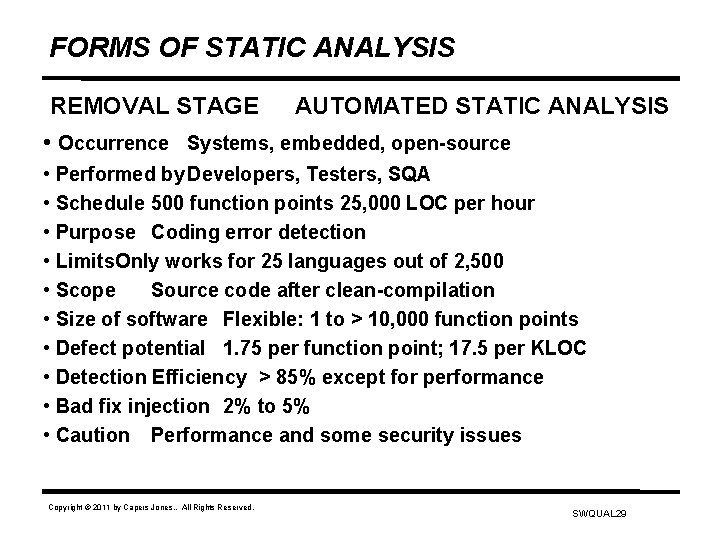

FORMS OF STATIC ANALYSIS

Removal stage: AUTOMATED STATIC ANALYSIS

- Occurrence: Systems, embedded, open-source
- Performed by: Developers, Testers, SQA
- Schedule: 500 function points; 25,000 LOC per hour
- Purpose: Coding error detection
- Limits: Only works for 25 languages out of 2,500
- Scope: Source code after clean compilation
- Size of software: Flexible: 1 to > 10,000 function points
- Defect potential: 1.75 per function point; 17.5 per KLOC
- Detection efficiency: > 85% except for performance
- Bad fix injection: 2% to 5%
- Caution: Performance and some security issues

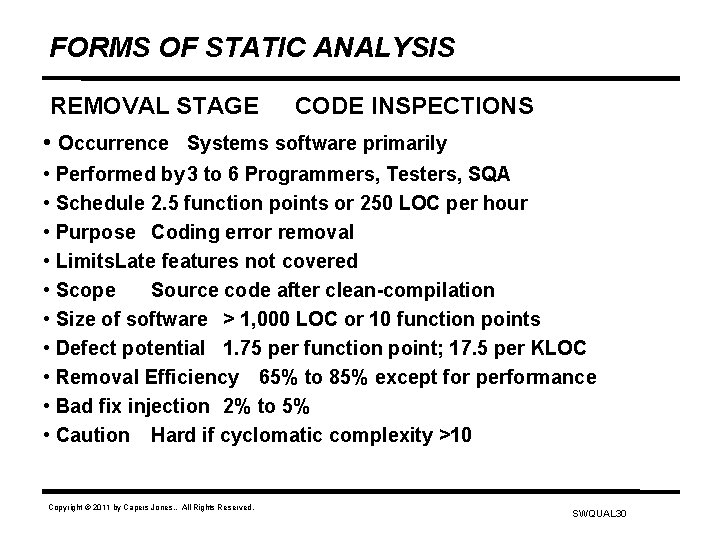

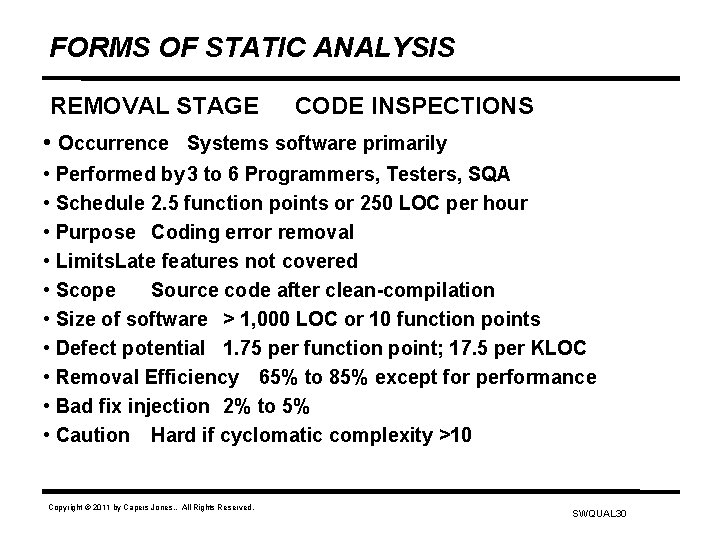

FORMS OF STATIC ANALYSIS

Removal stage: CODE INSPECTIONS

- Occurrence: Systems software primarily
- Performed by: 3 to 6 Programmers, Testers, SQA
- Schedule: 2.5 function points or 250 LOC per hour
- Purpose: Coding error removal
- Limits: Late features not covered
- Scope: Source code after clean compilation
- Size of software: > 1,000 LOC or 10 function points
- Defect potential: 1.75 per function point; 17.5 per KLOC
- Removal efficiency: 65% to 85% except for performance
- Bad fix injection: 2% to 5%
- Caution: Hard if cyclomatic complexity > 10

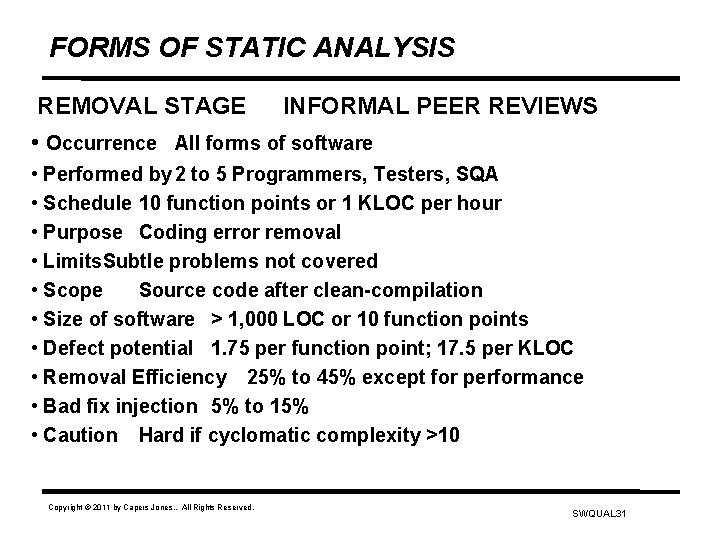

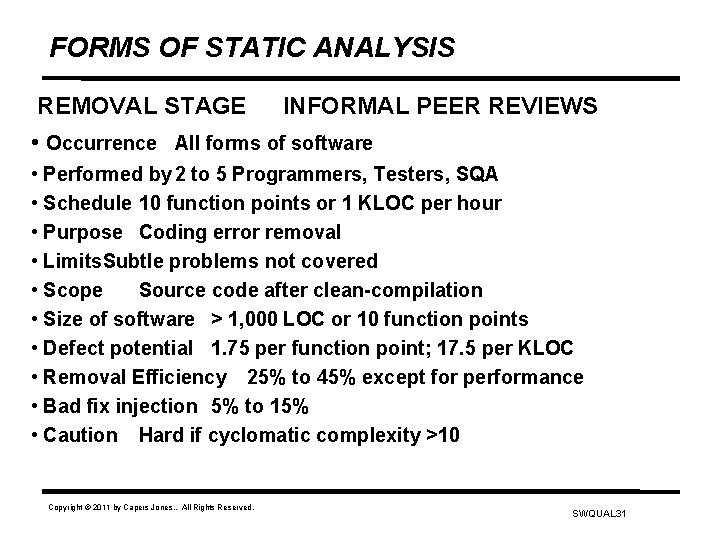

FORMS OF STATIC ANALYSIS

Removal stage: INFORMAL PEER REVIEWS

- Occurrence: All forms of software
- Performed by: 2 to 5 Programmers, Testers, SQA
- Schedule: 10 function points or 1 KLOC per hour
- Purpose: Coding error removal
- Limits: Subtle problems not covered
- Scope: Source code after clean compilation
- Size of software: > 1,000 LOC or 10 function points
- Defect potential: 1.75 per function point; 17.5 per KLOC
- Removal efficiency: 25% to 45% except for performance
- Bad fix injection: 5% to 15%
- Caution: Hard if cyclomatic complexity > 10

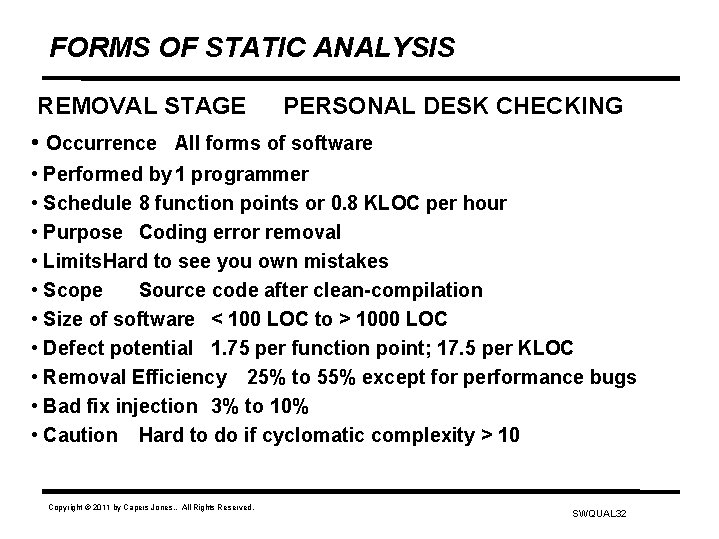

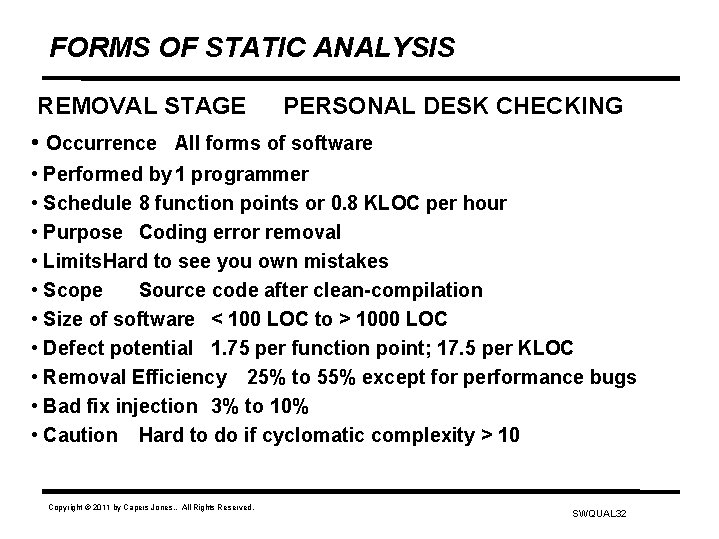

FORMS OF STATIC ANALYSIS

Removal stage: PERSONAL DESK CHECKING

- Occurrence: All forms of software
- Performed by: 1 programmer
- Schedule: 8 function points or 0.8 KLOC per hour
- Purpose: Coding error removal
- Limits: Hard to see your own mistakes
- Scope: Source code after clean compilation
- Size of software: < 100 LOC to > 1000 LOC
- Defect potential: 1.75 per function point; 17.5 per KLOC
- Removal efficiency: 25% to 55% except for performance bugs
- Bad fix injection: 3% to 10%
- Caution: Hard to do if cyclomatic complexity > 10

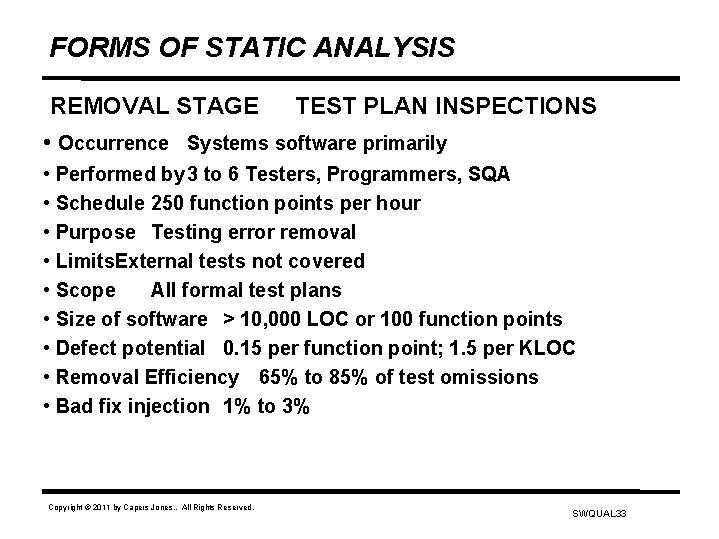

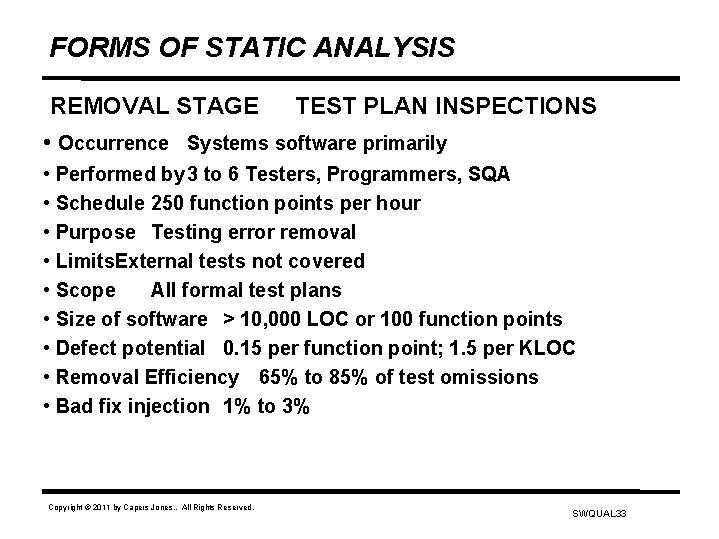

FORMS OF STATIC ANALYSIS

Removal stage: TEST PLAN INSPECTIONS

- Occurrence: Systems software primarily
- Performed by: 3 to 6 Testers, Programmers, SQA
- Schedule: 250 function points per hour
- Purpose: Testing error removal
- Limits: External tests not covered
- Scope: All formal test plans
- Size of software: > 10,000 LOC or 100 function points
- Defect potential: 0.15 per function point; 1.5 per KLOC
- Removal efficiency: 65% to 85% of test omissions
- Bad fix injection: 1% to 3%

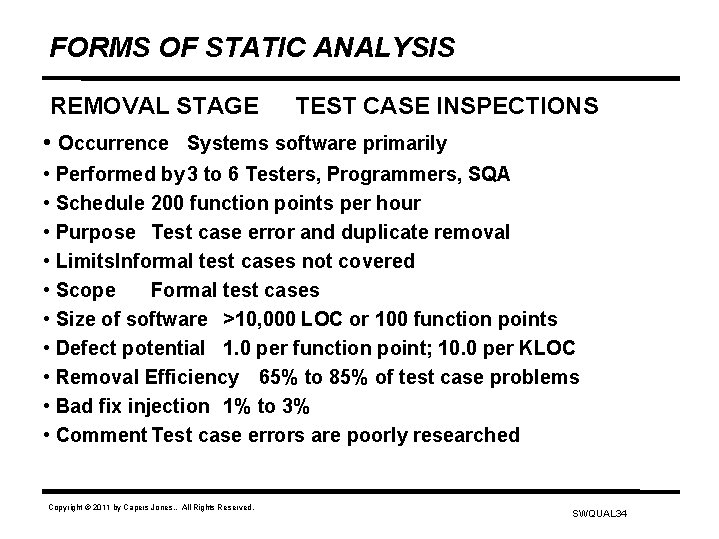

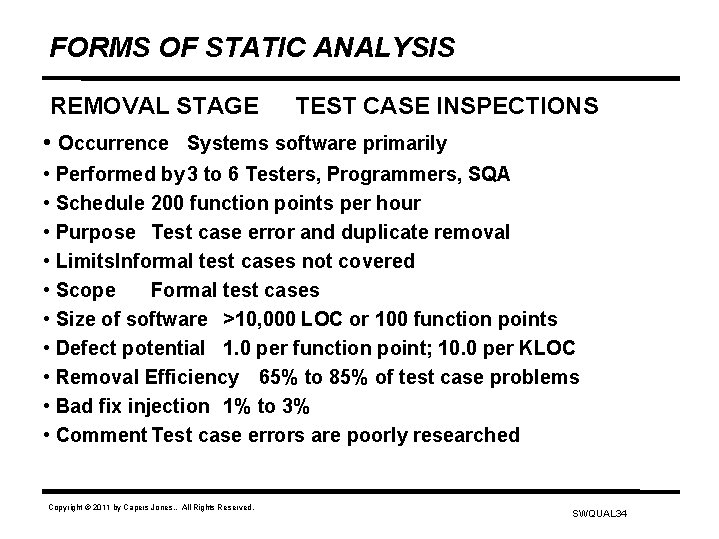

FORMS OF STATIC ANALYSIS

Removal stage: TEST CASE INSPECTIONS

- Occurrence: Systems software primarily
- Performed by: 3 to 6 Testers, Programmers, SQA
- Schedule: 200 function points per hour
- Purpose: Test case error and duplicate removal
- Limits: Informal test cases not covered
- Scope: Formal test cases
- Size of software: > 10,000 LOC or 100 function points
- Defect potential: 1.0 per function point; 10.0 per KLOC
- Removal efficiency: 65% to 85% of test case problems
- Bad fix injection: 1% to 3%
- Comment: Test case errors are poorly researched

FORMS OF STATIC ANALYSIS

Removal stage: DOCUMENT EDITING

- Occurrence: Commercial software primarily
- Performed by: Editors, SQA
- Schedule: 100 function points per hour
- Purpose: Document error removal
- Limits: Late code changes not covered
- Scope: Paper documents and screens
- Size of software: > 10,000 LOC or 100 function points
- Defect potential: 0.6 per function point; 6.0 per KLOC
- Removal efficiency: 70% to 95% of errors of style
- Bad fix injection: 1% to 3%
- Comment: Document errors are often severity 3

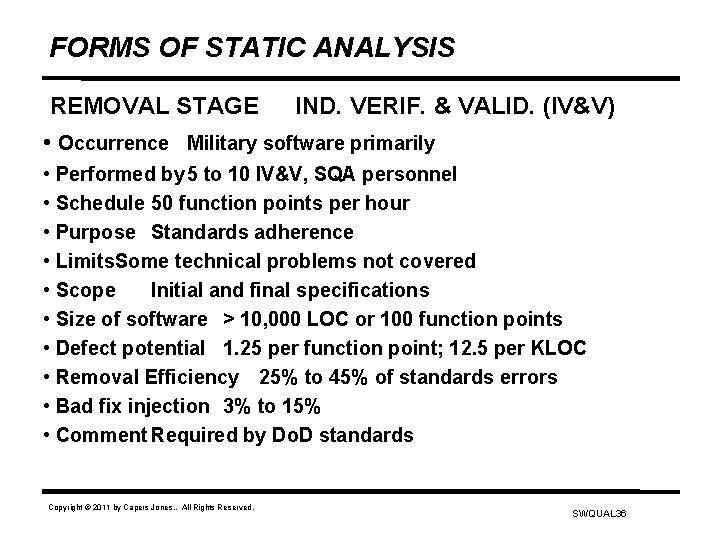

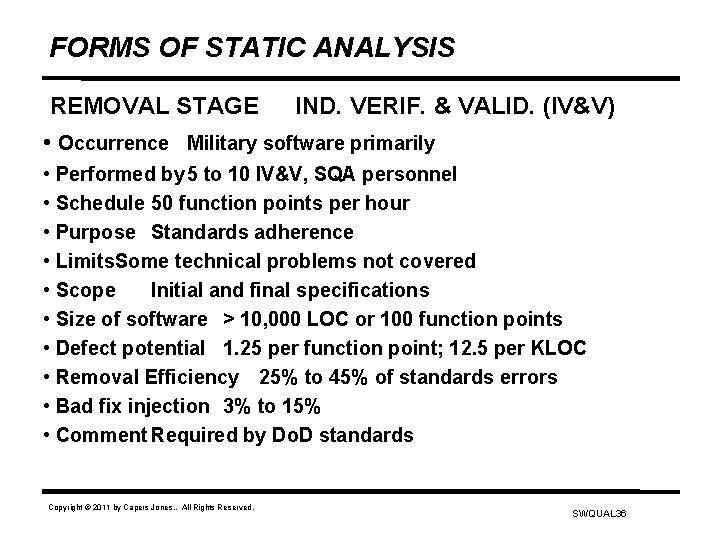

FORMS OF STATIC ANALYSIS

Removal stage: INDEPENDENT VERIFICATION AND VALIDATION (IV&V)

- Occurrence: Military software primarily
- Performed by: 5 to 10 IV&V, SQA personnel
- Schedule: 50 function points per hour
- Purpose: Standards adherence
- Limits: Some technical problems not covered
- Scope: Initial and final specifications
- Size of software: > 10,000 LOC or 100 function points
- Defect potential: 1.25 per function point; 12.5 per KLOC
- Removal efficiency: 25% to 45% of standards errors
- Bad fix injection: 3% to 15%
- Comment: Required by DoD standards

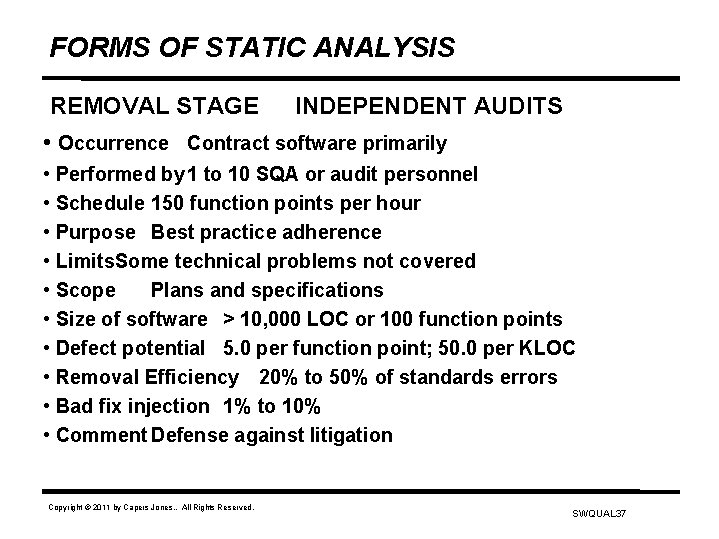

FORMS OF STATIC ANALYSIS

Removal stage: INDEPENDENT AUDITS

- Occurrence: Contract software primarily
- Performed by: 1 to 10 SQA or audit personnel
- Schedule: 150 function points per hour
- Purpose: Best practice adherence
- Limits: Some technical problems not covered
- Scope: Plans and specifications
- Size of software: > 10,000 LOC or 100 function points
- Defect potential: 5.0 per function point; 50.0 per KLOC
- Removal efficiency: 20% to 50% of standards errors
- Bad fix injection: 1% to 10%
- Comment: Defense against litigation

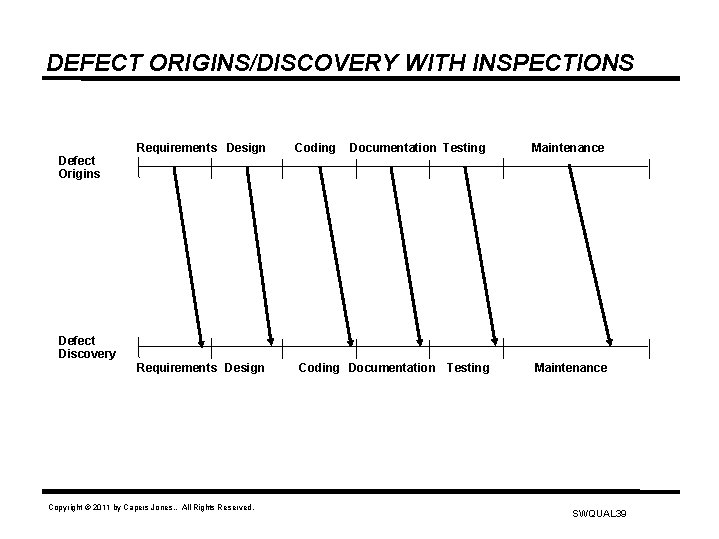

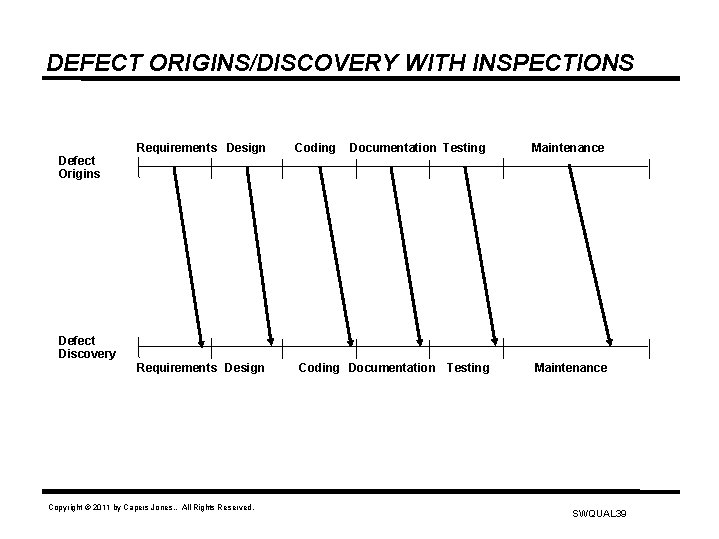

NORMAL DEFECT ORIGIN/DISCOVERY GAPS

[Figure: defect origins (Requirements, Design, Coding, Documentation, Testing, Maintenance) plotted against defect discovery, with a region labeled “Zone of Chaos”.]

DEFECT ORIGINS/DISCOVERY WITH INSPECTIONS

[Figure: defect origins (Requirements, Design, Coding, Documentation, Testing, Maintenance) plotted against defect discovery in the same phases (Requirements, Design, Coding, Documentation, Testing, Maintenance).]

LOGISTICS OF SOFTWARE INSPECTIONS

- Inspections most often used on “mission critical” software
- Full inspections of 100% of design and code are best
- From 3 to 8 team members for each inspection
- Team includes SQA, testers, tech writers, software engineers
- Every inspection has a “moderator” and a “recorder”
- Preparation time starts 1 week prior to inspection session
- Many defects are found during preparation
- Inspections can be live, or remote using groupware tools

LOGISTICS OF SOFTWARE INSPECTIONS

- Inspection sessions limited to 2 hour duration
- No more than 2 inspection sessions per business day
- Inspections find problems: repairs take place off-line
- Moderator follows up to check status of repairs
- Team determines if a problem is a defect or an enhancement
- Inspections are peer reviews: no managers are present
- Inspection defect data should not be used for appraisals
- Remote on-line inspections are now very cost effective

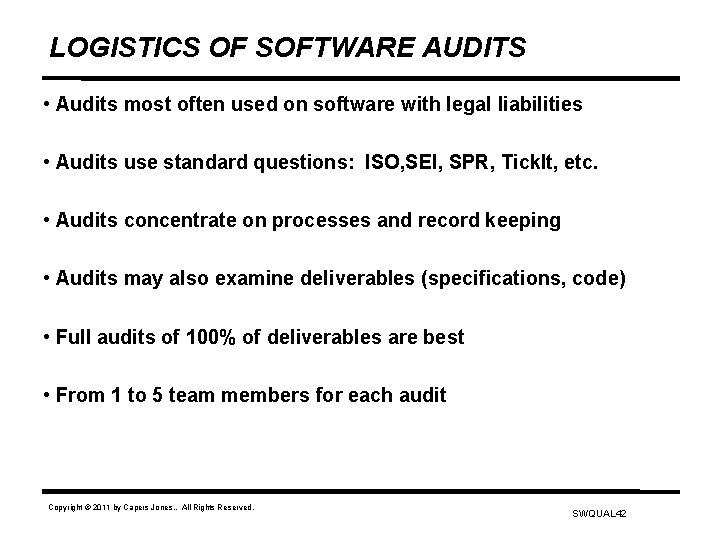

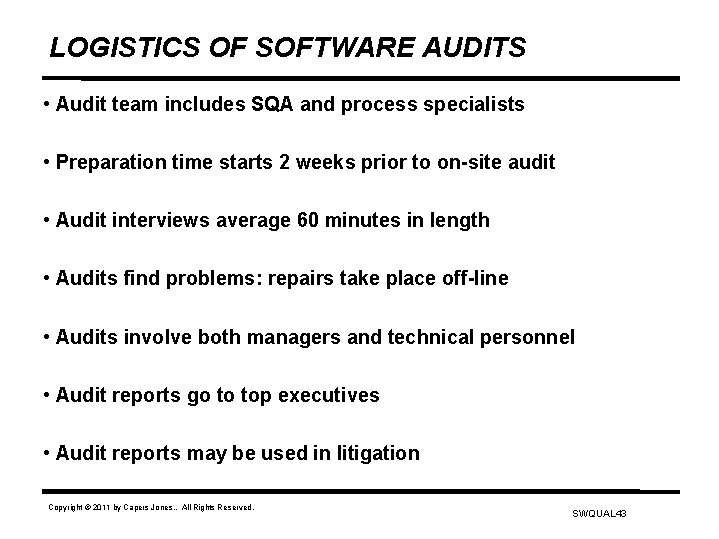

LOGISTICS OF SOFTWARE AUDITS

- Audits most often used on software with legal liabilities
- Audits use standard questions: ISO, SEI, SPR, TickIT, etc.
- Audits concentrate on processes and record keeping
- Audits may also examine deliverables (specifications, code)
- Full audits of 100% of deliverables are best
- From 1 to 5 team members for each audit

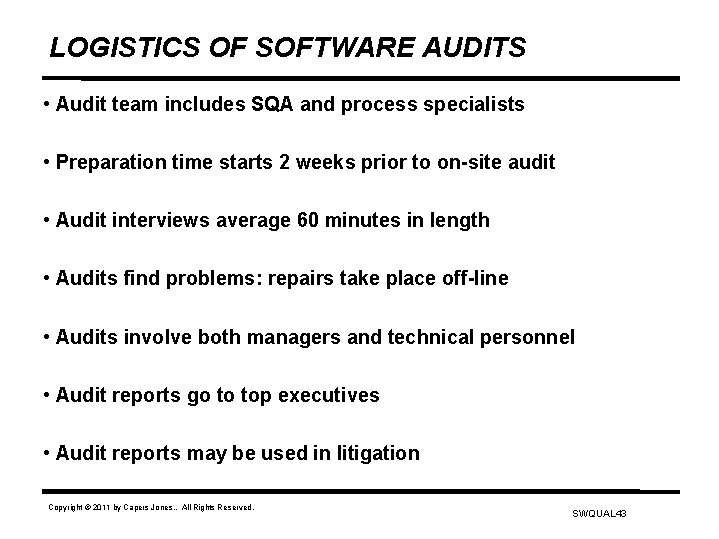

LOGISTICS OF SOFTWARE AUDITS

- Audit team includes SQA and process specialists
- Preparation time starts 2 weeks prior to on-site audit
- Audit interviews average 60 minutes in length
- Audits find problems: repairs take place off-line
- Audits involve both managers and technical personnel
- Audit reports go to top executives
- Audit reports may be used in litigation

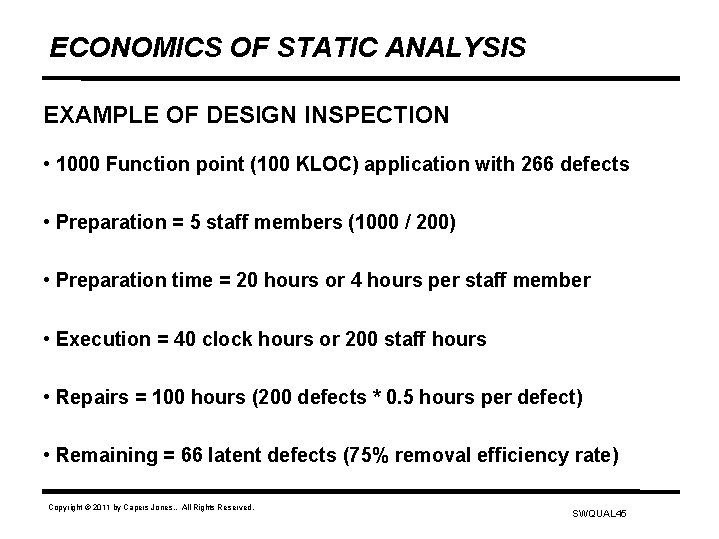

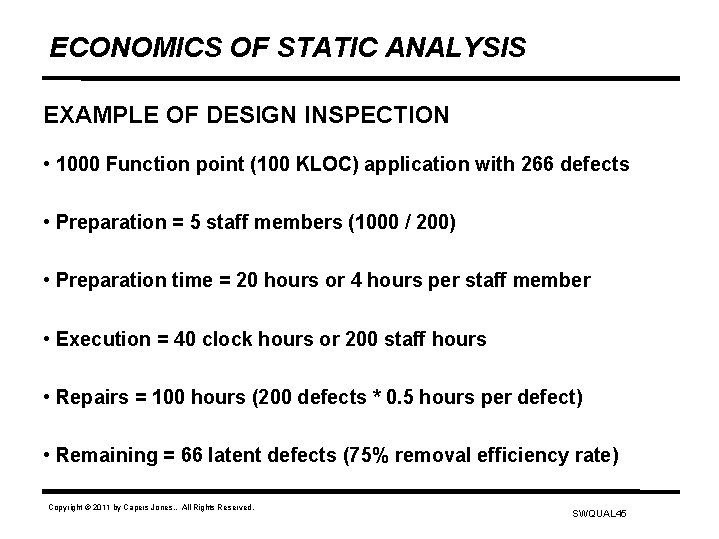

ECONOMICS OF STATIC ANALYSIS

EXAMPLE OF DESIGN INSPECTION

- Assignment scope: 200 function points
- Defect potential: 266 design errors
- Preparation: 50.0 function points per staff hour
- Execution: 25.0 function points per hour
- Repairs: 0.5 staff hours per defect
- Efficiency: > 75.0% of latent errors detected

ECONOMICS OF STATIC ANALYSIS

EXAMPLE OF DESIGN INSPECTION

- 1000 function point (100 KLOC) application with 266 defects
- Preparation = 5 staff members (1000 / 200)
- Preparation time = 20 hours or 4 hours per staff member
- Execution = 40 clock hours or 200 staff hours
- Repairs = 100 hours (200 defects * 0.5 hours per defect)
- Remaining = 66 latent defects (75% removal efficiency rate)
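The arithmetic behind this design inspection example can be reproduced directly from the rates given. The sketch below uses only the slide's inputs; the class and field names are illustrative, and rounding the defects found to a whole number mirrors the slide's own rounding of 199.5 to 200.

```python
from dataclasses import dataclass


@dataclass
class InspectionInputs:
    size_fp: float = 1000.0              # application size in function points
    defects: int = 266                   # design defects present
    assignment_scope_fp: float = 200.0   # function points per inspector
    prep_fp_per_hour: float = 50.0       # preparation rate
    exec_fp_per_hour: float = 25.0       # inspection session rate
    repair_hours_per_defect: float = 0.5
    efficiency: float = 0.75


def estimate(inputs: InspectionInputs) -> dict:
    staff = inputs.size_fp / inputs.assignment_scope_fp
    prep_hours = inputs.size_fp / inputs.prep_fp_per_hour
    exec_clock_hours = inputs.size_fp / inputs.exec_fp_per_hour
    found = round(inputs.defects * inputs.efficiency)  # 199.5 rounds to 200
    return {
        "staff": staff,
        "prep_hours": prep_hours,
        "prep_hours_per_person": prep_hours / staff,
        "exec_clock_hours": exec_clock_hours,
        "exec_staff_hours": exec_clock_hours * staff,
        "defects_found": found,
        "repair_hours": found * inputs.repair_hours_per_defect,
        "latent_defects": inputs.defects - found,
    }


result = estimate(InspectionInputs())
for name, value in result.items():
    print(f"{name:22s} {value:g}")
```

Changing `efficiency` or the two rates shows how sensitive total effort is to inspection pace versus repair cost.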

FORMS OF DEFECT REMOVAL: GENERAL SOFTWARE TESTING

GENERAL FORMS OF SOFTWARE TESTING

Test: SUBROUTINE TEST

- Occurrence: All types of software
- Performed by: Programmers
- Schedule: 2.0 function points or 200 LOC per hour
- Purpose: Coding error removal
- Limits: Interfaces and control flow not tested
- Scope: Individual subroutines
- Size tested: 10 LOC or 0.1 function point
- Test cases: 0.25 per function point
- Test case errors: 20%
- Test runs: 3.0 per test case
- Removal efficiency: 50% to 70% of logic and coding errors
- Bad fix injection: 2% to 5%

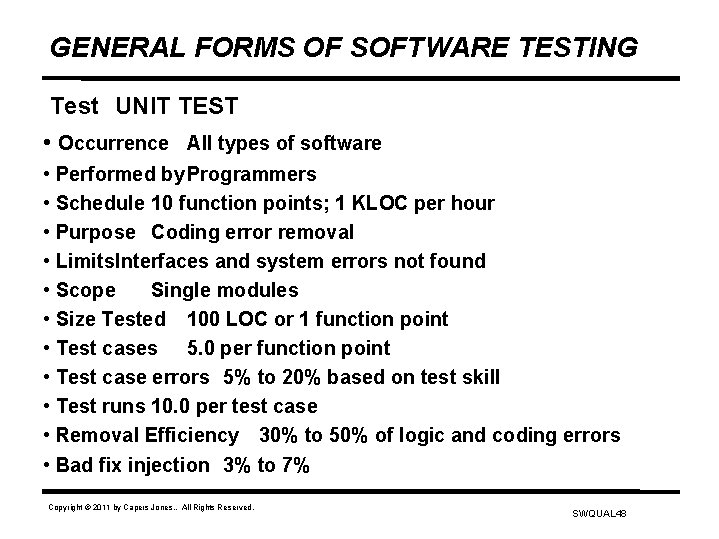

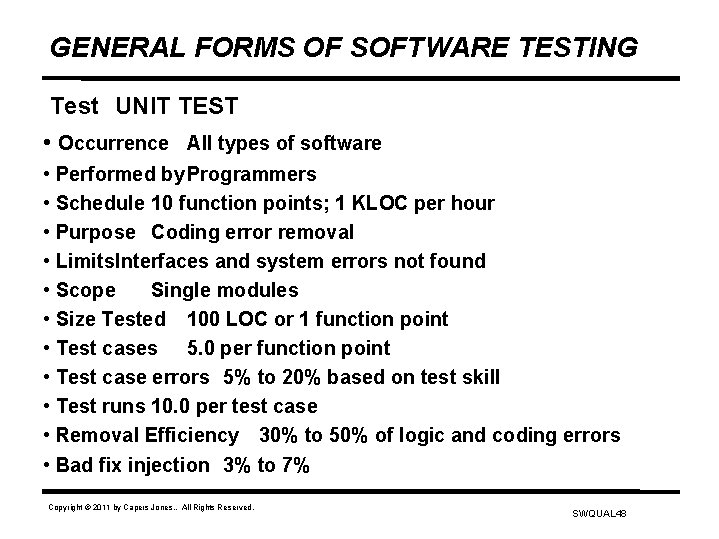

GENERAL FORMS OF SOFTWARE TESTING

Test: UNIT TEST

- Occurrence: All types of software
- Performed by: Programmers
- Schedule: 10 function points; 1 KLOC per hour
- Purpose: Coding error removal
- Limits: Interfaces and system errors not found
- Scope: Single modules
- Size tested: 100 LOC or 1 function point
- Test cases: 5.0 per function point
- Test case errors: 5% to 20% based on test skill
- Test runs: 10.0 per test case
- Removal efficiency: 30% to 50% of logic and coding errors
- Bad fix injection: 3% to 7%

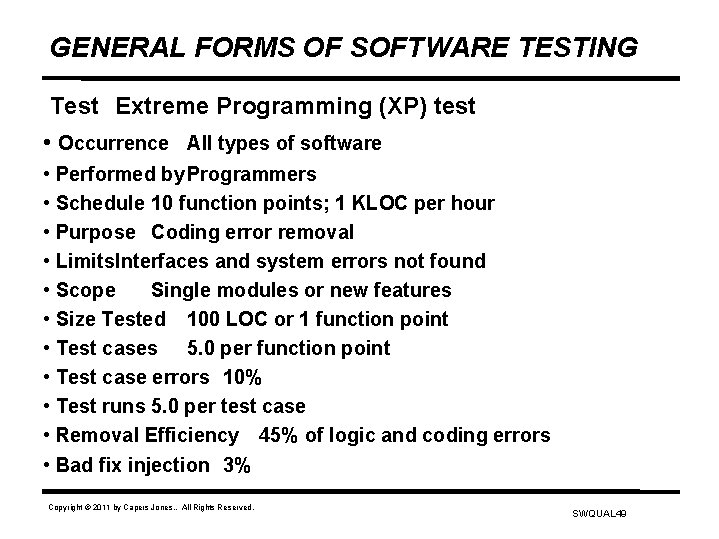

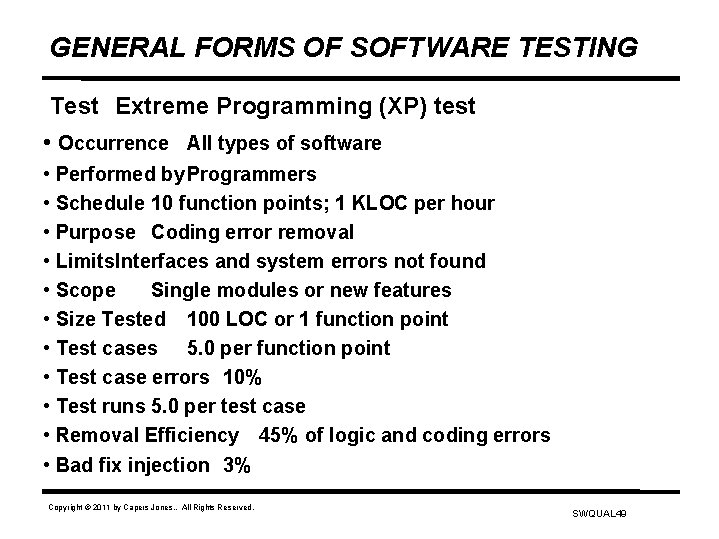

GENERAL FORMS OF SOFTWARE TESTING

Test: EXTREME PROGRAMMING (XP) TEST

- Occurrence: All types of software
- Performed by: Programmers
- Schedule: 10 function points; 1 KLOC per hour
- Purpose: Coding error removal
- Limits: Interfaces and system errors not found
- Scope: Single modules or new features
- Size tested: 100 LOC or 1 function point
- Test cases: 5.0 per function point
- Test case errors: 10%
- Test runs: 5.0 per test case
- Removal efficiency: 45% of logic and coding errors
- Bad fix injection: 3%

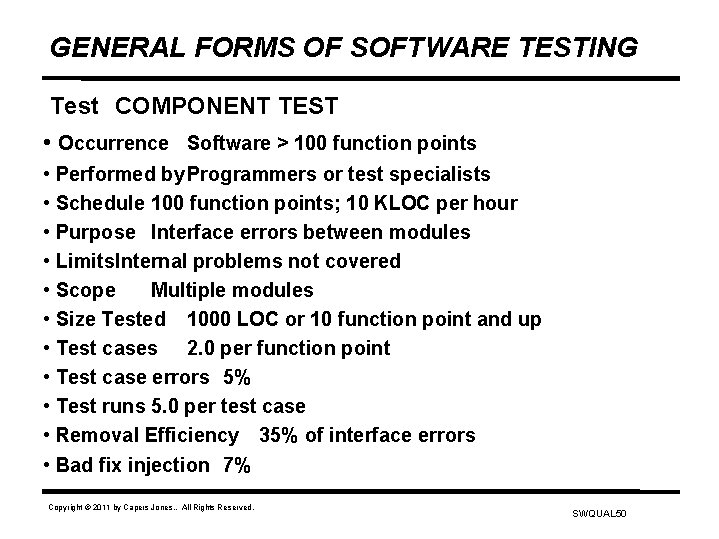

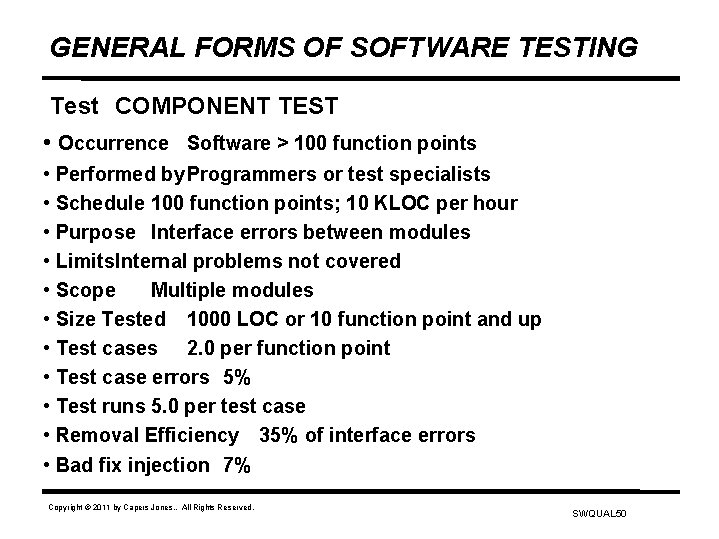

GENERAL FORMS OF SOFTWARE TESTING

Test: COMPONENT TEST

- Occurrence: Software > 100 function points
- Performed by: Programmers or test specialists
- Schedule: 100 function points; 10 KLOC per hour
- Purpose: Interface errors between modules
- Limits: Internal problems not covered
- Scope: Multiple modules
- Size tested: 1000 LOC or 10 function points and up
- Test cases: 2.0 per function point
- Test case errors: 5%
- Test runs: 5.0 per test case
- Removal efficiency: 35% of interface errors
- Bad fix injection: 7%

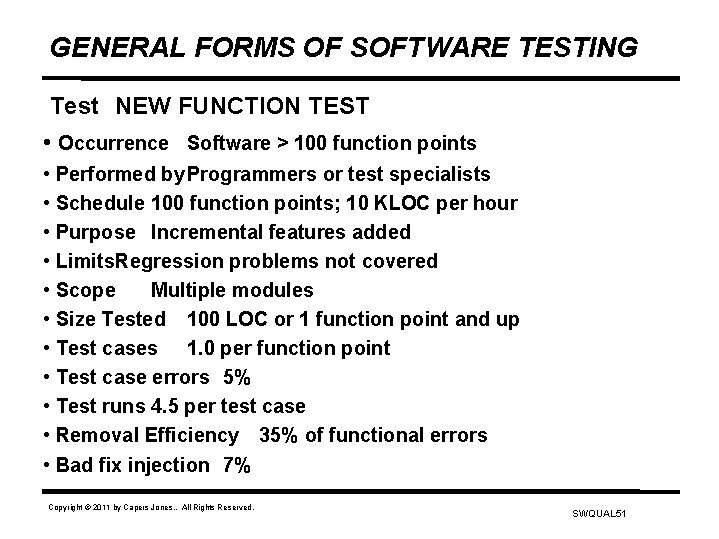

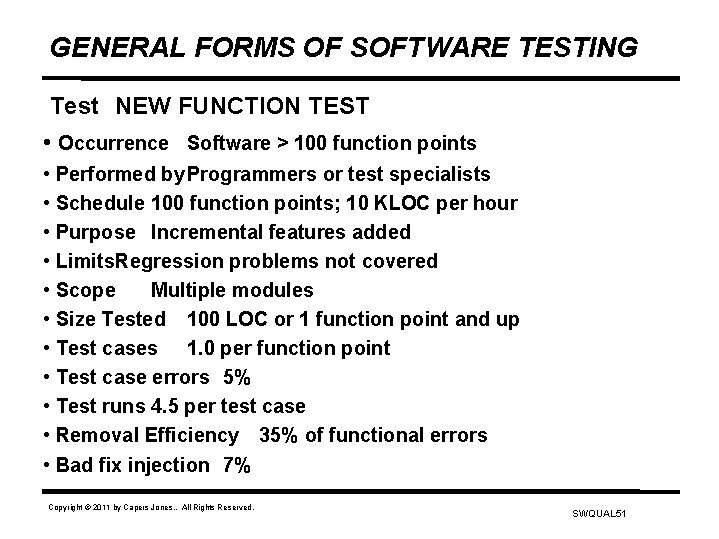

GENERAL FORMS OF SOFTWARE TESTING

Test: NEW FUNCTION TEST

- Occurrence: Software > 100 function points
- Performed by: Programmers or test specialists
- Schedule: 100 function points; 10 KLOC per hour
- Purpose: Incremental features added
- Limits: Regression problems not covered
- Scope: Multiple modules
- Size tested: 100 LOC or 1 function point and up
- Test cases: 1.0 per function point
- Test case errors: 5%
- Test runs: 4.5 per test case
- Removal efficiency: 35% of functional errors
- Bad fix injection: 7%

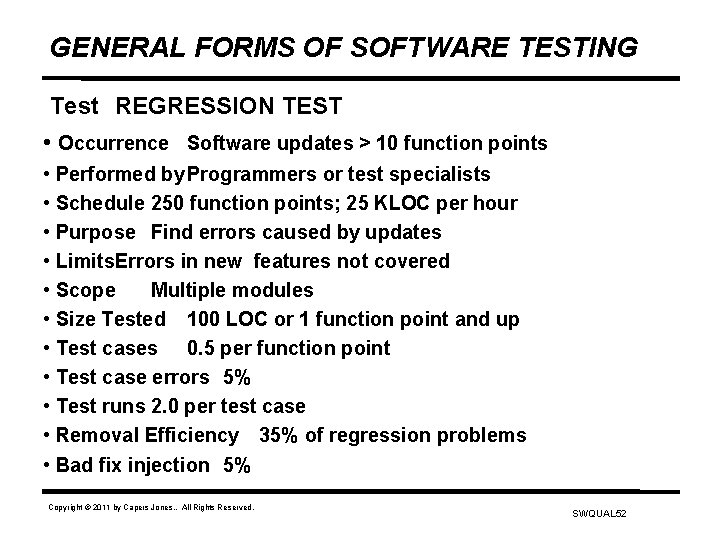

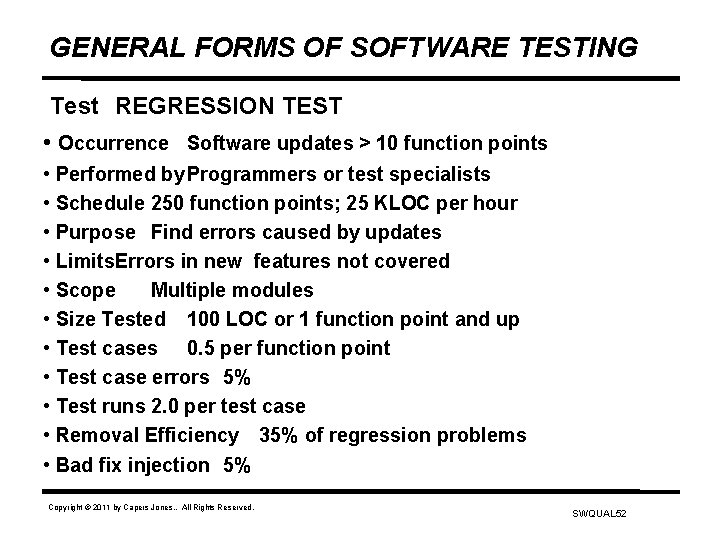

GENERAL FORMS OF SOFTWARE TESTING

Test: REGRESSION TEST

- Occurrence: Software updates > 10 function points
- Performed by: Programmers or test specialists
- Schedule: 250 function points; 25 KLOC per hour
- Purpose: Find errors caused by updates
- Limits: Errors in new features not covered
- Scope: Multiple modules
- Size tested: 100 LOC or 1 function point and up
- Test cases: 0.5 per function point
- Test case errors: 5%
- Test runs: 2.0 per test case
- Removal efficiency: 35% of regression problems
- Bad fix injection: 5%

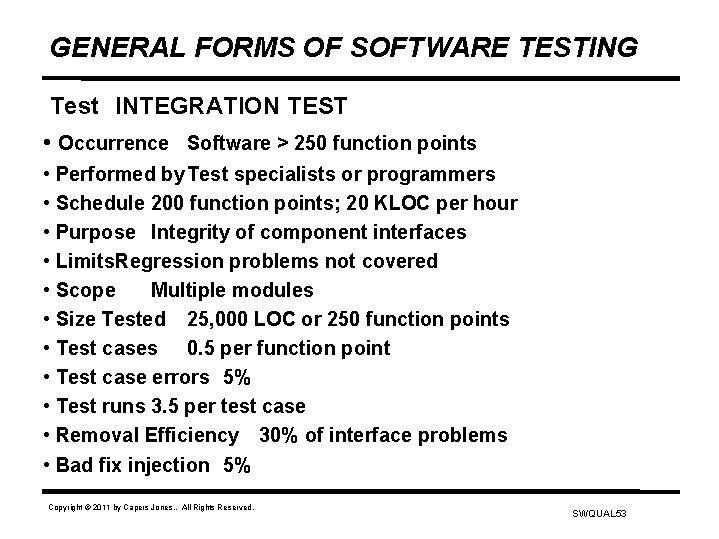

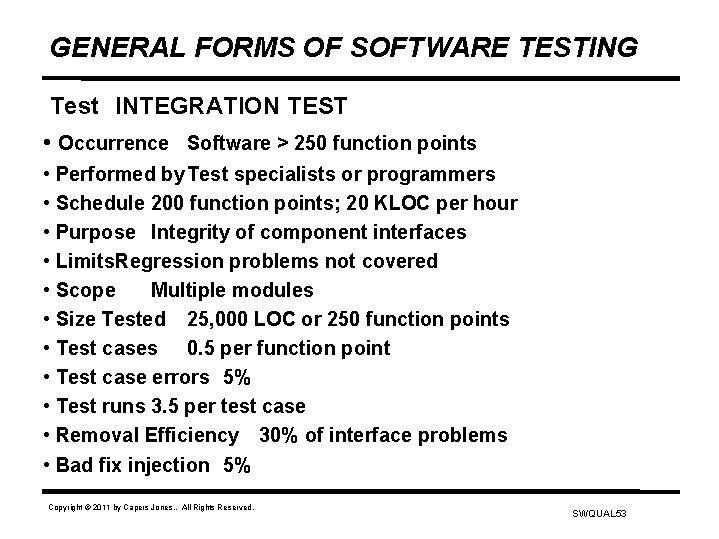

GENERAL FORMS OF SOFTWARE TESTING

Test: INTEGRATION TEST

- Occurrence: Software > 250 function points
- Performed by: Test specialists or programmers
- Schedule: 200 function points; 20 KLOC per hour
- Purpose: Integrity of component interfaces
- Limits: Regression problems not covered
- Scope: Multiple modules
- Size tested: 25,000 LOC or 250 function points
- Test cases: 0.5 per function point
- Test case errors: 5%
- Test runs: 3.5 per test case
- Removal efficiency: 30% of interface problems
- Bad fix injection: 5%

GENERAL FORMS OF SOFTWARE TESTING

Test: SYSTEM TEST

- Occurrence: Software > 1000 function points
- Performed by: Test specialists or programmers
- Schedule: 250 function points; 25 KLOC per hour
- Purpose: Errors in interfaces, inputs, & outputs
- Limits: All paths not covered
- Scope: Full application
- Size tested: 100,000 LOC or 1000 function points
- Test cases: 0.5 per function point
- Test case errors: 5%
- Test runs: 3.0 per test case
- Removal efficiency: 50% of usability problems
- Bad fix injection: 7%

FORMS OF DEFECT REMOVAL: AUTOMATED SOFTWARE TESTING

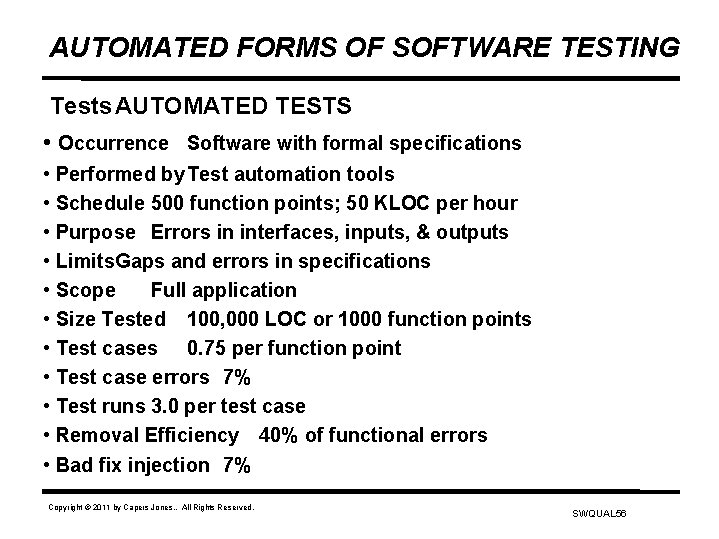

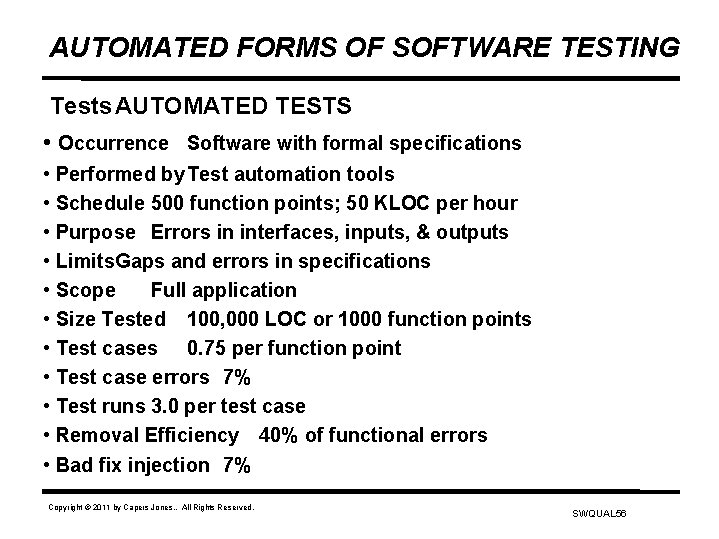

AUTOMATED FORMS OF SOFTWARE TESTING

Test: AUTOMATED TESTS

- Occurrence: Software with formal specifications
- Performed by: Test automation tools
- Schedule: 500 function points; 50 KLOC per hour
- Purpose: Errors in interfaces, inputs, & outputs
- Limits: Gaps and errors in specifications
- Scope: Full application
- Size tested: 100,000 LOC or 1000 function points
- Test cases: 0.75 per function point
- Test case errors: 7%
- Test runs: 3.0 per test case
- Removal efficiency: 40% of functional errors
- Bad fix injection: 7%

FORMS OF DEFECT REMOVAL: SPECIALIZED SOFTWARE TESTING

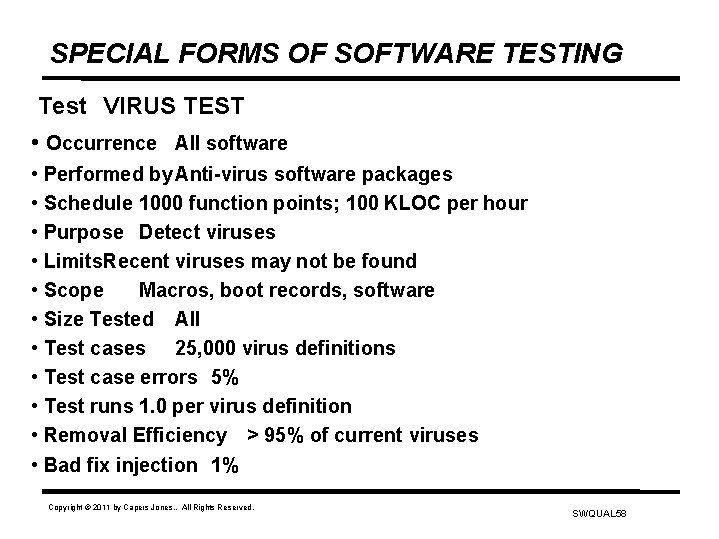

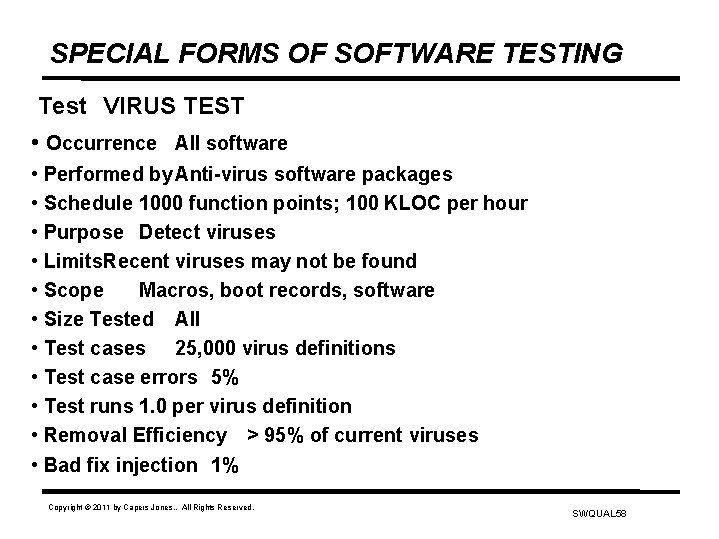

SPECIAL FORMS OF SOFTWARE TESTING

Test: VIRUS TEST

- Occurrence: All software
- Performed by: Anti-virus software packages
- Schedule: 1000 function points; 100 KLOC per hour
- Purpose: Detect viruses
- Limits: Recent viruses may not be found
- Scope: Macros, boot records, software
- Size tested: All
- Test cases: 25,000 virus definitions
- Test case errors: 5%
- Test runs: 1.0 per virus definition
- Removal efficiency: > 95% of current viruses
- Bad fix injection: 1%

SPECIAL FORMS OF SOFTWARE TESTING

Test: LIMITS OR CAPACITY TEST

- Occurrence: Systems, military software primarily
- Performed by: Test specialists; programmers; SQA
- Schedule: 500 function points; 50 KLOC per hour
- Purpose: Full-load error detection
- Limits: Complex hardware/software issues
- Scope: Entire application plus interfaces
- Size tested: > 10 KLOC; 100 function points
- Test cases: 0.1 test cases per function point
- Test case errors: 5%
- Test runs: 1.5 per test case
- Removal efficiency: 50% of capacity problems
- Bad fix injection: 5%

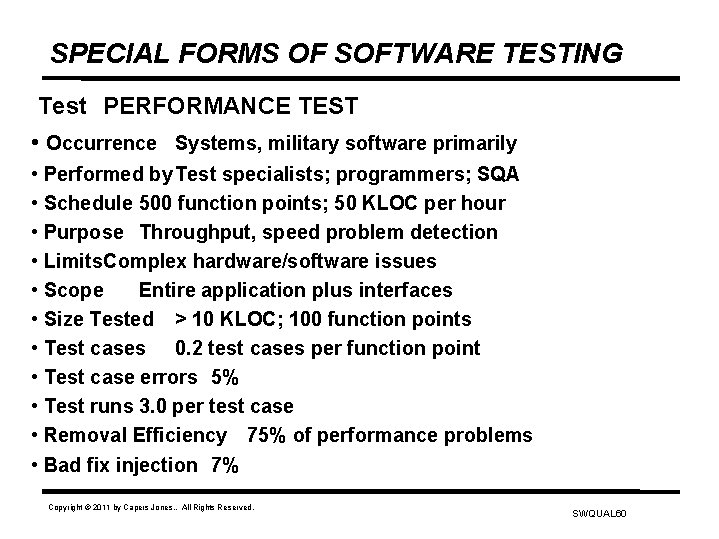

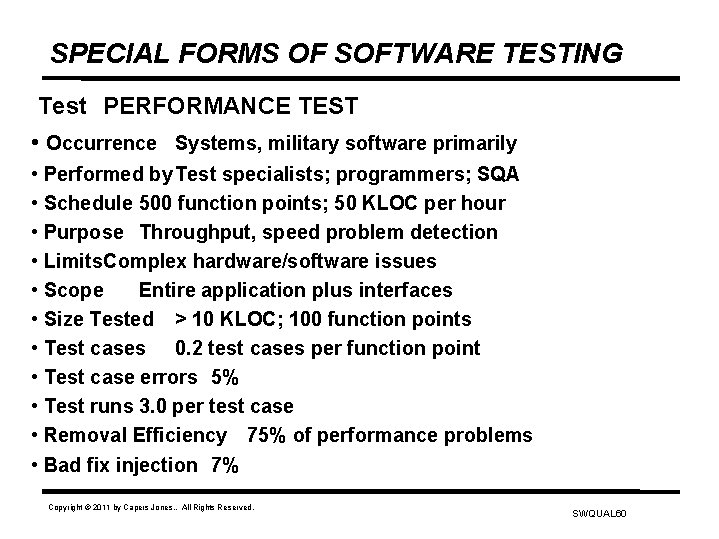

SPECIAL FORMS OF SOFTWARE TESTING

Test: PERFORMANCE TEST

- Occurrence: Systems, military software primarily
- Performed by: Test specialists; programmers; SQA
- Schedule: 500 function points; 50 KLOC per hour
- Purpose: Throughput, speed problem detection
- Limits: Complex hardware/software issues
- Scope: Entire application plus interfaces
- Size tested: > 10 KLOC; 100 function points
- Test cases: 0.2 test cases per function point
- Test case errors: 5%
- Test runs: 3.0 per test case
- Removal efficiency: 75% of performance problems
- Bad fix injection: 7%

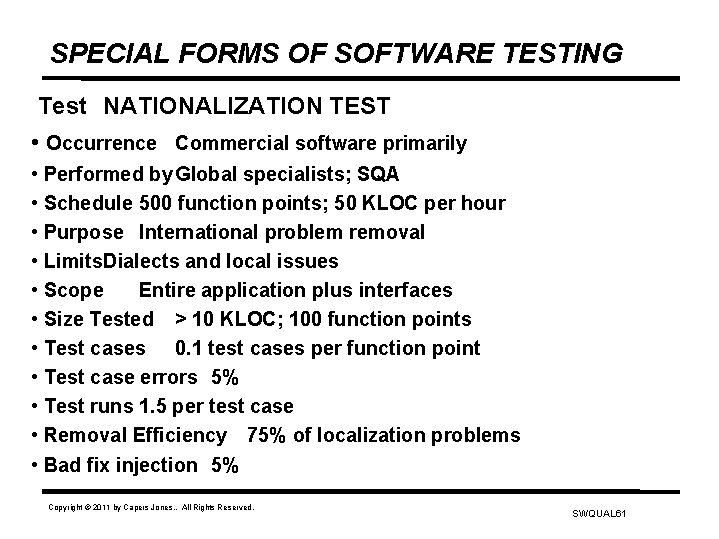

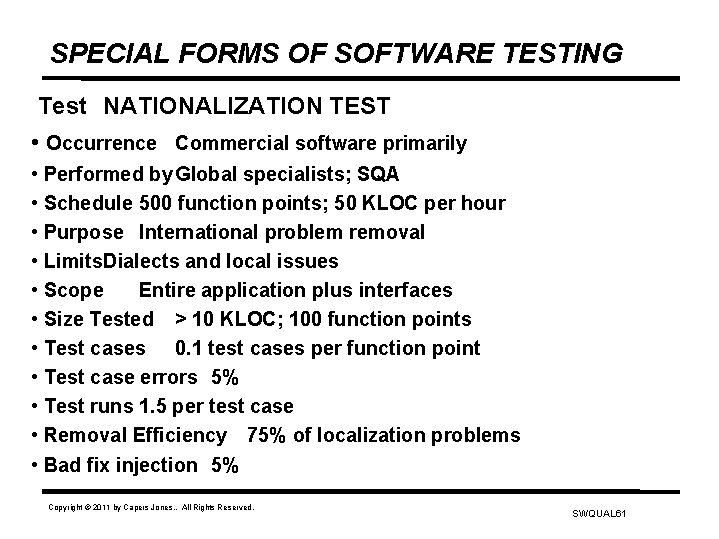

SPECIAL FORMS OF SOFTWARE TESTING

Test: NATIONALIZATION TEST

- Occurrence: Commercial software primarily
- Performed by: Global specialists; SQA
- Schedule: 500 function points; 50 KLOC per hour
- Purpose: International problem removal
- Limits: Dialects and local issues
- Scope: Entire application plus interfaces
- Size tested: > 10 KLOC; 100 function points
- Test cases: 0.1 test cases per function point
- Test case errors: 5%
- Test runs: 1.5 per test case
- Removal efficiency: 75% of localization problems
- Bad fix injection: 5%

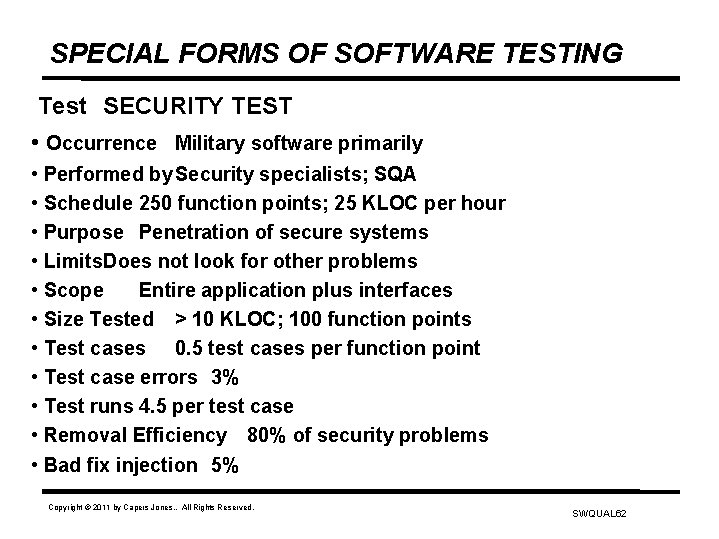

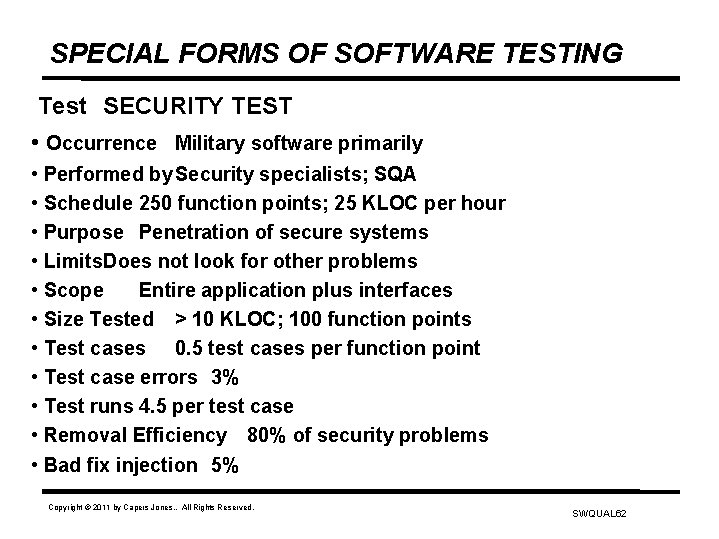

SPECIAL FORMS OF SOFTWARE TESTING

Test: SECURITY TEST
• Occurrence: Military software primarily
• Performed by: Security specialists; SQA
• Schedule: 250 function points; 25 KLOC per hour
• Purpose: Penetration of secure systems
• Limits: Does not look for other problems
• Scope: Entire application plus interfaces
• Size tested: > 10 KLOC; 100 function points
• Test cases: 0.5 test cases per function point
• Test case errors: 3%
• Test runs: 4.5 per test case
• Removal efficiency: 80% of security problems
• Bad fix injection: 5%

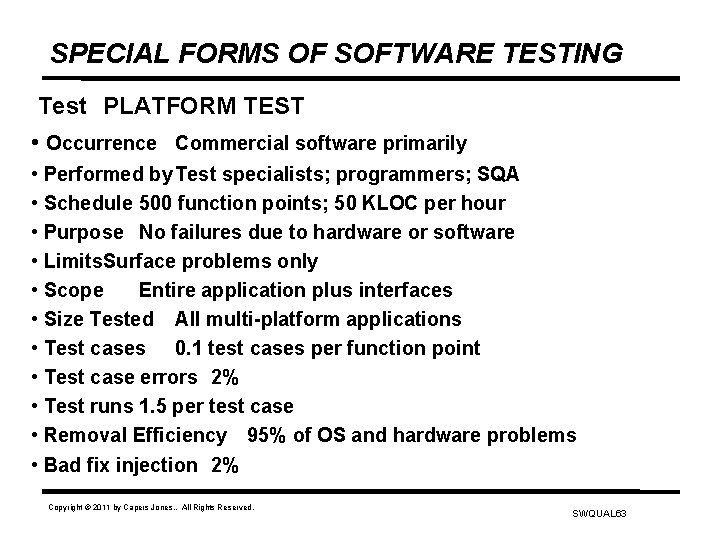

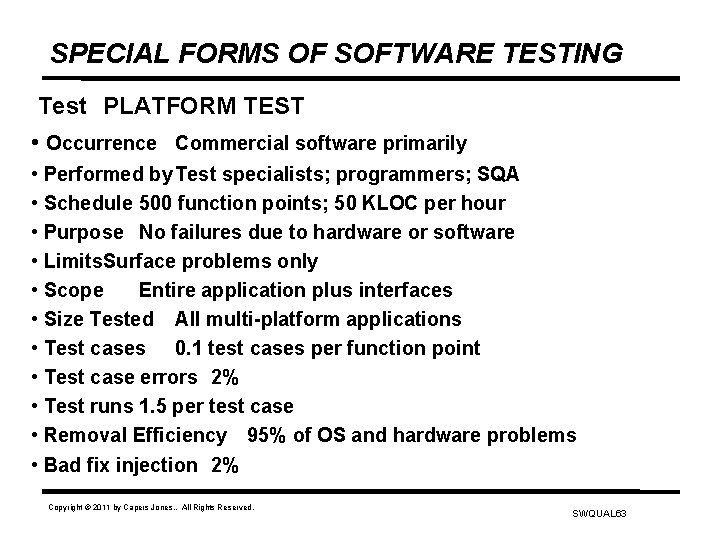

SPECIAL FORMS OF SOFTWARE TESTING

Test: PLATFORM TEST
• Occurrence: Commercial software primarily
• Performed by: Test specialists; programmers; SQA
• Schedule: 500 function points; 50 KLOC per hour
• Purpose: No failures due to hardware or software
• Limits: Surface problems only
• Scope: Entire application plus interfaces
• Size tested: All multi-platform applications
• Test cases: 0.1 test cases per function point
• Test case errors: 2%
• Test runs: 1.5 per test case
• Removal efficiency: 95% of OS and hardware problems
• Bad fix injection: 2%

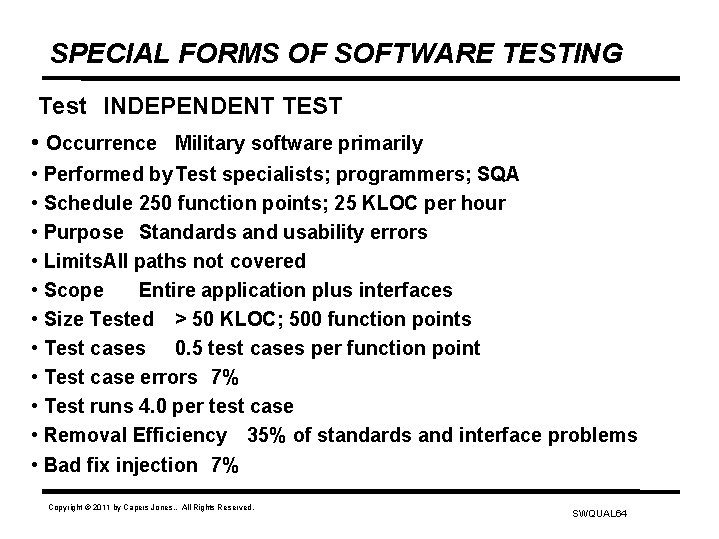

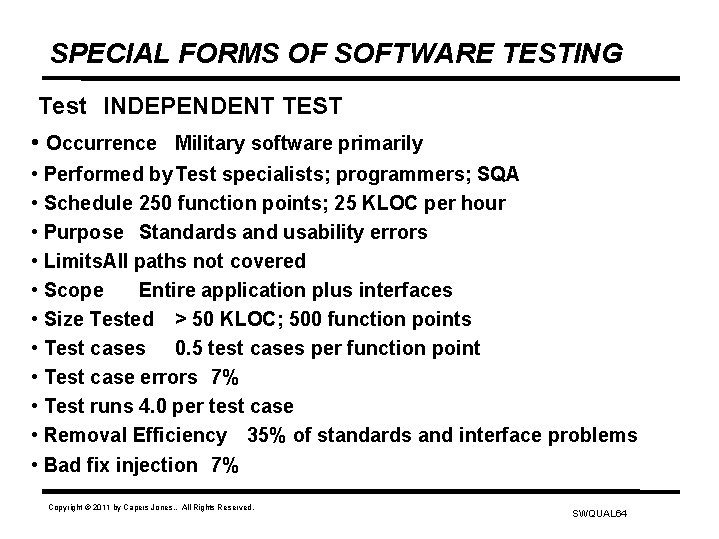

SPECIAL FORMS OF SOFTWARE TESTING

Test: INDEPENDENT TEST
• Occurrence: Military software primarily
• Performed by: Test specialists; programmers; SQA
• Schedule: 250 function points; 25 KLOC per hour
• Purpose: Standards and usability errors
• Limits: All paths not covered
• Scope: Entire application plus interfaces
• Size tested: > 50 KLOC; 500 function points
• Test cases: 0.5 test cases per function point
• Test case errors: 7%
• Test runs: 4.0 per test case
• Removal efficiency: 35% of standards and interface problems
• Bad fix injection: 7%

SPECIAL FORMS OF SOFTWARE TESTING

Test: CLEAN-ROOM TEST
• Occurrence: Systems software primarily
• Performed by: Clean-room specialists; SQA
• Schedule: 150 function points; 15 KLOC per hour
• Purpose: Simulate usage patterns
• Limits: Not useful for unstable requirements
• Scope: Entire application plus interfaces
• Size tested: > 10 KLOC; 100 function points
• Test cases: 1.5 test cases per function point
• Test case errors: 7%
• Test runs: 5.0 per test case
• Removal efficiency: 40% of usability problems
• Bad fix injection: 5%

SPECIAL FORMS OF SOFTWARE TESTING

Test: SUPPLY CHAIN TEST
• Occurrence: All forms of software
• Performed by: Test specialists; programmers; SQA
• Schedule: 500 function points; 50 KLOC per hour
• Purpose: Multi-company date transmission
• Limits: Obscure dates may not be detected
• Scope: Entire application plus interfaces
• Size tested: > 1 KLOC; 10 function points
• Test cases: 1.5 test cases per function point
• Test case errors: 8%
• Test runs: 2.5 per test case
• Removal efficiency: 45% of date problems
• Bad fix injection: 10%
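The per-function-point figures on these slides can be turned into rough planning numbers for a given application size. The sketch below is illustrative only: the names `StageProfile` and `estimate_volume` are hypothetical, the 1000 function point size is an assumed example, and the "Schedule" figure is read here as function points tested per hour, which is an interpretation of the slides rather than something they state outright.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StageProfile:
    """Planning figures for one form of testing, copied from a slide."""
    name: str
    cases_per_fp: float      # test cases per function point
    runs_per_case: float     # test runs per test case
    case_error_rate: float   # fraction of test cases that are themselves defective
    fp_per_hour: float       # "Schedule" figure, assumed to mean function points per hour


def estimate_volume(profile: StageProfile, size_fp: float) -> dict:
    """Scale the slide's per-function-point figures to an application size."""
    cases = size_fp * profile.cases_per_fp
    return {
        "test_cases": cases,
        "test_runs": cases * profile.runs_per_case,
        "defective_test_cases": cases * profile.case_error_rate,
        "hours": size_fp / profile.fp_per_hour,
    }


# Values taken from the security and limits/capacity test slides.
SECURITY = StageProfile("security", 0.5, 4.5, 0.03, 250.0)
CAPACITY = StageProfile("limits or capacity", 0.1, 1.5, 0.05, 500.0)

if __name__ == "__main__":
    # 1000 function points is an assumed application size, not a slide value.
    for profile in (SECURITY, CAPACITY):
        print(profile.name, estimate_volume(profile, size_fp=1000))
```

Under these assumptions a 1000 function point application would need about 500 security test cases and 2250 security test runs, of which roughly 15 test cases would themselves contain errors.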

FORMS OF DEFECT REMOVAL

USER-ORIENTED SOFTWARE TESTING

USER-ORIENTED SOFTWARE TESTING

Test: PRE-PURCHASE TEST
• Occurrence: Software > $1000 per seat
• Performed by: Test specialists or users
• Schedule: 500 function points; 50 KLOC per hour
• Purpose: Suitability for local needs
• Limits: Only basic features usually tested
• Scope: Full application
• Size tested: 100 KLOC; 1000 function points
• Test cases: 0.5 per function point
• Test case errors: 15%
• Test runs: 1.5 per test case
• Removal efficiency: 25% of usability problems
• Bad fix injection: < 1% (problems reported but not fixed)

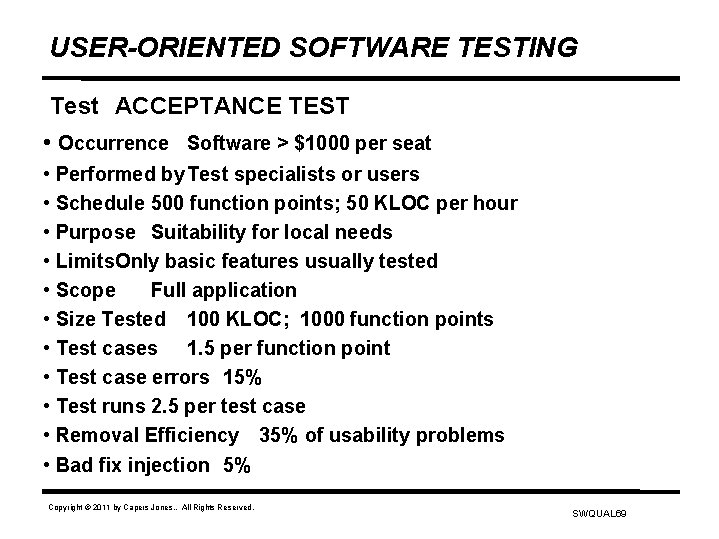

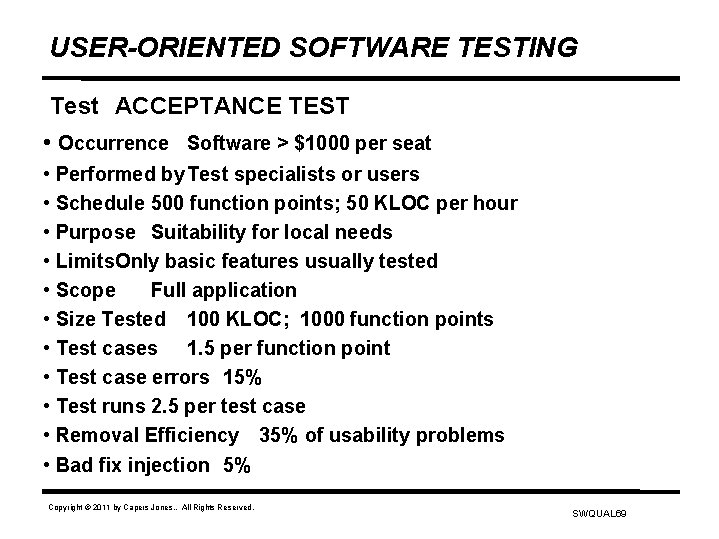

USER-ORIENTED SOFTWARE TESTING

Test: ACCEPTANCE TEST
• Occurrence: Software > $1000 per seat
• Performed by: Test specialists or users
• Schedule: 500 function points; 50 KLOC per hour
• Purpose: Suitability for local needs
• Limits: Only basic features usually tested
• Scope: Full application
• Size tested: 100 KLOC; 1000 function points
• Test cases: 1.5 per function point
• Test case errors: 15%
• Test runs: 2.5 per test case
• Removal efficiency: 35% of usability problems
• Bad fix injection: 5%

USER-ORIENTED SOFTWARE TESTING

Test: BETA TEST
• Occurrence: Commercial software primarily
• Performed by: Users; programmers; SQA
• Schedule: 500 function points; 50 KLOC per hour
• Purpose: Assisting major software vendors
• Limits: Only basic features usually tested
• Scope: Full application
• Size tested: 100 KLOC; 1000 function points
• Test cases: 1.5 per function point
• Test case errors: 9%
• Test runs: 2.5 per test case
• Removal efficiency: 55% of usability problems
• Bad fix injection: 7%

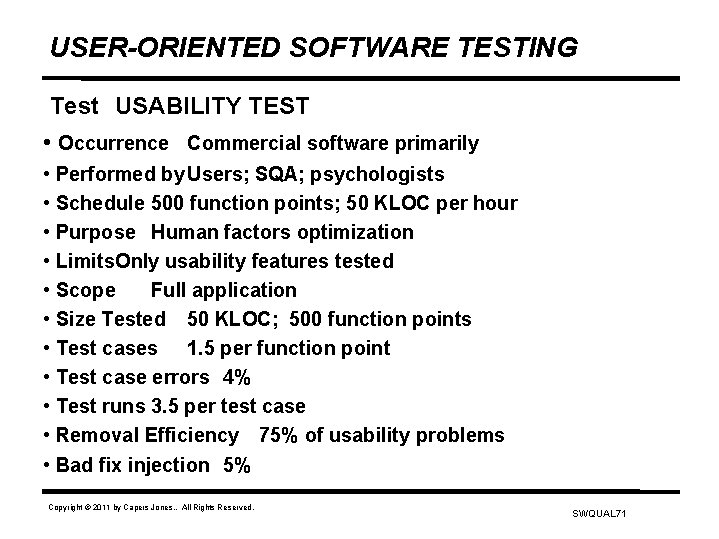

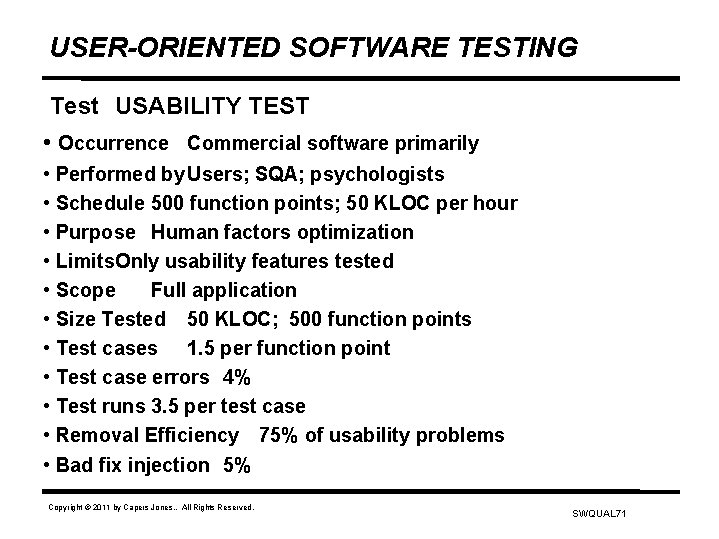

USER-ORIENTED SOFTWARE TESTING

Test: USABILITY TEST
• Occurrence: Commercial software primarily
• Performed by: Users; SQA; psychologists
• Schedule: 500 function points; 50 KLOC per hour
• Purpose: Human factors optimization
• Limits: Only usability features tested
• Scope: Full application
• Size tested: 50 KLOC; 500 function points
• Test cases: 1.5 per function point
• Test case errors: 4%
• Test runs: 3.5 per test case
• Removal efficiency: 75% of usability problems
• Bad fix injection: 5%

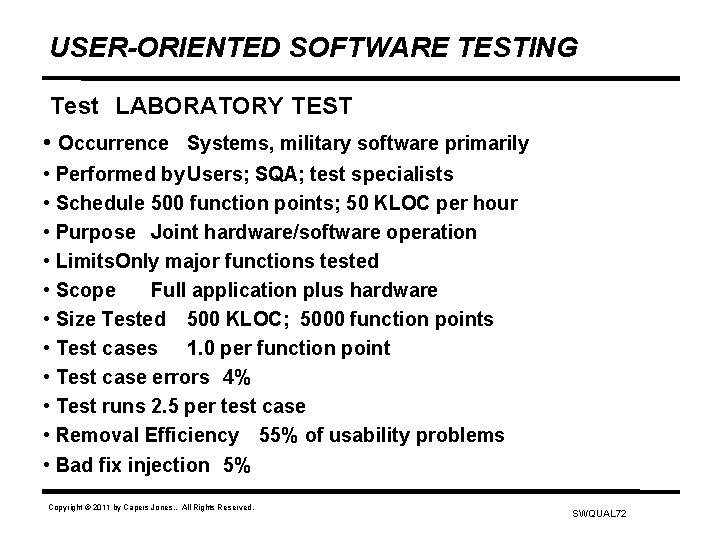

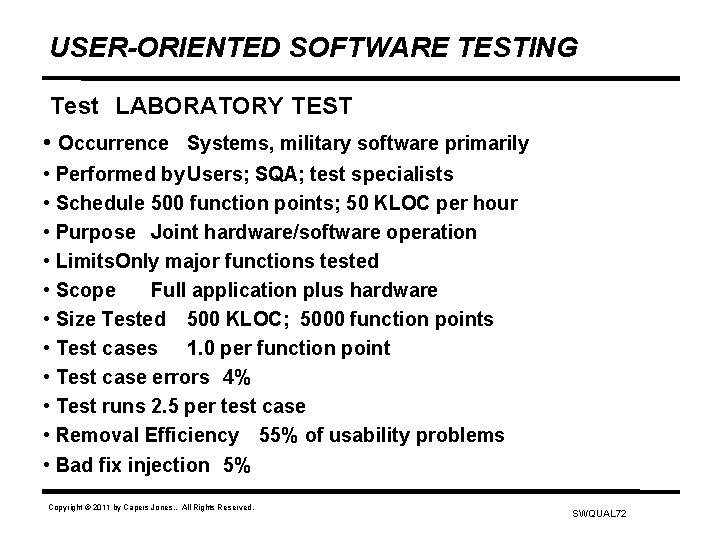

USER-ORIENTED SOFTWARE TESTING

Test: LABORATORY TEST
• Occurrence: Systems, military software primarily
• Performed by: Users; SQA; test specialists
• Schedule: 500 function points; 50 KLOC per hour
• Purpose: Joint hardware/software operation
• Limits: Only major functions tested
• Scope: Full application plus hardware
• Size tested: 500 KLOC; 5000 function points
• Test cases: 1.0 per function point
• Test case errors: 4%
• Test runs: 2.5 per test case
• Removal efficiency: 55% of usability problems
• Bad fix injection: 5%
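Each slide pairs a removal efficiency with a bad fix injection rate, and the two interact: some repairs introduce new defects. A minimal sketch of that interaction follows, under the simplifying assumption that every defect found is repaired and that the bad fix rate is the fraction of repairs that inject one new defect. The function name `net_removal` and the starting count of 200 latent problems are hypothetical.

```python
def net_removal(latent_defects: float, removal_efficiency: float, bad_fix_rate: float) -> dict:
    """Estimate defects left after one test stage, counting bad fixes.

    Assumes every found defect is repaired and that bad_fix_rate is the
    fraction of those repairs that inject a new defect.
    """
    found = latent_defects * removal_efficiency
    injected = found * bad_fix_rate
    remaining = latent_defects - found + injected
    return {
        "found": found,
        "injected": injected,
        "remaining": remaining,
        "net_efficiency": 1.0 - remaining / latent_defects,
    }


if __name__ == "__main__":
    # Beta test slide: 55% removal efficiency, 7% bad fix injection.
    # The 200 latent usability problems are an assumed figure for illustration.
    print(net_removal(latent_defects=200, removal_efficiency=0.55, bad_fix_rate=0.07))
```

With the beta test figures, the net efficiency under this model comes out a few points below the nominal 55%, which is why bad fixes matter when stages are chained.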

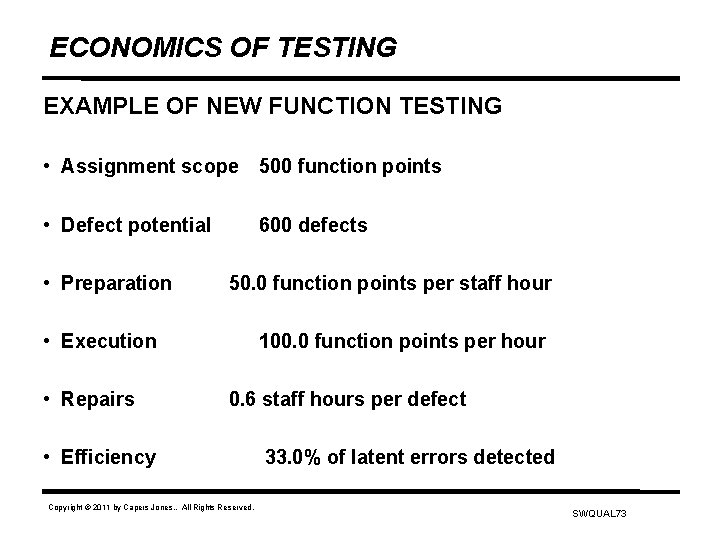

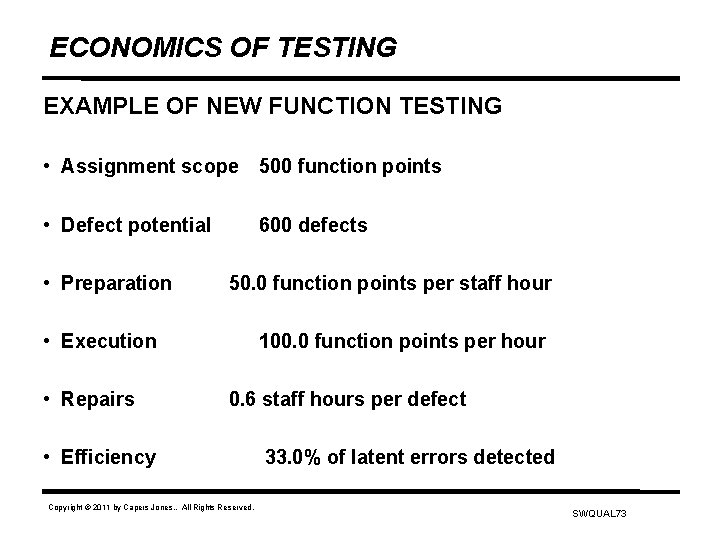

ECONOMICS OF TESTING

EXAMPLE OF NEW FUNCTION TESTING
• Assignment scope: 500 function points
• Defect potential: 600 defects
• Preparation: 50.0 function points per staff hour
• Execution: 100.0 function points per hour
• Repairs: 0.6 staff hours per defect
• Efficiency: 33.0% of latent errors detected

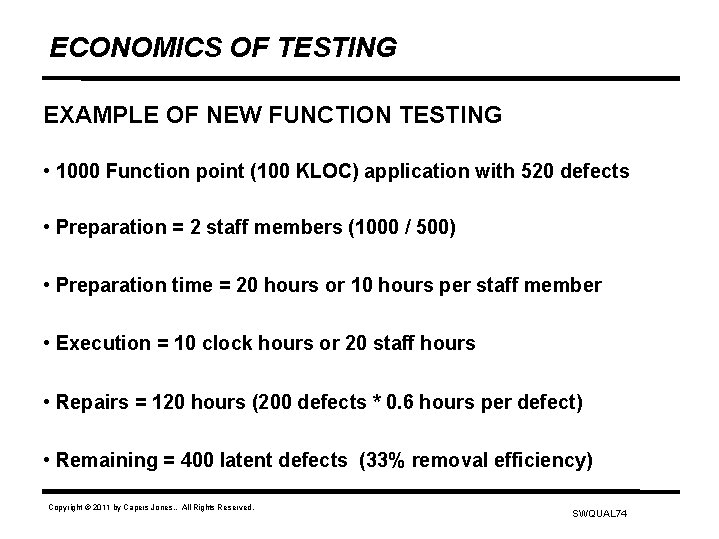

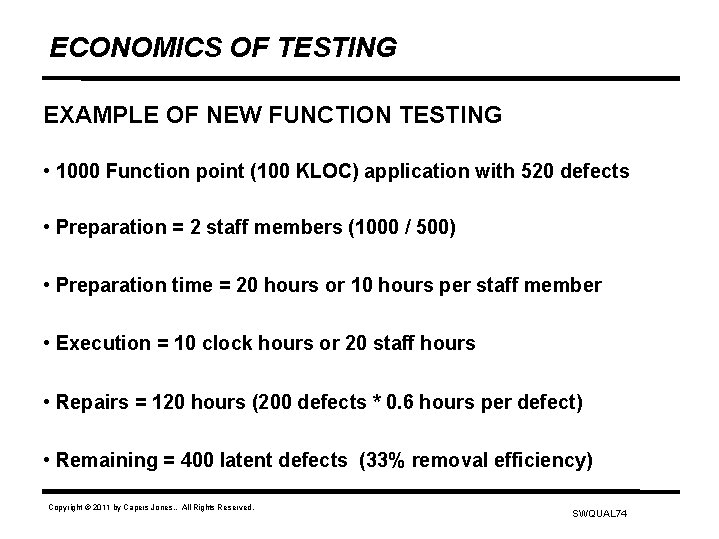

ECONOMICS OF TESTING

EXAMPLE OF NEW FUNCTION TESTING
• 1000 function point (100 KLOC) application with 600 defects
• Preparation = 2 staff members (1000 / 500)
• Preparation time = 20 hours, or 10 hours per staff member
• Execution = 10 clock hours, or 20 staff hours
• Repairs = 120 hours (200 defects * 0.6 hours per defect)
• Remaining = 400 latent defects (33% removal efficiency)
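The arithmetic behind this example can be sketched in a few lines. The function name `new_function_test_economics` and its parameter names are hypothetical; the default rates are the ones given on the preceding slide (500 function point assignment scope, 50 function points per staff hour of preparation, 100 function points per hour of execution, 0.6 staff hours per repair, 33% efficiency). The slide rounds 198 defects found to 200, so the computed repair hours and remaining defects differ slightly from the rounded slide values.

```python
import math


def new_function_test_economics(
    size_fp: float,
    defect_potential: float,
    assignment_scope_fp: float = 500.0,
    prep_fp_per_staff_hour: float = 50.0,
    exec_fp_per_hour: float = 100.0,
    repair_hours_per_defect: float = 0.6,
    removal_efficiency: float = 0.33,
) -> dict:
    """Reproduce the new-function-testing example from its stated rates."""
    staff = math.ceil(size_fp / assignment_scope_fp)
    prep_hours = size_fp / prep_fp_per_staff_hour
    exec_clock_hours = size_fp / exec_fp_per_hour
    defects_found = defect_potential * removal_efficiency
    return {
        "staff": staff,
        "prep_staff_hours": prep_hours,
        "prep_hours_per_person": prep_hours / staff,
        "exec_clock_hours": exec_clock_hours,
        "exec_staff_hours": exec_clock_hours * staff,
        "defects_found": defects_found,
        "repair_staff_hours": defects_found * repair_hours_per_defect,
        "defects_remaining": defect_potential - defects_found,
    }


if __name__ == "__main__":
    # 1000 function points with a defect potential of 600, as on the slides.
    for key, value in new_function_test_economics(1000, 600).items():
        print(f"{key}: {value:.1f}")
```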

DISTRIBUTION OF 1500 SOFTWARE PROJECTS BY DEFECT REMOVAL EFFICIENCY LEVEL

AVERAGE NUMBER OF TEST STAGES OBSERVED BY APPLICATION SIZE AND CLASS OF SOFTWARE (Size of Application in Function Points)

NUMBER OF TESTING STAGES, TESTING EFFORT, AND DEFECT REMOVAL EFFICIENCY

• Number of testing stages: 1, 2, 3, 4, 5, 6*, 7, 8, 9
• Percent of effort devoted to testing: 10%, 15%, 20%, 25%, 30%, 33%*, 36%, 39%, 42%
• Cumulative defect removal efficiency: 50%, 60%, 75%, 80%, 85%*, 87%, 90%, 92%

*Note: Six test stages, 33% costs, and 85% removal efficiency are U.S. averages.

NUMBER OF TESTING STAGES, TESTING EFFORT, AND DEFECT REMOVAL EFFICIENCY (cont.)

• Number of testing stages: 10, 11, 12, 13, 14, 15, 16, 17, 18
• Percent of effort devoted to testing: 45%, 48%, 52%, 55%, 58%, 61%, 64%, 67%, 70%
• Cumulative defect removal efficiency: 94%, 96%, 98%, 99.9%, 99.999%, 99.99999%

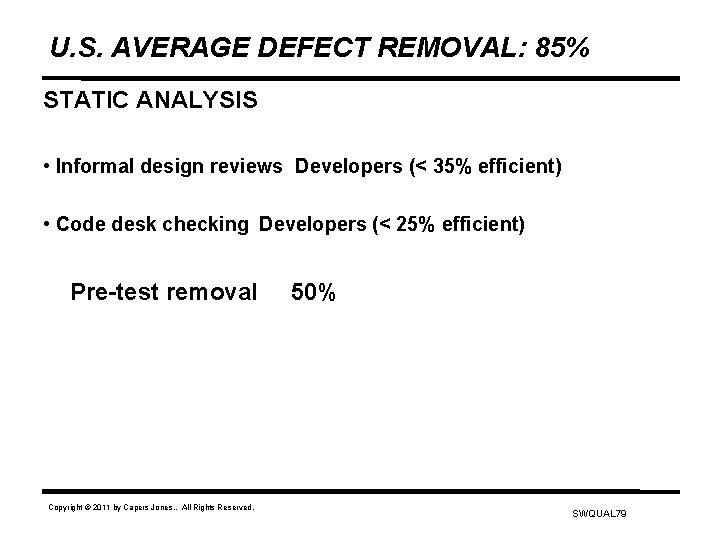

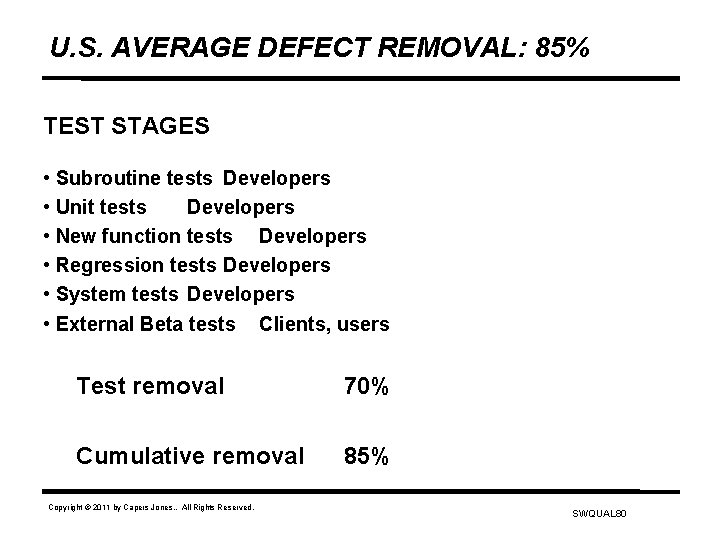

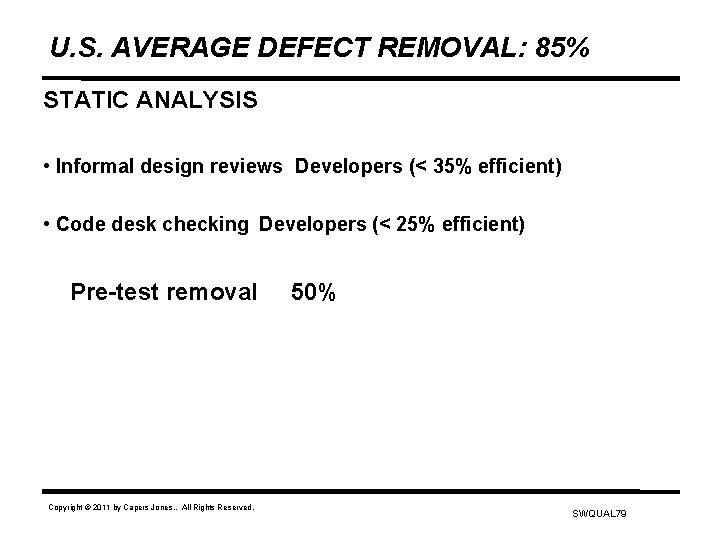

U.S. AVERAGE DEFECT REMOVAL: 85%

STATIC ANALYSIS
• Informal design reviews: Developers (< 35% efficient)
• Code desk checking: Developers (< 25% efficient)

Pre-test removal: 50%

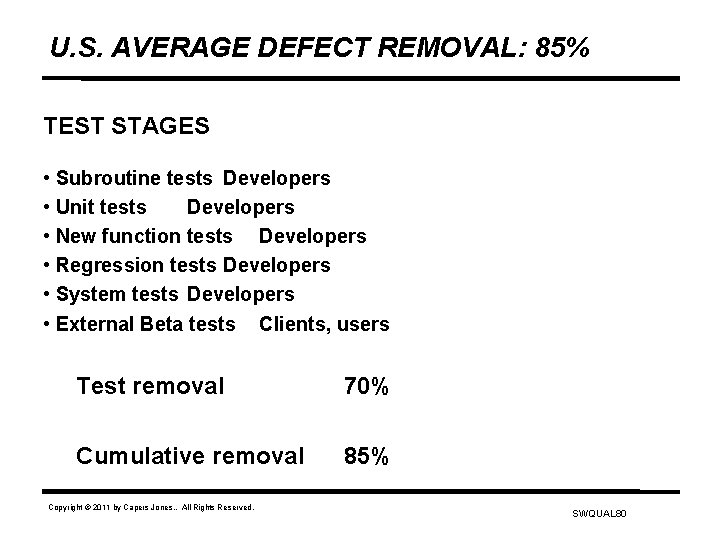

U.S. AVERAGE DEFECT REMOVAL: 85%

TEST STAGES
• Subroutine tests: Developers
• Unit tests: Developers
• New function tests: Developers
• Regression tests: Developers
• System tests: Developers
• External Beta tests: Clients, users

Test removal: 70%
Cumulative removal: 85%

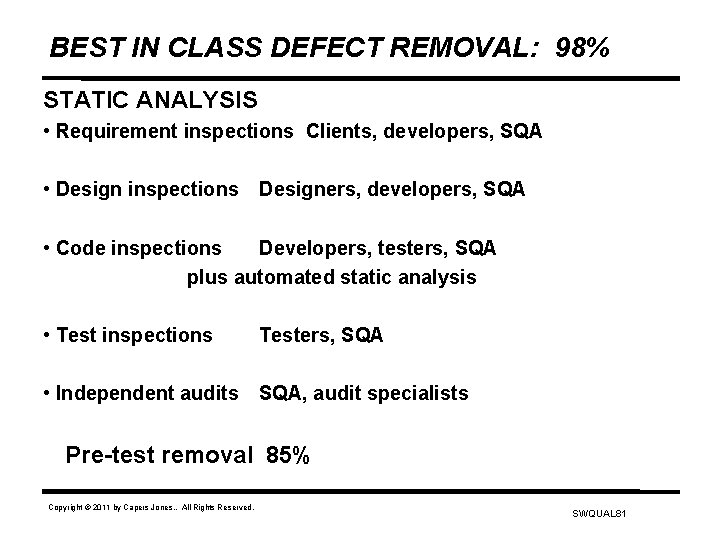

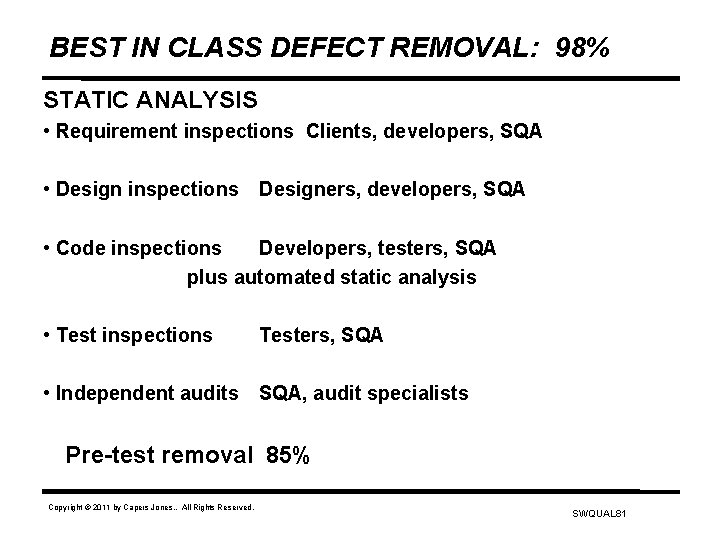

BEST IN CLASS DEFECT REMOVAL: 98%

STATIC ANALYSIS
• Requirement inspections: Clients, developers, SQA
• Design inspections: Designers, developers, SQA
• Code inspections: Developers, testers, SQA plus automated static analysis
• Test inspections: Testers, SQA
• Independent audits: SQA, audit specialists

Pre-test removal: 85%

BEST IN CLASS DEFECT REMOVAL: 98%

TEST STAGES
• Subroutine tests: Developers
• Unit tests: Developers
• New function tests: Test specialists, SQA
• Regression tests: Test specialists, SQA
• Performance tests: Test specialists, SQA
• Integration tests: Test specialists, SQA
• System tests: Test specialists, SQA
• External Beta tests: Clients, users

Test removal: 85%
Cumulative removal: 98%
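The cumulative figures on these slides are consistent with treating pre-test removal and testing as phases in series, each removing its share of whatever defects reach it. The sketch below assumes exactly that model; the function name `cumulative_dre` is hypothetical and the slides do not state the formula explicitly.

```python
from typing import Iterable


def cumulative_dre(phase_efficiencies: Iterable[float]) -> float:
    """Combine per-phase removal efficiencies applied in series.

    Each phase removes its fraction of the defects that escaped the
    previous phases, so the escaping fractions multiply.
    """
    escaping = 1.0
    for efficiency in phase_efficiencies:
        if not 0.0 <= efficiency <= 1.0:
            raise ValueError("efficiency must be between 0 and 1")
        escaping *= 1.0 - efficiency
    return 1.0 - escaping


if __name__ == "__main__":
    # U.S. average: 50% pre-test removal, then 70% test removal.
    print(f"U.S. average:  {cumulative_dre([0.50, 0.70]):.4f}")
    # Best in class: 85% pre-test removal, then 85% test removal.
    print(f"Best in class: {cumulative_dre([0.85, 0.85]):.4f}")
```

Under this model the U.S. average works out to 85% and the best-in-class case to about 97.75%, which the slides round to 98%.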

SOFTWARE DEFECT POTENTIALS AND DEFECT REMOVAL EFFICIENCY FOR EACH LEVEL OF SEI CMM (Data Expressed in Terms of Defects per Function Point)

| SEI CMMI Levels | Defect Potentials | Removal Efficiency | Delivered Defects |
|---|---|---|---|
| SEI CMMI 1 | 5.50 | 73% | 1.48 |
| SEI CMMI 2 | 4.00 | 90% | 0.40 |
| SEI CMMI 3 | 3.00 | 95% | 0.15 |
| SEI CMMI 4 | 2.50 | 97% | 0.075 |
| SEI CMMI 5 | 2.25 | 98% | 0.045 |
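A quick way to check the delivered-defects column is to assume it equals defect potential multiplied by the fraction of defects not removed. That relationship is an assumption consistent with the first three rows, not a formula stated on the slide, and the names `CMMI_LEVELS` and `delivered_defects_per_fp` are hypothetical.

```python
# (level, defect potential per function point, removal efficiency) from the slide.
CMMI_LEVELS = [
    (1, 5.50, 0.73),
    (2, 4.00, 0.90),
    (3, 3.00, 0.95),
    (4, 2.50, 0.97),
    (5, 2.25, 0.98),
]


def delivered_defects_per_fp(potential: float, removal_efficiency: float) -> float:
    """Defects per function point that survive removal and reach the customer."""
    return potential * (1.0 - removal_efficiency)


if __name__ == "__main__":
    for level, potential, efficiency in CMMI_LEVELS:
        delivered = delivered_defects_per_fp(potential, efficiency)
        print(f"SEI CMMI {level}: {delivered:.3f} delivered defects per function point")
```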

CONCLUSIONS ON SOFTWARE DEFECT REMOVAL
• No single defect removal method is adequate.
• Testing alone is insufficient to top 90% removal efficiency.
• Formal inspections, automated analysis, and tests combined give high removal efficiency, low costs and short schedules.
• Defect prevention plus inspections and tests give highest cumulative efficiency and best economics.
• Bad fix injections need special solutions for defect removal.
• Test case errors need special solutions.
• Unreported defects (desk checks; unit test) lower efficiency.

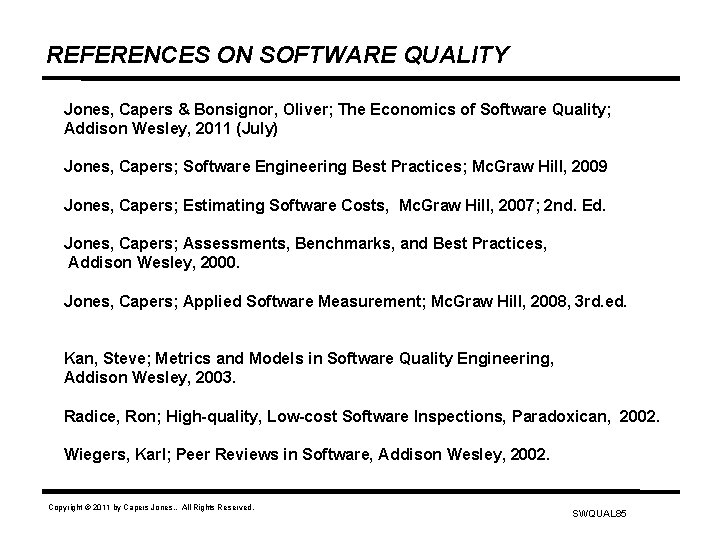

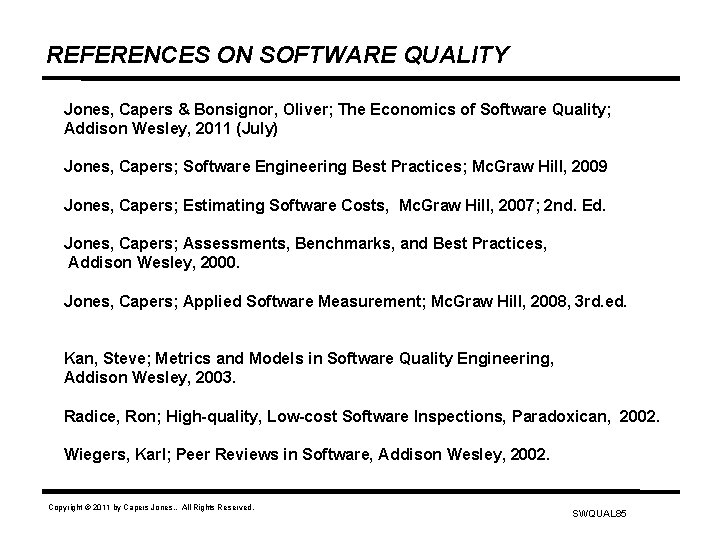
