Can we make these scheduling algorithms simpler? Using a Simpler Architecture COMP 680 E by M. Hamdi 1

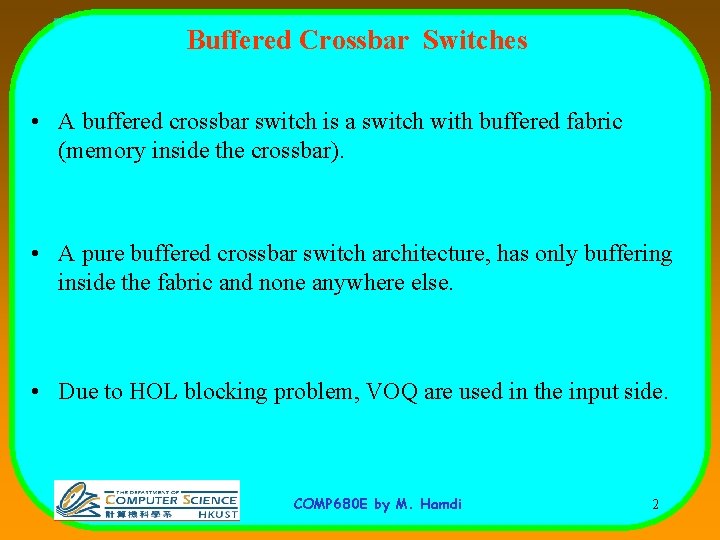

Buffered Crossbar Switches

- A buffered crossbar switch is a switch with a buffered fabric (memory inside the crossbar).
- A pure buffered crossbar switch architecture has buffering only inside the fabric and none anywhere else.
- Because of the head-of-line (HOL) blocking problem, virtual output queues (VOQs) are used on the input side.

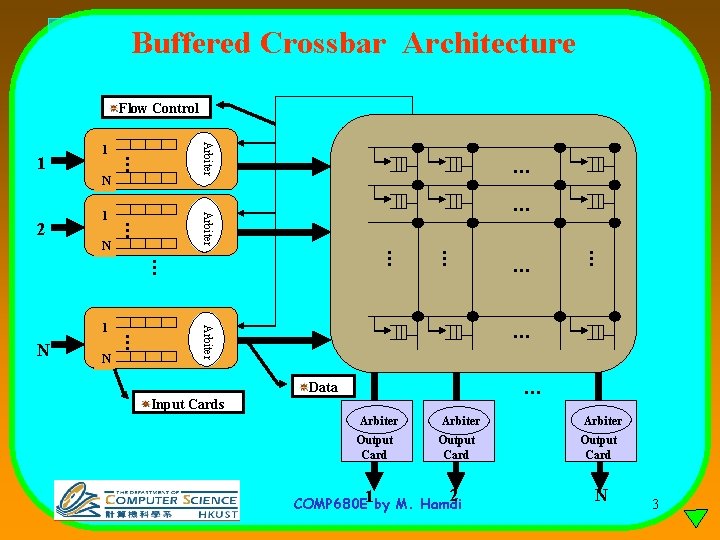

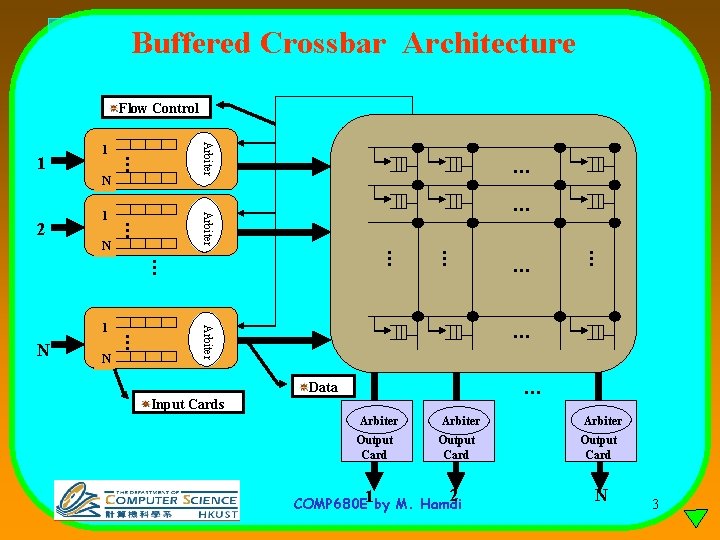

Buffered Crossbar Architecture (figure: input cards 1 to N and output cards 1 to N, each with its own arbiter; labels: Data, Flow Control)

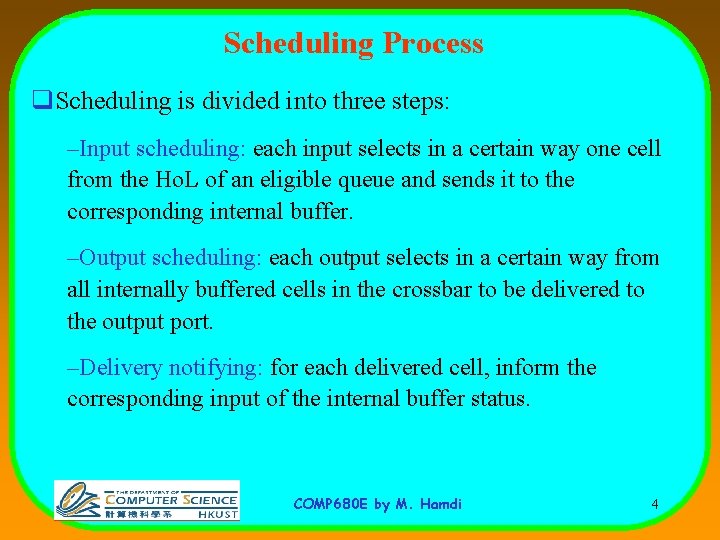

Scheduling Process

Scheduling is divided into three steps:

- Input scheduling: each input selects, according to some policy, one cell from the head of line (HoL) of an eligible queue and sends it to the corresponding internal buffer.
- Output scheduling: each output selects, according to some policy, one of the cells buffered inside the crossbar for that output and delivers it to the output port.
- Delivery notification: for each delivered cell, the corresponding input is informed of the internal buffer's status.

Advantages

- Total independence between input and output arbiters (a distributed design), with 1/N of the complexity of a centralized scheduler.
- Much better switch performance, because there is much less output contention: a combination of IQ and OQ switches.
- Disadvantage: the crossbar is more complicated.

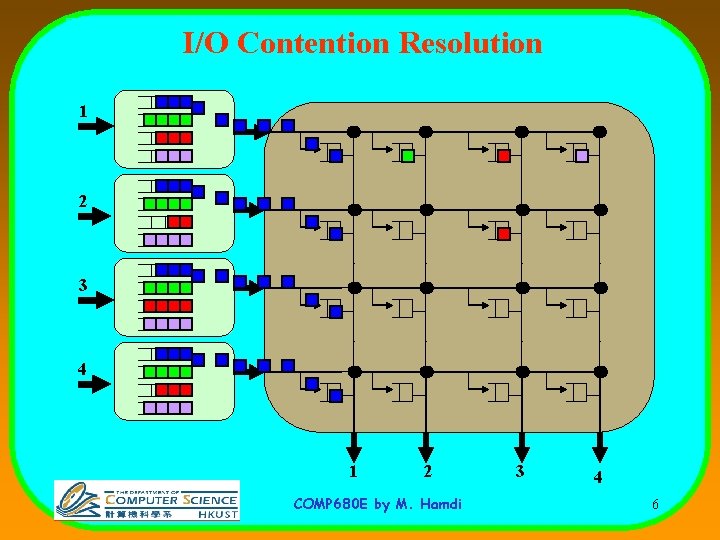

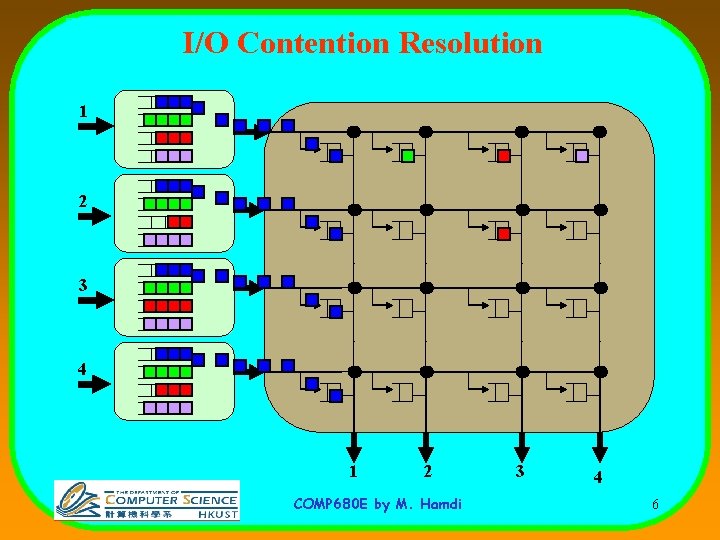

I/O Contention Resolution (figure: ports labeled 1 to 4 on the input side and on the output side)

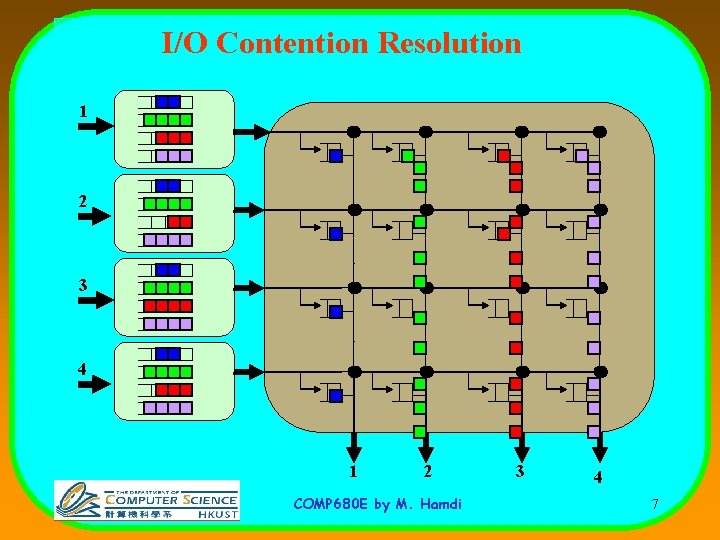

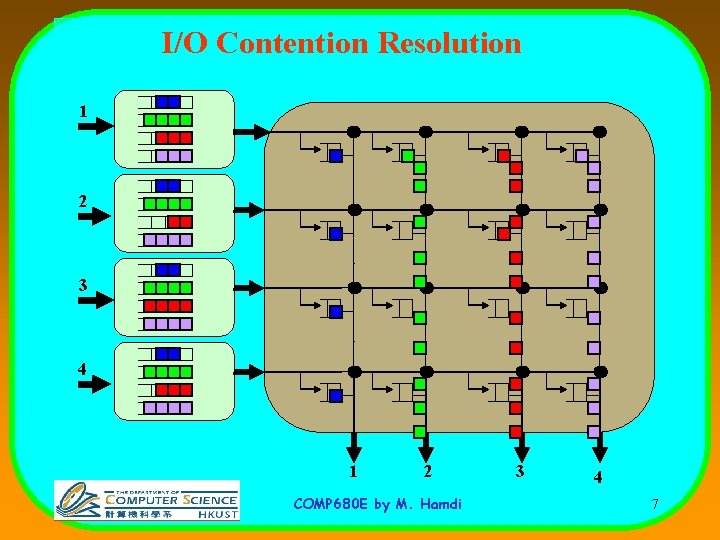

I/O Contention Resolution (figure: ports labeled 1 to 4 on the input side and on the output side)

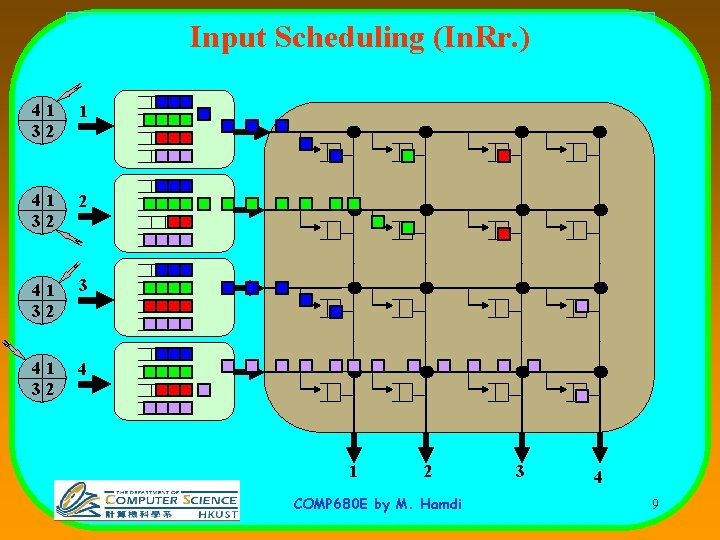

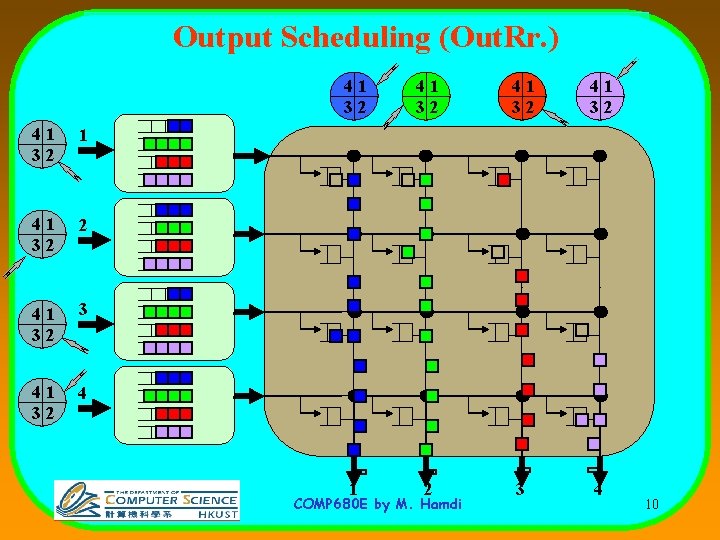

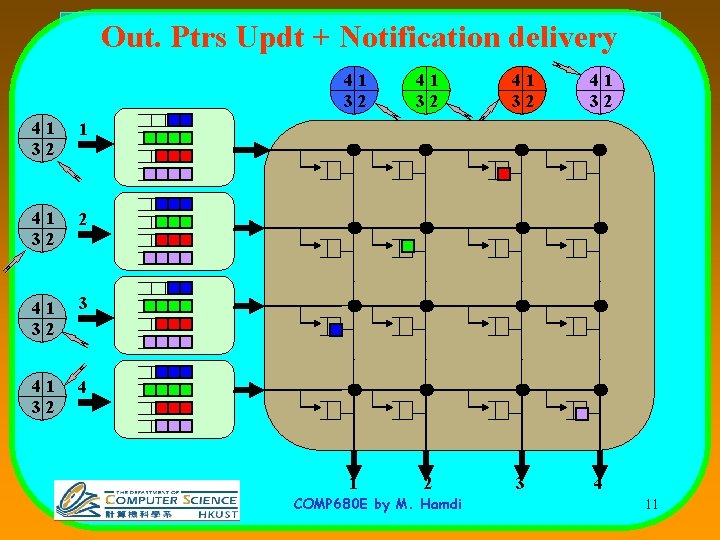

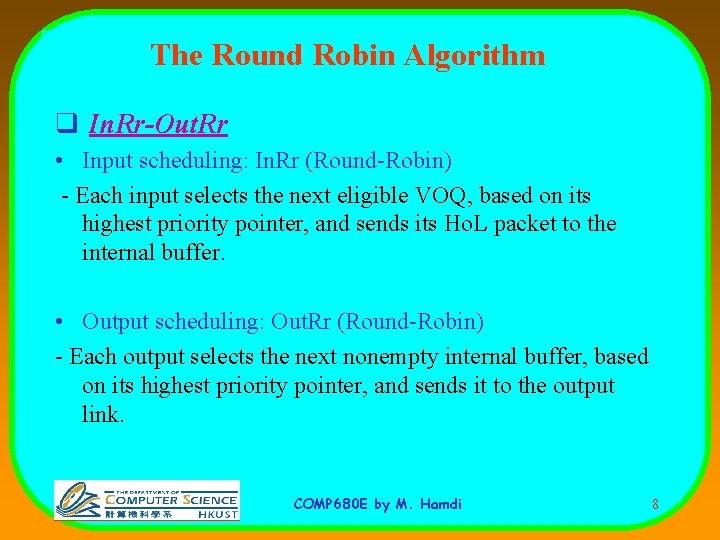

The Round Robin Algorithm: InRr-OutRr

- Input scheduling, InRr (round-robin): each input selects the next eligible VOQ, starting from its highest-priority pointer, and sends that VOQ's HoL packet to the internal buffer.
- Output scheduling, OutRr (round-robin): each output selects the next nonempty internal buffer, starting from its highest-priority pointer, and sends its cell to the output link.
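The two round-robin steps can be sketched in a few lines of Python. This is only an illustrative model, not the lecture's implementation: it assumes one-cell crosspoint buffers, runs the input phase before the output phase within a slot, and advances each pointer to one position past the chosen entry. The names `round_robin_pick` and `rr_rr_time_slot` are hypothetical.

```python
def round_robin_pick(eligible, pointer):
    """Return the first eligible index at or after `pointer` (wrapping), or None."""
    n = len(eligible)
    for offset in range(n):
        idx = (pointer + offset) % n
        if eligible[idx]:
            return idx
    return None


def rr_rr_time_slot(voq, xb, in_ptr, out_ptr):
    """Run one time slot of round-robin input and output scheduling.

    voq[i][j]  : number of cells queued at input i for output j
    xb[i][j]   : True if crosspoint buffer (i, j) holds a cell (assumed one cell deep)
    in_ptr[i]  : round-robin pointer of input i, ranging over outputs
    out_ptr[j] : round-robin pointer of output j, ranging over inputs
    Returns the (input, output) pairs delivered in this slot.
    """
    n = len(voq)
    # Input scheduling: a VOQ is eligible if it is nonempty and its crosspoint buffer is free.
    for i in range(n):
        eligible = [voq[i][j] > 0 and not xb[i][j] for j in range(n)]
        j = round_robin_pick(eligible, in_ptr[i])
        if j is not None:
            voq[i][j] -= 1
            xb[i][j] = True
            in_ptr[i] = (j + 1) % n
    # Output scheduling: each output serves the next occupied buffer in its column.
    delivered = []
    for j in range(n):
        occupied = [xb[i][j] for i in range(n)]
        i = round_robin_pick(occupied, out_ptr[j])
        if i is not None:
            xb[i][j] = False  # in this model, freeing the buffer stands in for the notification
            out_ptr[j] = (i + 1) % n
            delivered.append((i, j))
    return delivered
```

Note that each input and each output decides on its own, using only its row or column of buffer state, which is the distributed property the architecture is after.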

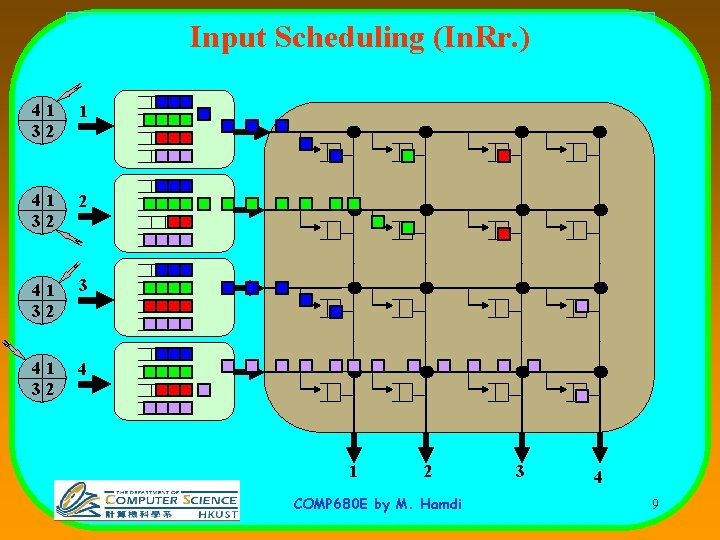

Input Scheduling (InRr) (figure: 4 x 4 example)

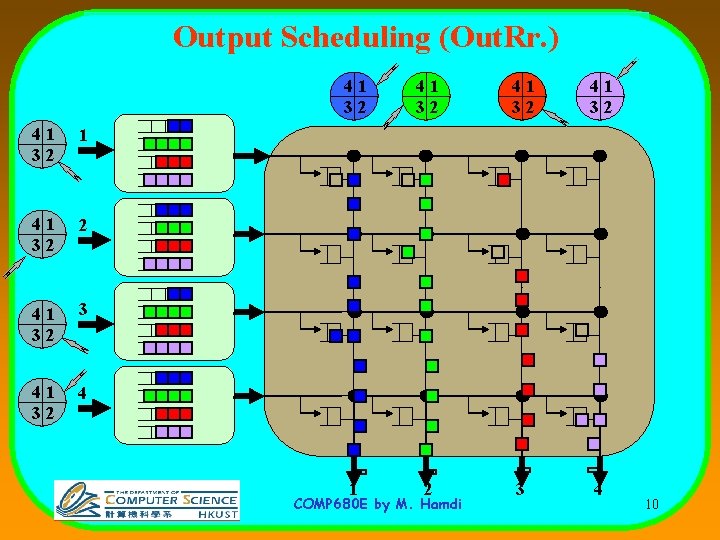

Output Scheduling (OutRr) (figure: 4 x 4 example)

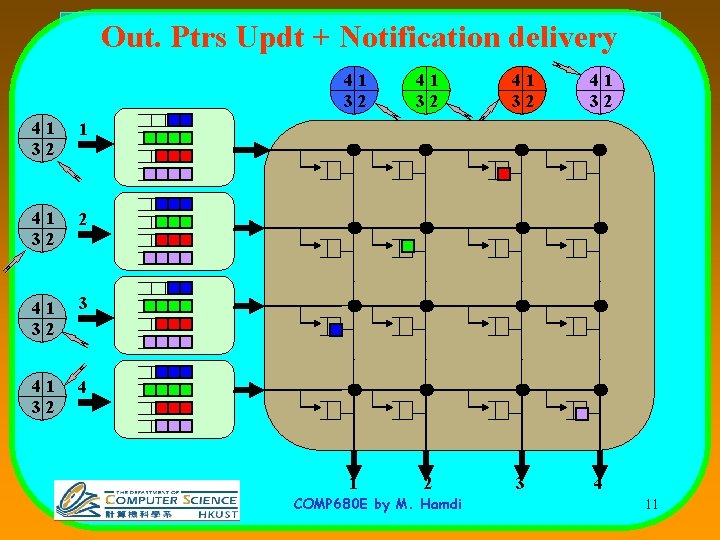

Output Pointer Update and Delivery Notification (figure: 4 x 4 example)

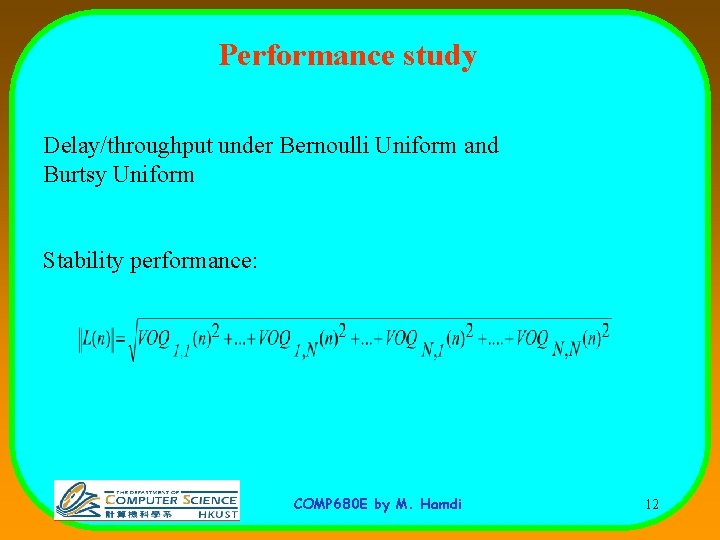

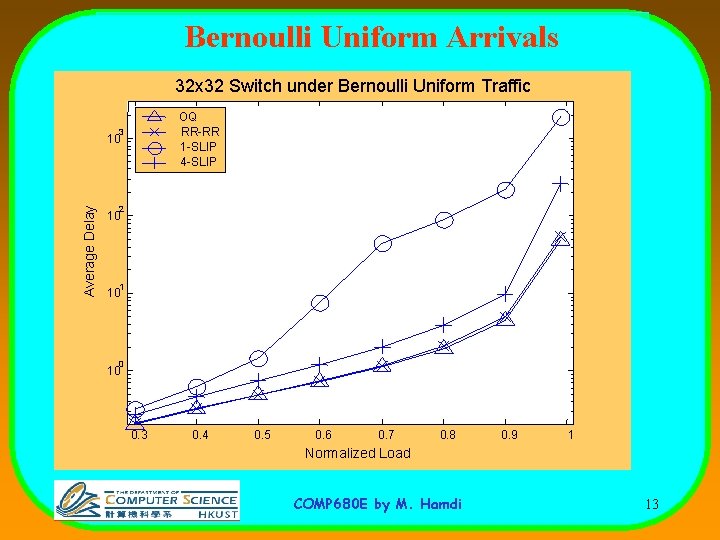

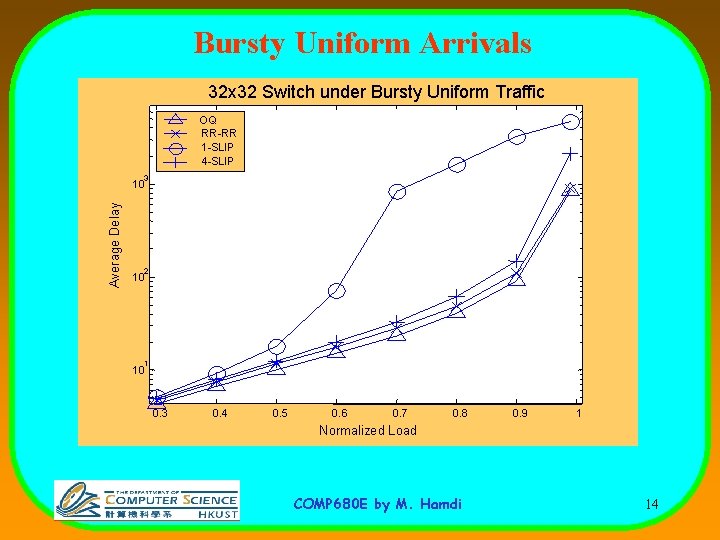

Performance study

- Delay and throughput under Bernoulli uniform and bursty uniform traffic
- Stability performance

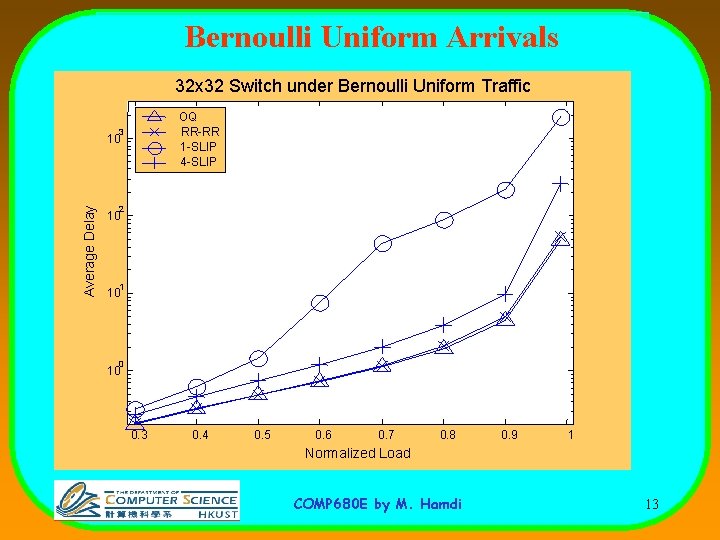

Bernoulli Uniform Arrivals (figure: 32 x 32 switch under Bernoulli uniform traffic; average delay, log scale, versus normalized load from 0.3 to 1; curves: OQ, RR-RR, 1-SLIP, 4-SLIP)

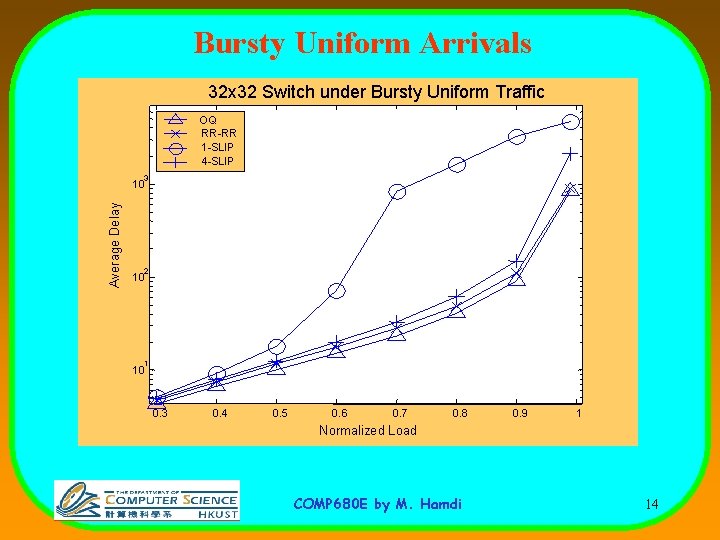

Bursty Uniform Arrivals (figure: 32 x 32 switch under bursty uniform traffic; average delay, log scale, versus normalized load from 0.3 to 1; curves: OQ, RR-RR, 1-SLIP, 4-SLIP)

Scheduling Process

Because the arbitration is simple:

- We can afford weight-based algorithms, for example LQF or OCF.
- We can afford algorithms that provide QoS.

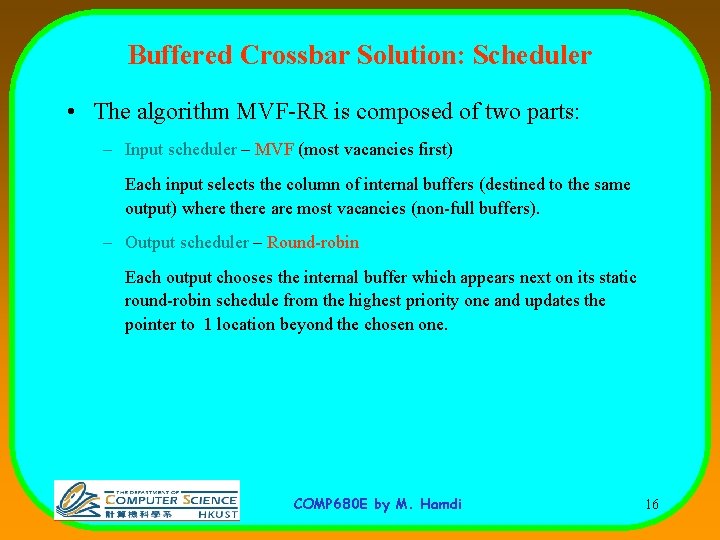

Buffered Crossbar Solution: Scheduler

The MVF-RR algorithm is composed of two parts:

- Input scheduler, MVF (most vacancies first): each input selects the column of internal buffers (those destined to the same output) that has the most vacancies (non-full buffers).
- Output scheduler, round-robin: each output chooses the internal buffer that appears next on its static round-robin schedule, starting from the highest-priority one, and updates the pointer to one location beyond the chosen buffer.
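As a rough illustration of the MVF input decision, the sketch below counts the free buffers in each output's column and picks the column with the most. It rests on assumptions the slide does not spell out: crosspoint buffers are one cell deep, an input can only choose an output for which it has a queued cell and a free crosspoint buffer of its own, and ties go to the lowest output index. The function name `mvf_select` is hypothetical.

```python
def mvf_select(voq_row, xb, i):
    """Most-vacancies-first choice for input i.

    voq_row[j] : number of cells input i has queued for output j
    xb[r][j]   : True if crosspoint buffer (r, j) is occupied (assumed one cell deep)
    Returns the chosen output index, or None if input i cannot send anything.
    """
    n = len(xb)
    best, best_vacancies = None, -1
    for j in range(n):
        # Skip outputs with nothing queued or whose own crosspoint buffer is full.
        if voq_row[j] == 0 or xb[i][j]:
            continue
        vacancies = sum(1 for r in range(n) if not xb[r][j])
        if vacancies > best_vacancies:  # strict '>' keeps the lowest index on ties
            best, best_vacancies = j, vacancies
    return best
```

Favoring the emptiest column steers cells toward outputs that are at risk of running dry, which is the intuition behind this family of input schedulers.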

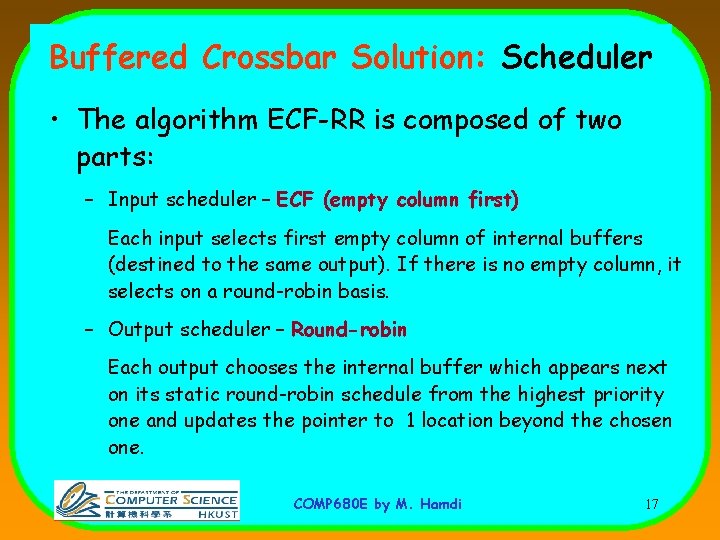

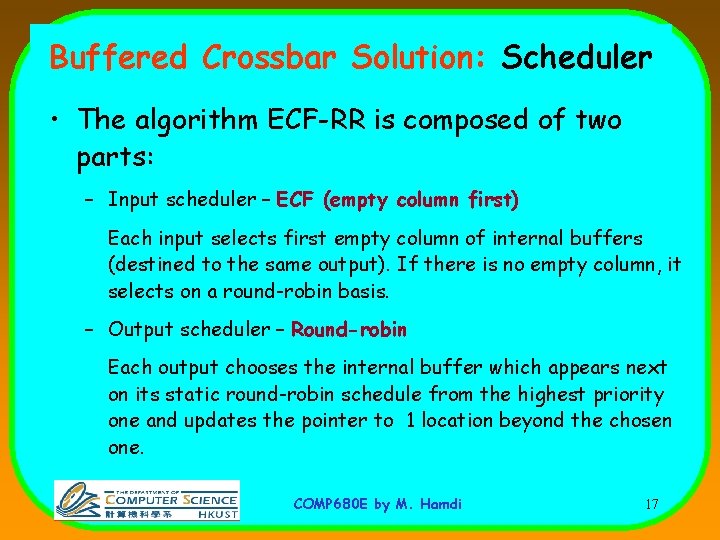

Buffered Crossbar Solution: Scheduler

The ECF-RR algorithm is composed of two parts:

- Input scheduler, ECF (empty column first): each input selects the first empty column of internal buffers (those destined to the same output). If there is no empty column, it selects on a round-robin basis.
- Output scheduler, round-robin: each output chooses the internal buffer that appears next on its static round-robin schedule, starting from the highest-priority one, and updates the pointer to one location beyond the chosen buffer.

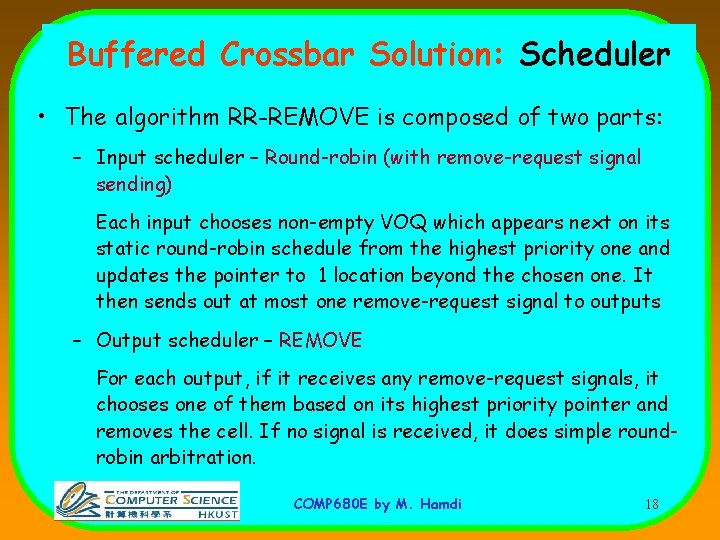

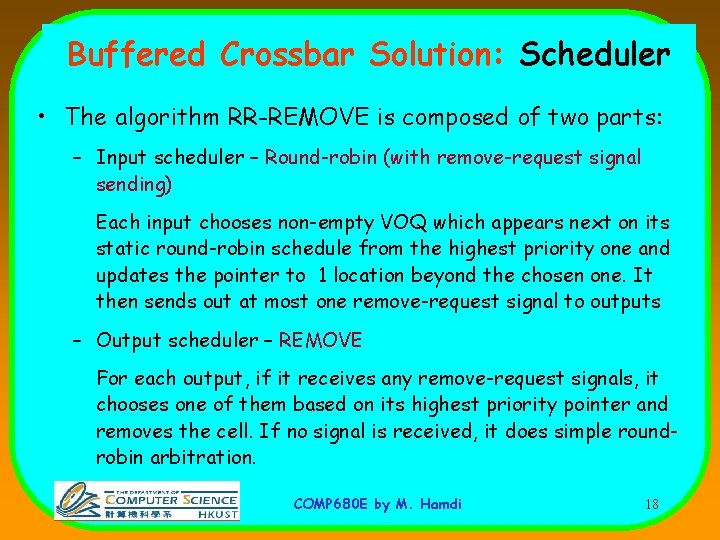

Buffered Crossbar Solution: Scheduler

The RR-REMOVE algorithm is composed of two parts:

- Input scheduler, round-robin (with remove-request signal sending): each input chooses the non-empty VOQ that appears next on its static round-robin schedule, starting from the highest-priority one, and updates the pointer to one location beyond the chosen VOQ. It then sends out at most one remove-request signal to the outputs.
- Output scheduler, REMOVE: if an output receives any remove-request signals, it chooses one of them based on its highest-priority pointer and removes the cell. If no signal is received, it does simple round-robin arbitration.

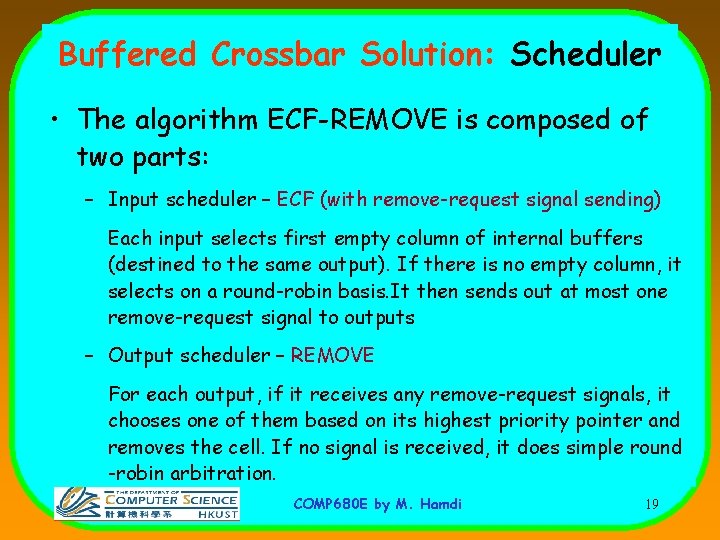

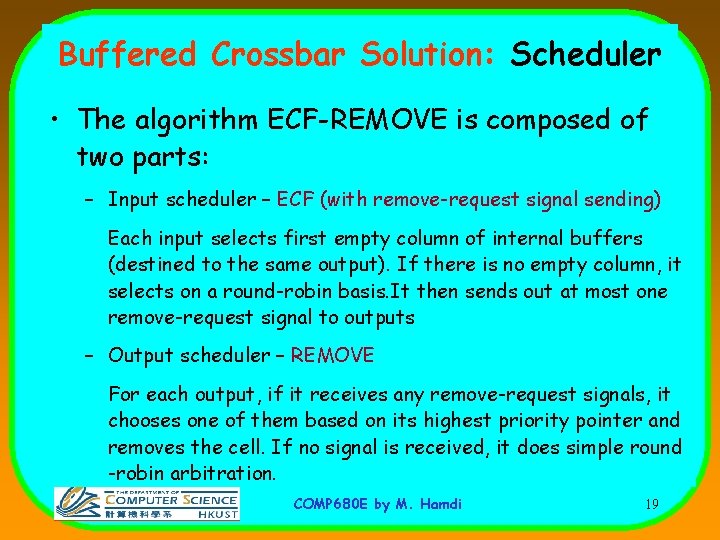

Buffered Crossbar Solution: Scheduler

The ECF-REMOVE algorithm is composed of two parts:

- Input scheduler, ECF (with remove-request signal sending): each input selects the first empty column of internal buffers (those destined to the same output). If there is no empty column, it selects on a round-robin basis. It then sends out at most one remove-request signal to the outputs.
- Output scheduler, REMOVE: if an output receives any remove-request signals, it chooses one of them based on its highest-priority pointer and removes the cell. If no signal is received, it does simple round-robin arbitration.

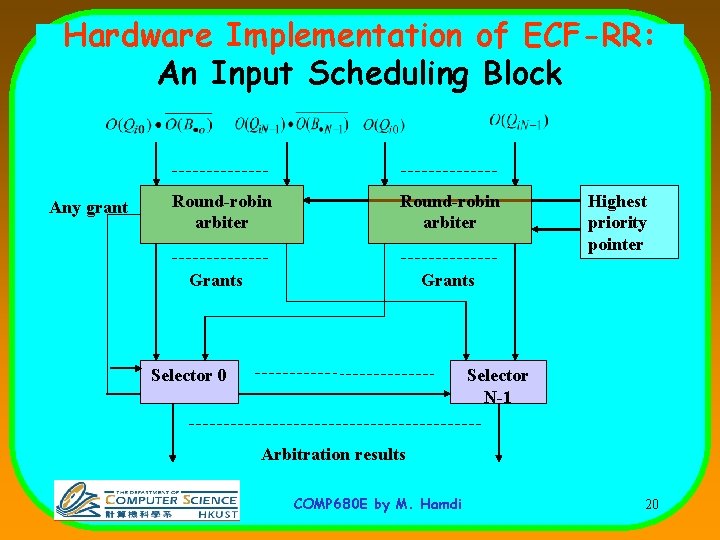

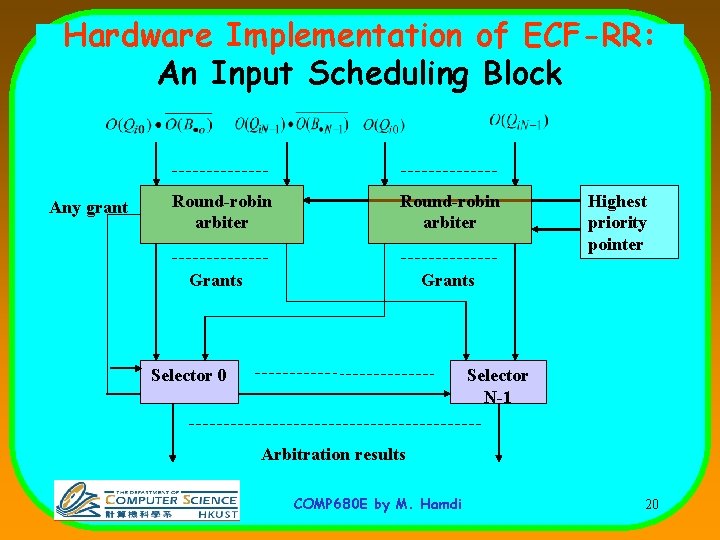

Hardware Implementation of ECF-RR: An Input Scheduling Block (figure labels: round-robin arbiter, highest priority pointer, grants, any grant, selectors 0 to N-1, arbitration results)

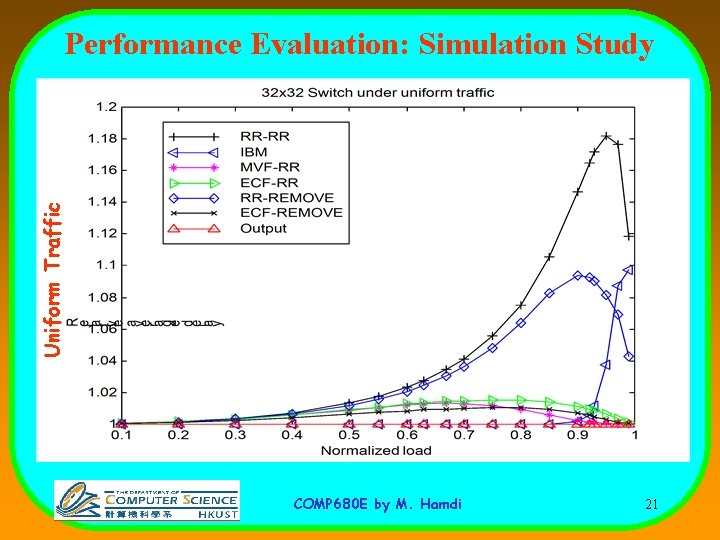

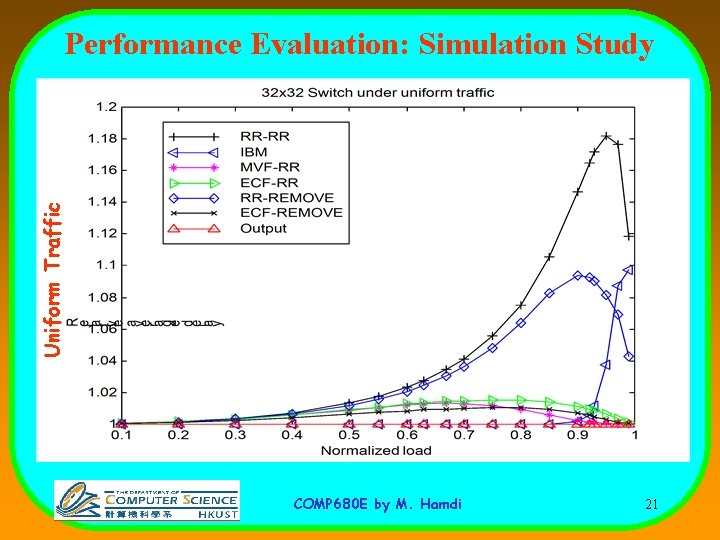

Performance Evaluation: Simulation Study (Uniform Traffic)

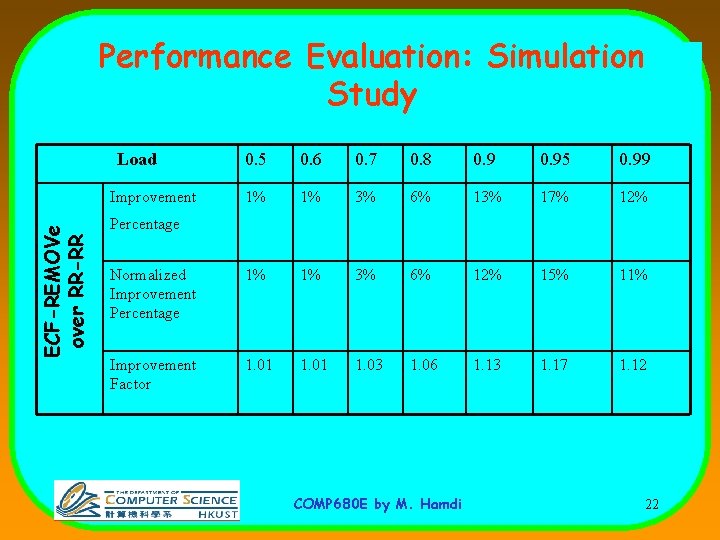

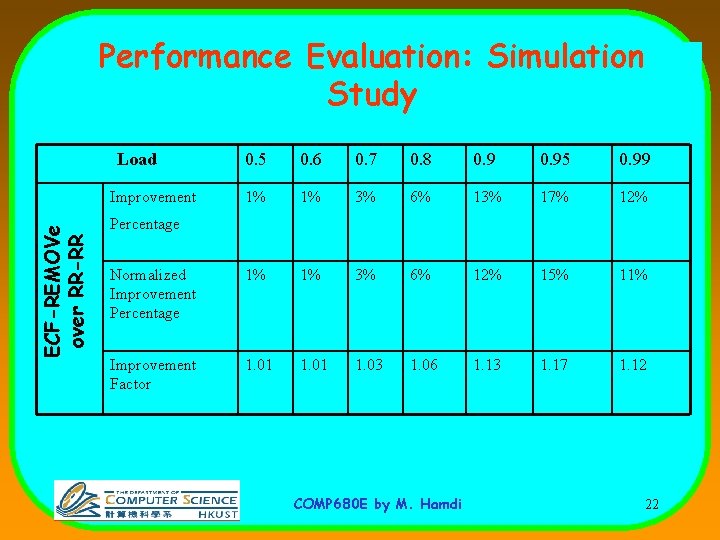

Performance Evaluation: Simulation Study (ECF-REMOVE over RR-RR)

- Load: 0.5, 0.6, 0.7, 0.8, 0.95, 0.99
- Improvement percentage: 1%, 1%, 3%, 6%, 13%, 17%, 12%
- Normalized improvement percentage: 1%, 1%, 3%, 6%, 12%, 15%, 11%
- Improvement factor: 1.01, 1.03, 1.06, 1.13, 1.17, 1.12

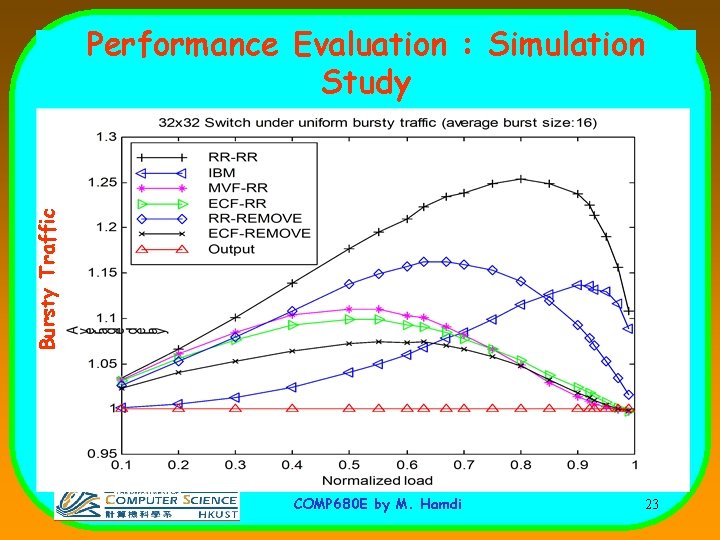

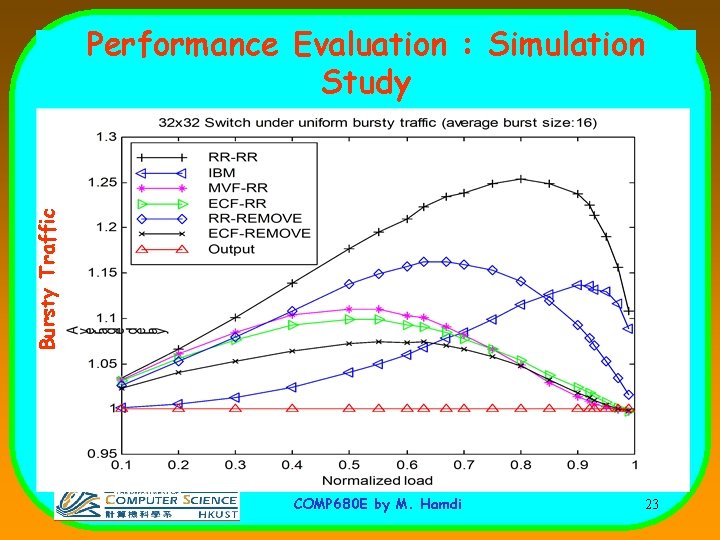

Performance Evaluation: Simulation Study (Bursty Traffic)

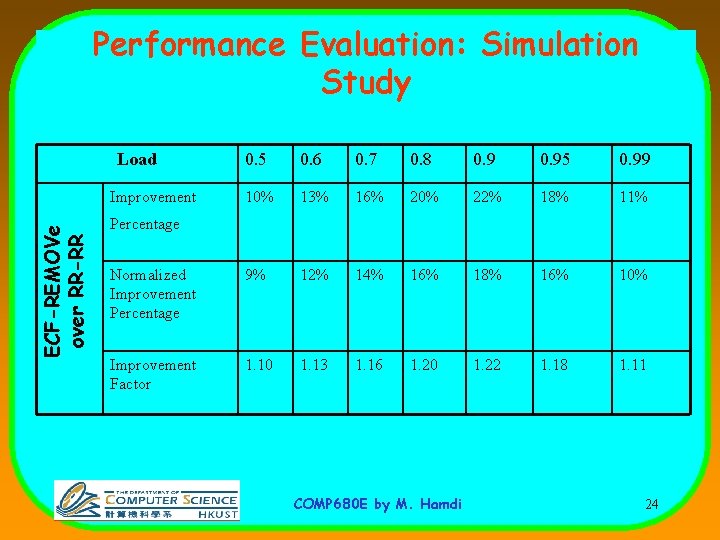

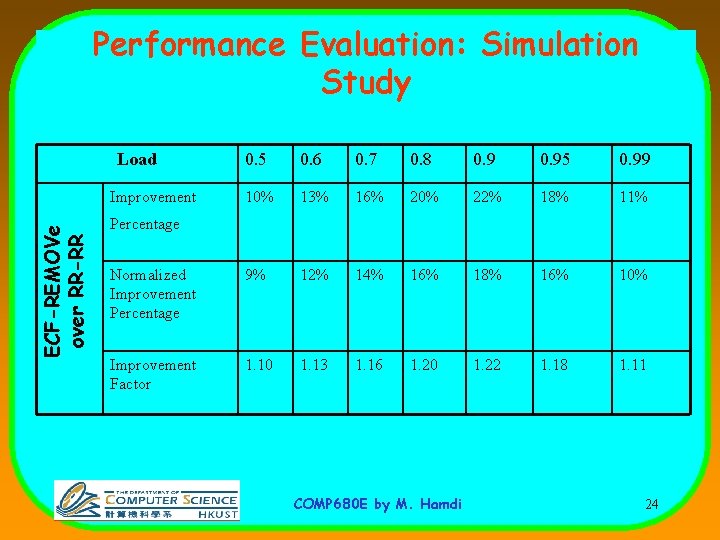

Performance Evaluation: Simulation Study (ECF-REMOVE over RR-RR)

- Load: 0.5, 0.6, 0.7, 0.8, 0.95, 0.99
- Improvement percentage: 10%, 13%, 16%, 20%, 22%, 18%, 11%
- Normalized improvement percentage: 9%, 12%, 14%, 16%, 18%, 16%, 10%
- Improvement factor: 1.10, 1.13, 1.16, 1.20, 1.22, 1.18, 1.11

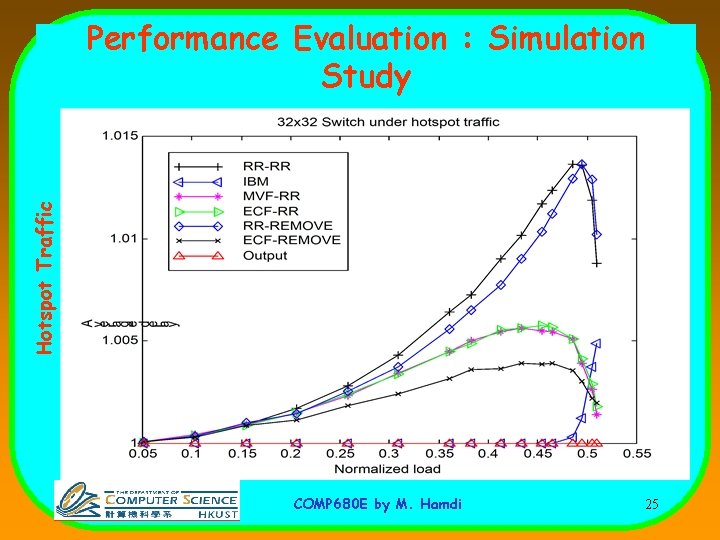

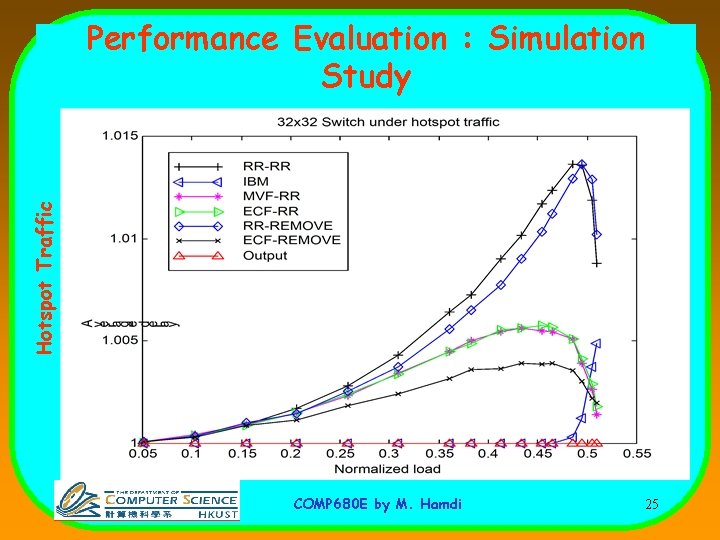

Performance Evaluation: Simulation Study (Hotspot Traffic)

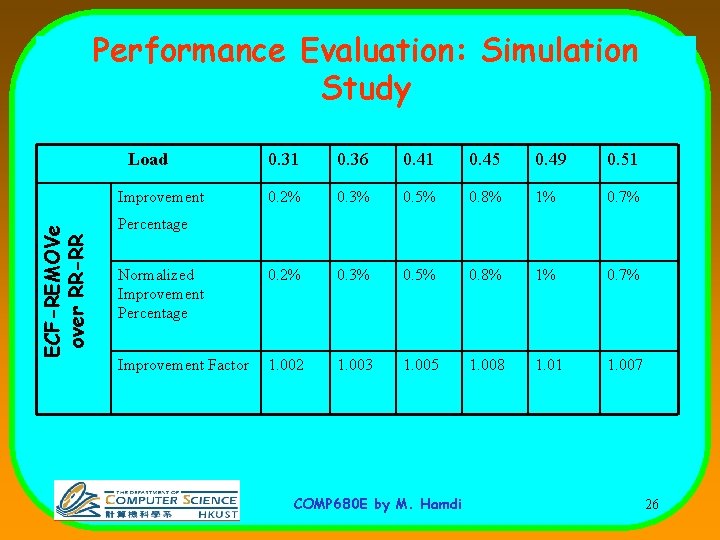

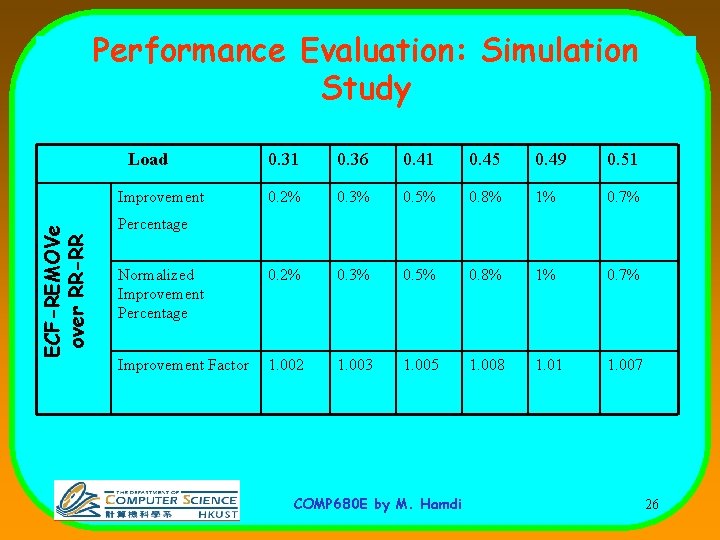

Performance Evaluation: Simulation Study (ECF-REMOVE over RR-RR)

- Load: 0.31, 0.36, 0.41, 0.45, 0.49, 0.51
- Improvement percentage: 0.2%, 0.3%, 0.5%, 0.8%, 1%, 0.7%
- Normalized improvement percentage: 0.2%, 0.3%, 0.5%, 0.8%, 1%, 0.7%
- Improvement factor: 1.002, 1.003, 1.005, 1.008, 1.01, 1.007

Quality of Service Mechanisms for Switches/Routers and the Internet

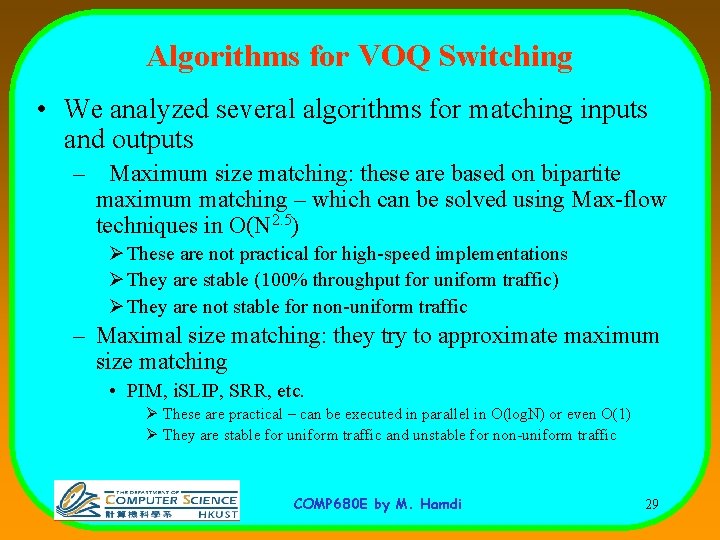

Recap

- High-performance switch design
  - We need scalable switch fabrics: crossbar, bit-sliced crossbar, Clos networks.
  - We need to solve the memory bandwidth problem.
    - Our conclusion is to go for input-queued switches.
    - We need to use VOQs instead of FIFO queues.
  - For these switches to function at high speed, we need efficient and practically implementable scheduling/arbitration algorithms.

Algorithms for VOQ Switching

- We analyzed several algorithms for matching inputs and outputs.
  - Maximum size matching: based on bipartite maximum matching, which can be solved using max-flow techniques in O(N^2.5).
    - These are not practical for high-speed implementations.
    - They are stable (100% throughput) for uniform traffic.
    - They are not stable for non-uniform traffic.
  - Maximal size matching: these try to approximate maximum size matching (PIM, iSLIP, SRR, etc.).
    - These are practical: they can be executed in parallel in O(log N) or even O(1).
    - They are stable for uniform traffic and unstable for non-uniform traffic.

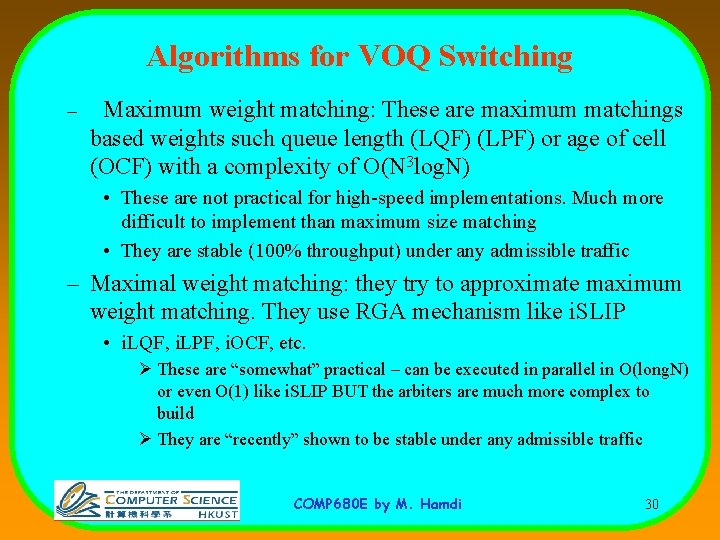

Algorithms for VOQ Switching

- Maximum weight matching: maximum matchings based on weights such as queue length (LQF, LPF) or age of cell (OCF), with a complexity of O(N^3 log N).
  - These are not practical for high-speed implementations, and are much more difficult to implement than maximum size matching.
  - They are stable (100% throughput) under any admissible traffic.
- Maximal weight matching: these try to approximate maximum weight matching, using a request-grant-accept (RGA) mechanism like iSLIP (iLQF, iLPF, iOCF, etc.).
  - These are "somewhat" practical: they can be executed in parallel in O(log N) or even O(1) like iSLIP, but the arbiters are much more complex to build.
  - They have "recently" been shown to be stable under any admissible traffic.

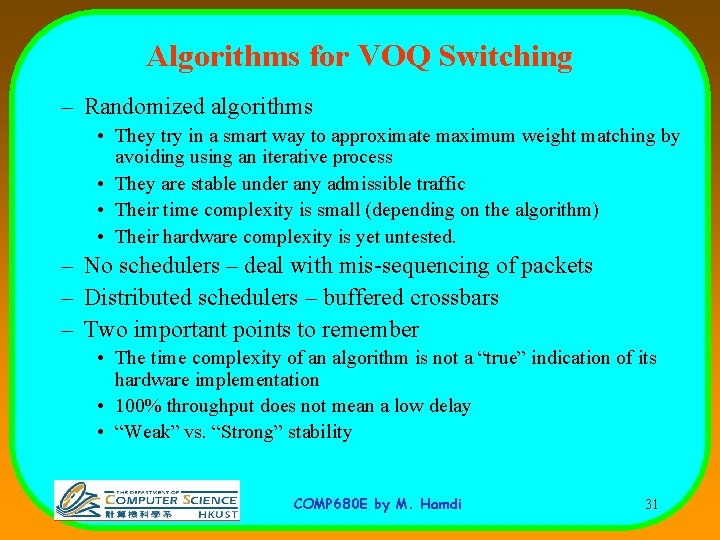

Algorithms for VOQ Switching

- Randomized algorithms
  - They try, in a smart way, to approximate maximum weight matching while avoiding an iterative process.
  - They are stable under any admissible traffic.
  - Their time complexity is small (depending on the algorithm).
  - Their hardware complexity is as yet untested.
- No schedulers: these must deal with mis-sequencing of packets.
- Distributed schedulers: buffered crossbars.
- Important points to remember
  - The time complexity of an algorithm is not a "true" indication of the complexity of its hardware implementation.
  - 100% throughput does not mean a low delay.
  - "Weak" vs. "strong" stability.

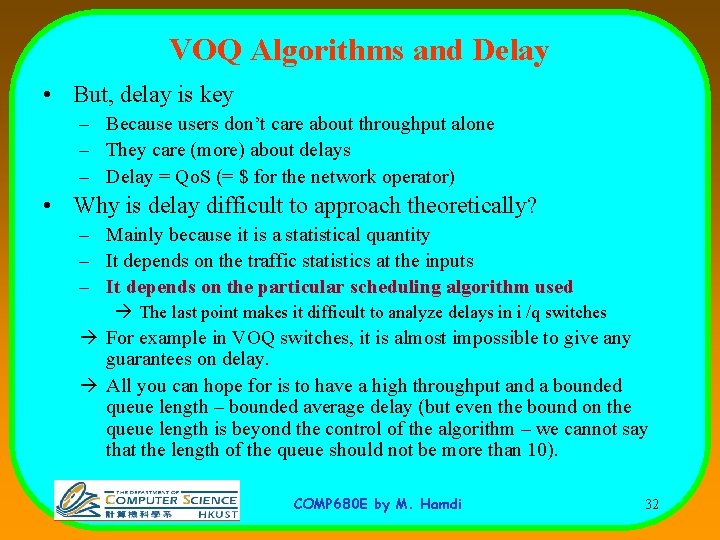

VOQ Algorithms and Delay

- But delay is key.
  - Users don't care about throughput alone.
  - They care (more) about delays.
  - Delay = QoS (= $ for the network operator).
- Why is delay difficult to approach theoretically?
  - Mainly because it is a statistical quantity.
  - It depends on the traffic statistics at the inputs.
  - It depends on the particular scheduling algorithm used.

The last point makes it difficult to analyze delays in input-queued switches. For example, in VOQ switches it is almost impossible to give any guarantees on delay. All you can hope for is high throughput and a bounded queue length, and hence a bounded average delay. Even the bound on the queue length is beyond the control of the algorithm: we cannot require, say, that the queue length never exceed 10.

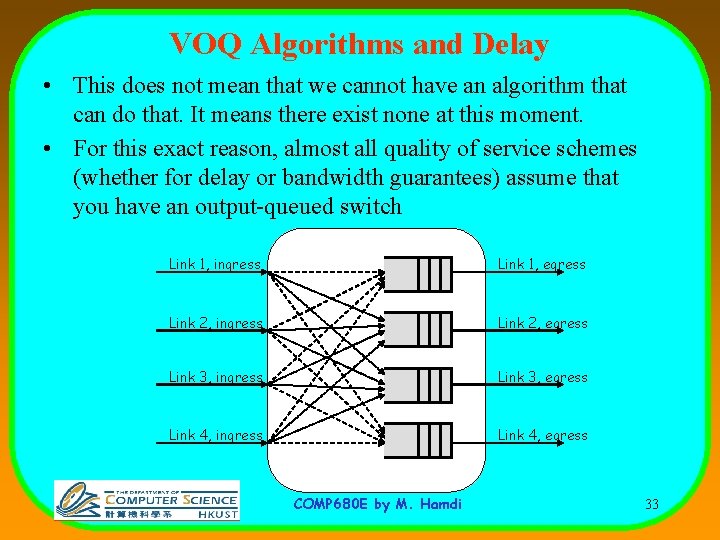

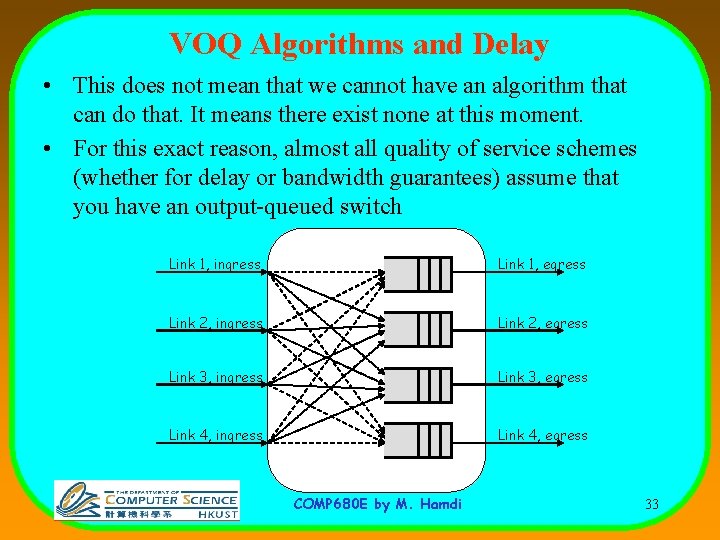

VOQ Algorithms and Delay

- This does not mean that no algorithm can do that. It means that none exists at this moment.
- For this exact reason, almost all quality of service schemes (whether for delay or bandwidth guarantees) assume an output-queued switch.

(figure: switch with links 1 to 4, each with an ingress and an egress side)

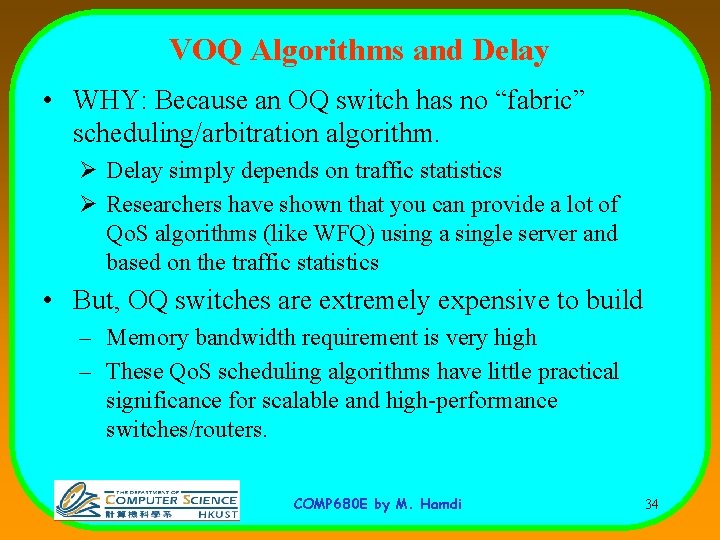

VOQ Algorithms and Delay

- Why? Because an OQ switch has no "fabric" scheduling/arbitration algorithm.
  - Delay simply depends on traffic statistics.
  - Researchers have shown that many QoS algorithms (like WFQ) can be provided using a single server, based on the traffic statistics.
- But OQ switches are extremely expensive to build.
  - The memory bandwidth requirement is very high.
  - These QoS scheduling algorithms have little practical significance for scalable, high-performance switches/routers.

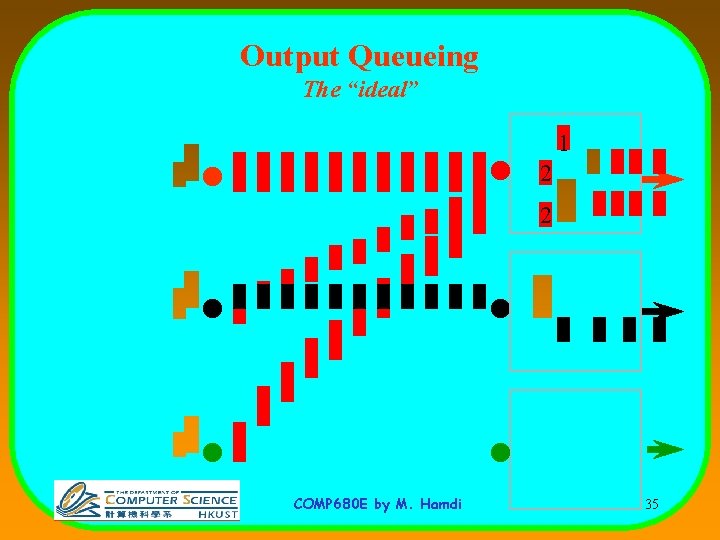

Output Queueing: The "ideal" (figure)

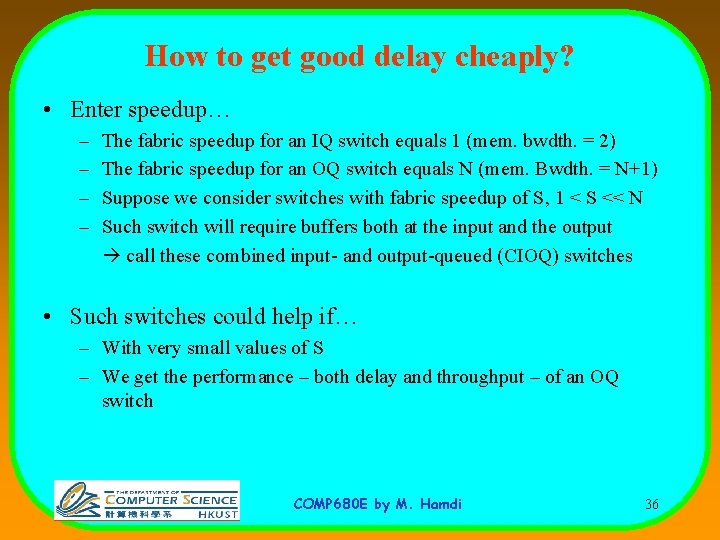

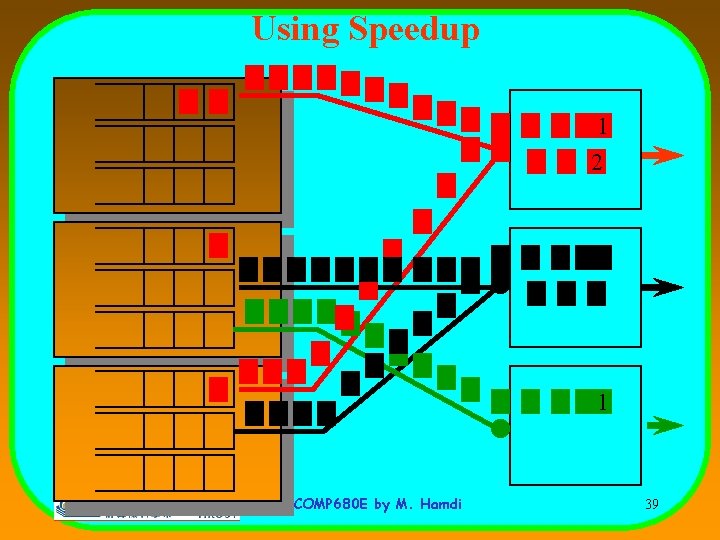

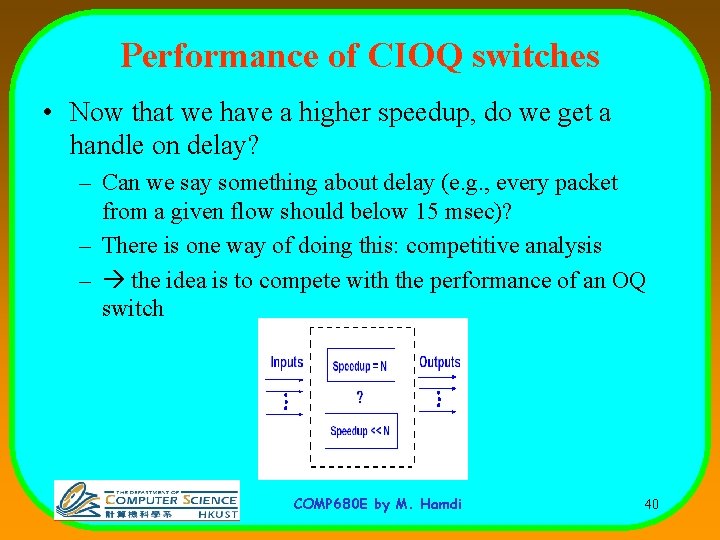

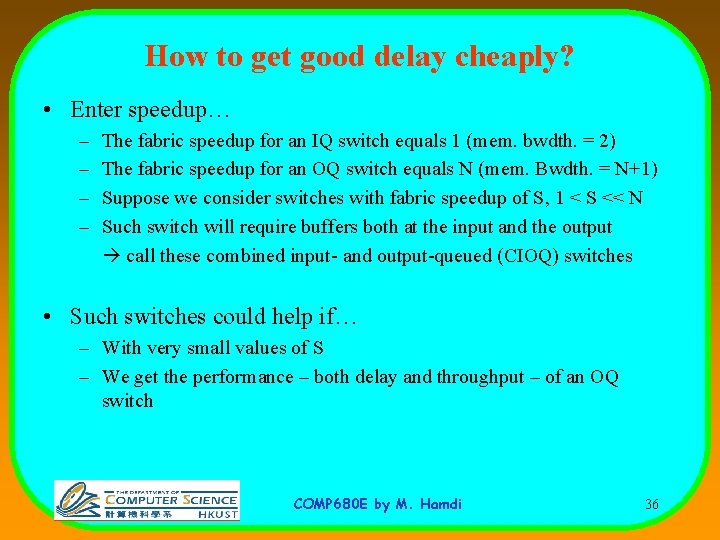

How to get good delay cheaply?

- Enter speedup...
  - The fabric speedup for an IQ switch equals 1 (memory bandwidth = 2).
  - The fabric speedup for an OQ switch equals N (memory bandwidth = N + 1).
  - Suppose we consider switches with a fabric speedup of S, where 1 < S << N.
  - Such a switch requires buffers at both the input and the output; call these combined input- and output-queued (CIOQ) switches.
- Such switches could help if...
  - with very small values of S
  - we get the performance, both delay and throughput, of an OQ switch.

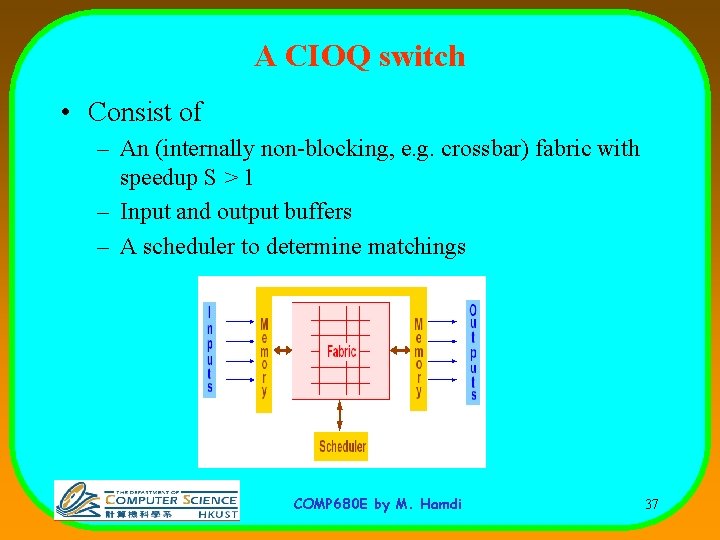

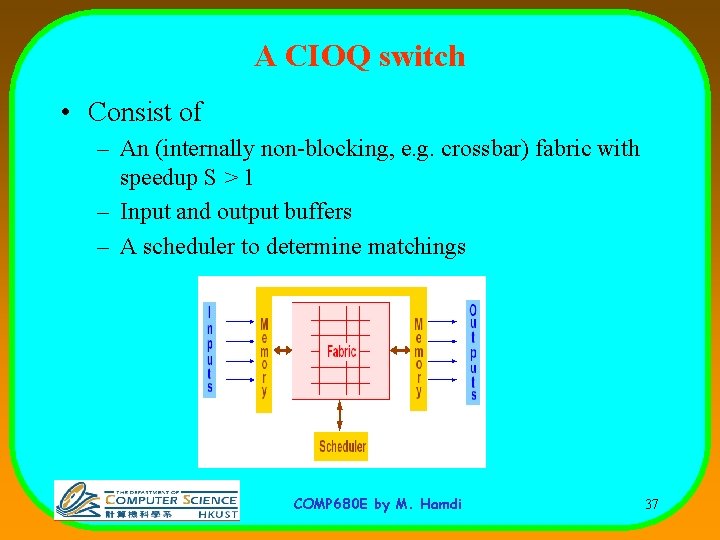

A CIOQ switch

- Consists of
  - an (internally non-blocking, e.g. crossbar) fabric with speedup S > 1
  - input and output buffers
  - a scheduler to determine matchings

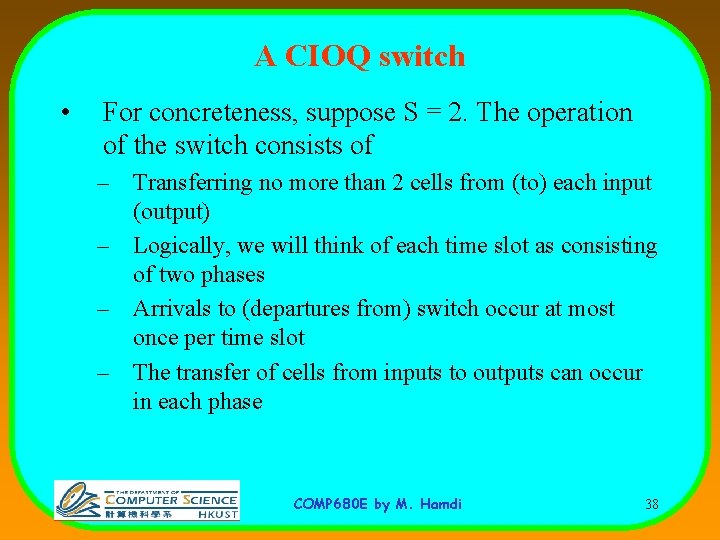

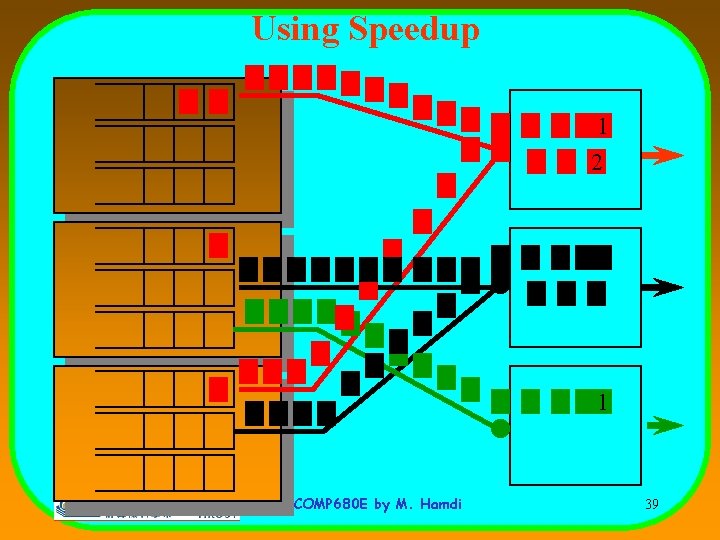

A CIOQ switch

- For concreteness, suppose S = 2. The operation of the switch consists of transferring no more than 2 cells from (to) each input (output) per time slot.
  - Logically, we think of each time slot as consisting of two phases.
  - Arrivals to (departures from) the switch occur at most once per time slot.
  - The transfer of cells from inputs to outputs can occur in each phase.

Using Speedup (figure)

Performance of CIOQ switches

- Now that we have a higher speedup, do we get a handle on delay?
  - Can we say something about delay (e.g., every packet from a given flow should experience a delay below 15 msec)?
  - There is one way of doing this: competitive analysis. The idea is to compete with the performance of an OQ switch.

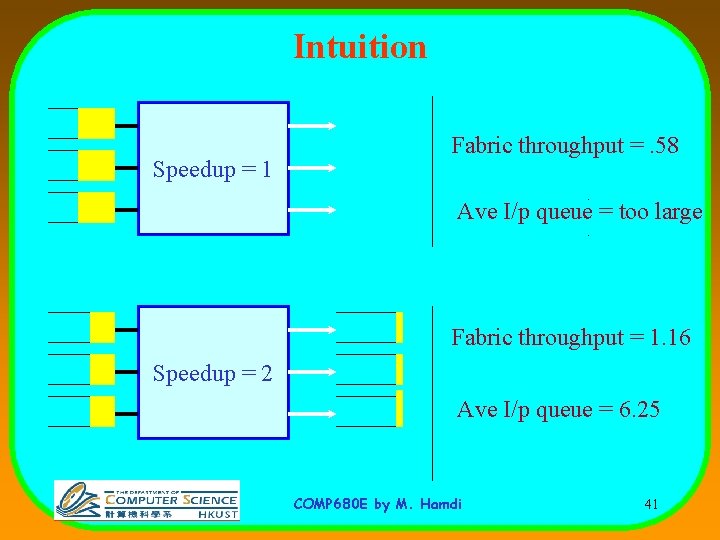

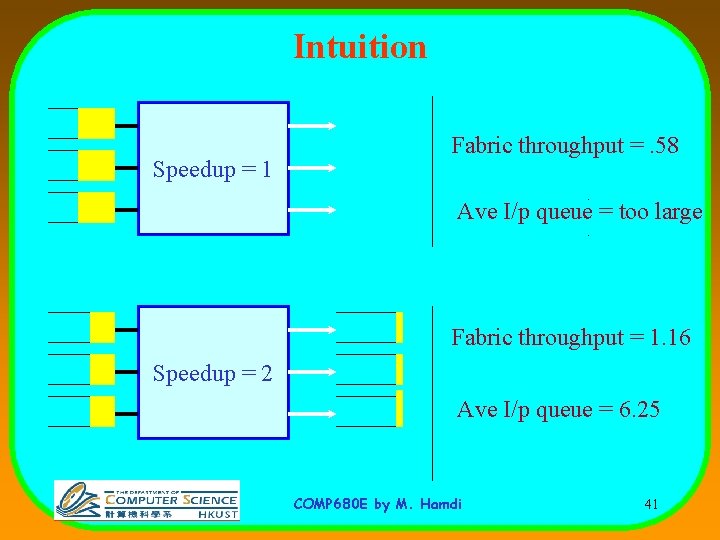

Intuition

- Speedup = 1: fabric throughput = 0.58, average input queue = too large
- Speedup = 2: fabric throughput = 1.16, average input queue = 6.25

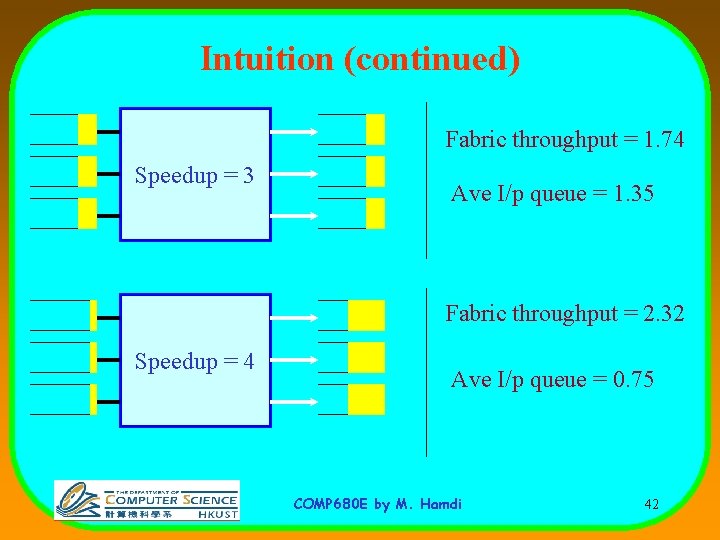

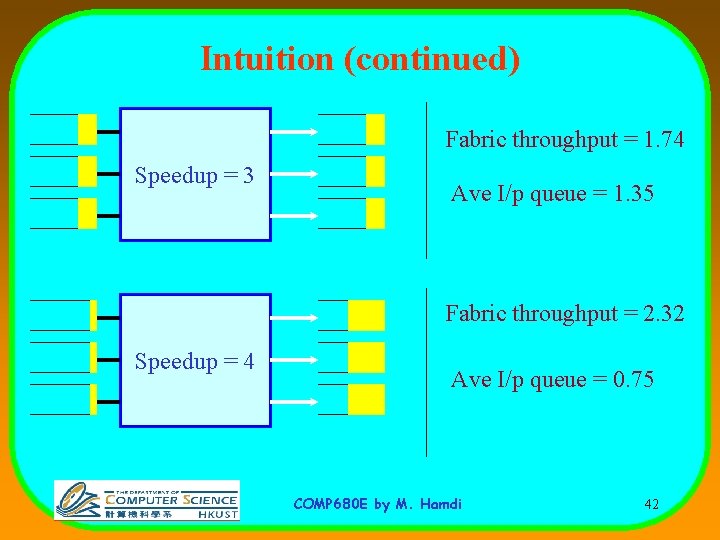

Intuition (continued)

- Speedup = 3: fabric throughput = 1.74, average input queue = 1.35
- Speedup = 4: fabric throughput = 2.32, average input queue = 0.75

Performance of CIOQ switches

- The setup
  - Under arbitrary, but identical, inputs (packet by packet),
  - is it possible to replace an OQ switch with a CIOQ switch and schedule the CIOQ switch so that the outputs are identical packet by packet, that is, to exactly mimic an OQ switch?
  - If yes, what is the scheduling algorithm?

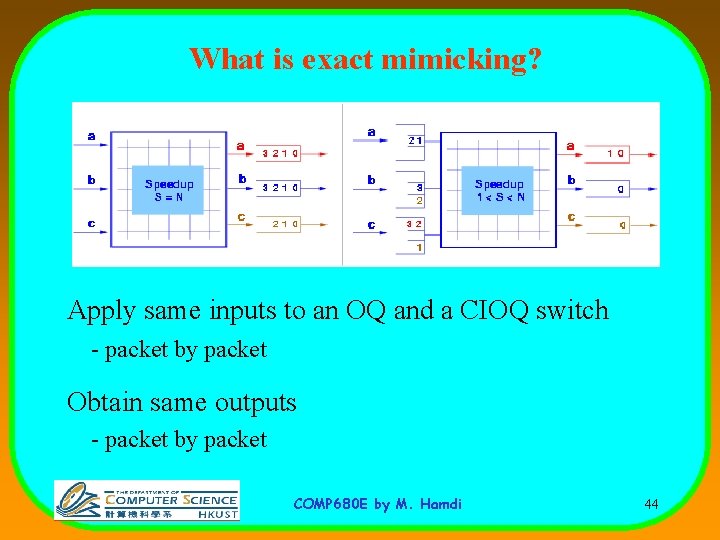

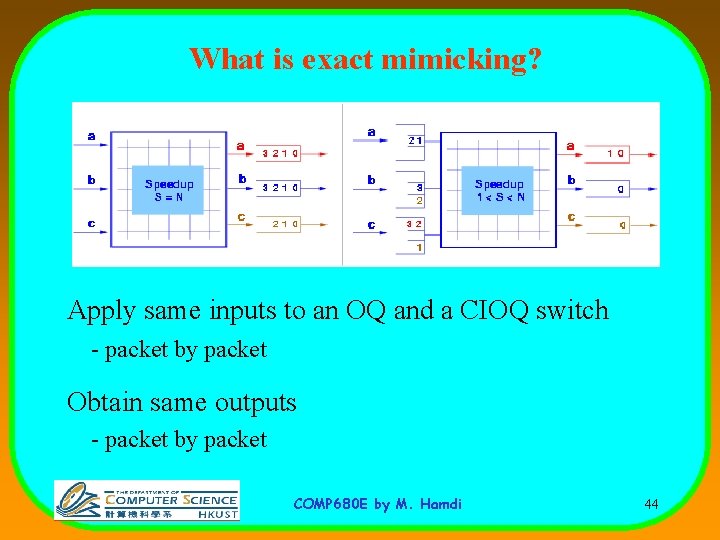

What is exact mimicking?

- Apply the same inputs to an OQ switch and a CIOQ switch, packet by packet.
- Obtain the same outputs, packet by packet.

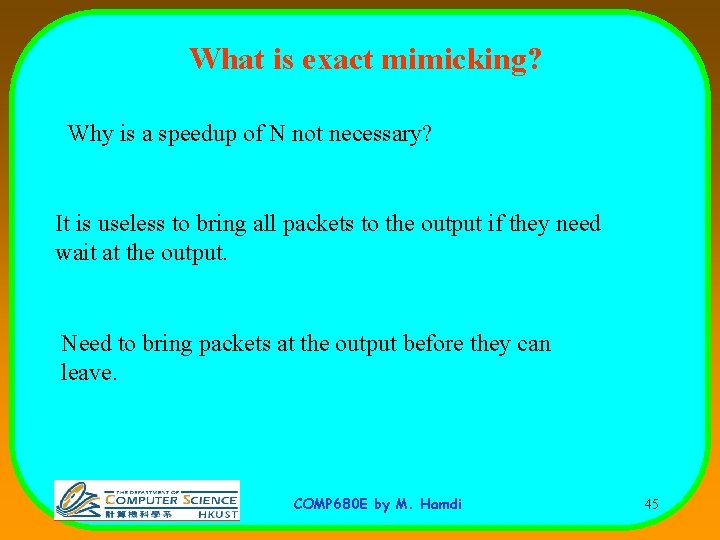

What is exact mimicking?

Why is a speedup of N not necessary? It is useless to bring all packets to the output if they then have to wait there. Packets only need to be brought to the output before they are due to leave.

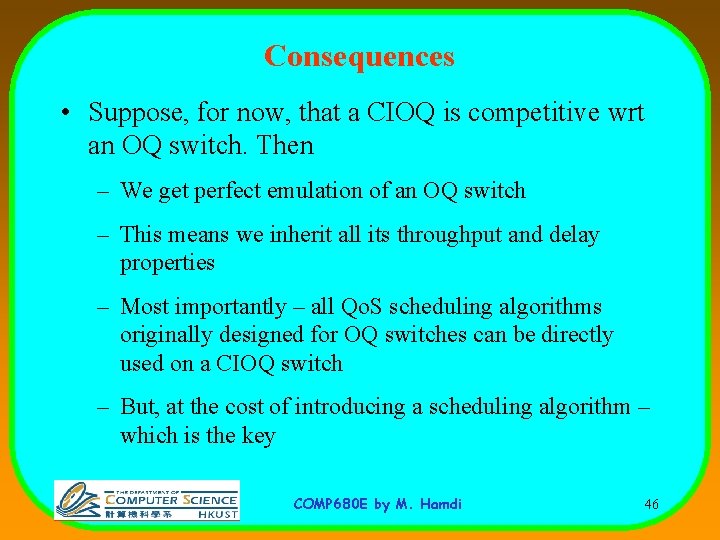

Consequences

- Suppose, for now, that a CIOQ switch is competitive with respect to an OQ switch. Then:
  - We get perfect emulation of an OQ switch.
  - This means we inherit all its throughput and delay properties.
  - Most importantly, all QoS scheduling algorithms originally designed for OQ switches can be used directly on a CIOQ switch.
  - But this comes at the cost of introducing a scheduling algorithm, which is the key.

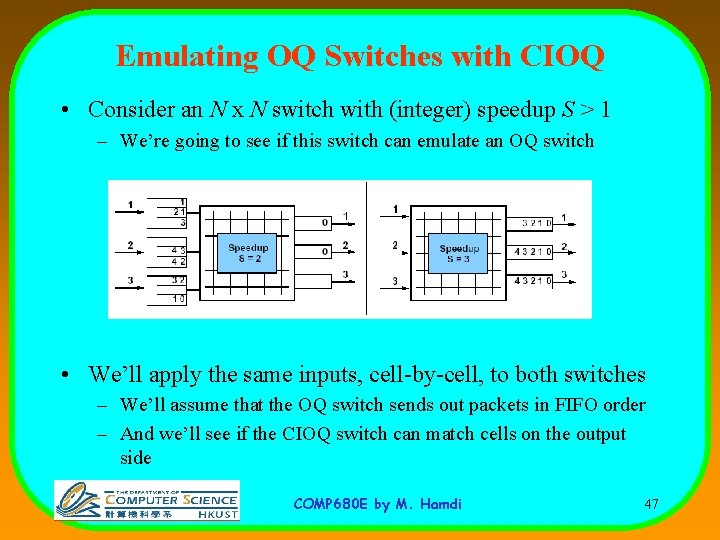

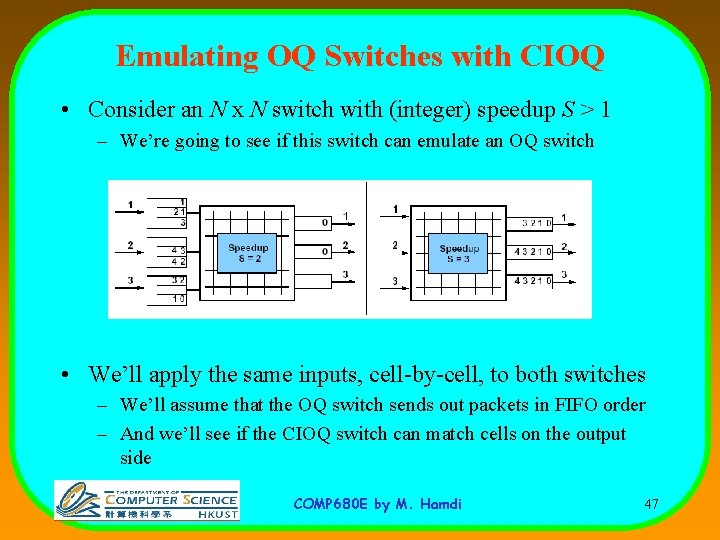

Emulating OQ Switches with CIOQ

- Consider an N x N switch with (integer) speedup S > 1.
  - We are going to see if this switch can emulate an OQ switch.
- We apply the same inputs, cell by cell, to both switches.
  - We assume that the OQ switch sends out packets in FIFO order.
  - We then see if the CIOQ switch can match cells on the output side.

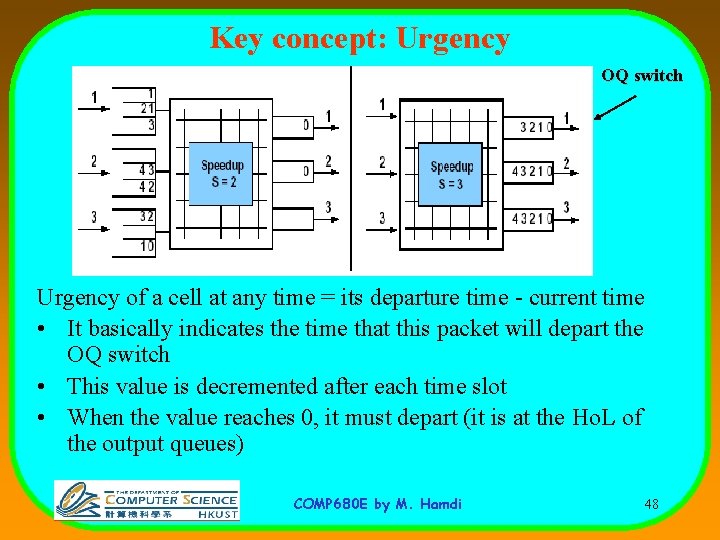

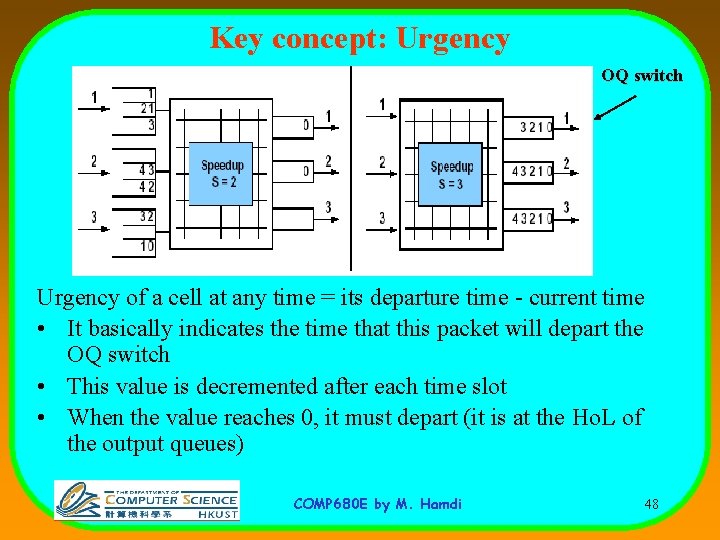

Key concept: Urgency

Urgency of a cell at any time = its departure time from the OQ switch - current time

- It indicates how much time remains before this packet departs the OQ switch.
- This value is decremented after each time slot.
- When the value reaches 0, the cell must depart (it is at the HoL of its output queue).

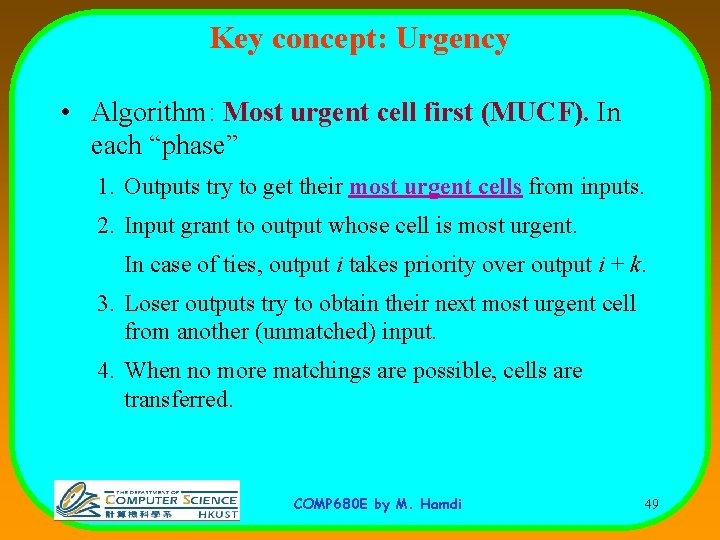

Key concept: Urgency

Algorithm: most urgent cell first (MUCF). In each "phase":

1. Outputs try to get their most urgent cells from inputs.
2. Each input grants to the output whose cell is most urgent. In case of ties, output i takes priority over output i + k.
3. Loser outputs try to obtain their next most urgent cell from another (unmatched) input.
4. When no more matchings are possible, cells are transferred.
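The four MUCF steps can be sketched as an iterative request/grant loop. This is a minimal model under stated assumptions, not the lecture's implementation: urgencies are given as a matrix (smaller means more urgent), `None` marks an empty VOQ, and an output with two equally urgent cells asks the lower-numbered input first. The name `mucf_phase` and the 0-based port numbering are hypothetical.

```python
def mucf_phase(urgency):
    """Compute the matching for one MUCF phase.

    urgency[i][j] : urgency of the HoL cell of VOQ (i, j), or None if that VOQ is empty
    Returns a dict mapping each matched output to its input.
    """
    n = len(urgency)
    match_of_input = {}   # input  -> output
    match_of_output = {}  # output -> input
    while True:
        # Steps 1 and 3: every unmatched output requests the unmatched input
        # that holds its most urgent remaining cell.
        requests = {}  # input -> list of (urgency, output)
        for j in range(n):
            if j in match_of_output:
                continue
            candidates = [(urgency[i][j], i) for i in range(n)
                          if i not in match_of_input and urgency[i][j] is not None]
            if candidates:
                u, i = min(candidates)
                requests.setdefault(i, []).append((u, j))
        if not requests:
            break  # Step 4: no further matching is possible
        # Step 2: each input grants the most urgent request; ties go to the lowest output.
        for i, reqs in requests.items():
            _, j = min(reqs)
            match_of_input[i] = j
            match_of_output[j] = i
    return match_of_output
```

Every pass through the loop matches at least one input, so the loop ends after at most N passes.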

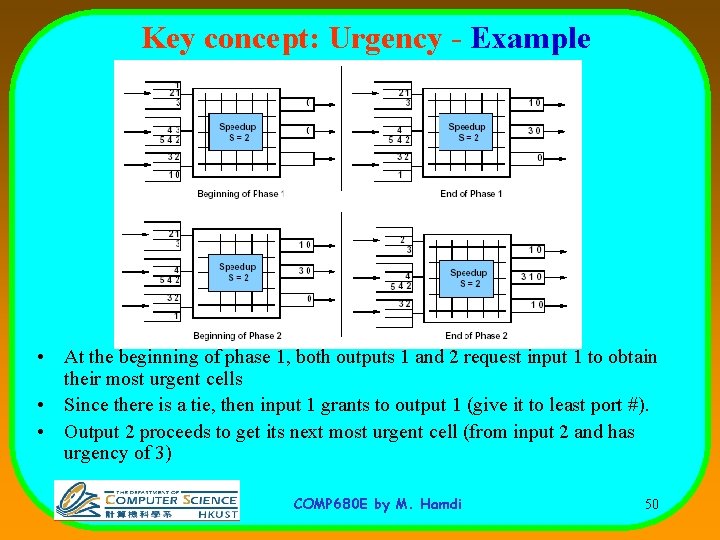

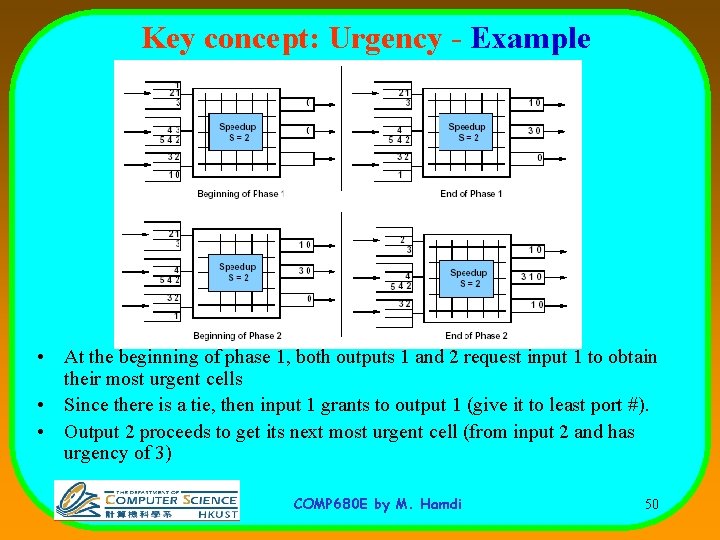

Key concept: Urgency - Example

- At the beginning of phase 1, both outputs 1 and 2 request input 1 to obtain their most urgent cells.
- Since there is a tie, input 1 grants to output 1 (the lowest port number wins).
- Output 2 proceeds to get its next most urgent cell (from input 2, with urgency 3).

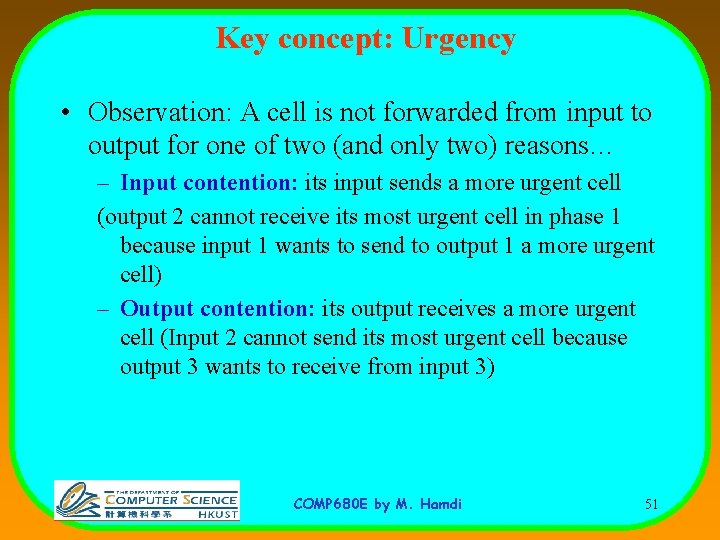

Key concept: Urgency

Observation: a cell is not forwarded from input to output for one of two (and only two) reasons:

- Input contention: its input sends a more urgent cell (output 2 cannot receive its most urgent cell in phase 1 because input 1 wants to send a more urgent cell to output 1).
- Output contention: its output receives a more urgent cell (input 2 cannot send its most urgent cell because output 3 wants to receive from input 3).

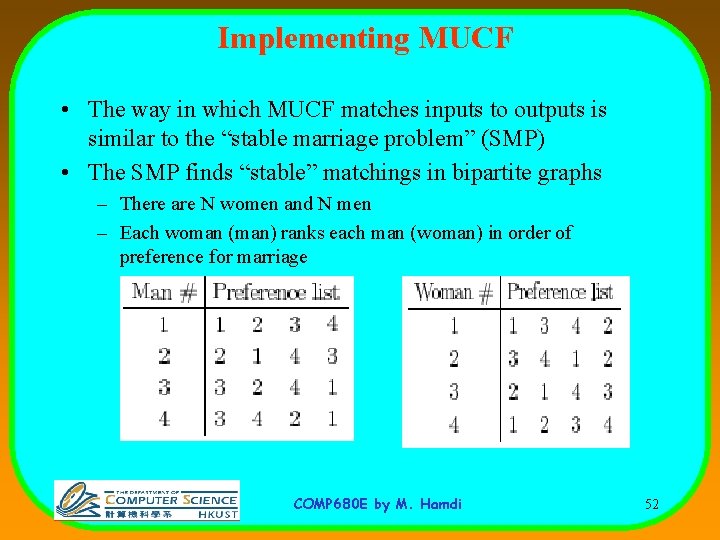

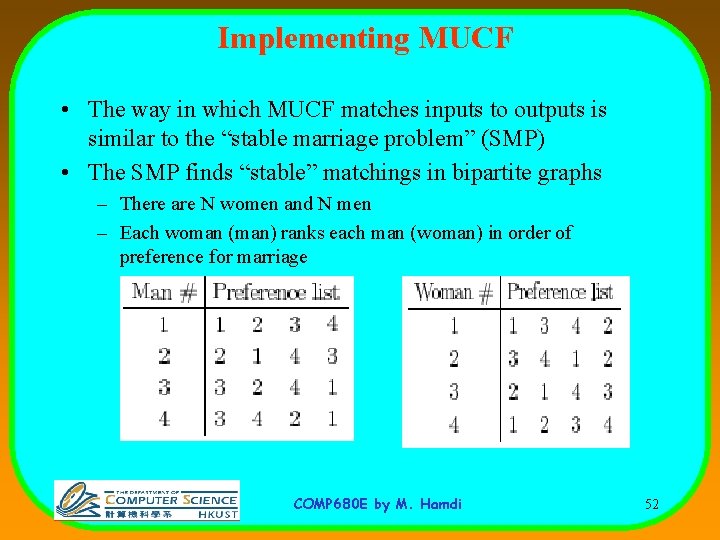

Implementing MUCF

- The way in which MUCF matches inputs to outputs is similar to the "stable marriage problem" (SMP).
- The SMP finds "stable" matchings in bipartite graphs.
  - There are N women and N men.
  - Each woman (man) ranks each man (woman) in order of preference for marriage.

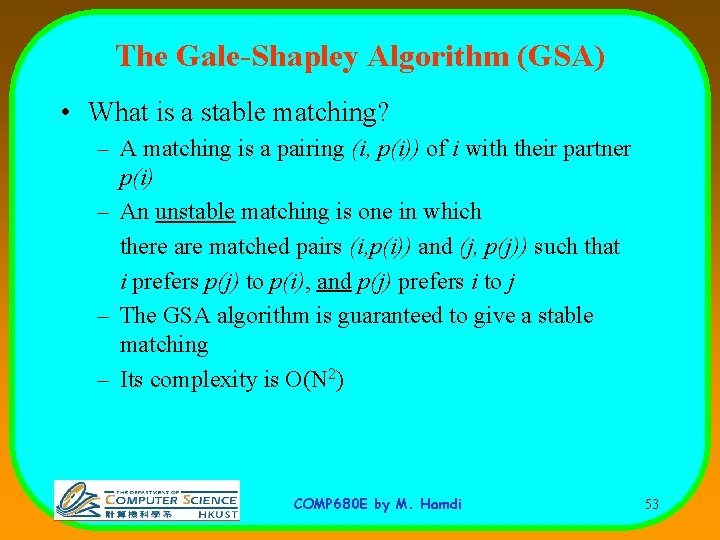

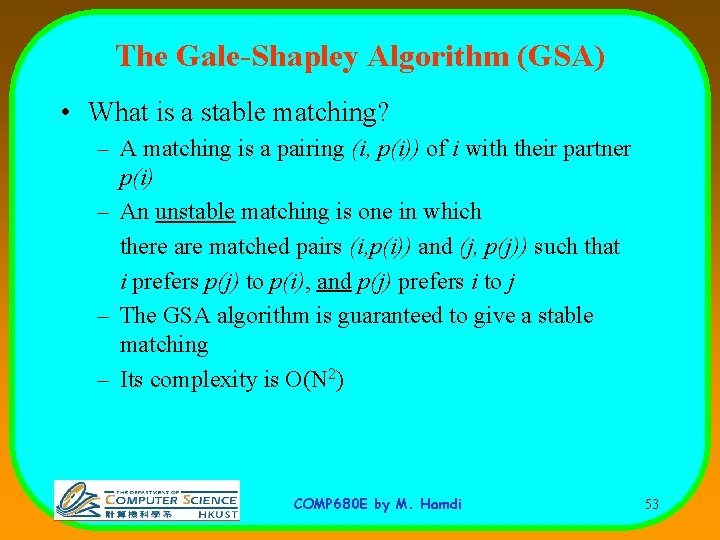

The Gale-Shapley Algorithm (GSA)

- What is a stable matching?
  - A matching is a pairing (i, p(i)) of each i with their partner p(i).
  - An unstable matching is one in which there are matched pairs (i, p(i)) and (j, p(j)) such that i prefers p(j) to p(i), and p(j) prefers i to j.
  - The GSA is guaranteed to give a stable matching.
  - Its complexity is O(N^2).
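For reference, here is a compact sketch of the standard proposer-driven Gale-Shapley procedure, together with a checker for the stability definition given above. The function names and the 0-based indexing are illustrative choices, and complete preference lists of equal size are assumed.

```python
def gale_shapley(proposer_prefs, acceptor_prefs):
    """Return a stable matching as a dict proposer -> acceptor.

    proposer_prefs[p] : acceptors in p's order of preference (best first)
    acceptor_prefs[a] : proposers in a's order of preference (best first)
    """
    n = len(proposer_prefs)
    # rank[a][p] is the position of proposer p in acceptor a's list (lower is better).
    rank = [{p: r for r, p in enumerate(prefs)} for prefs in acceptor_prefs]
    next_choice = [0] * n              # next acceptor each proposer will try
    partner_of_acceptor = [None] * n
    free = list(range(n))
    while free:
        p = free.pop()
        a = proposer_prefs[p][next_choice[p]]
        next_choice[p] += 1
        current = partner_of_acceptor[a]
        if current is None:
            partner_of_acceptor[a] = p
        elif rank[a][p] < rank[a][current]:
            partner_of_acceptor[a] = p  # a trades up; the old partner is free again
            free.append(current)
        else:
            free.append(p)              # rejected; p will try its next choice
    return {p: a for a, p in enumerate(partner_of_acceptor)}


def is_stable(matching, proposer_prefs, acceptor_prefs):
    """Check that no proposer and acceptor prefer each other to their partners."""
    partner_of_acceptor = {a: p for p, a in matching.items()}
    for p, prefs in enumerate(proposer_prefs):
        for a in prefs:
            if a == matching[p]:
                break  # every later acceptor is less preferred than p's partner
            rival = partner_of_acceptor[a]
            if acceptor_prefs[a].index(p) < acceptor_prefs[a].index(rival):
                return False
    return True
```

Each proposer proposes to each acceptor at most once, which gives the O(N^2) bound on the number of proposals.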

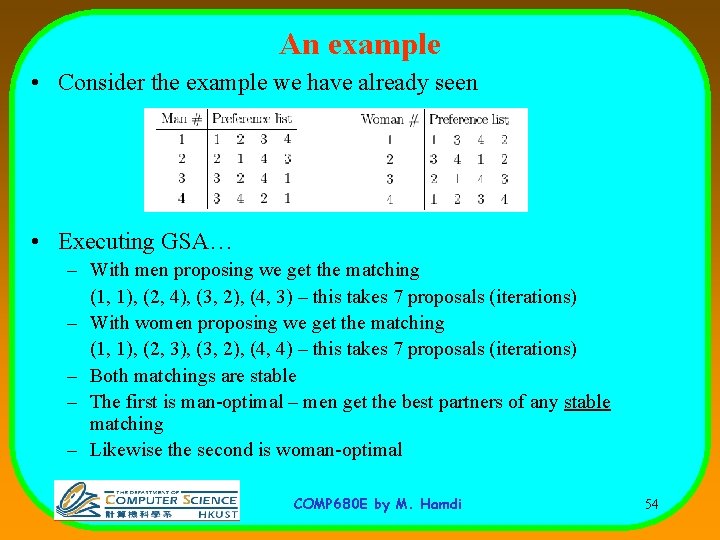

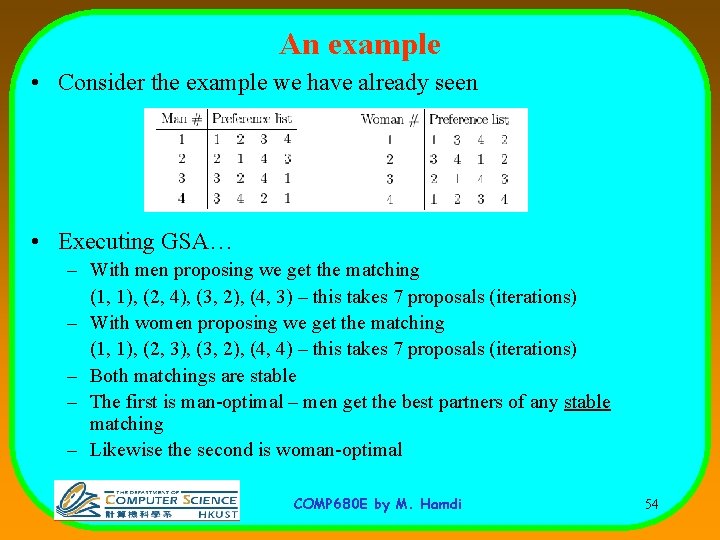

An example

- Consider the example we have already seen.
- Executing GSA...
  - With men proposing, we get the matching (1, 1), (2, 4), (3, 2), (4, 3); this takes 7 proposals (iterations).
  - With women proposing, we get the matching (1, 1), (2, 3), (3, 2), (4, 4); this takes 7 proposals (iterations).
  - Both matchings are stable.
  - The first is man-optimal: men get the best partners of any stable matching.
  - Likewise, the second is woman-optimal.

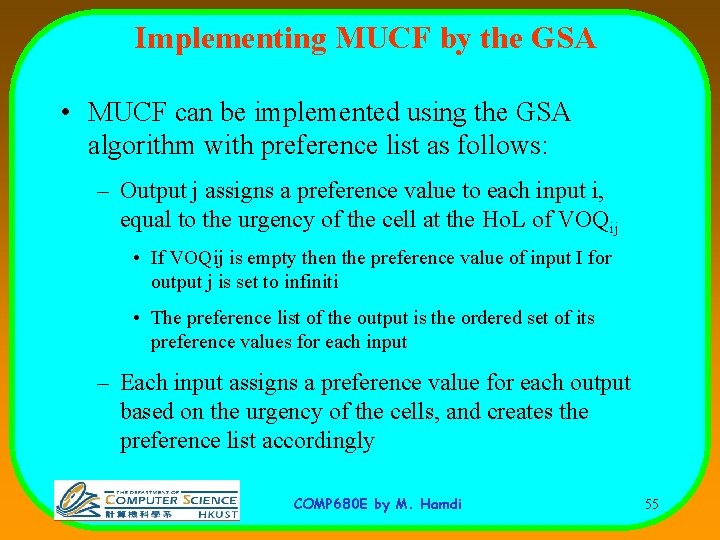

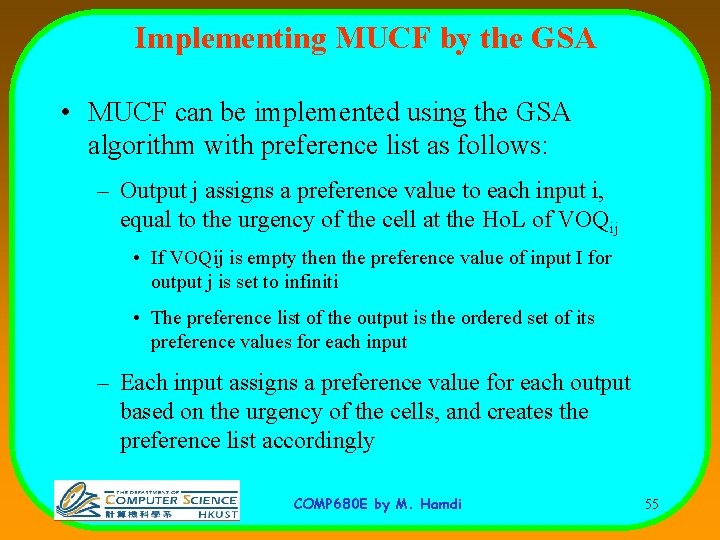

Implementing MUCF by the GSA

- MUCF can be implemented using the GSA with preference lists as follows:
  - Output j assigns a preference value to each input i, equal to the urgency of the cell at the HoL of VOQij.
    - If VOQij is empty, the preference value of input i for output j is set to infinity.
    - The preference list of the output is the ordered set of its preference values for each input.
  - Each input assigns a preference value to each output based on the urgency of the cells, and creates its preference list accordingly.

Theorem

- A CIOQ switch with a speedup of 4 operating under the MUCF algorithm exactly matches cells with a FIFO output-queued switch.
- This is true even for non-FIFO OQ scheduling schemes (e.g., WFQ, strict priority, etc.).
- We can achieve similar results with S = 2.

Implementation: a closer look

Main sources of difficulty:

- Estimating urgency (and communicating this information among inputs and outputs)
- Matching process: too many iterations?

Estimating urgency depends on what is being emulated:

- FIFO, strict priorities: no problem
- WFQ, etc.: problems

Other Work

- Relax the stringent requirement of exact emulation
  - Least Occupied Output First Algorithm (LOOFA)
    - Keeps outputs always busy if there are packets
    - Can provide QoS
- A lot of work has been done in this direction.

Conclusion: we must have a speedup if we want to approach the performance of OQ switches, or provide QoS.

QoS Scheduling Algorithms

QoS Differentiation: Two options

- Stateful (per flow)
  - IETF Integrated Services (IntServ)/RSVP
- Stateless (per class)
  - IETF Differentiated Services (DiffServ)

The Building Blocks (may contain more functions)

- Classifier
- Shaper
- Policer
- Scheduler
- Dropper

QoS Mechanisms

- Admission control: determines whether the flow can/should be allowed to enter the network.
- Packet classification: classifies the data, based on admission control, for the desired treatment through the network.
- Traffic policing: measures the traffic to determine whether it is out of profile. Out-of-profile packets can be dropped or marked differently (so they may be dropped later if needed).
- Traffic shaping: provides some buffering, thereby delaying some of the data, to make sure the traffic fits the profile (it may affect only bursts, or all traffic, to make it resemble constant bit rate).
- Queue management: determines the behavior of data within a queue. Parameters include queue depth and drop policy.
- Queue scheduling: determines how the different queues empty onto the outbound link.

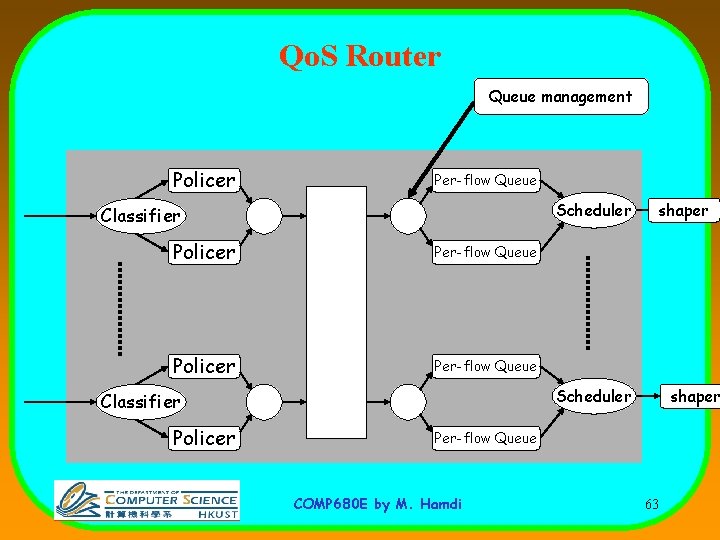

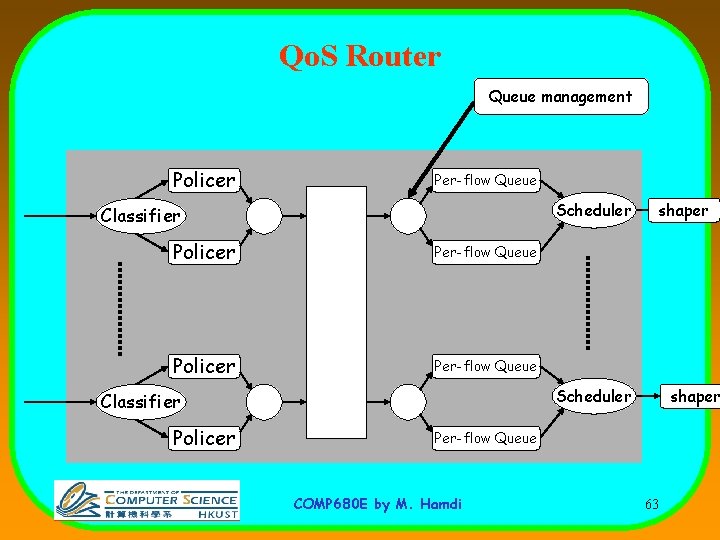

QoS Router (figure: classifier, policers, shaper, per-flow queues with queue management, scheduler)

Queue Scheduling Algorithms

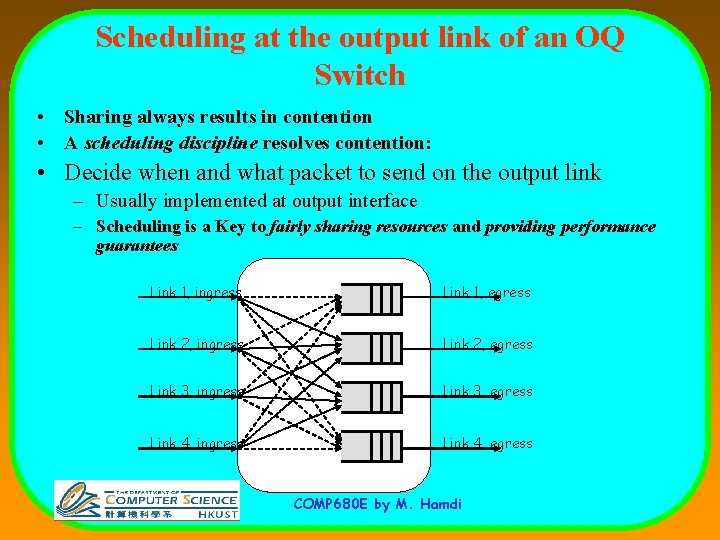

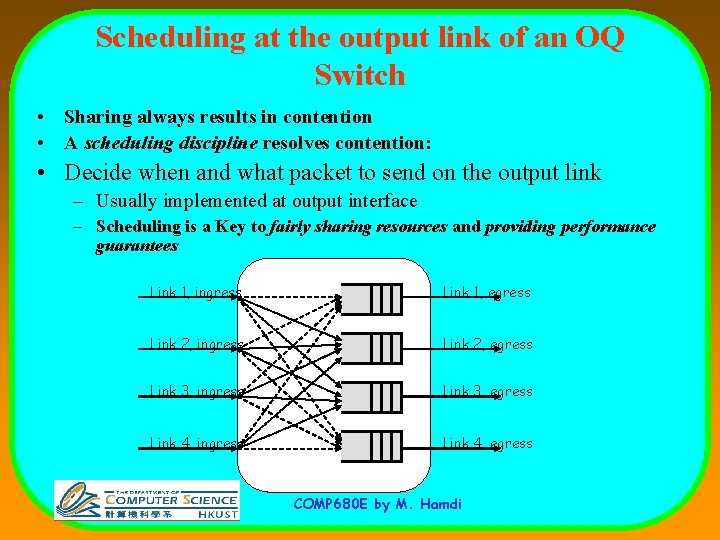

Scheduling at the output link of an OQ Switch

- Sharing always results in contention.
- A scheduling discipline resolves contention: it decides when and what packet to send on the output link.
  - It is usually implemented at the output interface.
  - Scheduling is key to sharing resources fairly and providing performance guarantees.

(figure: switch with links 1 to 4, each with an ingress and an egress side)

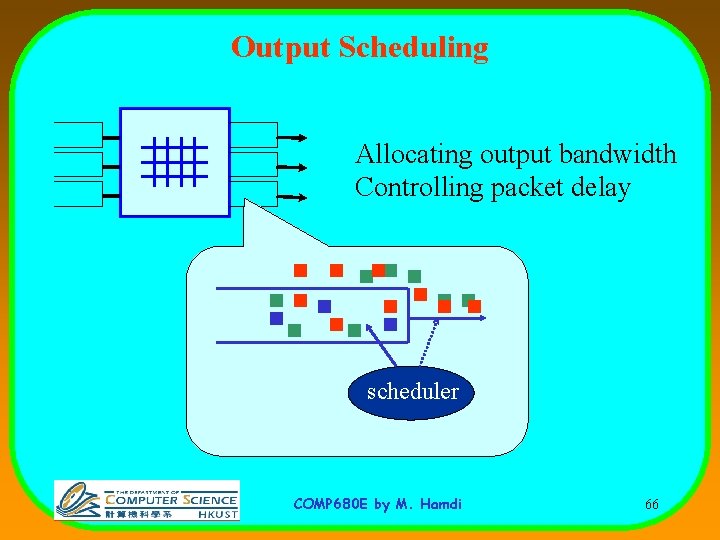

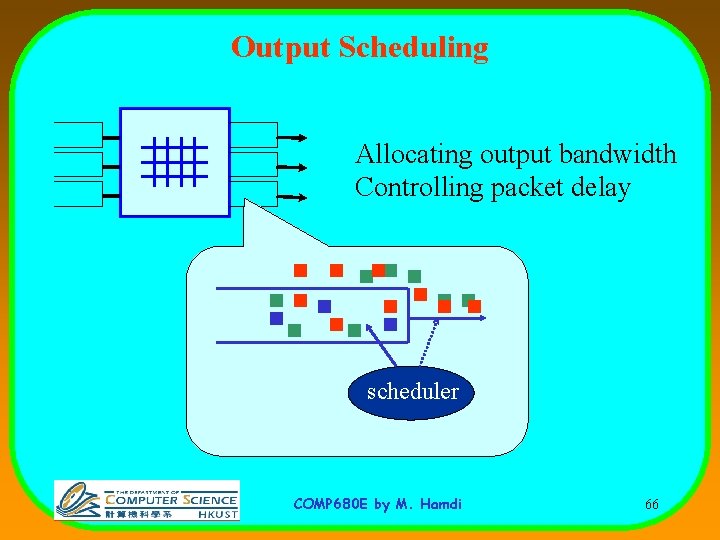

Output Scheduling (figure: a scheduler at the output link)

- Allocating output bandwidth
- Controlling packet delay

Types of Queue Scheduling

- Strict priority: empties the highest-priority non-empty queue first, before servicing lower-priority queues. It can cause starvation of lower-priority queues.
- Round robin: services each queue by emptying a certain amount of data and then going to the next queue in order.
- Weighted fair queuing (WFQ): empties an amount of data from a queue based on the queue's relative weight (driven by reserved bandwidth) before servicing the next queue.
- Earliest deadline first (EDF): determines the latest time a packet must leave to meet its delay requirements and services the queues in that order.

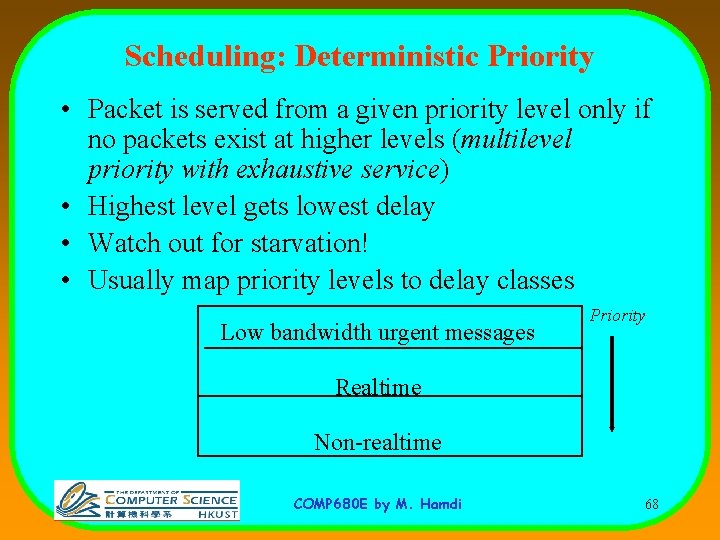

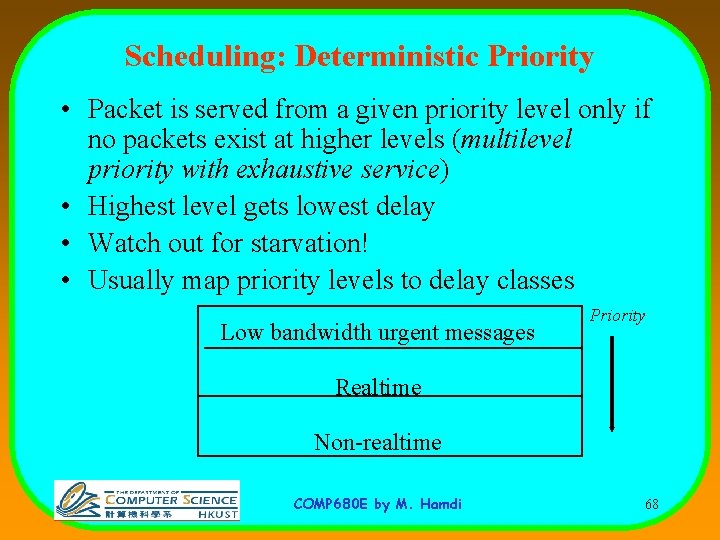

Scheduling: Deterministic Priority

- A packet is served from a given priority level only if no packets exist at higher levels (multilevel priority with exhaustive service).
- The highest level gets the lowest delay.
- Watch out for starvation!
- Priority levels are usually mapped to delay classes (figure: low-bandwidth urgent messages, real-time, non-real-time, ordered by priority).
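A strict-priority dequeue is short enough to sketch directly. The example below is only an illustration with hypothetical names; it assumes the queues are ordered from highest to lowest priority.

```python
from collections import deque


def strict_priority_dequeue(queues):
    """Return the next packet under strict priority, or None if all queues are empty.

    queues : list of deques, with queues[0] the highest priority level
    """
    for q in queues:
        if q:
            return q.popleft()  # a lower level is reached only when all higher ones are empty
    return None
```

Because a lower level is visited only when every higher level is empty, a steady stream of high-priority packets keeps the lower queues waiting indefinitely, which is the starvation risk noted above.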

Scheduling: Work-conserving vs. non-work-conserving

- A work-conserving discipline is never idle when packets await service.
- Why bother with non-work-conserving disciplines? They are sometimes useful, for example to minimize delay jitter.

Scheduling: Requirements

An ideal scheduling discipline

- is easy to implement (preferably in hardware)
- is fair (each connection gets no more than what it wants; the excess, if any, is equally shared)
- provides performance bounds, which can be deterministic or statistical. Common parameters are
  - bandwidth
  - delay-jitter
  - loss
- allows easy admission control decisions, to decide whether a new flow can be allowed (the choice of scheduling discipline affects the ease of the admission control algorithm)

Scheduling: No Classification

(figure: a single FIFO queue, first come first served)

- This is the simplest possible scheme, but we cannot provide any guarantees.
- With FIFO queues, if the depth of the queue is not bounded, there is very little that can be done.
- We can perform preferential dropping.
- We can use other service disciplines on a single queue (e.g., EDF).

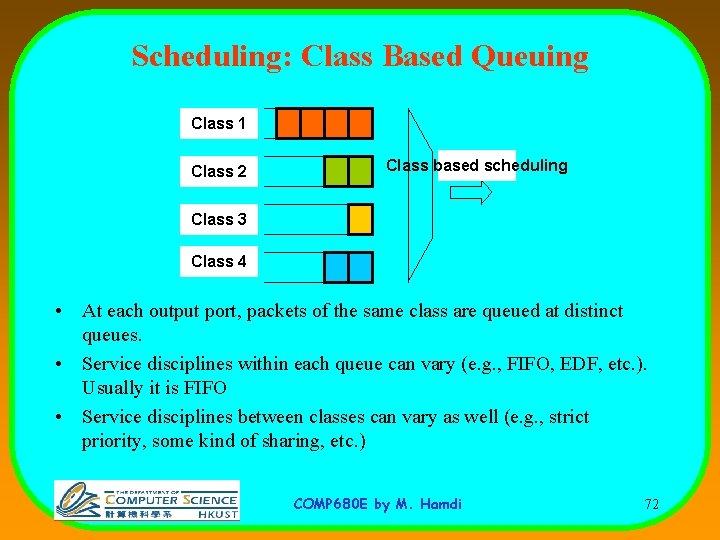

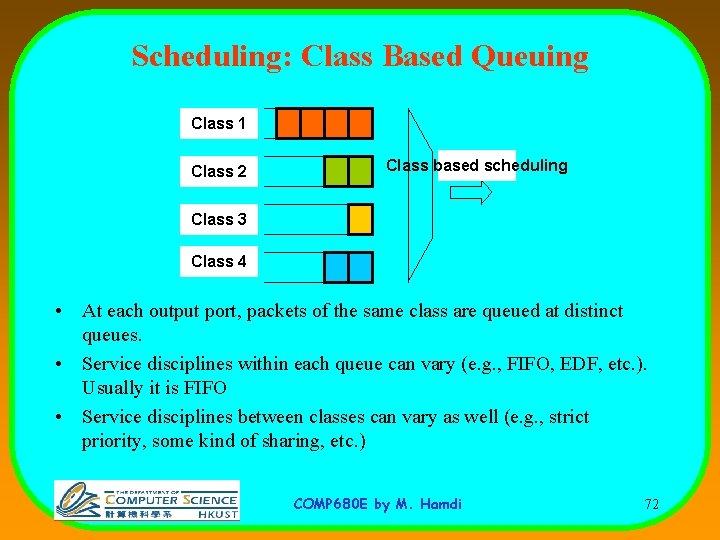

Scheduling: Class Based Queuing

(figure: classes 1 to 4, each with its own queue, feeding a class-based scheduler)

- At each output port, packets of the same class are queued together, with a distinct queue per class.
- Service disciplines within each queue can vary (e.g., FIFO, EDF, etc.). Usually it is FIFO.
- Service disciplines between classes can vary as well (e.g., strict priority, some kind of sharing, etc.).

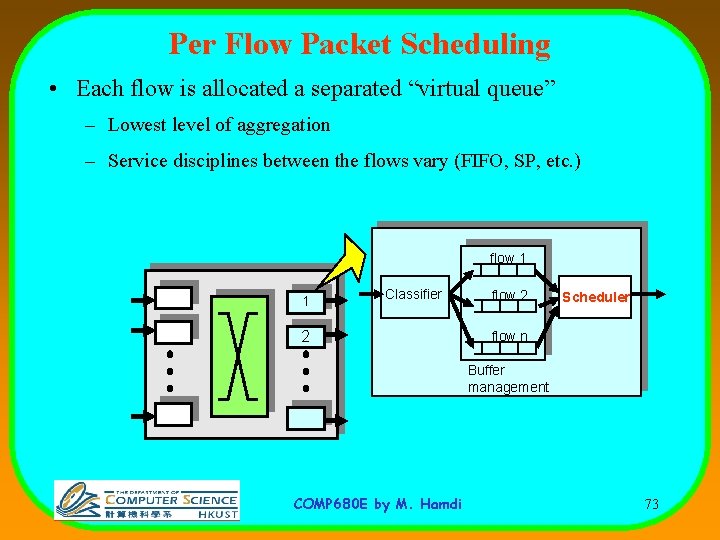

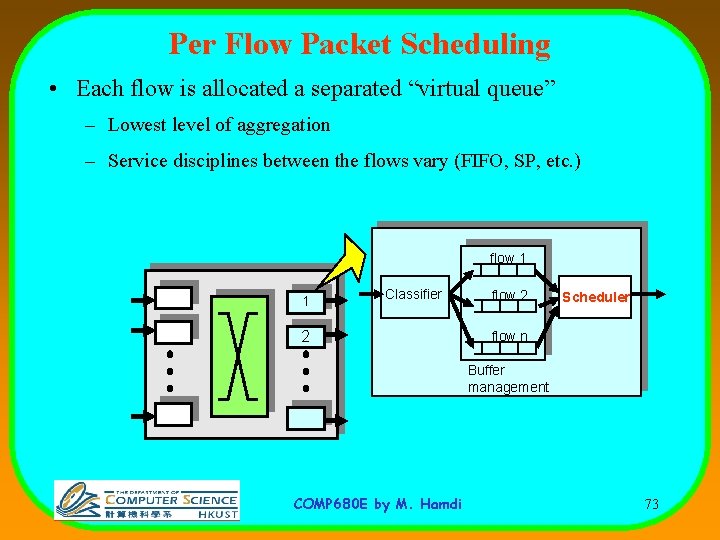

Per Flow Packet Scheduling

- Each flow is allocated a separate "virtual queue".
  - Lowest level of aggregation
  - Service disciplines between the flows vary (FIFO, SP, etc.)

(figure: classifier, per-flow queues for flows 1 to n with buffer management, scheduler)

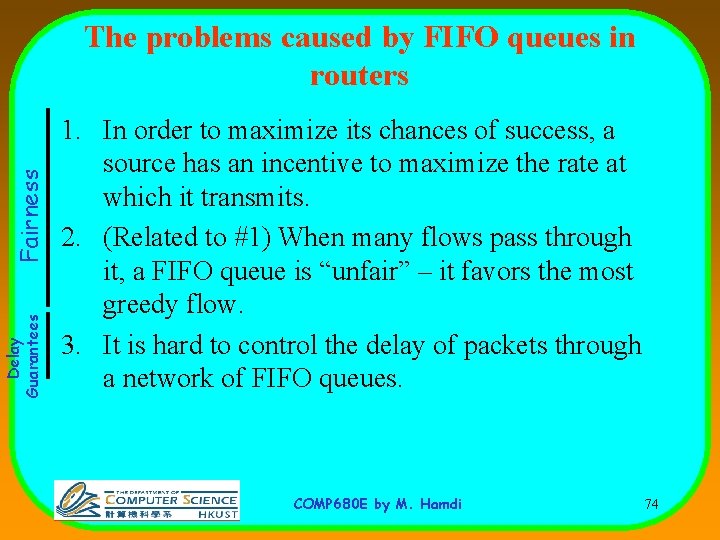

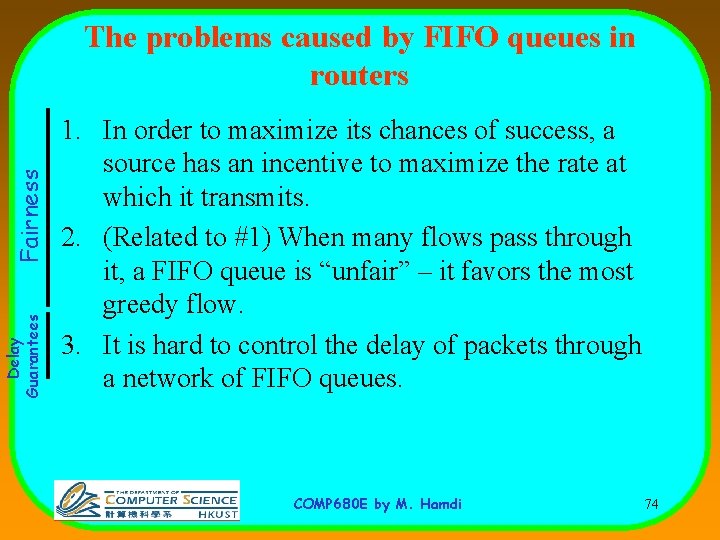

The problems caused by FIFO queues in routers

1. In order to maximize its chances of success, a source has an incentive to maximize the rate at which it transmits.
2. (Related to #1) When many flows pass through it, a FIFO queue is "unfair": it favors the most greedy flow.
3. It is hard to control the delay of packets through a network of FIFO queues.

(side labels on the slide: Delay Guarantees, Fairness)

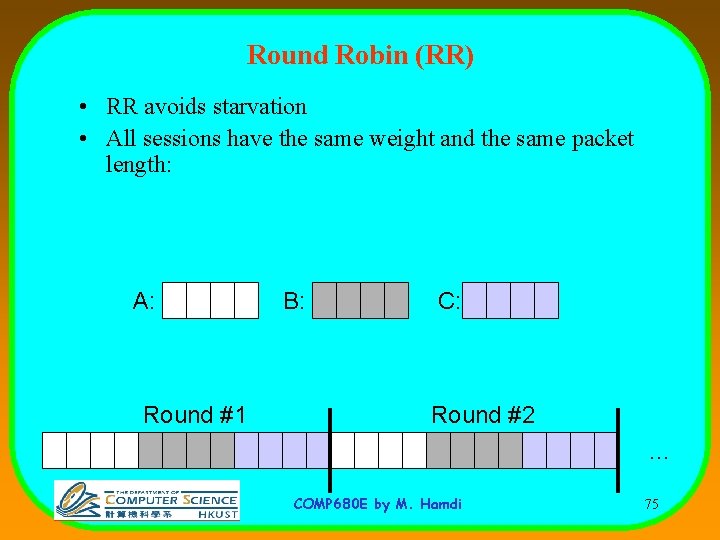

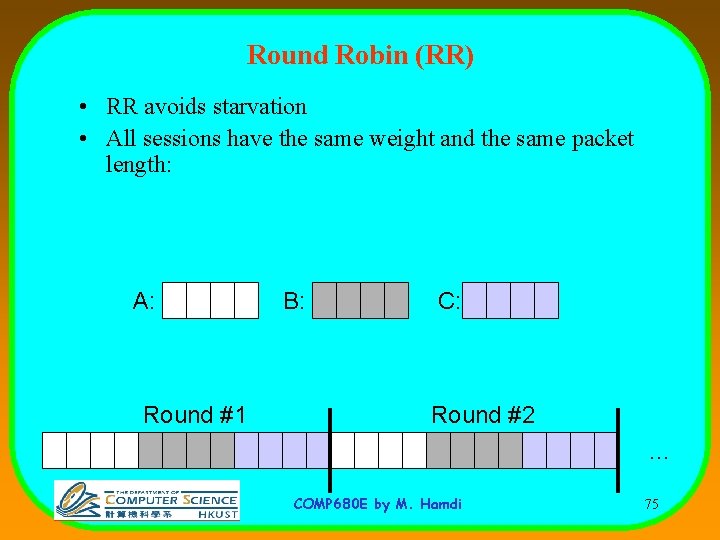

Round Robin (RR)

- RR avoids starvation.
- All sessions have the same weight and the same packet length.

(figure: sessions A, B, C served in round #1, round #2, ...)

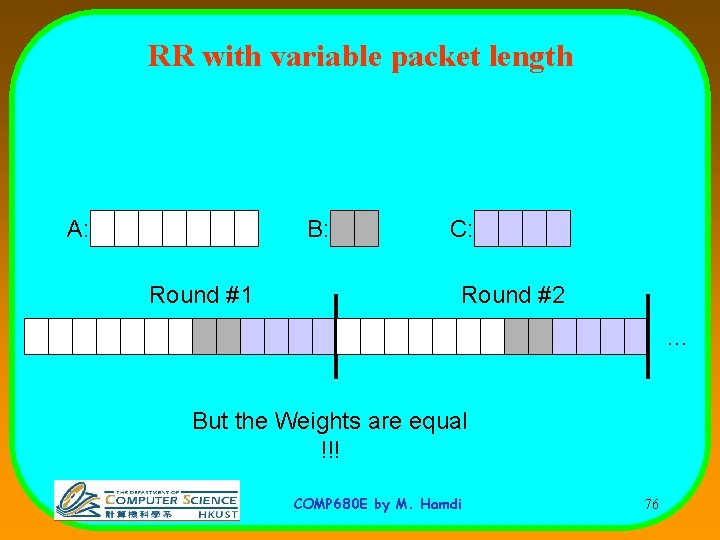

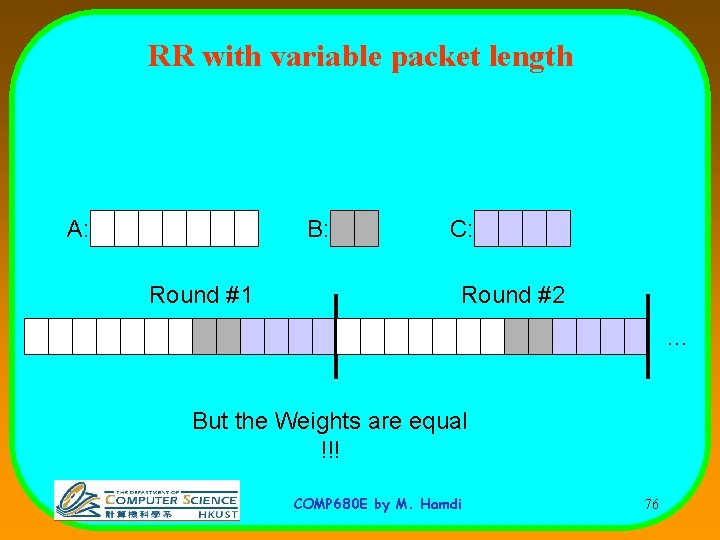

RR with variable packet length

(figure: sessions A, B, C with packets of different lengths, served in round #1, round #2, ...)

But the weights are equal!

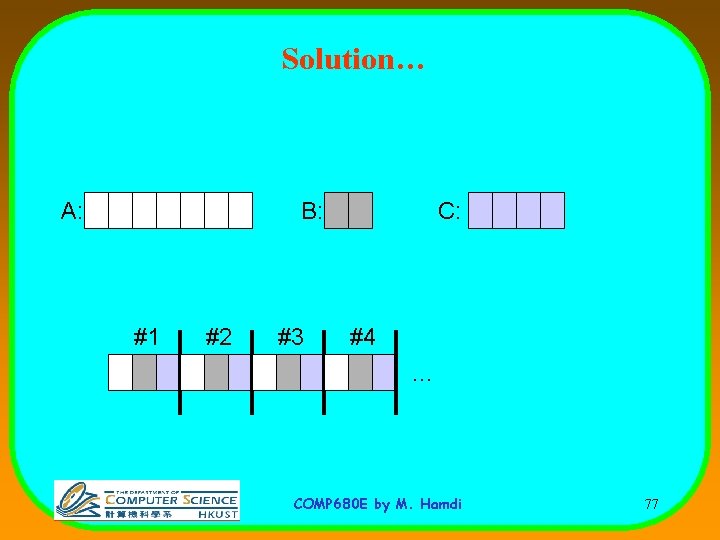

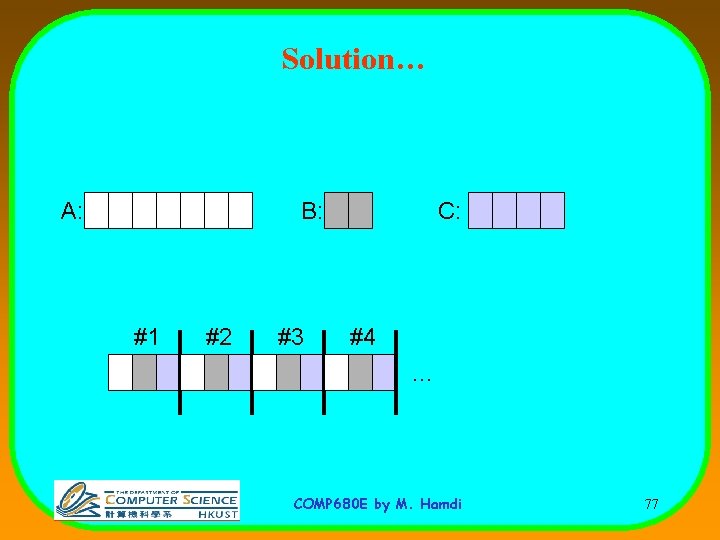

Solution... (figure: sessions A, B, C; service order #1, #2, #3, #4, ...)

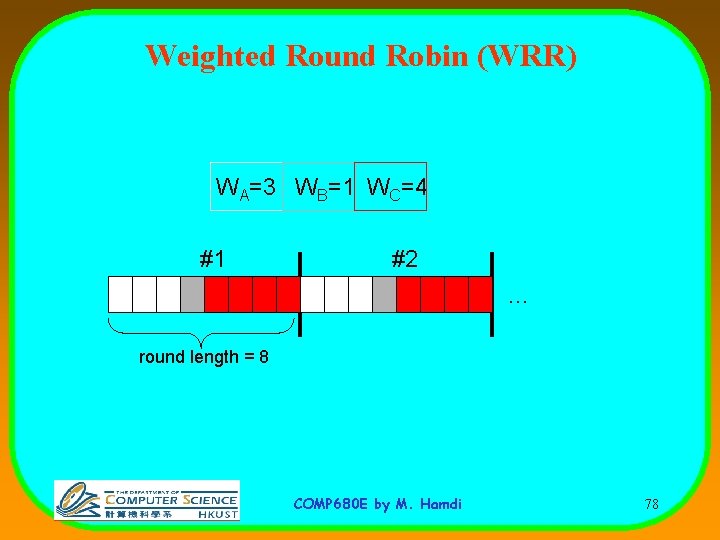

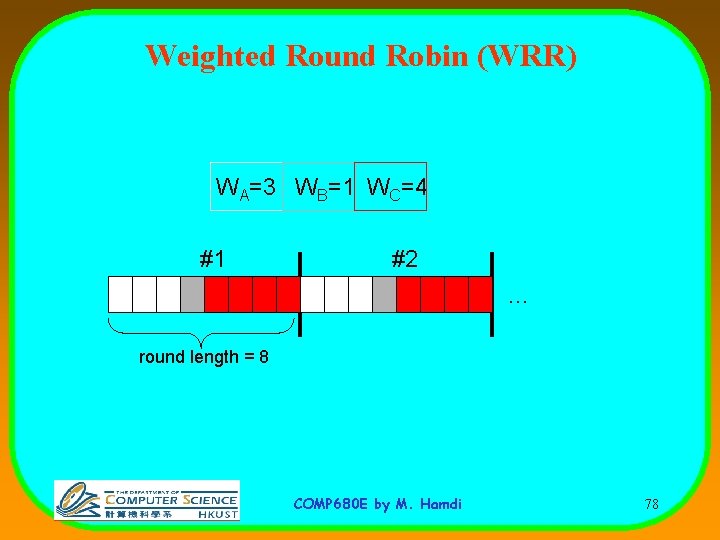

Weighted Round Robin (WRR)

WA = 3, WB = 1, WC = 4; round length = 8 (figure: rounds #1, #2, ...)

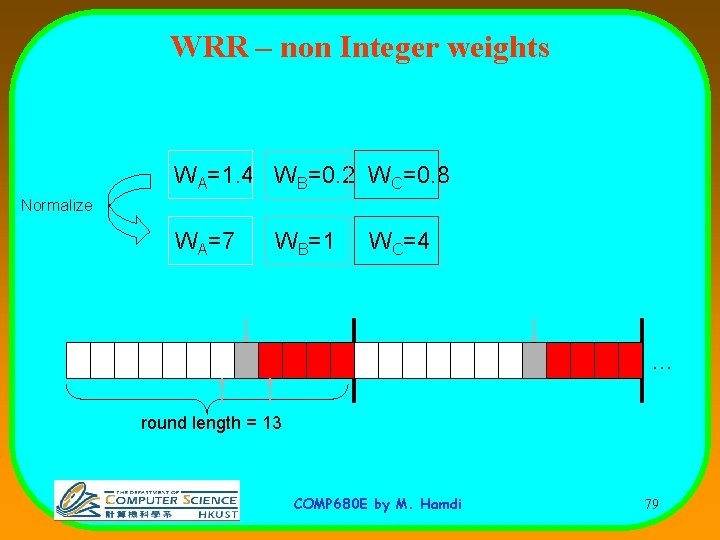

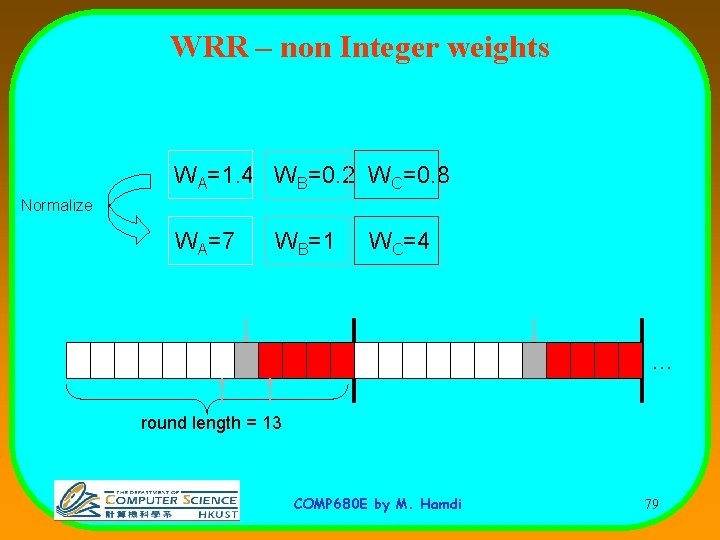

WRR: non-integer weights

WA = 1.4, WB = 0.2, WC = 0.8. Normalizing gives WA = 7, WB = 1, WC = 4; round length = 7 + 1 + 4 = 12.

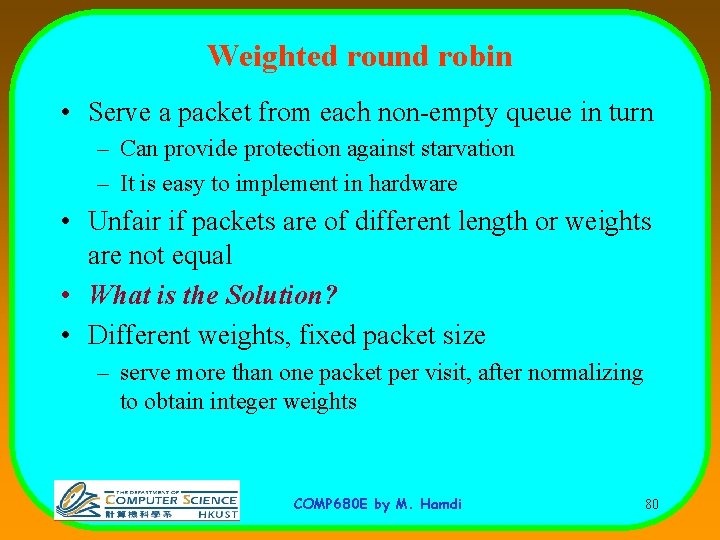

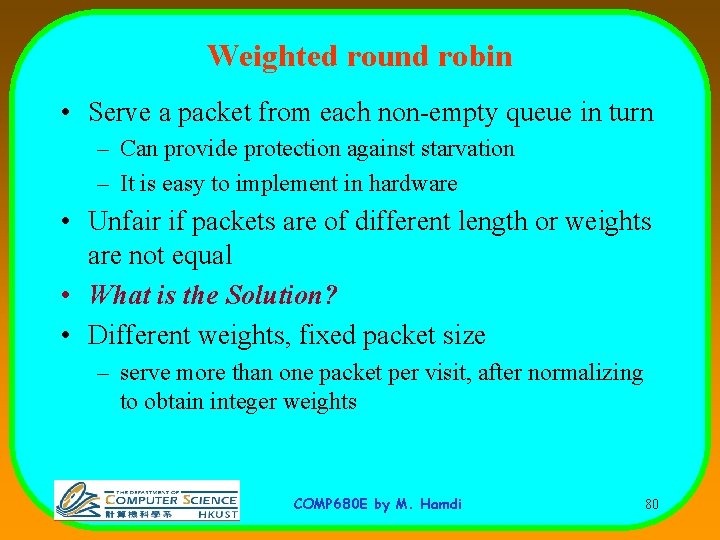

Weighted round robin

- Serve a packet from each non-empty queue in turn.
  - Can provide protection against starvation
  - Easy to implement in hardware
- Unfair if packets are of different lengths or weights are not equal
- What is the solution?
- Different weights, fixed packet size: serve more than one packet per visit, after normalizing to obtain integer weights.
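The "more than one packet per visit" rule can be sketched as follows. It is a minimal model assuming fixed-size packets and integer weights that are already normalized; the function name `wrr_round` is hypothetical.

```python
from collections import deque


def wrr_round(queues, weights):
    """Serve one weighted round-robin round and return the packets in service order.

    queues  : list of deques of packets, one per session
    weights : integer weights; session k may send up to weights[k] packets per visit
    """
    served = []
    for q, w in zip(queues, weights):
        for _ in range(w):
            if not q:
                break  # an empty queue gives up the rest of its visit
            served.append(q.popleft())
    return served
```

With weights 3, 1, 4 and enough backlog, one round serves 8 packets, matching the round length of the WA = 3, WB = 1, WC = 4 example.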

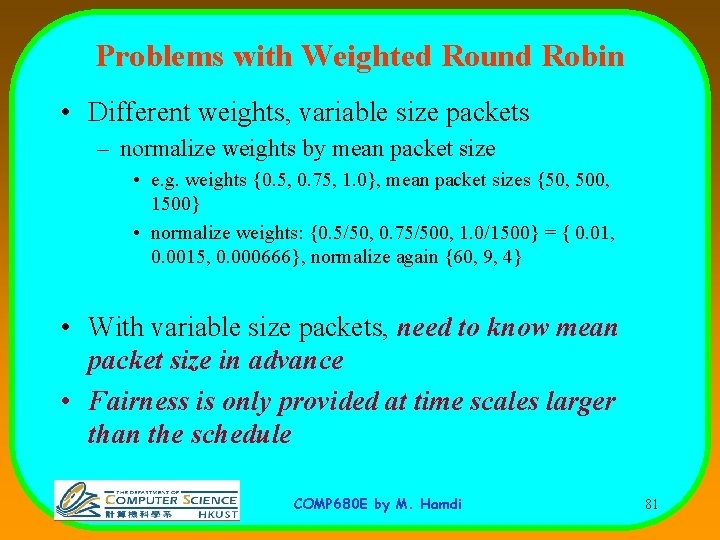

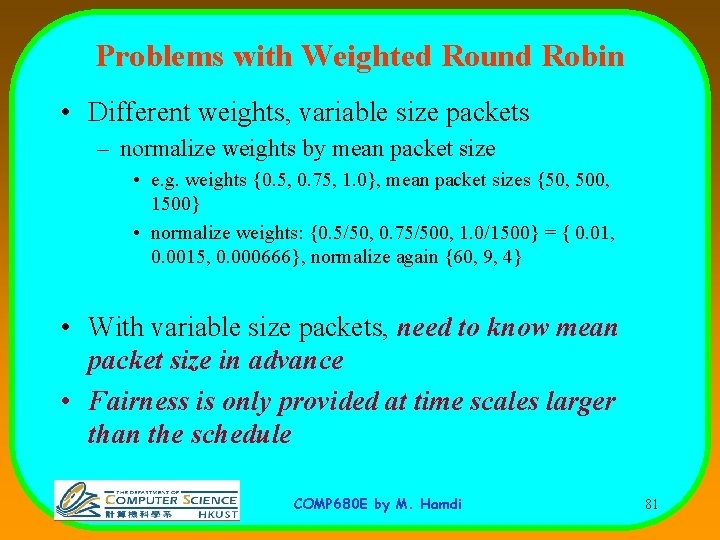

Problems with Weighted Round Robin

- Different weights, variable-size packets: normalize weights by mean packet size.
  - e.g., weights {0.5, 0.75, 1.0}, mean packet sizes {50, 500, 1500}
  - Normalized weights: {0.5/50, 0.75/500, 1.0/1500} = {0.01, 0.0015, 0.000666}; normalize again to integers: {60, 9, 4}
- With variable-size packets, we need to know the mean packet size in advance.
- Fairness is only provided at time scales larger than the schedule.
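The two normalization steps can be checked with exact rational arithmetic. The sketch below is one possible way to do it: it divides each weight by its mean packet size, then scales the ratios to the smallest integers in the same proportion. The function name `packets_per_round` is hypothetical, and decimal inputs are converted through their string form to avoid binary floating-point artifacts.

```python
from fractions import Fraction
from functools import reduce
from math import gcd


def lcm(a, b):
    """Least common multiple of two positive integers."""
    return a * b // gcd(a, b)


def packets_per_round(weights, mean_sizes):
    """Return integer per-round packet counts proportional to weight / mean packet size."""
    ratios = [Fraction(str(w)) / Fraction(str(s)) for w, s in zip(weights, mean_sizes)]
    # Multiply by the common denominator to clear all fractions.
    common = reduce(lcm, (r.denominator for r in ratios))
    counts = [int(r * common) for r in ratios]
    # Divide out any common factor so the round is as short as possible.
    divisor = reduce(gcd, counts)
    return [c // divisor for c in counts]
```

Calling `packets_per_round([0.5, 0.75, 1.0], [50, 500, 1500])` reproduces the {60, 9, 4} schedule from the example above, a round of 73 packets, which shows how quickly the schedule (and the time scale over which fairness holds) can grow.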

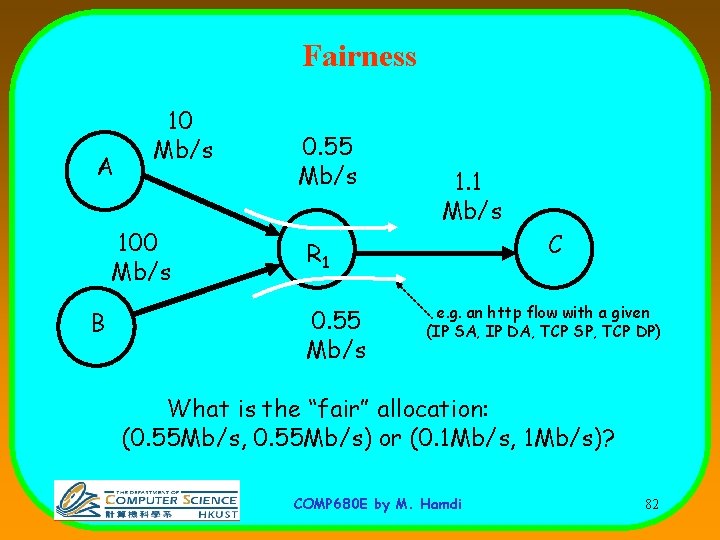

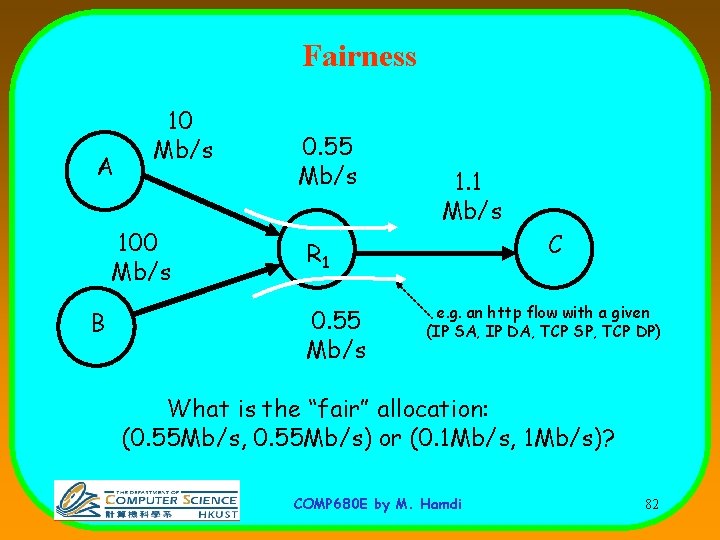

Fairness

(figure: hosts A and B, with access links of 10 Mb/s and 100 Mb/s, send through router R1 over a 1.1 Mb/s link to C; a flow is, e.g., an http flow with a given (IP SA, IP DA, TCP SP, TCP DP))

What is the "fair" allocation: (0.55 Mb/s, 0.55 Mb/s) or (0.1 Mb/s, 1 Mb/s)?

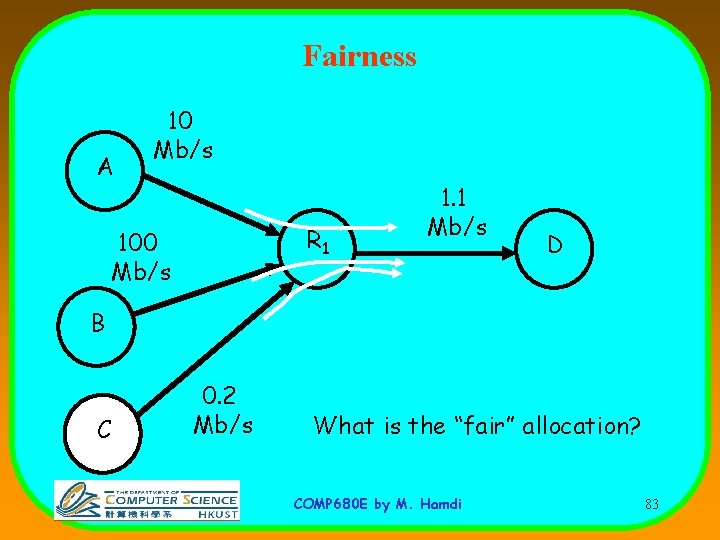

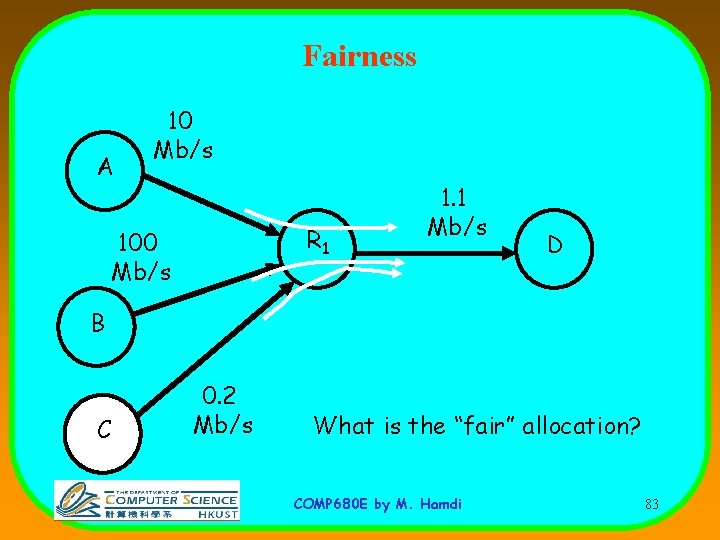

Fairness

(figure: hosts A, B, C, D and router R1, with links of 10 Mb/s, 100 Mb/s, 1.1 Mb/s, and 0.2 Mb/s)

What is the "fair" allocation?

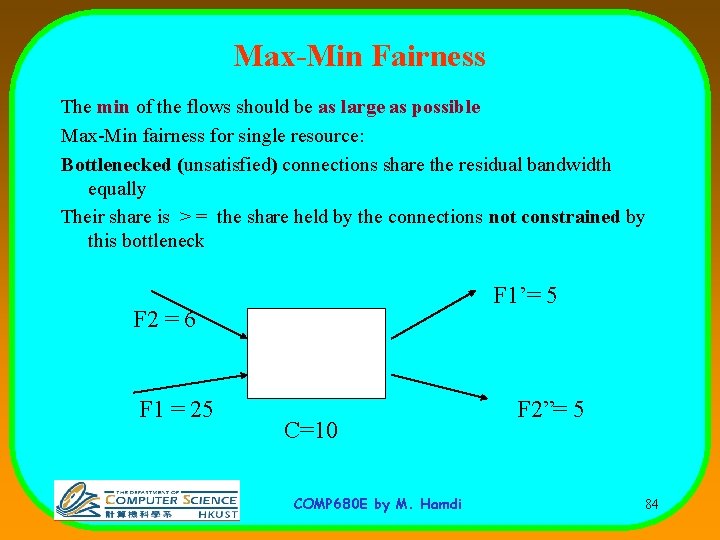

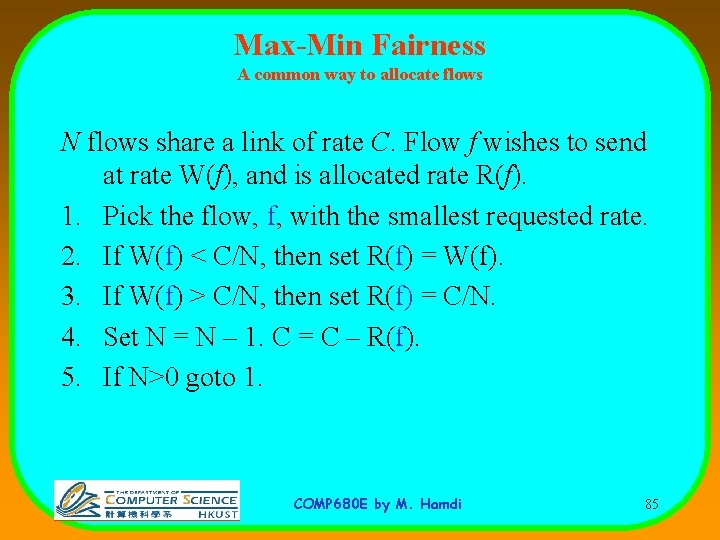

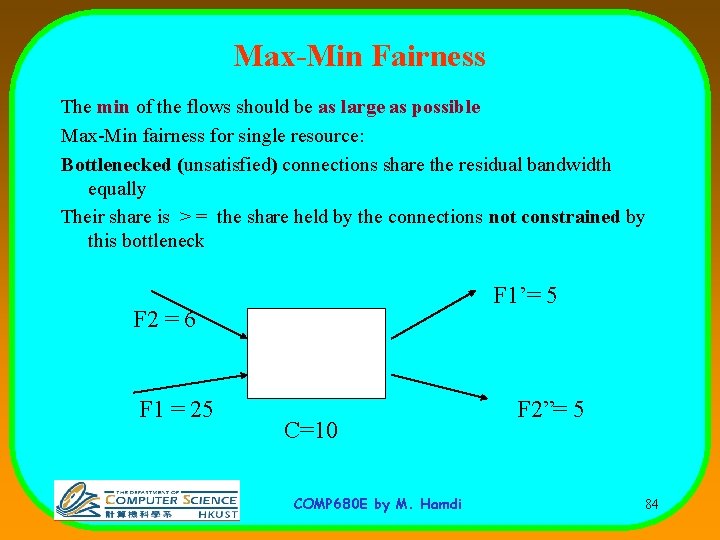

Max-Min Fairness

The minimum of the flows should be as large as possible.

Max-min fairness for a single resource:

- Bottlenecked (unsatisfied) connections share the residual bandwidth equally.
- Their share is >= the share held by the connections not constrained by this bottleneck.

(figure: link of capacity C = 10; demands F1 = 25 and F2 = 6 are each allocated 5)

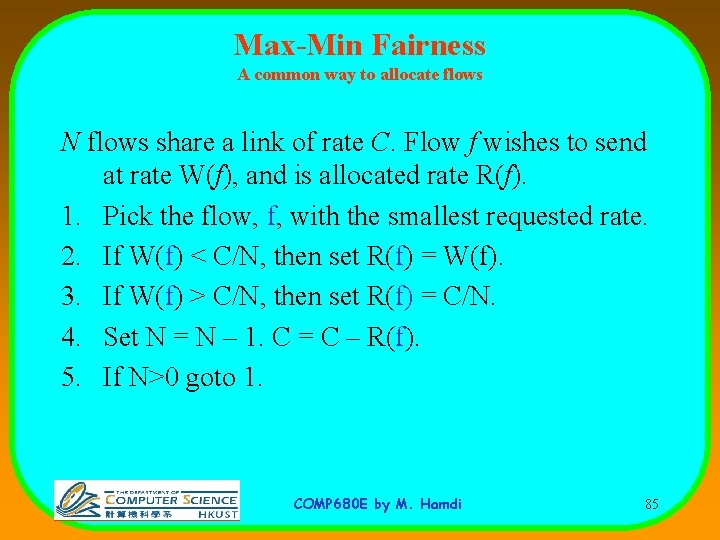

Max-Min Fairness: a common way to allocate flows

N flows share a link of rate C. Flow f wishes to send at rate W(f), and is allocated rate R(f).

1. Pick the remaining flow, f, with the smallest requested rate.
2. If W(f) <= C/N, then set R(f) = W(f).
3. If W(f) > C/N, then set R(f) = C/N.
4. Set N = N - 1 and C = C - R(f).
5. If N > 0, go to 1.

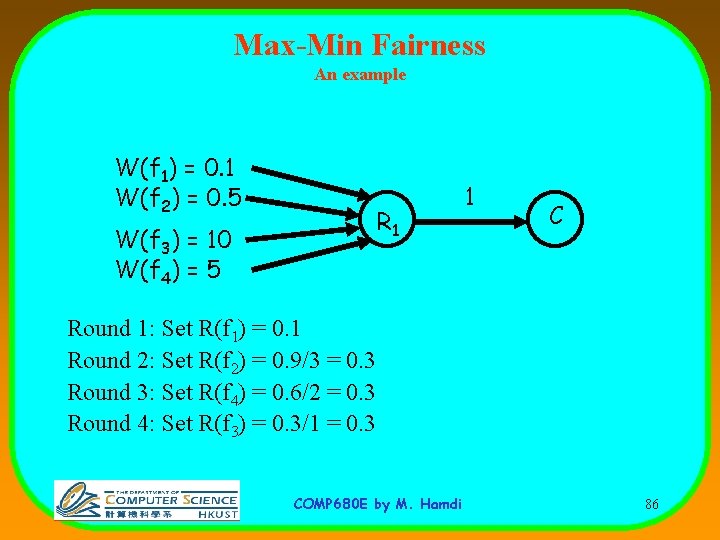

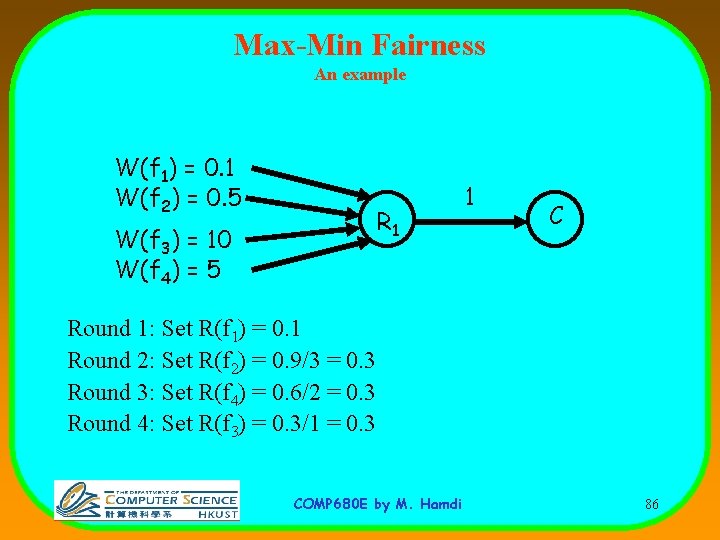

Max-Min Fairness: an example

Four flows share a link of rate C = 1 at router R1, with W(f1) = 0.1, W(f2) = 0.5, W(f3) = 10, W(f4) = 5.

- Round 1: set R(f1) = 0.1
- Round 2: set R(f2) = 0.9/3 = 0.3
- Round 3: set R(f4) = 0.6/2 = 0.3
- Round 4: set R(f3) = 0.3/1 = 0.3
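The allocation procedure is easy to express in code, and the four-flow example makes a convenient check. The sketch below is an illustrative implementation with hypothetical names; it visits flows in order of increasing request, which is equivalent to repeatedly picking the remaining flow with the smallest requested rate.

```python
def max_min_allocate(requests, capacity):
    """Return max-min fair rates for flows sharing a single link.

    requests : dict mapping flow name -> requested rate W(f)
    capacity : link rate C
    """
    remaining = capacity
    n = len(requests)
    allocation = {}
    # Smallest request first: each flow gets its request or an equal share of what is left.
    for flow, want in sorted(requests.items(), key=lambda kv: kv[1]):
        fair_share = remaining / n
        rate = want if want <= fair_share else fair_share
        allocation[flow] = rate
        remaining -= rate
        n -= 1
    return allocation
```

For requests of 0.1, 0.5, 10, and 5 on a link of rate 1, this yields 0.1 for f1 and approximately 0.3 for each of the others (up to floating-point rounding), matching the rounds listed above.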

Max-Min Fairness

- How can an Internet router "allocate" different rates to different flows?
- First, let's see how a router can allocate the "same" rate to different flows...

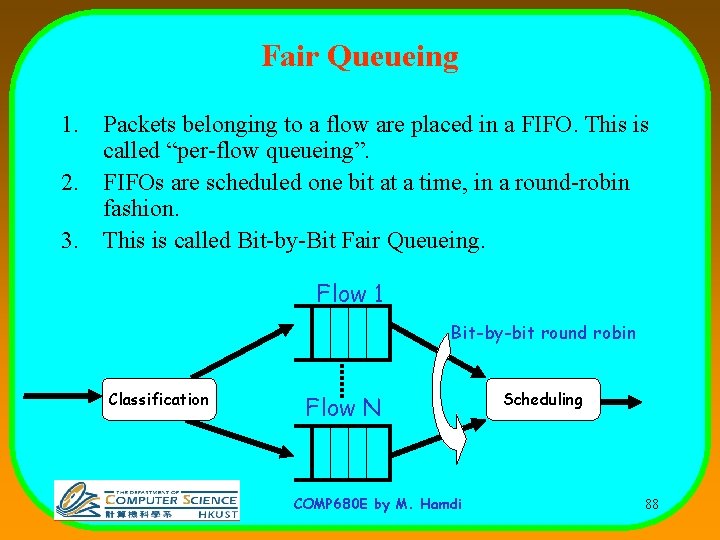

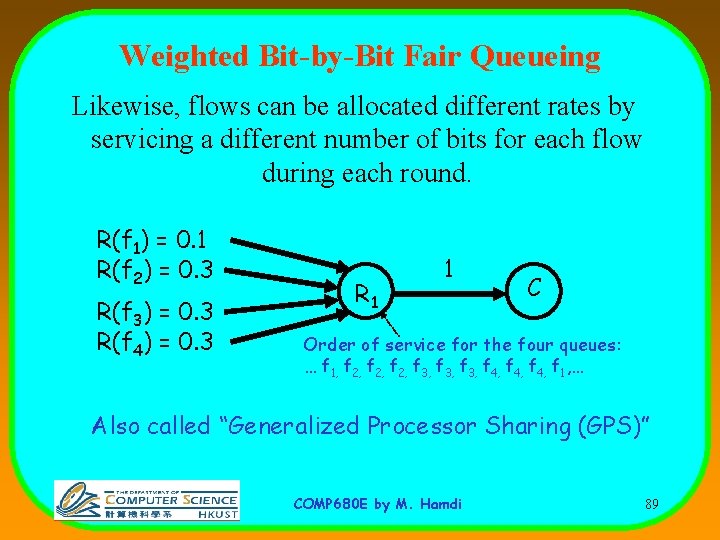

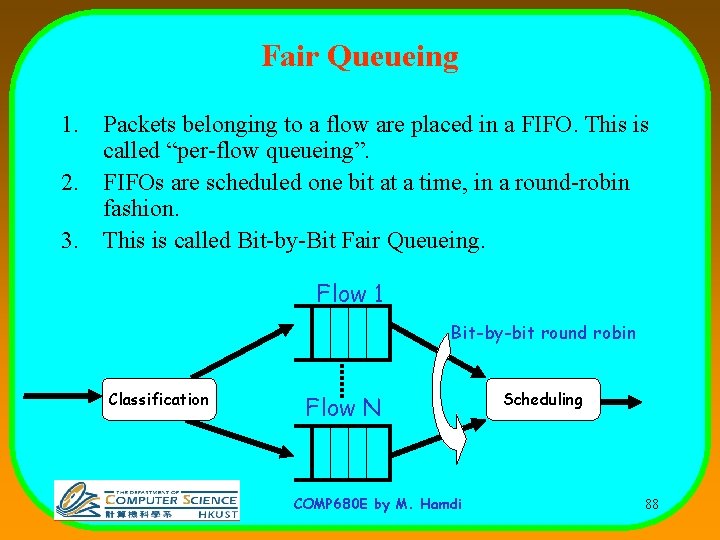

Fair Queueing

1. Packets belonging to a flow are placed in a FIFO. This is called "per-flow queueing".
2. FIFOs are scheduled one bit at a time, in a round-robin fashion.
3. This is called bit-by-bit fair queueing.

(figure: classification into per-flow FIFOs, flow 1 to flow N, followed by bit-by-bit round-robin scheduling)

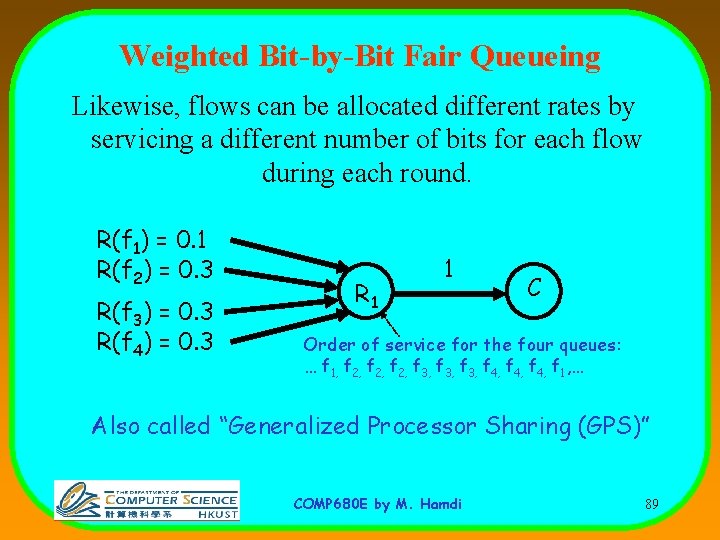

Weighted Bit-by-Bit Fair Queueing

Likewise, flows can be allocated different rates by servicing a different number of bits for each flow during each round.

(figure: link of rate C = 1 at router R1, with R(f1) = 0.1, R(f2) = 0.3, R(f3) = 0.3, R(f4) = 0.3)

Order of service for the four queues: ... f1, f2, f3, f4, f1, ...

Also called "Generalized Processor Sharing (GPS)".

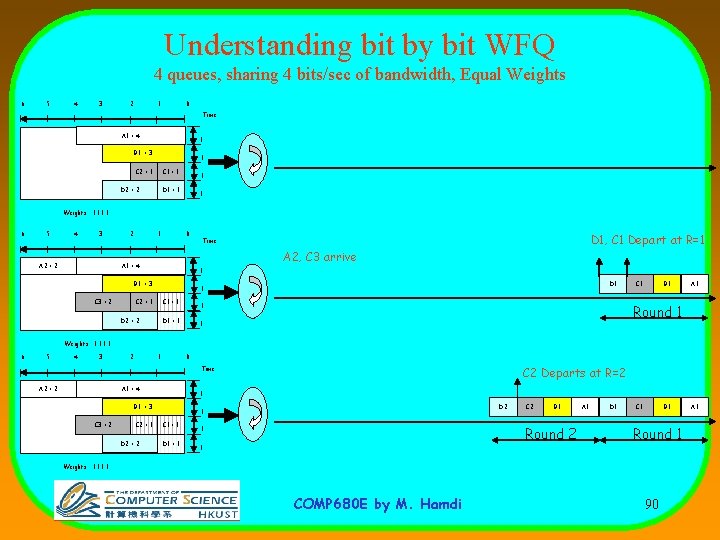

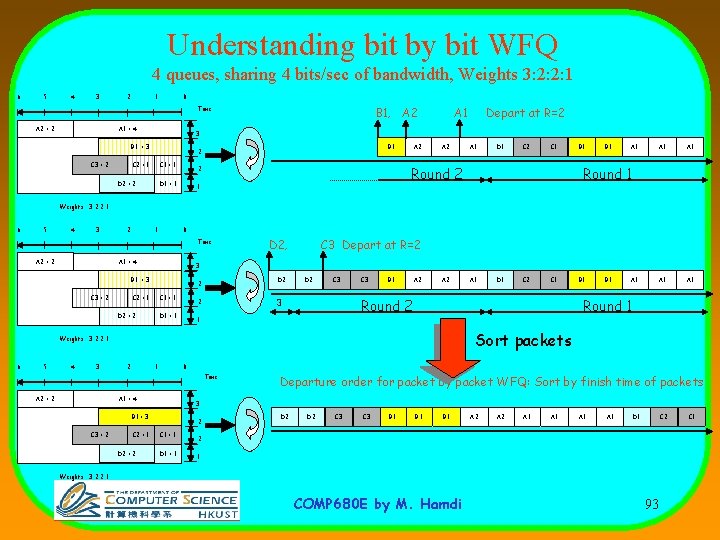

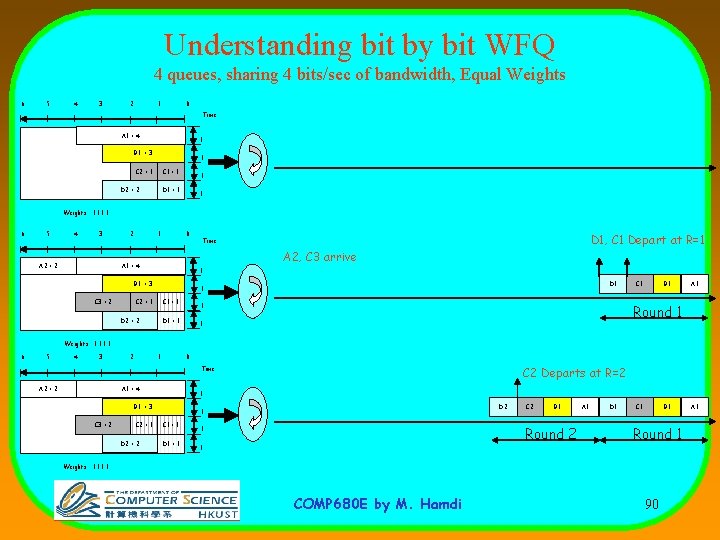

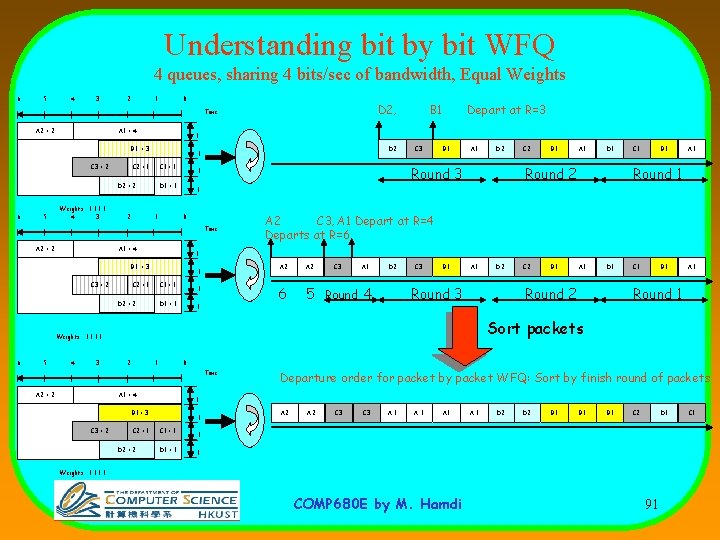

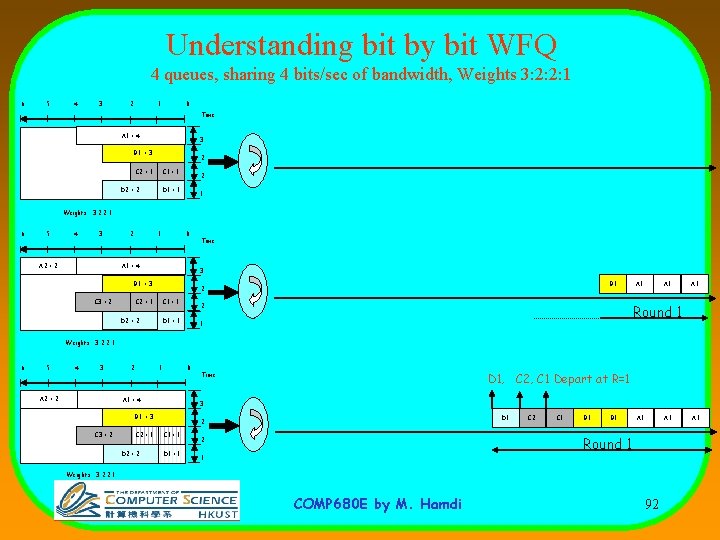

Understanding bit by bit WFQ

4 queues sharing 4 bits/sec of bandwidth, equal weights (1:1:1:1).

(figure, three panels: queued packets A1 = 4, B1 = 3, C1 = 1, C2 = 1, D1 = 1, D2 = 2 bits; A2 = 2 and C3 = 2 arrive; D1 and C1 depart at R = 1; C2 departs at R = 2)

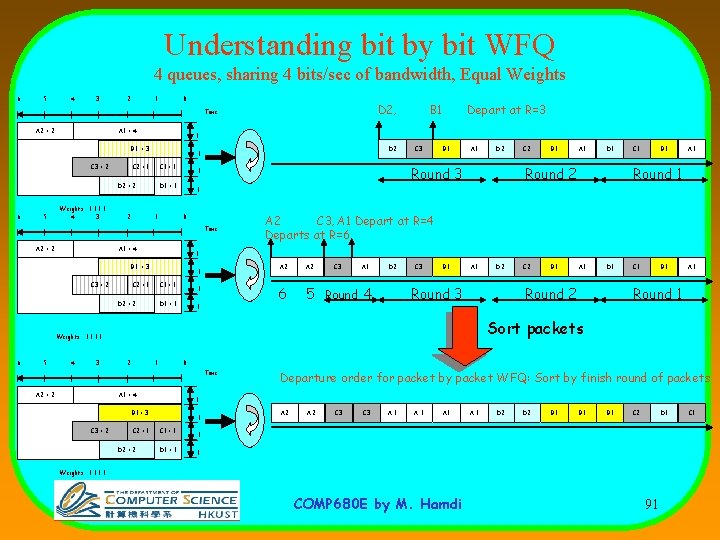

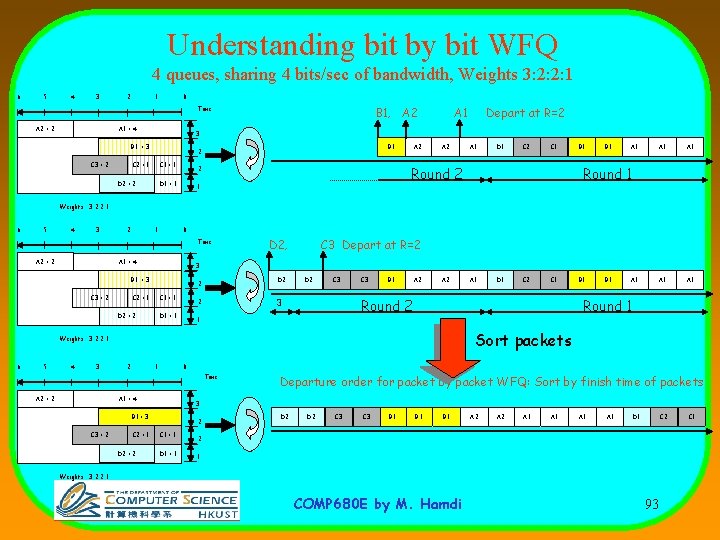

Understanding bit by bit WFQ

4 queues sharing 4 bits/sec of bandwidth, equal weights (1:1:1:1).

(figure, continued: D2 and B1 depart at R = 3; C3 and A1 depart at R = 4; A2 departs at R = 6)

Departure order for packet-by-packet WFQ: sort packets by their finish round.

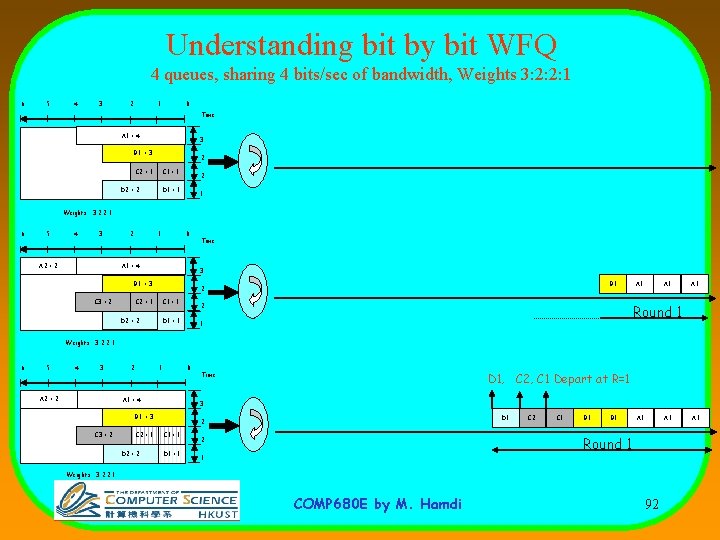

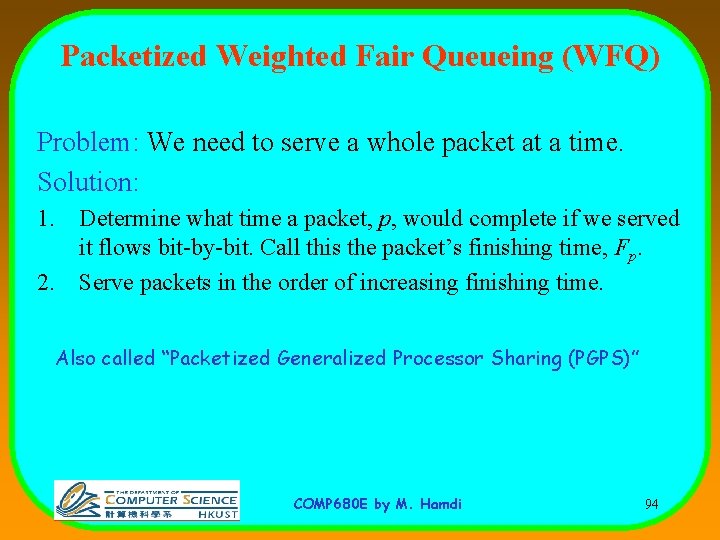

Understanding bit by bit WFQ

4 queues, sharing 4 bits/sec of bandwidth, weights 3:2:2:1.

[Figure, three panels. Queue contents (packet sizes in bits) and weights: A1 = 4 (weight 3); B1 = 3 (weight 2); C1 = 1, C2 = 1 (weight 2); D1 = 1, D2 = 2 (weight 1). Panel 1: initial state. Panel 2: round 1 begins; A2 = 2 and C3 = 2 arrive. Panel 3: D1, C2 and C1 depart at R=1.]

Understanding bit by bit WFQ

4 queues, sharing 4 bits/sec of bandwidth, weights 3:2:2:1.

[Figure, three panels, continuing the weighted example. Panel 1: B1 and A2 are labeled as departing at R=2. Panel 2: D2 and C3 are labeled as departing at R=2. Final panel: the departure order for packet-by-packet WFQ is obtained by sorting packets by their finish time.]

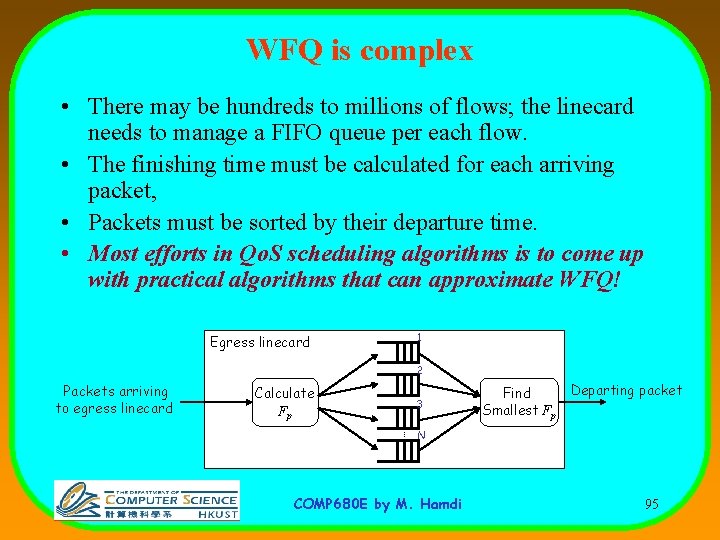

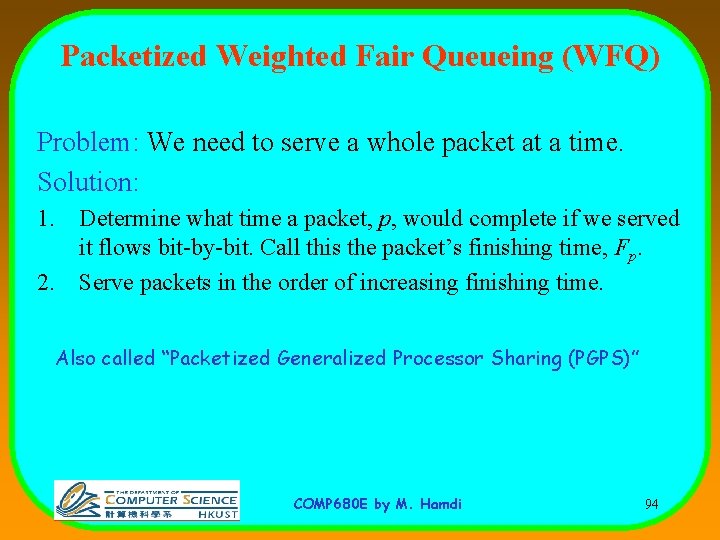

Packetized Weighted Fair Queueing (WFQ)

Problem: We need to serve a whole packet at a time.

Solution:
1. Determine what time a packet, p, would complete if we served flows bit-by-bit. Call this the packet’s finishing time, Fp.
2. Serve packets in the order of increasing finishing time.

Also called “Packetized Generalized Processor Sharing (PGPS)”.
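The two steps can be sketched with a priority queue keyed on Fp. This is a simplified illustration: it assumes all packets are already queued at time 0, so Fp for a packet is the previous Fp in the same flow plus size divided by weight. A full implementation would also track the bit-by-bit round number at each arrival. The flow names, packet names and sizes are hypothetical.

```python
import heapq


def pgps_order(flows, weights):
    """Serve whole packets in order of increasing finishing number Fp.

    flows: dict flow -> list of (packet name, size in bits), FIFO order.
    weights: dict flow -> relative weight.
    Assumes every packet is queued at time 0 (no later arrivals).
    """
    heap = []
    seq = 0  # arrival sequence number, used only to break ties on Fp
    for flow, packets in flows.items():
        f_prev = 0.0
        for name, size in packets:
            f_prev += size / weights[flow]  # step 1: compute Fp
            heapq.heappush(heap, (f_prev, seq, name))
            seq += 1
    order = []
    while heap:  # step 2: always send the packet with the smallest Fp
        f_p, _, name = heapq.heappop(heap)
        order.append((name, f_p))
    return order


flows = {
    "x": [("x1", 300), ("x2", 300)],
    "y": [("y1", 100), ("y2", 100), ("y3", 100)],
}
order = pgps_order(flows, {"x": 1, "y": 1})
names = [name for name, _ in order]
```

With equal weights, the short packets of flow `y` are interleaved ahead of the long packets of flow `x` instead of waiting behind them.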

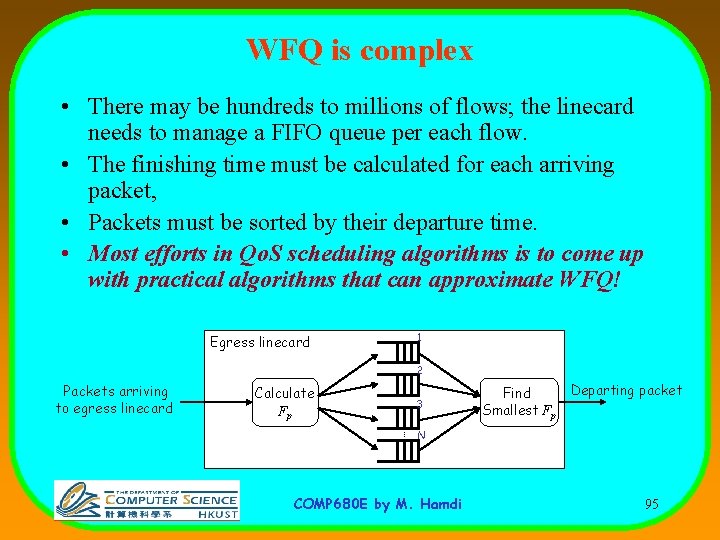

WFQ is complex

• There may be hundreds to millions of flows; the linecard needs to manage a FIFO queue per flow.
• The finishing time must be calculated for each arriving packet.
• Packets must be sorted by their departure time.
• Most effort in QoS scheduling algorithms goes into finding practical algorithms that approximate WFQ.

[Figure: egress linecard with queues 1 to N. For packets arriving at the egress linecard, calculate Fp, then find the smallest Fp to select the departing packet.]

When can we Guarantee Delays?

• Theorem: If flows are leaky bucket constrained and all nodes employ GPS (WFQ), then the network can guarantee worst-case delay bounds to sessions.

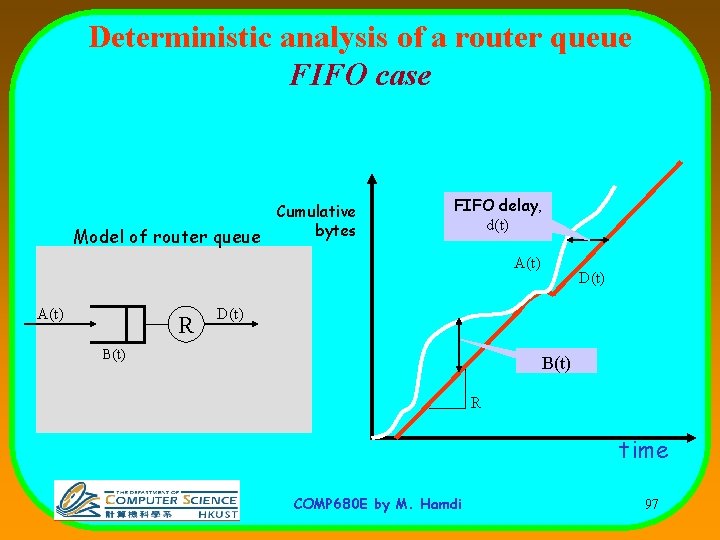

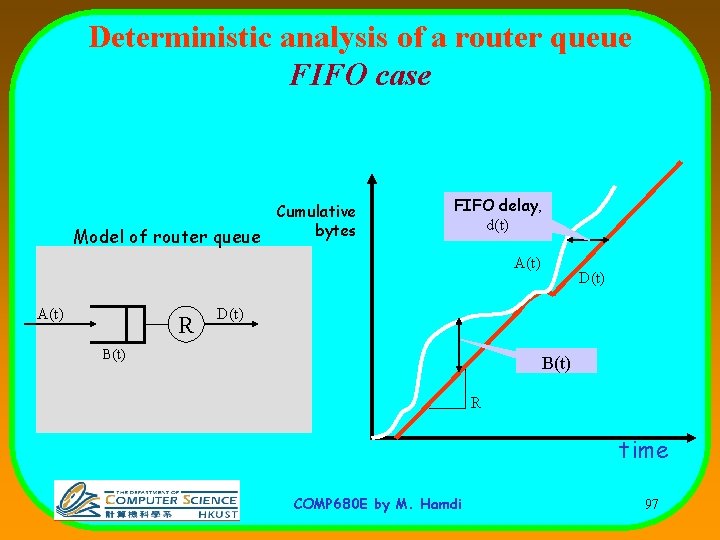

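Deterministic analysis of a router queue

[Figure: FIFO case. Model of a router queue with arrivals A(t), backlog B(t), departures D(t) and service rate R. Plot of cumulative bytes versus time showing A(t) and D(t); the FIFO delay d(t) is the horizontal distance between the two curves and the backlog B(t) is the vertical distance.]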
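The cumulative-curve view of a FIFO queue can be made concrete with a small discrete-time sketch. The slotted time model, the function names and the sample arrival pattern are assumptions made for illustration; the slide itself only shows the continuous-time picture.

```python
def fifo_queue(arrivals, rate):
    """Cumulative arrivals A, departures D and backlog B = A - D per slot.

    arrivals[k]: bytes arriving in slot k; rate: bytes served per slot (R).
    """
    A, D, B = [], [], []
    a = d = 0
    for x in arrivals:
        a += x
        d = min(a, d + rate)  # cannot send more than has arrived
        A.append(a)
        D.append(d)
        B.append(a - d)       # vertical distance between the curves
    return A, D, B


def fifo_delay(A, D, k):
    """Slots until everything that had arrived by slot k has departed.

    This is the horizontal distance from A to D at height A[k].
    Returns None if that point lies beyond the simulated horizon.
    """
    for j in range(k, len(D)):
        if D[j] >= A[k]:
            return j - k
    return None


A, D, B = fifo_queue([5, 0, 0, 3, 0], rate=2)
```

Here `B` reads off the backlog at the end of each slot and `fifo_delay(A, D, k)` reads off the delay seen by the last byte that arrived in slot `k`.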

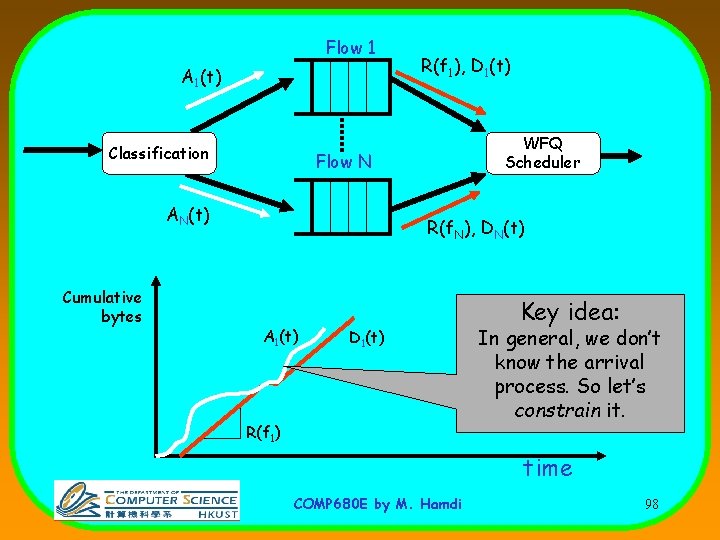

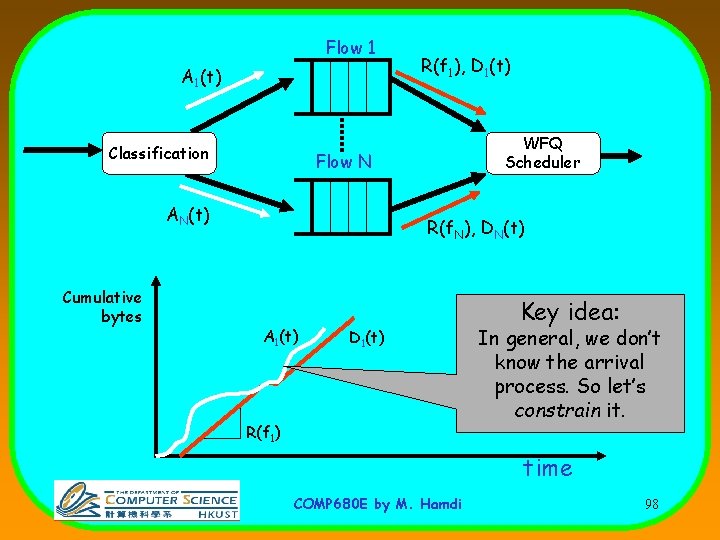

[Figure: flows 1 to N with arrival processes A1(t) … AN(t) pass through classification into a WFQ scheduler, which serves flow i at rate R(fi) with departure process Di(t). Plot of cumulative bytes versus time showing A1(t) and D1(t), with D1(t) served at rate R(f1).]

Key idea: In general, we don’t know the arrival process. So let’s constrain it.

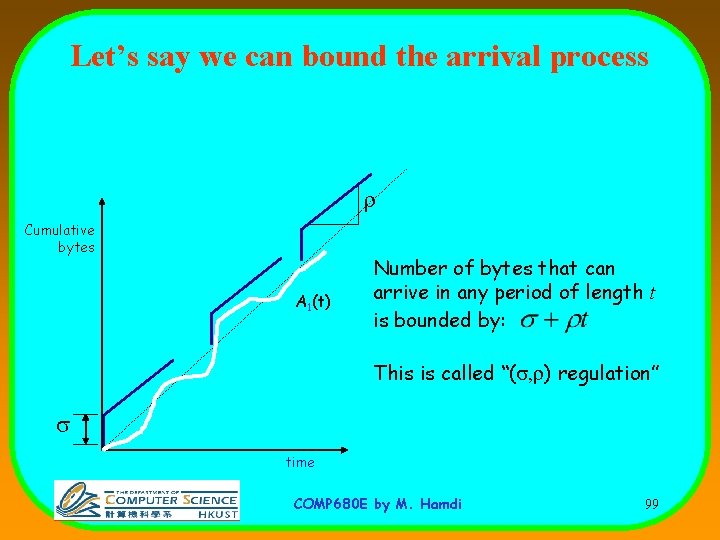

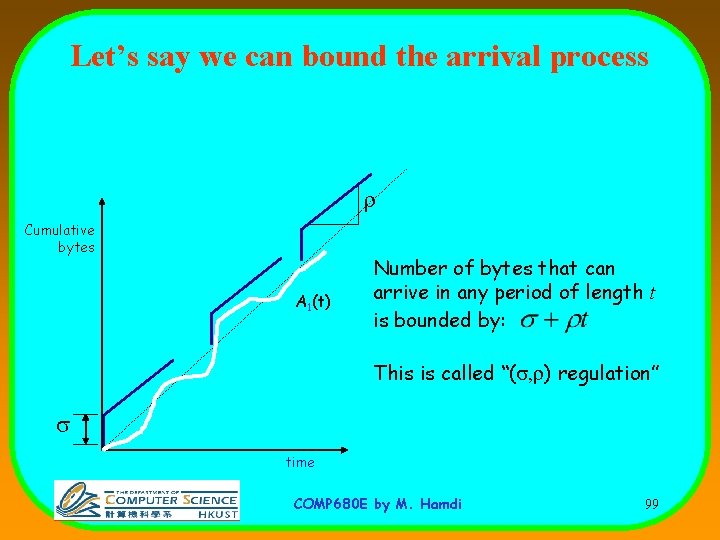

Let’s say we can bound the arrival process

The number of bytes that can arrive in any period of length t is bounded by: s + r·t

This is called “(s, r) regulation”.

[Figure: cumulative bytes versus time; A1(t) stays below a line with intercept s and slope r.]

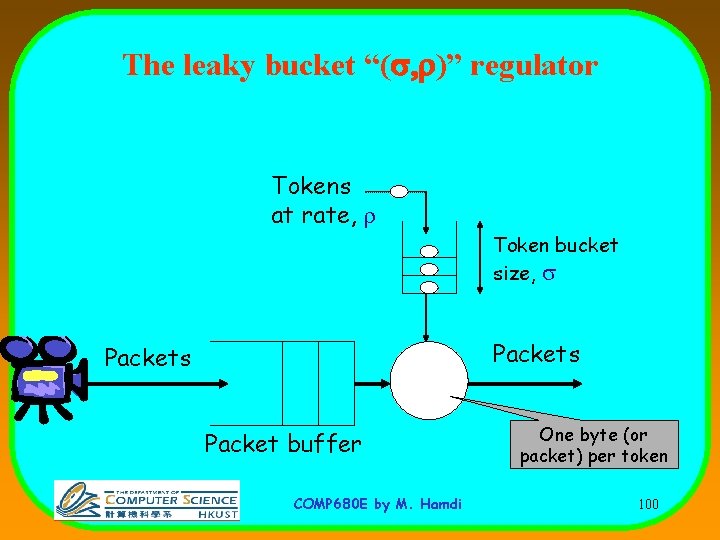

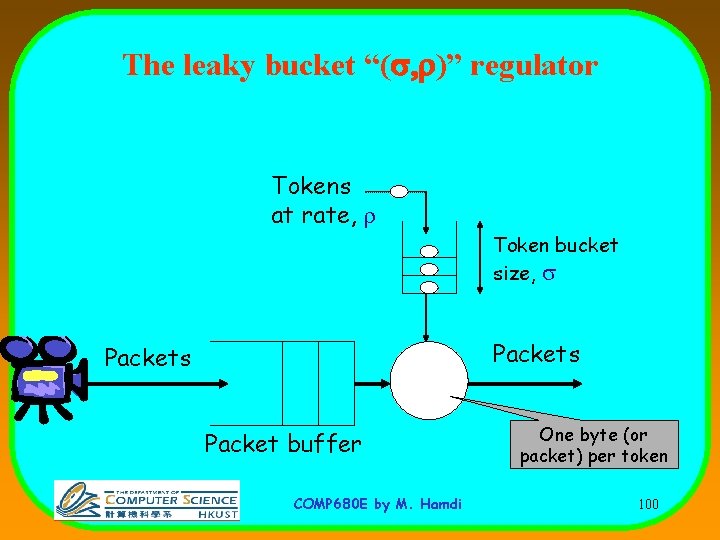

The leaky bucket “(s, r)” regulator

[Figure: tokens arrive at rate r into a token bucket of size s; packets wait in a packet buffer and are released at one byte (or packet) per token.]
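A minimal sketch of the regulator follows, assuming unit-size packets (one token each), a bucket that starts full, and an unbounded packet buffer. None of these details are fixed by the slide, and the function name is illustrative.

```python
def regulate(arrival_times, s, r):
    """Release time of each one-token packet through an (s, r) regulator.

    arrival_times: non-decreasing packet arrival times.
    s: token bucket size; r: token arrival rate (tokens per unit time).
    Assumes the bucket starts full and the packet buffer never overflows.
    """
    tokens = float(s)
    clock = 0.0  # time of the most recent release
    releases = []
    for t in arrival_times:
        start = max(t, clock)  # FIFO: wait behind earlier packets
        tokens = min(s, tokens + (start - clock) * r)  # refill, capped at s
        clock = start
        if tokens < 1:  # hold the packet in the buffer until a token arrives
            clock += (1 - tokens) / r
            tokens = 1.0
        tokens -= 1
        releases.append(clock)
    return releases


# A burst of four packets at time 0 through a (2, 1) regulator.
releases = regulate([0, 0, 0, 0], s=2, r=1)
```

The first s packets of a burst leave immediately and the rest are spaced 1/r apart, so the output never exceeds s + r·t packets in any window of length t.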

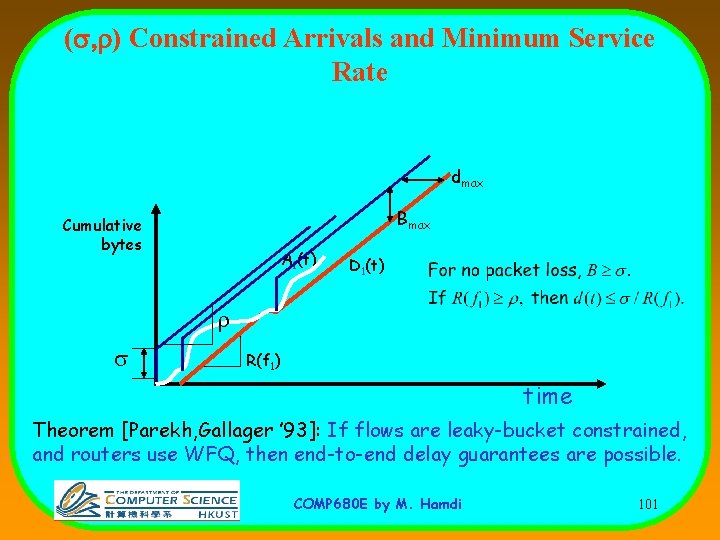

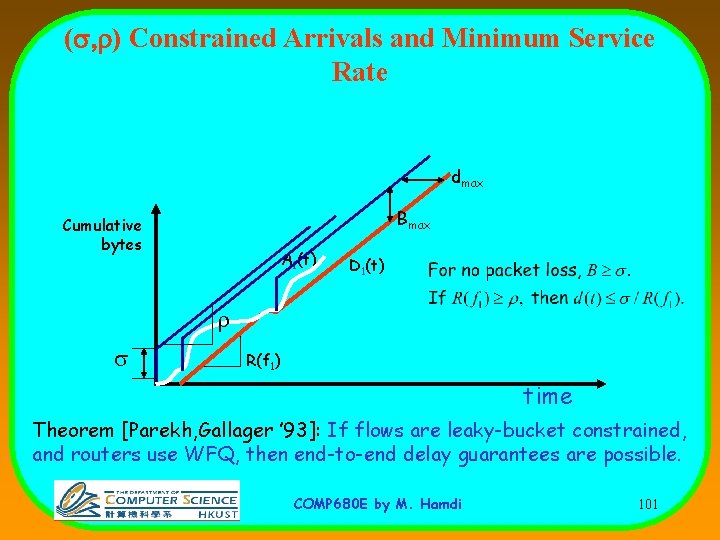

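(s, r) Constrained Arrivals and Minimum Service Rate

[Figure: cumulative bytes versus time; arrivals A1(t) bounded by a line with intercept s and slope r, departures D1(t) with slope R(f1); dmax is the largest horizontal gap and Bmax the largest vertical gap between the curves.]

Theorem [Parekh, Gallager ’93]: If flows are leaky-bucket constrained, and routers use WFQ, then end-to-end delay guarantees are possible.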
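As a hedged illustration of where dmax and Bmax come from, consider a single node in the fluid (bit-by-bit) model. Assume r ≤ R(f1) and that flow 1 is served at a rate of at least R(f1) whenever it is backlogged; these assumptions are not stated on the slide. Measuring t from the start of a busy period at time τ:

$$
A_1(\tau + t) - A_1(\tau) \le s + r\,t,
\qquad
B_{\max} \le \max_{t \ge 0}\bigl(s + r\,t - R(f_1)\,t\bigr) = s,
\qquad
d_{\max} \le \frac{s}{R(f_1)}.
$$

The backlog bound is the largest vertical gap between the arrival envelope and the service line, and the delay bound is the largest horizontal gap. Packetized WFQ adds a term that depends on the maximum packet size, which this sketch omits.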