CACHE REPLACEMENT CHAMPIONSHIP: An Analysis of Cache Replacement Algorithms Used to Win Next Year's Competition

Abeer Agrawal, Joe Berman

Milestone II, 11/4/2011

CRC

- CRC (Cache Replacement Championship) was held for the first time last year.
- It was led by individuals from Intel, IBM, Microsoft, NC State and Georgia Tech.
- Goal: improve on status-quo cache replacement algorithms within a set of hardware constraints (complexity and cost).
- The organizers provided a simulation infrastructure in which to run the algorithms.
- There was both a single-core and a multi-core track.

METHODOLOGY

- Replacement algorithms are applied to the last-level cache (LLC).
- In the multicore track, the LLC is 4 MB and 16-way set associative.
- The simulation does not model memory latency: every cache miss incurs the same penalty.
- The simulator reports misses and CPI for each thread.
- We measure improvement as the decrease in CPI relative to an LRU baseline.
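To make the LLC parameters concrete, here is a minimal Python sketch of the multicore-track cache geometry and of one way to express improvement over LRU. It assumes 64-byte cache lines, which the slides do not state, and expresses improvement as the ratio of LRU CPI to the policy's CPI (also an assumption; the exact formula is not given). The helper names `num_sets`, `set_index` and `speedup_vs_lru` are hypothetical.

```python
# Sketch of the multicore-track LLC geometry and an LRU-relative metric.
# ASSUMPTIONS: 64-byte lines (not stated on the slides); helper names are hypothetical.

LLC_BYTES = 4 * 1024 * 1024   # 4 MB last-level cache
WAYS = 16                     # 16-way set associative
LINE_BYTES = 64               # assumed line size


def num_sets(capacity: int, ways: int, line: int) -> int:
    """Number of sets = capacity / (ways * line size)."""
    return capacity // (ways * line)


def set_index(addr: int, sets: int, line: int) -> int:
    """Drop the line-offset bits, then take the block number modulo the set count."""
    return (addr // line) % sets


def speedup_vs_lru(cpi_lru: float, cpi_policy: float) -> float:
    """Values above 1.0 mean the policy lowered CPI relative to the LRU baseline."""
    return cpi_lru / cpi_policy


SETS = num_sets(LLC_BYTES, WAYS, LINE_BYTES)
print(SETS)  # 4096
```

Under these assumptions the 4 MB, 16-way LLC has 4096 sets, and each replacement decision is made among the 16 lines of one set.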

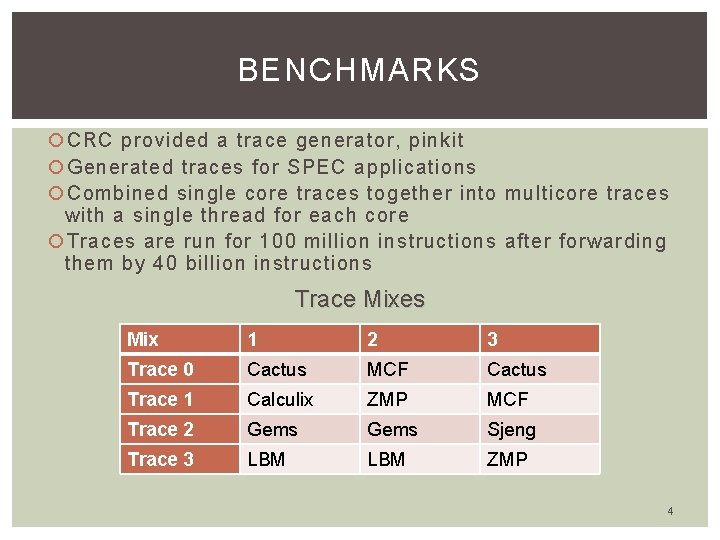

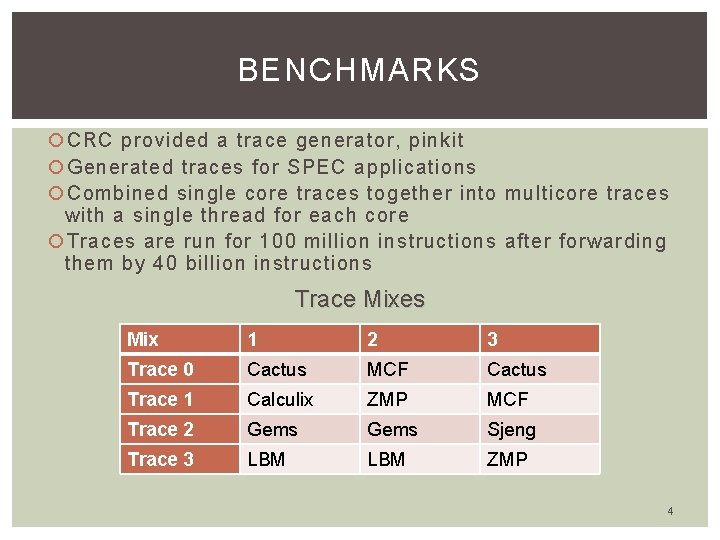

BENCHMARKS

- CRC provided a trace generator, pinkit.
- We generated traces for SPEC applications.
- We combined single-core traces into multicore traces, with one thread per core.
- Each trace is fast-forwarded by 40 billion instructions and then run for 100 million instructions.

Trace mixes:

| | Mix 1 | Mix 2 | Mix 3 |
|---|---|---|---|
| Trace 0 | Cactus | MCF | Cactus |
| Trace 1 | Calculix | ZMP | MCF |
| Trace 2 | Gems | Sjeng | |
| Trace 3 | LBM | ZMP | |
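The trace setup can be sketched by treating each trace as a plain stream of instruction records. This is an illustrative model, not pinkit's interface: the record type and the functions `simulation_window` and `build_mix` are hypothetical, and only the 40-billion and 100-million instruction counts come from the slide.

```python
from itertools import islice
from typing import Iterable, Iterator, Sequence, TypeVar

T = TypeVar("T")  # hypothetical instruction-record type

FAST_FORWARD = 40_000_000_000  # instructions skipped before measurement
RUN_LENGTH = 100_000_000       # instructions actually simulated


def simulation_window(trace: Iterable[T], skip: int = FAST_FORWARD,
                      length: int = RUN_LENGTH) -> Iterator[T]:
    """Skip the first `skip` records, then yield at most `length` records."""
    return islice(trace, skip, skip + length)


def build_mix(traces: Sequence[Iterable[T]], skip: int = FAST_FORWARD,
              length: int = RUN_LENGTH) -> dict[int, Iterator[T]]:
    """One single-threaded trace per core: core i runs the window of traces[i]."""
    return {core: simulation_window(t, skip, length)
            for core, t in enumerate(traces)}


# Tiny usage example with scaled-down counts.
mix = build_mix([range(10), range(100, 110)], skip=3, length=4)
print({core: list(stream) for core, stream in mix.items()})
```

Scaling the counts down shows the shape of the computation: each core gets its own window, and no instructions are shared between threads.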

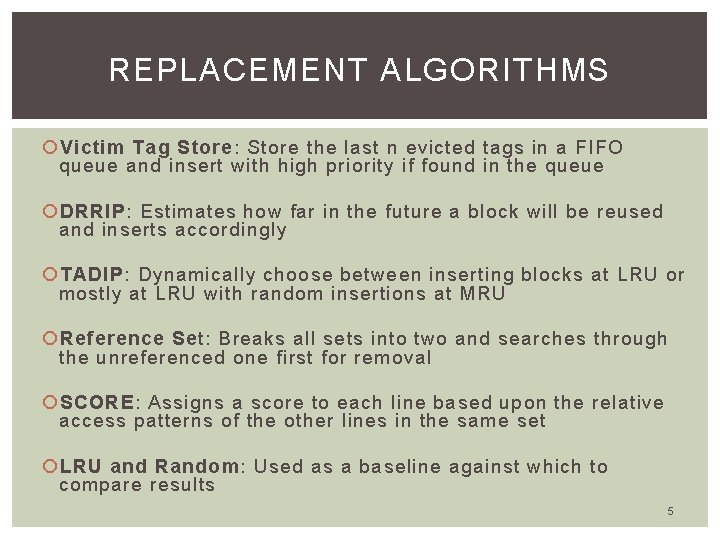

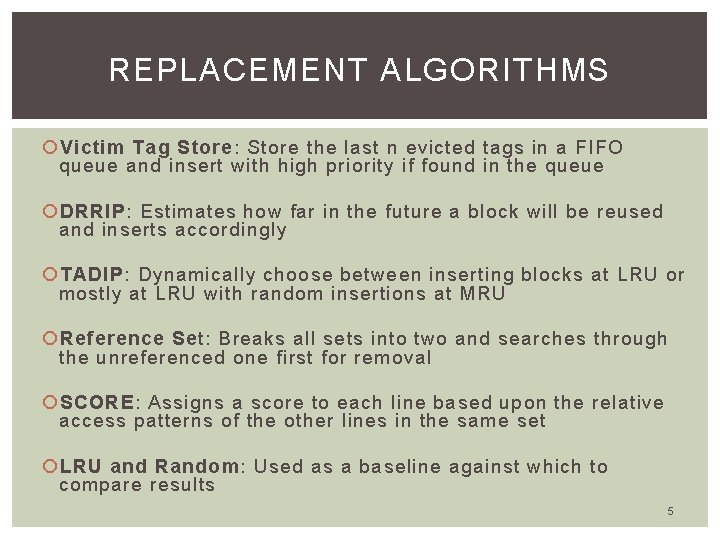

REPLACEMENT ALGORITHMS

- Victim Tag Store (VTS): keeps the tags of the last n evicted blocks in a FIFO queue; a missing block whose tag is found in the queue is inserted with high priority.
- DRRIP (Dynamic Re-Reference Interval Prediction): estimates how far in the future a block will be re-referenced and inserts it accordingly.
- TADIP (Thread-Aware Dynamic Insertion Policy): for each thread, dynamically chooses between traditional LRU insertion (at MRU) and bimodal insertion (mostly at the LRU position, occasionally at MRU).
- Reference Set: splits the lines of each set into two groups and searches the unreferenced group first when choosing a victim.
- SCORE: assigns a score to each line based on the access patterns of the other lines in the same set.
- LRU and Random: baselines against which the results are compared.
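The Victim Tag Store policy is concrete enough to sketch directly. Below is a minimal Python model of a single cache set, assuming that "high priority" means insertion at the MRU position of an LRU stack and that all other misses are inserted at the LRU position. The class name and parameters are hypothetical, and this is not the competition submission.

```python
from collections import deque


class VictimTagStoreSet:
    """One cache set managed as an LRU stack plus a victim tag store (VTS).

    ASSUMPTIONS (illustrative only): "high priority" means MRU insertion,
    misses not found in the VTS are inserted at the LRU position, and the
    class name and parameters are hypothetical.
    """

    def __init__(self, ways: int, vts_entries: int) -> None:
        self.ways = ways
        self.stack: list[int] = []                        # index 0 = MRU, last = LRU
        self.vts: deque[int] = deque(maxlen=vts_entries)  # FIFO of evicted tags

    def access(self, tag: int) -> bool:
        """Return True on a hit, False on a miss."""
        if tag in self.stack:
            # Hit: promote the block to MRU.
            self.stack.remove(tag)
            self.stack.insert(0, tag)
            return True
        if len(self.stack) == self.ways:
            # Evict the LRU block and remember its tag;
            # deque(maxlen=...) silently drops the oldest tag when full.
            self.vts.append(self.stack.pop())
        if tag in self.vts:
            self.stack.insert(0, tag)  # recently evicted: high-priority (MRU) insert
        else:
            self.stack.append(tag)     # otherwise: low-priority (LRU) insert
        return False


s = VictimTagStoreSet(ways=2, vts_entries=4)
print([s.access(t) for t in [1, 2, 3, 2, 2]])
```

Replaying tags 1, 2, 3, 2, 2 through a 2-way set shows the effect: block 2 is evicted by 3, misses again, is found in the VTS, is inserted at MRU, and then hits on its next access.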

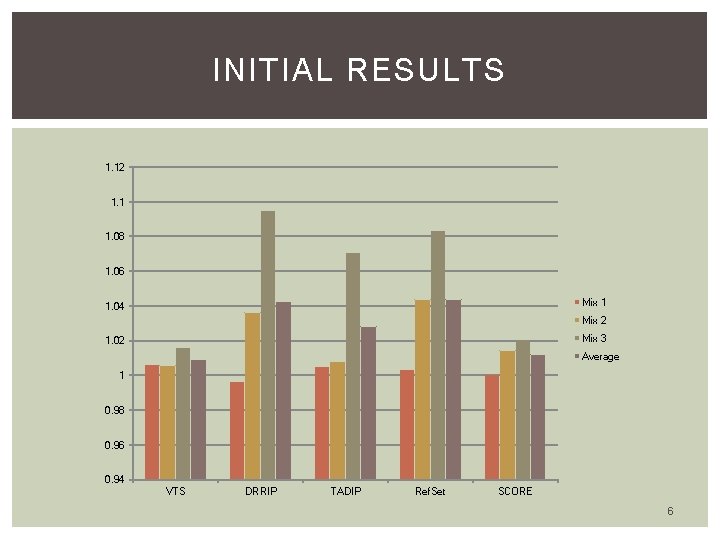

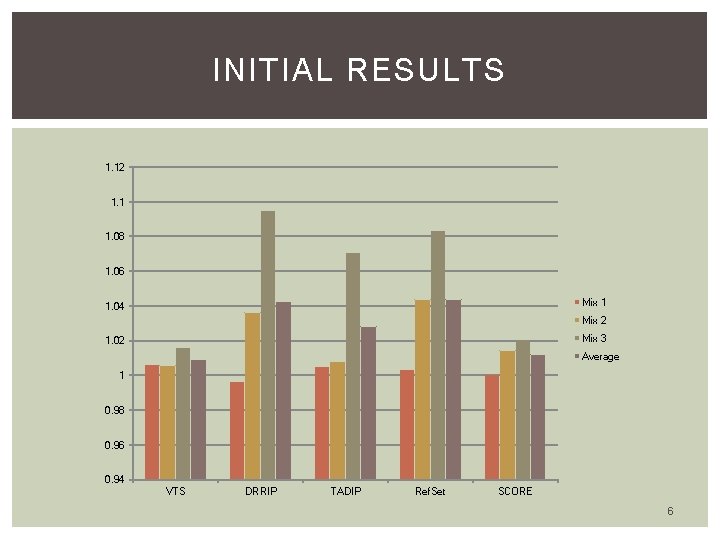

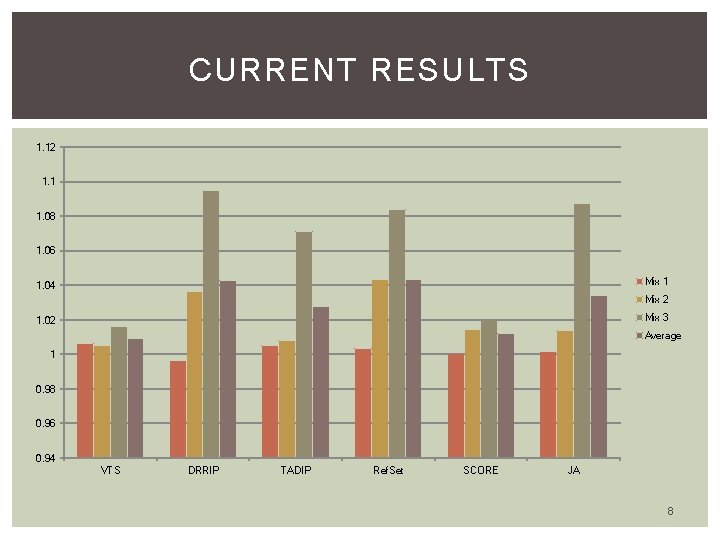

INITIAL RESULTS

[Figure: bar chart for VTS, DRRIP, TADIP, Ref. Set and SCORE, with bars for Mix 1, Mix 2, Mix 3 and Average; y-axis from 0.94 to 1.12]

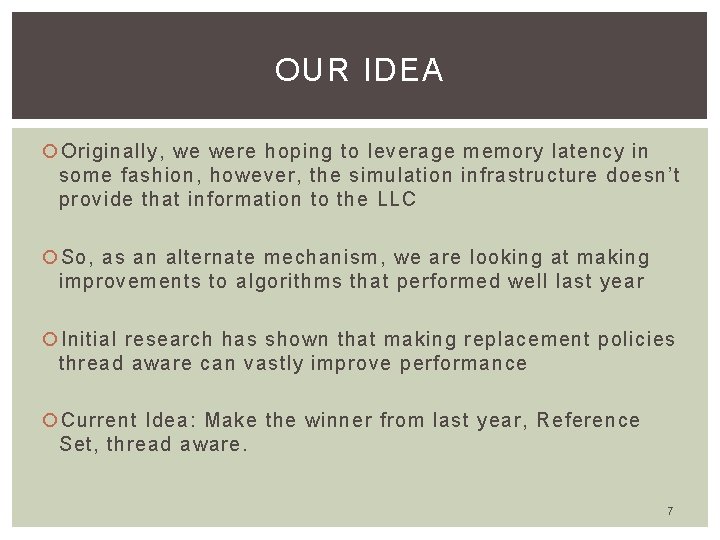

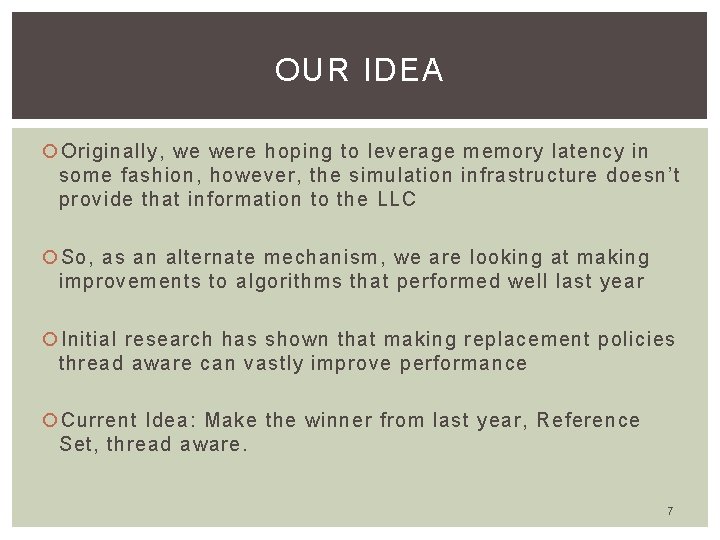

OUR IDEA

- We originally hoped to leverage memory latency in some way, but the simulation infrastructure does not expose latency information to the LLC.
- Instead, we are looking at improving the algorithms that performed well last year.
- Initial research has shown that making replacement policies thread-aware can substantially improve performance.
- Current idea: make last year's winner, Reference Set, thread-aware.

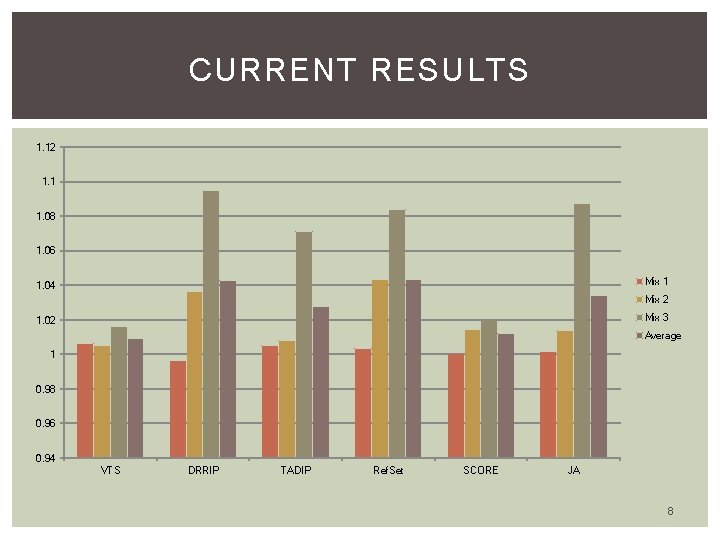

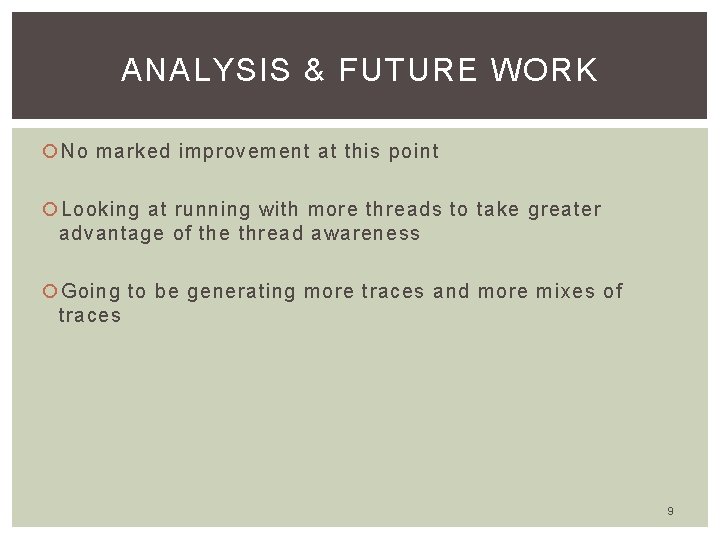

CURRENT RESULTS

[Figure: bar chart for VTS, DRRIP, TADIP, Ref. Set, SCORE and JA, with bars for Mix 1, Mix 2, Mix 3 and Average; y-axis from 0.94 to 1.12]

ANALYSIS & FUTURE WORK

- No marked improvement so far.
- We plan to run with more threads to take greater advantage of thread awareness.
- We will generate more traces and more trace mixes.