Cache Performance, Interfacing, Multiprocessors CPSC 321 Andreas Klappenecker




Today’s Menu

- Cache Performance
- Review of Virtual Memory
- Processors and Peripherals
- Multiprocessors

Cache Performance

Caching Basics

- What are the different cache placement schemes?
  - direct mapped
  - set associative
  - fully associative
- Explain how a 2-way cache with 4 sets works
  - If we want to read a memory block whose address is addr, then we search the set addr mod 4
  - The memory block could be in either element of the set
  - Compare tags with the upper n-2 bits of the n-bit address addr
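The lookup described above can be sketched in a few lines of Python. This is a minimal illustration, not the slide's implementation: the class name `TwoWayCache`, the use of block addresses rather than byte addresses, and the LRU replacement policy are all assumptions made for the example.

```python
class TwoWayCache:
    """Toy 2-way set-associative cache with 4 sets (tags only, no data)."""

    def __init__(self, num_sets=4, ways=2):
        self.num_sets = num_sets
        self.ways = ways
        # Each set is a list of tags, least recently used first.
        self.sets = [[] for _ in range(num_sets)]

    def access(self, block_addr):
        """Return True on a hit, False on a miss (the block is then loaded)."""
        index = block_addr % self.num_sets   # which set to search: addr mod 4
        tag = block_addr // self.num_sets    # remaining upper bits of addr
        entries = self.sets[index]
        if tag in entries:                   # compare against both ways
            entries.remove(tag)
            entries.append(tag)              # mark as most recently used
            return True
        if len(entries) == self.ways:
            entries.pop(0)                   # evict LRU entry (assumed policy)
        entries.append(tag)
        return False


cache = TwoWayCache()
# Blocks 0, 4, and 8 all map to set 0; block 1 maps to set 1.
trace = [0, 4, 0, 8, 4, 1, 1]
results = [cache.access(addr) for addr in trace]
```

Blocks 0, 4, and 8 compete for the two ways of set 0, so the trace shows both a hit on a resident block and misses caused by eviction.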

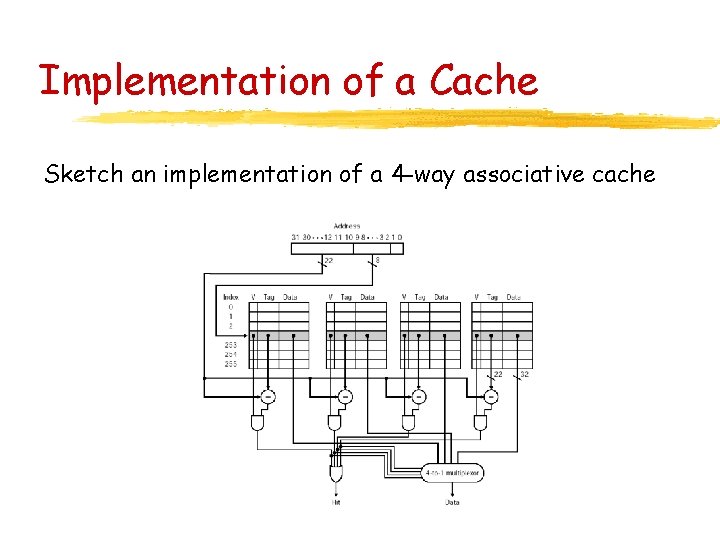

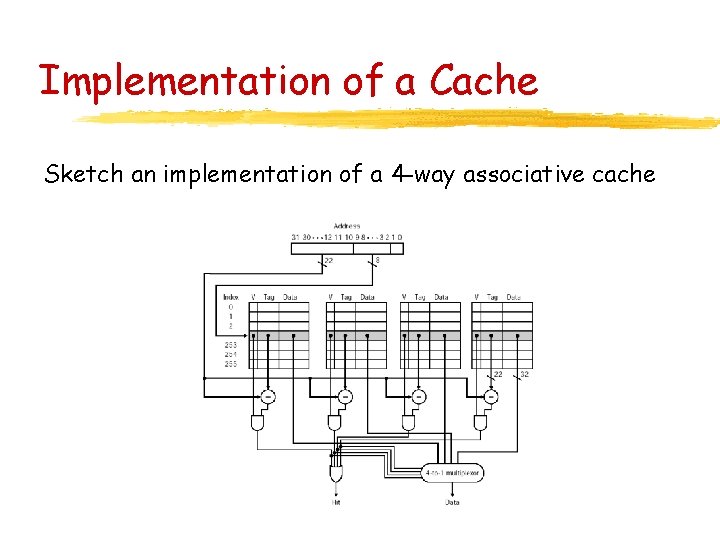

Implementation of a Cache

Sketch an implementation of a 4-way associative cache

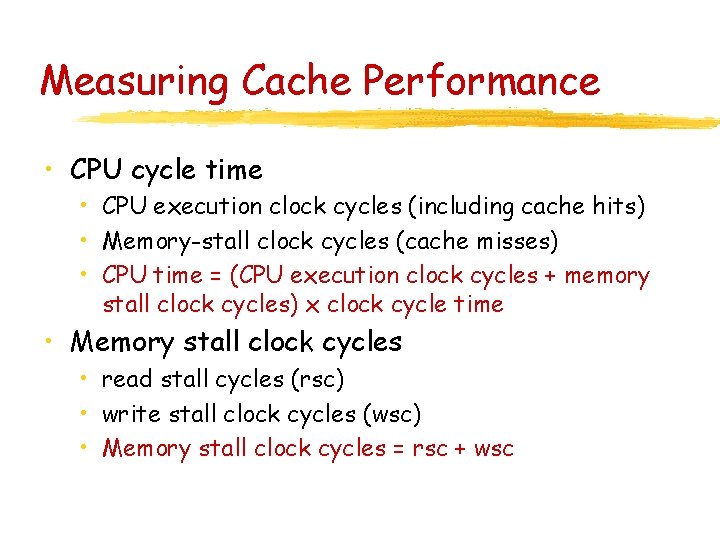

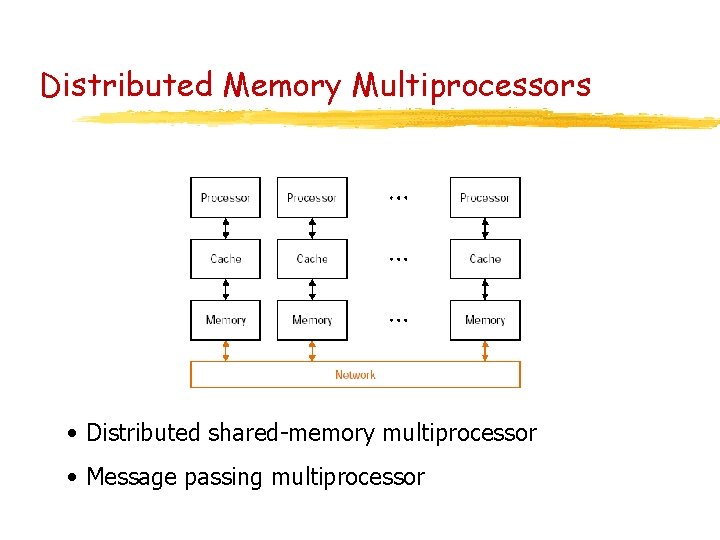

Measuring Cache Performance

- CPU cycle time
  - CPU execution clock cycles (including cache hits)
  - Memory-stall clock cycles (cache misses)
- CPU time = (CPU execution clock cycles + memory-stall clock cycles) x clock cycle time
- Memory-stall clock cycles
  - read-stall clock cycles (rsc)
  - write-stall clock cycles (wsc)
- Memory-stall clock cycles = rsc + wsc


Measuring Cache Performance

- Write-stall cycles [write-through scheme]
- Two sources of stalls:
  - write misses (usually require fetching the block)
  - write buffer stalls (write buffer is full when a write occurs)
- WSCs are the sum of the two: WSCs = (writes/program x write miss rate x write miss penalty) + write buffer stalls
- Memory-stall clock cycles: similar
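For reference, the stall-cycle formulas from these slides can be collected in one place. The read-stall line is the usual textbook counterpart of the write-stall formula; it does not appear in the extracted slide text and is included here as an assumption.

```latex
\begin{align*}
\text{CPU time} &= (\text{CPU execution cycles} + \text{memory-stall cycles}) \times \text{clock cycle time} \\
\text{Memory-stall cycles} &= \text{RSC} + \text{WSC} \\
\text{RSC} &= \frac{\text{reads}}{\text{program}} \times \text{read miss rate} \times \text{read miss penalty} \\
\text{WSC} &= \frac{\text{writes}}{\text{program}} \times \text{write miss rate} \times \text{write miss penalty} + \text{write buffer stalls}
\end{align*}
```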

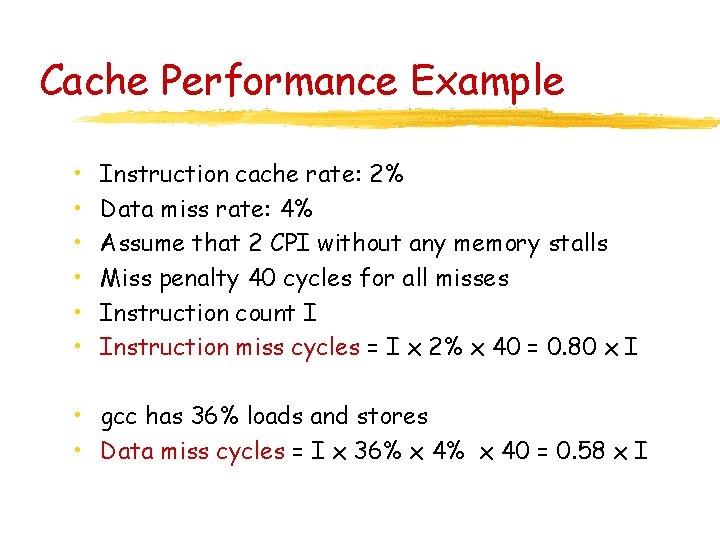

Cache Performance Example

- Instruction cache miss rate: 2%
- Data cache miss rate: 4%
- Assume a CPI of 2 without any memory stalls
- Miss penalty: 40 cycles for all misses
- Instruction count: I
- Instruction miss cycles = I x 2% x 40 = 0.80 x I
- gcc has 36% loads and stores
- Data miss cycles = I x 36% x 4% x 40 = 0.58 x I
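The arithmetic of this example can be checked with a short script. The function name and the final slowdown figure are additions for illustration; the inputs are the numbers given on the slide.

```python
def stall_cycles_per_instruction(instr_miss_rate, data_miss_rate,
                                 mem_ops_per_instr, miss_penalty):
    """Return (instruction, data) memory-stall cycles per instruction."""
    # Every instruction is fetched once, so instruction misses scale with I.
    instr_stalls = instr_miss_rate * miss_penalty
    # Only loads and stores access the data cache.
    data_stalls = mem_ops_per_instr * data_miss_rate * miss_penalty
    return instr_stalls, data_stalls


base_cpi = 2.0
instr_stalls, data_stalls = stall_cycles_per_instruction(
    instr_miss_rate=0.02, data_miss_rate=0.04,
    mem_ops_per_instr=0.36, miss_penalty=40)

cpi_with_stalls = base_cpi + instr_stalls + data_stalls
slowdown = cpi_with_stalls / base_cpi  # relative to a perfect cache

print(f"instruction stalls per instruction: {instr_stalls:.3f}")
print(f"data stalls per instruction:        {data_stalls:.3f}")
print(f"CPI with stalls: {cpi_with_stalls:.3f}, slowdown: {slowdown:.2f}x")
```

Under these numbers the memory stalls add roughly 1.38 cycles per instruction to the base CPI of 2.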

Review of Virtual Memory

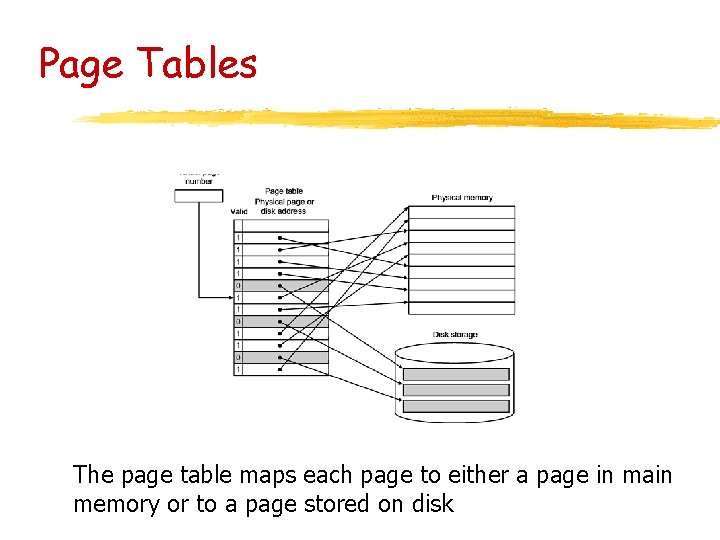

Virtual Memory

- Processor generates virtual addresses
- Memory is accessed using physical addresses
- Virtual and physical memory are broken into blocks of memory, called pages
- A virtual page may be absent from main memory (residing on the disk), or it may be mapped to a physical page

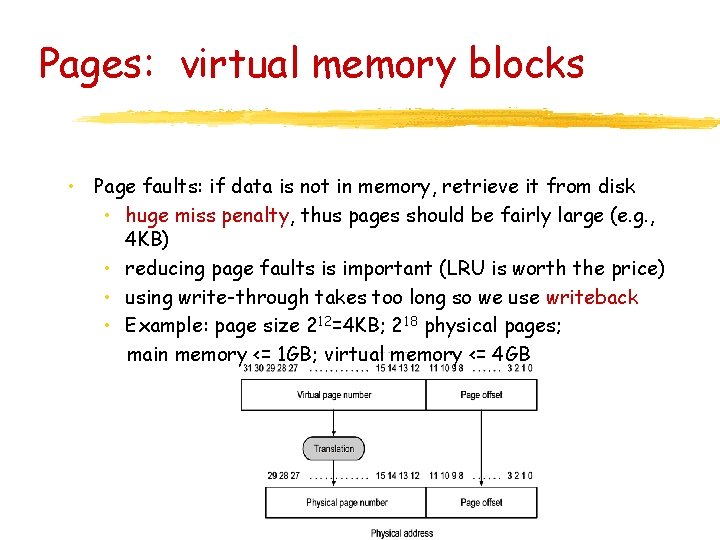

Page Tables

The page table maps each page to either a page in main memory or to a page stored on disk

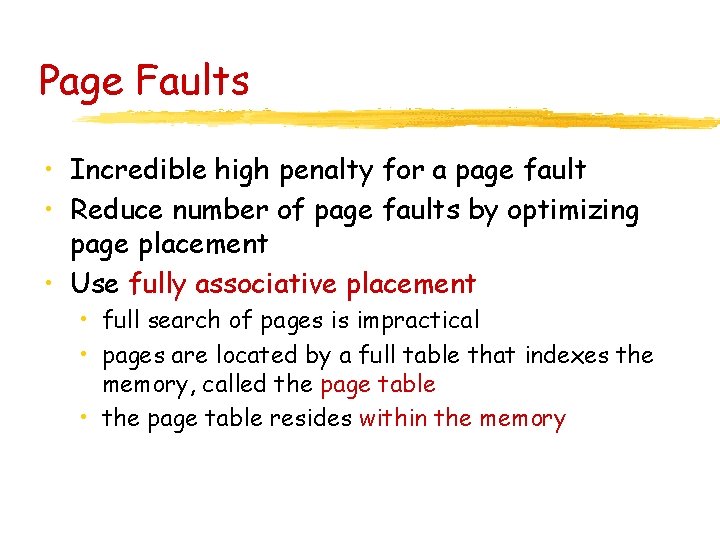

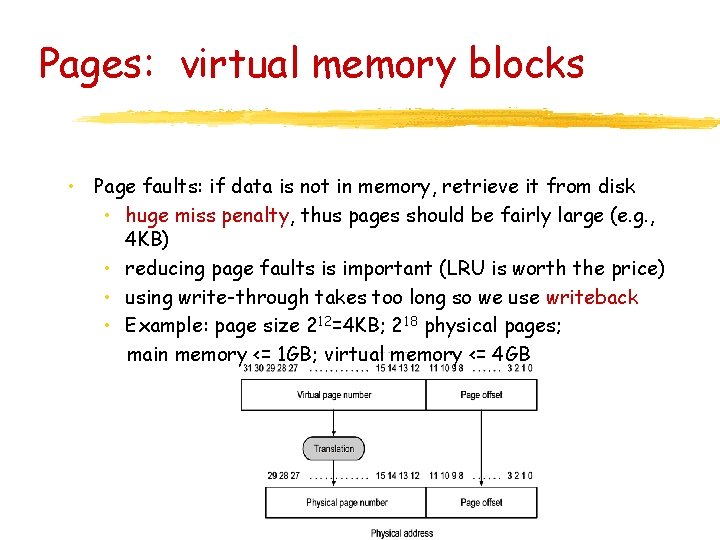

Pages: virtual memory blocks

- Page faults: if data is not in memory, retrieve it from disk
  - huge miss penalty, thus pages should be fairly large (e.g., 4 KB)
  - reducing page faults is important (LRU is worth the price)
  - using write-through takes too long, so we use write-back
- Example: page size 2^12 = 4 KB; 2^18 physical pages; main memory <= 1 GB; virtual memory <= 4 GB
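A small sketch of address translation with the sizes from this example (12-bit page offset, 18-bit physical page number, 32-bit virtual address). The dictionary-based page table, the `PageFault` exception, and the sample mapping are hypothetical stand-ins for illustration only.

```python
PAGE_OFFSET_BITS = 12   # page size 2^12 = 4 KB
PHYS_PAGE_BITS = 18     # 2^18 physical pages
VIRT_ADDR_BITS = 32     # 4 GB virtual address space


class PageFault(Exception):
    """Raised when the virtual page is not resident in main memory."""


def translate(vaddr, page_table):
    """Map a virtual address to a physical address via the page table."""
    vpn = vaddr >> PAGE_OFFSET_BITS                   # virtual page number
    offset = vaddr & ((1 << PAGE_OFFSET_BITS) - 1)    # unchanged by translation
    ppn = page_table.get(vpn)
    if ppn is None:
        raise PageFault(f"virtual page {vpn:#x} is on disk")
    return (ppn << PAGE_OFFSET_BITS) | offset


# Hypothetical mapping: virtual page 0x12345 lives in physical page 0xABC.
page_table = {0x12345: 0x00ABC}
paddr = translate(0x12345678, page_table)

main_memory_bytes = 1 << (PHYS_PAGE_BITS + PAGE_OFFSET_BITS)   # 1 GB
virtual_memory_bytes = 1 << VIRT_ADDR_BITS                     # 4 GB
```

The page offset passes through untouched; only the page number is replaced, which is why the physical address here ends in the same `0x678` as the virtual one.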

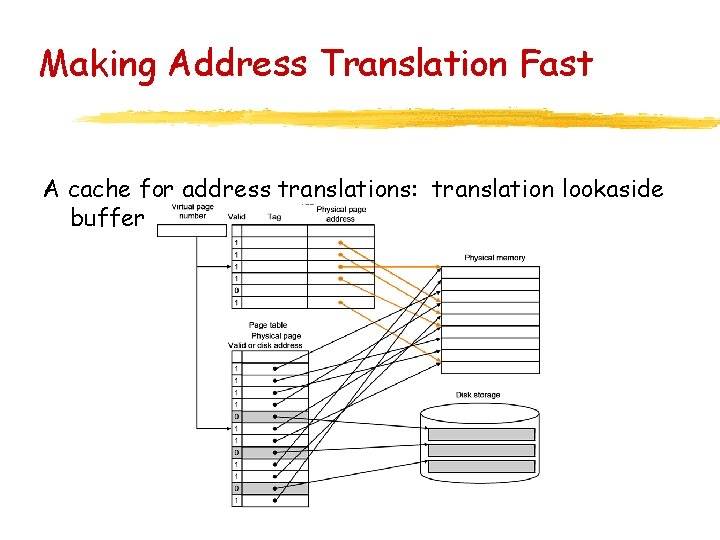

Page Faults

- Incredibly high penalty for a page fault
- Reduce number of page faults by optimizing page placement
- Use fully associative placement
  - full search of pages is impractical
  - pages are located by a full table that indexes the memory, called the page table
  - the page table resides within the memory

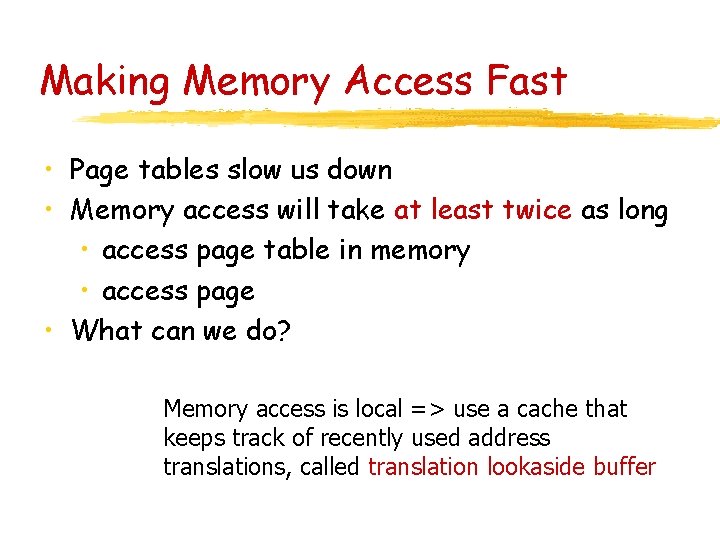

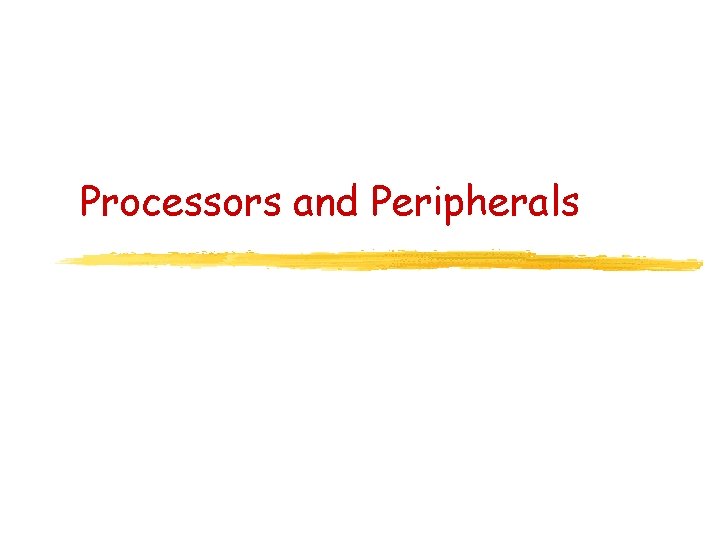

Making Memory Access Fast

- Page tables slow us down
- Memory access will take at least twice as long
  - access page table in memory
  - access page
- What can we do? Memory accesses exhibit locality => use a cache that keeps track of recently used address translations, called the translation lookaside buffer (TLB)
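The idea of caching recent translations can be sketched as follows. This is a toy model under stated assumptions: the `TLB` class, its capacity of two entries, the LRU replacement policy, and the dictionary page table are all hypothetical choices for the example, not details from the slides.

```python
from collections import OrderedDict


class TLB:
    """Toy translation lookaside buffer: a small LRU cache of vpn -> ppn."""

    def __init__(self, capacity=4):
        self.capacity = capacity
        self.entries = OrderedDict()
        self.hits = 0
        self.misses = 0

    def lookup(self, vpn, page_table):
        if vpn in self.entries:
            self.hits += 1
            self.entries.move_to_end(vpn)      # mark as most recently used
            return self.entries[vpn]
        # TLB miss: pay for the extra page-table access in memory.
        self.misses += 1
        ppn = page_table[vpn]
        self.entries[vpn] = ppn
        if len(self.entries) > self.capacity:
            self.entries.popitem(last=False)   # evict least recently used
        return ppn


page_table = {vpn: vpn + 100 for vpn in range(8)}   # hypothetical mapping
tlb = TLB(capacity=2)
translations = [tlb.lookup(vpn, page_table) for vpn in [0, 0, 1, 0, 2, 1]]
```

Only TLB misses touch the page table, so a reference stream with good locality avoids most of the doubled memory accesses.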

Making Address Translation Fast

A cache for address translations: translation lookaside buffer

Processors and Peripherals

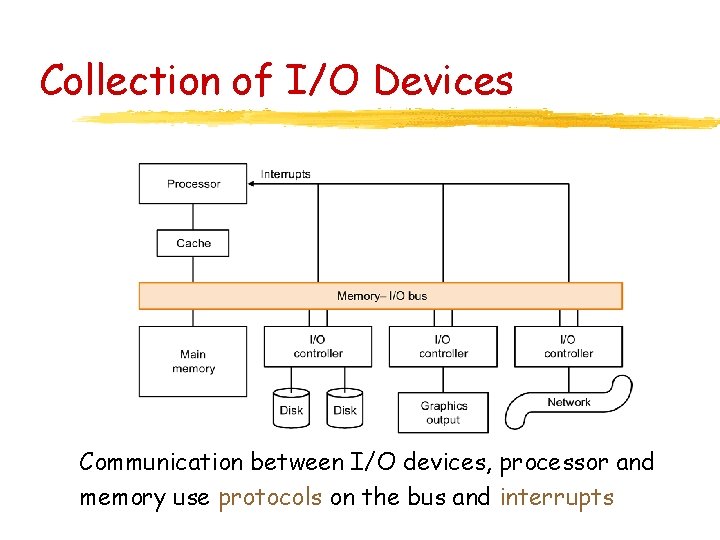

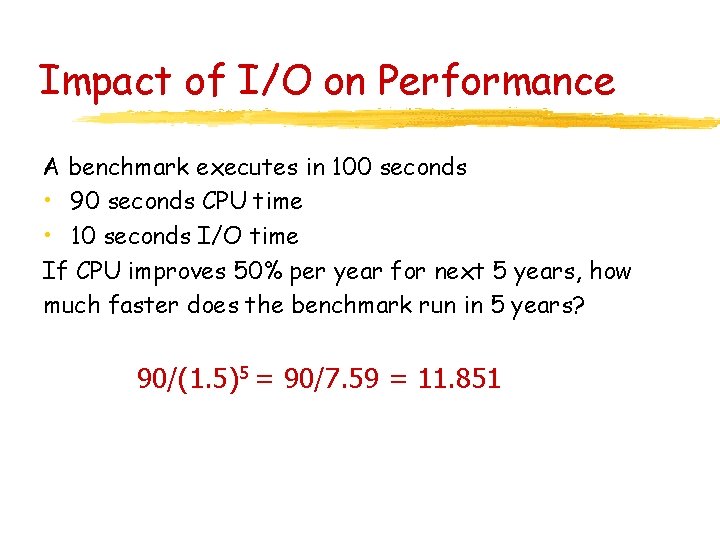

Collection of I/O Devices

Communication between I/O devices, processor, and memory uses protocols on the bus and interrupts

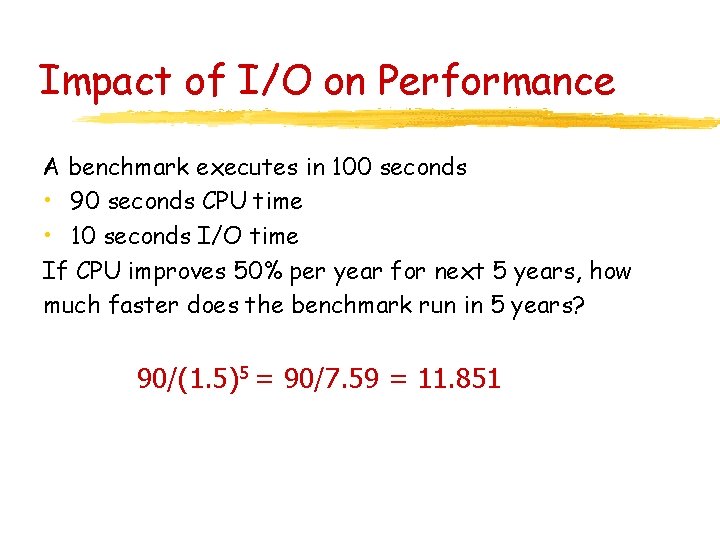

Impact of I/O on Performance

A benchmark executes in 100 seconds:

- 90 seconds CPU time
- 10 seconds I/O time

If the CPU improves 50% per year for the next 5 years, how much faster does the benchmark run in 5 years?

CPU time after 5 years: 90 / (1.5)^5 = 90 / 7.59 = 11.85 seconds
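The calculation can be carried one step further to answer the question. The sketch below assumes, as the setup implies, that I/O time stays at 10 seconds while only the CPU portion improves; the variable names are chosen for this example.

```python
cpu_time = 90.0           # seconds, from the slide
io_time = 10.0            # seconds, assumed unchanged over the 5 years
improvement_per_year = 1.5
years = 5

cpu_time_after = cpu_time / improvement_per_year ** years
total_before = cpu_time + io_time
total_after = cpu_time_after + io_time
speedup = total_before / total_after
io_fraction_after = io_time / total_after

print(f"CPU time after {years} years: {cpu_time_after:.2f} s")
print(f"Total time: {total_before:.0f} s -> {total_after:.2f} s "
      f"(speedup {speedup:.2f}x)")
print(f"I/O share of run time afterwards: {io_fraction_after:.0%}")
```

Although the CPU becomes about 7.6 times faster, the benchmark as a whole speeds up by less than 5 times, because the unimproved I/O time grows from 10% to nearly half of the run time.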

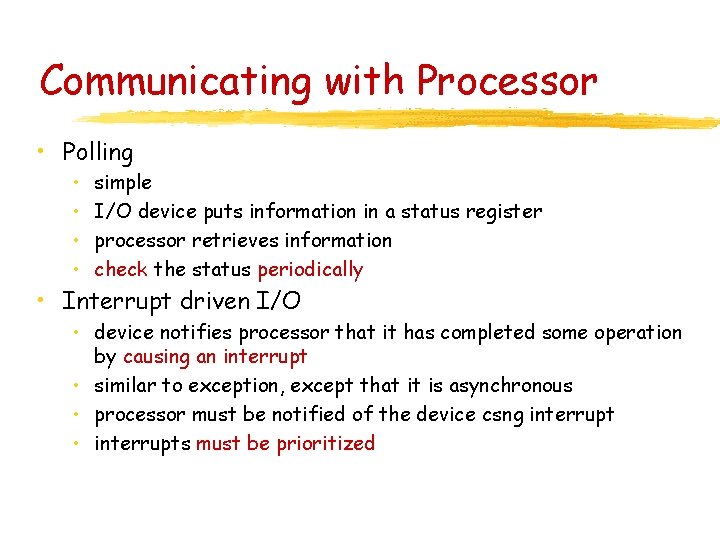

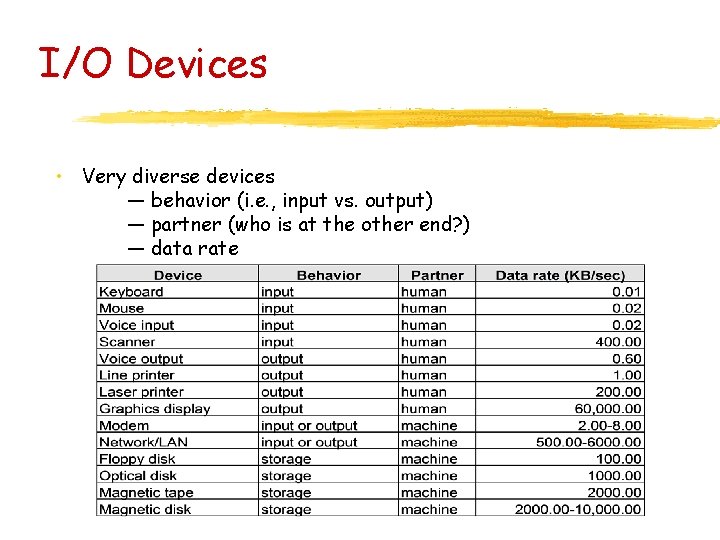

I/O Devices

- Very diverse devices
  - behavior (i.e., input vs. output)
  - partner (who is at the other end?)
  - data rate

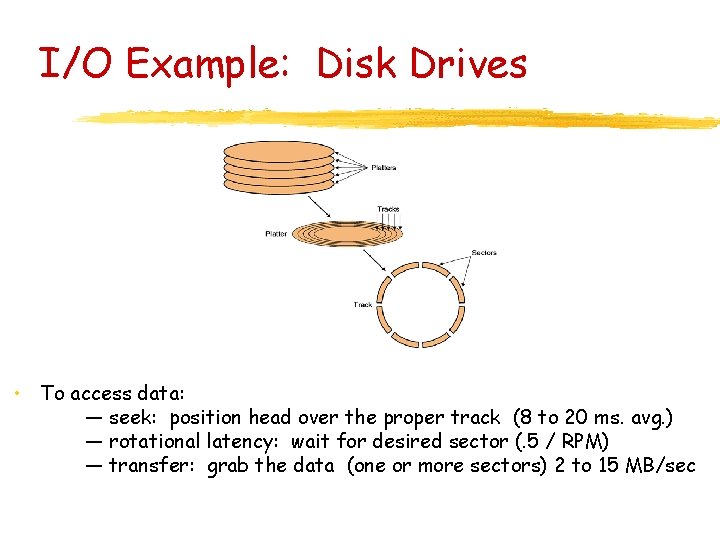

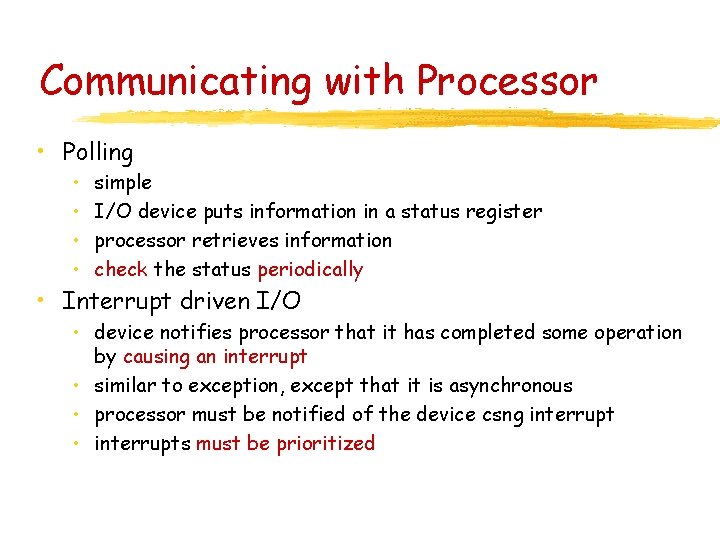

Communicating with Processor

- Polling
  - simple
  - I/O device puts information in a status register
  - processor retrieves information
  - check the status periodically
- Interrupt-driven I/O
  - device notifies processor that it has completed some operation by causing an interrupt
  - similar to an exception, except that it is asynchronous
  - processor must be notified of the device causing the interrupt
  - interrupts must be prioritized

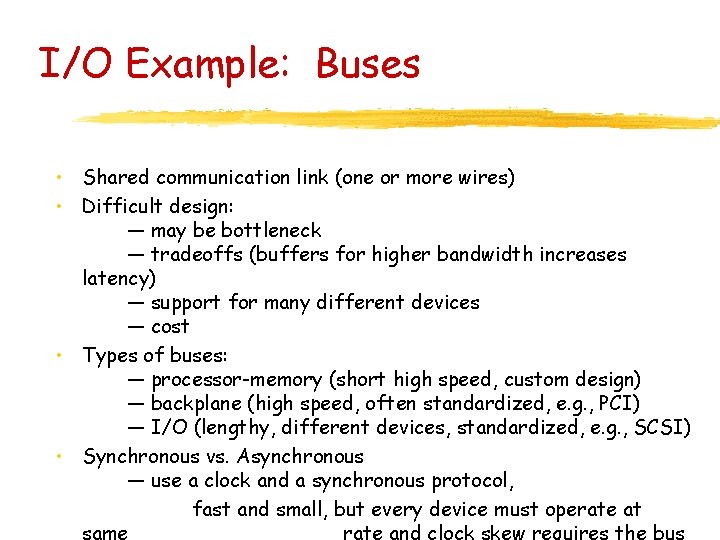

I/O Example: Disk Drives

- To access data:
  - seek: position head over the proper track (8 to 20 ms avg.)
  - rotational latency: wait for desired sector (0.5 / RPM)
  - transfer: grab the data (one or more sectors), 2 to 15 MB/sec
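The three components add up to the time for one disk access. The sketch below plugs in illustrative values: the 12 ms seek and 10 MB/sec transfer rate fall inside the ranges above, while the 5400 RPM spindle speed and the 4 KB request size are assumptions made for the example (1 MB is taken as 10^6 bytes).

```python
def disk_access_time_ms(seek_ms, rpm, transfer_bytes, transfer_rate_mb_per_s):
    """Average time for one disk access, in milliseconds."""
    # On average the desired sector is half a rotation away: 0.5 / RPM minutes.
    rotational_ms = 0.5 / rpm * 60_000
    transfer_ms = transfer_bytes / (transfer_rate_mb_per_s * 1_000_000) * 1000
    return seek_ms + rotational_ms + transfer_ms


access_ms = disk_access_time_ms(seek_ms=12.0, rpm=5400,
                                transfer_bytes=4096,
                                transfer_rate_mb_per_s=10.0)
print(f"average access time: {access_ms:.2f} ms")
```

With these values the mechanical delays (seek and rotation) dominate; the transfer itself takes well under a millisecond.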

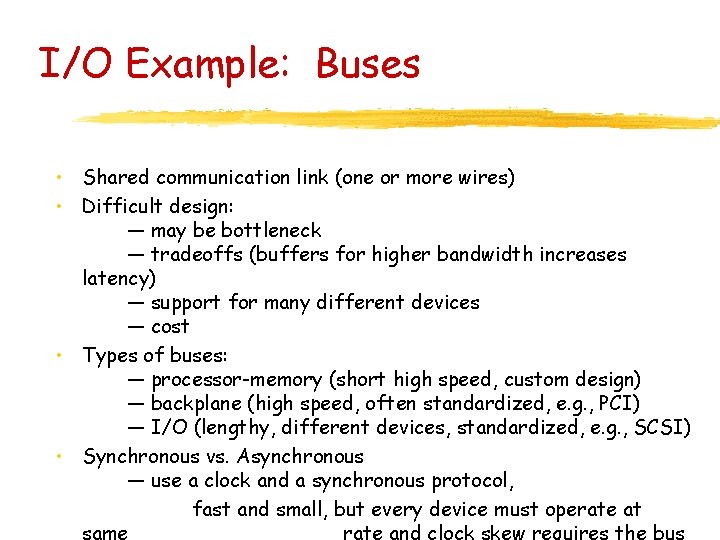

I/O Example: Buses

- Shared communication link (one or more wires)
- Difficult design:
  - may be bottleneck
  - tradeoffs (buffers for higher bandwidth increase latency)
  - support for many different devices
  - cost
- Types of buses:
  - processor-memory (short, high speed, custom design)
  - backplane (high speed, often standardized, e.g., PCI)
  - I/O (lengthy, different devices, standardized, e.g., SCSI)
- Synchronous vs. asynchronous:
  - synchronous: use a clock and a synchronous protocol; fast and small, but every device must operate at the same rate, and clock skew requires the bus to be short
  - asynchronous: do not use a clock; use a handshaking protocol instead

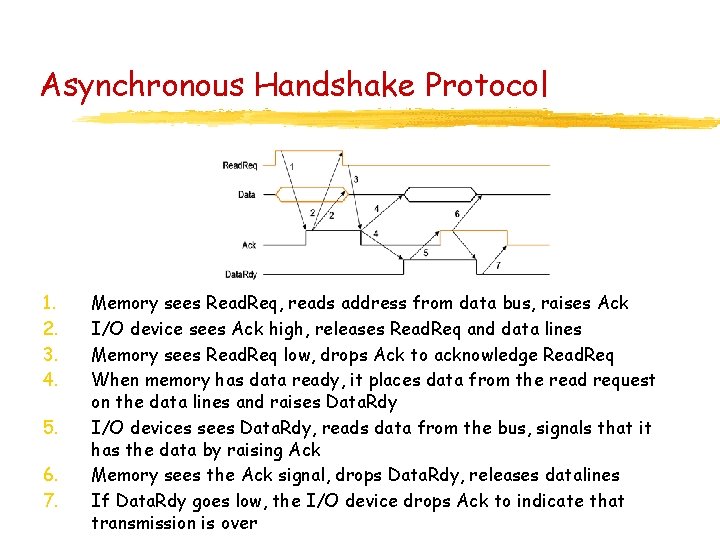

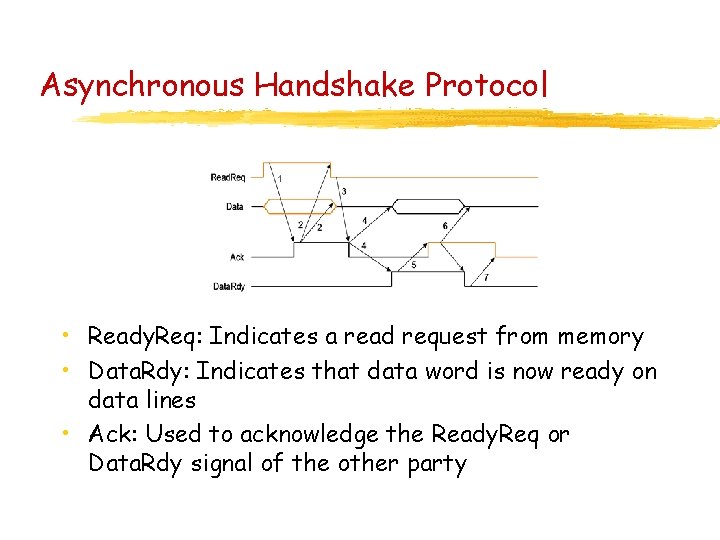

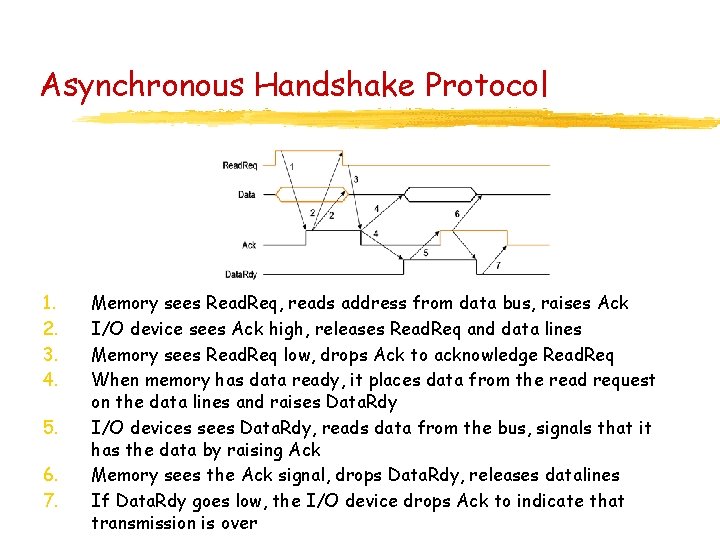

Asynchronous Handshake Protocol

- ReadReq: indicates a read request for memory
- DataRdy: indicates that the data word is now ready on the data lines
- Ack: used to acknowledge the ReadReq or DataRdy signal of the other party

Asynchronous Handshake Protocol

1. Memory sees ReadReq, reads the address from the data bus, and raises Ack
2. I/O device sees Ack high, releases ReadReq and the data lines
3. Memory sees ReadReq low, drops Ack to acknowledge ReadReq
4. When memory has the data ready, it places the data from the read request on the data lines and raises DataRdy
5. I/O device sees DataRdy, reads the data from the bus, and signals that it has the data by raising Ack
6. Memory sees the Ack signal, drops DataRdy, and releases the data lines
7. When DataRdy goes low, the I/O device drops Ack to indicate that the transmission is over

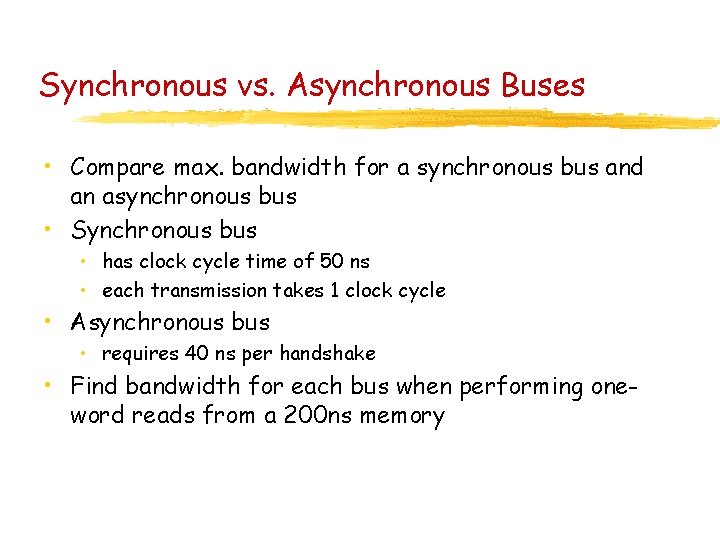

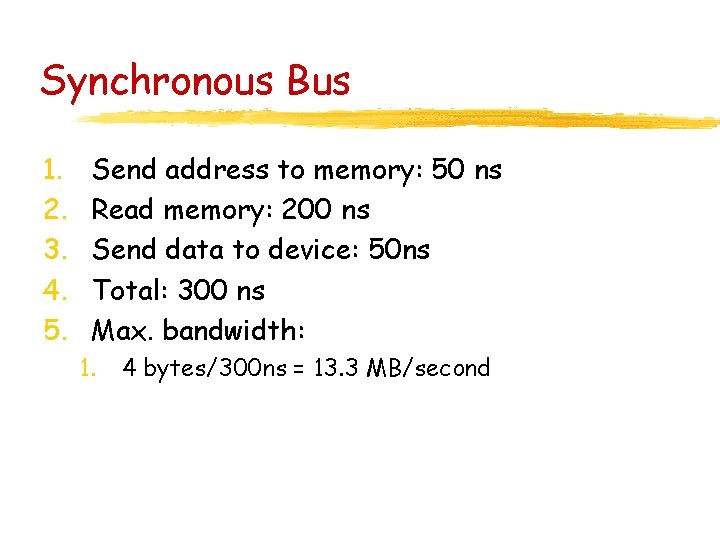

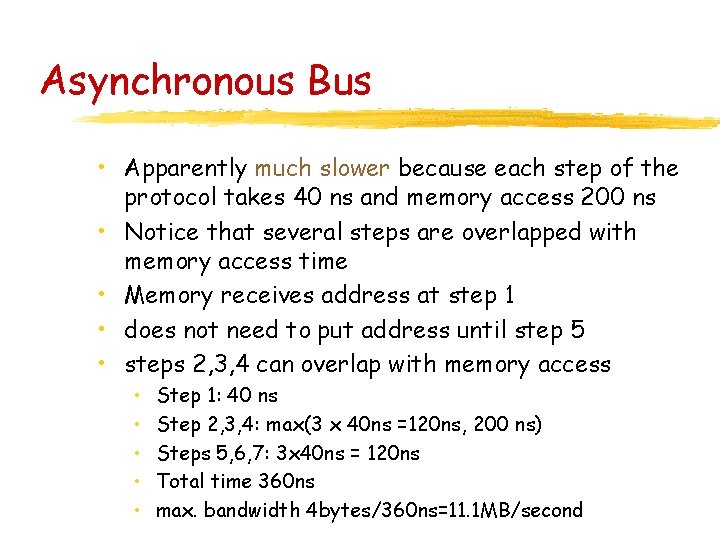

Synchronous vs. Asynchronous Buses

- Compare max. bandwidth for a synchronous bus and an asynchronous bus
- Synchronous bus
  - has clock cycle time of 50 ns
  - each transmission takes 1 clock cycle
- Asynchronous bus
  - requires 40 ns per handshake
- Find bandwidth for each bus when performing one-word reads from a 200 ns memory

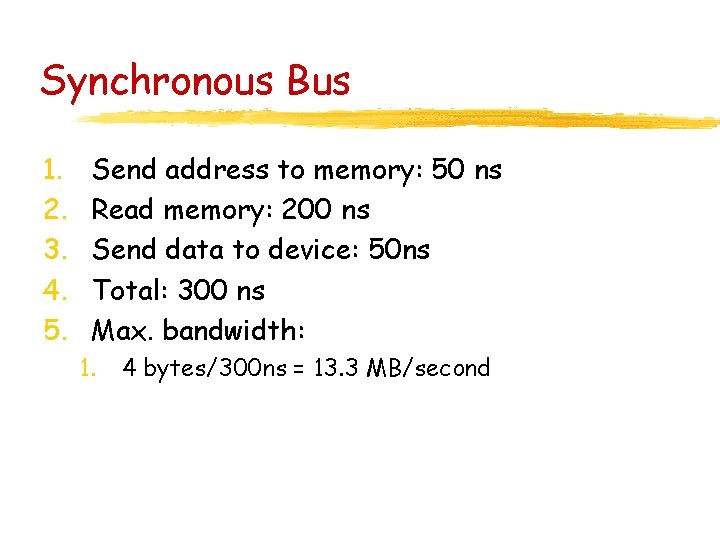

Synchronous Bus

1. Send address to memory: 50 ns
2. Read memory: 200 ns
3. Send data to device: 50 ns
4. Total: 300 ns
5. Max. bandwidth: 4 bytes / 300 ns = 13.3 MB/second

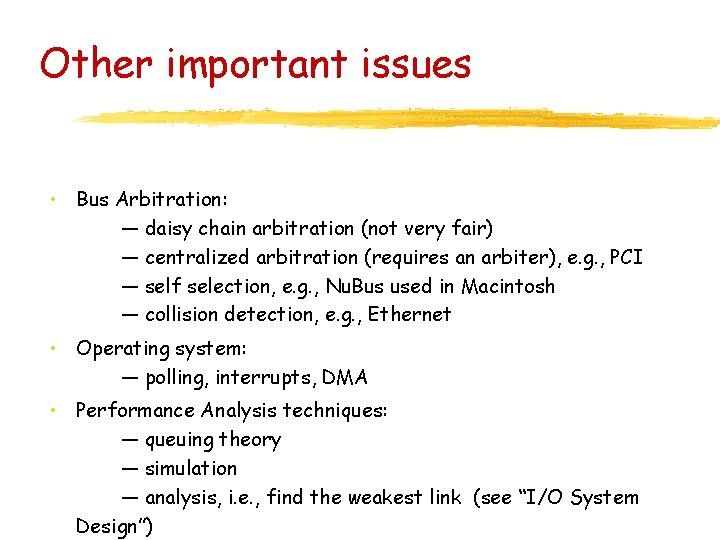

Asynchronous Bus

- Apparently much slower because each step of the protocol takes 40 ns and memory access takes 200 ns
- Notice that several steps are overlapped with memory access time
- Memory receives address at step 1
  - does not need to put the data on the bus until step 5
  - steps 2, 3, 4 can overlap with memory access
- Step 1: 40 ns
- Steps 2, 3, 4: max(3 x 40 ns = 120 ns, 200 ns) = 200 ns
- Steps 5, 6, 7: 3 x 40 ns = 120 ns
- Total time: 360 ns
- Max. bandwidth: 4 bytes / 360 ns = 11.1 MB/second
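Both bandwidth figures can be reproduced with a short calculation. The function names are chosen for this sketch; the timings and the 4-byte word are the values used in the comparison, and 1 MB is taken as 10^6 bytes.

```python
WORD_BYTES = 4
MEMORY_NS = 200


def bandwidth_mb_per_s(num_bytes, time_ns):
    """Bytes per nanosecond converted to MB/second (1 MB = 10^6 bytes)."""
    return num_bytes / time_ns * 1000


def synchronous_read_ns(cycle_ns=50):
    # send address + read memory + send data
    return cycle_ns + MEMORY_NS + cycle_ns


def asynchronous_read_ns(handshake_ns=40):
    step_1 = handshake_ns
    # Steps 2-4 overlap with the memory access, so the longer one dominates.
    steps_2_to_4 = max(3 * handshake_ns, MEMORY_NS)
    steps_5_to_7 = 3 * handshake_ns
    return step_1 + steps_2_to_4 + steps_5_to_7


sync_ns = synchronous_read_ns()
async_ns = asynchronous_read_ns()
sync_bw = bandwidth_mb_per_s(WORD_BYTES, sync_ns)
async_bw = bandwidth_mb_per_s(WORD_BYTES, async_ns)

print(f"synchronous:  {sync_ns} ns per read, {sync_bw:.1f} MB/s")
print(f"asynchronous: {async_ns} ns per read, {async_bw:.1f} MB/s")
```

Without the overlap of steps 2 to 4 with the memory access, the asynchronous read would take 7 x 40 + 200 = 480 ns, so the overlap is what keeps the two buses within about 20% of each other.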

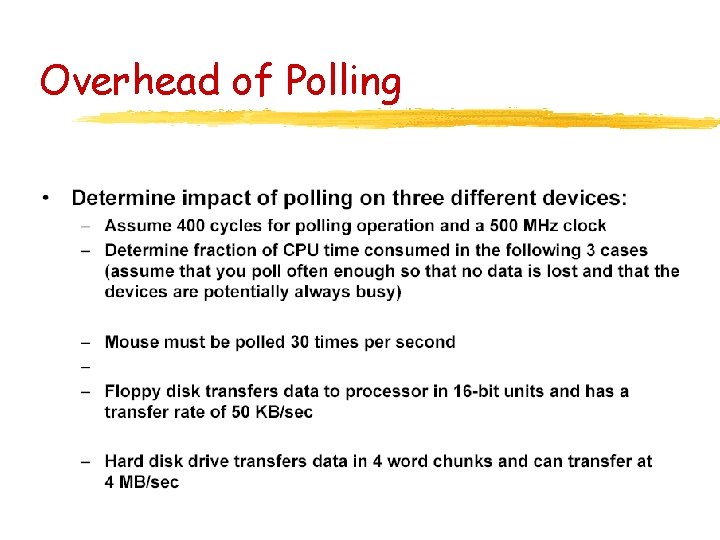

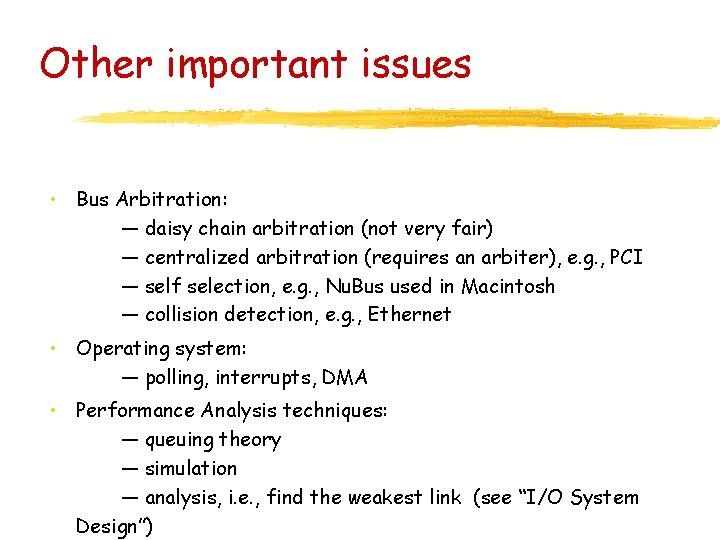

Other important issues

- Bus arbitration:
  - daisy chain arbitration (not very fair)
  - centralized arbitration (requires an arbiter), e.g., PCI
  - self selection, e.g., NuBus used in Macintosh
  - collision detection, e.g., Ethernet
- Operating system:
  - polling, interrupts, DMA
- Performance analysis techniques:
  - queuing theory
  - simulation
  - analysis, i.e., find the weakest link (see “I/O System Design”)

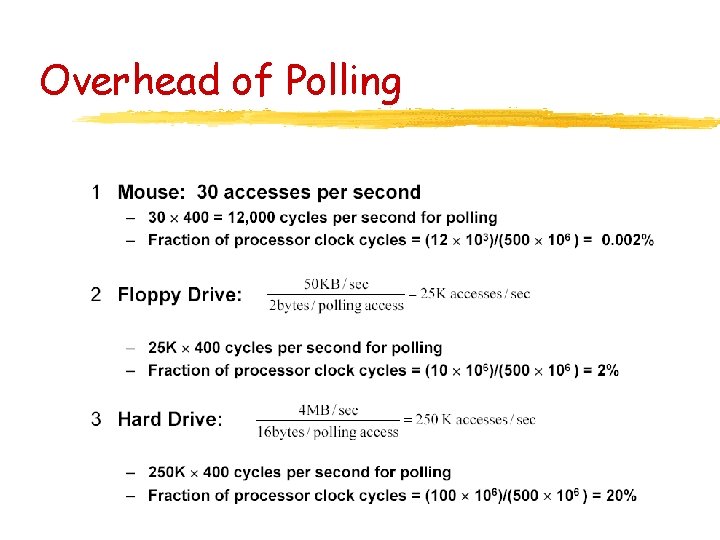

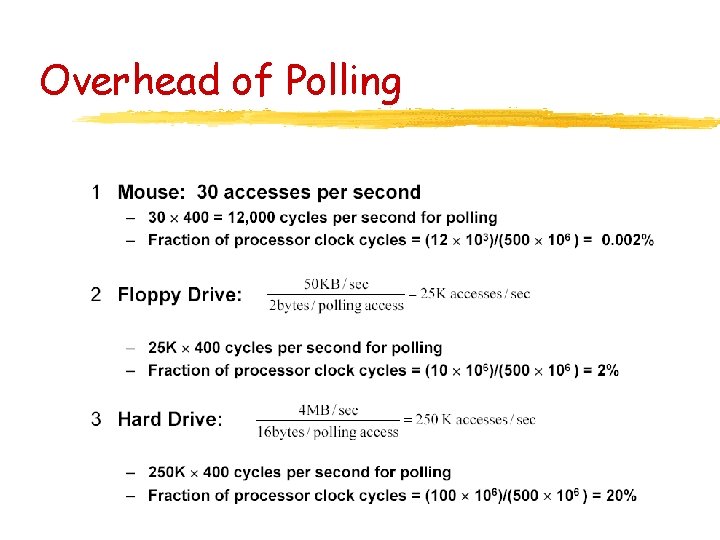

Overhead of Polling


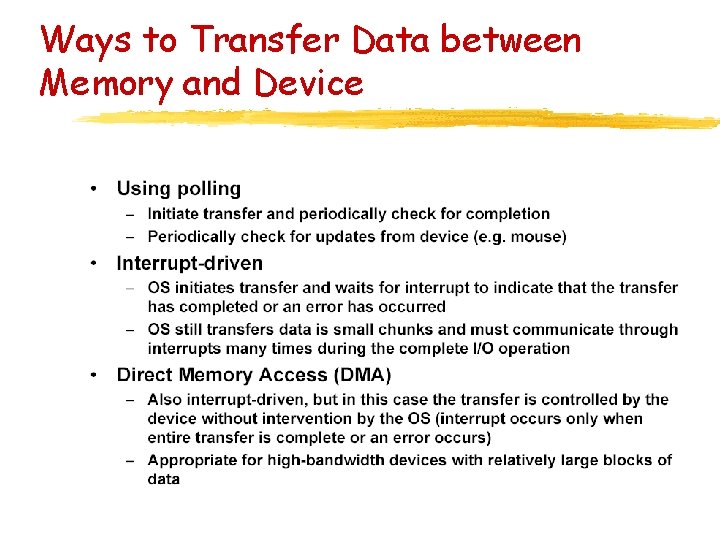

Ways to Transfer Data between Memory and Device

Multiprocessors

Idea

Build powerful computers by connecting many smaller ones.

Multiprocessors

- (+) good for timesharing
- (+) easy to realize
- (-) difficult to write good concurrent programs
- (-) hard to parallelize tasks
- (-) mapping to architecture can be difficult

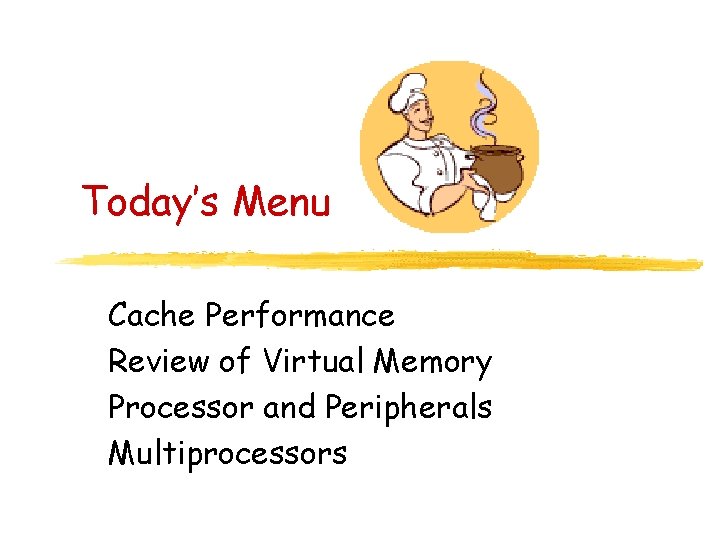

Questions

- How do parallel processors share data?
  - single address space
  - message passing
- How do parallel processors coordinate?
  - synchronization (locks, semaphores)
  - built into send / receive primitives
  - operating system protocols
- How are they implemented?
  - connected by a single bus
  - connected by a network

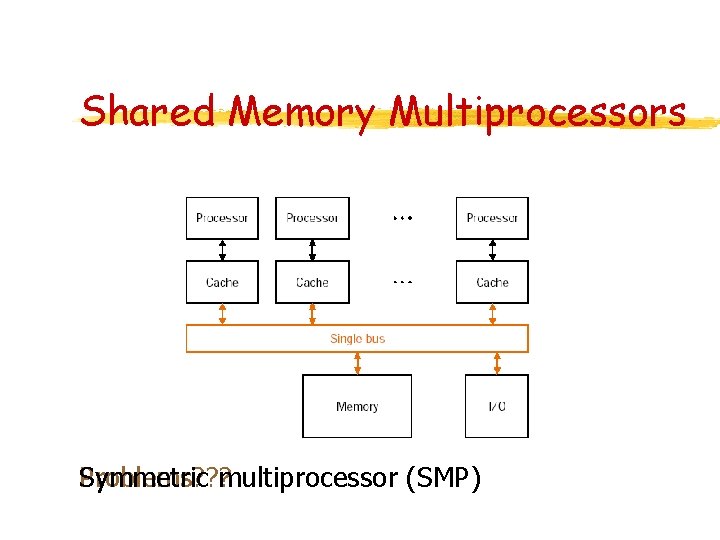

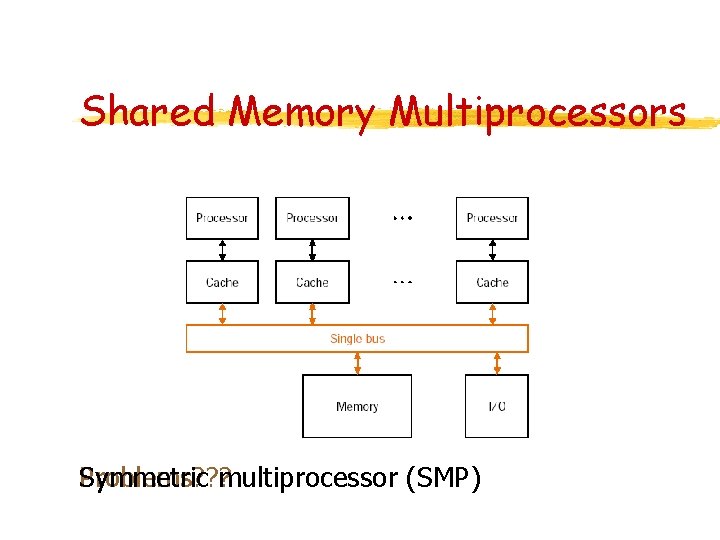

Shared Memory Multiprocessors

- Symmetric multiprocessor (SMP)
- Problems?

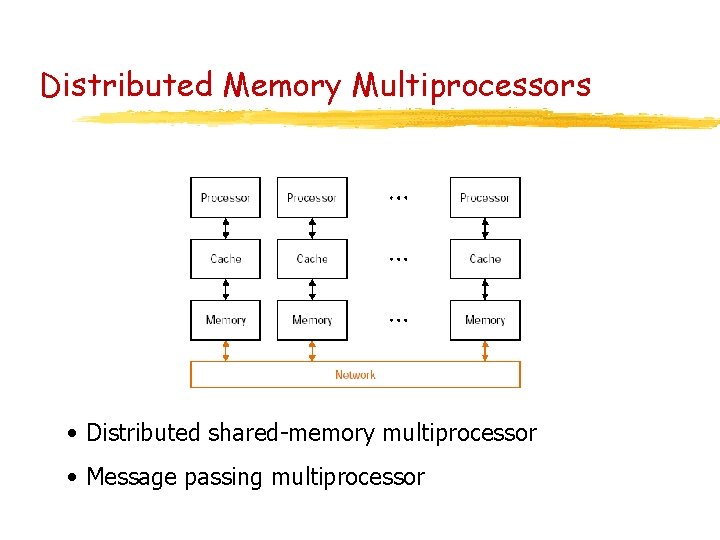

Distributed Memory Multiprocessors

- Distributed shared-memory multiprocessor
- Message passing multiprocessor

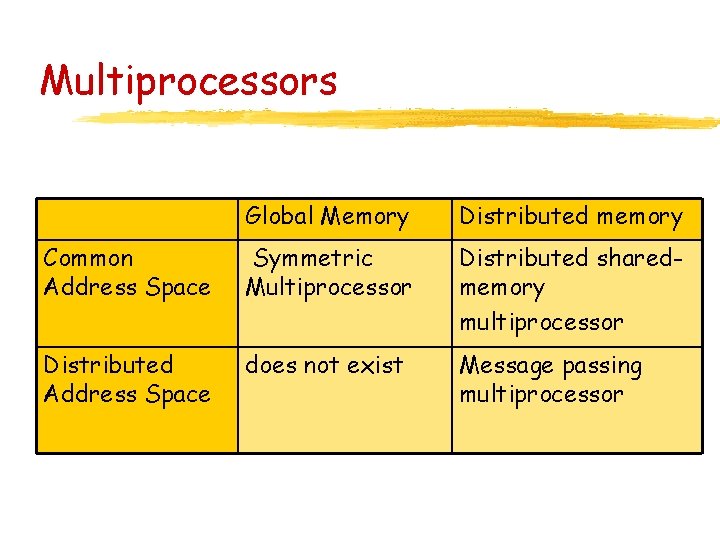

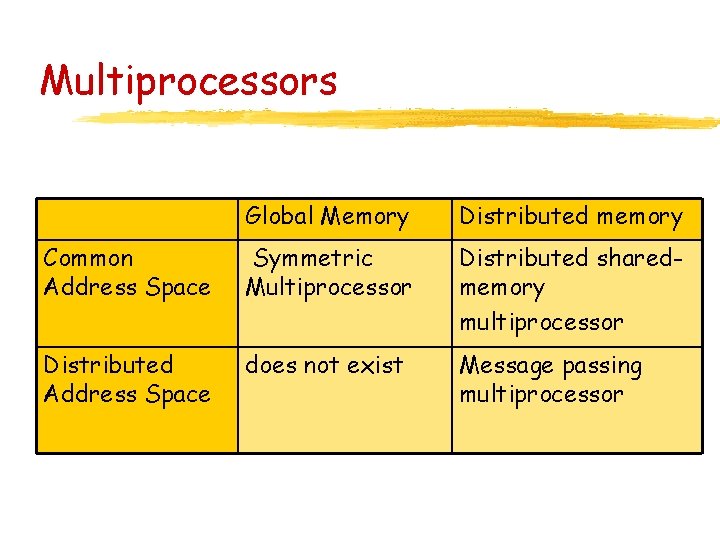

Multiprocessors

| | Global memory | Distributed memory |
| --- | --- | --- |
| Common address space | Symmetric multiprocessor | Distributed shared-memory multiprocessor |
| Distributed address space | does not exist | Message passing multiprocessor |

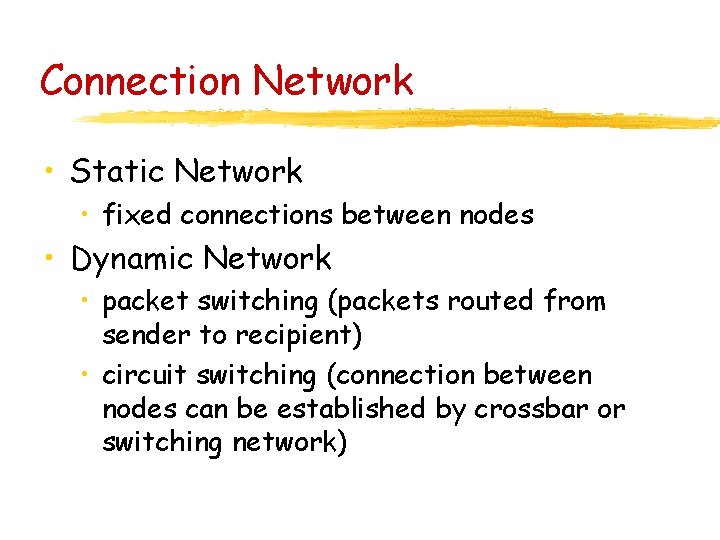

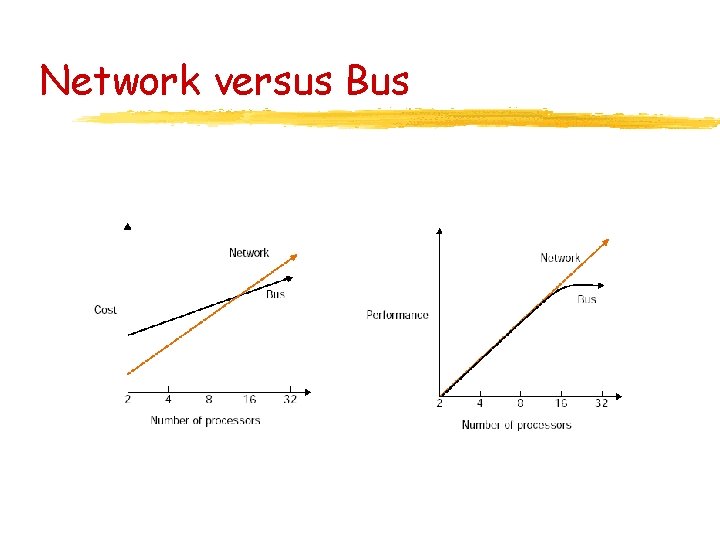

Connection Network

- Static network
  - fixed connections between nodes
- Dynamic network
  - packet switching (packets routed from sender to recipient)
  - circuit switching (connection between nodes can be established by crossbar or switching network)

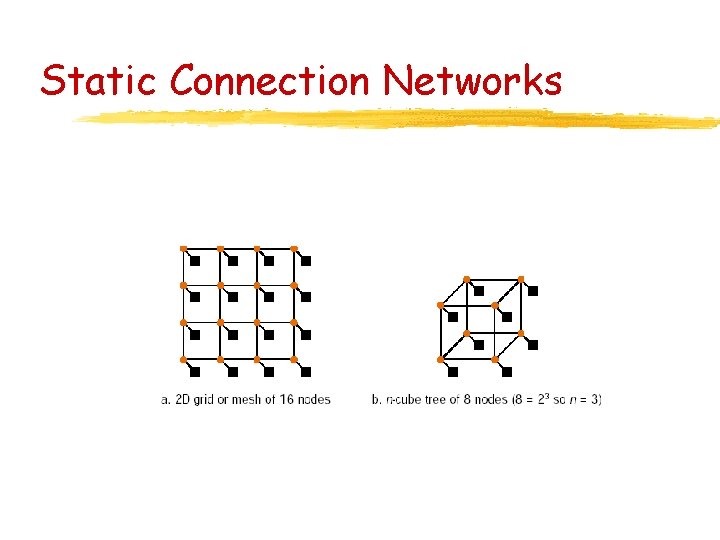

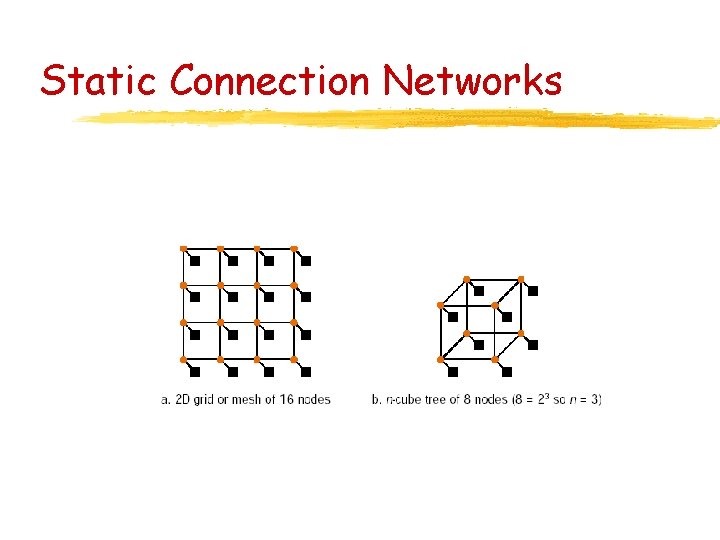

Static Connection Networks

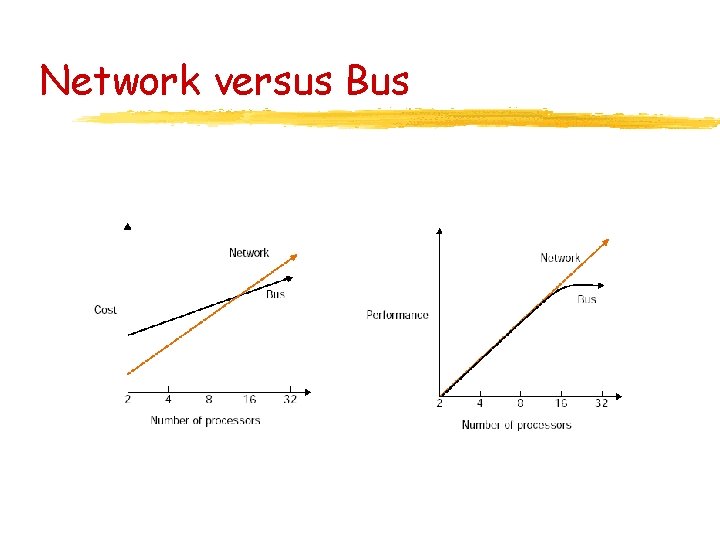

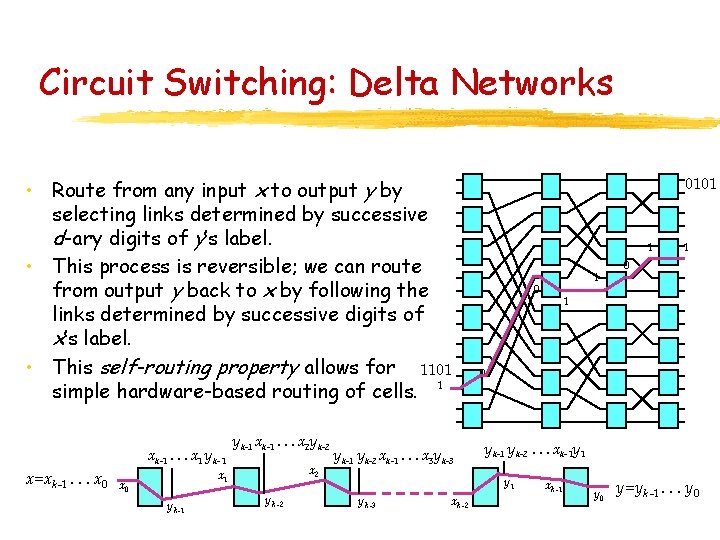

Circuit Switching: Delta Networks

- Route from any input x to output y by selecting links determined by successive d-ary digits of y’s label.
- This process is reversible; we can route from output y back to x by following the links determined by successive digits of x’s label.
- This self-routing property allows for simple hardware-based routing of cells.

[Figure: routing from input x = x_{k-1}...x_0 to output y = y_{k-1}...y_0; at each stage one more digit of x in the label is replaced by a digit of y.]
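Self-routing can be illustrated with one concrete delta network, the omega (shuffle-exchange) network. The choice of that topology, the function names, and the sample labels are assumptions for this sketch; the slides describe delta networks in general. At each stage the label is shifted left by one d-ary digit (the shuffle) and the switch output is chosen by the next digit of y, most significant first.

```python
def to_digits(value, base, num_digits):
    """Return the d-ary digits of value, most significant digit first."""
    digits = []
    for _ in range(num_digits):
        digits.append(value % base)
        value //= base
    return digits[::-1]


def route(x, y, d, k):
    """Labels visited when routing input x to output y through k stages."""
    n = d ** k                       # number of inputs and outputs
    position = x
    path = [position]
    for digit in to_digits(y, d, k):
        # Shuffle drops the top digit of the label; the switch setting
        # appends the next digit of the destination.
        position = (position * d) % n + digit
        path.append(position)
    return path


# Example: binary switches (d = 2), 4 stages, 16 inputs and outputs.
path = route(0b1101, 0b0101, d=2, k=4)
```

After k stages every digit of x has been shifted out and replaced by a digit of y, so each switch needs to inspect only one digit of the destination label and no global routing table is required.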

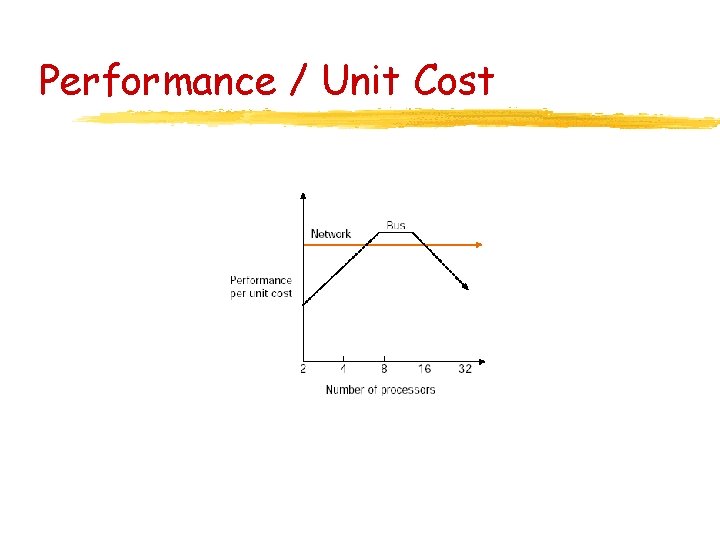

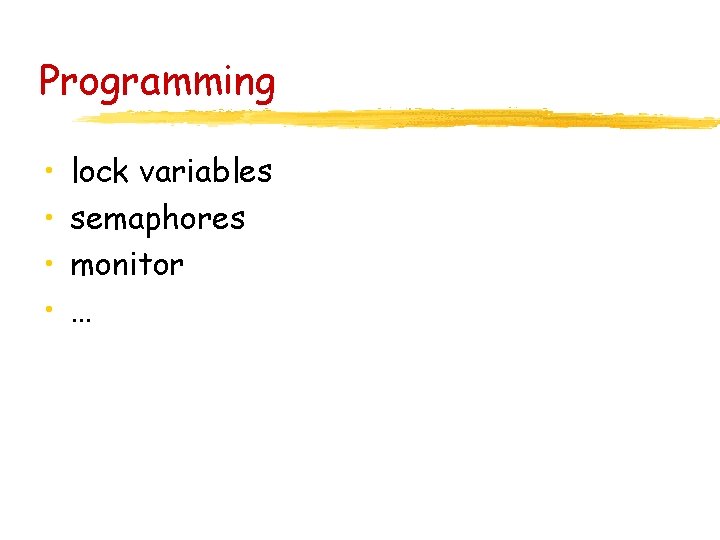

Network versus Bus

Performance / Unit Cost

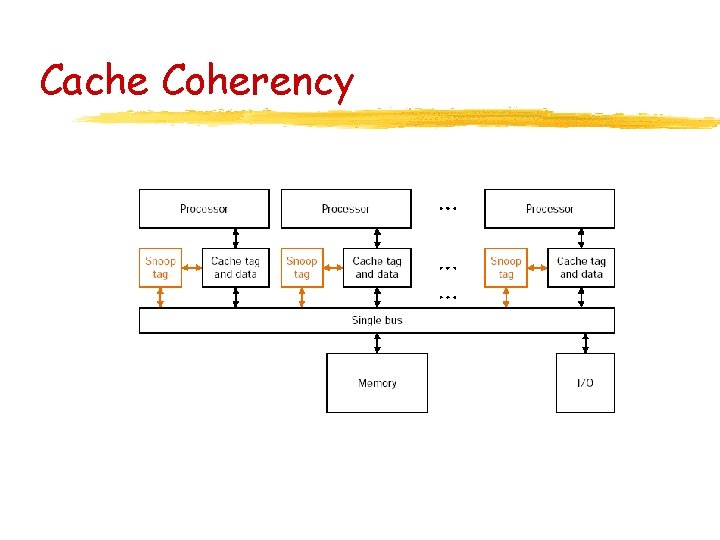

Programming

- lock variables
- semaphores
- monitor
- …
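As a small illustration of the first item, a lock variable can protect a shared counter that several threads update. This sketch uses Python's standard `threading.Lock`; the function name and the thread and iteration counts are arbitrary choices for the example.

```python
import threading


def run_counter(num_threads=4, increments=1000):
    """Increment a shared counter from several threads under a lock."""
    counter = 0
    lock = threading.Lock()

    def worker():
        nonlocal counter
        for _ in range(increments):
            # Only one thread at a time may execute the read-modify-write.
            with lock:
                counter += 1

    threads = [threading.Thread(target=worker) for _ in range(num_threads)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return counter


total = run_counter(num_threads=4, increments=1000)
```

The lock makes each increment atomic with respect to the other threads, so no update is lost regardless of how the threads are interleaved.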

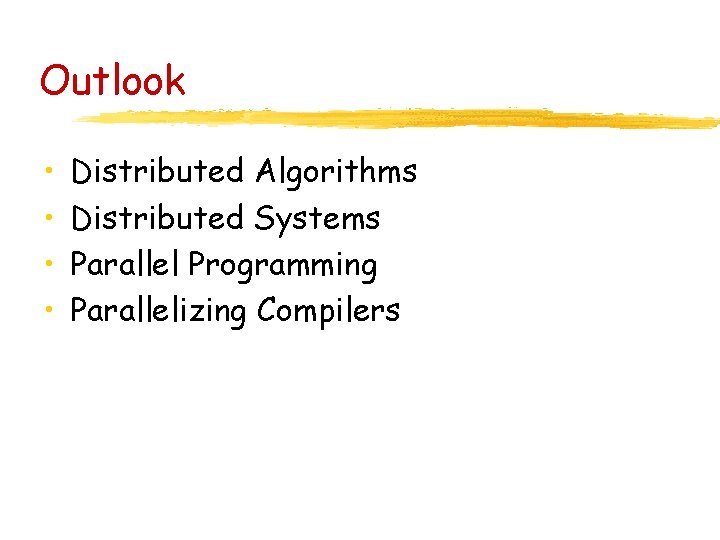

Cache Coherency

Outlook

- Distributed Algorithms
- Distributed Systems
- Parallel Programming
- Parallelizing Compilers