

Cache Memory: An Introduction
Creative Commons License – Curt Hill

What is it?
• Transparent mechanism for speeding up memory or disk accesses
• Two kinds:
  – Memory cache
    • Designed with the CPU board
    • Designed to speed up the CPU
  – File cache
    • Built into the file system
• This presentation is mainly about the memory cache

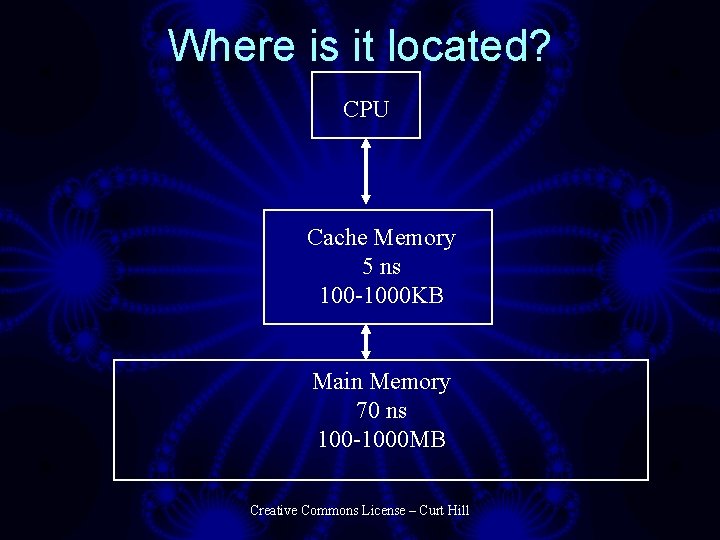

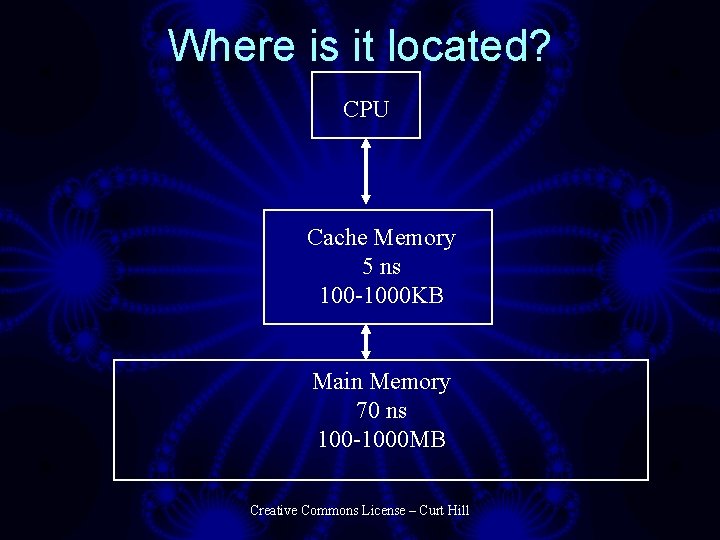

Where is it located?
• Between the CPU and main memory: CPU, then cache memory, then main memory
• Cache memory: about 5 ns access time, 100-1000 KB
• Main memory: about 70 ns access time, 100-1000 MB

Basic Operation
• Every time the CPU issues a fetch or store instruction to memory:
  – The request is intercepted by the cache
  – If the item is present, the cache satisfies the request and tells the CPU to continue
    • No memory request
    • Very fast
  – If not, the cache asks memory for it
    • Keeps a copy in the cache
    • Much slower
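A rough worked model of what the cache buys, using the 5 ns and 70 ns figures from the previous slide. The hit ratio is a free parameter, and the cost model (a miss pays for the cache check and then a full memory access) is our assumption rather than something the slides state; `effective_access_ns` is a hypothetical helper.

```python
CACHE_NS = 5    # cache access time, from the "Where is it located?" slide
MEMORY_NS = 70  # main memory access time, from the same slide


def effective_access_ns(hit_ratio: float) -> float:
    """Average access time, assuming a miss costs a cache check plus a memory access."""
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    miss_ratio = 1.0 - hit_ratio
    return hit_ratio * CACHE_NS + miss_ratio * (CACHE_NS + MEMORY_NS)


for h in (0.80, 0.95, 0.99):
    print(f"hit ratio {h:.2f}: {effective_access_ns(h):.1f} ns")
```

With these numbers the loop prints 19.0, 8.5 and 5.7 ns: even a 95% hit ratio puts the average much closer to the cache's speed than to main memory's.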

Cache Manipulation
• Cache is usually transparent to the programmer
• When the running task changes, the system usually flushes the cache
  – Cache is usually at the hardware level, below virtual memory
  – All changed cache memory is sent back to main memory
  – Entries that were only read are simply discarded

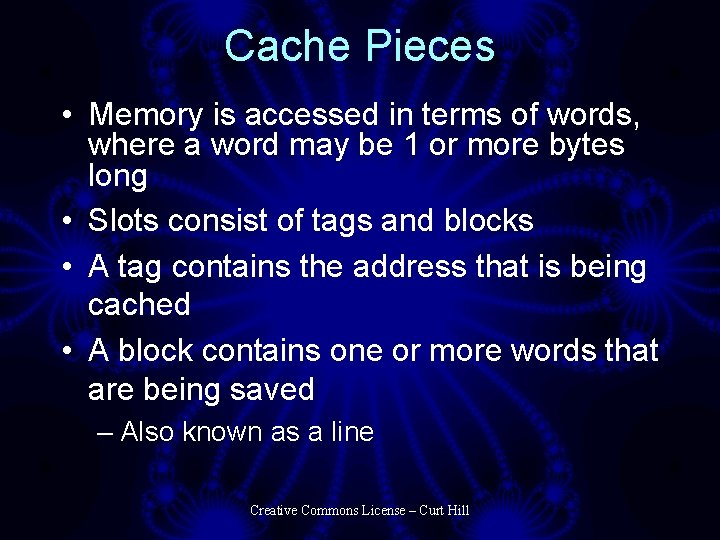

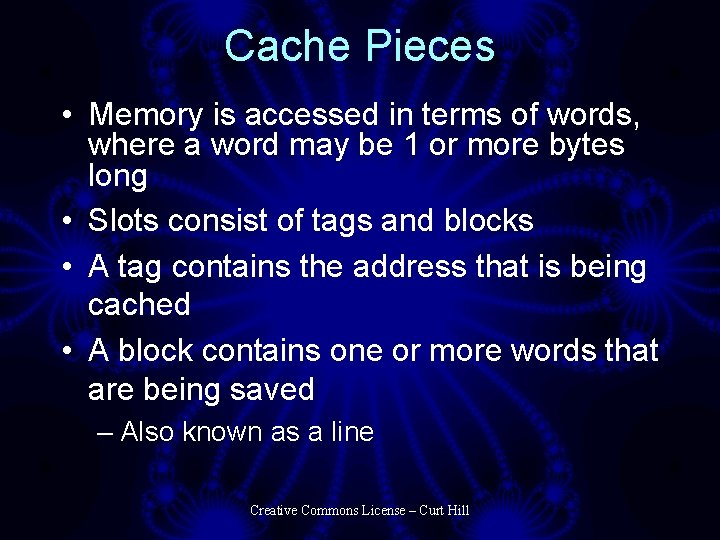

Cache Pieces
• Memory is accessed in terms of words, where a word may be one or more bytes long
• Slots consist of tags and blocks
• A tag contains the address that is being cached
• A block contains one or more words that are being saved
  – Also known as a line

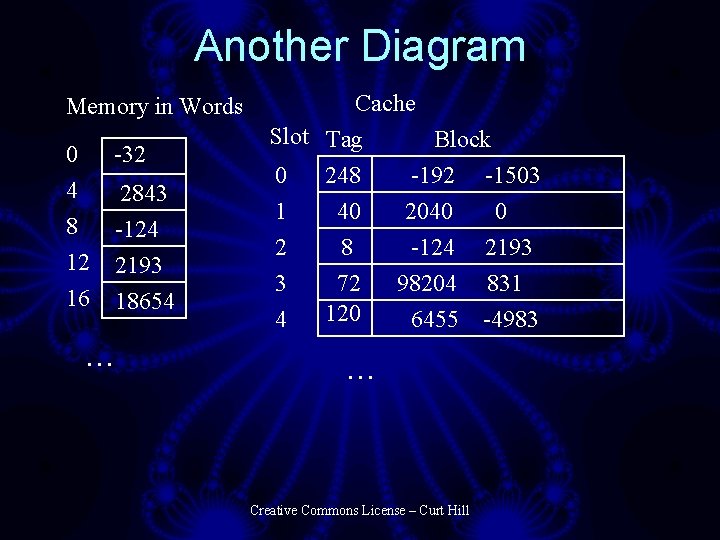

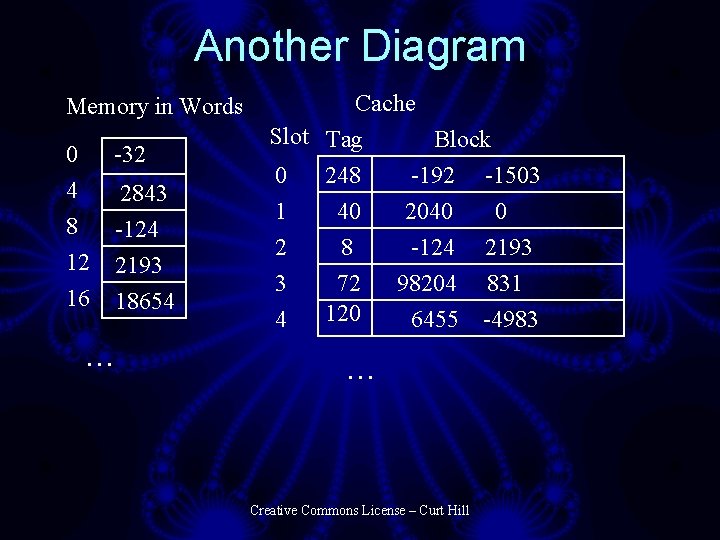

Another Diagram
Memory in words (address: contents):
• 0: -32
• 4: 2843
• 8: -124
• 12: 2193
• 16: 18654
• …
Cache:
• Slot 0: tag 248, block -192, -1503
• Slot 1: tag 40, block 2040, 0
• Slot 2: tag 8, block -124, 2193
• Slot 3: tag 72, block 98204, 831
• Slot 4: tag 120, block 6455, -4983
• …
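The diagram can be read as byte addresses with 4-byte words and two-word blocks; those sizes are our interpretation, since the slide does not state them. Under that reading a slot's tag is the address of the first word in its block, as in slot 2 (tag 8, holding the words at addresses 8 and 12). A small sketch of the arithmetic, with hypothetical names `block_tag`, `word_index` and `read_word`:

```python
from typing import Dict, List, Optional

WORD_BYTES = 4        # assumed: memory addresses step by one 4-byte word
WORDS_PER_BLOCK = 2   # assumed: each block in the diagram holds two words
BLOCK_BYTES = WORD_BYTES * WORDS_PER_BLOCK


def block_tag(address: int) -> int:
    """Address of the first word of the block that contains `address`."""
    return address - address % BLOCK_BYTES


def word_index(address: int) -> int:
    """Position of the word inside its block (0 or 1 here)."""
    return (address % BLOCK_BYTES) // WORD_BYTES


# Slot 2 of the diagram: tag 8, block holding the words at addresses 8 and 12.
cache: Dict[int, List[int]] = {8: [-124, 2193]}


def read_word(address: int) -> Optional[int]:
    """Return the cached word, or None on a miss."""
    block = cache.get(block_tag(address))
    return None if block is None else block[word_index(address)]


print(read_word(12))  # 2193, matching main memory at address 12
```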

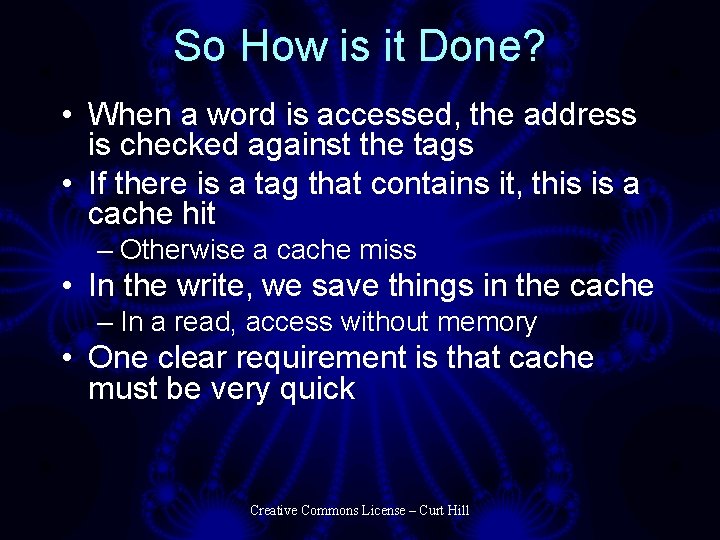

So How is it Done?
• When a word is accessed, its address is checked against the tags
• If a tag matches, this is a cache hit
  – Otherwise it is a cache miss
• On a write hit, the new value is saved in the cache
  – On a read hit, the value is returned without accessing memory
• One clear requirement is that the cache must be very quick

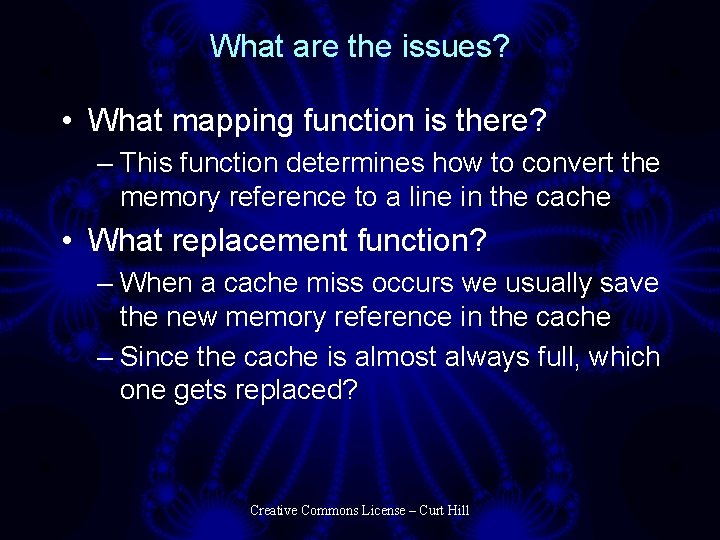

What are the issues?
• What mapping function?
  – This function determines how a memory reference is converted to a line in the cache
• What replacement algorithm?
  – When a cache miss occurs we usually save the new memory reference in the cache
  – Since the cache is almost always full, which entry gets replaced?

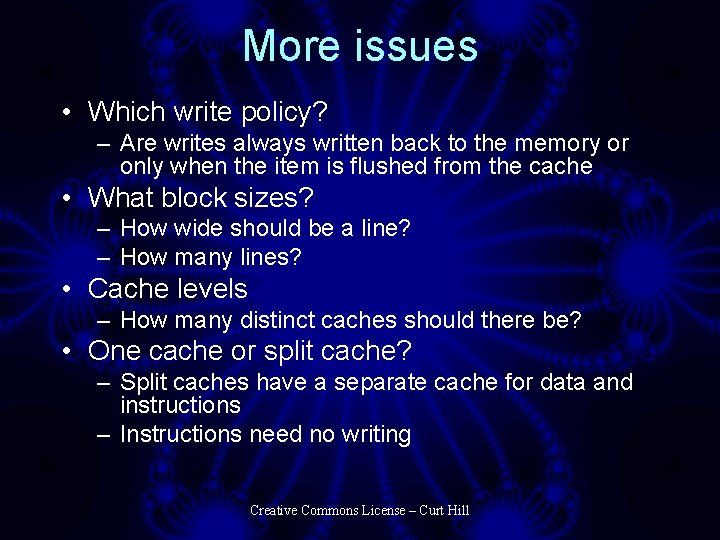

More issues
• Which write policy?
  – Are writes always written back to memory, or only when the item is flushed from the cache?
• What block size?
  – How wide should a line be?
  – How many lines?
• Cache levels
  – How many distinct caches should there be?
• One cache or split cache?
  – A split cache has separate caches for data and instructions
  – Instructions need no writing

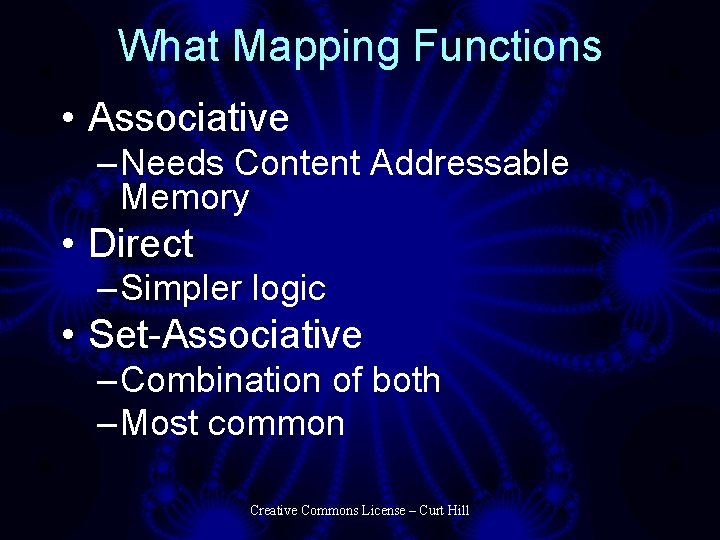

What Mapping Functions?
• Associative
  – Needs content addressable memory
• Direct
  – Simpler logic
• Set-associative
  – Combination of both
  – Most common

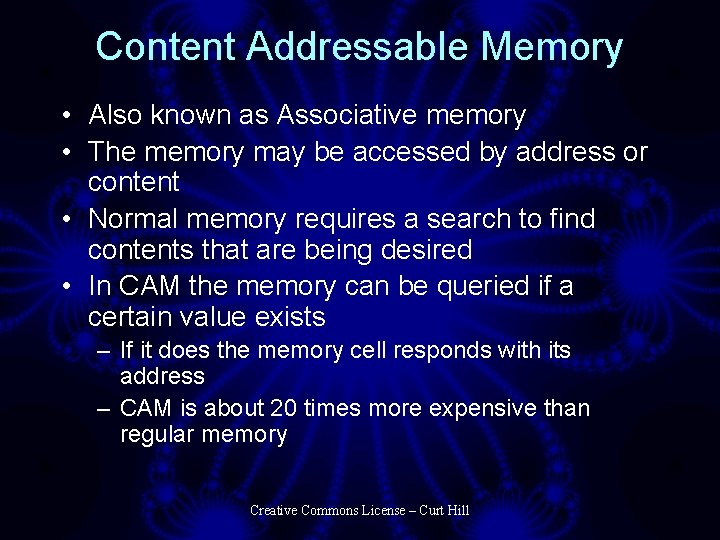

Content Addressable Memory
• Also known as associative memory
• The memory may be accessed by address or by content
• Normal memory requires a search to find the desired contents
• A CAM can be queried as to whether a certain value exists
  – If it does, the memory cell responds with its address
  – CAM is about 20 times more expensive than regular memory
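As a functional model only, a CAM query is a search by value that returns the matching addresses. Real CAM compares every cell in parallel in a single step, whereas this Python loop (the hypothetical `cam_search`) checks the cells one at a time:

```python
from typing import List


def cam_search(cells: List[int], value: int) -> List[int]:
    """Return the address of every cell whose content equals `value`.

    Hardware CAM performs all comparisons at once; this loop is sequential.
    """
    return [address for address, content in enumerate(cells) if content == value]


cells = [7, 42, 13, 42]
print(cam_search(cells, 42))  # [1, 3]
print(cam_search(cells, 99))  # []
```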

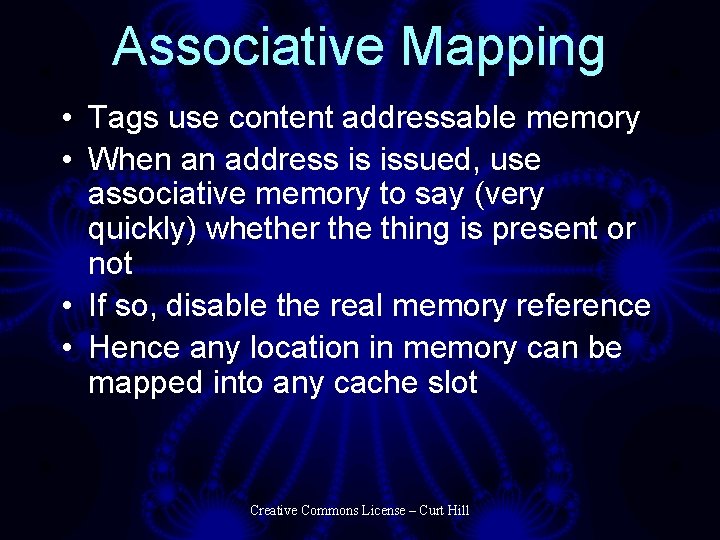

Associative Mapping
• Tags use content addressable memory
• When an address is issued, the associative memory reports (very quickly) whether the item is present
• If so, the real memory reference is disabled
• Hence any location in memory can be mapped into any cache slot

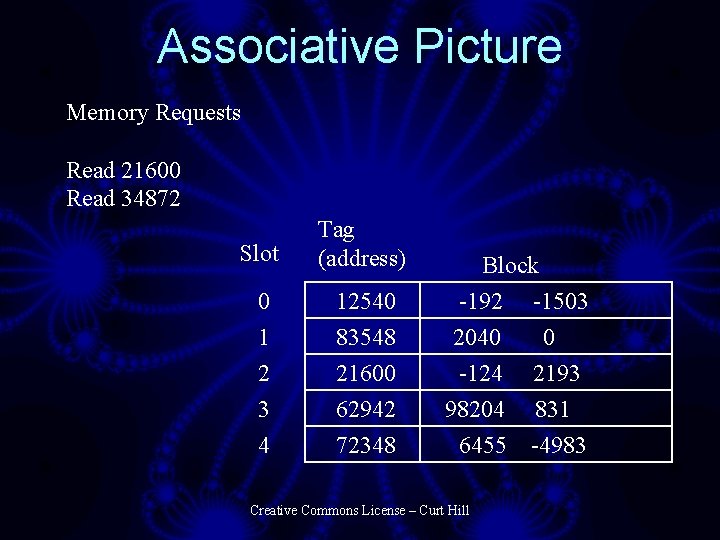

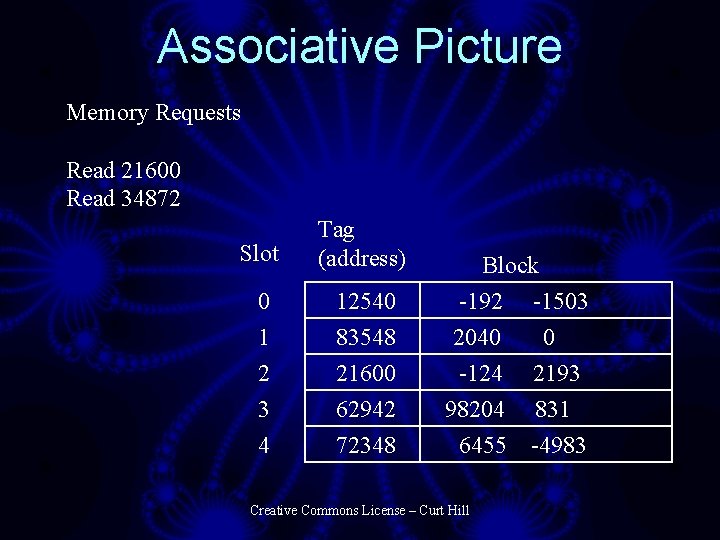

Associative Picture
Memory requests: Read 21600, Read 34872
• Slot 0: tag (address) 12540, block -192, -1503
• Slot 1: tag 83548, block 2040, 0
• Slot 2: tag 21600, block -124, 2193
• Slot 3: tag 62942, block 98204, 831
• Slot 4: tag 72348, block 6455, -4983
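A minimal sketch of a fully associative lookup, loaded with the slots from the picture. The requested address is compared with every tag, so Read 21600 hits slot 2 while Read 34872 matches no tag and misses. Which slot receives the new block is a replacement decision covered later; here a round-robin pointer stands in for it, and `AssociativeCache` and `fake_memory` are hypothetical names.

```python
from typing import Callable, List, Optional, Tuple


class AssociativeCache:
    """Fully associative cache: any memory block may occupy any slot."""

    def __init__(self, tags: List[Optional[int]], blocks: List[list]) -> None:
        self.tags = tags
        self.blocks = blocks
        self.next_victim = 0  # hypothetical round-robin victim choice

    def read(self, address: int, memory: Callable[[int], list]) -> Tuple[bool, list]:
        # Compare the address with every tag; CAM hardware does this in parallel.
        for slot, tag in enumerate(self.tags):
            if tag == address:
                return True, self.blocks[slot]
        # Miss: fetch the block from memory; it may be placed in any slot.
        block = memory(address)
        slot = self.next_victim
        self.tags[slot], self.blocks[slot] = address, block
        self.next_victim = (slot + 1) % len(self.tags)
        return False, block


def fake_memory(address: int) -> list:
    """Hypothetical stand-in for main memory."""
    return [0, 0]


cache = AssociativeCache(
    tags=[12540, 83548, 21600, 62942, 72348],
    blocks=[[-192, -1503], [2040, 0], [-124, 2193], [98204, 831], [6455, -4983]],
)
print(cache.read(21600, fake_memory))  # (True, [-124, 2193])
print(cache.read(34872, fake_memory))  # (False, [0, 0])
```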

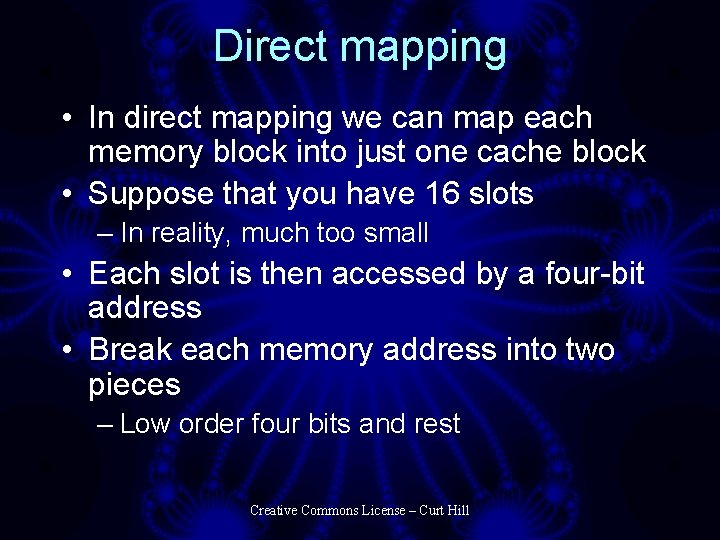

Direct mapping
• In direct mapping each memory block maps into exactly one cache slot
• Suppose that there are 16 slots
  – In reality, much too small
• Each slot is then selected by a four-bit address
• Break each memory address into two pieces
  – The low-order four bits and the rest

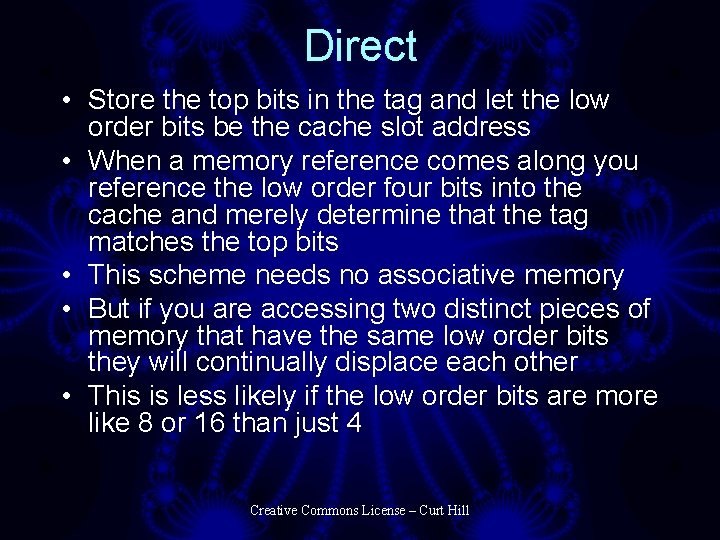

Direct
• Store the high-order bits in the tag and use the low-order bits as the cache slot address
• When a memory reference comes along, use its low-order four bits to select a slot and check whether that slot's tag matches the high-order bits
• This scheme needs no associative memory
• But if two distinct pieces of memory that share the same low-order bits are accessed alternately, they will continually displace each other
• This is less likely if the slot address uses more bits, say 8 or 16, rather than just 4
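A small simulation of the 16-slot example, assuming each address is already a block address (no byte offset within the block); `DirectMappedCache` is a hypothetical name. The low four bits select the slot and the remaining bits are stored as the tag. The demo shows two blocks with the same low-order bits displacing each other on every access:

```python
from typing import List, Optional

INDEX_BITS = 4                 # 16 slots, as in the slide's example
NUM_SLOTS = 1 << INDEX_BITS


class DirectMappedCache:
    """Each block address maps to exactly one slot: its low-order INDEX_BITS."""

    def __init__(self) -> None:
        self.tags: List[Optional[int]] = [None] * NUM_SLOTS
        self.hits = 0
        self.misses = 0

    def access(self, address: int) -> bool:
        slot = address & (NUM_SLOTS - 1)   # low-order four bits pick the slot
        tag = address >> INDEX_BITS        # remaining high-order bits form the tag
        if self.tags[slot] == tag:
            self.hits += 1
            return True
        self.tags[slot] = tag              # miss: the old occupant is displaced
        self.misses += 1
        return False


cache = DirectMappedCache()
for address in [0x10, 0x20] * 4:           # same low bits (0), different tags
    cache.access(address)
print(cache.hits, cache.misses)            # 0 8: the two blocks keep evicting each other
```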

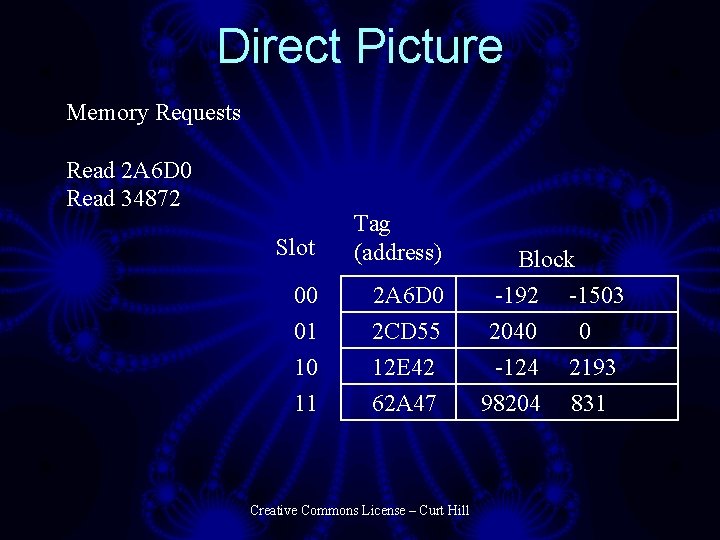

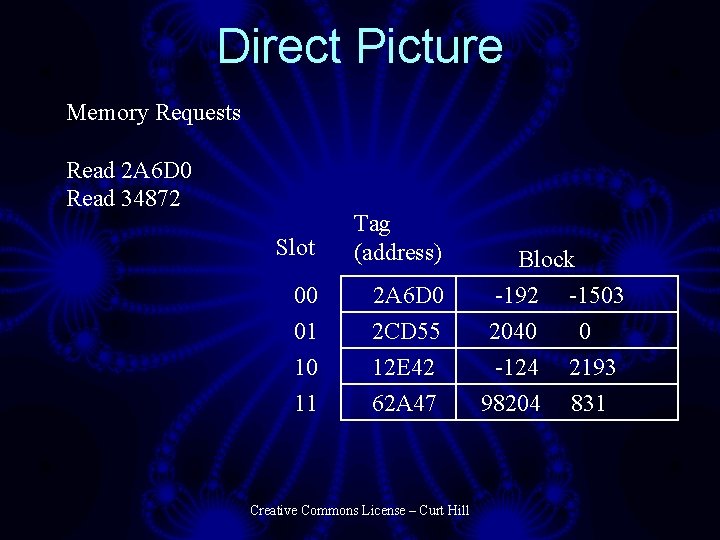

Direct Picture
Memory requests: Read 2A6D0, Read 34872
• Slot 00: tag (address) 2A6D0, block -192, -1503
• Slot 01: tag 2CD55, block 2040, 0
• Slot 10: tag 12E42, block -124, 2193
• Slot 11: tag 62A47, block 98204, 831

Set associative
• Combination of associative and direct
• The problem with associative is that associative memory is very expensive and a lot of it is needed
• The problem with direct is that it is not uncommon for frequently used addresses to share the same last n bits, so they flush each other out
• The hybrid approach works because the likelihood of, say, three frequently used references sharing the same set is much less than that of two

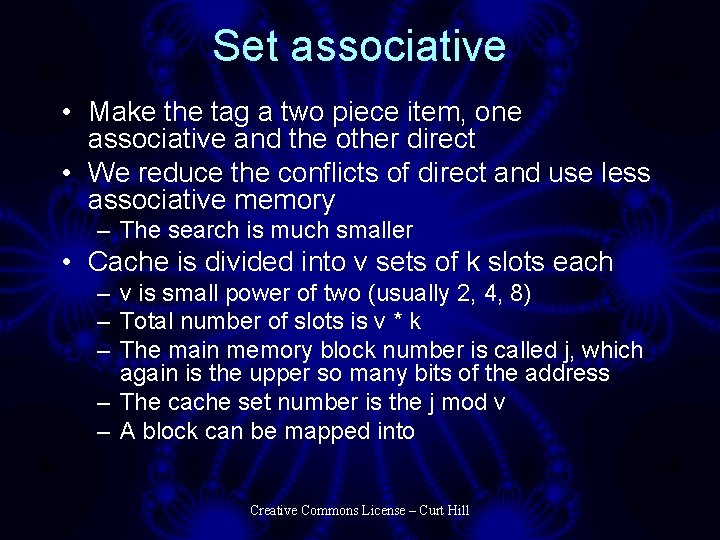

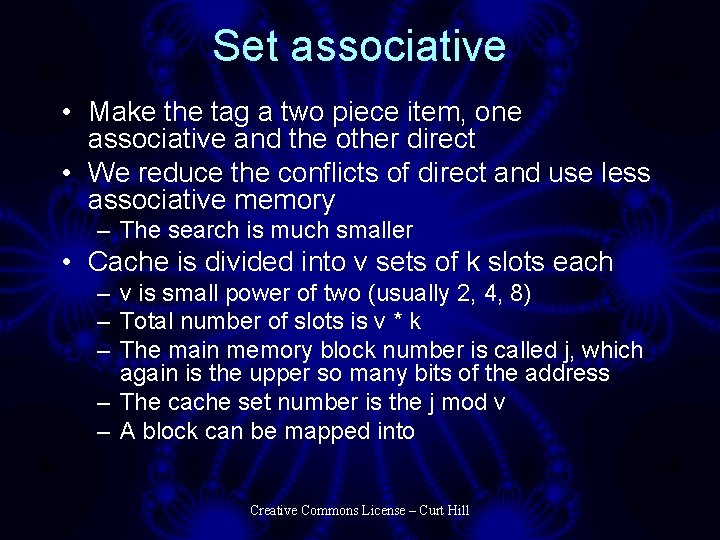

Set associative
• Make the tag a two-piece item, one part associative and the other direct
• This reduces the conflicts of direct mapping and uses less associative memory
  – The search is much smaller
• The cache is divided into v sets of k slots each (a k-way set associative cache)
  – k is a small power of two (usually 2, 4, 8)
  – The total number of slots is v * k
  – The main memory block number is called j, which again is the upper bits of the address
  – The cache set number is j mod v
  – A block can be mapped into any of the k slots of set j mod v
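A sketch of the v-set, k-slot organization. Block j goes to set j mod v and the quotient j // v serves as the tag, so only the k tags of one set are searched. Using least recently used order inside each set is our choice here (replacement is discussed later), and `SetAssociativeCache` is a hypothetical name. With v = 8 and k = 2 (16 slots), blocks 0x10 and 0x20, which collide in a 16-slot direct-mapped cache, can live side by side:

```python
from collections import OrderedDict
from typing import List


class SetAssociativeCache:
    """v sets of k slots: block j may occupy any of the k slots of set j mod v."""

    def __init__(self, v: int, k: int) -> None:
        self.v, self.k = v, k
        # One ordered map per set (tag -> None), least recently used first.
        self.sets: List[OrderedDict] = [OrderedDict() for _ in range(v)]
        self.hits = 0
        self.misses = 0

    def access(self, j: int) -> bool:
        s = self.sets[j % self.v]   # direct part: the block number picks the set
        tag = j // self.v           # associative part: search only this set's tags
        if tag in s:
            s.move_to_end(tag)      # mark as most recently used
            self.hits += 1
            return True
        if len(s) == self.k:
            s.popitem(last=False)   # set is full: evict its least recently used slot
        s[tag] = None
        self.misses += 1
        return False


cache = SetAssociativeCache(v=8, k=2)   # 16 slots in total
for j in [0x10, 0x20] * 4:              # both blocks fall in set 0
    cache.access(j)
print(cache.hits, cache.misses)         # 6 2
```

Three busy blocks in the same set would still thrash a two-way cache, which is the trade-off the previous slide describes.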

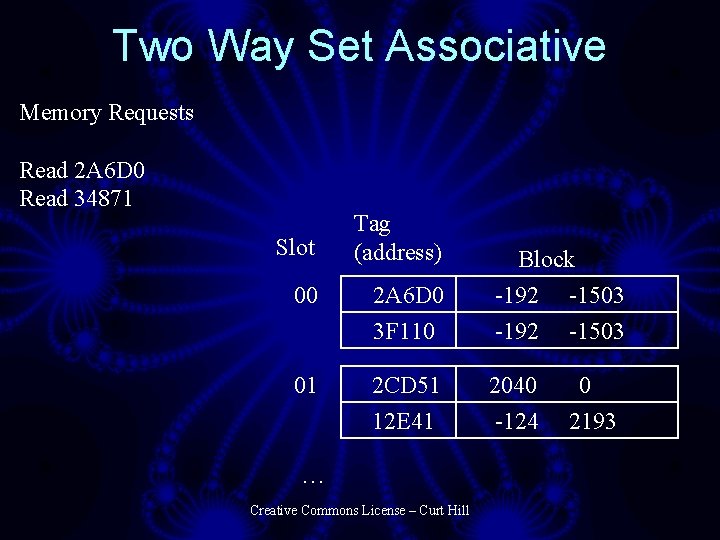

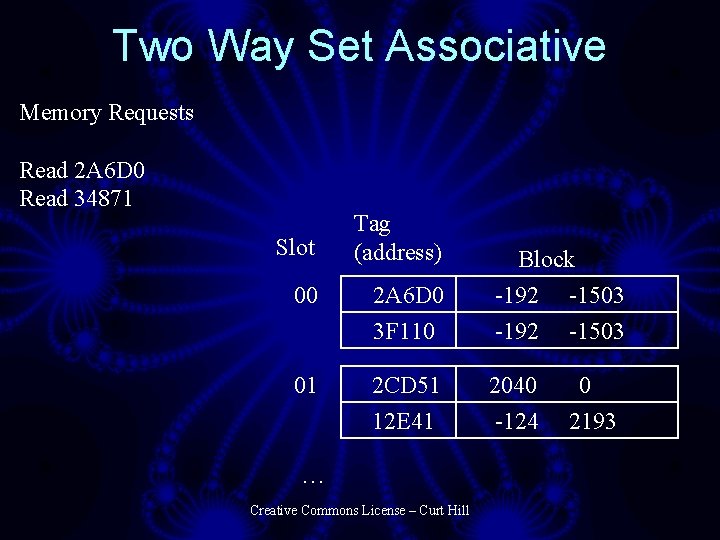

Two Way Set Associative
Memory requests: Read 2A6D0, Read 34871
• Set 00: tags (addresses) 2A6D0 and 3F110; block contents -192, -1503
• Set 01: tags 2CD51 and 12E41; block contents 2040, -124, …, 0, 2193

Mapping Summary
• Two-way or four-way set associative mapping seems to be the most popular today
• It is a nice compromise:
  – Most of the ease of implementation of direct mapping
    • Associative searches are very small, so little content addressable memory is needed
  – The good hit ratio of associative mapping

Replacement algorithm
• None is needed for direct mapping, since each block has only one possible slot
• After a very short time of execution, the cache is full
• When a new item is brought into the cache, an old one must be discarded: which one shall it be?
• The best algorithm requires predicting the future
  – Difficult to implement
• Here are some that we will consider:
  – Least recently used, first in first out, least frequently used

Least Recently Used
• LRU is the most common and very effective
• Put a timer on each slot
  – Requires some hardware
• Reset the timer on every reference
• The slot with the largest timer value is replaced when a slot is needed
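The slide's timer-based LRU can be modeled directly. Here the "timer" counts references rather than real time, which is an assumption for simplicity, and `TimerLRU` is a hypothetical name. Every timer ticks on each reference, the referenced slot's timer is reset, and on a miss the slot with the largest timer is replaced:

```python
from typing import List, Optional


class TimerLRU:
    """LRU as the slide describes it: one timer per slot, reset on reference."""

    def __init__(self, num_slots: int) -> None:
        self.tags: List[Optional[int]] = [None] * num_slots
        self.timers: List[int] = [0] * num_slots

    def access(self, tag: int) -> bool:
        # Every slot's timer advances by one tick per reference.
        self.timers = [t + 1 for t in self.timers]
        if tag in self.tags:
            self.timers[self.tags.index(tag)] = 0       # reset on every reference
            return True
        if None in self.tags:
            slot = self.tags.index(None)                # fill an empty slot first
        else:
            slot = self.timers.index(max(self.timers))  # largest timer is replaced
        self.tags[slot] = tag
        self.timers[slot] = 0
        return False


cache = TimerLRU(2)
for tag in [1, 2, 1, 3]:
    cache.access(tag)
print(cache.tags)   # [1, 3]: block 2 had gone longest without a reference
```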

First In First Out
• The cache is treated as a queue of items
• This assumes that the oldest item is the least likely to be used next
• Organize the cache in round-robin fashion
• Easier to implement than least recently used
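FIFO needs only a pointer that advances round robin through the slots, which is why it is cheaper than LRU. A minimal sketch with a hypothetical `FifoCache`:

```python
from typing import List, Optional


class FifoCache:
    """FIFO replacement as a round-robin pointer over the slots."""

    def __init__(self, num_slots: int) -> None:
        self.tags: List[Optional[int]] = [None] * num_slots
        self.next_slot = 0   # the slot filled longest ago (head of the queue)

    def access(self, tag: int) -> bool:
        if tag in self.tags:
            return True      # a hit does not change the order
        self.tags[self.next_slot] = tag
        self.next_slot = (self.next_slot + 1) % len(self.tags)
        return False


cache = FifoCache(2)
for tag in [1, 2, 1, 3]:
    cache.access(tag)
print(cache.tags)   # [3, 2]
```

The hit on block 1 does not protect it: block 3 replaces it because block 1 was loaded first. LRU would have evicted block 2 instead.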

Least Frequently Used
• Attach a counter that records references in the last x nanoseconds
• In a 16-slot cache, increment a slot's counter on every reference to it and, every so often (say every 16 references), decrement every counter by 1
• Like least recently used, this needs plenty of extra hardware
• Frequently hit slots tend to stay and others leave
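A sketch of the slide's decaying counters. The "last x nanoseconds" window is approximated by decrementing every counter by 1 after each `decay_every` references (the slide's suggestion is every 16). Starting a newly loaded slot's count at 1 and breaking ties by lowest slot number are our assumptions; `DecayingLFU` is a hypothetical name.

```python
from typing import List, Optional


class DecayingLFU:
    """LFU per the slide: count references, periodically decrement every counter."""

    def __init__(self, num_slots: int = 16, decay_every: int = 16) -> None:
        self.tags: List[Optional[int]] = [None] * num_slots
        self.counts: List[int] = [0] * num_slots
        self.decay_every = decay_every
        self.references = 0

    def access(self, tag: int) -> bool:
        self.references += 1
        if tag in self.tags:
            self.counts[self.tags.index(tag)] += 1
            hit = True
        else:
            if None in self.tags:
                slot = self.tags.index(None)
            else:
                slot = self.counts.index(min(self.counts))  # least frequently used
            self.tags[slot] = tag
            self.counts[slot] = 1
            hit = False
        if self.references % self.decay_every == 0:
            # Age the counts so old popularity fades (never below zero).
            self.counts = [max(0, c - 1) for c in self.counts]
        return hit


cache = DecayingLFU(num_slots=2)
for tag in [1, 1, 1, 2, 3]:
    cache.access(tag)
print(cache.tags)   # [1, 3]: block 2 had the lowest count
```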

Random
• Choose a slot at random
• Not substantially inferior to the others in simulations
• What this should tell us is that, at guessing the future, all our algorithms are rather pathetic
• However, any good algorithm is probably too complicated to implement directly in hardware
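Random replacement needs no per-slot bookkeeping at all. The sketch below (hypothetical `RandomCache` and `hit_ratio`) uses a seeded generator so runs repeat; real hardware would use some cheap pseudo-random source, which is an assumption, not something the slides specify. `hit_ratio` runs any such cache over a list of references.

```python
import random
from typing import List, Optional


class RandomCache:
    """Random replacement: on a miss with no empty slot, evict any slot."""

    def __init__(self, num_slots: int, seed: int = 0) -> None:
        self.tags: List[Optional[int]] = [None] * num_slots
        self.rng = random.Random(seed)   # seeded so runs are repeatable

    def access(self, tag: int) -> bool:
        if tag in self.tags:
            return True
        if None in self.tags:
            slot = self.tags.index(None)
        else:
            slot = self.rng.randrange(len(self.tags))
        self.tags[slot] = tag
        return False


def hit_ratio(cache: RandomCache, trace: List[int]) -> float:
    """Fraction of references in `trace` that hit."""
    hits = sum(cache.access(t) for t in trace)
    return hits / len(trace)


print(hit_ratio(RandomCache(num_slots=4, seed=1), [1, 2, 3, 1, 2, 3, 4, 5, 1, 2]))
```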

Write policy
• When the reference is a write, what do we do?
• When we are about to discard a cache slot, we have to determine whether to save it to memory or whether that has already been done
• Two policies:
  – Write back
  – Write through

Write back
• Set an update (dirty) bit in the cache slot and write the slot to memory only when it is discarded
• This tends to isolate memory from the CPU, which is a good thing
• There are several problems, all involving concurrently executing hardware
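A minimal write-back sketch, assuming a direct-mapped cache of one-word slots over a dictionary standing in for main memory (`WriteBackCache` is hypothetical). Writes only set the update bit; memory is written when a dirty slot is evicted:

```python
from typing import Dict, List, Optional


class WriteBackCache:
    """Direct-mapped write-back cache with an update (dirty) bit per slot."""

    def __init__(self, memory: Dict[int, int], num_slots: int = 4) -> None:
        self.memory = memory
        self.n = num_slots
        self.addr: List[Optional[int]] = [None] * num_slots
        self.data: List[int] = [0] * num_slots
        self.dirty: List[bool] = [False] * num_slots
        self.memory_writes = 0

    def _load(self, address: int) -> int:
        slot = address % self.n
        if self.addr[slot] != address:
            if self.dirty[slot]:                               # evicting a changed slot:
                self.memory[self.addr[slot]] = self.data[slot]  # write it back now
                self.memory_writes += 1
            self.addr[slot] = address
            self.data[slot] = self.memory.get(address, 0)
            self.dirty[slot] = False
        return slot

    def read(self, address: int) -> int:
        return self.data[self._load(address)]

    def write(self, address: int, value: int) -> None:
        slot = self._load(address)
        self.data[slot] = value
        self.dirty[slot] = True                                # memory is now stale


memory: Dict[int, int] = {}
cache = WriteBackCache(memory)
for value in range(10):
    cache.write(1, value)                     # ten writes, all absorbed by the cache
print(memory.get(1), cache.memory_writes)     # None 0
cache.read(5)                                 # address 5 maps to the same slot
print(memory[1], cache.memory_writes)         # 9 1
```

Ten writes cost no memory traffic until the slot is reused, but in the meantime memory holds a stale value, which is the problem the following slides discuss.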

The problems
• Main memory is often invalid (stale)
  – Only the CPU can access the cache
• Routing the I/O controller through the cache:
  – Tends to defeat the separation of CPU and I/O controller that gives us a performance advantage
  – Also complicates the design

System Level Response
• When a process issues an I/O operation:
  – The process is suspended until the operation is done
  – Since no two processes share a memory location, the process's cache items can then be flushed and the I/O can proceed without fear
  – Threads inside processes tend to defeat this
• Research indicates that only about 15% of memory references are writes
• The whole problem gets really exciting with multiple CPUs and local caches attempting to maintain cache coherency

Write Through
• Always change memory when the cache is updated
  – A write updates the cache but always changes memory as well
• Main memory is then always valid
• More memory traffic is incurred
• When a cache slot is discarded, no decision on what to save is needed
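A minimal write-through sketch, assuming a direct-mapped cache of one-word slots over a dictionary standing in for main memory; letting writes also allocate in the cache is our assumption, and `WriteThroughCache` is a hypothetical name. Every write goes to memory, so eviction never has to save anything:

```python
from typing import Dict, List, Optional


class WriteThroughCache:
    """Direct-mapped write-through cache: every write also goes to memory."""

    def __init__(self, memory: Dict[int, int], num_slots: int = 4) -> None:
        self.memory = memory
        self.n = num_slots
        self.addr: List[Optional[int]] = [None] * num_slots
        self.data: List[int] = [0] * num_slots
        self.memory_writes = 0

    def read(self, address: int) -> int:
        slot = address % self.n
        if self.addr[slot] != address:     # miss: just overwrite, nothing to save
            self.addr[slot] = address
            self.data[slot] = self.memory.get(address, 0)
        return self.data[slot]

    def write(self, address: int, value: int) -> None:
        slot = address % self.n
        self.addr[slot], self.data[slot] = address, value  # update the cache...
        self.memory[address] = value                       # ...and memory, always
        self.memory_writes += 1


memory: Dict[int, int] = {}
cache = WriteThroughCache(memory)
for value in range(10):
    cache.write(1, value)
print(memory[1], cache.memory_writes)   # 9 10
```

Ten writes now cost ten memory writes, the extra traffic the slide mentions, but memory always holds the current value.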

Block size
• How many addressable units should be in a block (line)?
  – Larger blocks raise the hit ratio, up to a point
  – They are also more expensive
  – Cache is the most expensive memory
• The optimal size depends on the program being run, which means we can only study averages
• Research tends to support a size of 4 to 8 units (which may be words or bytes) as the best
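A small illustration of two sides of block size, with hypothetical helper names. The block size fixes how many low-order address bits select a unit inside the block, and for a purely sequential scan larger blocks cut misses in proportion. This toy model only captures the benefit of locality; it does not model the cost of larger blocks (fewer lines for the same cache size), which depends on the workload.

```python
import math


def offset_bits(block_units: int) -> int:
    """Low-order address bits needed to pick one unit inside a block."""
    if block_units < 1 or block_units & (block_units - 1):
        raise ValueError("block size must be a power of two")
    return block_units.bit_length() - 1


def sequential_misses(num_units: int, block_units: int) -> int:
    """Misses for one pass over num_units consecutive units starting at address 0,
    assuming an initially empty cache big enough to hold them all."""
    return math.ceil(num_units / block_units)


for size in (1, 4, 8):
    print(size, offset_bits(size), sequential_misses(64, size))
```

The loop prints `1 0 64`, `4 2 16` and `8 3 8`.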

Levels of cache
• Is it best to have only one cache?
  – Not necessarily
• Many processors have several levels of cache
• Level 1 (L1) is on the CPU chip
• Level 2 (L2) is on the motherboard
• Level 3 (L3) is farther out

Problem
• Suppose there are multiple cores on a chip
  – Each one has its own cache
• Suppose that two cores are sharing a memory variable
• If either one writes to this location, its cache will have the updated value but memory and the other cache will not
  – An incoherent cache
• Complicated further by multiple chips

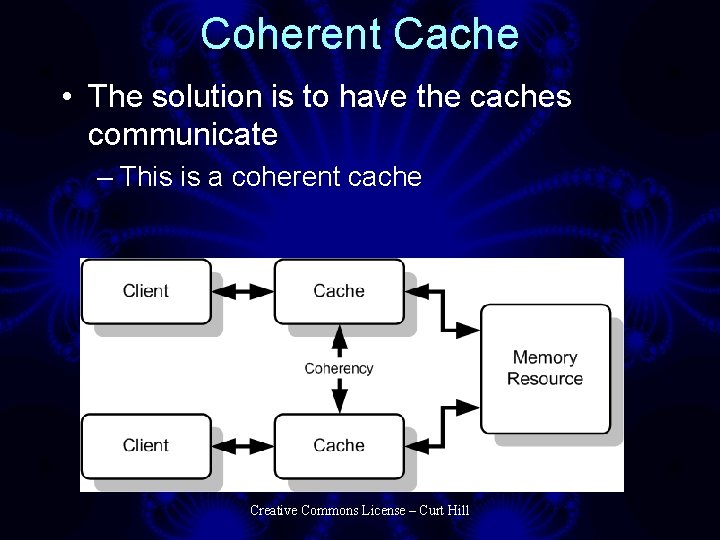

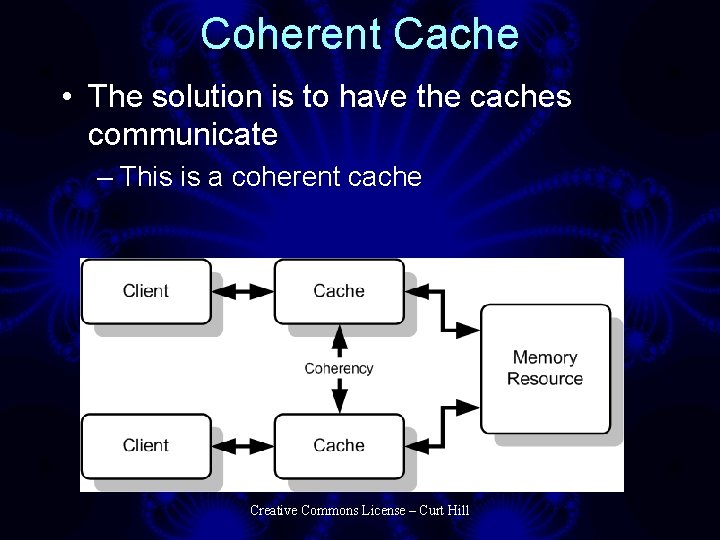

Coherent Cache
• The solution is to have the caches communicate
  – This is a coherent cache

Coherence Again
• There are two requirements:
  – A write to a common location must cause notification to the other caches
  – Reads and writes to this common location must be serialized
    • All processors must see the same order of reads and writes
• Cache coherence cannot defend against a disk controller altering memory that the cache holds
  – Usually the OS must handle that
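One common way to meet these requirements is a snooping, write-invalidate scheme: every write is announced on a shared bus and the other caches drop their copy. The sketch below is that technique in its simplest form, using write through to keep memory valid; it is not a full protocol such as MESI, and `Bus` and `CoreCache` are hypothetical names. Serialization comes for free here only because Python performs one write at a time.

```python
from typing import Dict, List


class Bus:
    """Shared bus that lets each cache hear the others' writes."""

    def __init__(self) -> None:
        self.caches: List["CoreCache"] = []


class CoreCache:
    """Per-core cache using a simple write-through, write-invalidate scheme."""

    def __init__(self, bus: Bus, memory: Dict[int, int]) -> None:
        self.lines: Dict[int, int] = {}
        self.bus, self.memory = bus, memory
        bus.caches.append(self)

    def read(self, address: int) -> int:
        if address not in self.lines:
            self.lines[address] = self.memory.get(address, 0)
        return self.lines[address]

    def write(self, address: int, value: int) -> None:
        self.lines[address] = value
        self.memory[address] = value            # write through keeps memory valid
        for other in self.bus.caches:           # notify the other caches...
            if other is not self:
                other.lines.pop(address, None)  # ...which drop their stale copy


memory: Dict[int, int] = {}
bus = Bus()
core_a, core_b = CoreCache(bus, memory), CoreCache(bus, memory)
core_a.read(100)
core_b.read(100)          # both caches now hold address 100
core_a.write(100, 7)      # core B's copy is invalidated
print(core_b.read(100))   # 7, re-read from memory rather than a stale 0
```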

Examples
• The 486 and up, as well as the PowerPC, have on-chip caches
• The short circuit lengths and lack of bus activity make these highly desirable
• Is there still a need for a board-level cache?
  – Apparently so

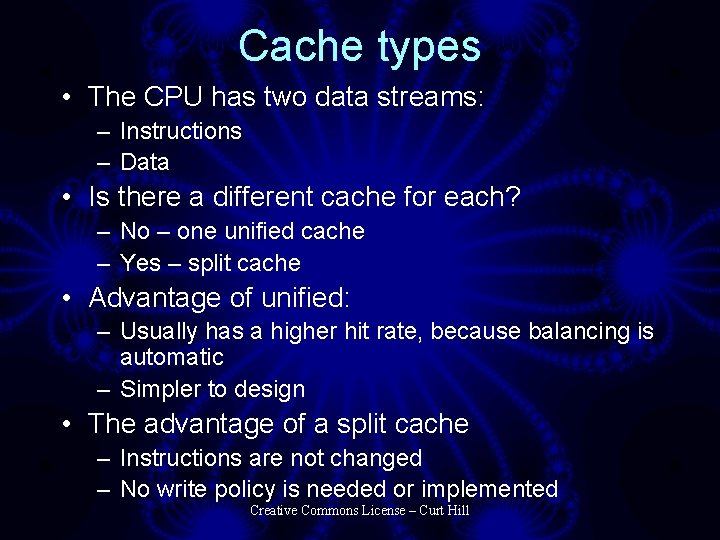

Cache types
• The CPU has two streams of references:
  – Instructions
  – Data
• Is there a different cache for each?
  – No: one unified cache
  – Yes: a split cache
• Advantages of a unified cache:
  – Usually a higher hit rate, because balancing between instructions and data is automatic
  – Simpler to design
• Advantages of a split cache:
  – Instructions are not changed
  – No write policy is needed or implemented for the instruction cache

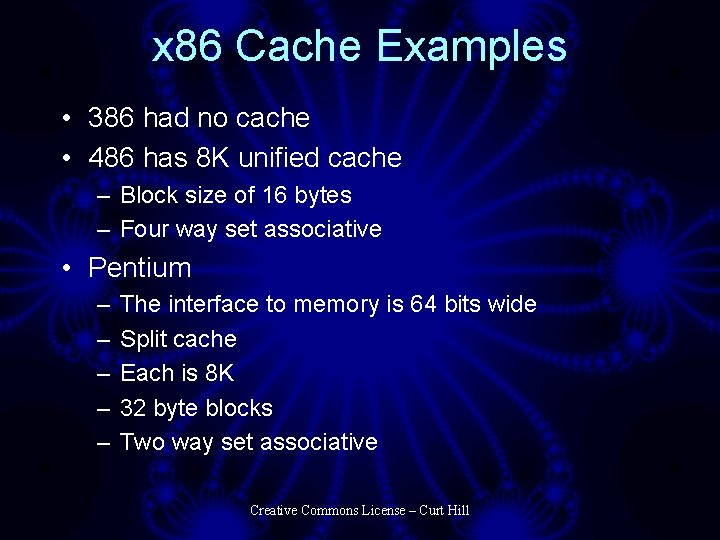

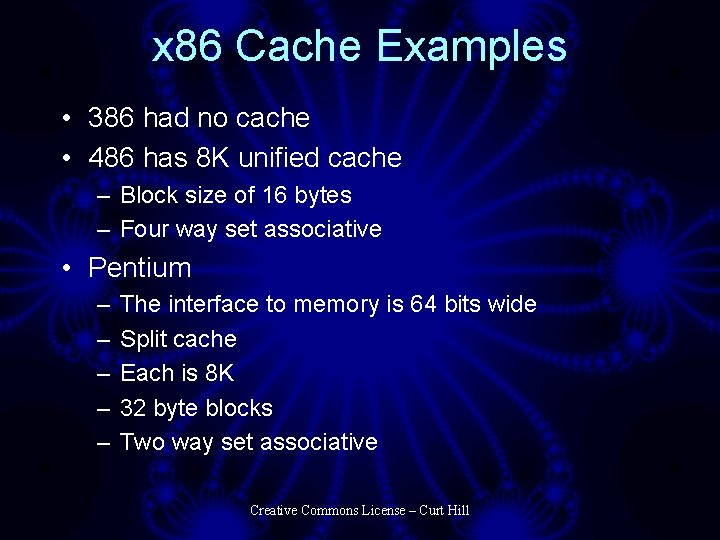

x86 Cache Examples
• 386 had no on-chip cache
• 486 has an 8 KB unified cache
  – Block size of 16 bytes
  – Four-way set associative
• Pentium
  – Split cache
  – Each is 8 KB
  – 32-byte blocks
  – Two-way set associative
  – The interface to memory is 64 bits wide

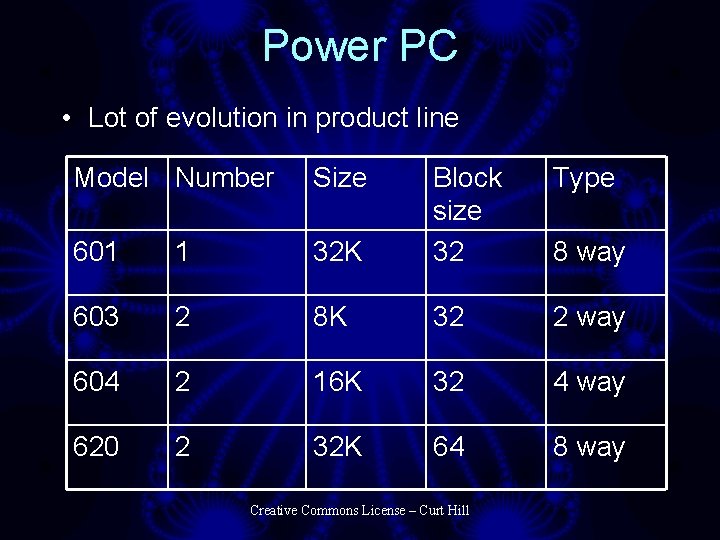

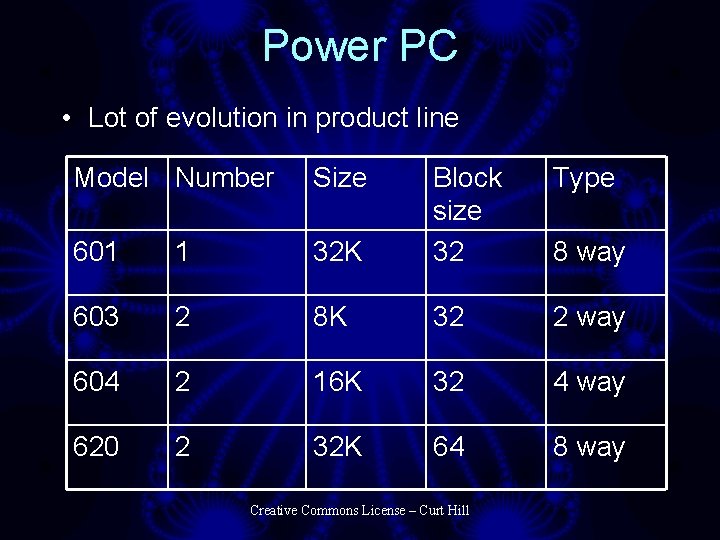

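PowerPC
• A lot of evolution in the product line

| Model | Number of caches | Size | Block size (bytes) | Type |
|-------|------------------|------|--------------------|------|
| 601 | 1 | 32 KB | 32 | 8-way |
| 603 | 2 | 8 KB | 32 | 2-way |
| 604 | 2 | 16 KB | 32 | 4-way |
| 620 | 2 | 32 KB | 64 | 8-way |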

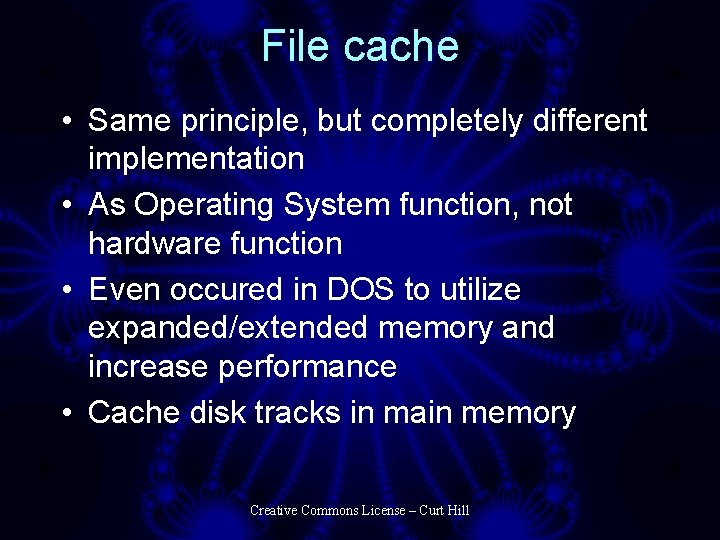

File cache
• Same principle, but a completely different implementation
• An operating system function, not a hardware function
• Even occurred in DOS, to use expanded/extended memory and increase performance
• Caches disk tracks in main memory

File Cache Workings
• When we read a disk sector, save the entire track in RAM and remember it
• When any read request occurs, check whether the sector is in RAM; if so, no disk access is needed
• When writing a sector, save it in RAM, let the program continue, and write the sector to disk later when there is time
• A file cache is a RAM memory of disk activity: it remembers what has happened and prevents disk accesses that would otherwise be needed
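A sketch of these workings, with a dictionary standing in for the disk and a hypothetical geometry of 8 sectors per track. `FileCache` and its methods are hypothetical names, not a real operating system interface. A read miss loads the whole track, and writes are deferred until `flush` is called:

```python
from typing import Dict, Set

SECTORS_PER_TRACK = 8   # hypothetical disk geometry


class FileCache:
    """Track-level read cache with deferred (write-behind) sector writes."""

    def __init__(self, disk: Dict[int, bytes]) -> None:
        self.disk = disk                    # sector number -> contents
        self.ram: Dict[int, bytes] = {}     # cached sectors
        self.pending: Set[int] = set()      # written but not yet on disk
        self.disk_reads = 0                 # number of whole-track reads

    def read(self, sector: int) -> bytes:
        if sector not in self.ram:          # miss: read the entire track
            first = sector - sector % SECTORS_PER_TRACK
            for s in range(first, first + SECTORS_PER_TRACK):
                if s not in self.ram:       # never overwrite a pending write
                    self.ram[s] = self.disk.get(s, b"")
            self.disk_reads += 1
        return self.ram[sector]

    def write(self, sector: int, data: bytes) -> None:
        self.ram[sector] = data             # the program continues immediately
        self.pending.add(sector)

    def flush(self) -> None:
        """Write deferred sectors to disk, e.g. when there is time."""
        for s in sorted(self.pending):
            self.disk[s] = self.ram[s]
        self.pending.clear()


disk = {s: bytes([s]) for s in range(16)}
fc = FileCache(disk)
fc.read(3)
fc.read(5)             # same track: served from RAM
print(fc.disk_reads)   # 1
```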

Conclusion
• Caching is purely a speed-up operation
• It is usually invisible to the programmer
• The caches between the CPU and memory are all hardware
• The file cache is all OS