
Cache Memory

Outline

- General concepts
- 3 ways to organize cache memory
- Issues with writes
- Writing cache-friendly code
- The memory mountain
- Suggested reading: 6.4, 6.5, 6.6

6.4 Cache Memories

Cache Memory

- History
  - At the very beginning, 3 levels: registers, main memory, disk storage
  - 10 years later, 4 levels: registers, SRAM cache, main DRAM memory, disk storage
  - Modern processors, 4 to 5 levels: registers, SRAM L1, L2 (and L3) caches, main DRAM memory, disk storage
- Cache memories
  - are small, fast SRAM-based memories
  - are managed automatically by hardware
  - can be on-chip, on-die, or off-chip

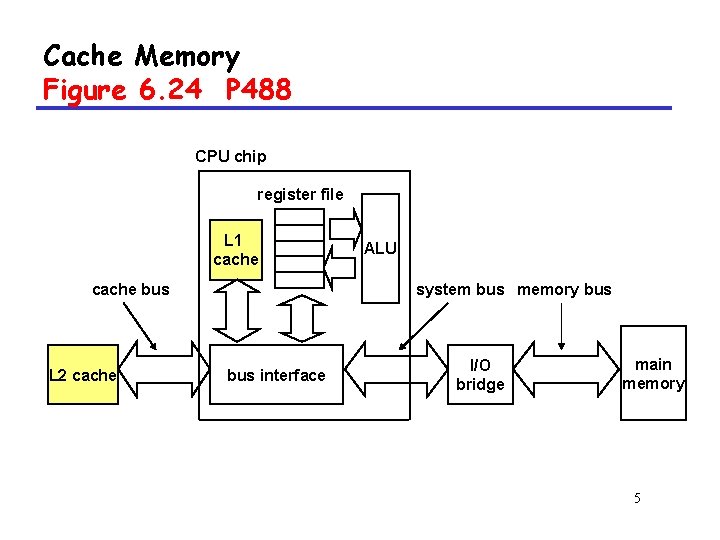

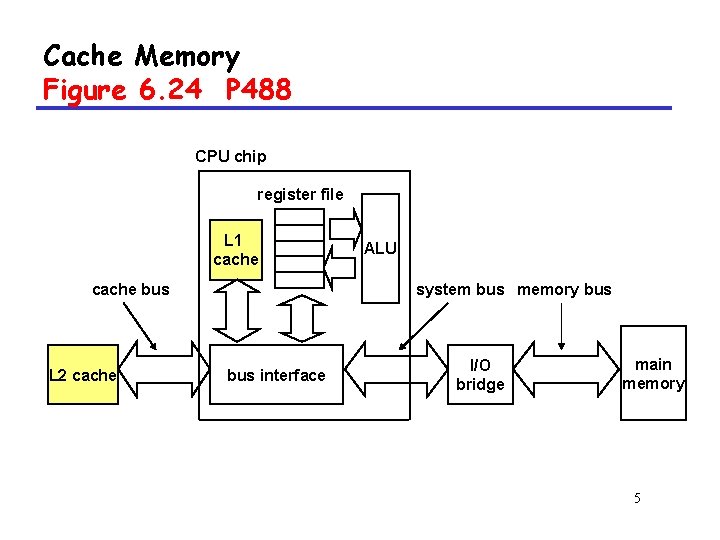

Cache Memory (Figure 6.24, p. 488). Figure components: CPU chip (register file, ALU, L1 cache, bus interface), L2 cache on its own cache bus, system bus, I/O bridge, memory bus, main memory.

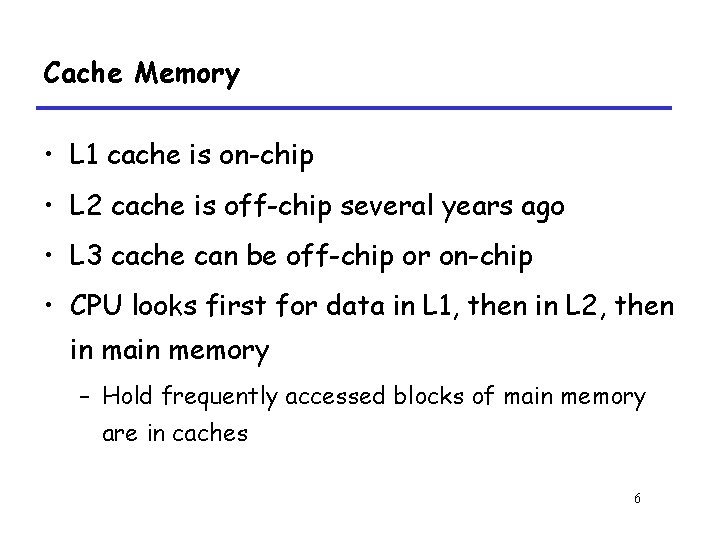

Cache Memory

- The L1 cache is on-chip.
- The L2 cache was off-chip until several years ago.
- An L3 cache can be off-chip or on-chip.
- The CPU looks for data first in L1, then in L2, then in main memory.
  - Caches hold frequently accessed blocks of main memory.

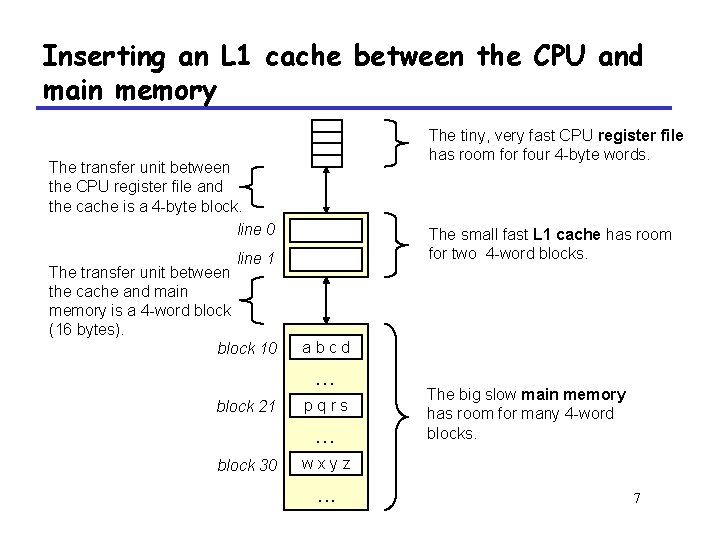

Inserting an L1 cache between the CPU and main memory

- The tiny, very fast CPU register file has room for four 4-byte words.
- The transfer unit between the CPU register file and the cache is a 4-byte block.
- The small, fast L1 cache has room for two 4-word blocks (line 0 and line 1).
- The transfer unit between the cache and main memory is a 4-word block (16 bytes).
- The big, slow main memory has room for many 4-word blocks (e.g., block 10: abcd..., block 21: pqrs..., block 30: wxyz...).

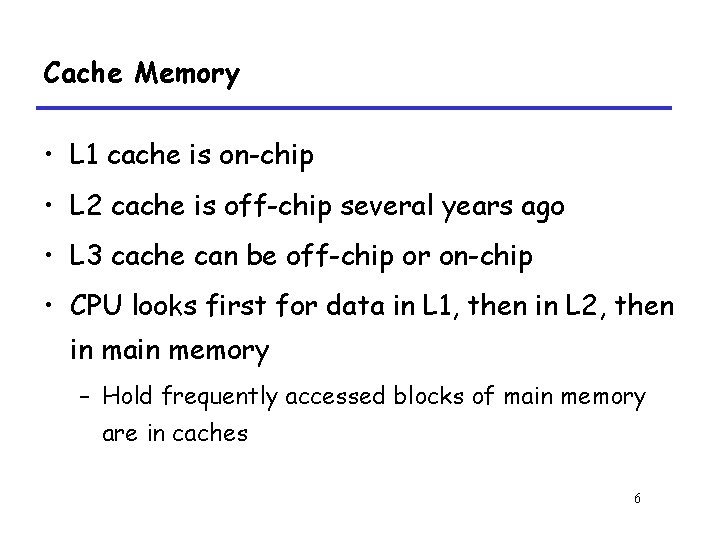

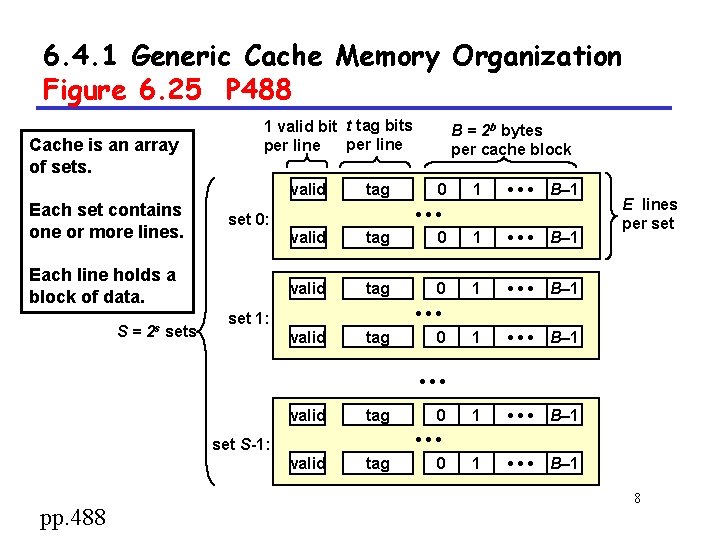

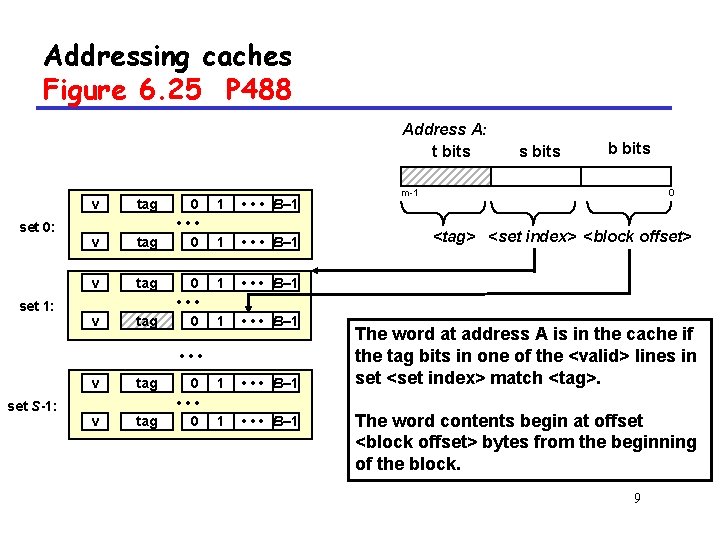

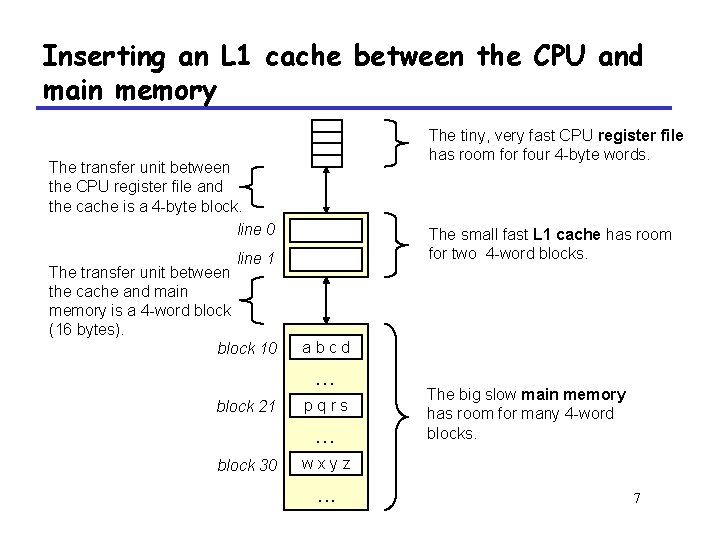

6.4.1 Generic Cache Memory Organization (Figure 6.25, p. 488)

- A cache is an array of $S = 2^s$ sets (set 0 through set $S-1$).
- Each set contains one or more lines ($E$ lines per set).
- Each line holds a block of data, $B = 2^b$ bytes per cache block (bytes 0 through $B-1$), plus 1 valid bit and $t$ tag bits.

Addressing caches (Figure 6.25, p. 488)

An $m$-bit address A is divided, from bit $m-1$ down to bit 0, into three fields: `<tag>` ($t$ bits), `<set index>` ($s$ bits), and `<block offset>` ($b$ bits).

- The word at address A is in the cache if the tag bits in one of the `<valid>` lines in set `<set index>` match `<tag>`.
- The word contents begin at offset `<block offset>` bytes from the beginning of the block.
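A minimal sketch of the field split, assuming a hypothetical helper `decompose` that takes the address as an unsigned 64-bit integer along with the cache's $s$ and $b$. The tag is simply whatever bits remain above the set index.

```c
#include <stdint.h>

/* Hypothetical helper: the three fields of an address A,
   given s set-index bits and b block-offset bits (t = m - (s + b)). */
typedef struct {
    uint64_t tag;     /* high-order t bits */
    uint64_t set;     /* middle s bits     */
    uint64_t offset;  /* low-order b bits  */
} addr_fields;

addr_fields decompose(uint64_t addr, unsigned s, unsigned b)
{
    addr_fields f;
    f.offset = addr & ((UINT64_C(1) << b) - 1);         /* bits b-1 .. 0     */
    f.set    = (addr >> b) & ((UINT64_C(1) << s) - 1);  /* bits s+b-1 .. b   */
    f.tag    = addr >> (s + b);                         /* bits m-1 .. s+b   */
    return f;
}
```

For example, with $s = 2$ and $b = 1$, address 13 (binary 1101) splits into tag 1, set 2, and offset 1.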

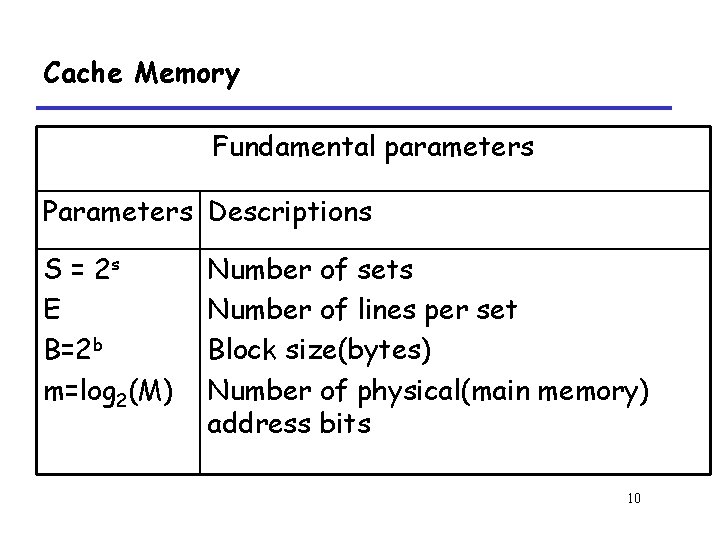

Cache Memory: fundamental parameters

| Parameter | Description |
|---|---|
| $S = 2^s$ | Number of sets |
| $E$ | Number of lines per set |
| $B = 2^b$ | Block size (bytes) |
| $m = \log_2(M)$ | Number of physical (main memory) address bits |

Cache Memory: derived quantities

| Parameter | Description |
|---|---|
| $M = 2^m$ | Maximum number of unique memory addresses |
| $s = \log_2(S)$ | Number of set index bits |
| $b = \log_2(B)$ | Number of block offset bits |
| $t = m - (s + b)$ | Number of tag bits |
| $C = B \times E \times S$ | Cache size (bytes), not including overhead such as the valid and tag bits |
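The derived quantities follow mechanically from $S$, $E$, $B$, and $m$. Below is a small sketch that computes them, assuming $S$ and $B$ are powers of two. The struct `cache_geometry` and the function `derive` are hypothetical names used for illustration.

```c
#include <stdint.h>

/* Hypothetical struct: derived cache quantities. */
typedef struct {
    unsigned s, b, t;   /* set-index, block-offset, and tag bits            */
    uint64_t C;         /* data capacity in bytes (no valid/tag overhead)   */
} cache_geometry;

/* log2 of a power of two */
static unsigned ilog2(uint64_t x)
{
    unsigned n = 0;
    while (x > 1) { x >>= 1; n++; }
    return n;
}

cache_geometry derive(uint64_t S, uint64_t E, uint64_t B, unsigned m)
{
    cache_geometry g;
    g.s = ilog2(S);
    g.b = ilog2(B);
    g.t = m - (g.s + g.b);   /* t = m - (s + b) */
    g.C = B * E * S;         /* C = B x E x S   */
    return g;
}
```

With the parameters of the direct-mapped simulation in this section ($S = 4$, $E = 1$, $B = 2$, $m = 4$), this gives $s = 2$, $b = 1$, $t = 1$, and $C = 8$ bytes.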

6.4.2 Direct-mapped cache (Figure 6.27, p. 490)

- Simplest kind of cache
- Characterized by exactly one line per set ($E = 1$): each of set 0 through set $S-1$ holds a valid bit, a tag, and a cache block

Accessing direct-mapped caches (Figure 6.28, p. 491)

- Set selection
  - Use the set index bits to determine the set of interest. In the figure, the address is split into $t$ tag bits (bit $m-1$ downward), $s$ set-index bits, and $b$ block-offset bits (ending at bit 0). The set-index bits 00001 select set 1.

Accessing direct-mapped caches

- Line matching and word extraction
  - Find a valid line in the selected set with a matching tag (line matching).
  - Then extract the word (word selection).

Accessing direct-mapped caches (Figure 6.29, p. 491)

1. The valid bit must be set.
2. The tag bits in the cache line must match the tag bits in the address.
3. If (1) and (2) hold, it is a cache hit, and the block offset selects the starting byte.

In the figure, selected set (i) holds a valid line with tag 0110 and an 8-byte block (bytes 0 to 7) whose bytes 4 to 7 contain w0, w1, w2, w3. The address has tag 0110, set index i, and block offset 100 (binary, i.e. 4), so the word starts at byte 4.
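The three steps above can be sketched as a lookup function. This is a software model, assuming 8-byte blocks as in the figure. The names `line_t` and `dm_read_byte` are hypothetical, and a real cache does this in hardware, not in C.

```c
#include <stdbool.h>
#include <stdint.h>

#define B_BITS 3                 /* assumed: 8-byte blocks, as in the figure */
#define BLOCK  (1u << B_BITS)

/* Hypothetical line layout for a direct-mapped cache (E = 1). */
typedef struct {
    bool     valid;
    uint64_t tag;
    uint8_t  block[BLOCK];
} line_t;

/* sets[] has 1 << s_bits entries. Returns true on a hit and stores the
   byte at the block offset in *out; returns false on a miss. */
bool dm_read_byte(const line_t *sets, unsigned s_bits, uint64_t addr, uint8_t *out)
{
    uint64_t offset = addr & (BLOCK - 1);
    uint64_t set    = (addr >> B_BITS) & ((UINT64_C(1) << s_bits) - 1);
    uint64_t tag    = addr >> (B_BITS + s_bits);
    const line_t *ln = &sets[set];            /* set selection: the one line   */

    if (ln->valid && ln->tag == tag) {        /* (1) valid and (2) tag match   */
        *out = ln->block[offset];             /* (3) offset selects the byte   */
        return true;
    }
    return false;                             /* miss */
}
```

Because $E = 1$, line matching checks exactly one line. The set index alone determines where the block can live.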

Line Replacement on Misses in Direct-Mapped Caches

- On a cache miss:
  - Retrieve the requested block from the next level in the memory hierarchy.
  - Store the new block in one of the cache lines of the set indicated by the set index bits.

Line Replacement on Misses in Direct-Mapped Caches

- If the set is full of valid cache lines, one of the existing lines must be evicted.
- In a direct-mapped cache, each set contains only one line, so the current line is replaced by the newly fetched line.

Direct-mapped cache simulation (p. 492)

- $M = 16$ byte addresses (so $m = 4$ address bits)
- $B = 2$ bytes/block, $S = 4$ sets, $E = 1$ line per set

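Direct-mapped cache simulation (p. 493)

Address layout: $t = 1$ tag bit, $s = 2$ set-index bits, $b = 1$ block-offset bit (x | xx | x).

Address trace (reads): 0 [0000], 1 [0001], 13 [1101], 8 [1000], 0 [0000]

| Step | Address | Result | Set 0 after access | Set 2 after access |
|---|---|---|---|---|
| (1) | 0 [0000] | miss | v=1, tag=0, m[0] m[1] | v=0 |
| | 1 [0001] | hit | unchanged | unchanged |
| (2) | 13 [1101] | miss | v=1, tag=0, m[0] m[1] | v=1, tag=1, m[12] m[13] |
| (3) | 8 [1000] | miss | v=1, tag=1, m[8] m[9] | v=1, tag=1, m[12] m[13] |
| (4) | 0 [0000] | miss | v=1, tag=0, m[0] m[1] | v=1, tag=1, m[12] m[13] |

Sets 1 and 3 stay invalid throughout.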
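The trace above can be replayed with a tiny simulator. This is a sketch under the slide's parameters ($t = 1$, $s = 2$, $b = 1$). It tracks only valid bits and tags, not data, and `dm_access` and `dm_run` are hypothetical names.

```c
#include <stdbool.h>

/* Parameters of the example: 4-bit addresses, t = 1, s = 2, b = 1. */
#define S_SETS 4
#define B_BITS 1
#define S_BITS 2

typedef struct { bool valid; unsigned tag; } dm_line;

/* Simulates one read; returns true on a hit. On a miss the block is
   (conceptually) fetched and replaces the single line in its set. */
bool dm_access(dm_line cache[S_SETS], unsigned addr)
{
    unsigned set = (addr >> B_BITS) & (S_SETS - 1);
    unsigned tag = addr >> (B_BITS + S_BITS);

    if (cache[set].valid && cache[set].tag == tag)
        return true;
    cache[set].valid = true;     /* evict whatever was in this set */
    cache[set].tag   = tag;
    return false;
}

/* Runs a trace from a cold cache; hits[i] is 1 for a hit, 0 for a miss. */
void dm_run(const unsigned *trace, int n, int *hits)
{
    dm_line cache[S_SETS] = {{false, 0}};
    for (int i = 0; i < n; i++)
        hits[i] = dm_access(cache, trace[i]);
}
```

For the trace 0, 1, 13, 8, 0 this yields miss, hit, miss, miss, miss. The final access to 0 misses only because block 4 (addresses 8 and 9) maps to set 0 and evicted block 0.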

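Direct-mapped cache simulation (Figure 6.30, p. 493)

| Address (decimal) | Tag bits (t=1) | Index bits (s=2) | Offset bits (b=1) | Block number (decimal) |
|---|---|---|---|---|
| 0 | 0 | 00 | 0 | 0 |
| 1 | 0 | 00 | 1 | 0 |
| 2 | 0 | 01 | 0 | 1 |
| 3 | 0 | 01 | 1 | 1 |
| 4 | 0 | 10 | 0 | 2 |
| 5 | 0 | 10 | 1 | 2 |
| 6 | 0 | 11 | 0 | 3 |
| 7 | 0 | 11 | 1 | 3 |
| 8 | 1 | 00 | 0 | 4 |
| 9 | 1 | 00 | 1 | 4 |
| 10 | 1 | 01 | 0 | 5 |
| 11 | 1 | 01 | 1 | 5 |
| 12 | 1 | 10 | 0 | 6 |
| 13 | 1 | 10 | 1 | 6 |
| 14 | 1 | 11 | 0 | 7 |
| 15 | 1 | 11 | 1 | 7 |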

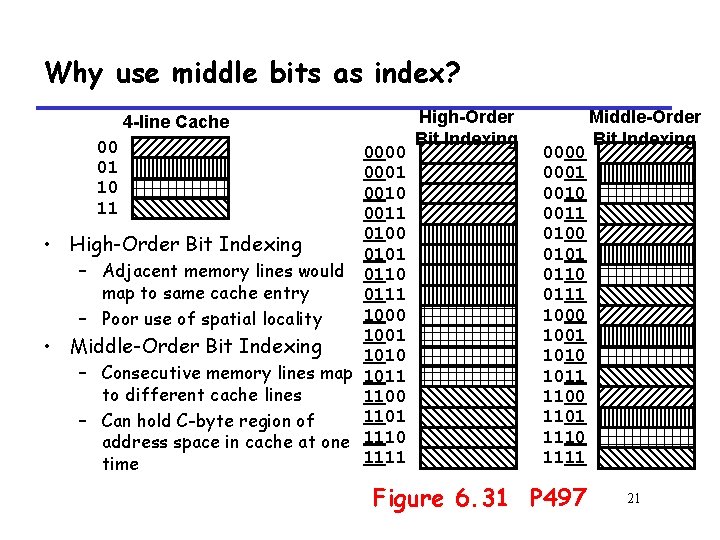

Why use middle bits as index? (Figure 6.31, p. 497)

The figure maps 16 memory blocks (0000 to 1111) onto a 4-line cache (lines 00, 01, 10, 11).

- High-order bit indexing
  - Adjacent memory blocks would map to the same cache line.
  - Poor use of spatial locality
- Middle-order bit indexing
  - Consecutive memory blocks map to different cache lines.
  - The cache can hold a contiguous C-byte region of the address space at one time.
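The two indexing schemes for the 4-line, 16-block example can be compared in code. In this sketch, a block number is the address with its offset bits removed. High-order indexing takes the top 2 bits of the block number. Middle-order indexing takes its low 2 bits, which are the middle bits of the full address. All function names are hypothetical.

```c
#include <stdbool.h>

/* Which of 4 cache lines a 4-bit block number maps to. */
unsigned high_order_index(unsigned block)   { return (block >> 2) & 3; } /* top 2 bits */
unsigned middle_order_index(unsigned block) { return block & 3; }        /* low 2 bits of the block number */

/* Number of distinct cache lines used by blocks first .. first+n-1. */
unsigned lines_used(unsigned (*map)(unsigned), unsigned first, unsigned n)
{
    bool used[4] = {false, false, false, false};
    unsigned count = 0;

    for (unsigned blk = first; blk < first + n; blk++) {
        unsigned line = map(blk);
        if (!used[line]) { used[line] = true; count++; }
    }
    return count;
}
```

Four consecutive blocks occupy a single line under high-order indexing, so they evict one another. Under middle-order indexing they spread across all four lines.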

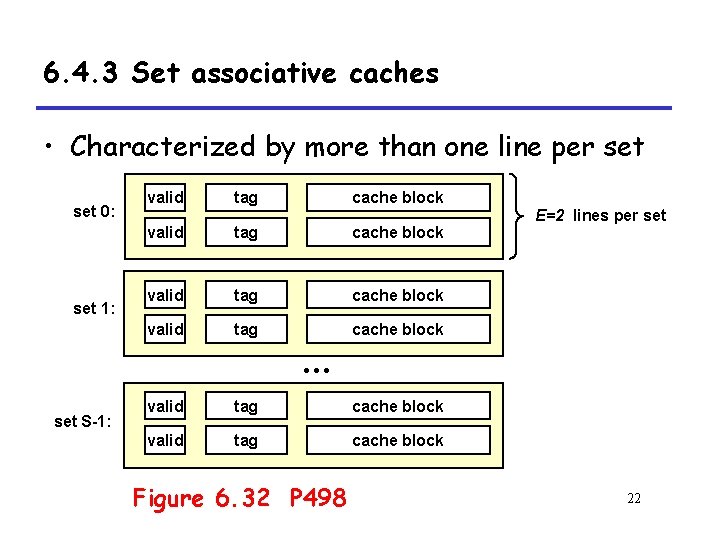

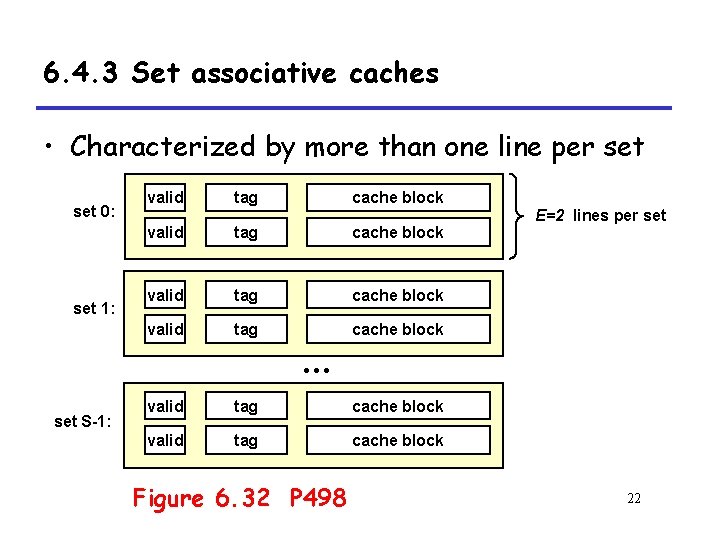

6.4.3 Set-associative caches (Figure 6.32, p. 498)

- Characterized by more than one line per set. The figure shows $E = 2$ lines per set, each with a valid bit, a tag, and a cache block, in set 0 through set $S-1$.

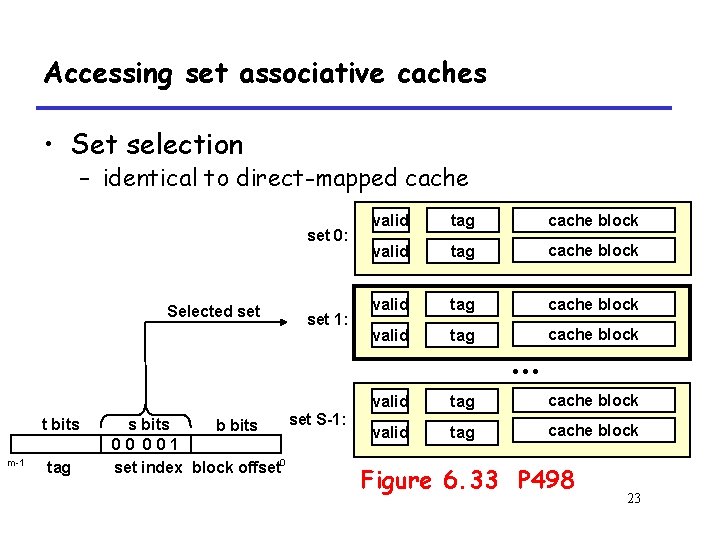

Accessing set-associative caches (Figure 6.33, p. 498)

- Set selection is identical to a direct-mapped cache: the $s$ set-index bits (00001 in the figure) select set 1.

Accessing set-associative caches (Figure 6.34, p. 499)

- Line matching and word selection must compare the tag in each valid line in the selected set.

1. The valid bit must be set.
2. The tag bits in one of the cache lines must match the tag bits in the address.
3. If (1) and (2) hold, it is a cache hit, and the block offset selects the starting byte.

In the figure, selected set (i) holds two valid lines with tags 1001 and 0110. The address tag 0110 matches the second line, and block offset 100 (i.e. 4) selects the word w0 to w3 starting at byte 4.
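Line matching in a set-associative cache can be modeled as a search over the $E$ lines of the selected set. This is a sequential sketch with hypothetical names. Real hardware compares all tags in the set in parallel.

```c
#include <stdbool.h>
#include <stdint.h>

#define E_WAYS 2   /* assumed associativity, as in the figure */

typedef struct { bool valid; uint64_t tag; } sa_line;

/* Returns the index of the valid line in the selected set whose tag matches,
   or -1 on a miss. Every line must be checked, because the block may sit
   in any line of its set. */
int sa_match(const sa_line set[E_WAYS], uint64_t tag)
{
    for (int i = 0; i < E_WAYS; i++)
        if (set[i].valid && set[i].tag == tag)
            return i;
    return -1;
}
```

With the figure's set, lines with tags 1001 (0x9) and 0110 (0x6), the address tag 0110 matches line 1.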

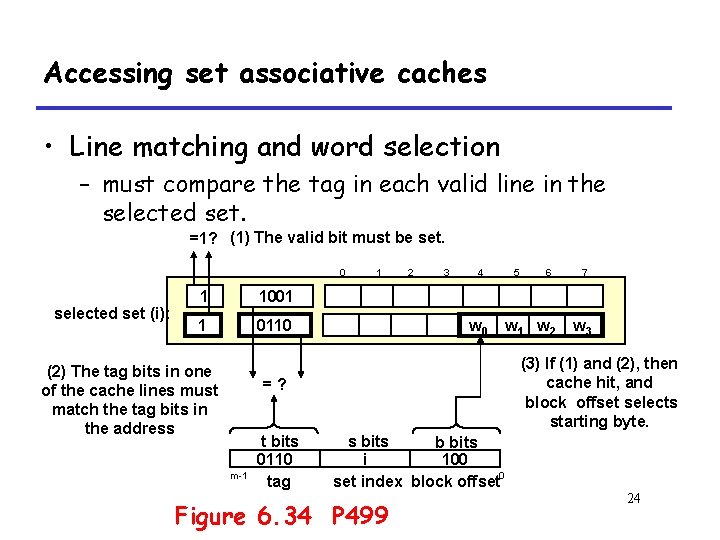

6.4.4 Fully associative caches (Figures 6.35 and 6.36, p. 500)

- Characterized by a single set (set 0) that contains all of the cache lines: $E = C/B$ lines, each with a valid bit, a tag, and a cache block.
- No set index bits in the address: an address consists of only $t$ tag bits followed by $b$ block-offset bits.

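Accessing fully associative caches (Figure 6.37, p. 500)

- Line matching and word selection must compare the tag in each valid line.

1. The valid bit must be set.
2. The tag bits in one of the cache lines must match the tag bits in the address.
3. If (1) and (2) hold, it is a cache hit, and the block offset selects the starting byte.

In the figure, the lines are (valid 1, tag 1001), (valid 0, tag 0110), (valid 1, tag 0110), and (valid 0, tag 1110). The address tag 0110 matches the third line. The second line has the same tag bits but is invalid, so it does not count. Block offset 100 (i.e. 4) selects the word w0 to w3 starting at byte 4.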
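A sketch of the fully associative case, using the figure's four lines as example data. The names are hypothetical. The point it illustrates is that the valid bit is checked before the tag, so an invalid line never produces a hit even when its tag bits match.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

typedef struct { bool valid; uint64_t tag; } fa_line;

/* Fully associative lookup: there is no set index, so every line in the
   cache is a candidate. Returns the matching line index or -1 on a miss. */
int fa_match(const fa_line *lines, size_t nlines, uint64_t tag)
{
    for (size_t i = 0; i < nlines; i++)
        if (lines[i].valid && lines[i].tag == tag)   /* valid bit first */
            return (int)i;
    return -1;
}
```

For the figure's lines and address tag 0110, the search skips line 1 (invalid) and returns line 2.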

6.4.5 Issues with Writes

- Write hits
  - 1) Write-through
    - The cache updates its copy.
    - It then immediately writes the corresponding cache block to memory.
  - 2) Write-back
    - Defers the memory update as long as possible.
    - Writes the updated block to memory only when it is evicted from the cache.
    - Maintains a dirty bit for each cache line.
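The difference between the two write-hit policies shows up in how many memory updates they cause. This is a toy one-line model with hypothetical names. It assumes every write hits a block already in the cache and counts only updates sent to memory.

```c
#include <stdbool.h>

/* Hypothetical one-line model for write hits. */
typedef struct {
    bool dirty;        /* used only by write-back     */
    long mem_writes;   /* updates sent to memory      */
} wline;

/* Write-through: every write hit also updates memory immediately. */
void wt_write_hit(wline *l) { l->mem_writes++; }

/* Write-back: a write hit only marks the line dirty. */
void wb_write_hit(wline *l) { l->dirty = true; }

/* On eviction, write-back must copy a dirty block to memory;
   write-through has nothing left to write. */
void evict(wline *l, bool write_back)
{
    if (write_back && l->dirty)
        l->mem_writes++;
    l->dirty = false;
}
```

After 100 write hits to the same block and one eviction, the write-through line has caused 100 memory updates and the write-back line has caused 1. The dirty bit is what lets write-back defer the update.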

Issues with Writes

- Write misses
  - 1) Write-allocate: loads the corresponding memory block into the cache, then updates the cache block.
  - 2) No-write-allocate: bypasses the cache and writes the word directly to memory.
- Typical combinations
  - Write-through with no-write-allocate
  - Write-back with write-allocate

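6.4.6 Multi-level caches (Figure 6.38, p. 504)

Options: separate data and instruction caches, or a unified cache.

Processor (registers, TLB, L1 d-cache, L1 i-cache) → L2 cache → Memory → Disk

| | L1 d-cache / i-cache | L2 cache | Memory | Disk |
|---|---|---|---|---|
| size | 8-64 KB | 1-4 MB SRAM | 128 MB DRAM | 30 GB |
| speed | 3 ns | 6 ns | 60 ns | 8 ms |
| \$/Mbyte | | \$100/MB | \$1.50/MB | \$0.05/MB |
| line size | | 32 B | 8 KB | |

Moving away from the processor, each level is larger, slower, and cheaper. It also has a larger line size, higher associativity, and is more likely to use write-back.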

6.4.7 Cache performance metrics (p. 505)

- Miss rate
  - Fraction of memory references not found in the cache (misses / references)
  - Typical numbers: 3-10% for L1
- Hit rate
  - Fraction of memory references found in the cache (1 - miss rate)

Cache performance metrics

- Hit time
  - Time to deliver a line in the cache to the processor (includes the time to determine whether the line is in the cache)
  - Typical numbers: 1-2 clock cycles for L1, 5-10 clock cycles for L2
- Miss penalty
  - Additional time required because of a miss
  - Typically 25-100 cycles for main memory
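The metrics above combine in a standard estimate of average access time: hit time + miss rate × miss penalty. The formula itself is not on the slides. The sketch below computes the metrics from raw counts, and the function names are hypothetical.

```c
/* Miss rate and hit rate from counts, as defined above. */
double miss_rate(long misses, long refs) { return (double)misses / (double)refs; }
double hit_rate(long misses, long refs)  { return 1.0 - miss_rate(misses, refs); }

/* Standard average-access-time estimate (not stated on the slides):
   every reference pays the hit time; the fraction that misses also
   pays the miss penalty. */
double avg_access_time(double hit_time, double mr, double miss_penalty)
{
    return hit_time + mr * miss_penalty;
}
```

Take a 1-cycle hit time, a 5% miss rate, and a 100-cycle miss penalty, all within the typical ranges above. The estimate is 1 + 0.05 × 100 = 6 cycles per reference, so even a small miss rate dominates.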

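Cache performance metrics (p. 505): parameter trade-offs

1. Cache size: a larger cache raises the hit rate but tends to increase the hit time.
2. Block size: larger blocks exploit spatial locality better, but for a fixed cache size they mean fewer lines, which hurts temporal locality.
3. Associativity: higher associativity reduces thrashing, but it costs more, is harder to make fast, and can increase the miss penalty.
4. Write strategy: write-through is simpler, and its read misses are cheaper because they never trigger a memory write; write-back results in fewer transfers.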

6.5 Writing Cache-Friendly Code

Writing Cache-Friendly Code

- Principles
  - Programs with better locality tend to have lower miss rates.
  - Programs with lower miss rates tend to run faster than programs with higher miss rates.

Writing Cache-Friendly Code

- Basic approach
  - Make the common case go fast.
    - Programs often spend most of their time in a few core functions.
    - These functions often spend most of their time in a few loops.
  - Minimize the number of cache misses in each inner loop.
    - All other things being equal (such as the total number of loads and stores), loops with lower miss rates run faster.

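Writing Cache-Friendly Code (p. 507)

```c
#define N 8

int sumvec(int v[N])
{
    int i, sum = 0;   /* temporal locality: i and sum are usually kept in registers */

    for (i = 0; i < N; i++)
        sum += v[i];  /* stride-1 reads of v: spatial locality */
    return sum;
}
```

Access order for v[i], with [h]it or [m]iss (assuming v is block-aligned, each block holds 4 ints, and the cache starts empty):

| | i=0 | i=1 | i=2 | i=3 | i=4 | i=5 | i=6 | i=7 |
|---|---|---|---|---|---|---|---|---|
| v[i] | 1 [m] | 2 [h] | 3 [h] | 4 [h] | 5 [m] | 6 [h] | 7 [h] | 8 [h] |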
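The hit/miss pattern in the table can be reproduced with a toy model. It assumes 16-byte blocks holding 4 ints, a block-aligned array, a cold cache, and a cache large enough that nothing is evicted. `stride_pattern` and `MAX_BLOCKS` are hypothetical names.

```c
#include <stdbool.h>

#define INTS_PER_BLOCK 4   /* assumed: 16-byte blocks, 4-byte ints         */
#define MAX_BLOCKS 64      /* hypothetical limit: n <= MAX_BLOCKS * 4 ints  */

/* Records hit (1) or miss (0) for the reads v[0], v[stride], v[2*stride], ...
   over an n-element array. Returns the number of reads recorded. */
int stride_pattern(int n, int stride, int *hit)
{
    bool loaded[MAX_BLOCKS] = {false};
    int k = 0;

    for (int i = 0; i < n; i += stride) {
        int blk = i / INTS_PER_BLOCK;   /* block that holds v[i] */
        hit[k++] = loaded[blk];         /* hit only if already fetched */
        loaded[blk] = true;
    }
    return k;
}
```

With stride 1 and n = 8 this gives miss, hit, hit, hit, miss, hit, hit, hit. With stride 4 every read lands in a new block and misses.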

Writing cache-friendly code

- Temporal locality
  - Repeated references to local variables are good because the compiler can cache them in the register file.

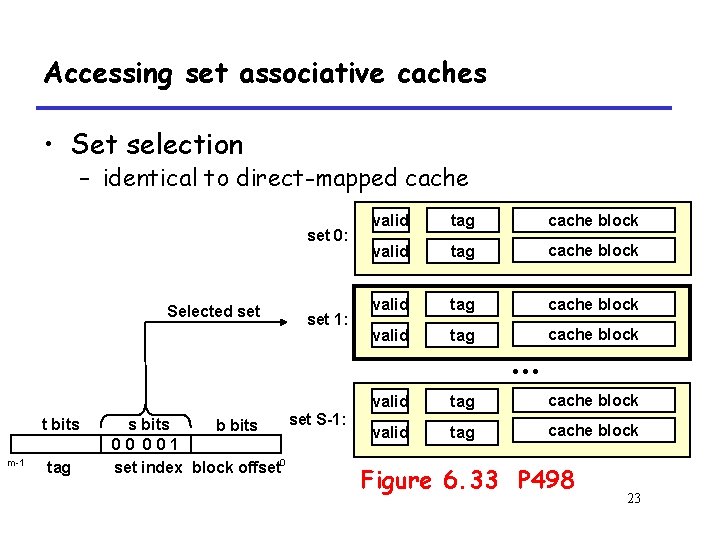

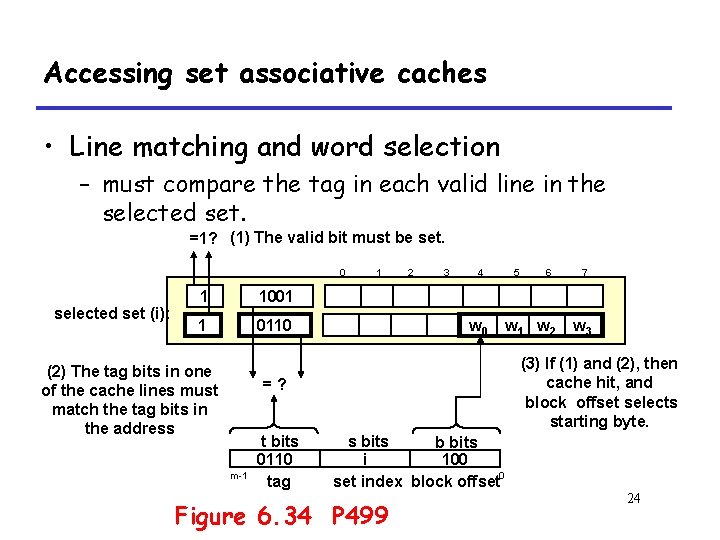

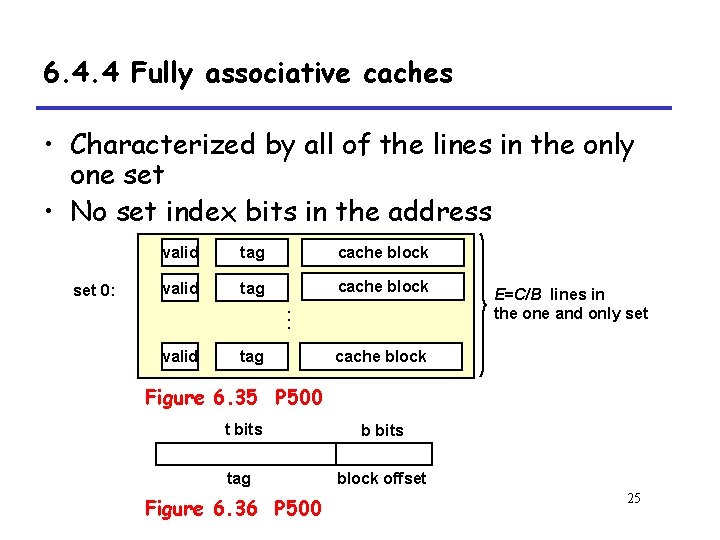

Writing cache-friendly code

- Spatial locality
  - Stride-1 reference patterns are good because caches at all levels of the memory hierarchy store data as contiguous blocks.
  - Spatial locality is especially important in programs that operate on multidimensional arrays.
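As a rough worked formula, assume block-aligned data, a cold cache, and no reuse of a block once the loop moves past it. A stride-$k$ pattern over $w$-byte words with $B$-byte blocks then misses on about this fraction of its reads ($w$ is a symbol introduced here, not on the slides):

```latex
\text{miss rate}(k) \approx \min\!\left(1,\ \frac{w \cdot k}{B}\right),
\qquad \text{e.g. } w = 4,\ B = 16:\quad
k = 1 \Rightarrow \tfrac{1}{4},\quad k = 2 \Rightarrow \tfrac{1}{2},\quad k \ge 4 \Rightarrow 1
```

The $k = 1$ case matches the one-miss-in-four pattern of `sumvec` above.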

![Writing cache-friendly code (p. 508): example with M=4, N=8, 10 cycles/iter, int sumvec(int a[M][N])](https://slidetodoc.com/presentation_image_h2/1b915ed4ddb74524cc74c2239c1b3131/image-39.jpg)

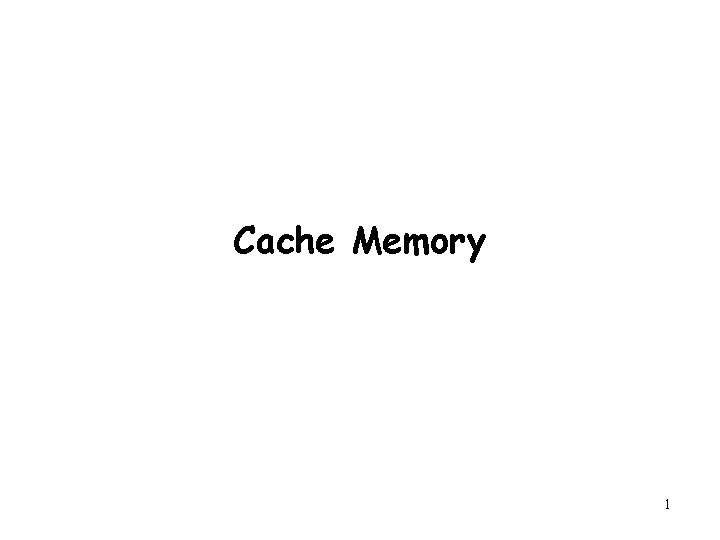

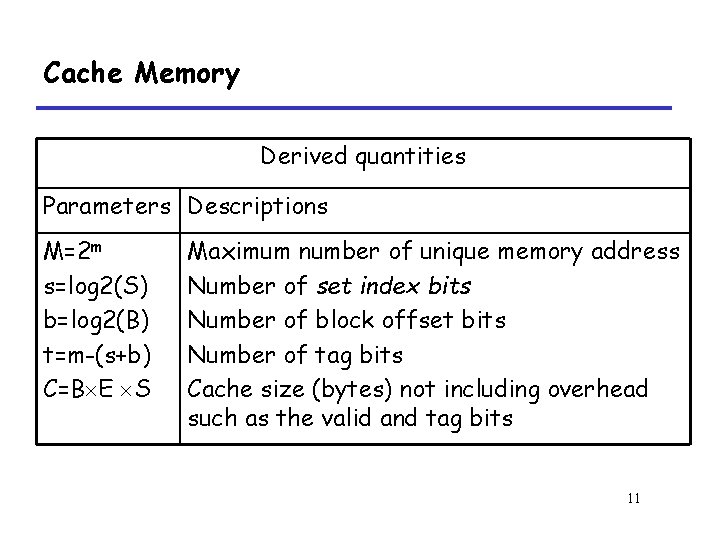

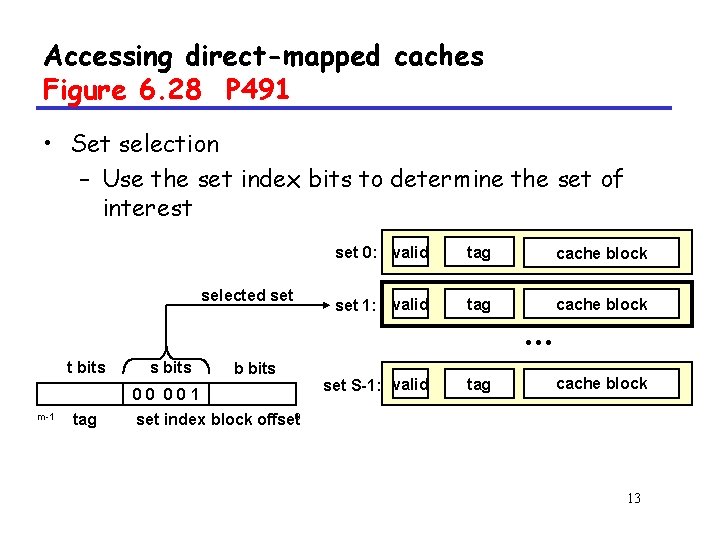

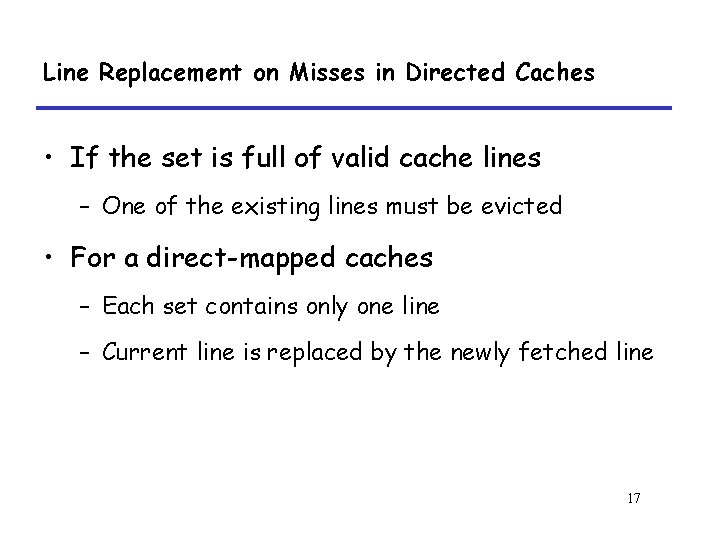

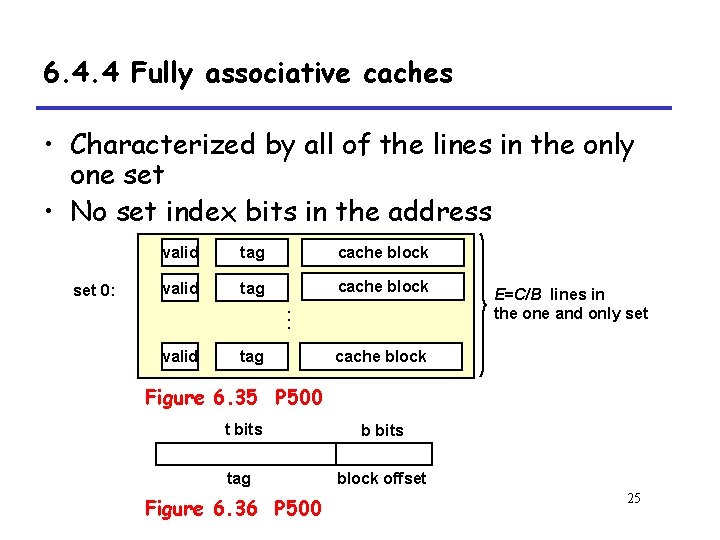

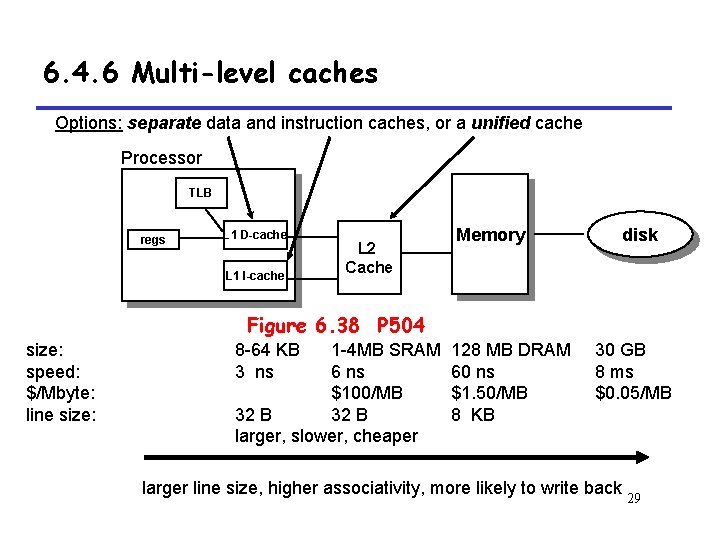

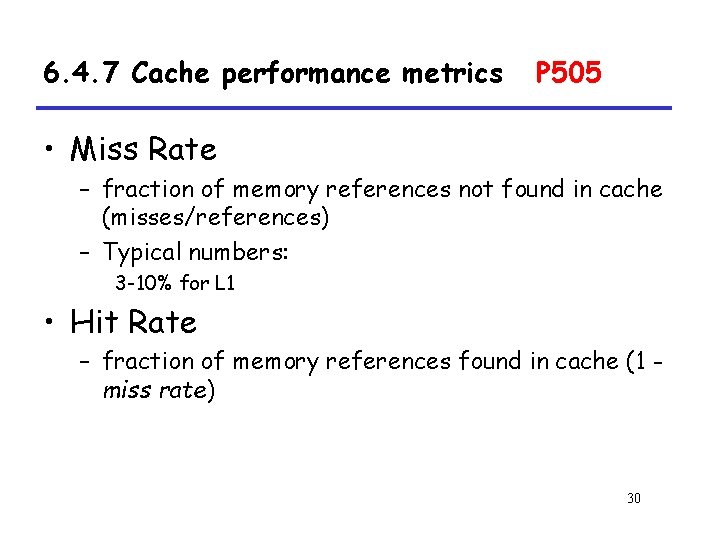

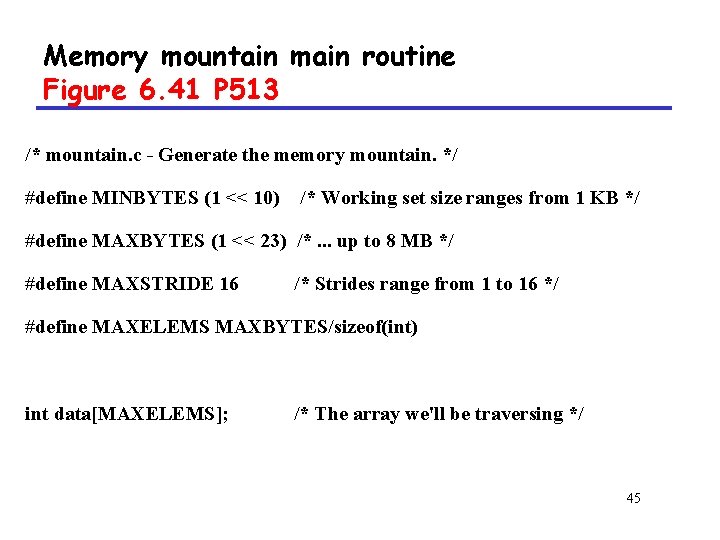

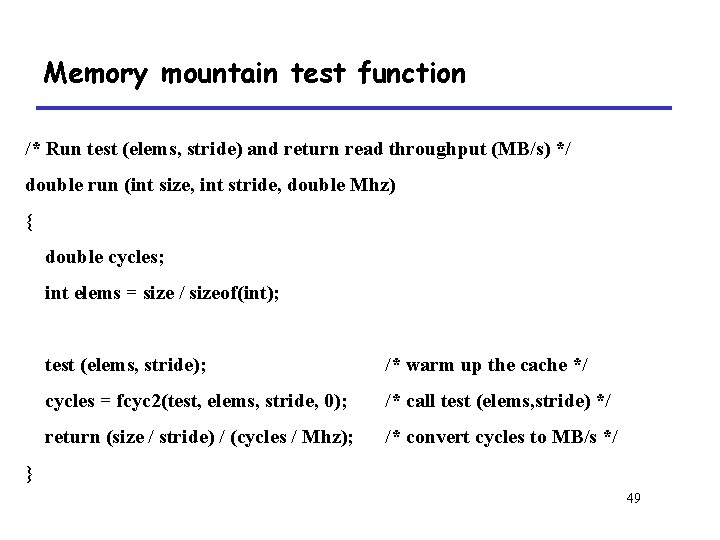

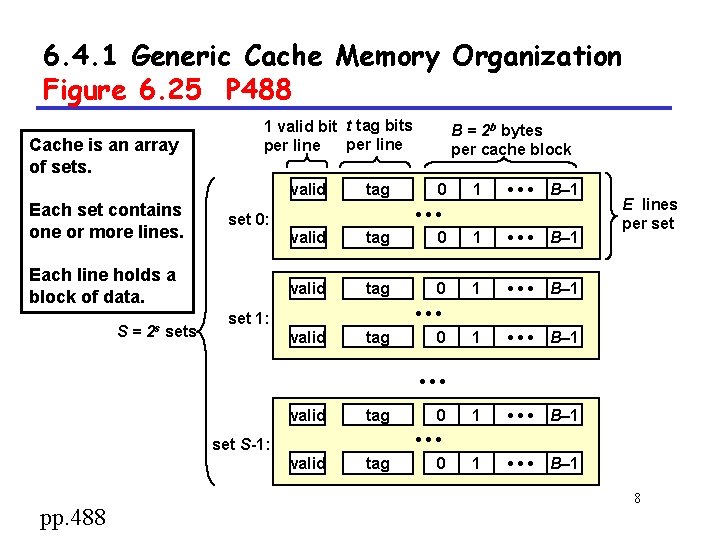

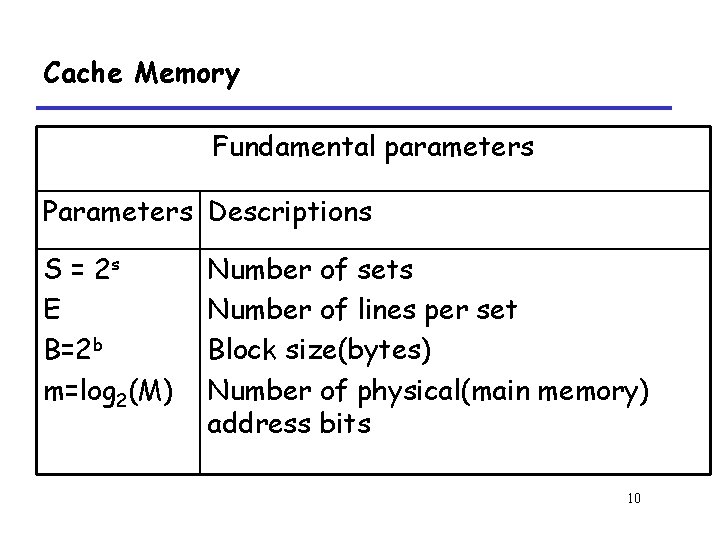

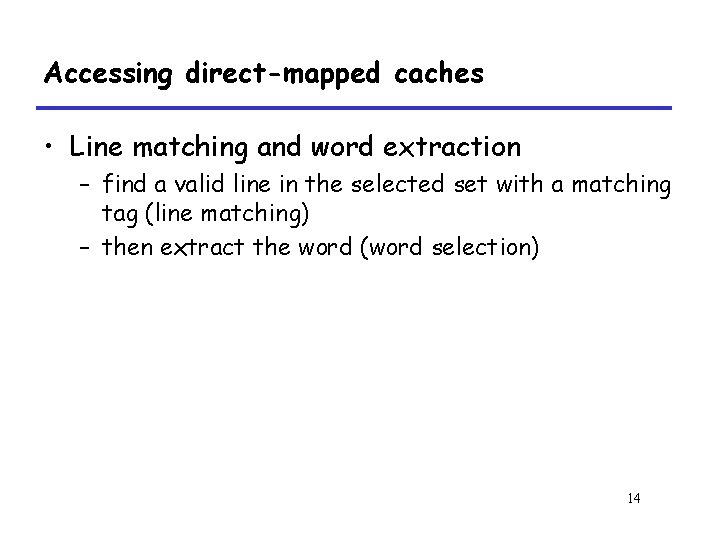

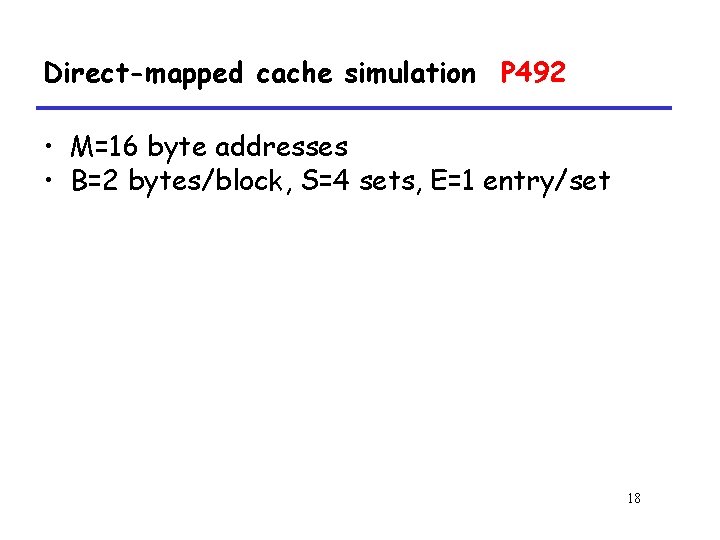

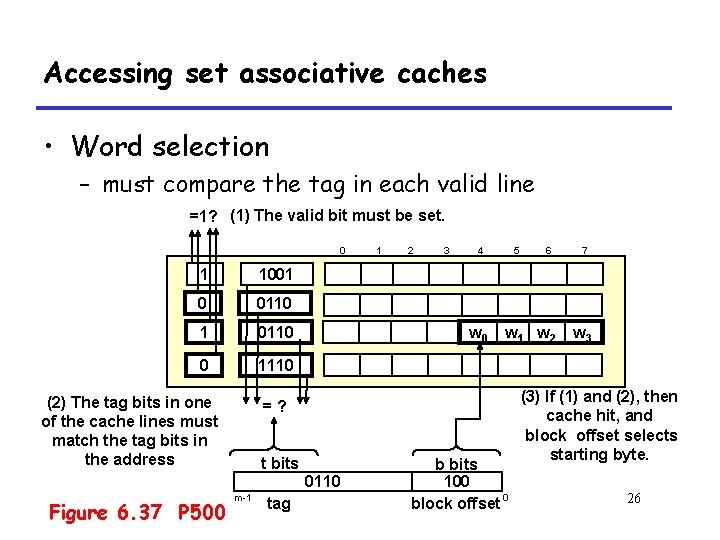

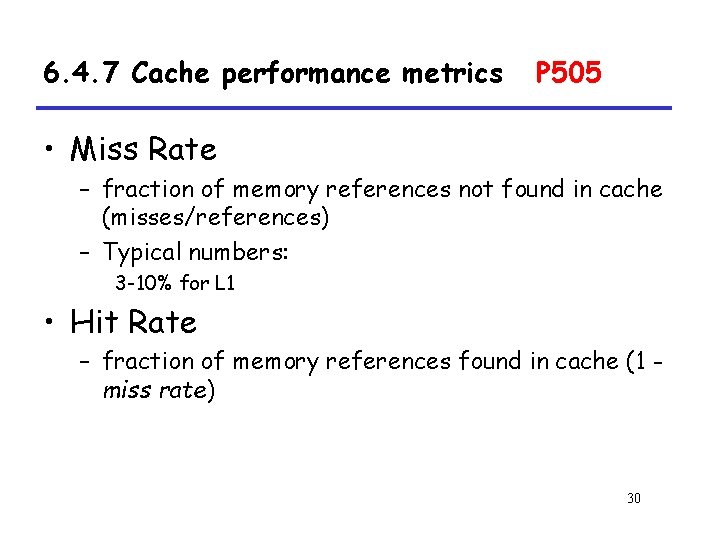

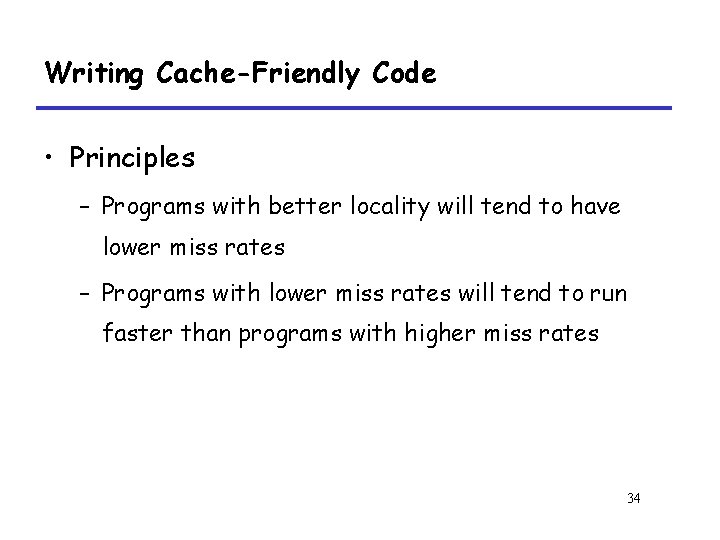

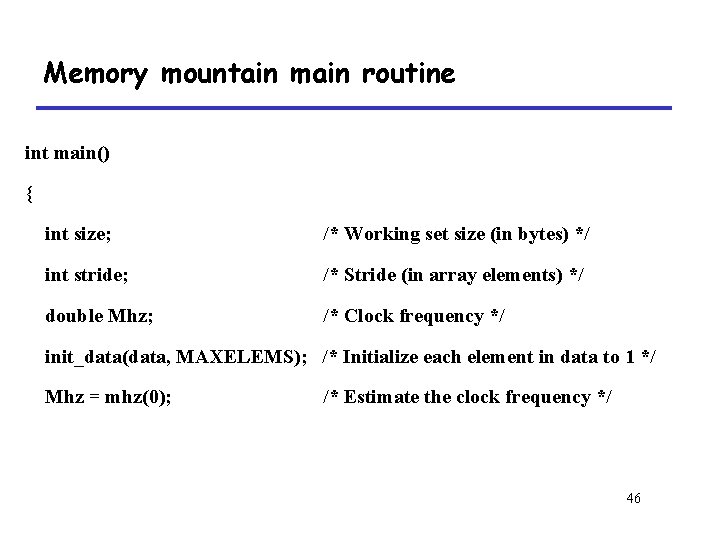

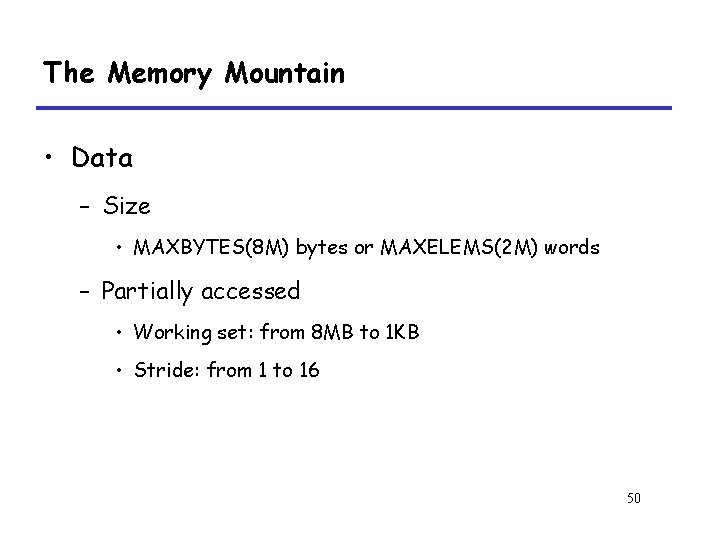

Writing cache-friendly code (p. 508): example with M = 4, N = 8, 10 cycles/iter

```c
#define M 4
#define N 8

/* Row-wise traversal: the inner loop walks along a row (stride-1) */
int sumvec(int a[M][N])
{
    int i, j, sum = 0;

    for (i = 0; i < M; i++)
        for (j = 0; j < N; j++)
            sum += a[i][j];
    return sum;
}
```

![Writing cache-friendly code: row-wise access order of a[i][j], i=0..3, j=0..7](https://slidetodoc.com/presentation_image_h2/1b915ed4ddb74524cc74c2239c1b3131/image-40.jpg)

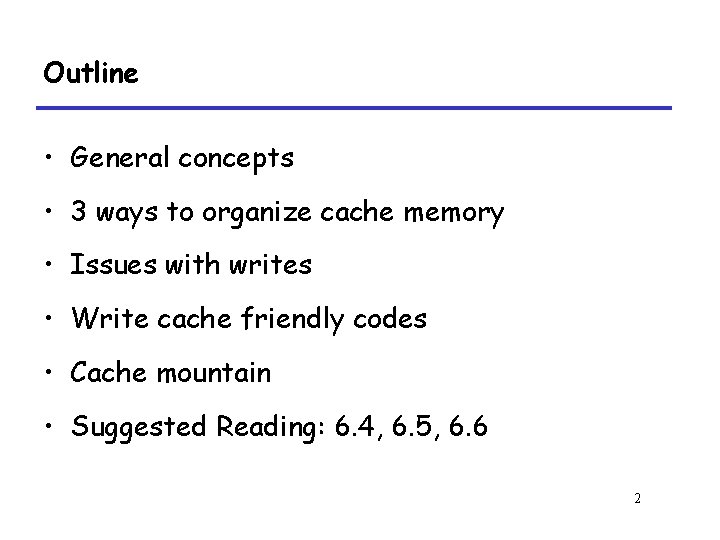

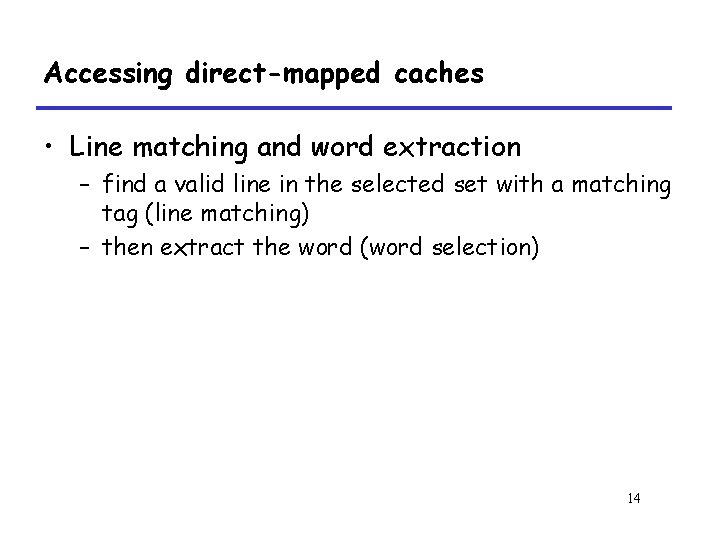

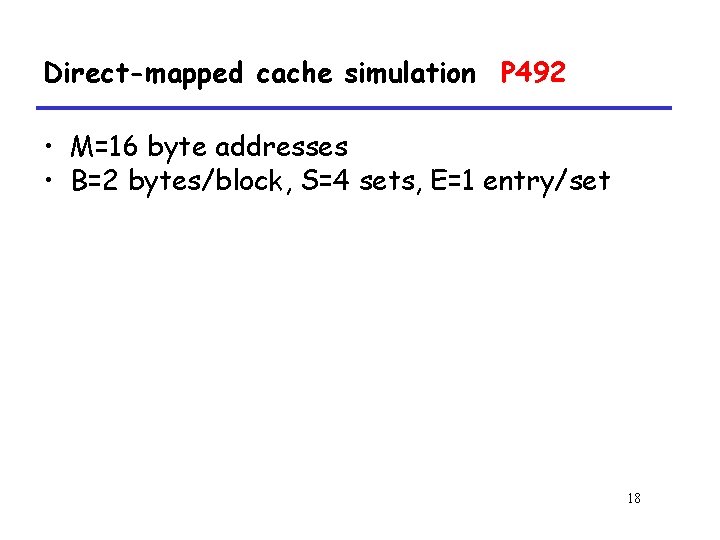

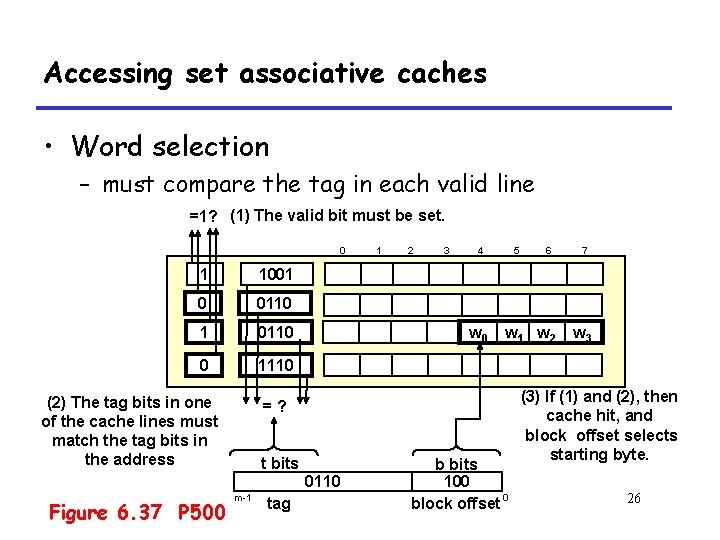

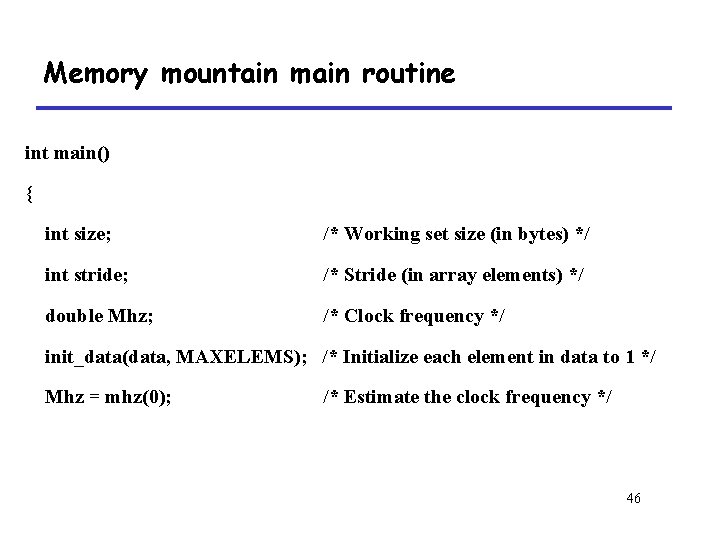

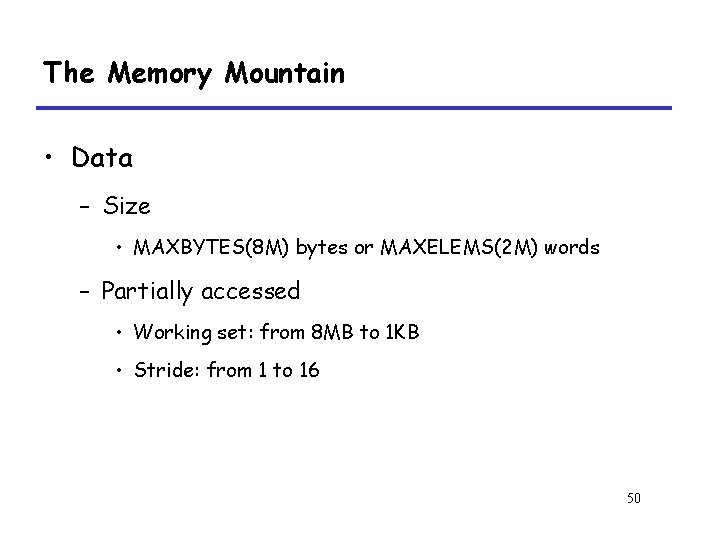

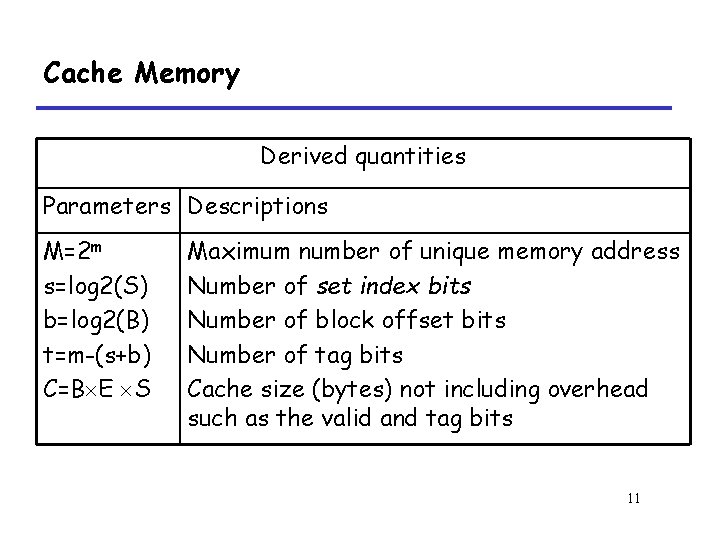

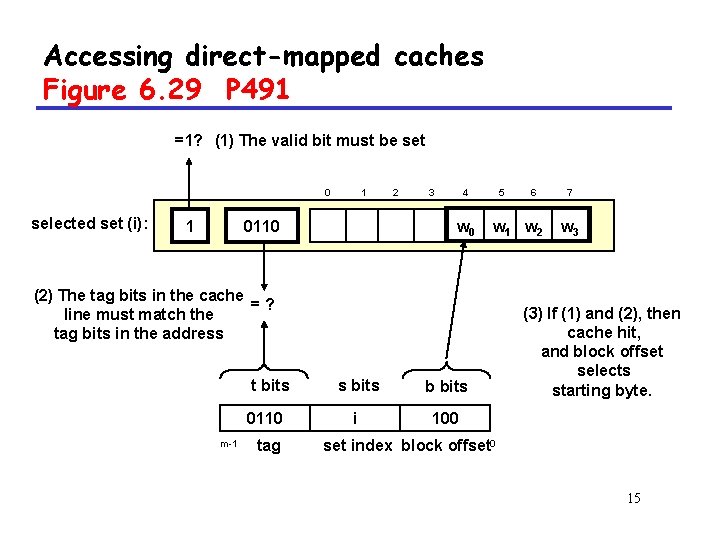

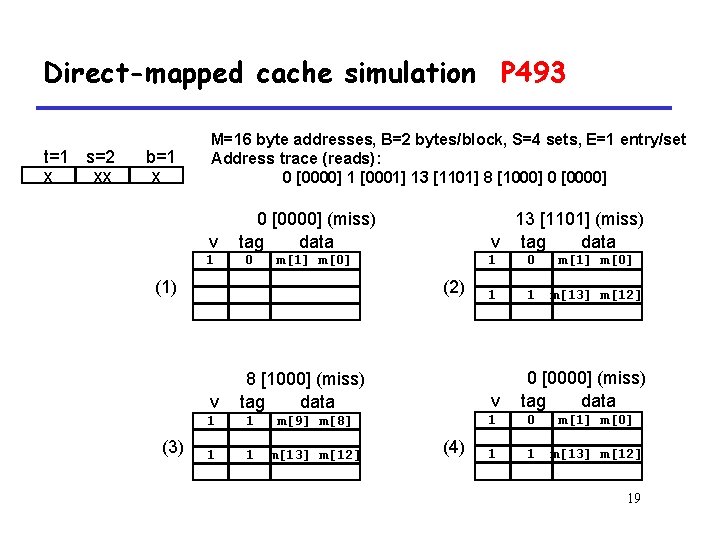

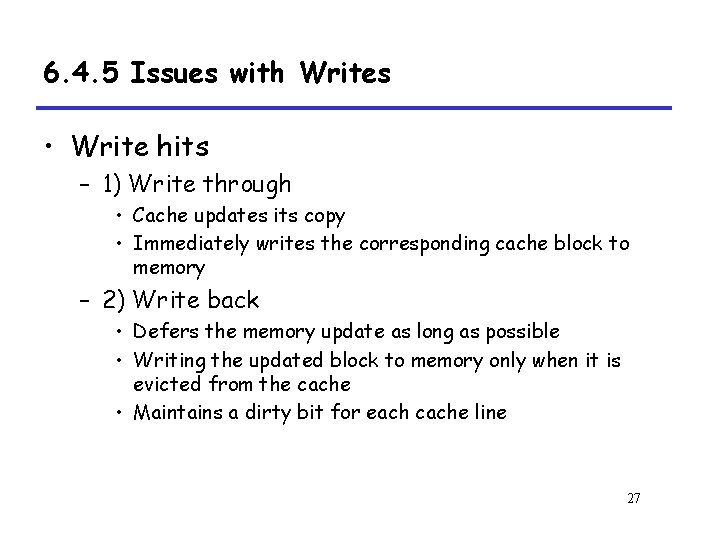

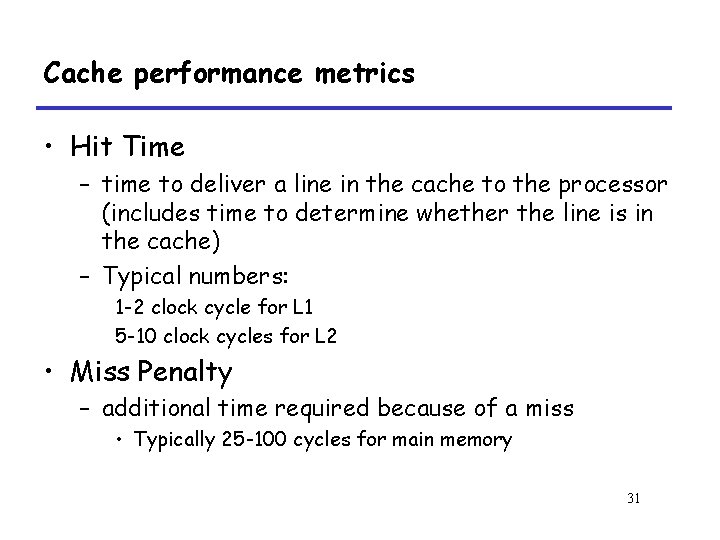

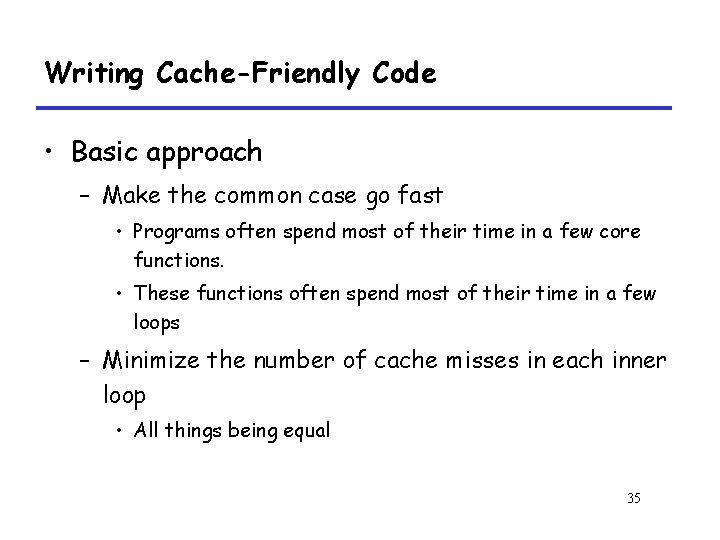

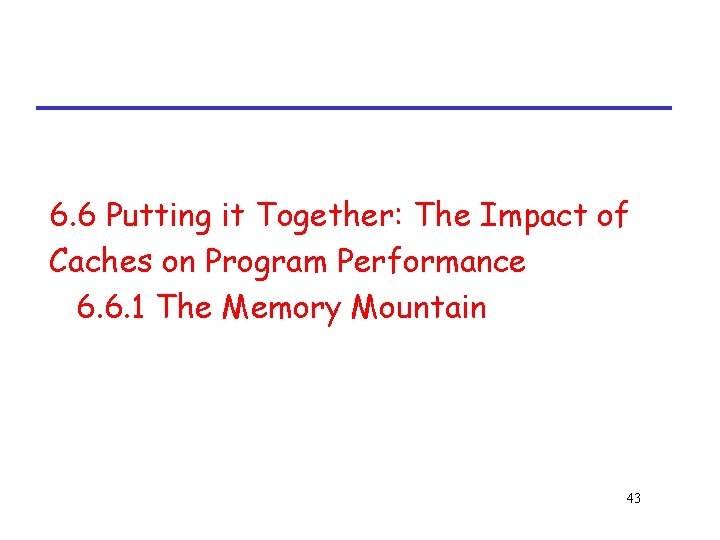

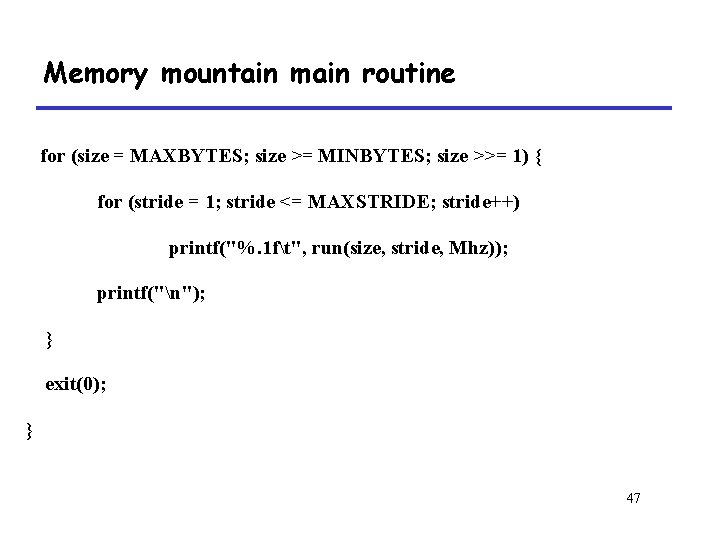

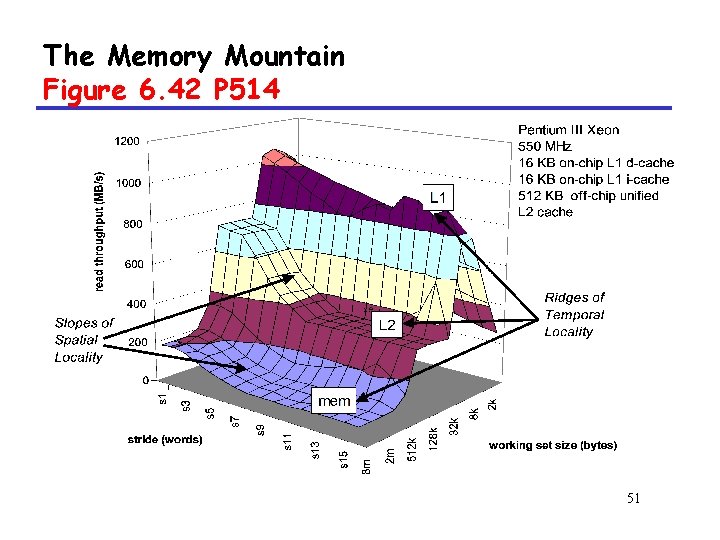

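Writing cache-friendly code: row-wise access order for a[i][j], [h]it or [m]iss

| a[i][j] | j=0 | j=1 | j=2 | j=3 | j=4 | j=5 | j=6 | j=7 |
|---|---|---|---|---|---|---|---|---|
| i=0 | 1 [m] | 2 [h] | 3 [h] | 4 [h] | 5 [m] | 6 [h] | 7 [h] | 8 [h] |
| i=1 | 9 [m] | 10 [h] | 11 [h] | 12 [h] | 13 [m] | 14 [h] | 15 [h] | 16 [h] |
| i=2 | 17 [m] | 18 [h] | 19 [h] | 20 [h] | 21 [m] | 22 [h] | 23 [h] | 24 [h] |
| i=3 | 25 [m] | 26 [h] | 27 [h] | 28 [h] | 29 [m] | 30 [h] | 31 [h] | 32 [h] |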
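The row-wise loop is stride-1 because C stores two-dimensional arrays in row-major order. A small sketch makes this explicit by measuring real addresses. The helper `byte_offset` is hypothetical, and it uses `char` pointers so the subtraction spans the whole array object.

```c
#include <stddef.h>

#define M 4
#define N 8

/* Byte distance of a[i][j] from a[0][0], measured from real addresses.
   In row-major order this equals (i*N + j) * sizeof(int). */
ptrdiff_t byte_offset(int a[M][N], int i, int j)
{
    return (const char *)&a[i][j] - (const char *)&a[0][0];
}
```

Consecutive j values are `sizeof(int)` bytes apart. Consecutive i values are N × `sizeof(int)` bytes apart (32 bytes with 4-byte ints), so a loop with i innermost has stride N.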

![Writing cache-friendly code (p. 508): example with M=4, N=8, 20 cycles/iter, int sumvec(int v[M][N])](https://slidetodoc.com/presentation_image_h2/1b915ed4ddb74524cc74c2239c1b3131/image-41.jpg)

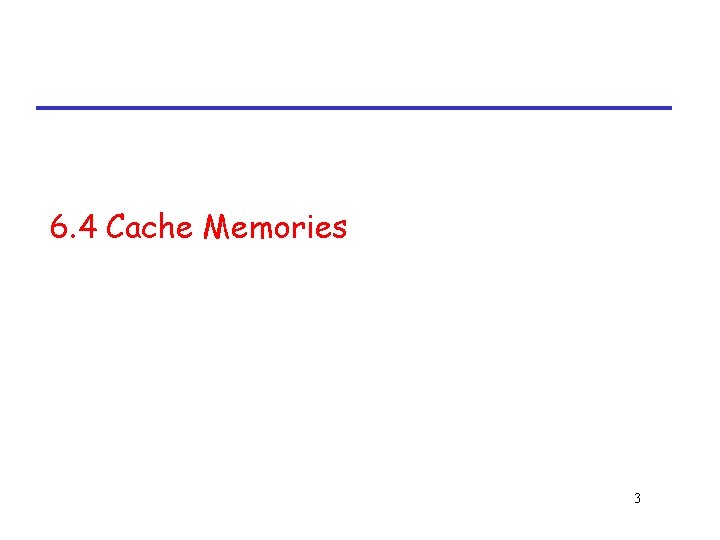

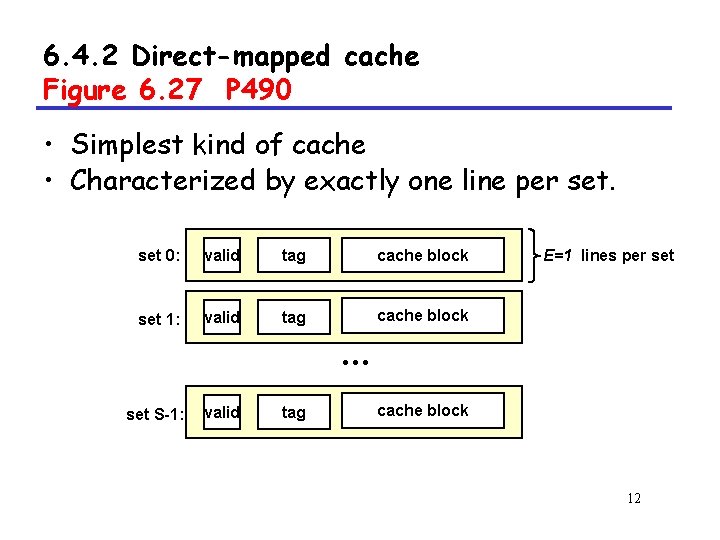

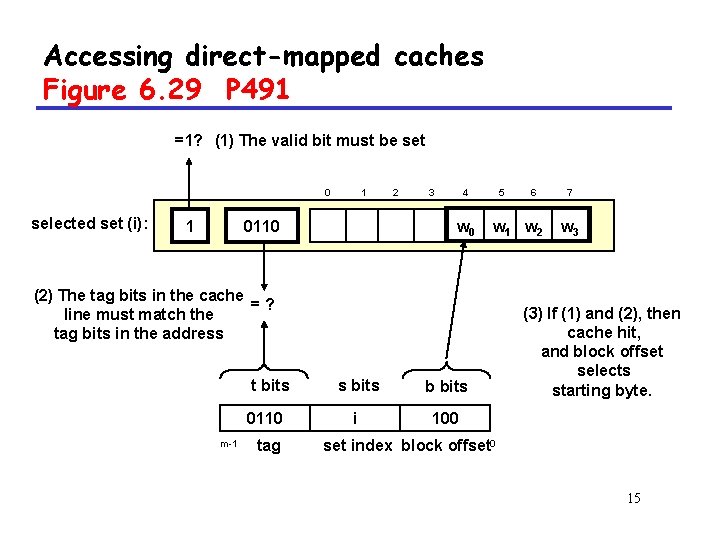

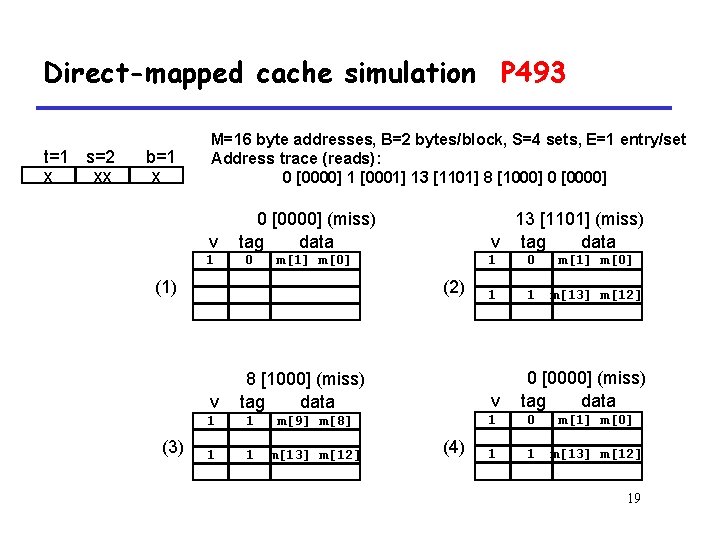

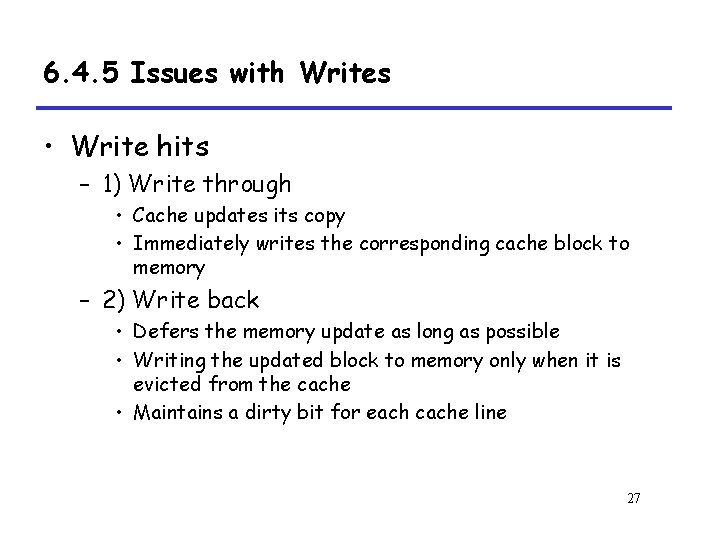

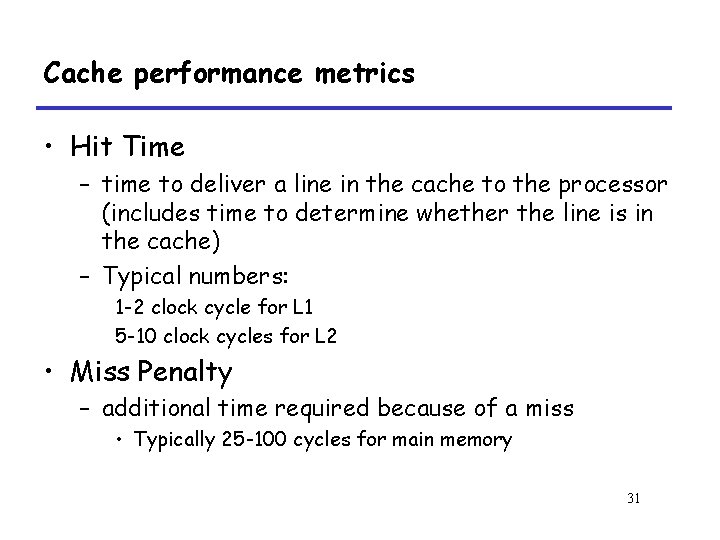

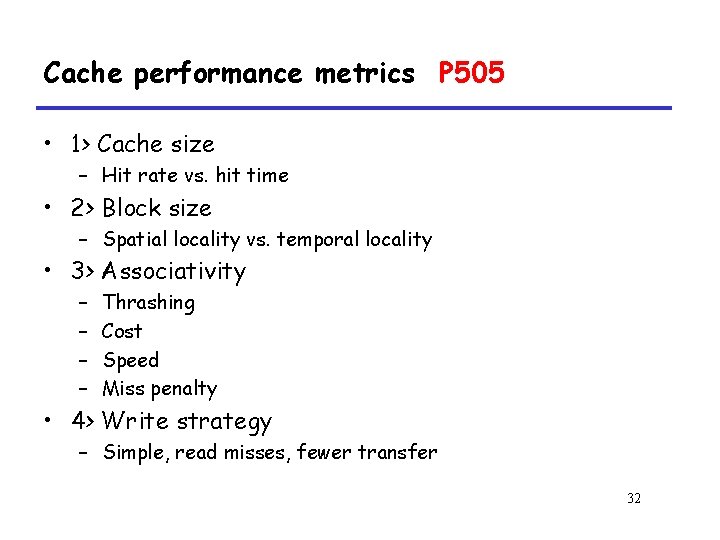

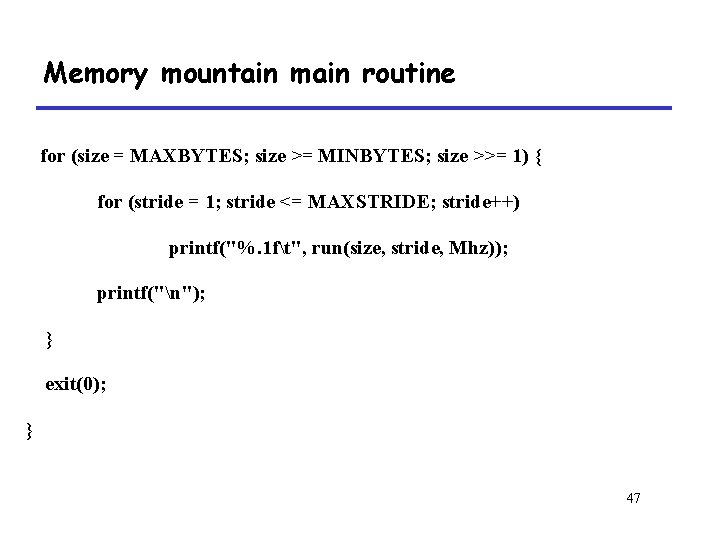

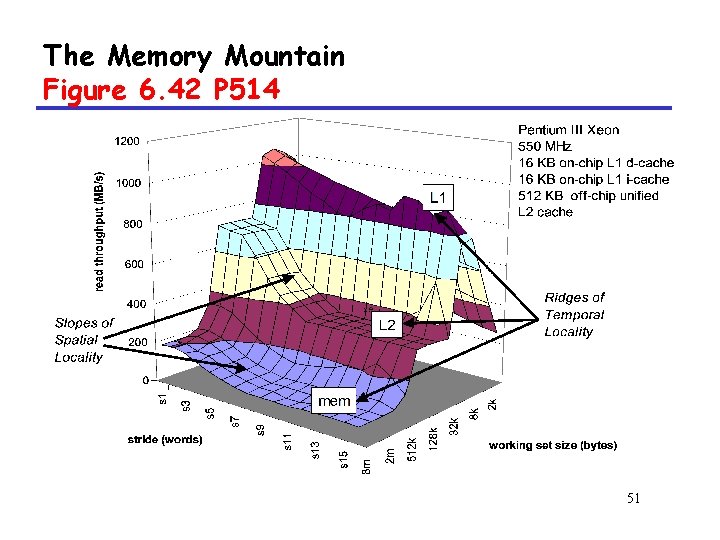

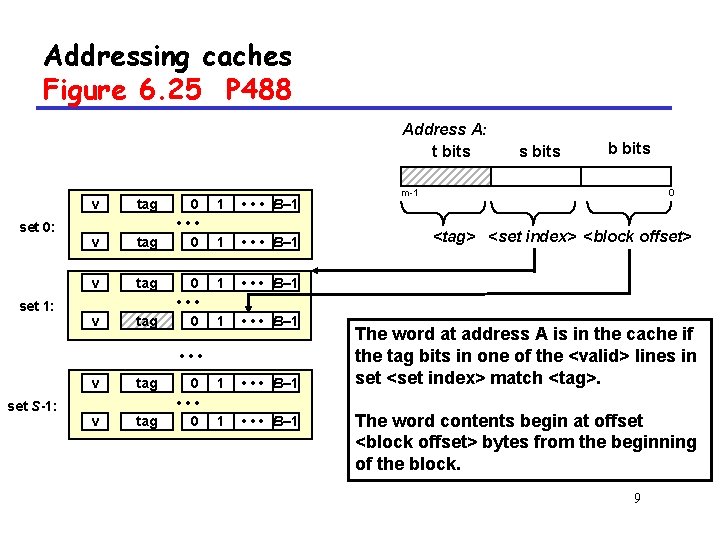

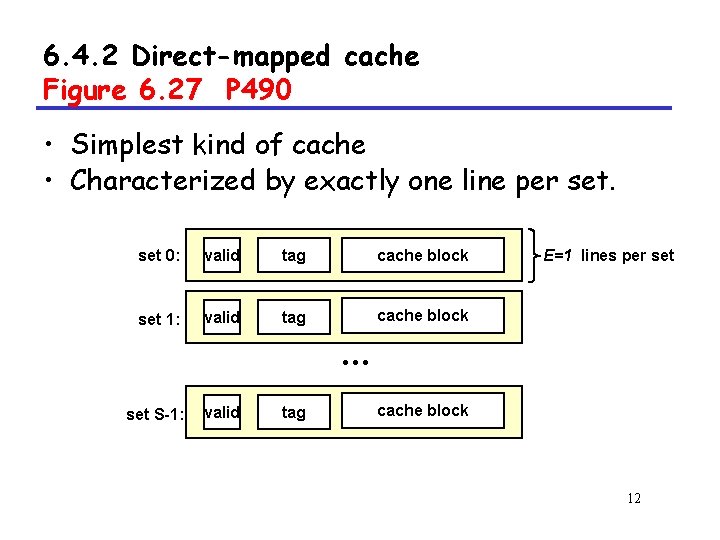

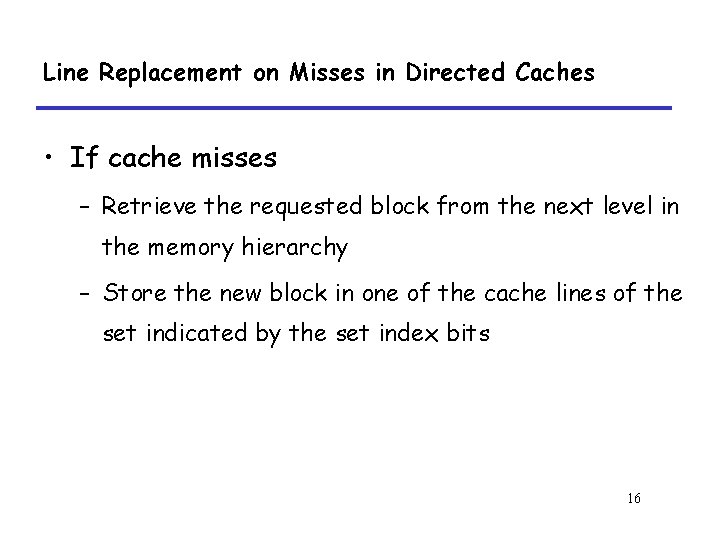

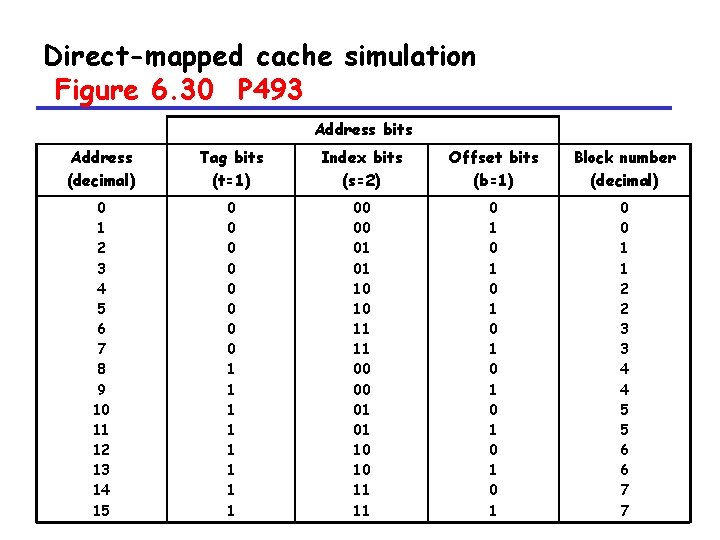

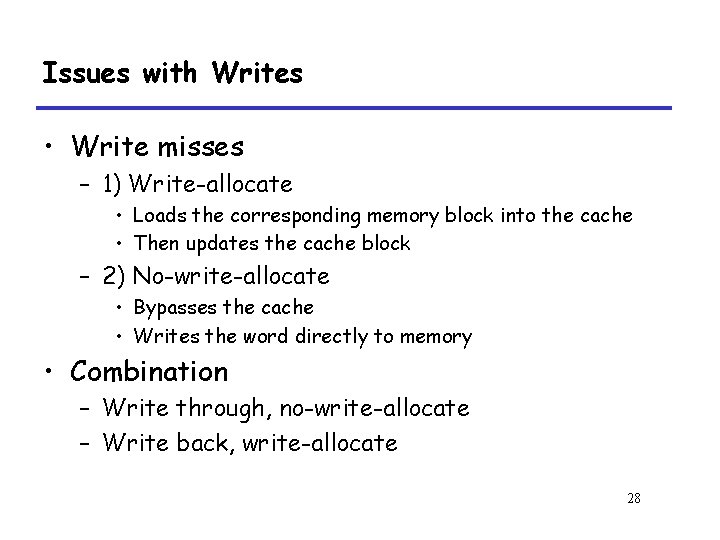

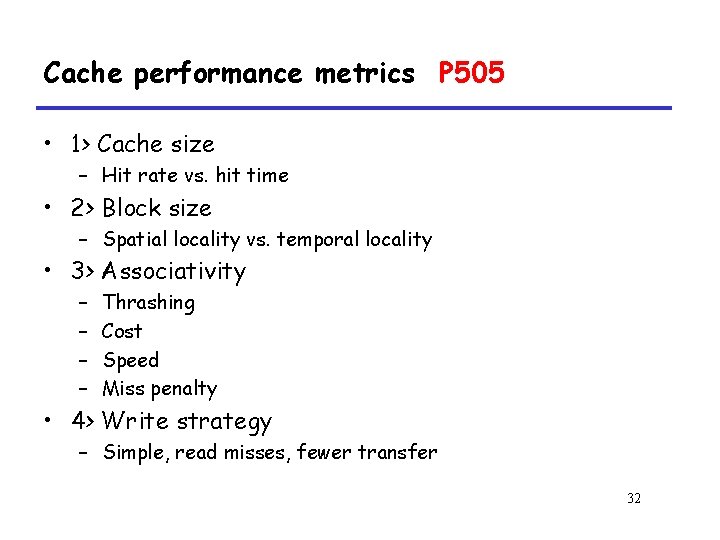

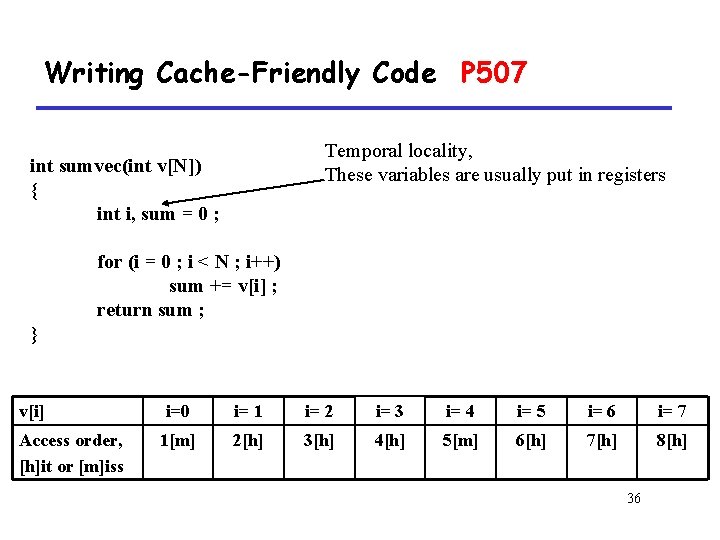

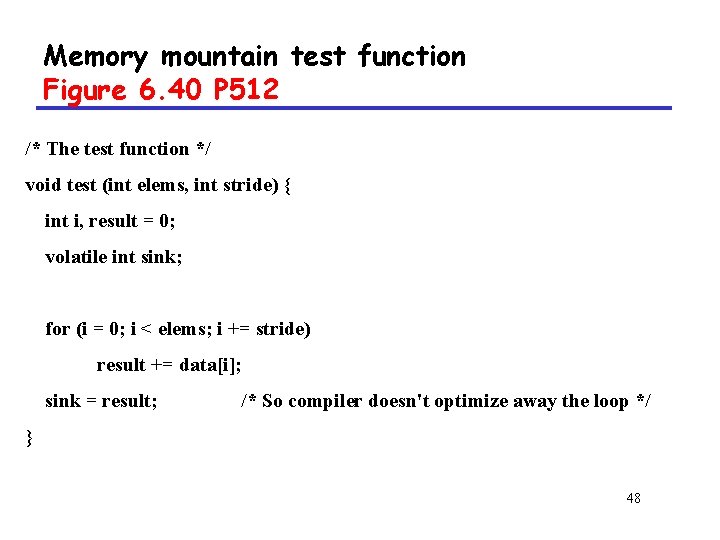

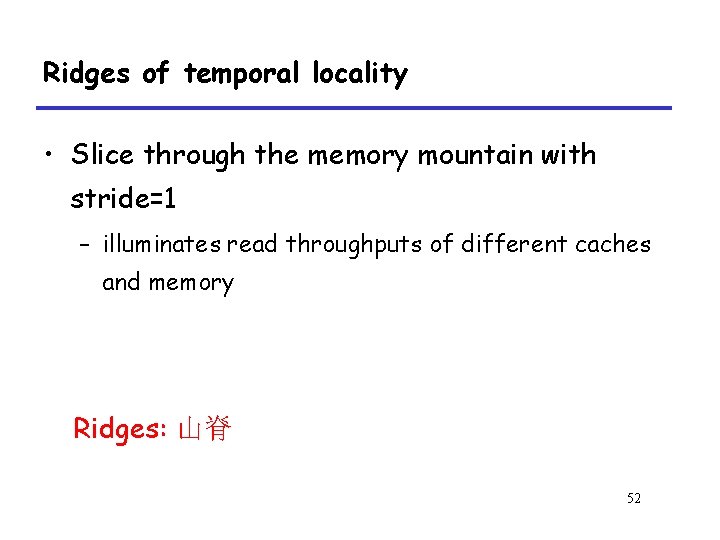

Writing cache-friendly code (p. 508): example with M = 4, N = 8, 20 cycles/iter

```c
#define M 4
#define N 8

/* Column-wise traversal: the inner loop walks down a column (stride-N) */
int sumvec(int v[M][N])
{
    int i, j, sum = 0;

    for (j = 0; j < N; j++)
        for (i = 0; i < M; i++)
            sum += v[i][j];
    return sum;
}
```

![Writing cache-friendly code: column-wise access order of a[i][j], j=0..7, i=0..3](https://slidetodoc.com/presentation_image_h2/1b915ed4ddb74524cc74c2239c1b3131/image-42.jpg)

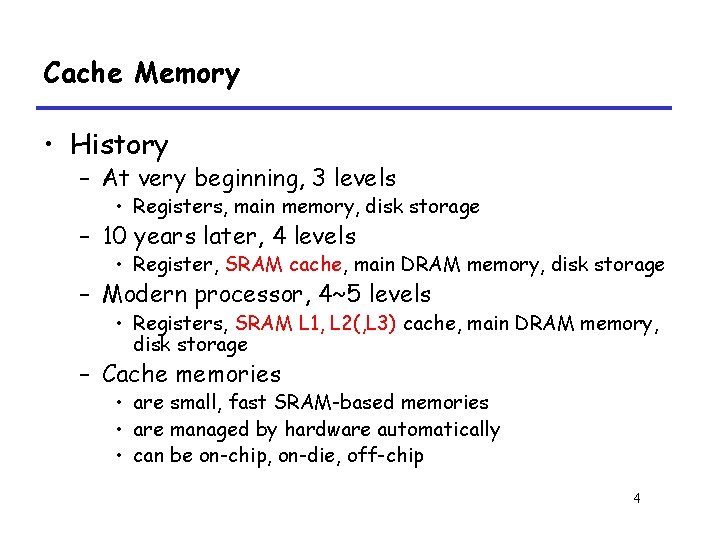

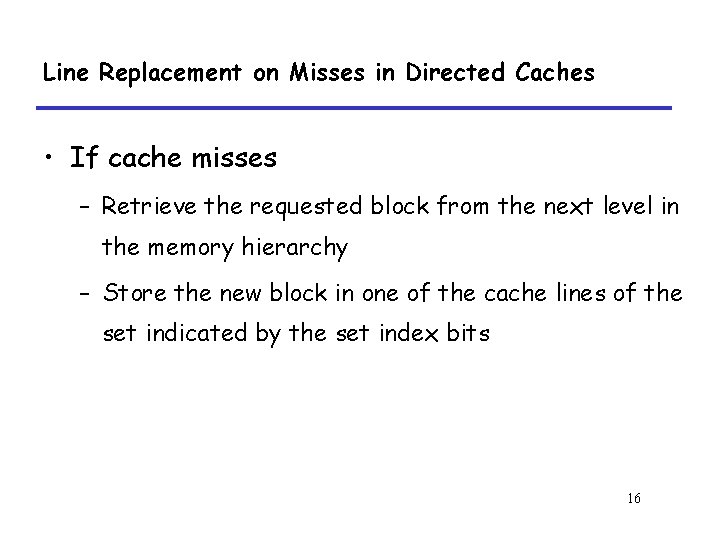

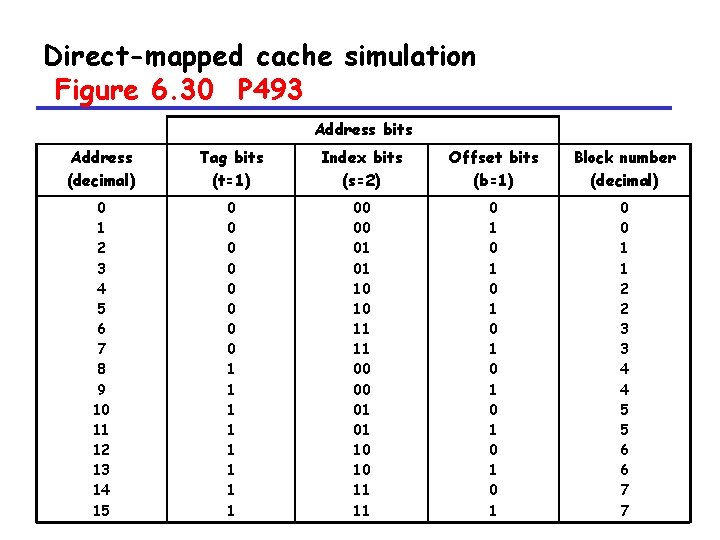

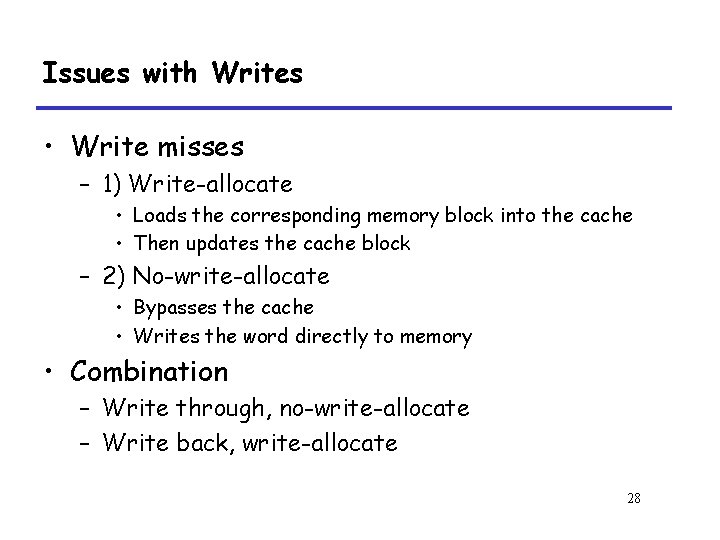

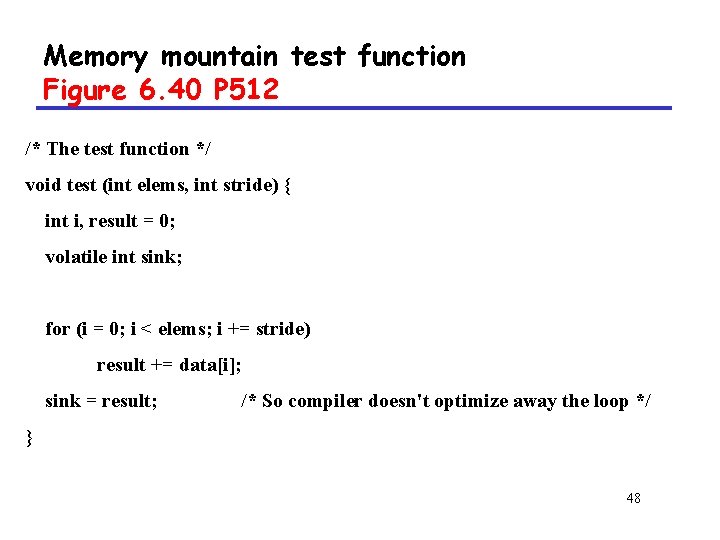

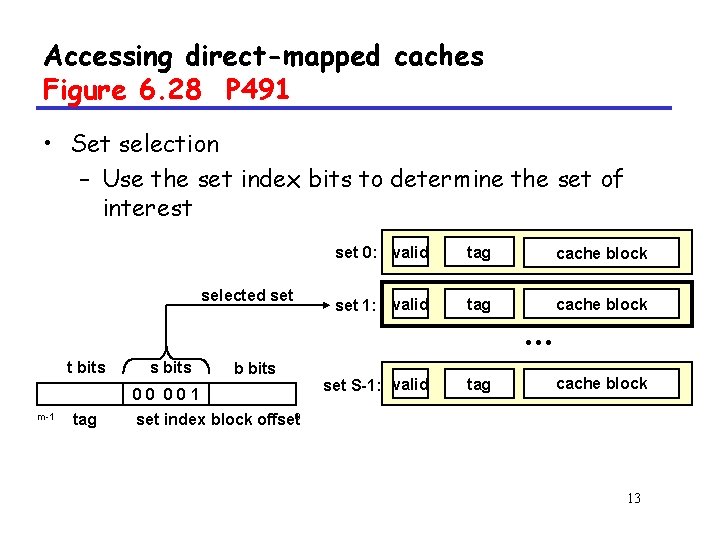

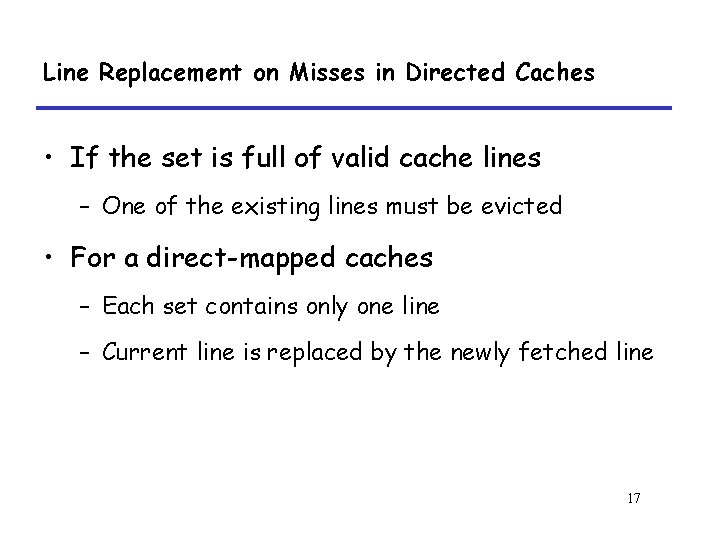

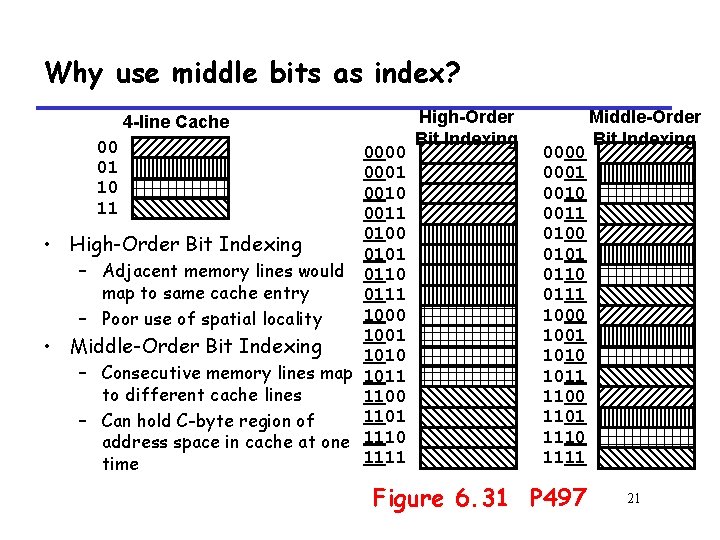

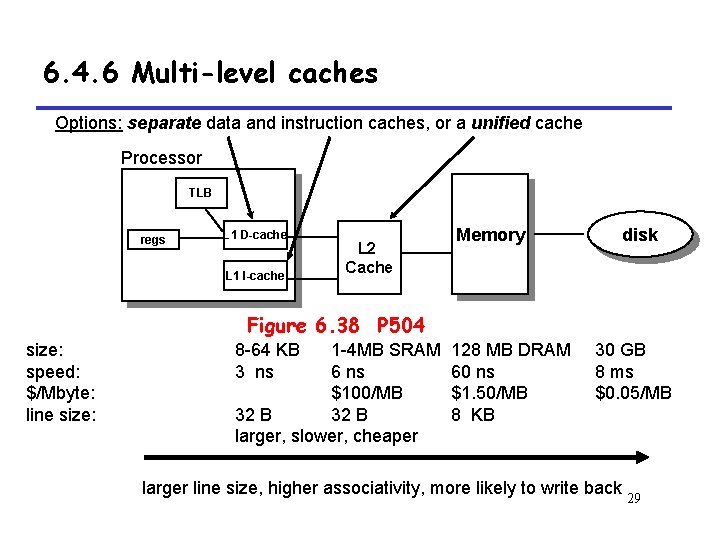

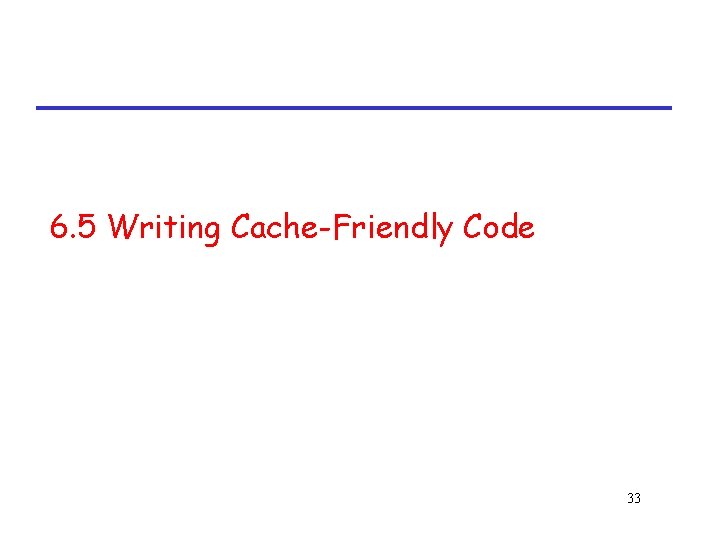

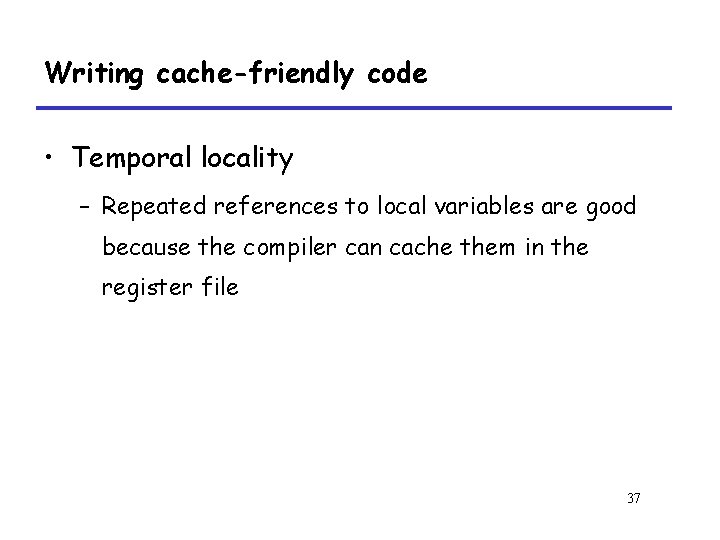

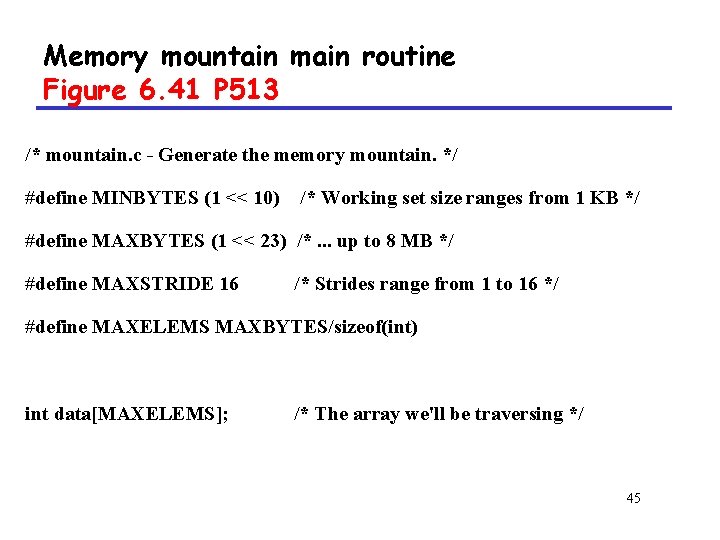

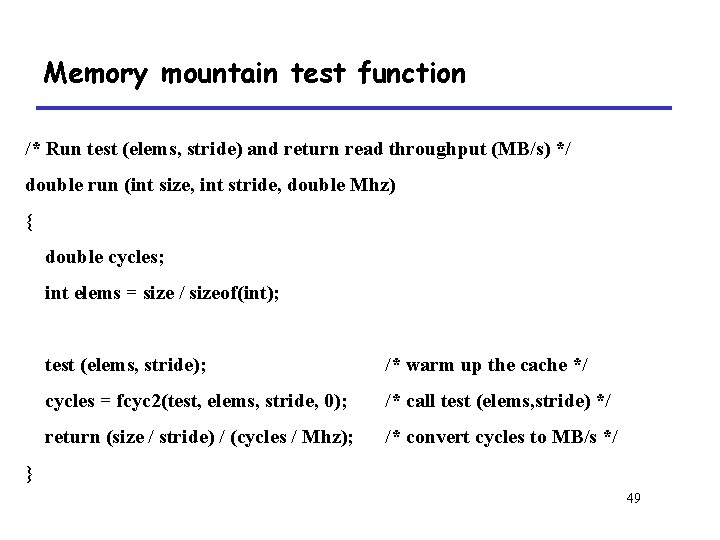

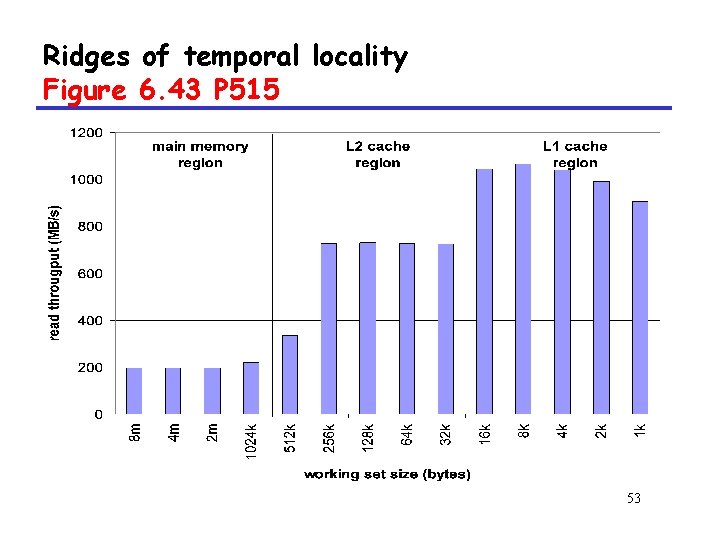

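Writing cache-friendly code: column-wise access order for a[i][j], [h]it or [m]iss (assuming the cache is too small to keep these blocks until the next column is read)

| a[i][j] | j=0 | j=1 | j=2 | j=3 | j=4 | j=5 | j=6 | j=7 |
|---|---|---|---|---|---|---|---|---|
| i=0 | 1 [m] | 5 [m] | 9 [m] | 13 [m] | 17 [m] | 21 [m] | 25 [m] | 29 [m] |
| i=1 | 2 [m] | 6 [m] | 10 [m] | 14 [m] | 18 [m] | 22 [m] | 26 [m] | 30 [m] |
| i=2 | 3 [m] | 7 [m] | 11 [m] | 15 [m] | 19 [m] | 23 [m] | 27 [m] | 31 [m] |
| i=3 | 4 [m] | 8 [m] | 12 [m] | 16 [m] | 20 [m] | 24 [m] | 28 [m] | 32 [m] |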
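Both tables can be reproduced by one small simulation. The cache here is hypothetical and deliberately tiny: direct-mapped, 2 sets, 16-byte blocks (32 bytes total), with 4-byte ints and a block-aligned 128-byte array. These parameters are assumptions chosen to match the slides' outcome, not values the slides state.

```c
#include <stdbool.h>

#define M 4
#define N 8
#define INT_BYTES 4      /* assumed: 4-byte ints, as on the slides       */
#define BLOCK_BYTES 16   /* assumed: 16-byte blocks (4 ints)             */
#define NSETS 2          /* assumed: tiny direct-mapped cache, 32 bytes  */

typedef struct { bool valid; long tag; } line;

/* Reads the int at byte offset off; returns 1 on a miss, 0 on a hit. */
static int access_int(line cache[NSETS], long off)
{
    long blk = off / BLOCK_BYTES;
    long set = blk % NSETS, tag = blk / NSETS;

    if (cache[set].valid && cache[set].tag == tag)
        return 0;
    cache[set].valid = true;
    cache[set].tag = tag;
    return 1;
}

/* Counts misses for the row-wise (i outer) or column-wise (j outer) loop. */
int count_misses(bool row_wise)
{
    line cache[NSETS] = {{false, 0}};
    int misses = 0;

    for (int outer = 0; outer < (row_wise ? M : N); outer++)
        for (int inner = 0; inner < (row_wise ? N : M); inner++) {
            int i = row_wise ? outer : inner;
            int j = row_wise ? inner : outer;
            misses += access_int(cache, (long)(i * N + j) * INT_BYTES);
        }
    return misses;
}
```

Under these assumptions the row-wise loop misses 8 of 32 reads (25%) and the column-wise loop misses all 32. With a cache large enough for the whole array (e.g. `NSETS` = 8), the column-wise loop would miss only on the first touch of each of the 8 blocks.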

6.6 Putting It Together: The Impact of Caches on Program Performance

6.6.1 The Memory Mountain

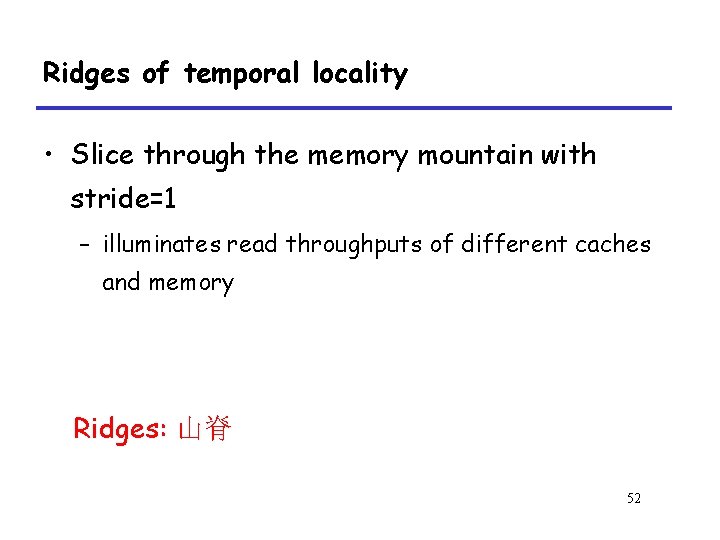

The Memory Mountain (p. 512)

- Read throughput (read bandwidth)
  - The rate at which a program reads data from the memory system
- Memory mountain
  - A two-dimensional function of read throughput versus temporal and spatial locality
  - Characterizes the capabilities of a particular computer's memory system

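Memory mountain main routine (Figure 6.41, p. 513)

```c
/* mountain.c - Generate the memory mountain. */
#include <stdio.h>
#include <stdlib.h>
#include "clock.h"    /* mhz(): CS:APP clock-frequency helper */
#include "fcyc2.h"    /* fcyc2(): CS:APP cycle-measurement helper */

#define MINBYTES (1 << 10)  /* Working set size ranges from 1 KB */
#define MAXBYTES (1 << 23)  /* ... up to 8 MB */
#define MAXSTRIDE 16        /* Strides range from 1 to 16 */
#define MAXELEMS (MAXBYTES / sizeof(int))

int data[MAXELEMS];         /* The array we'll be traversing */

void init_data(int *data, int n);
void test(int elems, int stride);
double run(int size, int stride, double Mhz);
```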

Memory mountain main routine

```c
int main(void)
{
    int size;     /* Working set size (in bytes) */
    int stride;   /* Stride (in array elements) */
    double Mhz;   /* Clock frequency */

    init_data(data, MAXELEMS);  /* Initialize each element in data to 1 */
    Mhz = mhz(0);               /* Estimate the clock frequency */
```

Memory mountain main routine

```c
    for (size = MAXBYTES; size >= MINBYTES; size >>= 1) {
        for (stride = 1; stride <= MAXSTRIDE; stride++)
            printf("%.1f\t", run(size, stride, Mhz));   /* one row per working-set size */
        printf("\n");
    }
    exit(0);
}
```

Memory mountain test function (Figure 6.40, p. 512)

```c
/* The test function */
void test(int elems, int stride)
{
    int i, result = 0;
    volatile int sink;

    for (i = 0; i < elems; i += stride)
        result += data[i];
    sink = result;  /* So compiler doesn't optimize away the loop */
}
```

Memory mountain test function

```c
/* Run test(elems, stride) and return read throughput (MB/s) */
double run(int size, int stride, double Mhz)
{
    double cycles;
    int elems = size / sizeof(int);

    test(elems, stride);                       /* warm up the cache */
    cycles = fcyc2(test, elems, stride, 0);    /* call test(elems, stride) */
    return (size / stride) / (cycles / Mhz);   /* convert cycles to MB/s */
}
```
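`mhz` and `fcyc2` come from the CS:APP support code, which is not shown here. Without that code, a rough portable stand-in can time the same traversal with C11 `timespec_get`. Results are noisier than a cycle counter. The array size, `run_portable`, and the repetition count are hypothetical, and the data array is simply zero-initialized.

```c
#include <time.h>

#define MAXELEMS_DEMO (1 << 16)       /* hypothetical: 256 KB of ints */
static int demo_data[MAXELEMS_DEMO];  /* zero-initialized */

/* Same traversal as test(): sum every stride-th element. Reading through a
   volatile pointer keeps the compiler from skipping or hoisting the loads. */
static int demo_test(int elems, int stride)
{
    const volatile int *p = demo_data;
    int result = 0;
    for (int i = 0; i < elems; i += stride)
        result += p[i];
    return result;
}

static double now_seconds(void)
{
    struct timespec ts;
    timespec_get(&ts, TIME_UTC);      /* C11 wall-clock time */
    return (double)ts.tv_sec + (double)ts.tv_nsec * 1e-9;
}

/* Hypothetical portable stand-in for run(): size must not exceed
   sizeof demo_data. Returns bytes read per microsecond, i.e. MB/s,
   using the same size / stride byte count as run(). */
double run_portable(int size, int stride, int reps)
{
    int elems = size / (int)sizeof(int);
    int sum = demo_test(elems, stride);          /* warm up the cache */
    double start = now_seconds();
    for (int r = 0; r < reps; r++)
        sum += demo_test(elems, stride);
    double secs = now_seconds() - start;
    volatile int sink = sum;                     /* keep the result live */
    (void)sink;
    return (double)reps * (size / stride) / (secs * 1e6);
}
```

Looping `run_portable` over sizes and strides, as `main` does with `run`, gives a coarse version of the mountain limited to this array's 256 KB.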

The Memory Mountain

- Data
  - Size: MAXBYTES (8 MB) bytes, or MAXELEMS (2M) 4-byte words
  - Partially accessed
    - Working set: from 8 MB down to 1 KB
    - Stride: from 1 to 16

The Memory Mountain (Figure 6.42, p. 514)

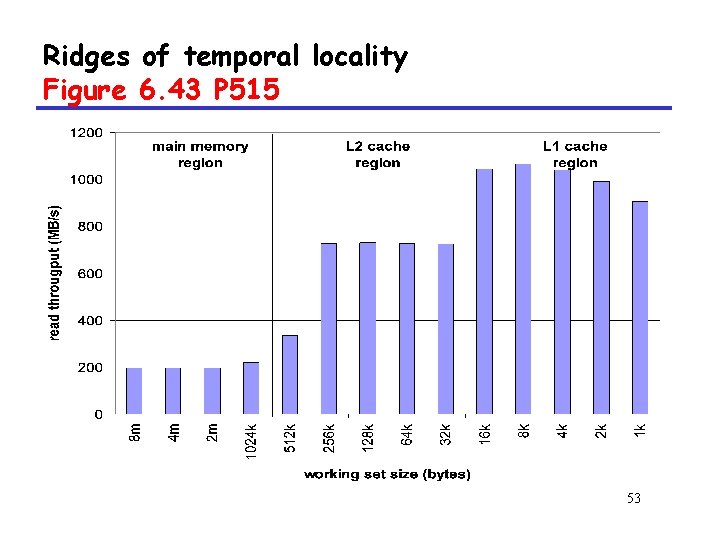

Ridges of temporal locality

- Slice through the memory mountain with stride = 1
  - Reveals the read throughput of each cache level and of main memory

Ridges of temporal locality (Figure 6.43, p. 515)

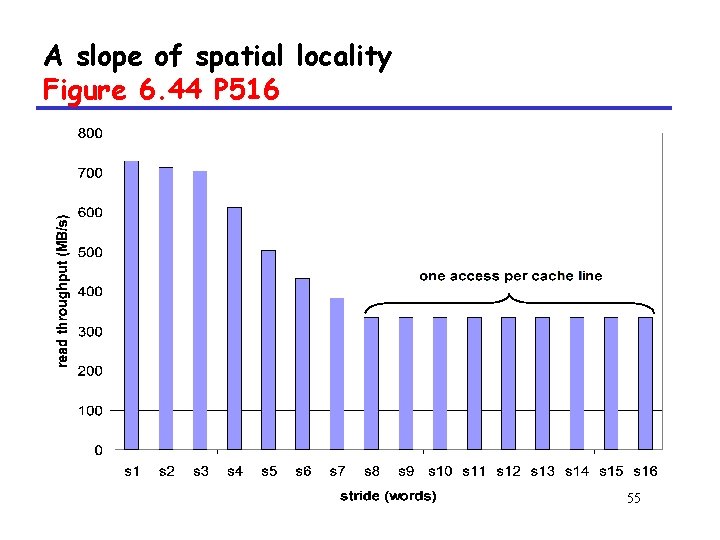

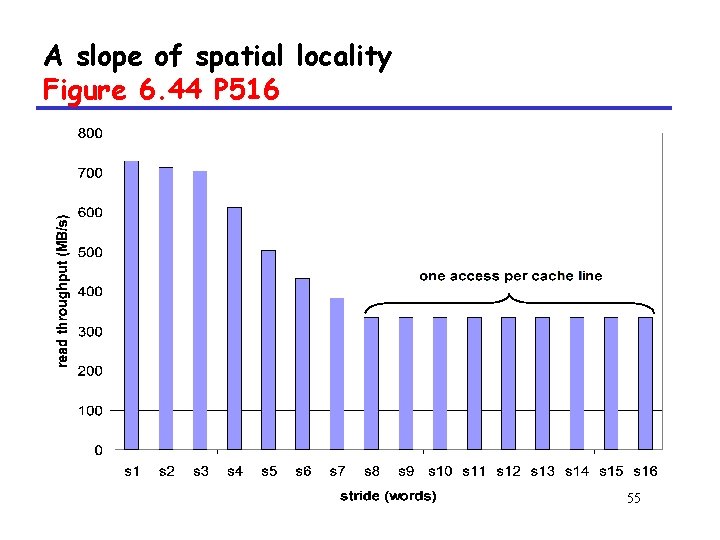

A slope of spatial locality

- Slice through the memory mountain with size = 256 KB
  - Shows the cache block size

A slope of spatial locality (Figure 6.44, p. 516)