Cache Design

Handouts: Lecture Slides

![Basic Cache Algorithm](http://slidetodoc.com/presentation_image_h2/b837116e7ed41eb3be99582c7c3b3d90/image-2.jpg)

Basic Cache Algorithm

[Figure: the CPU sends references to a cache whose lines pair a tag (A, B, ...) with data (Mem[A], Mem[B], ...); a fraction α of references are served by the cache and the remaining (1 - α) go to MAIN MEMORY.]

ON REFERENCE TO Mem[X]: Look for X among cache tags...

HIT: X = TAG(i), for some cache line i
– READ: return DATA(i)
– WRITE: change DATA(i); Start Write to Mem[X]

MISS: X not found in TAG of any cache line
– REPLACEMENT SELECTION:
  » Select some line k to hold Mem[X]
– READ: Read Mem[X]; Set TAG(k) = X, DATA(k) = Mem[X]
– WRITE: Start Write to Mem[X]; Set TAG(k) = X, DATA(k) = new Mem[X]
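The algorithm above can be sketched in Python as a tiny write-through cache. This is a minimal sketch under several assumptions: main memory is a Python dict from word address to value, the cache holds a hypothetical `num_lines` one-word lines, any line can hold any address (so the tag is simply the address), and REPLACEMENT SELECTION evicts the oldest filled line (FIFO, one of the cheaper policies mentioned later). The class and method names are illustrative only.

```python
from collections import OrderedDict


class WriteThroughCache:
    """Hypothetical fully associative, write-through cache of one-word lines."""

    def __init__(self, memory: dict, num_lines: int):
        self.memory = memory            # main memory: address -> value
        self.num_lines = num_lines
        self.lines = OrderedDict()      # TAG -> DATA, oldest fill first
        self.hits = self.misses = self.memory_writes = 0

    def _fill(self, x, value):
        # REPLACEMENT SELECTION (FIFO here): evict the oldest line if full.
        if len(self.lines) >= self.num_lines:
            self.lines.popitem(last=False)
        self.lines[x] = value           # TAG(k) = X, DATA(k) = value

    def read(self, x):
        if x in self.lines:             # HIT: X = TAG(i)
            self.hits += 1
            return self.lines[x]        # return DATA(i)
        self.misses += 1                # MISS: read Mem[X] into line k
        value = self.memory[x]
        self._fill(x, value)
        return value

    def write(self, x, value):
        self.memory[x] = value          # write-through: start write to Mem[X]
        self.memory_writes += 1
        if x in self.lines:             # HIT: change DATA(i)
            self.hits += 1
            self.lines[x] = value
        else:                           # MISS: TAG(k) = X, DATA(k) = new Mem[X]
            self.misses += 1
            self._fill(x, value)
```

With `mem = {0: 10, 1: 11, 2: 12}` and two lines, reading address 0 twice gives one miss and then one hit, and every `write` updates `mem` immediately, whether it hits or misses.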

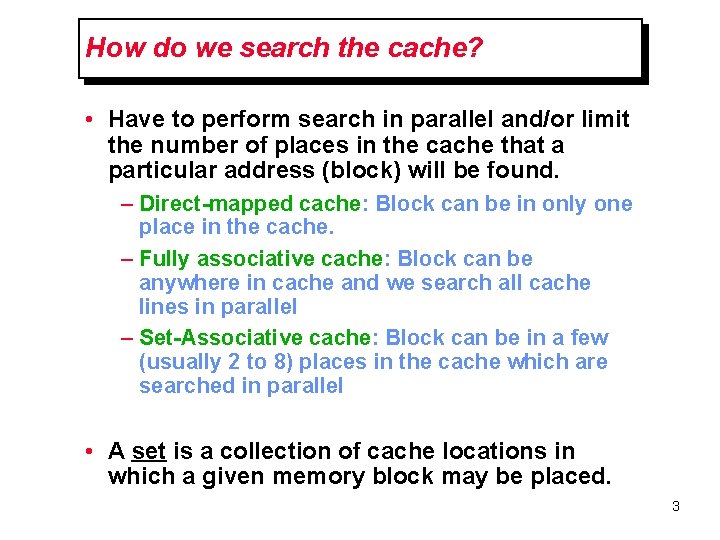

How do we search the cache?

• We have to perform the search in parallel and/or limit the number of places in the cache where a particular address (block) can be found.
– Direct-mapped cache: a block can be in only one place in the cache.
– Fully associative cache: a block can be anywhere in the cache, and we search all cache lines in parallel.
– Set-associative cache: a block can be in a few (usually 2 to 8) places in the cache, which are searched in parallel.
• A set is a collection of cache locations in which a given memory block may be placed.
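The three organizations differ only in how many lines a block may occupy. A minimal sketch, assuming a cache of `num_lines` lines grouped into sets of `ways` lines each, with set `s` laid out as lines `s*ways` through `s*ways + ways - 1` (a hypothetical layout chosen for illustration): direct-mapped is `ways = 1` and fully associative is `ways = num_lines`. The returned lines are the ones hardware would compare in parallel.

```python
def candidate_lines(block_addr: int, num_lines: int, ways: int) -> list[int]:
    """Return the cache lines that may hold block `block_addr`.

    ways == 1          -> direct-mapped (exactly one place)
    1 < ways < lines   -> N-way set-associative (one set of N places)
    ways == num_lines  -> fully associative (anywhere)
    """
    if num_lines % ways != 0:
        raise ValueError("num_lines must be a multiple of ways")
    num_sets = num_lines // ways
    s = block_addr % num_sets              # the one set this block maps to
    return list(range(s * ways, s * ways + ways))


# An 8-line cache, block 13:
print(candidate_lines(13, 8, 1))  # [5]
print(candidate_lines(13, 8, 2))  # [2, 3]
print(candidate_lines(13, 8, 8))  # [0, 1, 2, 3, 4, 5, 6, 7]
```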

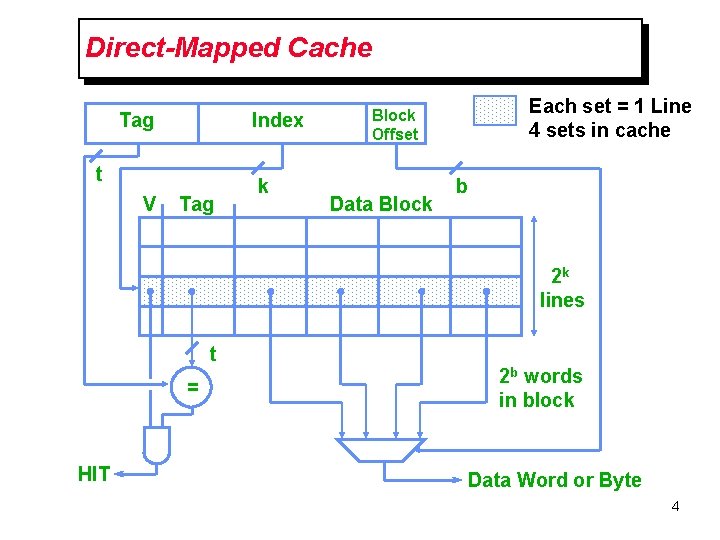

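Direct-Mapped Cache

[Figure: the address is split into Tag (t bits), Index (k bits), and Block Offset (b bits). Each set = 1 line; the cache has 2^k lines (4 sets in the figure), each holding a valid bit V, a tag, and a data block of 2^b words. The index selects one line, its stored tag is compared (=) with the address tag to produce HIT, and the block offset selects the data word or byte.]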
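In the direct-mapped figure, the low `b` bits of the address pick a word within the block, the next `k` bits index one of the 2^k lines, and the remaining `t` bits are compared with the stored tag. A minimal Python sketch of that split and the single tag comparison, using the 6-bit word addresses of the direct-mapped example later in these slides (t = 3, k = 2, b = 1); the function names are illustrative.

```python
def split_address(addr: int, k: int, b: int) -> tuple[int, int, int]:
    """Split an address into (tag, index, block offset) fields."""
    offset = addr & ((1 << b) - 1)           # low b bits: word within block
    index = (addr >> b) & ((1 << k) - 1)     # next k bits: which line
    tag = addr >> (k + b)                    # remaining t bits
    return tag, index, offset


def dm_hit(valid: list[bool], tags: list[int], addr: int, k: int, b: int) -> bool:
    """Direct-mapped lookup: exactly one stored tag is compared."""
    tag, index, _ = split_address(addr, k, b)
    return valid[index] and tags[index] == tag


print(split_address(0b100011, k=2, b=1))  # (4, 1, 1): tag 100, index 01, offset 1
```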

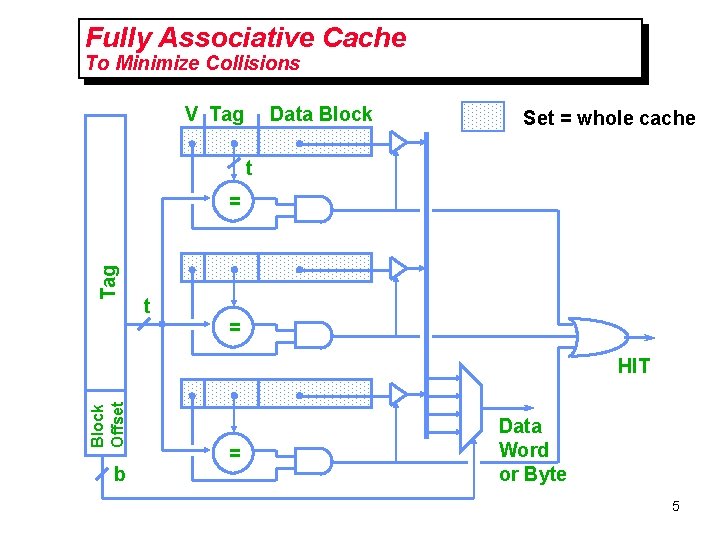

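Fully Associative Cache: To Minimize Collisions

[Figure: each line holds V, Tag, and Data Block; the set is the whole cache. The address is split into Tag (t bits) and Block Offset (b bits); the address tag is compared (=) with every stored tag in parallel to produce HIT, and the block offset selects the data word or byte.]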

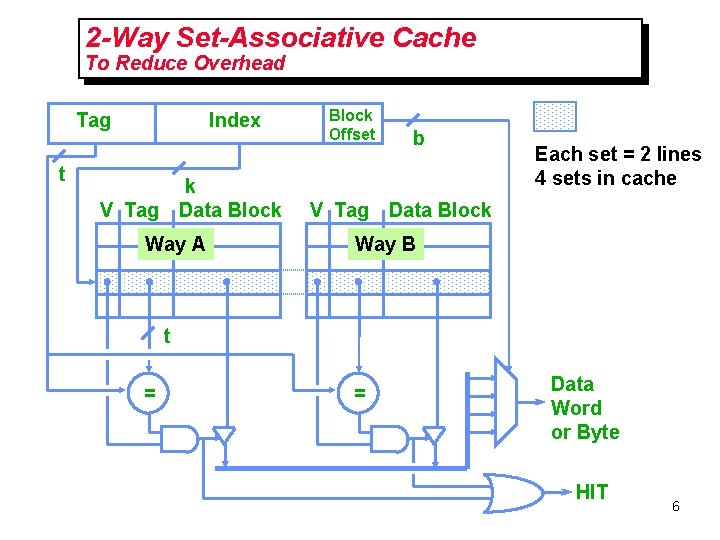

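2-Way Set-Associative Cache: To Reduce Overhead

[Figure: the address is split into Tag (t bits), Index (k bits), and Block Offset (b bits). Each set = 2 lines, one in Way A and one in Way B, each with V, Tag, and Data Block; 4 sets in the cache. The index selects a set, both stored tags are compared (=) with the address tag in parallel to produce HIT, and the block offset selects the data word or byte.]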

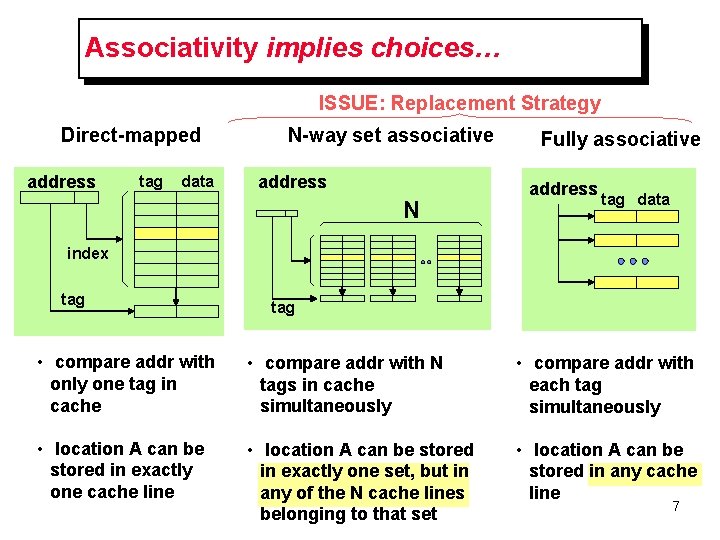

Associativity implies choices…

ISSUE: Replacement Strategy

• Direct-mapped
– compare addr with only one tag in cache
– location A can be stored in exactly one cache line
• N-way set associative
– compare addr with N tags in cache simultaneously
– location A can be stored in exactly one set, but in any of the N cache lines belonging to that set
• Fully associative
– compare addr with each tag simultaneously
– location A can be stored in any cache line
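One way to see the cost side of these choices is to count how the address bits split and how many tag comparators each organization needs. A minimal sketch, assuming `addr_bits`-bit addresses, a cache of `num_lines` lines of 2^b words each, and power-of-two sizes throughout; the function and its parameter names are hypothetical.

```python
def organization_cost(addr_bits: int, num_lines: int, b: int, ways: int) -> dict:
    """Field widths and comparator count for a cache with `ways` lines per set."""
    if num_lines % ways != 0:
        raise ValueError("num_lines must be a multiple of ways")
    num_sets = num_lines // ways
    k = num_sets.bit_length() - 1          # log2(num_sets); 0 when fully associative
    if 1 << k != num_sets:
        raise ValueError("number of sets must be a power of two")
    t = addr_bits - k - b                  # tag width grows as the index shrinks
    return {"t": t, "k": k, "b": b,
            "comparators": ways,           # tags compared in parallel per lookup
            "tag_bits_stored": t * num_lines}


for name, ways in [("direct-mapped", 1), ("2-way", 2), ("fully associative", 4)]:
    print(name, organization_cost(addr_bits=6, num_lines=4, b=1, ways=ways))
```

For a 4-line cache with 2-word blocks and 6-bit addresses, the tag grows from 3 to 4 to 5 bits as associativity rises, matching the 3-bit and 4-bit tags in the two worked examples, while the comparator count grows from 1 to 2 to 4.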

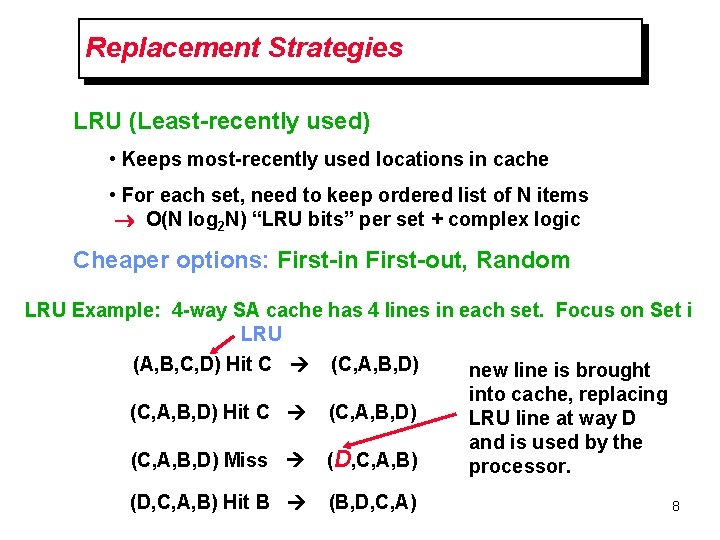

Replacement Strategies

LRU (Least-recently used)
• Keeps most-recently used locations in cache
• For each set, need to keep an ordered list of N items: O(N log₂ N) "LRU bits" per set + complex logic

Cheaper options: First-in First-out, Random

LRU Example: a 4-way SA cache has 4 lines in each set. Focus on set i, whose LRU list runs from most- to least-recently used:

(A, B, C, D) → Hit C → (C, A, B, D) → Hit C → (C, A, B, D) → Miss → (D, C, A, B) → Hit B → (B, D, C, A)

On the miss, a new line is brought into the cache, replacing the LRU line at way D, and is used by the processor.
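The LRU example can be replayed with an explicit ordered list per set. This is a software model, assuming the list is kept most-recently used first as on the slide; real hardware encodes the same ordering in a few state bits, and `lru_state_bits` shows why that costs O(N log₂ N) bits: there are N! possible orderings to encode. All function names are illustrative.

```python
import math


def lru_touch(order: list[str], way: str) -> list[str]:
    """Move `way` to the front (most-recently-used end) of the LRU list."""
    return [way] + [w for w in order if w != way]


def lru_victim(order: list[str]) -> str:
    """The least-recently-used way is at the back of the list."""
    return order[-1]


def lru_state_bits(n: int) -> int:
    """Bits needed to encode one of the n! possible orderings of an n-way set."""
    return math.ceil(math.log2(math.factorial(n)))


# Replay the slide's example for set i (list is MRU ... LRU).
order = ["A", "B", "C", "D"]
trace = []
for event, way in [("hit", "C"), ("hit", "C"), ("miss", None), ("hit", "B")]:
    if event == "miss":
        way = lru_victim(order)      # new line replaces the LRU way...
    order = lru_touch(order, way)    # ...and, like a hit, becomes most recent
    trace.append(tuple(order))
    print(event, way, order)
```

For a 4-way set, `lru_state_bits(4)` is 5, since log₂(24) ≈ 4.58.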

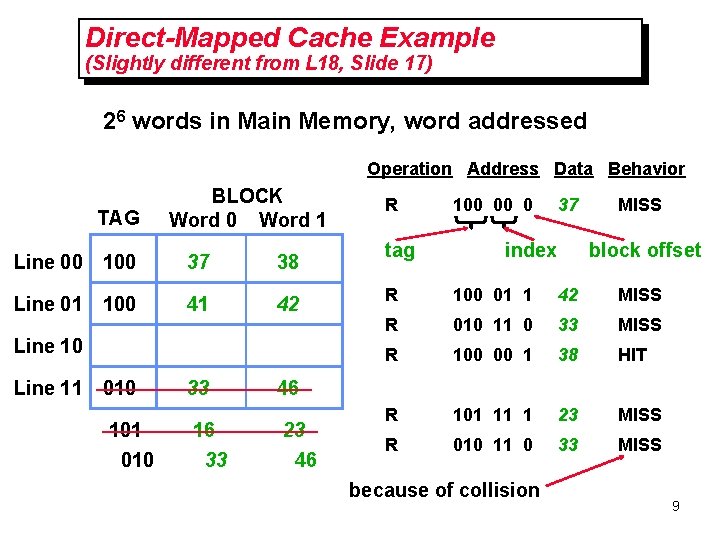

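Direct-Mapped Cache Example (slightly different from L18, Slide 17)

2^6 words in main memory, word addressed. Each 6-bit address is split into a 3-bit tag, a 2-bit index, and a 1-bit block offset; the cache has 4 lines of 2 words each.

| Operation | Address (tag index offset) | Data | Behavior |
|---|---|---|---|
| R | 100 00 0 | 37 | MISS |
| R | 100 01 1 | 42 | MISS |
| R | 010 11 0 | 33 | MISS |
| R | 100 00 1 | 38 | HIT |
| R | 101 11 1 | 23 | MISS |
| R | 010 11 0 | 33 | MISS (because of collision) |

Cache contents (arrows show successive fills of line 11):

| Line | TAG | Word 0 | Word 1 |
|---|---|---|---|
| 00 | 100 | 37 | 38 |
| 01 | 100 | 41 | 42 |
| 10 | | | |
| 11 | 010 → 101 → 010 | 33 → 16 → 33 | 46 → 23 → 46 |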
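The trace above can be replayed with a small direct-mapped simulator. This is a sketch, assuming main memory is a dict holding only the eight words the example touches (values taken from the cache contents shown above) and that a miss loads the whole 2-word block; `simulate_direct_mapped` is a hypothetical name.

```python
# Main-memory words used in the example (address -> value).
MEMORY = {
    0b100000: 37, 0b100001: 38,   # tag 100, index 00
    0b100010: 41, 0b100011: 42,   # tag 100, index 01
    0b010110: 33, 0b010111: 46,   # tag 010, index 11
    0b101110: 16, 0b101111: 23,   # tag 101, index 11
}
TRACE = [0b100000, 0b100011, 0b010110, 0b100001, 0b101111, 0b010110]


def simulate_direct_mapped(trace, memory, k=2, b=1):
    """Return (data, 'HIT'/'MISS') for each read in `trace`."""
    num_lines = 1 << k
    valid = [False] * num_lines
    tags = [0] * num_lines
    data = [[None] * (1 << b) for _ in range(num_lines)]
    results = []
    for addr in trace:
        offset = addr & ((1 << b) - 1)
        index = (addr >> b) & (num_lines - 1)
        tag = addr >> (k + b)
        if valid[index] and tags[index] == tag:
            behavior = "HIT"
        else:
            behavior = "MISS"
            base = addr & ~((1 << b) - 1)          # first word of the block
            data[index] = [memory[base + i] for i in range(1 << b)]
            tags[index], valid[index] = tag, True
        results.append((data[index][offset], behavior))
    return results


for addr, (value, behavior) in zip(TRACE, simulate_direct_mapped(TRACE, MEMORY)):
    print(f"R {addr:06b} -> {value} {behavior}")
```

The last read misses because tags 010 and 101 both map to line 11 and can only take turns there.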

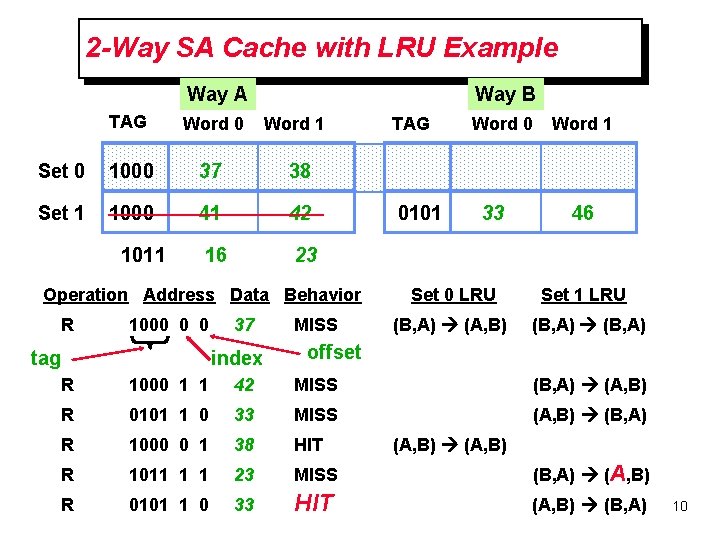

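2-Way SA Cache with LRU Example

The same 6-bit word addresses are now split into a 4-bit tag, a 1-bit index (2 sets), and a 1-bit block offset. Each LRU list runs from most- to least-recently used; both sets start as (B, A), so way A is filled first.

| Operation | Address (tag index offset) | Data | Behavior | Set 0 LRU | Set 1 LRU |
|---|---|---|---|---|---|
| R | 1000 0 0 | 37 | MISS | (B, A) → (A, B) | |
| R | 1000 1 1 | 42 | MISS | | (B, A) → (A, B) |
| R | 0101 1 0 | 33 | MISS | | (A, B) → (B, A) |
| R | 1000 0 1 | 38 | HIT | (A, B) | |
| R | 1011 1 1 | 23 | MISS | | (B, A) → (A, B) |
| R | 0101 1 0 | 33 | HIT | | (A, B) → (B, A) |

Cache contents (arrows show the replacement in set 1, way A):

| Set | Way A TAG | Word 0 | Word 1 | Way B TAG | Word 0 | Word 1 |
|---|---|---|---|---|---|---|
| 0 | 1000 | 37 | 38 | | | |
| 1 | 1000 → 1011 | 41 → 16 | 42 → 23 | 0101 | 33 | 46 |

Unlike the direct-mapped example, the final read of 0101 1 0 hits: tags 0101 and 1011 can occupy the two ways of set 1 at the same time.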
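The 2-way trace can be replayed the same way with a set-associative simulator that keeps an LRU list per set. This is a sketch under stated assumptions: memory holds only the words the example uses, an absent way in a set's dict stands for an invalid line, each LRU list is most-recently used first and starts as (B, A), and `simulate_set_assoc_lru` is a hypothetical name.

```python
MEMORY = {
    0b100000: 37, 0b100001: 38, 0b100010: 41, 0b100011: 42,
    0b010110: 33, 0b010111: 46, 0b101110: 16, 0b101111: 23,
}
TRACE = [0b100000, 0b100011, 0b010110, 0b100001, 0b101111, 0b010110]


def simulate_set_assoc_lru(trace, memory, k=1, b=1, ways=("A", "B")):
    """Return per-read (data, HIT/MISS, LRU list after access) and final sets."""
    num_sets = 1 << k
    sets = [dict() for _ in range(num_sets)]           # way -> (tag, [words])
    lru = [list(reversed(ways)) for _ in range(num_sets)]  # MRU first: (B, A)
    results = []
    for addr in trace:
        offset = addr & ((1 << b) - 1)
        index = (addr >> b) & (num_sets - 1)
        tag = addr >> (k + b)
        # Compare the address tag with every valid way in the set.
        hit_way = next((w for w, (t, _) in sets[index].items() if t == tag), None)
        if hit_way is not None:
            behavior, way = "HIT", hit_way
        else:
            behavior, way = "MISS", lru[index][-1]       # replace the LRU way
            base = addr & ~((1 << b) - 1)
            sets[index][way] = (tag, [memory[base + i] for i in range(1 << b)])
        lru[index] = [way] + [w for w in lru[index] if w != way]
        results.append((sets[index][way][1][offset], behavior, tuple(lru[index])))
    return results, sets


results, sets = simulate_set_assoc_lru(TRACE, MEMORY)
for addr, (value, behavior, order) in zip(TRACE, results):
    print(f"R {addr:06b} -> {value} {behavior} set LRU {order}")
```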

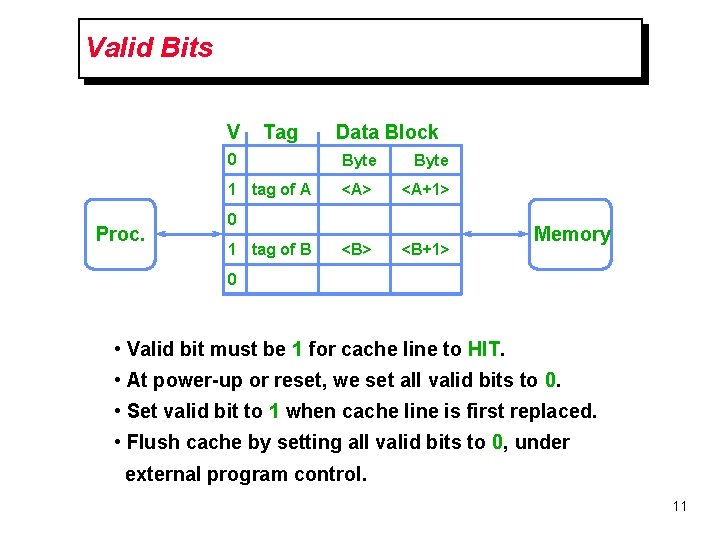

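Valid Bits

[Figure: the processor's cache lines, each with a valid bit V, a tag, and a data block (Byte 0, Byte 1, ...). The valid lines (V = 1) hold the tags of A and B with data such as <A>, <A+1>, <B+1>; the other lines have V = 0. Main memory sits behind the cache.]

• Valid bit must be 1 for a cache line to HIT.
• At power-up or reset, we set all valid bits to 0.
• Set the valid bit to 1 when a cache line is first filled from memory.
• Flush the cache by setting all valid bits to 0, under external program control.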
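Why the valid bit matters: at power-up the tag storage holds arbitrary bits, so without V an address whose tag happened to match would falsely hit. A minimal sketch of a direct-mapped tag store, assuming random values stand in for power-up garbage; the class and method names are hypothetical.

```python
import random


class DirectMappedTags:
    """Tag store of a hypothetical direct-mapped cache with valid bits."""

    def __init__(self, num_lines: int, tag_bits: int, seed=None):
        rng = random.Random(seed)
        # At power-up the tag storage holds arbitrary garbage...
        self.tags = [rng.randrange(1 << tag_bits) for _ in range(num_lines)]
        # ...so reset clears every valid bit.
        self.valid = [False] * num_lines

    def is_hit(self, index: int, tag: int) -> bool:
        # A line can only HIT when its valid bit is 1.
        return self.valid[index] and self.tags[index] == tag

    def fill(self, index: int, tag: int) -> None:
        # The first time a line is filled on a miss, its valid bit is set.
        self.tags[index] = tag
        self.valid[index] = True

    def flush(self) -> None:
        # Flush: invalidate every line; stored tags are simply ignored afterwards.
        self.valid = [False] * len(self.valid)
```

Even if a lookup presents exactly the garbage tag sitting in a line after reset, `is_hit` returns `False` until `fill` has run for that line.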

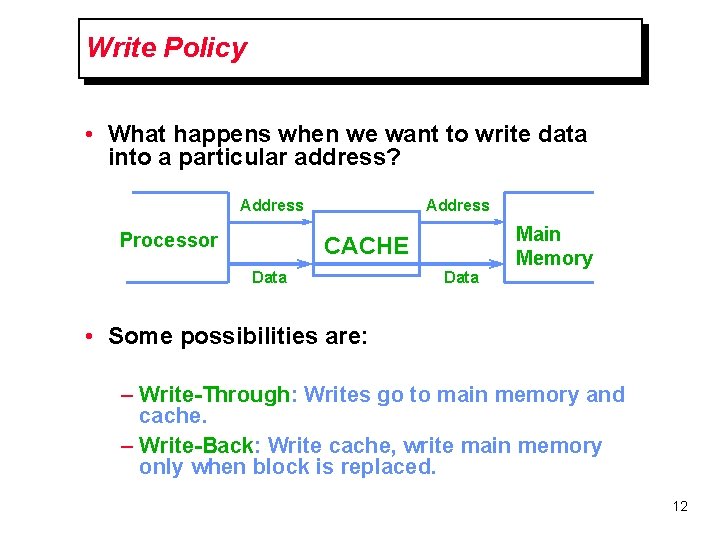

Write Policy

• What happens when we want to write data into a particular address?

[Figure: the processor sends address and data to the CACHE, which passes address and data on to Main Memory.]

• Some possibilities are:
– Write-Through: writes go to both main memory and the cache.
– Write-Back: write the cache; write main memory only when the block is replaced.

![Write-back](http://slidetodoc.com/presentation_image_h2/b837116e7ed41eb3be99582c7c3b3d90/image-13.jpg)

Write-back

ON REFERENCE TO Mem[X]: Look for X among cache tags...

HIT: X = TAG(i), for some cache line i
– READ: return DATA(i)
– WRITE: change DATA(i) (no write to Mem[X]; memory is updated only when the line is replaced)

MISS: X not found in TAG of any cache line
– REPLACEMENT SELECTION:
  » Select some line k to hold Mem[X]
  » Write Back: Write Data(k) to Mem[Tag[k]]
– READ: Read Mem[X]; Set TAG[k] = X, DATA[k] = Mem[X]
– WRITE: Set TAG[k] = X, DATA[k] = new Mem[X]

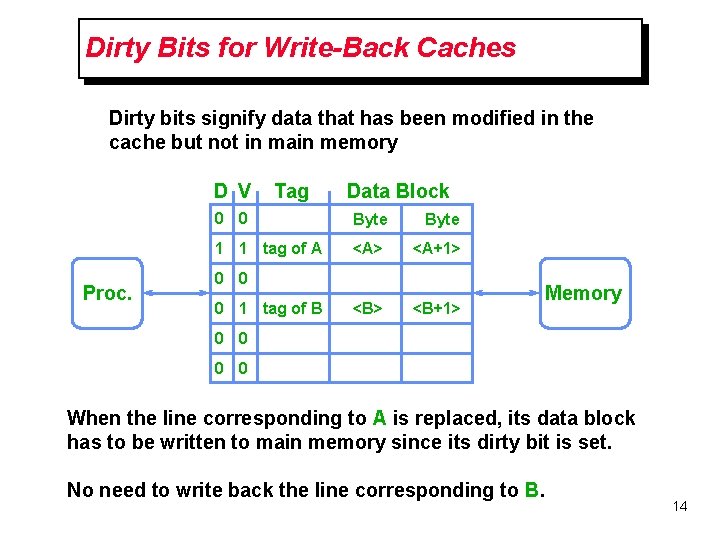

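Dirty Bits for Write-Back Caches

Dirty bits signify data that has been modified in the cache but not in main memory.

[Figure: each cache line holds a dirty bit D, a valid bit V, a tag, and a data block (Byte 0, Byte 1, ...). The line holding the tag of A (data <A>, <A+1>) has D = 1, V = 1; the line holding the tag of B (data such as <B+1>) has D = 0, V = 1; the other lines are invalid. Main memory sits behind the cache.]

When the line corresponding to A is replaced, its data block has to be written to main memory since its dirty bit is set. There is no need to write back the line corresponding to B.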

![Write-back w/ “Dirty” bits](http://slidetodoc.com/presentation_image_h2/b837116e7ed41eb3be99582c7c3b3d90/image-15.jpg)

Write-back w/ “Dirty” bits

ON REFERENCE TO Mem[X]: Look for X among cache tags...

HIT: X = TAG(i), for some cache line i
– READ: return DATA(i)
– WRITE: change DATA(i); D[i] = 1 (no write to Mem[X] yet)

MISS: X not found in TAG of any cache line
– REPLACEMENT SELECTION:
  » Select some line k to hold Mem[X]
  » If D[k] == 1 (Write Back): Write Data(k) to Mem[Tag[k]]
– READ: Read Mem[X]; Set TAG[k] = X, DATA[k] = Mem[X], D[k] = 0
– WRITE: Set TAG[k] = X, DATA[k] = new Mem[X], D[k] = 1
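To compare the two write-back algorithms above, the sketch below counts memory writes with and without dirty bits. It assumes a hypothetical direct-mapped cache of one-word lines in which the stored tag is the full address X (matching the slides' Mem[Tag[k]] notation), so a write miss needs no block read; the class name and `use_dirty_bits` flag are illustrative.

```python
class WriteBackCache:
    """Hypothetical direct-mapped write-back cache of one-word lines."""

    def __init__(self, memory: dict, num_lines: int, use_dirty_bits: bool = True):
        self.memory = memory
        self.num_lines = num_lines
        self.use_dirty_bits = use_dirty_bits
        self.valid = [False] * num_lines
        self.tag = [None] * num_lines       # full address X held by the line
        self.data = [0] * num_lines
        self.dirty = [False] * num_lines
        self.memory_writes = 0

    def _lookup(self, x):
        """Return (line k, hit?); on a miss, write back line k if needed."""
        k = x % self.num_lines
        if self.valid[k] and self.tag[k] == x:
            return k, True                  # HIT
        # MISS: without dirty bits every valid victim is written back;
        # with dirty bits only victims with D[k] == 1 are.
        if self.valid[k] and (self.dirty[k] or not self.use_dirty_bits):
            self.memory[self.tag[k]] = self.data[k]   # Write Data(k) to Mem[Tag[k]]
            self.memory_writes += 1
        return k, False

    def read(self, x):
        k, hit = self._lookup(x)
        if not hit:                         # TAG[k] = X, DATA[k] = Mem[X], D[k] = 0
            self.tag[k], self.data[k], self.valid[k] = x, self.memory[x], True
            self.dirty[k] = False
        return self.data[k]

    def write(self, x, value):
        k, _ = self._lookup(x)              # no write to Mem[X] here
        self.tag[k], self.data[k], self.valid[k] = x, value, True
        self.dirty[k] = True                # modified in cache, not in memory
```

With four lines, addresses 0, 4, and 8 all compete for line 0. Running `read(0)`, `read(4)`, `read(0)`, `write(4, 7)`, `read(8)` costs one memory write with dirty bits (only the modified line 4 is written back) but four without them.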

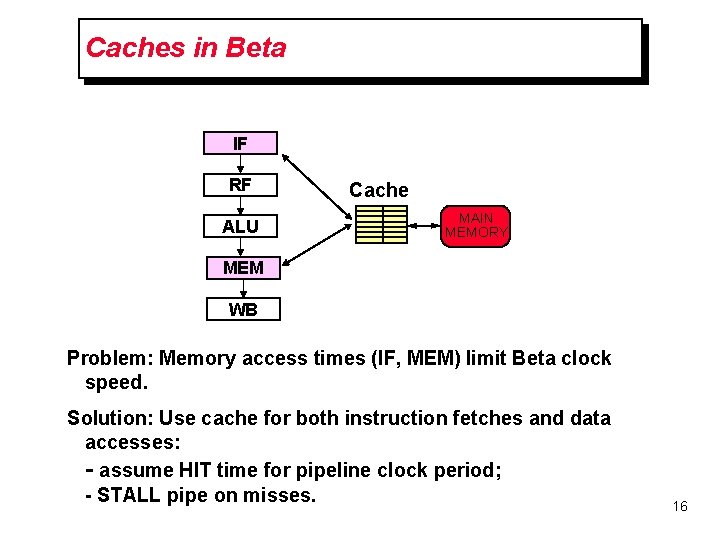

Caches in Beta

[Figure: the Beta pipeline (IF, RF, ALU, MEM, WB), with instruction fetch (IF) and data access (MEM) going through a cache in front of MAIN MEMORY.]

Problem: Memory access times (IF, MEM) limit the Beta clock speed.

Solution: Use a cache for both instruction fetches and data accesses:
– assume HIT time for the pipeline clock period;
– STALL the pipe on misses.
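When the pipeline clock is set by the cache hit time, each miss adds stall cycles. A back-of-the-envelope sketch of the resulting cycles per instruction, assuming a base CPI of 1 and purely hypothetical miss rates and miss penalty (the slides give no numbers for the Beta); `effective_cpi` is an illustrative name.

```python
def effective_cpi(base_cpi: float, ifetch_miss_rate: float,
                  data_refs_per_instr: float, data_miss_rate: float,
                  miss_penalty_cycles: float) -> float:
    """CPI when every instruction fetch (IF) and data access (MEM) goes through
    the cache and each miss stalls the pipeline for miss_penalty_cycles."""
    misses_per_instr = ifetch_miss_rate + data_refs_per_instr * data_miss_rate
    return base_cpi + misses_per_instr * miss_penalty_cycles


# Hypothetical numbers, for illustration only.
cpi = effective_cpi(base_cpi=1.0, ifetch_miss_rate=0.02, data_refs_per_instr=0.3,
                    data_miss_rate=0.05, miss_penalty_cycles=10)
print(round(cpi, 3))
```

With these made-up inputs there are 0.035 misses per instruction, each stalling 10 cycles, so the CPI rises from 1 to about 1.35.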

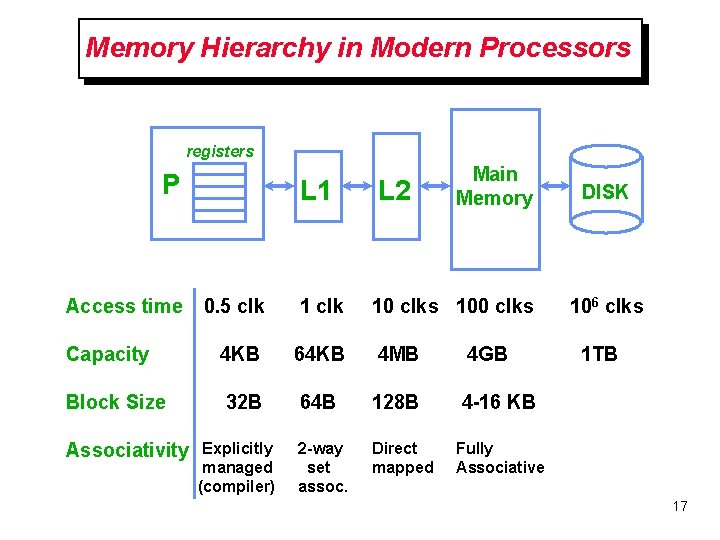

Memory Hierarchy in Modern Processors

[Figure: processor P with registers, L1 and L2 caches, main memory, and disk, annotated with typical parameters for each level.]

• Access time: 0.5 clk, 10 clks, 100 clks, 10^6 clks
• Capacity: 4 KB, 64 KB, 4 MB, 4 GB, 1 TB
• Block size: 32 B, 64 B, 128 B, 4-16 KB
• Associativity: explicitly managed (compiler) for registers; 2-way set assoc.; direct mapped; fully associative

Next Time: Communication Technology

Dilbert: S. Adams
