C Program Command Line Arguments

- Slides: 45

C Program Command Line Arguments
A normal C program specifies command line arguments to be passed to main with:
int main(int argc, char *argv[])
where
• argc is the argument count, and
• argv[] is an array of character pointers.
  – The first entry points to the program name.
  – Subsequent entries point to the subsequent strings on the command line.
Grid Computing, B. Wilkinson, 2004 1
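As a minimal sketch of the argc/argv layout described above, the hypothetical helper join_args below walks the entries after argv[0] and joins them into one string. It takes argc and argv exactly as main receives them, so it could be called directly from main.

```c
#include <assert.h>
#include <string.h>

/* Hypothetical helper: joins argv[1] .. argv[argc-1] (every entry after the
   program name in argv[0]) into buf, separated by single spaces. Returns the
   length of the result, or -1 (with buf set to "") if buf is too small. */
int join_args(int argc, char *argv[], char *buf, size_t bufsize)
{
    assert(buf != NULL && bufsize > 0);
    size_t used = 0;
    for (int i = 1; i < argc; i++) {
        size_t len = strlen(argv[i]);
        size_t sep = (i > 1) ? 1 : 0;          /* space before all but the first */
        if (used + sep + len + 1 > bufsize) {  /* +1 for the terminating '\0' */
            buf[0] = '\0';
            return -1;
        }
        if (sep)
            buf[used++] = ' ';
        memcpy(buf + used, argv[i], len);
        used += len;
    }
    buf[used] = '\0';
    return (int)used;
}
```

For a program started as `myProg 56 123`, join_args would produce "56 123", since argv[0] holds the program name and is skipped.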

MPI C program command line arguments
• Implementations of MPI remove from the argv array any command line arguments used by the implementation.
• Note that MPI_Init requires argc and argv, passed as addresses: MPI_Init(&argc, &argv).
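Why addresses? The C signature is int MPI_Init(int *argc, char ***argv), so the implementation can change the caller's count and array. The sketch below imitates that with a hypothetical strip_prefixed_args that removes every argument starting with a given prefix. The "-mpi" prefix used in the test is an invented example, not an option of any real MPI implementation; real implementations decide for themselves which arguments to remove.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical illustration, not MPICH code: remove every argument that
   starts with `prefix`, compacting the array in place. argc and argv are
   passed by address, mirroring MPI_Init(int *argc, char ***argv), so the
   caller sees the shortened list. argv[0] (the program name) is kept. This
   sketch only rearranges the existing array; because MPI_Init receives
   char ***, a real implementation could also replace the array itself. */
void strip_prefixed_args(int *argc, char ***argv, const char *prefix)
{
    assert(argc != NULL && argv != NULL && prefix != NULL);
    char **v = *argv;
    size_t plen = strlen(prefix);
    int kept = (*argc > 0) ? 1 : 0;            /* always keep argv[0] */
    for (int i = 1; i < *argc; i++) {
        if (strncmp(v[i], prefix, plen) != 0)
            v[kept++] = v[i];                  /* keep this argument */
    }
    if (kept < *argc)
        v[kept] = NULL;                        /* keep the list NULL-terminated */
    *argc = kept;
}
```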

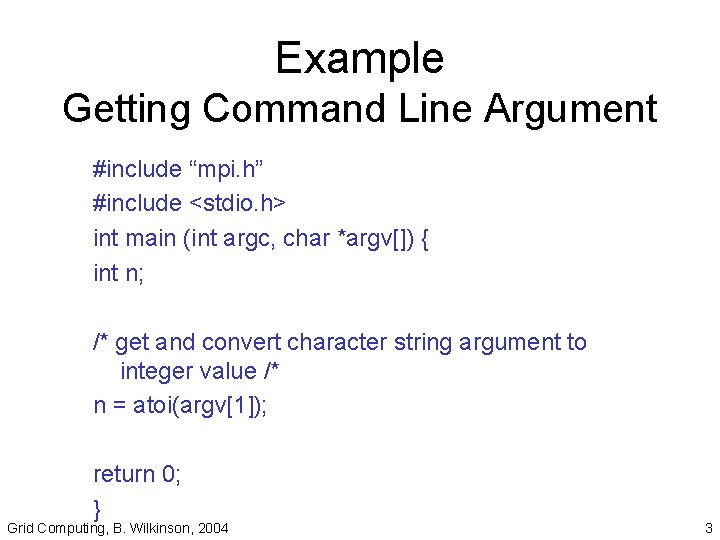

Example: Getting a Command Line Argument

    #include "mpi.h"
    #include <stdio.h>
    #include <stdlib.h>                  /* atoi() */

    int main(int argc, char *argv[])
    {
        int n = 0;
        MPI_Init(&argc, &argv);          /* may strip MPI's own arguments */
        /* get and convert character string argument to integer value */
        if (argc > 1)
            n = atoi(argv[1]);
        printf("n = %d\n", n);
        MPI_Finalize();
        return 0;
    }
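atoi returns 0 both for "0" and for text that is not a number, and argv[1] does not exist if no argument was given. A slightly more defensive conversion, sketched here with the standard strtol (parse_int_arg is a hypothetical helper name), reports those cases instead. In an MPI program it would be called after MPI_Init, once the implementation has removed its own arguments.

```c
#include <assert.h>
#include <errno.h>
#include <limits.h>
#include <stdlib.h>

/* Hypothetical helper: converts argv[index] to an int like atoi, but reports
   errors instead of silently returning 0. Returns 0 on success, -1 if the
   argument is missing, is not a complete integer, or is out of int range. */
int parse_int_arg(int argc, char *argv[], int index, int *out)
{
    assert(out != NULL);
    if (index < 1 || index >= argc)
        return -1;                          /* argument not supplied */
    char *end;
    errno = 0;
    long v = strtol(argv[index], &end, 10);
    if (end == argv[index] || *end != '\0')
        return -1;                          /* empty, or trailing text such as "12x" */
    if (errno == ERANGE || v < INT_MIN || v > INT_MAX)
        return -1;                          /* does not fit in an int */
    *out = (int)v;
    return 0;
}
```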

Executing an MPI program with command line arguments
mpirun -np 2 myProg 56 123
argv[0] points to "myProg", argv[1] to "56", and argv[2] to "123"; the launcher arguments (-np 2) are removed by the MPI implementation (in MPI_Init()?).
Remember that these array elements hold pointers to the arguments.

More Information on MPI
• Books: “Using MPI: Portable Parallel Programming with the Message-Passing Interface,” 2nd ed., W. Gropp, E. Lusk, and A. Skjellum, The MIT Press, 1999.
• MPICH: http://www.mcs.anl.gov/mpi
• LAM MPI: http://www.lam-mpi.org

Grid-enabled MPI

Several versions of MPI have been developed for a grid:
• MPICH-G, MPICH-G2
• PACX-MPI
We will use MPICH-G2, which is based on MPICH for a grid and uses Globus.

MPI code for the grid
No difference in code from regular MPI code. The key aspect is the MPI implementation:
• Communication methods
• Resource management

Communication Methods
• The implementation should take into account whether messages are between processors on the same computer or between processors on different computers on the network.
• Pack messages into fewer, larger messages, even if this requires more computation.
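One way to follow the “fewer, larger messages” advice is to copy several pieces of data into one contiguous buffer and send that buffer once. The sketch below does this with plain memcpy (pack_two and unpack_two are hypothetical names); MPI also provides MPI_Pack/MPI_Unpack and derived datatypes for the same purpose. Raw bytes like this only make sense between machines with the same data representation.

```c
#include <assert.h>
#include <string.h>

/* Hypothetical helper: packs two counts plus an int array and a double array
   into one contiguous buffer, so they can be sent as one message instead of
   several. Returns the number of bytes used, or 0 if buf is too small. */
size_t pack_two(const int *a, int na, const double *b, int nb,
                unsigned char *buf, size_t bufsize)
{
    assert(na >= 0 && nb >= 0);
    size_t need = 2 * sizeof(int) + (size_t)na * sizeof *a + (size_t)nb * sizeof *b;
    if (need > bufsize)
        return 0;
    unsigned char *p = buf;
    memcpy(p, &na, sizeof na);               p += sizeof na;
    memcpy(p, &nb, sizeof nb);               p += sizeof nb;
    memcpy(p, a, (size_t)na * sizeof *a);    p += (size_t)na * sizeof *a;
    memcpy(p, b, (size_t)nb * sizeof *b);
    return need;
}

/* Reverses pack_two on the receiving side. Assumes the same data layout on
   sender and receiver. Returns 0 on success, -1 if the arrays are too small. */
int unpack_two(const unsigned char *buf, int *a, int amax, int *na,
               double *b, int bmax, int *nb)
{
    const unsigned char *p = buf;
    memcpy(na, p, sizeof *na);               p += sizeof *na;
    memcpy(nb, p, sizeof *nb);               p += sizeof *nb;
    if (*na < 0 || *na > amax || *nb < 0 || *nb > bmax)
        return -1;
    memcpy(a, p, (size_t)*na * sizeof *a);   p += (size_t)*na * sizeof *a;
    memcpy(b, p, (size_t)*nb * sizeof *b);
    return 0;
}
```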

MPICH-G2
• Complete implementation of MPI
• Can use existing MPI programs on a grid without change
• Uses Globus to start tasks, etc.
• Version 2 is a complete redesign of MPICH-G for Globus 2.2 or later.

Compiling an Application Program
As with regular MPI programs, compile on each machine you intend to use (unless the machines are binary compatible and have a common file system). For C programs, use:
<MPICH_INSTALL_PATH>/bin/mpicc

Running an MPICH-G2 Program: mpirun
• mpirun submits a Globus RSL (Resource Specification Language) script to launch the application.
• The RSL script can be created by mpirun, or you can write your own.
• An RSL script gives a powerful mechanism to specify different executables, etc., but it is low level.

mpirun (with it constructing the RSL script)
• Use if you want to launch a single executable on binary-compatible machines with a shared file system.
• Requires a “machines” file: a list of the computers to be used (and their job managers).

“Machines” file
• Computers are listed by their Globus job manager service, followed by an optional maximum number of nodes (tasks) on that machine.
• If the job manager is omitted (i.e., just the name of the computer is given), the default Globus job manager is used.
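A sketch of reading one line of such a file, assuming the quoted-name-then-count layout used in the jupitor/venus example in these slides. parse_machine_line is a hypothetical helper, and treating a missing count as 1 is an assumption, not documented MPICH-G2 behavior.

```c
#include <assert.h>
#include <limits.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical parser for one "machines" line of the assumed form
       "host-or-job-manager-contact" [max_tasks]
   Copies the quoted contact into contact (size csize) and the count into
   *max_tasks. ASSUMPTION: a missing count is treated as 1.
   Returns 0 on success, -1 on a malformed line. */
int parse_machine_line(const char *line, char *contact, size_t csize, int *max_tasks)
{
    assert(line != NULL && contact != NULL && csize > 0 && max_tasks != NULL);
    const char *p = line;
    while (*p == ' ' || *p == '\t')
        p++;
    if (*p != '"')
        return -1;                        /* contact must be quoted */
    const char *start = p + 1;
    const char *close = strchr(start, '"');
    if (close == NULL)
        return -1;
    size_t len = (size_t)(close - start);
    if (len == 0 || len >= csize)
        return -1;
    memcpy(contact, start, len);
    contact[len] = '\0';

    char *end;
    long n = strtol(close + 1, &end, 10); /* skips leading whitespace */
    if (end == close + 1)
        *max_tasks = 1;                   /* no count given: ASSUMED default */
    else if (n < 1 || n > INT_MAX)
        return -1;
    else
        *max_tasks = (int)n;
    return 0;
}
```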

Location of “machines” file
• The mpirun command expects the “machines” file either in
  – the file specified by the -machinefile flag,
  – the current directory used to execute the mpirun command, or
  – <MPICH_INSTALL_PATH>/bin/machines

Running an MPI program
• Uses the same command line as a regular MPI program:
mpirun -np 25 my_prog
creates 25 tasks allocated on the machines in the “machines” file in a round-robin fashion.

Example
With the machines file containing:
"jupitor.cs.wcu.edu" 4
"venus.cs.wcu.edu" 5
and the command:
mpirun -np 10 myProg
the first 4 processes (jobs) would run on jupitor, the next 5 on venus, and the remaining one on jupitor.
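The allocation in this example can be reproduced with a few lines of C. The sketch (machine_for_rank is a hypothetical name) assumes processes are dealt out in file order, filling each machine up to its count and then wrapping around, which is how the example places the tenth process back on jupitor.

```c
#include <assert.h>

/* Hypothetical helper: returns the index of the machine that process `rank`
   runs on when the first counts[0] ranks go to machine 0, the next counts[1]
   to machine 1, and so on, wrapping back to machine 0 after the last one. */
int machine_for_rank(int rank, const int counts[], int nmachines)
{
    assert(rank >= 0 && nmachines > 0);
    int total = 0;
    for (int m = 0; m < nmachines; m++)
        total += counts[m];
    assert(total > 0);
    int r = rank % total;               /* position within one pass over the file */
    for (int m = 0; m < nmachines; m++) {
        if (r < counts[m])
            return m;
        r -= counts[m];
    }
    return -1;                          /* not reached */
}
```

With counts {4, 5} (jupitor, venus), ranks 0 to 3 map to machine 0, ranks 4 to 8 to machine 1, and rank 9 wraps to machine 0.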

mpirun with your own RSL script
• Necessary if the machines are not executing the same executable.
• The easiest way to create a script is to modify an existing one.
• Use mpirun -dumprsl, which causes the script to be printed out; the application program is not launched.

Example
mpirun -dumprsl -np 2 myprog
prints an RSL document matching the job details given on the command line and in the machines file.

Given an RSL file, myRSL.rsl, use:
mpirun -globusrsl myRSL.rsl
to submit the modified script. More details and practice in assignment 5 (once I have finished it!)

MPICH-G internals
• Each process is allocated a “machine-local” number and a “grid-global” number, which is translated into where the process actually resides.
• Non-local operations use grid services.
• Local operations do not.
• The globusrun command submits simultaneous job requests.
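To make the two numberings concrete, here is a hypothetical sketch, not MPICH-G2's internal code: given a table machine_of[] mapping each grid-global rank to the machine it runs on, a rank's machine-local number is the count of lower-numbered ranks on the same machine, and two ranks can take a local (non-grid) path only if they share a machine.

```c
#include <assert.h>

/* Hypothetical: machine-local number of grid-global rank g, i.e. how many
   lower-numbered ranks are placed on the same machine as g. */
int local_rank(int g, const int machine_of[], int nprocs)
{
    assert(g >= 0 && g < nprocs);
    int local = 0;
    for (int i = 0; i < g; i++)
        if (machine_of[i] == machine_of[g])
            local++;
    return local;
}

/* Hypothetical: a message between g1 and g2 can avoid grid services only
   when both ranks reside on the same machine. */
int same_machine(int g1, int g2, const int machine_of[])
{
    return machine_of[g1] == machine_of[g2];
}
```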

Limitations
• The “machines” file limits the computers to those already known: there is no discovery of resources.
• If the machines are heterogeneous, appropriate executables must be available, along with an RSL script.
• Speed is an issue: the original version, MPICH-G, was slow.

More information on MPICH-G2
http://www.niu.edu/mpi
http://www.globus.org/mpi
http://www.hpclab.niu.edu/mpi/g2_body.htm

Parallel Programming Techniques Suitable for a Grid

Message-Passing on a Grid
• VERY expensive: sending data across the network costs millions of cycles.
• Bandwidth is shared with other users.
• Links are unreliable.

Computational Strategies
• As a computing platform, a grid favors situations with the absolute minimum of communication between computers.

Strategies
With no or minimum communication:
• “Embarrassingly parallel” computations: those computations which can obviously be divided into independent parallel parts, with the parts executed on separate computers.
• Separate instances of the same problem executing on each system, each using different data.

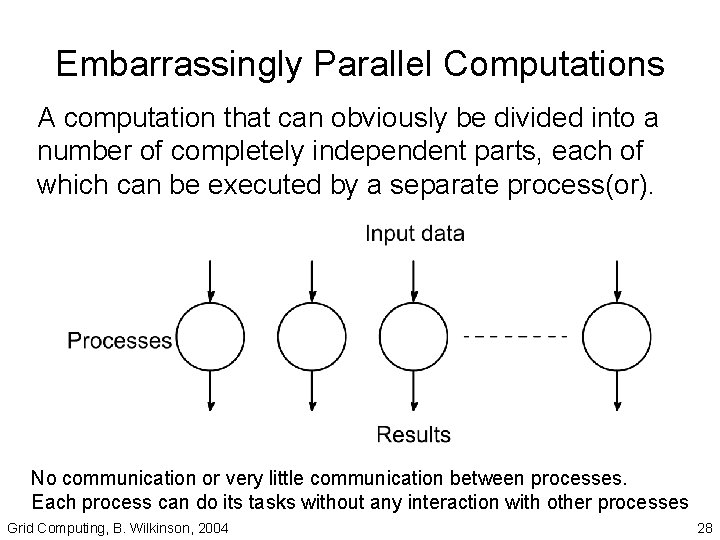

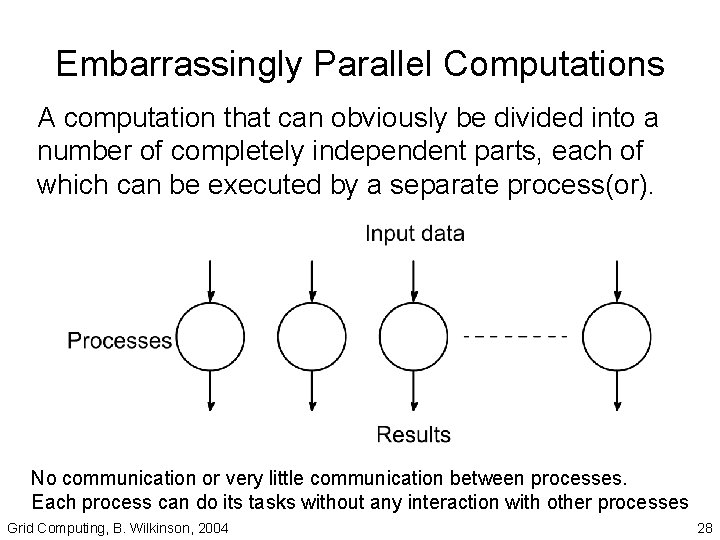

Embarrassingly Parallel Computations
A computation that can obviously be divided into a number of completely independent parts, each of which can be executed by a separate process(or).
There is no communication, or very little communication, between processes: each process can do its tasks without any interaction with other processes.
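Dividing up the work is often the only coordination such a computation needs. A minimal sketch, assuming n equal-cost items and p processes (block_range is a hypothetical helper): each process computes its own range from its rank and never has to talk to the others.

```c
#include <assert.h>

/* Hypothetical helper: splits n independent items among p processes as evenly
   as possible. Process r gets the half-open range [*first, *last); the first
   n % p processes receive one extra item each. */
void block_range(int n, int p, int r, int *first, int *last)
{
    assert(n >= 0 && p > 0 && r >= 0 && r < p);
    int base = n / p, extra = n % p;
    *first = r * base + (r < extra ? r : extra);
    *last  = *first + base + (r < extra ? 1 : 0);
}
```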

Monte Carlo Methods
• An embarrassingly parallel computation.
• Monte Carlo methods make use of random selections.

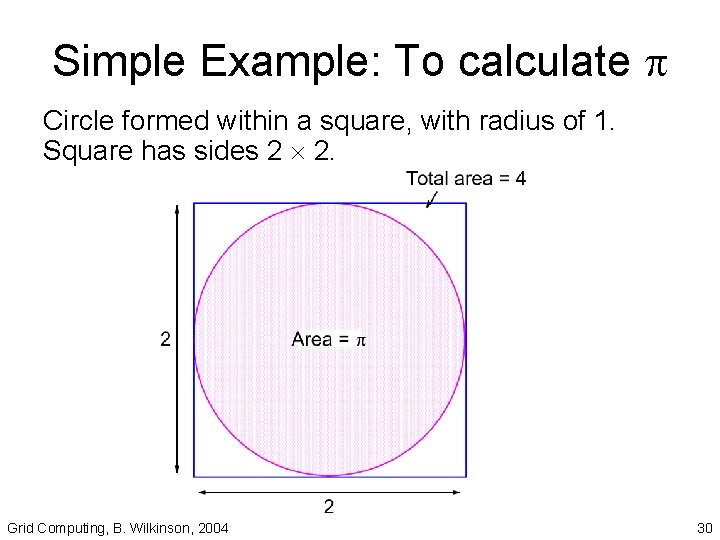

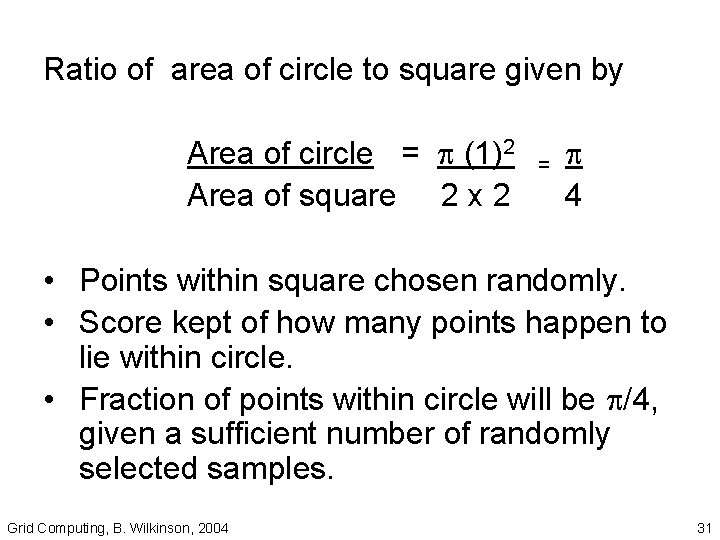

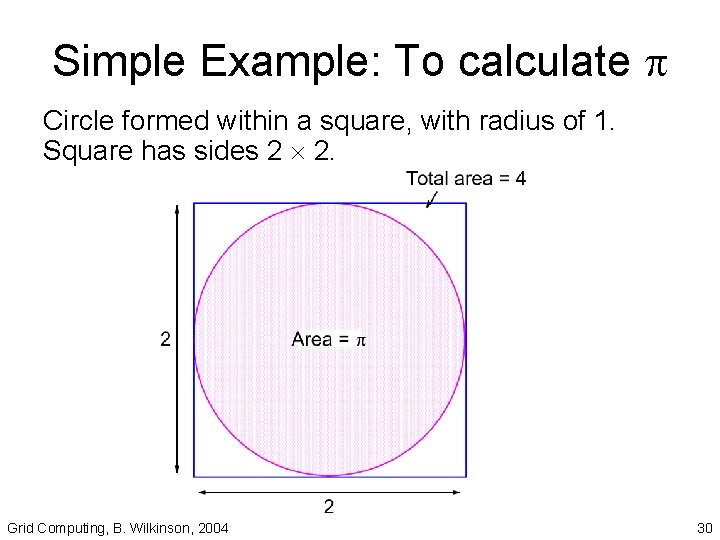

Simple Example: To calculate π
A circle of radius 1 is formed within a square. The square has sides 2 × 2.

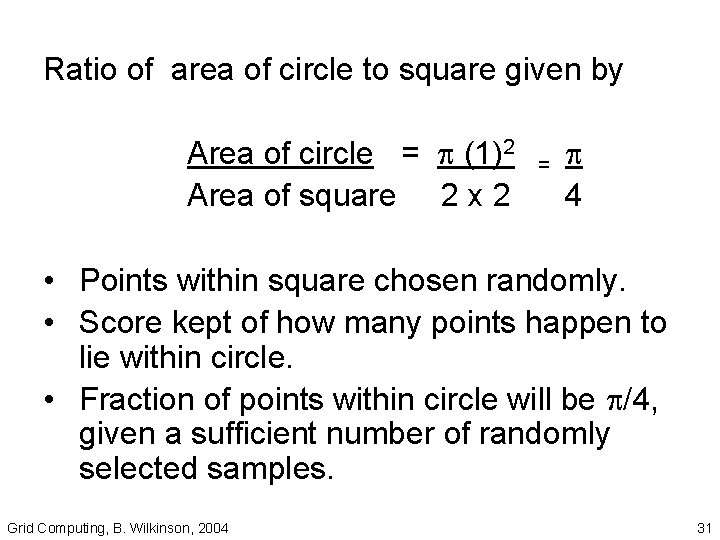

The ratio of the area of the circle to the area of the square is given by
$$\frac{\text{Area of circle}}{\text{Area of square}} = \frac{\pi (1)^2}{2 \times 2} = \frac{\pi}{4}$$
• Points within the square are chosen randomly.
• A score is kept of how many points happen to lie within the circle.
• The fraction of points within the circle will be π/4, given a sufficient number of randomly selected samples.
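The procedure above, sketched in C under the assumption that the C library's rand() is good enough for a demonstration (it is not suitable for serious Monte Carlo work). estimate_pi is a hypothetical name.

```c
#include <assert.h>
#include <stdlib.h>

/* Uniform double in [-1, 1) from rand(); demonstration quality only. */
static double uniform_pm1(void)
{
    return 2.0 * (rand() / ((double)RAND_MAX + 1.0)) - 1.0;
}

/* Hypothetical helper: throws n random points into the 2 x 2 square centred
   on the origin and counts those inside the unit circle. The hit fraction
   approaches pi/4, so 4 * fraction estimates pi. */
double estimate_pi(long n, unsigned seed)
{
    assert(n > 0);
    srand(seed);
    long inside = 0;
    for (long i = 0; i < n; i++) {
        double x = uniform_pm1(), y = uniform_pm1();
        if (x * x + y * y <= 1.0)
            inside++;
    }
    return 4.0 * (double)inside / (double)n;
}
```

Because every sample is independent, P processes could each run this loop with their own random stream and simply add up their counts at the end.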

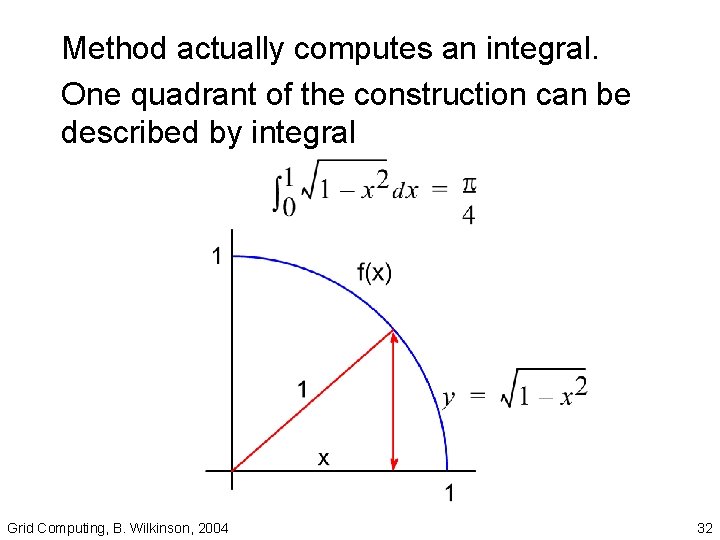

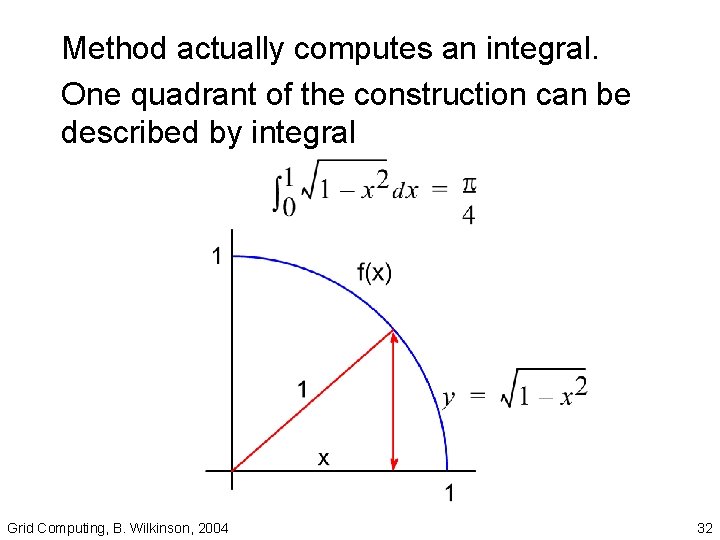

The method actually computes an integral. One quadrant of the construction (the quarter circle of radius 1) can be described by the integral
$$\int_0^1 \sqrt{1 - x^2}\,dx = \frac{\pi}{4}$$

So the method can be used to compute any integral! The Monte Carlo method is very useful if the function cannot easily be integrated by conventional numerical methods (for example, when it has a large number of variables).

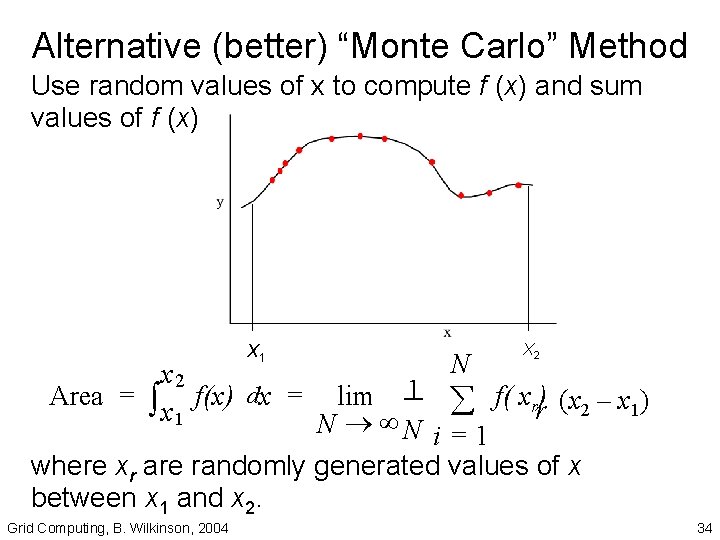

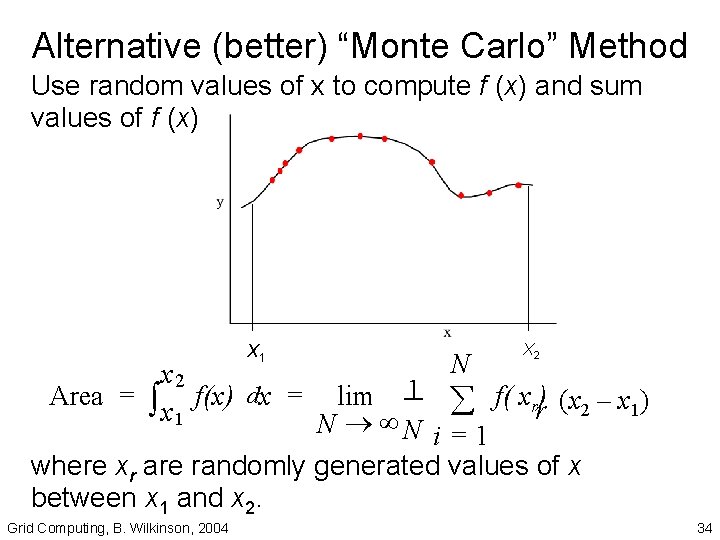

Alternative (better) “Monte Carlo” Method
Use random values of x to compute f(x) and sum the values of f(x):
$$\text{Area} = \int_{x_1}^{x_2} f(x)\,dx = \lim_{N \to \infty} \frac{1}{N} \sum_{r=1}^{N} f(x_r)\,(x_2 - x_1)$$
where the x_r are randomly generated values of x between x_1 and x_2.
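The formula above translates almost directly into a loop. In this sketch (mc_integrate is a hypothetical name, and rand() is again used only for illustration) the integrand is passed as a function pointer; example_f is x² − 3x, the integrand of the slides' worked example, whose exact integral over [0, 3] is −4.5.

```c
#include <assert.h>
#include <stdlib.h>

/* Hypothetical helper: mean-value Monte Carlo integration,
   area ~= (x2 - x1) * (1/N) * sum of f(x_r), x_r uniform in [x1, x2). */
double mc_integrate(double (*f)(double), double x1, double x2, long n, unsigned seed)
{
    assert(n > 0 && x2 > x1);
    srand(seed);
    double sum = 0.0;
    for (long i = 0; i < n; i++) {
        double xr = x1 + (x2 - x1) * (rand() / ((double)RAND_MAX + 1.0));
        sum += f(xr);
    }
    return (x2 - x1) * sum / (double)n;
}

/* f(x) = x^2 - 3x, the integrand used in the worked example. */
double example_f(double x)
{
    return x * x - 3.0 * x;
}
```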

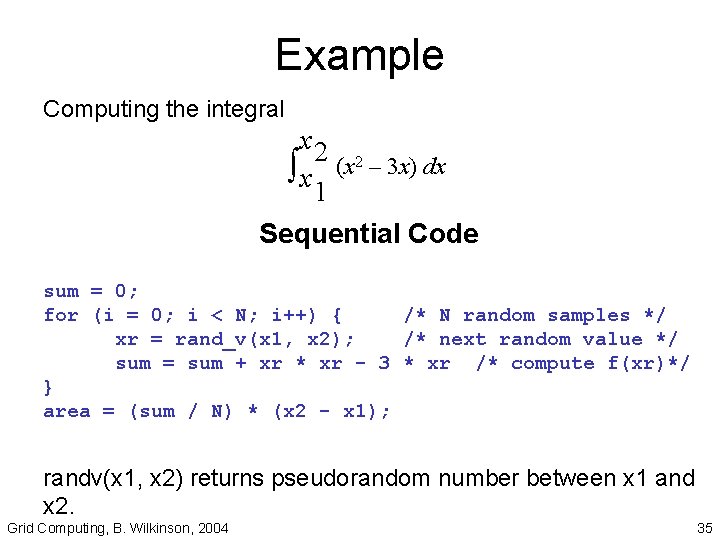

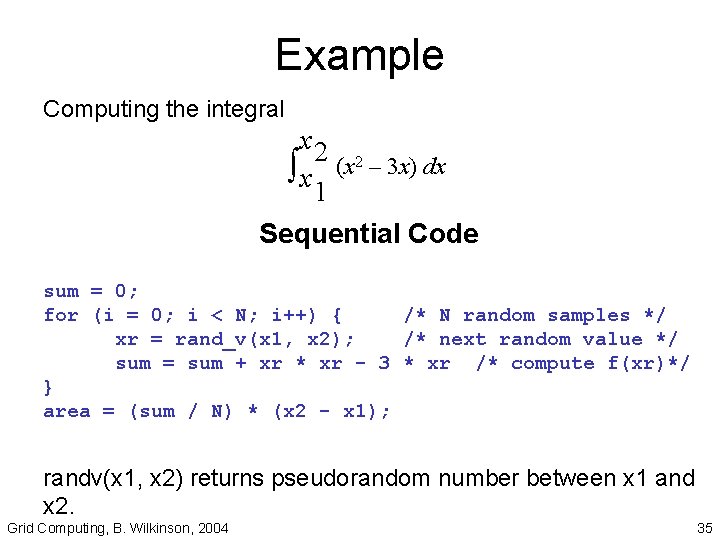

Example: Computing the integral
$$\int_{x_1}^{x_2} (x^2 - 3x)\,dx$$
Sequential Code

    sum = 0;
    for (i = 0; i < N; i++) {           /* N random samples */
        xr = rand_v(x1, x2);            /* next random value */
        sum = sum + xr * xr - 3 * xr;   /* compute f(xr) */
    }
    area = (sum / N) * (x2 - x1);

rand_v(x1, x2) returns a pseudorandom number between x1 and x2.

When parallelizing Monte Carlo code, one must address the best way to generate random numbers in parallel. One option is SPRNG (Scalable Parallel Random Number Generators), which is intended to be a good parallel random number generator.

Executing separate problem instances
In some application areas, the same program is executed repeatedly with different parameters (a “parameter sweep”), which is ideal for a grid.
Nimrod/G: a grid broker project that targets parameter sweep problems.
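In a parameter sweep, each run only needs to know which parameter values it is responsible for. A minimal sketch for a hypothetical two-parameter sweep: job number j is decoded into its own pair of values, so every job can be launched independently on any machine. This is not Nimrod/G's input format; sweep_job and its arguments are invented for illustration.

```c
#include <assert.h>

/* Hypothetical sweep: parameter 1 takes n1 values lo1, lo1 + d1, ...;
   parameter 2 takes n2 values lo2, lo2 + d2, ... */
typedef struct { double p1, p2; } sweep_point;

/* Decodes job number j (0 .. n1*n2 - 1) into its parameter pair. */
sweep_point sweep_job(int j, int n1, double lo1, double d1,
                      int n2, double lo2, double d2)
{
    assert(n1 > 0 && n2 > 0 && j >= 0 && j < n1 * n2);
    sweep_point pt;
    pt.p1 = lo1 + (j % n1) * d1;   /* parameter 1 varies fastest */
    pt.p2 = lo2 + (j / n1) * d2;
    return pt;
}
```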

Techniques to reduce the effects of network communication
• Latency hiding, with communication/computation overlap
• It is better to have fewer, larger messages than many smaller ones.
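Both techniques can be reasoned about with a simple linear cost model, assumed here rather than measured: k messages carrying B bytes in total cost k × latency + B / bandwidth, and overlapping computation with communication makes a step cost the larger of the two instead of their sum. send_time and step_time are hypothetical helpers.

```c
#include <assert.h>

/* Assumed linear model: k messages carrying `bytes` bytes in total cost
   k * latency + bytes / bandwidth seconds. */
double send_time(int k, double bytes, double latency_s, double bandwidth_Bps)
{
    assert(k > 0 && bandwidth_Bps > 0.0);
    return k * latency_s + bytes / bandwidth_Bps;
}

/* Time for one step that computes for compute_s seconds and communicates
   for comm_s seconds, with or without overlapping the two. */
double step_time(double compute_s, double comm_s, int overlap)
{
    if (overlap)
        return compute_s > comm_s ? compute_s : comm_s;  /* latency hidden */
    return compute_s + comm_s;
}
```

With an assumed wide-area latency of 50 ms and 1 MB/s of bandwidth, sending 1 MB as 1000 messages takes about 51 s under this model, against about 1.05 s as a single message.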

Synchronous Algorithms
• Many traditional parallel algorithms require the parallel processes to synchronize at regular and frequent intervals to exchange data and continue from known points.
This is bad for grid computations!! All traditional parallel algorithms books have to be thrown away for grid computing.

Techniques to reduce actual synchronization communications
• Asynchronous algorithms
  – Algorithms that do not use synchronization at all
• Partially synchronous algorithms
  – Those that limit the synchronization, for example by synchronizing only on every n iterations
  – Such algorithms have actually been known for many years but have not been popularized.
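A small sequential simulation of a partially synchronous algorithm, under stated assumptions: Jacobi relaxation for a 1-D steady-state problem (fixed end values, each interior point repeatedly replaced by the average of its neighbors), split between two simulated “processes” that exchange boundary values only every sync_every iterations and otherwise use stale copies. Jacobi relaxation is simply a convenient example; relax_partial_sync is a hypothetical name.

```c
#include <assert.h>
#include <string.h>

#define NPTS 10           /* grid points, including the two fixed ends */
#define MID  (NPTS / 2)   /* left owns 1..MID-1, right owns MID..NPTS-2 */

/* One Jacobi sweep over v[lo..hi] using the previous values. */
static void jacobi_sweep(double *v, int lo, int hi)
{
    double old[NPTS];
    memcpy(old, v, sizeof old);
    for (int i = lo; i <= hi; i++)
        v[i] = 0.5 * (old[i - 1] + old[i + 1]);
}

/* Hypothetical: each simulated process keeps its own copy of the array and
   sees the other's boundary point only when they synchronize. */
void relax_partial_sync(double u[NPTS], int iterations, int sync_every)
{
    assert(iterations >= 0 && sync_every > 0);
    double left[NPTS], right[NPTS];
    memcpy(left, u, sizeof left);
    memcpy(right, u, sizeof right);
    for (int it = 1; it <= iterations; it++) {
        jacobi_sweep(left, 1, MID - 1);
        jacobi_sweep(right, MID, NPTS - 2);
        if (it % sync_every == 0) {       /* the only communication */
            left[MID] = right[MID];
            right[MID - 1] = left[MID - 1];
        }
    }
    for (int i = 1; i < MID; i++)
        u[i] = left[i];
    for (int i = MID; i < NPTS - 1; i++)
        u[i] = right[i];
}
```

With sync_every = 10 the two halves exchange data one tenth as often, yet still settle on the same straight-line solution; the price is more iterations, which is the trade-off these algorithms make.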

Big Problems
“Grand challenge” problems. Most of the high-profile projects on the grid involve problems that are so big (usually in the number of data items) that they cannot be solved otherwise.

Examples
• High-energy physics
• Bioinformatics
• Medical databases
• Combinatorial chemistry
• Astrophysics

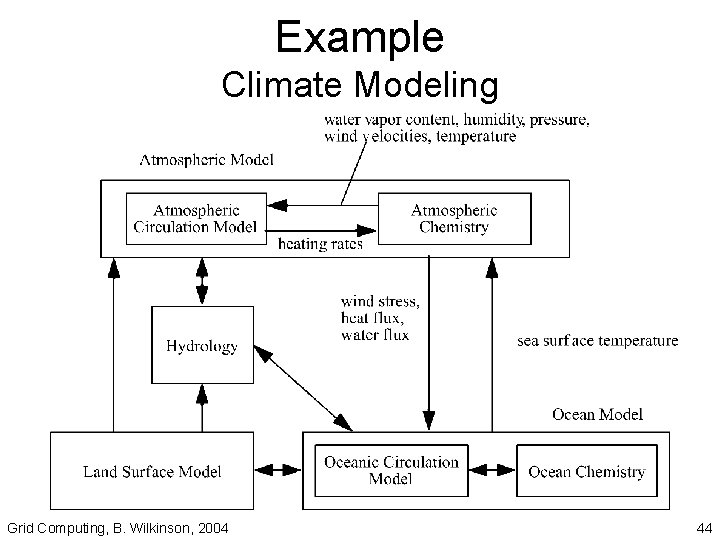

Workflow Technique
• Use functional decomposition: divide the problem into separate functions, which take results from other functional units and pass on results to further functional units. The interconnection pattern depends upon the problem.
• Workflow: describes the flow of information between the units.
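A minimal sketch of the idea, assuming a simple linear chain of functional units (real workflows may branch and merge). Each unit is a C function and the workflow is the ordered list saying where each result goes next; here everything runs in one process, whereas on a grid each unit could run on a different resource. run_workflow and the two stand-in units are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* A functional unit: takes the previous unit's result, returns its own. */
typedef double (*unit_fn)(double);

/* Hypothetical: runs the units in order, feeding each output to the next. */
double run_workflow(const unit_fn units[], size_t nunits, double input)
{
    assert(units != NULL || nunits == 0);
    double value = input;
    for (size_t i = 0; i < nunits; i++)
        value = units[i](value);   /* output of unit i feeds unit i + 1 */
    return value;
}

/* Two hypothetical stand-in units, for illustration only. */
double scale_by_two(double x) { return 2.0 * x; }
double add_three(double x)    { return x + 3.0; }
```

Reordering the list changes the workflow, and the result: (2 × 2) + 3 = 7, but (2 + 3) × 2 = 10.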

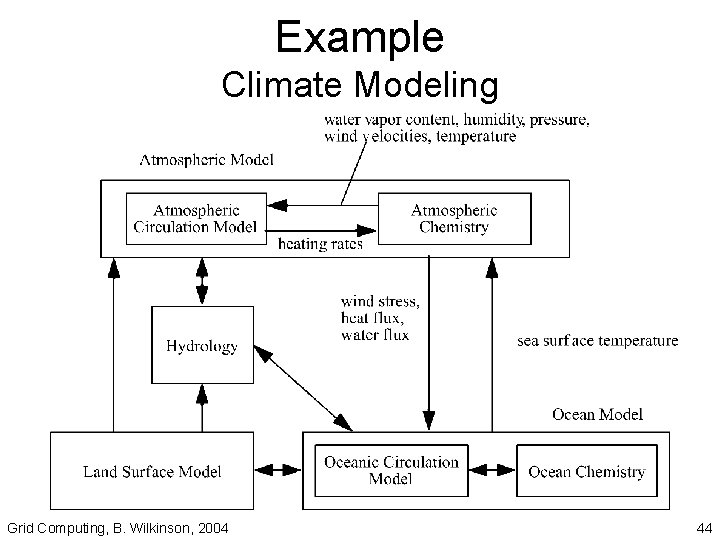

Example: Climate Modeling

Next topic: UNC-Wilmington’s GUI workflow editor. Next week: Professor Clayton Ferner.