
Building the NCSC Summative Assessment: Towards a Stage-Adaptive Design
Sarah Hagge, Ph.D., and Anne Davidson, Ed.D.
McGraw-Hill Education CTB
CCSSO, New Orleans, LA, June 25, 2014
Copyright © 2014 CTB/McGraw-Hill LLC.

Overview
§ Rationale for stage-adaptive test
§ Proposed stage-adaptive design
§ Overview of pilot testing: plan and goals
§ Summary of results from Pilot Phase I
§ Main findings and next steps

Rationale for Stage-Adaptive Test
§ Targeted to student proficiency levels
§ Improved precision of student test scores
§ Reduced total testing time
§ Reduced testing burden on students and teacher test administrators

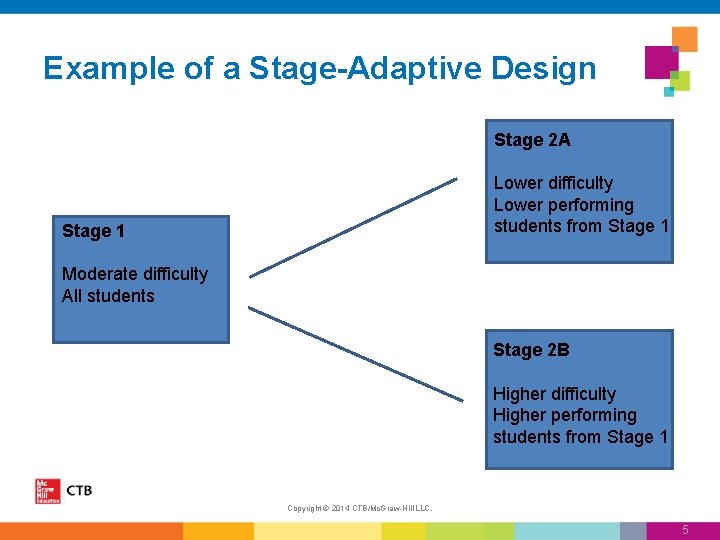

Proposed Stage-Adaptive Design
§ All students will receive tests with the same content distribution
§ Tests will be adaptive based on tiers and item difficulty
  – All students receive the same or a similar first stage, or testlet, of items
  – Students then receive a second stage of items of lower, higher, or about the same difficulty, based on their performance on the first stage of the test

Example of a Stage-Adaptive Design
§ Stage 1 (all students): moderate difficulty
§ Stage 2A (lower-performing students from Stage 1): lower difficulty
§ Stage 2B (higher-performing students from Stage 1): higher difficulty
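The routing step in this example can be sketched as a single cut-score rule on Stage 1 performance. This is a minimal sketch, assuming routing on proportion correct with one hypothetical cut score (`ROUTING_CUT`); the function name and cut value are illustrative and are not the NCSC routing algorithm, which the slides do not specify.

```python
# Hypothetical two-stage routing rule: every student takes the same Stage 1
# testlet, then is routed to Stage 2A (lower difficulty) or Stage 2B
# (higher difficulty). ROUTING_CUT is an illustrative value, not an NCSC value.
ROUTING_CUT = 0.5  # assumed proportion correct on Stage 1 needed for Stage 2B


def route_to_stage2(stage1_scores: list[int], cut: float = ROUTING_CUT) -> str:
    """Return the Stage 2 testlet for a list of 0/1 Stage 1 item scores."""
    if not stage1_scores:
        raise ValueError("no Stage 1 item scores supplied")
    proportion_correct = sum(stage1_scores) / len(stage1_scores)
    # At or above the cut -> harder testlet; below the cut -> easier testlet.
    return "Stage 2B" if proportion_correct >= cut else "Stage 2A"


print(route_to_stage2([1, 0, 0, 1, 0, 0]))  # 2 of 6 correct -> Stage 2A
print(route_to_stage2([1, 1, 1, 0, 1, 1]))  # 5 of 6 correct -> Stage 2B
```

A three-way version (lower, about the same, or higher difficulty), as in the proposed design, would add a second cut score and a third Stage 2 testlet.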

Overview of Pilot Testing

Purpose of Pilot Testing
§ Collect information necessary to support development and refinement of the NCSC summative assessment design
§ Pilot Phase 1: Item tryout (Spring 2014)
  – Generate student performance data
  – Investigate administration conditions
  – Understand how the items are functioning
  – Investigate the proposed item scoring processes and procedures
§ Pilot Phase 2: Test forms (Fall 2014)
  – Investigate the adaptive algorithm
  – Collect form and student performance data

Broad Goals
§ Try out items
§ Evaluate items
§ Understand administration policies
§ Understand administration processes
  – Computer-based system
  – Accommodations
§ Investigate building an IRT scale
§ Develop the stage-adaptive design specification

ELA Content and Forms
§ Grades 3–8 and 11
§ 8 forms per grade
  – Four reading passages
    • Two literary and two informational
    • Foundational items in Grades 3 and 4
  – 22–35 items per form
  – One passage at each of the four tiers
  – Selected-response and dichotomously scored constructed-response items

Math Content and Forms
§ Grades 3–8 and 11
§ 8 forms per grade
  – 25 items per form
  – Each form contained a mix of all four item tiers
  – Content distribution percentages similar across the 8 forms
  – Selected-response and dichotomously scored constructed-response items

Initial Analysis
§ Demographic characteristics of the student sample
  – Descriptive statistics (e.g., gender, ethnicity) were collected for the sample of students.
  – A learner characteristics inventory was used to collect profile information about participating students.
  – Accommodations data were collected prior to administration, along with whether each eligible student used the accommodation.
§ Form-level results
§ Classical item analysis
§ Tier analysis
§ Item response time
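The classical item analysis listed above rests on a few per-item statistics. The sketch below is a minimal illustration, assuming responses are stored as a students × items matrix of 1 (correct), 0 (incorrect), or None (omitted), with omits scored 0; the function names and data layout are hypothetical, and the corrected (item-removed) point-biserial is one common choice, not necessarily the one used in the pilot.

```python
from statistics import mean, pstdev
from typing import Optional


def pearson(x: list[float], y: list[float]) -> float:
    """Pearson correlation; with a 0/1 item vector this is the point-biserial."""
    sx, sy = pstdev(x), pstdev(y)
    if sx == 0 or sy == 0:
        return float("nan")  # undefined when either variable is constant
    mx, my = mean(x), mean(y)
    cov = mean([(a - mx) * (b - my) for a, b in zip(x, y)])
    return cov / (sx * sy)


def item_statistics(responses: list[list[Optional[int]]]) -> list[dict[str, float]]:
    """Classical statistics per item for a students x items matrix.

    Entries are 1 (correct), 0 (incorrect), or None (omitted); omits are
    scored 0 for the p-value and the total score (an assumption).
    """
    n_students = len(responses)
    scored = [[0 if r is None else r for r in row] for row in responses]
    totals = [sum(row) for row in scored]
    results = []
    for j in range(len(responses[0])):
        item = [row[j] for row in scored]
        # Corrected point-biserial: correlate the item with the rest score.
        rest = [t - s for t, s in zip(totals, item)]
        results.append({
            "p_value": sum(item) / n_students,
            "point_biserial": pearson(item, rest),
            "omit_rate": sum(row[j] is None for row in responses) / n_students,
        })
    return results


for stats in item_statistics([[1, 1, 0], [1, 0, 0], [1, 1, 1], [0, None, 0]]):
    print(stats)
```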

Flagging Criteria for Item Reviews
§ Classical item analysis
  – Low p-value: < 0.50 (note that Tier 1 items have 2 answer choices)
  – High p-value: > 0.90
  – Low point-biserial correlation: < 0.20
  – High option (distractor) point-biserial correlation: > 0.05
  – Omit rate: > 5%
§ Tier reversals (Tier 1 p-value < Tier 4 p-value)
§ Key checks (distractor analysis)
§ Survey and student interaction study results
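The classical flagging rules above translate directly into threshold checks. This is a minimal sketch using the slide's thresholds, assuming a hypothetical per-item record with the keys shown in the docstring; it covers only the classical statistics, not tier reversals, key checks, or survey results.

```python
# Thresholds taken from the flagging criteria on this slide.
LOW_P, HIGH_P = 0.50, 0.90
LOW_PBIS = 0.20
HIGH_OPTION_PBIS = 0.05
MAX_OMIT_RATE = 0.05


def flag_item(stats: dict) -> list[str]:
    """Return review flags for one item.

    `stats` is a hypothetical record with keys "p_value", "point_biserial",
    "omit_rate", and "distractor_point_biserials" (one value per incorrect option).
    """
    flags = []
    if stats["p_value"] < LOW_P:
        flags.append("low p-value")
    if stats["p_value"] > HIGH_P:
        flags.append("high p-value")
    if stats["point_biserial"] < LOW_PBIS:
        flags.append("low point-biserial")
    # A distractor that correlates positively with total score suggests a key problem.
    if any(pb > HIGH_OPTION_PBIS for pb in stats["distractor_point_biserials"]):
        flags.append("high option point-biserial")
    if stats["omit_rate"] > MAX_OMIT_RATE:
        flags.append("high omit rate")
    return flags


print(flag_item({"p_value": 0.45, "point_biserial": 0.15, "omit_rate": 0.01,
                 "distractor_point_biserials": [0.08, -0.20]}))
# → ['low p-value', 'low point-biserial', 'high option point-biserial']
```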

Pilot Phase I Results

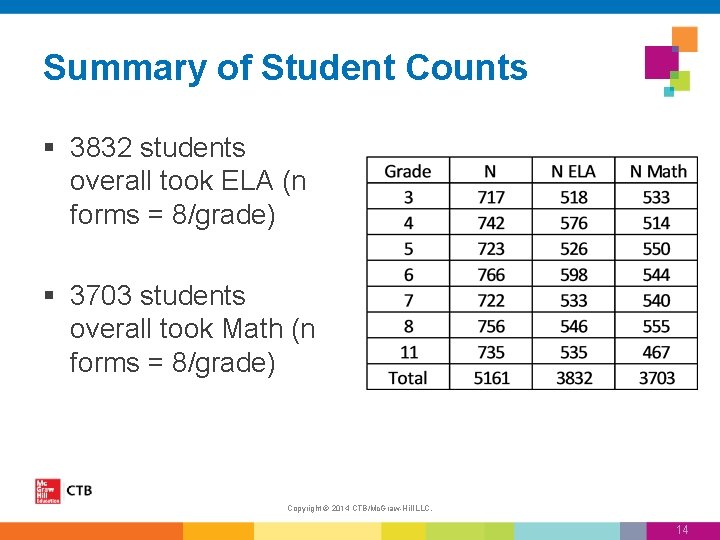

Summary of Student Counts
§ 3,832 students overall took ELA (8 forms per grade)
§ 3,703 students overall took Math (8 forms per grade)

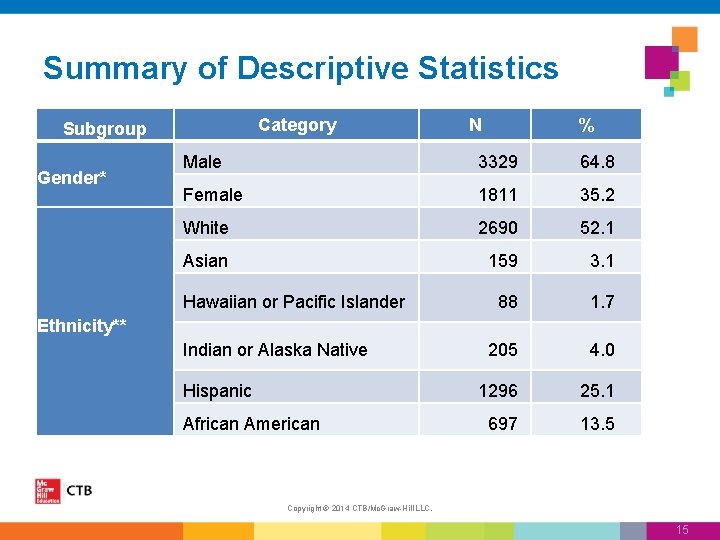

Summary of Descriptive Statistics

| Category | Subgroup | N | % |
|---|---|---|---|
| Gender* | Male | 3329 | 64.8 |
| | Female | 1811 | 35.2 |
| Ethnicity** | White | 2690 | 52.1 |
| | Asian | 159 | 3.1 |
| | Hawaiian or Pacific Islander | 88 | 1.7 |
| | American Indian or Alaska Native | 205 | 4.0 |
| | Hispanic | 1296 | 25.1 |
| | African American | 697 | 13.5 |

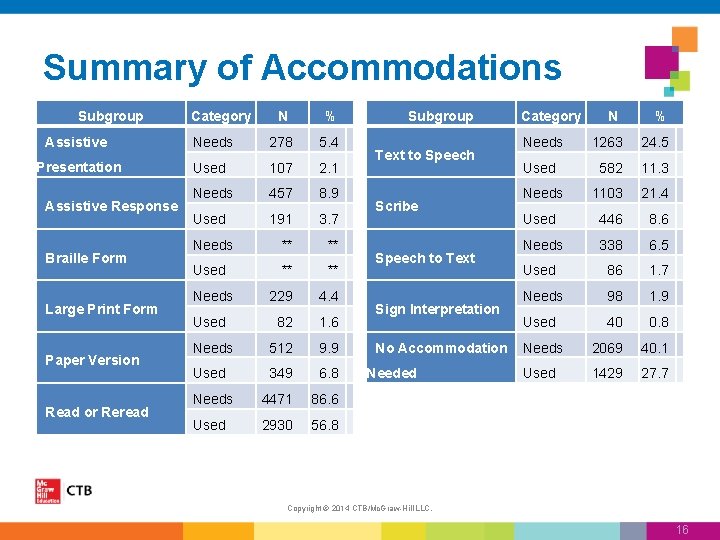

Summary of Accommodations

| Subgroup | Category | N | % |
|---|---|---|---|
| Assistive Presentation | Needs | 278 | 5.4 |
| | Used | 107 | 2.1 |
| Assistive Response | Needs | 457 | 8.9 |
| | Used | 191 | 3.7 |
| Braille Form | Needs | ** | ** |
| | Used | ** | ** |
| Large Print Form | Needs | 229 | 4.4 |
| | Used | 82 | 1.6 |
| Paper Version | Needs | 512 | 9.9 |
| | Used | 349 | 6.8 |
| Read or Reread | Needs | 4471 | 86.6 |
| | Used | 2930 | 56.8 |
| Text to Speech | Needs | 1263 | 24.5 |
| | Used | 582 | 11.3 |
| Scribe | Needs | 1103 | 21.4 |
| | Used | 446 | 8.6 |
| Speech to Text | Needs | 338 | 6.5 |
| | Used | 86 | 1.7 |
| Sign Interpretation | Needs | 98 | 1.9 |
| | Used | 40 | 0.8 |
| No Accommodation Needed | Needs | 2069 | 40.1 |
| | Used | 1429 | 27.7 |

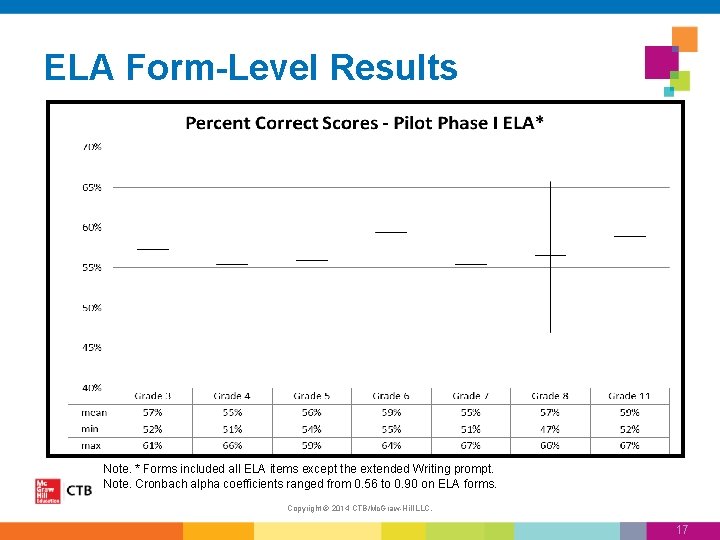

ELA Form-Level Results
Note. *Forms included all ELA items except the extended writing prompt.
Note. Cronbach's alpha coefficients ranged from 0.56 to 0.90 on ELA forms.

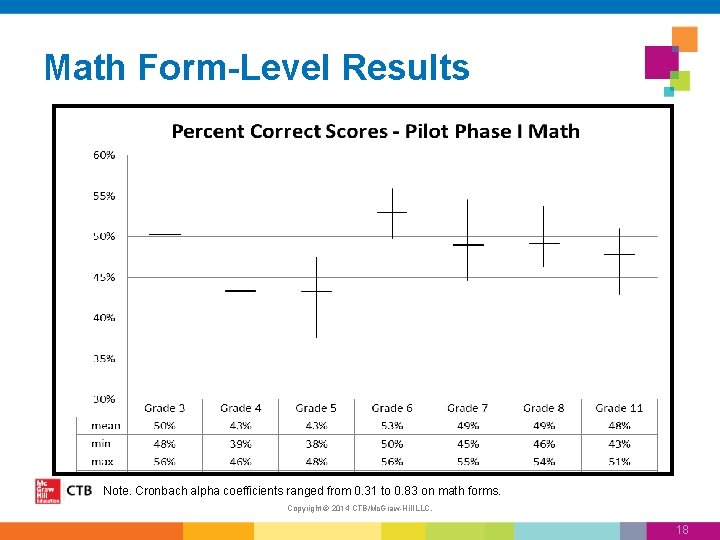

Math Form-Level Results
Note. Cronbach's alpha coefficients ranged from 0.31 to 0.83 on math forms.
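The form-level reliability values above are Cronbach's alpha coefficients. For a form with k items, alpha is k/(k − 1) × (1 − Σ item variances / total-score variance). A minimal sketch of that computation, assuming a complete students × items score matrix with no missing responses (the function name is hypothetical):

```python
from statistics import pvariance


def cronbach_alpha(scores: list[list[float]]) -> float:
    """Cronbach's alpha for a complete students x items score matrix.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores))
    """
    k = len(scores[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    # Population variances are used throughout; the ratio is the same with
    # sample variances as long as one convention is used consistently.
    item_vars = [pvariance([row[j] for row in scores]) for j in range(k)]
    total_var = pvariance([sum(row) for row in scores])
    if total_var == 0:
        raise ValueError("total scores have zero variance")
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


print(cronbach_alpha([[1, 1, 1], [1, 1, 0], [0, 0, 0], [1, 0, 0]]))  # → 0.75
```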

Classical Item Results
§ Item p-values ranged from 0.05 to 0.95
  – P-value standard deviation of 0.11 to 0.23, depending on test form
  – Very few items with low or high p-values
§ Item omit rates were less than 3% across all items
§ The majority of flagged items had a low point-biserial or a high option point-biserial

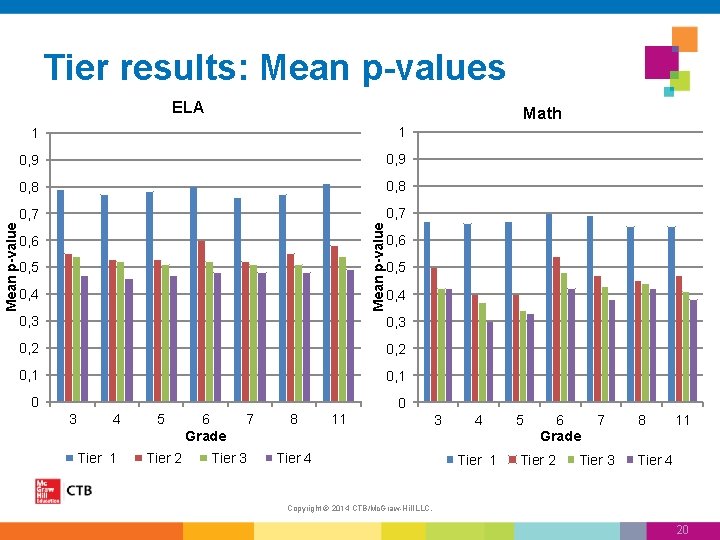

Tier Results: Mean P-Values
[Figure: mean p-value (0 to 1) by grade (3–8 and 11) for Tiers 1–4, in separate panels for Math and ELA]
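The tier analysis compares mean p-values across tiers and checks for reversals. The sketch below is a hypothetical illustration for a single grade and subject, assuming item records are (tier, p-value) pairs and that difficulty should rise with tier number. It flags any pair of tiers where the higher-numbered tier has the higher mean p-value, which includes the Tier 1 versus Tier 4 check on the flagging slide.

```python
from collections import defaultdict
from itertools import combinations
from statistics import mean


def mean_p_by_tier(items: list[tuple[int, float]]) -> dict[int, float]:
    """Average item p-values within each tier; `items` holds (tier, p_value) pairs."""
    by_tier: dict[int, list[float]] = defaultdict(list)
    for tier, p in items:
        by_tier[tier].append(p)
    return {tier: mean(ps) for tier, ps in sorted(by_tier.items())}


def tier_reversals(tier_means: dict[int, float]) -> list[tuple[int, int]]:
    """Pairs (lower tier, higher tier) where the higher tier has the higher mean p-value.

    If p-values fall as the tier number rises (the assumed design intent),
    every returned pair is a reversal worth reviewing.
    """
    return [
        (lo, hi)
        for lo, hi in combinations(sorted(tier_means), 2)
        if tier_means[hi] > tier_means[lo]
    ]


means = mean_p_by_tier([(1, 0.80), (1, 0.70), (2, 0.60), (3, 0.65), (4, 0.50)])
print(tier_reversals(means))  # → [(2, 3)]
```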

Discussion and Next Steps

Main Findings
– Evidence that content is appropriate for students in the Phase I pilot sample
  • Range of p-values
  • Relatively few items flagged for high or low p-values
  • Item omit rates and not-reached rates of 3% or less
  • Form percent correct ranging from approximately 45% to 70%
– Evidence that tiers are functioning according to design at an aggregate level
  • Tier 1 easier than the other three tiers
  • Tiers 2, 3, and 4 tended to follow a pattern of difficulty ranging from least to most difficult
– Evidence that the item bank can support forms at different difficulty levels
  • Items exhibit a range of p-values

Next Steps
– Investigate IRT scaling on forms with higher N counts
– Conduct item- and form-level analysis by student subgroup
– Conduct simulation studies of the adaptive design
– Pilot Phase 2
  • Field-test items to obtain statistics for the operational item bank
  • Evaluate the stage-adaptive design
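One way to approach the planned simulation studies is to generate item responses from an IRT model and check how a routing rule sorts simulated students. The sketch below is a hypothetical illustration only: it assumes a Rasch (1PL) model, standard-normal abilities, five Stage 1 items with made-up difficulties, and a 60% routing cut. None of these values come from the NCSC design, and the function names are illustrative.

```python
import math
import random
from statistics import mean


def rasch_prob(theta: float, b: float) -> float:
    """P(correct) under the Rasch (1PL) model for ability theta and difficulty b."""
    return 1.0 / (1.0 + math.exp(-(theta - b)))


def simulate_routing(n_students: int, stage1_difficulties: list[float],
                     cut: float, seed: int = 0) -> list[tuple[float, str]]:
    """Simulate Stage 1 responses and route each student to Stage 2A or 2B."""
    rng = random.Random(seed)  # seeded for reproducibility
    results = []
    for _ in range(n_students):
        theta = rng.gauss(0.0, 1.0)  # assumed N(0, 1) ability distribution
        n_correct = sum(rng.random() < rasch_prob(theta, b) for b in stage1_difficulties)
        route = "2B" if n_correct / len(stage1_difficulties) >= cut else "2A"
        results.append((theta, route))
    return results


sim = simulate_routing(2000, [-1.0, -0.5, 0.0, 0.5, 1.0], cut=0.6)
share_2b = sum(route == "2B" for _, route in sim) / len(sim)
mean_theta = {r: mean(t for t, route in sim if route == r) for r in ("2A", "2B")}
print(f"Routed to 2B: {share_2b:.1%}; mean ability by route: {mean_theta}")
```

A fuller study would also administer the Stage 2 testlets and compare estimated with true abilities; this sketch only checks that routing separates lower- and higher-ability students.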

Thank you!
